Paraphrase Identification with Lexical, Syntactic and Sentential Encodings

Abstract

1. Introduction

2. Related Work

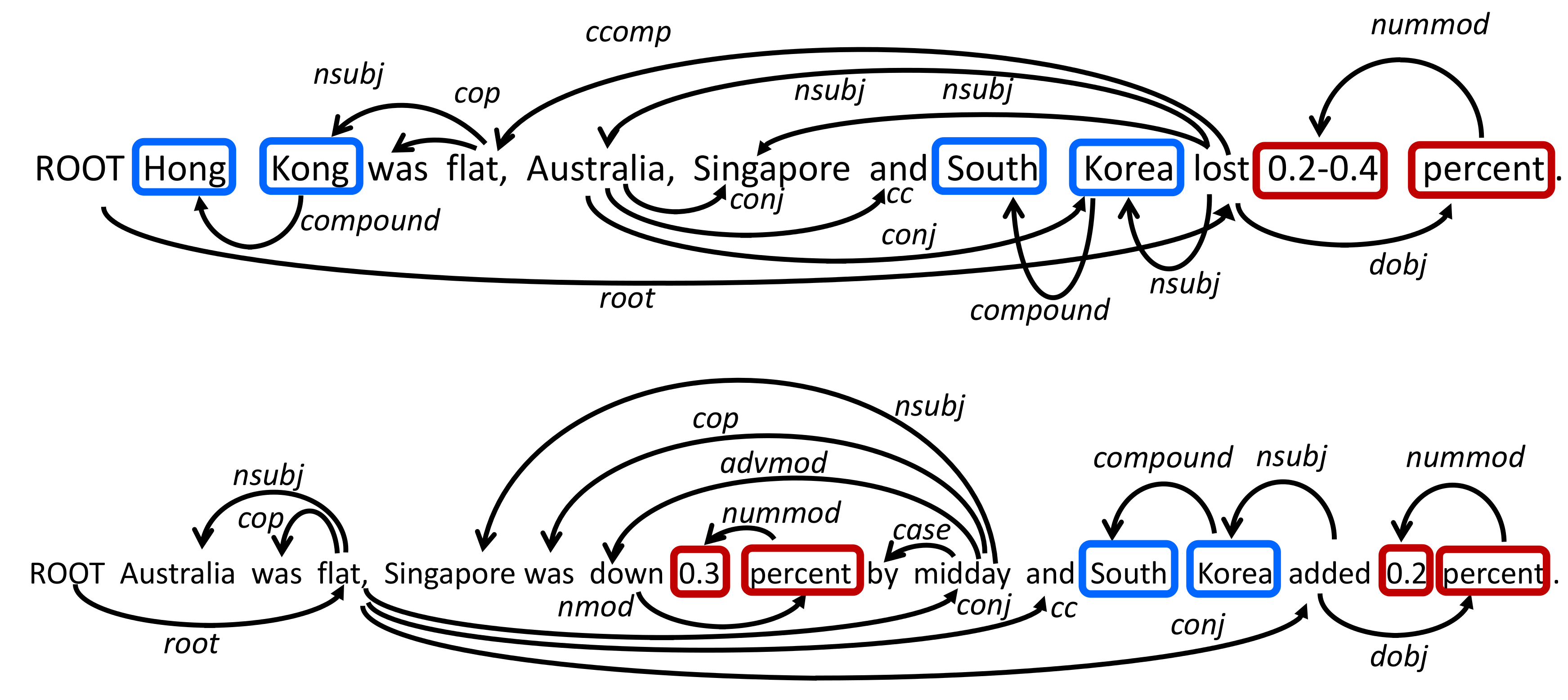

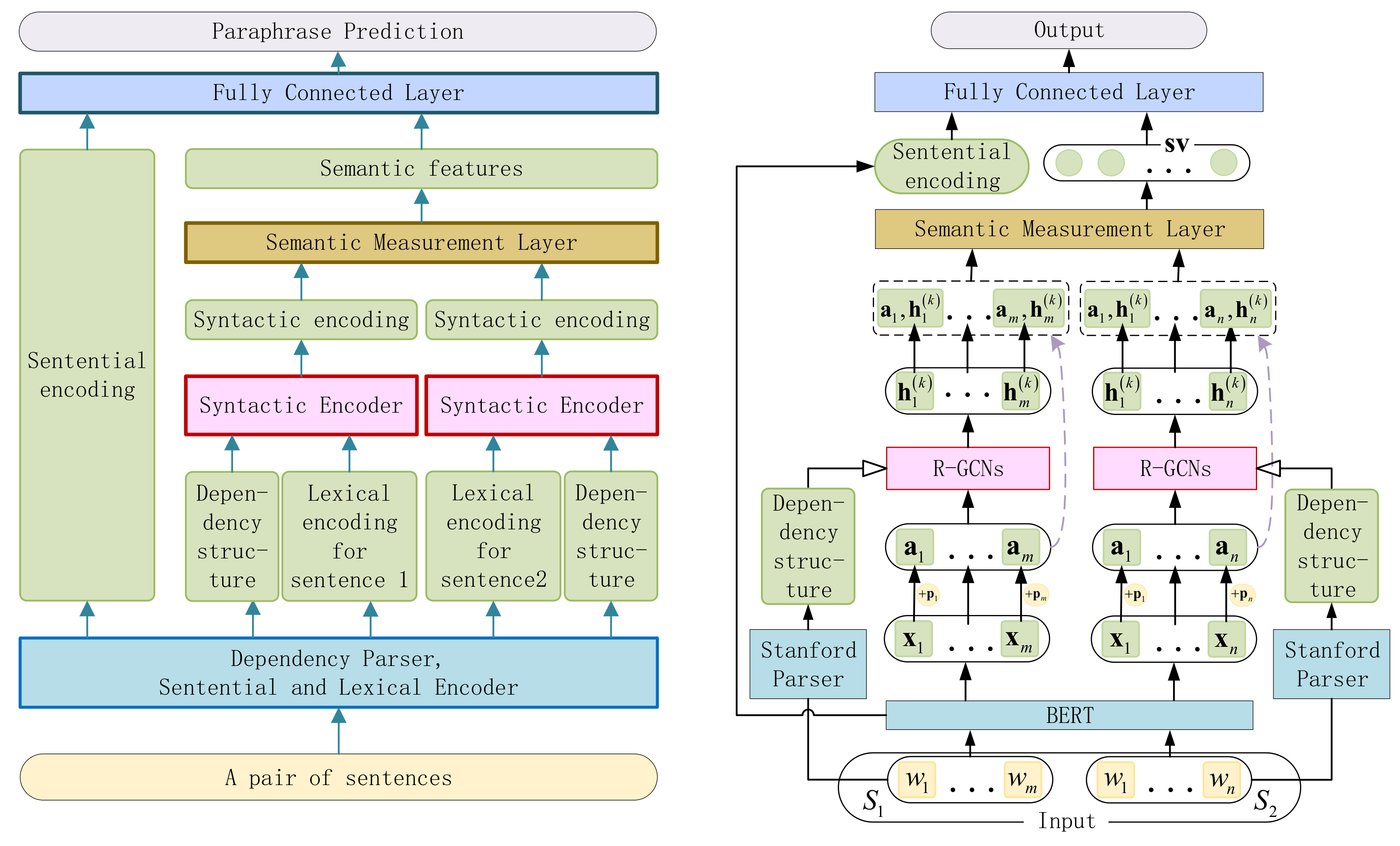

3. LSSE Learning Model

3.1. Lexical and Sentential Contexts Learning with BERT

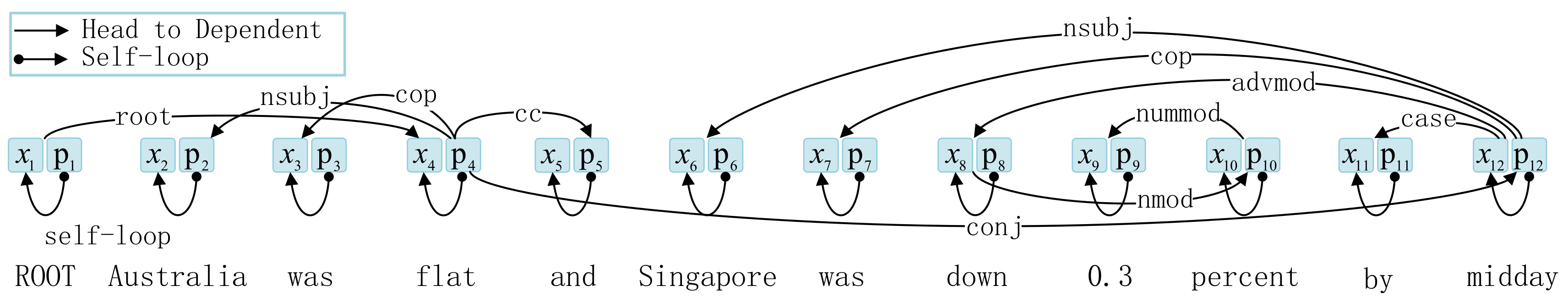

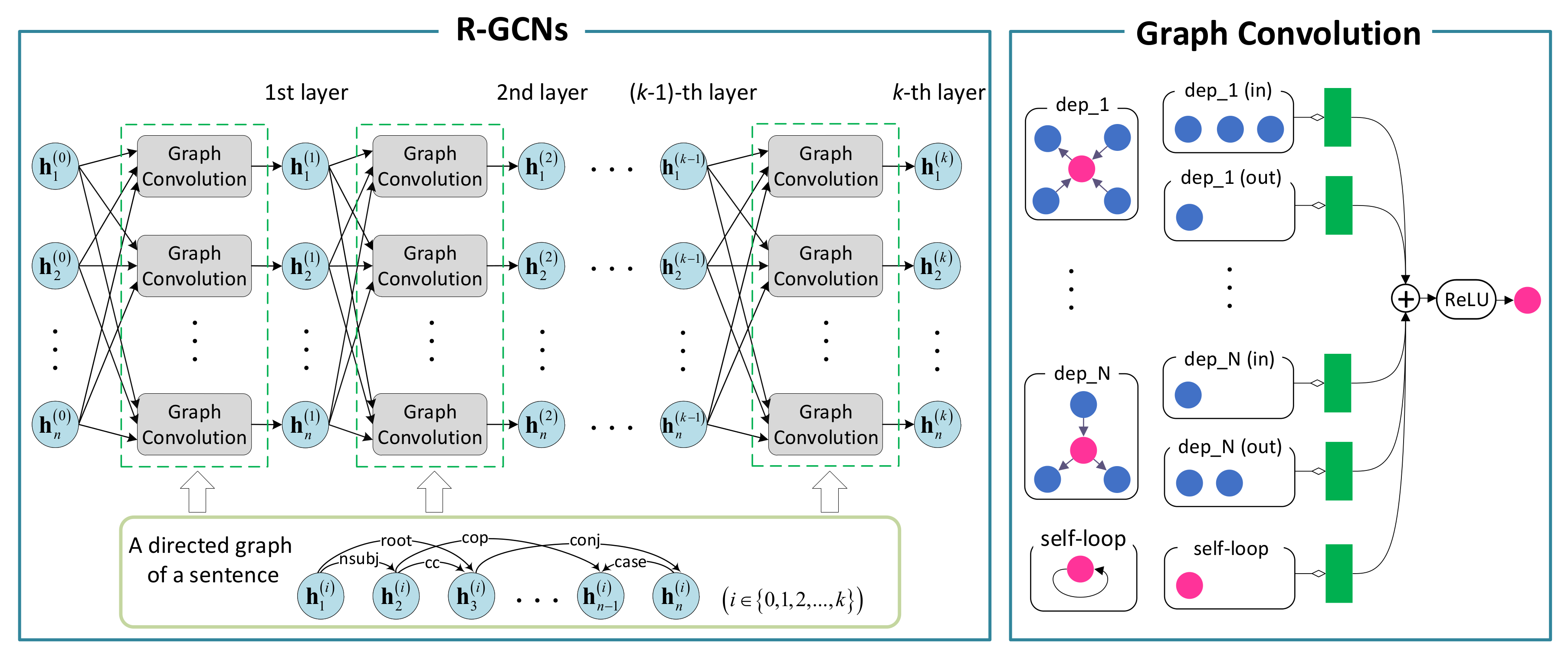

3.2. Syntactic Context Learning with R-GCNs

3.3. Paraphrase Identification

4. Experiments

4.1. Experimental Settings

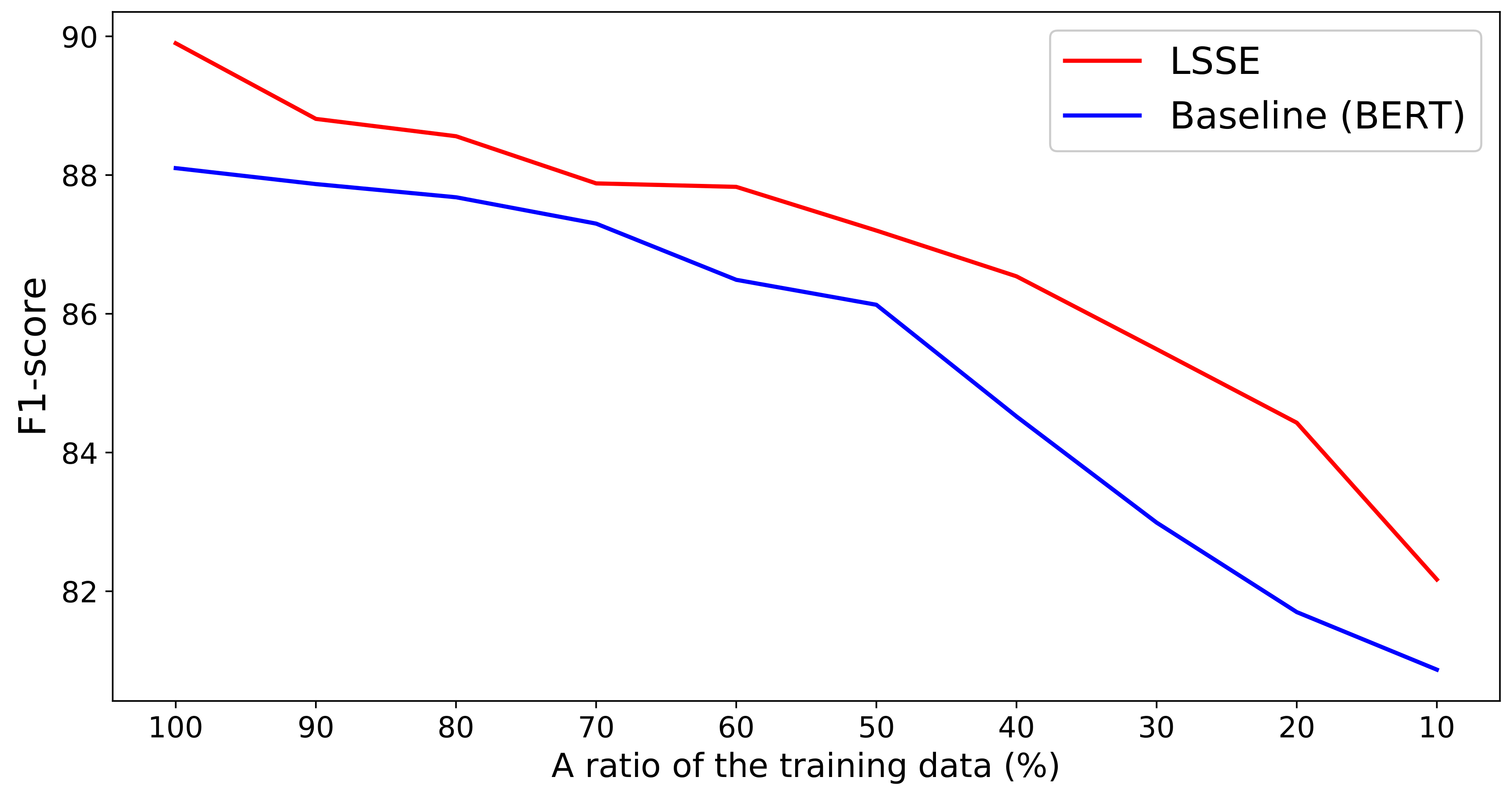

4.2. Main Results

4.3. Comparison with Related Work

- Str Align: Structural Alignment (Str Align) uses a hybrid representation, attributed relational graphs, to encode lexical, syntactic and semantic information [48]. To build a relational graph, the authors attach the token, lemma, Part-of-Speech (POS) tag, Named Entity Recognition (NER) tag, and Word2Vec word embedding to each node as attributes, and attach the dependency label produced by Stanford CoreNLP to each edge as an attribute. Given two attributed relational graphs, the structural aligner generates an alignment. The similarity score between the two graphs is then used to judge whether the sentences are equivalent.

- BERT_base model: BERT pre-trains deep bidirectional representations from unlabeled text by jointly conditioning on both left and right context in all layers [11]. We used the BERT_base model, which has 12 layers, 12 self-attention heads, and a hidden size of 768.

- GenSen: GenSen is a multi-task learning approach to sentence representations in which a single recurrent sentence encoder is shared across multiple tasks, i.e., multi-lingual NMT, natural language inference, constituency parsing, and skip-thought vectors [49]. The model for multi-task learning is a sequence-to-sequence model. We compared our model with GenSen, which utilizes the BERT_base model.

- ERNIE 2.0: Enhanced Representation through kNowledge IntEgration (ERNIE) 2.0 is a multi-task learning model that learns pre-training tasks incrementally [25]. The architecture consists of pre-training and fine-tuning, in the same manner as BERT models. During pre-training, ERNIE 2.0 continually constructs unsupervised pre-training tasks from big data and prior knowledge, and then incrementally updates the model through multi-task learning. During fine-tuning with task-specific supervised data, the pre-trained model is applied to ten different NLP tasks in English and nine tasks in Chinese. We compared our model with ERNIE 2.0 using the BERT_base model.

- Trans FT: Transfer Fine-Tuning (Trans FT) is an extension of BERT that handles phrasal paraphrase relations. The model can generate suitable representations for semantic equivalence assessment without increasing the model size [50]. The authors inject semantic relations between a sentence pair into a pre-trained BERT model through the classification of phrasal and sentential paraphrases. After this training, the model can be fine-tuned in the same manner as BERT models. The model improves performance on downstream tasks that have only a small amount of training data for fine-tuning.

- StructBERT_base: StructBERT_base incorporates language structures into the pre-training of the BERT_base model [51]. The architecture uses a multi-layer bidirectional Transformer network. It strengthens the masked language model task by shuffling a certain number of tokens after token masking and predicting the right order. To capture the relationship between sentences, StructBERT randomly swaps the sentence order and predicts both the next sentence and the previous sentence as a new sentence prediction task. The model thus learns the inter-sentence structure in a bidirectional manner and captures the fine-grained word structure within every sentence. In the fine-tuning process, the pre-trained model is applied to a wide range of downstream tasks, including the GLUE benchmark, the Stanford Natural Language Inference (SNLI) corpus, and extractive question answering (SQuAD v1.1), with good performance.

- FreeLB-BERT: Free-Large-Batch (FreeLB) aims to improve the generalization of pre-trained language models such as BERT, RoBERTa [52], ALBERT [53] and T5 [54] by enhancing their robustness in the embedding space during fine-tuning on downstream language understanding tasks [55]. The method uses a gradient-based procedure to add norm-bounded adversarial perturbations to the embeddings of the input sentences. Their embedding-based adversaries manipulate the word embeddings directly, which makes the resulting language models more powerful. The authors reported new state-of-the-art results on the GLUE and AI2 Reasoning Challenge (ARC) benchmark datasets.

- ELECTRA-Base: “Efficiently Learning an Encoder that Classifies Token Replacements Accurately” (ELECTRA) pre-trains the network as a discriminator that predicts, for every token, whether it is an original or a replacement [56]. The model trains two neural networks, a generator and a discriminator. The generator is trained to perform masked language modeling. For a given position, the discriminator predicts whether the token at this position comes from the data rather than from the generator distribution. After pre-training, the discriminator is fine-tuned on downstream tasks. The ELECTRA-Base model that we compared with our LSSE model is pre-trained in the same manner as the BERT_base model.

- BiMPM: The Bilateral Multi-Perspective Matching (BiMPM) model [46] encodes the two given sentences with a BiLSTM encoder and matches the two encoded sentences in two directions. In each matching direction, each time step of one sentence is matched against all time steps of the other sentence from multiple perspectives. Another BiLSTM layer then aggregates the matching results into a fixed-length matching vector. Finally, a decision is made through a fully connected layer. The authors reported that the model achieved state-of-the-art performance on all tasks in experiments on standard benchmark datasets, including QQP.

- SSE: The Shortcut-Stacked Sentence Encoder Model (SSE) enhances a multi-layer BiLSTM with skip connections to avoid the accumulation of training error [57,58]. The input of the k-th BiLSTM layer is the combination of the outputs from all previous layers, and its output represents the hidden state of that layer in both directions. The final sentence embedding is the row-based max pooling over the output of the last BiLSTM layer. Experimental results on eight benchmark datasets, including the QQP dataset, show that SSE improves overall performance compared with three baselines: InferSent [59], the pairwise word interaction model [60], and the decomposable attention model [61]. It works especially well when the amount of training data is small.
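
Three of the mechanisms summarized in the list above are easier to follow with a small example: the token shuffling that StructBERT adds to masked language modeling, the per-token original/replaced targets that the ELECTRA discriminator predicts, and the row-based max pooling that SSE applies to the last BiLSTM layer. The following is a minimal Python sketch under stated assumptions, not the cited authors' code: the function names, the span length of three, and the toy inputs are all hypothetical, and the encoders themselves are replaced by precomputed per-token vectors.

```python
import random
from typing import List, Optional, Sequence


def shuffle_span(tokens: Sequence[str], start: int, span: int = 3,
                 rng: Optional[random.Random] = None) -> List[str]:
    """StructBERT-style input: shuffle one span of tokens in place.

    A model trained on this input would be asked to predict the original order.
    """
    rng = rng or random.Random()
    out = list(tokens)
    window = out[start:start + span]
    rng.shuffle(window)
    out[start:start + span] = window
    return out


def replaced_token_labels(original: Sequence[str],
                          corrupted: Sequence[str]) -> List[int]:
    """ELECTRA-style discriminator targets: 1 = replaced, 0 = original."""
    if len(original) != len(corrupted):
        raise ValueError("sequences must have the same length")
    return [int(o != c) for o, c in zip(original, corrupted)]


def row_max_pool(token_outputs: Sequence[Sequence[float]]) -> List[float]:
    """SSE-style sentence embedding: per-dimension max over all token positions."""
    return [max(column) for column in zip(*token_outputs)]


if __name__ == "__main__":
    sentence = ["the", "chef", "cooked", "the", "meal"]
    # Positions 1..3 are shuffled; positions 0 and 4 stay where they are.
    print(shuffle_span(sentence, start=1, rng=random.Random(0)))
    # A generator replaced "cooked" with "ate" at position 2.
    print(replaced_token_labels(sentence, ["the", "chef", "ate", "the", "meal"]))  # [0, 0, 1, 0, 0]
    # Three token vectors of dimension 2, pooled into one sentence vector.
    print(row_max_pool([[0.1, 0.9], [0.4, 0.2], [0.3, 0.5]]))  # [0.4, 0.9]
```

If a generator happens to reproduce the original token, the comparison in `replaced_token_labels` marks that position as original, which matches the description of the discriminator's task above.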

4.4. Ablation Study

4.5. Qualitative Analysis of Errors

- Inclusion relation between sentences: As shown in Table 3, in this error type the two sentences share the same content, but one sentence contains more detailed information than the other.

- (1) “There’s a Jeep in my parents’ yard right now that’s not theirs”, said Perry, whose parents are vacationing in North Carolina.

- (2) “There’s a Jeep in my parents’ yard right now that’s not theirs”, she said.

Sentences (1) and (2) have similar content, and our model identified them as paraphrases. However, according to the Microsoft Research definitions (https://www.microsoft.com/en-us/download/details.aspx?id=52398), these sentences should be identified as “non-paraphrase” because sentence (1) includes the additional information that “Perry’s parents are vacationing in North Carolina”, which makes it a significantly larger superset of sentence (2). We observed that 39 pairs were classified into this type.

- Dependency relation: The dependency relations within a sentence are not correctly analyzed. For example, in sentence (3), “<.DJI>” is divided into four tokens (“<”, “.”, “DJI”, and “>”) by the BERT tokenizer. As a result, the Stanford parser incorrectly analyzed “>” as modifying “added” with an adverbial modifier (advmod) relation. In total, 10 pairs of sentences were classified into this type.

- (3) The Dow Jones industrial average <.DJI> added 28 points, or 0.27 percent, at 10,557, hitting its highest level in 21 months.

- Inter-sentential relations: When two sentences are linked by inter-sentential relations, it is difficult to judge correctly whether they are paraphrases.

- (4) British Airways’ New York-to-London runs will end in October.

- (5) British Airways plans to retire its seven Concordes at the end of October.

Sentences (4) and (5) have the same sense, although they use different expressions, such as “New York-to-London” and “Concordes”, that refer to the same entities. Identifying these sentences correctly as “paraphrases” requires not only local dependencies, i.e., the dependency structure within a sentence, but also non-local dependencies between sentences. Nine pairs were classified into this type.
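
The dependency-relation errors described above arise when a ticker symbol is split at every punctuation mark before parsing. A minimal sketch of that effect follows, assuming a plain regular-expression splitter as a stand-in for the punctuation-splitting step of the BERT tokenizer. It performs no WordPiece segmentation, and `split_on_punctuation` is a hypothetical helper rather than a function from any library.

```python
import re
from typing import List


def split_on_punctuation(text: str) -> List[str]:
    """Split text into runs of word characters and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


# The ticker symbol from sentence (3) falls apart into four separate tokens.
print(split_on_punctuation("<.DJI>"))  # ['<', '.', 'DJI', '>']
```

Each of the four pieces then becomes its own token for the dependency parser, which is how a stray “>” can end up attached to “added”.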

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

1. He, H.; Gimpel, K.; Lin, J. Multi-perspective Sentence Similarity Modeling with Convolutional Neural Networks. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1576–1586.

2. Yin, W.; Schütze, H.; Xiang, B.; Zhou, B. ABCNN: Attention-based Convolutional Neural Network for Modeling Sentence Pairs. Trans. Assoc. Comput. Linguist. 2016, 4, 259–272.

3. Liu, P.; Qiu, X.; Huang, X. Modelling Interaction of Sentence Pair with Coupled-LSTMs. arXiv 2016, arXiv:1605.05573.

4. Chen, Q.; Zhu, X.; Ling, Z.; Wei, S.; Jiang, H.; Inkpen, D. Enhanced LSTM for Natural Language Inference. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 1657–1668.

5. Wieting, J.; Gimpel, K. Revisiting Recurrent Networks for Paraphrastic Sentence Embeddings. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 2078–2088.

6. Wang, Y.; Huang, H.; Chong, F.; Zhou, Q.; Jiahui, G.; Xiong, G. CSE: Conceptual Sentence Embeddings based on Attention Model. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; pp. 505–515.

7. Oren, M.; Jacob, G.; Ido, D. Context2vec: Learning Generic Context Embedding with Bidirectional LSTM. In Proceedings of the 20th SIGNLL Conference on Computational Natural Language Learning, Berlin, Germany, 11–12 August 2016; pp. 51–61.

8. Sanjeev, A.; Yingyu, L.; Tengyu, M. A Simple but Tough-to-Beat Baseline for Sentence Embeddings. In Proceedings of the 5th International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

9. Mark, N.; Mohit, I.; Matt, G.; Christopher, C.; Kenton, L.; Luke, Z. Deep Contextualized Word Representations. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; pp. 2227–2237.

10. Cer, D.; Yang, Y.; Kong, S.; Hua, N.; Limtiaco, N.; St. John, R.; Constant, N.; Guajardo-Cespedes, M.; Yuan, S.; Tar, C.; et al. Universal Sentence Encoder. arXiv 2018, arXiv:1803.11175.

11. Jacob, D.; Ming-Wei, C.; Kenton, L.; Kristina, T. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

12. Michaël, D.; Xavier, B.; Pierre, V. Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering. In Proceedings of the 30th Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 3844–3852.

13. Thomas, K.; Max, W. Semi-Supervised Classification with Graph Convolutional Networks. In Proceedings of the 5th International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

14. Felix, W.; Tianyi, Z.; de Souza, A.H., Jr.; Christopher, F.; Tao, Y.; Weinberger, K.Q. Simplifying Graph Convolutional Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6861–6871.

15. Socher, R.; Bauer, J.; Manning, C.D.; Ng, A.Y. Parsing with Compositional Vector Grammars. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; pp. 455–465.

16. Danqi, C.; Manning, C.D. A Fast and Accurate Dependency Parser using Neural Networks. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014; pp. 740–750.

17. Socher, R.; Huang, E.H.; Pennington, J.; Ng, A.Y.; Manning, C.D. Dynamic Pooling and Unfolding Recursive Autoencoders for Paraphrase Detection. In Advances in Neural Information Processing Systems; The MIT Press: Cambridge, MA, USA, 2011; pp. 801–809.

18. Hu, B.; Lu, Z.; Li, H.; Chen, Q. Convolutional Neural Network Architectures for Matching Natural Language Sentences. In Advances in Neural Information Processing Systems; The MIT Press: Cambridge, MA, USA, 2015; pp. 2042–2050.

19. Tai, K.S.; Socher, R.; Manning, C.D. Improved Semantic Representations from Tree-structured Long Short-term Memory Networks. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics, Beijing, China, 26–31 July 2015; pp. 1556–1566.

20. Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

21. Wang, A.; Singh, A.; Michael, J.; Hill, F.; Levy, O.; Bowman, S.R. GLUE: A Multi-task Benchmark and Analysis Platform for Natural Language Understanding. arXiv 2018, arXiv:1804.07461.

22. Lample, G.; Conneau, A. Cross-lingual Language Model Pretraining. arXiv 2019, arXiv:1901.07291.

23. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All You Need. In Advances in Neural Information Processing Systems; The MIT Press: Cambridge, MA, USA, 2017; pp. 5998–6008.

24. Subramanian, S.; Trischler, A.; Bengio, Y.; Pal, J.C. Learning General Purpose Distributed Sentence Representations via Large Scale Multi-task Learning. In Proceedings of the 6th International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

25. Sun, Y.; Wang, S.; Li, Y.; Feng, S.; Tian, H.; Wu, H.; Wang, H. ERNIE 2.0: A Continual Pre-training Framework for Language Understanding. arXiv 2019, arXiv:1907.12412.

26. Liu, X.; He, P.; Chen, W.; Gao, J. Multi-task Deep Neural Networks for Natural Language Understanding. arXiv 2019, arXiv:1901.11504.

27. Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Yu, P.S. A Comprehensive Survey on Graph Neural Networks. arXiv 2019, arXiv:1901.00596.

28. Michael, S.; Kipf, T.N.; Peter, B.; Rianne, V.D.B.; Ivan, T.; Max, W. Modeling Relational Data with Graph Convolutional Networks. In Proceedings of the European Semantic Web Conference, Crete, Greece, 3–7 June 2018; pp. 593–607.

29. Zhijiang, G.; Yan, Z.; Zhiyang, T.; Wei, L. Densely Connected Graph Convolutional Networks for Graph-to-Sequence Learning. Trans. Assoc. Comput. Linguist. 2019, 7, 297–312.

30. Joost, B.; Ivan, T.; Wilker, A.; Diego, M.; Khalil, S. Graph Convolutional Encoders for Syntax-aware Neural Machine Translation. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 1957–1967.

31. Beck, D.; Haffari, G.; Cohn, T. Graph-to-Sequence Learning using Gated Graph Neural Networks. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; pp. 273–283.

32. Yinchuan, X.; Junlin, Y. Look Again at the Syntax: Relational Graph Convolutional Network for Gendered Ambiguous Pronoun Resolution. In Proceedings of the 1st Workshop on Gender Bias in Natural Language Processing, Florence, Italy, 2 August 2019; pp. 99–104.

33. Zhijiang, G.; Yan, Z.; Wei, L. Attention Guided Graph Convolutional Networks for Relation Extraction. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 241–251.

34. Diego, M.; Ivan, T. Encoding Sentences with Graph Convolutional Networks for Semantic Role Labeling. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 1506–1515.

35. Hamilton, W.L.; Ying, R.; Leskovec, J. Inductive representation learning on large graphs. In Proceedings of the 31st Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 1024–1034.

36. Peter, V.; Guillem, C.; Arantxa, C.; Adriana, R.; Pietro, L.; Yoshua, B. Graph Attention Networks. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

37. Liang, Y.; Chengsheng, M.; Yuan, L. Graph Convolutional Networks for Text Classification. In Proceedings of the 33rd AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 7370–7377.

38. Wu, Y.; Schuster, M.; Chen, Z.; Le, Q.V.; Norouzi, M. Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation. arXiv 2016, arXiv:1609.08144.

39. Shikhar, V.; Manik, B.; Prateek, Y.; Piyush, R.; Chiranjib, B.; Partha, T. Incorporating Syntactic and Semantic Information in Word Embeddings using Graph Convolutional Networks. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 3308–3318.

40. Van den Oord, A.; Kalchbrenner, N.; Espeholt, L.; Vinyals, O.; Graves, A. Conditional Image Generation with PixelCNN Decoders. In Proceedings of the 30th Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 4790–4798.

41. Li, Q.; Han, Z.; Wu, X.M. Deeper Insights into Graph Convolutional Networks for Semi-Supervised Learning. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 3538–3545.

42. Yang, L.; Kang, Z.; Can, X.; Jin, D.; Yang, B.; Guo, Y. Topology Optimization based Graph Convolutional Network. In Proceedings of the 28th International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; pp. 4054–4061.

43. Kingma, D.P.; Ba, J. ADAM: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

44. Dolan, W.B.; Brockett, C. Automatically Constructing a Corpus of Sentential Paraphrases. In Proceedings of the Third International Workshop on Paraphrasing, Jeju Island, Korea, 14 October 2005; pp. 9–16.

45. Shankar, I.; Nikhil, D.; Kornél, C. First Quora Dataset Release: Question Pairs. 2016. Available online: https://data.quora.com/First-Quora-Dataset-Releasee-Question-Pairs (accessed on 1 March 2020).

46. Zhiguo, W.; Wael, H.; Radu, F. Bilateral Multi-Perspective Matching for Natural Language Sentences. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; pp. 4144–4150.

47. Phang, J.; Fevry, T.; Bowman, S.R. Sentence Encoders on STILTs: Supplementary Training on Intermediate Labeled-data Tasks. arXiv 2019, arXiv:1811.01088.

48. Liang, C.; Paritosh, P.K.; Rajendran, V.; Forbus, K.D. Learning Paraphrase Identification with Structural Alignment. In Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence, New York, NY, USA, 9–15 July 2016; pp. 2859–2865.

49. Subramanian, S.; Trischler, A.; Bengio, Y.; Pal, C.J. Learning General Purpose Distributed Sentence Representations via Large Scale Multi-task Learning. arXiv 2018, arXiv:1804.00079.

50. Yuki, A.; Junichi, T. Transfer Fine-Tuning: A BERT Case Study. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, Hong Kong, China, 3–7 November 2019; pp. 5393–5404.

51. Wei, W.; Bin, B.; Ming, Y.; Chen, W.; Zuyi, B.; Liwei, P.; Luo, S. StructBERT: Incorporating Language Structures into Pre-training for Deep Language Understanding. arXiv 2019, arXiv:1908.04577.

52. Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692.

53. Lan, Z.; Chen, M.; Goodman, S.; Gimpel, K.; Sharma, P.; Soricut, R. ALBERT: A Lite BERT for Self-supervised Learning of Language Representations. In Proceedings of the 8th International Conference on Learning Representations, Addis Ababa, Ethiopia, 26 April–1 May 2020.

54. Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. arXiv 2019, arXiv:1910.10683.

55. Chen, Z.; Yu, C.; Zhe, G.; Siqi, S.; Thomas, G.; Jing, L. FreeLB: Enhanced Adversarial Training for Natural Language Understanding. arXiv 2019, arXiv:1909.11764.

56. Clark, K.; Luong, M.T.; Le, Q.V.; Manning, C.D. ELECTRA: Pre-Training Text Encoders as Discriminators Rather than Generators. In Proceedings of the 8th International Conference on Learning Representations, Addis Ababa, Ethiopia, 26 April–1 May 2020.

57. Nie, Y.; Bansal, M. Shortcut-stacked Sentence Encoders for Multi-Domain Inference. In Proceedings of the 2nd Workshop on Evaluating Vector Space Representations for NLP, Copenhagen, Denmark, September 2017; pp. 41–45.

58. Wuwei, L.; Wei, X. Neural Network Models for Paraphrase Identification, Semantic Textual Similarity, Natural Language Inference, and Question Answering. In Proceedings of the 27th International Conference on Computational Linguistics, Santa Fe, NM, USA, 20–26 August 2018; pp. 3890–3902.

59. Conneau, A.; Kiela, D.; Schwenk, H.; Barrault, L.; Bordes, A. Supervised Learning of Universal Sentence Representations from Natural Language Inference Data. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 670–680.

60. He, H.; Lin, J. Pairwise Word Interaction Modeling with Deep Neural Networks for Semantic Similarity Measurement. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 937–948.

61. Parikh, A.; Tom, O.; Das, D.; Uszkoreit, J. A Decomposable Attention Model for Natural Language Inference. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–5 November 2016; pp. 2249–2255.

62. Moschitti, A. Efficient Convolution Kernels for Dependency and Constituent Syntactic Trees. In Proceedings of the 17th European Conference on Machine Learning and the 10th European Conference on Principles and Practice of Knowledge Discovery in Databases, Berlin, Germany, 18–22 September 2006; pp. 318–329.

63. Moschitti, A.; Chu-Carroll, J.; Patwardhan, S.; Fan, J.; Riccardi, G. Using Syntactic and Semantic Structural Kernels for Classifying Definition Questions in Jeopardy! In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Scotland, UK, 27–31 July 2011; pp. 712–724.

64. Papernot, N.; Abadi, M.; Úlfar, E.; Goodfellow, I.; Talwar, K. Semi-Supervised Knowledge Transfer for Deep Learning from Private Training Data. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

| Data | #1 ID | #2 ID | #1 String | #2 String | Label |
|---|---|---|---|---|---|
| MRPC | 2108705 | 2108831 | Yucaipa owned Dominick’s before selling the chain to Safeway in 1998 for USD 2.5 billion. | Yucaipa bought Dominick’s in 1995 for USD 693 million and sold it Safeway for USD 1.8 billion in 1998. | 0 |
| MRPC | 702876 | 702977 | Amrozi accused his brother, whom he called “the witness”, of deliberately distorting his evidence. | Referring to him as only “the witness”, Amrozi accused his brother of deliberately distorting his evidence. | 1 |
| QQP | 364011 | 490273 | What causes stool color to change to yellow? | What can cause stool to come out as little balls? | 0 |
| QQP | 536040 | 536041 | How do I control my horny emotions? | How do you control your horniness? | 1 |

| Dataset | Baseline Acc | Baseline F1 | LSSE Acc | LSSE F1 |
|---|---|---|---|---|
| MRPC | 84.3 | 88.1 | 86.3 | 89.9 |
| QQP | 88.7 | 88.4 | 90.6 | 90.4 |

| Dataset | #1 String | #2 String | LSSE | BERT | N |
|---|---|---|---|---|---|
| MRPC | Licensing revenue slid 21 percent, however, to USD 107.6 million. | License sales, a key measure of demand, fell 21 percent to USD 107.6 million. | TP | FN | 70 |
| MRPC | For the entire season, the average five-day forecast track error was 259 miles, Franklin said. | The average track error for the five-day (forecast) is 323 nautical miles. | FN | TP | 19 |
| MRPC | By Sunday night, the fires had blackened 277,000 acres, hundreds of miles apart. | Major fires had burned 264,000 acres by early last night. | TN | FP | 36 |
| MRPC | Other countries and private creditors are owed at least USD 80 billion in addition. | Other countries are owed at least USD US80 billion (USD 108.52 billion). | FP | TN | 53 |
| QQP | What are the most intellectually stimulating movies you have ever seen? | What are the most intellectually stimulating films you have ever watched? | TP | FN | 331 |
| QQP | How do I get business ideas? | How can I think of a business idea? | FN | TP | 212 |
| QQP | How do I remove dry paint from my clothes? | How do I get acrylic paint out of my clothes? | TN | FP | 201 |
| QQP | How do Champcash make money from Chrome? | How do a Champcash customer make money from Chrome? | FP | TN | 130 |
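
The disagreement counts in the table above can be cross-checked against the reported accuracy gains. The following is a minimal sketch under three assumptions that are not stated in this section: that N is the number of test pairs in each disagreement category, that the BERT column corresponds to the baseline in the accuracy table, and that the MRPC test set contains 1,725 pairs (the size of the standard split). The QQP test-set size is not assumed, so only the net count is reported for QQP.

```python
# N values copied from the table: test pairs on which LSSE and BERT disagree.
disagreements = {
    "MRPC": {
        "lsse_right_bert_wrong": 70 + 36,   # rows TP/FN and TN/FP
        "bert_right_lsse_wrong": 19 + 53,   # rows FN/TP and FP/TN
    },
    "QQP": {
        "lsse_right_bert_wrong": 331 + 201,
        "bert_right_lsse_wrong": 212 + 130,
    },
}

MRPC_TEST_PAIRS = 1725  # assumption: size of the standard MRPC test split


def net_gain(counts: dict) -> int:
    """Pairs LSSE gets right and BERT gets wrong, minus the reverse."""
    return counts["lsse_right_bert_wrong"] - counts["bert_right_lsse_wrong"]


mrpc_net = net_gain(disagreements["MRPC"])
qqp_net = net_gain(disagreements["QQP"])
mrpc_points = 100 * mrpc_net / MRPC_TEST_PAIRS

print(mrpc_net, round(mrpc_points, 2))  # 34 1.97
print(qqp_net)                          # 190
```

Under these assumptions, the net MRPC gain of 34 pairs corresponds to roughly 2.0 accuracy points, which is in line with the reported improvement from 84.3 to 86.3.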

| Model (MRPC dataset) | Acc | F1 |
|---|---|---|
| Str Align [48] | 78.3 | 84.9 |
| BERT_base [11] | 84.8 | 88.9 |
| GenSen [49] | 78.6 | 84.4 |
| ERNIE 2.0 [25] | 86.1 | 89.9 |
| Trans FT [50] | - | 89.2 |
| StructBERT_base [51] | 86.1 | 89.9 |
| FreeLB-BERT [55] | 83.5 | 88.1 |
| ELECTRA-Base [56] | 86.7 | - |
| LSSE (Our model) | 86.3 | 89.9 |

| Model (QQP dataset) | Acc |
|---|---|
| BiMPM [46] | 88.2 |
| SSE [58] | 87.8 |
| LSSE (Our model) | 90.6 |

| Model (MRPC dataset) | Acc | F1 |
|---|---|---|
| LSSE (Our model) | 86.3 | 89.9 |
| –PE | 85.3 | 89.0 |
| –SentE | 84.2 | 88.3 |
| –SentE and –PE | 83.5 | 88.1 |
| –R-GCNs | 85.7 | 89.5 |
| –R-GCNs and –SentE | 83.0 | 87.3 |
| –R-GCNs and –BERT tokenE | 84.3 | 88.1 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xu, S.; Shen, X.; Fukumoto, F.; Li, J.; Suzuki, Y.; Nishizaki, H. Paraphrase Identification with Lexical, Syntactic and Sentential Encodings. Appl. Sci. 2020, 10, 4144. https://doi.org/10.3390/app10124144