Abstract

Mycobacterial infections continue to greatly affect global health, and their histopathological examination remains challenging. Digital whole-slide images (WSIs) could make histopathological methods more convenient. However, screening for stained bacilli is a highly laborious task for pathologists due to the microscopic and inconsistent appearance of bacilli. This study proposed a computer-aided detection (CAD) system based on deep learning to automatically detect acid-fast stained mycobacteria. A total of 613 bacillus-positive image blocks and 1202 negative image blocks were cropped from WSIs (at approximately 20 × 20 pixels). After randomly selecting 80% of the samples as the training set and the remaining 20% as the testing set, a transfer learning mechanism based on a deep convolutional neural network (DCNN) was applied with a pretrained AlexNet to the target bacillus image blocks. The transferred DCNN model generated the probability that each image block contained a bacillus. A probability higher than 0.5 was regarded as positive for a bacillus. Consequently, the DCNN model achieved an accuracy of 95.3%, a sensitivity of 93.5%, and a specificity of 96.3%. For samples without color information, the model achieved an accuracy of 73.8%, a sensitivity of 70.7%, and a specificity of 75.4%. The proposed DCNN model successfully distinguished bacilli from other tissues with promising accuracy, and the comparison revealed the contribution of color information. This information will help pathologists establish a more efficient diagnostic procedure.

1. Introduction

According to a report from the World Health Organization, 1.6 million people died from Mycobacterium tuberculosis infection in 2017 [1]. Although most mycobacterial infections occur in developing countries, they can spread easily via global transportation. The clinical patterns of mycobacterial infection in immunocompromised patients are usually nonspecific and differ from those in healthy individuals, leading to a delayed diagnosis or misdiagnosis due to inadequate biopsy [2]. Inadequate biopsy and poor culture or smear techniques may delay diagnosis and subsequently lead to death [3]. Therefore, a correct and timely diagnostic tool is important for mycobacterial disease control.

Diagnostic tools for mycobacterial infection include microscopy examination, smears, bacterial cultures, histopathological examination, and molecular technology. However, none of these tools is perfect [4]. Examination of both histopathologic and microbiologic results is necessary for an accurate diagnosis of mycobacterial infection [5]. For cutaneous mycobacterial infection, histopathology is essential for diagnosis [5,6]. Acid-fast staining has been shown to have good sensitivity and specificity for the diagnosis of mycobacterial infection [7]. However, screening for acid-fast stained bacilli is a highly laborious task for pathologists. Bacilli are easily missed in paucibacillary disease, and pathologists often must review several tissue sections for accurate diagnosis [8]. Tissue slides are examined under 400× magnification or even higher magnification (1000×) with oil immersion, which is very laborious and time-consuming [3,9].

With advances in slide scanning technology, full digitalization of stained tissue sections in histopathology has the potential to transform clinical practice [10], and fully digitalized histopathology systems have been approved by the US Food and Drug Administration for primary diagnosis [11]. Whole-slide images (WSIs) contain a large amount of information and are very convenient for image processing, as pathologists can easily change image magnification and focus on regions of interest. Other advantages include the ease of interactive learning and navigation and the fact that digital images do not bleach or break [12,13]. WSIs can be used to perform automatic image analysis and reduce high inter- and intraobserver variability [10]. Numerous large studies have revealed that digital pathology images are not inferior to traditional glass slides for clinical diagnosis [14,15,16]. According to previous investigations, applying WSIs for mycobacterial infection slides is practical. The availability of WSIs has made digital pathology a very popular area of application for computer-aided diagnosis [17,18,19].

Conventional computer-aided diagnosis (CAD) is a well-established tool in the medical imaging field, whose procedures usually include segmentation, feature extraction, and classification [20]. CAD systems based on numerous image processing algorithms have been developed for digital pathology slides, especially to assist with laborious tasks such as detection and quantification in pattern recognition. The primary goal of implementing a CAD system is to increase sensitivity while reducing false negative rates due to fatigue of observers [16,21].

With the recent success of deep learning methods, the applications for computer-aided diagnosis in different clinical fields can be improved [10,21,22]. Previous works related to digital pathology have applied deep learning techniques to detect, segment, and classify diseases of interest from the nucleus and cell level all the way to the organ level [23]. In digital pathology, deep learning methods have been applied to whole-slide image analysis for predicting lung and prostate cancer diagnoses and the detection of breast cancer metastases [17,18,19]. Thus, a deep convolutional neural network (DCNN), which uses automatic convolutions to extract very large numbers of image features, was proposed in this study to detect bacilli in whole-slide pathology images. In the experiment, machine-generated features were separated into color and noncolor features to explore how the DCNN could distinguish bacilli from other tissues in the images. To the best of our knowledge, this is the first study to apply a DCNN using the transfer learning method for bacillus detection in whole-slide pathology images.

2. Materials and Methods

2.1. Histopathological Whole-Slide Images

Histopathology sections were prepared in the Department of Pathology, Taipei Medical University Hospital and Mackay Memorial Hospital. The dermatopathological samples in the database are reserved for teaching, case reviews and discussion. Formalin-fixed, paraffin-embedded skin tissue samples were prepared in 5-μm-thick sections. Skin incisional biopsies were obtained, and specimens were 1–2 cm in length and 0.5–1 cm in width. Ziehl–Neelsen stained slides in the database were digitally scanned for inclusion in the study. The selected specimens had a staining quality adequate for a definite diagnosis. Nine samples of cutaneous mycobacterial infection were selected, which included atypical mycobacterial infection, paucibacillary leprosy, lepromatous leprosy, and erythema nodosum leprosum. The histopathological features were suppurative inflammation, granuloma formation, and foamy cell aggregation. Differential inflammatory cell infiltration, including histiocytes, neutrophils, and mast cells, was noted in these WSIs (Table 1). Histochemical stain preparation was performed with a standard protocol for Ziehl–Neelsen staining (Muto Pure Chemicals Co., Tokyo, Japan). Ziehl–Neelsen staining emphasizes acid-fast bacilli in the histopathological slides. Because of the mycolic acid in their cell walls, mycobacterial bacilli retain red carbol fuchsin and appear reddish after staining, in high contrast to the bluish color of the background [7]. These histopathological slides were prepared according to standard protocols and digitized using a ScanScope XT whole-slide scanner (Aperio, Vista, CA, USA). The image navigation software used was Aperio ImageScope v9.1.19 (Aperio, Vista, CA, USA). This study was approved by the Mackay Memorial Hospital Institutional Review Board (IRB Number: 18MMHIS121).

Table 1.

Histopathological features of the nine selected skin incisional biopsy tissue samples.

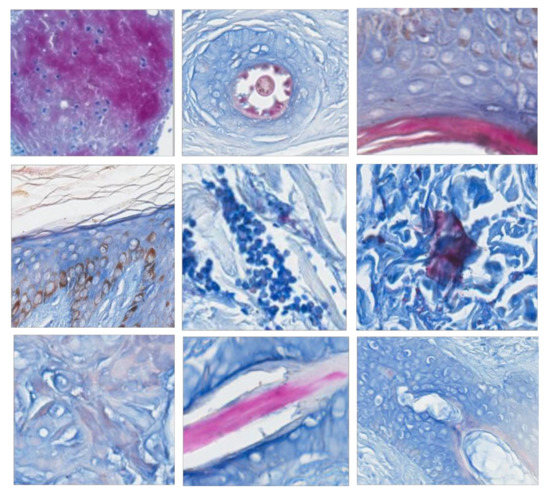

To establish gold standards, the whole-slide images were subsequently cropped into nonoverlapping image blocks of 20 × 20 pixels (each pixel is 0.017 mm wide), which is the optimal size for presenting bacilli [24]. One international board-certified dermatopathologist and one international dermatopathology fellow reviewed the whole histopathological slide images and identified acid-fast positive stained bacilli. The ground truth was established by the consensus of both reviewers. Positive mycobacterial bacilli image blocks showed the presence of reddish rod-shaped bacilli with a bluish to whitish background. Negative image blocks were chosen from noninfectious areas with stained reddish, bluish or whitish colors. While establishing consensus, the reviewers disagreed on some samples showing incomplete, fragmented rod-like shapes, which may have been contamination or parts of bacilli. These blocks were excluded from the experiment to reduce uncertainty. A total of 613 representative positive image blocks, including various orientations of mycobacterial bacilli and different bacillus counts, were selected (Figure 1). These positive image blocks were used in each of the following experiments, while negative samples were individually selected according to the purpose of each experiment. Positive and negative image blocks were then fed into a transferred DCNN for classification.
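The nonoverlapping cropping described above can be illustrated with a minimal sketch. It assumes the slide region is already available in memory as a list of pixel rows and that partial blocks at the right and bottom edges are discarded; neither detail is stated in the study, and the names `iter_block_origins` and `crop_block` are hypothetical.

```python
from typing import Iterator, List, Tuple


def iter_block_origins(width: int, height: int, block: int = 20) -> Iterator[Tuple[int, int]]:
    """Yield the top-left (x, y) corner of every full, nonoverlapping block."""
    # Assumption: blocks that would extend past the image border are skipped.
    for y in range(0, height - block + 1, block):
        for x in range(0, width - block + 1, block):
            yield (x, y)


def crop_block(image: List[list], x: int, y: int, block: int = 20) -> List[list]:
    """Crop one block from an image stored as a list of pixel rows."""
    return [row[x:x + block] for row in image[y:y + block]]


# Toy 65 x 45 "slide" whose pixel values simply record their own coordinates.
toy = [[(x, y) for x in range(65)] for y in range(45)]
origins = list(iter_block_origins(65, 45))
blocks = [crop_block(toy, x, y) for x, y in origins]
```

For the toy image, three blocks fit horizontally and two vertically, so six blocks are produced and the leftover border pixels are ignored.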

Figure 1.

Acid-fast stained bacilli samples cropped as positive blocks (20 × 20 pixels).

2.2. Effects of Color Information

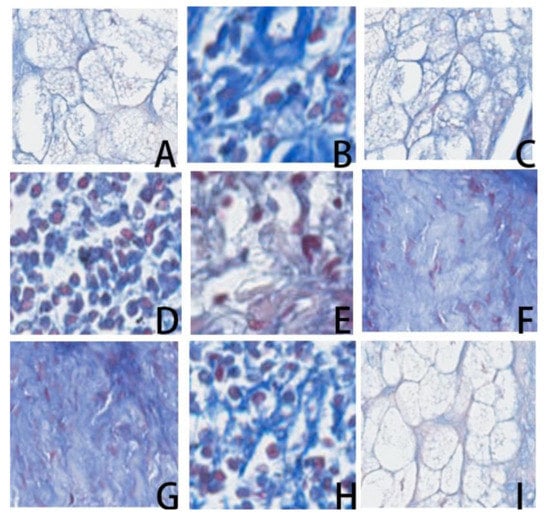

Due to enormous variations in the color and structural layouts of bacillus-negative image areas, three groups of bacillus-negative block sets were selected and paired with the same positive block set mentioned above to evaluate the proposed CAD system. Acid-fast positive staining showed reddish colors with a bluish background. In addition to mycobacterial bacilli, other tissue was stained a reddish color as well. For example, hair shaft cortices stain a splendid reddish color and can appear positive for bacilli. On the other hand, granules within mast cells stain a faint reddish color. Bacillus-negative images with reddish colors were subclassified into splendid and faint color groups according to the above criteria (Figure 2 and Figure 3; the magnification is used to illustrate the local pattern rather than the actual size). First, the splendid group covered negative images whose splendid reddish color may cause them to be misclassified as positive. Examples among the 1022 negative image blocks showing splendid reddish-stained features such as hair follicles, corneal layers of the epidermis, foreign bodies, and amorphous background stains are shown in Figure 2.

Figure 2.

Reddish and violaceous tissues collected as negative samples in the splendid group.

Figure 3.

Images A–I, ordered from left to right and top to bottom, show contamination (A, F), hair follicle and hair shaft (B, H), corneal layer of the epidermis (C, D), and amorphous background staining (E, G, and I), collected as negative samples in the faint group.

Next, negative image blocks with a faint reddish color and structures with similar appearances to positive bacilli were extracted. These image blocks represented a great clinical challenge to physicians for differentiating from bacilli. A total of 1383 negative image blocks composed of sebaceous glands, infiltration of mast cells, granules from mast cells, and background stain that showed silk-like features with a faint reddish color were collected as the faint group (Figure 3).

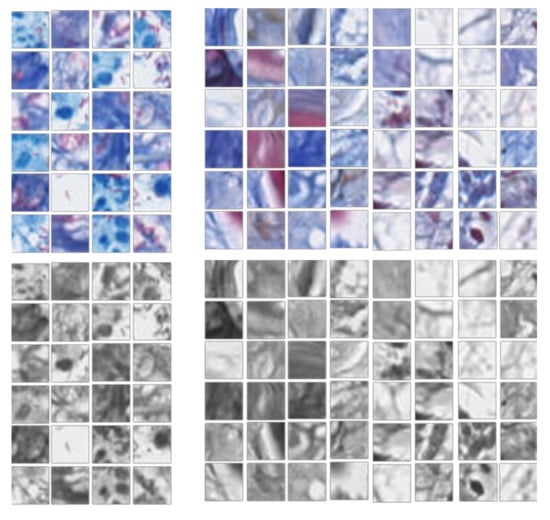

Finally, a grayscale image experiment was proposed to evaluate the overall contribution of color features to the success of the proposed convolutional neural network. In this group, the 1202 negative image blocks were first selected from the previous two groups. Then, both the original color samples and the grayed samples were prepared for model training and testing, as shown in Figure 4. For grayed samples, only morphological structure information and relative intensities compared to surrounding tissues could be used. The result of this experiment would further illustrate the contribution of color information used in stained bacillus detection and make the performance of the diagnostic method more promising for clinical use.

Figure 4.

Color and grayscale training samples. The left group is positive blocks and the right group is negative blocks.
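As an illustration of how color information can be removed from a block, the sketch below converts each RGB pixel to a single intensity and copies it into all three channels. The luminance weights (0.299, 0.587, 0.114) are the common ITU-R BT.601 values and are an assumption, as is keeping three identical channels so the input shape stays unchanged; the study does not specify its conversion. The function names are hypothetical.

```python
def to_gray(pixel):
    """Map one (R, G, B) pixel to a single intensity (assumed BT.601 weights)."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def gray_block(block):
    """Replace every RGB pixel with (v, v, v) so the block keeps three channels."""
    return [[(v, v, v) for v in map(to_gray, row)] for row in block]


# A reddish pixel and a bluish pixel with otherwise mirrored channel values.
example = gray_block([[(200, 30, 60), (60, 30, 200)]])
```

After conversion, the reddish and bluish example pixels differ only in brightness, which mirrors the point that only morphology and relative intensity remain available to the model.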

2.3. Transferred Convolutional Neural Network

Deep learning-based artificial intelligence methods powered by very large datasets and advanced computations have recently shown success in image classification and object recognition [25]. The most successful types of deep learning models for image analysis are DCNNs, which have been applied successfully in object detection, segmentation and recognition [25]. Unlike traditional pattern recognition techniques, DCNNs function by learning features from a large image database without manually defining extractors [25,26]. A DCNN contains many layers that transform their input according to local connections, shared weights, and pooling, and subsequently discover representative hierarchical features through several hidden layers [22,25]. At first, lower-level filters of the neural network learn general features such as edges and shapes from the datasets. Higher-level filters then learn more specific features, such as objects [27].

Works based on DCNNs have been successful in performing several medical image tasks, such as diagnosing fundoscopic images for ophthalmologists [28] and dermatoscopic images of skin cancer for dermatologists, and have shown performances rivaling those of human specialists [29]. In diagnosing skin diseases from clinical photographs, it has been shown that a DCNN could achieve a performance comparable with that of dermatologists [30].

In digital pathology, a DCNN has been used to increase accuracy, efficiency, and consistency for pathologists [17]. Automated histopathological systems have been reported to be valuable in predicting various diseases for supporting clinical decisions. With the introduction of digital whole-slide images, there is an opportunity to decrease pathologists’ workloads and the number of labor-intensive tasks. Some deep learning networks have achieved performances in detecting lymph node metastases that rival those of human pathologists [19].

However, for medical image classification, the number of training samples is generally limited. Collecting and annotating a large number of medical images remains a challenge. Several approaches have been proposed to build models from limited samples without overfitting: training from scratch, using off-the-shelf pretrained DCNN features, unsupervised DCNN pretraining with supervised fine-tuning, and transfer learning, which takes networks pretrained on very large nonmedical, natural image datasets and fine-tunes them on the target data [22]. Although medical and natural images show various differences, objects from low-level edges to high-level shapes can be recognized. DCNNs trained from the large, well-annotated ImageNet still achieve good performance on medical image recognition tasks [20]. Transfer learning is now commonly used in various classification tasks for medical images such as Pap smear images and radiologic images [31]. Replacing layers in pretrained DCNN architectures has already been suggested to be useful in CAD systems with limited training datasets [20]. Given the amount of available data in this study, using transfer learning for the classification of histopathological images is appropriate.

In the experiment, AlexNet was applied to the proposed transfer learning task. AlexNet is a pretrained convolutional neural network based on ImageNet, which consists of over 15 million labeled natural images with over 22,000 categories. AlexNet achieved significantly improved performance over other methods in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) [26,32,33]. The architecture of AlexNet consists of five convolution layers, three pooling layers, and three fully connected layers.

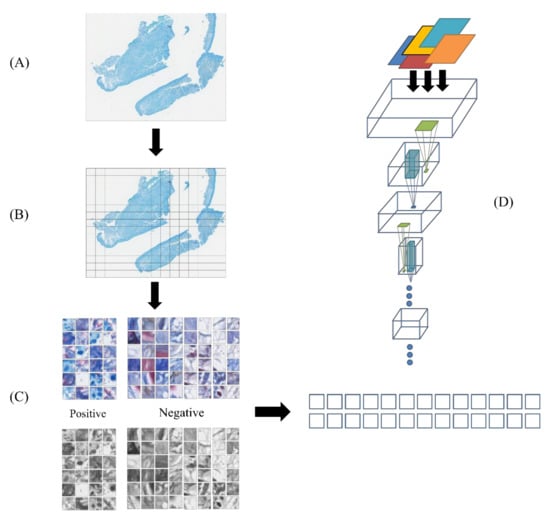

To reuse the pretrained network, the final three layers were removed from the AlexNet network and replaced with new layers for the target task. During the training, image blocks were resized from 20 × 20 to 227 × 227 pixels to make them compatible with the AlexNet architecture. The datasets were randomly partitioned into an 80% training set and a 20% testing set to diagnose whether an image block contained mycobacteria (Figure 5).
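To make the need for resizing concrete, the following sketch traces the spatial size of the feature maps through the convolution and pooling stages. The kernel, stride, and padding values are those commonly published for the original AlexNet and are an assumption here, since this study does not list them; the helper names are hypothetical.

```python
def out_size(n, kernel, stride=1, pad=0):
    """Output width of a convolution or pooling layer for an n-pixel-wide input."""
    return (n + 2 * pad - kernel) // stride + 1


# (name, kernel, stride, padding); assumed standard AlexNet hyperparameters.
ALEXNET_LAYERS = [
    ("conv1", 11, 4, 0),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool5", 3, 2, 0),
]


def trace_sizes(n=227):
    """Return the feature-map width after each layer for an n x n input."""
    sizes = {}
    for name, kernel, stride, pad in ALEXNET_LAYERS:
        n = out_size(n, kernel, stride, pad)
        sizes[name] = n
    return sizes


sizes = trace_sizes(227)
flattened = sizes["pool5"] ** 2 * 256  # 256 channels after the last convolution
scale = 227 / 20                       # upsampling factor applied to each block
```

Under these assumptions, a 227 × 227 input reaches the fully connected layers as a 6 × 6 × 256 volume, whereas a raw 20 × 20 block would shrink to a nonpositive size by the second pooling layer, which is why each block is enlarged by a factor of about 11.35 first.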

Figure 5.

The computer-aided diagnosis workflow. (A) A whole-slide pathology image. (B) Cropped samples fitting to mycobacteria bacilli (20 × 20 pixels). (C) Positive and negative sample selection. (D) Transferred deep convolutional neural network (DCNN) model.
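The random 80%/20% partition can be sketched as follows. This is a simple shuffle-and-cut split; whether the original partition was stratified by class is not stated, and the seed and the function name `split_80_20` are hypothetical.

```python
import random


def split_80_20(samples, train_fraction=0.8, seed=0):
    """Shuffle the samples and cut them into training and testing lists."""
    rng = random.Random(seed)  # fixed seed only to make the sketch repeatable
    shuffled = list(samples)
    rng.shuffle(shuffled)
    cut = round(train_fraction * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


# Placeholder identifiers for the 613 positive and 1202 negative blocks.
samples = [("pos", i) for i in range(613)] + [("neg", i) for i in range(1202)]
train, test = split_80_20(samples)
```

With 1815 blocks in total, this split yields 1452 training blocks and 363 testing blocks.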

3. Results

Table 2 shows whether the proposed DCNN could distinguish bacilli from splendid negatives and faint negatives in the first two experiments. The DCNN model generated the likelihood of each image block containing a bacillus as a probability, which was then compared with the gold standard. A probability higher than 0.5 was regarded as a bacillus. As a result, the DCNN model yielded an accuracy of 96.6%, a sensitivity of 94.3%, and a specificity of 98.0% for the splendid group and an accuracy of 95.3%, a sensitivity of 91.1%, and a specificity of 97.1% for the faint group. By mixing the two groups into the color group as shown in Table 3, the DCNN achieved an accuracy of 95.3%, a sensitivity of 93.5%, and a specificity of 96.3%.
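For readers less familiar with these measures, the sketch below shows how accuracy, sensitivity, and specificity follow from thresholding the predicted probabilities at 0.5 and comparing with the gold standard. The probabilities and labels are toy values chosen for illustration only and are not data from this study; the function names are hypothetical.

```python
def classify(probabilities, threshold=0.5):
    """A block is called positive only when its probability exceeds the threshold."""
    return [p > threshold for p in probabilities]


def evaluate(predicted, actual):
    """Return accuracy, sensitivity, and specificity from boolean predictions."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)
    tn = sum(1 for p, a in pairs if not p and not a)
    fp = sum(1 for p, a in pairs if p and not a)
    fn = sum(1 for p, a in pairs if not p and a)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),  # fraction of true bacilli detected
        "specificity": tn / (tn + fp),  # fraction of negatives correctly rejected
    }


# Toy example: four bacillus-positive blocks followed by four negative blocks.
toy_probabilities = [0.9, 0.8, 0.4, 0.7, 0.2, 0.1, 0.6, 0.3]
toy_labels = [True, True, True, True, False, False, False, False]
toy_metrics = evaluate(classify(toy_probabilities), toy_labels)
```

In the toy example, one positive block is missed and one negative block is falsely flagged, so all three measures equal 0.75.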

Table 2.

Performance from incorporating 613 positive blocks and two negative groups, showing the differentiating ability of the proposed deep convolutional neural network (DCNN).

Table 3.

Performance from incorporating 613 positive blocks and 1202 negative blocks with and without color information using the proposed DCNN.

In the last experiment, grayscale images were used to emphasize the importance of color information. The stain initially used for the slides is composed of two main colors, red and blue, which are the two primary colors for distinction. After the color information was removed, the DCNN achieved an accuracy of 73.8%, with a sensitivity of 70.7% and a specificity of 75.4%. The result implies that morphological features can be used to obtain at least 70% accuracy without color features.

4. Discussion

The conventional CAD system is a well-established diagnostic tool in the medical image field. The procedures it is used for usually include segmentation, feature extraction, and classification [20]. CAD systems based on numerous image processing algorithms have already been developed for digital pathology slides, especially for laborious tasks such as detection and quantification in pattern recognition. The primary goal of CAD systems is to increase sensitivity while reducing false negative rates due to fatigue of the observers [16,21]. Mycobacteria infection histopathology remains a diagnostic challenge because it costs physicians time and effort to look for bacilli under the microscope. Numerous CAD systems for automatic bacillus detection have been developed since the 1990s [34]. Previous CAD systems identified mycobacteria bacilli following image segmentation and feature extraction defined by the physician. Published methods use segmentation based on color thresholding followed by the classification of sizes and shapes. Stained positive bacilli are reddish, while the counterstained background is blue, and the shape of the bacillus is expected to be rod-like [21]. Although the rules of the morphological features of bacilli could be described by trained pathologists, developing algorithms for identifying bacilli is a difficult task, as the bacillus shape and orientation vary under the microscope. Therefore, using handcrafted features for classification is intuitive, but developing algorithms is challenging.

For the past few years, research on mycobacterial bacillus detection has focused on sputum smears and cytology specimens. Computerized workflows have been developed to identify the bacilli from sputum smear images [34,35,36,37,38,39,40], but few studies have focused on histopathological sections. Biopsy tissue samples of mycobacteria-infected organs remain critical for diagnosis, especially for extrapulmonary mycobacterial infectious diseases [5]. Furthermore, sputum smears and tissue sections differ in several ways. First, a rod-like bacillus is expected to have a fluid-like shape under the smear pressure. In tissue sections, bacilli are suspended in paraffin wax in different orientations. This results in variations of bacillus shapes from rod-like to point-like at different levels of the tissue sections.

Previous studies used tissue images captured from cameras on light microscopes with limited resolutions compared to whole-slide scanning [41,42]. In the experiments, the proposed DCNN yielded both good sensitivity and specificity regardless of the kind of negative image blocks compared with true bacillus-positive image blocks. This result indicates that even with staining issues taken into account, the classifier showed a high accuracy of 95.3%. The pretrained CNN model, which learned image features from natural images, performed well in classifying pathological images of acid-fast stained mycobacteria bacilli. Particles stained reddish to violaceous may mimic positive acid-fast stained bacilli. For instance, contamination particles, background stains, hair shafts and the corneal layer of the epidermis stain reddish and sometimes show fragment-like morphologies. Sebaceous gland tissues with foam-like structures that mimic bacillus morphology under high resolution could also confuse observers.

After the image blocks were transformed into grayscale, the performance dropped to 73.8% accuracy. The possible reason for this could be that color information is essential for bacillus detection. Nevertheless, the remaining morphological properties and intensities still provided approximately 73.8% accuracy. This result implies that morphological features and sharpness are also crucial factors for detection. Similar to past studies, shape analysis of mycobacterial infectious images was implemented in the CAD system. From the results, we attempted to reveal the effectiveness of color information in bacillus detection, which could help pathologists gradually realize how the model works and accept its use in clinical situations.

In the past, the main challenge for WSI studies was extremely large image sizes. For example, one of the collected WSIs is as large as 51,757 × 12,624 pixels. In this study, the whole slides were separated into blocks of 20 × 20 pixels for training the detection model. A smaller block cannot show the complete morphological properties, while a larger block cannot focus on the target because it includes too much background tissue. In clinical use, the blocks that compose the whole slide can be evaluated by the trained model, and each can be given a probability of containing a bacillus. Blocks with higher probabilities are then presented before those with lower probabilities for pathologists to observe. In this way, the pathologist has the potential to find a bacillus faster. Once a high-probability block is confirmed by a pathologist to contain a true bacillus, no further examination is required. For example, if the original order of block probabilities is 0.1, 0.3, 0.2, 0.4, 0.6, 0.2, 0.9, the modified order is 0.9, 0.6, 0.4, 0.3, 0.2, 0.2, 0.1, so a bacillus (probability = 0.9) can be detected at the first block rather than at the original seventh block.
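The reordering in this example can be sketched in a few lines. The function name `rank_blocks` is hypothetical, and each block is represented only by its position in the original sequence.

```python
def rank_blocks(probabilities):
    """Return (original_index, probability) pairs, highest probability first."""
    indexed = list(enumerate(probabilities))
    # sorted() is stable, so blocks with equal probability keep their original order.
    return sorted(indexed, key=lambda pair: pair[1], reverse=True)


original_order = [0.1, 0.3, 0.2, 0.4, 0.6, 0.2, 0.9]
ranked = rank_blocks(original_order)
review_order = [probability for _, probability in ranked]
first_block_index = ranked[0][0]
```

Here the block reviewed first is the one at zero-based index 6, that is, the seventh block of the original sequence.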

The use of whole-slide pathology images can be promising in future applications. However, few related studies could be found that addressed a limited sample size and performed deep learning with limited computational resources. Consequently, this study proposed to use transfer learning to solve the problem of limited samples and to use AlexNet to show that simpler layers of convolutional neural networks could still perform well with less computational power and time. We believe that the results shown in the manuscript have demonstrated this possibility and can provide suggestions to many future studies focusing on using limited medical images for deep learning. Additionally, it will be meaningful for clinical use. Other DCNN architectures for image classification, such as Inception-v3 [43], ResNet-101 [44], and DenseNet-201 [45], were also explored. The accuracies of bacillus detection for these architectures were 93%, 94%, and 95%, respectively, comparing well to AlexNet (95%). All of them achieved promising accuracy in bacillus detection with very subtle differences among the performances. When considering the use of these architectures in real clinical situations, it should be noted that the complicated ResNet-101 and DenseNet-201, each containing substantial numbers of layers, have high computational costs and may therefore be impractical. In clinical use, a dedicated circuit could help shorten the detection time [46].

The first limitation to future development is the lack of a universal, standardized scanning system for digital pathology. A full histopathology digitalization system was recently approved by the US Food and Drug Administration for primary diagnosis [11]. Consensus on WSI scanning preparation would provide guidelines for future studies. Second, scanning machines and histopathological staining quality may differ among hospitals. When collecting additional cases for future studies, more pathological images from multiple centers with different staining qualities are necessary for building a comprehensive prediction model. Additionally, if enough cases are acquired, the whole image can be used for testing to simulate the real clinical use of the system and evaluate whether the proposed system can improve reader performance. Then, the proposed CAD could be generalized to other diagnosis tasks and pathogens, such as deep fungal cutaneous diseases and parasitic infectious disease. With advances in radiomics, future work may include the integration of radiological and histopathological images.

5. Conclusions

A CAD system based on a proposed DCNN architecture was used in the detection of mycobacteria bacilli in whole-slide pathology images with an accuracy of more than 95%. After classification, the CAD system can specifically indicate and localize the potential bacilli, which would decrease the examination time and reduce oversights by the pathologist. The aim of this preliminary study was to first use available teaching materials to determine whether the transferred DCNN could distinguish bacilli from other tissues in cases similar to those used to train junior physicians. With more cases collected from various sources and situations, the proposed method can be evaluated further. Other acid-fast stained pathogens (e.g., Nocardia species) can be included in the next CAD system.

Author Contributions

Conceptualization, C.-M.L., C.-C.L.; Data curation, Y.-H.W., C.-C.L.; Methodology, C.-M.L., Y.-C.L.; Validation, Y.-C.L.; Writing—original draft, C.-M.L.; Writing—review and editing, C.-C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science and Technology, Taiwan, grant number MOST 109-2622-E-004-001-CC3 and 108-2221-E-004-010-MY3. The APC was funded by MOST 108-2221-E-004-010-MY3.

Acknowledgments

The authors would like to thank the Ministry of Science and Technology in Taiwan (MOST 109-2622-E-004-001-CC3 and 108-2221-E-004-010-MY3) for financially supporting this research.

Conflicts of Interest

The authors declare no conflict of interest.

References

- World Health Organization. Global Tuberculosis Report; World Health Organization: Geneva, Switzerland, 2017. [Google Scholar]

- Henkle, E.; Winthrop, K.L. Nontuberculous mycobacteria infections in immunosuppressed hosts. Clin. Chest Med. 2015, 36, 91–99. [Google Scholar] [CrossRef]

- Griffith, D.E.; Aksamit, T.; Brown-Elliott, B.A.; Catanzaro, A.; Daley, C.; Gordin, F.; Holland, S.M.; Horsburgh, R.; Huitt, G.; Iademarco, M.F.; et al. An Official ATS/IDSA Statement: Diagnosis, Treatment, and Prevention of Nontuberculous Mycobacterial Diseases. Am. J. Respir. Crit. Care Med. 2007, 175, 367–416. [Google Scholar] [CrossRef] [PubMed]

- Reller, L.B.; Weinstein, M.P.; Hale, Y.M.; Pfyffer, G.E.; Salfinger, M. Laboratory Diagnosis of Mycobacterial Infections: New Tools and Lessons Learned. Clin. Infect. Dis. 2001, 33, 834–846. [Google Scholar] [CrossRef][Green Version]

- Sharma, S.; Mohan, A. Extrapulmonary tuberculosis. Indian J. Med. Res. 2004, 120, 316–353. [Google Scholar]

- Hernandez Solis, A.; Herrera Gonzalez, N.E.; Cazarez, F.; Mercadillo Perez, P.; Olivera Diaz, H.O.; Escobar-Gutierrez, A.; Cortes Ortiz, I.; Gonzalez Gonzalez, H.; Reding-Bernal, A.; Sabido, R.C. Skin biopsy: A pillar in the identification of cutaneous Mycobacterium tuberculosis infection. J. Infect. Dev. Ctries 2012, 6, 626–631. [Google Scholar] [CrossRef]

- Laga, A.C.; Milner, D.A., Jr.; Granter, S.R. Utility of acid-fast staining for detection of mycobacteria in cutaneous granulomatous tissue reactions. Am. J. Clin. Pathol. 2014, 141, 584–586. [Google Scholar] [CrossRef]

- Renshaw, A.A.; Gould, E.W. Thrombocytosis is associated with Mycobacterium tuberculosis infection and positive acid-fast stains in granulomas. Am. J. Clin. Pathol. 2013, 139, 584–586. [Google Scholar] [CrossRef]

- Lewinsohn, D.M.; Leonard, M.K.; LoBue, P.A.; Cohn, D.L.; Daley, C.L.; Desmond, E.; Keane, J.; Lewinsohn, D.A.; Loeffler, A.M.; Mazurek, G.H.; et al. Official American Thoracic Society/Infectious Diseases Society of America/Centers for Disease Control and Prevention Clinical Practice Guidelines: Diagnosis of Tuberculosis in Adults and Children. Clin. Infect. Dis. 2017, 64, e1–e33. [Google Scholar] [CrossRef]

- Griffin, J.; Treanor, D. Digital pathology in clinical use: Where are we now and what is holding us back? Histopathology 2017, 70, 134–145. [Google Scholar] [CrossRef]

- Silver Spring, M. FDA Allows Marketing of First Whole Slide Imaging System for Digital Pathology [News Release]. Available online: https://www.fda.gov/NewsEvents/Newsroom/PressAnnouncements/ucm552742.htm (accessed on 27 December 2018).

- Ho, J.; Ahlers, S.M.; Stratman, C.; Aridor, O.; Pantanowitz, L.; Fine, J.L.; Kuzmishin, J.A.; Montalto, M.C.; Parwani, A.V. Can digital pathology result in cost savings? A financial projection for digital pathology implementation at a large integrated health care organization. J. Pathol. Inform. 2014, 5, 33. [Google Scholar] [CrossRef]

- Higgins, C. Applications and challenges of digital pathology and whole slide imaging. Biotech. Histochem. 2015, 90, 341–347. [Google Scholar] [CrossRef] [PubMed]

- Snead, D.R.; Tsang, Y.W.; Meskiri, A.; Kimani, P.K.; Crossman, R.; Rajpoot, N.M.; Blessing, E.; Chen, K.; Gopalakrishnan, K.; Matthews, P.; et al. Validation of digital pathology imaging for primary histopathological diagnosis. Histopathology 2016, 68, 1063–1072. [Google Scholar] [CrossRef] [PubMed]

- Kent, M.N.; Olsen, T.G.; Feeser, T.A.; Tesno, K.C.; Moad, J.C.; Conroy, M.P.; Kendrick, M.J.; Stephenson, S.R.; Murchland, M.R.; Khan, A.U.; et al. Diagnostic Accuracy of Virtual Pathology vs Traditional Microscopy in a Large Dermatopathology Study. JAMA Dermatol. 2017, 153, 1285. [Google Scholar] [CrossRef]

- Mukhopadhyay, S.; Feldman, M.D.; Abels, E.; Ashfaq, R.; Beltaifa, S.; Cacciabeve, N.G.; Cathro, H.P.; Cheng, L.; Cooper, K.; Dickey, G.E.; et al. Whole Slide Imaging Versus Microscopy for Primary Diagnosis in Surgical Pathology: A Multicenter Blinded Randomized Noninferiority Study of 1992 Cases (Pivotal Study). Am. J. Surg. Pathol. 2017. [Google Scholar] [CrossRef]

- Litjens, G.; Sanchez, C.I.; Timofeeva, N.; Hermsen, M.; Nagtegaal, I.; Kovacs, I.; Hulsbergen-van de Kaa, C.; Bult, P.; van Ginneken, B.; van der Laak, J. Deep learning as a tool for increased accuracy and efficiency of histopathological diagnosis. Sci. Rep. 2016, 6, 26286.

- Yu, K.H.; Zhang, C.; Berry, G.J.; Altman, R.B.; Ré, C.; Rubin, D.L.; Snyder, M. Predicting non-small cell lung cancer prognosis by fully automated microscopic pathology image features. Nat. Commun. 2016, 7, 12474.

- Ehteshami Bejnordi, B.; Veta, M.; Johannes van Diest, P.; van Ginneken, B. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA 2017, 318, 2199–2210.

- Shin, H.-C.; Roth, H.R.; Gao, M.; Lu, L.; Xu, Z.; Nogues, I.; Yao, J.; Mollura, D.; Summers, R.M. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans. Med. Imaging 2016, 35, 1285.

- Madabhushi, A.; Lee, G. Image analysis and machine learning in digital pathology: Challenges and opportunities. Med. Image Anal. 2016, 33, 170–175.

- Shen, D.; Wu, G.; Suk, H.-I. Deep Learning in Medical Image Analysis. Annu. Rev. Biomed. Eng. 2017, 19, 221–248.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Xiong, Y.; Ba, X.; Hou, A.; Zhang, K.; Chen, L.; Li, T. Automatic detection of mycobacterium tuberculosis using artificial intelligence. J. Thorac. Dis. 2018, 10, 1936.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2009, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 3320–3328.

- Brown, J.M.; Campbell, J.P.; Beers, A.; Chang, K.; Ostmo, S.; Chan, R.P.; Dy, J.; Erdogmus, D.; Ioannidis, S.; Kalpathy-Cramer, J. Automated Diagnosis of Plus Disease in Retinopathy of Prematurity Using Deep Convolutional Neural Networks. JAMA Ophthalmol. 2018, 136, 803–810.

- Haenssle, H.; Fink, C.; Schneiderbauer, R.; Toberer, F.; Buhl, T.; Blum, A.; Kalloo, A.; Hassen, A.; Thomas, L.; Enk, A. Man against machine: Diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists. Ann. Oncol. 2018, 29, 1836–1842.

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

- Nguyen, L.D.; Lin, D.; Lin, Z.; Cao, J. Deep CNNs for microscopic image classification by exploiting transfer learning and feature concatenation. In Proceedings of the 2018 IEEE International Symposium on Circuits and Systems (ISCAS), Florence, Italy, 27–30 May 2018; pp. 1–5.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Veropoulos, K.; Learmonth, G.; Campbell, C.; Knight, B.; Simpson, J. Automated identification of tubercle bacilli in sputum: A preliminary investigation. Anal. Quant. Cytol. Histol. 1999, 21, 277–282.

- Forero, M.G.; Sroubek, F.; Cristóbal, G. Identification of tuberculosis bacteria based on shape and color. Real Time Imaging 2004, 10, 251–262.

- Khutlang, R.; Krishnan, S.; Dendere, R.; Whitelaw, A.; Veropoulos, K.; Learmonth, G.; Douglas, T.S. Classification of Mycobacterium tuberculosis in images of ZN-stained sputum smears. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 949–957.

- Sadaphal, P.; Rao, J.; Comstock, G.W.; Beg, M.F. Image processing techniques for identifying Mycobacterium tuberculosis in Ziehl-Neelsen stains. Int. J. Tuberc. Lung Dis. 2008, 12, 579–582.

- Law, Y.N.; Jian, H.; Lo, N.W.S.; Ip, M.; Chan, M.M.Y.; Kam, K.M.; Wu, X. Low cost automated whole smear microscopy screening system for detection of acid fast bacilli. PLoS ONE 2018, 13, e0190988.

- Chang, J.; Arbeláez, P.; Switz, N.; Reber, C.; Tapley, A.; Davis, J.L.; Cattamanchi, A.; Fletcher, D.; Malik, J. Automated Tuberculosis Diagnosis Using Fluorescence Images from a Mobile Microscope. In Lecture Notes in Computer Science, Proceedings of the MICCAI International Conference on Medical Image Computing and Computer-Assisted Intervention, Nice, France, 1–5 October 2012; Springer: Berlin/Heidelberg, Germany, 2012; Volume 15, pp. 345–352.

- Forero, M.G.; Cristobal, G.; Desco, M. Automatic identification of Mycobacterium tuberculosis by Gaussian mixture models. J. Microsc. 2006, 223, 120–132.

- Tadrous, P.J. Computer-assisted screening of Ziehl-Neelsen-stained tissue for mycobacteria. Algorithm design and preliminary studies on 2000 images. Am. J. Clin. Pathol. 2010, 133, 849–858.

- Priya, E.; Srinivasan, S. Automated object and image level classification of TB images using support vector neural network classifier. Biocybern. Biomed. Eng. 2016, 36, 670–678.

- Liu, Y.; Gadepalli, K.; Norouzi, M.; Dahl, G.E.; Kohlberger, T.; Boyko, A.; Venugopalan, S.; Timofeev, A.; Nelson, P.Q.; Corrado, G.S. Detecting cancer metastases on gigapixel pathology images. arXiv 2017, arXiv:1703.02442.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; p. 4.

- Islam, J.; Zhang, Y.; Alzheimer’s Disease Neuroimaging Initiative. Deep Convolutional Neural Networks for Automated Diagnosis of Alzheimer’s Disease and Mild Cognitive Impairment Using 3D Brain MRI. In Proceedings of the International Conference on Brain Informatics, Arlington, TX, USA, 7–9 December 2018; pp. 359–369.

- Arena, P.; Bucolo, M.; Fortuna, L.; Occhipinti, L. Cellular neural networks for real-time DNA microarray analysis. IEEE Eng. Med. Biol. Mag. 2002, 21, 17–25.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).