1. Introduction

With the aim of making every facet of citizens’ lives easier, various technologies and solutions have been studied for intelligent transportation system (ITS) applications in recent years. The aim of an ITS is to provide users with traffic information that enables them to make safer and smarter use of transportation networks. Among many ITS technologies, vision-based vehicle detection has played a vital role in many applications, such as traffic control, traffic surveillance, and autonomous driving. Compared to other sensors such as lidar and radar [1, 2, 3], camera-based sensors are preferable owing to benefits such as easy installation, low-cost maintenance, and high flexibility. Furthermore, the emergence of parallel computing, including multicore processing and graphics processing units, has made real-time implementation of vision-based vehicle detection methods feasible.

To detect on-road vehicles, most vehicle detection methods use vehicle appearances as the main features [4]. In the absence of details regarding vehicle appearance, detecting vehicles at nighttime is more challenging than in the daytime. In the daytime, vehicle detection is based on the color, shape, shadow, corners, and edges of vehicles [5]. On the other hand, at night, the abovementioned features are not visible because of the low contrast and luminosity of nighttime images. Under dark conditions, the most salient features are light sources, and vehicle headlights or taillights become important characteristics that can be used for identifying vehicles. Depending on the purpose of the specific system, a vehicle detection algorithm may be constructed based on headlights or taillights only or both headlights and taillights. For instance, traffic surveillance systems only detect oncoming vehicles (headlights), whereas advanced driver-assistance systems monitor preceding vehicles (taillights) or both preceding and oncoming vehicles (taillights and headlights). For nighttime vehicle detection, most existing studies execute two main steps: vehicle light identification followed by vehicle light pairing.

Various methods have been proposed to identify headlights in a captured image. Most of the methods first segment the image to find bright blobs that may be headlights. By using a fixed or adaptive threshold, a set of pixels of bright spots whose gray intensity values are higher than the threshold is retrieved. Then, to classify whether a bright blob is a headlight, rule-based or machine-learning-based methods are applied, the former of which are the most commonly used. In studies on rule-based methods [6, 7], rules are constructed based on prior knowledge and statistical laws of contrast, position, size, and shape to classify headlights and other objects. The difficulty concerning rule-based methods is that the rules should be defined carefully and cover all scenarios to obtain highly accurate results. Alternatively, machine-learning-based methods have been researched recently because of their good discrimination and superior adaptability. In [8, 9], a support vector machine (SVM) is applied to classify headlights and nuisance lights (reflections). Additionally, the Adaboost classifier and Haar features are combined to discriminate headlights from non-headlights in [10, 11].

Unlike headlights, taillights are predominantly red, so they can be readily isolated by thresholding in a suitable color space. In [12], a set of thresholds for filtering red color in taillights is directly derived from automotive regulations and adapted for real-world conditions in the hue-saturation-value (HSV) color space. A Y’UV color space [13] is used to extract potential taillight candidates in an image. In [14], the mean red intensity of a vehicle taillight is used to verify whether an extracted bright blob is a vehicle.

After identifying vehicle lights, the light pairing process is conducted, since a vehicle is indicated by a pair of vehicle lights. This process can be performed by finding the similarity between two vehicle lights. The similarity of two lights is evaluated with respect to certain characteristics such as symmetry condition, size, shape, and cross-correlation [6, 7, 12, 13]. A pairing method using optimization is proposed in [10, 11], which applies motion information from the tracking and spatial context to find the correct pairs. However, this method carries a high computation cost for optimizing over the entire set of candidate light pairs. Light pairing faces certain difficulties: one vehicle light may be shared by more than one light pair if two vehicles are at the same distance from the host vehicle. Moreover, the pairing process may fail when various complex situations occur on the road, such as partial vehicle occlusion, blooming effects, or turning vehicles.

To resolve the abovementioned problems, in this paper, we propose a vehicle detection and tracking system that perceives both preceding and oncoming vehicles on the road. The contributions of this work are three-fold: first, a machine-learning-based approach for detecting both headlights and taillights at night is proposed; second, a combination of motion information, correlation coefficient, and size of vehicle light is defined to pair the vehicle lights; finally, partial vehicle occlusion is solved by retrieving and analyzing tracking information from subsequent frames.

The remainder of this paper is organized as follows. Section 2 describes the entire process of the system. It contains two main blocks: vehicle detection, which detects preceding and oncoming vehicles, and vehicle tracking with occlusion handling. Then, the experimental setup and evaluation results based on a real dataset are presented in Section 3. Finally, the conclusions are drawn in Section 4.

2. Proposed Vehicle Detection and Tracking System

In contrast to a surveillance system, which is stationary, the proposed system uses a camera mounted inside a moving vehicle. Therefore, the proposed system must cope with more complex scenarios than those faced by a traffic surveillance system. Since there are no recent studies that detect and track both oncoming and preceding vehicles at nighttime, the proposed system is mainly derived from the studies of Chen, Y. et al. [6, 7].

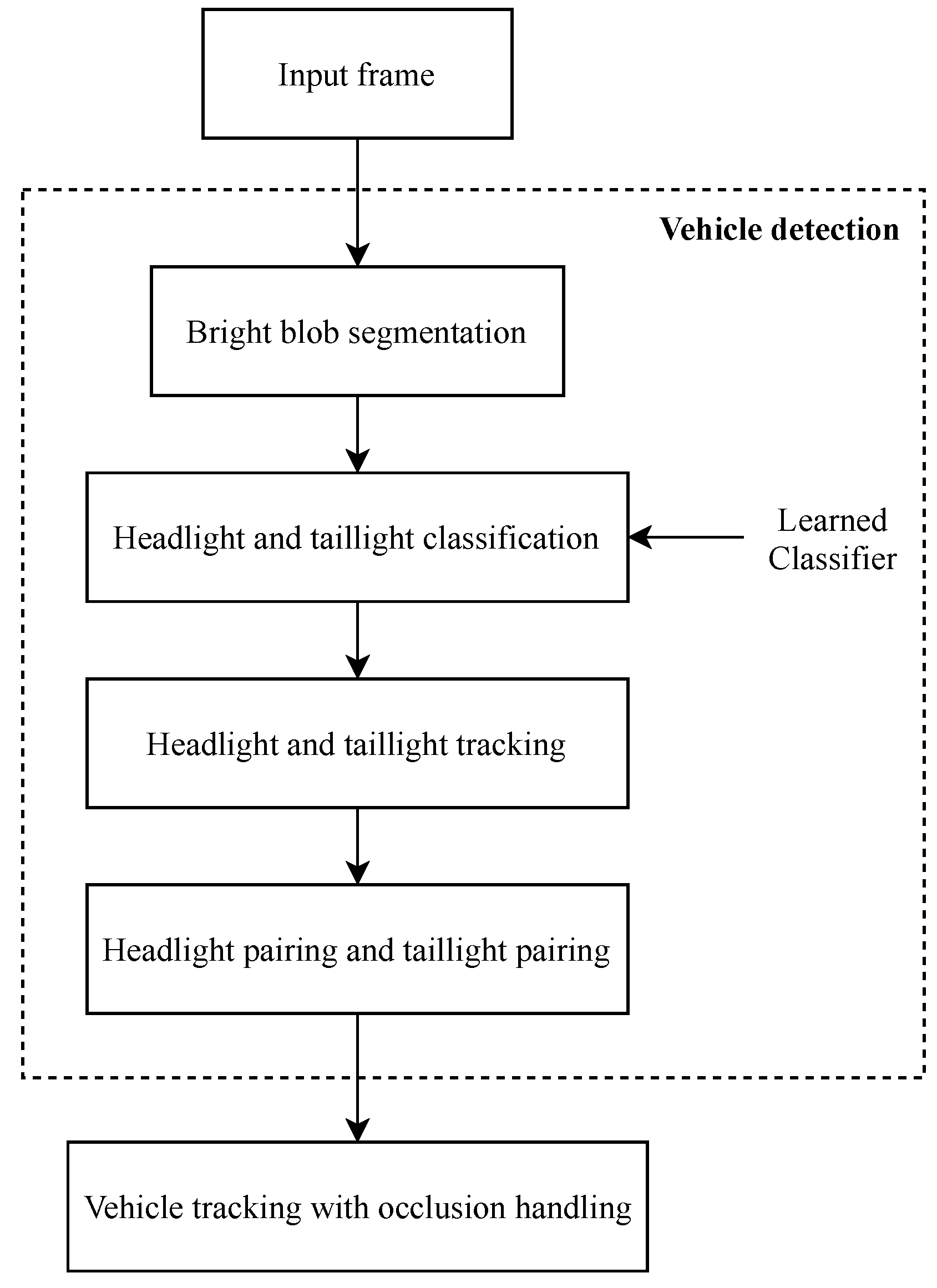

In the proposed system, five main modules are implemented sequentially: bright blob segmentation, headlight and taillight classification, headlight and taillight tracking, headlight pairing and taillight pairing, and vehicle tracking with occlusion handling. However, before executing the online process that detects and tracks vehicles in the input image, a pre-trained offline classifier is generated to prepare for the headlight and taillight classification module. All the modules of the system are addressed in separate subsections in this paper. The overview of the proposed system is introduced in Figure 1.

2.1. Pre-Trained Offline Classifier

Based on the study of Zou [

10], which outperforms other methods, in this section, a machine- learning-based method is proposed to classify both headlights and taillights in nighttime images. In the previous study, a learning headlight detector is created to discriminate headlights from non-headlights by fusing the Adaboost classifier and Haar features. However, we cannot use the previous work directly in our context, because the Adaboost classifier is a binary classifier and it has limitations in classifying multiclass data. Thus, we present a new method that combines the multiclass Adaboost classifier [

15], Haar features, and a color feature extracted from the L*a*b* color space to discriminate head and tail lights. The proposed learning method is described as follows, according to the flowchart illustrated in

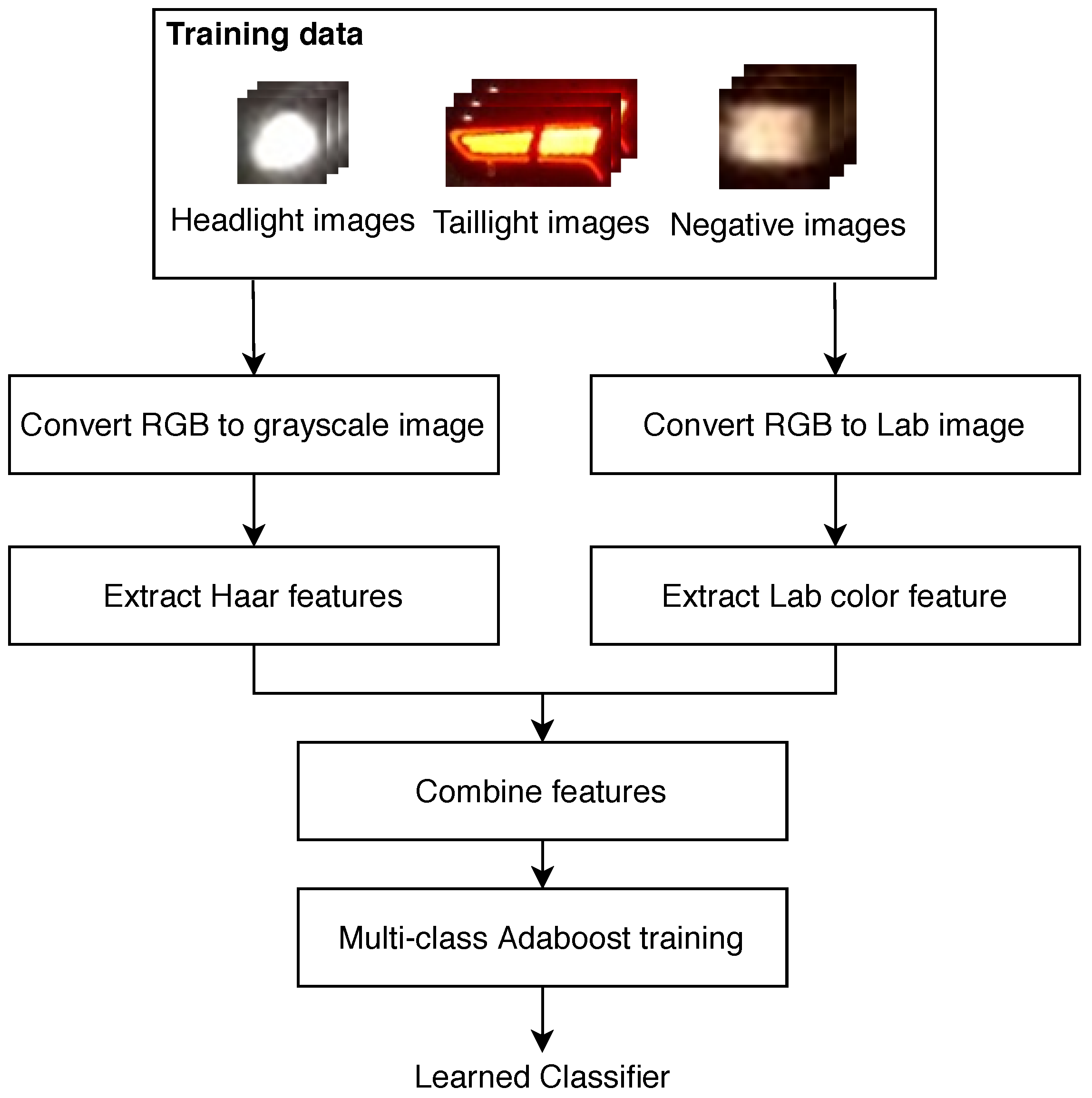

Figure 2. First, a training dataset consisting of the headlight, taillight, and negative images is annotated from the captured images. Negative samples that do not contain any headlights or taillights are randomly taken. These include reflections on vehicle bodies, road surfaces, and road signs. Before extracting features, all the training images must be resized to the same dimensions, which are selected from the experiment. Next, each training image is converted from RGB to grayscale and L*a*b* color space.

For the grayscale image, we use two-rectangle and three-rectangle features to extract Haar features. The process for extracting these features is not mentioned in this paper, as they are described in particular detail in [

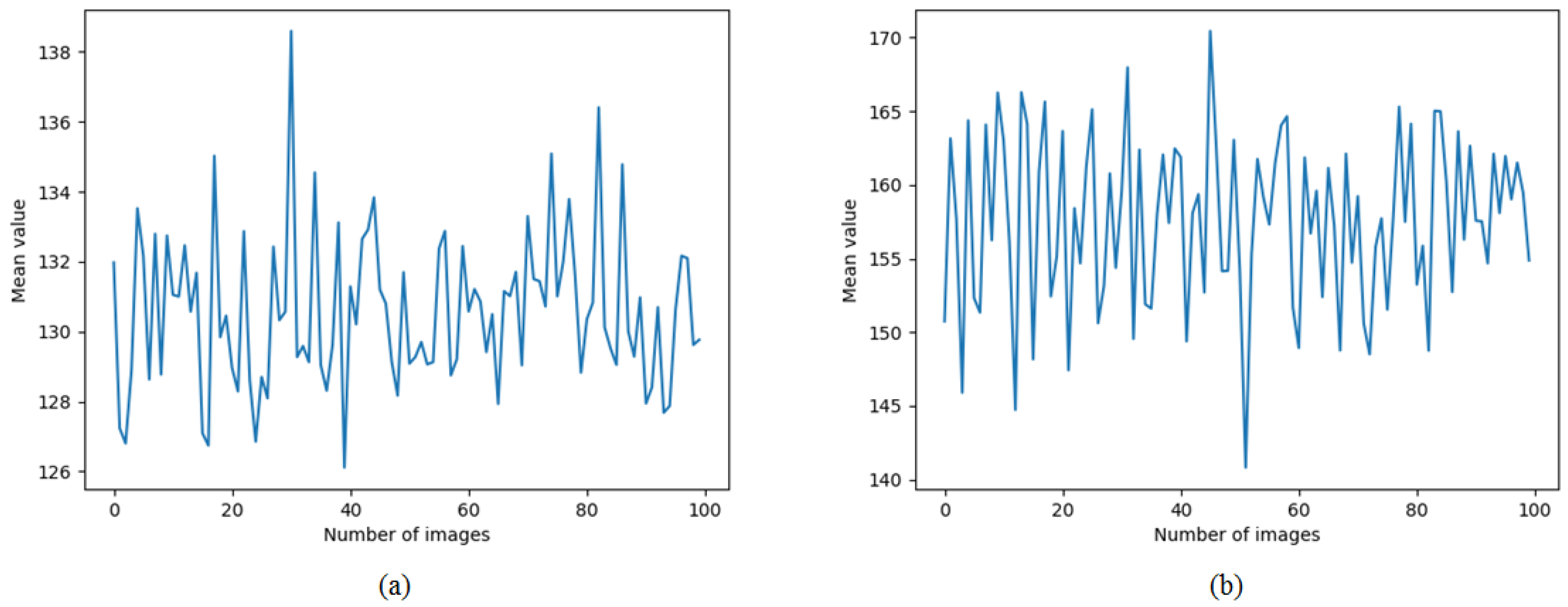

16]. For the L*a*b* image, only the a* channel representing the green–red color is considered. This channel is chosen statistically in the experiment and the mean value of the a* channel is calculated as the Lab color feature. The statistics of the mean values of the a* channel from 100 headlight images and 100 taillight images are depicted in

Figure 3. It is clearly observable that the mean values of the a* channel of headlight images are lower than 140, whereas thoses of the a* channel of taillight images are higher than 140. Moreover, when the mean values of the a* channel from 300 headlight images and 300 taillight images are calculated, they are still at the same distribution as in the

Figure 3. Therefore, the Lab color feature might be a promising feature to discriminate headlights and taillights. Finally, the combination of Haar features and the Lab color feature is input into a multiclass Adaboost classifier to train the learned classifier.
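As a minimal sketch of the Lab color feature, the mean a* value of a light patch can be computed as below. The conversion assumes sRGB input with a D65 white point and the common 8-bit convention of shifting a* by +128 (so that a neutral pixel maps to 128); the paper does not state which convention it uses, and the function names are hypothetical.

```python
def srgb_to_lab_a(r, g, b):
    """Return the a* value of one 8-bit sRGB pixel, shifted by +128 (assumed scaling)."""
    def linearize(c):
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    def f(t):
        return t ** (1.0 / 3.0) if t > 0.008856 else 7.787 * t + 16.0 / 116.0

    rl, gl, bl = linearize(r), linearize(g), linearize(b)
    # Linear sRGB to CIE XYZ (D65 white point: Xn = 0.95047, Yn = 1.0).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    return 500.0 * (f(x / 0.95047) - f(y / 1.0)) + 128.0


def mean_a_feature(pixels):
    """Mean shifted a* over an iterable of (r, g, b) pixels from one light patch."""
    values = [srgb_to_lab_a(r, g, b) for (r, g, b) in pixels]
    return sum(values) / len(values)
```

Under these assumptions a whitish patch stays close to 128 and a reddish patch lies well above 140, which is in line with the separation reported for Figure 3.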

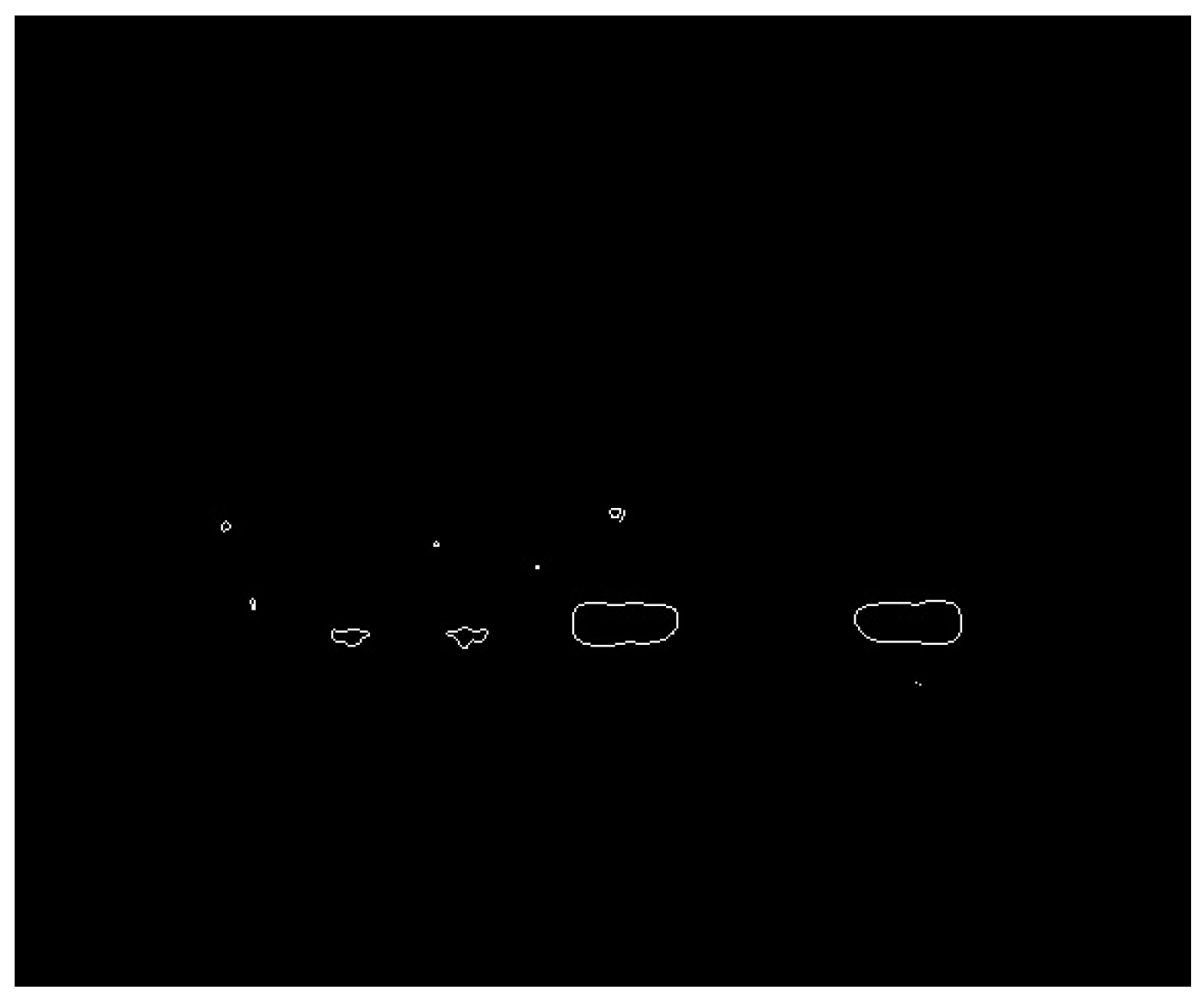

2.2. Bright Blob Segmentation

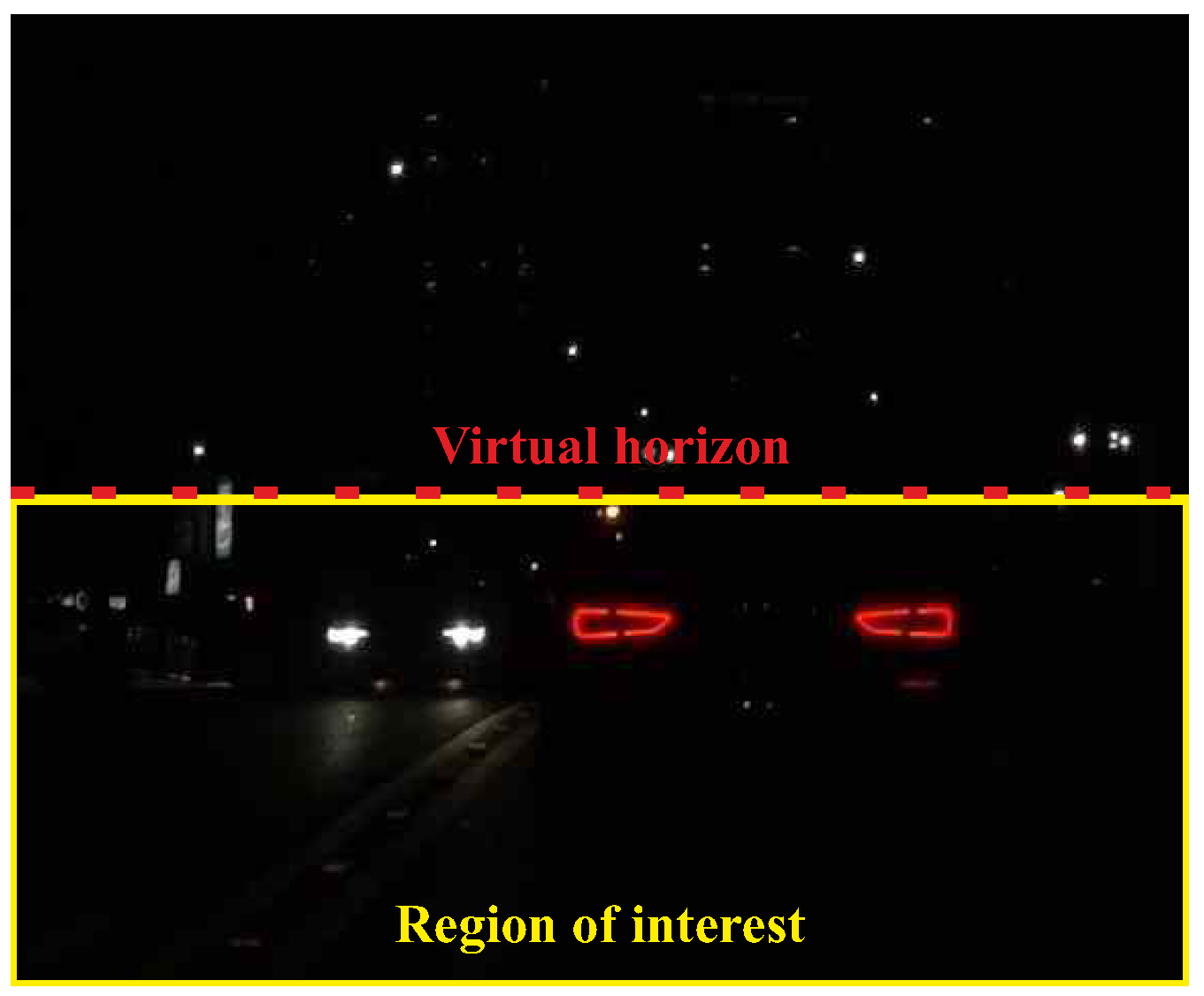

Given an RGB image retrieved from a vehicle-mounted camera, the vision-based system first extracts bright blobs that are potential vehicle lights. Because the main focus of this system is to detect and track vehicles based on vehicle lights, nonvehicle lights such as street lamps and traffic lights should be removed from the road scene image. To filter out nuisance lights and reduce the computational cost, a region of interest (ROI) is determined, and the bright blob segmentation is performed only inside it. Based on observation and the study [7], all objects located above the virtual horizon are screened out. Thus, the ROI is the region below the virtual horizon (see Figure 4). After locating the ROI, automatic multilevel thresholding [17] and HSV color thresholding [12] are applied to preliminarily extract potential bright blobs that might be headlights or taillights. As a result, a binary image containing the extracted bright blobs is created (see Figure 5). Then, the contours of the extracted blobs are retrieved by using a border following algorithm [18] (see Figure 6).
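The segmentation step can be sketched in simplified form as follows. This is not the pipeline described above: a single fixed gray-level threshold stands in for the multilevel and HSV thresholding, and a flood fill stands in for the border following algorithm. The function name and the `horizon_row` and `threshold` parameters are hypothetical.

```python
from collections import deque


def bright_blob_boxes(gray, horizon_row, threshold):
    """Return (left, top, right, bottom) boxes of bright blobs below the horizon.

    gray is a list of rows of gray intensities; rows above horizon_row are ignored.
    """
    height, width = len(gray), len(gray[0])
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y0 in range(horizon_row, height):
        for x0 in range(width):
            if seen[y0][x0] or gray[y0][x0] <= threshold:
                continue
            # Flood-fill one 4-connected bright region and track its extent.
            left = right = x0
            top = bottom = y0
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            while queue:
                x, y = queue.popleft()
                left, right = min(left, x), max(right, x)
                top, bottom = min(top, y), max(bottom, y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < width and horizon_row <= ny < height
                            and not seen[ny][nx] and gray[ny][nx] > threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append((left, top, right, bottom))
    return boxes
```

Restricting the scan to rows at or below `horizon_row` is what discards street lamps and traffic lights above the virtual horizon before any classification takes place.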

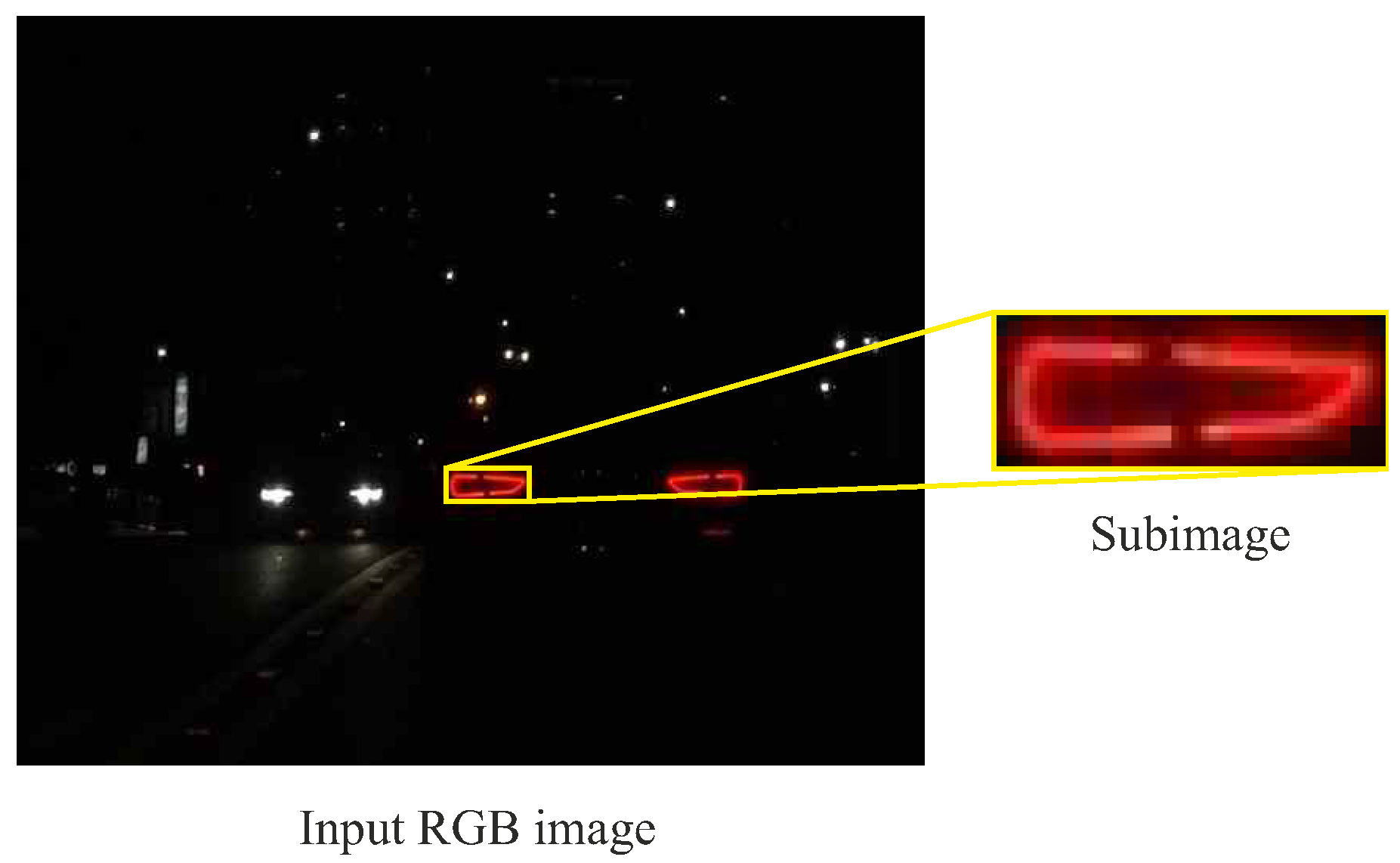

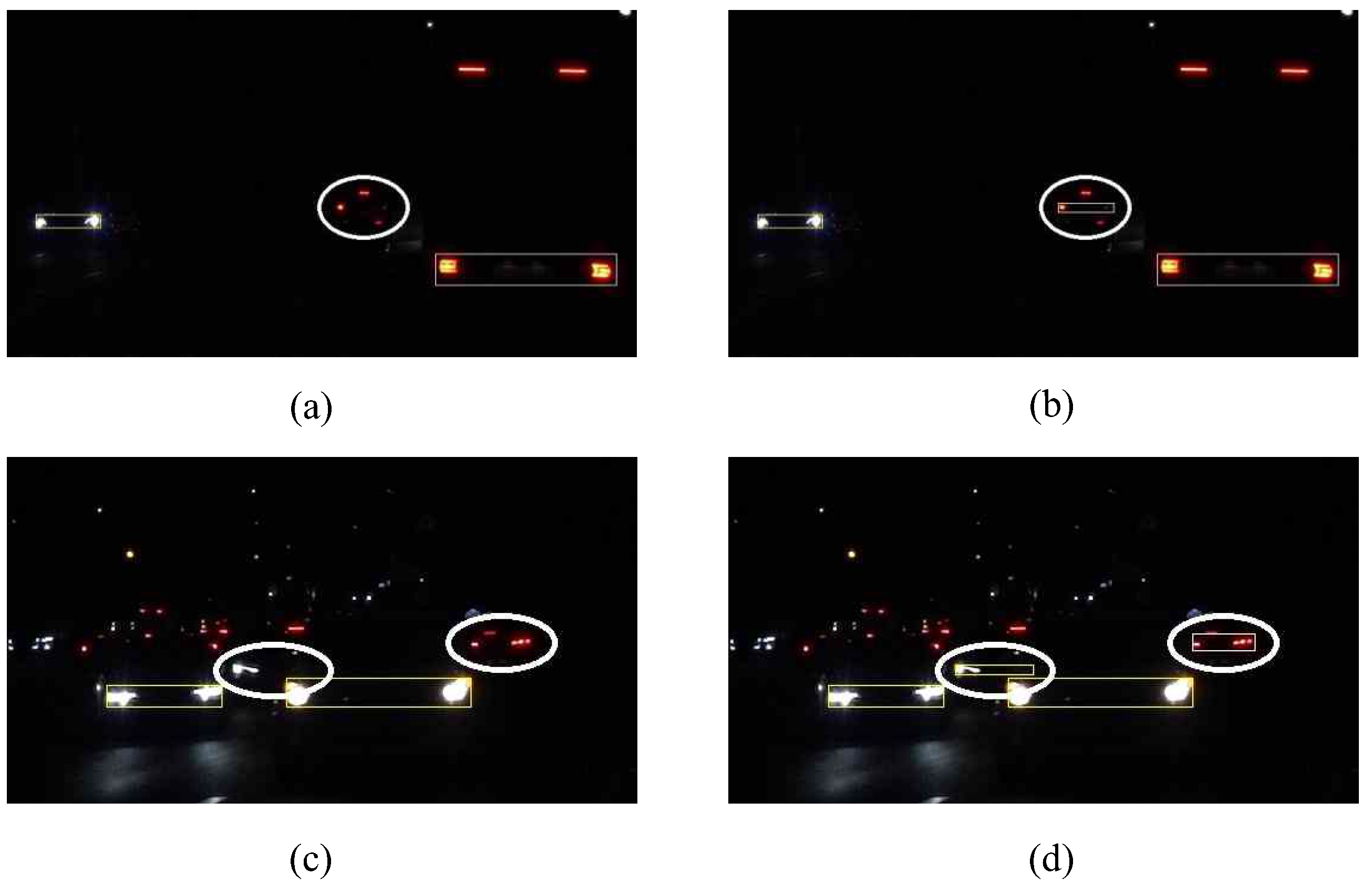

2.3. Headlight and Taillight Classification

After executing the bright blob segmentation process, the contours of bright blobs are retrieved and the location of each bright blob is determined by the position of the bounding box surrounding the contour. The position of a bounding box is defined by the left, top, right, and bottom coordinates. In this section, to remove the nonvehicle illuminant objects and identify vehicle lights, the pre-trained classifier is applied to the extracted bright blobs. First, the position of the bounding box surrounding the extracted bright blob is used to extract a subimage from the input RGB image (see Figure 7). Then, the combined features of the bright blob are calculated by using the feature extraction process presented in Section 2.1. Finally, the pre-trained classifier is used to assign a class label (headlight, taillight, or nuisance) to the combined features. The result of the classification process applied to an input RGB image is depicted in Figure 8. In Figure 8, headlights and taillights are marked by yellow and white rectangles, respectively.

2.4. Headlight and Taillight Tracking

To refine the detection results and facilitate the vehicle light pairing and vehicle tracking steps, this section presents a vehicle light tracking mechanism by considering spatiotemporal information in consecutive images. After performing the headlight and taillight classification, a headlight set and a taillight set that include the positions of bounding boxes surrounding vehicle lights are retrieved. Although the results of the previous step still contain false positives, they are going to be eliminated in subsequent steps. Because the light tracking, light pairing, and vehicle tracking mechanisms are all applied equally to both headlights and taillights, the term “vehicle lights” is used and implicitly understood as headlights and taillights.

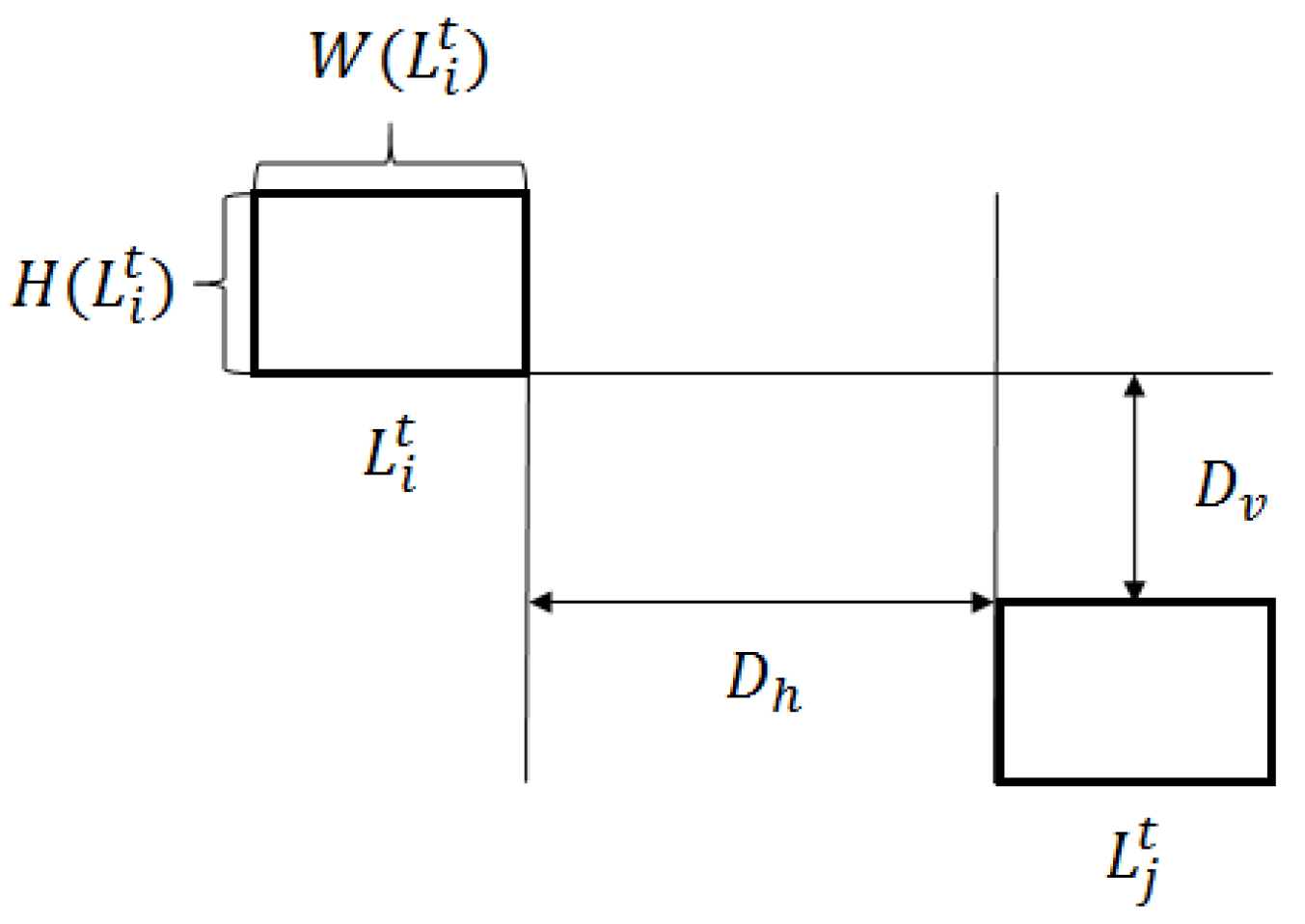

To depict the light tracking process, the following terms are first defined:

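$L_i^t$ and $N^t$ denote the $i$th vehicle light in the ROI and the number of detected vehicle lights in frame $t$, respectively.

$P(L_i^t)$ denotes the location of vehicle light $L_i^t$, and $t(L_i^t)$, $l(L_i^t)$, $b(L_i^t)$, and $r(L_i^t)$ are the top, left, bottom, and right coordinates of the bounding box enclosing $L_i^t$, respectively.

The width and height of vehicle light $L_i^t$ are denoted as $W(L_i^t)$ and $H(L_i^t)$, respectively.

The horizontal distance $D_h$ and vertical distance $D_v$ between two vehicle lights $L_i^t$ and $L_j^t$ in frame $t$ are defined as

$$D_h(L_i^t, L_j^t) = \max\left[l(L_i^t), l(L_j^t)\right] - \min\left[r(L_i^t), r(L_j^t)\right] \tag{1}$$

$$D_v(L_i^t, L_j^t) = \max\left[t(L_i^t), t(L_j^t)\right] - \min\left[b(L_i^t), b(L_j^t)\right] \tag{2}$$

The value of the distance is negative if two vehicle lights are overlapped in the horizontal or vertical projection.

The degrees of overlap between the horizontal projections or vertical projections of two vehicle lights $L_i^t$ and $L_j^t$ in frame $t$ are

$$P_h(L_i^t, L_j^t) = -\frac{D_h(L_i^t, L_j^t)}{\min\left[W(L_i^t), W(L_j^t)\right]} \tag{3}$$

$$P_v(L_i^t, L_j^t) = -\frac{D_v(L_i^t, L_j^t)}{\min\left[H(L_i^t), H(L_j^t)\right]} \tag{4}$$

The defined terms are illustrated in Figure 9.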
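The projection distances and overlap degrees can be sketched as follows. The sketch assumes that a distance is the larger leading edge minus the smaller trailing edge (which makes it negative when the projections overlap, as stated above) and that the overlap degree normalizes the negated distance by the smaller width or height. The `Box` type and function names are hypothetical.

```python
from collections import namedtuple

# Bounding box in image coordinates (x grows rightward, y grows downward).
Box = namedtuple("Box", ["left", "top", "right", "bottom"])


def horizontal_distance(a, b):
    """Gap between horizontal projections; negative when they overlap."""
    return max(a.left, b.left) - min(a.right, b.right)


def vertical_distance(a, b):
    """Gap between vertical projections; negative when they overlap."""
    return max(a.top, b.top) - min(a.bottom, b.bottom)


def horizontal_overlap(a, b):
    """Overlap of horizontal projections relative to the narrower box."""
    return -horizontal_distance(a, b) / min(a.right - a.left, b.right - b.left)


def vertical_overlap(a, b):
    """Overlap of vertical projections relative to the shorter box."""
    return -vertical_distance(a, b) / min(a.bottom - a.top, b.bottom - b.top)
```

For two lights of one vehicle, the horizontal distance is typically positive (the lamps are side by side) while the vertical overlap is close to 1 (the lamps sit at the same height).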

To obtain information including the position, size, and velocity of the same vehicle light in subsequent frames, a light tracker is initialized if a vehicle light appears for the first time in the ROI. Let $TL_i^t$ denote the light tracker representing the trajectory of $L_i$. The light tracker $TL_i^t$ tracks the vehicle light $L_i$ appearing from the first frame to frame $t$. $\mathcal{L}^t$ denotes the set of detected vehicle lights in frame $t$. $\mathcal{TL}^{t-1}$ denotes the set of light trackers in frame $t-1$. Based on the condition in the study [6], which tracks vehicle headlights in a traffic surveillance system, two detected vehicle lights in two consecutive frames belong to one light tracker if their bounding boxes overlap each other. Applying this condition for tracking vehicle taillights, the system is still able to track taillights, whether the preceding vehicles are moving at high or low speed. However, applying the condition for tracking vehicle headlights, the system may fail when the oncoming vehicle’s velocity is higher than 50 km/h. Therefore, a modified version of the tracking mechanism in the study [3] is presented in this section. First, the overlapping score $S_o$ of two vehicle lights (two bounding boxes) $L_i^t$ and $L_j^{t'}$ is defined, where these lights are detected in different frames $t$ and $t'$:

$$S_o(L_i^t, L_j^{t'}) = \frac{A(L_i^t \cap L_j^{t'})}{\max\left[A(L_i^t), A(L_j^{t'})\right]} \tag{5}$$

where $A$ is the area of the bounding box enclosing the light region.

$S_o$ is used to determine the tracking state of newly detected vehicle lights in the incoming frame. It is assumed that the time interval between two consecutive frames is short (33 ms for 30 frames per second), such that the vehicle velocity does not change significantly. Therefore, the motion information is used to predict the position of an incoming vehicle light. For a vehicle light that has been tracked in at least two frames, its motion vector can be calculated as follows:

$$\vec{v}(TL_i^t) = P(L_i^t) - P(L_i^{t-1}) \tag{6}$$

where $P(\cdot)$ denotes the bounding-box location of a vehicle light. The motion vector is equal to 0 when the vehicle light appears for the first and second times. Let $\hat{P}(TL_i^{t+1})$ denote the predicted position of the vehicle light tracker $TL_i^t$ in the next frame:

$$\hat{P}(TL_i^{t+1}) = P(L_i^t) + \vec{v}(TL_i^t) \tag{7}$$
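A minimal sketch of the overlapping score and the motion-based prediction is given below. It assumes that the score is the intersection area divided by the larger of the two box areas, that the motion vector is the displacement of the box between the two most recent frames, and, following the statement that the motion vector is 0 at the first and second appearances, that at least three observations are needed before it becomes nonzero. Boxes are `(left, top, right, bottom)` tuples and all function names are hypothetical.

```python
def area(box):
    """Area of a (left, top, right, bottom) box; zero if the box is empty."""
    left, top, right, bottom = box
    return max(0, right - left) * max(0, bottom - top)


def overlapping_score(a, b):
    """Intersection area over the larger box area (assumed form of the score)."""
    inter = (max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3]))
    larger = max(area(a), area(b))
    return area(inter) / larger if larger else 0.0


def motion_vector(history):
    """Displacement between the two latest boxes; (0, 0) for young trackers."""
    if len(history) < 3:
        return (0, 0)
    prev, cur = history[-2], history[-1]
    return (cur[0] - prev[0], cur[1] - prev[1])


def predict_box(history):
    """Shift the latest box by the motion vector to predict the next frame."""
    dx, dy = motion_vector(history)
    left, top, right, bottom = history[-1]
    return (left + dx, top + dy, right + dx, bottom + dy)
```

Matching a new detection against `predict_box(history)` rather than against the last observed box is what lets a fast oncoming headlight stay attached to its tracker even when consecutive raw boxes no longer overlap.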

In the light tracking process, the vehicle light tracker might be in one of three possible states:

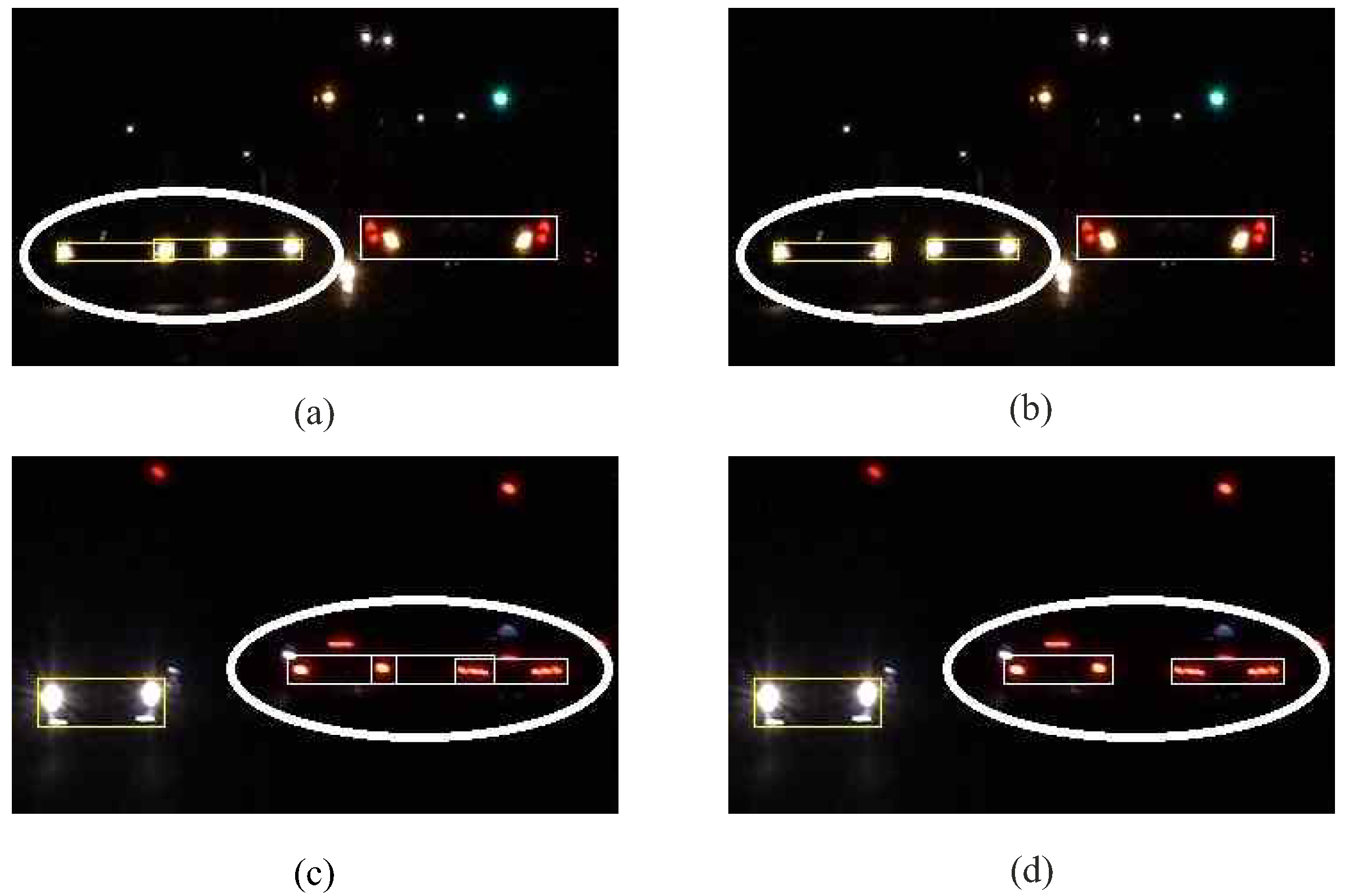

2.5. Headlight Pairing and Taillight Pairing

After performing the headlight and taillight tracking process, the set of vehicle light trackers is retrieved. Because the lights of a vehicle appear in symmetrical pairs, the light tracker set is used to determine the position of on-road vehicles by pairing the vehicle lights. Existing works [7, 19, 20] only use the spatial features within one frame, such as the area, width, height, vertical coordinate, and correlation, to check the similarity between two vehicle lights; these features are not sufficient for pairing lights. When multiple vehicles in front are at the same distance from the host vehicle, incorrect light pairing may occur because the spatial features of the lights of these vehicles are similar. To reduce the false detection rate, a light pairing method using spatiotemporal information is proposed. The proposed light pairing method is separated into two stages: potential light pair generation and light pair verification.

To generate the set of candidate light pairs, the pairing criteria are given as follows:

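Two vehicle lights are highly overlapped in vertical projections:

$$P_v(L_i^t, L_j^t) > \tau_v \tag{9}$$

Two vehicle lights have similar heights:

$$\frac{\min\left[H(L_i^t), H(L_j^t)\right]}{\max\left[H(L_i^t), H(L_j^t)\right]} > \tau_h \tag{10}$$

The ratio of pair width $W_{ij}$ to pair height $H_{ij}$ must satisfy the following condition:

$$\tau_{wl} \le \frac{W_{ij}}{H_{ij}} \le \tau_{wh} \tag{11}$$

In the criteria, $\tau_v$, $\tau_h$, $\tau_{wl}$, and $\tau_{wh}$ are the thresholds that determine the characteristics of pairing vehicle lights. These values are statistically chosen as 0.7, 0.7, 2.0, and 14.0, respectively.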
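The candidate generation stage can be sketched as below, using the four threshold values quoted above. The exact form of each test is an assumption: vertical overlap is normalized by the smaller height, height similarity is the smaller height over the larger, and pair width and height are taken from the box enclosing both lights. Boxes are `(left, top, right, bottom)` tuples and the names are hypothetical.

```python
from itertools import combinations

# Threshold values quoted in the text; the names are illustrative only.
TAU_V, TAU_H, TAU_RATIO_LOW, TAU_RATIO_HIGH = 0.7, 0.7, 2.0, 14.0


def is_candidate_pair(a, b):
    """Check the three pairing criteria for two light boxes."""
    ha, hb = a[3] - a[1], b[3] - b[1]
    if min(ha, hb) <= 0:
        return False
    # Criterion 1: vertical projections overlap strongly.
    v_overlap = (min(a[3], b[3]) - max(a[1], b[1])) / min(ha, hb)
    # Criterion 2: the two lights have similar heights.
    height_ratio = min(ha, hb) / max(ha, hb)
    # Criterion 3: aspect ratio of the box enclosing both lights.
    pair_width = max(a[2], b[2]) - min(a[0], b[0])
    pair_height = max(a[3], b[3]) - min(a[1], b[1])
    aspect = pair_width / pair_height
    return (v_overlap > TAU_V and height_ratio > TAU_H
            and TAU_RATIO_LOW <= aspect <= TAU_RATIO_HIGH)


def candidate_pairs(boxes):
    """Return index pairs (i, j), i < j, that pass all criteria."""
    return [(i, j) for i, j in combinations(range(len(boxes)), 2)
            if is_candidate_pair(boxes[i], boxes[j])]
```

Because every pair of lights is tested independently, one light can appear in several candidate pairs; that ambiguity is what the verification stage resolves.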

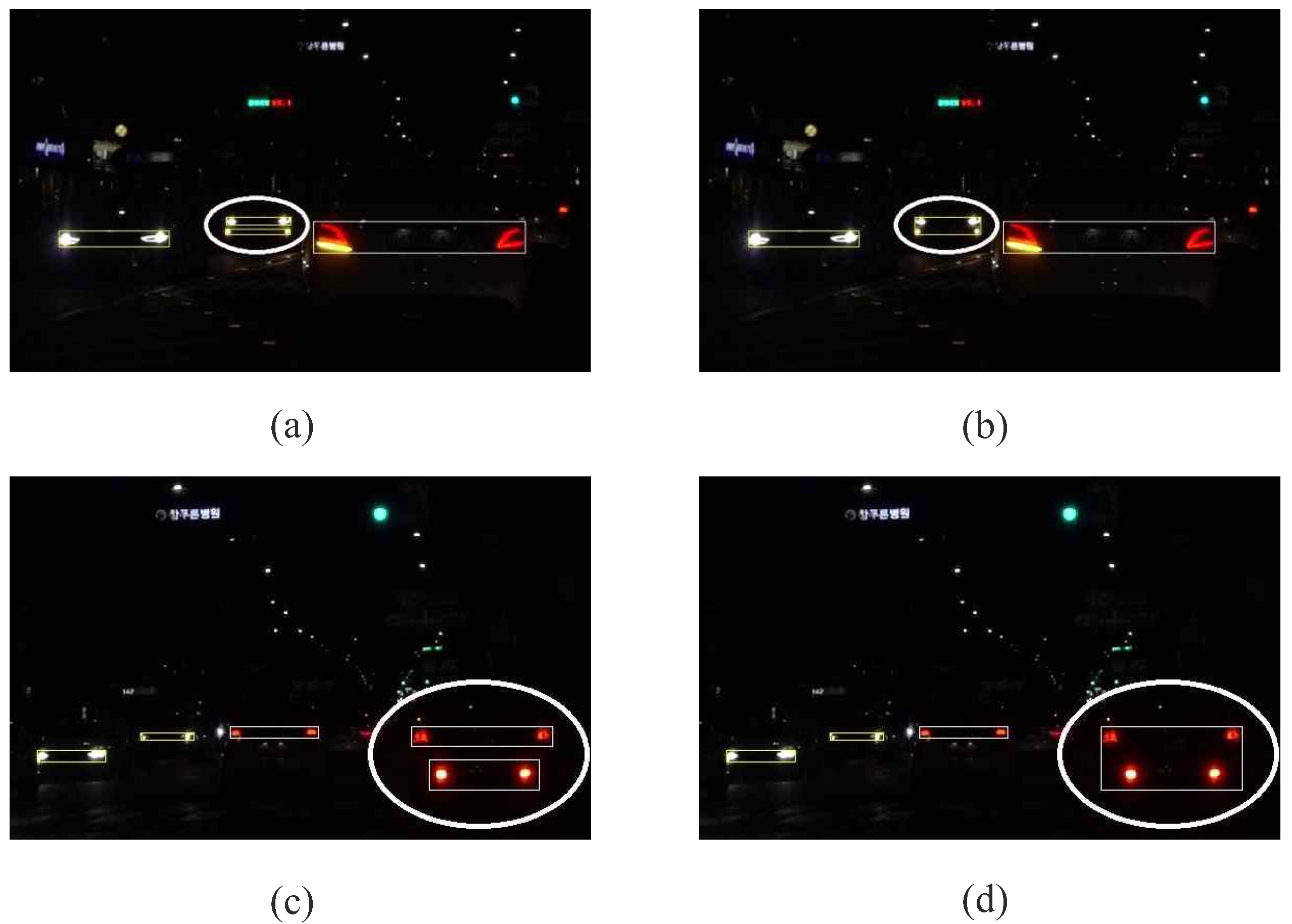

The result of the first stage is the set of candidate light pairs, but this set may include some falsely detected pairs because one vehicle light can be shared in more than one pair. Therefore, light pair verification is performed to remove the false pairs.

To reduce the false pairs, a

pairing score of the candidate light pair is used to perform a comparison between the conflicting pairs. Among the conflicting pairs, the pair with the highest

pairing score is retained to describe a vehicle, and the other pairs are removed from the candidate pair set. The

pairing score is a combination of spatiotemporal features of two vehicle lights (

and

) in a pair, including the number of tracked frames, displacement, size, and correlation. It is defined as follows:

where

is the ratio of the number of tracked frames of two vehicle lights;

is the ratio of the total displacements

d of vehicle lights (

and

) in the four most recent frames. As both lights in a pair should move coherently in time, their motion vectors are used to calculate the displacements;

is the ratio of the sizes of two vehicle lights;

is the correlation between histograms of vehicle lights

and

. Herein, the Bhattacharyya coefficient [

13] is used to compare the 3-D histograms of the left and right lights.

In Equation (

12), the coefficients

and

are experimentally chosen as 0.2, 0.2, 0.3, and 0.3, respectively. The results of light pair verification are illustrated in

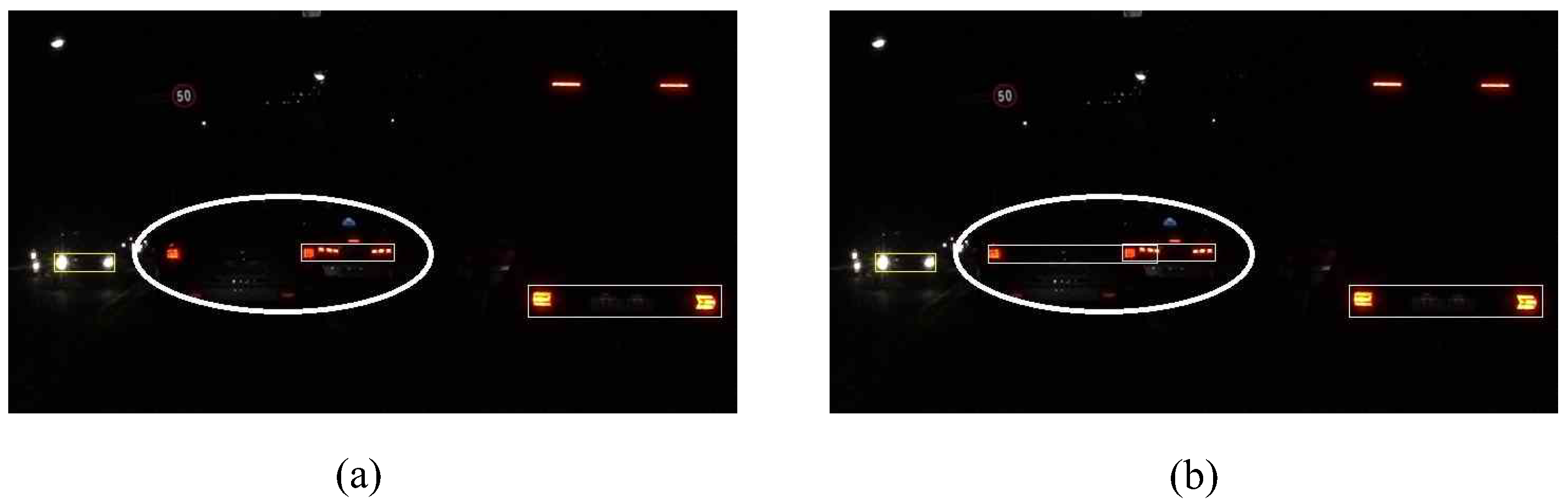

Figure 10. After dismissing the conflicting pairs by using the pairing score, a set of light pairs that describe the vehicles in front is retrieved and all the light trackers that are not used for pairing are retained for processing in the next phase.
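The verification stage can be sketched as a weighted sum followed by a greedy conflict resolution. Several details are assumptions rather than the paper's definitions: each ratio is taken as smaller over larger so that it lies in [0, 1], the 3-D histogram is passed in flattened form, and conflicts are resolved by accepting pairs in descending score order. The dictionary keys and function names are hypothetical.

```python
import math

# Coefficients quoted in the text: frames, displacement, size, correlation.
WEIGHTS = (0.2, 0.2, 0.3, 0.3)


def ratio(a, b):
    """Smaller value over larger value; 1.0 when both are zero."""
    hi = max(a, b)
    return min(a, b) / hi if hi > 0 else 1.0


def bhattacharyya(p, q):
    """Bhattacharyya coefficient of two histograms (normalized internally)."""
    sp, sq = sum(p), sum(q)
    if sp == 0 or sq == 0:
        return 0.0
    return sum(math.sqrt((x / sp) * (y / sq)) for x, y in zip(p, q))


def pairing_score(left, right):
    """Weighted combination of the four spatiotemporal similarity features."""
    features = (
        ratio(left["frames"], right["frames"]),
        ratio(left["displacement"], right["displacement"]),
        ratio(left["size"], right["size"]),
        bhattacharyya(left["hist"], right["hist"]),
    )
    return sum(w * f for w, f in zip(WEIGHTS, features))


def resolve_conflicts(scored_pairs):
    """Keep the best-scoring pair for each light.

    scored_pairs is a list of (score, i, j); a light index may appear in
    several entries, and only the highest-scoring entry per light survives.
    """
    used, kept = set(), []
    for score, i, j in sorted(scored_pairs, reverse=True):
        if i not in used and j not in used:
            kept.append((i, j))
            used.update((i, j))
    return kept
```

With the weights summing to 1, two lights with identical tracking history, displacement, size, and histogram score 1.0, and any mismatch in a feature lowers the score in proportion to its weight.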

2.6. Vehicle Tracking with Occlusion Handling

In the previous section, a light pairing process is proposed to find all the possible vehicles in front of the host vehicle, which reduces the number of falsely detected vehicles. On the other hand, missed pairings still occur and cannot be fixed if the system only considers vehicle detection in single frames. Missed detection happens because of several problems, which may cause either the disappearance or the distorted appearance (size, shape, or color) of one lamp in a light pair. The following problems may lead to pairing failure: (1) partial vehicle occlusion; (2) two vehicle lights of two different vehicles are detected as one connected region when one vehicle is too close to another vehicle; (3) left-turning or right-turning vehicles; (4) blooming effect of only one light in oncoming vehicles. To solve these problems, a vehicle tracking method with occlusion handling is proposed. In the vehicle tracking process, the detection results are refined by associating the spatiotemporal features of vehicles in sequential frames. It is assumed that a pair of vehicle lights forms a vehicle, such that any vehicle that has two light pairs is passed to the post-processing step, which groups the two pairs into one pair. To facilitate describing the vehicle tracking process, the following terms are defined:

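$\mathcal{LP}^t$ and $\mathcal{TL}^t$ denote the set of light pairs and the remaining light trackers retrieved from the light pairing step, respectively.

$TP_k^t$ denotes the light pair tracker representing the trajectory of light pair $LP_k$.

The location of light pair $LP_k^t$, formed by vehicle lights $L_i^t$ and $L_j^t$, is determined by the bounding box of $LP_k^t$:

$$l(LP_k^t) = \min\left[l(L_i^t), l(L_j^t)\right],\quad t(LP_k^t) = \min\left[t(L_i^t), t(L_j^t)\right],\quad r(LP_k^t) = \max\left[r(L_i^t), r(L_j^t)\right],\quad b(LP_k^t) = \max\left[b(L_i^t), b(L_j^t)\right]$$

$\mathcal{TP}^{t-1}$ denotes the set of light pair trackers in the previous frame $t-1$.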

In the vehicle tracking process, a light pair tracker might be in one of four possible states:

Update: a light pair $LP_k^t$ in the current frame $t$ matches a light pair tracker $TP_m^{t-1}$ in the previous frame $t-1$. Then, the light pair tracker $TP_m^{t-1}$ is associated with $LP_k^t$ and it is added to the set of light pair trackers $\mathcal{TP}^t$ in the current frame. The matching condition for a light pair is defined based on the overlapping score in Equation (5) and the ratio of pair widths of two frames $t-1$ and $t$:

$$S_o(LP_k^t, TP_m^{t-1}) > \tau_o \quad \text{and} \quad \frac{\min\left[W(LP_k^t), W(TP_m^{t-1})\right]}{\max\left[W(LP_k^t), W(TP_m^{t-1})\right]} > \tau_w$$

where $\tau_o$ and $\tau_w$ are the matching thresholds for verifying whether $LP_k^t$ can be associated with $TP_m^{t-1}$. The values of $\tau_o$ and $\tau_w$ are chosen experimentally as 0.3 and 0.7, respectively.

Appear: a light pair $LP_k^t$ in the current frame $t$ does not match any light pair tracker in the previous frame $t-1$. Then, a new light pair tracker is created and it is added to the set of light pair trackers $\mathcal{TP}^t$ in the current frame.

Disappear: a light pair tracker is determined to have disappeared if it satisfies the following two conditions. First, an existing light pair tracker $TP_m^{t-1}$ cannot be matched by any light pair in the current frame $t$. Second, the predicted positions of the left and right lights in $TP_m^{t-1}$ do not match any light tracker in $\mathcal{TL}^t$. Thus, the light pair tracker $TP_m^{t-1}$ is not added to the set of light pair trackers $\mathcal{TP}^t$ in the current frame.

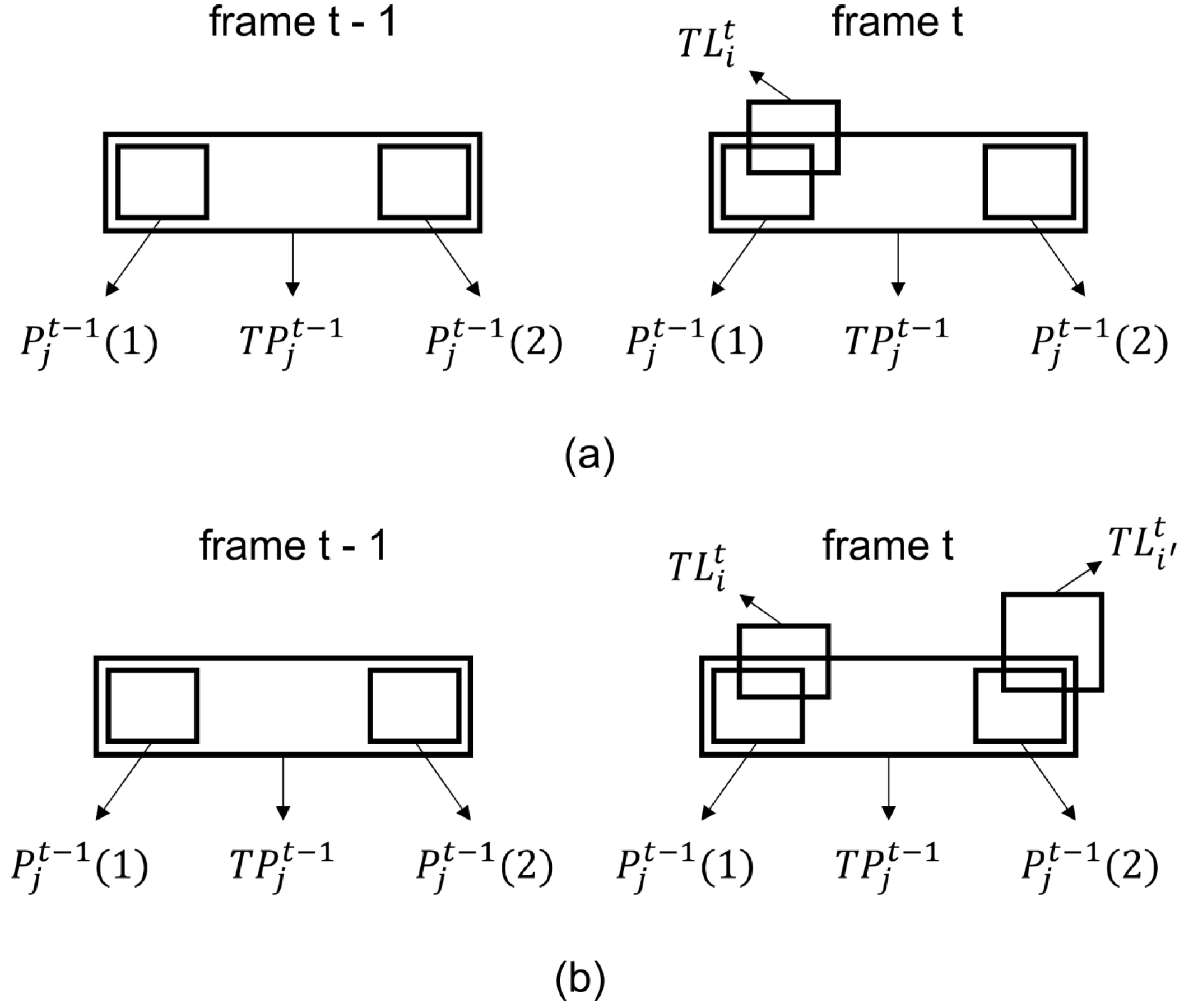

Occlude: a light pair tracker is determined as occluded if it satisfies the following two conditions. First, an existing light pair tracker $TP_m^{t-1}$ cannot be matched by any light pair $LP_k^t$ in the current frame $t$. Second, in the case of a pairing failure due to the disappearance of one lamp in a light pair as shown in Figure 11a, the predicted position of the left or right light in $TP_m^{t-1}$ matches a light tracker $TL_i^t$ (see Equation (8)). Otherwise, in the case of the distorted appearance of one lamp in a light pair as shown in Figure 11b, the predicted positions of both the left and right lights in $TP_m^{t-1}$ match two light trackers $TL_i^t$ and $TL_j^t$. Then, depending on whether the first or second case occurs, the light pair tracker $TP_m^{t-1}$ is updated by associating $TP_m^{t-1}$ with one or two light trackers in $\mathcal{TL}^t$ and it is added to the set of light pair trackers $\mathcal{TP}^t$.
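The state decision for an existing light pair tracker can be sketched as follows. The thresholds 0.3 and 0.7 are the matching thresholds quoted above; everything else is an assumption: the overlapping score is taken as intersection area over the larger box area, and a predicted light position is considered to match an unpaired light tracker when their boxes overlap at all, which stands in for the condition of Equation (8). The "appear" state is not covered, since it applies to unmatched light pairs rather than to existing trackers. All names are hypothetical.

```python
def area(box):
    """Area of a (left, top, right, bottom) box; zero if the box is empty."""
    return max(0, box[2] - box[0]) * max(0, box[3] - box[1])


def overlap_score(a, b):
    """Intersection area over the larger box area (assumed form)."""
    inter = (max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3]))
    larger = max(area(a), area(b))
    return area(inter) / larger if larger else 0.0


def width_ratio(a, b):
    """Smaller width over larger width of two boxes."""
    wa, wb = a[2] - a[0], b[2] - b[0]
    return min(wa, wb) / max(wa, wb) if max(wa, wb) > 0 else 0.0


def tracker_state(pair_box, left_pred, right_pred, current_pairs, free_lights,
                  tau_o=0.3, tau_w=0.7):
    """Classify an existing pair tracker as 'update', 'occlude', or 'disappear'.

    pair_box is the tracker's box from the previous frame; left_pred and
    right_pred are the predicted boxes of its two lights; current_pairs are
    the pair boxes of the current frame; free_lights are unpaired light boxes.
    """
    for pair in current_pairs:
        if overlap_score(pair_box, pair) > tau_o and width_ratio(pair_box, pair) > tau_w:
            return "update"
    hits = [any(overlap_score(pred, light) > 0 for light in free_lights)
            for pred in (left_pred, right_pred)]
    return "occlude" if any(hits) else "disappear"
```

The occluded state is what keeps a vehicle alive in the track list when only one of its lamps is still visible or when one lamp is too distorted to pass the pairing criteria.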

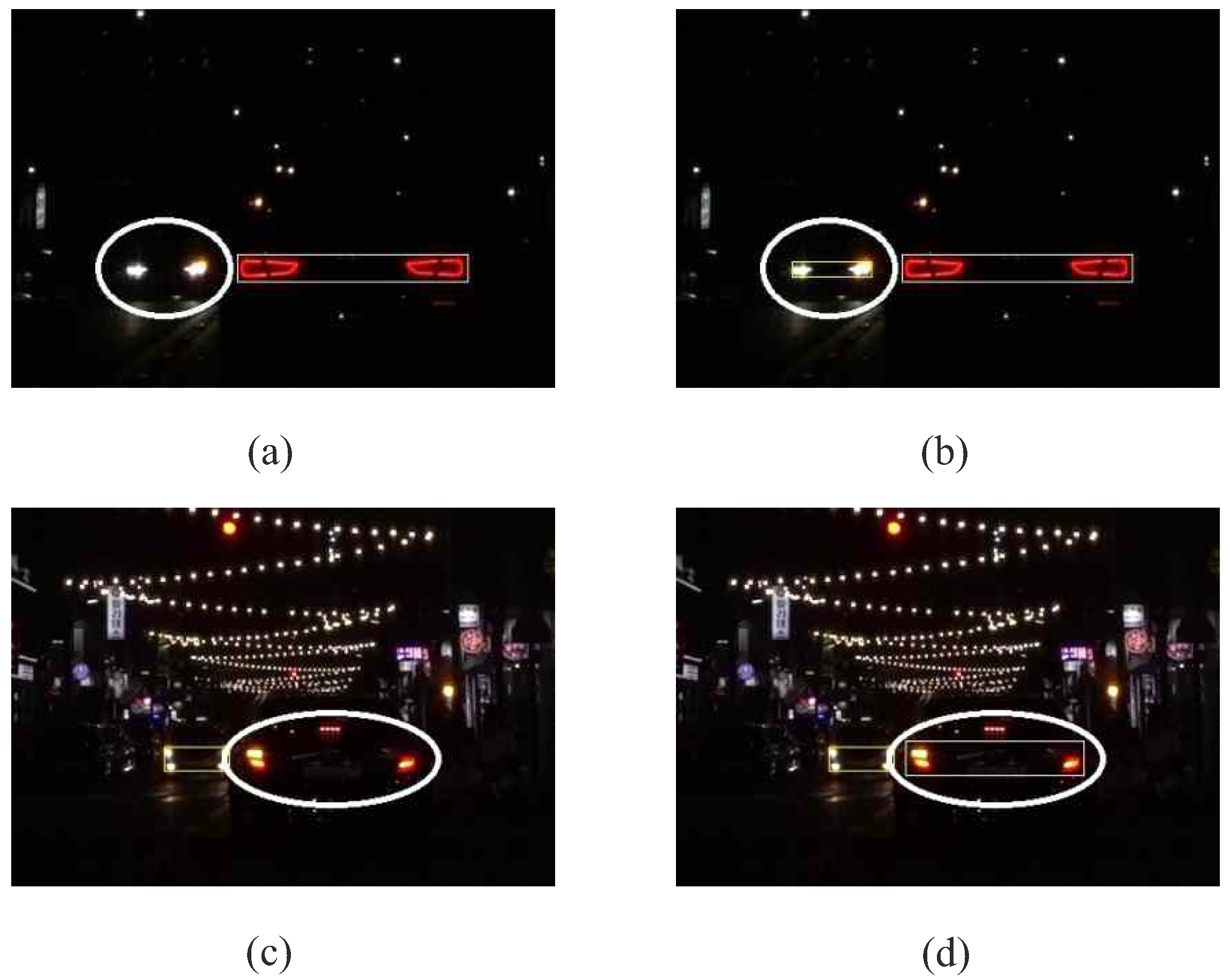

At the end of the vehicle tracking step, the set of light pair trackers in the current frame t is retrieved. Next, for any vehicle with two light pairs, we apply rules for grouping the two light pairs into one pair. Two light pairs are grouped into one pair if they satisfy the following rules:

They are vertically close to each other.

The two pairs have highly overlapped horizontal projections.

They have similar widths.

In the post-processing step, the two thresholds for grouping multiple pairs that form one vehicle are chosen statistically as 0.9 and 0.7. The results of the post-processing are illustrated in Figure 12.

4. Conclusions

In this paper, a nighttime vehicle detection system for detecting both oncoming vehicles and preceding vehicles based on headlights and taillights is proposed. Initially, a bright blob segmentation is performed to extract all the possible regions that may be vehicle lights. Then, a machine-learning-based method is proposed to classify headlights and taillights, and to remove false detections in the captured image. Next, each vehicle light is tracked in subsequent frames by using a light tracking process. As one vehicle is indicated by one or two light pairs, a modified light pairing process using spatiotemporal information is applied to the sets of detected headlights and taillights to determine the positions of the potential vehicles. Finally, the vehicle tracking process is applied to refine the vehicle detection rate under various complex situations on the road. The experimental results demonstrate that the proposed method significantly improves vehicle detection rate by identifying the vehicles in some situations that previous studies cannot solve.

In future work, it would be interesting to apply the proposed algorithm to daytime conditions. We also plan to investigate various weather scenarios, such as rainy or cloudy conditions. Furthermore, motorcycle detection and tracking will be examined for integration into the current system.