1. Introduction

Digital images are often corrupted by noise during the acquisition or transmission of the images [

1], rendering these images unsuitable for vision applications such as remote sensing and object recognition. Therefore, image denoising is a fundamental preprocessing step that aims at suppressing noise and reproducing the latent high quality image with fine image edges, textures, and rich details. A corrupted noisy image can be generally described as:

where the column vector

x denotes the original clean image, and the

v denotes the additive noise. There are many possible solutions for

x of a noisy image

y because the noise

v is unknown. This is a fact that encourages scholars to continue seeking for new methods to achieve better denoising results. Various image denoising studies assume

v to be additive white gaussian noise (AWGN). Considering that AWGN is stationary and uncorrelated among pixels, we made the same assumption for this study.

Denoising methods can be classified into two types [

2], internal methods and external ones. The internal methods denoise an image patch using other noisy image patches within the noisy image, whereas the external methods denoise a patch using externally clean image patches. In the past several years, the internal sparsity and the self-similarity of images were usually utilized to achieve better denoising performance. Non-local Means (NLM), proposed by Baudes et al. [

3,

4], is the first filter that utilizes the non-local self-similarity in images. NLM obtains a denoised patch by first finding similar patches and obtaining their weighted average. Because searching for similar patches in various noise levels may be computationally impractical, typically, only a small neighborhood of the patch is considered for searching possible matches. BM3D [

5], a characteristic benchmark method, builds on the strategy of NLM by grouping similar patches together, and suggests a two-step denoising algorithm. First, the input image is roughly denoised. Then, the denoising is refined by collecting similar patches to accomplish a collaborative filtering in the transform domain. This two-step process contributes to the effectiveness of the BM3D, making it a benchmark denoising algorithm. The nuclear norm minimization (NNM) method was proposed in [

6] for video denoising; nevertheless, it was greatly restricted by its capability and flexibility in handling many practical denoising problems. In [

7], Gu et al. presented weighted nuclear norm minimization (WNNM). This was a low rank image denoising approach based on non-local self-similarity; however, it suppressed the low rank parts and shrank the reconstructed data. The K-SVD [

8] denoising utilizes the sparse and redundant representations of an over-complete learned dictionary to produce a high-quality denoised image. Such a dictionary was initially learned from a large number of clean images. Later, it was directly learned from the noisy image patches [

9]. Motivated by the idea of similar image patches sharing similar subdictionaries, Chatterjee et al. [

10] proposed the K-LLD. Instead of learning a single over-complete dictionary for an entire image, K-LLD first performs a clustering step based on the patches using the local weight function presented in [

11]. Then, it separately finds the most optimal dictionary for each cluster to denoise the patches from each cluster. Similarly, the authors of learned simultaneous sparse coding (LSSC) [

12,

13] exploit self-similarities of image patches combined with sparse coding to further improve the performance of image denoising methods based on dictionary learning using a single dictionary. Taking advantage of the noise properties of local patches and different channels, a scheme called trilateral weight sparse coding (TWSC) was proposed in [

14]. In this model, the noise statistics and sparsity priors of images are adaptively characterized by two weight matrices. Based on the idea of nonlocal similarity and sparse representation of image patches, Dong et al. introduced the nonlocally centralized sparse representation (NCSR) model [

15] and the concept of sparse coding noise, thereby changing the objective of image denoising to suppressing the sparse coding noise. K-means clustering is applied to cluster the patches obtained from the given image into K clusters; then, a PCA sub-dictionary is adaptively learned for each cluster, leading to a more stable sparse representation. It is a fact that NCSR is efficient in capturing image details and adaptively representing them with a sparse description. However, since each image patch is considered as an independent unit of the sparse representation in the dictionary learning and sparse coding stages, ignoring the relationships among the patches can result in inaccurate sparse coding coefficients.

There was a major leap in denoising performance with the revival of neural networks, which are trained on large collections of external noisy–clean image priors. Zoran and Weiss [

16] presented gaussian mixture models (GMMs) using a gaussian mixture prior learned from a database of clean natural image patches to reproduce the latent image. PG prior based denoising (PGPD), a method developed based on GMMs, was proposed in [

17] to exploit the non-local self-similarity of clean natural images. A convolutional neural network (CNN) for denoising was proposed in [

18], where a five-layer convolutional network was specifically designed to synthesize training samples from abundantly available clean natural images. Subsequently, fully connected denoising auto-encoders [

19] were suggested for image denoising. Nevertheless, the early CNN-based methods and the auto-encoders cannot compete with the benchmark BM3D [

5] method. In [

20], the plain multi-layer perceptron method is used to tackle image denoising with a multi-layer perceptron trained using training examples. This achieves a performance that is comparable with that of the BM3D method. Schmidt and Roth introduced the cascade of shrinkage fields (CSF) [

21], which combines a random field-based model and half-quadratic optimization into a single learning framework to efficiently perform the denoising. Chen et al. [

22,

23] further presented the trainable nonlinear reaction diffusion (TNRD) method for image denoising problems. It learns the parameters from training data through a gradient descent inference approach. Both the CSF and TNRD show promise in narrowing the gap between denoising performance and computational efficiency. However, the specified forms of the priors adopted by these methods are limited with regard to capturing all the features related to image structure. Inspired by combining learning-based approaches with the traditional methods, Yang et al. [

24] defined a network known as the BM3D-Net by unrolling the computational pipeline of the classical BM3D algorithm into a CNN structure. It achieves competitive denoising results and significantly outperforms the traditional BM3D method. With regard to the development of deep CNNs, some prevalent deep CNN-based approaches are favorably compared to many other state-of-the-art methods both quantitatively and visually (e.g., recursively branched deconvolutional network (RBDN) [

25], fast and flexible denoising convolutional neural network (FFDNet) [

26], and residual learning of deep CNN (DnCNN) [

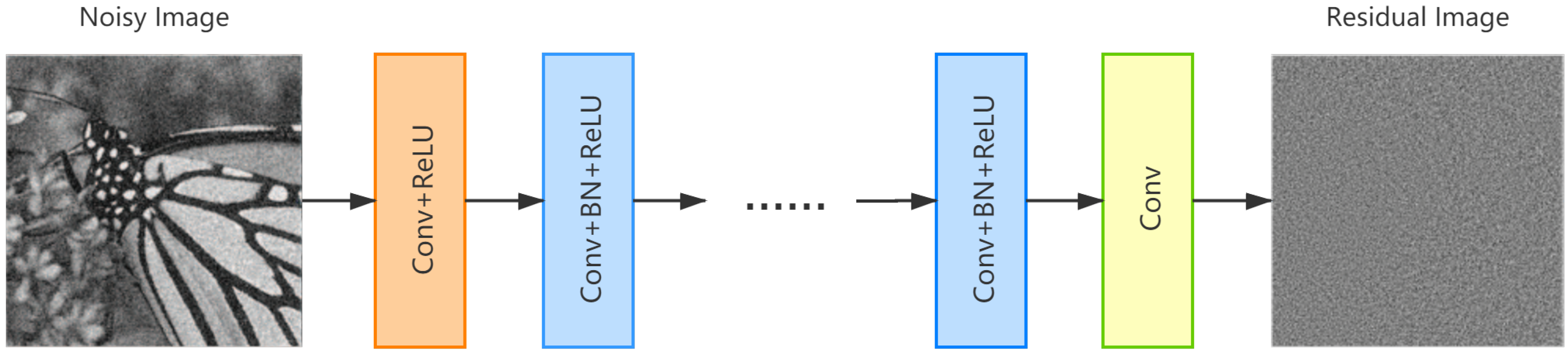

27]). Santhanam et al. [

25] developed the RBDN for denoising as well as general image-to-image regression. Proposed by Zhang et al. in [

26], by inputting an adjustable noise level map, the FFDNet is able to achieve visually convincing results on the trade-off between detail preservation and noise reduction with a single network model. Rather than outputting the denoised image

x directly, in the case of the DnCNN, a residual mapping

is employed to estimate the noise existent in the input image, and the denoising result is

. Taking advantage of batch normalization [

28] and residual learning [

29], the DnCNN can handle several prevailing denoising tasks with high efficiency and performance.

Various image denoising algorithms have produced highly promising results; however, the experimental results and bound calculations in [

30] showed that there is still room for improvement for a wide range of denoising tasks. Some image patches inherently require external denoising; however, external image patch prior-based methods do not make good use of the internal self-similarity. Further improvement of the existing methods or the development of a more effective one using a single denoising model remains a valid challenge. Therefore, we are interested in combining both internal and external information to achieve better denoising results. To this end, we choose NCSR and DnCNN as the initial denoisers by considering their performance and complementary strengths. NCSR, a powerful internal denoising method that combines nonlocal similarity and sparse representation, demonstrates exceptionally high performance in terms of denoising regular and repeated images. The DnCNN possesses an external prior modeling capacity with a deep architecture. This is better for denoising irregular and smooth regions and is complementary to the internal prior employed by the NCSR. In other words, the combination of NCSR and DnCNN can strongly explore both the internal and external information of a given region in the initially denoised images.

In this study, we introduce a denoising effect boosting method based on an image fusion strategy. The objective is to further improve performance by fusing images that are originally denoised by NCSR and DnCNN. These methods have complementary strengths and can be chosen to represent the internal and external denoising methods. Note that, the proposed denoising effect boosting method is simpler than the deep learning-based one introduced in [

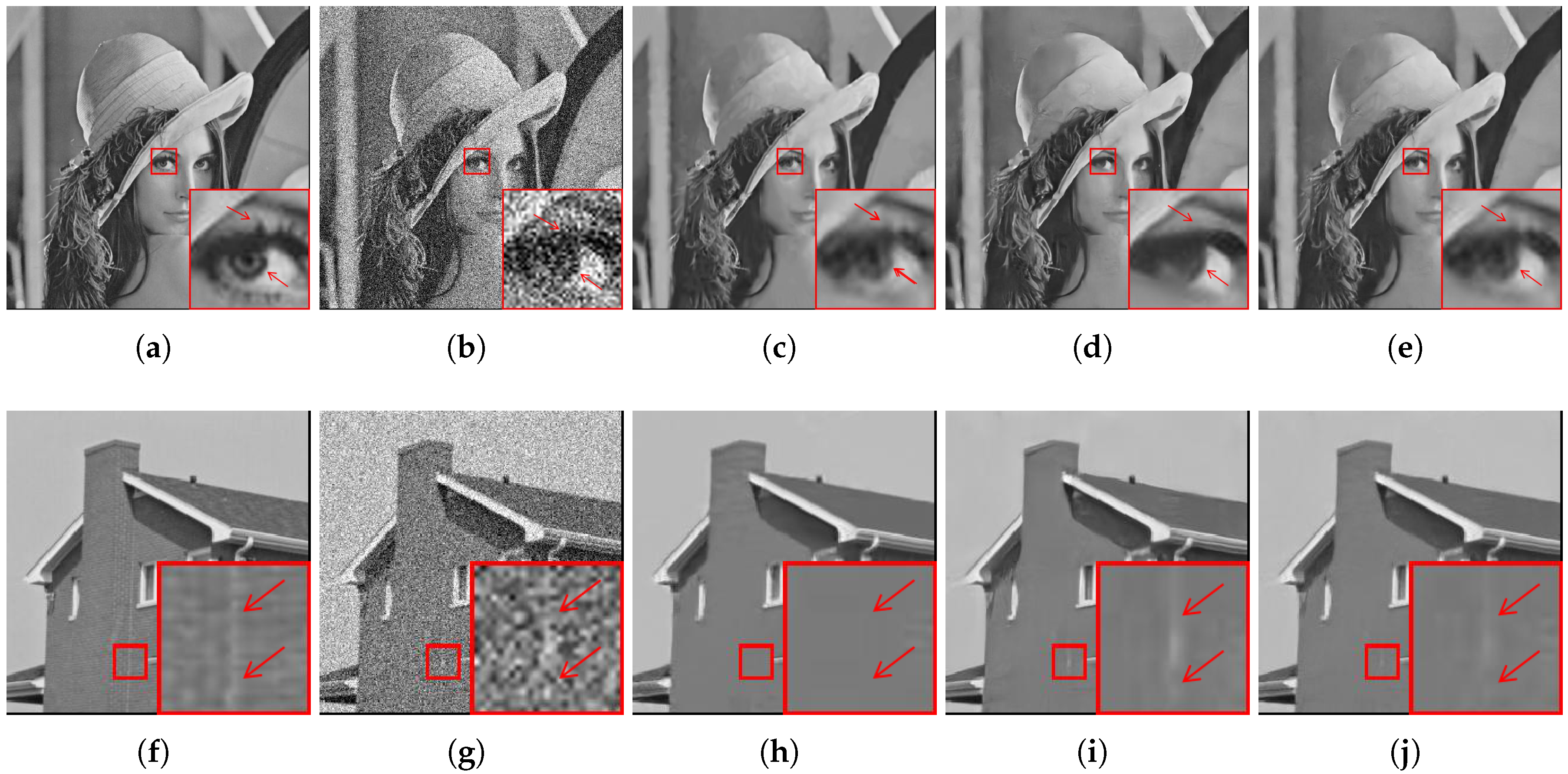

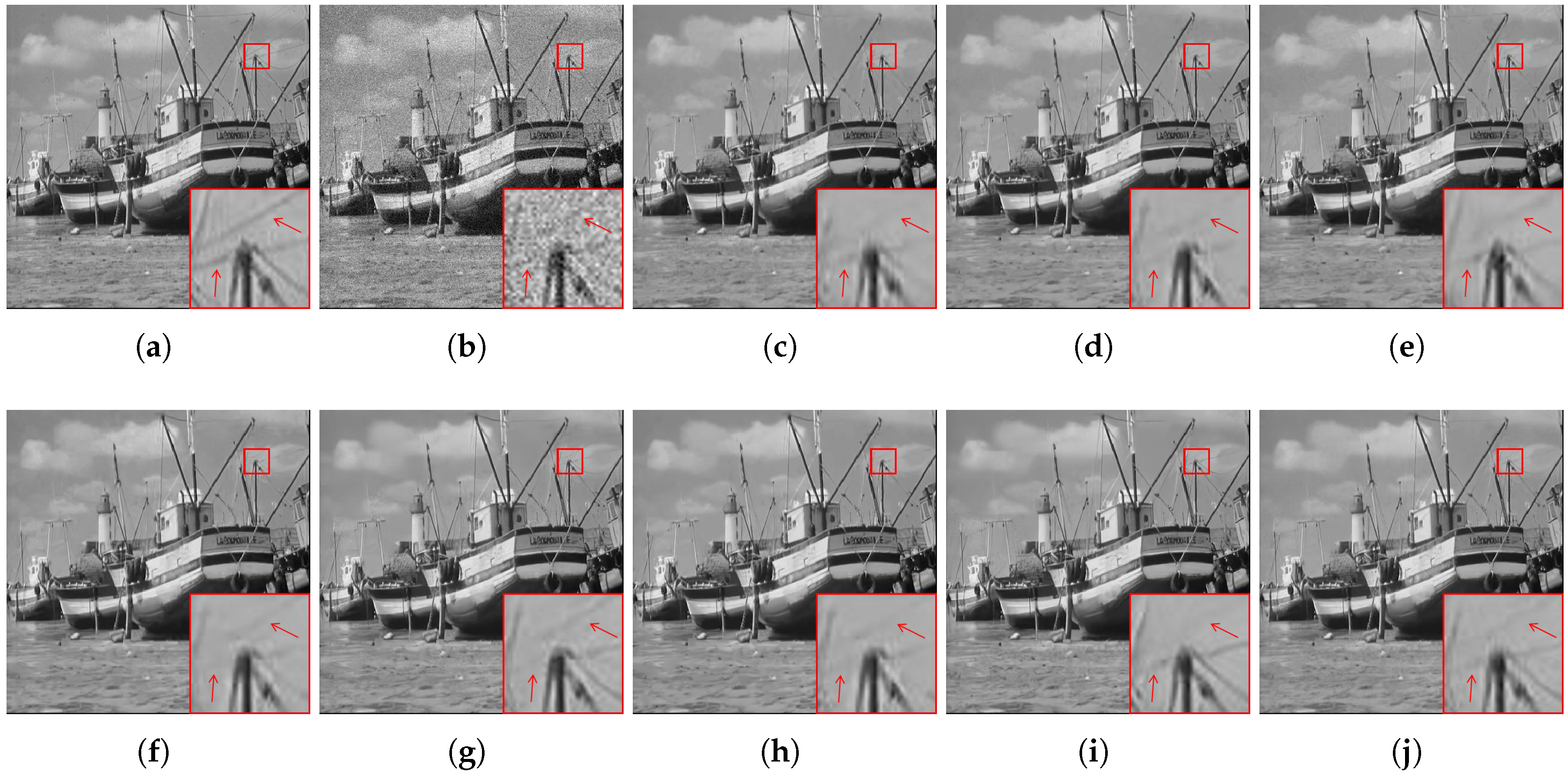

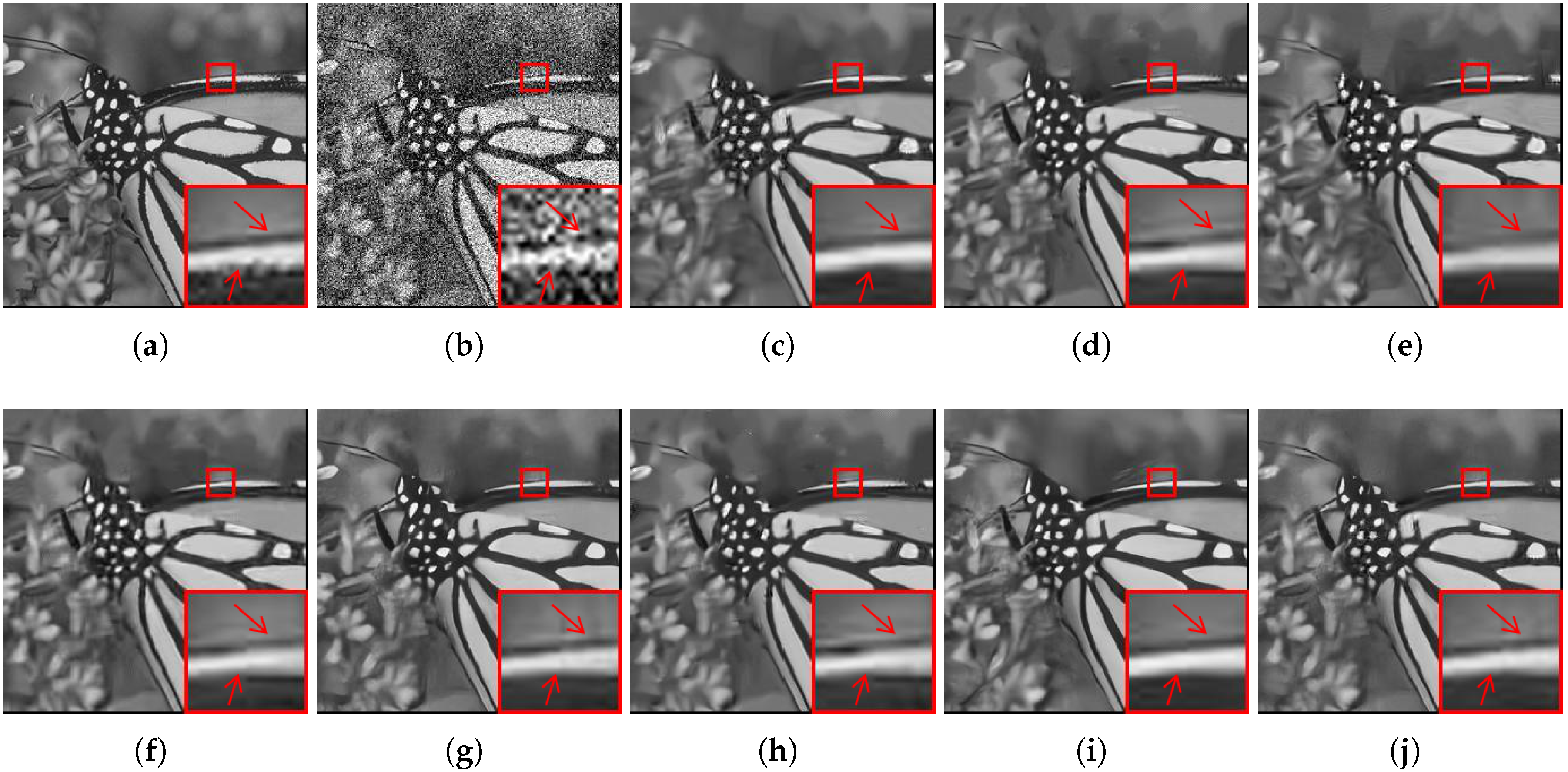

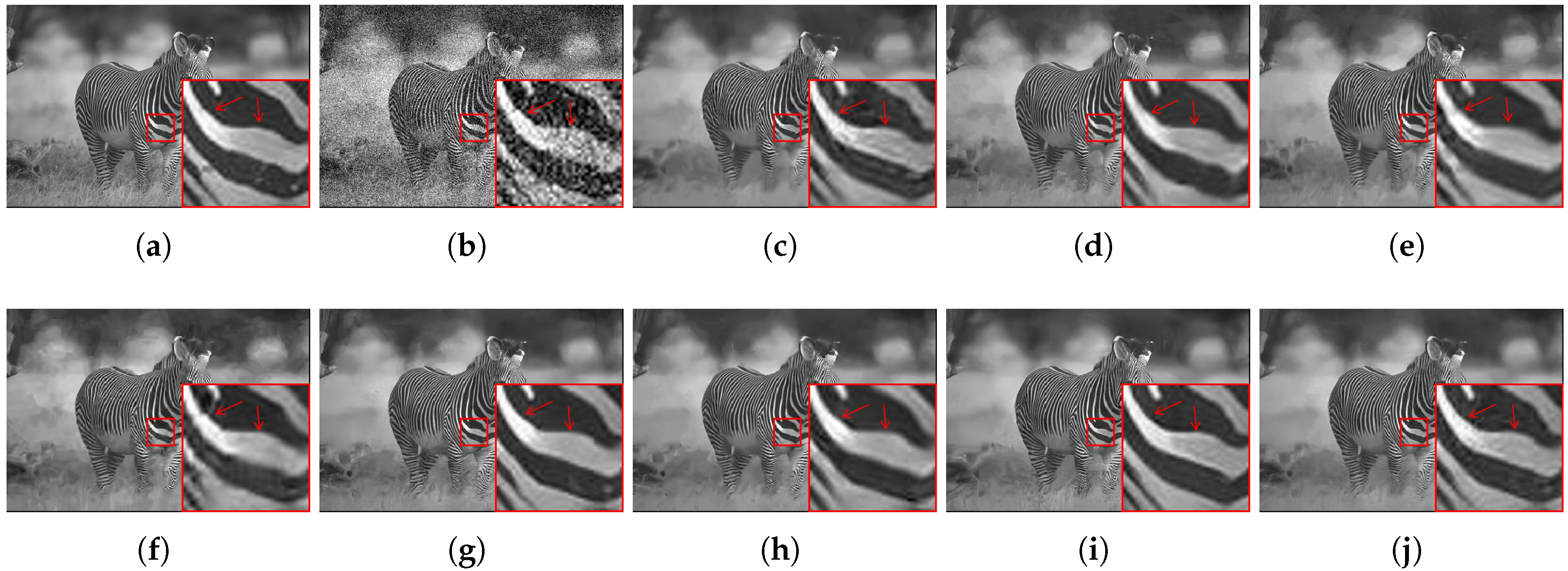

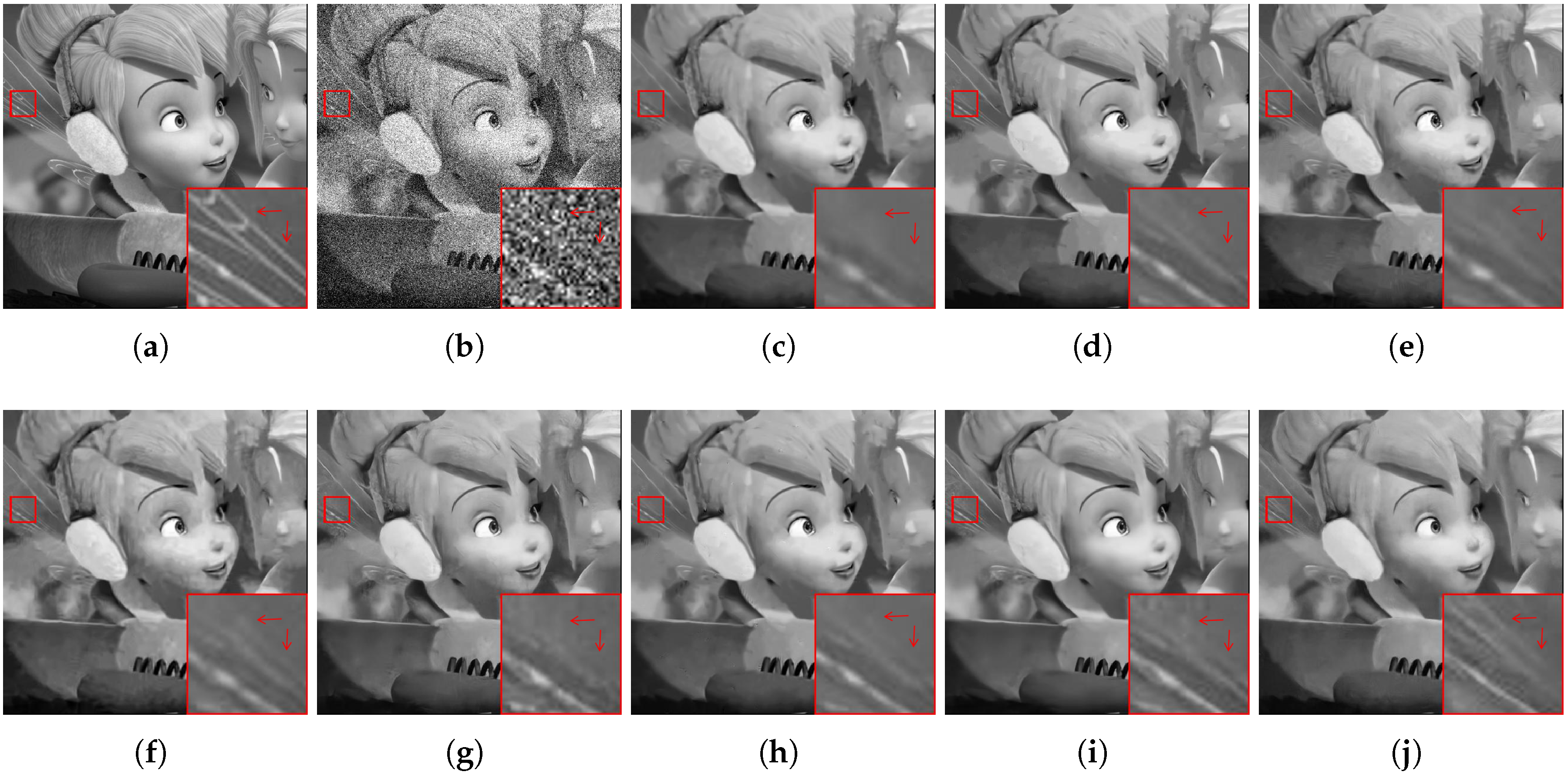

31]. In the latter method, a CNN is leveraged to iteratively learn the denoising model in each stage in the deep boosting method; this requires massive images for training to achieve an appropriate final result. In contrast, our method boosts the denoising effect using the image fusion strategy. Without using any training samples, we compute the weight map along each image pixel to fuse two initially denoised images for an enhanced denoising effect. In summary, the novelty of our method lies in three aspects. First, our method combines complementary information from images denoised using two state-of-the-art methods via a fusion strategy. Second, the strategy is excellent in terms of the preservation of details via a simple fusion structure. Third, it does not involve a computationally expensive training step. The DnCNN model used in this study was trained by its original developers, and the parameters are set using the source code of the model. Furthermore, NCSR is based on the nonlocal self-similarity and sparse representation of image patches, which need not be learned from external samples. Therefore, our method does not involve any loop iterations for processing images. The effectiveness of the proposed denoising booster can be seen in

Figure 1, where some test images and the corresponding denoised images are shown. The proposed booster performs well with regard to the preservation of the image details. In the

image, the NCSR can recover the eyelashes; however, it produces artifacts on the eyeball. Though DnCNN produces less artifacts, it tends to create an over-smooth region, with the eyelashes being almost invisible. However, by combining the strengths of these two methods, our method can preserve more details without generating many artifacts in the same region. We can also observe that the line in the

image has a gray intensity in the result obtained using the NCSR. Nevertheless, it becomes brighter after boosting is performed by combining the denoising performance of the DnCNN with that of the NCSR.

The boosting process is formulated as an adaptive weight-based image fusion problem to enhance the contrast and preserve the image details of the initially denoised images. Specifically, unlike many existing conventional pixel-wise image fusion methods that employ one weight to reflect the pixel value in the image sequence, our method applies a weight map to adaptively reflect the relative pixel intensity and the global gradient of the initially denoised images obtained using the NCSR and the DnCNN, respectively. Taking the overall brightness and neighboring pixels into consideration, two kinds of weights are designed as follows:

The relative pixel intensity based weight is designed to reflect the importance of the processed pixel value relative to the neighboring pixel intensity and the overall brightness.

The global gradient based weight is designed to reflect the importance of the regions with largely variational pixel values and to suppress the saturated pixels in the initial denoised images.

A linear combination of these two kinds of weights determines the final weight. Two initially denoised images are incorporated into the fusion framework, and the boosting method can significantly combine the complementary strengths of the two aforementioned methods to achieve better denoising results. Several extensive experimental results demonstrate that the proposed method visually and quantitatively outperforms many other state-of-the-art denoising methods. The key contributions of this study are summarized as follows:

Optimal combination. We introduce a denoising effect boosting method to improve the denoising performance of a single method, NCSR or DnCNN. Each denoiser has its own characteristics. The NCSR performs well on images with abundant texture regions and repeated patterns. Owing to the strategies of residual learning [

29] and batch normalization [

28], the DnCNN is better for denoising irregular and smooth regions. A linear combination of NCSR and DnCNN is better than either of the individual methods as well as a number of other state-of-the-art denoising methods. To the best of our knowledge, the proposed denoising effect boosting method is the first of its kind in image denoising.

Weight design. We introduce two adaptive weights to reflect the relative pixel intensity and global gradient. One is to emphasize the processed pixel value according to the surrounding pixel intensities and the overall brightness. The other is to emphasize the areas where pixel values vary significantly and to suppress saturated pixels in the initial denoised images. Therefore, the weight design is powerful in preserving image details and enhancing the contrast when denoising.

In

Section 2, we first review two denoising methods, NCSR and DnCNN, and highlight their contributions to our study. In

Section 3, we describe the proposed method in detail and present the proposed adaptive combination algorithm. In

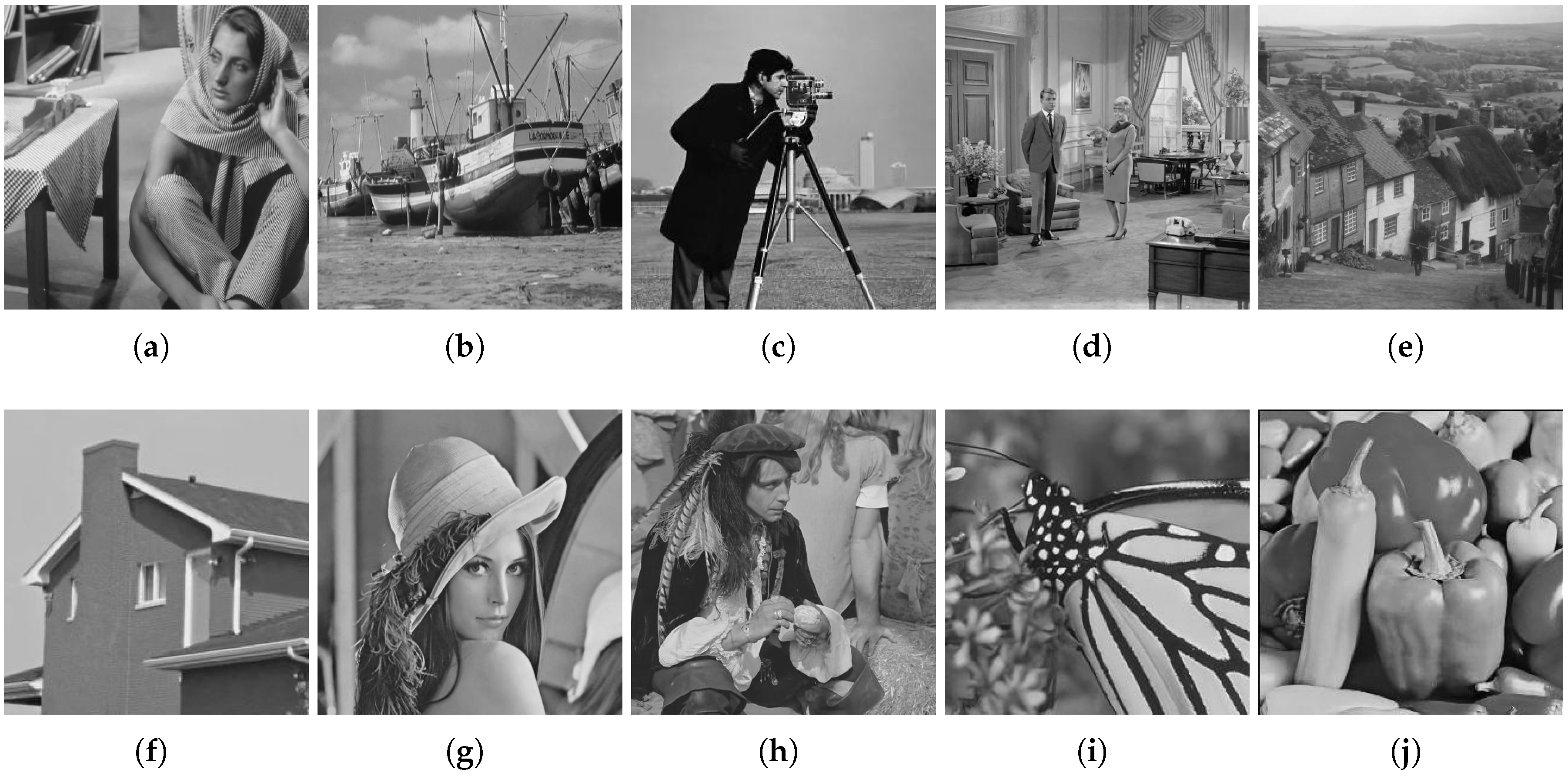

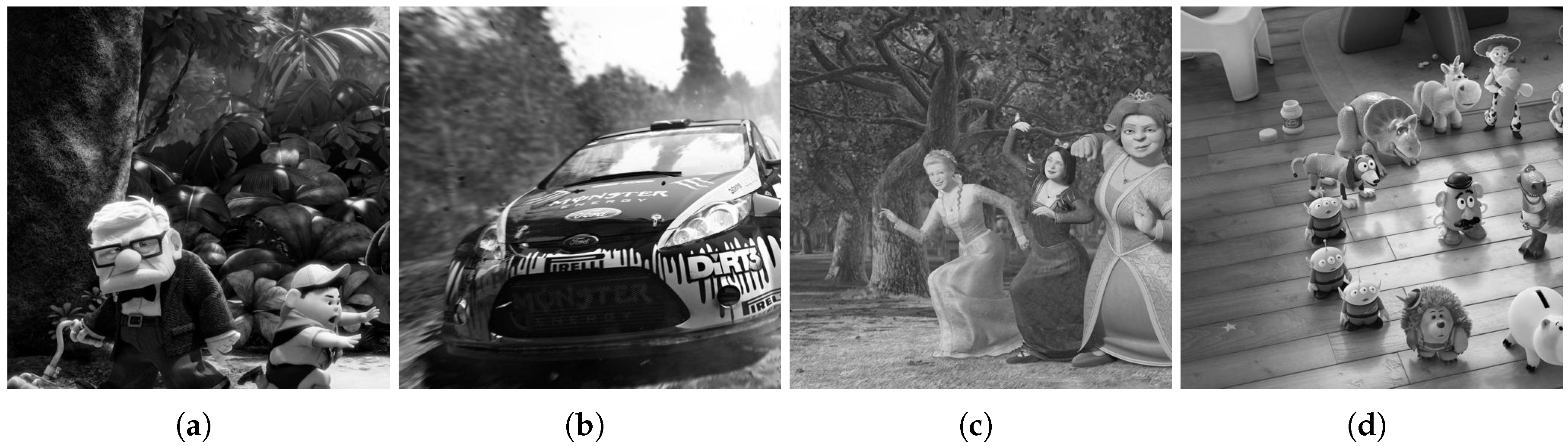

Section 4, the experimental results obtained using the proposed method are compared with those of other state-of-the-art methods. In

Section 5, we discuss the results in detail. Finally, we conclude the study and discuss the directions for future research in

Section 6.

5. Discussion

There are two important indicators of denoising performance: the denoising effect and computational complexity. Unfortunately, a high denoising performance is often obtained at the cost of computational complexity; therefore, the development of denoising methods is a spiraling process. The current denoising models must seek a reasonable trade-off between denoising performance and run time. This encourages researchers to continue to focus attention on improving the current state-of-the-art models. The computation time of our method comprises the fusion time of the initially denoised images and the running time of NCSR and DnCNN; therefore, it is longer than the running time of the single denoiser. However, unlike several deep learning-based boosting methods, the fusion step in the proposed method does not involve the training stage, which is time-consuming. The fusion times of our method for processing six images selected from the ten commonly used test images employed in this study, with sizes of

and

, are listed in

Table 9. We evaluate the fusion time by denoising the ten images with noise levels of 10, 30, and 60. It can be seen that the fusion process takes very little time; therefore, the computational complexity mainly depends on the two algorithms to be fused. Our goal is to introduce a novel method for boosting the denoising effect using an image fusion strategy. With the evolution of the denoising methods to be fused, the efficiency of our method will increase. The proposed method allows the combination of the initial denoised images generated by any two image denoisers; thus, one can train two complementary algorithms that are different from the ones employed in this study and use our method to boost the denoising effect. In summary, the proposed method achieves optimal results at a reasonable computational cost; furthermore, it allows for an effective performance/complexity trade-off in the future.

Whereas image denoising algorithms have produced highly promising results over the past decade, it is worth mentioning that it has become increasingly difficult for several denoising methods to achieve even minor performance improvements. According to Levin et al. [

49], when compared over the BSD dataset, for

, the predicted maximal possible improvement (over the performance of BM3D) for external denoising tasks is bounded by 0.7 dB. However, the proposed method exceeds the performance of BM3D by 0.77 dB, as shown in

Table 5, which is a substantial improvement. Through the image fusion strategy, our method offers a solution to further improve individual internal or external denoising algorithms. The fused image can provide a visually better output image that contains more information. Therefore, it is worth achieving a more specific and accurate result using our method at the reasonable computational cost. In fact, there are abundant real-world applications (e.g., machine vision, remote sensing, and medical diagnoses) that can benefit from the proposed method. Specifically, in digital medical treatment, the detailed features in images may be ignored by the NCSR algorithm, which is based on the non-local self-similarity of images; however, such features can be preserved by the external denoising method DnCNN. Thus, the proposed method can output better and more comprehensive images by combing the complementary information of the medical images denoised by the two methods, thereby providing more accurate data for clinical diagnosis and treatment. This will be crucial for feature extraction from images of lesions, three-dimensional reconstruction and multi-source medical image fusion, and other technologies that assist in diagnosis. Thus, the proposed method could be of immense value with regard to providing an alternative for boosting the denoising effect.

The boosting algorithm developed in this study can be interpreted as an algorithm for the fusion of two initially denoised images. Thus, it is not limited to the noise models of algorithms such as AWGN, and can be adapted to other types of noise if it is allowed by the constituent denoising algorithms. In addition, a good discrimination between noise and image texture information can significantly improve the noise reduction effect, which is also the goal of many traditional denoising algorithms. Currently, researchers are continuing to improve the performance of the state-of-the-art denoising methods. In the future, we will determine complementary algorithms with better performances to deal with various denoising tasks by using our fusion strategy.