An Object-Oriented Color Visualization Method with Controllable Separation for Hyperspectral Imagery

Abstract

1. Introduction

2. Materials and Methods

2.1. Design Goals and Display Strategy

- The method simultaneously displays global, between-class, and within-class information, and the separation factor adjusts the balance among the three.

- Consistent with human visual perception, hue is used to represent the different categories, which gives good class separability, while the pixels in the output image retain good distance-preserving properties.

- The method makes full use of the supervised information. To some extent, it also addresses the nonlinearity of the data and the large-scale processing problem of manifold algorithms.

2.2. Applications of Class Data and Dimension Reduction within Classes

2.3. Determination of the Pixel Color

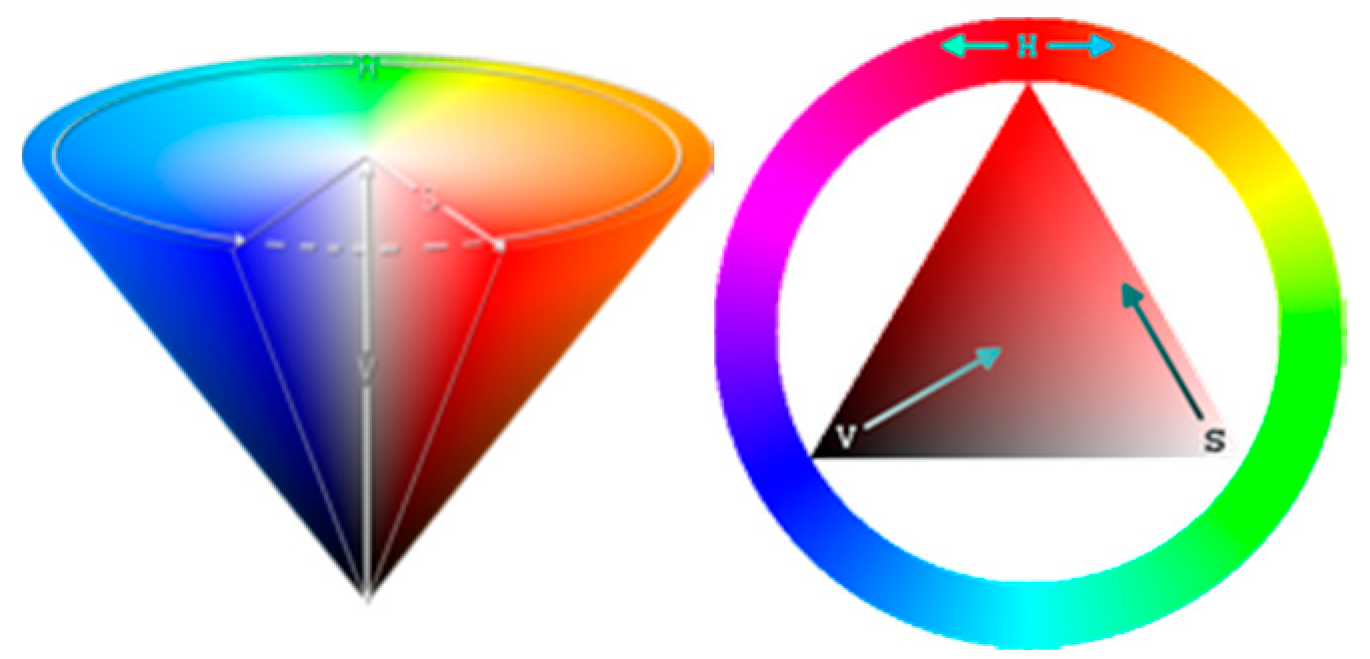

2.3.1. Color Space

2.3.2. Determining the Hues of Classes

2.3.3. Determining the Hues of Each Pixel

2.4. The Whole Data Display in the Color Space

3. Experiments and Results

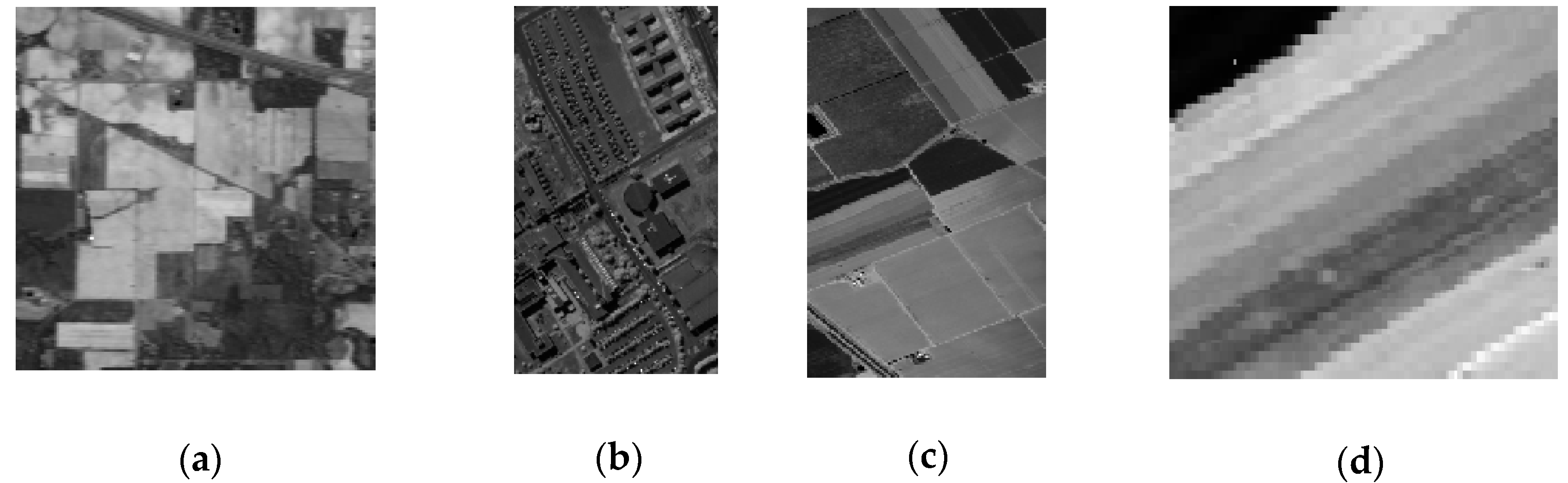

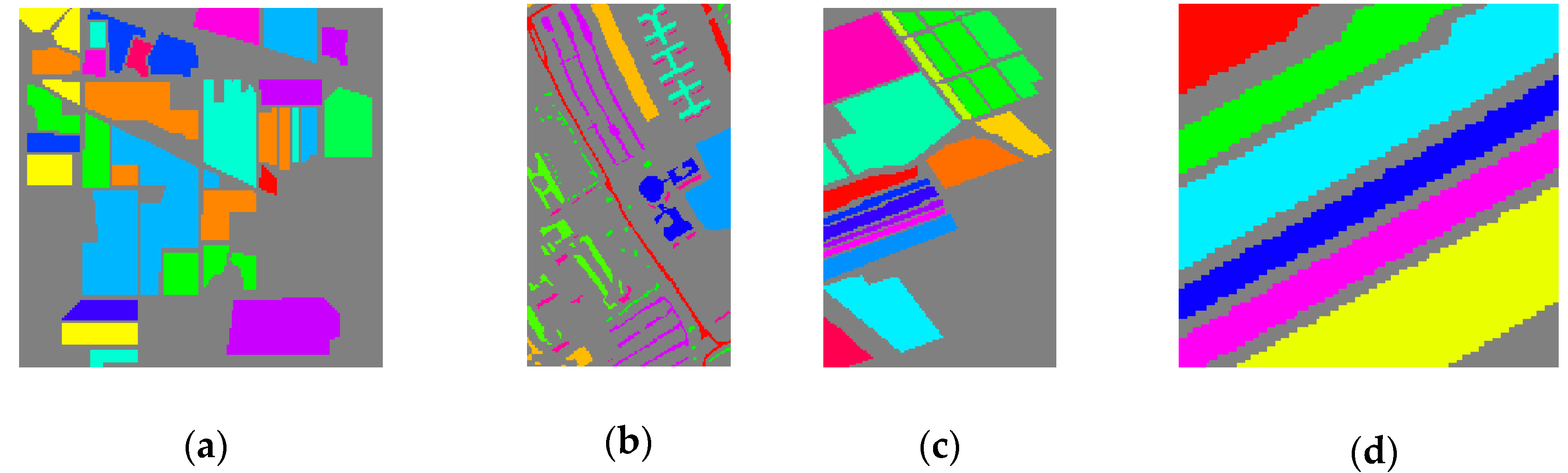

3.1. Hyperspectral Data Sets

3.2. Evaluation Criteria

- The optimum index factor (OIF) has often been used in the literature [23] for band selection. The OIF jointly considers the information in each single-band image and the correlation between bands. In this study, this information-to-redundancy ratio measures how useful the information in an image is: the larger the OIF, the more information the image contains. It is formulated as follows:

  $$\mathrm{OIF} = \frac{\sum_{i=1}^{3} S_i}{\sum_{i=1}^{3}\sum_{j=i+1}^{3} |R_{ij}|}$$

  where $S_i$ is the standard deviation of Euclidean distance in the color space of the i-th band of image data C, and $R_{ij}$ is the correlation coefficient between the i-th band and the j-th band.

- The distance-preserving property ρ measures how strongly the distances between pixels in the generated image correlate with the distances between the corresponding spectral vectors in the HSI data. The closer ρ is to 1, the better the image preserves spectral distances. It can be represented as follows [3]:

  $$\rho = \frac{X^{T}Y/|X| - \bar{X}\,\bar{Y}}{\mathrm{std}(X)\,\mathrm{std}(Y)}$$

  where vector X holds the spectral angle of each pair of pixels in the original hyperspectral space, vector Y holds the Euclidean distance between each pair of pixels in the CIELab color space, $\bar{X}$ is the mean of X, $|X|$ is the cardinality of X, and std(X) is the standard deviation of X.

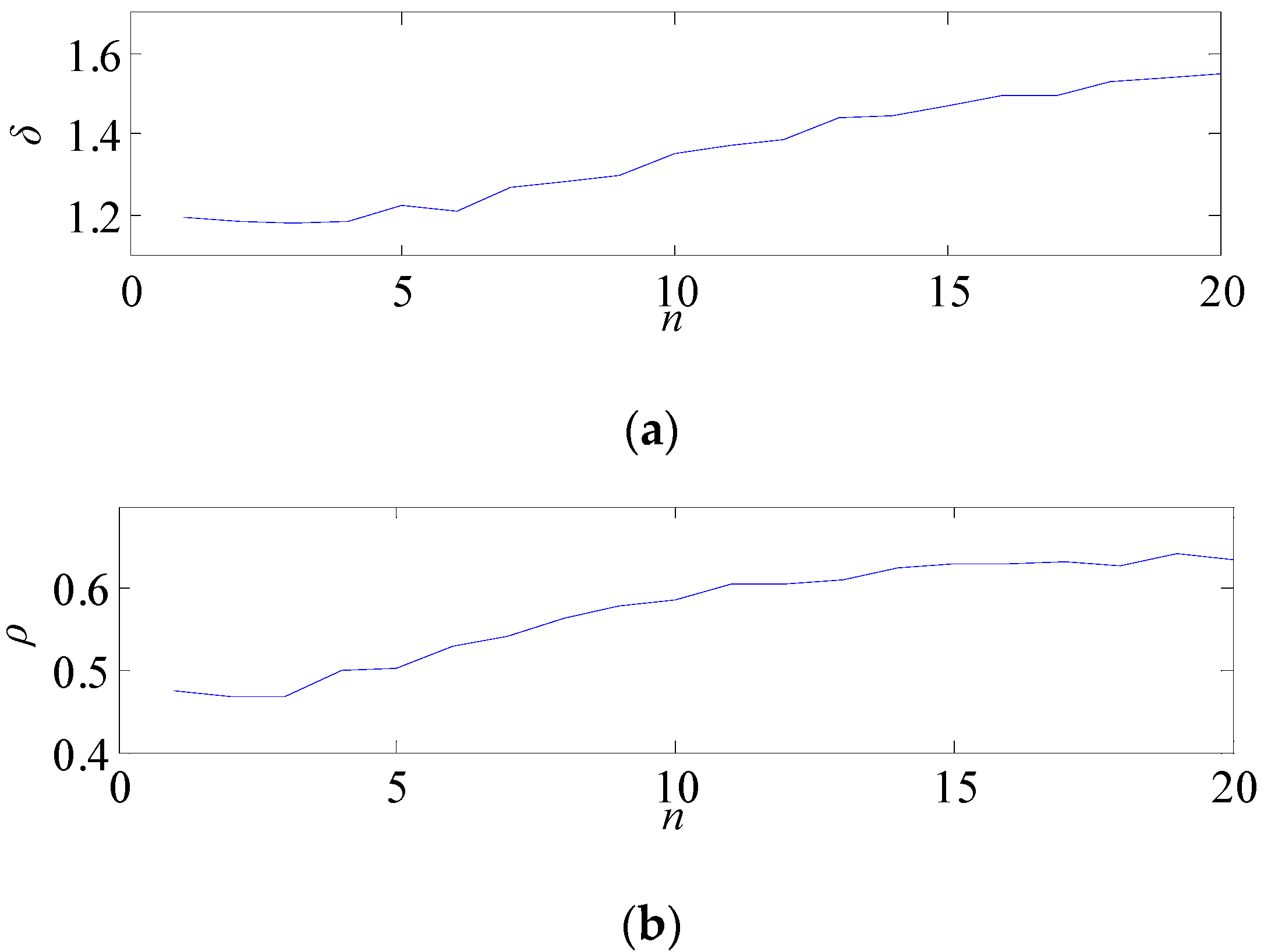

- The output image should not only show the relationships between pixels but also make different pixels easy to distinguish. Pixel separability is the evaluation criterion for this property, and it is measured by the average color difference δ between pixels. The larger δ is, the more obvious the difference between individual elements and the better the between-class separability. δ can be computed as follows:

  $$\delta = \frac{|Y|_1}{|Y|}$$

  where $|Y|_1$ and $|Y|$ are the L1 norm and the cardinality of vector Y, respectively.

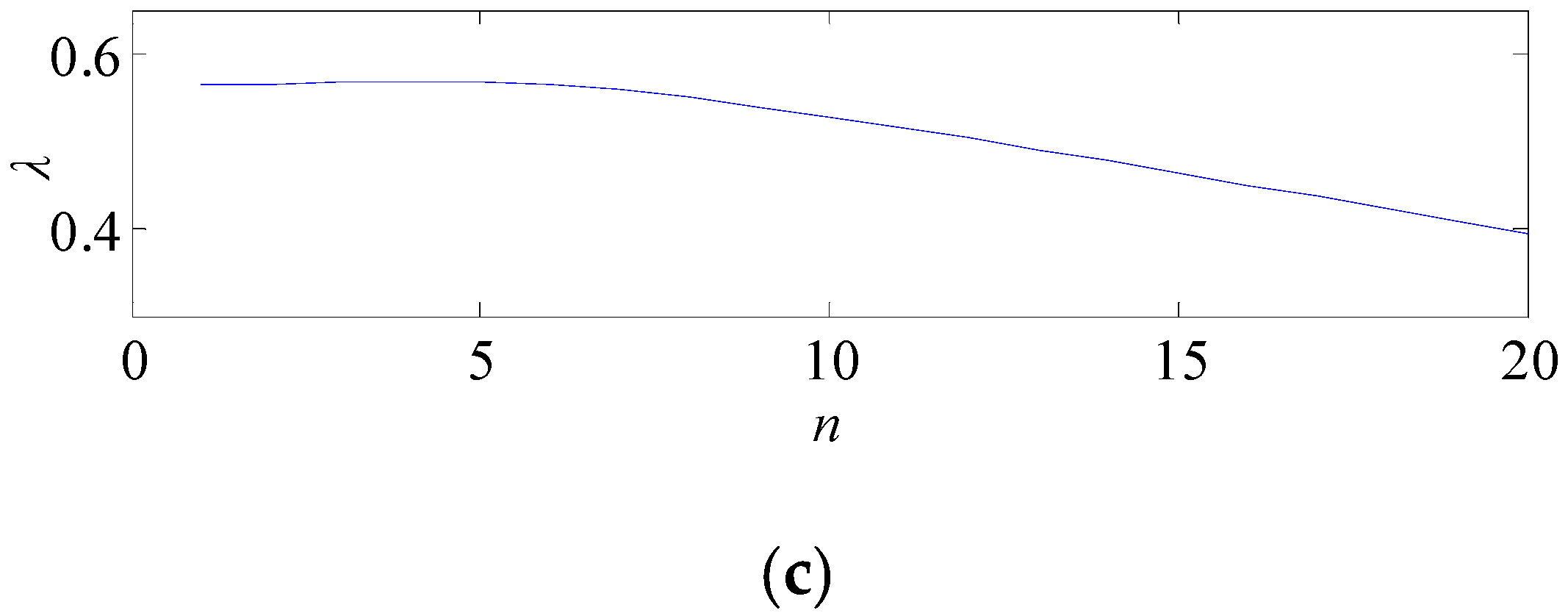

- λ, the average Euclidean distance between classes, is used to compare the separability of all classes. A larger λ indicates that the categories are more distinguishable. It can be represented as follows:

  $$\lambda = \frac{2}{K(K-1)} \sum_{i=1}^{K-1}\sum_{j=i+1}^{K} d(c_i, c_j)$$

  where $d(c_i, c_j)$ is the Euclidean distance between two different classes $c_i$ and $c_j$, and K is the number of classes.
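The four criteria above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation, and it rests on several assumptions: the function names are hypothetical, the OIF numerator is taken as the plain standard deviation of each color channel, the distance between two classes is taken as the Euclidean distance between their mean colors, and the input colors are assumed to be already converted to CIELab.

```python
import numpy as np


def pairwise_euclidean(points):
    """Euclidean distance for every unordered pair of rows (i < j)."""
    diff = points[:, None, :] - points[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    i, j = np.triu_indices(len(points), k=1)
    return dist[i, j]


def pairwise_spectral_angle(spectra):
    """Spectral angle in radians for every unordered pair of rows (i < j)."""
    unit = spectra / np.linalg.norm(spectra, axis=1, keepdims=True)
    cos = np.clip(unit @ unit.T, -1.0, 1.0)  # guard against rounding outside [-1, 1]
    i, j = np.triu_indices(len(spectra), k=1)
    return np.arccos(cos[i, j])


def oif(color_pixels):
    """Information/redundancy ratio of a three-channel image.

    Assumption: the numerator uses the standard deviation of each channel.
    """
    stds = color_pixels.std(axis=0)
    corr = np.corrcoef(color_pixels, rowvar=False)
    i, j = np.triu_indices(color_pixels.shape[1], k=1)
    return stds.sum() / np.abs(corr[i, j]).sum()


def rho(x, y):
    """Correlation between spectral distances x and color distances y."""
    return (x @ y / x.size - x.mean() * y.mean()) / (x.std() * y.std())


def delta(y):
    """Average color difference: L1 norm of y divided by its cardinality."""
    return np.abs(y).sum() / y.size


def lam(color_pixels, labels):
    """Mean distance between class centers (assumed definition of class distance)."""
    centers = np.stack(
        [color_pixels[labels == c].mean(axis=0) for c in np.unique(labels)]
    )
    return pairwise_euclidean(centers).mean()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    spectra = rng.random((50, 20)) + 0.1   # 50 synthetic pixels, 20 bands
    colors = rng.random((50, 3)) * 100     # stand-in for CIELab coordinates
    labels = rng.integers(0, 3, size=50)   # synthetic class labels
    x = pairwise_spectral_angle(spectra)
    y = pairwise_euclidean(colors)
    print(oif(colors), rho(x, y), delta(y), lam(colors, labels))
```

Building the full pairwise distance matrix needs memory quadratic in the number of pixels, so for a real scene the pairs would normally be computed on a random subsample of pixels.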

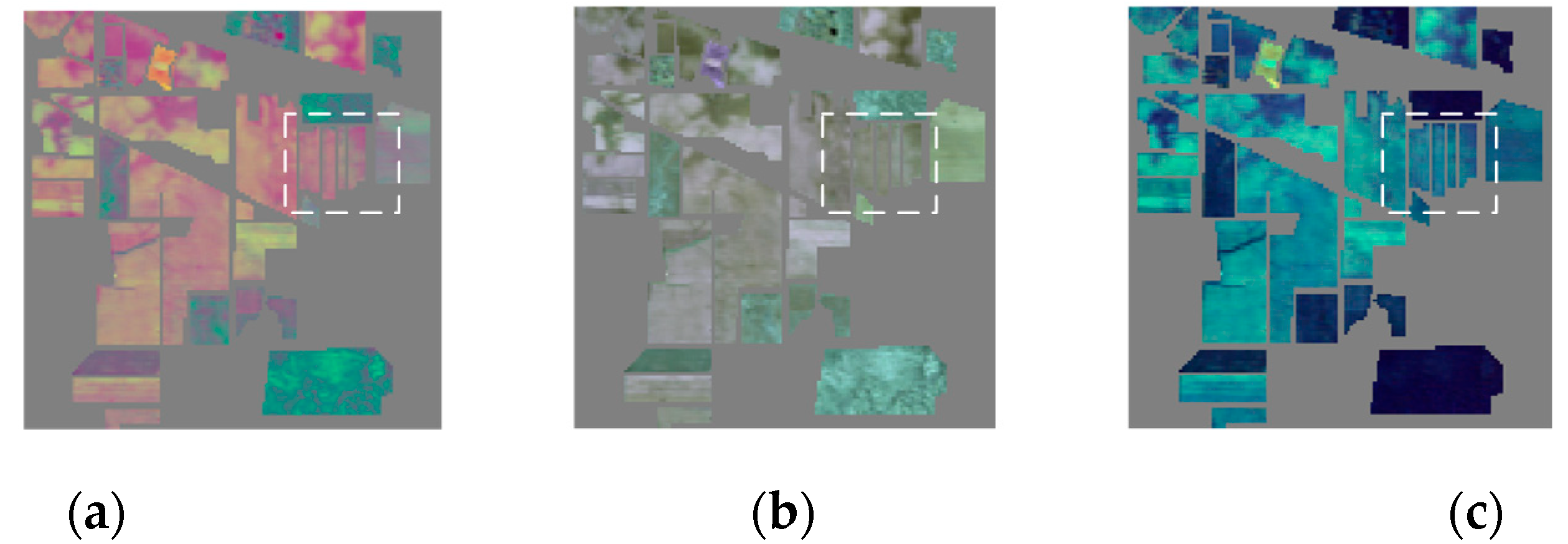

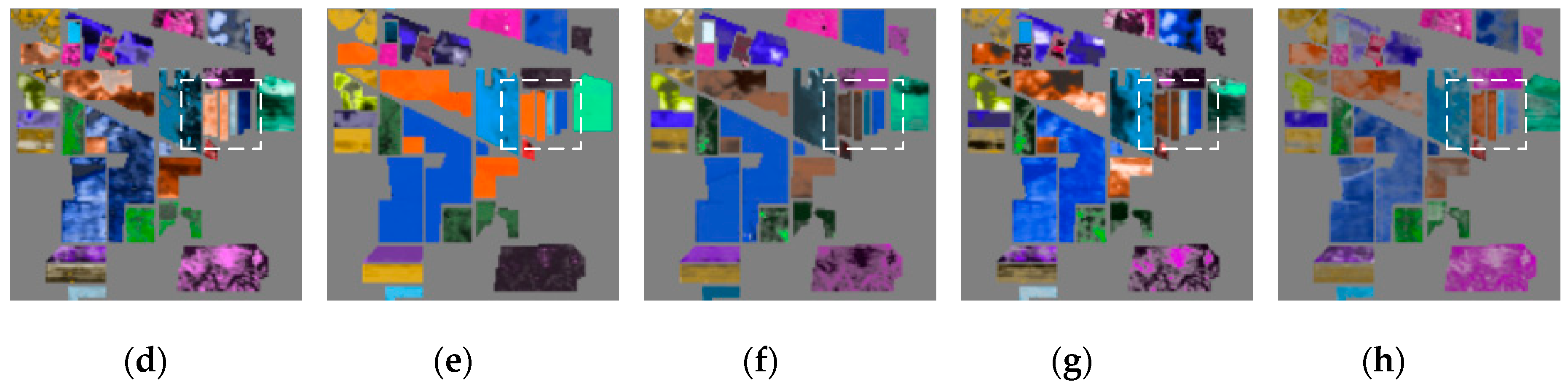

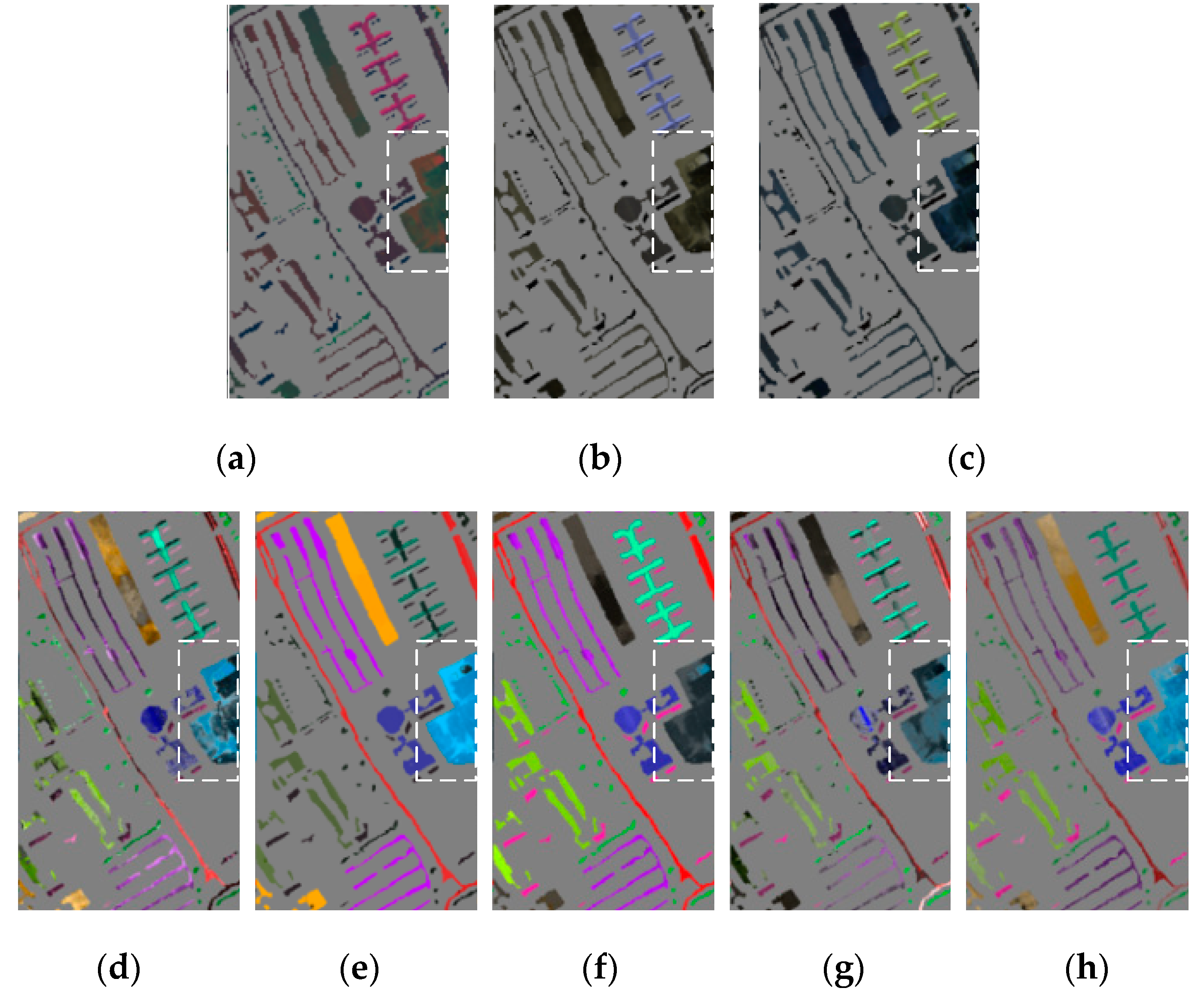

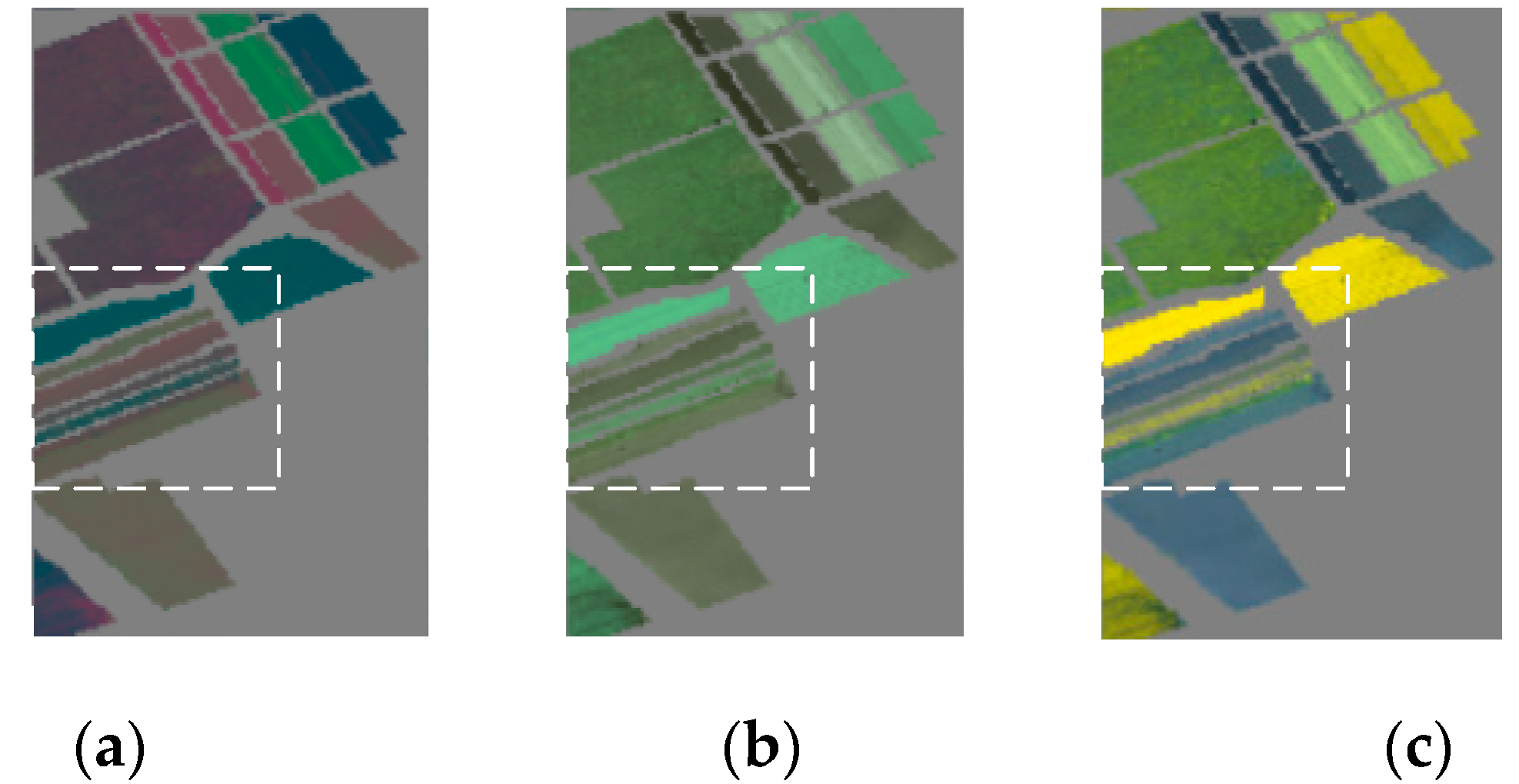

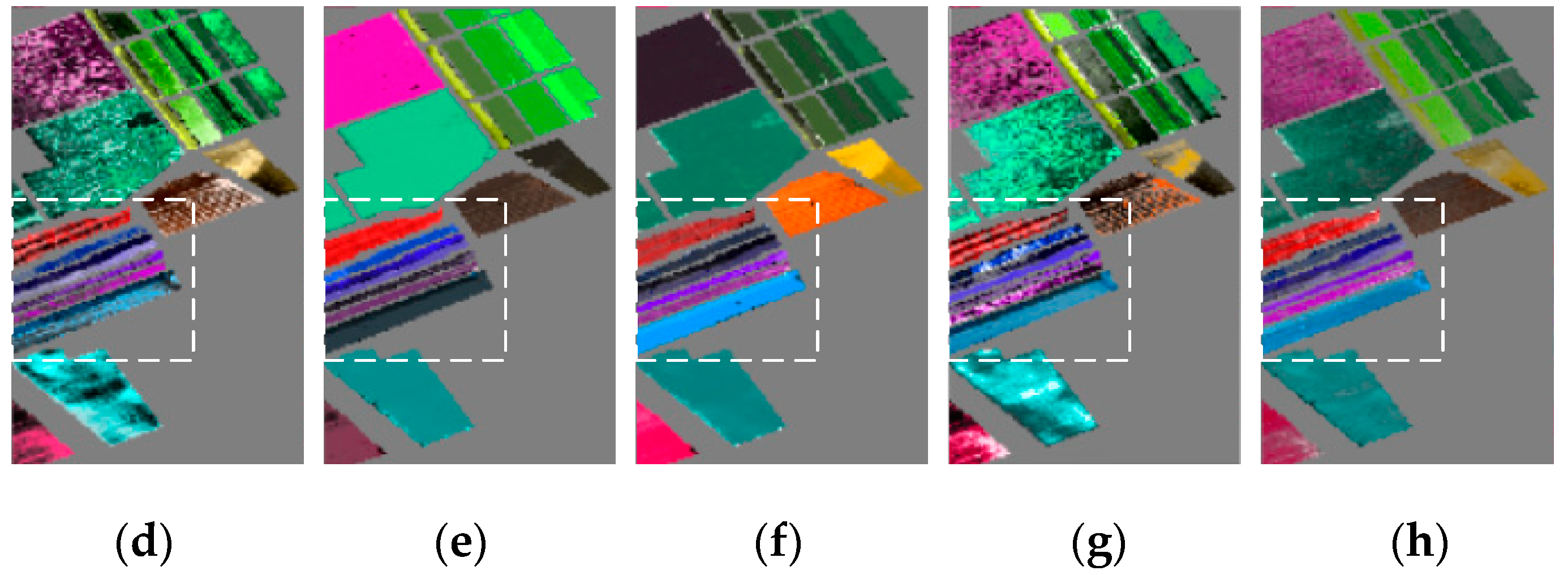

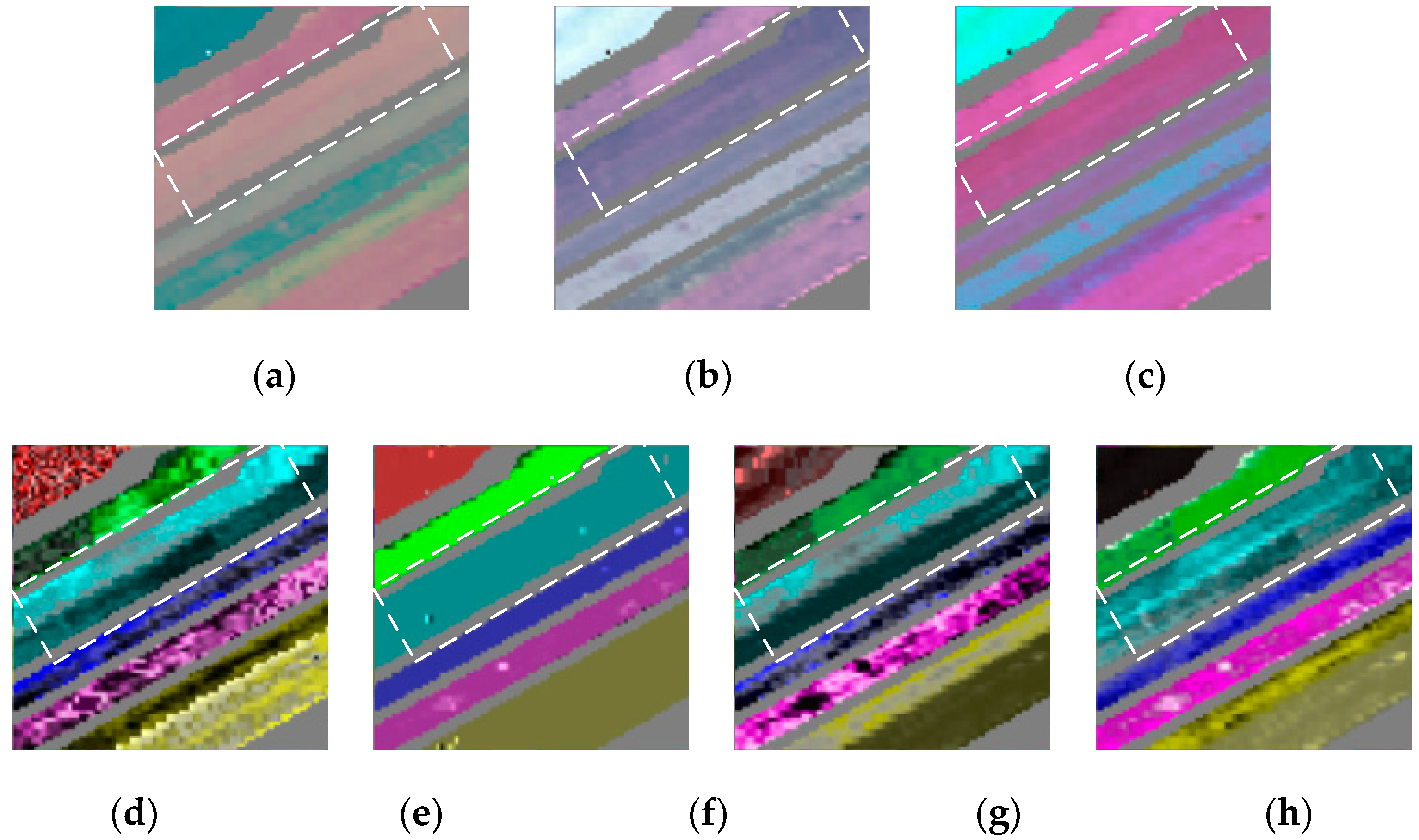

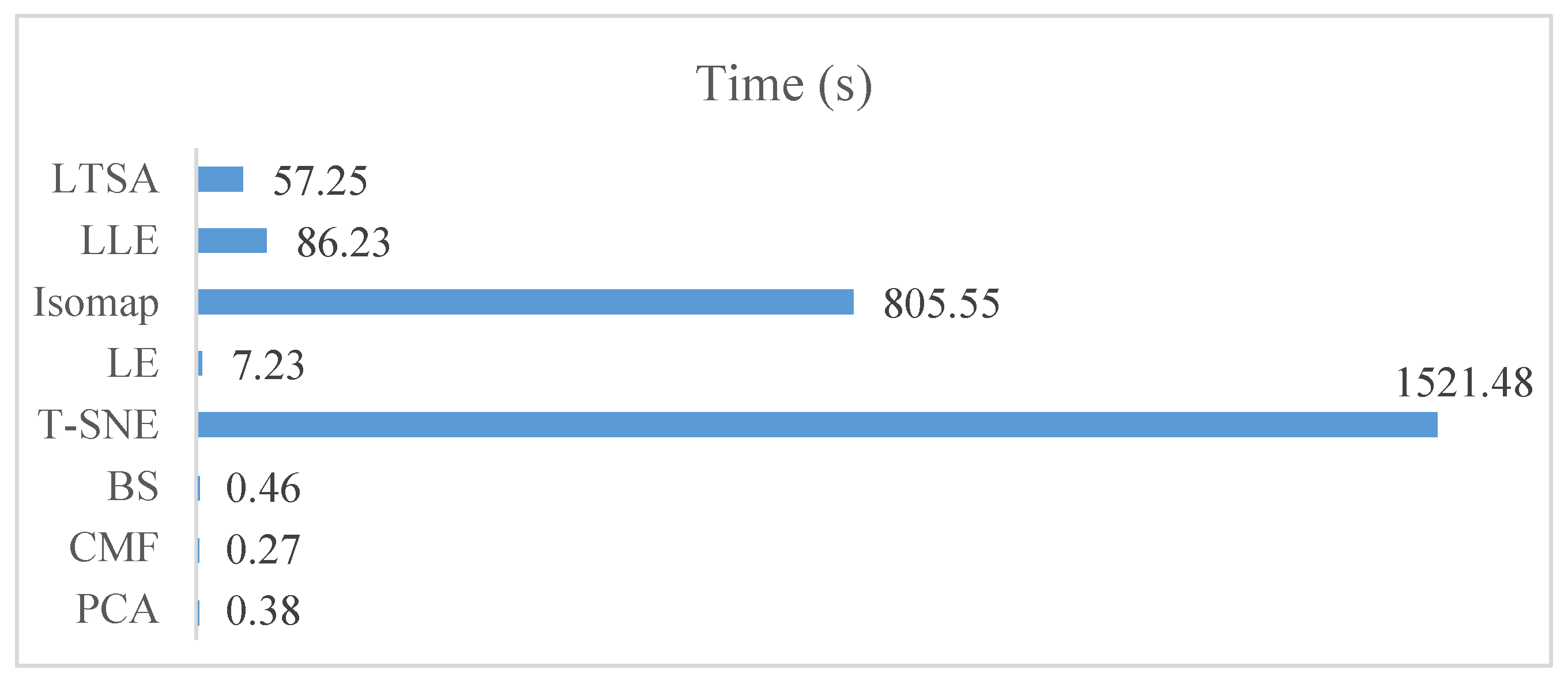

3.3. Experimental Results

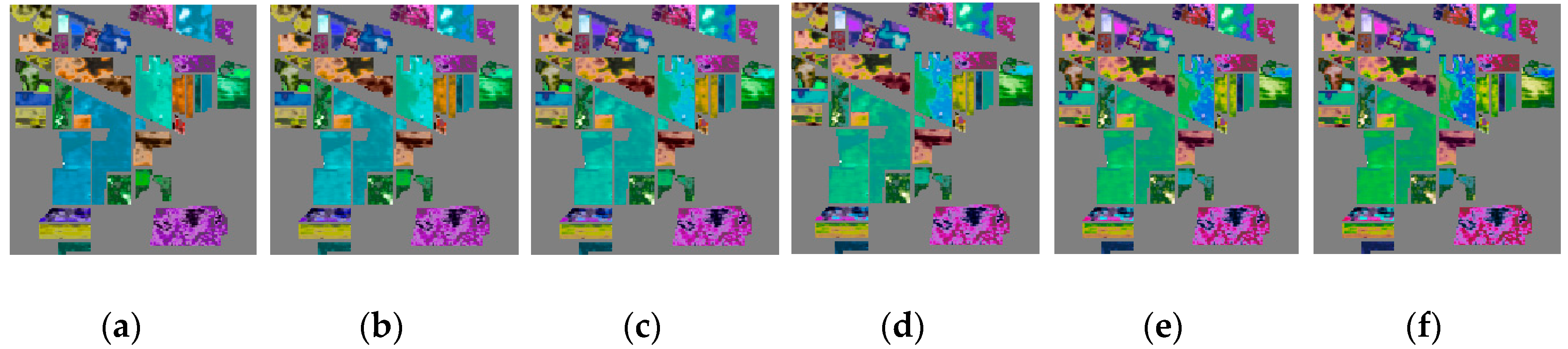

3.4. The Influence of the Separation Factor on the Images

4. Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Su, H.; Du, Q.; Du, P. Hyperspectral image visualization using band selection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2647–2658.

2. Du, Q.; Raksuntorn, N.; Cai, S.; Moorhead, R. Color display for hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1858–1866.

3. Jacobson, N.P.; Gupta, M.R. Design goals and solutions for display of hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2005, 43, 2684–2692.

4. Liao, D.; Qian, Y.; Tang, Y.Y. Constrained manifold learning for hyperspectral imagery visualization. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1213–1226.

5. Kang, X.; Duan, P.; Li, S.; Benediktsson, J.A. Decolorization-based hyperspectral image visualization. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4346–4360.

6. Duan, P.; Kang, X.; Li, S. Convolutional neural network for natural color visualization of hyperspectral images. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 3372–3375.

7. Liu, D.; Wang, L.; Benediktsson, J.A. Interactive multi-image colour visualization for hyperspectral imagery. Int. J. Remote Sens. 2017, 38, 1062–1082.

8. Cui, M.; Razdan, A.; Hu, J.; Wonka, P. Interactive hyperspectral image visualization using convex optimization. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1673–1684.

9. Mignotte, M. A bicriteria-optimization-approach-based dimensionality-reduction model for the color display of hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2012, 50, 501–513.

10. Duan, P.; Kang, X.; Li, S.; Ghamisi, P. Multichannel pulse-coupled neural network-based hyperspectral image visualization. IEEE Trans. Geosci. Remote Sens. 2019, 58, 1–13.

11. Yang, J.; Zhao, Y.Q.; Chan, J.C.W.; Xiao, L. A multi-scale wavelet 3D-CNN for hyperspectral image super-resolution. Remote Sens. 2019, 11, 1557.

12. Cai, S.; Du, Q.; Moorhead, R.J. Feature-driven multilayer visualization for remotely sensed hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2010, 48, 3471–3481.

13. Wang, L.; Liu, D.; Zhao, L. A color visualization method based on sparse representation of hyperspectral imagery. Appl. Geophys. 2013, 10, 210–221.

14. Paoletti, M.E.; Haut, J.M.; Plaza, J.; Plaza, A. A new deep convolutional neural network for fast hyperspectral image classification. ISPRS J. Photogramm. Remote Sens. 2018, 145, 120–147.

15. Lunga, D.; Prasad, S.; Crawford, M.M.; Ersoy, O. Manifold-learning-based feature extraction for classification of hyperspectral data: A review of advances in manifold learning. IEEE Signal Process. Mag. 2014, 31, 55–66.

16. Roweis, S.T.; Saul, L.K. Nonlinear dimensionality reduction by locally linear embedding. Science 2000, 290, 2323–2326.

17. Zhang, Z.; Zha, H. Principal manifolds and nonlinear dimension reduction via tangent space alignment. J. Shanghai Univ. 2004, 8, 406–424.

18. Bachmann, C.M.; Ainsworth, T.L.; Fusina, R.A. Improved manifold coordinate representations of large-scale hyperspectral scenes. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2786–2803.

19. van der Maaten, L.J.P.; Hinton, G.E. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

20. Belkin, M.; Niyogi, P. Laplacian eigenmaps for dimensionality reduction and data representation. Neural Comput. 2003, 15, 1373–1396.

21. Healey, C.G. Effective Visualization of Large Multidimensional Datasets. Ph.D. Thesis, The University of British Columbia, Vancouver, BC, Canada, 1996.

22. Plataniotis, K.N.; Venetsanopoulos, A.N. Color Image Processing and Applications; Springer: Berlin, Germany, 2000.

23. Dwivedi, R.S.; Rao, B.R.M. The selection of the best possible Landsat TM band combination for delineating salt-affected soils. Int. J. Remote Sens. 1992, 13, 2051–2058.

| OIF | Data-oriented method | | | Object-oriented method | | | | |
|---|---|---|---|---|---|---|---|---|
| | PCA | CMF | BS | t-SNE | LE | ISOMAP | LLE | LTSA |
| Indian | 0.1008 | 0.0091 | 0.0300 | 0.4608 | 0.2641 | 0.1120 | 0.1335 | 0.1476 |
| Pavia | 0.0125 | 0.0133 | 0.0162 | 0.3992 | 0.3405 | 0.2571 | 0.1701 | 0.0903 |
| Salinas | 0.0214 | 0.0099 | 0.0400 | 0.5108 | 0.4568 | 0.1190 | 0.1213 | 0.0957 |
| SalinasA | 0.3940 | 0.0165 | 0.0281 | 0.5962 | 0.2533 | 0.0904 | — | 0.1008 |

| | Data-oriented method | | | Object-oriented method | | | | |
|---|---|---|---|---|---|---|---|---|
| | PCA | CMF | BS | t-SNE | LE | ISOMAP | LLE | LTSA |
| Indian | 0.9306 | 0.7815 | 0.9641 | 0.8326 | 0.8273 | 0.7567 | 0.8956 | 0.9705 |
| Pavia | 0.8482 | 0.7245 | 0.8362 | 0.9707 | 0.9645 | 0.9363 | 0.8823 | 0.8880 |
| Salinas | 0.7167 | 0.5129 | 0.9461 | 0.8544 | 0.8440 | 0.7327 | 0.6638 | 0.6769 |
| SalinasA | 0.6574 | 0.9158 | 0.6915 | 0.8578 | 0.8455 | 0.7478 | — | 0.6272 |

| | Data-oriented method | | | Object-oriented method | | | | |
|---|---|---|---|---|---|---|---|---|
| | PCA | CMF | BS | t-SNE | LE | ISOMAP | LLE | LTSA |
| Indian | 8.76 | 6.15 | 14.09 | 23.83 | 19.80 | 14.54 | 15.78 | 18.98 |
| Pavia | 7.66 | 6.32 | 9.65 | 29.16 | 29.28 | 25.10 | 29.46 | 33.29 |
| Salinas | 4.66 | 10.16 | 23.39 | 19.79 | 21.07 | 14.89 | 19.12 | 13.36 |
| SalinasA | 6.73 | 19.65 | 19.65 | 16.48 | 14.07 | 11.73 | — | 11.58 |

| | Data-oriented method | | | Object-oriented method | | | | |
|---|---|---|---|---|---|---|---|---|
| | PCA | CMF | BS | t-SNE | LE | ISOMAP | LLE | LTSA |
| Indian | 0.2109 | 0.1982 | 0.5483 | 0.4244 | 0.4441 | 0.4152 | 0.5601 | 0.7734 |
| Pavia | 0.1762 | 0.3934 | 0.3848 | 0.3986 | 0.3599 | 0.4958 | 0.9241 | 0.7948 |
| Salinas | 0.1930 | 0.3463 | 0.6002 | 0.4397 | 0.4932 | 0.5262 | 0.6637 | 0.6632 |
| SalinasA | 0.2402 | 0.5261 | 0.6168 | 0.3972 | 0.3572 | 0.6018 | — | 0.6276 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, D.; Wang, L.; Benediktsson, J.A. An Object-Oriented Color Visualization Method with Controllable Separation for Hyperspectral Imagery. Appl. Sci. 2020, 10, 3581. https://doi.org/10.3390/app10103581
