Evolutionary Neural Architecture Search (NAS) Using Chromosome Non-Disjunction for Korean Grammaticality Tasks

Abstract

Featured Application

1. Introduction

- (1)

- a. John-i Mary-lul coahanta.
  John-subject Mary-object like
  ‘John likes Mary.’
- b. Mary-lul John-i coahanta.
  Mary-object John-subject like
  ‘John likes Mary.’

- (2)

- a. Mary-lul coahanta.
- b. John-i coahanta.
- c. coahanta.

2. Background

2.1. Automated Machine Learning (AutoML)

2.2. Neural Architecture Search (NAS)

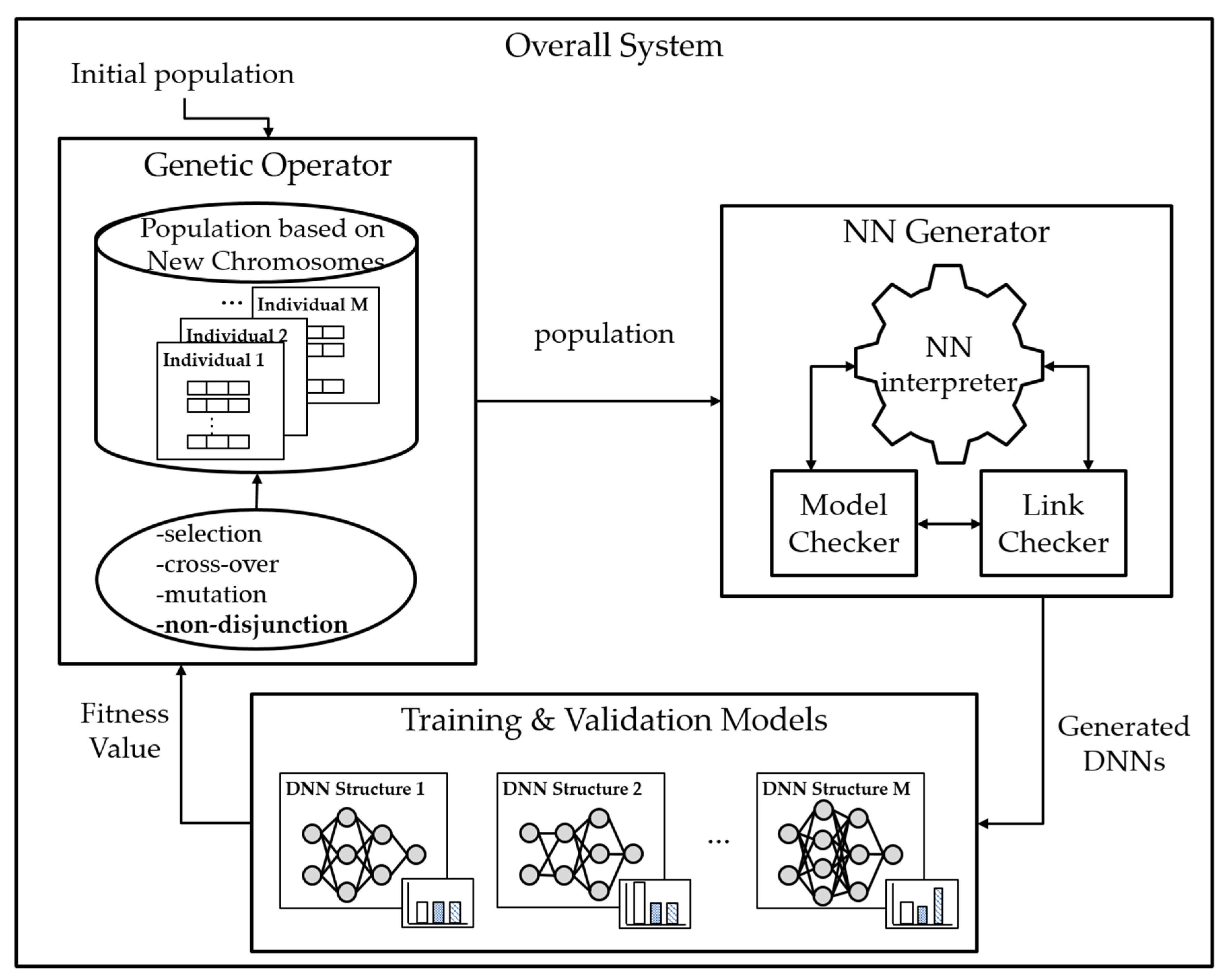

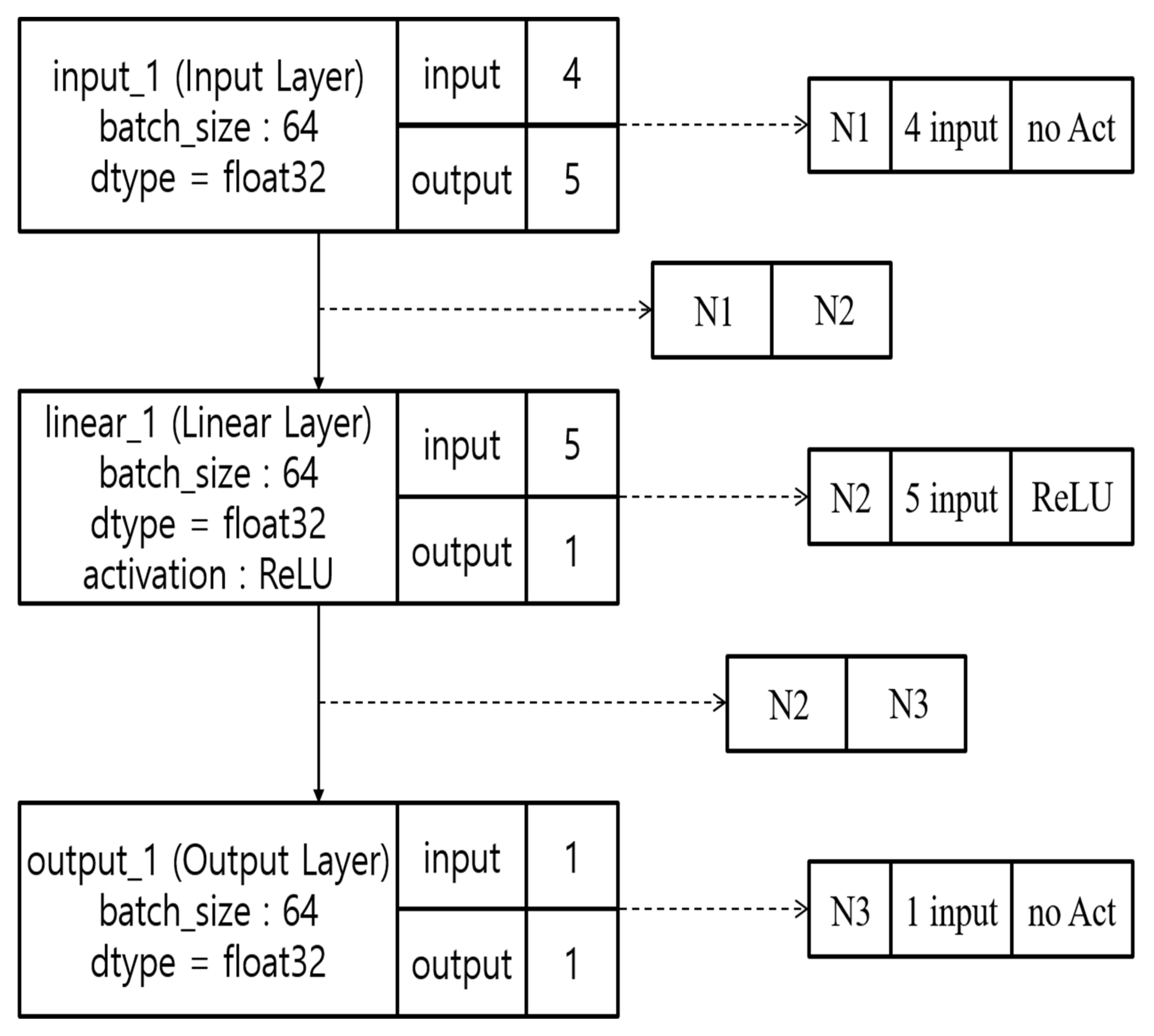

3. Methodology

4. Experiment

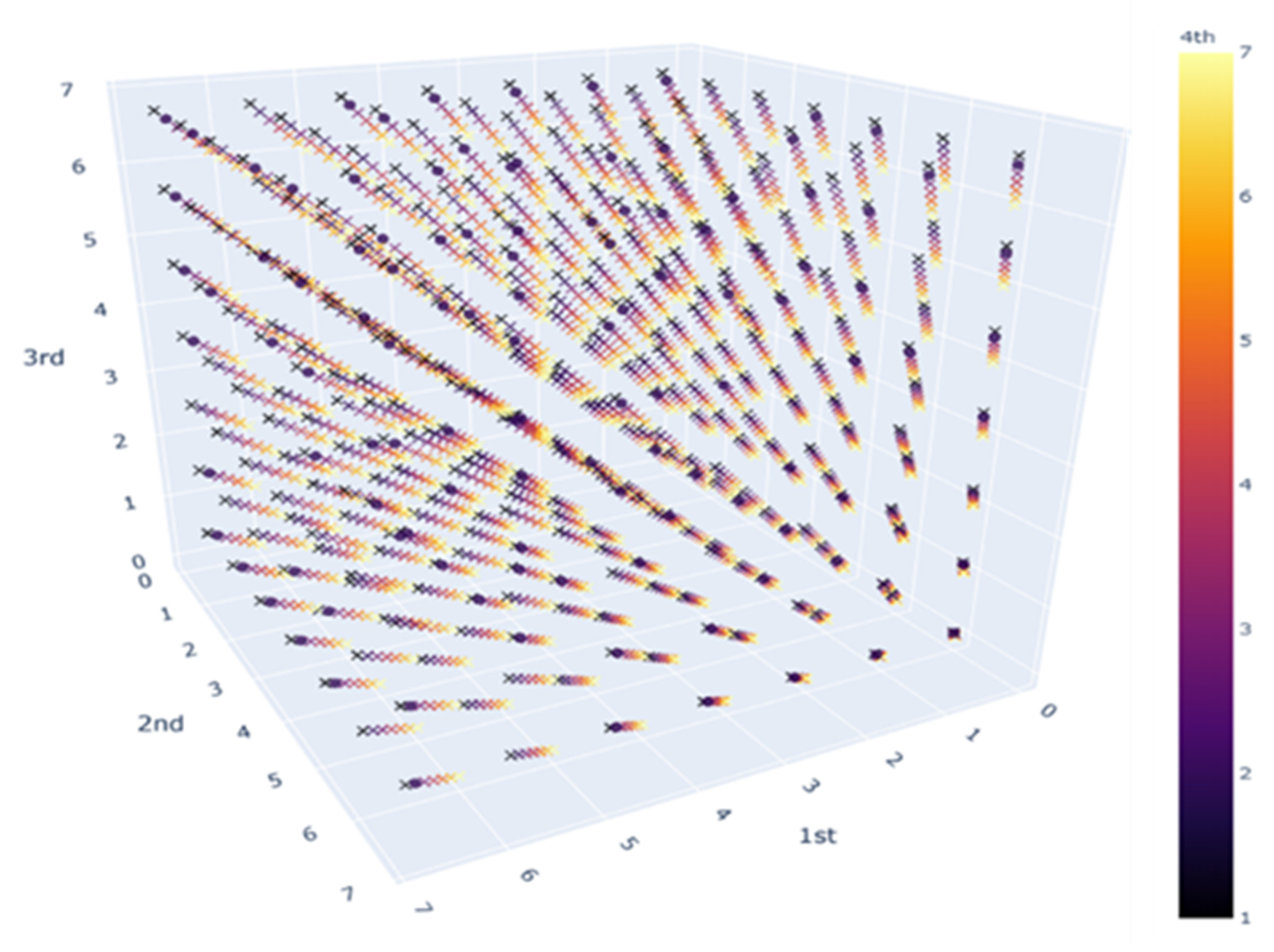

4.1. Distribution of Grammatical Sentences in Four-Word Level Sentences in Korean

4.2. Experiment Setups

4.3. Experiment Results

5. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest


| Yepputako | John | Cipeysey | Malhassta | | |
|---|---|---|---|---|---|
| Jane | pretty | John | Mary | home | said |
| 1st input | 2nd input | 3rd input | 4th input | | |
| “At home, John said to Mary that Jane is pretty.” | | | | | |

| Parameter | Value |
|---|---|
| Population | 50 |
| Generations | 30 |
| Mutation rate | 0.05 |
| Cross-over rate | 0.05 |
| Non-disjunction rate | 0.1 |
| Learning rate | 0.01 |
| Criterion | MSELoss |
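The parameter table can be read as the configuration of an evolutionary search loop. The following is a minimal sketch under stated assumptions, not the authors' implementation: the names `SearchConfig`, `GENE_POOL`, and `make_child` are hypothetical, a genome is simplified to a list of layer tokens, and non-disjunction is modeled loosely as a chromosome being gained or lost during reproduction so that the genome length can change.

```python
import random
from dataclasses import dataclass

# Hypothetical layer vocabulary, used only to make the toy example concrete.
GENE_POOL = ("dense", "relu", "tanh", "skip")


@dataclass(frozen=True)
class SearchConfig:
    """Values copied from the parameter table; field names are illustrative."""
    population: int = 50
    generations: int = 30
    mutation_rate: float = 0.05
    crossover_rate: float = 0.05
    non_disjunction_rate: float = 0.1
    learning_rate: float = 0.01  # would be used when training each candidate
    criterion: str = "MSELoss"   # loss name as listed in the table


def make_child(parent_a, parent_b, cfg, rng):
    """Toy reproduction step on genomes stored as lists of tokens."""
    child = list(parent_a)

    # Crossover: keep a prefix of parent_a, take the rest from parent_b.
    if child and parent_b and rng.random() < cfg.crossover_rate:
        cut = rng.randrange(1, min(len(child), len(parent_b)) + 1)
        child = child[:cut] + list(parent_b[cut:])

    # Non-disjunction (assumed form): one chromosome is duplicated or lost,
    # which is what lets the genome grow or shrink.
    if child and rng.random() < cfg.non_disjunction_rate:
        i = rng.randrange(len(child))
        if rng.random() < 0.5:
            child.insert(i, child[i])  # gain an extra copy
        elif len(child) > 1:
            del child[i]               # lose one, never below length 1
        else:
            child.append(child[0])     # single-gene genome can only grow

    # Mutation: each token is independently replaced with small probability.
    return [
        rng.choice(GENE_POOL) if rng.random() < cfg.mutation_rate else gene
        for gene in child
    ]


if __name__ == "__main__":
    cfg = SearchConfig()
    rng = random.Random(0)
    parent_a = ["dense", "relu", "dense", "tanh"]
    parent_b = ["dense", "tanh", "skip", "dense"]
    children = [make_child(parent_a, parent_b, cfg, rng)
                for _ in range(cfg.population)]
    print(sorted({len(c) for c in children}))
```

In a full search, each child would be decoded into a network, trained with the listed learning rate and loss, and scored; that part is omitted here because the source does not describe it in this section.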

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Park, K.-m.; Shin, D.; Yoo, Y. Evolutionary Neural Architecture Search (NAS) Using Chromosome Non-Disjunction for Korean Grammaticality Tasks. Appl. Sci. 2020, 10, 3457. https://doi.org/10.3390/app10103457
