Automatic Test Data Generation Using the Activity Diagram and Search-Based Technique

Abstract

1. Introduction

- We automate the entire test data generation process. To this end, we introduce a search-based approach using a genetic algorithm (GA) whose fitness function is designed to reward maximum coverage of def-use pairs.

- Beyond automating test data generation, this approach generates test cases directly from models of the system under test (SUT), without transforming them into intermediate test models. In contrast, our previous study [24] required manually annotating the data flow in an activity diagram (AD) and converting it into an intermediate test model.

- We empirically compare the effectiveness of AutoTDGen with that of DFAAD and EvoSuite.

2. Related Work

2.1. AD-Based Test Case Generation

2.2. Evolutionary Approaches Using Metaheuristic Algorithms

2.3. Revisiting Data Flow Representation and Concepts in ADs

- A = {a1, a2, …, an} is a set of executable nodes. Actions are the only kind of executable node; object nodes are attached to actions as action pins. Executable nodes represent the lower-level steps of the overall activity.

- E is a set of edges, where E ⊆ A × A.

- C = {c1, c2, …, cn} = DN ∪ JN ∪ FN ∪ MN is a set of control nodes, where DN is a set of decision nodes, JN is a set of join nodes, FN is a set of fork nodes, and MN is a set of merge nodes. In terms of data flow, DN is analogous to predicate use.

- aI is an initial activity node, where aI ⊆ A and aI ≠ ∅.

- aF is a set of final nodes, where aF ⊆ A and aF ≠ ∅.

- V = {v1, v2, …, vi} is a set of variables that includes both object variables and structural attributes (variables).

- Variable vi ∈ V is defined in node an of M if vi is initialized in that node or its value is modified by the execution of an action.

- Variable vi ∈ V is in predicate use (pu) in decision node dn of M if it appears in a constraint (guard) of that decision node.

- Variable vi ∈ V is in computation use (cu) in node an of M if it is used in a computation performed by that node's behavior.
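The definitions above can be mirrored in a small data structure. The following Python sketch is a hypothetical encoding, not AutoTDGen's internal model: the class name `ActivityModel`, its field names, and the toy node ids are invented for illustration. It classifies each use as pu or cu depending on whether it occurs at a decision node, and lists candidate def-use pairs for the same variable without yet checking that a def-clear path exists.

```python
from dataclasses import dataclass, field


@dataclass
class ActivityModel:
    """Hypothetical encoding of an annotated AD M = (A, E, C, aI, aF, V)."""
    actions: set[str]                    # A: executable nodes
    edges: set[tuple[str, str]]          # E: (source, target) pairs
    decisions: set[str]                  # DN: the decision nodes in C
    initial: set[str]                    # aI
    finals: set[str]                     # aF
    variables: set[str]                  # V
    defs: dict[str, set[str]] = field(default_factory=dict)  # node -> variables defined there
    uses: dict[str, set[str]] = field(default_factory=dict)  # node -> variables used there

    def use_kind(self, node: str) -> str:
        # A use at a decision node is a predicate use (pu); elsewhere it is a computation use (cu).
        return "pu" if node in self.decisions else "cu"

    def candidate_du_pairs(self) -> list[tuple[str, str, str, str]]:
        """Pair every definition of a variable with every use of the same variable.

        Reachability and def-clear paths are NOT checked here.
        """
        pairs = []
        for d_node, d_vars in sorted(self.defs.items()):
            for u_node, u_vars in sorted(self.uses.items()):
                for v in sorted(d_vars & u_vars):
                    pairs.append((v, d_node, u_node, self.use_kind(u_node)))
        return pairs


# Toy model: a1 defines locks, decision d1 tests it, a2 computes sales from it, a3 uses sales.
m = ActivityModel(
    actions={"a1", "a2", "a3"},
    edges={("a1", "d1"), ("d1", "a2"), ("a2", "a3")},
    decisions={"d1"},
    initial={"a1"},
    finals={"a3"},
    variables={"locks", "sales"},
    defs={"a1": {"locks"}, "a2": {"sales"}},
    uses={"d1": {"locks"}, "a2": {"locks"}, "a3": {"sales"}},
)
pairs = m.candidate_du_pairs()
# [('locks', 'a1', 'a2', 'cu'), ('locks', 'a1', 'd1', 'pu'), ('sales', 'a2', 'a3', 'cu')]
```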

3. AutoTDGen Approach

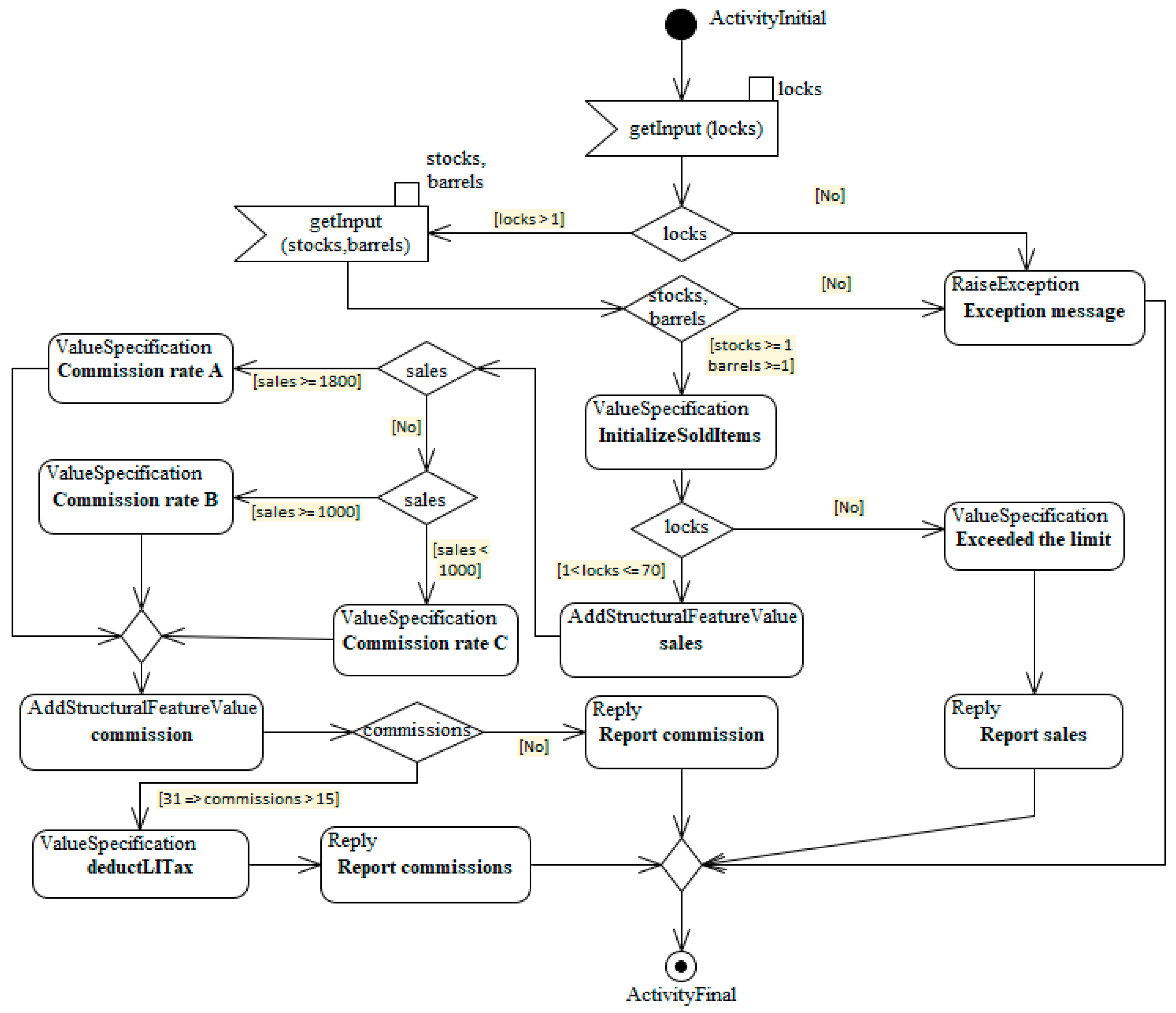

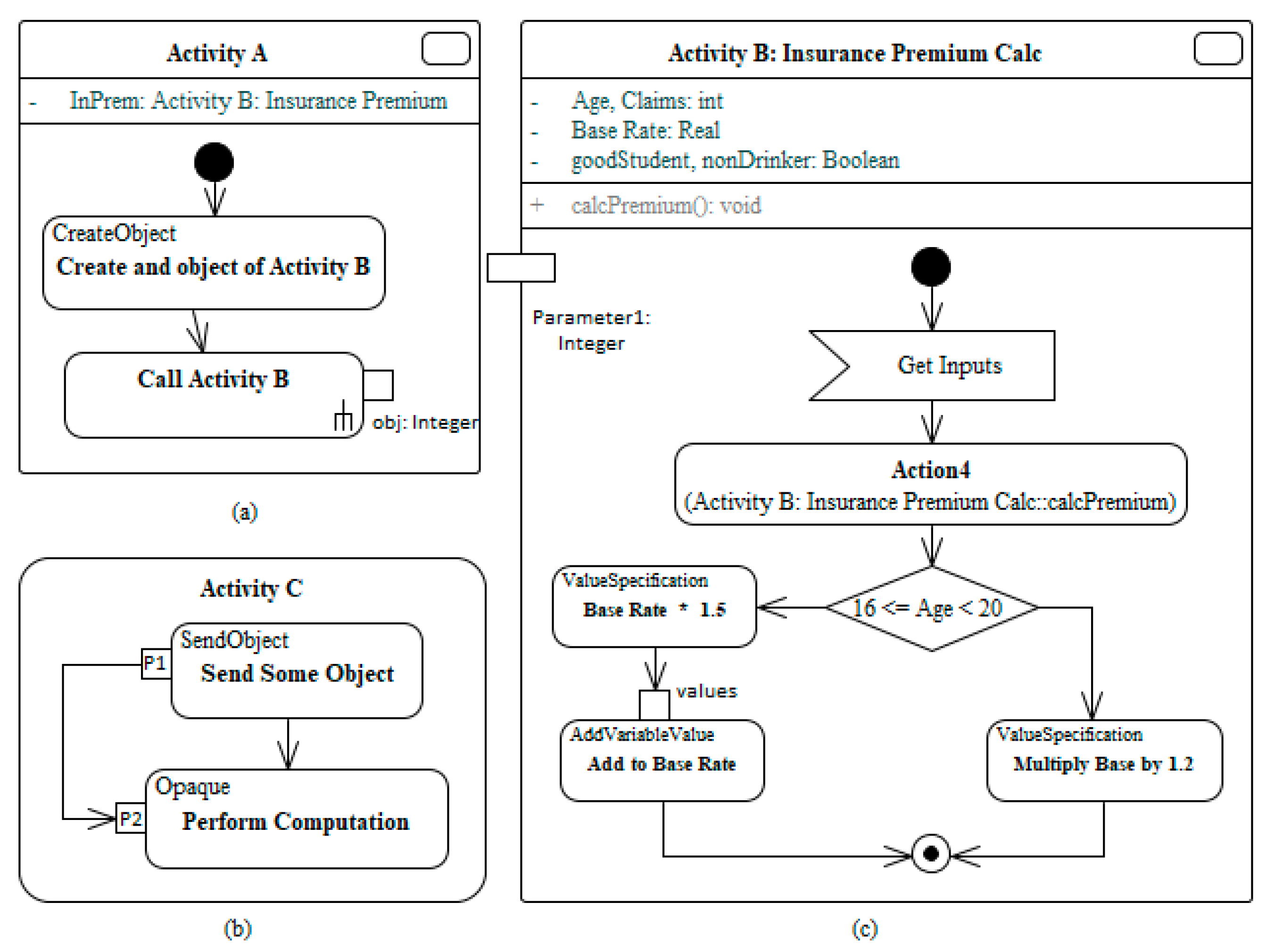

3.1. Convert ADs into XML

3.2. Parse XML to ElementTree
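ElementTree here refers to Python's standard `xml.etree.ElementTree` module. The sketch below assumes a deliberately simplified, hypothetical XML layout (`<node>` and `<edge>` elements carrying `defines`, `uses`, and `guard` attributes); XMI exported by real UML tools is namespaced and considerably richer, so the element and attribute names would need to be adapted. The function name `parse_activity` is likewise our own.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified XML export of an AD (not the format of any particular UML tool).
AD_XML = """
<activity name="Commission">
  <node id="n1" type="action" name="read locks" defines="locks"/>
  <node id="n2" type="decision" name="check locks" uses="locks" guard="locks &gt;= 1"/>
  <node id="n3" type="action" name="compute sales" defines="sales" uses="locks"/>
  <edge source="n1" target="n2"/>
  <edge source="n2" target="n3"/>
</activity>
"""


def parse_activity(xml_text: str):
    """Return (nodes, edges): nodes maps node id -> attribute dict, edges is a list of pairs."""
    root = ET.fromstring(xml_text.strip())
    nodes = {}
    for node in root.iter("node"):
        attrs = dict(node.attrib)
        # Turn comma-separated def/use lists into sets; a missing attribute yields an empty set.
        for key in ("defines", "uses"):
            raw = attrs.get(key, "")
            attrs[key] = {v.strip() for v in raw.split(",") if v.strip()}
        nodes[attrs["id"]] = attrs
    edges = [(e.get("source"), e.get("target")) for e in root.iter("edge")]
    return nodes, edges


nodes, edges = parse_activity(AD_XML)
# nodes["n2"]["guard"] == "locks >= 1"; edges == [("n1", "n2"), ("n2", "n3")]
```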

3.3. Automatic Data Flow Analysis and Test Data Generation

3.3.1. Data Flow Analysis

Algorithm 1. ExtractDuPairs(M, c, ai, v, P[]): The algorithm for automatically generating the def-use pair paths.
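Algorithm 1 itself is not reproduced here. As a rough illustration of what extracting def-use pair paths involves, the hypothetical `extract_du_paths` function below runs a depth-first search from a definition of a variable. It records every path that reaches a use of that variable (including a use at a node that also redefines it), and it does not extend a path past a redefinition, so every recorded path is def-clear, or revisit a node already on the path. The node ids loosely echo the locks rows of the def-use path table, but the graph and the def/use sets are our assumptions.

```python
from collections import defaultdict


def extract_du_paths(edges, defs, uses, var, def_node):
    """Enumerate def-clear paths from def_node to nodes that use var (illustrative sketch)."""
    succ = defaultdict(list)
    for src, dst in edges:
        succ[src].append(dst)
    paths = []

    def dfs(node, path):
        for nxt in succ[node]:
            if nxt in path:                  # avoid cycles: each node at most once per path
                continue
            new_path = path + [nxt]
            if var in uses.get(nxt, set()):
                paths.append(new_path)       # a use of var reached along a def-clear path
            if var in defs.get(nxt, set()):
                continue                     # var is redefined here; stop extending this path
            dfs(nxt, new_path)

    dfs(def_node, [def_node])
    return paths


# Hypothetical graph: d1 and d2 are decision nodes whose guards use locks.
edges = [("1", "d1"), ("d1", "3"), ("3", "4"), ("4", "5"), ("5", "d2"), ("d2", "7")]
defs = {"1": {"locks"}, "5": {"locks"}}
uses = {"d1": {"locks"}, "5": {"locks"}, "d2": {"locks"}, "7": {"locks"}}
print(extract_du_paths(edges, defs, uses, "locks", "1"))
# [['1', 'd1'], ['1', 'd1', '3', '4', '5']]
print(extract_du_paths(edges, defs, uses, "locks", "5"))
# [['5', 'd2'], ['5', 'd2', '7']]
```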

3.3.2. Test Data Generation
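The fitness function is characterized only as rewarding maximum coverage of def-use pairs. One common way to build such a function, sketched below under our own assumptions, is to turn each guard condition on a def-use path into a branch distance (0 when the guard holds), normalize it with d/(d + 1), a normalization discussed by Arcuri [41], and sum per-target credit. The targets reuse guard conditions such as [locks ≥ 1] and [1 ≤ locks && locks ≤ 70] from the test data table; the encoding of test data as a (locks, stocks, barrels) tuple, the constant k = 1, and the scoring scheme are hypothetical, not AutoTDGen's actual fitness function.

```python
def dist_ge(a, b, k=1.0):
    """Branch distance for the predicate a >= b (0 when satisfied)."""
    return 0.0 if a >= b else (b - a) + k


def dist_le(a, b, k=1.0):
    """Branch distance for the predicate a <= b (0 when satisfied)."""
    return 0.0 if a <= b else (a - b) + k


def normalise(d):
    # Maps a distance in [0, inf) into [0, 1).
    return d / (d + 1.0)


# Hypothetical targets keyed by guard; each maps a (locks, stocks, barrels) tuple to a distance.
TARGETS = {
    "locks >= 1": lambda t: dist_ge(t[0], 1),
    "1 <= locks <= 70": lambda t: dist_ge(t[0], 1) + dist_le(t[0], 70),  # conjunction: sum
    "stocks >= 1": lambda t: dist_ge(t[1], 1),
    "barrels >= 1": lambda t: dist_ge(t[2], 1),
}


def fitness(test_data):
    """Higher is better: 1.0 per satisfied guard plus partial credit for near misses."""
    return sum(1.0 - normalise(guard(test_data)) for guard in TARGETS.values())


print(fitness((24, 1, 2)))    # 4.0: every guard holds
print(fitness((100, 1, 2)))   # 3.03125: locks > 70 misses one guard by 30
```

The partial credit gives the search a gradient: (100, 1, 2) scores higher than (200, 1, 2) even though both miss the same guard.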

4. Experiment Description

4.1. Experimental Subjects

4.2. Experiment Planning

4.2.1. Research Questions

- RQ1: How do the tests generated by the proposed AutoTDGen perform, compared with alternative approaches, in terms of statement and branch coverage?

- RQ2: What is the difference in fault detection effectiveness between the tests generated by the proposed AutoTDGen and alternative approaches?

- RQ3: Is there any variation in the types of faults detected by the proposed AutoTDGen and alternative approaches?

- RQ4: What is the interaction relationship between the test coverage and fault detection effectiveness of the adopted techniques and subject properties?

4.2.2. Baseline Selection

4.2.3. Fault Seeding

4.2.4. Variable Selection

4.2.5. Parameter Setting

- Population size: The initial population size is 2000, consisting of valid integer test data in the range 1 to 2000.

- Crossover: We use single-point crossover, and the crossover probability is determined by the fitness value.

- Mutation: We use uniform mutation with mutation probability p = 1/N, where N is the population size, that is, the number of test data to be mutated.

- Selection: We use tournament selection with a pool size of 20.
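The parameter settings above can be assembled into a plain generational GA. The sketch below is ours, not AutoTDGen's code, and several details are assumptions: individuals are hypothetical lists of integer test data (for example, (locks, stocks, barrels)), the crossover probability is a fixed value `CROSSOVER_RATE` because the text only says it depends on fitness, p = 1/population size is applied per gene, and there is no elitism.

```python
import random

# Settings from Section 4.2.5, except CROSSOVER_RATE, which is our assumption.
LOW, HIGH = 1, 2000          # valid integer range of each test datum
POP_SIZE = 2000              # initial population size
TOURNAMENT_SIZE = 20         # tournament pool size
CROSSOVER_RATE = 0.8         # hypothetical fixed value


def random_individual(n_genes, rng):
    """A candidate test input: n_genes integers drawn uniformly from [LOW, HIGH]."""
    return [rng.randint(LOW, HIGH) for _ in range(n_genes)]


def tournament(pop, fitness, rng, k=TOURNAMENT_SIZE):
    """Sample k distinct individuals and return the fittest (higher fitness is better)."""
    return max(rng.sample(pop, k), key=fitness)


def single_point_crossover(p1, p2, rng):
    """Swap the tails of two parents after a random cut point (needs at least 2 genes)."""
    cut = rng.randint(1, len(p1) - 1)
    return p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]


def uniform_mutation(ind, p, rng):
    """Independently replace each gene, with probability p, by a fresh uniform value."""
    return [rng.randint(LOW, HIGH) if rng.random() < p else g for g in ind]


def evolve(fitness, n_genes, generations, rng, pop_size=POP_SIZE):
    """Generational GA without elitism; returns the fittest individual of the last generation."""
    pop = [random_individual(n_genes, rng) for _ in range(pop_size)]
    p_mut = 1.0 / pop_size   # p = 1/population size, applied per gene (our reading)
    for _ in range(generations):
        offspring = []
        while len(offspring) < pop_size:
            a = tournament(pop, fitness, rng)
            b = tournament(pop, fitness, rng)
            if rng.random() < CROSSOVER_RATE:
                a, b = single_point_crossover(a, b, rng)
            offspring += [uniform_mutation(a, p_mut, rng), uniform_mutation(b, p_mut, rng)]
        pop = offspring[:pop_size]
    return max(pop, key=fitness)
```

For a quick experiment, something like `evolve(my_fitness, n_genes=3, generations=20, rng=random.Random(1), pop_size=100)` works, where `my_fitness` is any function scoring a candidate; `pop_size` must be at least `TOURNAMENT_SIZE`.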

4.2.6. Experimental Protocol

5. Experimental Results

6. Discussion

7. Threats to Validity

8. Conclusion and Future Work

Author Contributions

Funding

Conflicts of Interest

Appendix A

References

1. Ali, S.; Iqbal, M.Z.; Arcuri, A.; Briand, L. A search-based OCL constraint solver for model-based test data generation. In Proceedings of the 11th International Conference on Quality Software (IEEE 2011), Madrid, Spain, 13–14 July 2011; pp. 41–50.

2. Utting, M.; Legeard, B.; Bouquet, F.; Fourneret, E.; Pereux, F.; Vernotte, A. Recent advances in model-based testing. Adv. Comput. 2016, 101, 53–120.

3. Utting, M.; Legeard, B. The challenge. In Practical Model-Based Testing: A Tools Approach; Elsevier Inc.: San Francisco, CA, USA, 2007; pp. 1–18.

4. Schieferdecker, I. Model-Based Testing. IEEE Softw. 2012, 29, 14–18.

5. Jorgensen, P.C. The Craft of Model-Based Testing; CRC Press: Boca Raton, FL, USA, 2017; pp. 3–13.

6. Shirole, M.; Kumar, R. UML behavioral model based test case generation: A survey. ACM SIGSOFT Softw. Eng. Notes 2013, 38, 1–13.

7. Ahmad, T.; Iqbal, J.; Ashraf, A.; Truscan, D.; Porres, I. Model-based testing using UML activity diagrams: A systematic mapping study. Comp. Sci. Rev. 2019, 33, 98–112.

8. Felderer, M.; Herrmann, A. Comprehensibility of system models during test design: A controlled experiment comparing UML activity diagrams and state machines. Softw. Qual. J. 2019, 27, 125–147.

9. Boghdady, P.N.; Badr, N.L.; Hashim, M.A.; Tolba, M.F. An enhanced test case generation technique based on activity diagrams. In Proceedings of the 2011 International Conference on Computational Science (IEEE ICCS 2011), Singapore, 1–3 June 2011; pp. 289–294.

10. Kansomkeat, S.; Thiket, P.; Offutt, J. Generating test cases from UML activity diagrams using the Condition-Classification Tree Method. In Proceedings of the 2nd International Conference on Software Technology and Engineering (ICSTE 2010), San Juan, PR, USA, 3–5 October 2010; pp. V1-62–V1-66.

11. Kundu, D.; Samanta, D. A novel approach to generate test cases from UML activity diagrams. J. Object Technol. 2009, 8, 65–83.

12. Nayak, A.; Samanta, D. Synthesis of test scenarios using UML activity diagrams. Softw. Syst. Model. 2011, 10, 63–89.

13. Arora, V.; Singh, M.; Bhatia, R. Orientation-based Ant colony algorithm for synthesizing the test scenarios in UML activity diagram. Inf. Softw. Technol. 2020, 123, 106292.

14. Badlaney, J.; Ghatol, R.; Jadhwani, R. An Introduction to Data-Flow Testing; North Carolina State University Department of Computer Science: Raleigh, NC, USA, 2006.

15. Frankl, P.G.; Weyuker, E.J. An applicable family of data flow testing criteria. IEEE Trans. Softw. Eng. 1988, 14, 1483–1498.

16. Xiang, D.; Liu, G.; Yan, C.; Jiang, C. Detecting data-flow errors based on Petri nets with data operations. IEEE/CAA J. Autom. Sin. 2017, 5, 251–260.

17. Su, T.; Wu, K.; Miao, W.; Pu, G.; He, J.; Chen, Y.; Su, Z. A Survey on Data-Flow Testing. ACM Comput. Surv. 2017, 50.

18. OMG. Unified Modeling Language® (OMG UML®); Version 2.5.1; OMG: Needham, MA, USA, 2017.

19. Rodrigues, D.S.; Delamaro, M.E.; Correa, C.G.; Nunes, F.L.S. Using Genetic Algorithms in Test Data Generation: A Critical Systematic Mapping. ACM Comput. Surv. 2018, 51, 1–23.

20. Fraser, G.; Arcuri, A. EvoSuite: Automatic test suite generation for object-oriented software. In Proceedings of the 19th ACM SIGSOFT Symposium and the 13th European Conference on Foundations of Software Engineering, Szeged, Hungary, 5–9 September 2011; pp. 416–419.

21. Fraser, G.; Arcuri, A.; McMinn, P. A memetic algorithm for whole test suite generation. J. Syst. Softw. 2015, 103, 311–327.

22. Panichella, A.; Kifetew, F.M.; Tonella, P. Automated test case generation as a many-objective optimisation problem with dynamic selection of the targets. IEEE Trans. Softw. Eng. 2017, 44, 122–158.

23. Jaffari, A.; Yoo, C.-J.; Lee, J. Automatic Data Flow Analysis to Generate Test Cases from Activity Diagrams. In Proceedings of the 21st Korea Conference on Software Engineering (KCSE 2019), Pyeongchang, Korea, 28–30 January 2019; pp. 136–139.

24. Jaffari, A.; Yoo, C.-J. An Experimental Investigation into Data Flow Annotated-Activity Diagram-Based Testing. J. Comput. Sci. Eng. 2019, 13, 107–123.

25. Heinecke, A.; Brückmann, T.; Griebe, T.; Gruhn, V. Generating Test Plans for Acceptance Tests from UML Activity Diagrams. In Proceedings of the 17th IEEE International Conference and Workshops on Engineering of Computer-Based Systems (ECBS 2010), Oxford, UK, 22–26 March 2010; pp. 57–66.

26. Chandler, R.; Lam, C.P.; Li, H. AD2US: An automated approach to generating usage scenarios from UML activity diagrams. In Proceedings of the 12th Asia-Pacific Software Engineering Conference (APSEC 2005), Taipei, Taiwan, 15–17 December 2005; p. 8.

27. Dong, X.; Li, H.; Lam, C.P. Using adaptive agents to automatically generate test scenarios from the UML activity diagrams. In Proceedings of the 12th Asia-Pacific Software Engineering Conference (APSEC 2005), Taipei, Taiwan, 15–17 December 2005; p. 8.

28. Hettab, A.; Kerkouche, E.; Chaoui, A. A Graph Transformation Approach for Automatic Test Cases Generation from UML Activity Diagrams. In Proceedings of the Eighth International C* Conference on Computer Science & Software Engineering, Yokohama, Japan, 13–15 July 2015; pp. 88–97.

29. Linzhang, W.; Jiesong, Y.; Xiaofeng, Y.; Jun, H.; Xuandong, L.; Guoliang, Z. Generating test cases from UML activity diagram based on gray-box method. In Proceedings of the 11th Asia-Pacific Software Engineering Conference (APSEC 2004), Busan, Korea, 30 November–3 December 2004; pp. 284–291.

30. Tiwari, S.; Gupta, A. An approach to generate safety validation test cases from uml activity diagram. In Proceedings of the 20th Asia-Pacific Software Engineering Conference (APSEC 2013) IEEE, Bangkok, Thailand, 2–5 December 2013; pp. 189–198.

31. Bai, X.; Lam, C.P.; Li, H. An approach to generate the thin-threads from the UML diagrams. In Proceedings of the 28th International Computer Software and Applications Conference (COMPSAC 2004), Hong Kong, China, 27–30 September 2004; pp. 546–552.

32. Störrle, H. Semantics and verification of data flow in UML 2.0 activities. Electron. Notes Theor. Comput. Sci. 2005, 127, 35–52.

33. Fraser, G.; Arcuri, A. Whole test suite generation. IEEE Trans. Softw. Eng. 2012, 39, 276–291.

34. Xiao, M.; El-Attar, M.; Reformat, M.; Miller, J. Empirical evaluation of optimization algorithms when used in goal-oriented automated test data generation techniques. Empir. Softw. Eng. J. 2007, 12, 183–239.

35. Kalaee, A.; Rafe, V. Model-based test suite generation for graph transformation system using model simulation and search-based techniques. Inf. Softw. Technol. 2019, 108, 1–29.

36. Sharma, C.; Sabharwal, S.; Sibal, R. Applying genetic algorithm for prioritization of test case scenarios derived from UML diagrams. Int. J. Comput. Sci. Issues 2014, 8, 433–444.

37. Mahali, P.; Acharya, A.A. Model based test case prioritization using UML activity diagram and evolutionary algorithm. Int. J. Comput. Sci. Inform. 2013, 3, 42–47.

38. Nejad, F.M.; Akbari, R.; Dejam, M.M. Using memetic algorithms for test case prioritization in model based software testing. In Proceedings of the 1st Conference on Swarm Intelligence and Evolutionary Computation IEEE (CSIEC 2016), Bam, Iran, 9–11 March 2016; pp. 142–147.

39. Shirole, M.; Kommuri, M.; Kumar, R. Transition sequence exploration of UML activity diagram using evolutionary algorithm. In Proceedings of the 5th India Software Engineering Conference (ISEC 2012), Kanpur, India, 22–25 February 2012; pp. 97–100.

40. Kramer, O. Chapter 2 Genetic Algorithms. In Genetic Algorithm Essentials; Springer: Berlin, Germany, 2017.

41. Arcuri, A. It Does Matter How You Normalise the Branch Distance in Search Based Software Testing. In Proceedings of the Third International Conference on Software Testing, Verification and Validation, Paris, France, 6–9 April 2010; pp. 205–214.

42. Malhotra, R. Empirical Research in Software Engineering: Concepts, Analysis, and Applications; Chapman & Hall/CRC: London, UK, 2015.

43. Kitchenham, B.A.; Pfleeger, S.L.; Pickard, L.M.; Jones, P.W.; Hoaglin, D.C.; El Emam, K.; Rosenberg, J. Preliminary guidelines for empirical research in software engineering. IEEE Trans. Softw. Eng. 2002, 28, 721–734.

44. Wohlin, C.; Runeson, P.; Höst, M.; Ohlsson, M.C.; Regnell, B.; Wesslén, A. Experimentation in Software Engineering; Springer: Berlin, Germany, 2012.

45. Mouchawrab, S.; Briand, L.C.; Labiche, Y.; Di Penta, M. Assessing, Comparing, and Combining State Machine-Based Testing and Structural Testing: A Series of Experiments. IEEE Trans. Softw. Eng. 2011, 37, 161–187.

46. Fraser, G.; Arcuri, A. Evolutionary Generation of Whole Test Suites. In Proceedings of the 11th International Conference on Quality Software, Madrid, Spain, 13–14 July 2011; pp. 31–40.

47. Fraser, G.; Rojas, J.M.; Campos, J.; Arcuri, A. EvoSuite at the SBST 2017 tool competition. In Proceedings of the 10th International Workshop on Search-Based Software Testing, Buenos Aires, Argentina, 22–23 May 2017; pp. 39–41.

48. Andrews, J.H.; Briand, L.C.; Labiche, Y. Is mutation an appropriate tool for testing experiments? In Proceedings of the 27th International Conference on Software Engineering, St. Louis, MO, USA, 15–21 May 2005; pp. 402–411.

49. Kintis, M.; Papadakis, M.; Papadopoulos, A.; Valvis, E.; Malevris, N.; Le Traon, Y. How effective are mutation testing tools? An empirical analysis of Java mutation testing tools with manual analysis and real faults. Empir. Softw. Eng. 2018, 23, 2426–2463.

| Variable | Def-Use Path Set | Def-Use Pair Paths | Prefix |
|---|---|---|---|
| Locks | (1, locks) | [1, locks ≥ 1] | Y |
| | | [1, locks ≥ 1, 3, 4, 5] | N |
| | (5, locks) | [5, 1 < locks ≤ 70] | Y |
| | | [5, 1 < locks ≤ 70, 7] | N |
| Stocks | (3, stocks) | [3, stocks ≥ 1] | Y |
| | | [3, stocks ≥ 1, 5, 6, 7] | N |
| Barrels | (3, barrels) | [3, barrels ≥ 1] | Y |
| | | [3, barrels ≥ 1, 5, 6, 7] | N |
| Commissions | (14, commissions) | [14, 31 ≥ commissions && commissions > 15, 16] | N |
| Sales | (7, sales) | [7, sales ≥ 1800] | Y |
| | | [7, sales ≥ 1800, 9] | Y |
| | | [7, sales ≥ 1800, 9, 13, 14] | N |
| | | [7, 9, sales ≥ 1000] | Y |
| | | [7, 8, sales ≥ 1000, 11, 13, 14] | N |
| | | [7, 8, 10, sales ≤ 999, 12, 13, 14] | N |

| Variable | Def-Use Path Set | Test Data | Guard Condition | #Def-Use Pairs Covered |
|---|---|---|---|---|
| locks | (1, locks) (5, locks) | 1 | [locks ≥ 1] | 2 |
| locks | | 24 | [1 ≤ locks && locks ≤ 70] | 2 |
| stocks | (3, stocks) | 1 | [stocks ≥ 1] | 2 |
| barrels | (3, barrels) | 2 | [barrels ≥ 1] | 2 |
| commission | (14, commissions) | 20 | [15 < commission ≤ 31] | 1 |
| sales | (7, sales) | 19, 18, 18 | [sales ≥ 1800] | 3 |
| sales | | 14, 11, 3 | [sales ≥ 1000] | 2 |
| sales | | 8, 4, 20 | [sales < 1000] | 1 |
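The test data in the sales rows can be checked by hand. Assuming the triples are (locks, stocks, barrels) inputs of the classic commission problem popularized by Jorgensen, with unit prices of 45, 30, and 25 (neither assumption is stated in this paper), each triple lands in the sales range named by its guard condition. The helper names `sales` and `sales_guard` are hypothetical.

```python
# Unit prices of the classic commission problem; an assumption, not stated in this paper.
LOCK_PRICE, STOCK_PRICE, BARREL_PRICE = 45, 30, 25


def sales(locks, stocks, barrels):
    """Total sales for one (locks, stocks, barrels) input."""
    return LOCK_PRICE * locks + STOCK_PRICE * stocks + BARREL_PRICE * barrels


def sales_guard(total):
    """Name the first sales guard from the table that the total satisfies."""
    if total >= 1800:
        return "sales >= 1800"
    if total >= 1000:
        return "sales >= 1000"
    return "sales < 1000"


ROWS = [(19, 18, 18), (14, 11, 3), (8, 4, 20)]
results = [(t, sales(*t), sales_guard(sales(*t))) for t in ROWS]
# [((19, 18, 18), 1845, 'sales >= 1800'),
#  ((14, 11, 3), 1035, 'sales >= 1000'),
#  ((8, 4, 20), 980, 'sales < 1000')]
```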

| Systems | #LOC | #Classes | Branches (Min) | Branches (Mean) | Branches (Max) | Statements (Min) | Statements (Mean) | Statements (Max) | Mutants (Min) | Mutants (Mean) | Mutants (Max) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Cruise Control | 358 | 4 | 10 | 16.5 | 28 | 31 | 41.5 | 62 | 15 | 27.25 | 48 |
| Elevator | 581 | 8 | 0 | 17.5 | 72 | 8 | 45.75 | 152 | 2 | 30.9 | 111 |
| Coffee Maker | 393 | 4 | 16 | 26.7 | 48 | 42 | 52.7 | 72 | 24 | 39 | 68 |

| Subject Systems | DFAAD SC | DFAAD BC | DFAAD FD | EvoSuite SC | EvoSuite BC | EvoSuite FD | AutoTDGen SC | AutoTDGen BC | AutoTDGen FD |
|---|---|---|---|---|---|---|---|---|---|
| Cruise Control | 97.6 | 77.3 | 67.9 | 97 | 95.5 | 44 | 99 | 83.3 | 73 |
| Elevator | 96.2 | 86.4 | 69.6 | 77 | 63 | 24.3 | 96.5 | 87.4 | 85.82 |
| Coffee Maker | 98 | 90 | 84.6 | 100 | 100 | 83.8 | 99.4 | 98.7 | 88 |
| Mean | 97.3 | 84.6 | 74 | 91.25 | 86.13 | 50.7 | 98.3 | 89.8 | 82.33 |

SC = statement coverage (%); BC = branch coverage (%); FD = fault detection (%).

| Mutation Operators (Cruise Control) | Total Mutants | AutoTDGen Detected | AutoTDGen Failed | AutoTDGen Pct. (%) | DFAAD Detected | DFAAD Failed | DFAAD Pct. (%) | EvoSuite Detected | EvoSuite Failed | EvoSuite Pct. (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| IM | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| VMCM | 26 | 23 | 3 | 88 | 19 | 7 | 73 | 1 | 25 | 3.8 |
| RVM | 20 | 20 | 0 | 100 | 18 | 2 | 90 | 18 | 2 | 90 |
| MM | 20 | 10 | 10 | 50 | 6 | 14 | 30 | 3 | 17 | 15 |
| NCM | 33 | 32 | 1 | 97 | 31 | 2 | 94 | 26 | 7 | 78.8 |
| INM | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| CBM | 10 | 0 | 10 | 0 | 0 | 10 | 0 | 0 | 10 | 0 |

| Mutation Operators (Elevator) | Total Mutants | AutoTDGen Detected | AutoTDGen Failed | AutoTDGen Pct. (%) | DFAAD Detected | DFAAD Failed | DFAAD Pct. (%) | EvoSuite Detected | EvoSuite Failed | EvoSuite Pct. (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| IM | 4 | 4 | 0 | 100 | 4 | 0 | 100 | 3 | 1 | 75 |
| VMCM | 76 | 54 | 22 | 71 | 45 | 31 | 59 | 11 | 65 | 14 |
| RVM | 45 | 39 | 6 | 87 | 34 | 11 | 76 | 16 | 29 | 35 |
| MM | 30 | 26 | 4 | 87 | 14 | 16 | 47 | 0 | 30 | 0 |
| NCM | 70 | 69 | 1 | 99 | 62 | 8 | 89 | 18 | 43 | 26 |
| INM | 1 | 1 | 0 | 100 | 1 | 0 | 100 | 0 | 1 | 0 |
| CBM | 21 | 19 | 2 | 90 | 12 | 9 | 57 | 12 | 9 | 57 |

| Mutation Operators (Coffee Maker) | Total Mutants | AutoTDGen Detected | AutoTDGen Failed | AutoTDGen Pct. (%) | DFAAD Detected | DFAAD Failed | DFAAD Pct. (%) | EvoSuite Detected | EvoSuite Failed | EvoSuite Pct. (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| IM | 6 | 6 | 0 | 100 | 6 | 0 | 100 | 6 | 0 | 100 |
| VMCM | 12 | 12 | 0 | 100 | 12 | 0 | 100 | 7 | 5 | 58 |
| RVM | 26 | 25 | 1 | 96 | 25 | 1 | 96 | 26 | 0 | 100 |
| MM | 9 | 9 | 0 | 100 | 9 | 0 | 100 | 4 | 5 | 44 |
| NCM | 40 | 40 | 0 | 100 | 40 | 0 | 100 | 40 | 0 | 100 |
| INM | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| CBM | 24 | 11 | 13 | 46 | 7 | 17 | 29 | 15 | 9 | 62 |
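The Pct. (%) columns in the mutation tables appear to equal Detected divided by Total Mutants, times 100; for instance, AutoTDGen detects 23 of the 26 VMCM mutants in Cruise Control, shown as 88. This reading is inferred from the numbers rather than stated, and a few cells (such as 16 of 45 shown as 35) look truncated rather than rounded. A minimal helper under that assumption, with the hypothetical name `detection_pct`:

```python
def detection_pct(detected: int, total: int, digits: int = 0) -> float:
    """Share of seeded mutants detected, as a percentage (0 when no mutants were seeded)."""
    if total == 0:
        return 0.0
    return round(100.0 * detected / total, digits)


# Cruise Control, VMCM row (26 mutants)
print(detection_pct(23, 26))      # 88.0 (AutoTDGen)
print(detection_pct(19, 26))      # 73.0 (DFAAD)
print(detection_pct(1, 26, 1))    # 3.8 (EvoSuite)
```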

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jaffari, A.; Yoo, C.-J.; Lee, J. Automatic Test Data Generation Using the Activity Diagram and Search-Based Technique. Appl. Sci. 2020, 10, 3397. https://doi.org/10.3390/app10103397


Chicago/Turabian style: Jaffari, Aman, Cheol-Jung Yoo, and Jihyun Lee. 2020. "Automatic Test Data Generation Using the Activity Diagram and Search-Based Technique" Applied Sciences 10, no. 10: 3397. https://doi.org/10.3390/app10103397