Recent Developments Regarding Painting Robots for Research in Automatic Painting, Artificial Creativity, and Machine Learning

Abstract

1. Introduction

E-David Goals

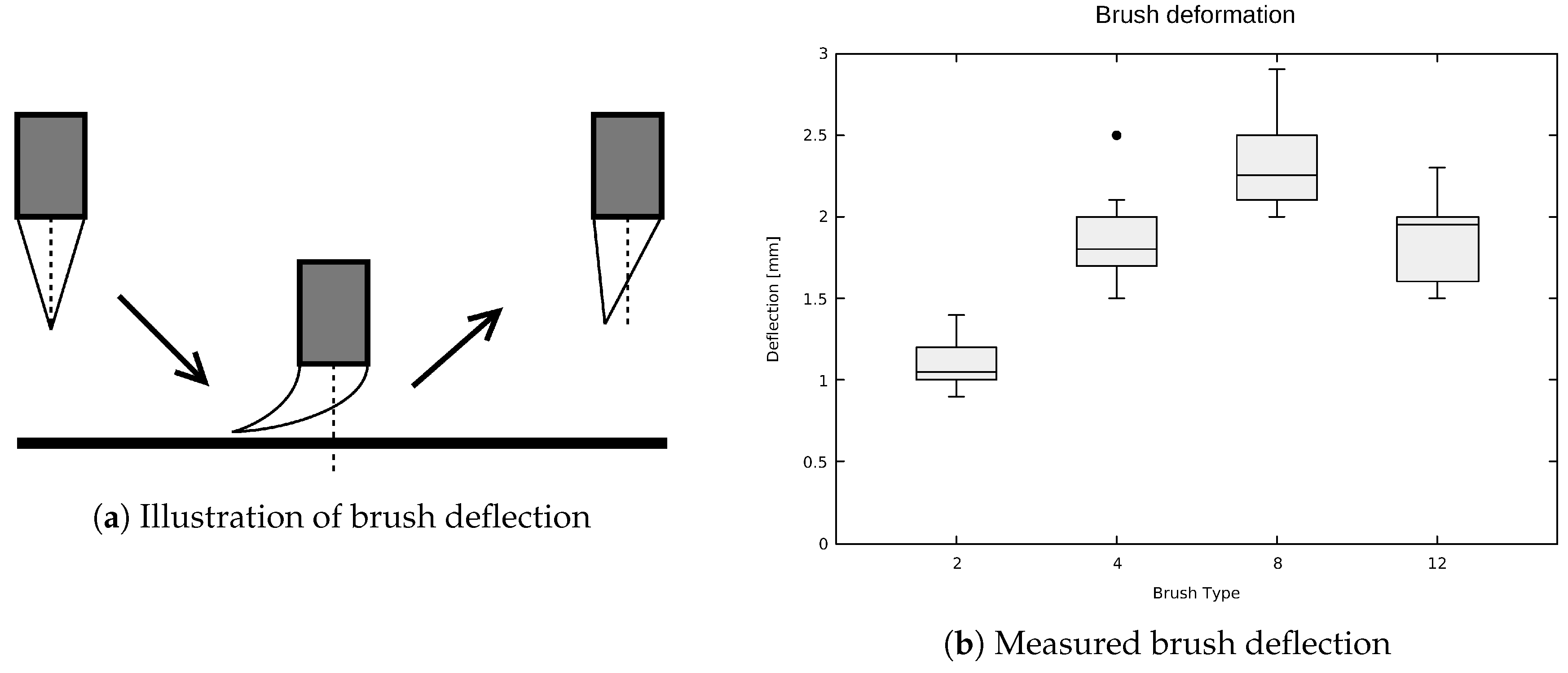

- Improved Precision: One of the fundamental issues in robotic painting, as performed with e-David, is brush handling. While the industrial robots used in this project have a repeatability of <±0.02 mm [6,7], the lack of a suitable system to hold brushes rigidly has introduced errors in stroke placement. For example, brushes were wrapped in tape and then stuck into a tube held by the robot. Because of this imprecise mounting, the brush tip often deviated from the programmed tool center point (TCP), had concentricity issues, and tended to drift slowly as the brush was used. By painting along known trajectories, we measured errors of 1 mm to 3 mm (see Appendix A). The painting algorithm compensated for this by detecting incorrectly placed strokes and attempting to overpaint them in the next pass. However, this approach can lead to artifacts in the produced image due to constant overpainting, as the robot is unable to achieve a required feature on the canvas. Finding a new way to hold a brush rigidly will allow for precise and predictable control of stroke placement on the canvas.

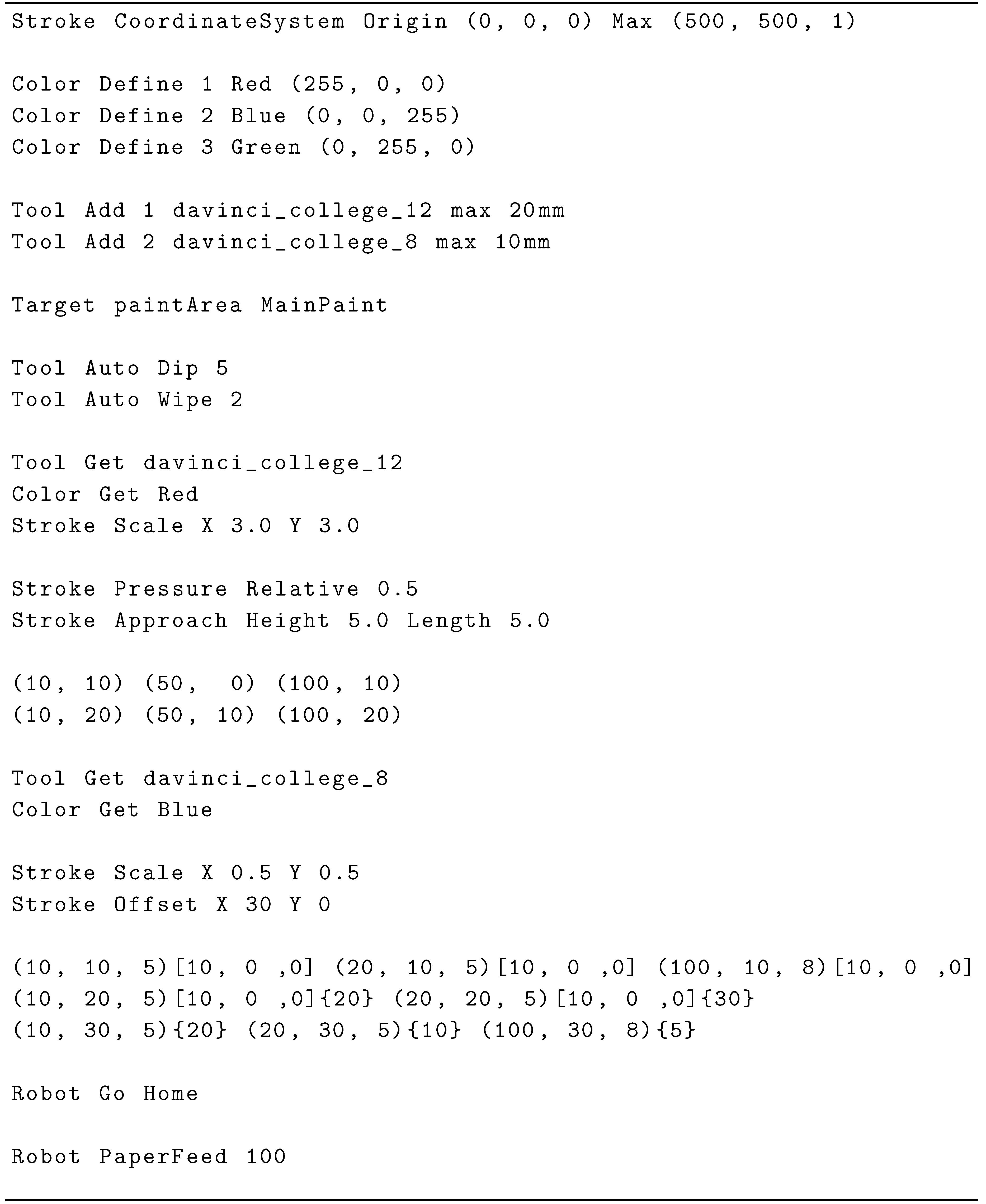

- Definition of Core Data: The previous software system did not have a rigid formalism to handle stroke data, which created issues with the consistent use of units and reference systems as well as with stroke serialization. To remedy this, consistent data structures should be used that represent the primitives occurring in the painting process and allow the construction of higher-level concepts. For example, we defined strokes and basic painting actions as primitives. A certain sequence of strokes with a preceding paint pickup command can be combined into a high-level “fill region” command. The same stroke sequence without the pickup command, applied over a previously painted region, can be used for a blur effect. Moreover, explicitly associating these commands with units, their reference canvas, and other additional data allows for a more controlled and understandable painting process.

- Data Recording: When attempting to implement our first machine learning systems to predict brush behavior, we encountered a lack of training data and the inability to record it in sufficient volume. The painting process previously produced only debug output and canvas feedback pictures, without recording the associated strokes that led to the observed result. In order to make use of the already acquired knowledge about the tool in use, or to employ more general machine learning approaches, data must be reliably collected and stored in a usable format. This is enabled by having a precise machine with good repeatability and consistent data definitions, as discussed above. Moreover, as painting on a canvas is non-reversible with our current set-up, we had to find a way to provide the robot with a clean canvas for producing strokes. In the old system, this was done by manually exchanging the canvas for a new one. The new system should be able to change to fresh materials automatically and to acquire data by itself for long periods of time. It should be possible to record both single strokes and the results of multiple actions within an entire painting.

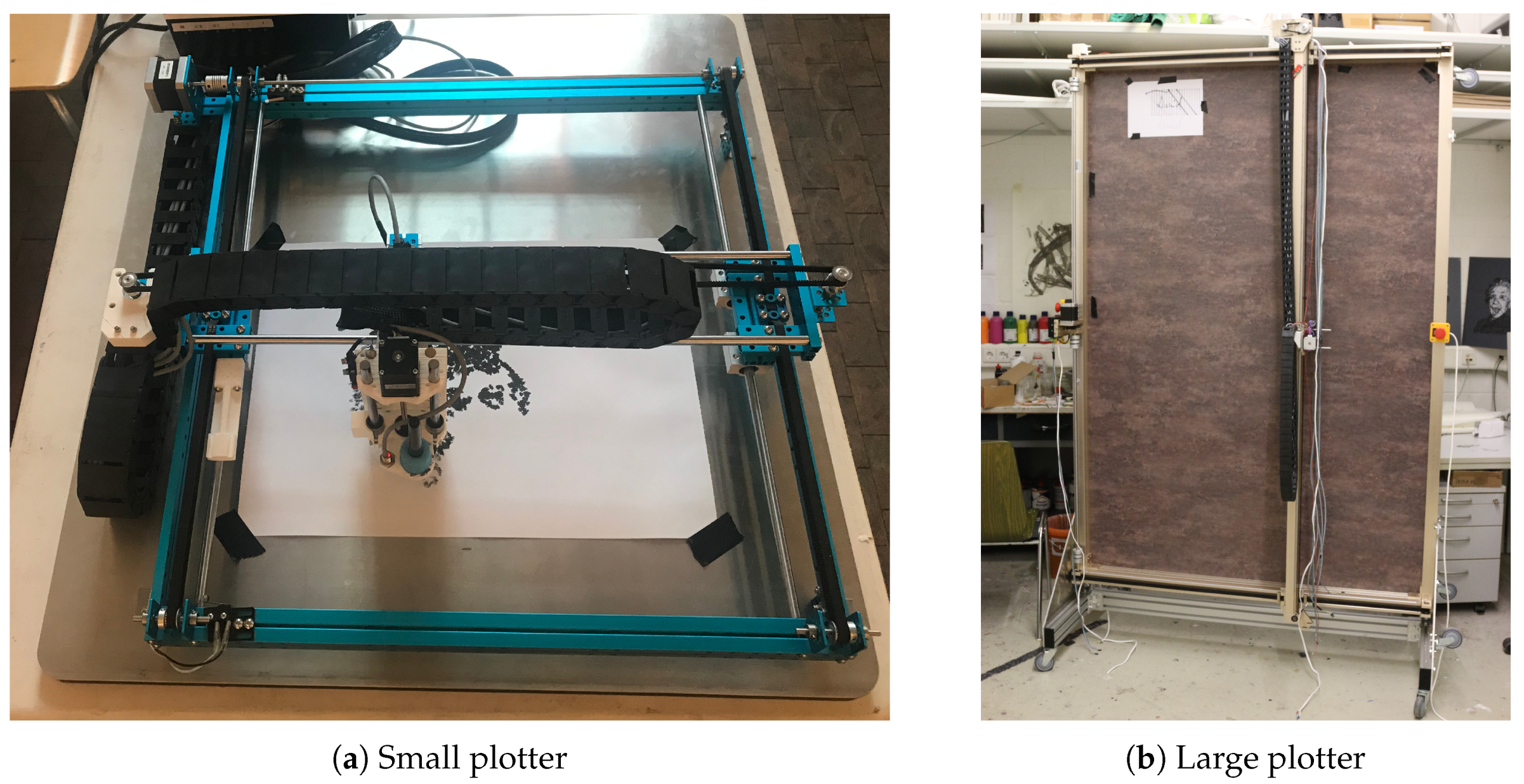

- Hardware Flexibility: As industrial robots are expensive, difficult to set up, and require experience to operate, e-David should also be usable on much simpler machines. For this purpose, hardware should be mostly abstracted, and it should be possible to integrate machines that may be built in the future. One new type of machine already integrated into e-David is the XY-plotter. Such plotters allow users to work with a subset of e-David's capabilities and provide a better transition into the project for students working on their thesis in the context of e-David.

- Open Interfaces: Until now, e-David has only been used at the University of Konstanz. Because it required an industrial robot setup very similar to ours, together with our tailor-made software, it has been very difficult for others to replicate or reuse our results. The new software should allow both simple input of painting commands and a usable output of feedback images. This will make e-David accessible to collaborating researchers, as development done on one painting process will carry over to all other machines in use. New machines can be controlled by existing painting processes once a basic driver program is added to the e-David system.

- Usability for Artists: In the past, a couple of successful collaborations with artists who worked with e-David took place, resulting in several exhibitions. However, using the robot was made difficult by very technical interfaces, which are better suited to development than to a productive painting workflow. In order to keep the collaborative momentum going, a user-friendly and flexible interface must be created that allows artists to control the system.

- Modular Painting Process: The previous e-David software suffered from a monolithic architecture, which structurally included a single painting process. By creating multiple interfaces for painting, several modules could exist in parallel and should be easily exchangeable. The application of separate, specialized painting programs to form a single painting will ideally lead to better results.

- Machine Learning Groundwork: Through the enhanced data collection methods we plan to build a corpus of brushstrokes, which consist of pairs of trajectories and resulting strokes. Using this dataset we intend to improve upon previous efforts at static stroke analysis [2] and use state-of-the-art machine learning approaches to allow the system to continuously improve its painting abilities on a technique level.

- Improved Autonomy: Finally, as an extension beyond current machine learning, the robot should be able to investigate unknown aspects of painting autonomously. This includes, for example, searching the corpus of known strokes for gaps and then running autonomous experiments to close these gaps.
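The hardware abstraction described under "Hardware Flexibility" and "Open Interfaces" can be illustrated with a minimal sketch. It assumes a hypothetical driver interface with a single `move_to` method; the names `MachineDriver`, `GcodePlotterDriver`, `RecordingDriver`, and `paint_stroke` are illustrative and are not taken from the e-David software. The plotter driver emits standard `G1` linear-move lines, on the assumption that a plotter is fed G-code, and the feed rate is an arbitrary example value.

```python
from abc import ABC, abstractmethod
from typing import List, Tuple

Point = Tuple[float, float, float]  # XYZ in millimeters (assumed convention)


class MachineDriver(ABC):
    """Hypothetical minimal interface that every machine has to implement."""

    @abstractmethod
    def move_to(self, point: Point) -> None:
        """Move the tool to the given point."""


class GcodePlotterDriver(MachineDriver):
    """Hypothetical XY-plotter driver that collects G-code lines in memory."""

    def __init__(self, feed_mm_per_min: float = 1500.0) -> None:
        self.feed = feed_mm_per_min  # example value, not from the source
        self.lines: List[str] = []

    def move_to(self, point: Point) -> None:
        x, y, z = point
        # G1 is the standard G-code command for a linear move at feed rate F.
        self.lines.append(f"G1 X{x:.2f} Y{y:.2f} Z{z:.2f} F{self.feed:.0f}")


class RecordingDriver(MachineDriver):
    """Stand-in for any other machine; it only records the target points."""

    def __init__(self) -> None:
        self.visited: List[Point] = []

    def move_to(self, point: Point) -> None:
        self.visited.append(point)


def paint_stroke(driver: MachineDriver, trajectory: List[Point]) -> None:
    """Machine-independent painting step: follow the trajectory point by point."""
    for point in trajectory:
        driver.move_to(point)


# The same painting code drives two different machines.
trajectory = [(0.0, 0.0, 5.0), (0.0, 0.0, 0.0), (20.0, 10.0, 0.0), (20.0, 10.0, 5.0)]
plotter = GcodePlotterDriver()
recorder = RecordingDriver()
paint_stroke(plotter, trajectory)
paint_stroke(recorder, trajectory)
print("\n".join(plotter.lines))
```

In this sketch the painting process only depends on `MachineDriver`, so adding a new machine means writing one more driver class rather than changing the painting code.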

2. Related Work

3. Changes Made to e-David

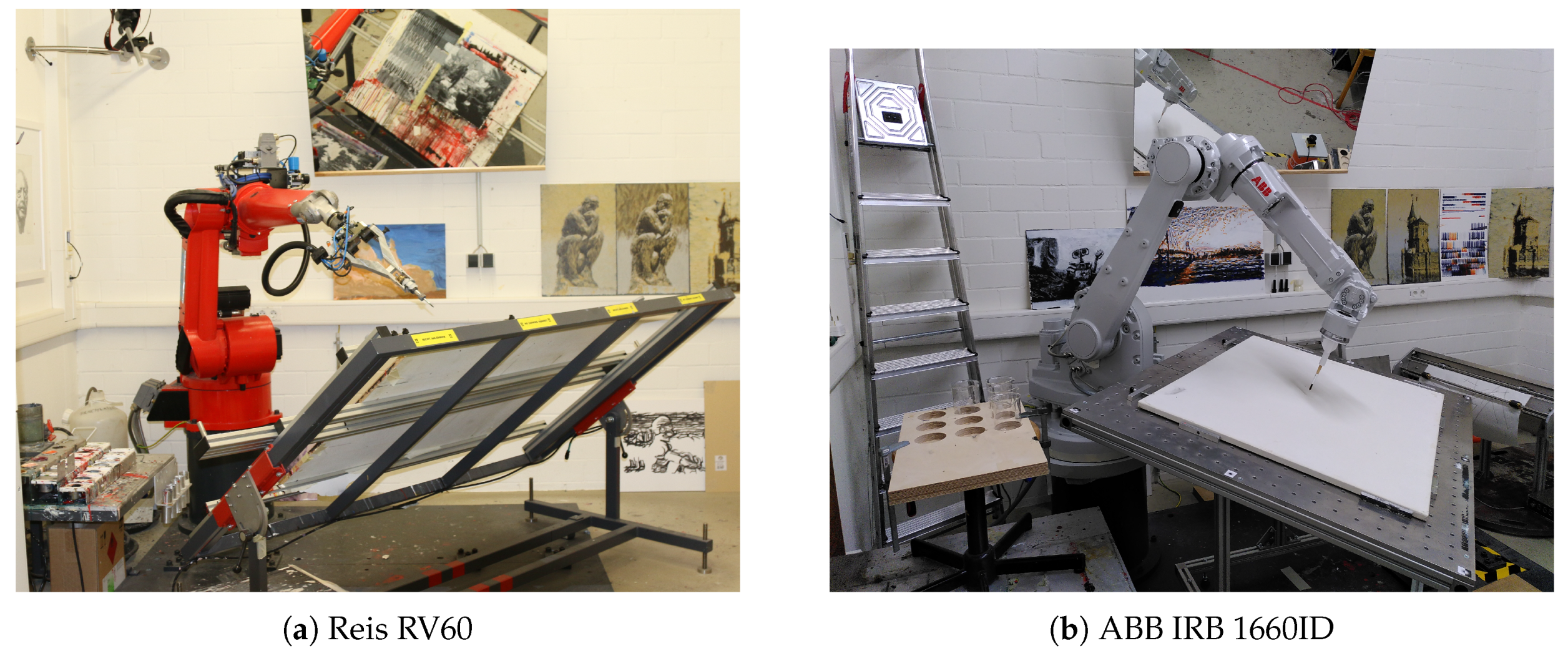

3.1. Robot Replacement

3.2. Brush Holder

3.3. Painting Hardware Improvements

- The containers for the painting fluids used to be made from steel. As these were very difficult to clean and replace in case of loss, standard glass containers are now used.

- The holder for the paint containers, the palette, used to be a steel construction with Teflon lining and complex geometry to accommodate lids for the containers. Despite the Teflon, the lids tended to accumulate old paint and became unreliable, which resulted in at least one major crash. The palette has been replaced with a flat wooden board with holes in it, which makes the palette layout more flexible. The wooden boards can be easily replaced when too much old paint accumulates. We have also determined that there is no need for robot-operable lids on the paint containers, as regular stirring is sufficient to keep the paint from drying out.

- Previously, the robot was placed in front of the canvas, so that it blocked the camera's view of the painting result. To speed up painting, a feedback picture was only taken once every 300 to 1000 strokes. We have reoriented the table so that it now slopes away from the machine, and the arm has to reach over to paint on the surface. This allows the camera to be placed on the opposite side, granting it a clear view of the canvas whenever the robot picks up new paint. As this usually happens every 5–10 strokes, feedback frequency can be increased significantly (by a factor of roughly 30 to 200, going by these figures).

3.4. Camera System Rework

3.5. Painting Software Improvements

- The required brush

- The paint used for the stroke

- The associated canvas object

- Approach and departure mode, which defines the angle at which the stroke is placed onto or removed from the painting surface

- The stroke trajectory given as a sequence of XYZ coordinates, in millimeters relative to the associated canvas object
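The attributes listed above can be read as the fields of a stroke record. The following is a minimal sketch under that assumption; the class and function names (`Stroke`, `Canvas`, `PaintPickup`, `AngleMode`, `fill_region`, `blur_region`) and the two example angle modes are hypothetical and do not reflect the actual e-David data format. The two helper functions illustrate the composition of primitives mentioned in the goals: a stroke sequence preceded by a paint pickup forms a "fill region" command, while the same sequence without the pickup acts as a blur.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Sequence, Tuple, Union

Point = Tuple[float, float, float]  # XYZ in millimeters, relative to the canvas


class AngleMode(Enum):
    """Hypothetical approach/departure modes; the real set is not given here."""
    PERPENDICULAR = "perpendicular"
    SLANTED = "slanted"


@dataclass(frozen=True)
class Canvas:
    name: str
    width_mm: float
    height_mm: float


@dataclass(frozen=True)
class Stroke:
    brush: str                     # the required brush
    paint: str                     # the paint used for the stroke
    canvas: Canvas                 # the associated canvas object
    approach: AngleMode            # how the brush is placed onto the surface
    departure: AngleMode           # how the brush is removed from the surface
    trajectory: Tuple[Point, ...]  # XYZ points in mm, relative to the canvas


@dataclass(frozen=True)
class PaintPickup:
    paint: str


Action = Union[PaintPickup, Stroke]


def fill_region(strokes: Sequence[Stroke]) -> List[Action]:
    """Stroke sequence preceded by a paint pickup."""
    return [PaintPickup(strokes[0].paint), *strokes]


def blur_region(strokes: Sequence[Stroke]) -> List[Action]:
    """Same stroke sequence without the pickup, for an already painted region."""
    return list(strokes)


canvas = Canvas("canvas-A", width_mm=400.0, height_mm=300.0)
strokes = [
    Stroke("flat-8", "blue", canvas, AngleMode.PERPENDICULAR, AngleMode.SLANTED,
           ((10.0, 10.0 + 5.0 * i, 0.0), (60.0, 10.0 + 5.0 * i, 0.0)))
    for i in range(3)
]
fill = fill_region(strokes)
blur = blur_region(strokes)
print(len(fill), len(blur))
```

Making the records immutable (`frozen=True`) and storing the unit and reference frame in the type's documentation is one possible way to keep serialized strokes unambiguous; other designs would serve equally well.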

3.6. Machine Learning Groundwork

4. Applications

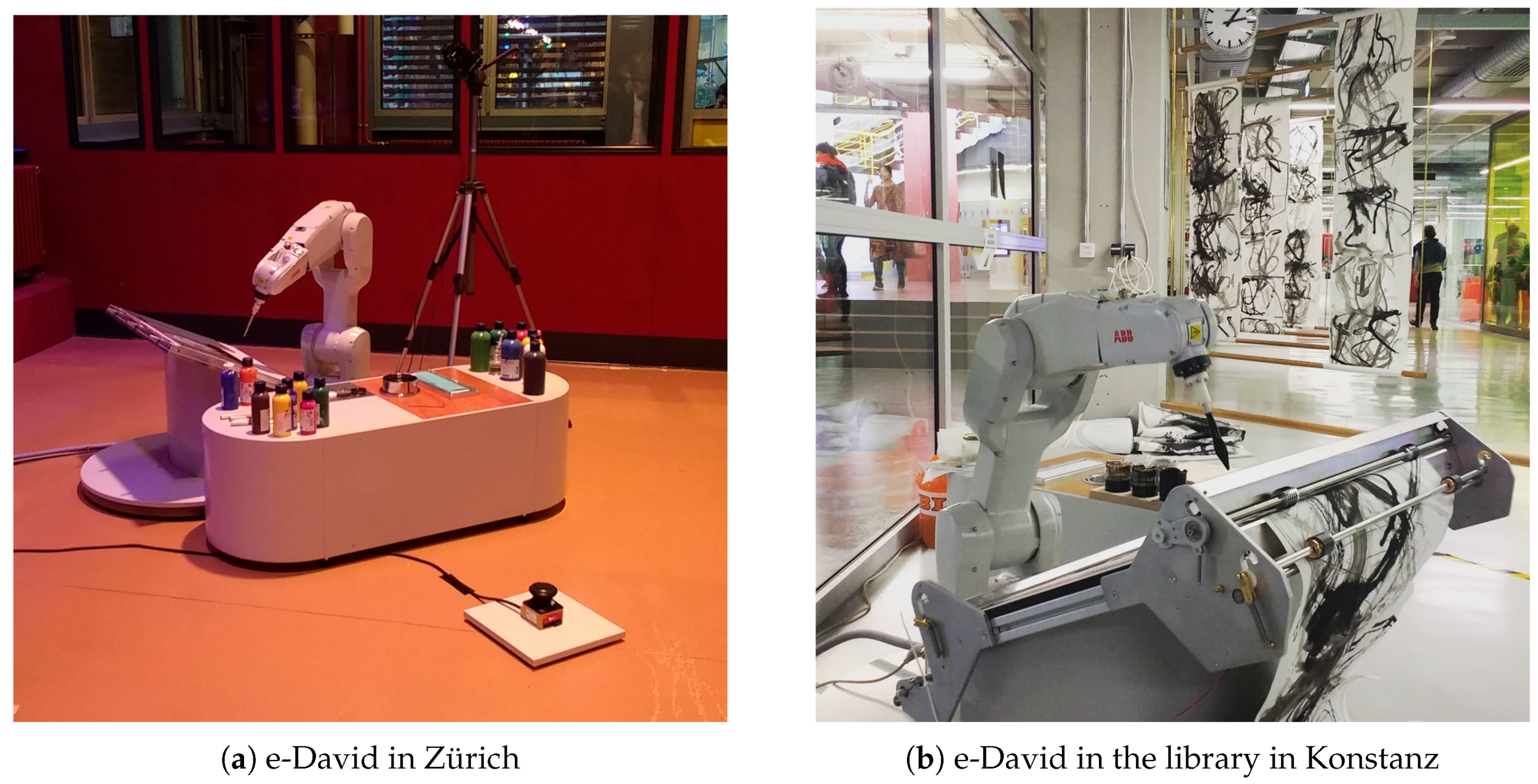

4.1. Work with Artists

4.2. e-David Exhibitions

5. First Steps towards Deep Learning in the e-David Project

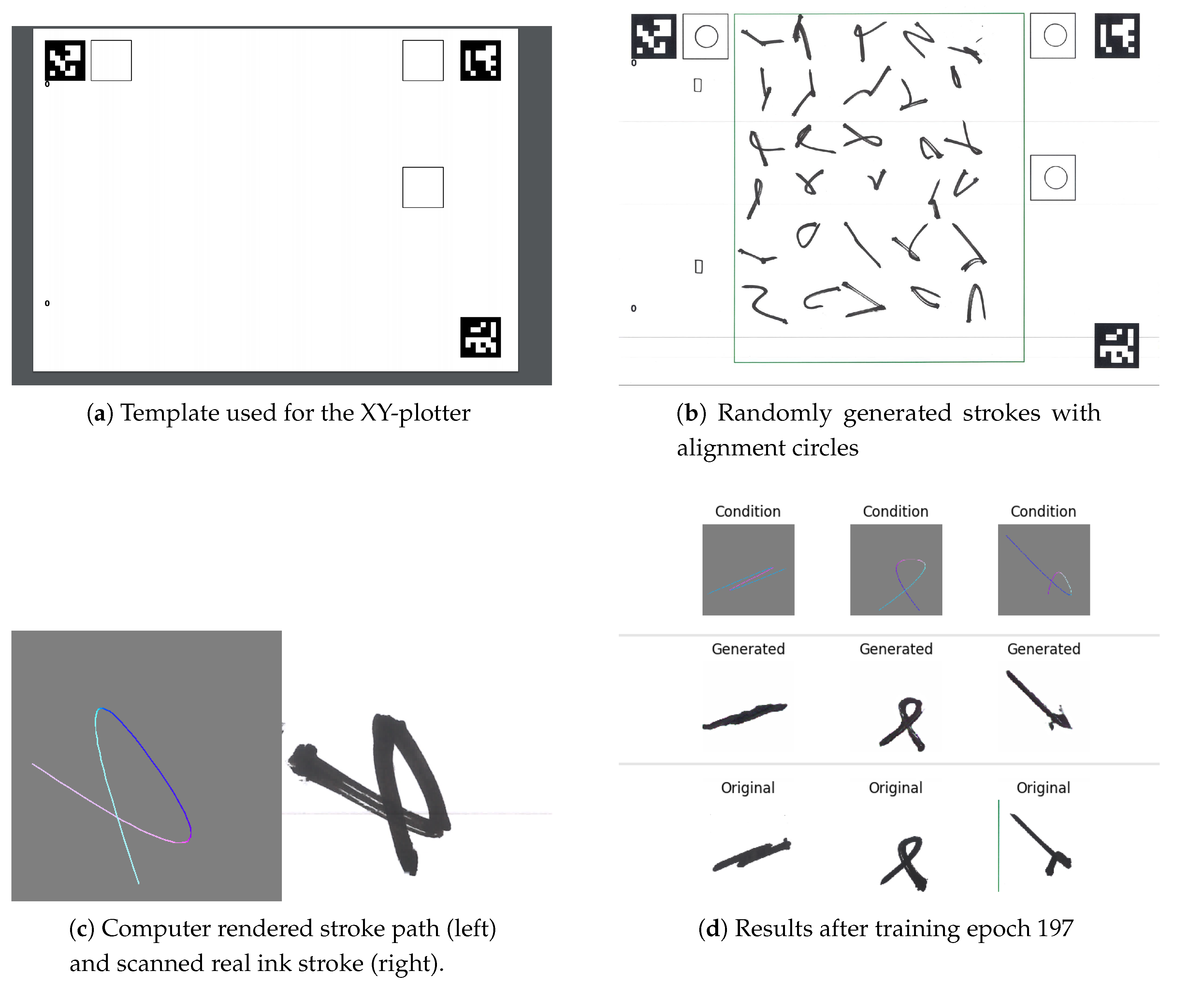

5.1. Data Generation and Data Gathering

5.2. Deep Learning Model

5.3. Deep Learning Results

5.4. Future Plans

6. Conclusions

Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DoF | Degree of Freedom |
| TCP | Tool Center Point |
| ArUco | Library for marker detection developed by the Ava gRoup of the University of Cordoba [40,41] |
| ChArUco | Chessboard + ArUco Marker |
| GRBL | Project name of an Arduino based G-Code Parser (https://github.com/gnea/grbl) |
| CNN | Convolutional Neural Network |
| GAN | Generative Adversarial Network |
| cGAN | Conditional Generative Adversarial Nets |

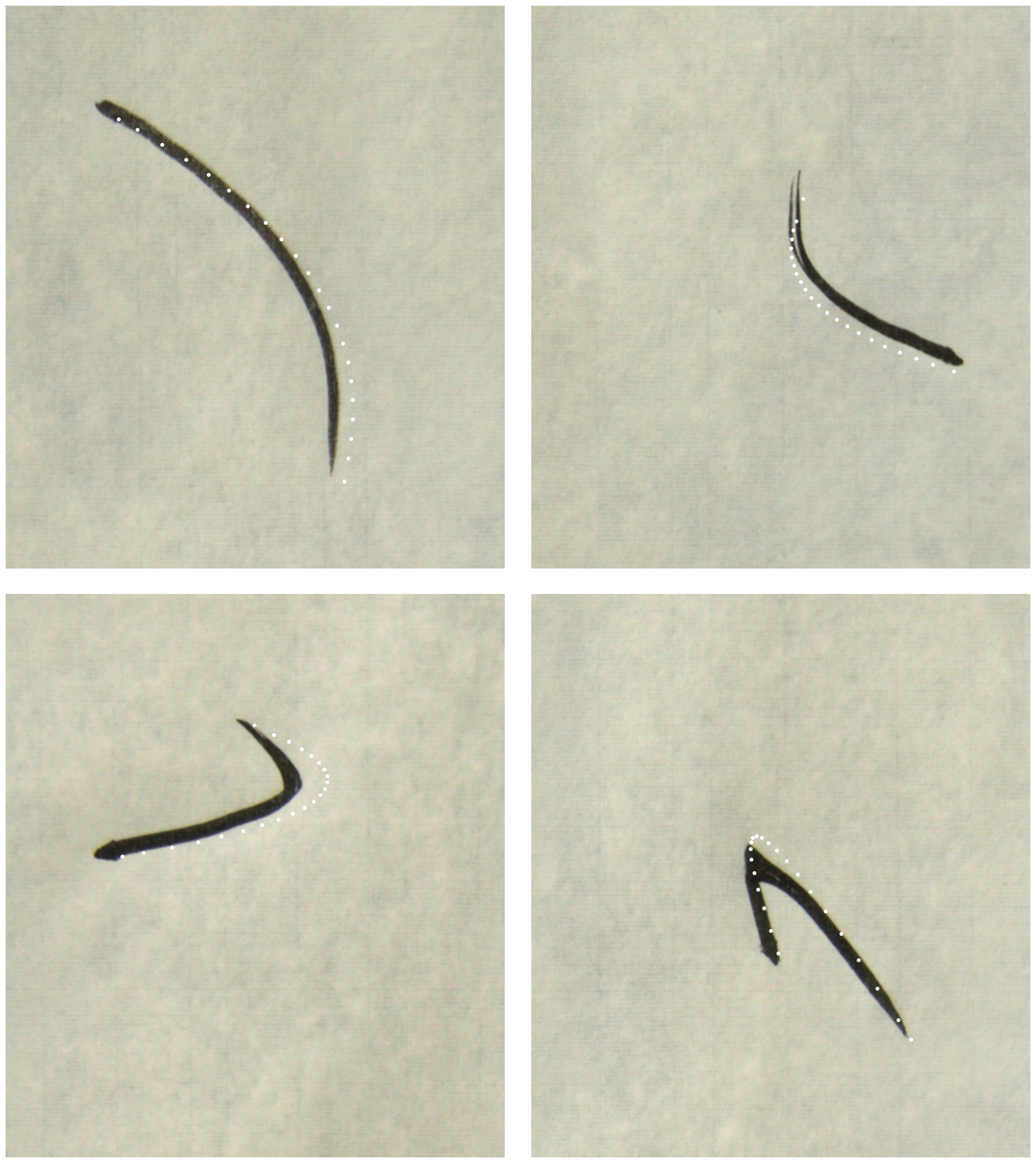

Appendix A. Brush Deflection Data

| Brush Type | Width (mm) | Bristle Length (mm) | Application Distance (mm) |
|---|---|---|---|
| Davinci College 2 | 1.5 | 10 | 7.5 |
| Davinci College 4 | 3 | 14 | 10.5 |
| Davinci College 8 | 5 | 22.5 | 16.9 |
| Davinci College 12 | 5.5 | 26 | 19.5 |
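Reading the table, the application distance appears to be about three quarters of the bristle length for every brush. This is an observation drawn from the listed numbers, not a rule stated in this section. The short sketch below recomputes the ratio for each row; the variable names are illustrative.

```python
# Rows copied from the table: (brush type, width, bristle length, application distance), all in mm.
brushes = [
    ("Davinci College 2", 1.5, 10.0, 7.5),
    ("Davinci College 4", 3.0, 14.0, 10.5),
    ("Davinci College 8", 5.0, 22.5, 16.9),
    ("Davinci College 12", 5.5, 26.0, 19.5),
]

# Ratio of application distance to bristle length for each brush.
ratios = {name: distance / bristle for name, _width, bristle, distance in brushes}

for name, ratio in ratios.items():
    print(f"{name}: application distance / bristle length = {ratio:.3f}")
```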

References

1. Reis Roboter-Baureihe RV Technische Daten; Reis Robotics GmbH: Obernburg, Bavaria, Germany, 2012.
2. Gülzow, J.; Grayver, L.; Deussen, O. Self-improving robotic brushstroke replication. Arts 2018, 7, 84.
3. Hertzmann, A. Painterly rendering with curved brush strokes of multiple sizes. In Proceedings of the 25th Annual Conference on Computer Graphics and Interactive Techniques, Orlando, FL, USA, 19–24 July 1998; pp. 453–460.
4. Levine, S.; Pastor, P.; Krizhevsky, A.; Ibarz, J.; Quillen, D. Learning hand-eye coordination for robotic grasping with deep learning and large-scale data collection. Int. J. Robot. Res. 2018, 37, 421–436.
5. Xie, N.; Hachiya, H.; Sugiyama, M. Artist agent: A reinforcement learning approach to automatic stroke generation in oriental ink painting. IEICE Trans. Inf. Syst. 2013, 96, 1134–1144.
6. Product Specification IRB 1200. ABB Document ID 3HAC046982. 2017. Available online: https://library.e.abb.com/public/695d95569cdc46b5a2722f7d1efacb1d/3HAC046982%20PS%20IRB%201200-de.pdf (accessed on 9 November 2019).
7. Product Specification IRB 1660ID. ABB Document ID 3HAC023604. 2017. Available online: https://library.e.abb.com/public/f021934fe70f41e9872b10d833d9ed54/3HAC023604%20PS%20IRB%201600-de.pdf (accessed on 12 January 2020).
8. Scalera, L.; Seriani, S.; Gasparetto, A.; Gallina, P. Non-photorealistic rendering techniques for artistic robotic painting. Robotics 2019, 8, 10.
9. Scalera, L.; Seriani, S.; Gasparetto, A.; Gallina, P. Watercolour robotic painting: A novel automatic system for artistic rendering. J. Intell. Robot. Syst. 2018, 95, 871–886.
10. Scalera, L.; Seriani, S.; Gasparetto, A.; Gallina, P. Busker robot: A robotic painting system for rendering images into watercolour artworks. In IFToMM Symposium on Mechanism Design for Robotics; Springer: Cham, Switzerland, 2018; pp. 1–8.
11. Berio, D.; Calinon, S.; Leymarie, F.F. Learning dynamic graffiti strokes with a compliant robot. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 3981–3986.
12. Dong, X.L.; Li, W.J.; Xin, N.; Zhang, L.P.; Lu, Y.X. Stylized Portrait Generation and Intelligent Drawing of Portrait Rendering Robot. DEStech Trans. Eng. Technol. Res. 2018.
13. Song, D.; Lee, T.; Kim, Y.J. Artistic pen drawing on an arbitrary surface using an impedance-controlled robot. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 4085–4090.
14. Xie, N.; Zhao, T.; Tian, F.; Zhang, X.H.; Sugiyama, M. Stroke-based stylization learning and rendering with inverse reinforcement learning. In Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence, Buenos Aires, Argentina, 25–31 July 2015.
15. Huang, Z.; Heng, W.; Zhou, S. Learning to paint with model-based deep reinforcement learning. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 8709–8718.
16. Jia, B.; Fang, C.; Brandt, J.; Kim, B.; Manocha, D. Paintbot: A reinforcement learning approach for natural media painting. arXiv 2019, arXiv:1904.02201.
17. Gatys, L.A.; Ecker, A.S.; Bethge, M. A neural algorithm of artistic style. arXiv 2015, arXiv:1508.06576.
18. Clavel, R. Robots Parallèles: Du Packaging à Cadence élevée à la Production D’ultra Haute Précision. 2011. Available online: http://jnrr2011.irccyn.ec-nantes.fr/presentations/ReymondClavel.pdf (accessed on 19 February 2020).
19. Das, M.T.; Dülger, L.C. Mathematical modelling, simulation and experimental verification of a scara robot. Simul. Model. Pract. Theory 2005, 13, 257–271.
20. Product Specification IRB 14000 YuMi. ABB Document ID 3HAC052982. 2018. Available online: https://library.e.abb.com/public/5056ab1df62c47f7ae78cc5c1218533c/3HAC052982%20PS%20IRB%2014000-de.pdf (accessed on 12 January 2020).
21. Neunte Verordnung zum Produktsicherheitsgesetz (9. ProdSV). BGBl. I S. 2178, 2202. 2011. Available online: https://www.gesetze-im-internet.de/gsgv_9/9._ProdSV.pdf (accessed on 7 February 2020).
22. Sungeun, K.J. GRBL Project Repository. 2020. Available online: https://github.com/grbl/grbl (accessed on 18 February 2020).
23. Weck, M. Werkzeugmaschinen 2: Konstruktion und Berechnung; Springer: Cham, Switzerland, 2006.
24. Lynch, K.M.; Park, F.C. Modern Robotics; Cambridge University Press: Cambridge, UK, 2017.
25. Operating Manual: IRC5 with FlexPendant for RobotWare 6.05. ABB Document ID 3HAC050941. 2017. Available online: https://library.e.abb.com/public/eb165ae225d9e01fc1257c0c003bbe3a/3HAC041344-de.pdf (accessed on 14 December 2019).
26. Chu, N.H.; Tai, C.L. An efficient brush model for physically-based 3D painting. In Proceedings of the 10th Pacific Conference on Computer Graphics and Applications, Beijing, China, 9–11 October 2002; pp. 413–421.
27. Baxter, W.; Govindaraju, N. Simple data-driven modeling of brushes. In Proceedings of the 2010 ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games, Washington, DC, USA, 19–21 February 2010; pp. 135–142.
28. Baxter, W.V.; Lin, M.C. A versatile interactive 3D brush model. In Proceedings of the 12th Pacific Conference on Computer Graphics and Applications, PG 2004, Seoul, Korea, 6–8 October 2004; pp. 319–328.
29. Stango, R.; Heinrich, S.; Shia, C. Analysis of constrained filament deformation and stiffness properties of brushes. J. Eng. Ind. 1989, 111, 238–243.
30. Detection of ChArUco Corners. Available online: https://docs.opencv.org/4.2.0/df/d4a/tutorial_charuco_detection.html (accessed on 28 January 2020).
31. Varda, K. Cap’n Proto. 2015. Available online: https://capnproto.org (accessed on 25 November 2019).
32. European Parliament. Directive 2006/42/EC of the European Parliament and of the Council of 17 May 2006. Off. J. Eur. Union 2006. Available online: http://data.europa.eu/eli/dir/2006/42/oj (accessed on 9 June 2006).
33. Isola, P.; Zhu, J.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. arXiv 2016, arXiv:1611.07004.
34. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Advances in Neural Information Processing Systems; NIPS: Montréal, QC, Canada, 2014; pp. 2672–2680.
35. Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784.
36. Florensa, C.; Held, D.; Wulfmeier, M.; Zhang, M.; Abbeel, P. Reverse curriculum generation for reinforcement learning. arXiv 2017, arXiv:1707.05300.
37. Still, S.; Precup, D. An information-theoretic approach to curiosity-driven reinforcement learning. Theory Biosci. 2012, 131, 139–148.
38. Abdulla, W. Mask R-CNN for Object Detection and Instance Segmentation on Keras and TensorFlow. 2017. Available online: https://github.com/matterport/Mask_RCNN (accessed on 1 January 2020).
39. Parkhi, O.M.; Vedaldi, A.; Zisserman, A. Deep face recognition. 2015. Available online: https://ora.ox.ac.uk/objects/uuid:a5f2e93f-2768-45bb-8508-74747f85cad1 (accessed on 4 March 2020).
40. Romero-Ramirez, F.; Muñoz-Salinas, R.; Medina-Carnicer, R. Speeded Up Detection of Squared Fiducial Markers. Image Vis. Comput. 2018, 76.
41. Garrido-Jurado, S.; Muñoz-Salinas, R.; Madrid-Cuevas, F.; Medina-Carnicer, R. Generation of fiducial marker dictionaries using Mixed Integer Linear Programming. Pattern Recognit. 2015, 51.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gülzow, J.M.; Paetzold, P.; Deussen, O. Recent Developments Regarding Painting Robots for Research in Automatic Painting, Artificial Creativity, and Machine Learning. Appl. Sci. 2020, 10, 3396. https://doi.org/10.3390/app10103396
