1. Introduction

Software defect prediction (SDP) is important for identifying defects in the early phases of the software development life cycle [1, 2]. Identifying and removing defects early is crucial to delivering a cost-effective, good-quality software product. SDP usually focuses on estimating the defect proneness of software modules, and helps software practitioners allocate limited testing resources to the parts that are most likely to contain defects. This is particularly useful when the whole software system is too large to be tested exhaustively or the project budget is limited.

Software defect datasets are typically characterized by an imbalanced class distribution in which the defective samples are fewer than the non-defective samples [3]. Data quality is usually the most critical factor in determining the performance of a classification model. The class imbalance of defect datasets seriously affects prediction performance, especially for extremely imbalanced data. The prediction model pays more attention to the non-defective samples, becomes biased toward them, and ignores the cost of misclassifying defective samples. Although misclassifying defective samples does not significantly reduce the overall classification accuracy, the accuracy on defective samples declines, which is inconsistent with the goal of software defect prediction. Zhou et al. proposed a model that combined attribute selection, sampling techniques, and an ensemble algorithm to solve the class imbalance problem [4]. Huda et al. introduced a new mixed sampling strategy to generate more pseudo samples from the defective class, and combined random oversampling, the Majority Weighted Minority Oversampling Technique, and Fuzzy-Based Feature-Instance Recovery to construct an ensemble classifier [5]. It has been shown that the prediction performance of Heterogeneous Defect Prediction (HDP) can be improved by balancing the defect dataset.
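The accuracy argument above can be made concrete with a small worked example. The sketch below uses hypothetical counts (90 non-defective and 10 defective modules, not taken from any dataset in this paper) and a trivial predictor that labels every module as non-defective; the helper names are illustrative.

```python
def accuracy(y_true, y_pred):
    """Fraction of modules whose label is predicted correctly."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def defect_recall(y_true, y_pred):
    """Fraction of truly defective modules (label 1) that are detected."""
    predictions_for_defective = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(predictions_for_defective) / len(predictions_for_defective)


# Hypothetical imbalanced project: 10 defective (1) and 90 non-defective (0) modules.
y_true = [1] * 10 + [0] * 90
# A degenerate model that always predicts "non-defective".
y_pred = [0] * 100

overall = accuracy(y_true, y_pred)
recall = defect_recall(y_true, y_pred)
print(f"accuracy = {overall:.2f}, recall on defective modules = {recall:.2f}")
```

Under these assumed counts the overall accuracy stays at 0.90 even though no defective module is found, which is why accuracy alone is a misleading measure on imbalanced defect data.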

At present, research on SDP mainly addresses defect prediction across homogeneous projects, which uses the historical data of other projects to construct the prediction model. The historical data have the same metrics as the target project but follow a different distribution. This provides sufficient historical data for the project to be predicted. However, different projects often differ in programming language and application field, and their features and distributions vary accordingly. It is therefore very difficult for a homogeneous defect prediction method to build a model with good prediction performance. How to use the historical data of other heterogeneous projects to build a prediction model and predict whether a target project module contains defects has thus become a research hotspot in software defect prediction.

HDP uses the data of other projects with different metrics to predict the defect proneness of the target project. However, because of the different metrics and the data differences between projects, such data cannot be used directly for model construction. Turhan et al. increased the data similarity between projects by exploiting the features common to the source and target projects [6]. Nam and Kim used feature selection and feature matching to build a predictor from heterogeneous projects [7]. Jing et al. combined Unified Metric Representation (UMR) and Canonical Correlation Analysis (CCA) and proposed CCA+ to make the distributions of the source and target projects similar [8]. However, these methods have three limitations. First, the discarded features may contain discriminative information useful for constructing the classification model. Second, if there are few common features, there may not be enough useful information for accurate prediction. Third, heterogeneous projects may have no common features at all.

Researchers then began to focus on a common latent space between the source project and the target project to handle the large feature differences between heterogeneous projects. Li et al. mapped the source project and the target project to a high-dimensional kernel space, and reduced the difference between the data distributions through a kernel correlation alignment method [9]. Xu et al. embedded the data from the two domains into a comparable low-dimensional feature space, and measured the difference between the two mapped domains using dictionaries learned from them with a dictionary learning technique [10]. Xu et al. used spectral embedding to map the source project and the target project from the high-dimensional space to a consistent low-dimensional space [11].

Transfer learning has been introduced into HDP to reduce the problem of data difference; it no longer requires the two projects to have the same feature dimension and distribution. Transfer learning is an important branch of machine learning whose goal is to use knowledge learned in an existing domain to solve a problem in a different but related domain. It differs from traditional machine learning in three respects: (1) training and test data may follow different distributions; (2) sufficient labeled data are not required; (3) the model can be transferred between different tasks. It can therefore be used to construct an effective HDP model.

However, not all features of the source project improve the transfer effect. Only features that carry important information and are distributed similarly to the target project are conducive to building a good HDP model. Researchers have therefore focused on data processing before transfer learning. Yu et al. achieved feature transfer from the source project to the target project by designing a feature matching algorithm that converts heterogeneous features into matched features according to the 'distance' between their distribution curves [12]. Ma et al. proposed a transfer learning algorithm based on Kernel Canonical Correlation Analysis to improve the adaptive ability of the prediction model [13]. Wen et al. adopted feature selection and source selection strategies, combined with Transfer Component Analysis, to obtain better prediction performance [14]. Chen et al. proposed a new heterogeneous transfer learning method based on a neural network [15]. Instances were transferred to generate quasi-real instances, and the quasi-real instances with high credibility were selected to expand the target project data and construct the prediction model. Tong et al. found a series of potential common kernel feature subspaces of the source and target projects by combining kernel spectral embedding, transfer learning, and ensemble learning [16]. These approaches demonstrate that combining feature selection with feature transfer can improve the performance of an HDP model.

In this paper, the main idea of the proposed approach is to generate samples along the density curve to balance the dataset. This not only reduces the influence of imbalanced data on the classification surface, but also avoids generating new samples in the dense area of non-defective samples. Ensemble learning selects part of the data and builds multiple Classification and Regression Trees (CART) [17]. Dimensionality reduction of nonlinear data in manifold space can preserve the information of high-dimensional data with complex structure, and the inverse mapping of the low-dimensional data also retains most of the data information [18]. Transfer learning in the manifold space reduces the distortion and deformation of local feature neighborhoods. The proposed approach is called Grassmann manifold optimal transfer defect prediction (GMOTDP). New samples are generated according to the relative density curve of the defective samples of the source project, which balances the dataset. The optimal subset of the source project is constructed according to the feature importance ranking. A joint similarity measure is used to construct the optimal subset of the target project. Transfer learning in the manifold space is then carried out using the optimal subsets of the source and target projects. The main contributions are as follows:

(1) New samples are generated according to the hyperelliptical density curve of the defective samples to balance the source project.

(2) Ensemble learning and a joint similarity measure are used to obtain the optimal subsets of the source project and the target project, respectively.

(3) The nonlinear data are mapped to the Grassmann manifold space, and the geodesic flow kernel (GFK) is used to transfer the source project to the same distribution space as the target project.

2. Proposed Framework

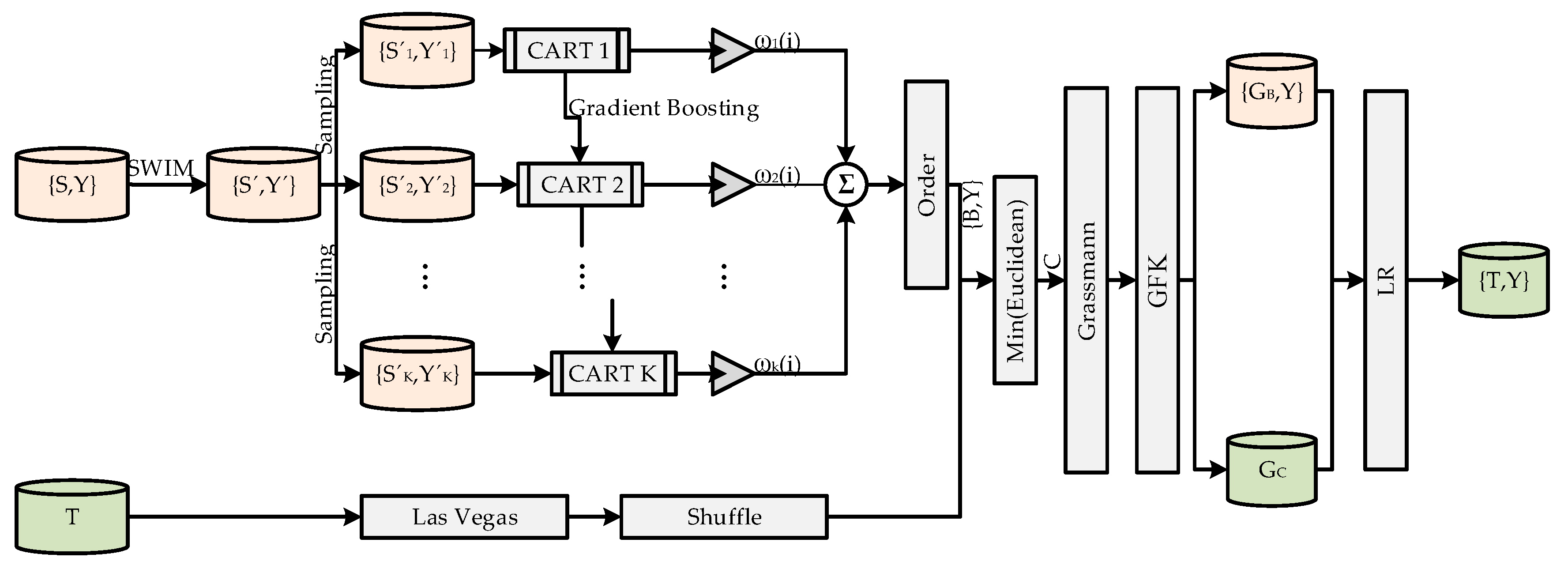

The framework of the proposed GMOTDP approach is shown in Figure 1. The implementation consists of the following three parts.

(1) In the oversampling phase, Sampling With the Majority (SWIM) is used to generate new samples along the hyperelliptical density contour of each defective sample. This overcomes a limitation of SMOTE, which can only generate samples inside the convex hull formed by the defective samples: SWIM can generate samples outside this convex hull, while preventing them from being generated in high-probability areas of the non-defective class. The imbalanced source project {S,Y} is oversampled to obtain a balanced dataset {S',Y'}.

(2) In the feature selection phase, the irrelevant features of the source project are first removed. The importance of each feature is quantified using Classification and Regression Trees (CART) combined with ensemble learning: the gradient boosting algorithm reduces the loss of each CART, and a new tree is generated at each step to ensure the reliability of the final decision. In the objective function, the complexity of the tree model is added as a regularization term to avoid overfitting. The loss function is expanded by Taylor expansion, and its first and second derivatives are used to optimize the objective quickly. The weighted average of each feature's importance over all trees determines the optimal subset of the source project to be transferred. The features of the target project are then randomly shuffled a specified number of times, and the Euclidean distance to the optimal subset of the source project is calculated each time to measure similarity; the most similar subset is taken as the optimal subset of the target project to be transferred.

(3) In the feature transfer phase, the traditional Euclidean metric is difficult to apply to real-world nonlinear data, so a new assumption about the data distribution is needed. Manifolds are locally Euclidean spaces, which makes it possible to find low-dimensional embeddings hidden in high-dimensional data. From a topological perspective, a manifold is locally linear and homeomorphic to a low-dimensional Euclidean space. From the perspective of differential geometry, any sufficiently small part of it can be regarded as a Euclidean space. All samples are mapped to the Grassmann manifold. The source project is mapped to the low-dimensional space it shares with the target project GC using local neighborhood similarity, and the Geodesic Flow Kernel (GFK) yields source project data {GB,Y} whose distribution is similar to that of the target project. The source and target project datasets are then inversely mapped. A Logistic Regression (LR) classifier is trained on the transformed source project data and used to predict the target project.

2.1. Sampling with the Majority

SWIM makes full use of the relative distribution information: it can generate samples outside the convex hull formed by the defective samples while preventing them from being generated in the dense area of the non-defective samples. The Mahalanobis distance (MD) of each given minority class instance corresponds to a hyperelliptical density contour around the majority class, and the minority class is inflated by generating synthetic samples along these contours, which expands the defective class. The MD of a sample $x$ from the mean $\mu$ is calculated using the inverse $\Sigma^{-1}$ of the covariance matrix $\Sigma$ as:

$$MD(x) = \sqrt{(x-\mu)^{T}\,\Sigma^{-1}\,(x-\mu)}$$

Centering the data simplifies the calculation of the distances; this becomes evident in a later step, when a new sample point is generated. The mean vector $\mu_A$ of the defect-free samples is calculated, and the defect-free samples $A$ and the defective samples $B$ are centered, respectively:

$$A_c = A - \mu_A, \qquad B_c = B - \mu_A$$

The MD is equivalent to the Euclidean distance in the whitened space of a distribution. Thus, we simplify the calculations for generating samples by whitening the space. Let $\Sigma$ denote the covariance matrix of $A$; we whiten the centered minority class $B_c$ as:

$$B_w = B_c\,\Sigma^{-1/2}$$

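A defective sample $x$ in $B_w$ is selected randomly as the reference datum, and new samples are generated at the same Euclidean distance from the mean of the non-defective class (the origin of the centered, whitened space) as $x$. For each feature $f$ in $B_w$, we find its mean $\mu_f$ and standard deviation $\sigma_f$. Each feature of a candidate sample $s'$ is a random number between $\mu_f - \alpha\sigma_f$ and $\mu_f + \alpha\sigma_f$, where $\alpha$ controls the number of standard deviations we want the bounds to be. The upper and lower bounds on its value, $u_f$ and $l_f$, are as follows:

$$u_f = \mu_f + \alpha\sigma_f, \qquad l_f = \mu_f - \alpha\sigma_f$$

Because the data are centered, rescaling $s'$ gives the new sample the same Euclidean norm as the defective sample $x$. We transform $s'$ as:

$$s_{norm} = s'\,\frac{\lVert x\rVert_2}{\lVert s'\rVert_2}$$

Each new sample is then transformed back to the original space, where the new sample $s$ lies on the same density curve as the reference datum:

$$s = (\Sigma^{-1/2})^{-1}\,s_{norm}$$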

This process can be repeated t times, where t is the desired number of samples to be generated based on the reference datum. SWIM is summarized as in Algorithm 1.

Algorithm 1. Sampling with the Majority

Input: imbalanced and labeled source dataset $\{S, Y\}$, sampling rate.

Output: balanced and labeled source dataset $\{S', Y'\}$.

Method:

N = number of samples (non-defective class $A$, defective class $B$).

Compute the mean $\mu_A$ and the covariance matrix $\Sigma$ with $A$.

Whiten $B$ as $B_w$, and compute the mean $\mu_f$ and standard deviation $\sigma_f$ for each feature $f$.

for i = 1 to t, do

select a sample $x$ randomly from $B_w$;

generate a new sample $s'$, where each feature satisfies $l_f \le s'_f \le u_f$;

transform $s$ back into the original space;

end for

return $\{S', Y'\}$
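The sampling steps described in this subsection can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `swim_oversample`, its parameters, and the toy data are hypothetical; the inverse square root of the covariance is taken through an eigendecomposition; and the majority mean is added back after unwhitening so that the result lives in the original coordinates.

```python
import numpy as np


def swim_oversample(A, B, n_new, alpha=1.0, seed=None):
    """Generate n_new synthetic minority samples (rows) from majority A and minority B."""
    rng = np.random.default_rng(seed)
    mu_a = A.mean(axis=0)                      # mean of the majority class
    B_c = B - mu_a                             # center minority on the majority mean
    cov = np.cov(A, rowvar=False)              # covariance of the majority class
    eigval, eigvec = np.linalg.eigh(cov)
    eigval = np.maximum(eigval, 1e-12)         # guard against a singular covariance
    whiten = (eigvec * eigval ** -0.5) @ eigvec.T    # Sigma^(-1/2)
    unwhiten = (eigvec * eigval ** 0.5) @ eigvec.T   # inverse of Sigma^(-1/2)
    B_w = B_c @ whiten                         # whitened minority class
    mu_f, sigma_f = B_w.mean(axis=0), B_w.std(axis=0)
    lower, upper = mu_f - alpha * sigma_f, mu_f + alpha * sigma_f
    samples = []
    for _ in range(n_new):
        x = B_w[rng.integers(len(B_w))]        # random reference datum
        s = rng.uniform(lower, upper)          # candidate within the feature bounds
        s_norm = s * np.linalg.norm(x) / np.linalg.norm(s)  # same norm as x
        # Assumption: add the majority mean back to undo the centering step.
        samples.append(s_norm @ unwhiten + mu_a)
    return np.asarray(samples)


# Toy data (hypothetical): 88 majority and 10 minority samples in two dimensions.
rng = np.random.default_rng(0)
A = rng.normal(size=(88, 2))
B = rng.normal(loc=3.0, size=(10, 2))
synthetic = swim_oversample(A, B, n_new=20, alpha=1.0, seed=1)
print(synthetic.shape)
```

By construction, each synthetic sample has the same Mahalanobis distance from the majority mean as the reference sample it was derived from, so it lies on that reference's density contour.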

To verify the effectiveness of SWIM and produce representative results, an imbalanced training set with 10 minority samples and 88 majority samples and a balanced test set with 300 samples were created. The demonstration is presented in Figure 2. The left panel shows the results of oversampling the imbalanced dataset with SWIM. The right panel shows the classification results of the support vector machine without oversampling. The majority class training samples are shown as red squares with black outlines, and the corresponding test samples are shown as red circles. The minority class training samples are shown as blue squares with white outlines, and the corresponding test samples are shown as blue circles. The new samples generated by SWIM are shown as cyan squares with white outlines. It can be seen from Figure 2 that the samples generated by SWIM are spread along the density curves of the minority data with respect to the majority class. The decision surfaces generated by the two classifiers (represented by the shading in the plot) show that using the information in the majority class to generate samples leads to a more representative decision surface and thus better classification performance.

To further demonstrate the effectiveness of this method, the classification results on the training set without sampling and with SWIM were compared. A defect dataset was divided into a training set and a test set at a ratio of 7:3 and classified by SVM. The average results over 10 runs are shown in Table 1. They show that SWIM has a significant advantage when the relative and absolute imbalance is very high.

2.2. Feature Selection

This phase selects the optimal subsets of the source and target projects for feature transfer. The importance of each feature is quantified with a tree model and used to select features. During CART construction, the feature with the maximum gain is selected for splitting until the maximum depth is reached, which minimizes the cost of CART splitting. When the next tree is constructed by ensemble learning, a complexity term is added to the objective function. The first and second derivatives are used to reduce the loss of CART, minimize the objective function, and ensure the reliability of the final decision. The importance of each feature is determined by its weighted average over all trees.
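As a small illustration of choosing the split with the maximum gain, the sketch below scores every candidate threshold of every feature. It assumes the classical CART criterion for binary labels (reduction in Gini impurity), which may differ from the gain actually used in the boosted trees here; the function names and the toy data are hypothetical.

```python
def gini(labels):
    """Gini impurity of a list of binary labels (0 or 1)."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2.0 * p * (1.0 - p)


def best_split(X, y):
    """Return (gain, feature index, threshold) of the split with the largest Gini gain."""
    n = len(y)
    parent = gini(y)
    best = (0.0, None, None)
    for j in range(len(X[0])):
        for thr in sorted({row[j] for row in X}):
            left = [label for row, label in zip(X, y) if row[j] <= thr]
            right = [label for row, label in zip(X, y) if row[j] > thr]
            if not left or not right:
                continue  # a split must send samples to both children
            weighted = (len(left) * gini(left) + len(right) * gini(right)) / n
            gain = parent - weighted
            if gain > best[0]:
                best = (gain, j, thr)
    return best


# Toy data (hypothetical): feature 0 separates the two classes, feature 1 does not.
X = [[1, 5], [2, 3], [3, 6], [4, 2]]
y = [0, 0, 1, 1]
gain, feature, threshold = best_split(X, y)
print(gain, feature, threshold)  # 0.5 0 2
```

Here the split on feature 0 at threshold 2 removes all impurity, so it is preferred over any split on feature 1.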

During the construction of CART, the objective function to be minimized is as follows:

$$Obj = \sum_{i} l(y_i, \hat{y}_i) + \sum_{k} \Omega(f_k), \qquad \Omega(f) = \gamma T + \frac{1}{2}\lambda \lVert w \rVert^{2}$$

Here, $l$ is a differentiable convex loss function, which measures the difference between the prediction $\hat{y}_i$ and the target $y_i$. The second term $\Omega$ penalizes the complexity of the model, which helps to smooth the final learned weights and avoid overfitting. $T$ is the number of leaf nodes, $\lVert w \rVert$ is the magnitude of the leaf weight vector, $\gamma$ represents the difficulty of splitting a node, and $\lambda$ represents the L2 regularization coefficient.

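At the $t$-th iteration, the objective becomes:

$$Obj^{(t)} = \sum_{i=1}^{n} l\left(y_i,\ \hat{y}_i^{(t-1)} + f_t(x_i)\right) + \Omega(f_t)$$

where $\hat{y}_i^{(t)}$ is the prediction of the $i$-th sample at the $t$-th iteration, and $q$ is the structure function of each tree, which maps an example to the corresponding leaf index. The objective function greedily adds $f_t$. Each $f_t$ corresponds to an independent tree structure $q$ and leaf weights $w$, that is, $f_t(x) = w_{q(x)}$.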

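A second-order approximation optimizes the objective quickly; the constant term of the objective function is removed:

$$\widetilde{Obj}^{(t)} = \sum_{i=1}^{n} \left[ g_i f_t(x_i) + \frac{1}{2} h_i f_t^{2}(x_i) \right] + \Omega(f_t)$$

where $g_i = \partial_{\hat{y}_i^{(t-1)}}\, l(y_i, \hat{y}_i^{(t-1)})$ and $h_i = \partial^{2}_{\hat{y}_i^{(t-1)}}\, l(y_i, \hat{y}_i^{(t-1)})$ are the first- and second-order gradient statistics of the loss function.

The optimal weight $w_j^{*}$ of each leaf $j$ in each tree, which is used to calculate the feature importance, is obtained as follows, where $I_j$ is the set of samples assigned to leaf $j$ and $\lambda$ is the L2 regularization coefficient:

$$w_j^{*} = -\frac{\sum_{i \in I_j} g_i}{\sum_{i \in I_j} h_i + \lambda}$$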
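To make the role of the gradient statistics concrete, the following sketch evaluates the standard second-order leaf weight, minus the sum of first-order gradients divided by the sum of second-order gradients plus the L2 coefficient, for one leaf. It assumes the logistic loss on raw scores, which the text does not specify; the function names and the three-sample leaf are hypothetical.

```python
import math


def logistic_grad_hess(y, raw_score):
    """First and second derivatives of the logistic loss with respect to the raw score."""
    p = 1.0 / (1.0 + math.exp(-raw_score))
    return p - y, p * (1.0 - p)


def optimal_leaf_weight(grads, hessians, lam):
    """Leaf weight that minimizes the second-order objective for one leaf."""
    return -sum(grads) / (sum(hessians) + lam)


def leaf_objective(grads, hessians, lam):
    """Value of the second-order objective at the optimal leaf weight (without the gamma term)."""
    g_sum = sum(grads)
    return -0.5 * g_sum * g_sum / (sum(hessians) + lam)


# Hypothetical leaf holding two defective samples and one non-defective sample,
# all with a previous raw score of 0 (predicted probability 0.5).
labels = [1, 1, 0]
stats = [logistic_grad_hess(y, 0.0) for y in labels]
grads = [g for g, _ in stats]
hessians = [h for _, h in stats]

weight = optimal_leaf_weight(grads, hessians, lam=1.0)
print(round(weight, 4))  # 0.2857
```

The positive weight pushes the raw score of this leaf upward, toward the majority label within the leaf; a larger regularization coefficient shrinks the weight toward zero.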

The Las Vegas algorithm is a typical randomized method, that is, a probabilistic algorithm. Such an algorithm is allowed to choose its next step randomly during execution. In many cases, when the algorithm faces a choice during execution, a random choice takes less time than computing the optimal choice, so a probabilistic algorithm can greatly reduce complexity. In this paper, the Las Vegas method is used to randomly shuffle the features of the target project and calculate the Euclidean distance to the source project to measure similarity. Within a given number of trials, the subset with the highest distribution similarity is selected as the optimal subset of the target project for subsequent transfer learning. Feature selection is summarized in Algorithm 2.

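Algorithm 2. Feature Selection

Input: features and labels of the source dataset, the number K and depth D of CART, the imbalanced and unlabeled target dataset, the number of random trials R.

Output: similar datasets (the optimal subsets of the source project and the target project).

Method:

for k = 1 to K, do

for d = 1 to D, do

sample the data;

compute the gain of each feature;

select the feature with the maximum gain to split;

end for

compute the prediction label, the complexity index, and the feature weight;

apply the second-order approximation and update the leaf weights;

end for

average the feature weights over all trees, sort them, and take the optimal subset of the source project;

for r = 1 to R, do

shuffle the features of the target project;

select a target subset with the same number of columns as the source subset;

compute the Euclidean distance between the source subset and the target subset;

end for

return the source subset and the target subset with the minimum Euclidean distance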
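The randomized search over target feature subsets can be sketched as below. The text does not say how the Euclidean distance between two datasets with different numbers of samples is computed, so this sketch assumes each column is summarized by its mean and standard deviation and the distance is taken between these summary vectors. The function names, the seed, and the toy data are hypothetical.

```python
import math
import random


def column_profile(data, cols):
    """Concatenate (mean, standard deviation) of the chosen columns, in order."""
    profile = []
    for c in cols:
        values = [row[c] for row in data]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        profile.extend([mean, math.sqrt(var)])
    return profile


def random_subset_search(source, target, trials, seed=0):
    """Shuffle target columns `trials` times and keep the ordered subset closest to the source."""
    rng = random.Random(seed)
    k = len(source[0])                      # number of selected source features
    src_profile = column_profile(source, range(k))
    cols = list(range(len(target[0])))
    best_cols, best_dist = None, math.inf
    for _ in range(trials):
        rng.shuffle(cols)
        candidate = cols[:k]                # first k columns of the shuffled order
        dist = math.dist(src_profile, column_profile(target, candidate))
        if dist < best_dist:
            best_cols, best_dist = list(candidate), dist
    return best_cols, best_dist


# Toy data (hypothetical): target columns 3 and 1 mirror source columns 0 and 1.
source = [[1, 10], [2, 20], [3, 30]]
target = [[100, 10, 7, 1], [200, 20, 7, 2], [300, 30, 7, 3]]
best_cols, best_dist = random_subset_search(source, target, trials=200)
print(best_cols, best_dist)
```

The search is budgeted rather than exhaustive: with few trials it may return a subset that is close but not the closest, which is the trade-off the text describes between random and optimal choices.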

2.3. Transfer Learning in Manifold Space

A manifold is a space that is locally homeomorphic to Euclidean space. Distances can therefore be calculated locally with the Euclidean distance, which overcomes the feature distortion of transfer learning in the original space. The Grassmann manifold takes the $d$-dimensional subspaces of the original space as its basic elements, which helps in learning the classifier. Feature transformations on it usually have an effective numerical form, so transfer learning problems can be represented and solved very efficiently. In addition, there are two main approaches to feature-based transfer learning: transferring the source project to the target project, or transferring both the source and target projects to a common space. Li et al. found that the former performs better than the latter [19]. In this paper, the optimal subsets of the source and target projects are transformed into the Grassmann manifold. The Geodesic Flow Kernel (GFK) method constructs a geodesic flow that brings the source domain close to the target domain. By integrating along the geodesic between the two points on the manifold that represent the two domains, source project data with the same distribution as the target project are obtained.

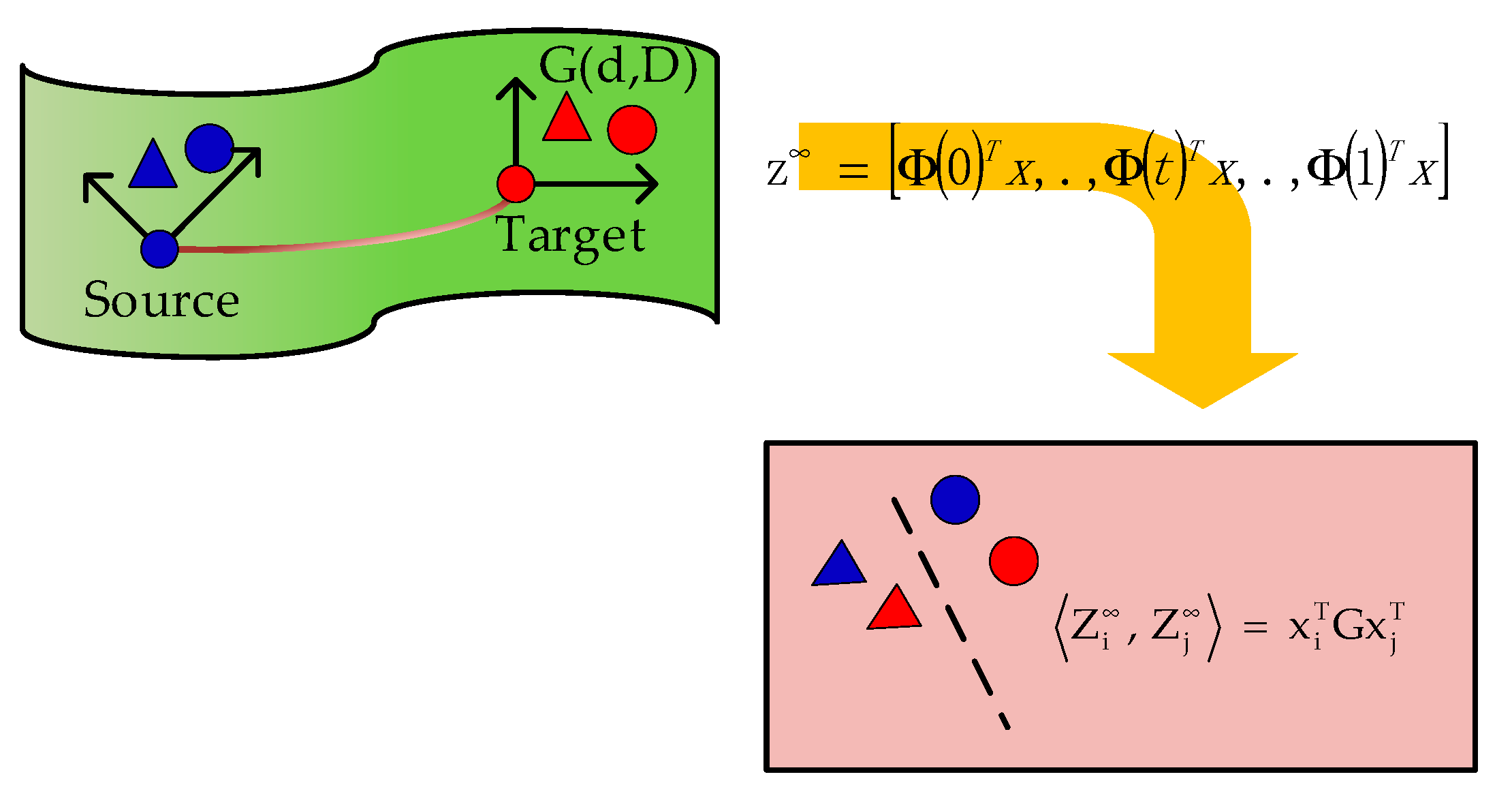

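As shown in Figure 3, GFK embeds the $d$-dimensional subspaces $S_s$ and $S_t$, obtained by dimensionality reduction of the source domain and the target domain, into the manifold $\mathbb{G}$. $\Phi(0)$ is the source domain representation on $\mathbb{G}$, $\Phi(1)$ is the target domain representation on $\mathbb{G}$, and the geodesic flow between $\Phi(0)$ and $\Phi(1)$ is equivalent to transforming the original feature space into an infinite-dimensional space, which reduces the drift between domains. The parameterization is as follows:

$$\Phi: t \in [0,1] \rightarrow \Phi(t) \in \mathbb{G}, \qquad \Phi(0) = S_s, \quad \Phi(1) = S_t$$

$\Phi(t)$ is represented as follows, where $U_1$ and $U_2$ are orthogonal matrices obtained by SVD, $R_s$ is the orthogonal complement of $S_s$, and $\Gamma(t)$ and $\Sigma(t)$ are diagonal matrices whose entries are $\cos(t\theta_i)$ and $\sin(t\theta_i)$ for the principal angles $\theta_i$ between $S_s$ and $S_t$:

$$\Phi(t) = S_s U_1 \Gamma(t) - R_s U_2 \Sigma(t)$$

Let $z_i$ and $z_j$ denote the features of $x_i$ and $x_j$ in the manifold space. The inner product of the transformed features gives rise to the positive semidefinite geodesic flow kernel:

$$\langle z_i, z_j \rangle = \int_0^1 \left(\Phi(t)^{T}x_i\right)^{T}\left(\Phi(t)^{T}x_j\right)dt = x_i^{T}\, G\, x_j$$

Thus, the features in the original space can be transformed into the Grassmann manifold with $z = \sqrt{G}\,x$.

$G$ can be effectively calculated by singular value decomposition.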
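A compact NumPy sketch of the geodesic flow kernel is given below for illustration. It assumes that the subspaces are PCA bases, and it builds the geodesic from the SVD of the product of the two bases and the component of the target basis that lies outside the source subspace, which avoids forming the orthogonal complement explicitly. The function names (`pca_basis`, `geodesic_flow_kernel`, `map_to_manifold`) and the random data are hypothetical, and this is not the authors' implementation.

```python
import numpy as np


def pca_basis(X, d):
    """Orthonormal basis (D x d) of the top-d principal directions of the rows of X."""
    Xc = X - X.mean(axis=0)
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)
    return vt[:d].T


def geodesic_flow_kernel(Ps, Pt, eps=1e-12):
    """Return the kernel matrix G (D x D) and the geodesic phi(t) between two subspaces."""
    D = Ps.shape[0]
    U1, cos_theta, Vt = np.linalg.svd(Ps.T @ Pt)
    theta = np.arccos(np.clip(cos_theta, -1.0, 1.0))   # principal angles
    # Component of the (rotated) target basis outside span(Ps); column i has norm sin(theta_i).
    M = (np.eye(D) - Ps @ Ps.T) @ Pt @ Vt.T
    Q = M / np.maximum(np.sin(theta), eps)              # plays the role of -R_s U_2
    A = Ps @ U1

    def phi(t):
        return A * np.cos(t * theta) + Q * np.sin(t * theta)

    # Closed-form integrals over t in [0, 1]; np.sinc(x) = sin(pi x) / (pi x).
    lam1 = 0.5 * (1.0 + np.sinc(2.0 * theta / np.pi))   # integral of cos^2(t * theta)
    lam2 = 0.5 * np.sin(theta) * np.sinc(theta / np.pi)  # integral of sin * cos
    lam3 = 0.5 * (1.0 - np.sinc(2.0 * theta / np.pi))   # integral of sin^2(t * theta)
    G = (A * lam1) @ A.T + (A * lam2) @ Q.T + (Q * lam2) @ A.T + (Q * lam3) @ Q.T
    return G, phi


def map_to_manifold(X, G):
    """Apply z = sqrt(G) x to every row of X."""
    eigval, eigvec = np.linalg.eigh(G)
    sqrt_G = (eigvec * np.sqrt(np.clip(eigval, 0.0, None))) @ eigvec.T
    return X @ sqrt_G


# Hypothetical source and target data with six features each.
rng = np.random.default_rng(0)
Xs = rng.normal(size=(40, 6)) @ rng.normal(size=(6, 6))
Xt = rng.normal(size=(30, 6)) @ rng.normal(size=(6, 6))
Ps, Pt = pca_basis(Xs, 2), pca_basis(Xt, 2)
G, phi = geodesic_flow_kernel(Ps, Pt)
Zs, Zt = map_to_manifold(Xs, G), map_to_manifold(Xt, G)
print(G.shape, Zs.shape, Zt.shape)
```

In this sketch `phi(0)` spans the source subspace and `phi(1)` spans the target subspace, and inner products of the mapped rows reproduce the kernel, since a mapped source row times a mapped target row equals the source row times `G` times the target row. A classifier trained on `Zs` can then be applied to `Zt`.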

Logistic regression is used as the classifier: it is trained on the source project data that now follow the same distribution as the target project, and the resulting model is used to predict whether each module of the target project is defective.

4. Conclusions

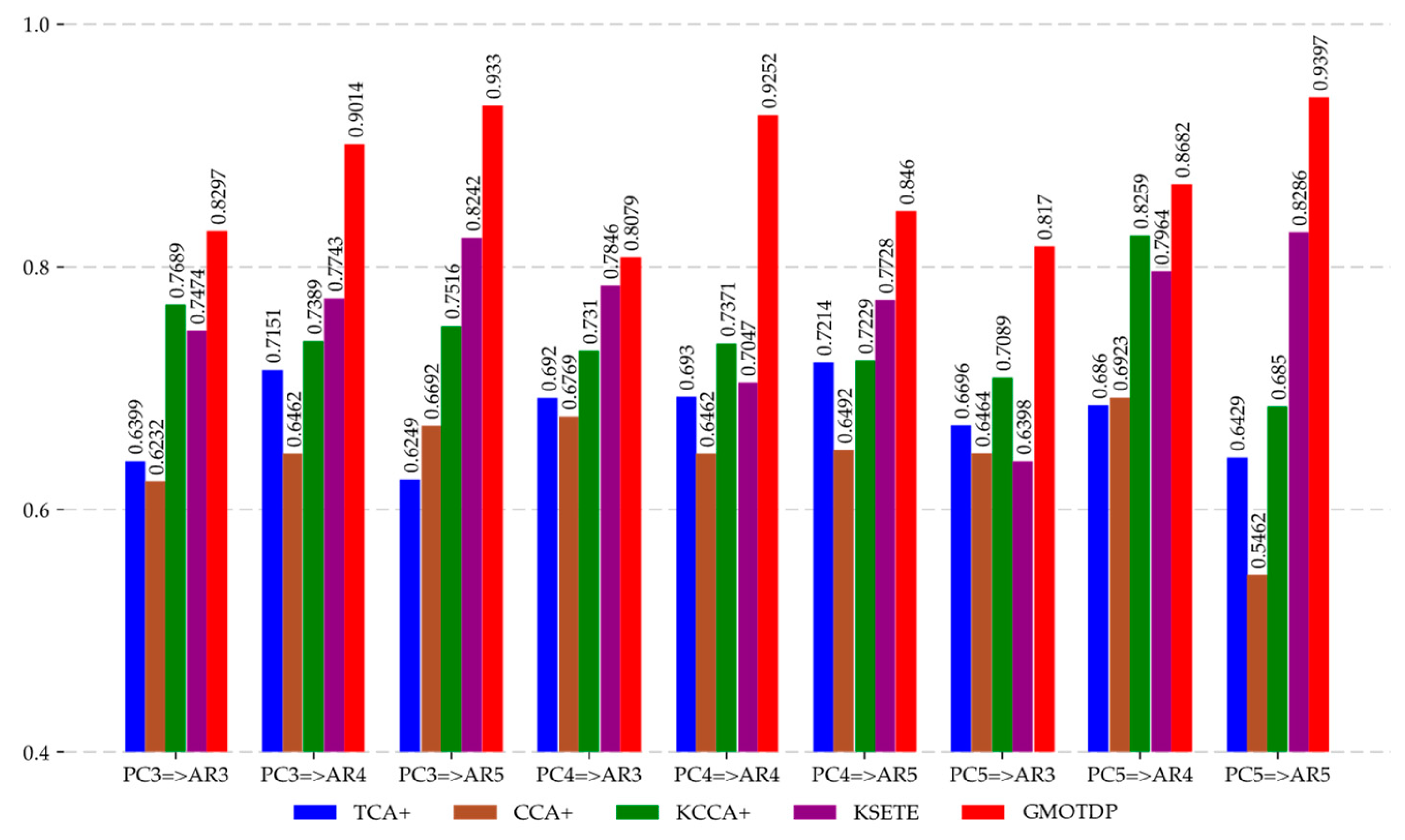

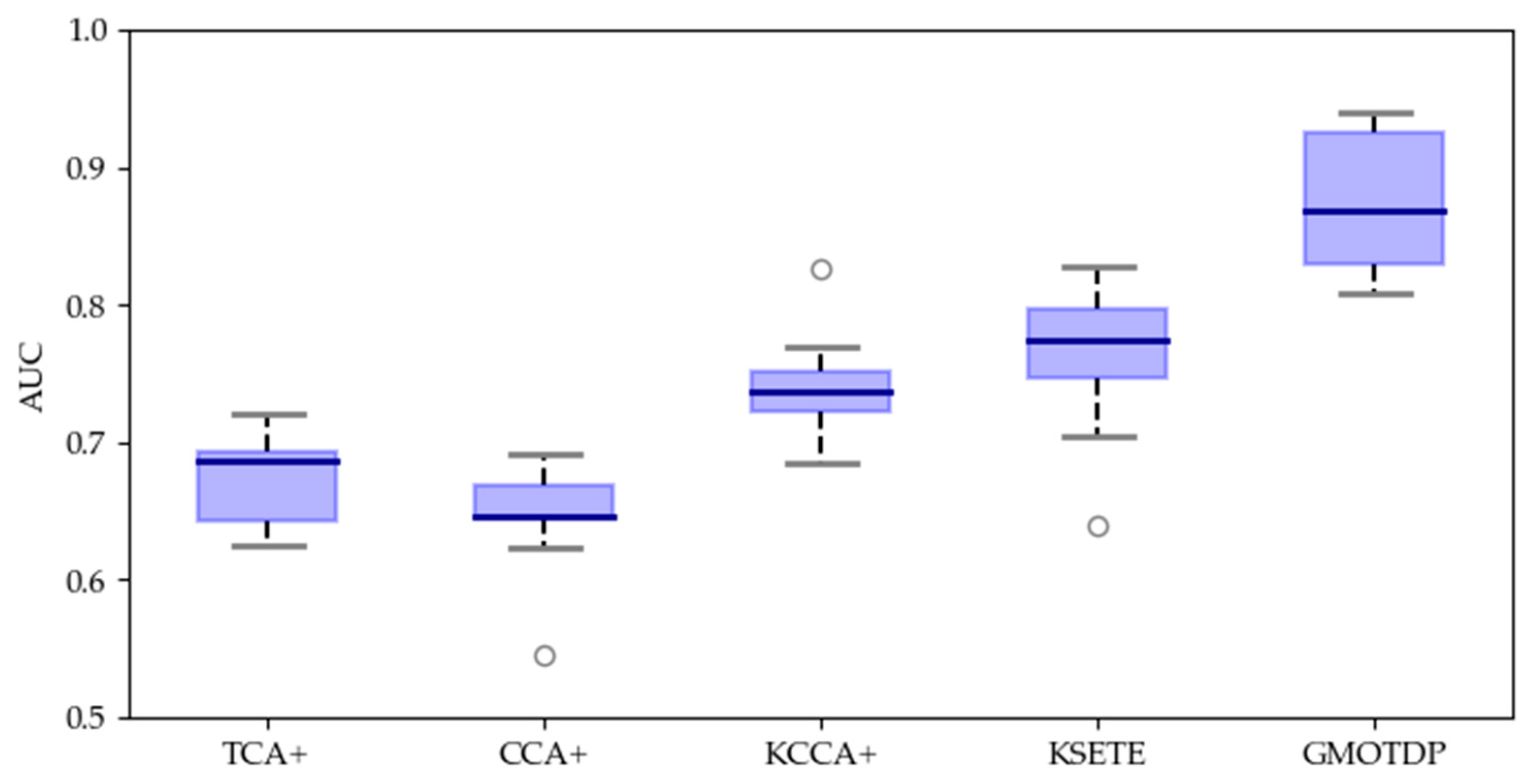

This paper proposes a three-phase heterogeneous software defect prediction method, GMOTDP, which comprises SWIM oversampling, feature selection, and transfer learning. New minority samples are generated based on the Mahalanobis distance to balance the source project dataset. CART-based ensemble learning is used to determine the optimal subset of the source project, and the joint similarity measure is used to obtain the optimal subset of the target project. By transferring the optimal subsets in manifold space, source project data with the same distribution as the target project are obtained, which satisfies the conditions of traditional classification and makes it possible to predict the defect proneness of the target project modules.

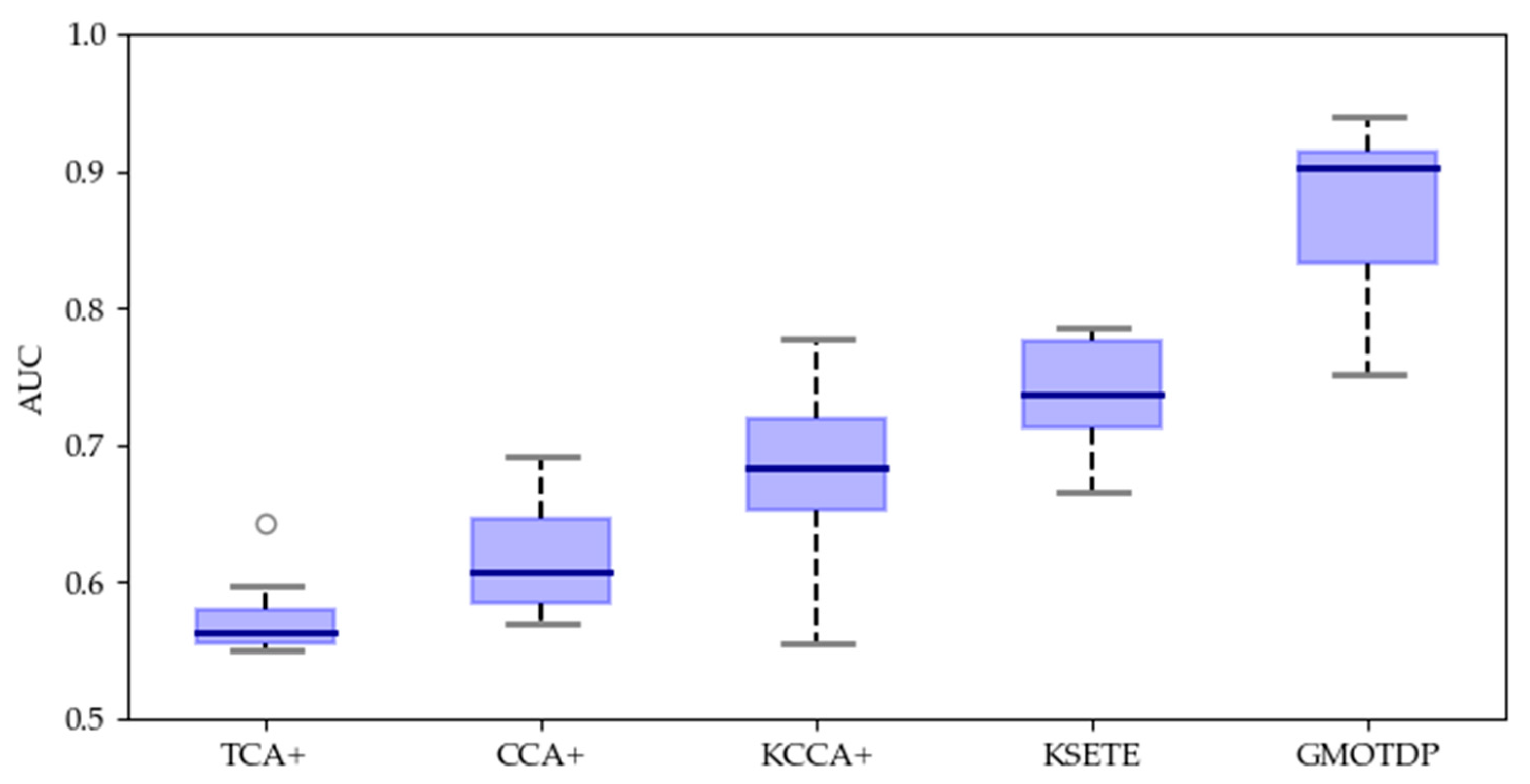

Extensive experiments were designed to validate the proposed scheme using nine projects from three public-domain software defect datasets. Compared with several representative software defect prediction methods, the proposed GMOTDP achieves better prediction performance. The AUC results show that our method usually performs better than the other four methods.

In the future, we will study how to combine other supervised learning methods with sample-level and algorithm-level methods, and investigate the influence of extreme class imbalance on semi-supervised software defect predictors using more datasets. This is an interesting issue to explore, and it might shed light on the design of more powerful supervised learning algorithms.