Abstract

With the large-scale deployment of multihomed mobile computing devices in today’s Internet, Multipath TCP (MPTCP) is being considered as a preferred data transmission technology for the future Internet due to its promising features of bandwidth aggregation and multipath transmission. However, MPTCP is vulnerable to differences in transmission quality among multiple paths, which cause a “hot-potato” out-of-order arrival of packets at the receiver side; in the absence of an approach to fix this issue, serious application level performance degradation will occur. In this paper, we propose MPTCP-LM, a Lightweight path Management Mechanism to aid Multihomed MPTCP based mobile computing devices towards efficient multipath data transmission. The goals of MPTCP-LM are: (i) to offer MPTCP a promising path management mechanism, (ii) to reduce out-of-order data reception and protect against receiver buffer blocking, and (iii) to increase the throughput of mobile computing devices in a multihomed wireless environment. Simulations show that MPTCP-LM outperforms the current MPTCP schemes in terms of performance and quality of service.

1. Introduction

In the past several years, wireless communications systems and networks have experienced a dramatic development [1]. These advances in wireless communications and networking technologies provide ubiquitous Internet connectivity to mobile users [2]. Moreover, promoted by the increasing popularity of various wireless networks, mobile devices (e.g., smart phones, laptop computers, and netbooks) are already equipped with multiple Wireless Network Interface Cards (WNICs) and support multiple IP addresses [3,4]. With this multiple IP feature (also known as multihoming), the devices can connect to the Internet via multiple IP addresses to provide enhanced and reliable Internet connectivity; they can also simultaneously use several IP addresses to spread data across several end-to-end IP paths. For example, the Apple iOS based products (e.g., iPhone and iPad) [5] and Samsung Galaxy mobile phones (e.g., S9 and S9 Plus) [6] can use both the Wi-Fi and cellular network links to download large files. Such multihomed devices can speed up data transmission and increase their throughput by making use of multiple wireless network links simultaneously, enabled by the emerging Multipath TCP (MPTCP) technology [7].

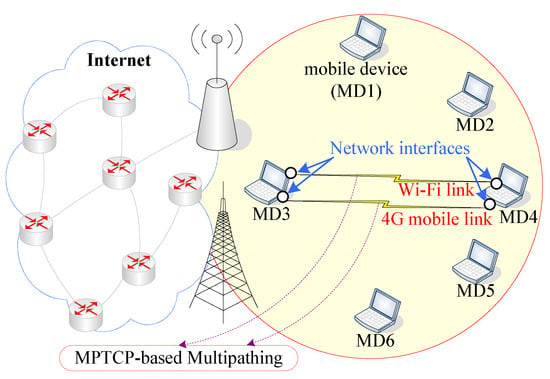

The MPTCP is an extension to traditional single path TCP that enables a multihomed device to allocate data traffic across multiple independent end-to-end paths concurrently through multiple network interfaces [8]. Figure 1 presents a typical MPTCP based multipath communication scenario that involves two multihomed Mobile Devices (MD3 and MD4), which communicate with each other by using both the Wi-Fi and 4G mobile links simultaneously. Such a multipathing feature would be beneficial to the mobile devices to aggregate bandwidth, increase throughput, and enhance communication robustness [9]. Furthermore, MPTCP inherits the standard socket Application Programming Interfaces (APIs) from TCP, which means an MPTCP based device can initiate and establish an MPTCP connection with other devices without making any modifications to today’s Internet applications [10]. With its multipath transmission and backwards compatibility features, the MPTCP is being considered as a preferred transmission technology for data delivery over the current and future Internet [11].

Figure 1.

A typical Multipath TCP (MPTCP) based multipath communication among multihomed mobile devices.

1.1. Motivation

Although applying MPTCP to multihomed mobile computing devices for multipath data transmission provides numerous potential benefits for data delivery [12,13,14,15,16,17,18,19], the MPTCP path management mechanism specified in RFC6824 [7] is very simple, and this, in turn, leaves MPTCP vulnerable to the quality differences among the multiple paths [20]. With the regular path management mechanism, the MPTCP data traffic can be split and assigned to all the available end-to-end paths for concurrent multipath transmission. Such a full-MPTCP mode may be a useful way of providing a “best-effort” bandwidth aggregation service, but it also causes data to be received out of order because the asymmetric paths in an MPTCP connection commonly have different transmission characteristics. The out-of-order data arrival phenomenon can be especially problematic when applying MPTCP to a multihomed mobile computing device because today’s mobile computing devices commonly have a small receiver buffer, due to their very limited memory space. When a large number of out-of-order packets are held in the overloaded receiver buffer for reordering, buffer blocking will occur, and thereby, the MPTCP’s performance will suffer a serious degradation (as we will discuss in more detail in the next section).

1.2. Related Works

In recent years, the growing interest in the MPTCP technology has resulted in thousands of peer reviewed publications. In this paper, we categorize the most relevant literature into two groups: MPTCP out-of-order packet arrival cases and MPTCP path management cases.

MPTCP out-of-order packet arrival cases: Xu et al. [9] presented a novel pipeline network coding based MPTCP solution to solve the out-of-order packet arrival problem and thus reduce the packet reordering overhead in multipath transmission. Yang et al. [12] introduced a new scheduling algorithm for MPTCP that mitigates jitter by transmitting packets out-of-order on different MPTCP sub-flows such that they can arrive in-order at the receiver. Alheid et al. [13] compared some different MPTCP congestion control mechanisms used in conjunction with the current TCP packet reordering solutions. Their results demonstrated that the performance of MPTCP could be influenced by the delay difference of multipath paths. Their study also identified combinations of congestion control and packet reordering solutions that give better aggregate goodput performance for different path delay differences. Ou et al. [16] designed a novel out-of-order transmission enabled congestion control and packet scheduling strategy for MPTCP. Xue et al. [17] presented a novel forward prediction based dynamic packet scheduling algorithm for MPTCP, by utilizing maximum likelihood estimation in TCP modeling and taking the packet loss rate and time offset into consideration. Le et al. [18] developed a new forward-delay based packet scheduling algorithm to solve the out-of-order received packet problem in the MPTCP based multipath transmission.

MPTCP path management cases: Wang et al. [20] investigated the performance of MPTCP in real-world Internet scenarios based on the NorNet Testbed. Their study revealed that path management and congestion control settings can present a significant impact on MPTCP’s performance. Kim et al. [21] designed a novel receive buffer based path management mechanism, which managed multiple paths by using both the available receive buffer size and dissimilar characteristics of multiple paths. This path management mechanism was devoted to possibly reducing the out-of-order packet arrival problem and alleviating receive buffer blocking in MPTCP based multipath transmission. Oh et al. [22] proposed a feedback based path failure detection and buffer blocking protection approach for MPTCP. This approach aimed to (i) prevent the usage of underperforming sub-flows in the MPTCP, by using a path failure detection method, and (ii) prevent goodput degradation due to delay differences between paths, by detecting buffer blocking and closing underperforming sub-flows. Our previous work, MPTCP-La/E [4], presented an LDDoS (Low-rate Distributed Denial-of-Service) attack aware energy saving oriented MPTCP extension aiming at optimizing the energy usage while still maintaining user’s perceived quality of cloud multipathing services, by selecting and managing a subset of suitable paths for multipathing. Our previous work (PU)M [14] introduced a “Potentially Underperforming” (PU) concept to MPTCP and further designed a novel PU aware multipath management mechanism for MPTCP. The main goals of (PU)M were: (i) to prevent the use of potentially failed paths in multipath transmission and (ii) to manage multiple paths in MPTCP adaptively.

1.3. Contributions

In this paper, we propose MPTCP-LM, a Lightweight, but highly promising Multipath Management Mechanism for MPTCP. The goals of MPTCP-LM are (i) to possibly mitigate the out-of-sequence packet reception and reduce the delay of packet reordering in multipath transmission, (ii) to aggregate the bandwidth of multiple network paths adaptively and enhance the performance of MPTCP, and (iii) to preserve backward compatibility with the current Internet applications, as MPTCP does. We present simulation results that show that MPTCP-LM can outperform the baseline MPTCP scheme in terms of data sending and receiving times, out-of-order data arrival, and throughput performance. The proposed MPTCP-LM scheme makes fundamental contributions against the state-of-the-art in the literature, in the following aspects:

- (i) It reveals the fact that although each transmission path in MPTCP independently performs data delivery, a poorly performing path (with large delay) can lower the performance of MPTCP, by causing out-of-order data arrival and even receiver buffer blocking in multipath transmission.

- (ii) It introduces a lightweight multipath management mechanism for MPTCP, which allows a path to be removed and reconnected in multipath transmission adaptively, in order to reduce out-of-order data reception and protect against receiver buffer blocking in MPTCP.

In particular, it is worth pointing out that both our previous work (PU)M [14] and the MPTCP-LM solution proposed in this paper were devoted to helping MPTCP adaptively manage multiple paths, by removing and reconnecting a transmission path accordingly. However, the (PU)M solution was mainly devoted to detecting path failure problems in MPTCP, while the MPTCP-LM solution is mainly devoted to identifying which path would cause performance degradation in multipath transmission. In addition, (PU)M prevented the usage of a PU path according to the path’s delay variations, while in MPTCP-LM, a path can be removed/reconnected according to not only the path’s delay performance, but also the path’s congestion window size. This helps MPTCP-LM address a limitation of the (PU)M solution, namely performing worse than the baseline MPTCP when a path has a high level of transmission capacity but its delay performance worsens accidentally or suddenly.

2. Problem Statement

The asymmetric paths in a practical heterogeneous wireless environment with different transmission characteristics are more common and very sensitive to the variations of the real-time wireless condition. Because of this, the out-of-order data arrival will be an inevitable phenomenon in multipath transmission. What is worse, large numbers of out-of-order packets buffered in the constrained receiver buffer will result in the “hot-potato” receiver buffer blocking problem and thereby cause serious throughput performance degradations [22].

Several real-world measurement based studies on MPTCP reported that a huge path quality difference is a very important cause of MPTCP’s low performance. Li et al. [23] performed a comprehensive large scale measurement on MPTCP in high speed mobility scenarios. Their study demonstrated that when Round-Trip Times (RTTs) in separate MPTCP paths differed, a significant out-of-order problem would arise, and the efficiency of MPTCP would be far from satisfactory. Chen et al. [24] presented a measurement based study of MPTCP performance with real wireless settings and background traffic. Their study revealed that the MPTCP performance was affected by path characteristic differences in terms of RTTs and loss rates. In this paper, we are especially interested in understanding how the performance of MPTCP is affected when path characteristics are diverse because of a path failure. Thus, we present a group of simulations to answer this question. We also seek to answer why an effective multipath management mechanism is necessary for MPTCP.

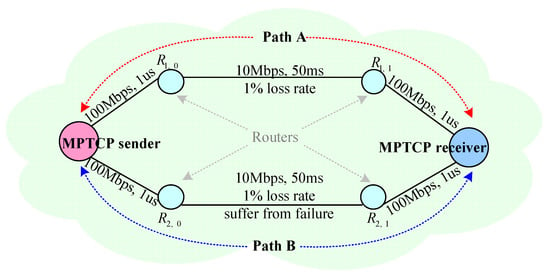

To achieve the above purposes, we implemented a sample dual dumbbell network topology in Network Simulator 2 Version 2.35 (NS-2) [25], in which the MPTCP module [26] was included. The network topology is shown in Figure 2. An MPTCP sender and an MPTCP receiver were connected to each other through two independent end-to-end paths (A and B). The edge links between the sender (or the receiver) and the routers had a propagation delay of one microsecond and a bandwidth of 100 Mbps. The bottleneck link between the two routers on each path was set with a 10 Mbps bandwidth and a 50 millisecond propagation delay. Path B failed after 30 s of simulation time (we simulated the Path B failure by bringing down the bottleneck link between its two routers). The total simulation time was 120 s with infinite FTP flows. Table 1 shows the parameters used in the simulations. Moreover, we performed two test cases, named Case 1 and Case 2 and described below, to investigate the effect of path transmission stability differences on the performance of MPTCP.

Figure 2.

A sample dual dumbbell simulation topology.

Table 1.

The parameters used in the simulations.

Case 1. The sender turns on its first interface and turns off its second interface at the beginning of simulation, which means always only Path A is used in MPTCP for data transmission.

Case 2. The sender turns on both of its interfaces, which means both Paths A and B are used in MPTCP. However, Path B suffered from network failure after 30 s of simulation time.

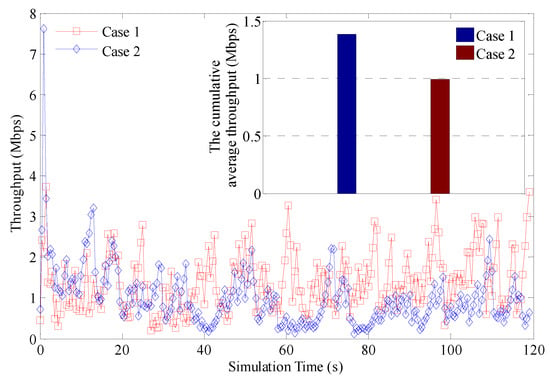

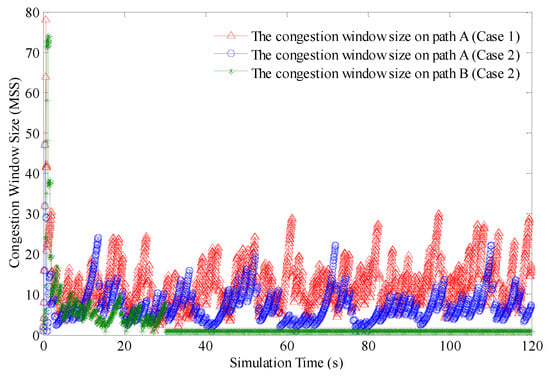

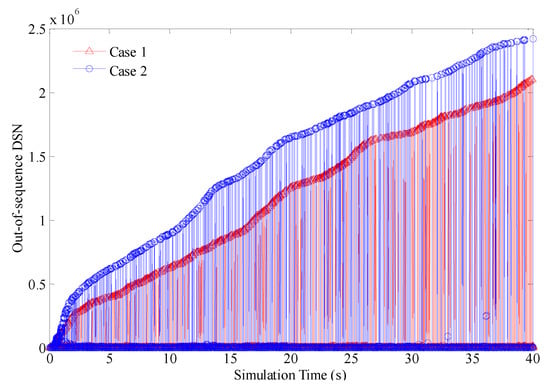

Figure 3, Figure 4 and Figure 5 illustrate the performance comparisons in terms of throughput, congestion window (cwnd), and out-of-order Data Sequence Number (DSN), respectively, when Case 1 and Case 2 were executed. As Figure 3 shows, compared with the MPTCP’s throughput in Case 1, the MPTCP’s throughput in Case 2 gradually decreased from its earlier level (before 30 s of simulation time) to a lower level (after 30 s of simulation time) due to the failure on Path B. When the failure occurred on Path B at 30 s, it caused back-to-back timeouts on Path B (see Figure 4; the cwnd size on Path B was always set to one after 30 s). In this failure case, the MPTCP sender tried to transmit data after each of these timeouts on Path B (namely, unnecessary retransmission via the failed path [27]). The resulting receiver buffer blocking in MPTCP, with a great number of out-of-order data (see Figure 5) held in the constrained receiver buffer, prevented the efficient use of Path A for data transmission and resulted in serious throughput degradation.

Figure 3.

The throughput comparison.

Figure 4.

The congestion window comparison.

Figure 5.

The out-of-order data comparison.

From the above experimental results, we can observe that MPTCP could achieve even lower application level performance than a single path when it simultaneously exploited multiple paths that had huge quality differences. When a path experiences network failure or multiple paths have huge quality differences, receiver buffer blocking may become an inevitable phenomenon in MPTCP. Unfortunately, the standard MPTCP path management mechanism is very simple; it uses all the available paths in the MPTCP connection for concurrent multipath data transmission, without considering that wireless network latency and link failure tend to occur frequently due to mobility, signal fading, and wireless interference. To remedy this situation, this paper proposes a lightweight multipath management mechanism to help MPTCP know not only when, but also how to prevent the use of an underperforming or failed path in multipath transmission. This approach could alleviate receiver buffer blocking and optimize the throughput performance of MPTCP.

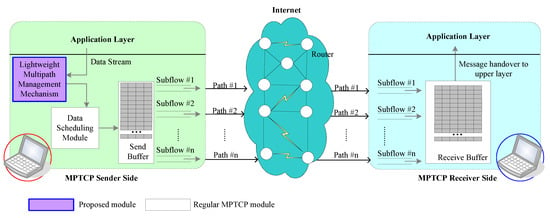

3. System Detail Design

Figure 6 illustrates the architecture of our MPTCP-LM system, which involves an MPTCP sender, an MPTCP receiver, and n multiple paths. As an extension of the standard MPTCP, the MPTCP-LM receiver was responsible for the path collection and reassembling if any MPTCP segment data arrived in the receiver buffer. At the MPTCP-LM sender side, there was an additional MPTCP-LM module compared with the standard MPTCP, which was a Lightweight Multipath Management Mechanism (LM) that aimed to (i) prevent the use of an underperforming or failure path in multipath transmission, (ii) collect appropriate paths into MPTCP for smart bandwidth aggregation and parallel transmission, and (iii) reduce the receiver buffer blocking and optimize the quality of service when deploying MPTCP on the multihomed mobile computing devices. Following is the detail of the design of the proposed MPTCP-LM solution.

Figure 6.

The architecture of MPTCP-Lightweight Multipath Management Mechanism (LM).

Put simply, in MPTCP-LM, the receiver buffer maintaining the out-of-order data for reordering is viewed as a router queuing packets for forwarding. Further, a large number of out-of-order data held in the limited receiver buffer and resulting in receiver buffer blocking can be viewed as a large number of packets held in an overloaded router and resulting in congestion. In view of this, MPTCP-LM treats buffer blocking occurring at the receiver side as “congestion” occurring in the receiver buffer, like network congestion caused by an overloaded router (the router buffer gets full). Therefore, we borrowed ideas from the classic TCP Congestion Avoidance (CA) mechanism [28] to design the LM mode for receiver buffer blocking avoidance. In order to make the paper self-contained, we introduce the general ideas of the CA mechanism as follows.

The CA mechanism is maintained by a TCP sender to adjust the cwnd size and alleviate network congestion. The main operations of TCP’s CA mechanism running in the congestion avoidance state can be summarized as follows [28]: (i) while packets are acknowledged, the cwnd is additively increased by one Maximum Segment Size (MSS) each RTT; (ii) on a packet loss (three duplicate ACKs are received), the cwnd is set to half its current size; (iii) on timeout, the cwnd size is reset to one MSS. More simply, TCP’s CA operations can be expressed by using the following mathematical model:

$$
cwnd \leftarrow
\begin{cases}
cwnd + 1\ \text{MSS} & \text{per RTT, while packets are acknowledged} \\
cwnd / 2 & \text{on packet loss (three duplicate ACKs)} \\
1\ \text{MSS} & \text{on timeout}
\end{cases}
\qquad (1)
$$
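The three CA rules summarized above can be sketched as a small state update. This is only an illustrative model, not the NS-2 implementation: the `Event` names and the `next_cwnd` function are hypothetical, the window is counted in MSS units, and the floor of one MSS after halving is an assumption made to keep the window positive.

```python
from enum import Enum


class Event(Enum):
    RTT_ACKED = "rtt_acked"          # one round trip of packets was acknowledged
    TRIPLE_DUP_ACK = "triple_dup"    # loss inferred from three duplicate ACKs
    TIMEOUT = "timeout"              # retransmission timer expired


def next_cwnd(cwnd: float, event: Event) -> float:
    """Return the new congestion window, measured in MSS units."""
    if event is Event.RTT_ACKED:
        return cwnd + 1.0             # additive increase: +1 MSS per RTT
    if event is Event.TRIPLE_DUP_ACK:
        return max(cwnd / 2.0, 1.0)   # halve the window (assumed floor: 1 MSS)
    if event is Event.TIMEOUT:
        return 1.0                    # restart from one MSS
    raise ValueError(f"unknown event: {event!r}")


# Walk a window of 10 MSS through two good RTTs, a loss, and a timeout.
cwnd = 10.0
trace = []
for ev in (Event.RTT_ACKED, Event.RTT_ACKED, Event.TRIPLE_DUP_ACK, Event.TIMEOUT):
    cwnd = next_cwnd(cwnd, ev)
    trace.append(cwnd)
print(trace)
```

The loss and timeout branches are the two that MPTCP-LM reuses by analogy when it adjusts the number of paths.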

Inspired by the above TCP CA mechanism, MPTCP-LM was designed to adjust the number of transmission paths intelligently, like the cwnd adjustment in TCP’s CA mechanism, to protect against receiver buffer blocking where possible. To this end, in the connection establishment phase, MPTCP-LM set up a buffer blocking counter that was triggered by receiver buffer blocking events. The counter had a default value of zero and was incremented by one whenever receiver buffer blocking occurred in the receiver buffer. After three consecutive receiver buffer blocking events, the counter was reset to its default value. According to the counter value, MPTCP-LM could adaptively abandon or exploit multiple paths, similar to the cwnd adjustment in TCP’s CA mechanism, following the principles below:

- (i) For a case in which the counter has not yet reached three, namely there is a single buffer blocking event occurring in the receiver buffer, MPTCP-LM views this case as “a packet loss event in the TCP congestion avoidance phase”. In this case, the number of paths used within the MPTCP connection will be halved, and the half of the paths with the highest transmission quality will be used in multipath transmission.

- (ii) For a case in which the counter reaches three, namely three consecutive buffer blocking events occurred in the receiver buffer, MPTCP-LM views this case as “a timeout event in the TCP congestion avoidance phase”. In this case, only the one path that has the highest transmission quality is used in MPTCP (the number of paths in use is set to one). In addition, the counter is reset to zero.
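The two principles above can be illustrated with a short sketch of the counter logic. The function name `on_buffer_blocking` is hypothetical, and rounding an odd path count up when halving (with a minimum of one path) is an assumption, since the rounding rule is not stated here.

```python
import math
from typing import Tuple


def on_buffer_blocking(active_paths: int, counter: int) -> Tuple[int, int]:
    """Apply one receiver buffer blocking event.

    Returns (new number of active paths, new counter value).
    """
    counter += 1
    if counter >= 3:
        # Third consecutive event: handled like a TCP timeout.
        # Keep only the best path and reset the counter.
        return 1, 0
    # First or second event: handled like a TCP packet loss.
    # Keep the better half of the paths (assumed: round up, never below one).
    return max(1, math.ceil(active_paths / 2)), counter


# Eight paths hit by three consecutive blocking events.
state = (8, 0)
history = []
for _ in range(3):
    state = on_buffer_blocking(*state)
    history.append(state)
print(history)
```

Which paths survive the halving is decided separately, by ranking the paths on their transmission quality.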

As for path transmission quality, MPTCP has two recommended schedulers for distinguishing multiple paths and assigning data to them accordingly: the lowest-RTT-first scheduler and the largest-cwnd-first scheduler, which differentiate the transmission quality of multiple paths by using each path’s own RTT and cwnd [29], respectively. In MPTCP-LM, the transmission quality of multiple paths is differentiated by a function of both transport layer variables (namely RTT and cwnd), enabled by the Simple Additive Weighting (SAW) method [30], which is probably the most popular choice for multiple attribute decision making systems due to its simplicity. By using SAW, the transmission quality of each path within the MPTCP-LM connection is determined by the weighted sum of its normalized cwnd and RTT values, as expressed by Equation (2).

In MPTCP-LM, the path with the largest transmission quality value has the best transmission quality. Since SAW mostly reaches an optimal performance when the weighting factors of the system variables are fair [31], both weighting factors used in Equation (2) were set to a fairness value by default. For real-world networking, the two weighting factors could be changed to other suitable values. Moreover, the normalized cwnd and RTT values of a path used in Equation (2) are calculated by Equations (3) and (4), respectively, from the upper and lower bounds of the cwnd size measured on that path and the upper and lower bounds of the RTT values measured on that path.

Let us suppose there are n possible paths within the MPTCP connection, and consider three collections: the collection of all the available paths in the MPTCP connection, the collection of paths activated for multipathing (the active set), and the collection of paths removed from multipathing (the removed set), in which every available path belongs to either the active set or the removed set. The main MPTCP-LM operations, each of which is explained in detail, are as follows:

- (i) Estimating each path’s transmission quality periodically (per RTT) by jointly considering its own cwnd and RTT values;

- (ii) Sorting the paths in descending order according to their own transmission quality values;

- (iii) If there is no receiver buffer blocking occurring at the receiver side, assigning traffic over all the available paths, as the regular MPTCP does;

- (iv) If there is a receiver buffer blocking event detected, but it is not the third consecutive one, halving the number of paths in multipath transmission, and then incrementing the value of the buffer blocking counter by one (Equations (5) and (6)). In this case, the top half of the sorted paths will be used in multipath transmission (all n paths are active at the beginning of MPTCP based multipathing).

- (v) If there is a receiver buffer blocking event detected and it is the third consecutive one, reducing the number of paths to one, then resetting the value of the buffer blocking counter back to zero (Equations (7) and (8)). In this case, only the first path in the sorted list can be used for data transmission.

By using the above operations, MPTCP-LM can intelligently aggregate the bandwidth of multiple paths for data transmission while mitigating the receiver buffer blocking problem where possible. Moreover, in order to maximize the network resource utilization, a path in the removed set can be put back into the active set for multipath transmission if its current transmission quality is not lower than the average transmission quality of the high performing paths in the active set (in order to make sure the path has little transmission quality difference compared to the other high performing paths).

Here, the average is taken over the number of paths currently in the active set. The pseudocode of the multipath management mechanism used in MPTCP-LM is presented in Algorithm 1. For convenience, the notations used in Algorithm 1 are listed in Table 2.
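The re-admission rule described above can be sketched as follows. The function `readmit_paths` and the dictionary layout (path name mapped to its current transmission quality) are hypothetical. The sketch computes the average once, before any path is moved, which is an assumption about evaluation order.

```python
from typing import Dict


def readmit_paths(active: Dict[str, float], removed: Dict[str, float]) -> None:
    """Move removed paths back into the active set when their quality is not
    lower than the average quality of the currently active paths.

    Both dictionaries map a path name to its transmission quality and are
    modified in place.
    """
    if not active:
        return
    # Threshold fixed before moving anything, so re-admitted paths do not shift it.
    threshold = sum(active.values()) / len(active)
    for name in list(removed):
        if removed[name] >= threshold:
            active[name] = removed.pop(name)


active = {"A": 0.8, "B": 0.6}
removed = {"C": 0.75, "D": 0.3}
readmit_paths(active, removed)
print(sorted(active), sorted(removed))
```

Here path C (quality 0.75) meets the average of the active paths and is reconnected, while path D (quality 0.3) stays removed.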

Table 2.

Notations used in the MPTCP-LM based multipath management algorithm.

For Algorithm 1, the time complexity is primarily influenced by the first “for” statement (from Line 2 to Line 4), the second “for” statement (from Line 16 to Line 21), and the sort algorithm in Line 5. The time complexity of each “for” statement is O(n) (assuming the second “for” statement is executed). As for the sort algorithm, the time complexity is O(n log n) on average because the QuickSort algorithm is used for sorting the paths. Since O(n) grows more slowly than O(n log n), the time complexity of the whole algorithm is O(n log n). More simply, the total number of paths collected by Algorithm 1 is all n paths when no receiver buffer blocking is detected, half of the active paths after a blocking event that is not the third consecutive one, and one path after the third consecutive blocking event.

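| Algorithm 1. MPTCP-LM based multipath management algorithm. | |
| --- | --- |
| Initialization: | |
| the active set contains all n available paths; | |
| the removed set is empty; | |
| the number of active paths equals n; | |
| the buffer blocking counter is zero. | |
| 1: | use all the available paths for multipathing; |
| 2: | for each available path do |
| 3: | calculate the transmission quality of the path by using Equation (2); |
| 4: | end for |
| 5: | sort the paths in descending order according to their own transmission quality values; |
| 6: | if there is a receiver buffer blocking detected then |
| 7: | increment the buffer blocking counter by one; |
| 8: | if the counter is below three, then |
| 9: | halve the number of active paths, keeping the top half of the sorted paths; |
| 10: | end if |
| 11: | if the counter reaches three, then |
| 12: | set the number of active paths to one and reset the counter to zero; |
| 13: | end if |
| 14: | end if |
| 15: | if the removed set is not empty, then |
| 16: | for each path in the removed set do |
| 17: | calculate the transmission quality of the path by using Equation (2); |
| 18: | if its quality is not lower than the average quality of the active paths then |
| 19: | move the path into the active set; |
| 20: | end if |
| 21: | end for |
| 22: | end if |
| 23: | allocate the MPTCP data across the path(s) in the active set. |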

4. Performance Evaluation

4.1. Simulation Topology

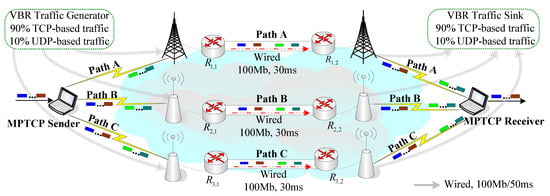

The performance evaluation was carried out on Network Simulator 2 Version 2.35 (NS-2) [25] with the MPTCP module [26]. The experiments considered a typical MPTCP based device-to-device communication scenario presented in Figure 7. As the figure shows, an MPTCP sender and an MPTCP receiver are connected to each other via three independent transmission paths (denoted A, B, and C). Path A’s bottleneck has a 10 Mbps bandwidth and a 10–20 ms propagation delay. Path B’s bottleneck has an 11 Mbps bandwidth and a 10–20 ms propagation delay. Path C’s bottleneck has a 2 Mbps bandwidth and a 10–60 ms propagation delay. In the simulation, Path C experiences a short term failure from 10 to 30 s and a complete failure after 50 s of simulation time (we simulated the Path C failure by bringing down its bottleneck link). The parameters shown in Table 3 were used for configuring the multiple paths. The other parameters of the MPTCP used the default values specified in NS-2.

Figure 7.

Simulation topology.

Table 3.

Path parameter configuration used in the simulation.

In the experiment, all the wireless access links were attached to a Uniform Path-Loss (UPL) model and a Two-state Markov Loss (TML) model in order to simulate the highly frequent and bursty frame loss in the data link layer. The UPL and TML models were used to represent the distributed loss caused by wireless noise interference and the infrequent continuous loss caused by wireless signal fading, respectively [32]. In addition, in order to simulate the complex behaviors of Internet traffic [33], we attached each of the three paths to a VBR (Variable Bit-Rate) traffic generator to send the VBR cross-traffic to its corresponding sink. The packet size of the VBR cross-traffic utilized in the simulation was chosen as follows [34]: 49% were 44 bytes in length, 1.2% 576 bytes, 2.1% 628 bytes, 1.7% 1300 bytes, and the other 46% 1500 bytes in length, in which 90% of the VBR cross-traffic was transmitted by TCP technology and the remaining 10% by UDP technology. The VBR cross-traffic on each of the three paths consumed 0–50% of the access link bandwidth. The simulation time was set to 100 s with infinite FTP traffic.
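The cross-traffic packet size mix described above can be reproduced with a weighted random draw. The sketch below only illustrates the stated distribution and is not the NS-2 traffic generator used in the experiments: the function `sample_packets` and its seed parameter are hypothetical, and applying the 90%/10% TCP/UDP split independently per packet is an assumption.

```python
import random

# Packet sizes (bytes) and their shares (percent) as stated in the text.
SIZES = [44, 576, 628, 1300, 1500]
WEIGHTS = [49.0, 1.2, 2.1, 1.7, 46.0]
TCP_SHARE = 0.9   # remaining 10% is carried by UDP

# Mean packet size implied by the distribution.
EXPECTED_MEAN = sum(s * w for s, w in zip(SIZES, WEIGHTS)) / sum(WEIGHTS)


def sample_packets(n: int, seed: int = 0):
    """Draw n (size, transport) pairs following the stated mix."""
    rng = random.Random(seed)
    sizes = rng.choices(SIZES, weights=WEIGHTS, k=n)
    return [(size, "TCP" if rng.random() < TCP_SHARE else "UDP") for size in sizes]


packets = sample_packets(10_000)
empirical_mean = sum(size for size, _ in packets) / len(packets)
print(f"expected mean: {EXPECTED_MEAN:.2f} bytes, sampled mean: {empirical_mean:.2f} bytes")
```

The five shares sum to 100%, and the distribution implies a mean packet size of roughly 754 bytes, dominated by the two extremes of 44 and 1500 bytes.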

4.2. Simulation Results

We here compare the performance of our MPTCP-LM with the baseline MPTCP [7] and our previous work (PU)M [14]. For convenience, we portray the results of MPTCP-LM as “MPTCP-LM” in the result figures, and the results when applying the baseline MPTCP and (PU)M schemes are portrayed as “the baseline MPTCP” and “MPTCP + (PU)M”, respectively.

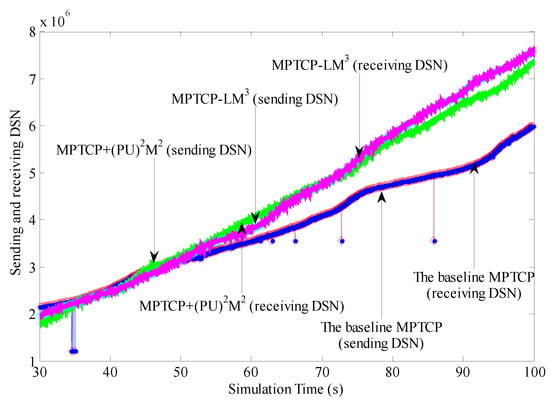

(1) Sending and receiving data DSN: Figure 8 shows the comparison results of data sending and receiving times when the baseline MPTCP, MPTCP + (PU)M, and MPTCP-LM were used, respectively. In order to better illustrate the comparison, the results between t = 30 s and t = 100 s are illustrated (including the no path failure phase (between 30 s and 50 s) and the path failure phase (after 50 s)), which are representative of all the simulation results. From the figure, we can observe that between 30 and 50 s of simulation time, MPTCP-LM and MPTCP + (PU)M performed at a lower level of data sending and receiving times than the baseline MPTCP. This was because the baseline MPTCP fully utilized the three paths for data transmission (Path C was available and reused in multipath transmission after 30 s). In both MPTCP-LM and MPTCP + (PU)M, Path C may be excluded from multipathing due to the quality gap (compared with Paths A and B).

Figure 8.

The comparison of data sending and receiving times.

However, MPTCP-LM and MPTCP + (PU)M could achieve a higher level of data sending and receiving times than the baseline MPTCP after 50 s. This was because in both MPTCP-LM and MPTCP + (PU)M, the sender only selected the stable paths for multipath transmission, while in the baseline MPTCP, the path failure declaring was inherited from TCP’s operations (in the TCP, the time required to declare a broken path needs at least s ( and s)). That is, between 50 and 100 s, the broken Path C could also be used in multipath transmission, which caused the interruptions in Paths A and B and, thus, constrained the sender from transmitting data traffic.

Besides, we can also see that between 50 and 70 s of simulation time, MPTCP-LM achieved a lower level of data sending and receiving times than the MPTCP + (PU)M scheme. This was because MPTCP-LM detected path failures based on the counts of receiver buffer blocking, while MPTCP + (PU)M was based on the path’s delay variations, which means MPTCP-LM could find and prevent the usage of a broken path or a poorly performing path more slowly than MPTCP + (PU)M. However, in MPTCP + (PU)M, a path could be removed/reconnected frequently if its delay performance worsened accidentally or suddenly. This limitation made MPTCP + (PU)M unable to provide stable transmission efficiency, and it performed worse than MPTCP-LM in the rest of the simulation time (between 70 and 100 s).

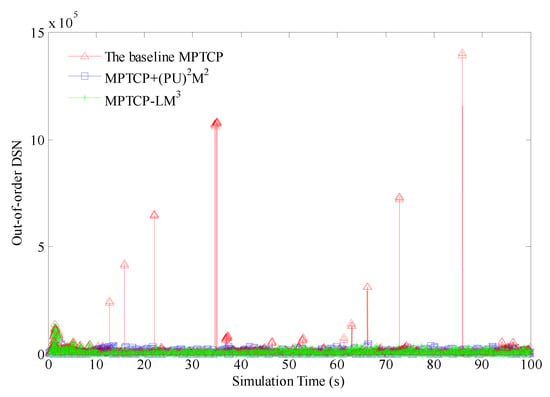

(2) Out-of-order DSN: The Out-Of-Order DSN (OOO DSN) is a good metric for studying and analyzing the performance of multipath protocols. The OOO DSN is obtained as the offset between the DSNs of two consecutively received MPTCP data chunks (the difference between the DSN of the current MPTCP chunk and that of the latest received MPTCP chunk). Figure 9 shows a comparison of out-of-order chunks among the baseline MPTCP, MPTCP + (PU)M, and MPTCP-LM. As the figure shows, the baseline MPTCP scheme generated more out-of-order chunks and required more unnecessary packet reordering than both MPTCP + (PU)M and MPTCP-LM. This was because the baseline MPTCP’s scheduler assigned data traffic over all the paths, without considering that a poorly performing path or a failure prone path would cause extremely large numbers of out-of-order chunks for reordering.
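The OOO DSN metric defined above can be computed directly from a receiver side trace. The following is a minimal sketch under the assumption that DSNs are counted per chunk, so that in-order delivery yields an offset of exactly one; the function names are hypothetical.

```python
from typing import List


def ooo_offsets(dsns: List[int]) -> List[int]:
    """Offset between each received chunk's DSN and the previously received one."""
    return [cur - prev for prev, cur in zip(dsns, dsns[1:])]


def count_out_of_order(dsns: List[int]) -> int:
    """Number of arrivals whose offset differs from the in-order value of one
    (assumption: DSNs advance by one per chunk)."""
    return sum(1 for off in ooo_offsets(dsns) if off != 1)


# Chunk 7 arrives early over a fast path, chunks 4 and 5 follow over a slow one.
received = [1, 2, 3, 7, 4, 5, 8]
offsets = ooo_offsets(received)
print(offsets, count_out_of_order(received))
```

Large positive or negative offsets correspond to the spikes in an OOO DSN plot; the larger their magnitude, the more data the receiver must hold back for reordering.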

Figure 9.

The comparison of out-of-order Data Sequence Number (DSN).

In contrast, both MPTCP + (PU)M and MPTCP-LM could detect a broken/poorly performing path and select a group of stable paths for data transmission. In this way, they could possibly allocate data traffic over the stable and high performing paths and thus reduce the out-of-order data reception. However, it was noted that MPTCP + (PU)M often generated more out-of-order data chunks than MPTCP-LM. This was because in MPTCP + (PU)M, the paths selected for multipath transmission would be frequently changed and sensitive to paths’ delay variations, while in MPTCP-LM, the path group, which was jointly determined by the paths’ delay and cwnd characteristics, was relatively stable and changed little. When comparing the three schemes, we can see that the peak out-of-order data reception at the receiver was up to when using the baseline MPTCP, while it was approximately when using both MPTCP + (PU)M and MPTCP-LM.

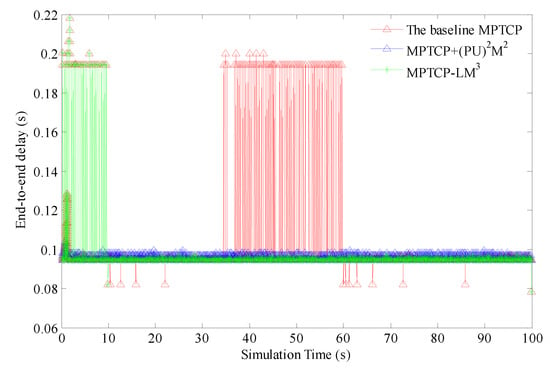

(3) End-to-end delay: Figure 10 shows a comparison of end-to-end delay performance when using the baseline MPTCP, MPTCP + (PU)M, and MPTCP-LM schemes, respectively. As we analyzed earlier, both MPTCP-LM and MPTCP + (PU)M could prevent an underperforming path (or a broken path) from multipathing, and they could thereby prevent unnecessary retransmission in the underperforming or broken paths. This made MPTCP-LM and MPTCP + (PU)M reduce the unnecessary retransmission delay. Moreover, both MPTCP-LM and MPTCP + (PU)M could possibly ensure that the MPTCP segments arrive at the receiver in the right order. This helped the two schemes reduce the packet reordering delay. As a result, MPTCP-LM and MPTCP + (PU)M obtained a lower level of delay than the baseline MPTCP.

Figure 10. The comparison of end-to-end delay.

However, MPTCP + (PU)M performed worse than MPTCP-LM because its larger amount of out-of-order data reception required more reordering than in MPTCP-LM. Moreover, in MPTCP + (PU)M, the paths used in multipath transmission changed often, so the data traffic was frequently offloaded from one path to another, which incurred additional processing time overhead. When comparing the three methods in terms of the cumulative average delay (over a total of 100 s of simulation time), the MPTCP-LM scheme’s cumulative average delay was approximately 4.35% lower than that of the baseline MPTCP and 1.03% lower than that of MPTCP + (PU)M.

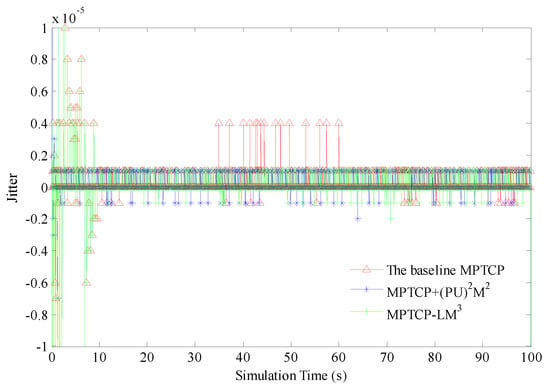

(4) Jitter comparison: Jitter can be defined as the amount of packet delay variation in the end-to-end data traffic transmission time. Jitter is a well suited metric to evaluate and analyze the temporal performance of a multipath transmission protocol. A higher level of jitter is more likely to occur on a poorly performing transport technology and vice versa. Figure 11 shows a comparison of the jitter performance when the three schemes were used. The path management behaviors of both MPTCP-LM and MPTCP + (PU)M (removing or reconnecting a path in multipath transmission) influenced their jitter performance, which was occasionally worse than that of the baseline MPTCP. We argue that this was an acceptable price for achieving high-level performance, since MPTCP-LM and MPTCP + (PU)M could adaptively select appropriate paths for multipathing. In contrast, the baseline MPTCP, as previously mentioned, was bound to suffer out-of-order data reception (due to path quality differences) and unnecessary retransmission (due to path failures), resulting in a lower quality of service than both MPTCP-LM and MPTCP + (PU)M.

Figure 11. The comparison of jitter.

When comparing the MPTCP + (PU)M and MPTCP-LM methods, it was noted that MPTCP + (PU)M generally generated a higher level of jitter than MPTCP-LM. This was because MPTCP + (PU)M frequently deactivated or reactivated a path for data transmission, which incurred additional overhead for data offloading and reordering and thus increased the amount of packet delay variation. In contrast, MPTCP-LM provided a more stable path group for data transmission than MPTCP + (PU)M, so it could reduce the overhead of data offloading and reordering and correspondingly generate a lower level of jitter than MPTCP + (PU)M.
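To make the jitter metric concrete, the following Python sketch computes packet delay variation as the absolute difference between the end-to-end delays of consecutive packets and then averages it. This is one common simplification and an assumption on our part, since the exact formula used in the simulation scripts is not stated here; the function names and delay values are hypothetical.

```python
from typing import List


def delay_variations(delays_ms: List[float]) -> List[float]:
    """Absolute change in end-to-end delay between consecutive packets."""
    return [abs(cur - prev) for prev, cur in zip(delays_ms, delays_ms[1:])]


def mean_jitter(delays_ms: List[float]) -> float:
    """Average packet delay variation (0.0 if fewer than two packets)."""
    variations = delay_variations(delays_ms)
    return sum(variations) / len(variations) if variations else 0.0


if __name__ == "__main__":
    # Hypothetical per-packet delays (ms); the third packet arrives after a path switch.
    stable_path_group = [100.0, 102.0, 101.0, 103.0]
    frequent_switching = [100.0, 120.0, 110.0, 150.0]
    print(mean_jitter(stable_path_group))
    print(mean_jitter(frequent_switching))
```

In this toy trace, the sequence with larger swings between consecutive delays yields the larger mean jitter, which mirrors the argument that frequent path deactivation and reactivation increases packet delay variation.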

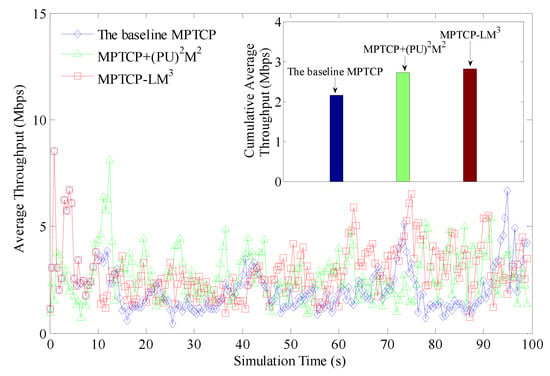

(5) Throughput performance: Figure 12 shows a comparison of throughput performance when using the three schemes. MPTCP + (PU)M and MPTCP-LM prevented the usage of underperforming or broken paths in multipath transmission, which not only reduced the probability of receiver buffer blocking, but also reduced transmission interruptions on the other high performing paths. Therefore, both MPTCP + (PU)M and MPTCP-LM performed better than the baseline MPTCP. When comparing MPTCP + (PU)M and MPTCP-LM, it was noted that MPTCP-LM achieved a higher association average throughput than MPTCP + (PU)M. This was because MPTCP + (PU)M detected an underperforming or broken path by monitoring the delay changes of paths. This path estimation method was very sensitive to variations of delay and could degrade throughput by frequently changing the paths used in multipath transmission. In contrast, MPTCP-LM declared an underperforming or broken path by monitoring the receiver buffer blocking, which helped it provide stable transmission performance.

Figure 12. The comparison of throughput.

To illustrate the throughput comparison better, we calculated the cumulative average throughput of each of the three schemes by averaging the average throughput values over the total of 100 s of simulation time. The subfigure in Figure 12 shows a comparison of the cumulative average throughput of the three schemes. As the subfigure shows, MPTCP-LM performed the best among the three schemes compared. However, it is noteworthy that the cumulative average throughput of MPTCP-LM was about 2.8 Mbps, which corresponded to only 12% of the aggregated bandwidth of the three access links (10 Mbps + 11 Mbps + 2 Mbps = 23 Mbps). We argue that this phenomenon had several causes: (i) the VBR competing traffic could consume a certain amount of each path’s access bandwidth; (ii) the two wireless loss models attached to each of the three access links could degrade the MPTCP throughput performance; (iii) the path failures on Path C inevitably caused transmission interruptions on Paths A and B and thus lowered the MPTCP throughput performance; and (iv) the out-of-order data arrival caused by the path quality differences would degrade the performance in most cases. Still, the MPTCP-LM scheme’s cumulative average throughput was 27.97% higher than that of the baseline MPTCP and 3.68% higher than that of MPTCP + (PU)M.
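The utilization figure quoted above can be checked with a few lines of arithmetic. The Python sketch below is only an illustration: the function names are hypothetical, and the per-interval throughput samples are made-up values chosen to average to about 2.8 Mbps, not data from the simulation.

```python
from typing import List


def cumulative_average(samples_mbps: List[float]) -> float:
    """Mean of the per-interval average throughput samples."""
    return sum(samples_mbps) / len(samples_mbps)


def utilization_percent(avg_mbps: float, link_mbps: List[float]) -> float:
    """Average throughput as a percentage of the aggregated link bandwidth."""
    return 100.0 * avg_mbps / sum(link_mbps)


if __name__ == "__main__":
    # Access link bandwidths reported in the text: 10, 11, and 2 Mbps.
    links = [10.0, 11.0, 2.0]
    # Hypothetical per-interval averages (Mbps), for illustration only.
    samples = [2.0, 3.0, 3.4]
    print(cumulative_average(samples))
    # About 12% of the 23 Mbps aggregate, as stated in the text.
    print(round(utilization_percent(2.8, links)))
```

The same helper reproduces the 15% figure mentioned in the Discussion when called with 1.5 Mbps and a single 10 Mbps bottleneck link.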

5. Discussion

In this paper, we proposed a lightweight path management mechanism, inspired by the TCP congestion avoidance mechanism, to aid multihomed MPTCP based mobile computing devices towards efficient multipath data transmission. The proposed solution can be easily merged with the current MPTCP variants to remove and reconnect the connection in MPTCP adaptively. In this section, we highlight the limitations of our solution and give some directions for future work. We encourage researchers who are interested in this field to consider and discuss the following challenges.

- In practice, the number of connection interfaces on the end-devices may not be large, which may constrain the success of our proposal in today’s Internet architectures. We argue that end-devices in the future Internet may be equipped with many network interfaces and can see multiple access links. We hope to attract more researchers to discuss this topic.

- In the Performance Evaluation Section, we discussed the possible reasons why the throughput performance of all the MPTCP variants was far from satisfactory. We noticed that in a sample dual dumbbell simulation topology (see Section 2), the cumulative average throughput of MPTCP was only close to 1.5 Mbps, which corresponded only to 15% of the bottleneck bandwidth of Path A (10 Mbps). For these negative simulation results, we argue that the NS-2 model of MPTCP may not be able to reflect the MPTCP implementation fully. We encourage more researchers to pay attention to and discuss this controversial problem.

6. Conclusions and Future Work

Mobile computing devices attached to more than one wireless network interface can improve their transmission performance by making use of the MPTCP technology. However, the path management mechanism in MPTCP is very simple and vulnerable to the quality differences of multiple paths in heterogeneous wireless environments. As a remedy, this paper presented a lightweight multipath management mechanism for MPTCP (MPTCP-LM) with the following aims: (i) optimizing the MPTCP path management mechanism and preventing an underperforming path from being used for multipathing, (ii) reducing the out-of-order data reception and alleviating the receiver buffer blocking problems in MPTCP, and (iii) improving the throughput performance and quality of service of multipath transmission. The simulation results demonstrated that MPTCP-LM outperformed the baseline MPTCP and MPTCP + (PU)M in terms of data transmission service quality.

By carrying out simulations on top of NS-2, we noted a limitation: the throughput performance of all three MPTCP variants was far from satisfactory, as we discussed in the Performance Evaluation Section. An open-source implementation of Multipath TCP exists and is well maintained in the Linux kernel [35]. In our future work, we will develop a Linux kernel based hardware test-bed, implement the proposed MPTCP-LM solution in the test-bed, and provide real experimental results.

Furthermore, due to the strict layering principles, MPTCP-LM can only use the networking parameters at the transport layer to estimate each path’s transmission quality and thereby make a “best-effort” transmission decision. Considering that variations of the wireless channel, such as channel fading and co-channel interference, are highly influential factors in wireless transmission, our future work will be devoted to the optimization of our MPTCP-LM solution by jointly considering the cross-layer design and the “smart collaborative networking” method [36,37,38,39]. Then, a comprehensive wireless network environment including many promising wireless technologies (e.g., Bluetooth, LoRaWAN, SigFox, etc.) will be considered, and the comparison with the state-of-the-art MPTCP schemes will be investigated in the performance evaluations.

Author Contributions

Proposed the ideas, Y.C., M.C., S.X., L.H., X.T., and Z.Z.; writing, original draft preparation, Y.C., M.C., and Z.Z.; writing, reviews and editing, S.X.; investigation and validations, M.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (NSFC) under Grant No. 61962026, by the National Key R&D Program of China under Grant No. 2017YFC0806404, and by the Natural Science Foundation of Jiangxi Province under Grant Nos. 20192ACBL21031 and 20171BAB212014.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wu, J.; Tan, R.; Wang, M. Energy-Efficient Multipath TCP for Quality-Guaranteed Video over Heterogeneous Wireless Networks. IEEE Trans. Multimed. 2018.

- Song, F.; Ai, Z.; Zhou, Y.; You, I.; Choo, R.; Zhang, H. Smart Collaborative Automation for Receive Buffer Control in Multipath Industrial Networks. IEEE Trans. Ind. Inform. 2019.

- Wu, J.; Cheng, B.; Wang, M. Improving Multipath Video Transmission with Raptor Codes in Heterogeneous Wireless Networks. IEEE Trans. Multimed. 2018, 20, 457–472.

- Cao, Y.; Song, F.; Liu, Q.; Huang, M.; Wang, H.; You, I. A LDDoS-Aware Energy-Efficient Multipathing Scheme for Mobile Cloud Computing Systems. IEEE Access 2017, 5, 21862–21872.

- Available online: https://support.apple.com/lv-lv/HT201373 (accessed on 30 December 2018).

- Available online: https://www.samsung.com/uk/support/mobile-devices/what-is-the-download-booster-and-how-do-i-enable-it-on-my-samsung-galaxy-alpha/ (accessed on 30 December 2018).

- Ford, A.; Raiciu, C.; Handley, M.; Bonaventure, O. TCP Extensions for Multipath Operation with Multiple Addresses. IETF RFC 6824. 2013. Available online: https://tools.ietf.org/html/rfc6824 (accessed on 30 December 2018).

- Wu, J.; Cheng, B.; Wang, M.; Chen, J. Quality-Aware Energy Optimization in Wireless Video Communication with Multipath TCP. IEEE/ACM Trans. Netw. 2017, 25, 2701–2718.

- Xu, C.; Wang, P.; Xiong, C.; Wei, X.; Muntean, G.-M. Pipeline Network Coding-Based Multipath Data Transfer in Heterogeneous Wireless Networks. IEEE Trans. Broadcast. 2017, 63, 376–390.

- Wang, W.; Wang, X.; Wang, D. Energy Efficient Congestion Control for Multipath TCP in Heterogeneous Networks. IEEE Access 2018, 6, 2889–2898.

- Kimura, B.; Lima, D.; Loureiro, A. Alternative Scheduling Decisions for Multipath TCP. IEEE Commun. Lett. 2017, 21, 2412–2415.

- Yang, F.; Wang, Q.; Amer, P. Out-of-Order Transmission for In-Order Arrival Scheduling for Multipath TCP. In Proceedings of the 28th International Conference on Advanced Information Networking and Applications Workshops, Victoria, BC, Canada, 13–16 May 2014; pp. 749–752.

- Alheid, A.; Kaleshi, D.; Doufexi, A. An Analysis of the Impact of Out-of-Order Recovery Algorithms on MPTCP Throughput. In Proceedings of the IEEE 28th International Conference on Advanced Information Networking and Applications, Victoria, BC, Canada, 13–16 May 2014; pp. 156–163.

- Cao, Y.; Song, F.; Luo, G.; Yi, Y.; Wang, W.; You, I.; Hao, W. (PU)2M2: A Potentially Underperforming-aware Path Usage Management Mechanism for Secure MPTCP based Multipathing Services. Concurr. Comput. Pract. Exp. 2018, 30, e4191.

- Liu, Y.; Neri, A.; Ruggeri, A.; Vegni, A. A MPTCP-Based Network Architecture for Intelligent Train Control and Traffic Management Operations. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2290–2302.

- Ou, S.; Huang, C.; Lee, T.; Huang, C. Out-of-order transmission enabled congestion and scheduling control for multipath TCP. In Proceedings of the International Wireless Communications and Mobile Computing Conference, Paphos, Cyprus, 5–9 September 2016.

- Xue, K.; Han, J.; Ni, D.; Wei, W.; Cai, Y.; Xu, Q.; Hong, P. DPSAF: Forward Prediction Based Dynamic Packet Scheduling and Adjusting with Feedback for Multipath TCP in Lossy Heterogeneous Networks. IEEE Trans. Veh. Technol. 2018, 67, 1521–1534.

- Le, T.; Bui, L. Forward Delay based Packet Scheduling Algorithm for Multipath TCP. Mob. Netw. Appl. 2018, 23, 4–12.

- Ferlin, S.; Kucera, S.; Claussen, H.; Alay, O. MPTCP Meets FEC: Supporting Latency-Sensitive Applications Over Heterogeneous Networks. IEEE/ACM Trans. Netw. 2018, 26, 2005–2018.

- Wang, K.; Dreibholz, T.; Zhou, X.; Fa, F.; Tan, Y.; Cheng, X.; Tan, Q. On the Path Management of Multi-Path TCP in Internet Scenarios based on the NorNet Testbed. In Proceedings of the IEEE 31st International Conference on Advanced Information Networking and Applications (AINA), Taipei, Taiwan, 27–29 March 2017; pp. 1–8.

- Kim, J.; Oh, B.; Lee, J. Receive Buffer based Path Management for MPTCP in heterogeneous networks. In Proceedings of the IFIP/IEEE IM, Lisbon, Portugal, 8–12 May 2017; pp. 648–651.

- Oh, B.; Lee, J. Feedback-Based Path Failure Detection and Buffer Blocking Protection for MPTCP. IEEE/ACM Trans. Netw. 2016, 24, 3450–3461.

- Li, L.; Xu, K.; Li, T.; Zheng, K.; Peng, C.; Wang, D.; Wang, X.; Shen, M.; Mijumbi, R. A Measurement Study on Multi-path TCP with Multiple Cellular Carriers on High Speed Rails. In Proceedings of the ACM SIGCOMM, Budapest, Hungary, 20–25 August 2018; pp. 455–468.

- Chen, Y.; Lim, Y.; Gibbens, R.; Nahum, E.; Khalili, R.; Towsley, D. A measurement based study of multipath tcp performance over wireless networks. In Proceedings of the ACM IMC, Barcelona, Spain, 23–25 October 2013; pp. 455–468.

- UC Berkeley. LBL, USC/ISI and Xerox Parc, NS-2 Documentation and Software, version 2.35; UC Berkeley: Berkeley, CA, USA, November 2011; Available online: https://www.isi.edu/nsnam/ns/doc/index.html (accessed on 30 December 2018).

- Google Code Project. Multipath-TCP: Implement Multipath TCP on NS-2. Available online: http://code.google.com/p/multipath-tcp/ (accessed on 30 December 2018).

- Hwang, J.; Walid, A.; Yoo, J. Fast Coupled Retransmission for Multipath TCP in Data Center Networks. IEEE Syst. J. 2018, 12, 1056–1059.

- Jacobson, V.; Karels, M. Congestion Avoidance and Control. In Proceedings of the ACM SIGCOMM, Stanford, CA, USA, 16–18 August 1988; pp. 314–329.

- Paasch, C.; Ferlin, S.; Alay, O.; Bonaventure, O. Experimental Evaluation of Multipath TCP Schedulers. In Proceedings of the ACM SIGCOMM Workshop on Capacity Sharing Workshop, Chicago, IL, USA, 17–22 August 2014; pp. 27–32.

- Tzeng, G.; Huang, J. Multiple Attribute Decision Making: Methods and Applications; CRC Press: Boca Raton, FL, USA, 2011; pp. 55–56.

- Ramirez-Perez, C.; Ramos-R, V.M. On the effectiveness of multi-criteria decision mechanisms for vertical handoff. In Proceedings of the IEEE 27th International Conference on Advanced Information Networking and Applications, Barcelona, Spain, 25–28 March 2013; pp. 1157–1164.

- Song, F.; Zhou, Y.; Liu, C.; Zhang, H. Modeling Space-Terrestrial Integrated Networks with Smart Collaborative Theory. IEEE Netw. 2019, 33, 51–57.

- Ai, Z.; Zhou, Y.; Song, F. A Smart Collaborative Routing Protocol for Reliable Data Diffusion in IoT Scenarios. Sensors 2018, 18, 1926.

- Xu, C.; Li, Z.; Li, J.; Zhang, H.; Muntean, G. Cross-layer Fairness-driven Concurrent Multipath Video Delivery over Heterogenous Wireless Networks. IEEE Trans. Circ. Syst. Video Technol. 2015, 25, 1175–1189.

- Paasch, C.; Weber, D.; Baerts, M.; Froidcoeur, T.; Hesmans, B. Multipath TCP in the Linux Kernel. Available online: https://www.multipath-tcp.org (accessed on 30 December 2019).

- Song, F.; Zhu, M.; Zhou, Y.; You, I.; Zhang, H. Smart Collaborative Tracking for Ubiquitous Power IoT in Edge-Cloud Interplay Domain. IEEE Internet Things J. 2019.

- Ai, Z.; Liu, Y.; Song, F.; Zhang, H. A Smart Collaborative Charging Algorithm for Mobile Power Distribution in 5G Networks. IEEE Access 2018, 6, 28668–28679.

- Song, F.; Zhou, Y.; Wang, Y.; Zhao, T.; You, I.; Zhang, H. Smart Collaborative Distribution for Privacy Enhancement in Moving Target Defense. Inf. Sci. 2019, 479, 593–606.

- Quan, W.; Liu, Y.; Zhang, H.; Yu, S. Enhancing Crowd Collaborations for Software Defined Vehicular Networks. IEEE Commun. Mag. 2017, 55, 80–86.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).