1. Introduction

Nonlinear systems play an important role in musical signal processing. In particular, there are many effects categorized as overdrive, distortion, or fuzz, whose primary objective is to introduce harmonic distortion to enrich the signal. Usually, the nonlinear behavior is combined with (linear) filtering to spectrally shape the output signal or to make the amount of introduced distortion frequency-dependent. While many of these systems were originally designed in the analog domain, there is naturally an interest in deriving digital models of them, e.g., [1,2,3,4].

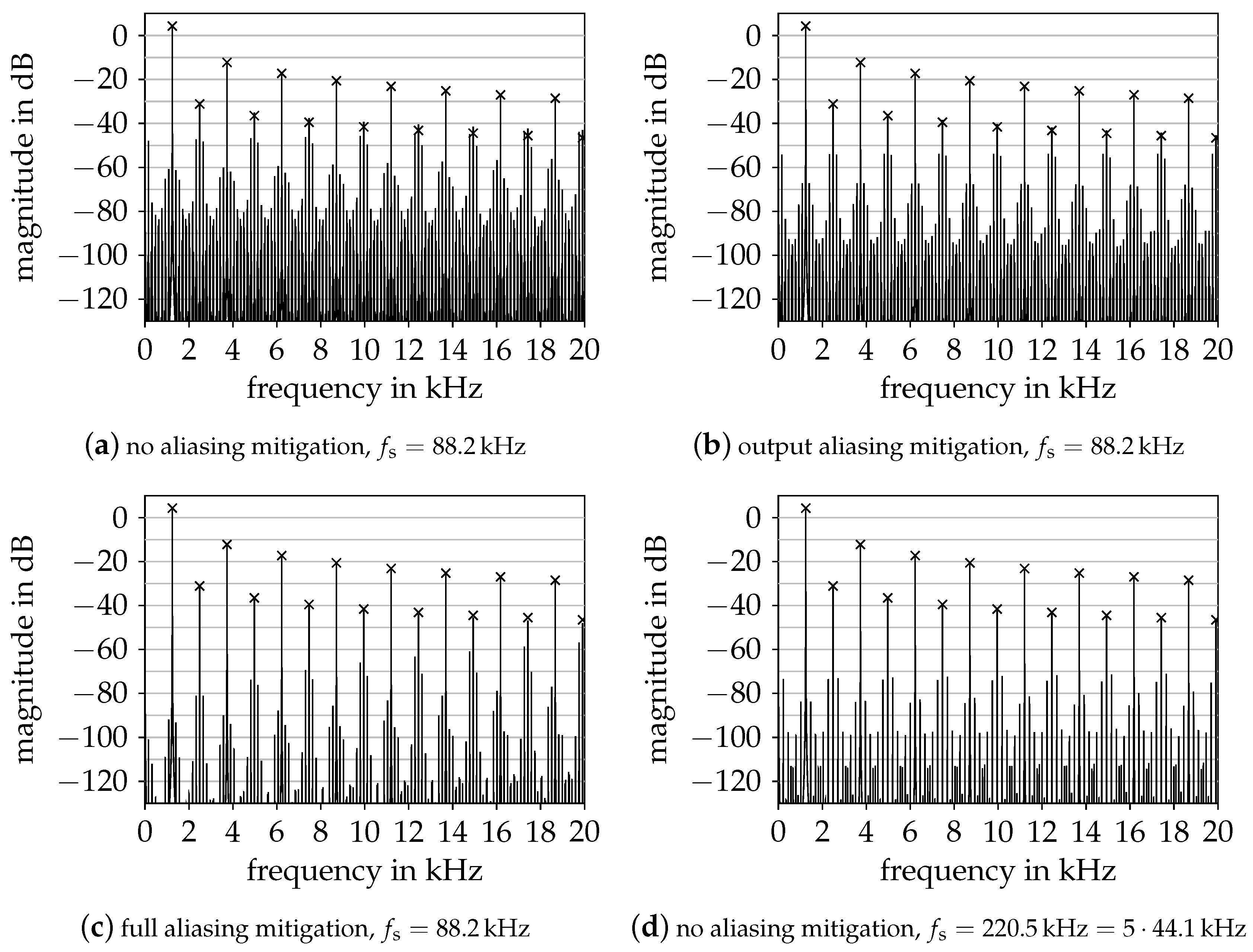

One major problem encountered in digital nonlinear systems, whether designed from scratch or derived by virtual analog modeling, is aliasing distortion. Once the additional harmonics introduced by the nonlinearity exceed the Nyquist limit at half the sampling frequency, they are folded back to lower frequencies, just as if the corresponding analog signal had been sampled without appropriate band-limiting. Contrary to the desired harmonic distortion, aliasing distortion is usually perceived as unpleasant. Therefore, methods to suppress or reduce aliasing distortion are needed.

The conceptually simplest aliasing reduction method is oversampling. However, if the harmonics decay slowly with frequency, the oversampling factor has to be high, making the approach unattractive due to the rising computational demand. Consequently, various alternatives have been proposed, e.g., [5,6,7,8,9,10,11]. These methods, however, usually come with certain limitations, most commonly the restriction to memoryless systems. In this work, an extension of [10] is presented that loosens the restriction from memoryless systems to a certain class of stateful systems.

This article is an extension of our previous conference paper [12]. The main differences are the generalization of the method to the case of multiple parallel nonlinear functions and an added discussion of nonlinearities with vector-valued input.

2. Antiderivative-Based Aliasing Reduction for Memoryless Nonlinear Systems

As the proposed method builds upon the approach from [10], we shall briefly summarize the latter. Conceptually, the digital signal is converted to a continuous-time signal using linear interpolation between consecutive samples, the nonlinearity is applied, and the result is low-pass filtered by integrating over one sampling interval before sampling again to obtain the digital output signal. Thus, the nonlinear system

$$y(n) = f\big(x(n)\big), \tag{1}$$

where $f$ denotes the nonlinear function, mapping input sample $x(n)$ to output sample $y(n)$, is transformed into

$$y(n) = \int_0^1 f\big(x(n) + \tau\,(x(n-1) - x(n))\big)\,d\tau. \tag{2}$$

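Only rarely will the integral have a closed-form solution, and numerical integration at run time is unattractive considering the computational load. However, by changing the integration variable, we obtain

$$y(n) = \frac{1}{x(n-1) - x(n)} \int_{x(n)}^{x(n-1)} f(\xi)\,d\xi, \tag{3}$$

where we may apply the fundamental theorem of calculus and, by further special-casing $x(n) = x(n-1)$ to avoid numerical issues, finally arrive at

$$y(n) = \begin{cases} \dfrac{F\big(x(n)\big) - F\big(x(n-1)\big)}{x(n) - x(n-1)} & \text{if } x(n) \neq x(n-1) \\[2ex] f\Big(\dfrac{x(n) + x(n-1)}{2}\Big) & \text{if } x(n) = x(n-1), \end{cases} \tag{4}$$

where $F$ is the antiderivative of $f$. Again, the antiderivative may not have a closed-form solution, but being a function of one variable, it may be precomputed and stored in a lookup table.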
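The following Python sketch illustrates (4) for a single scalar nonlinearity. It is not the authors' implementation: the choice $f = \tanh$ with the closed-form antiderivative $F(x) = \log\cosh x$ stands in for a tabulated one, the class name `ADAA1` is hypothetical, and the exact-equality test of (4) is replaced by a small tolerance `eps` (an assumption), because the difference quotient becomes ill-conditioned when $x(n) \approx x(n-1)$. Caching `F_prev` anticipates the lookup-saving trick discussed under the computational cost.

```python
import math
from typing import Callable


def f(x: float) -> float:
    """Example nonlinearity (assumed for illustration): hyperbolic tangent."""
    return math.tanh(x)


def F(x: float) -> float:
    """Antiderivative of tanh, log(cosh(x)), written in an overflow-safe form."""
    ax = abs(x)
    return ax + math.log1p(math.exp(-2.0 * ax)) - math.log(2.0)


class ADAA1:
    """First-order antiderivative antialiasing of a memoryless nonlinearity, cf. (4)."""

    def __init__(self, f: Callable[[float], float], F: Callable[[float], float],
                 eps: float = 1e-6) -> None:
        self.f, self.F, self.eps = f, F, eps
        self.x_prev = 0.0       # x(n-1), zero initial condition assumed
        self.F_prev = F(0.0)    # cached F(x(n-1)), avoids a second lookup per sample

    def process(self, x: float) -> float:
        dx = x - self.x_prev
        Fx = self.F(x)
        if abs(dx) > self.eps:
            # regular case: difference quotient of the antiderivative
            y = (Fx - self.F_prev) / dx
        else:
            # (near-)equal consecutive inputs: evaluate f at the midpoint instead
            y = self.f(0.5 * (x + self.x_prev))
        self.x_prev, self.F_prev = x, Fx
        return y
```

Usage would be `adaa = ADAA1(f, F)` followed by `[adaa.process(v) for v in signal]`. In a real-time setting, `f` and `F` would typically be replaced by table lookups.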

In addition to reducing aliasing artifacts, the approach introduces a half-sample delay and attenuates signal components not only above the Nyquist limit but also at high frequencies below it, i.e., it acts as a low-pass filter in the audio band. This can readily be seen by using the identity function $f(x) = x$ instead of a true nonlinearity. In that case, straightforward calculation yields

$$y(n) = \frac{x(n) + x(n-1)}{2}, \tag{5}$$

which is a first-order finite impulse response low-pass filter with a group delay of half a sample. The low-pass effect can be countered by a modest amount of oversampling (e.g., by a factor of two), and the delay is usually of no concern.
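To put numbers on this low-pass effect: the frequency response of (5) is $\frac{1+e^{-j\omega}}{2} = e^{-j\omega/2}\cos\frac{\omega}{2}$, so its magnitude is $\cos\frac{\omega}{2}$. The small helper below (a hypothetical name, for illustration only) evaluates this in dB; the sample rates 44.1 kHz and 88.2 kHz are merely example values.

```python
import math


def adaa_lowpass_gain_db(freq_hz: float, fs_hz: float) -> float:
    """Gain in dB of y(n) = (x(n) + x(n-1)) / 2, i.e. 20*log10(cos(w/2)).

    Valid for 0 <= freq_hz < fs_hz / 2 (the magnitude reaches zero at Nyquist).
    """
    w = 2.0 * math.pi * freq_hz / fs_hz   # normalized angular frequency
    return 20.0 * math.log10(math.cos(w / 2.0))


# 20 kHz without and with oversampling by a factor of two
for fs in (44100.0, 88200.0):
    print(f"fs = {fs:.0f} Hz: {adaa_lowpass_gain_db(20000.0, fs):.1f} dB")
```

At 20 kHz, the attenuation drops from roughly 16.7 dB at 44.1 kHz to roughly 2.4 dB at 88.2 kHz, which illustrates why oversampling by two is usually sufficient.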

The Higher-Dimensional Case

Before turning to stateful systems, we shall briefly discuss how to lift the restriction to systems with one scalar input and one scalar output that was tacitly assumed so far. To keep the notation concise, we collect the inputs of a system with $K$ scalar inputs into an input vector $\mathbf{x}$ (using bold letters to indicate vectors here and in the following). Likewise, multiple outputs are collected into an output vector $\mathbf{y}$. We may first notice that the extension to vector-valued output is straightforward: the above derivation does not depend at all on the nonlinear function (or its antiderivative) being scalar-valued.

For vector-valued input, on the other hand, things no longer work out so nicely. In particular, we can no longer rewrite (2) as (3). So unless the integral in (2) happens to have a closed-form solution, to avoid numerical integration at run time, we are more or less stuck with precomputing and tabulating the solution for all combinations of $\mathbf{x}(n)$ and $\mathbf{x}(n-1)$ at a certain density. Thus, if the input is $K$-dimensional, stacking two subsequent input vectors on top of each other to form the lookup (index) vector, we would require a $2K$-dimensional lookup table. A small improvement is possible by realizing that we always integrate along lines, and a line in a $K$-dimensional space can be parameterized by $2(K-1)$ parameters. Along a line, we can precompute the antiderivative with respect to the scalar coordinate along the line. This antiderivative with a one-dimensional argument and $2(K-1)$ parameters could be stored in a $(2K-1)$-dimensional lookup table. While this may be reasonable for $K = 2$, it quickly becomes infeasible for larger $K$ due to prohibitive memory requirements.
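The memory argument can be made concrete with a rough count. The sketch below assumes, hypothetically, a uniform grid of $N$ points per table dimension and one stored value per grid point, and compares the direct $2K$-dimensional table with the line-based $(2K-1)$-dimensional one.

```python
from typing import Tuple


def table_entries(K: int, N: int) -> Tuple[int, int]:
    """Grid points needed for a K-dimensional input with N points per axis.

    Returns (direct, line_based):
      direct     -- tabulating the integral over x(n) and x(n-1): 2K dimensions
      line_based -- antiderivative along lines: 1 coordinate + 2(K-1) parameters
    """
    direct = N ** (2 * K)
    line_based = N ** (2 * K - 1)
    return direct, line_based


BYTES_PER_ENTRY = 4  # assumption: single-precision floats
for K in (1, 2, 3):
    direct, line_based = table_entries(K, 256)
    print(K, direct * BYTES_PER_ENTRY, line_based * BYTES_PER_ENTRY)
```

With 256 points per axis and 4-byte entries, $K = 2$ needs 64 MiB for the line-based table versus 16 GiB for the direct one, while $K = 3$ already needs 4 TiB even with the line-based table.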

3. Extension to Stateful Systems

The half-sample delay introduced by the method of [10] becomes problematic if the nonlinearity is embedded in the feedback loop of a stateful system. As noted in [10], for the particular case of an integrator following the nonlinearity and using the trapezoidal rule for time discretization, one can simply replace the numerator of the discretized integrator's transfer function with the filter introduced by antialiasing. This fusion of antialiased nonlinearity and integrator then has no additional delay compared to the system without antialiasing and hence can be used inside a feedback system without problems.
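To see why this works, consider the trapezoidal integration of $v(n) = f\big(x(n)\big)$, where $s(n)$ denotes the integrator output and $T$ the sampling interval (both symbols are introduced here only for illustration). Its transfer function already contains the factor $\frac{1+z^{-1}}{2}$, which (5) identifies as the linear footprint of the antialiasing, so the antialiased nonlinearity (4) can take the place of the trapezoidal average:

$$
\begin{aligned}
s(n) &= s(n-1) + \frac{T}{2}\Big(f\big(x(n)\big) + f\big(x(n-1)\big)\Big),
\qquad \frac{S(z)}{V(z)} = T\,\frac{1+z^{-1}}{2}\,\frac{1}{1-z^{-1}},\\[1ex]
s(n) &= s(n-1) + T\,\frac{F\big(x(n)\big) - F\big(x(n-1)\big)}{x(n) - x(n-1)}.
\end{aligned}
$$

Both variants use only $x(n)$ and $x(n-1)$ to update $s(n)$, so the loop delay is unchanged; for the identity $f(x) = x$, they coincide exactly.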

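Here, we consider systems that do not necessarily have an integrator following the nonlinearity. In particular, we shall consider the general discrete nonlinear state-space system

$$\mathbf{x}(n) = \sum_{i=0}^{I} \mathbf{f}_i\big(\mathbf{p}_i(n)\big) \tag{6}$$

$$\mathbf{y}(n) = \sum_{j=0}^{J} \mathbf{g}_j\big(\mathbf{q}_j(n)\big) \tag{7}$$

with

$$\mathbf{p}_i(n) = \mathbf{A}_i\,\mathbf{x}(n-1) + \mathbf{B}_i\,\mathbf{u}(n) \tag{8}$$

$$\mathbf{q}_j(n) = \mathbf{C}_j\,\mathbf{x}(n-1) + \mathbf{D}_j\,\mathbf{u}(n), \tag{9}$$

where $\mathbf{x}(n)$ is the state vector, $\mathbf{u}(n)$ is the input vector, and $\mathbf{y}(n)$ is the output vector. The nonlinearity of the system is captured by the (potentially) nonlinear functions $\mathbf{f}_i$ and $\mathbf{g}_j$ for state update and output, respectively. Their arguments $\mathbf{p}_i(n)$ and $\mathbf{q}_j(n)$ are calculated by (8) and (9) as linear combinations of the previous state and the current input, where the coefficient matrices $\mathbf{A}_i$, $\mathbf{B}_i$, $\mathbf{C}_j$, and $\mathbf{D}_j$ depend on the system. Some remarks are in order: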

We not only allow multiple states, collected in the vector $\mathbf{x}(n)$, but similarly multiple inputs in $\mathbf{u}(n)$ and multiple outputs in $\mathbf{y}(n)$. In many applications, however, input and output will be scalar.

While having only one nonlinear function on the right-hand side, i.e., $I = J = 0$, is perfectly valid, we allow the decomposition into a sum of multiple functions. We do this because, as discussed above, nonlinear functions with scalar or very-low-dimensional arguments are much better suited to the proposed method than those with higher-dimensional arguments. Thus, while we theoretically allow vector-valued $\mathbf{p}_i$ and $\mathbf{q}_j$ of arbitrary dimension, from a practical viewpoint, their dimensionalities should be low. Therefore, if the system's nonlinearity can be decomposed into a sum of nonlinear functions with low-dimensional arguments, it should be. Unfortunately, though, not all systems allow their nonlinearity to be decomposed in such a way.

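It may often be desirable to differentiate between the linear and nonlinear parts of this system. By letting $\mathbf{f}_0$ and $\mathbf{g}_0$ be the identity function and further renaming $\mathbf{A} = \mathbf{A}_0$, $\mathbf{B} = \mathbf{B}_0$, $\mathbf{C} = \mathbf{C}_0$, and $\mathbf{D} = \mathbf{D}_0$, we therefore rewrite our system as

$$\mathbf{x}(n) = \mathbf{A}\,\mathbf{x}(n-1) + \mathbf{B}\,\mathbf{u}(n) + \sum_{i=1}^{I} \mathbf{f}_i\big(\mathbf{p}_i(n)\big) \tag{10}$$

$$\mathbf{y}(n) = \mathbf{C}\,\mathbf{x}(n-1) + \mathbf{D}\,\mathbf{u}(n) + \sum_{j=1}^{J} \mathbf{g}_j\big(\mathbf{q}_j(n)\big) \tag{11}$$

with $\mathbf{p}_i(n)$ and $\mathbf{q}_j(n)$ still given by (8) and (9).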

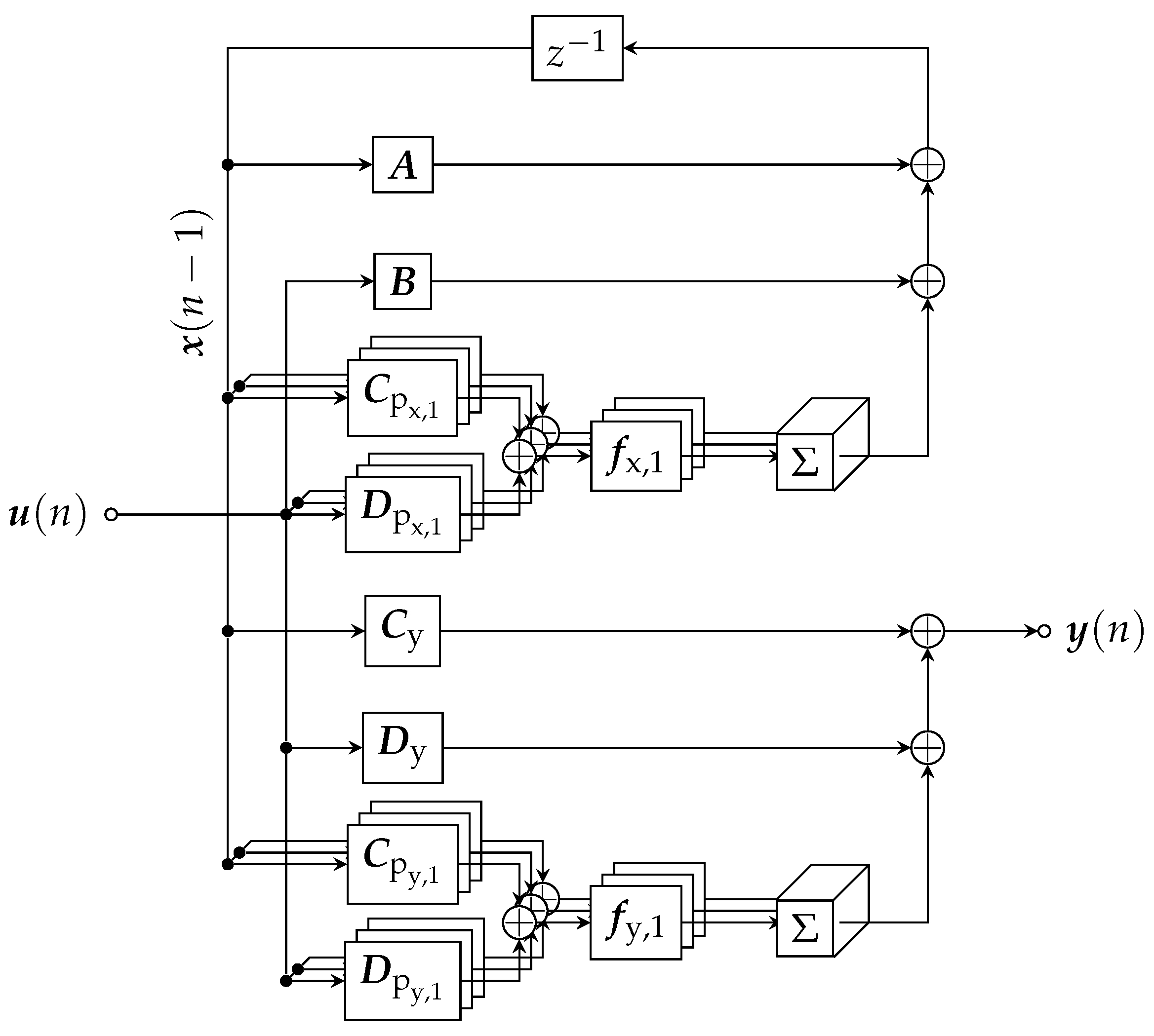

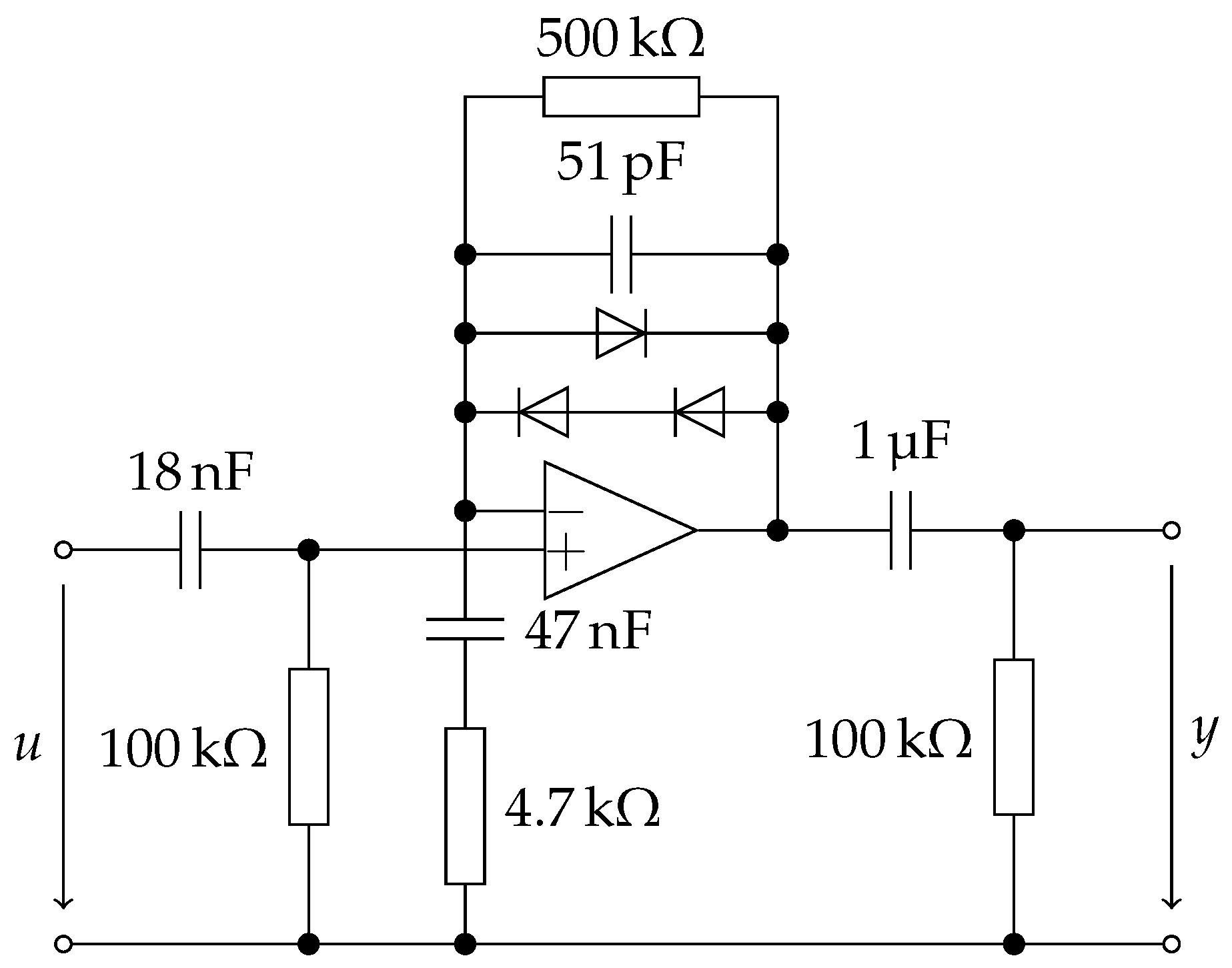

A visual representation is given as a block diagram in Figure 1.

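If the system is obtained in the context of virtual analog modeling, the nonlinear functions will usually only be given implicitly (as the solution of what is sometimes referred to as a delay-free loop), making it necessary to solve a nonlinear equation. However, they are typically based on a common set of functions $\mathbf{h}_i$, only applying different weightings to their outputs, i.e.,

$$\mathbf{f}_i(\mathbf{p}) = \mathbf{M}_{\mathrm{f},i}\,\mathbf{h}_i(\mathbf{p}) \tag{12}$$

$$\mathbf{g}_i(\mathbf{q}) = \mathbf{M}_{\mathrm{g},i}\,\mathbf{h}_i(\mathbf{q}) \tag{13}$$

with $\mathbf{A}_i = \mathbf{C}_i$ and $\mathbf{B}_i = \mathbf{D}_i$, so that $\mathbf{p}_i(n) = \mathbf{q}_i(n)$ and each $\mathbf{h}_i$ only has to be evaluated once per sample. While this redundancy should be kept in mind when optimizing an implementation, we will derive our method for the more general case of possibly independent nonlinear functions for state update and output.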

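In a first step, we may consider applying the aliasing suppression only to the $\mathbf{g}_j$, as they are not part of any feedback loop. We have to be careful, though: we may not simply replace $\mathbf{g}_j\big(\mathbf{q}_j(n)\big)$ with $\int_0^1 \mathbf{g}_j\big(\mathbf{q}_j(n) + \tau\,(\mathbf{q}_j(n-1) - \mathbf{q}_j(n))\big)\,d\tau$ in (11), as that would lead to a misalignment in time of the linear and antialiased nonlinear terms. Instead, we have to apply the antialiasing to the linear part $\mathbf{C}\,\mathbf{x}(n-1) + \mathbf{D}\,\mathbf{u}(n)$ as well, even though it obviously does not introduce aliasing distortion. Fortunately, the integral has a closed-form solution regardless of the dimensionality, namely

$$\int_0^1 \Big(\mathbf{C}\big(\mathbf{x}(n-1) + \tau\,(\mathbf{x}(n-2) - \mathbf{x}(n-1))\big) + \mathbf{D}\big(\mathbf{u}(n) + \tau\,(\mathbf{u}(n-1) - \mathbf{u}(n))\big)\Big)\,d\tau = \frac{1}{2}\mathbf{C}\big(\mathbf{x}(n-1) + \mathbf{x}(n-2)\big) + \frac{1}{2}\mathbf{D}\big(\mathbf{u}(n) + \mathbf{u}(n-1)\big), \tag{14}$$

resulting in

$$\mathbf{y}(n) = \frac{1}{2}\mathbf{C}\big(\mathbf{x}(n-1) + \mathbf{x}(n-2)\big) + \frac{1}{2}\mathbf{D}\big(\mathbf{u}(n) + \mathbf{u}(n-1)\big) + \sum_{j=1}^{J} \int_0^1 \mathbf{g}_j\big(\mathbf{q}_j(n) + \tau\,(\mathbf{q}_j(n-1) - \mathbf{q}_j(n))\big)\,d\tau. \tag{15}$$

However, any aliasing distortion introduced into $\mathbf{x}(n)$ by (10) will not undergo any mitigation (except for the low-pass filtering).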

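Now, if we naively rewrite (10) as we did with (11), we modify our system in an unwanted way, as we introduce additional delay in the feedback. But we do so in a very controlled way: the unit delay in the feedback is replaced by a delay of $\frac{3}{2}$ samples. As a delay of $\frac{3}{2}$ samples corresponds to a delay of one sample at $\frac{2}{3}$ of the sampling rate, we can compensate for the extra half-sample delay by designing our system for this reduced sampling rate but then operating it at the original sampling rate. We thus arrive at

$$\mathbf{x}(n) = \frac{1}{2}\mathbf{A}\big(\mathbf{x}(n-1) + \mathbf{x}(n-2)\big) + \frac{1}{2}\mathbf{B}\big(\mathbf{u}(n) + \mathbf{u}(n-1)\big) + \sum_{i=1}^{I} \int_0^1 \mathbf{f}_i\big(\mathbf{p}_i(n) + \tau\,(\mathbf{p}_i(n-1) - \mathbf{p}_i(n))\big)\,d\tau \tag{16}$$

$$\mathbf{y}(n) = \frac{1}{2}\mathbf{C}\big(\mathbf{x}(n-1) + \mathbf{x}(n-2)\big) + \frac{1}{2}\mathbf{D}\big(\mathbf{u}(n) + \mathbf{u}(n-1)\big) + \sum_{j=1}^{J} \int_0^1 \mathbf{g}_j\big(\mathbf{q}_j(n) + \tau\,(\mathbf{q}_j(n-1) - \mathbf{q}_j(n))\big)\,d\tau \tag{17}$$

with

$$\mathbf{p}_i(n) = \mathbf{A}_i\,\mathbf{x}(n-1) + \mathbf{B}_i\,\mathbf{u}(n) \tag{18}$$

$$\mathbf{q}_j(n) = \mathbf{C}_j\,\mathbf{x}(n-1) + \mathbf{D}_j\,\mathbf{u}(n), \tag{19}$$

where all coefficients are calculated for the reduced sampling rate $\frac{2}{3}f_\mathrm{s}$, with $f_\mathrm{s}$ denoting the original sampling rate. We can only do this because we do not have a delay-free loop. Or rather, the delay-free loop is hidden inside the nonlinear functions: instead of worrying about a nonlinearity within a delay-free loop, we treat the solution of the delay-free loop as the nonlinearity to which aliasing reduction is applied. Note that the behavior for frequencies above $\frac{1}{3}f_\mathrm{s}$ (the Nyquist limit of the reduced sampling rate) is ill-defined, but with the mild oversampling suggested by [10] anyway, we do not have to worry about this.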
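A minimal Python sketch of one time step of (16)–(19) may help to see how the pieces fit together. As an illustrative assumption, it is restricted to a scalar state, a scalar input, and a single nonlinearity in each of the state update and output ($I = J = 1$). The class and helper names are hypothetical, the nonlinear functions and their antiderivatives are passed in as plain callables instead of lookup tables, and a small tolerance again replaces the exact-equality test of (4). Designing the coefficients for $\frac{2}{3}f_\mathrm{s}$ is left to the model at hand; the example values below are arbitrary.

```python
import math
from typing import Callable

Fn = Callable[[float], float]


def aa_eval(f: Fn, F: Fn, v: float, v_prev: float, eps: float = 1e-6) -> float:
    """Antialiased value of f between v_prev and v, cf. (4)."""
    dv = v - v_prev
    if abs(dv) > eps:
        return (F(v) - F(v_prev)) / dv
    return f(0.5 * (v + v_prev))


class AntialiasedScalarSystem:
    """Scalar sketch of (16)-(19) with one nonlinearity each in state update and output.

    All coefficients are assumed to have been designed for 2/3 of the sampling
    rate at which step() is actually called.
    """

    def __init__(self, a: float, b: float, c: float, d: float,
                 a1: float, b1: float, c1: float, d1: float,
                 f: Fn, F: Fn, g: Fn, G: Fn) -> None:
        self.a, self.b, self.c, self.d = a, b, c, d          # linear part
        self.a1, self.b1, self.c1, self.d1 = a1, b1, c1, d1  # nonlinearity arguments
        self.f, self.F, self.g, self.G = f, F, g, G
        # x(n-1), x(n-2), u(n-1), p(n-1), q(n-1); zero initial conditions
        self.x1 = self.x2 = self.u1 = self.p1 = self.q1 = 0.0

    def step(self, u: float) -> float:
        p = self.a1 * self.x1 + self.b1 * u      # (18)
        q = self.c1 * self.x1 + self.d1 * u      # (19)
        x_avg = 0.5 * (self.x1 + self.x2)        # (x(n-1) + x(n-2)) / 2
        u_avg = 0.5 * (u + self.u1)              # (u(n) + u(n-1)) / 2
        x = self.a * x_avg + self.b * u_avg + aa_eval(self.f, self.F, p, self.p1)  # (16)
        y = self.c * x_avg + self.d * u_avg + aa_eval(self.g, self.G, q, self.q1)  # (17)
        self.x2, self.x1 = self.x1, x
        self.u1, self.p1, self.q1 = u, p, q
        return y


# Example: soft-clipping state update 0.3*tanh(p), linear output (arbitrary values)
system = AntialiasedScalarSystem(0.5, 0.5, 1.0, 0.0, 1.0, 0.0, 0.0, 0.0,
                                 f=lambda p: 0.3 * math.tanh(p),
                                 F=lambda p: 0.3 * math.log(math.cosh(p)),
                                 g=lambda q: 0.0, G=lambda q: 0.0)
outputs = [system.step(1.0) for _ in range(400)]
```

For a constant input, the averages collapse and the state settles at the same fixed point as the unmodified system (10). The caching of $F$ shown for the memoryless case is omitted here for brevity. As an example of the rate adjustment, a model to be run at 96 kHz would be discretized for 64 kHz, and its response above 32 kHz would not be meaningful.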

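The increased delay is not the only effect of the modification; there is also the low-pass filtering. To study this in more detail, assume all $\mathbf{f}_i$ and $\mathbf{g}_j$ to be linear so that we have a linear system, and let $H(z)$ denote the transfer function obtained from (8)–(11). If we instead use (16)–(19) without adjusting the coefficients, it is straightforward to verify that the resulting transfer function $\tilde{H}(z)$ fulfills

$$\tilde{H}(z) = \frac{1 + z^{-1}}{2}\, H\!\left(\frac{2z}{1 + z^{-1}}\right). \tag{20}$$

We may observe two effects: the well-known filtering with the factor $\frac{1 + z^{-1}}{2}$ on the outside and the substitution $z \to \frac{2z}{1 + z^{-1}}$ in the argument of $H$. Evaluating the latter for $z = e^{j\omega}$, we note that

$$\frac{2e^{j\omega}}{1 + e^{-j\omega}} = \frac{e^{j\frac{3}{2}\omega}}{\cos\frac{\omega}{2}}. \tag{21}$$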

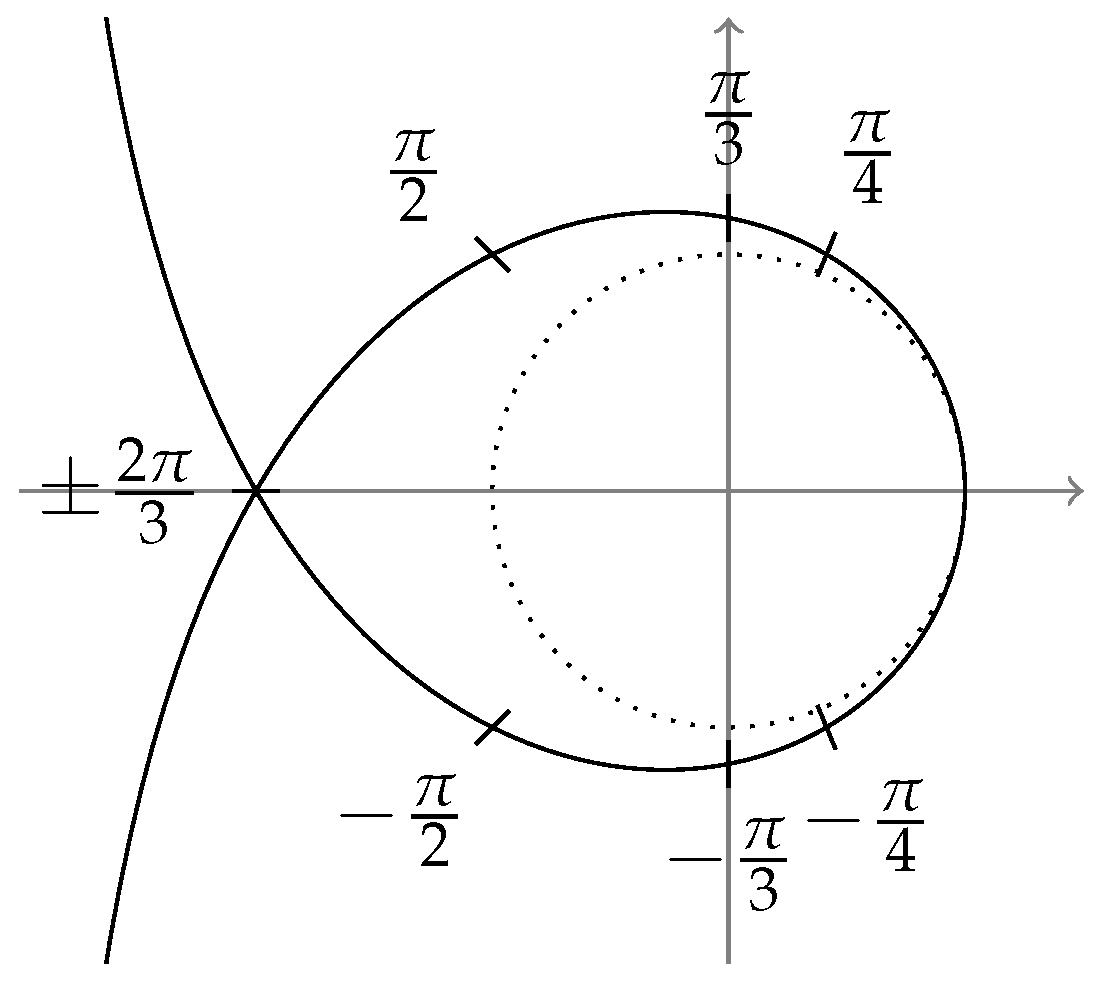

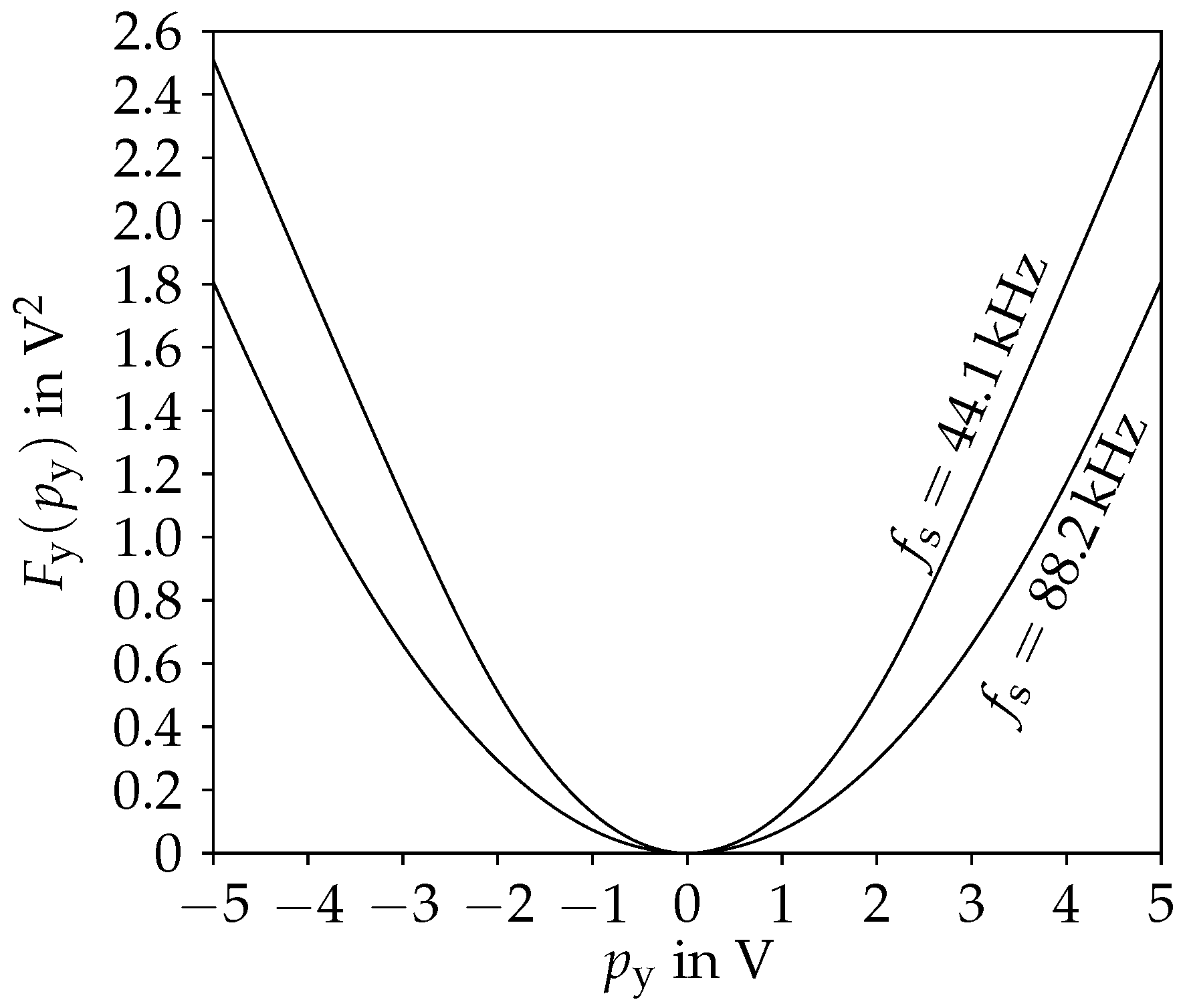

This trajectory is depicted in Figure 2.

While in the original system $H$ is evaluated on the unit circle (shown dotted) to obtain the frequency response, for the modified system, the original $H$ is evaluated on the trajectory of (21). We notice that, in addition to the frequency scaling by $\frac{3}{2}$, there is an additional scaling away from the unit circle, increasing with frequency. Importantly, as we only evaluate $H$ for $z$ on or outside the unit circle, stability is preserved, i.e., if $H(z)$ is stable, so is $\tilde{H}(z)$. Nevertheless, especially at higher frequencies, this may cause a significant distortion of the frequency response.
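Both (20) and the trajectory (21) are easy to check numerically. The sketch below uses, purely as an assumed example, the scalar first-order linear system $x(n) = a\,x(n-1) + b\,u(n)$, $y(n) = c\,x(n-1) + d\,u(n)$ with arbitrary coefficient values; the function names are hypothetical.

```python
import cmath
import math


def H(z: complex, a: float, b: float, c: float, d: float) -> complex:
    """Original system x(n) = a x(n-1) + b u(n), y(n) = c x(n-1) + d u(n)."""
    return c * b / (z - a) + d


def H_modified(z: complex, a: float, b: float, c: float, d: float) -> complex:
    """Same coefficients used in the averaged form (16)-(17) with linear functions."""
    s = (1 + 1 / z) / 2                  # the factor (1 + z^-1) / 2
    X = b * s / (1 - a * s / z)          # state transfer function
    return c * s / z * X + d * s


def trajectory(omega: float) -> complex:
    """Argument at which H is evaluated by the modified system, cf. (21)."""
    z = cmath.exp(1j * omega)
    return 2 * z / (1 + 1 / z)


a, b, c, d = 0.9, 0.5, 0.3, 0.1  # arbitrary example coefficients
for omega in (0.1, 0.5, 1.0, 2.0, 3.0):
    z = cmath.exp(1j * omega)
    err20 = abs(H_modified(z, a, b, c, d) - (1 + 1 / z) / 2 * H(trajectory(omega), a, b, c, d))
    err21 = abs(trajectory(omega) - cmath.exp(1.5j * omega) / math.cos(omega / 2))
    print(f"omega = {omega:.1f}: |w| = {abs(trajectory(omega)):.3f}, errors {err20:.1e}, {err21:.1e}")
```

The printed magnitude $|w| = 1/\cos\frac{\omega}{2}$ is never below one and grows towards the Nyquist limit, which is the radial displacement discussed above.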

An extreme example would be an all-pass filter with a high Q-factor, whose zeros lie just outside the unit circle: here, the transformation might effectively move a zero onto the unit circle, turning a flat magnitude response into one with a deep notch. As the examples will demonstrate, many typical systems are rather well-behaved under the transformation, but one has to be aware of this pitfall.
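The all-pass pitfall can be reproduced with a second-order all-pass $H(z) = \frac{r^2 - 2r\cos\theta\,z^{-1} + z^{-2}}{1 - 2r\cos\theta\,z^{-1} + r^2 z^{-2}}$, whose zeros lie at $e^{\pm j\theta}/r$. By (21), the modified system evaluates $H$ at $e^{j\frac{3}{2}\omega}/\cos\frac{\omega}{2}$, which hits the zero at $\omega = \frac{2}{3}\theta$ whenever $r = \cos\frac{\theta}{3}$. The parameter values below are illustrative assumptions chosen to make this happen exactly, and the coefficients are left unadjusted, as in (20).

```python
import cmath
import math


def allpass2(z: complex, r: float, theta: float) -> complex:
    """Second-order all-pass: poles at r*exp(+-j*theta), zeros at exp(+-j*theta)/r."""
    zi = 1 / z
    k = 2 * r * math.cos(theta)
    return (r * r - k * zi + zi * zi) / (1 - k * zi + r * r * zi * zi)


def modified_response(omega: float, r: float, theta: float) -> complex:
    """Frequency response of the all-pass after the modification, via (20)."""
    z = cmath.exp(1j * omega)
    s = (1 + 1 / z) / 2
    return s * allpass2(z / s, r, theta)   # z / s = 2z / (1 + z^-1)


theta = 1.2                  # example pole/zero angle in rad (illustrative only)
r = math.cos(theta / 3)      # chosen so that the trajectory (21) passes through a zero
notch = 2 * theta / 3        # frequency at which that happens
print(abs(allpass2(cmath.exp(1j * notch), r, theta)))   # original: unit magnitude
print(abs(modified_response(notch, r, theta)))          # modified: (numerically) zero
```

For $r$ closer to one (higher Q), the same coincidence occurs at a lower angle $\theta = 3\arccos r$.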

4. Computational Cost

In addition to potentially altering the system behavior at high frequencies, the price to pay for applying the proposed antialiasing approach is computational cost. Obviously, the exact total number of operations needed depends on the actual system the method is applied to, but we may observe some trends. First, due to the necessary oversampling by a factor of two, the computational cost doubles even for the operations we do not otherwise alter. This is, of course, still better than just using a higher oversampling factor and no other antialiasing at all. If the input and output sampling rates are fixed, the required resampling necessitates interpolation and decimation filters, which incur additional cost, though for the proposed approach and naive oversampling alike.

To judge the computational cost of the modifications made by the proposed method, let us assume that both the nonlinear functions and their antiderivatives are stored in lookup tables with the same resolution and lookup/interpolation method. Then replacing the nonlinear functions by (4) introduces a division and slightly increases the number of required table lookups. Note that the number of table lookups does not double, as the value $F\big(x(n)\big)$ may be stored and reused as $F\big(x(n-1)\big)$ in the next time step. Only when switching between the two cases in (4) is an additional lookup needed. However, the computational cost of the interpolation during table lookup grows exponentially with the dimensionality of the table; multilinear interpolation in $d$ dimensions, for instance, combines $2^d$ table entries.

So if all nonlinear functions have only scalar arguments, the extra effort per time step is a number of additions and multiplications by $\frac{1}{2}$, one division per nonlinear function, and the occasional extra table lookup. This will almost certainly be more efficient than increasing the sampling rate even further. However, when the nonlinear functions have arguments of higher dimensionality, the (almost) doubled dimensionality of the antiderivative tables leads to a quickly increasing computational cost for the table lookups, making the method unattractive in that case, in addition to the exploding memory requirements discussed above.
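A rough count of the table entries touched per evaluation illustrates this growth. The sketch below assumes, hypothetically, multilinear interpolation (which combines $2^d$ entries in $d$ dimensions) and the line-based $(2K-1)$-dimensional antiderivative table from the higher-dimensional case; it ignores the caching of $F$ and the case switching in (4).

```python
from typing import Tuple


def multilinear_entries(dims: int) -> int:
    """Number of table entries combined by multilinear interpolation in `dims` dimensions."""
    return 2 ** dims


def entries_per_evaluation(K: int) -> Tuple[int, int]:
    """(entries for f, entries for its antiderivative) for a K-dimensional argument.

    The antiderivative table is taken to be (2K - 1)-dimensional, i.e. one
    coordinate along the integration line plus 2(K - 1) line parameters.
    """
    return multilinear_entries(K), multilinear_entries(2 * K - 1)


for K in (1, 2, 3, 4):
    print(K, entries_per_evaluation(K))
```

For scalar arguments, the antiderivative lookup costs no more than the lookup of $f$ itself, whereas already for $K = 3$ it touches four times as many entries.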

6. Conclusions and Outlook

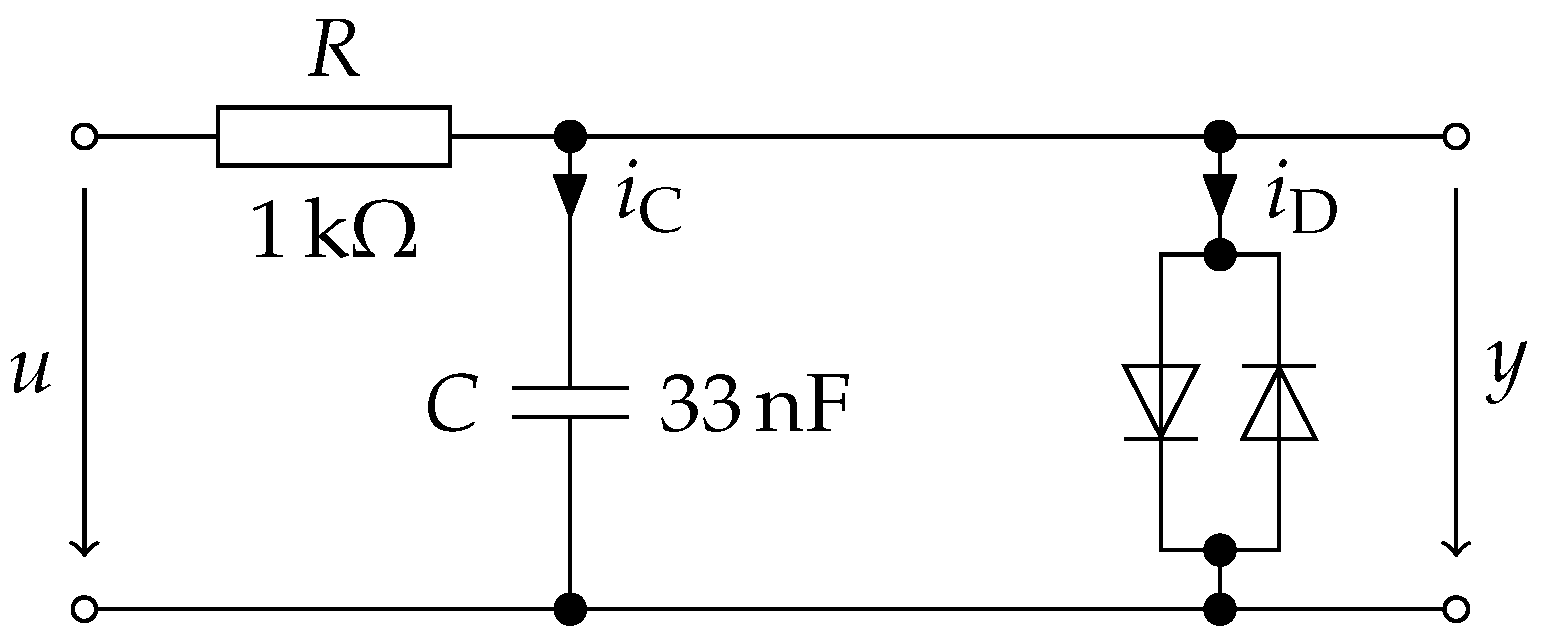

The presented approach for aliasing reduction generalizes the approach of [10] to all nonlinear systems that can be cast such that the nonlinearity takes scalar or very-low-dimensional input. This includes, but is not limited to, all models of circuits with a single one-port nonlinear element. If the system contains a delay-free loop, it has to be recast such that the nonlinearity is defined as the solution of the delay-free loop. Then, the delay introduced by applying the method of [10] to the nonlinearity can be compensated by adjusting the system's coefficients, even if the nonlinearity is part of a feedback loop.

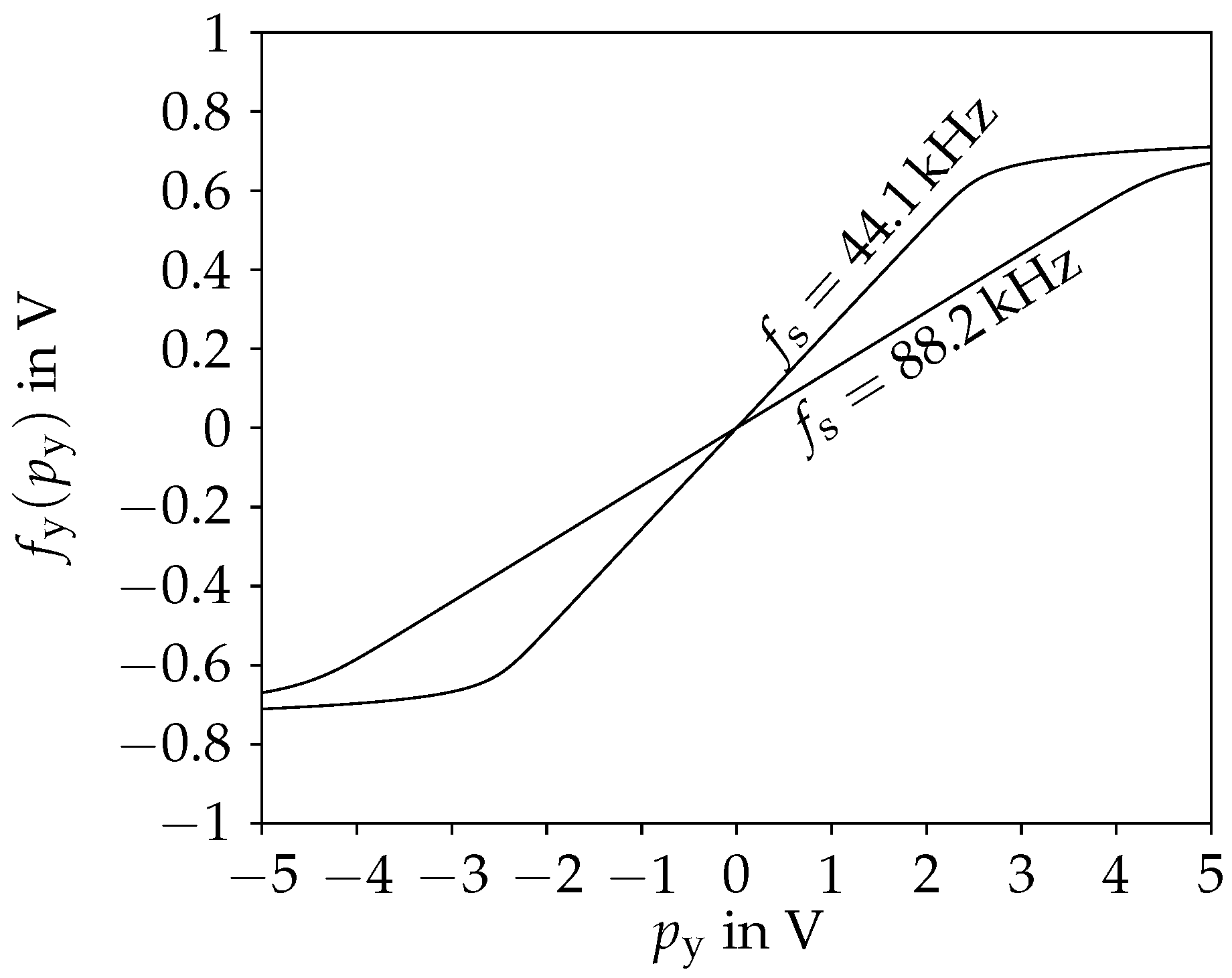

As is to be expected, the achieved aliasing reduction is comparable to that of [10], allowing a significant reduction of the required oversampling, especially for systems that introduce strong distortion, while the additional computational load is modest. Assuming lookup tables are used for the nonlinearity (which is in general implicitly defined) and its antiderivative, the main price to pay is in terms of memory.

The extension to higher-order antiderivatives as proposed in [14] or [15] should be straightforward, following the same principle. A more interesting future direction would be to lift the restriction on the nonlinear function to have only scalar or very-low-dimensional input. If the method of [10] (or even the higher-order extensions of [14] or [15]) could be generalized to nonlinear functions with multiple inputs without requiring excessively large lookup tables, the method proposed in the present paper would immediately generalize to all stateful nonlinear systems.