When Institutions Cannot Keep up with Artificial Intelligence: Expiration Theory and the Risk of Institutional Invalidation

Abstract

1. Introduction

What makes specific leadership models, governance systems, and societal institutions expire under the accelerating influence of AI?

2. Literature Review

2.1. Disruption and Institutional Inertia

2.2. AI as a Meta-Disruptor

2.3. Gaps in Current Research

2.4. Limitations of Existing Institutional Frameworks

3. Conceptualizing Expiration Theory

3.1. Origins and Construction of the Theory

1. Conceptual synthesis of existing literature on technological disruption (Christensen, 1997; Roblek et al., 2021), institutional rigidity (Mahoney, 2000; Oliver, 1991), and legitimacy failure (Suchman, 1995).

2. Thematic patterns observed in prior empirical case studies from sectors such as law, education, finance, and healthcare, where institutions remained structurally intact but epistemically incoherent (e.g., COMPAS in judicial systems, algorithmic hiring in HR, opaque diagnostics in healthcare).

3. Analytical extrapolation of recent findings in AI ethics, organizational incoherence, and assumption invalidation (Stoltz et al., 2019; Oesterling et al., 2024; Gidley, 2020).

- Cognitive supremacy of humans (e.g., leadership, law);

- Predictability and rule-following in governance;

- Moral centrality of human actors in decision-making;

- Hierarchical control and slow deliberation in bureaucratic structures.

- Superior pattern recognition and autonomous decision-making;

- Opaque reasoning mechanisms that defy explanation or justification;

- Distributed, decentralized models of intelligence;

- Acceleration in decision cycles that outpace human institutional rhythms.

3.2. Expiration vs. Disruption

3.3. Illustrative Case: From Disruption to Expiration in Recruitment Systems

3.4. Expiration as Epistemic Invalidation

- A leadership theory based on charisma and emotional intelligence may no longer suffice in decision-making environments characterized by algorithmic input.

- A legal system designed for analog contexts may become incoherent within a digital landscape that encompasses smart contracts, predictive enforcement, and artificial intelligence judges.

- Democratic discourse established around print-era deliberation is insufficient to address real-time manipulation conducted through AI-generated deepfakes.

3.5. Tracking Assumption Decay in Institutions

3.6. Theoretical Distinctions: Expiration Theory in Institutional Collapse and Adaptation

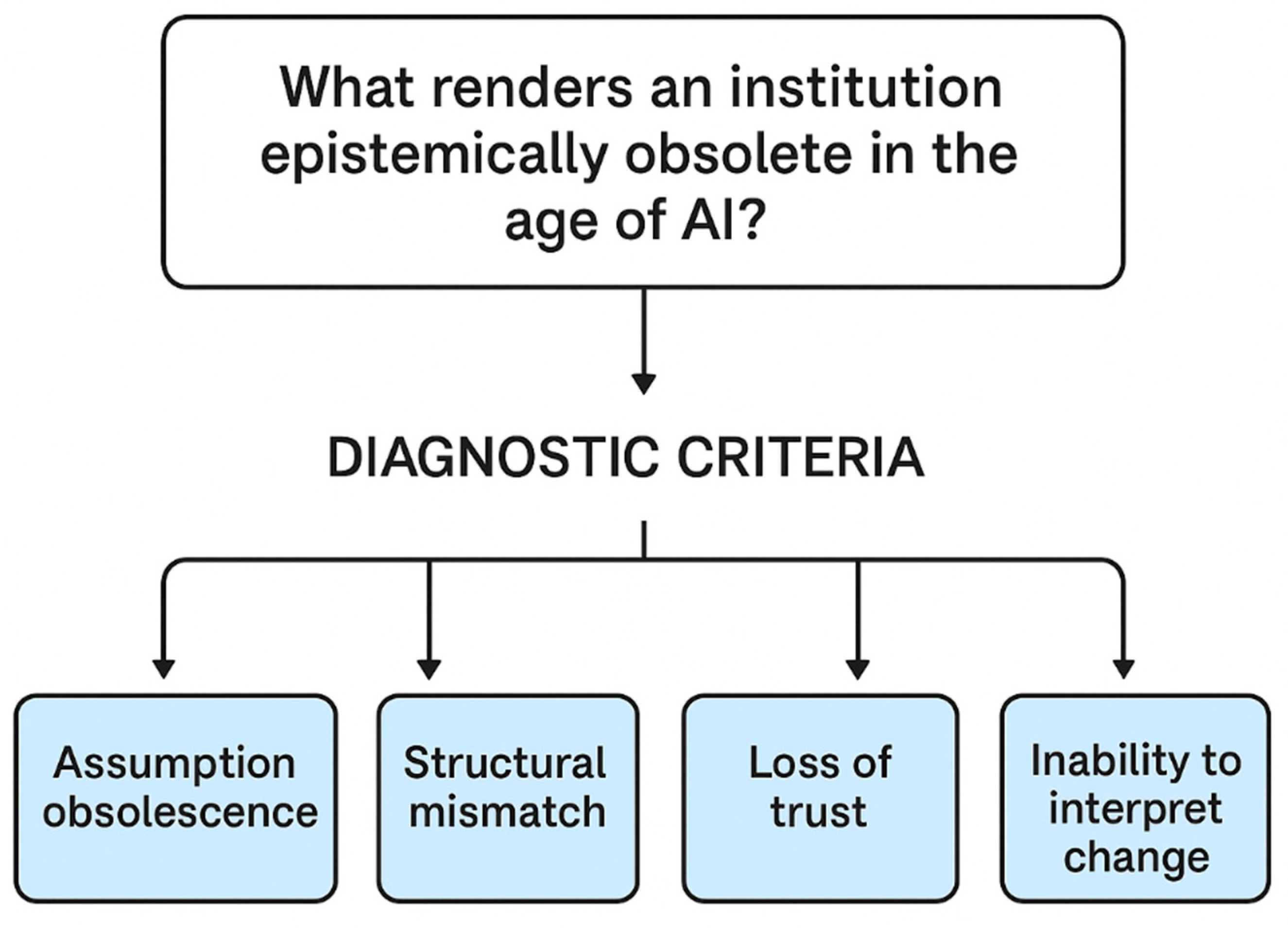

3.7. Criteria for Institutional Expiration

- Assumption Obsolescence: The fundamental beliefs justifying the institution are no longer valid, either empirically or normatively.

- Structural Mismatch: The institution’s structure is too slow and rigid to effectively engage with AI-driven environments.

- Loss of Trust: Users, citizens, or stakeholders start to doubt the institution’s relevance and authority.

- Inability to Interpret Change: The institution lacks the necessary tools to understand, predict, or assess changes resulting from AI.

3.8. Methodological Approach

1. Degree of AI Disruption (drawn from literature on algorithmic displacement and automation pressure).

2. Institutional Resilience (adapted from the institutional literature on adaptation, legitimacy, and epistemic flexibility).

- Case Study Design: Researchers can assign specific institutions to the AI Pressure Clock according to their level of AI integration and ability to adapt, such as through procedural reform, explainability audits, and staff retraining.

- Indicator Development: Factors such as algorithmic opacity, rapid decision-making, and procedural challenges can be turned into metrics for assessing institutional expiration risk.

- Longitudinal Studies: Monitoring changes in institutional coherence, perceptions of legitimacy, and structural reform over time will help evaluate the model’s predictive and diagnostic capabilities.
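The indicator-development step above can be illustrated with a minimal sketch. It assumes each factor is rated on a bounded scale and combined as a weighted average of normalized values; the indicator names, the 1 to 5 scale, and the equal weights are hypothetical choices for illustration and are not specified in the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Indicator:
    """One expiration-risk indicator (names and scales are hypothetical)."""
    name: str
    value: float         # observed rating on the indicator's own scale
    low: float           # scale minimum, read as lowest risk
    high: float          # scale maximum, read as highest risk
    weight: float = 1.0  # relative importance; equal weights by assumption


def normalize(ind: Indicator) -> float:
    """Rescale an indicator to [0, 1], clipping out-of-range ratings."""
    if ind.high <= ind.low:
        raise ValueError(f"{ind.name}: high must exceed low")
    clipped = min(max(ind.value, ind.low), ind.high)
    return (clipped - ind.low) / (ind.high - ind.low)


def expiration_risk(indicators: list[Indicator]) -> float:
    """Weighted mean of normalized indicators; 0 is low risk, 1 is high risk."""
    total_weight = sum(ind.weight for ind in indicators)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(normalize(ind) * ind.weight for ind in indicators) / total_weight


# Illustrative ratings for a fictional institution, not empirical data.
example = [
    Indicator("algorithmic_opacity", value=4, low=1, high=5),
    Indicator("decision_speed_gap", value=3, low=1, high=5),
    Indicator("procedural_challenges", value=2, low=1, high=5),
]
risk = expiration_risk(example)
print(f"expiration risk: {risk:.2f}")
```

A weighted mean is only one possible aggregation; a validated instrument might weight or combine the indicators differently.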

4. The AI Pressure Clock Framework

- AI Disruption Level (vertical axis): The extent to which artificial intelligence technologies confront, substitute, or reorganize the fundamental functions of an institution.

- Institutional Resilience (horizontal axis): The institution’s capacity to adapt, respond, reform, and realign in response to the epistemic changes introduced by artificial intelligence.

- Quadrant I: AI-Disrupted and Fragile

  - Legal systems depend on slow, precedent-based decision-making.

  - Electoral oversight is vulnerable to algorithmic manipulation.

  - Traditional HR departments struggle with algorithmic hiring processes.

- Quadrant II: AI-Disrupted but Adaptive

  - Healthcare systems are integrating diagnostic AI.

  - Logistics sectors are optimizing with machine learning.

  - Financial firms are using AI for compliance and risk management.

- Quadrant III: Stable but Vulnerable

  - Higher education systems based on rigid curricula.

  - Civil service frameworks using traditional methods.

  - Faith-based governance organizations with established hierarchies.

- Quadrant IV: Low-Threat Zones

  - Creative sectors (e.g., live performing arts).

  - Niche artisan careers.

  - Community-driven governance frameworks with integrated redundancy.

- Strategic Utility

  - Diagnose where the risk of expiration is highest.

  - Help decision-makers prioritize transformation efforts.

  - Guide researchers in identifying case studies of fragile versus adaptive institutions.

  - Develop an early-warning system to identify epistemic misalignment between human systems and AI environments.
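The two-axis placement described above can be sketched as a simple classifier. This is a minimal illustration, assuming both axes are scored on a 0 to 1 scale and split at a midpoint; the scale, the 0.5 cut-off, and the reading of Quadrant IV as low disruption with high resilience are assumptions made here, not definitions taken from the framework.

```python
from enum import Enum


class Quadrant(Enum):
    I = "AI-Disrupted and Fragile"
    II = "AI-Disrupted but Adaptive"
    III = "Stable but Vulnerable"
    IV = "Low-Threat Zone"


def classify(disruption: float, resilience: float, threshold: float = 0.5) -> Quadrant:
    """Place an institution on the AI Pressure Clock.

    disruption: AI Disruption Level (vertical axis), assumed in [0, 1].
    resilience: Institutional Resilience (horizontal axis), assumed in [0, 1].
    threshold:  hypothetical cut-off separating low from high on both axes.
    """
    for label, score in (("disruption", disruption), ("resilience", resilience)):
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"{label} must be between 0 and 1, got {score}")
    high_disruption = disruption >= threshold
    high_resilience = resilience >= threshold
    if high_disruption:
        return Quadrant.II if high_resilience else Quadrant.I
    return Quadrant.IV if high_resilience else Quadrant.III


# Illustrative scores only; they are not measurements of real institutions.
for name, d, r in [("court system", 0.9, 0.2), ("hospital network", 0.8, 0.7)]:
    print(name, "->", classify(d, r).value)
```

A hard cut-off makes borderline institutions flip quadrants on small score changes, so any applied version would likely need a margin or a confidence band around the threshold.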

4.1. Interpretation and Use over Time

4.2. Risks of Misuse and Oversimplification

4.3. Linking the Assumption Decay Index to the AI Pressure Clock

5. Sectoral Applications of Expiration Theory

5.1. Legal Systems (Quadrant I: AI-Disrupted and Fragile)

5.2. Financial Systems (Quadrant I/II: Diverging)

5.3. Healthcare (Quadrant II: AI-Disrupted but Adaptive)

5.4. Higher Education (Quadrant III: Stable but Vulnerable)

5.5. Religious Institutions (Quadrant III/IV: Stable but Vulnerable or Low Threat)

5.6. Humanitarian Systems (Quadrant II: AI-Disrupted but Adaptive)

6. Theoretical and Research Implications

6.1. Theoretical Contributions

1. Epistemic Misalignment as Core Mechanism: Expiration Theory views institutional decline due to AI not as a performance failure or loss of trust, but as assumption decay, which occurs when an institution’s core beliefs no longer match algorithmic logic.

2. AI as a Meta-Disruptor of Institutional Logic: Expiration Theory highlights that AI can redefine valid knowledge, authority, and procedural legitimacy, demanding cognitive adjustments that extend beyond simple innovation.

3. Complement to Procedural Governance Models: Recent AI oversight frameworks, such as the OECD (2024a) AI Principles and the EU AI Act, emphasize the need for transparency and human involvement. Expiration Theory argues that these measures are crucial for ensuring human accountability and conducting epistemic audits, which help prevent assumption decay and maintain institutional coherence.

6.2. Research Implications

- Case Studies: Conduct in-depth studies on institutions facing AI-related epistemic challenges, such as courts using predictive sentencing tools. Use interviews and document analysis to detect early signs of assumption decay.

- Delphi Panels: Conduct structured expert elicitation with lawyers, data scientists, and public administrators to refine diagnostic criteria and the Assumption Decay Index (ADI).

- Discourse and Ethnography: Examine internal communications and rituals to understand how institutional narratives evolve or struggle to adapt in response to algorithmic decision-making.

- Assumption Decay Index (ADI) Surveys: Develop multi-item scales (such as interpretability, trust, and procedural alignment) to measure assumption decay in organizations.

- Longitudinal Panel Studies: Track institutions’ ADI and performance/legitimacy metrics over time to test predictive validity of the AI Pressure Clock.

- Comparative Mapping: Use cluster analysis or latent class modeling to position institutions on the clock according to their composite ADI and resilience scores.

- Role-Play Simulations: Design decision-making exercises where participants manage AI recommendations with different levels of transparency and override conditions, focusing on any interpretive challenges that arise.

- Sandbox Field Experiments: Collaborate with agencies to test various oversight models (e.g., with and without an Ultimate Responsibility Owner (URO) present) and track changes in assumption decay indicators.

- Agent-Based Modeling: Simulate institutional actors with different cognitive abilities and AI exposure levels to analyze how changes in disruption pressure or resilience resources impact systemic expiration.
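As a rough illustration of the agent-based modeling idea in the list above, the toy simulation below lets each institutional actor lose "assumption alignment" under disruption pressure and regain some of it in proportion to resilience. Every rule and constant here (the update equation, the 0.5 expiration cut-off, the step sizes) is a hypothetical choice for demonstration and does not come from the article.

```python
import random


def simulate(pressure: float, resilience: float,
             n_agents: int = 200, steps: int = 50, seed: int = 0) -> float:
    """Return the share of agents whose alignment ends below 0.5.

    pressure:   disruption pressure in [0, 1] (hypothetical scale).
    resilience: institution-wide resilience resources in [0, 1].
    """
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    # Each agent has its own adaptive capacity and starts fully aligned.
    capacity = [rng.uniform(0.0, 1.0) for _ in range(n_agents)]
    alignment = [1.0] * n_agents
    for _ in range(steps):
        for i in range(n_agents):
            decay = pressure * rng.uniform(0.0, 0.1)  # erosion from AI exposure
            repair = resilience * capacity[i] * 0.05  # recovery through adaptation
            alignment[i] = min(1.0, max(0.0, alignment[i] - decay + repair))
    expired = sum(1 for a in alignment if a < 0.5)
    return expired / n_agents


# Compare a high-pressure, low-resilience setting with the reverse.
fragile = simulate(pressure=1.0, resilience=0.0)
adaptive = simulate(pressure=0.2, resilience=1.0)
print(f"fragile: {fragile:.2f}, adaptive: {adaptive:.2f}")
```

Sweeping `pressure` and `resilience` over a grid would show where, under these assumed rules, the expired share starts to climb.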

6.3. Expiration Velocity

- Acceleration Factors

  - Rapid deployment of opaque AI systems without human oversight.

  - High-stakes decision environments, such as emergency response, where misinterpretations carry amplified costs.

  - Rigid organizational cultures that lack feedback and learning mechanisms.

- Deceleration Factors

  - Strong interpretive frameworks, such as algorithmic impact assessments and URO oversight.

  - Gradual AI integration with repeated sandbox testing.

  - Rotational oversight panels for distributed accountability.

- Guiding Questions

  - Thresholds: What percentage drop in ADI metrics (e.g., a 25% decrease in explainability scores) causes a quadrant shift?

  - Tipping Points: Do specific events, like implementing a new AI module, significantly speed up assumption decay?

  - Intervention Lags: How long after implementing oversight reforms (URO, sandbox) do we see measurable slowdowns in ADI trends?

  - Contextual Moderators: What institutional factors (sector, size, regulatory environment) most influence expiration velocity?
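The threshold question above can be made concrete with a short sketch. It assumes expiration velocity is approximated as the least-squares slope of one ADI component score over time, and it reuses the 25% drop from the example as a hypothetical trigger; the quarterly series is invented for illustration.

```python
def percent_drop(baseline: float, current: float) -> float:
    """Percentage decrease of a score relative to its baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline * 100.0


def velocity(times: list[float], scores: list[float]) -> float:
    """Least-squares slope of score against time (score units per period)."""
    n = len(times)
    if n != len(scores) or n < 2:
        raise ValueError("need two or more paired observations")
    mean_t = sum(times) / n
    mean_s = sum(scores) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("time points must not all be identical")
    sxy = sum((t - mean_t) * (s - mean_s) for t, s in zip(times, scores))
    return sxy / sxx


# Invented quarterly explainability scores for one institution.
quarters = [0, 1, 2, 3, 4]
explainability = [0.80, 0.76, 0.70, 0.66, 0.60]

drop = percent_drop(explainability[0], explainability[-1])
slope = velocity(quarters, explainability)
print(f"drop since baseline: {drop:.1f}%")
print(f"velocity: {slope:.3f} per quarter")
```

A negative slope indicates a declining score; comparing slopes before and after an oversight reform is one simple way to look for the intervention lags mentioned in the guiding questions.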

6.4. Bridging Institutional Theory and AI Ethics

- AI ethics should include institutional epistemology, focusing on how organizations acquire knowledge, make decisions, and justify actions in AI-driven settings.

- Shift from model-level interventions to system-level reforms by embedding AI in new governance structures.

6.5. Contributions to Leadership Theory

- It calls for post-human leadership models that integrate collaborative intelligence and algorithmic co-governance.

- It opens an inquiry into AI-augmented moral authority, distributed responsibility, and machine-mediated influence in decision-making.

6.6. Interdisciplinary Research Agenda

- Different sectors face varying speeds of epistemic stress from AI.

- Institutional safeguards can help prevent premature expiration.

- It is important to measure assumption decay in both normative and procedural systems.

- Hybrid institutions are emerging to promote human-AI collaboration.

- Organizational studies.

- AI ethics and governance.

- Public administration and policy.

- Law and jurisprudence.

- Education, finance, and health system studies.

- STS (Science, Technology, and Society).

7. Policy and Practice Implications

7.1. Early-Warning Systems for Institutional Obsolescence

7.2. Prioritized Institutional Reform

- Quadrant I (AI-Disrupted and Fragile): Institutions such as justice systems and electoral bodies, which are significantly impacted by AI and lack resilience, require immediate foundational reform.

- Quadrant II (AI-Disrupted but Adaptive): These institutions should enhance their adaptive capacity with ethical oversight, improved governance, and cross-disciplinary collaboration.

- Quadrant III (Stable but Vulnerable): While stable, these institutions should modernize proactively to prepare for future disruptions.

- Quadrant IV (Low-Threat Zones): Institutions in this quadrant require minimal updates.

7.3. Embedding AI Accountability in Governance Design

7.4. Workforce and Leadership Retraining

7.5. Institutional Innovation Infrastructure

7.6. Summary: From Awareness to Action

8. Limitations

- Conceptual Focus: This work is theoretical, synthesizing the existing literature to introduce new concepts, such as assumption decay and the AI Pressure Clock. However, it lacks empirical validation. Future research should operationalize and test these ideas in real-world scenarios.

- Contextual Variability: Institutional responses to AI vary based on cultural, political, and economic factors. Governance maturity, regulatory frameworks, and available resources influence how organizations understand and implement the AI Pressure Clock. Our framework is adaptable, but it requires adjustments to fit local conditions.

- Risk of Oversimplification: Simplifying complex institutional dynamics into four quadrants or criteria overlooks important nuances. This model should be used as a helpful guide, rather than a strict classification.

- Future Refinements

- Empirically validate the Assumption Decay Index using case studies and survey research.

- Investigate how the AI Pressure Clock can be adapted to specific sectors and regions.

- Incorporate stakeholder perspectives to fine-tune interpretive thresholds and enhance practical guidance.

- Run intervention trials, such as URO appointments or institutional sandbox pilots, to assess practical effectiveness and refine governance prototypes.

We highlight these limitations to strengthen the framework’s academic rigor and inform future empirical and contextual improvements.

9. Conclusions: Rethinking Institutional Viability in the Age of AI

- Researchers: Expiration Theory focuses on institutional change by highlighting the importance of cognitive alignment and epistemic validity, in addition to structural dynamics.

- Policymakers: The framework emphasizes the need for human accountability and safeguards in AI oversight, utilizing roles such as the Ultimate Responsibility Owner and sandbox environments, rather than relying solely on performance metrics.

- Institutional leaders and designers: To maintain relevance and consistency in an AI-driven world, institutions should focus on early detection of assumption decay, conduct specific resilience audits, and implement targeted interventions based on the severity of the situation.

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Acemoğlu, D., Egorov, E., & Sonin, K. (2021). Institutional change and institutional persistence. Elsevier EBooks, 365–389.

- Avolio, B. J., Walumbwa, F. O., & Weber, T. J. (2009). Leadership: Current theories, research, and future directions. Annual Review of Psychology, 60(1), 421–449.

- Baumgartner, F. R., & Jones, B. D. (2010). Agendas and instability in American politics (2nd ed.). University of Chicago Press.

- Binns, R., Van Kleek, M., Veale, M., Lyngs, U., Zhao, J., & Shadbolt, N. (2018, April 21–26). It’s reducing a human being to a percentage. 2018 CHI Conference on Human Factors in Computing Systems, CHI’18 (pp. 1–14), Montreal QC, Canada.

- Brynjolfsson, E., & McAfee, A. (2014). The second machine age: Work, progress, and prosperity in a time of brilliant technologies. W. W. Norton & Company.

- Centre for Eye Research Australia. (2023). AI in healthcare series workshop: 11-applications of AI: Experiences from Aravind eye care system. YouTube. Available online: https://www.youtube.com/watch?v=wrf3bg5hwEg (accessed on 30 June 2025).

- Chemma, N. (2021). Disruptive innovation in a dynamic environment: A winning strategy? An illustration through the analysis of the yoghurt industry in Algeria. Journal of Innovation and Entrepreneurship, 10(1), 34.

- Choi, T., & Park, S. (2021). Theory building via agent-based modeling in public administration research: Vindications and limitations. International Journal of Public Sector Management, 34(6), 614–629.

- Christensen, C. M. (1997). The innovator’s dilemma: When new technologies cause great firms to fail. Harvard Business School Press.

- Clapp, M. (2020). Assessing the efficacy of an institutional effectiveness unit. Assessment Update, 32(3), 6–13.

- Coppi, G., Moreno Jimenez, R., & Kyriazi, S. (2021). Explicability of humanitarian AI: A matter of principles. Journal of International Humanitarian Action, 6(1).

- Coşkun, R., & Arslan, S. (2024). The role of organizational language in gaining legitimacy from the perspective of new institutional theory. Journal of Management & Organization, 30(6).

- Danaher, J. (2019). Automation and utopia: Human flourishing in a world without work (pp. 87–131). Harvard University Press.

- de Vaujany, F.-X., Vaast, E., Clegg, S. R., & Aroles, J. (2020). Organizational memorialization: Spatial history and legitimation as chiasms. Qualitative Research in Organizations and Management: An International Journal, 16(1), 76–97.

- DiMaggio, P. J., & Powell, W. W. (1983). The iron cage revisited: Institutional isomorphism and collective rationality in organizational fields. American Sociological Review, 48(2), 147–160.

- Doshi-Velez, F., & Kim, B. (2017). Towards a rigorous science of interpretable machine learning. arXiv, arXiv:1702.08608.

- Esteva, A., Kuprel, B., Novoa, R. A., Ko, J., Swetter, S. M., Blau, H. M., & Thrun, S. (2017). Dermatologist-level classification of skin cancer with deep neural networks. Nature, 542(7639), 115–118.

- EU. (2024). The Act. The artificial intelligence act. Available online: https://artificialintelligenceact.eu/the-act/ (accessed on 30 June 2025).

- Eubanks, V. (2018). Automating inequality: How high-tech tools profile, police, and punish the poor. St. Martin’s Press.

- Feng, L., Qin, G., Wang, J., & Zhang, K. (2022). Disruptive innovation path of start-ups in the digital context: The perspective of dynamic capabilities. Sustainability, 14(19), 12839.

- Floridi, L., & Cowls, J. (2019). A unified framework of five principles for AI in society. Harvard Data Science Review, 1(1).

- Frimpong, V. (2025a). Artificial Intelligence on trial: Who is responsible when systems fail? Toward a framework for the ultimate AI accountability owner. Preprints.

- Frimpong, V. (2025b). The strategic risk of delayed and non-adoption of artificial intelligence: Evidence from organizational decline in extinction zones. International Journal of Business and Management, 20(4), 26.

- Gidley, D. (2020). Creating institutional disruption: An alternative method to study institutions. Journal of Organizational Change Management. ahead-of-print.

- Grint, K. (2008). Leadership, management and command: Rethinking D-day. Palgrave/Macmillan.

- Guo, J., Shi, M., Peng, Q., & Zhang, J. (2023). Ex-ante project management for disruptive product innovation: A review. Journal of Project Management, 8(1), 57–66.

- Hacker, J. S. (2004). Privatizing risk without privatizing the welfare state: The hidden politics of social policy retrenchment in the United States. American Political Science Review, 98(2), 243–260. Available online: https://EconPapers.repec.org/RePEc:cup:apsrev:v:98:y:2004:i:02:p:243-260_00 (accessed on 2 July 2025).

- He, K., & Feng, H. (2019). Leadership transition and global governance: Role conception, institutional balancing, and the AIIB. The Chinese Journal of International Politics, 12(2), 153–178.

- ISACA. (2024). How to build digital trust in AI with a robust AI governance framework. Empowering careers. Advancing trust in technology. Available online: https://www.isaca.org/resources/isaca-journal/issues/2024/volume-3/how-to-build-digital-trust-in-ai-with-a-robust-ai-governance-framework (accessed on 2 July 2025).

- Jahan, I., Bologna Pavlik, J., & Williams, R. B. (2020). Is the devil in the shadow? The effect of institutional quality on income. Review of Development Economics, 24(4), 1463–1483.

- Khanna, T., & Palepu, K. G. (2010). Winning in emerging markets: A road map for strategy and execution (pp. 13–26). Harvard Business Press.

- Kleinberg, J., Lakkaraju, H., Leskovec, J., Ludwig, J., & Mullainathan, S. (2017). Human decisions and machine predictions. The Quarterly Journal of Economics, 133(1), 237–293.

- Krajnović, A. (2020). Institutional distance. Journal of Corporate Governance, Insurance and Risk Management, 7(1), 15–24.

- Mahoney, J. (2000). Path dependence in historical sociology. Theory and Society, 29(4), 507–548.

- Ménard, C. (2022). Disentangling institutions: A challenge. Agricultural and Food Economics, 10(1), 16.

- Mittelstadt, B. D., Allo, P., Taddeo, M., Wachter, S., & Floridi, L. (2016). The ethics of algorithms: Mapping the debate. Big Data & Society, 3(2), 1–21.

- OECD. (2024a). AI principles. OECD. Available online: https://www.oecd.org/en/topics/sub-issues/ai-principles.html (accessed on 30 June 2025).

- OECD. (2024b). Model AI governance framework for generative AI. Available online: https://oecd.ai/en/catalogue/tools/model-ai-governance-framework-for-generative-ai (accessed on 2 July 2025).

- Oesterling, N., Ambrose, G., & Kim, J. (2024). Understanding the emergence of computational institutional science: A review of computational modeling of institutions and institutional dynamics. International Journal of the Commons, 18(1).

- Oliver, C. (1991). Strategic responses to institutional processes. Academy of Management Review, 16(1), 145–179.

- Özer, M. Y. (2022). Informal sector and institutions. Theoretical and Practical Research in the Economic Fields, 13(2), 180.

- Pasquale, F. (2015). Black box society: The secret algorithms that control money and information. Harvard University Press.

- Petrenko, V. (2025). AI-based credit scoring: Revolutionizing financial assessments. Litslink. Available online: https://litslink.com/blog/ai-based-credit-scoring (accessed on 30 June 2025).

- Pieczewski, A. (2023). Poland’s institutional cycles. Remarks on the historical roots of the contemporary institutional matrix. UR Journal of Humanities and Social Sciences, 29(4), 5–21.

- Rahwan, I., Cebrian, M., Obradovich, N., Bongard, J., Bonnefon, J.-F., Breazeal, C., Crandall, J. W., Christakis, N. A., Couzin, I. D., Jackson, M. O., Jennings, N. R., Kamar, E., Kloumann, I. M., Larochelle, H., Lazer, D., McElreath, R., Mislove, A., Parkes, D. C., Pentland, A., … Roberts, M. E. (2019). Machine behaviour. Nature, 568(7753), 477–486.

- Roblek, V., Meško, M., Pušavec, F., & Likar, B. (2021). The role and meaning of the digital transformation as a disruptive innovation on small and medium manufacturing enterprises. Frontiers in Psychology, 12, 592528.

- Scott, W. R. (2014). Institutions and organizations: Ideas, interests, and identities. Sage Publications, Inc.

- Siddiki, S., Heikkila, T., Weible, C. M., Pacheco-Vega, R., Carter, D., Curley, C., Deslatte, A., & Bennett, A. (2019). Institutional analysis with the institutional grammar. Policy Studies Journal, 50(2), 315–339.

- Silver, D., Schrittwieser, J., Simonyan, K., Antonoglou, I., Huang, A., Guez, A., Hubert, T., Baker, L., Lai, M., Bolton, A., Chen, Y., Lillicrap, T., Hui, F., Sifre, L., van den Driessche, G., Graepel, T., & Hassabis, D. (2017). Mastering the game of Go without human knowledge. Nature, 550(7676), 354–359.

- Stoltz, D. S., Taylor, M. A., & Lizardo, O. (2019). Functionaries: Institutional theory without institutions. SocArXiv.

- Suchman, M. C. (1995). Managing legitimacy: Strategic and institutional approaches. Academy of Management Review, 20(3), 571–610.

- Sun, Y., & Zhou, Y. (2024). Specialized complementary assets and disruptive innovation: Digital capability and ecosystem embeddedness. Management Decision, 62(11), 3704–3730.

- Thelen, K. (2004). How institutions evolve: The political economy of skills in Germany, Britain, the United States, and Japan. Cambridge University Press.

- Ungar-Sargon, J. (2025). AI and spirituality: The disturbing implications. Journal of Medical-Clinical Research & Review, 9(3), 1–7. Available online: https://www.scivisionpub.com/pdfs/ai-and-spirituality-the-disturbing-implications-3742.pdf (accessed on 29 June 2025).

- Wen, H. (2024). Human-AI collaboration for enhanced safety. In Methods in chemical process safety (Vol. 8, pp. 51–80). Elsevier.

- Zuboff, S. (2019). The age of surveillance capitalism: The fight for a human future at the new frontier of power. Public Affairs.

| Feature | Disruption | Expiration |
|---|---|---|
| Mechanism | Competitive replacement | Epistemic breakdown |
| Agent | New market entrant | Exogenous force (e.g., AI) |
| Focus | Efficiency, price, accessibility | Assumptions, legitimacy, functional alignment |
| Scope | Product, service, organization | System, institution, model |
| Adaptation possible? | Yes, through innovation | Not always; may require structural rethinking |
| Business (Example) | Uber disrupting taxis | Algorithmic hiring expiring HR-led recruitment models |
| Public Sector (Example) | E-learning platforms disrupting in-person training | Predictive policing systems expiring court-based sentencing frameworks |

| Sector | Quadrant | Primary Risk | Reform Priorities |
|---|---|---|---|
| Legal Systems | I: AI-Disrupted & Fragile | Procedural opacity, loss of fairness | Explainability, AI-adapted legal reasoning |
| Banking & Finance | I/II: Disrupted & Diverging | Governance mismatch, exclusion risks | Transparent AI use, compliance redesign, inclusion tools |
| Healthcare | II: AI-Disrupted but Adaptive | Role confusion, over-reliance on opaque tools | Interdisciplinary retraining, ethical AI oversight |
| Higher Education | III: Stable but Vulnerable | Curricular rigidity, credential obsolescence | AI-integrated learning models, modular reform |
| Religious Institutions | III/IV: Vulnerable/Low Threat | Theological incoherence, AI-led spiritual authority | Human-led doctrinal boundaries, ethical AI curation |
| Humanitarian Systems | II: AI-Disrupted but Adaptive | Algorithmic opacity in life-critical decisions | Participatory ethics, explainable aid systems |

| Strategic Goal | Recommended Action |
|---|---|
| Detect Expiration Early | AI resilience audits, Pressure Clock quadrant mapping |
| Target Reform Strategically | Reform pathways by quadrant severity |
| Clarify Final Accountability | Introduce URO roles and human-in-the-loop mandates |
| Build Cognitive Readiness | AI-informed leadership retraining and staff development |
| Invest in Institutional Futures | Fund sandbox governance and adaptive regulatory models |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Frimpong, V. When Institutions Cannot Keep up with Artificial Intelligence: Expiration Theory and the Risk of Institutional Invalidation. Adm. Sci. 2025, 15, 263. https://doi.org/10.3390/admsci15070263
