Justice for the Crowd: Organizational Justice and Turnover in Crowd-Based Labor

Abstract

1. Introduction

2. Literature Review

2.1. Key Elements of Crowd-Based Labor

2.2. Review of Crowd-Based Labor Platforms

2.3. Platform Review Results

2.4. Benefits and Concerns of Crowd-Based Labor

2.5. Crowd-Based Labor Concerns and Their Relation to Human Resource Management (HRM)

2.6. Review of Organizational Justice

2.7. Review of Organizational Justice and Turnover in Crowdwork Literature

2.8. Literature Review Results

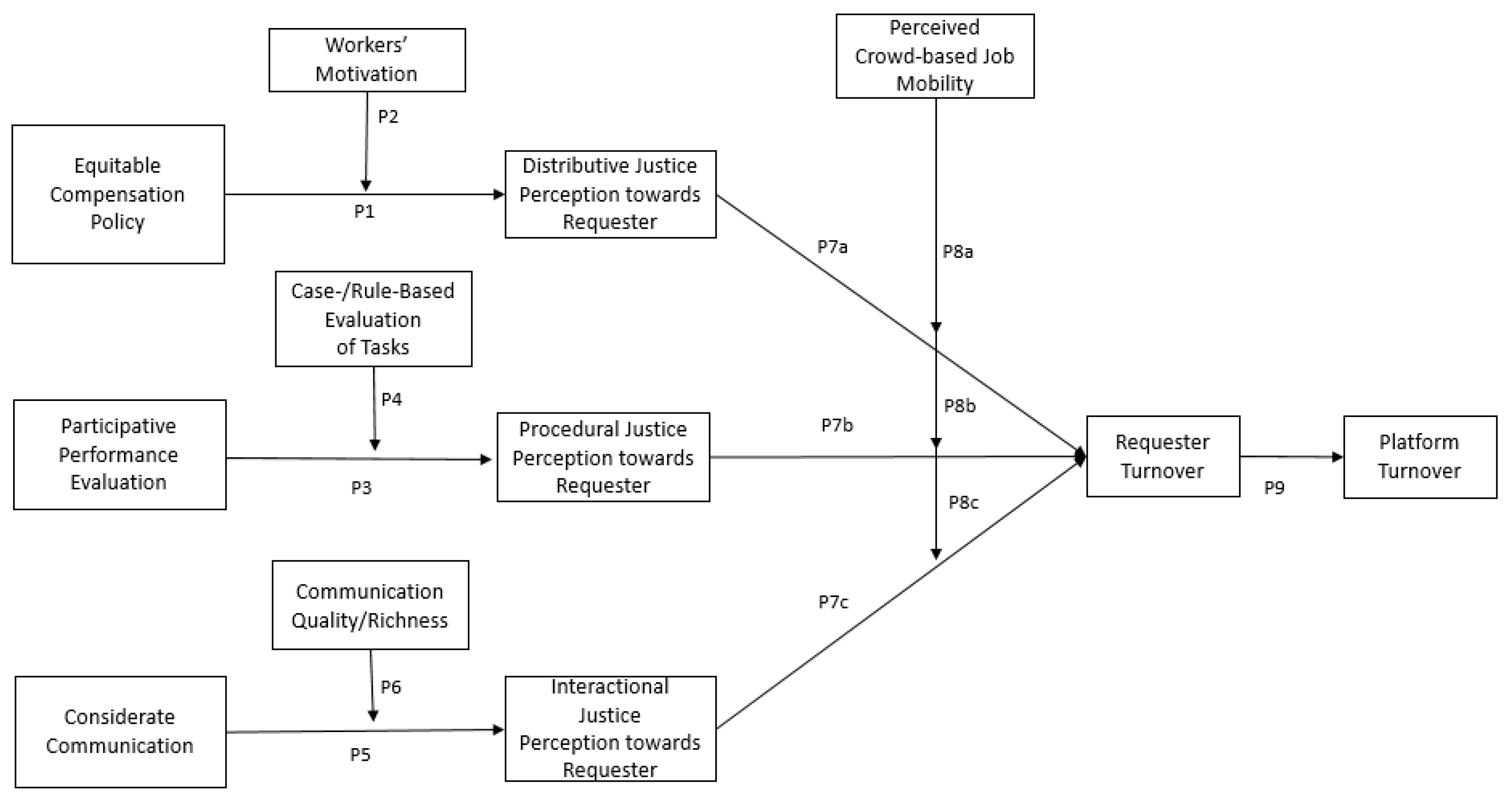

3. Conceptual Work—Antecedents of Crowd-Based Workers’ Organizational Justice Perception

3.1. Compensation Policy and Distributive Justice

3.2. Compensation Policy and Motivation

3.3. Performance Evaluation Methods and Procedural Justice

3.4. Case-Based vs. Rule-Based Performance Evaluation

3.5. Considerate Communication and Interactional Justice

3.6. Communication Quality

4. Conceptual Work—Outcomes of Organizational Justice Issues

4.1. Turnover

4.2. Job Mobility

4.3. Escalation of Crowd-Based Turnover

5. Discussion

5.1. General Discussion

5.2. Contributions

5.3. Theoretical Implications

5.4. Practical Implications

5.5. Future Research

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Acosta, Maribel, Amrapali Zaveri, Elena Simperl, Dimitris Kontokostas, Sören Auer, and Jens Lehmann. 2013. Crowdsourcing Linked Data Quality Assessment. In The Semantic Web. Edited by Harith Alani, Lalana Kagal, Achille Fokoue, Paul Groth, Chris Biemann, Josiane X. Parreira, Lora Aroyo, Natasha Noy, Chris Welty and Krzysztof Janowicz. Berlin/Heidelberg: Springer, pp. 260–76. [Google Scholar] [CrossRef]

- Adams, Stacy. 1963. Towards an understanding of inequity. The Journal of Abnormal and Social Psychology 67: 422–36. [Google Scholar] [CrossRef]

- Adams, Stacy, and Sara Freedman. 1976. Equity theory revisited: Comments and annotated bibliography. Advances in Experimental Social Psychology 9: 43–90. [Google Scholar] [CrossRef]

- Aguinis, Herman. 2009. Performance Management. Upper Saddle River: Pearson Prentice Hall. [Google Scholar]

- Aguinis, Herman, and Sola O. Lawal. 2013. Elancing: A review and research agenda for bridging the science–practice gap. Human Resource Management Review 23: 6–17. [Google Scholar] [CrossRef]

- Aguinis, Herman, Harry Joo, and Ryan K. Gottfredson. 2011. Why we hate performance management—And why we should love it. Business Horizons 54: 503–7. [Google Scholar] [CrossRef]

- Alam, Sultana L., and John Campbell. 2017. Temporal motivations of volunteers to participate in cultural Crowdsourcing work. Information Systems Research 28: 744–59. [Google Scholar] [CrossRef]

- Alberghini, Elena, Livio Cricelli, and Michele Grimaldi. 2013. KM versus enterprise 2.0: A framework to tame the clash. International Journal of Information Technology and Management 12: 320–36. [Google Scholar] [CrossRef]

- Allen, Natalie J., and John P. Meyer. 1996. Affective, continuance, and normative commitment to the organization: An examination of construct validity. Journal of Vocational Behavior 49: 252–76. [Google Scholar] [CrossRef]

- Askay, David. 2017. A conceptual framework for investigating organizational control and resistance in crowd-based platforms. Paper presented at the 50th Hawaii International Conference on System Sciences, Hilton Waikoloa Village, HI, USA, January 4–7. [Google Scholar]

- Baldwin, Carliss, and Eric Von Hippel. 2010. Modeling a paradigm shift: From producer innovation to user and open collaborative innovation. SSRN Electronic Journal. [Google Scholar] [CrossRef]

- Barnes, Sally-Anne, Anne Green, and Maria de Hoyos. 2015. Crowdsourcing and work: Individual factors and circumstances influencing employability. New Technology, Work and Employment 30: 16–31. [Google Scholar] [CrossRef]

- Barrett-Howard, Edith, and Tom R. Tyler. 1986. Procedural justice as a criterion in allocation decisions. Journal of Personality and Social Psychology 50: 296–304. [Google Scholar] [CrossRef]

- Behrend, Tara S., David J. Sharek, Adam W. Meade, and Eric N. Wiebe. 2011. The viability of crowdsourcing for survey research. Behavior Research Methods 43: 800–13. [Google Scholar] [CrossRef]

- Bies, Robert J. 2001. International (in)justice: The sacred and the profane. In Advances in Organization Justice. Edited by Jerald Greenberg and Russell Cropanzano. Stanford: Stanford University Press, pp. 89–118. [Google Scholar]

- Bies, Robert J., and Joseph S. Moag. 1986. Interactional communication criteria of fairness. Research in Organizational Behavior 9: 289–319. [Google Scholar]

- Blader, Steven L., and Tom R. Tyler. 2003. What constitutes fairness in work settings? A four-component model of procedural justice. Human Resource Management Review 13: 107–26. [Google Scholar] [CrossRef]

- Boons, Mark, Daan Stam, and Harry G. Barkema. 2015. Feelings of pride and respect as drivers of ongoing member activity on Crowdsourcing platforms. Journal of Management Studies 52: 717–41. [Google Scholar] [CrossRef]

- Boxall, Peter. 1998. Achieving competitive advantage through human resource strategy: Towards a theory of industry dynamics. Human Resource Management Review 8: 265–88. [Google Scholar] [CrossRef]

- Brabham, Daren C. 2008. Crowdsourcing as a model for problem solving. Convergence: The International Journal of Research into New Media Technologies 14: 75–90. [Google Scholar] [CrossRef]

- Brabham, Daren C. 2013. Crowdsourcing. Cambridge: MIT Press. [Google Scholar]

- Brashear, Thomas G., Chris Manolis, and Charles M. Brooks. 2005. The effects of control, trust, and justice on salesperson turnover. Journal of Business Research 58: 241–49. [Google Scholar] [CrossRef]

- Brawley, Alice M. 2017. The big, gig picture: We can’t assume the same constructs matter. Industrial and Organizational Psychology 10: 687–96. [Google Scholar] [CrossRef]

- Brawley, Alice M., and Cynthia L. Pury. 2016. Work experiences on MTurk: Job satisfaction, turnover, and information sharing. Computers in Human Behavior 54: 531–46. [Google Scholar] [CrossRef]

- Breaugh, James A., Leslie A. Greising, James W. Taggart, and Helen Chen. 2003. The relationship of recruiting sources and pre-hire outcomes: Examination of yield ratios and applicant quality. Journal of Applied Social Psychology 33: 2267–87. [Google Scholar] [CrossRef]

- Buettner, Ricardo. 2015. A systematic literature review of Crowdsourcing research from a human resource management perspective. Paper presented at the 2015 48th Hawaii International Conference on System Sciences, Kauai, HI, USA, January 5–8. [Google Scholar]

- Buhrmester, Michael, Tracy Kwang, and Samuel D. Gosling. 2011. Amazon’s mechanical turk. Perspectives on Psychological Science 6: 3–5. [Google Scholar] [CrossRef] [PubMed]

- Byrne, Zinta S., and Russell Cropanzano. 2001. The history of organizational justice: The founders speak. Justice in the Workplace: From Theory to Practice 2: 3–26. [Google Scholar]

- Camerer, Colin F., and Ernst Fehr. 2006. When does “Economic man” Dominate social behavior? Science 311: 47–52. [Google Scholar] [CrossRef]

- Campbell, John P., and Robert D. Pritchard. 1976. Motivation theory in industrial and organizational psychology. In Handbook of Industrial and Organizational Psychology. Edited by Marvin D. Dunnette. Chicago: Rand McNally College, pp. 63–130. [Google Scholar]

- Campbell, John P., and Brenton M. Wiernik. 2015. The modeling and assessment of work performance. Annual Review of Organizational Psychology and Organizational Behavior 2: 47–74. [Google Scholar] [CrossRef]

- Chaisiri, Sivadon. 2013. Utilizing human intelligence in a Crowdsourcing marketplace for big data processing. Paper presented at the 2013 International Conference on Parallel and Distributed Systems, Seoul, Korea, December 15–18. [Google Scholar]

- Chambers, Elizabeth G., Mark Foulon, Helen Handfield-Jones, Steven M. Hankin, and Edward G. Michaels. 1998. The War for Talent. The McKinsey Quarterly 1: 44–57. [Google Scholar]

- Chandler, Jesse, Gabriele Paolacci, and Pam Mueller. 2013. Risks and rewards of Crowdsourcing marketplaces. In Handbook of Human Computation. New York: Springer, pp. 377–92. [Google Scholar] [CrossRef]

- Chi, Robert, and Melody Y. Kiang. 1993. Reasoning by coordination: An integration of case-based and rule-based reasoning systems. Knowledge-Based Systems 6: 103–13. [Google Scholar] [CrossRef]

- Chiaburu, Dan S., and Sophia V. Marinova. 2006. Employee role enlargement. Leadership and Organization Development Journal 27: 168–82. [Google Scholar] [CrossRef]

- Colbert, Amy, Nick Yee, and Gerard George. 2016. The digital workforce and the workplace of the future. Academy of Management Journal 59: 731–39. [Google Scholar] [CrossRef]

- Colquitt, Jason A. 2001. On the dimensionality of organizational justice: A construct validation of a measure. Journal of Applied Psychology 86: 386–400. [Google Scholar] [CrossRef]

- Colquitt, Jason A., Donald E. Conlon, Michael J. Wesson, Christopher O. Porter, and Yee Ng. 2001. Justice at the millennium: A meta-analytic review of 25 years of organizational justice research. Journal of Applied Psychology 86: 425–45. [Google Scholar] [CrossRef]

- Colquitt, Jason A., and Jessica B. Rodell. 2015. Measuring justice and fairness. In The Oxford Handbook of Justice in the Workplace. Edited by Russell Cropanzano and Maureen L. Ambrose. Oxford: Oxford University Press, pp. 187–202. [Google Scholar] [CrossRef]

- Cropanzano, Russell, and Maureen L. Ambrose. 2001. Procedural and distributive justice are more similar than you think: A monistic perspective and a research agenda. In Advances in Organization Justice. Edited by Jerald Greenberg and Russell Cropanzano. Stanford: Stanford University Press, pp. 119–51. [Google Scholar]

- Cropanzano, Russell, Zinta S. Byrne, Ramona Bobocel, and Deborah E. Rupp. 2001a. Moral virtues, fairness heuristics, social entities, and other denizens of organizational justice. Journal of Vocational Behavior 58: 164–209. [Google Scholar] [CrossRef]

- Cropanzano, Russell, and Deborah E. Rupp. 2003. An overview of organizational justice: Implications for work motivation. In Motivation and Work Behavior. Edited by Lyman W. Porter, Gregory Bigley and Richard M. Steers. New York: McGraw-Hill Irwin, pp. 82–95. [Google Scholar]

- Cropanzano, Russell, Deborah E. Rupp, Carolyn J. Mohler, and Marshall Schminke. 2001b. Three roads to organizational justice. In Research in Personnel and Human Resources Management. Edited by Ronald M. Buckley, Jonathon R. B. Halbesleben and Anthony R. Wheeler. Bingley: Emerald Group Publishing Limited, pp. 1–113. [Google Scholar]

- Cropanzano, Russell, Deborah E. Rupp, and Zinta S. Byrne. 2003. The relationship of emotional exhaustion to work attitudes, job performance, and organizational citizenship behaviors. Journal of Applied Psychology 88: 160–69. [Google Scholar] [CrossRef] [PubMed]

- Cugueró-Escofet, Natàlia, and Josep M. Rosanas. 2013. The just design and use of management control systems as requirements for goal congruence. Management Accounting Research 24: 23–40. [Google Scholar] [CrossRef]

- Cullina, Eoin, Kieran Conboy, and Lorraine Morgan. 2015. Measuring the crowd: A preliminary taxonomy of crowdsourcing metrics. Paper presented at the 11th International Symposium on Open Collaboration, San Francisco, CA, USA, August 19–21. [Google Scholar]

- Cummings, Larry L., and Donald P. Schwab. 1973. Performance in Organizations: Determinants and Appraisal. Culver City: Good Year Books. [Google Scholar]

- Daft, Richard L., and Robert H. Lengel. 1986. Organizational information requirements, media richness and structural design. Management Science 32: 554–71. [Google Scholar] [CrossRef]

- Dahlman, Carl J. 1979. The problem of externality. The Journal of Law and Economics 22: 141–62. [Google Scholar] [CrossRef]

- Deci, Edward L. 1975. Intrinsic Motivation. New York: Plenum Press. [Google Scholar]

- Deci, Edward L., and Richard M. Ryan. 1985. The general causality orientations scale: Self-determination in personality. Journal of Research in Personality 19: 109–34. [Google Scholar]

- Deci, Edward L., and Richard M. Ryan. 2000. The “What” and “Why” of goal pursuits: Human needs and the self-determination of behavior. Psychological Inquiry 11: 227–68. [Google Scholar] [CrossRef]

- Deng, Xuefei, Kshiti D. Joshi, and Robert D. Galliers. 2016. The duality of empowerment and marginalization in Microtask Crowdsourcing: Giving voice to the less powerful through value sensitive design. MIS Quarterly 40: 279–302. [Google Scholar] [CrossRef]

- Dissanayake, Indika, Jie Zhang, and Bin Gu. 2015. Task division for team success in Crowdsourcing contests: Resource allocation and alignment effects. Journal of Management Information Systems 32: 8–39. [Google Scholar] [CrossRef]

- Duggan, James, Ultan Sherman, Ronan Carbery, and Anthony McDonnell. 2020. Algorithmic management and app-work in the gig economy: A research agenda for employment relations and HRM. Human Resource Management Journal 30: 114–32. [Google Scholar] [CrossRef]

- Dutta, Soumitra, and Piero P. Bonissone. 1993. Integrating case- and rule-based reasoning. International Journal of Approximate Reasoning 8: 163–203. [Google Scholar] [CrossRef]

- Dworkin, Ronald. 1986. Law’s Empire. Cambridge: Harvard University Press. [Google Scholar]

- Eickhoff, Carsten, Christopher G. Harris, Arjen P. de Vries, and Padmini Srinivasan. 2012. Quality through flow and immersion: Gamifying crowdsourced relevance assessments. Paper presented at the 35th International ACM SIGIR Conference on Research and Development in Information Retrieval, Portland, OR, USA, August 12–16. [Google Scholar]

- Estellés-Arolas, Enrique, and Fernando González-Ladrón-de-Guevara. 2012. Towards an integrated crowdsourcing definition. Journal of Information Science 38: 189–200. [Google Scholar] [CrossRef]

- Faradani, Siamak, Björn Hartmann, and Panagiotis G. Ipeirotis. 2011. What’s the right price? Pricing tasks for finishing on time. Human Computation 11: 26–31. [Google Scholar]

- Faullant, Rita, Johann Füller, and Katja Hutter. 2017. Fair play: Perceived fairness in crowdsourcing competitions and the customer relationship-related consequences. Management Decision 55: 1924–41. [Google Scholar]

- Fernández-Macías, Enrique. 2017. Automation, Digitisation and Platforms: Implications for Work and Employment. Loughlinstown: Eurofound. Available online: https://www.eurofound.europa.eu/publications/report/2018/automation-digitisation-and-platforms-implications-for-work-and-employment (accessed on 11 November 2020).

- Fieseler, Christian, Eliane Bucher, and Christian P. Hoffmann. 2019. Unfairness by design? The perceived fairness of digital labor on Crowdworking platforms. Journal of Business Ethics 156: 987–1005. [Google Scholar] [CrossRef]

- Folger, Robert G., and Russell Cropanzano. 1998. Organizational Justice and Human Resource Management. Thousand Oaks: SAGE. [Google Scholar]

- Fortin, Marion, Irina Cojuharenco, David Patient, and Hayley German. 2014. It is time for justice: How time changes what we know about justice judgments and justice effects. Journal of Organizational Behavior 37: S30–S56. [Google Scholar] [CrossRef]

- Franke, Nikolaus, Peter Keinz, and Katharina Klausberger. 2013. Does this sound like a fair deal: Antecedents and consequences of fairness expectations in the individual’s decision to participate in firm innovation. Organization Science 24: 1495–516. [Google Scholar] [CrossRef]

- Gassenheimer, Jule B., Judy A. Siguaw, and Gary L. Hunter. 2013. Exploring motivations and the capacity for business crowdsourcing. AMS Review 3: 205–16. [Google Scholar] [CrossRef]

- Gilliland, Stephen W. 1993. The perceived fairness of selection systems: An organizational justice perspective. Academy of Management Review 18: 694–734. [Google Scholar] [CrossRef]

- Gleibs, Ilka H. 2016. Are all “research fields” equal? Rethinking practice for the use of data from crowdsourcing market places. Behavior Research Methods 49: 1333–42. [Google Scholar] [CrossRef]

- Golding, Andrew R., and Paul S. Rosenbloom. 1996. Improving accuracy by combining rule-based and case-based reasoning. Artificial Intelligence 87: 215–54. [Google Scholar] [CrossRef]

- Goldman, Alan H. 2015. Justice and Reverse Discrimination. Princeton: Princeton University Press. [Google Scholar]

- Goldman, Barry, and Russell Cropanzano. 2015. “Justice” and “fairness” are not the same thing. Journal of Organizational Behavior 36: 313–18. [Google Scholar] [CrossRef]

- Gond, Jean-Pascal, Assâad El Akremi, Valérie Swaen, and Nishat Babu. 2017. The psychological microfoundations of corporate social responsibility: A person-centric systematic review. Journal of Organizational Behavior 38: 225–46. [Google Scholar] [CrossRef]

- Greenberg, Jerald. 1986. Determinants of perceived fairness of performance evaluations. Journal of Applied Psychology 71: 340–42. [Google Scholar] [CrossRef]

- Greenberg, Jerald. 1987. A taxonomy of organizational justice theories. Academy of Management Review 12: 9–22. [Google Scholar] [CrossRef]

- Greenberg, Jerald. 1993. The Social Side of Fairness: Interpersonal and Informational Classes of Organizational Justice. In Justice in the Workplace: Approaching Fairness in Human Resource Management. Edited by Russell Cropanzano. Mahwah: Lawrence Erlbaum Associates, pp. 79–103. [Google Scholar]

- Greenberg, Jerald, and Robert Folger. 1983. Procedural justice, participation, and the fair process effect in groups and organizations. In Basic Group Processes. New York: Springer, pp. 235–56. [Google Scholar] [CrossRef]

- Gregg, Dawn G. 2010. Designing for collective intelligence. Communications of the ACM 53: 134–38. [Google Scholar] [CrossRef]

- Hambley, Laura A., Thomas A. O’Neill, and Theresa J. Kline. 2007. Virtual team leadership: The effects of leadership style and communication medium on team interaction styles and outcomes. Organizational Behavior and Human Decision Processes 103: 1–20. [Google Scholar] [CrossRef]

- Harms, Peter D., and Justin A. DeSimone. 2015. Caution! MTurk workers ahead-Fines doubled. Industrial and Organizational Psychology 8: 183–90. [Google Scholar] [CrossRef]

- Heponiemi, Tarja, Marko Elovainio, Laura Pekkarinen, Timo Sinervo, and Anne Kouvonen. 2008. The effects of job demands and low job control on work–family conflict: The role of fairness in decision making and management. Journal of Community Psychology 36: 387–98. [Google Scholar] [CrossRef]

- Herr, Paul M., Frank R. Kardes, and John Kim. 1991. Effects of word-of-Mouth and product-attribute information on persuasion: An accessibility-diagnosticity perspective. Journal of Consumer Research 17: 454–62. [Google Scholar] [CrossRef]

- Hetmank, Lars. 2013. Components and Functions of Crowdsourcing Systems-A Systematic Literature Review. Wirtschaftsinformatik 4: 55–69. [Google Scholar] [CrossRef]

- Hossain, Mokter. 2012. Users’ motivation to participate in online crowdsourcing platforms. Paper presented at the 2012 International Conference on Innovation Management and Technology Research, Malacca, Malaysia, May 21–22. [Google Scholar]

- Howcroft, Debra, and Birgitta Bergvall-Kåreborn. 2019. A typology of Crowdwork platforms. Work, Employment and Society 33: 21–38. [Google Scholar] [CrossRef]

- Howe, Jeff. 2006. The Rise of Crowdsourcing. WIRED. June 1. Available online: https://www.wired.com/2006/06/crowds/ (accessed on 11 November 2020).

- Howe, Jeff. 2009. Crowdsourcing: Why the Power of the Crowd Is Driving the Future of Business. Fort Collins: Crown Pub. [Google Scholar]

- Internal Revenue Service. 2020. Topic No. 762 Independent Contractor vs. Employee. September 22. Available online: https://www.irs.gov/taxtopics/tc762 (accessed on 11 November 2020).

- Irani, Lilly. 2013. The cultural work of microwork. New Media and Society 17: 720–39. [Google Scholar] [CrossRef]

- Jäger, Georg, Laura S. Zilian, Christian Hofer, and Manfred Füllsack. 2019. Crowdworking: Working with or against the crowd? Journal of Economic Interaction and Coordination 14: 761–88. [Google Scholar] [CrossRef]

- Jebb, Andrew T., Scott Parrigon, and Sang Eun Woo. 2017. Exploratory data analysis as a foundation of inductive research. Human Resource Management Review 27: 265–76. [Google Scholar] [CrossRef]

- Jones, David A., and Daniel P. Skarlicki. 2003. The relationship between perceptions of fairness and voluntary turnover among retail Employees1. Journal of Applied Social Psychology 33: 1226–43. [Google Scholar] [CrossRef]

- Kamar, Ece, Severin Hacker, and Eric Horvitz. 2012. Combining human and machine intelligence in large-scale crowdsourcing. Paper presented at the 11th International Conference on Autonomous Agents and Multiagent Systems, Valencia, Spain, June 4–8. [Google Scholar]

- Kaufmann, Nicolas, Thimo Schulze, and Daniel Veit. 2011. More than fun and money. Worker Motivation in Crowdsourcing-A Study on Mechanical Turk. Paper presented at the 2011 Conference on Computer Supported Cooperative Work, Hangzhou, China, March 19–23. [Google Scholar]

- Keith, Melissa G., and Peter D. Harms. 2016. Is Mechanical Turk the answer to our sampling woes? Industrial and Organizational Psychology: Perspectives on Science and Practice 9: 162–67. [Google Scholar]

- Keith, Melissa G., Peter D. Harms, and Alexander C. Long. 2020. Worker health and well-being in the gig economy: A proposed framework and research agenda. In Research in Occupational Stress and Well Being. Bingley: Emerald Publishing Limited, pp. 1–33. [Google Scholar] [CrossRef]

- Keith, Melissa G., Louis Tay, and Peter D. Harms. 2017. Systems perspective of Amazon mechanical turk for organizational research: Review and recommendations. Frontiers in Psychology 8: 1359. [Google Scholar] [CrossRef]

- Kelman, Herbert C. 1958. Compliance, identification, and internalization three processes of attitude change. Journal of Conflict Resolution 2: 51–60. [Google Scholar] [CrossRef]

- Kelman, Herbert C. 2017. Further thoughts on the processes of compliance, identification, and internalization. In Social Power and Political Influence. Abingdon-on-Thames: Routledge, pp. 125–71. [Google Scholar]

- Kittur, Aniket, Jeffrey V. Nickerson, Michael Bernstein, Elizabeth Gerber, Aaron Shaw, John Zimmerman, Matt Lease, and John Horton. 2013. The future of crowd work. Paper presented at the 2013 Conference on Computer Supported Cooperative Work, San Antonio, TX, USA, February 23–27. [Google Scholar]

- Landers, Richard N., and Tara S. Behrend. 2015. An inconvenient truth: Arbitrary distinctions between organizational, mechanical turk, and other convenience samples. Industrial and Organizational Psychology 8: 142–64. [Google Scholar] [CrossRef]

- Lankford, William M., and Faramarz Parsa. 1999. Outsourcing: A primer. Management Decision 37: 310–16. [Google Scholar] [CrossRef]

- Latané, Bibb, James H. Liu, Andrzej Nowak, Michael Bonevento, and Long Zheng. 1995. Distance matters: Physical space and social impact. Personality and Social Psychology Bulletin 21: 795–805. [Google Scholar] [CrossRef]

- Lee, Moohun, Sunghoon Cho, Hyokyung Chang, Junghee Jo, Hoiyoung Jung, and Euiin Choi. 2008. Auditing system using rule-based reasoning in ubiquitous computing. Paper presented at the 2008 International Conference on Computational Sciences and Its Applications, Perugia, Italy, June 30–July 3. [Google Scholar]

- Leventhal, Gerald S. 1980. What should be done with equity theory? New approaches to the study of fairness in social relationships. In Social Exchange: Advances in Theory and Research. Edited by Kenneth J. Gergen, Martin S. Greenberg and Richard H. Willis. New York: Plenum, pp. 27–55. [Google Scholar] [CrossRef]

- Leung, Gabriel, and Vincent Cho. 2018. An Empirical Study of Motivation, Justice and Self-Efficacy in Solvers’ Continued Participation Intention in Microtask Crowdsourcing. Paper presented at the American Conference on Information Systems, New Orleans, LA, USA, August 16–18. [Google Scholar]

- Litman, Leib, Jonathan Robinson, and Cheskie Rosenzweig. 2014. The relationship between motivation, monetary compensation, and data quality among US- and India-based workers on mechanical turk. Behavior Research Methods 47: 519–28. [Google Scholar] [CrossRef]

- Liu, Ying, and Yongmei Liu. 2019. The effect of workers’ justice perception on continuance participation intention in the crowdsourcing market. Internet Research 29: 1485–508. [Google Scholar] [CrossRef]

- Locke, Edwin A. 2007. The case for inductive theory Building. Journal of Management 33: 867–90. [Google Scholar] [CrossRef]

- Ma, Xiao, Lara Khansa, and Jinghui Hou. 2016. Toward a contextual theory of turnover intention in online crowd working. Paper presented at the International Conference on Information Systems, Dublin, Ireland, December 11–14. [Google Scholar]

- Ma, Xiao, Lara Khansa, and Sung S. Kim. 2018. Active community participation and Crowdworking turnover: A longitudinal model and empirical test of three mechanisms. Journal of Management Information Systems 35: 1154–87. [Google Scholar] [CrossRef]

- Marjanovic, Sonja, Caroline Fry, and Joanna Chataway. 2012. Crowdsourcing based business models: In search of evidence for innovation 2.0. Science and Public Policy 39: 318–32. [Google Scholar] [CrossRef]

- Mandl, Irene, Maurizio Curtarelli, Sara Riso, Oscar Vargas-Llave, and Elias Georgiannis. 2015. New Forms of Employment. Loughlinstown: Eurofound. Available online: https://www.eurofound.europa.eu/publications/report/2015/working-conditions-labour-market/new-forms-of-employment (accessed on 11 November 2020).

- Mao, Andrew, Ece Kamar, Yiling Chen, Eric Horvitz, Megan E. Schwamb, Chris J. Lintott, and Arfon M. Smith. 2013. Volunteering versus work for pay: Incentives and tradeoffs in crowdsourcing. Paper presented at the First AAAI Conference on Human Computation and Crowdsourcing, Palm Springs, CA, USA, November 7–9. [Google Scholar]

- Marling, Cynthia R., Grace. J. Petot, and Leon S. Sterling. 1999. Integrating case-based and rule-based reasoning to meet multiple design constraints. Computational Intelligence 15: 308–32. [Google Scholar]

- Milland, Kristy. 2016. Crowdwork: The fury and the fear. In The Digital Economy and the Single Market. Edited by Werner Wobbe, Elva Bova and Catalin Dragomirescu-Gaina. Brussels: Foundation for European Progressive Studies, pp. 83–92. [Google Scholar]

- Mitchell, Terrence R. 1997. Matching motivational strategies with organizational contexts. In Research in Organizational Behavior. Edited by Larry L. Cummings and Barry M. Staw. Greenwich: JAI, pp. 57–149. [Google Scholar]

- Nakatsu, Robbie T., Elissa B. Grossman, and Charalambos L. Iacovou. 2014. A taxonomy of crowdsourcing based on task complexity. Journal of Information Science 40: 823–34. [Google Scholar]

- Opsahl, Robert L., and Marvin D. Dunnette. 1966. Role of financial compensation in industrial motivation. Psychological Bulletin 66: 94–118. [Google Scholar]

- Paolacci, Gabriele, Jesse Chandler, and Panagiotis G. Ipeirotis. 2010. Running experiments on Amazon Mechanical Turk. Judgment and Decision Making 5: 411–19. [Google Scholar]

- Porter, Christopher O., Ryan Outlaw, Jake P. Gale, and Thomas S. Cho. 2019. The use of online panel data in management research: A review and recommendations. Journal of Management 45: 319–44. [Google Scholar]

- Prentzas, Jim, and Ioannis Hatzilygeroudis. 2009. Combinations of case-based reasoning with other intelligent methods. International Journal of Hybrid Intelligent Systems 6: 189–209. [Google Scholar] [CrossRef]

- Prpić, John, Prashant P. Shukla, Jan H. Kietzmann, and Ian P. McCarthy. 2015. How to work a crowd: Developing crowd capital through crowdsourcing. Business Horizons 58: 77–85. [Google Scholar] [CrossRef]

- Rawls, John. 2005. A Theory of Justice. Cambridge: Harvard University Press. [Google Scholar]

- Rossille, Delphine, Jean-François Laurent, and Anita Burgun. 2005. Modelling a decision-support system for oncology using rule-based and case-based reasoning methodologies. International Journal of Medical Informatics 74: 299–306. [Google Scholar] [CrossRef] [PubMed]

- Rothschild, Michael L. 1999. Carrots, sticks, and promises: A conceptual framework for the management of public health and social issue behaviors. Journal of Marketing 63: 24–37. [Google Scholar] [CrossRef]

- Rousseau, Denise M. 1990. New hire perceptions of their own and their employer’s obligations: A study of psychological contracts. Journal of Organizational Behavior 11: 389–400. [Google Scholar] [CrossRef]

- Ryan, Richard M., and Edward L. Deci. 2000. The darker and brighter sides of human existence: Basic psychological needs as a unifying concept. Psychological Inquiry 11: 319–38. [Google Scholar] [CrossRef]

- Ryan, Ann Marie, and Jennifer L. Wessel. 2015. Implications of a changing workforce and workplace for justice perceptions and expectations. Human Resource Management Review 25: 162–75. [Google Scholar]

- Schaufeli, Wilmar B., Isabel M. Martinez, Alexandra Marques Pinto, Marisa Salanova, and Arnold B. Bakker. 2002. Burnout and engagement in University students. Journal of Cross-Cultural Psychology 33: 464–81. [Google Scholar] [CrossRef]

- Schulte, Julian, Katharina D. Schlicher, and Günter W. Maier. 2020. Working everywhere and every time? Chances and risks in crowdworking and crowdsourcing work design. Gruppe. Interaktion. Organisation. Zeitschrift für Angewandte Organisationspsychologie (GIO) 51: 59–69. [Google Scholar] [CrossRef]

- Segal, Lewis M., and Daniel G. Sullivan. 1997. The growth of temporary services work. Journal of Economic Perspectives 11: 117–36. [Google Scholar] [CrossRef]

- Semuels, Alana. 2018. The Internet Is Enabling a New Kind of Poorly Paid Hell. The Atlantic. February 5. Available online: https://www.theatlantic.com/business/archive/2018/01/amazon-mechanical-turk/551192/ (accessed on 11 November 2020).

- Shapiro, Debra L., Holly Buttner, and Bruce Barry. 1994. Explanations: What factors enhance their perceived adequacy? Organizational Behavior and Human Decision Processes 58: 346–68. [Google Scholar] [CrossRef]

- Sharpe-Wessling, Kathryn, Joel Huber, and Oded Netzer. 2017. MTurk character misrepresentation: Assessment and solutions. Journal of Consumer Research 44: 211–330. [Google Scholar] [CrossRef]

- Sheridan, Thomas B. 1992. Musings on telepresence and virtual presence. Presence: Teleoperators and Virtual Environments 1: 120–26.

- Siemsen, Enno, Aleda V. Roth, and Sridhar Balasubramanian. 2007. How motivation, opportunity, and ability drive knowledge sharing: The constraining-factor model. Journal of Operations Management 26: 426–45.

- Silberman, Six, Bill Tomlinson, Rochelle LaPlante, Joel Ross, Lilly Irani, and Andrew Zaldivar. 2018. Responsible research with crowds. Communications of the ACM 61: 39–41.

- Simula, Henri, and Tuomas Ahola. 2014. A network perspective on idea and innovation crowdsourcing in industrial firms. Industrial Marketing Management 43: 400–8.

- Sitkin, Sim B., and Robert J. Bies. 1993. Social accounts in conflict situations: Using explanations to manage conflict. Human Relations 46: 349–70.

- Skarlicki, Daniel P., Danielle D. van Jaarsveld, Ruodan Shao, Young Ho Song, and Mo Wang. 2016. Extending the multifoci perspective: The role of supervisor justice and moral identity in the relationship between customer justice and customer-directed sabotage. Journal of Applied Psychology 101: 108–21.

- Slade, Stephen. 1991. Case-based reasoning: A research paradigm. Artificial Intelligence Magazine 12: 42–55.

- Smith, Derek, Mohammad M. G. Manesh, and Asrar Alshaikh. 2013. How can entrepreneurs motivate Crowdsourcing participants? Technology Innovation Management Review 3: 23–30.

- Steuer, Jonathan. 1992. Defining virtual reality: Dimensions determining telepresence. Journal of Communication 42: 73–93.

- Stewart, Neil, Christoph Ungemach, Adam J. Harris, Daniel M. Bartels, Ben R. Newell, Gabriele Paolacci, and Jesse Chandler. 2015. The average laboratory samples a population of 7300 Amazon Mechanical Turk workers. Judgment and Decision Making 10: 479–91.

- Sundararajan, Arun. 2016. The Sharing Economy: The End of Employment and the Rise of Crowd-Based Capitalism. Cambridge: MIT Press.

- Surowiecki, James. 2005. The Wisdom of Crowds. New York: Anchor.

- Tekleab, Amanuel G., Kathryn M. Bartol, and Wei Liu. 2005. Is it pay levels or pay raises that matter to fairness and turnover? Journal of Organizational Behavior 26: 899–921.

- Thibaut, John, and Laurens Walker. 1978. A theory of procedure. California Law Review 66: 541–66.

- Thomas, Joe G., and Ricky W. Griffin. 1989. The power of social information in the workplace. Organizational Dynamics 18: 63–75.

- Tyler, Tom R., and Robert J. Bies. 1990. Beyond Formal Procedures: The Interpersonal Context of Procedural Justice. In Applied Social Psychology and Organizational Settings. Edited by Jason S. Carroll. Mahwah: Erlbaum Associates, pp. 77–98.

- Tyler, Tom R., and Steven L. Blader. 2000. Cooperation in Groups: Procedural Justice, Social Identity, and Behavioral Engagement. East Sussex: Psychology Press.

- Vander Elst, Tinne, Jacqueline Bosman, Nele De Cuyper, Jeroen Stouten, and Hans De Witte. 2013. Does positive affect buffer the associations between job insecurity and work engagement and psychological distress? A test among South African workers. Applied Psychology: An International Review 62: 558–70.

- Vukovic, Maja, and Arjun Natarajan. 2013. Operational excellence in IT services using enterprise Crowdsourcing. Paper presented at the 2013 IEEE International Conference on Services Computing, Santa Clara, CA, USA, June 28–July 3.

- Wang, Nan, Yongqiang Sun, Xiao-Liang Shen, and Xi Zhang. 2018. A value-justice model of knowledge integration in wikis: The moderating role of knowledge equivocality. International Journal of Information Management 43: 64–75.

- Watson, Ian, and Farhi Marir. 1994. Case-based reasoning: A review. The Knowledge Engineering Review 9: 327–54.

- Weng, Jiaxiong, Huimin Xie, Yuanyue Feng, Ruoqing Wang, Yi Ye, Peiying Huang, and Xizhi Zheng. 2019. Effects of gamification elements on crowdsourcing participation: The mediating role of justice perceptions. Paper presented at the International Conference on Electronic Business, Newcastle upon Tyne, UK, December 8–12.

- Wexler, Mark N. 2011. Reconfiguring the sociology of the crowd: Exploring crowdsourcing. International Journal of Sociology and Social Policy 31: 6–20.

- Wheeler, Anthony R., Vickie C. Gallagher, Robyn L. Brouer, and Chris J. Sablynski. 2007. When person-organization (mis)fit and (dis)satisfaction lead to turnover. Journal of Managerial Psychology 22: 203–19.

- Woo, Sang Eun, Ernest H. O’Boyle, and Paul E. Spector. 2017. Best practices in developing, conducting, and evaluating inductive research. Human Resource Management Review 27: 255–64.

- Yang, Congcong, Yuanyue Feng, Xizhi Zheng, Ye Feng, Ying Yu, Ben Niu, and Pianpian Yang. 2018. Fair or Not: Effects of Gamification Elements on Crowdsourcing Participation. Paper presented at the International Conference on Electronic Business, Guilin, China, December 2–6.

- Yuen, Man-Ching, Irwin King, and Kwong-Sak Leung. 2011. A survey of crowdsourcing systems. Paper presented at the 2011 IEEE Third International Conference on Privacy, Security, Risk and Trust and 2011 IEEE Third International Conference on Social Computing, Boston, MA, USA, October 9–11.

- Zhao, Yuxiang, and Qinghua Zhu. 2012. Exploring the motivation of participants in crowdsourcing contest. Paper presented at the 33rd International Conference on Information Systems, Melbourne, Australia, June 28–July 2.

- Zheng, Haichao, Dahui Li, and Wenhua Hou. 2011. Task design, motivation, and participation in Crowdsourcing contests. International Journal of Electronic Commerce 15: 57–88.

- Zou, Lingfei, Jinlong Zhang, and Wenxing Liu. 2015. Perceived justice and creativity in crowdsourcing communities: Empirical evidence from China. Social Science Information 54: 253–79.

| Fernandez-Macias (2017) (the author termed online-based work “crowd work” and offline-based work “gig work”) | Duggan et al. (2020) (the authors used the term “gig work” to describe all three types below) | Howcroft and Bergvall-Kåreborn (2019) |
|---|---|---|
| Online-based work | Crowdwork: tasks are assigned to and finished by a geographically dispersed crowd, with requesters and workers connected by online platforms. | Type A work: tasks assigned to and finished by workers online. |
| | | Type B work: “playbour” tasks assigned to and finished by workers online. Workers complete tasks primarily for fun and enjoyment rather than for compensation. |
| Online- and/or offline-based work a | | Type D work: profession-based freelance work, with requesters and workers connected by online platforms. Workers deliver services either online or offline. |
| Offline-based work | Capital Platform Work: products sold or leased offline, with buyers and sellers connected by online platforms. | Type C work: asset-based services, with requesters and workers connected by online platforms. Workers deliver services offline using assets or equipment that they own. |
| | App Work: tasks deployed to workers and finished offline, with requesters and workers connected by online platforms. | |

| Platform Name and Founding Year | Platform’s Business Model a | Compensation Policy | Payment Procedure | Performance Evaluation Process | Case-Based vs. Rule-Based Evaluation | Platform-Supported Communication b |
|---|---|---|---|---|---|---|
| Aileensoul (2017) | Requesters will not need to pay the commission | Workers are compensated for the completion of tasks posted by requesters | No escrow accounts. Requester makes direct payment to the worker upon the completion of the task | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| ClickWorker (2005) | Requesters will need to pay 40% of the compensation amount as commission to the platform. The platform sets the minimum compensation rate | Workers are compensated for the completion of their corresponding tasks posted by requesters | Requester makes an upfront payment to an escrow account held by either platform; the fund will be released to the worker upon the completion of the requested task | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| CloudPeeps (2015) | Requesters will need to pay 5%–15% of the compensation amount as commission to the platform, plus 2.9% processing fees. Requesters can also choose a subscription plan and pay a monthly fee to reduce the commission percentage | Workers are compensated for the completion of their corresponding tasks posted by requesters. Workers can also be compensated on an hourly basis | Requester makes an upfront payment to an escrow account held by either platform; the fund will be released to the worker upon the completion of the requested task | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Expert 360 (2013) | Requesters will not need to pay the commission; however, 15% of the total payment will be deducted from workers’ earnings and go to the platform | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes direct payment to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Fiverr (2010) | Requesters will not need to pay the commission; however, 20% of the total payment will be deducted from workers’ earnings and go to the platform | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| FlexJobs (2007) | Requesters will not need to pay the commission. The requester needs to subscribe to the platform by paying a monthly fee. Workers also need to subscribe to the platform by paying a monthly fee | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes direct payment to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Freelancer (2009) | Requesters will not need to pay the commission; however, 3% of the compensation or $3 (or its approximate equivalent in other currencies), whichever is greater, is collected by the platform when the workers are compensated | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Freelancermap (2011) | Requesters will not need to pay the commission. Workers need to subscribe to the platform by paying a monthly fee | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes direct payment to the worker upon the completion of tasks; the platform is not involved in payment to workers | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site text message |
| FreeUp (2015) | Requesters will need to pay 15% of the compensation amount as commission to the platform | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Giggrabbers (2015) | Requesters will not need to pay the commission; however, 9.5% of the total payment will be deducted from workers’ earnings and go to the platform | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Guru (1998) | Requesters will not need to pay the commission; however, a certain fee will be deducted from workers’ earnings. The platform sets the minimum compensation rate | Workers will receive compensation upon the completion of their corresponding tasks. Requesters can compensate workers on an hourly basis | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Idea Connection (2007) | Requesters will not need to pay the commission; however, a certain fee will be deducted from workers’ earnings | Participants receive compensation upon solving the problems | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Case-based | In-site multi-media message |
| iJobDesk (2018) | Requesters will need to pay 2% of the compensation amount as commission to the platform. The platform sets the minimum compensation rate | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site text message |
| InnoCentive (2001) | Requesters will not need to pay the commission; however, a certain fee will be deducted from workers’ earnings | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Case-based | In-site multi-media message |
| LocalLancers (2013) | Requesters will not need to pay the commission | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes direct payment to the worker upon the completion of tasks; the platform is not involved in payment to workers | Requester evaluates the work and decides compensation | Rule-based; Case-based | No in-site communication |
| LocalSolo (2014) | Requesters will not need to pay the commission. The requester needs to subscribe to the platform by paying a monthly fee | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Case-based | In-site multi-media message |
| Mechanical Turk (2005) | Requesters will need to pay 20% of the compensation amount as commission to the platform | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| MediaBistro (1999) | Requesters will not need to pay the commission. Requesters will need to pay for posting tasks on the platform. Workers will also need to subscribe to the platform by paying a monthly fee | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Micro Job Market (2018) | Requesters will not need to pay the commission | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes direct payment to the worker upon the completion of tasks; the platform is not involved in payment to workers, which takes place outside the platform | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| MyRemoteTeam (2017) | Requesters will not need to pay the commission. Requester will need to subscribe to the platform by paying a monthly fee | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Nexxt (1996) | Requesters will not need to pay the commission. The requester needs to subscribe to the platform by paying a monthly fee | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| NineSigma (2000) | Requesters will not need to pay the commission; however, a certain fee will be deducted from workers’ earnings | Participants will receive compensation when their proposals are accepted by the clients | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Case-based | In-site multi-media message |
| Oridle (2008) | Requesters will not need to pay the commission. Requesters will need to subscribe to the platform by paying a monthly fee | Participants will receive compensation when their proposals are accepted by the clients | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | No in-site communication |
| Project4Hire (2009) | Requesters will not need to pay the commission; however, a certain fee will be deducted from workers’ earnings | Participants will receive compensation when their proposals are accepted by the clients | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| PeoplePerHour (2007) | Requesters will need to pay 10% of the compensation amount as commission to the platform | Workers will receive compensation upon the completion of their corresponding tasks. The compensation is paid on an hourly basis | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Prolific (2014) | Requesters will need to pay 25% of the compensation amount as commission to the platform. The platform sets the minimum compensation rate | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site text message |
| Rat Race Rebellion (1999) | Requesters will not need to pay the commission | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes direct payment to the worker upon the completion of tasks; the platform is not involved in payment to workers, which takes place outside the platform | Requester evaluates the work and decides compensation | Rule-based; Case-based | No in-site communication |
| ServiceScape (2000) | Requesters will not need to pay the commission; however, a certain fee will be deducted from workers’ earnings | Workers will receive compensation upon the completion of their corresponding tasks | The requester needs to add a valid payment method before workers start work; workers will be paid upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Skip the Drive (2013) | Requesters will not need to pay the commission. The requester needs to pay the platform for posting the task | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes direct payment to the worker upon the completion of tasks; the platform is not involved in payment to workers, which takes place outside the platform | Requester evaluates the work and decides compensation | Rule-based; Case-based | No in-site communication |
| Soshace (2013) | Requesters will need to pay 10%–13% of the compensation amount as commission to the platform | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | No in-site communication |
| Speedlancer (2014) | Requesters will not need to pay the commission; however, a certain fee will be deducted from workers’ earnings | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Thumbtack (2009) | Requesters will not need to pay the commission; however, a certain fee will be deducted from workers’ earnings | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Toogit (2016) | Requesters will not need to pay the commission; however, an 8% “facilitator fee” will be deducted from workers’ earnings | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Toptal (2010) | Requesters will not need to pay the commission; however, a certain fee will be deducted from workers’ earnings | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Transformify (2015) | Requesters will not need to pay the commission. Requester will need to either subscribe to the platform by paying a monthly fee or make a one-time payment for a job posting | Workers will receive compensation upon the completion of their corresponding tasks | The requester needs to add a valid payment method before workers start work; workers will be paid upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Truelancer (2014) | Requesters will not need to pay the commission; however, a certain fee will be deducted from workers’ earnings | Workers will receive compensation upon the completion of their corresponding tasks. The platform sets the minimum compensation rate | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site text message |
| UpWork (2015) | Requesters will not need to pay the commission; however, 20% commission and 2.75% processing fees will be deducted from workers’ earnings | Workers will receive compensation upon the completion of their corresponding tasks. The compensation can also be paid on an hourly basis | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Virtual Vocations (2008) | Requesters will not need to pay the commission; however, workers need to subscribe to receive task information | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes direct payment to the worker upon the completion of tasks; the platform is not involved in payment to workers, which takes place outside the platform | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| WeWork Remotely (2010) | Requesters will not need to pay the commission. Requester will need to make a one-time payment for each job posting | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes direct payment to the worker upon the completion of tasks; the platform is not involved in payment to workers, which takes place outside the platform | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| Working Nomads (2014) | Requesters will not need to pay the commission. The requester needs to make a one-time payment for each job posting | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes direct payment to the worker upon the completion of tasks; the platform is not involved in payment to workers, which takes place outside the platform | Requester evaluates the work and decides compensation | Rule-based; Case-based | In-site multi-media message |
| YunoJuno (2012) | Requesters will need to pay the platform a certain fee on top of the compensation paid to workers. The fee rate depends on requesters’ subscription | Workers will receive compensation upon the completion of their corresponding tasks | Requester makes an upfront payment to an escrow account; the fund will be released to the worker upon the completion of tasks | Requester evaluates the work and decides compensation | Rule-based; Case-based | No in-site communication |

| Author and Year | Type | Antecedent(s) | Mediator | Outcome(s) |
|---|---|---|---|---|
| Faullant et al. (2017) | Empirical | | | |
| Franke et al. (2013) | Empirical | | | |
| Leung and Cho (2018) | Empirical | | | |
| Liu and Liu (2019) | Empirical | | | |
| Ma et al. (2016) | Empirical | | | |
| Ma et al. (2018) | Empirical | | | |
| Wang et al. (2018) | Empirical | | | |
| Weng et al. (2019) | Empirical | | | |
| Yang et al. (2018) | Empirical | | | |
| Zou et al. (2015) | Empirical | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Song, X.; Lowman, G.H.; Harms, P. Justice for the Crowd: Organizational Justice and Turnover in Crowd-Based Labor. Adm. Sci. 2020, 10, 93. https://doi.org/10.3390/admsci10040093
