The air quality index (AQI) is monitored by regional and district councils for planning purposes, particularly in areas experiencing significant industrial growth and land-use intensification [1,2]. In New Zealand, Land Air Water Aotearoa (LAWA) reports the AQI annually in four categories (good, moderate, unhealthy, and hazardous), and these reports inform the policies and procedures needed to maintain or improve air quality as it affects the population’s health [3]. Outdoor air quality affects not only the health of people spending time outdoors but also indoor air quality (IAQ), because outdoor air is drawn indoors by ventilation and air-conditioning systems. It therefore also significantly affects the health of people spending time indoors. Fanger [4] showed that poor IAQ harms respiratory health and exacerbates allergies and asthma. Boamponsem et al. [5] analysed the sources and trends of outdoor pollutants in Auckland, New Zealand, between 2006 and 2016, attributed them to traffic emissions and biomass burning, and identified a pressing need to improve air quality to protect public health. Ancelet et al. [6] performed a similar study of PM10 in Nelson, New Zealand, and found distinct diurnal patterns, with morning and evening peaks driven primarily by biomass combustion and additional contributions from vehicles, marine aerosols, shipping sulfates, and crustal matter. This indicates that both anthropogenic sources, such as residential heating and traffic, and natural phenomena, such as sea spray aerosols, significantly influence local air quality. Whereas the Auckland and Nelson studies focus on understanding pollution sources and trends to inform targeted emission reduction strategies, the authors of [7], in their analysis of Toronto’s transit system, highlight the effect of climate change on outdoor air quality and the need to adapt urban transit management practices to evolving environmental conditions. Both contexts underline the necessity of integrating climate and environmental considerations into urban planning to mitigate adverse effects on public health, infrastructure reliability, and overall urban resilience [7].

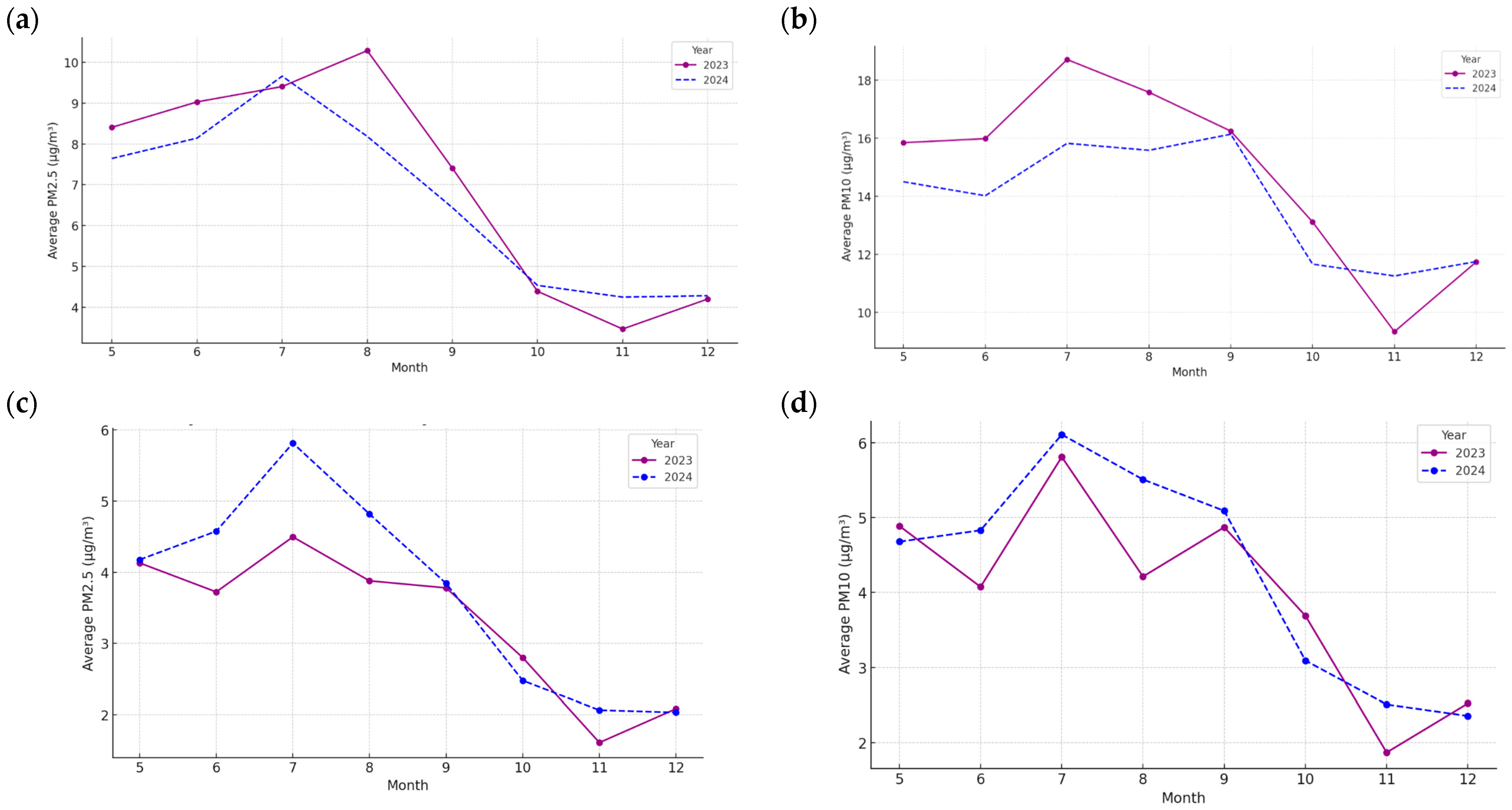

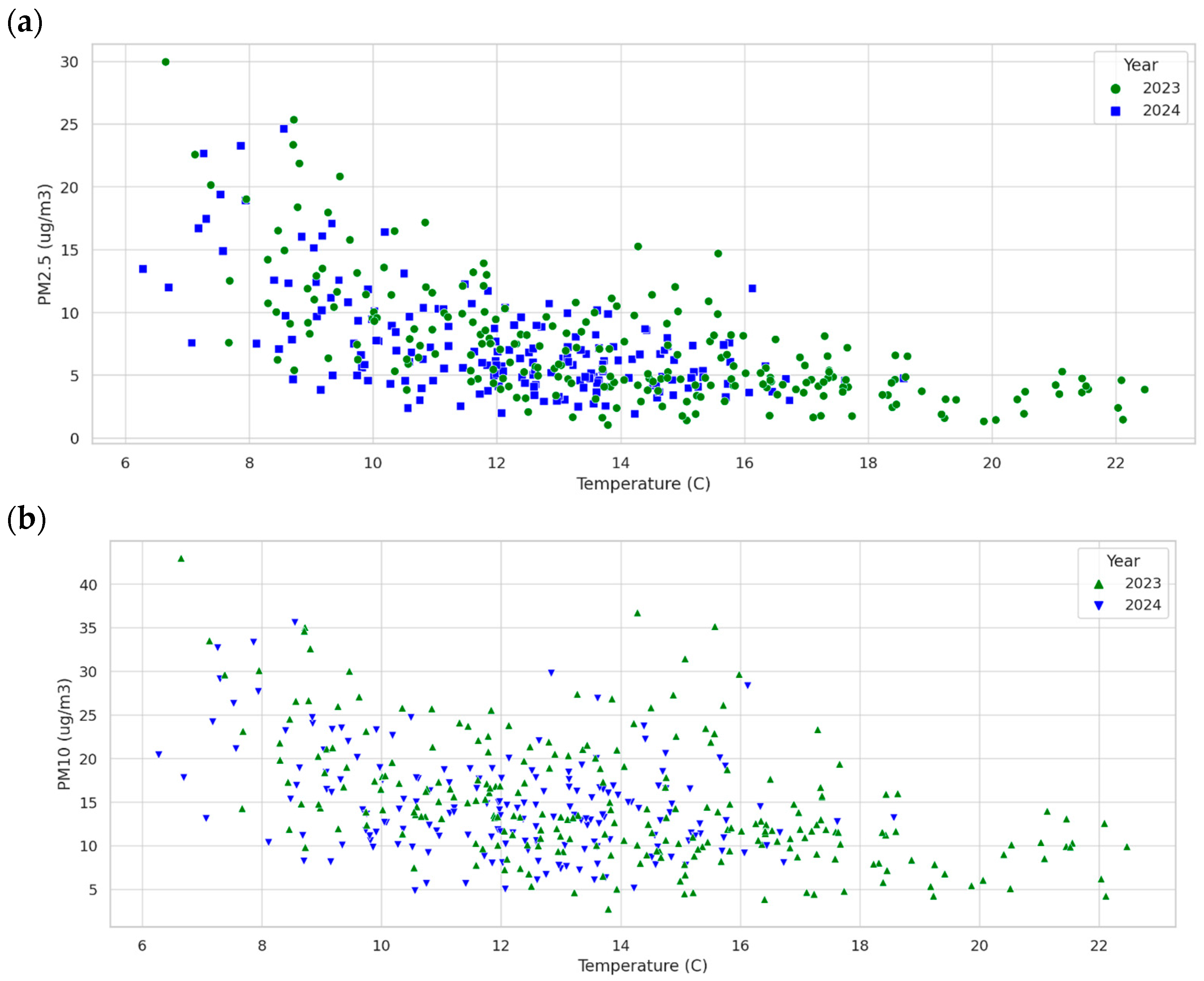

In Hamilton, New Zealand, Williams et al. [8] compared PM2.5 concentrations in classrooms on a central-city campus with those in classrooms on the rural fringe of Hamilton, a semi-urban setting. Their results indicate that when outdoor PM2.5 exceeds the city council threshold of 25 μg/m³, the increase is proportionally reflected in indoor readings. This effect differs between urban and semi-urban areas and is more pronounced in the semi-urban setting.
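One simple way to quantify how outdoor exceedances carry through to indoor air is the indoor/outdoor (I/O) PM2.5 ratio restricted to exceedance hours. The sketch below is illustrative only: it assumes paired hourly indoor and outdoor readings, and the helper `io_ratio_during_exceedance` is hypothetical rather than the procedure used by Williams et al. [8].

```python
import math

import numpy as np

PM25_THRESHOLD = 25.0  # μg/m³, the council threshold quoted in the text


def io_ratio_during_exceedance(indoor, outdoor, threshold=PM25_THRESHOLD):
    """Mean indoor/outdoor PM2.5 ratio over hours where outdoor PM2.5 > threshold.

    Hypothetical helper: assumes `indoor` and `outdoor` are paired hourly readings.
    Returns (ratio, n_exceedance_hours); ratio is NaN when there are no exceedances.
    """
    indoor = np.asarray(indoor, dtype=float)
    outdoor = np.asarray(outdoor, dtype=float)
    exceed = outdoor > threshold  # boolean mask of exceedance hours
    n_hours = int(exceed.sum())
    if n_hours == 0:
        return math.nan, 0
    return float(np.mean(indoor[exceed] / outdoor[exceed])), n_hours


# Usage with made-up readings (μg/m³): only the 40 and 50 μg/m³ hours exceed 25
ratio, hours = io_ratio_during_exceedance([8, 20, 15, 30], [10, 40, 20, 50])
# ratio ≈ 0.55 (mean of 20/40 and 30/50), hours == 2
```

A ratio that stays roughly constant across exceedance hours would be consistent with indoor levels tracking outdoor levels proportionally; comparing the ratio between urban and semi-urban classrooms would expose the site difference described above.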

1.1. Machine Learning in Air Quality

Muthukumar et al. [9] note that estimating patterns and correlations in PM2.5 requires understanding the behaviour of air pollution indicators well enough to predict them in advance. Such prediction calls for advanced deep learning models and an air quality index customised for each city and region. Accordingly, researchers have conducted spatiotemporal air quality analyses that correlate PM2.5 levels with seasonal changes using different machine learning tools [10,11]. Machine learning applied to satellite data enhances air quality modelling by addressing challenges such as spatial variability, imbalanced data, and model validation and interpretation. The data can also be sent to a foresight lab for further predictive analysis and for comparing urban and industrial air quality. This approach improves the accuracy and generalisability of models, benefiting environmental management and health assessments for air pollutants and greenhouse gases [12]. Ravindiran et al. [13] forecast the AQI for Visakhapatnam, a city in the Indian state of Andhra Pradesh, using machine learning models including the light gradient boosting machine (LightGBM), random forest, CatBoost, AdaBoost, and XGBoost. The random forest and CatBoost models showed the highest correlations, 0.9998 and 0.9936, respectively. Gupta et al. [14] compared three algorithms for predicting the AQI of several densely populated Indian cities, using parameters such as PM2.5 and PM10: support vector regression (SVR), random forest regression (RFR), and CatBoost regression (CR). The RFR and CR models achieved higher accuracy than the synthetic minority oversampling technique (SMOTE) algorithm. Their results also indicate that the most accurate algorithm differs between cities: CR was most accurate for New Delhi and Bangalore, whereas RFR was most accurate for Kolkata and Hyderabad.

Senthivel and Chidambaranathan [15] explored different machine learning tools to forecast and classify the AQI for a selected city. Because these tools yield different accuracies, it is important to identify the right tool for each city. They evaluated logistic regression, gradient boosting (GB), decision tree (DT), deep neural networks (DNN), support vector regression, a support vector classifier, random forest, a naive Bayes classifier, and k-nearest neighbours. Naive Bayes, GB regression, and DNN were recommended for forecasting the AQI, whereas logistic regression was recommended for predicting AQI classes. The authors conclude that each dataset and geographical area would require different machine learning techniques.
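Because Gupta et al. [14] and Senthivel and Chidambaranathan [15] both found that the best algorithm differs by city, model selection can be run separately for each city's dataset. The sketch below scores a few scikit-learn regressors with time-ordered cross-validation (`TimeSeriesSplit`, so no fold trains on data later than its test period) and keeps the model with the lowest mean absolute error per city. The candidate list, the hypothetical `select_model_per_city` helper, and the synthetic data are assumptions; `GradientBoostingRegressor` stands in for CatBoost only to avoid an extra dependency.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.model_selection import TimeSeriesSplit, cross_val_score
from sklearn.neighbors import KNeighborsRegressor
from sklearn.svm import SVR

# Hypothetical candidate set; cross_val_score clones each estimator,
# so the same unfitted instances can be reused for every city.
CANDIDATES = {
    "SVR": SVR(),
    "RandomForest": RandomForestRegressor(n_estimators=100, random_state=0),
    "GradientBoosting": GradientBoostingRegressor(random_state=0),
    "KNN": KNeighborsRegressor(n_neighbors=5),
}


def select_model_per_city(datasets, n_splits=5):
    """Return {city: (best_model_name, mean_cv_mae)} using time-ordered CV."""
    cv = TimeSeriesSplit(n_splits=n_splits)
    results = {}
    for city, (X, y) in datasets.items():
        maes = {}
        for name, model in CANDIDATES.items():
            # scikit-learn reports negated MAE so that higher is better
            scores = cross_val_score(model, X, y, cv=cv, scoring="neg_mean_absolute_error")
            maes[name] = float(-scores.mean())
        best = min(maes, key=maes.get)
        results[city] = (best, maes[best])
    return results


# Usage with synthetic stand-in features for two hypothetical cities
rng = np.random.default_rng(0)
datasets = {}
for city, slope in [("CityA", 3.0), ("CityB", -2.0)]:
    X = rng.normal(size=(200, 3))
    y = 15 + slope * X[:, 0] + X[:, 1] ** 2 + rng.normal(0, 0.5, 200)
    datasets[city] = (X, y)
best_per_city = select_model_per_city(datasets)
```

Time-ordered splits are used because shuffled k-fold splits on hourly pollution data would let a model train on hours that come after its test period, which tends to overstate forecasting skill.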

Zhang et al. [16] presented a comprehensive survey of air quality (AQ) prediction using deep learning, focusing on research conducted from 2017 to 2023 in study areas including China, the USA, India, Iran, Spain, Korea, and Saudi Arabia. The surveyed studies stressed the importance of selecting a suitable algorithm for each city based on its population size and industrial activities. In Beijing, for example, the mean absolute percentage error (MAPE) was 0.698 using a spatial attention-embedded recurrent neural network (SpAttRNN) [17] and 8.07 using EMD-IPSO-LSTM [18], which combines empirical mode decomposition (EMD) and long short-term memory (LSTM) with improved particle swarm optimisation (IPSO).

Zaini et al. [19] recommended the ensemble empirical mode decomposition and long short-term memory (EEMD-LSTM) hybrid model for forecasting PM2.5, as it outperformed other deep learning models for AQI prediction in highly urbanised areas with intensive industrial activity, such as Kuala Lumpur, West Malaysia. Compared with the empirical mode decomposition with long short-term memory (EMD-LSTM) architecture, the proposed method reduced the forecasting error, measured as root mean square error (RMSE), by 49.49%.
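The error metrics quoted throughout these studies (MAE, RMSE, MAPE) and a relative improvement such as the 49.49% RMSE reduction reported by Zaini et al. [19] can be computed as below. This is a minimal NumPy sketch with hypothetical helper names; MAPE is expressed in percent and treated as undefined when an observation is zero. The relative reduction is 100 × (baseline error − new error) / baseline error.

```python
import numpy as np


def mae(y_true, y_pred):
    """Mean absolute error, in the units of the data (e.g. µg/m³)."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))


def rmse(y_true, y_pred):
    """Root mean square error; penalises large misses more heavily than MAE."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def mape(y_true, y_pred):
    """Mean absolute percentage error in percent; undefined for zero observations."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    if np.any(y_true == 0):
        raise ValueError("MAPE is undefined when an observation equals zero")
    return float(100.0 * np.mean(np.abs((y_true - y_pred) / y_true)))


def relative_reduction(baseline_error, new_error):
    """Percent reduction of an error metric relative to a baseline model."""
    return 100.0 * (baseline_error - new_error) / baseline_error


# Usage with made-up PM2.5 values (µg/m³)
y_obs = [10, 20, 30, 40]
baseline = [14, 16, 34, 36]   # every prediction off by 4 -> RMSE 4.0
proposed = [12, 18, 32, 38]   # every prediction off by 2 -> RMSE 2.0
reduction = relative_reduction(rmse(y_obs, baseline), rmse(y_obs, proposed))  # → 50.0
```

Because MAPE divides by the observation, it is inflated at very low concentrations, which is one reason MAPE values from different cities are hard to compare directly.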

Zhao et al. [20] found that a hybrid deep learning framework outperformed traditional models for AQI prediction in Wuhan and Shanghai during the COVID-19 pandemic. They also found that lockdowns improved the accuracy of AQI prediction using the long short-term memory (LSTM) network and the bidirectional LSTM (Bi-LSTM).

Li and Jiang [21] demonstrated that advanced hybrid models, such as Loess STL-TCN-BiLSTM with dependency matrix attention, can predict multiple air pollutants (including PM2.5 and PM10) in Beijing with high accuracy, achieving mean absolute percentage errors as low as 6.8%. Similarly, Mohammadi et al. [22] evaluated nine years of PM2.5 data from Isfahan, Iran, with several machine learning algorithms and found that artificial neural networks (ANNs) achieved the best performance (91.1% accuracy), outperforming KNN, SVM, and random forest. Vieru and Cărbureanu [23], using data from 30 Romanian cities, identified AdaBoost and gradient boosting as the most effective algorithms for real-time air quality prediction. In Russian cities, Gladkova and Saychenko [24] showed that LSTM outperformed ARIMA and Facebook Prophet for three-month PM2.5 forecasts, achieving the lowest RMSE. Bai et al. [25] further emphasised the value of hybrid deep learning approaches: a CNN-LSTM model reached 91% accuracy in predicting PM2.5 in Qingdao, China. Collectively, these studies indicate that ensemble and deep learning approaches can capture the complex, nonlinear relationships between pollutants and meteorological factors, which supports our two-stage framework combining clustering with supervised learning for localised air quality prediction in Hamilton.

Bellinger et al. [26] surveyed 400 articles published between 2000 and 2017 and identified 47 that applied data mining in air pollution epidemiology, with the aim of forecasting from recorded real-life data. These studies fall into three primary research areas (source apportionment, forecasting, and hypothesis generation) and point to the increasing use of machine learning applications. In England, Wood [27] analysed trend attributes for PM2.5 forecasting using data from 2018 to 2022, while in Türkiye, Gundogdu and Elbir [28] conducted a similar analysis using data from 2020 to 2021. Both studies relied on publicly available data from densely populated cities. In Wood’s study [27], mean hourly PM2.5 values were around 5.74–11.27 µg/m³, whereas in Türkiye PM2.5 ranged from 0.1 to 209.7 µg/m³, with reported standard deviations of 16.1 and 11.8 µg/m³ [28]. The nonlinear autoregressive with exogenous inputs (NARX) model achieved a score of 89% for Türkiye, while the supervised machine learning (SML) models used by Wood [27] for English cities achieved a mean absolute error (MAE) of 1–3 µg/m³ for horizons from t0 to t + 3 h ahead. Huang et al. [18] used data from three representative meteorological monitoring stations in Beijing, China, from 1 January to 31 December 2020. They proposed combining empirical mode decomposition (EMD) with a gated recurrent unit (GRU) to predict PM2.5 concentrations, achieving better results than single models, including LSTM.
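Horizon-specific errors such as Wood’s t0 to t + 3 h results [27] come from framing forecasting as supervised learning: each row holds recent observations as features and the current and future values as targets, and one model is typically fitted per horizon. The pandas sketch below builds such a table from an hourly PM2.5 series. The lag count, column names, and the `make_horizon_frame` helper are assumptions for illustration, not Wood’s exact feature set.

```python
import pandas as pd


def make_horizon_frame(pm25: pd.Series, n_lags: int = 3, max_horizon: int = 3) -> pd.DataFrame:
    """Lag features (t-1 ... t-n_lags) and targets (t+0 ... t+max_horizon) from an hourly series."""
    frame = pd.DataFrame(index=pm25.index)
    for lag in range(1, n_lags + 1):
        frame[f"lag_{lag}"] = pm25.shift(lag)  # value observed `lag` hours earlier
    for h in range(max_horizon + 1):
        frame[f"target_t+{h}"] = pm25.shift(-h)  # value `h` hours ahead (h = 0 is "now")
    # Rows near either end lack a complete history or future window
    return frame.dropna()


# Usage with a toy hourly series 0, 1, ..., 9
table = make_horizon_frame(pd.Series(range(10), dtype=float))
# Each target column can then be fitted with its own regressor (direct multi-horizon strategy)
```

With three lags and a three-hour horizon, only rows 3 to 6 of the toy series have both a complete history and a complete future window, so the first row has lags 2, 1, 0 and targets 3 to 6.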

1.2. Urban Pollution Forecasting

In New Zealand, air quality is monitored and reported by local councils and organisations such as Land Air Water Aotearoa (LAWA), which help guide environmental and public safety decisions [3]. These reports usually classify air quality as good, moderate, unhealthy, or hazardous, which helps councils decide how to respond, especially in cities with heavier traffic or growing industry [1,2]. Outdoor air quality not only affects individuals who spend significant time outside but also plays a crucial role in determining indoor air quality (IAQ), as pollutants are drawn indoors via ventilation systems [4]. Poor IAQ has been linked to a range of adverse health effects, including respiratory illnesses, allergies, and asthma [4]. Studies across several New Zealand cities have highlighted the influence of anthropogenic activities on outdoor air pollution. For example, Boamponsem et al. [5] identified vehicular emissions and biomass combustion as dominant contributors to PM2.5 levels in Auckland between 2006 and 2016, while Ancelet et al. [6] observed diurnal PM10 peaks in Nelson attributed primarily to residential wood burning, with additional contributions from shipping activity.

Despite increasing global interest in machine learning (ML) for air quality forecasting [10,13,16], New Zealand-specific studies remain limited in scope and application. Internationally, researchers have employed a wide range of models, including decision trees, support vector machines, and deep learning architectures such as LSTM and CNN-LSTM, to model air quality patterns in large metropolitan regions such as Beijing, Kuala Lumpur, and Visakhapatnam [17,19,25]. These models have succeeded in capturing nonlinear pollutant behaviour, enhancing spatial resolution, and improving short-term forecasts [18,20,21]. However, such techniques remain largely underutilised in medium-sized New Zealand cities, where datasets are more fragmented and environmental conditions vary significantly between locations.

1.3. Aim of This Study

Current air quality studies in Hamilton are mostly descriptive or observational, focusing on single-point measurements or trend analysis without predictive modelling [8]. There is a lack of research applying integrated ML approaches that combine unsupervised (clustering) and supervised (classification) methods to generate AQI categories and forecast pollution levels across multiple urban and semi-urban sites. Moreover, no existing study has tested model transferability by training on one location (e.g., Claudelands) and predicting on another (e.g., Rotokauri), which is essential for validating real-world applicability. This absence of cross-location validation and clustering-informed prediction limits the ability of environmental agencies to deploy scalable, adaptive models for public use.
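Cross-location validation can be made concrete by comparing a model’s error on a later hold-out period at its training site with its error at a site it never saw. The sketch below is a minimal illustration under stated assumptions: the helper `cross_location_scores`, the random forest regressor, the two stand-in features, and the synthetic data (site B reading 3 μg/m³ higher) are all hypothetical, and only the site names come from the text.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error


def cross_location_scores(X_a, y_a, X_b, y_b, train_frac=0.8, seed=0):
    """Train on the earliest `train_frac` of site A; return (in-site MAE, cross-site MAE).

    In-site MAE is measured on site A's later hold-out period; cross-site MAE on
    all of site B, whose data are never seen during training.
    """
    split = int(train_frac * len(y_a))
    model = RandomForestRegressor(n_estimators=200, random_state=seed)
    model.fit(X_a[:split], y_a[:split])
    in_site = mean_absolute_error(y_a[split:], model.predict(X_a[split:]))
    cross_site = mean_absolute_error(y_b, model.predict(X_b))
    return in_site, cross_site


# Synthetic stand-ins for two sites: same drivers, but site B reads 3 μg/m³ higher
rng = np.random.default_rng(1)


def synthetic_site(n, offset):
    X = rng.uniform(size=(n, 2))  # hypothetical scaled features, e.g. wind speed, hour of day
    y = 10 + offset + 4 * X[:, 0] + 2 * X[:, 1] + rng.normal(0, 0.5, n)
    return X, y


X_claudelands, y_claudelands = synthetic_site(500, 0.0)
X_rotokauri, y_rotokauri = synthetic_site(500, 3.0)
in_mae, cross_mae = cross_location_scores(X_claudelands, y_claudelands, X_rotokauri, y_rotokauri)
```

A large gap between the in-site and cross-site errors, as this synthetic offset produces, indicates that a model trained at one station would need recalibration before being used to predict at another.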

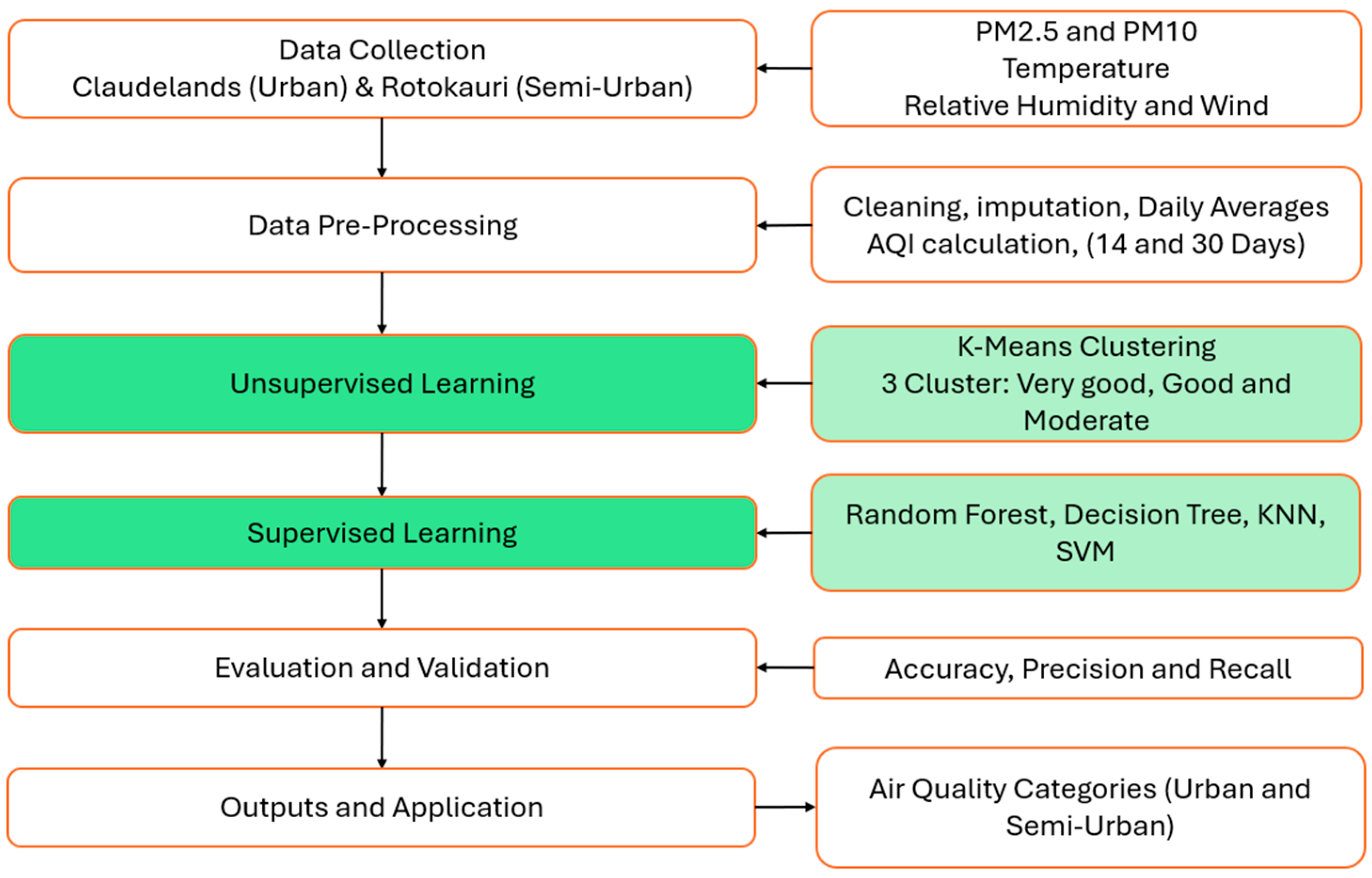

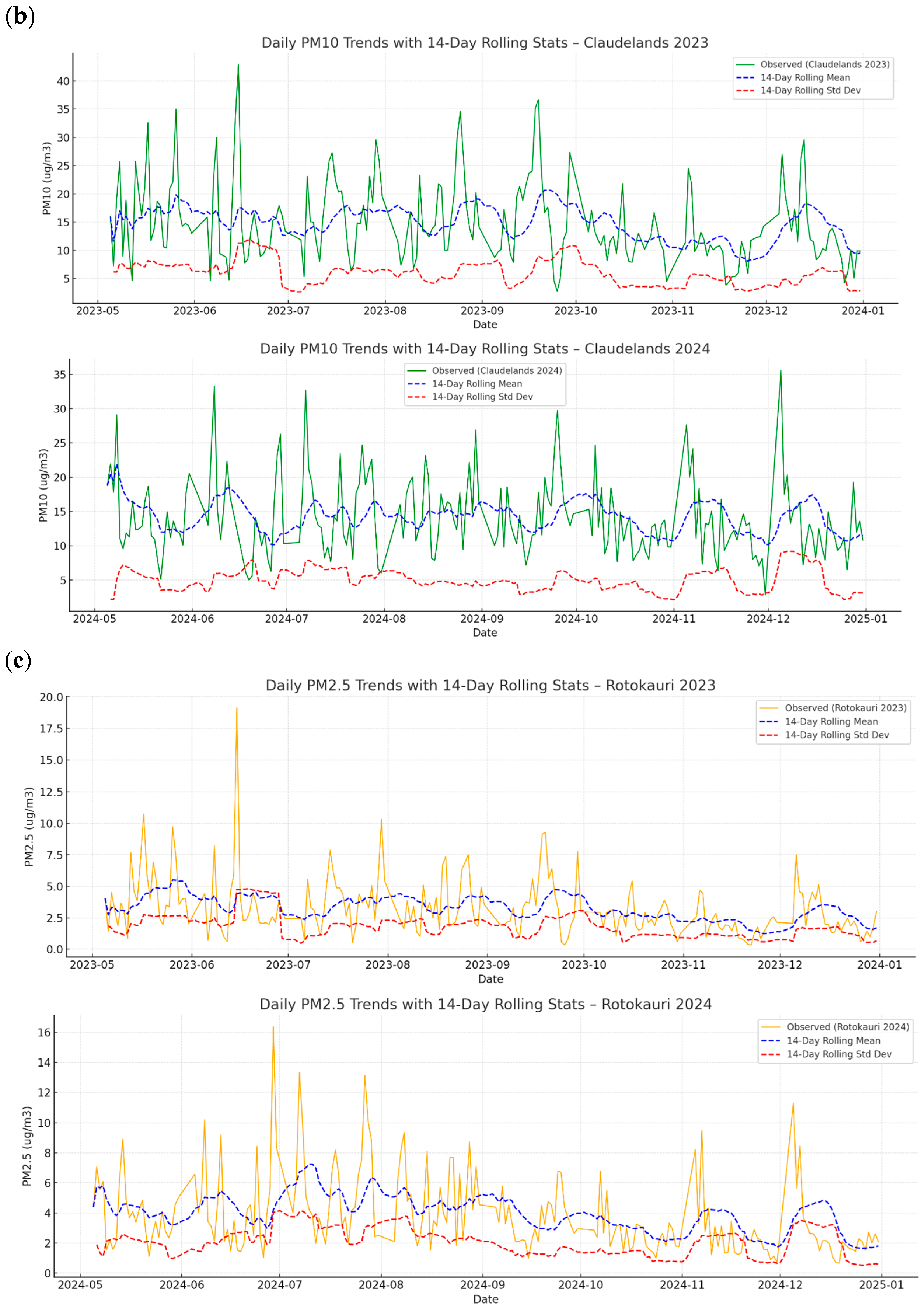

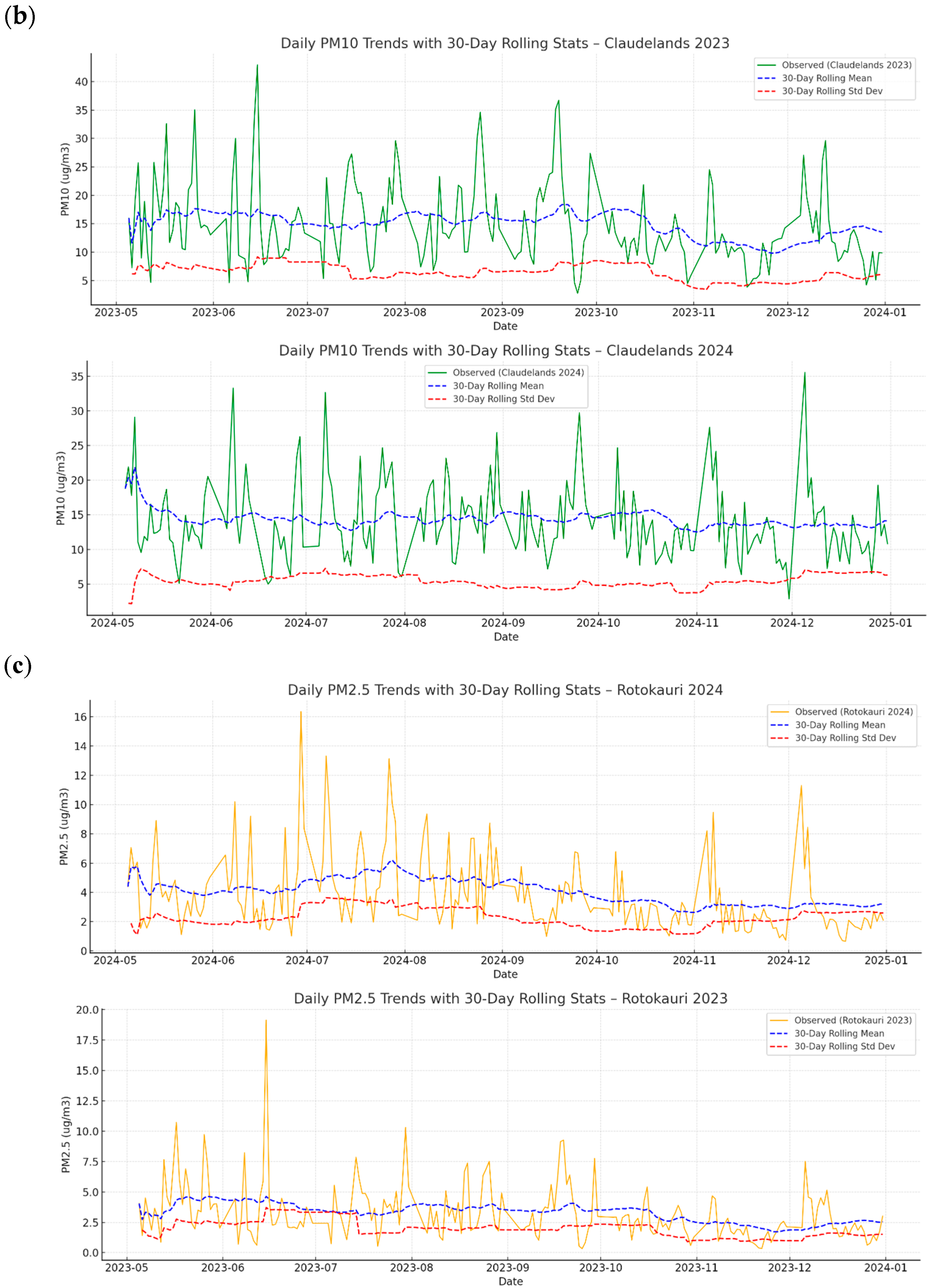

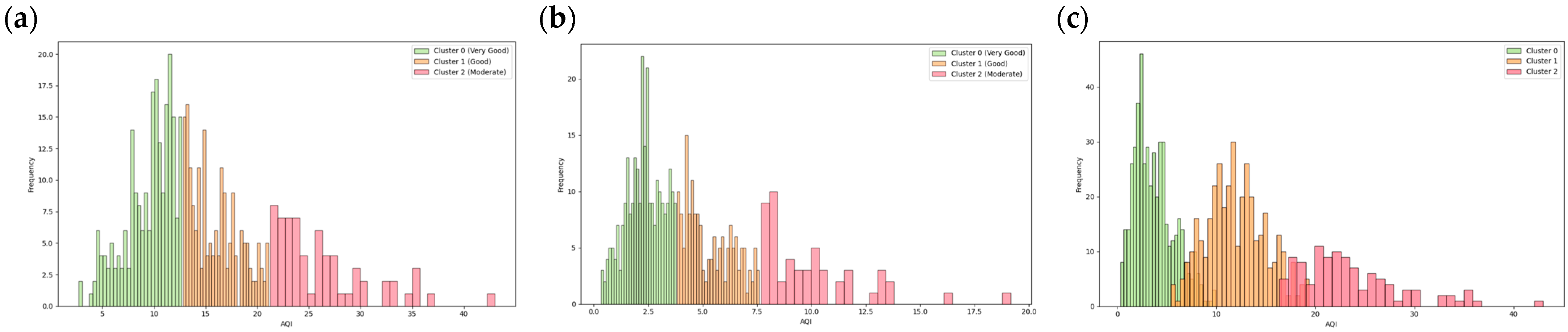

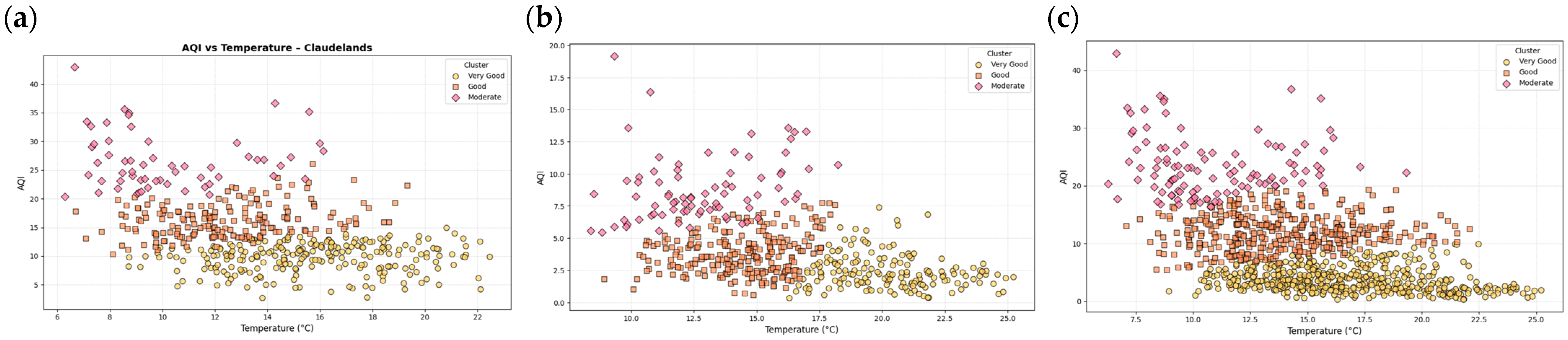

This study addresses these gaps by using machine learning to classify and predict air quality trends at two spatially distinct sites in Hamilton: Claudelands (urban) and Rotokauri (semi-urban). Using a two-stage approach, we first apply k-means clustering to derive natural groupings in the air quality data and then use supervised models, including decision trees, random forests, support vector machines, and k-nearest neighbours, to predict AQI clusters. Data pre-processing steps, such as rolling averages, imputation, and feature harmonisation, ensure temporal and spatial consistency.
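The two-stage approach can be sketched with scikit-learn and pandas as follows. In this minimal sketch, k-means labels the training site’s standardised readings, and a random forest then learns to forecast the next hour’s cluster from the current hour’s readings. The column names, three-hour rolling window, cluster count, next-hour target, and synthetic data are all illustrative assumptions rather than the configuration used in this study.

```python
import numpy as np
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.ensemble import RandomForestClassifier
from sklearn.preprocessing import StandardScaler


def preprocess(df, columns, window=3):
    """Harmonise features, impute gaps, then smooth with a trailing rolling mean."""
    out = df.reindex(columns=columns)              # same features, same order at every site
    out = out.interpolate(limit_direction="both")  # linear imputation of missing readings
    return out.rolling(window, min_periods=1).mean()


def fit_two_stage(train, n_clusters=3, seed=0):
    """Stage 1: k-means groups; Stage 2: classifier forecasting the next hour's group."""
    scaler = StandardScaler().fit(train)
    X = scaler.transform(train)
    labels = KMeans(n_clusters=n_clusters, n_init=10, random_state=seed).fit_predict(X)
    # Readings at hour t are paired with the cluster observed at hour t + 1
    clf = RandomForestClassifier(n_estimators=100, random_state=seed)
    clf.fit(X[:-1], labels[1:])
    return scaler, clf


# Usage with synthetic hourly data for the two sites (values are made up)
rng = np.random.default_rng(0)


def synthetic_site(n, offset):
    t = np.arange(n)
    pm25 = offset + 10 + 5 * np.sin(t / 12) + rng.normal(0, 1, n)
    df = pd.DataFrame({"pm25": pm25, "pm10": 1.8 * pm25 + rng.normal(0, 2, n)})
    df.iloc[[10, 50], 0] = np.nan  # simulated sensor dropouts
    return df


cols = ["pm25", "pm10"]
claudelands = preprocess(synthetic_site(300, 0.0), cols)
rotokauri = preprocess(synthetic_site(300, 2.0), cols)
scaler, clf = fit_two_stage(claudelands)
next_hour_cluster = clf.predict(scaler.transform(rotokauri))
```

Fitting the scaler and clusters on Claudelands only and then predicting on Rotokauri mirrors the train-on-one-site, predict-on-another setting; in practice the resulting cluster centroids could be inspected and mapped onto AQI categories.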

The aim of this study is twofold: (1) to assess the performance of widely used machine learning models on short-term urban air quality prediction, and (2) to examine whether combining clustering with supervised methods improves predictive performance relative to standalone approaches. While not intended as a fully developed technological innovation at this stage, the study contributes methodological innovation by demonstrating that cluster-informed modelling enhances both interpretability and predictive accuracy. In this way, the study provides practical insights for medium-sized cities with limited monitoring infrastructure while advancing methodological approaches for air quality prediction.

This work not only contributes to the emerging field of AI-driven environmental monitoring in Aotearoa, New Zealand, but also aligns with sustainable development goals (SDG 3: Good Health and Well-being; SDG 11: Sustainable Cities and Communities) by promoting proactive air quality management; that is, enabling early prediction of pollution episodes and supporting timely interventions by councils and communities rather than relying solely on reactive responses.

Each city requires its own algorithm to predict the AQI and forecast particulate matter such as PM2.5 and PM10. This is necessary to meet the health and urban sustainability targets of SDG 3 (Good Health and Well-being) and SDG 11 (Sustainable Cities and Communities).

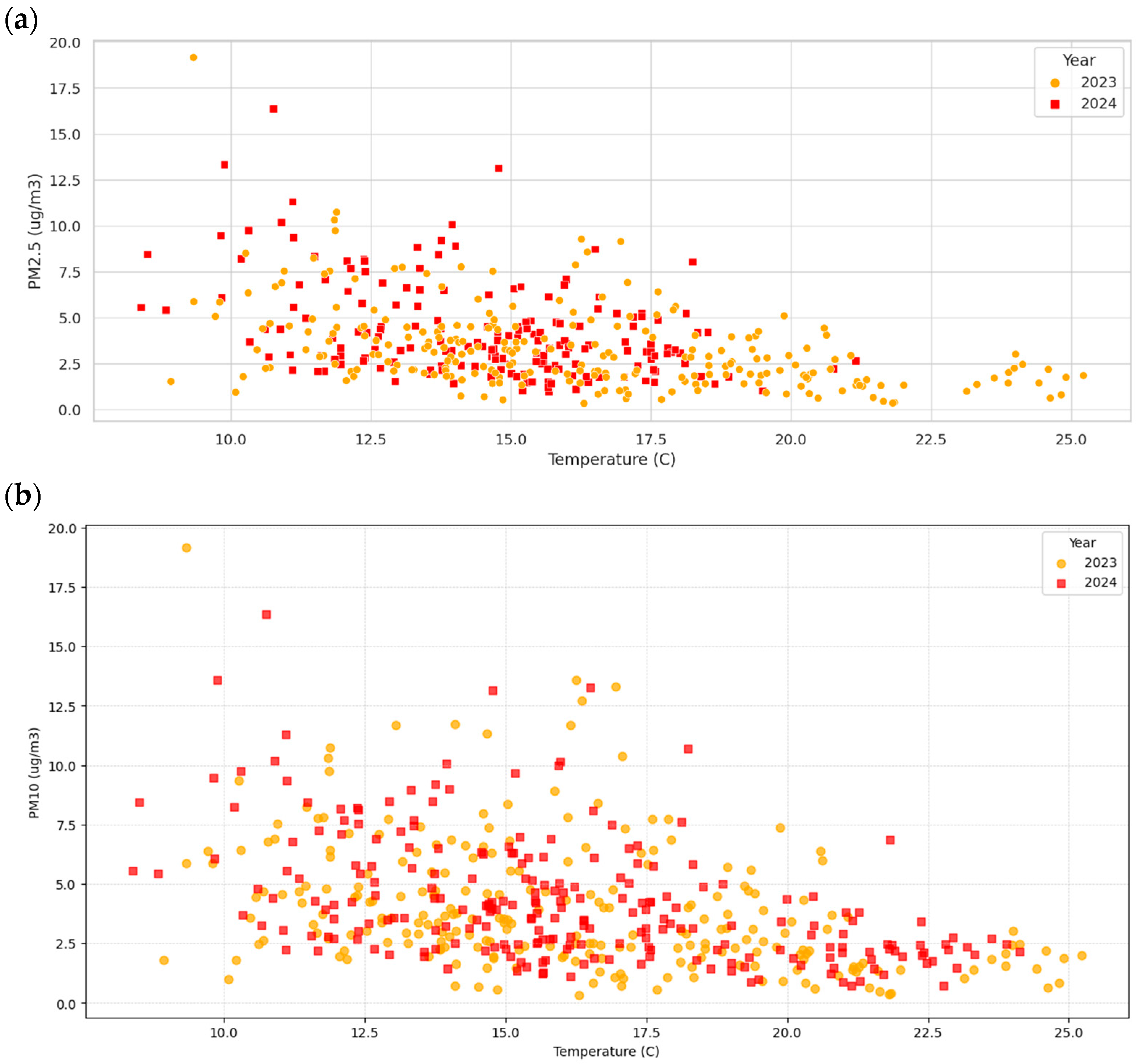

Predicting outdoor AQI trends from data for prior years can help assess the risk of increased particulate matter when data are extrapolated or transferred from one sensor location to another. This enables a more reliable interpretation of spatial air quality patterns, although it does not directly mitigate the risk itself.

We forecast PM2.5 at a specific semi-urban location and compare the results with readings from two air quality monitoring stations in the Hamilton city centre, Hamilton Airshed-Claudelands and Hamilton Airshed-Bloodbank, which are 5.7 km and 6.78 km from our site, respectively.