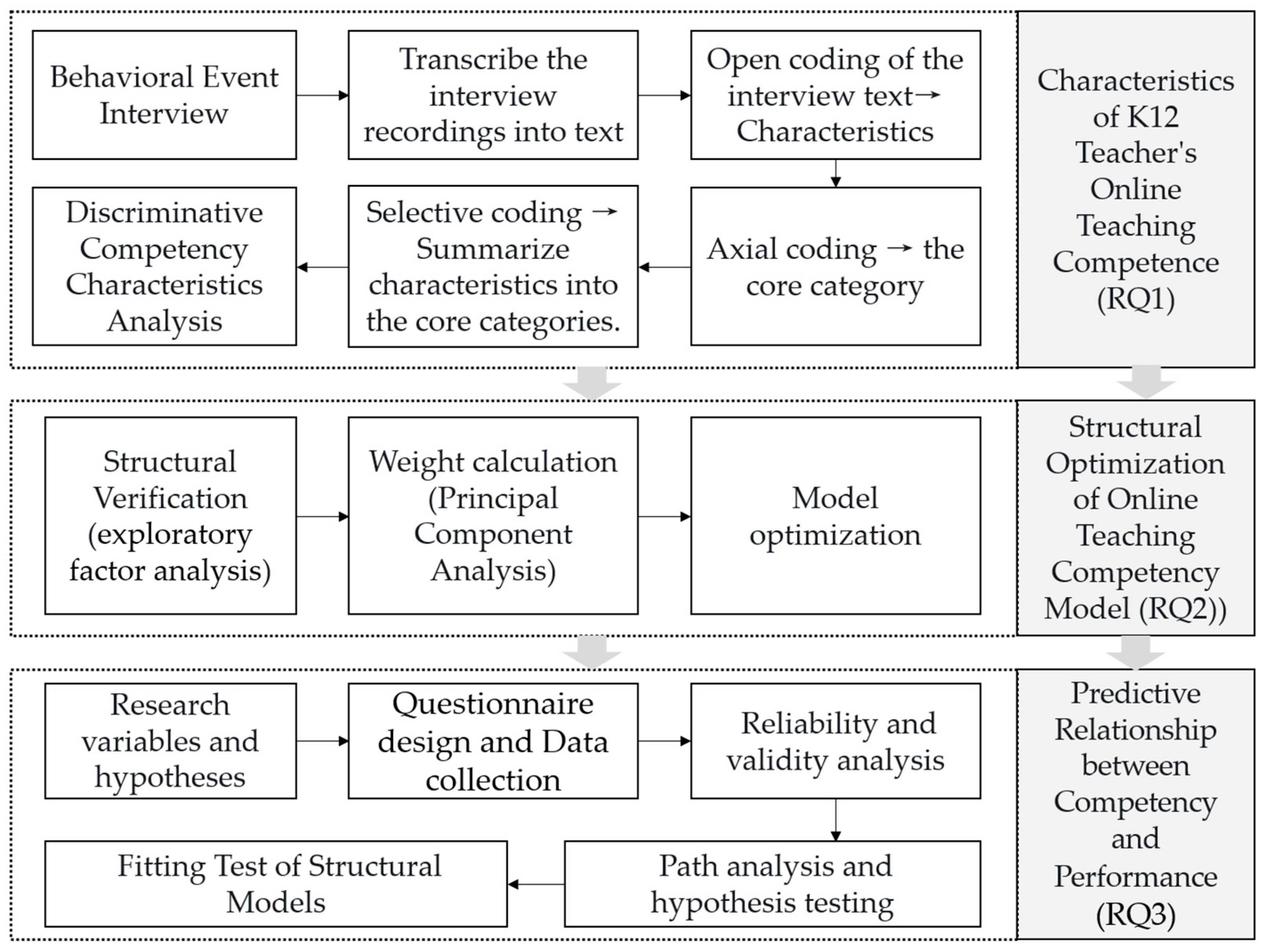

6.1. Research Variables and Hypotheses

In the structural equation model, which explores the relationship between competence and performance, the independent variable is competence and the dependent variable is performance. According to the competency model, competence comprises six first-order characteristic factors: knowledge characteristics, technical characteristics, teaching characteristics, management characteristics, achievement characteristics, and individual traits.

Performance, by contrast, is usually conceptualized in one of three ways. First, it can be expressed in terms of results or outputs. For example, Bernardin (1984) argued that performance is the record of an individual's output on a specific job, task, or activity during a specific period. Second, it can be expressed in terms of process behavior. For example, Campbell (2012) argued that performance is goal-related behavior that is under the employee's own control. Obilor (2020) confirmed that high school teachers' communication skills (one of the competency characteristics) strongly affect students' academic performance (outcomes). Third, it can be expressed in terms of both behavior and results. For example, Binning and Barrett (1989) argued that performance is best described by the relationship between behavior and results. This view holds that performance is work-related, multidimensional, multi-causal, and dynamic, and it is the view adopted in this study.

Stufflebeam (2000) pointed out in the decision-oriented CIPP model that judging the success of a project requires considering not only outcome (product) factors but also context, input, and process factors, and that process factors in particular give decision makers much useful information for revising the project plan. Accordingly, in measuring online teaching performance, this study emphasizes not only outcome performance but also the achievement behaviors of teachers and students during teaching and learning, that is, process performance.

Scholars differ on which performance or achievement indicators are appropriate for online teaching and learning. After reviewing more than ten typical learning analytics systems, Buniyamin et al. (2015) identified multiple indicators, including academic performance, course participation, learning style, and social performance. In studying learning achievement, Bukralia et al. (2015) used academic ability, economic level, academic goals, technical preparation, course motivation, and participation as predictors. Sun and Fang (2019) divided the performance analysis framework of online learning into seven dimensions: engagement level, interaction level, positivity level, stage achievement, learning attitude, learning habits, and frustration level. In their research on online learning with Blackboard, F. X. Guo and Liu (2018) used classroom behavior, homework performance, cognitive level, and test scores as indicators of learning effects. In addition, many studies of online teaching effects, such as those on SPOCs, MOOCs, flipped classrooms, and blended classrooms, represent learning effects with variables such as academic performance, test pass rate, learning motivation, learning interest, and student satisfaction.

Online teaching involves not only students but also teachers, so the measurement of online teaching performance should consider the development of both. Drawing on Binning and Barrett's (1989) framework, this study therefore adopts a dual perspective involving teachers and students. Specifically, it evaluates K12 online teaching performance along two dimensions, process performance and outcome performance, while integrating the perspectives of these two key stakeholders. Process performance is measured mainly through learning habits, knowledge transfer ability, learning interest, autonomous learning ability, and learning engagement. Outcome performance is measured mainly through learning achievement, teaching goal achievement, teaching satisfaction, and teacher professional development.

Based on the above, the following research hypotheses (H) are proposed on the relationship between competence and performance in K12 online teaching.

H1a. Knowledge competency characteristics have a significant predictive effect on process performance.

H1b. Knowledge competency characteristics have a significant predictive effect on outcome performance.

H2a. Technical competency characteristics have a significant predictive effect on process performance.

H2b. Technical competency characteristics have a significant predictive effect on outcome performance.

H3a. Teaching competency characteristics have a significant predictive effect on process performance.

H3b. Teaching competency characteristics have a significant predictive effect on outcome performance.

H4a. Management competency characteristics have a significant predictive effect on process performance.

H4b. Management competency characteristics have a significant predictive effect on outcome performance.

H5a. Achievement competency characteristics have a significant predictive effect on process performance.

H5b. Achievement competency characteristics have a significant predictive effect on outcome performance.

H6a. Personal competency characteristics have a significant predictive effect on process performance.

H6b. Personal competency characteristics have a significant predictive effect on outcome performance.
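The twelve hypotheses follow one pattern: each of the six competency factors is hypothesized to predict each of the two performance factors. A minimal sketch of that 6 × 2 design is shown below, assuming hypothetical variable names (`individual` stands for the personal, or individual-trait, factor of H6). It enumerates H1a to H6b and writes the structural part as lavaan-style regression lines (`outcome ~ predictors`). The measurement model linking each latent factor to its questionnaire items is deliberately omitted, and this is not the authors' actual model specification.

```python
# Hypothetical variable names for the six competency factors and the two
# performance factors; the study's actual labels may differ.
COMPETENCIES = ["knowledge", "technical", "teaching",
                "management", "achievement", "individual"]
OUTCOMES = ["process", "outcome"]


def hypothesized_paths():
    """Return (hypothesis_id, predictor, outcome) for all 12 paths H1a-H6b."""
    paths = []
    for i, predictor in enumerate(COMPETENCIES, start=1):
        # Suffix "a" pairs with process performance, "b" with outcome performance.
        for suffix, outcome in zip("ab", OUTCOMES):
            paths.append((f"H{i}{suffix}", predictor, outcome))
    return paths


def structural_syntax():
    """Build lavaan-style regression lines: each outcome on all six predictors."""
    rhs = " + ".join(COMPETENCIES)
    return "\n".join(f"{outcome} ~ {rhs}" for outcome in OUTCOMES)


if __name__ == "__main__":
    for hid, pred, out in hypothesized_paths():
        print(f"{hid}: {pred} -> {out}")
    print(structural_syntax())
```

Writing both outcomes on the same six predictors makes it explicit that the twelve hypotheses are the twelve regression coefficients of a single structural model rather than twelve separate tests.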

6.2. Research Tool Design and Data Collection

The process performance items were adapted from the learning performance and participation indicators of Buniyamin et al. (2015), academic ability in Bukralia et al. (2015), learning habits and learning attitudes in Sun and Fang (2019), cognitive level, knowledge transfer, and learning engagement in F. X. Guo and Liu (2018), and learning interaction, learning interest, and autonomous learning ability in X. Luo (2016). The outcome performance items were adapted from the teaching satisfaction and teacher professional ability items in TALIS 2018 and supplemented with self-developed items on student achievement and teaching goal achievement. After the measurement indicators for each latent variable were finalized, the questionnaire items for each dimension were written as 5-point Likert items. A 5-point Likert scale asks respondents to rate statements on a symmetric agreement scale (1 = strongly disagree, 5 = strongly agree), converting qualitative judgments into quantifiable data.
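Turning Likert responses into dimension scores usually means averaging a respondent's ratings over the items of each dimension. The sketch below assumes unweighted item means and uses hypothetical item IDs (`P1`, `O1`, and so on); the text does not say how item scores were aggregated, so this is one common option rather than the study's procedure.

```python
from statistics import mean

# Hypothetical mapping from dimension name to item IDs; the real
# questionnaire has 50 competency items and 17 performance items.
DIMENSIONS = {
    "process_performance": ["P1", "P2", "P3"],
    "outcome_performance": ["O1", "O2"],
}


def dimension_scores(response, dimensions=DIMENSIONS, low=1, high=5):
    """Average one respondent's 5-point Likert answers within each dimension.

    Raises ValueError if any answer falls outside the scale.
    """
    scores = {}
    for name, items in dimensions.items():
        values = [response[item] for item in items]
        if any(not (low <= v <= high) for v in values):
            raise ValueError(f"{name}: answer outside {low}-{high} scale")
        scores[name] = mean(values)
    return scores


example = {"P1": 4, "P2": 5, "P3": 3, "O1": 3, "O2": 4}
print(dimension_scores(example))
```

Validating the range before averaging catches miscoded answers that would otherwise silently shift a dimension mean.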

To ensure the validity of the final survey data, the initial questionnaire was pretested before the formal survey. The pretest was administered through our established network of collaborating teachers in primary and secondary schools in several regions, including Shenzhen, Dongguan, Yongkang, and Wuhan. These teachers, with whom we had collaborated in previous research, helped distribute the questionnaire, yielding 162 valid responses. Based on their feedback, the item wording was simplified to reduce respondents' cognitive load. The final questionnaire contained 79 items: 1 screening item, 11 items on respondent background and characteristics (single- and multiple-choice), 50 competency characteristic items, and 17 online teaching performance items. Online teaching performance was assessed through teacher self-reports of observable student outcomes: because students were the primary participants in online instruction, teachers evaluated their own effectiveness based on perceived improvements in their students. For instance, teachers rated statements such as “Online assignment sharing and feedback improved students’ work quality through peer learning.”

Using convenience sampling, the questionnaire was distributed via the Wenjuanxing platform to the working group of audio-visual education centers in Hubei Province, and staff at local audio-visual education centers then forwarded it to teachers at primary and secondary schools in their areas. In total, 13,865 teacher questionnaires were collected from 41 districts (counties) on Wenjuanxing, most of them completed on mobile phones via links shared on WeChat. Questionnaires completed in less than 200 s and respondents who had taught fewer than 10 online class hours were excluded, leaving 12,726 valid questionnaires, an effective response rate of 91.79%.
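The two exclusion rules can be expressed as a simple filter. This is a sketch under stated assumptions: the field names `response_seconds` and `online_class_hours` are hypothetical, since the export format of Wenjuanxing is not described here. The last line checks the reported totals, which give an effective response rate of roughly 91.8%.

```python
# Hypothetical record fields; the Wenjuanxing export format is not described
# in the text, so `response_seconds` and `online_class_hours` are assumptions.
MIN_RESPONSE_SECONDS = 200
MIN_CLASS_HOURS = 10


def is_valid(record):
    """Apply both exclusion rules: too-fast responses and too little online teaching."""
    return (record["response_seconds"] >= MIN_RESPONSE_SECONDS
            and record["online_class_hours"] >= MIN_CLASS_HOURS)


def screen(records):
    """Return the retained records and the effective response rate in percent."""
    kept = [r for r in records if is_valid(r)]
    rate = 100 * len(kept) / len(records) if records else 0.0
    return kept, rate


sample = [
    {"id": 1, "response_seconds": 540, "online_class_hours": 32},
    {"id": 2, "response_seconds": 150, "online_class_hours": 40},  # too fast
    {"id": 3, "response_seconds": 610, "online_class_hours": 6},   # too few hours
    {"id": 4, "response_seconds": 300, "online_class_hours": 10},
]
kept, rate = screen(sample)
print([r["id"] for r in kept], rate)  # [1, 4] 50.0

# Effective response rate implied by the reported totals.
print(f"{100 * 12726 / 13865:.2f}%")
```

Keeping the thresholds as named constants makes it easy to rerun the screening with stricter or looser cut-offs as a robustness check.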

6.3. Reliability and Validity Analysis

To test the reliability of the questionnaire, this study used Cronbach's α coefficient to measure internal consistency. Following Nunnally (1978), an α coefficient greater than 0.7 indicates good internal consistency. For all six competence variables and both performance variables in the model, Cronbach's α exceeded 0.7, indicating good measurement reliability.
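Cronbach's α compares the sum of the item variances with the variance of the total score: α = k/(k − 1) · (1 − Σσᵢ² / σ²ₓ), where k is the number of items. A minimal pure-Python sketch follows; the toy item scores are invented for illustration and are not study data. Sample variance is used for both terms here, an assumption that does not affect the result because the ratio is the same with population variance as long as the choice is consistent.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of item columns (each a list of scores).

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    # Total score per respondent: sum across items for each row.
    totals = [sum(scores) for scores in zip(*items)]
    item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


# Toy data: three items answered by five respondents (not study data).
items = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [5, 5, 2, 4, 3],
]
alpha = cronbach_alpha(items)
print(round(alpha, 3), alpha > 0.7)  # 0.886 True
```

In the toy data the items move together across respondents, so the total-score variance (10.5) is much larger than the summed item variances (4.3), which is what pushes α above the 0.7 threshold.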

The core competence variables were examined by exploratory factor analysis in Section 5.1. All retained factor loadings exceeded 0.5, indicating good construct validity.

Exploratory factor analysis (EFA) was used to test the construct validity of the 17 performance items in the questionnaire. The Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy was 0.856, above the 0.7 threshold, and Bartlett's test of sphericity was significant (approximate χ² = 11,342.734, df = 136, p < 0.001). These results indicate that the performance items share sufficient common variance to be suitable for factor analysis.
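Both adequacy checks can be computed directly from the item correlation matrix R. Bartlett's statistic is χ² = −[(n − 1) − (2p + 5)/6] · ln|R| with df = p(p − 1)/2, so 17 items give df = 136; the KMO index compares squared correlations with squared partial correlations obtained from R⁻¹. The sketch below is a dependency-free illustration on an invented 3 × 3 matrix, not the software used in the study; in practice a statistics package would normally do this.

```python
import math


def det_and_inverse(m):
    """Gauss-Jordan elimination with partial pivoting: return (det, inverse)."""
    n = len(m)
    # Augment each row with the matching row of the identity matrix.
    a = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(m)]
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign of the determinant
        p = a[col][col]
        det *= p
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col and a[r][col] != 0.0:
                f = a[r][col]
                a[r] = [vr - f * vc for vr, vc in zip(a[r], a[col])]
    return det, [row[n:] for row in a]


def bartlett_sphericity(corr, n_obs):
    """Bartlett's test: chi2 = -(n - 1 - (2p + 5) / 6) * ln|R|, df = p(p - 1) / 2."""
    p = len(corr)
    det, _ = det_and_inverse(corr)
    chi2 = -(n_obs - 1 - (2 * p + 5) / 6) * math.log(det)
    return chi2, p * (p - 1) // 2


def kmo(corr):
    """Overall KMO: sum r_ij^2 / (sum r_ij^2 + sum partial_ij^2), over i != j."""
    p = len(corr)
    _, inv = det_and_inverse(corr)
    r2 = a2 = 0.0
    for i in range(p):
        for j in range(p):
            if i != j:
                # Partial (anti-image) correlation from the inverse matrix.
                partial = -inv[i][j] / math.sqrt(inv[i][i] * inv[j][j])
                r2 += corr[i][j] ** 2
                a2 += partial ** 2
    return r2 / (r2 + a2)


# Toy 3 x 3 correlation matrix (not study data).
R = [[1.0, 0.6, 0.5],
     [0.6, 1.0, 0.4],
     [0.5, 0.4, 1.0]]
chi2, df = bartlett_sphericity(R, n_obs=200)
print(round(chi2, 2), df, round(kmo(R), 3))
print(17 * 16 // 2)  # Bartlett df for 17 items: 136
```

The closer R is to the identity matrix, the closer ln|R| is to 0 and the smaller the χ² statistic, which is why a large, significant χ² signals that the items are correlated enough to factor.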

Factors were then extracted by principal component analysis, retaining those with eigenvalues greater than 1. The solution was rotated with the varimax (maximum variance) method, loadings were sorted by size, and coefficients below 0.5 were suppressed. After this orthogonal rotation, two common factors were extracted, with a cumulative variance explained of 74.405%, indicating that the two factors account well for the information in the 17 performance items (Table 7). All rotated factor loadings exceeded 0.6, indicating good construct validity of the performance measurement items.
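The retention rule, the cumulative-variance figure, and the suppression of small loadings can be illustrated with a few lines of code. The sketch assumes the eigenvalues of the item correlation matrix are already available from an eigen-solver, and the values used are invented. Varimax itself is not implemented: because it is an orthogonal rotation, it redistributes variance among the retained factors without changing their cumulative total, so the cumulative percentage can be read from the unrotated eigenvalues.

```python
def kaiser_retain(eigenvalues):
    """Keep factors whose eigenvalue exceeds 1 (Kaiser criterion).

    For a correlation matrix the eigenvalues sum to the number of items p,
    so the retained eigenvalues divided by p give the share of total variance.
    """
    p = len(eigenvalues)
    retained = sorted((e for e in eigenvalues if e > 1), reverse=True)
    cumulative_pct = 100 * sum(retained) / p
    return retained, cumulative_pct


def suppress_small(loadings, cutoff=0.5):
    """Blank out |loading| < cutoff, as in a rotated component matrix display."""
    return [[x if abs(x) >= cutoff else None for x in row] for row in loadings]


# Toy eigenvalues for six items (they sum to 6, as for a 6 x 6 correlation matrix).
eig = [3.0, 1.5, 0.6, 0.4, 0.3, 0.2]
retained, cum = kaiser_retain(eig)
print(retained, cum)  # [3.0, 1.5] 75.0

print(suppress_small([[0.82, 0.21], [0.15, 0.77]]))
# [[0.82, None], [None, 0.77]]
```

Suppression only affects how the loading matrix is displayed; the hidden loadings still exist and still contribute to each item's communality.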

6.4. Path Analysis and Hypothesis Testing

The surveyed teachers rated their online teaching competency at an upper-middle level on all six dimensions (5-point Likert scale, from 1 = strongly disagree to 5 = strongly agree). The mean scores were: knowledge characteristics (M = 3.509), technical characteristics (M = 3.496), teaching characteristics (M = 3.570), management characteristics (M = 3.562), achievement characteristics (M = 3.544), and individual traits (M = 3.574). All participants had prior experience with online instruction, and their sustained professional training and practice in online teaching in recent years may account for their relatively high overall competency. Process performance (M = 3.623) and outcome performance (M = 3.235) also fell in the upper-middle range of the 1–5 scale. Process performance was higher than outcome performance, suggesting that the less visible gains of the online teaching process, such as learners' learning habits, interest, and engagement, should not be overlooked.

The structural model analysis clarified the competency-performance relationships. Of the 12 hypothesized paths, 10 were statistically significant (p < 0.05), supporting most of the hypotheses (Table 8). Two paths were not significant: (1) knowledge characteristics → process performance (p = 0.178 > 0.05) and (2) individual traits → outcome performance (p = 0.527 > 0.05). That is, knowledge competency characteristics did not significantly predict online teaching process performance (H1a not supported), and individual-trait competency did not significantly predict online teaching outcome performance (H6b not supported). These exceptions suggest that applying knowledge in online teaching may depend more on pedagogical integration (technical and instructional competencies) than on content knowledge alone, and that personality factors may act on outcomes through mediating factors rather than determining them directly, consistent with the reciprocal determinism of Bandura and Wessels's (1997) social cognitive theory.

Together, the six competency dimensions explained 54.8% of the variance in process performance and 45.2% of the variance in outcome performance, proportions that are high for behavioral research. Management competency characteristics had the strongest predictive effect on both process performance (β = 0.363) and outcome performance (β = 0.289). This underscores the centrality of organizational skills in virtual classrooms and suggests that teacher development programs should prioritize structured facilitation over purely technical training.
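One conventional way to put these explained-variance figures in context is Cohen's f² = R²/(1 − R²), with benchmarks of 0.02 (small), 0.15 (medium), and 0.35 (large). The sketch below applies that formula to the reported 54.8% and 45.2%; it is an illustrative yardstick, not an analysis reported in the study.

```python
def cohens_f2(r_squared):
    """Cohen's f^2 = R^2 / (1 - R^2), an effect size for explained variance."""
    if not 0 <= r_squared < 1:
        raise ValueError("R^2 must be in [0, 1)")
    return r_squared / (1 - r_squared)


def label(f2):
    """Classify f^2 against Cohen's benchmarks: 0.02 small, 0.15 medium, 0.35 large."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


# Explained variance reported for the two performance dimensions.
for outcome, r2 in [("process", 0.548), ("outcome", 0.452)]:
    f2 = cohens_f2(r2)
    print(outcome, round(f2, 3), label(f2))
```

Both values (about 1.21 and 0.82) exceed the 0.35 benchmark by a wide margin.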