Understanding the Impact of Algorithmic Discrimination on Unethical Consumer Behavior

Abstract

1. Introduction

2. Literature Review

2.1. UCB

2.2. Algorithmic Discrimination

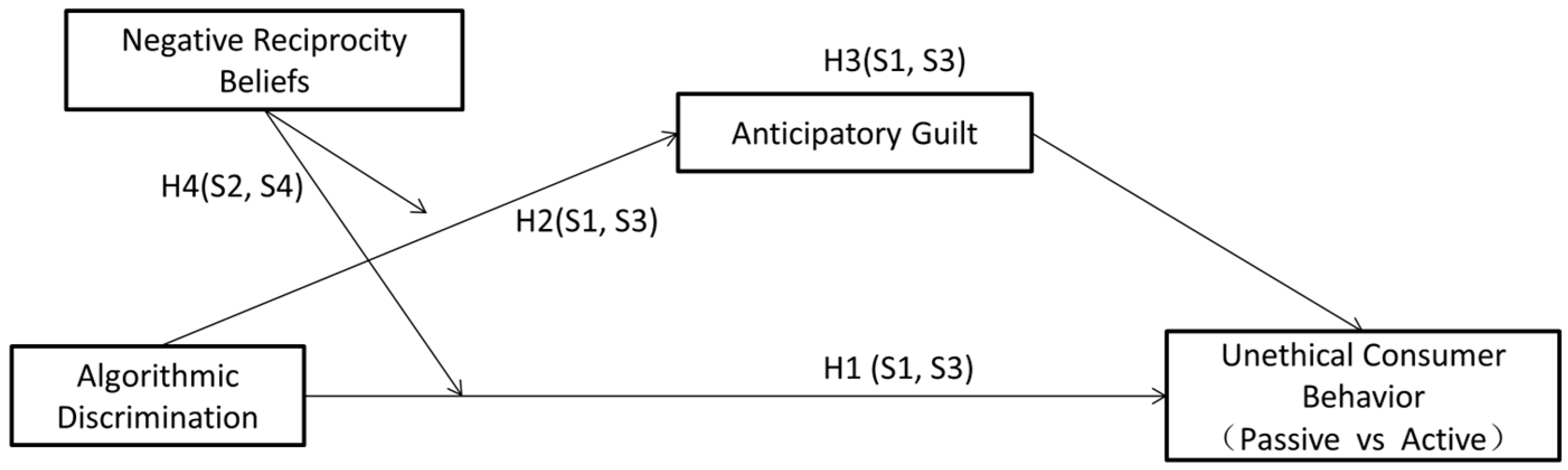

3. Hypothesis Development

3.1. Algorithmic Discrimination, Anticipatory Guilt, and UCB

3.2. The Moderating Role of Negative Reciprocity Beliefs

4. The Overview of the Research

4.1. Pre-Experimentation

4.2. Experiment 1: Algorithmic Discrimination, Anticipatory Guilt, and Passive UCB

4.2.1. Method

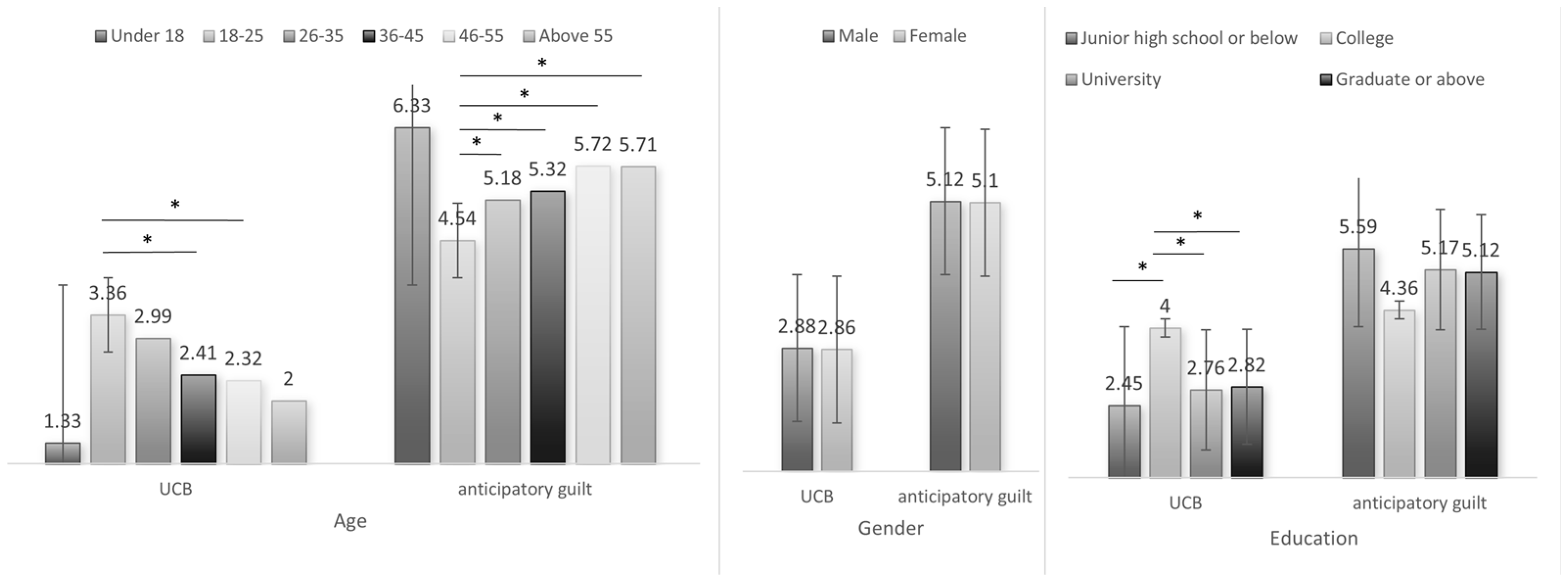

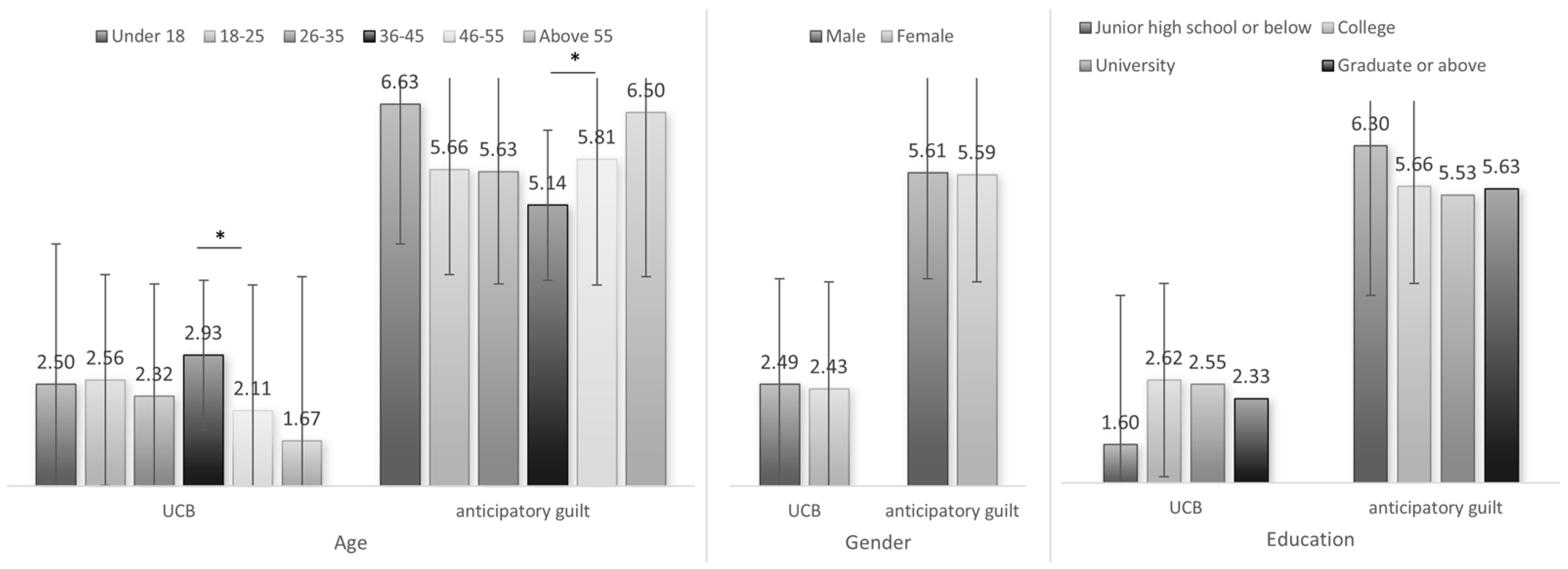

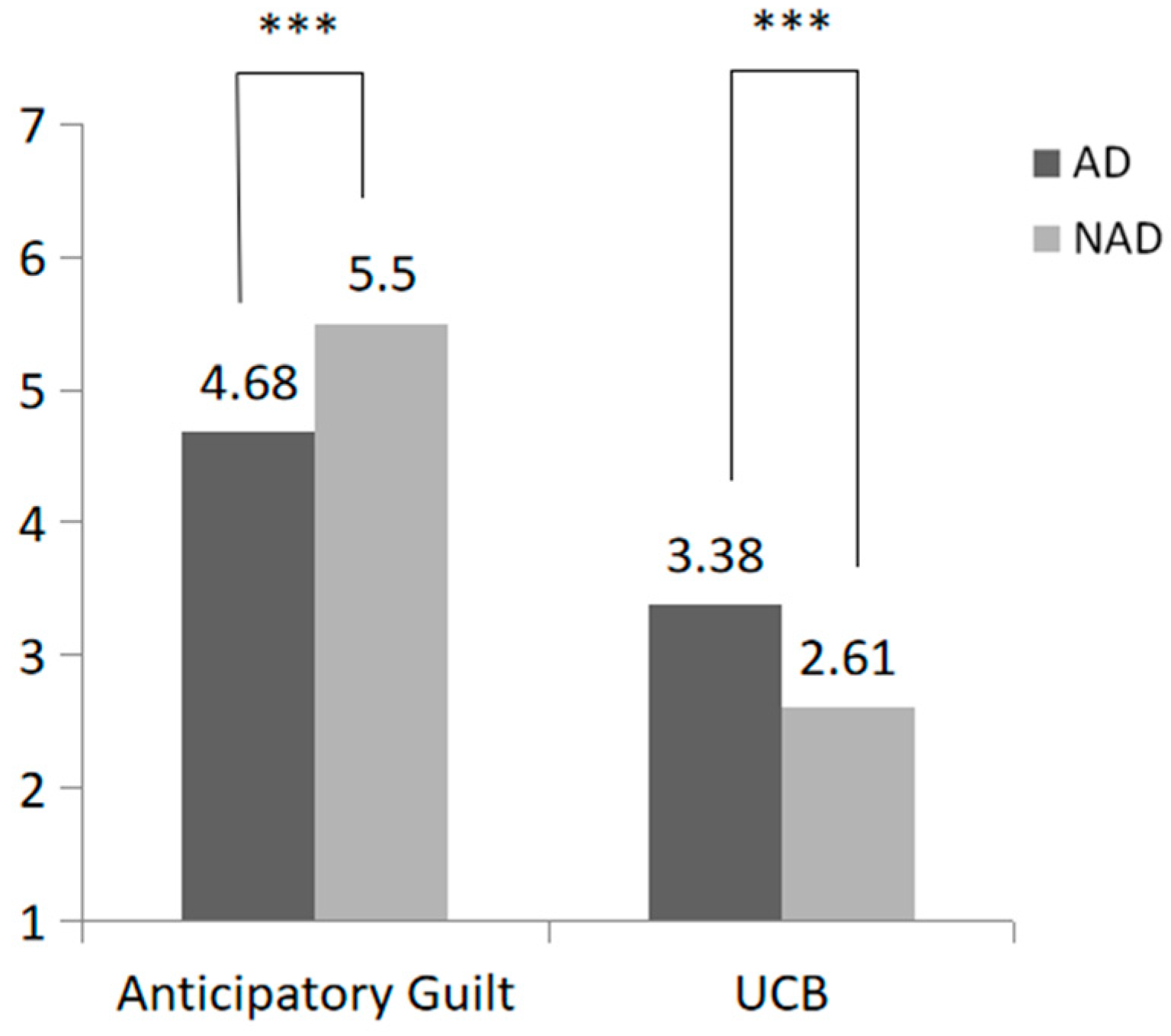

4.2.2. Results

4.3. Experiment 2: A Test of the Moderating Effect of Negative Reciprocity Preferences in the Context of Passive UCB

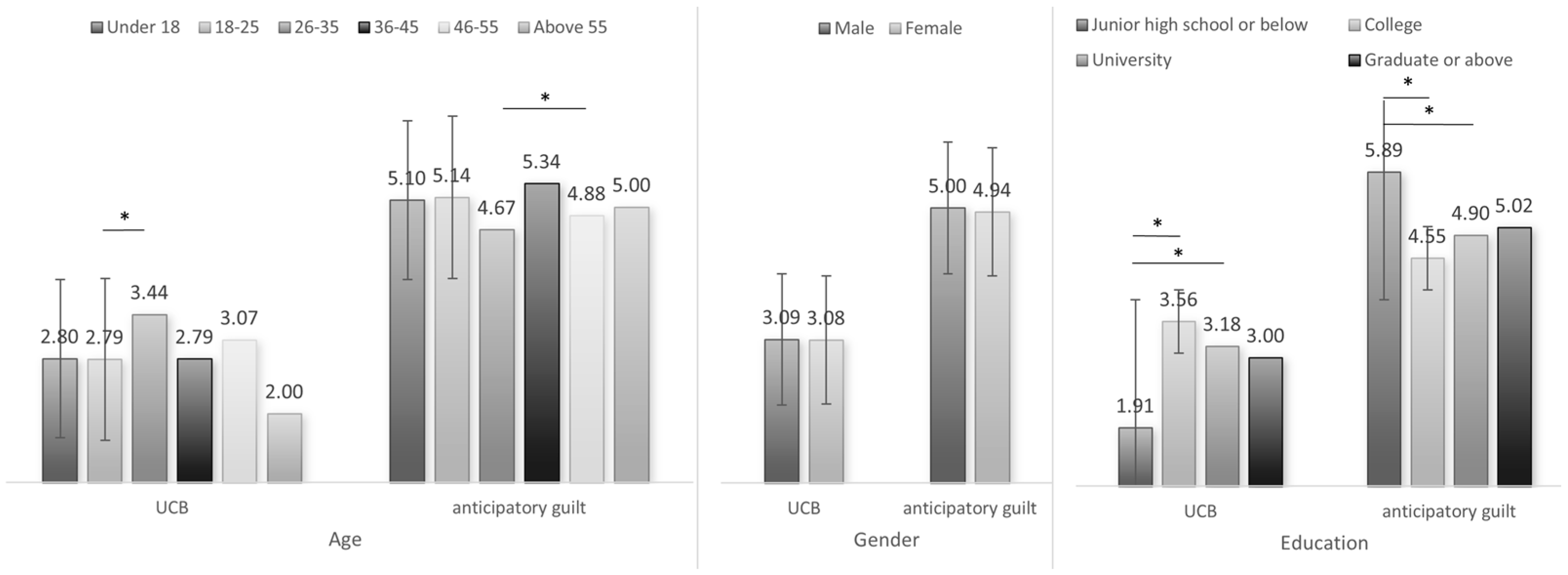

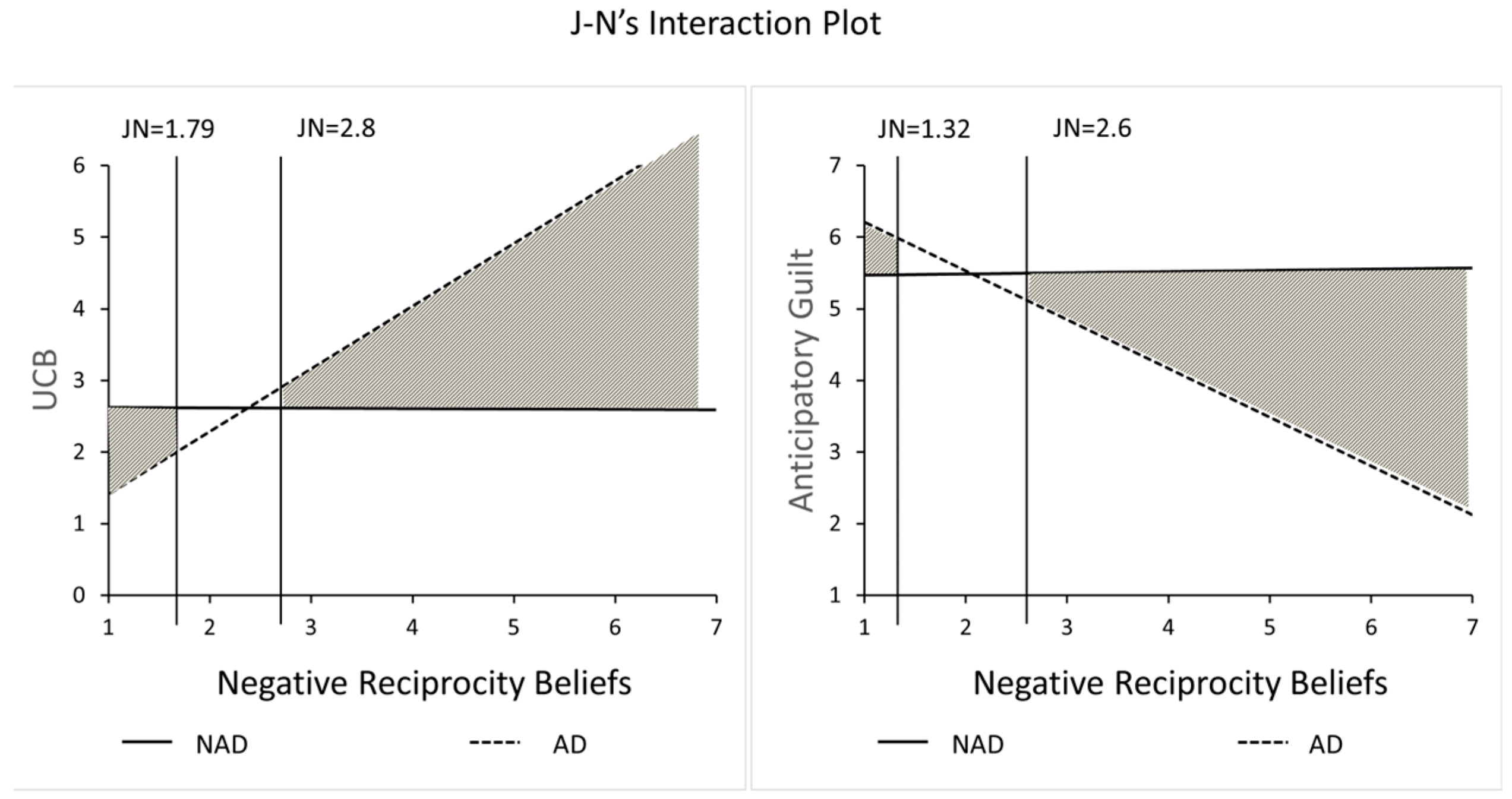

4.3.1. Method

4.3.2. Results

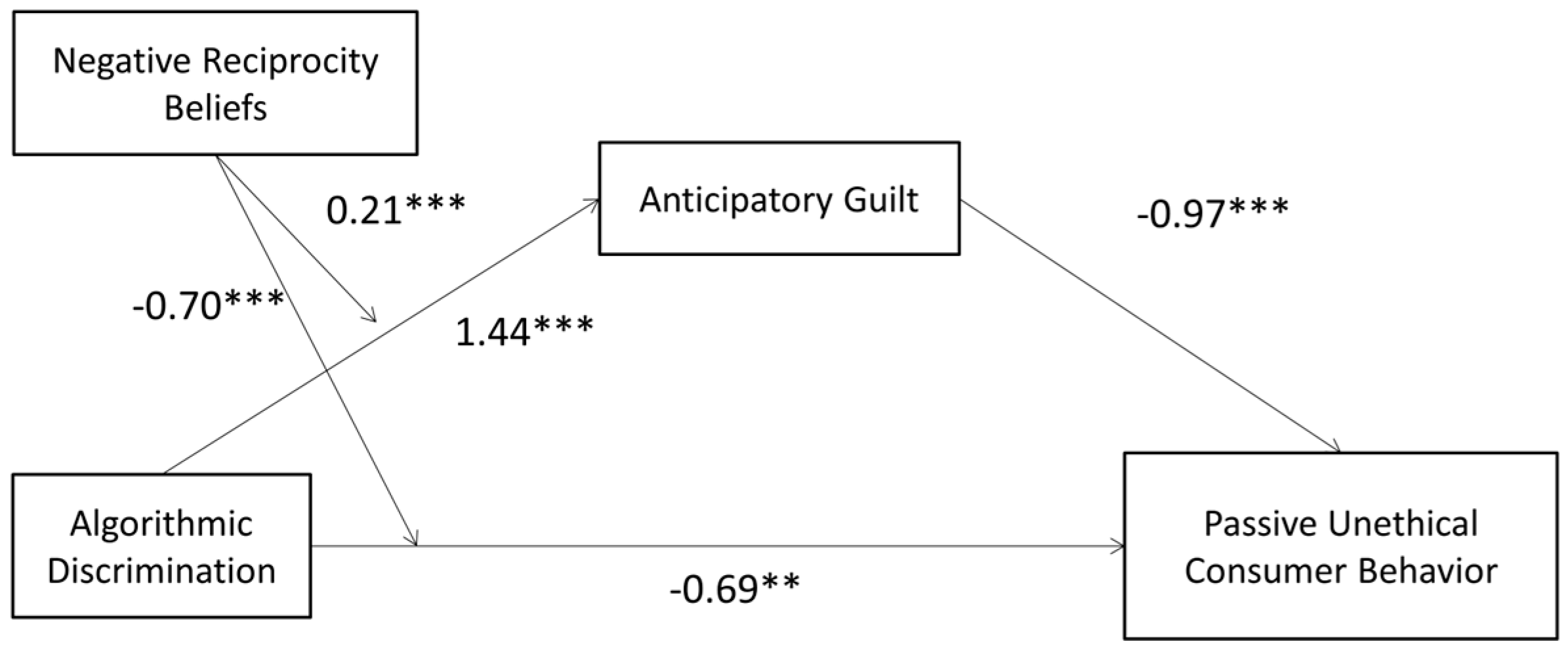

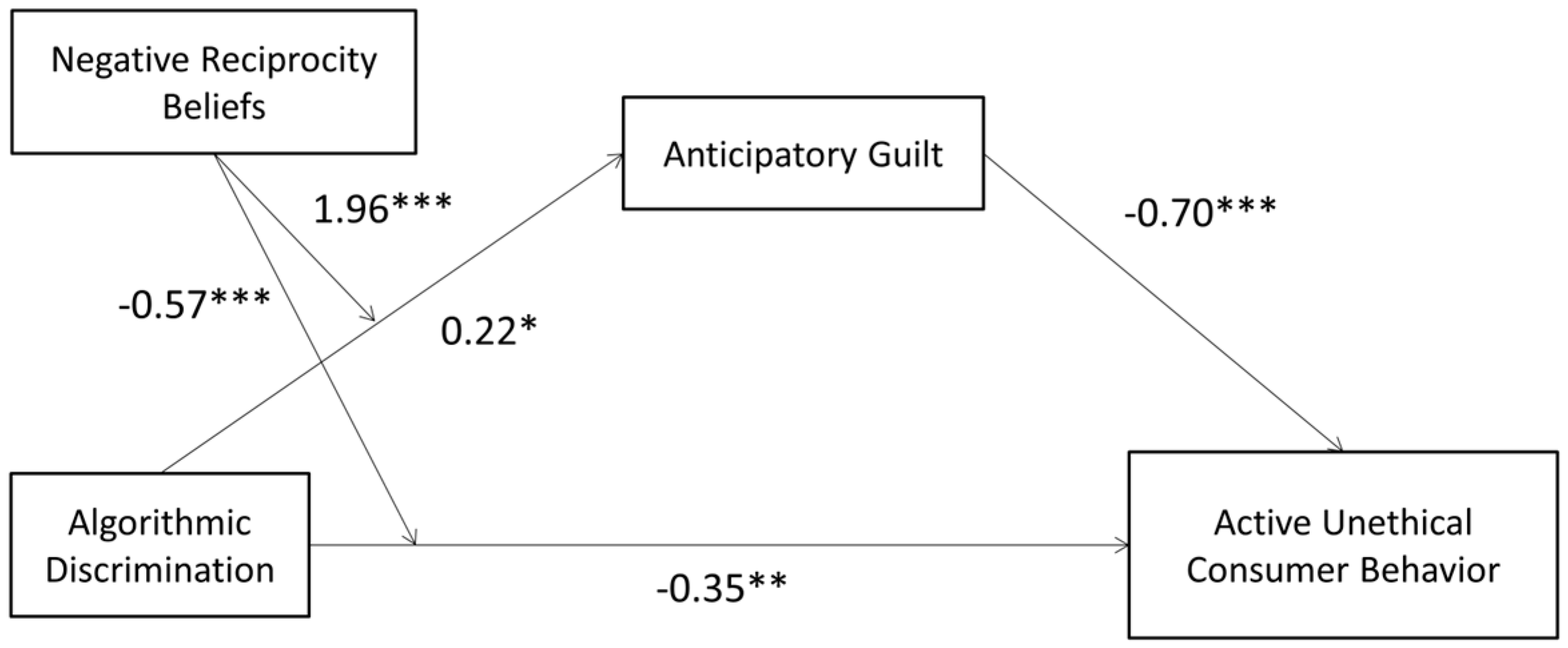

4.4. Experiment 3: Algorithmic Discrimination, Anticipatory Guilt, and Active UCB

4.4.1. Method

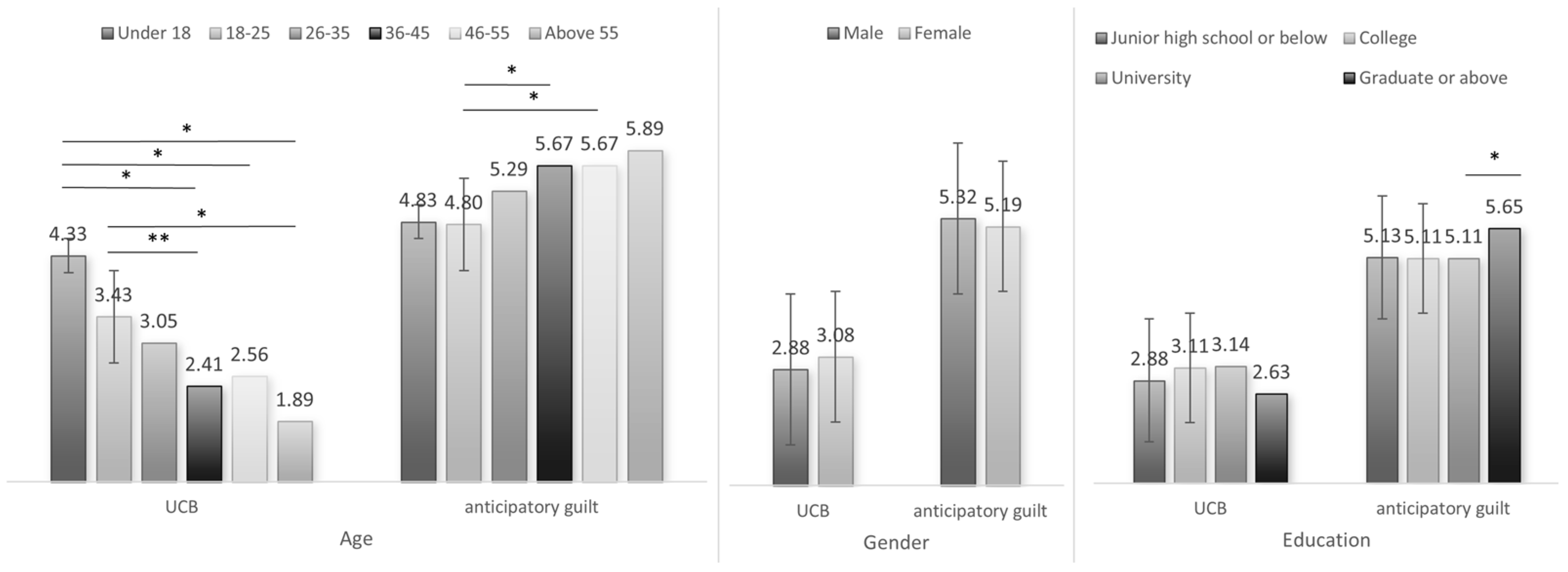

4.4.2. Results

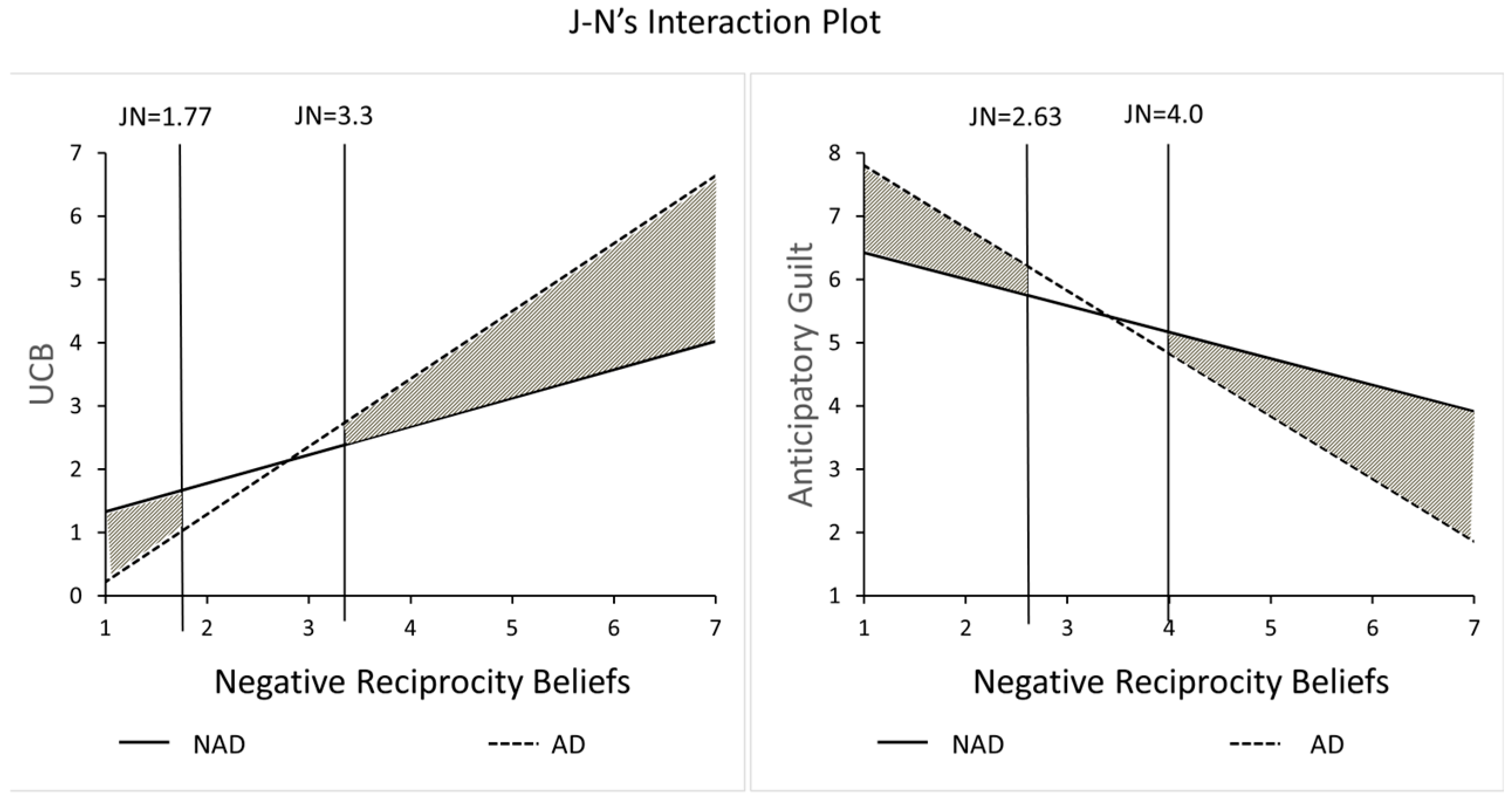

4.5. Experiment 4: The Moderating Effect of Negative Reciprocity Preferences

4.5.1. Method

4.5.2. Results

5. Discussion and Conclusions

5.1. Conclusions

5.2. Theoretical Contributions

5.3. Managerial Implications

5.4. Limitations and Directions for Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UCB | Unethical consumer behavior |
| AI | Artificial intelligence |
| PA | Perceived anger |
| PD | Perceived discrimination |
| ED | Error detectability |
| EP | Error preventability |
| EC | Error contingency |
| AD | Algorithmic discrimination |
| AG | Anticipatory guilt |

Appendix A

| Variable | S1 N | S1 % | S2 N | S2 % | S3 N | S3 % | S4 N | S4 % |
|---|---|---|---|---|---|---|---|---|
| Gender | | | | | | | | |
| Male | 74 | 37.76 | 82 | 40.39 | 89 | 39.73 | 87 | 41.04 |
| Female | 122 | 62.24 | 121 | 59.61 | 135 | 60.27 | 125 | 58.96 |
| Age | | | | | | | | |
| Under 18 | 3 | 1.53 | 6 | 2.96 | 2 | 0.89 | 5 | 2.36 |
| 18–25 | 56 | 28.57 | 69 | 33.99 | 57 | 25.45 | 62 | 29.25 |
| 26–35 | 77 | 39.29 | 62 | 30.54 | 84 | 37.50 | 91 | 42.92 |
| 35–45 | 34 | 17.35 | 39 | 19.21 | 42 | 18.75 | 39 | 18.40 |
| 45–55 | 19 | 9.69 | 18 | 8.87 | 36 | 16.07 | 14 | 6.60 |
| Above 55 | 7 | 3.57 | 9 | 4.43 | 3 | 1.34 | 1 | 0.47 |
| Education | | | | | | | | |
| Junior high school or below | 11 | 5.61 | 8 | 3.94 | 5 | 2.23 | 11 | 5.19 |
| College | 16 | 8.16 | 28 | 13.79 | 26 | 11.61 | 16 | 7.55 |
| University | 102 | 52.04 | 118 | 58.13 | 113 | 50.45 | 118 | 55.66 |
| Graduate or above | 67 | 34.18 | 49 | 24.14 | 80 | 35.71 | 67 | 31.60 |

Appendix B

Appendix B.1

- Scenario for Algorithmic Discrimination
- Perceived Prejudiced Motivation Measurement (0 = strongly disagree, 100 = strongly agree)
  - The restaurant’s AI algorithm discriminates against regular users
  - The restaurant’s AI algorithm treats people differently based on how often they use it
  - The restaurant’s AI algorithm discriminates against regular users
- Moral Outrage Measurement (0 = strongly disagree, 100 = strongly agree)
  - I am angry at the algorithmic discrimination in this restaurant
  - I am outraged by algorithmic discrimination in this restaurant
  - I am disgusted by the algorithmic discrimination in this restaurant
- Scenario for Passive UCB Condition
- Experiences with AI Agents
  - Have you had any experience with AI service robots? A. Yes, I have. B. No, I have not.
- Attention Test
  - I frequently elect to utilize AI agents in my daily activities. A. I think this description fits me very well. B. I am like this some of the time. C. Please select the first option directly. D. I hate using AI agents.
- UCB Measurement
  - If you were Zhang San, would you like to do this? (1 = strongly disagree, 7 = strongly agree)
- Perceived Guilt Measurement (1 = strongly disagree, 7 = strongly agree)
  - I would feel anxious if I did not report billing errors.
  - I would feel remorse if I did not report billing errors.
  - I would feel guilty if I did not report billing errors.
  - I would feel irresponsible if I did not report billing errors.
- Material testing
  - How similar do you think the above described scenario is to reality?
  - What do you think is the clarity of the scenario described above?
  - Do you think the scenario described above is something you could put yourself in? (1 = Absolutely not, 5 = Absolutely)

Appendix B.2

- Scenario for Algorithmic Discrimination
- Perceived Prejudiced Motivation Measurement (0 = strongly disagree, 100 = strongly agree)
  - The hotel’s AI algorithm discriminates against regular users
  - The hotel’s AI algorithm treats people differently based on how often they use it
  - The hotel’s AI algorithm discriminates against regular users
- Moral Outrage Measurement (0 = strongly disagree, 100 = strongly agree)
  - I am angry at the algorithmic discrimination in this hotel
  - I am outraged by algorithmic discrimination in this hotel
  - I am disgusted by the algorithmic discrimination in this hotel
- Scenario for Passive UCB Condition
- Negative reciprocity beliefs (1 = strongly disagree, 7 = strongly agree)
  - If someone despises you, you should despise them too.
  - If someone dislikes you, you should dislike them too.
  - If someone says something nasty to you, you should say something nasty back.
  - If someone treats you like an enemy, they deserve your resentment.
  - If someone treats me badly, I feel I should treat them even worse.
  - If someone has treated you poorly, you should not return the poor treatment.
  - You should not give help to those who treat you badly.
  - If a person wants to be your enemy, you should treat them like an enemy.
  - A person who has contempt for you deserves your contempt.

Appendix B.3

- Scenario for Algorithmic Discrimination
- Perceived Prejudiced Motivation Measurement (0 = strongly disagree, 100 = strongly agree)
  - The mall’s AI algorithm discriminates against regular users
  - The mall’s AI algorithm treats people differently based on how often they use it
  - The mall’s AI algorithm discriminates against regular users
- Moral Outrage Measurement (0 = strongly disagree, 100 = strongly agree)
  - I am angry at the algorithmic discrimination in this mall
  - I am outraged by algorithmic discrimination in this mall
  - I am disgusted by the algorithmic discrimination in this mall
- Scenario for Active UCB Condition
- Perceived Guilt Measurement (1 = strongly disagree, 7 = strongly agree)
  - I would feel anxious if I used an expired coupon.
  - I would feel remorse if I used an expired coupon.
  - I would feel guilty if I used an expired coupon.
  - I would feel irresponsible if I used an expired coupon.
- Perceived Detectability Measurement
  - How would you estimate the probability that the mall will detect and correct this error in the future? (1 = very impossible, 7 = very possible)
- Preventability Measurement
  - How preventable was the mistake? (1 = not at all preventable, 7 = highly preventable)
- Accidental Mistake Measurement
  - Was it an accidental mistake? (1 = not at all accidental, 7 = very much accidental)

Appendix B.4

- Scenario for Algorithmic Discrimination
- Perceived Prejudiced Motivation Measurement (0 = strongly disagree, 100 = strongly agree)
  - The mall’s AI algorithm discriminates against regular users
  - The mall’s AI algorithm treats people differently based on how often they use it
  - The mall’s AI algorithm discriminates against regular users
- Moral Outrage Measurement (0 = strongly disagree, 100 = strongly agree)
  - I am angry at the algorithmic discrimination in this mall
  - I am outraged by algorithmic discrimination in this mall
  - I am disgusted by the algorithmic discrimination in this mall
- Scenario for Active UCB Condition

Appendix C

Appendix C.1. Experiment 1

Appendix C.2. Experiment 2

Appendix C.3. Experiment 3

Appendix C.4. Experiment 4

| Variable | Item | Mean | Cronbach’s Alpha | Composite Reliability |
|---|---|---|---|---|
| Anticipatory guilt | AG1: I would feel anxious if I did not report billing errors. AG2: I would feel remorse if I did not report billing errors. AG3: I would feel guilty if I did not report billing errors. AG4: I would feel irresponsible if I did not report billing errors. | 4.593 | 0.967 | 0.967 |
| Anticipatory guilt | AG1: I would feel anxious if I used an expired coupon. AG2: I would feel remorse if I used an expired coupon. AG3: I would feel guilty if I used an expired coupon. AG4: I would feel irresponsible if I used an expired coupon. | 5.08 | 0.957 | 0.958 |
| Perceived discrimination | PD1: The restaurant’s AI algorithm discriminates against regular users. PD2: The restaurant’s AI algorithm treats people differently based on how often they use it. PD3: The restaurant’s AI algorithm discriminates against regular users. | 81.441 | 0.851 | 0.846 |
| Perceived anger | PA1: I am angry at the algorithmic discrimination in this restaurant. PA2: I am outraged by algorithmic discrimination in this restaurant. PA3: I am disgusted by the algorithmic discrimination in this restaurant. | 83.192 | 0.922 | 0.923 |
| Negative reciprocity beliefs | NRB1: If someone despises you, you should despise them too. NRB2: If someone dislikes you, you should dislike them too. NRB3: If someone says something nasty to you, you should say something nasty back. NRB4: If someone treats you like an enemy, they deserve your resentment. NRB5: If someone treats me badly, I feel I should treat them even worse. NRB6: If someone has treated you poorly, you should not return the poor treatment. NRB7: You should not give help to those who treat you badly. NRB8: If a person wants to be your enemy, you should treat them like an enemy. NRB9: A person who has contempt for you deserves your contempt. | 3.859 | 0.889 | 0.890 |
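The Cronbach's alpha values in the table above summarize how consistently the items of each scale move together. As a minimal sketch, assuming the conventional formula alpha = k / (k - 1) * (1 - sum of item variances / variance of the summed score), the calculation can be illustrated as follows. The function name `cronbach_alpha` and the response matrix are hypothetical and are not the study's data or code.

```python
from statistics import variance

def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(rows[0])
    items = list(zip(*rows))                            # one tuple of scores per item
    item_var_sum = sum(variance(col) for col in items)  # sum of sample item variances
    total_var = variance([sum(r) for r in rows])        # variance of the summed scale
    return k / (k - 1) * (1 - item_var_sum / total_var)

# Hypothetical 7-point responses from five respondents to a four-item scale.
responses = [
    [5, 6, 5, 6],
    [2, 2, 3, 2],
    [7, 6, 7, 7],
    [4, 4, 4, 5],
    [3, 3, 2, 3],
]

alpha = cronbach_alpha(responses)
print(round(alpha, 3))
```

Alpha approaches 1 when respondents who score high on one item also score high on the others, which is the pattern the made-up responses were chosen to show.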


| Variable | M | SD | Algorithmic Discrimination | Anticipatory Guilt | UCB |
|---|---|---|---|---|---|
| Algorithmic Discrimination | 0.50 | 0.50 | 1 | | |
| Anticipatory Guilt | 2.87 | 1.99 | −0.244 ** | 1 | |
| UCB | 5.11 | 1.71 | 0.298 *** | −0.902 *** | 1 |

| Variable | Model 1 β | Model 1 t | Model 2 β | Model 2 t | Model 3 β | Model 3 t |
|---|---|---|---|---|---|---|
| Algorithmic Discrimination | 1.18 | 4.36 *** | −0.83 | −3.49 *** | 0.33 | 2.66 ** |
| Anticipatory Guilt | | | | | −1.03 | −27.97 *** |
| R² | 0.09 | | 0.24 | | 0.91 | |
| F | 18.98 *** | | 12.25 *** | | 438.86 *** | |

| Effect | β | SE | Bootstrap 95% LLCI | Bootstrap 95% ULCI | Proportion of Total Effect |
|---|---|---|---|---|---|
| Total effect | 1.18 | 0.27 | 0.65 | 1.72 | |
| Direct effect | 0.33 | 0.13 | 0.09 | 0.58 | |
| Indirect effect | 0.85 | 0.24 | 0.36 | 1.31 | 72% |
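The effect table above can be cross-checked against the three-model regression table that precedes it. The sketch below is an illustrative check and not the authors' analysis script. It assumes that Model 2 regresses anticipatory guilt on algorithmic discrimination and that Model 3 regresses UCB on both predictors, in which case the indirect effect is the product of the two path coefficients. The variable names are hypothetical.

```python
# Coefficients copied from the regression table; the path labels are assumptions.
a = -0.83       # algorithmic discrimination -> anticipatory guilt (assumed Model 2)
b = -1.03       # anticipatory guilt -> UCB, holding discrimination fixed (assumed Model 3)
direct = 0.33   # algorithmic discrimination -> UCB, holding guilt fixed (assumed Model 3)
total = 1.18    # algorithmic discrimination -> UCB (Model 1)

indirect = a * b               # product-of-coefficients indirect effect
proportion = indirect / total  # share of the total effect carried by anticipatory guilt

print(round(indirect, 2))           # 0.85
print(round(direct + indirect, 2))  # 1.18
print(round(proportion, 2))         # 0.72
```

Under these assumptions the reported figures are mutually consistent: the direct and indirect effects sum to the total effect, and the 72% figure is the indirect effect divided by the total effect.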

| Effect | β | SE | Bootstrap 95% LLCI | Bootstrap 95% ULCI | Proportion of Total Effect |
|---|---|---|---|---|---|
| Total effect | 1.50 | 0.20 | 1.1042 | 1.8958 | |
| Direct effect | 0.63 | 0.14 | 0.3410 | 0.9123 | |
| Indirect effect | 0.87 | 0.15 | 0.5759 | 1.1759 | 58% |

| Variable | AD | UCB | PD | PA | AG | ED | EP | EC |
|---|---|---|---|---|---|---|---|---|
| AD | 1 | | | | | | | |
| UCB | 0.45 *** | 1 | | | | | | |
| PD | 0.98 *** | 0.45 *** | 1 | | | | | |
| PA | 0.98 *** | −0.78 *** | 0.99 *** | 1 | | | | |
| AG | −0.37 *** | 0.40 *** | −0.35 *** | −0.33 *** | 1 | | | |
| ED | −0.11 | −0.16 * | −0.12 | −0.11 | 0.11 | 1 | | |
| EP | −0.11 | −0.16 * | −0.12 | 0.20 | 0.19 ** | 0.35 *** | 1 | |
| EC | −0.17 * | −0.09 | −0.20 ** | −0.18 ** | −0.01 | 0.26 *** | 0.22 ** | 1 |

| Variable | β | BootSE | BootLLCI | BootULCI |
|---|---|---|---|---|
| PD | 0.015 | 0.014 | −0.019 | 0.035 |
| PA | 0.006 | 0.015 | −0.017 | 0.042 |
| DA | −0.815 | 0.051 | −0.918 | −0.718 |
| ED | −1.116 | 0.090 | −0.302 | 0.054 |
| EP | 0.084 | 0.085 | −0.090 | 0.245 |
| EC | −0.061 | 0.060 | −0.176 | 0.057 |
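The BootLLCI and BootULCI columns above are bootstrap confidence limits for indirect effects. As a rough sketch of how such limits can be obtained, the example below runs a percentile bootstrap for a single mediator on simulated data. The percentile method, the single-mediator model, the helper names (`centered_sum`, `indirect_effect`), and the data-generating values are all assumptions chosen for illustration; the source does not state which bootstrap variant or software was used.

```python
import random

def centered_sum(u, v):
    """Sum of cross-products of u and v around their means."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((p - mu) * (q - mv) for p, q in zip(u, v))

def indirect_effect(x, m, y):
    """Product-of-coefficients estimate a*b for the path x -> m -> y."""
    sxx, smm, sxm = centered_sum(x, x), centered_sum(m, m), centered_sum(x, m)
    sxy, smy = centered_sum(x, y), centered_sum(m, y)
    a = sxm / sxx                                         # slope of m on x
    b = (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)  # slope of y on m, holding x fixed
    return a * b

rng = random.Random(0)  # fixed seed so the sketch is reproducible
n = 200
# Simulated (hypothetical) data: x is a 0/1 condition, m a mediator, y an outcome.
x = [i % 2 for i in range(n)]
m = [5.0 - 0.8 * xi + rng.gauss(0, 1) for xi in x]
y = [2.0 + 0.3 * xi - 1.0 * mi + rng.gauss(0, 1) for xi, mi in zip(x, m)]

point = indirect_effect(x, m, y)

# Percentile bootstrap: resample respondents with replacement, re-estimate a*b each time.
n_boot = 1000
estimates = []
for _ in range(n_boot):
    idx = [rng.randrange(n) for _ in range(n)]
    estimates.append(indirect_effect([x[i] for i in idx],
                                     [m[i] for i in idx],
                                     [y[i] for i in idx]))
estimates.sort()
llci = estimates[n_boot * 25 // 1000]       # 2.5th percentile
ulci = estimates[n_boot * 975 // 1000 - 1]  # 97.5th percentile
print(f"indirect = {point:.3f}, 95% CI = [{llci:.3f}, {ulci:.3f}]")
```

An indirect effect is conventionally treated as significant when the interval excludes zero, which is how rows of a table like the one above are usually read.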

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sun, B.; Pei, S.; Wang, Q.; Meng, X. Understanding the Impact of Algorithmic Discrimination on Unethical Consumer Behavior. Behav. Sci. 2025, 15, 494. https://doi.org/10.3390/bs15040494