Listening Beyond the Source: Exploring the Descriptive Language of Musical Sounds

Abstract

1. Introduction

2. Sound Qualities Perception and Descriptive Language: A Short Overview

3. Sound Qualities in Perception: Cross-Modal Perception and Fields

3.1. Defining Perceptual Sound Quality

3.2. Emergence of Cross-Modal Perceptive Fields in Sound Quality

3.2.1. The Field of Matter

3.2.2. The Field of Forms

3.2.3. The Field of Positions

3.3. The Qualities and Attributes of Sound Matter Field

- Color embodies the harmonic and spectral richness of sound and shapes its timbral identity. Attributes such as “bright” and “dull” are indicative of color.

- Texture encompasses sensations ranging from granular, where individual sound particles are discernible and evoke roughness, to smooth and continuous, where no elements disrupt the sense of uniformity.

- Density refers to the compactness of internal sound structures at a given moment, or how they evolve over time, influencing perceptions of fullness or sparseness relative to other sounds. For example, terms such as “opaque” and “transparent” are often associated with density.

3.3.1. Sound Color

- A sound is perceived as “bright” when its spectral energy is strongly weighted towards high frequencies, often giving it crispness and sharpness. The greater the high-frequency content, the brighter the sound, as in the ringing of a bell or the sound of a violin.

- A sound perceived as “dull” lacks high-frequency energy, giving it a muted or subdued quality. Its spectral content lies predominantly in the low to mid frequencies, with little or no high-frequency emphasis, so it feels less sharp and more rounded, like the low hum of a foghorn.

- A sound perceived as “dark” has a spectrum dominated by low frequencies, making it feel heavy or grounded. A bass drum or the low growl of an instrument is perceived as dark because of the dominance of lower frequencies.

- “Clarity” refers to the perceived distinctiveness of a sound, often linked to its spectral composition. Clear sounds tend to have well-defined harmonic content with minimal overlap or masking by other frequencies. Sounds with good clarity allow the listener to easily distinguish between different components, such as a pure piano note or a clean vocal recording.
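The colour descriptors above tie “bright”, “dull” and “dark” to how spectral energy is split between high and low frequencies. As a rough illustration only, the sketch below measures the share of a signal's spectral energy at or above a cutoff frequency, assuming NumPy. The function name `high_frequency_ratio` and the 1500 Hz cutoff are hypothetical choices, not parameters from the survey, and a single number of this kind cannot capture the perceptual judgements reported later.

```python
import numpy as np


def high_frequency_ratio(signal: np.ndarray, sr: int, cutoff_hz: float = 1500.0) -> float:
    """Return the share (0..1) of spectral energy at or above cutoff_hz.

    Hypothetical brightness proxy: the 1500 Hz default is an illustrative
    assumption, not a threshold taken from the SEQR survey.
    """
    windowed = signal * np.hanning(len(signal))  # taper edges to limit spectral leakage
    power = np.abs(np.fft.rfft(windowed)) ** 2   # one-sided power spectrum
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sr)
    total = power.sum()
    if total == 0.0:
        return 0.0  # silent input: no energy to distribute
    return float(power[freqs >= cutoff_hz].sum() / total)


sr = 44100
t = np.arange(sr) / sr                 # one second of sample times
low = np.sin(2 * np.pi * 200.0 * t)    # energy far below the cutoff
high = np.sin(2 * np.pi * 3000.0 * t)  # energy far above the cutoff
print(high_frequency_ratio(low, sr), high_frequency_ratio(high, sr))
```

A 200 Hz sine gives a ratio close to 0 and a 3000 Hz sine a ratio close to 1; real sounds fall in between, and the result depends heavily on where the cutoff is placed.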

3.3.2. Sound Texture

- A “smooth” sound is perceived as continuous and flowing, with no abrupt changes or harsh interruptions. The spectral content is stable or transitions gradually, providing a steady, uniform auditory experience. An example would be a sine wave, where the sound is even and free of jagged variations.

- A “rough” sound is characterized by irregular, erratic micro-fluctuations in the amplitude or frequency of each spectral component. These unpredictable variations give the waveform an irregular shape and produce a jagged, abrasive, or harsh auditory sensation.

- A “granular” sound is made up of small, discrete sound particles or “grains” that are superimposed or densely packed. These sounds have a textured, “grainy” quality, often resembling a series of tiny clicks, bursts, or noise-like sounds that, when combined, form a continuous but textured sound. Granular synthesis specifically creates this texture by arranging small grains of sound into a cohesive auditory experience.

- “Striated” sounds show a regular, repeating pattern or structure, similar to stripes or lines. These sounds often have a rhythmic, periodic quality, such as the hum of a machine or the sustained note of an instrument. The rapid repetition and cyclical nature of the internal elements of the sound create an organized, patterned texture over time.

- An “iterative” sound involves the repetition of a pattern or structure over time, usually with slight variations from one iteration to the next. Unlike striated sounds, which exhibit regularity, iterative sounds tend to evolve slightly with each repetition. They can evoke a sense of rhythm or repetitive motion, but with subtle shifts in each cycle.
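Granular synthesis, mentioned in the description of granular sounds, builds a texture by overlapping many short, windowed fragments of sound. The sketch below is a minimal overlap-add version, assuming NumPy; the function `granular_texture`, its parameters and its defaults are hypothetical and are not the Csound instruments used to generate the survey stimuli.

```python
import numpy as np


def granular_texture(source: np.ndarray, sr: int, duration_s: float = 1.0,
                     grain_ms: float = 30.0, density_hz: float = 200.0,
                     seed: int = 0) -> np.ndarray:
    """Overlap-add Hann-windowed grains cut from random points of `source`.

    All parameter names and defaults are hypothetical illustration values.
    """
    rng = np.random.default_rng(seed)
    grain_len = int(sr * grain_ms / 1000.0)
    if len(source) <= grain_len:
        raise ValueError("source must be longer than one grain")
    out_len = int(sr * duration_s)
    out = np.zeros(out_len + grain_len)  # padding so grains near the end fit
    window = np.hanning(grain_len)       # tapered grain envelope avoids edge discontinuities
    for _ in range(int(density_hz * duration_s)):  # total number of grains
        src = rng.integers(0, len(source) - grain_len)  # where the grain is read
        dst = rng.integers(0, out_len)                  # where the grain is placed
        out[dst:dst + grain_len] += source[src:src + grain_len] * window
    out = out[:out_len]
    peak = np.max(np.abs(out))
    return out / peak if peak > 0 else out  # normalise to a peak of 1


sr = 44100
noise = np.random.default_rng(1).standard_normal(sr)  # stand-in source material
texture = granular_texture(noise, sr)
```

Lowering `density_hz` leaves gaps between grains so that individual clicks become audible, while raising it merges them into a continuous, noise-like texture, which mirrors the range from discrete particles to densely packed grains described above.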

4. The Sound Entity Quality Research Survey (SEQR)

- Participant Characterization and Survey Assessment

- Listening and Responding

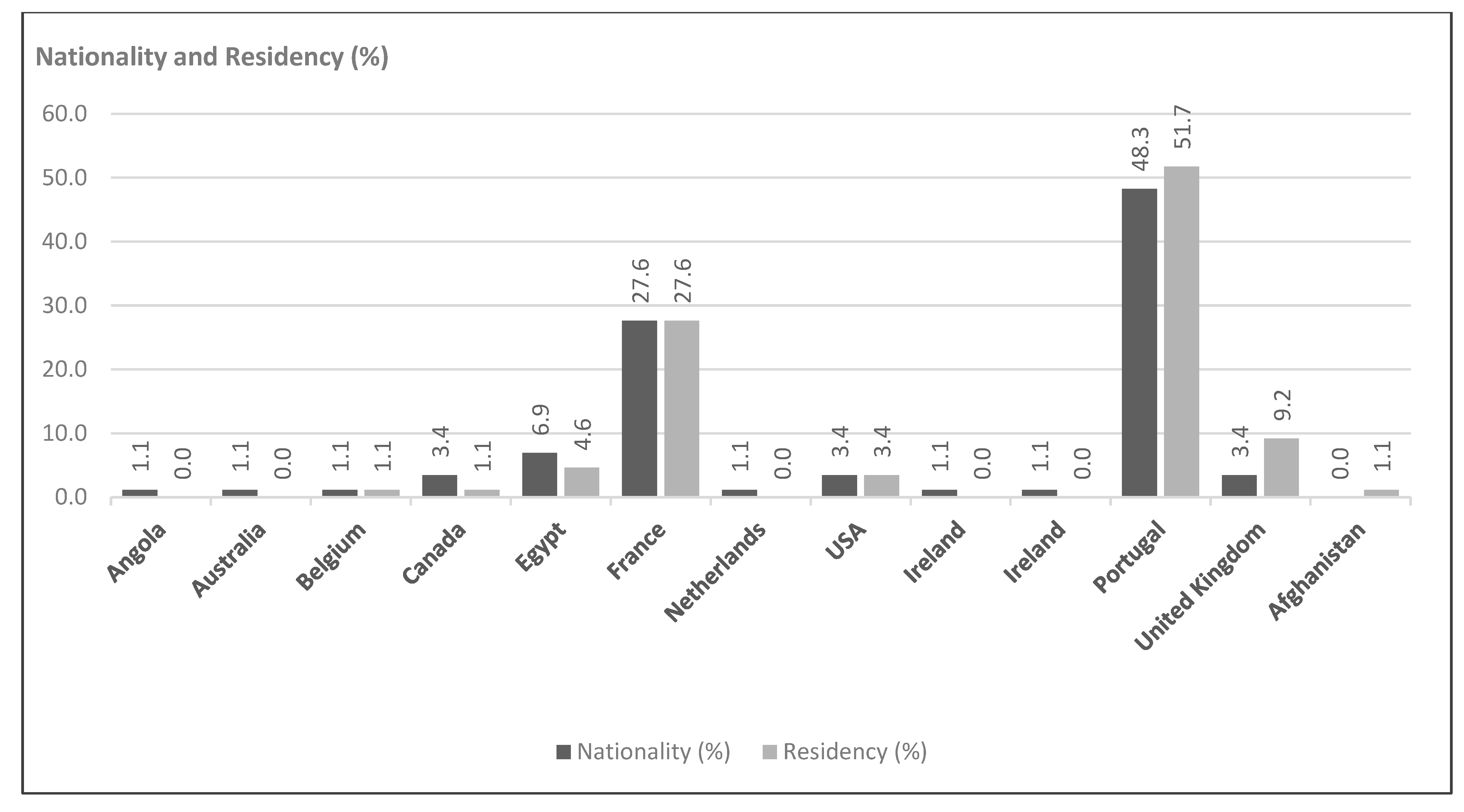

4.1. Participant Characterisation

- Amateurs: Non-professional musicians who could indicate their preferred musical genres (e.g., classical, electroacoustic, pop, jazz).

- Professionals: Music or sound professionals, who could specify their role (e.g., composer, performer) and primary musical genres.

4.2. Survey Assessment

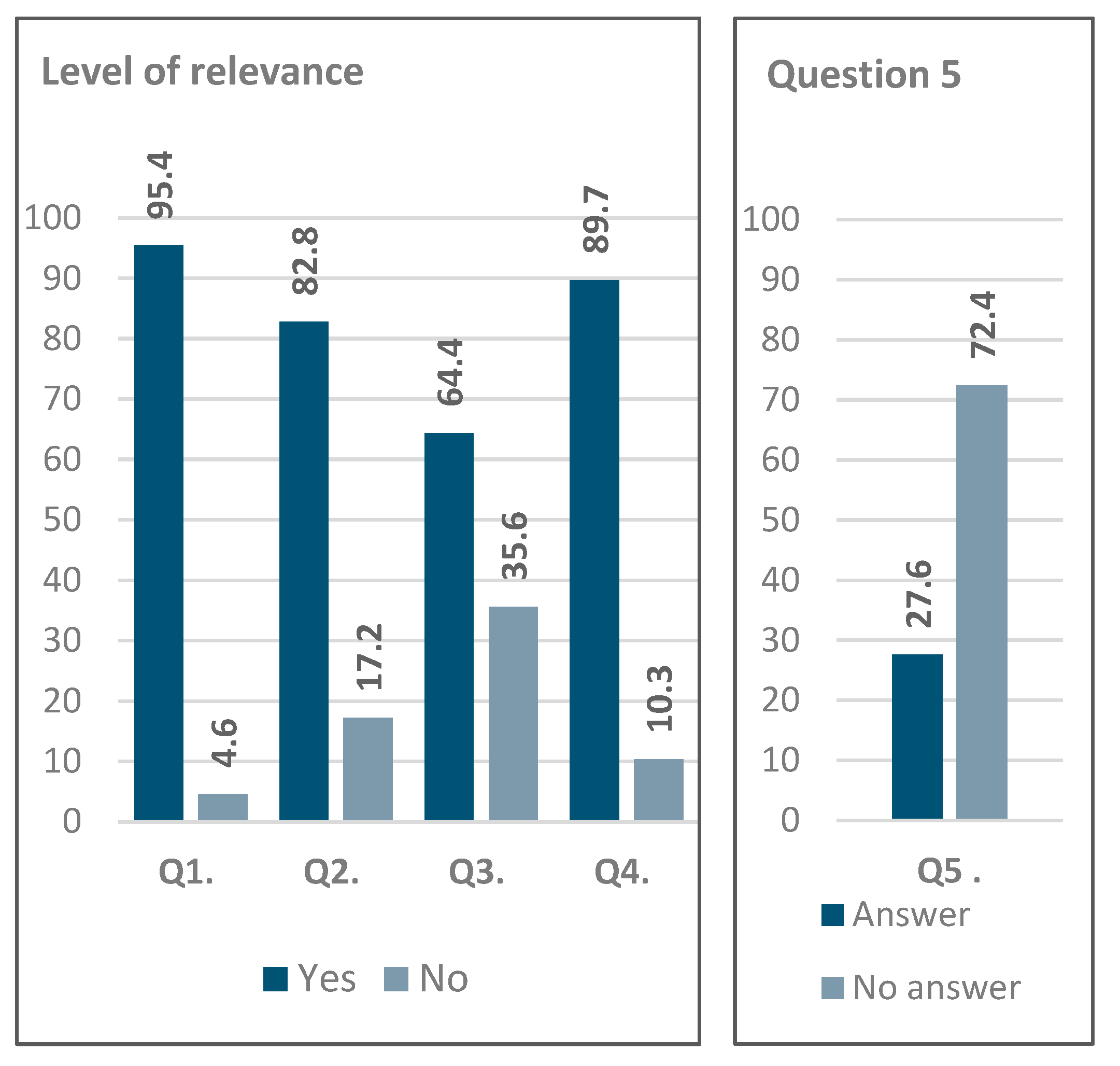

- Q1: Do you pay attention to sound qualities other than identifying the sound source? [Yes/No]

- Q2: Do you use music software? [Yes/No]

- Q3: Do you use sound manipulation software? [Yes/No]

- Q4: Would a perceptual sound manipulation interface be useful? [Yes/No]

- Q5: Could perceptual sound studies have applications beyond music and sound manipulation? [Open-ended question]

- Artistic and Creative Applications: Enhancing sound production for artists, including creators and performers, sound design for film, ambient sound creation, and optimizing soundtrack production tools for both amateurs and professionals.

- Educational and Therapeutic Uses: Applications in music education and therapy, in particular to improve auditory perception and sound quality recognition.

- Technological Advancements: Development of user-friendly sound manipulation interfaces for marketing, human–computer interaction, and ecological sound design.

- Scientific and Analytical Applications: Advancing data sonification, the semiology of music, and the study of sound–image relationships.

- Human Perception Insights: Investigating the brain’s perception of sound and improving the link between composers’ intentions and audiences’ perceptions.

4.3. Listening and Responding

- Matter Field: 18 sounds assessed on their internal or surface qualities, including a simple 1000 Hz sine wave as a test sound.

- Form Field: 12 sounds evaluated for shape or contour characteristics.

- Position Field: 12 sounds rated for spatial qualities, such as perceived position and movement.

5. Intrinsic Sound Qualities: SEQR Survey Results Analysis

| Sound | Profile | Generation Method |

|---|---|---|

| #1 | Sine wave. (1000 Hz) | Simple generator; CSound |

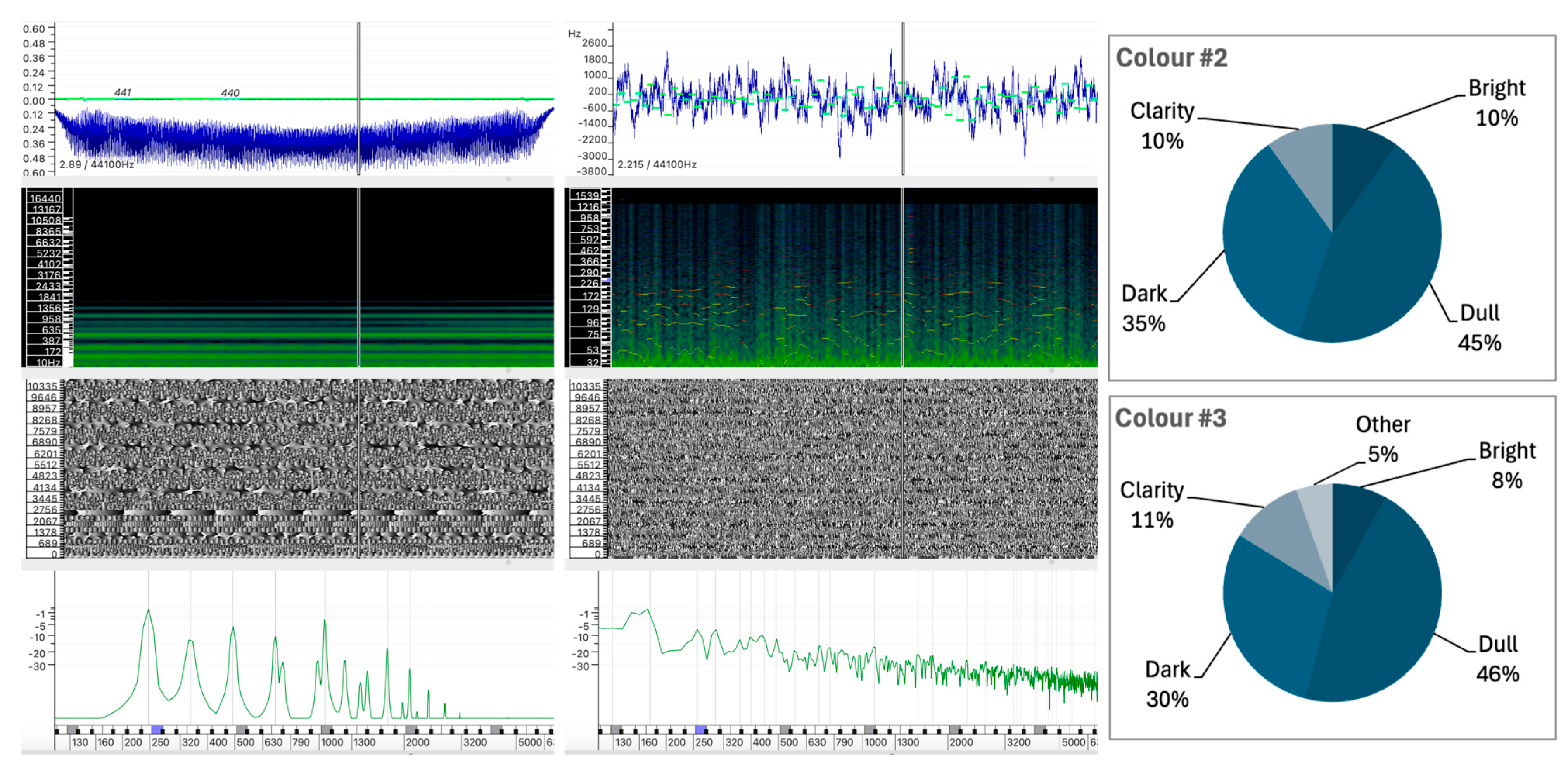

| #2 | Near-harmonic spectrum with two prominent spectral peaks in the medium register | Modulated additive synthesis; CSound |

| #3 | White noise, slightly filtered in a medium-low register | Noise generator; CSound |

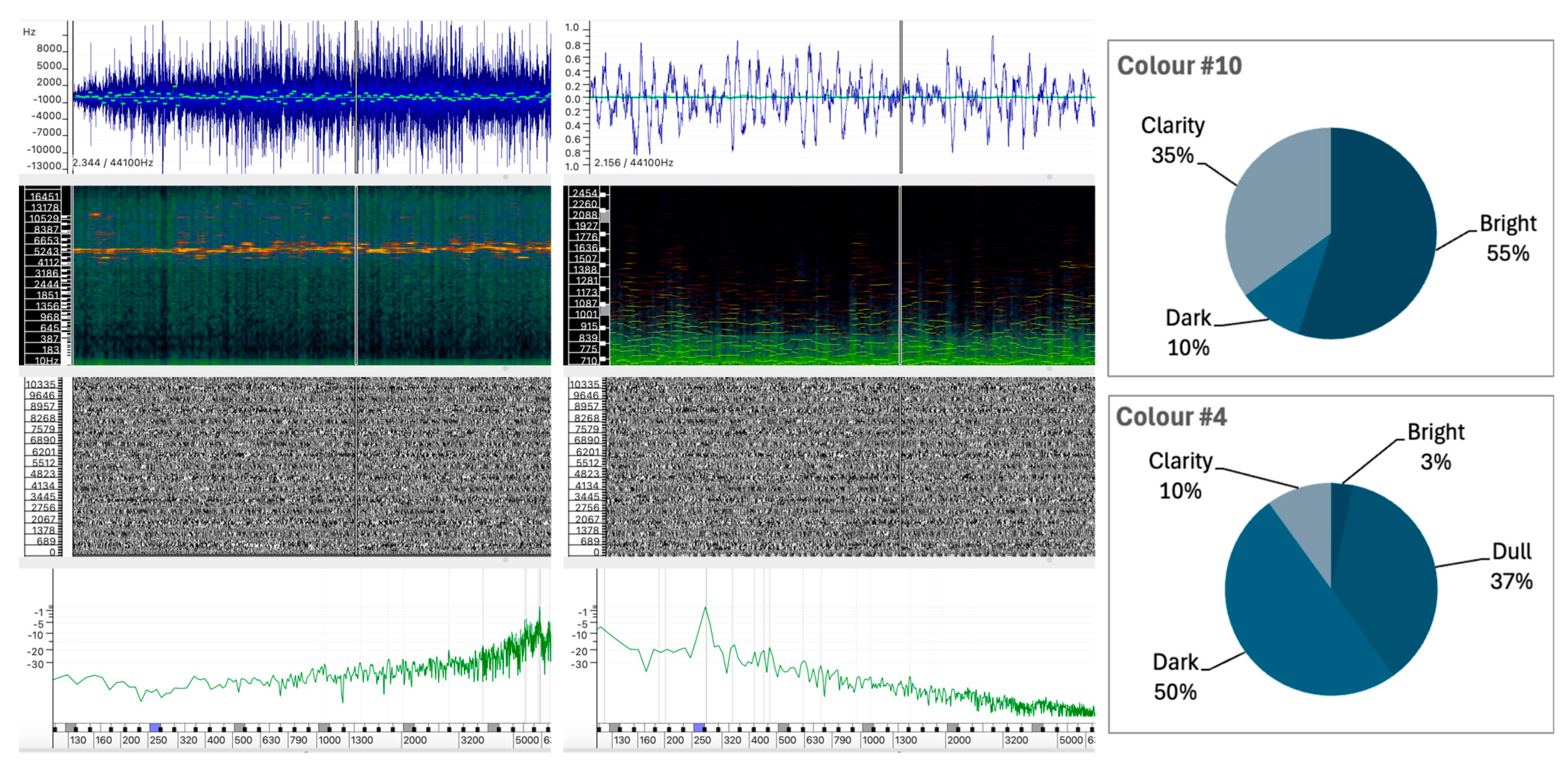

| #4 | Natural noise recording, processed to remove causal references | Natural noise manipulated; Audacity/CSound |

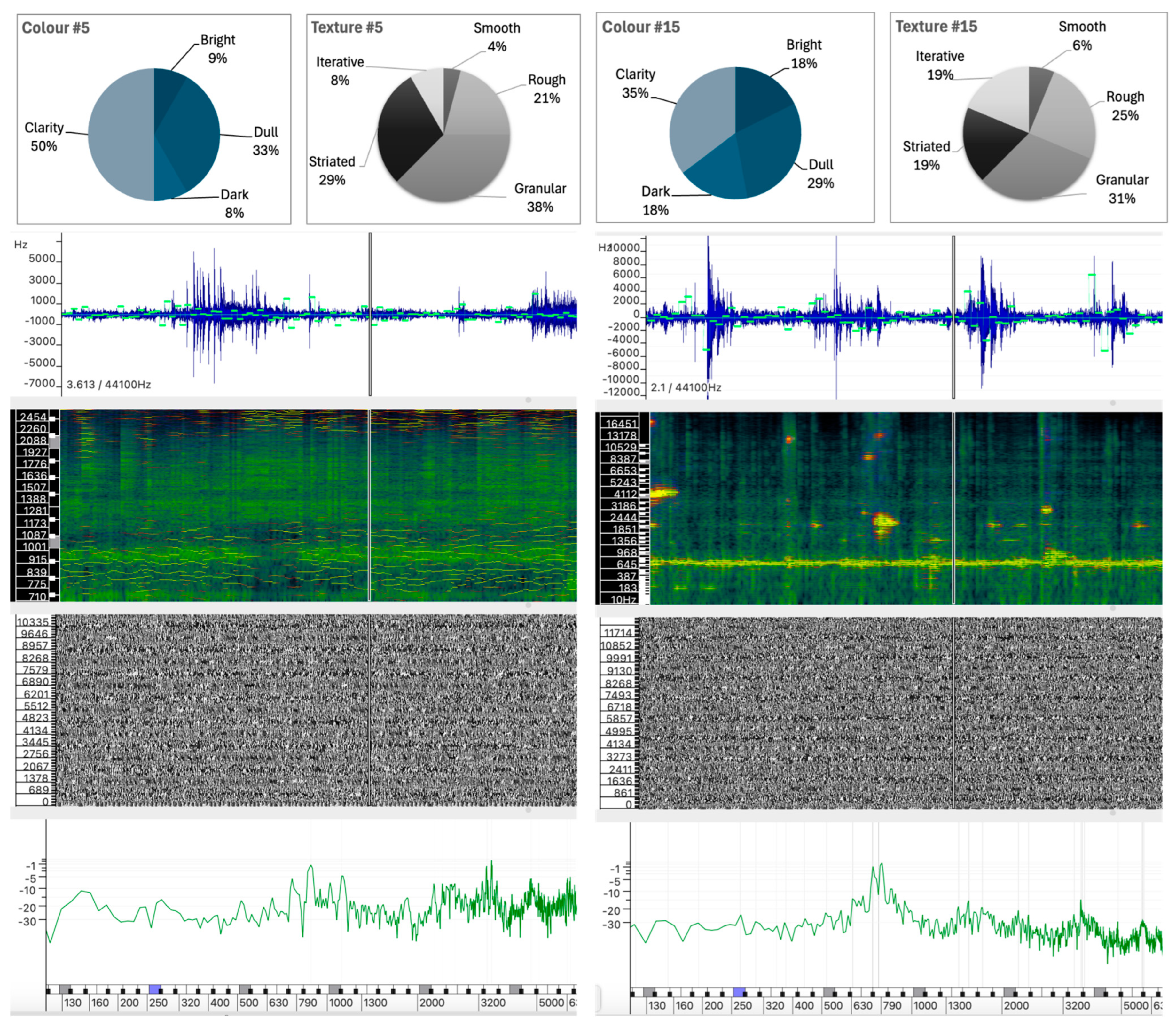

| #5 | Granular texture generated from a recorded natural sound; medium-low register | Granular synthesis; CSound |

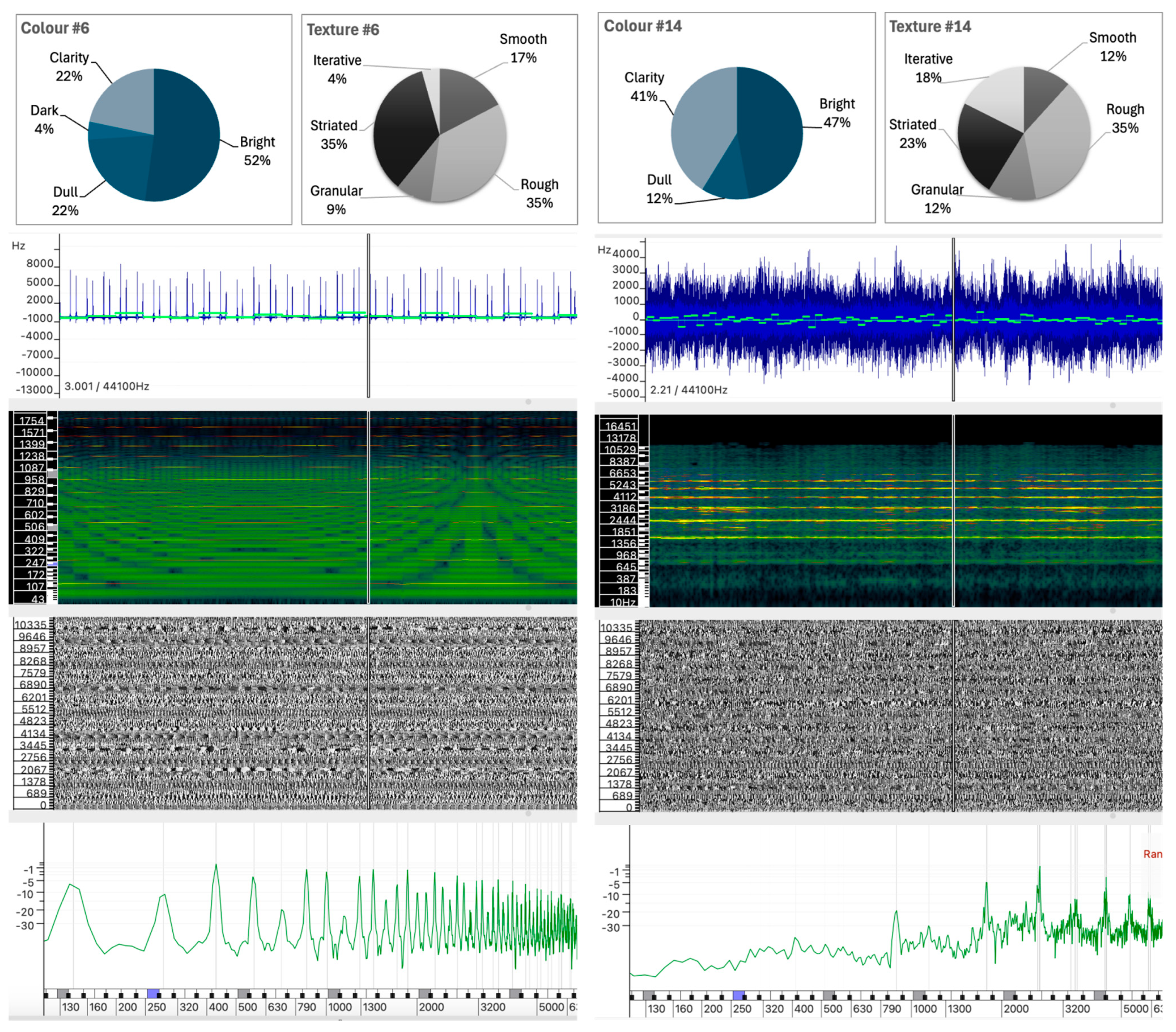

| #6 | Buzzing sound in a medium register | Additive-based hybrid synthesis; CSound |

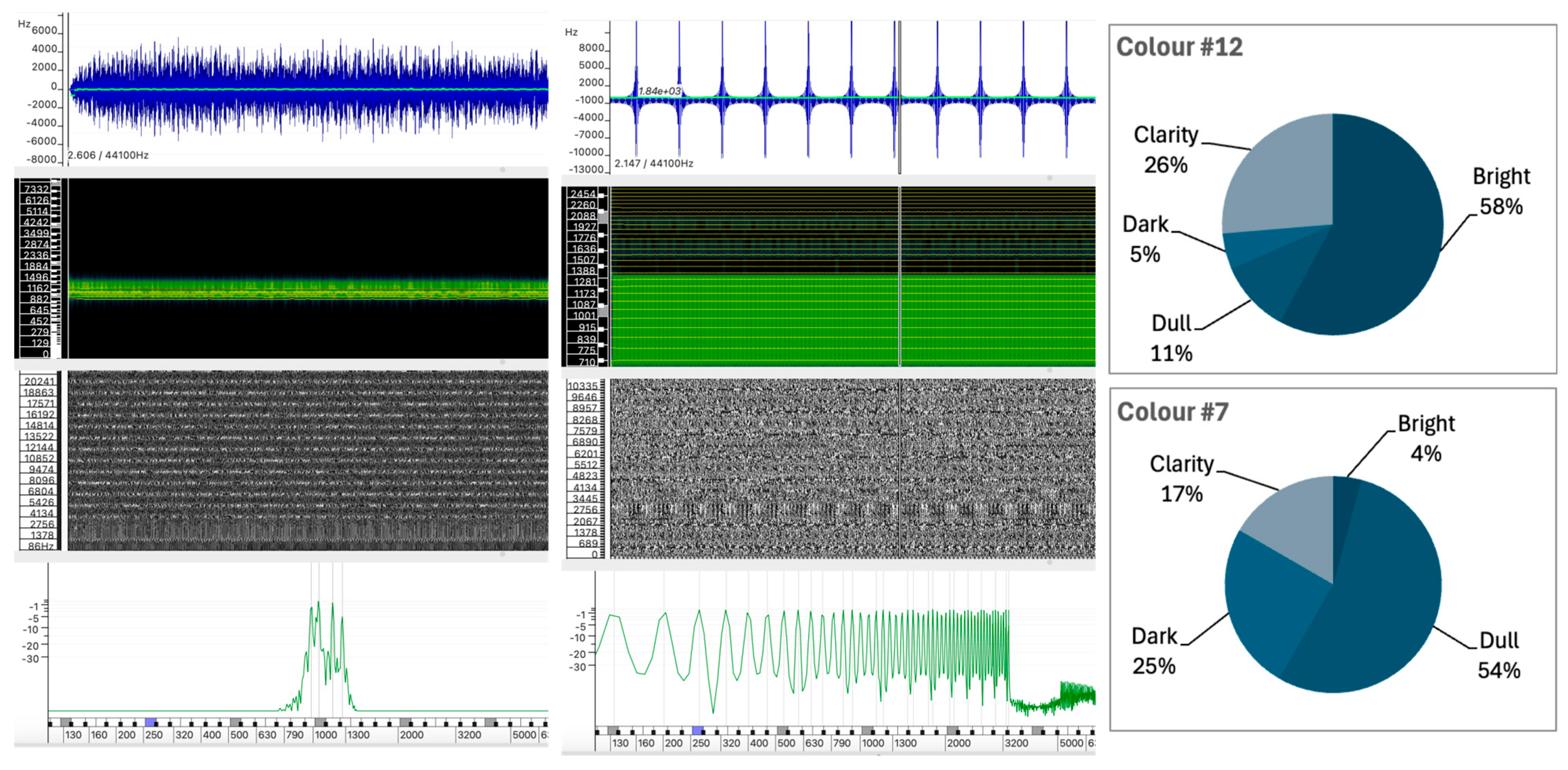

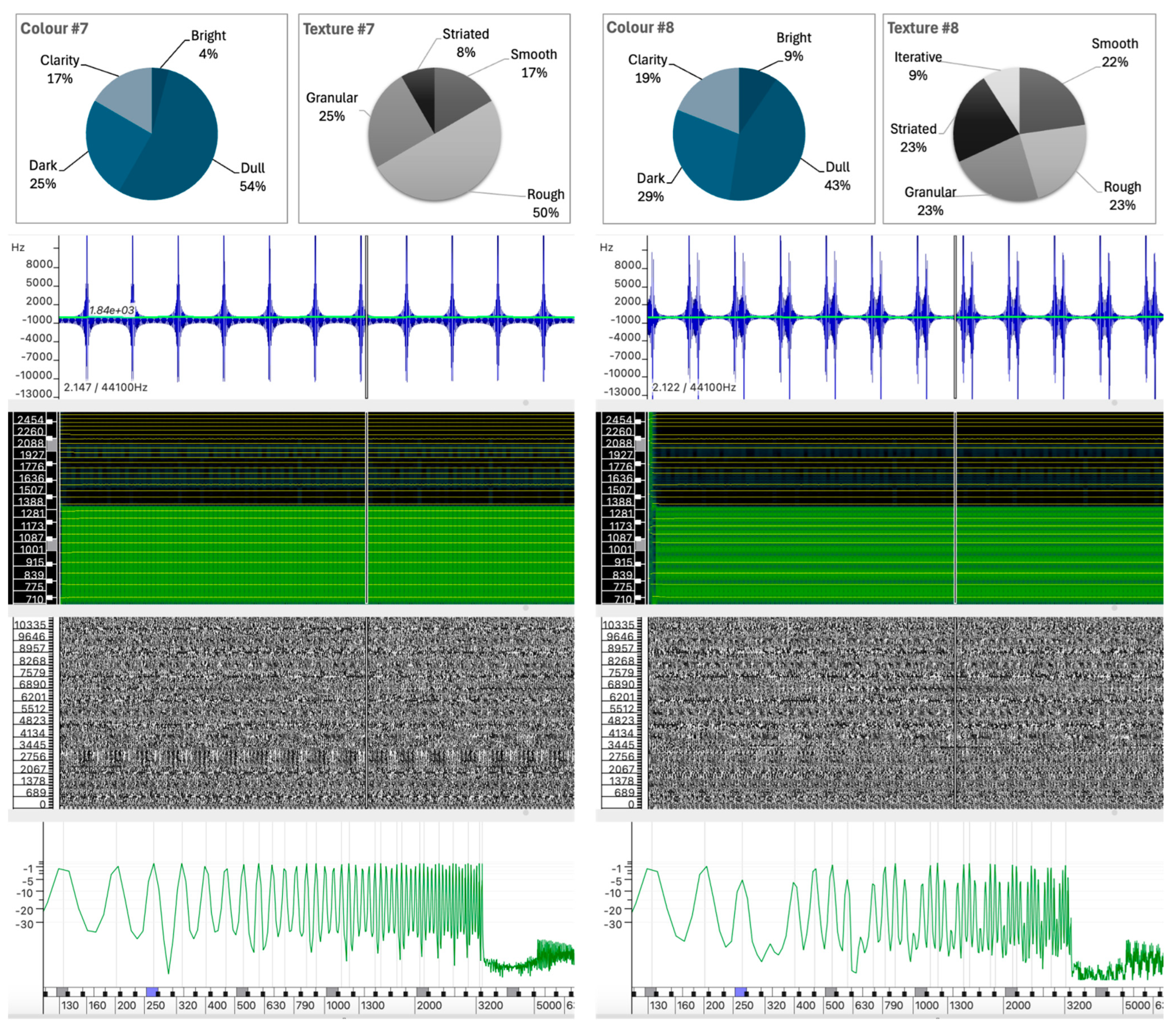

| #7 | Similar to Sound #6, in a medium-low frequency range | Additive-based hybrid synthesis; CSound |

| #8 | Equivalent to Sound #7, with slight amplitude modulation | Additive-based hybrid synthesis; CSound |

| #9 | Medium-register sound with rapid pulsation, evoking a flutter | Additive-based hybrid synthesis; CSound |

| #10 | Grainy, rustling sound akin to small wood shims, medium-high register | Granular synthesis; CSound |

| #11 | Near-harmonic spectrum in a low register | Additive synthesis; CSound |

| #12 | Buzzing sound in the medium-high register, with a fluttering, striated texture | Granular-based synthesis; CSound |

| #13 | Slow, deep tremolo-like oscillation in a near-harmonic spectrum, medium register | Additive-based hybrid synthesis; CSound |

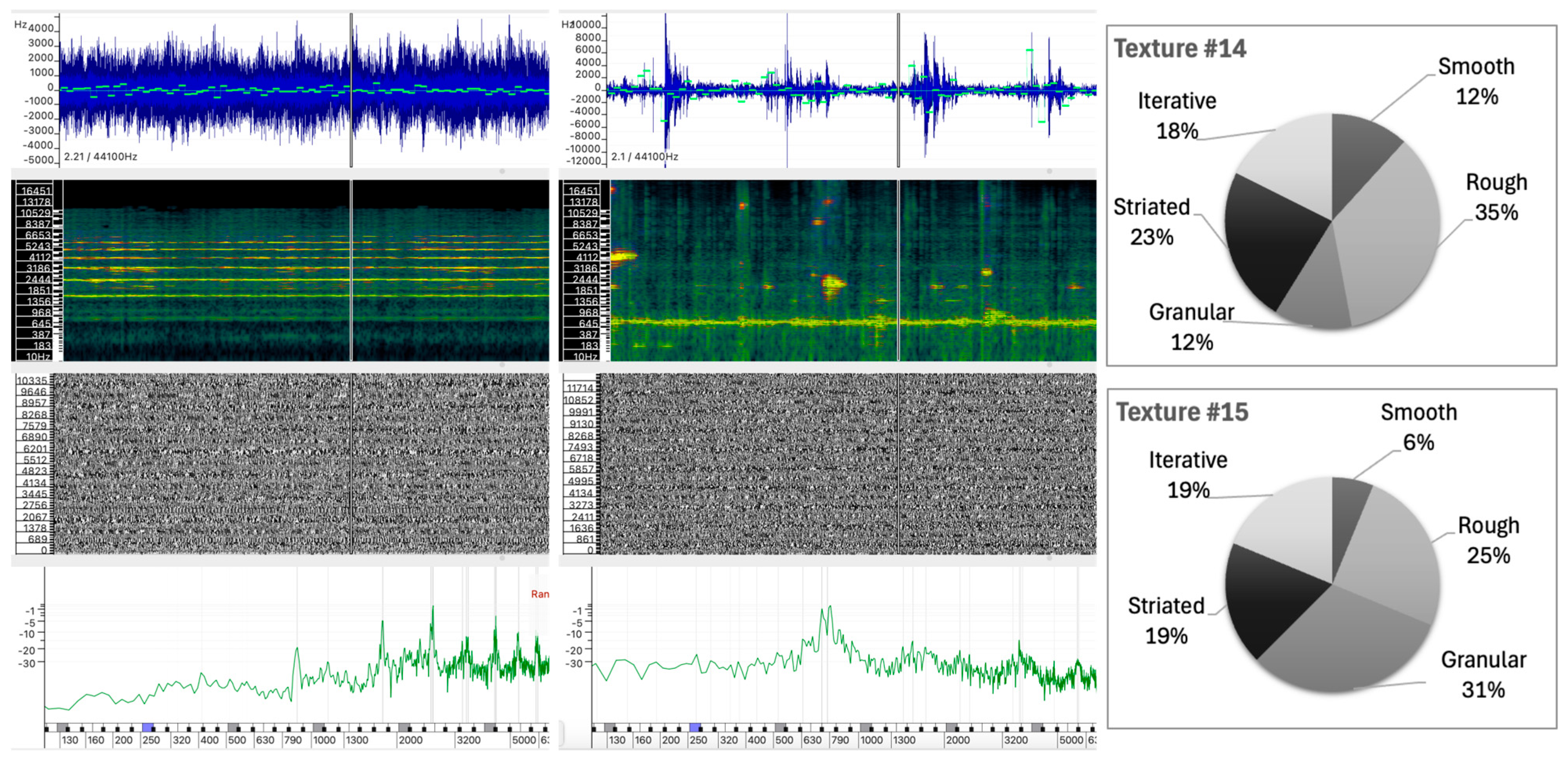

| #14 | Buzzing sound in the medium-high register | Heavily processed natural sound; Audacity/CSound |

| #15 | Liquid, granular texture reminiscent of large water droplets on wood, medium-low register | Processed natural sound with granular synthesis; CSound |

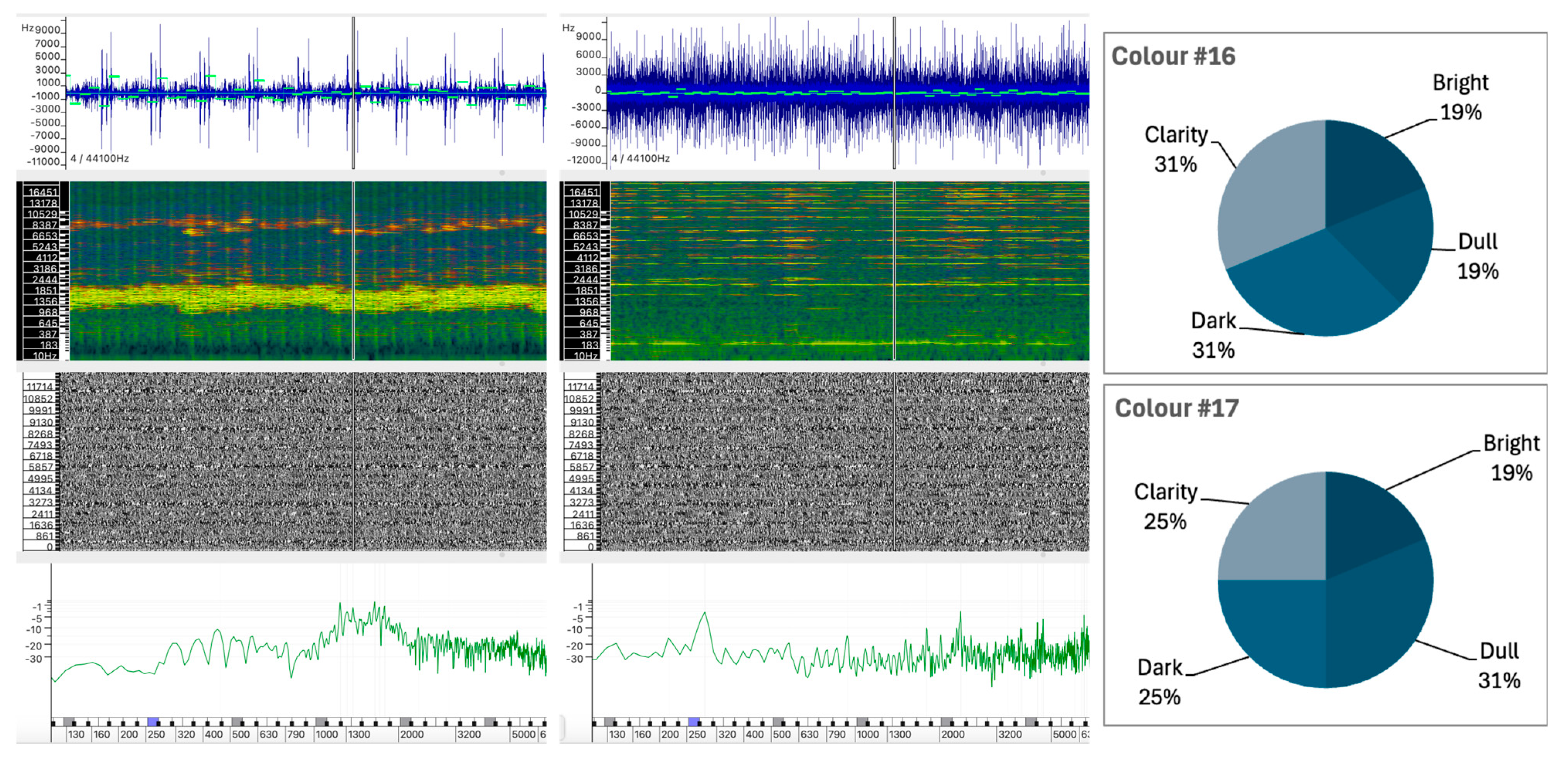

| #16 | Granular sound with tiny grains in a thin medium register band, creating a pitch sensation | Granular synthesis; CSound |

| #17 | Iterative texture in a thin medium register band | Granular synthesis; CSound |

| #18 | Similar to Sound #16, with a broader medium register band evoking a white noise sensation, with no discernible pitch | Granular synthesis; CSound |
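Several stimuli in the table combine additive synthesis with amplitude modulation, for example the slight amplitude modulation of Sound #8 and the slow, deep tremolo of Sound #13. The sketch below, assuming NumPy, shows the general idea: a near-harmonic tone built from sine partials whose frequencies are stretched slightly away from exact integer multiples, optionally multiplied by a slow amplitude envelope. The function `additive_tone`, the stretch factor and the 1/k amplitude roll-off are illustrative assumptions, not the author's Csound settings.

```python
import numpy as np


def additive_tone(f0: float, sr: int = 44100, duration_s: float = 2.0,
                  n_partials: int = 8, stretch: float = 0.01,
                  tremolo_hz: float = 0.0, tremolo_depth: float = 0.0) -> np.ndarray:
    """Near-harmonic additive tone with optional tremolo (hypothetical parameters)."""
    t = np.arange(int(sr * duration_s)) / sr
    out = np.zeros_like(t)
    for k in range(1, n_partials + 1):
        # stretch > 0 pushes partial k slightly above k * f0 (near-harmonic spectrum)
        freq = k * f0 * (1.0 + stretch * (k - 1))
        if freq >= sr / 2:
            continue  # skip partials above the Nyquist frequency (they would alias)
        out += np.sin(2 * np.pi * freq * t) / k  # 1/k amplitude roll-off
    # tremolo: envelope oscillates between 1 and (1 - tremolo_depth)
    envelope = 1.0 - tremolo_depth * 0.5 * (1.0 - np.cos(2 * np.pi * tremolo_hz * t))
    out *= envelope
    return out / np.max(np.abs(out))  # normalise to a peak of 1


steady = additive_tone(220.0)                                       # no modulation
tremolo = additive_tone(220.0, tremolo_hz=3.0, tremolo_depth=0.6)  # slow, deep tremolo
```

Setting `tremolo_depth` close to 0 approximates a slight amplitude modulation, while larger depths at a few hertz give the slow, deep oscillation described for Sound #13.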

- Waveform and Linear Frequency Centroid: The first layer shows the sound wave (in blue) and the linear frequency centroid (in green), giving a direct view of amplitude variations and of how the centre of spectral energy shifts over time. The centroid was computed with the Vamp Spectral Centroid plugin on a linear frequency scale in Hz. The vertical ruler represents relative frequency and the horizontal axis the duration of the sound example. Because of software limitations and overlapping elements, the waveform amplitude scale (between −1 and +1) and the time ruler are not displayed.

- Spectrogram: The second layer shows the frequency distribution and intensity over time, highlighting harmonic structures and temporal changes. The spectrogram was calculated using a 1024-point FFT analysis window (in greenish background) on a Mel scale. A larger 4096-point FFT window (in sunset colors) is superimposed, highlighting the highest intensity partials of the sound spectrum.

- Phase Visualization: The third layer shows the phase of the sound wave partials, giving an insight into the evolution of the sound wave components. The phase variation has been analyzed using a short 512-point FFT window on a linear phase analysis scale. This layer visualizes the interaction between the phases of the partials and shows how phase alignment or misalignment affects the overall sound wave. Constructive interference results in the amplification of certain frequencies, while destructive interference can cause phase cancellation, altering the perceived tonal quality of the sound. Regular patterns appear when there is phase alignment between partials.

- Instantaneous Spectral Content: The fourth layer shows the spectrum at a single point in time, giving a detailed snapshot of the frequency peaks of the sound at that moment. The instantaneous spectral analysis was performed on the 250 Hz point of the spectrum, indicated by a blue marker on the frequency ruler. The horizontal axis represents spectral frequency at the selected time, while the vertical axis shows the relative intensity of each spectral region.
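The first and fourth layers rest on two simple spectral measurements: the spectral centroid (the magnitude-weighted mean frequency of an analysis frame) and the frequency of the strongest spectral peak in a frame. The sketch below computes both with NumPy over Hann-windowed frames. It is a generic illustration, not the Vamp Spectral Centroid plugin itself, whose windowing and weighting may differ; the function name `frame_features` and the 512-sample hop are assumptions, and the 1024-point frame simply mirrors the spectrogram window mentioned above.

```python
import numpy as np


def frame_features(signal: np.ndarray, sr: int, n_fft: int = 1024, hop: int = 512):
    """Per-frame spectral centroid and strongest-bin frequency, both in Hz."""
    window = np.hanning(n_fft)
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)  # bin centre frequencies (linear Hz)
    centroids, peaks = [], []
    for start in range(0, len(signal) - n_fft + 1, hop):
        mag = np.abs(np.fft.rfft(signal[start:start + n_fft] * window))
        total = mag.sum()
        # spectral centroid: magnitude-weighted mean frequency of the frame
        centroids.append(float((freqs * mag).sum() / total) if total > 0 else 0.0)
        # frequency of the strongest bin, i.e. the main peak of this snapshot
        peaks.append(float(freqs[np.argmax(mag)]))
    return np.array(centroids), np.array(peaks)


sr = 44100
t = np.arange(sr) / sr
test_sine = np.sin(2 * np.pi * 1000.0 * t)  # stand-in for the 1000 Hz test tone
centroids, peaks = frame_features(test_sine, sr)
```

For this stand-in test tone the centroid stays close to 1000 Hz in every frame, and the strongest bin lies within one bin width (about 43 Hz at 44.1 kHz) of 1000 Hz. Because the centroid is an average, a broadband sound and a narrowband sound can share the same value, so it is most informative when read alongside the other layers.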

5.1. Sound Colour Attributes

| Sound | Bright (%) | Dull (%) | Dark (%) | Clarity (%) | Other (%) |
|---|---|---|---|---|---|
| #1 | 24.1 | 27.8 | 3.7 | 40.7 | 3.7 |
| #2 | 10.0 | 45.0 | 35.0 | 10.0 | — |
| #3 | 8.1 | 45.9 | 29.7 | 10.8 | 5.4 |
| #4 | 3.3 | 36.7 | 50.0 | 10.0 | — |
| #5 | 8.3 | 33.3 | 8.3 | 50.0 | — |
| #6 | 52.2 | 21.7 | 4.3 | 21.7 | — |
| #7 | 4.2 | 54.2 | 25.0 | 16.7 | — |
| #8 | 9.5 | 42.9 | 28.6 | 19.0 | — |
| #9 | 38.1 | 23.8 | 9.5 | 28.6 | — |
| #10 | 55.0 | — | 10.0 | 35.0 | — |
| #11 | 11.1 | 16.7 | 50.0 | 16.7 | 5.6 |
| #12 | 57.9 | 10.5 | 5.3 | 26.3 | — |
| #13 | 11.1 | 50.0 | 16.7 | 22.2 | — |
| #14 | 47.1 | 11.8 | — | 41.2 | — |
| #15 | 17.6 | 29.4 | 17.6 | 35.3 | — |
| #16 | 18.8 | 18.8 | 31.3 | 31.3 | — |
| #17 | 18.8 | 31.3 | 25.0 | 25.0 | — |
| #18 | 29.4 | 47.1 | 5.9 | 17.6 | — |

5.1.1. Sound #16 and Sound #17

- Sound #16 displays irregular fluctuations in amplitude over its duration, suggesting a more complex or dynamic sound structure than that of Sound #17. The frequency centroid remains relatively stable, with small deviations that indicate moderate fluctuations in frequency content over time, yet the spectral content appears dispersed. The spectrogram shows a wide frequency range with some high-intensity partials in the mid to high frequencies, and the instantaneous spectrum analysis shows more energy peaks, particularly above 2000 Hz. This higher-frequency content could be associated with perceptual descriptors such as Bright and Clear.

- Sound #17, by contrast, shows a more stable and repetitive pattern than #16, suggesting a less complex, more regular internal structure. Its frequency centroid behaves more consistently, indicating that the spectral content is relatively stable over time. The spectrogram displays a broad frequency spectrum with an obvious dominance of the middle region, as the instantaneous spectrum analysis also confirms. Energy is strongest in the mid frequencies, particularly between 1200 Hz and 1800 Hz, suggesting reduced brightness compared with #16, a feature that may contribute to the Dull ratings.

5.1.2. Sound #2 and Sound #3

- Sound #2 was created using additive synthesis, resulting in a near-harmonic spectrum with distinct spectral peaks regularly distributed across the spectrum. The frequency centroid is stable, with minor fluctuations, indicating that the sound maintains consistent spectral content over time. The spectrogram shows moderate energy across the low-mid region of the spectrum, and the instantaneous spectrum confirms a steady intensity in the low-mid frequencies. The phase plot shows the regularity expected of an additively synthesized sound.

- Sound #3, produced by a lightly filtered noise generator, has a more diffuse spectral profile, as expected of a noise-based sound. Despite this, its auditory descriptors are similar to those of Sound #2. The waveform fluctuates more, indicating a more dynamic or complex sound, and the frequency centroid moves over a wider, more irregular range. The spectrogram shows a concentration of partials in the lower frequency range, and the instantaneous spectrum supports this, with intensity primarily in the lower frequencies, reinforcing the dullness and darkness of the sound.

5.1.3. Sound #10 and Sound #4

- Sound #10 shows irregular fluctuations in amplitude, and while its frequency centroid varies somewhat, it remains generally stable, indicating a relatively consistent distribution of spectral content over the duration of the sound. The spectrogram shows a large number of partials in the mid frequencies, while the instantaneous spectrum shows energy distributed fairly evenly across the lower frequencies, with slightly more energy in the higher frequencies. These characteristics may explain why Sound #10 is perceived as bright and clear.

- Sound #4, by contrast, has a more variable waveform than #10, suggesting a more complex sound. Its frequency centroid is more consistent over time, with fewer fluctuations, which could indicate a more defined pitch. Its spectral content is centered in the lower frequencies, with a clear peak around 250 Hz in the instantaneous spectrum analysis.

5.1.4. Sound #12 and Sound #7

- Sound #12, produced by granular synthesis, has spectral components concentrated in a relatively narrow and stable frequency range, as shown in the spectrogram. The waveform appears relatively uniform, and the frequency centroid remains stable, indicating that the sound's spectral content is consistent throughout its duration. The instantaneous spectrum confirms the concentration of energy in a narrow band between 700 Hz and 1300 Hz.

- Sound #7, created by additive-based hybrid synthesis, has spectral components more evenly distributed across the spectrum, with partials of similar intensity. The waveform shows a repetitive pattern with a stable frequency centroid. The spectrogram shows a broad frequency spectrum, with energy at similar intensities up to about 3200 Hz, where it drops off abruptly.

5.2. Sound Texture Attributes

| Sound | Smooth (%) | Rough (%) | Granular (%) | Striated (%) | Iterative (%) | Other (%) |
|---|---|---|---|---|---|---|
| #1 | 88.5 | 3.8 | — | 5.8 | 1.9 | — |
| #2 | 21.1 | 23.7 | 7.9 | 44.7 | — | 2.6 |
| #3 | 2.6 | 34.2 | 55.3 | 2.6 | 5.3 | — |
| #4 | 3.3 | 50.0 | 16.7 | 16.7 | 6.7 | 6.7 |
| #5 | 4.2 | 20.8 | 37.5 | 29.2 | 8.3 | — |
| #6 | 17.4 | 34.8 | 8.7 | 34.8 | 4.3 | — |
| #7 | 16.7 | 50.0 | 25.0 | 8.3 | — | — |
| #8 | 22.7 | 22.7 | 22.7 | 22.7 | 9.1 | — |
| #9 | 19.0 | 9.5 | 23.8 | 38.1 | 9.5 | — |
| #10 | 10.0 | 5.0 | 50.0 | 30.0 | 5.0 | — |
| #11 | 27.8 | 16.7 | 11.1 | 16.7 | 22.2 | 5.6 |
| #12 | 31.6 | 10.5 | 15.8 | 26.3 | 15.8 | — |
| #13 | 27.8 | 16.7 | — | 33.3 | 22.2 | — |
| #14 | 11.8 | 35.3 | 11.8 | 23.5 | 17.6 | — |
| #15 | 6.3 | 25.0 | 31.3 | 18.8 | 18.8 | — |
| #16 | 12.5 | 37.5 | 25.0 | 12.5 | 6.3 | 6.3 |
| #17 | 12.5 | 6.3 | 56.3 | 18.8 | 6.3 | — |
| #18 | — | 47.1 | 17.6 | 29.4 | 5.9 | — |

- Sound #3 received a markedly higher rating for the Rough attribute (34.2%) than Sounds #10 and #17, which received much lower ratings of 5.0% and 6.3%, respectively.

- Sounds #10 and #17 received a substantial share of Striated ratings (30.0% and 18.8%), whereas Sound #3 received only 2.6% in this category.

5.2.1. Sound #11 and Sound #13

- Sound #13, despite its near-harmonic spectrum, exhibits a deep tremolo-like vibration with prominent spectral peaks at 350 Hz, 650 Hz, and 950 Hz, giving it a mid-range perception. The tremolo introduces some variation in the frequency centroid, contributing to the perceived irregularity of the texture. The spectrogram and phase analysis show a relatively stable pattern, with clear synchrony between the phases of the partials below 1200 Hz, indicating a striated texture. Although the spectral peaks are close to harmonic, the regular fluctuations in waveform and phase further support the perception of striation.

- In contrast, Sound #11 has a lower register profile with spectral partials concentrated below 400 Hz and a more stable frequency centroid. The spectrogram for Sound #11 shows a more even distribution of energy across the lower frequencies, which is further supported by the less differentiated peaks observed in the instantaneous spectrum analysis. In addition, the stable spectral structure seen in the phase analysis layer confirms a consistent internal structure.

5.2.2. Sound #14 and Sound #15

- The natural sound that gave rise to Sound #14 has undergone extensive processing to produce a medium-high buzzing sound with a more structured and repetitive profile. The waveform has noticeably sharp peaks, while the frequency centroid shows some stability. The spectrogram shows a clear concentration of energy in the mid-to-high frequency range with repetitive spectral patterns, reinforcing the perception of a striated and structured texture that contributes to the buzzing auditory sensation.

- Sound #15, also derived from a natural source but only lightly processed, retains an irregular, liquid-like quality with random, grainy textures resembling large falling water droplets. The waveform is irregular, with sharp fluctuations, indicating an unpredictable or random structure. The frequency centroid varies more, with more pronounced shifts, suggesting a more complex or irregular spectral content. The spectrogram shows clear energy peaks at both low and high frequencies, but the distribution is more spread out than in Sound #14. These fluctuations, or seemingly erratic behavior over time, contribute to the perceived granularity or roughness. In addition, the instantaneous spectrum shows a more irregular distribution of energy peaks.

5.3. Integrating Colour and Texture Attributes

| Sound | #1 | #2 | #3 | #4 | #5 | #6 | #7 | #8 | #9 | #10 | #11 | #12 | #13 | #14 | #15 | #16 | #17 | #18 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| #1 | — |
| #2 | 129.5 | — |
| #3 | 137.7 | 72.6 | — |
| #4 | 157.6 | 61.7 | 66.9 | — |
| #5 | 109.0 | 87.1 | 81.3 | 96.1 | — |
| #6 | 117.3 | 73.7 | 116.0 | 98.3 | 89.0 | — |
| #7 | 131.5 | 64.5 | 49.3 | 49.1 | 82.9 | 96.4 | — |
| #8 | 110.0 | 37.8 | 53.8 | 59.5 | 63.0 | 76.8 | 44.7 | — |
| #9 | 97.0 | 69.7 | 102.1 | 107.0 | 62.7 | 46.8 | 99.9 | 61.1 | — |
| #10 | 114.3 | 106.8 | 97.4 | 127.9 | 70.3 | 66.4 | 112.3 | 87.6 | 47.6 | — |
| #11 | 121.1 | 62.5 | 94.3 | 67.0 | 96.2 | 94.9 | 84.3 | 54.1 | 80.8 | 111.5 | — |
| #12 | 103.1 | 94.2 | 127.9 | 127.4 | 96.9 | 45.8 | 119.4 | 81.4 | 44.4 | 52.7 | 80.1 | — |
| #13 | 105.1 | 37.5 | 64.6 | 85.8 | 61.6 | 79.0 | 55.9 | 32.9 | 56.6 | 73.8 | 64.0 | 74.4 | — |
| #14 | 114.3 | 84.9 | 110.7 | 81.1 | 77.6 | 41.1 | 90.5 | 75.3 | 53.4 | 60.9 | 69.7 | 50.6 | 82.6 | — |
| #15 | 103.9 | 73.2 | 66.8 | 75.7 | 36.8 | 74.8 | 64.3 | 46.6 | 54.0 | 71.0 | 70.9 | 81.1 | 52.7 | 58.1 | — |
| #16 | 114.5 | 75.0 | 69.2 | 55.0 | 62.7 | 72.4 | 55.3 | 49.7 | 68.3 | 86.8 | 58.7 | 86.1 | 73.2 | 51.5 | 38.6 | — |
| #17 | 104.5 | 82.5 | 57.3 | 93.2 | 59.2 | 98.6 | 83.3 | 55.5 | 64.7 | 53.7 | 82.5 | 95.1 | 50.4 | 92.5 | 47.1 | 60.0 | — |
| #18 | 80.1 | 65.4 | 80.7 | 65.9 | 74.2 | 50.6 | 55.0 | 56.5 | 66.7 | 84.4 | 93.9 | 85.3 | 56.7 | 64.6 | 61.0 | 62.6 | 85.2 | — |
| MIN | 80.1 | 37.5 | 49.3 | 49.1 | 36.8 | 41.1 | 44.7 | 32.9 | 44.4 | 52.7 | 58.7 | 50.6 | 50.4 | 51.5 | 38.6 | 60.0 | 85.2 | — |
| MAX | 157.6 | 106.8 | 127.9 | 127.9 | 96.9 | 98.6 | 119.4 | 87.6 | 80.8 | 111.5 | 93.9 | 95.1 | 82.6 | 92.5 | 61.0 | 62.6 | 85.2 | — |
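The matrix stores only one triangle of a symmetric set of distances. Its MIN and MAX rows appear to summarise each column over the entries below the diagonal only: for Sound #16, for example, the MIN row shows 60.0, although the row for Sound #16 contains a distance of 38.6 to Sound #15. The sketch below, assuming NumPy, mirrors the triangle into a full matrix, reproduces the column-wise MIN and MAX rows, and looks up each sound's closest neighbour across all pairs. The variable and function names are illustrative.

```python
import numpy as np

# Lower triangle of the distance matrix: LOWER[i] holds the distances between
# Sound #(i + 2) and Sounds #1 ... #(i + 1), copied from the table above.
LOWER = [
    [129.5],
    [137.7, 72.6],
    [157.6, 61.7, 66.9],
    [109.0, 87.1, 81.3, 96.1],
    [117.3, 73.7, 116.0, 98.3, 89.0],
    [131.5, 64.5, 49.3, 49.1, 82.9, 96.4],
    [110.0, 37.8, 53.8, 59.5, 63.0, 76.8, 44.7],
    [97.0, 69.7, 102.1, 107.0, 62.7, 46.8, 99.9, 61.1],
    [114.3, 106.8, 97.4, 127.9, 70.3, 66.4, 112.3, 87.6, 47.6],
    [121.1, 62.5, 94.3, 67.0, 96.2, 94.9, 84.3, 54.1, 80.8, 111.5],
    [103.1, 94.2, 127.9, 127.4, 96.9, 45.8, 119.4, 81.4, 44.4, 52.7, 80.1],
    [105.1, 37.5, 64.6, 85.8, 61.6, 79.0, 55.9, 32.9, 56.6, 73.8, 64.0, 74.4],
    [114.3, 84.9, 110.7, 81.1, 77.6, 41.1, 90.5, 75.3, 53.4, 60.9, 69.7, 50.6, 82.6],
    [103.9, 73.2, 66.8, 75.7, 36.8, 74.8, 64.3, 46.6, 54.0, 71.0, 70.9, 81.1, 52.7, 58.1],
    [114.5, 75.0, 69.2, 55.0, 62.7, 72.4, 55.3, 49.7, 68.3, 86.8, 58.7, 86.1, 73.2, 51.5, 38.6],
    [104.5, 82.5, 57.3, 93.2, 59.2, 98.6, 83.3, 55.5, 64.7, 53.7, 82.5, 95.1, 50.4, 92.5, 47.1, 60.0],
    [80.1, 65.4, 80.7, 65.9, 74.2, 50.6, 55.0, 56.5, 66.7, 84.4, 93.9, 85.3, 56.7, 64.6, 61.0, 62.6, 85.2],
]


def full_matrix(lower):
    """Mirror a lower triangle (without diagonal) into a symmetric matrix."""
    n = len(lower) + 1
    d = np.zeros((n, n))
    for i, row in enumerate(lower, start=1):
        d[i, :i] = row
    return d + d.T


D = full_matrix(LOWER)
n = D.shape[0]
# Column-wise MIN/MAX over the entries below the diagonal (the table's MIN/MAX rows).
col_min = [D[j + 1:, j].min() for j in range(n - 1)]
col_max = [D[j + 1:, j].max() for j in range(n - 1)]
# Nearest neighbour of every sound across all pairs (diagonal excluded).
masked = D + np.diag(np.full(n, np.inf))
nearest = masked.argmin(axis=1) + 1  # 1-based sound numbers
for s in (5, 7, 16):
    print(f"Sound #{s}: nearest is Sound #{nearest[s - 1]} ({masked[s - 1].min():.1f})")
```

With the values above, the nearest neighbour of Sound #5 is Sound #15 (36.8) and that of Sound #7 is Sound #8 (44.7), two of the pairs examined in the following subsections.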

5.3.1. Integrated Attributes of Sound #5 and Sound #15

| Attribute | #5 Value | #15 Value | Euclidean Distance |
|---|---|---|---|
| Texture | | | |
| Smooth | 4.2 | 6.3 | 2.1 |
| Rough | 20.8 | 25.0 | 4.2 |
| Granular | 37.5 | 31.3 | 6.2 |
| Striated | 29.2 | 18.8 | 10.4 |
| Iterative | 8.3 | 18.8 | 10.5 |
| Color | | | |
| Bright | 8.3 | 17.6 | 9.3 |
| Dull | 33.3 | 29.4 | 3.9 |
| Dark | 8.3 | 17.6 | 9.3 |
| Clarity | 50.0 | 35.3 | 14.7 |
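In these pairwise tables each row compares a single attribute, so the “Euclidean Distance” reduces to the absolute difference between the two percentages (for Clarity, |50.0 - 35.3| = 14.7). The sketch below recomputes that column for Sounds #5 and #15 from the values above and, for comparison, combines the nine attributes into one Euclidean distance. The combined value comes out at about 26.1 rather than the 36.8 listed in the matrix for this pair, so the matrix presumably draws on additional attributes or a different scaling not described in this section; the sketch should not be read as the author's procedure, and its names are illustrative.

```python
import math

ATTRIBUTES = ["Smooth", "Rough", "Granular", "Striated", "Iterative",
              "Bright", "Dull", "Dark", "Clarity"]
SOUND_5 = [4.2, 20.8, 37.5, 29.2, 8.3, 8.3, 33.3, 8.3, 50.0]    # % values from the table above
SOUND_15 = [6.3, 25.0, 31.3, 18.8, 18.8, 17.6, 29.4, 17.6, 35.3]


def per_attribute_distance(a, b):
    """One-dimensional Euclidean distance per attribute, i.e. |a - b|, to 0.1."""
    return [round(abs(x - y), 1) for x, y in zip(a, b)]


def combined_distance(a, b):
    """Euclidean distance over all attributes at once (an illustrative choice)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


for name, d in zip(ATTRIBUTES, per_attribute_distance(SOUND_5, SOUND_15)):
    print(f"{name:<10}{d:>6.1f}")
print(f"Combined: {combined_distance(SOUND_5, SOUND_15):.1f}")
```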

5.3.2. Integrated Attributes of Sound #6 and Sound #14

| Attribute | #6 Value | #14 Value | Euclidean Distance |
|---|---|---|---|
| Texture | | | |
| Smooth | 17.4 | 11.8 | 5.6 |
| Rough | 34.8 | 35.3 | 0.5 |
| Granular | 8.7 | 11.8 | 3.1 |
| Striated | 34.8 | 23.5 | 11.3 |
| Iterative | 4.3 | 17.6 | 13.3 |
| Color | | | |
| Bright | 52.2 | 47.1 | 5.1 |
| Dull | 21.7 | 11.8 | 9.9 |
| Dark | 4.3 | — | 4.3 |
| Clarity | 21.7 | 41.2 | 19.5 |

5.3.3. Integrated Attributes of Sound #7 and Sound #8

| Attribute | #7 Value | #8 Value | Euclidean Distance |
|---|---|---|---|
| Texture | | | |
| Smooth | 16.7 | 22.7 | 6.0 |
| Rough | 50.0 | 22.7 | 27.3 |
| Granular | 25.0 | 22.7 | 2.3 |
| Striated | 8.3 | 22.7 | 14.4 |
| Iterative | — | 9.1 | 9.1 |
| Color | | | |
| Bright | 4.2 | 9.5 | 5.3 |
| Dull | 54.2 | 42.9 | 11.3 |
| Dark | 25.0 | 28.6 | 3.6 |
| Clarity | 16.7 | 19.0 | 2.3 |

5.3.4. Integrated Attributes of Sound #11 and Sound #13

| Attribute | #11 Value | #13 Value | Euclidean Distance |
|---|---|---|---|
| Texture | | | |
| Smooth | 27.8 | 27.8 | — |
| Rough | 16.7 | 16.7 | — |
| Granular | 11.1 | — | 11.1 |
| Striated | 16.7 | 33.3 | 16.6 |
| Iterative | 22.2 | 22.2 | — |
| Color | | | |
| Bright | 11.1 | 11.1 | — |
| Dull | 16.7 | 50.0 | 33.4 |
| Dark | 50.0 | 16.7 | 33.4 |
| Clarity | 16.7 | 22.2 | 5.5 |

6. Discussion of Results

6.1. Correlations Between Descriptors and Spectral Properties

6.2. Metaphorical Use of Cross-Modal Descriptors

7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

1. This article presents a selection of findings from a study conducted in 2011–2012 as part of my postdoctoral research, investigating the auditory perception of abstract sounds. Given the limited number of studies that have addressed the perception of complex, non-identifiable sound stimuli, the findings contribute to our understanding of cross-modal perception, which remains relevant for advancing both theoretical and practical approaches in sound analysis and electroacoustic research.

2. Italics as per source.

3. Note that in this context we are not referring to the inherent fidelity of sound (i.e., high or low fidelity). Instead, we focus on the descriptive qualities that listeners intuitively use to characterize the perceptual aspects of the sounds they experience.

4. “A quality therefore does not depend on its apprehension by the subject; the quality exists in objects and depends on their particularities. A quality need not be measurable, only perceived. A quality is a manner of being: it is what perception draws out as a characteristic of an object, a phenomenon or an entity.” (Translated from the original French.)

5. “‘Form’ is used to mean the figure or the appearance given to a material thing […]. Here we are in the order of exteriority, of a configuration that is visible in one way or another. It is above all the contours that concern us, in a sense close to morphology.” (Translated from the original French.)

6. This platform is no longer available.

7. We will not discuss in detail all the implications of the participants' professional or musical experience, as this is beyond the scope of this article.

8. Sound examples can be listened to here: https://soundcloud.com/isabel-pires/sonsseqr (accessed on 1 February 2025).


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pires, I. Listening Beyond the Source: Exploring the Descriptive Language of Musical Sounds. Behav. Sci. 2025, 15, 396. https://doi.org/10.3390/bs15030396