Abstract

With the rapid development of generative artificial intelligence (AI), AI agents are evolving into “intelligent partners” integrated into various consumer scenarios, posing new challenges to conventional consumer decision-making processes and perceptions. However, the mechanisms through which consumers develop trust and adopt AI agents in common scenarios remain unclear. Therefore, this article develops a framework based on the heuristic–systematic model to explain the behavioral decision-making mechanisms of future consumers. This model is validated through PLS-SEM with data from 632 participants in China. The results show that trust can link individuals’ dual decision paths to further drive user behavior. Additionally, we identify the key drivers of consumer behavior from two dimensions. These findings provide practical guidance for businesses and policymakers to optimize the design and development of AI agents and promote the widespread acceptance and adoption of AI technologies.

1. Introduction

In recent years, the rise of generative artificial intelligence (AI) and large language models (LLMs) has marked a revolutionary advance in the field of AI (Hadi et al., 2023). AI agents, entities that can autonomously perform specific tasks on behalf of humans (Wiesinger et al., 2024), are evolving from traditional single-task execution tools into intelligent partners capable of independently executing complex decisions and behaviors. Technological breakthroughs have equipped AI agents with stronger language understanding, generation, and adaptive capabilities (Xi et al., 2023). For example, current AI agents can not only replace humans in making certain levels of cognitive decisions (Badghish et al., 2024; Ali et al., 2024), but also partially replace human embodied behaviors (Fu et al., 2024). In the near future, AI agents may fundamentally change the way humans live.

The aforementioned disruptive changes in technology have already profoundly reshaped consumer cognition and behavior. In particular, AI agents, which exhibit far more intelligent and humanized characteristics (Hayawi & Shahriar, 2024), not only deepen the complexity and diversity of consumer cognition, but also fundamentally change consumer trust mechanisms. For example, compared to algorithms, people seem to trust human judgment more, leading to hesitation toward AI (Ananthan et al., 2023). This lack of trust will inevitably become a major barrier to the widespread adoption of AI agents in the near future. Therefore, with the proliferation of AI agents in various forms in different scenarios (McKinsey Global Institute, 2023; World Economic Forum, 2023; Ipsos MORI, 2024), a crucial question arises: How do consumers build trust and acceptance of AI agents as a comprehensive category rather than a specific tool?

Previous research on AI trust has provided valuable insights. Nevertheless, some limitations warrant further exploration. First, the conclusions of existing research are difficult to generalize because of the narrow research scenarios. The existing results mostly focus on specific domains, such as healthcare (Zhang et al., 2023), e-commerce chatbots (Chakraborty et al., 2024), and travel planning (Shi et al., 2021). The design premise of these studies stems from the single-task attribute of traditional AI, which confines their conclusions to specialized domains and makes it difficult to explain how ordinary consumers come to trust generalized agents. This dilemma is particularly pronounced in the current context of AI breaking domain boundaries and evolving into a full-scenario intelligent ecosystem. In other words, AI agents are entering every aspect of our lives, and there is an urgent need to establish a common, cross-domain trust framework. Second, from a theoretical perspective, path dependence hinders the unpacking of the micro-foundations of trust itself. Although existing studies have analyzed the correlation between trust and acceptance through traditional paradigms, such as the Technology Acceptance Model (TAM) (Choung et al., 2023) and the Unified Theory of Acceptance and Use of Technology (UTAUT) (Roh et al., 2023; Abadie et al., 2024), they cannot further identify the cognition underlying trust formation. Later research discussed the components of trust, for example by dividing it into emotional trust (a form of trust based on a user’s emotional resonance and subjective feelings toward a target object) and cognitive trust (a form of trust based on a user’s rational evaluation of the target object) (Kyung & Kwon, 2022). Although this classification is a useful starting point, it seems unable to capture the comprehensiveness of trust and deserves further expansion and discussion.
Third, the single dimension of antecedents separates the systematic construction of trust. Existing research often focuses on only one type of attribute, resulting in a disconnect between the combined effects of technical attributes and user characteristics on trust. For example, some studies consider only technical attributes (J. Kim & Im, 2023), while others consider only user characteristics (Huo et al., 2022). This leads to research conclusions that cannot objectively reflect real-life situations and cannot comprehensively reveal the driving force of user trust.

In response to these research limitations, this article proposes an extended theoretical framework to explain consumers’ behavioral responses to AI agents, aiming to understand the construction process of trust and acceptance from the perspective of behavioral decision-making. Compared to previous studies, our research has the following novelties. First, we explore the public’s perspective on AI agents as a broad category rather than a specific application or tool through the design of survey scenarios. By doing so, it achieves a transition from the specialized domain to the general domain and constructs a trust framework applicable to AI agents in a wide range of scenarios. Second, this study constructs a three-stage dynamic model of cue–trust–behavior based on the heuristic–systematic model (HSM) (Chaiken, 1980), which is a dual-path decision theory. Based on the existing trust classification (emotional trust and cognitive trust), the concept of overall trust was expanded in this paper, making the trust mechanism more stable. Third, regarding the antecedents of trust, we consider both user characteristics and technical features in each decision path to reflect the true driving process of trust more comprehensively and objectively. Fourth, based on the current technological development background, we discuss users’ ethical expectations of AI agents and provide demand-oriented practical guidance for the design and development. This theoretical framework was validated with empirical data from 632 participants, and all hypotheses were supported. The results reveal the cogwheel effect of trust, which means that trust can transform static basic characteristics into positive behavioral intentions toward AI agents. This study promotes research progress on AI trust and acceptance and lays a theoretical foundation for building a human-centered AI governance system. 
At the same time, the findings provide a multidimensional optimization path of “emotion–cognition–ethics” for the design and development of AI agents, establish a step-by-step strategy for trust cultivation, and enhance the acceptance of human-centered AI solutions.

2. Theoretical Background

AI agents have been the subject of academic research since the mid-to-late 1980s (Maes, 1990; Nilsson, 1992; Müller & Pischel, 1994). AI agents are defined as entities that can autonomously perform specific tasks on behalf of humans, attempting to achieve goals by observing the world and using its tools (Wiesinger et al., 2024). Our study follows this definition and focuses on consumers’ perceptions of this comprehensive concept. Early AI agents were mainly used to perform pre-programmed specific tasks, such as automated operations or rule-based decision support (L. Xu et al., 2021; Sànchez-Marrè, 2022). The design of such agents is based on fixed rules and limited interactions, and they lack flexible learning capabilities (Kadhim et al., 2013), making them more like tools. However, in recent years, with breakthroughs in LLMs and generative AI, current AI agents have made significant progress in terms of intelligence and autonomy. The current AI agents are capable of perceiving environmental information, making autonomous decisions, and executing actions to gradually achieve specific complex goals (Xi et al., 2023). Compared to traditional agents, they exhibit more flexible, diverse, and human-like characteristics and are able to perform complex tasks independently. For example, with ChatGPT 4.0 (https://chatgpt.com/ (accessed on 28 February 2025)), users only need to enter simple requests, and it can proactively call multiple tools to progressively complete tasks to meet users’ needs. These changes imply that AI agents are gradually evolving into independent individuals capable of decision-making and execution, which may have a disruptive impact on consumer perception. Therefore, in this section, we propose a theoretical framework to explain consumers’ cognitive and psychological responses to future AI agents.

2.1. Trust Model Based on HSM

The heuristic–systematic model (HSM) is one of the most popular models rooted in dual-process theory and was first proposed by psychologist Shelly Chaiken (Chaiken, 1980). This model proposes two modes of information processing: heuristic processing (also known as the H-path) and systematic processing (also known as the S-path) (Chaiken, 1980). Heuristic processing refers to the use of non-content and situational cues to evaluate information and reach evaluative conclusions with minimal cognitive effort. In other words, evaluators may rely on more readily available information (such as personal experience) to make decisions. It is therefore a fast, simple, and cognitively resource-efficient processing method. In contrast, systematic processing is a more thoughtful and meticulous approach. It involves individuals conducting detailed analyses and examinations of the content features of information, forming reasoned cognitions and logic, and then making final decisions. In summary, the HSM mainly assumes that individuals adopt these two modes to process external information and use them as antecedents for attitude formation and behavioral responses (Ryu & Kim, 2015).

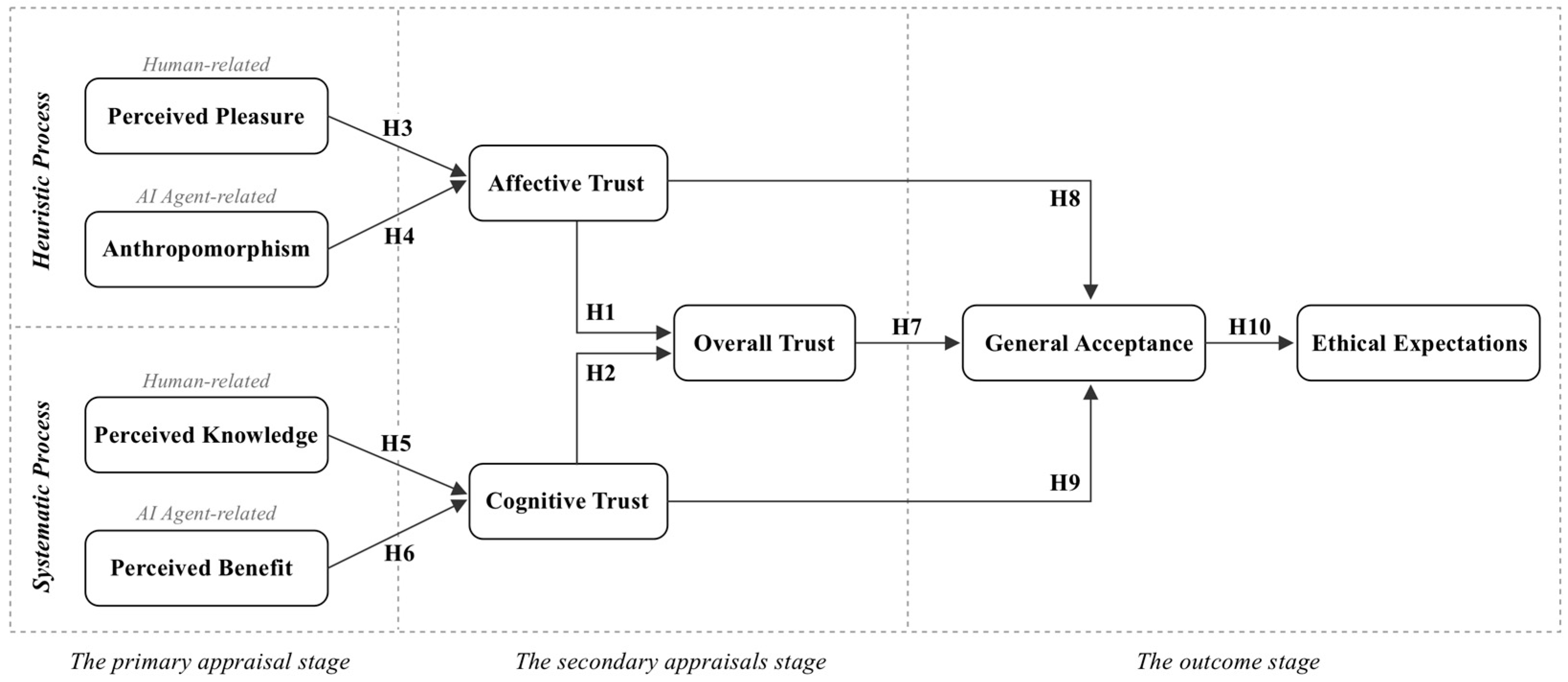

Some studies have applied the HSM in the field of AI to understand the interaction patterns between users and AI. The existing works have demonstrated the empirical effectiveness of the HSM in backgrounds such as travel plan recommendation systems (Shi et al., 2021), news recommendations (Shin, 2021), and health chatbots (Y. L. Liu et al., 2022). These studies suggest that both heuristic and systematic processing play a role in evaluating AI output, and ultimately jointly drive user behavior. However, there are still some gaps that need to be explored further. On the one hand, existing research assumes that AI operates within predefined roles, which cannot represent users’ decision-making mechanisms toward AI agents in general scenarios. On the other hand, these studies have overlooked the multidimensionality of cues in different pathways (H-path and S-path). For example, these studies typically only considered the cues related to AI, such as explainability and performance effectiveness (Shi et al., 2021; Shin, 2021), while ignoring the cues related to the user. Therefore, inspired by these shortcomings, this article proposes a comprehensive theoretical framework based on the HSM to explain users’ behavioral responses and decision-making mechanisms toward AI agents (as a comprehensive concept), as shown in Figure 1. This model divides the process of trust establishment into three key stages: the dual-path decision-making process, the trust-linking mechanism, and the behavior-driven process. In the first stage, we propose that trust decisions are driven by dual information processing paths (H-path and S-path) while considering the multidimensionality of cues (human-related and AI agent-related) in each path. Finally, four representative factors are included as antecedents of trust (perceived pleasure, anthropomorphism, knowledge, and perceived benefit). 
In the second stage, trust is divided into emotional trust and cognitive trust, which ultimately integrate to form overall trust. The third stage is the trust-driven behavioral response, including acceptance and ethical expectations. The following sections introduce these variables one by one and, drawing on the literature, propose hypotheses about the relationships among them.

Figure 1. Research model and hypotheses.

2.2. Components of Trust

Trust, the general willingness to trust others (Mayer et al., 1995), has evolved into distinct dimensions in human–AI contexts. According to previous views, trust includes cognitive trust and emotional trust (Kyung & Kwon, 2022; J.-g. Lee & Lee, 2022). Cognitive trust refers to the cognition formed by users’ rational reasoning and evaluation of the evaluated object, which is based on objective results (Lewis & Weigert, 1985). In other words, users will establish cognitive trust based on rational cognition and the evaluation of the potential benefits of technology (Komiak & Benbasat, 2006). Emotional trust refers to the user’s attitude toward the evaluated object, which is formed by irrational factors (McAllister, 1995; Lahno, 2020) and is usually based on the user’s emotions and feelings. As previously suggested by research, it is necessary to simultaneously examine different types of user trust in the context of AI (Glikson & Woolley, 2020). This is because AI technology exhibits characteristics that mimic human intelligence, which may lead users to compare AI agents to humans during interactions, resulting in complex cognitive and emotional factors that cannot be ignored (Gursoy et al., 2019). Given the complexity and multidimensionality of trust, we believe that even emotional trust and cognitive trust cannot fully encompass the concept of trust. This paper boldly speculates that affective trust and cognitive trust are merely stage-specific psychological products of the two decision-making pathways, which are ultimately integrated by overall trust, thus representing a comprehensive view of an individual’s trust in AI agents. Therefore, building on existing classifications of trust (cognitive trust and emotional trust), we also consider overall trust, defined as the general tendency to be willing to rely on AI agents in a variety of situations (Mayer et al., 1995; McKnight & Chervany, 2001). 
It will facilitate a more scientific and thorough explanation of the mechanisms by which consumers develop trust in AI agents. In summary, this paper discusses three types of trust and their interrelationships, including affective trust, cognitive trust, and overall trust. We propose the following hypotheses:

H1.

The user’s affective trust in AI agents will positively influence overall trust.

H2.

The user’s cognitive trust in AI agents will positively affect overall trust.

2.3. Drivers of Trust

When considering the antecedents of user trust, previous studies have typically considered only a single category of factors. However, in the field of human–AI interaction, the characteristics of both interacting entities serve as cues that influence the process of developing human trust. Based on this, we emphasize and consider the multidimensionality of trust antecedents, which addresses the shortcomings of previous research (Yang & Wibowo, 2022). In other words, we include both human- and agent-related factors in the theoretical framework of both decision paths. It is worth noting that only a limited number of representative variables have been selected in this paper, and more variables will be further expanded in future research. Specifically, in the H-path, we selected perceived pleasure (user characteristic) and anthropomorphism (AI agent characteristic) as independent variables. In the S-path, we selected knowledge (user characteristic) and benefit (AI agent characteristic) as independent variables. Based on previous research and the results of meta-analyses, these four variables are often explored and have been shown to have a significant impact on trust (Duffy, 2017; Cabiddu et al., 2022; Venkatesh, 2000; Kaplan et al., 2023). Therefore, we assume that these variables will continue to be effective within our research framework. The following literature section will introduce each variable in turn.

Perceived pleasure is defined as the pleasurable response elicited by AI agents. Research has shown that users’ emotional responses often influence their trust in a particular technology (Lahno, 2001; Komiak & Benbasat, 2006). This finding is consistent with the perspective of affective heuristic theory, which suggests that emotional feedback related to the target object can provide cues to decision evaluators (Slovic et al., 2002). For example, positive emotions provide a “proceed” feedback signal, thus aiding in the quick judgment of whether to approach the object. In other words, in the process of interacting with AI, the emotion of pleasure will serve as a key cue in the heuristic processing path, helping evaluators to quickly respond positively, such as a higher willingness to accept the technology (Venkatesh, 2000; Tan et al., 2022). Based on these perspectives, we speculate that perceived pleasure during the interaction between users and AI agents can help improve users’ affective trust, assuming the following:

H3.

The user’s perceived pleasure of AI agents will positively influence affective trust.

Social presence theory asserts that the characteristics of the technology itself influence individual perceptions and points out that technology can be perceived as human-like rather than as a mere tool (Short et al., 1976). The theory emphasizes that the anthropomorphic characteristics of technology warrant attention. Anthropomorphism here refers to the degree to which users perceive AI agents as possessing human-like awareness and capabilities (H.-Y. Kim & McGill, 2018). However, there is currently no consensus on the relationship between anthropomorphism and user trust, which may be related to the research context. For example, a previous study showed that when AI is anthropomorphized, users tend to trust and follow it regardless of its actual performance (Castelo et al., 2019). Similar conclusions have been supported in different AI application scenarios, such as chatbots (Cheng et al., 2022) and personal assistants (Chen & Park, 2021; Patrizi et al., 2024). However, a study conducted in the banking and telecommunications industry did not find such a significant relationship (Chi & Hoang Vu, 2023). Subsequently, some scholars further considered the different categories of anthropomorphism and emphasized that these categories would affect the relationship between anthropomorphism and trust (Inie et al., 2024). The research objective of this article is to discuss users’ overall anthropomorphic perception of AI agents (as a comprehensive category). Therefore, we follow the conclusions of most research. In other words, this article speculates that when humans rely on AI agents for decision-making and task execution, the anthropomorphic features of agents can provide users with positive emotional experiences, thereby helping to cultivate higher levels of emotional trust. Therefore, we propose the following hypothesis:

H4.

Anthropomorphism of AI agents will positively influence affective trust.

Third, knowledge is one of the core concepts for understanding consumer perceptions of new technologies and innovations (Knight, 2005). Without sufficient knowledge of a given issue, people may resort to “mystical beliefs and irrational fears of the unknown” (Sturgis & Allum, 2004). In addition, this paper defines cognitive trust as whether users are willing to accept factual information or suggestions and take action, and whether they believe that the technology is helpful, capable, or useful (Glikson & Woolley, 2020). It follows that cognitive trust is often directly related to the user’s knowledge and understanding of the technology’s capabilities, a view supported by many studies. For example, a study on autonomous driving found that those with the least knowledge had the worst attitudes toward autonomous driving (Sanbonmatsu et al., 2018). In other words, users construct trust based on their understanding of the new technology, while those who lack knowledge cannot reasonably construct trust. Thus, this paper speculates that perceived knowledge associated with AI agents could help people make rational evaluations and thus build cognitive trust. Therefore, we propose the following hypothesis:

H5.

The user’s perceived knowledge about AI agents will have a positive effect on cognitive trust.

Fourth, perceived benefits are defined in this paper as the advantages and benefits that users believe will result from the use of AI agents. Evaluating potential benefits is a cognitive process based on a rational analysis that influences users’ opinions about certain issues. Typically, when users strongly perceive the benefits of a technology, they tend to exhibit positive attitudes and behavioral tendencies (Hohenberger et al., 2017; Kohl et al., 2018). In other words, when users believe that AI is useful and helpful, they tend to trust it. For example, a study on AI-driven IRAs found that reflecting the potential benefits of information retrieval can strengthen participants’ cognitive trust and thus improve their attitudes (J. Kim, 2020). Therefore, we believe that if future users can perceive the benefits and advantages that AI agents bring to their lives and work, it will help establish a higher level of cognitive trust, thereby promoting their acceptance and willingness to use AI agents. We propose the following hypothesis:

H6.

The user’s perceived benefit of AI agents will positively influence cognitive trust.

2.4. Outcomes of Trust

As an important consequence of user trust, the intention to accept and use AI has become a hot research topic (Nazir et al., 2023). Previous studies consistently show that there is a significant positive correlation between user trust in AI and its adoption. For example, higher levels of trust stimulate higher behavioral intentions among users when interacting with various AI devices (Choi et al., 2023; Xiong et al., 2023). Moreover, even the most classical TAM needs to further consider the predictive role of trust (K. Liu & Tao, 2022; Vorm & Combs, 2022). These findings can be explained by the commitment–trust theory (Morgan & Hunt, 1994), which emphasizes the positive impact of trust on individuals’ commitment to product relationships. Trust has been considered a generic concept in previous studies, which corresponds to overall trust in this study. We propose the following hypothesis:

H7.

The user’s overall trust in AI agents will have a positive effect on general acceptance.

Similarly, affective trust and cognitive trust are also important predictors of acceptance. On the one hand, analogous to the affective heuristic theory (Slovic et al., 2002), the process of establishing affective trust is intuitive and unthinking. In other words, affective trust helps users simplify the evaluation process, allowing them to rely on intuition to decide whether to accept and use AI agents (Komiak & Benbasat, 2006). On the other hand, the process of establishing cognitive trust is based on logical reasoning and rational evaluation, the conclusion of which may be more reliable for users. In short, once users establish cognitive trust, it will largely determine their subsequent behavior. Combining the above discussions, we speculate that affective trust represents users’ sense of security and comfort in relying on AI agents (Komiak & Benbasat, 2006), which will become the basic requirement for their acceptance. Cognitive trust reflects the level of one’s confidence in the capabilities and performance of AI agents, and the higher the level, the more likely they are to accept and use the agents. Therefore, we propose the following hypotheses:

H8.

The user’s affective trust in AI agents will positively influence general acceptance.

H9.

The user’s cognitive trust in AI agents will positively influence general acceptance.

In recent years, AI ethics issues such as loss of privacy, unfairness, and opacity have emerged (Oseni et al., 2021), raising concerns and fears among people. Extensive discussions and efforts are underway in society at large to address the ethics of AI (John-Mathews, 2022). In this context, uncovering users’ core ethical requirements for AI technology has become a key breakthrough point. Based on this, this study proposes the variable of ethical expectations to measure users’ expectations of explainability, transparency, and ethical norms of AI. Although this variable is not a direct result of trust, it is highly necessary to include it in our theoretical framework. This is because deconstructing the relationship between acceptance and ethical expectations can provide actionable design guidelines for AI developers to create AI agents that meet people’s expectations. We propose the following hypothesis:

H10.

The user’s general acceptance toward AI agents will positively influence ethical expectations.
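The ten hypotheses above define a directed path model. As a compact summary, the sketch below encodes H1 to H10 as (source, target) pairs and derives which constructs are exogenous and in what order the constructs can be evaluated. The construct identifiers and helper functions are illustrative names chosen here, not part of the original study.

```python
# Hypothesized structural paths H1-H10 as (source, target) pairs.
# Construct identifiers are illustrative labels, not the authors' variable names.
PATHS = {
    "H1": ("affective_trust", "overall_trust"),
    "H2": ("cognitive_trust", "overall_trust"),
    "H3": ("perceived_pleasure", "affective_trust"),
    "H4": ("anthropomorphism", "affective_trust"),
    "H5": ("perceived_knowledge", "cognitive_trust"),
    "H6": ("perceived_benefit", "cognitive_trust"),
    "H7": ("overall_trust", "acceptance"),
    "H8": ("affective_trust", "acceptance"),
    "H9": ("cognitive_trust", "acceptance"),
    "H10": ("acceptance", "ethical_expectations"),
}


def predecessors(construct):
    """Constructs hypothesized to influence `construct` directly."""
    return sorted(src for src, dst in PATHS.values() if dst == construct)


def exogenous():
    """Constructs with no incoming path (the four trust antecedents)."""
    nodes = {n for pair in PATHS.values() for n in pair}
    return sorted(n for n in nodes if not predecessors(n))


def topological_order():
    """Order constructs so every cause precedes its effect (Kahn's algorithm)."""
    nodes = {n for pair in PATHS.values() for n in pair}
    indegree = {n: 0 for n in nodes}
    for _, dst in PATHS.values():
        indegree[dst] += 1
    ready = sorted(n for n in nodes if indegree[n] == 0)
    order = []
    while ready:
        node = ready.pop(0)
        order.append(node)
        for src, dst in PATHS.values():
            if src == node:
                indegree[dst] -= 1
                if indegree[dst] == 0:
                    ready.append(dst)
    if len(order) != len(nodes):
        raise ValueError("path model contains a cycle")
    return order


if __name__ == "__main__":
    print("Exogenous:", exogenous())
    print("Direct predictors of acceptance:", predecessors("acceptance"))
    print("Evaluation order:", topological_order())
```

Writing the model this way makes the three stages visible: the four antecedents have no predecessors, the three trust constructs sit in the middle, and acceptance and ethical expectations come last.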

3. Materials and Methods

3.1. Data Collection

To validate the theoretical model proposed in this paper, we conducted an online survey through a professional Internet survey platform called Baidu Intelligent Cloud. This platform is one of the largest online survey networks in China, with 17 million users from a wide range of demographic backgrounds, covering 300 cities in China, which is advantageous for collecting data representative of the Chinese population. Considering the linguistic and cultural backgrounds of all respondents, the online questionnaires were distributed in Chinese to ensure accurate understanding and responses. All participants gave informed consent before the survey and were fully informed of the research objectives. The data collected were used for academic purposes only, with strict adherence to confidentiality, voluntary participation, and anonymity. In addition, an attention check question (“It is important that you pay attention to this study. Please check ‘strongly agree’”) was included in the questionnaire to verify that the participants were paying attention and responding meaningfully. We collected 792 responses during the one-week online survey. We excluded 160 invalid responses: those that failed the attention check, came from respondents under the age of 18, or were incomplete. In the end, 632 valid responses were used for further analysis, with 55.4% male and 44.6% female respondents.
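The three exclusion rules can be expressed as a single filter. The following is a minimal sketch under stated assumptions: the field names (`attention_check`, `age`, `answers`) and the toy responses are hypothetical, since the platform's export format is not described here. Only the counts 792 and 160 come from the text.

```python
# Hypothetical record layout; the real export format is not described in the paper.
def is_valid(response):
    """Apply the three exclusion rules: attention check, age, completeness."""
    return (
        response["attention_check"] == "strongly agree"
        and response["age"] >= 18
        and all(answer is not None for answer in response["answers"])
    )


# Toy responses for illustration only (not the study's data).
responses = [
    {"attention_check": "strongly agree", "age": 25, "answers": [5, 6, 7]},
    {"attention_check": "agree", "age": 31, "answers": [4, 4, 4]},  # fails attention check
    {"attention_check": "strongly agree", "age": 17, "answers": [6, 6, 5]},  # under 18
    {"attention_check": "strongly agree", "age": 40, "answers": [3, None, 2]},  # incomplete
]
valid = [r for r in responses if is_valid(r)]

# Counts reported in the text: 792 collected, 160 excluded.
collected, excluded = 792, 160
retained = collected - excluded

if __name__ == "__main__":
    print(f"Toy example: {len(valid)} of {len(responses)} responses retained")
    print(f"Reported sample: {retained} valid responses")
```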

3.2. Instrument Development

Before launching the formal survey, we conducted a pilot study to evaluate the effectiveness of the questionnaire content. The pilot study was conducted in two phases: qualitative interviews and quantitative surveys. In the first phase, five experts (PhDs and researchers with a focus on user experience) and five non-experts were selected to participate in semi-structured interviews. The interviews focused on a comprehensive evaluation of the questionnaire, including the introductory text, scales, and options. The goal was to assess the clarity, ambiguity, and overall design of these elements. In addition, participants were asked to provide open-ended feedback regarding their suggestions for potential improvements to the questionnaire. Following an analysis of the interview transcripts, the wording of the questionnaire was refined to improve its clarity and comprehensibility. This revised version was then subjected to a second round of testing to determine its reliability and validity. We then conducted an online survey using the modified questionnaire with 50 valid responses and analyzed the data for the measurement model. The results showed that the reliability of the questionnaire was met, indicating that the questionnaire is suitable for large-scale research.

The formal questionnaire consists of three main parts. First, the questionnaire begins with an introductory text to help participants better understand the research background, which is presented below:

“As a key step in this survey, please read the following text carefully before you answer the questions.

The definition of AI agent: AI agents are entities based on AI technology, which can be physical (such as a robot) or virtual (such as a software program). They have the ability to perceive information from the environment, make autonomous decisions, and carry out actions to achieve specific goals.

The development trend of AI agents: With the high maturity of the technology, AI agents are accelerating to the ground. In the next five years, they will be widely used in education, healthcare, work, and travel, which can help you handle almost any matter. As Bill Gates said, AI agents will revolutionize human lifestyle in the next few years.

Scenario imagination: Please imagine that in the near future, AI agents will be deeply integrated into your daily life and work as intelligent partners. Please rate your views on AI agents based on a wide range of future scenarios.”

Second, measurement items for each construct in the theoretical model were presented in turn. To ensure the accuracy of the research results, it was necessary to measure the content of the theoretical model rigorously and to validate the research instruments. The majority of the items were therefore derived from existing mature scales and appropriately modified according to the research topic and interview results. The final analysis of the data confirmed that the reliability and validity of the instruments were satisfactory and that they were effective at measuring the intended constructs. Respondents were instructed to rate affective trust (Komiak & Benbasat, 2006) and cognitive trust (J.-g. Lee & Lee, 2022), each using 4 items adapted from previous studies. Similarly, perceived pleasure (Shin, 2021; Tan et al., 2022; Hsieh, 2023) and perceived knowledge (Celik, 2023; Jang et al., 2023) each included 3 items, and anthropomorphism (Delgosha & Hajiheydari, 2021; K. Liu & Tao, 2022; Sinha et al., 2020; Wu et al., 2023) and perceived benefit (Bao et al., 2022; Said et al., 2023) each contained 4 items, all of which were rated subjectively. Subsequently, respondents rated 5 items to assess their acceptance, which covered attitude, acceptance, and behavioral intentions (Bao et al., 2022; Tan et al., 2022; Hsieh, 2023; Jang et al., 2023). Ethical expectation was measured with 8 items (Shin, 2021; Celik, 2023). To avoid bias due to conceptual similarity, overall trust was measured last, using 3 items (K. Liu & Tao, 2022; Zhao et al., 2022; Jang et al., 2023). All items were measured using a 7-point Likert scale. The last part of the questionnaire collected demographic information, including age, gender, education, and AI experience. Appendix A lists all the items used for the formal survey.

4. Data Analysis and Results

In this study, partial least squares structural equation modeling (PLS-SEM) was used for data analysis. SEM is a statistical analysis technique that incorporates both measurement and structural models and can simultaneously address the relationships between latent variables and their indicators (Hair et al., 2021). PLS is a variance-based structural equation modeling technique (Wold, 1975) that facilitates the statistical evaluation of the hypotheses underlying the research model. PLS is suitable for analyzing complex relationships among multiple independent and dependent variables (Lowry & Gaskin, 2014), and it has been argued to outperform covariance-based SEM (CB-SEM) as an estimation method for models containing construct measures (Y. J. Xu, 2011), making it appropriate for this study. The data analysis procedure mainly included demographic statistics, model validation, and mediation effect analysis. We followed the recommendations of Hair et al. for model testing, covering both the measurement and structural models (Hair et al., 2014).

4.1. Sample Characteristics

The final dataset retained 632 complete and valid responses. A descriptive statistical analysis was performed on gender, age, education, etc. Table 1 shows the demographic characteristics of the respondents and their previous experience with AI products. The gender distribution was relatively balanced, with 55.4% male and 44.6% female. Regarding the representativeness of the sample, all users were randomly selected through the platform, covering more than 300 cities in China. In addition, about 94% of the respondents had experience using AI-related products or tools, indicating that they represent a potential user group for future AI agents. Therefore, we believe that this sample is highly representative and can be used for further analysis.

Table 1.

Demographic characteristic variables of respondents.

4.2. Measurement Model

After excluding item EE4, the remaining items were used for a formal analysis. The measurement model test consisted of a sequential evaluation of the reliability, validity, and method bias of all constructs. The results indicated that the measurement properties of the scale met the standards.

In terms of reliability, composite reliability (CR) reflects the internal consistency among indicators of a latent variable, with CR values of all variables above 0.700 indicating reliable results, detailed in Table 2. Similarly, Cronbach’s alpha (α), another common reliability measure, showed that the α coefficients of all variables were above the recommended threshold of 0.700, confirming that these constructs meet reliability standards (Bagozzi & Yi, 1988; Urbach & Ahlemann, 2010). In terms of convergent validity, the average variance extracted (AVE) represents the shared variance between a latent variable and its indicators. The AVE indicated that more than half of the variance observed in the items was explained by their underlying constructs, and values of all variables were above the threshold of 0.500, indicating good convergent validity (Schumacker & Lomax, 2004; Henseler et al., 2009). Factor loadings (FLs), the correlation coefficients between each measure item and the latent variable, greater than 0.700 indicate good convergent validity (Fornell & Larcker, 1981), and all measure items in this study met these criteria. For discriminant validity, two methods of analysis confirmed the good discriminant effects of our scale. According to the Fornell–Larcker criterion (Fornell & Larcker, 1981), discriminant validity is considered good if the square root of the AVE of each variable (diagonal elements in Table 3) is greater than its correlation coefficients with different variables, and the data of this study meet this condition. In addition, cross-loadings (CLs), which reflect the contribution of a measure item to other latent variables, showed that the loadings of each latent variable measure item exceeded its CLs, indicating good discriminant validity.
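As a rough illustration of the reliability and convergent validity indices described above, the sketch below computes CR and AVE from standardized factor loadings using the standard Fornell and Larcker formulas. The loadings are hypothetical placeholders, not the values reported in Table 2, and the function names are our own.

```python
import math

def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Assumes standardized loadings, so each item's error variance is 1 - loading^2.
    """
    total = sum(loadings)
    error_variance = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error_variance)

def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)

# Hypothetical loadings for a three-item construct (NOT the study's data).
example_loadings = [0.80, 0.85, 0.90]
cr = composite_reliability(example_loadings)
ave = average_variance_extracted(example_loadings)

print(f"CR = {cr:.3f} (threshold 0.700)")
print(f"AVE = {ave:.3f} (threshold 0.500)")
print(f"sqrt(AVE) = {math.sqrt(ave):.3f}")
```

With these placeholder loadings, both indices clear the thresholds cited in the text. In practice the loadings would come from the PLS measurement model output.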

Table 2.

Scales for reliability and validity of measurement models.

Table 3.

Discriminant validity and Correlation Matrix.
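The Fornell–Larcker comparison described in the measurement model paragraph can be sketched as a simple check. This is a minimal illustration that assumes a symmetric construct correlation matrix and one AVE per construct. The numbers are hypothetical and do not reproduce Table 3.

```python
import math

def fornell_larcker_ok(ave, corr):
    """Return True if sqrt(AVE) of every construct exceeds the absolute value
    of its correlations with all other constructs."""
    roots = [math.sqrt(v) for v in ave]
    for i, root in enumerate(roots):
        for j, r in enumerate(corr[i]):
            if i != j and abs(r) >= root:
                return False
    return True

# Hypothetical AVEs and correlations for three constructs (NOT the study's data).
ave_values = [0.70, 0.65, 0.72]
correlations = [
    [1.0, 0.6, 0.5],
    [0.6, 1.0, 0.7],
    [0.5, 0.7, 1.0],
]

passes = fornell_larcker_ok(ave_values, correlations)
print("Fornell-Larcker criterion satisfied:", passes)
```

The check fails as soon as any construct shares more variance with another construct than with its own indicators, for example if the second AVE above dropped to 0.45.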

In addition, we examined the possibility of measurement bias arising from the survey method. Because all constructs were measured with the same method, participants may have developed a consistent rating tendency, which could introduce measurement bias. Harman’s single-factor test (Podsakoff et al., 2003) was used to assess common method variance (CMV). The results showed that the total variance extracted by the single factor was 41.28%, which is below the commonly used threshold of 50%, suggesting that common method bias is not a serious concern in this study. Furthermore, the variance inflation factor (VIF) for the inner model was calculated to test the risk of multicollinearity, with VIF values of all variables below 3.00 (Hair et al., 2019), indicating no serious multicollinearity among the constructs. Overall, the results of the above analyses confirmed the good reliability and validity of the scale. In other words, the various aspects of the measurement model met the standards, allowing the scale data to be used directly in the subsequent structural model analysis.
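To make the VIF threshold concrete, the sketch below uses the general definition VIF = 1 / (1 − R²), where R² comes from regressing one predictor on the others. For the special case of exactly two predictors, R² equals their squared correlation. The correlation value is a hypothetical placeholder, not a result from this study.

```python
def vif_from_r_squared(r_squared):
    """VIF = 1 / (1 - R^2), where R^2 is obtained by regressing one
    predictor on all remaining predictors."""
    if not 0 <= r_squared < 1:
        raise ValueError("r_squared must lie in [0, 1)")
    return 1.0 / (1.0 - r_squared)

def vif_two_predictors(r):
    """Special case: with exactly two predictors, R^2 is their squared correlation."""
    return vif_from_r_squared(r ** 2)

# Hypothetical correlation between two antecedents (NOT the study's data).
example_vif = vif_two_predictors(0.6)
print(f"VIF = {example_vif:.4f}")

# An R^2 of 2/3 between predictors corresponds to the 3.00 cutoff.
print(f"VIF at R^2 = 2/3: {vif_from_r_squared(2 / 3):.2f}")
```

Under this definition, a VIF below 3.00 means that less than two thirds of a predictor's variance is explained by the other predictors.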

4.3. Structural Model and Hypothesis Test

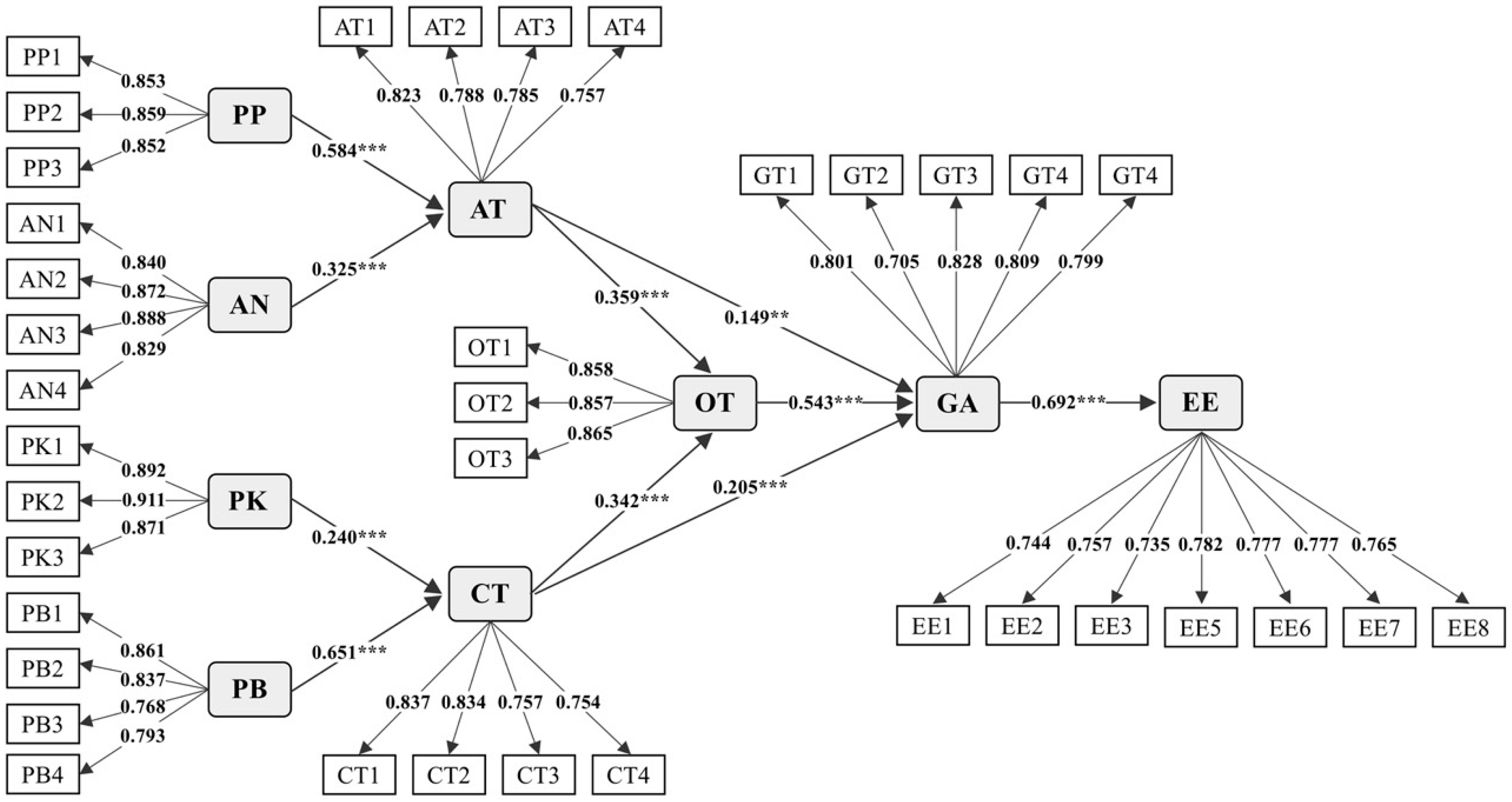

The first step in the structural model analysis was to test the significance of the relationships between variables. As recommended in a previous study, we drew 5000 bootstrap samples to test the significance of each path. All hypotheses proposed in this article were supported, as shown in Figure 2 and Table 4, based on the calculated path coefficients (β) and their significance levels (p). As hypothesized in the H-path, perceived pleasure (β = 0.584, p < 0.001) and anthropomorphism (β = 0.325, p < 0.001) had significant positive effects on affective trust. Similarly, in the S-path, perceived knowledge (β = 0.240, p < 0.001) and perceived benefit (β = 0.651, p < 0.001) were found to be significant drivers of cognitive trust. Affective trust (β = 0.359, p < 0.001) and cognitive trust (β = 0.342, p < 0.001) had statistically significant effects of similar size on overall trust. In terms of consumer acceptance, overall trust (β = 0.543, p < 0.001) had the strongest significant positive impact, followed by cognitive trust (β = 0.205, p < 0.001) and affective trust (β = 0.149, p < 0.01). Additionally, this study found a positive relationship between consumer acceptance of AI agents and their future ethical expectation (β = 0.692, p < 0.001).
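The bootstrap logic behind these significance tests can be illustrated with a simplified stand-in. The sketch below resamples cases with replacement and builds a percentile confidence interval for a standardized simple-regression slope, which equals the Pearson correlation. It is not a PLS-SEM estimator, and the data are synthetic and generated purely for illustration.

```python
import random

def pearson_r(x, y):
    """Pearson correlation, equal to the standardized slope in simple regression."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def bootstrap_ci(x, y, n_boot=5000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the coefficient."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        # Resample respondents (rows) with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        estimates.append(pearson_r([x[i] for i in idx], [y[i] for i in idx]))
    estimates.sort()
    lower = estimates[int(n_boot * alpha / 2)]
    upper = estimates[int(n_boot * (1 - alpha / 2)) - 1]
    return lower, upper

# Synthetic data (NOT the survey data): y depends positively on x plus noise.
data_rng = random.Random(42)
x = [data_rng.gauss(0, 1) for _ in range(120)]
y = [0.5 * a + data_rng.gauss(0, 0.8) for a in x]

estimate = pearson_r(x, y)
lower, upper = bootstrap_ci(x, y)
print(f"estimate = {estimate:.3f}, 95% CI = [{lower:.3f}, {upper:.3f}]")
print("significant at 5% level:", not (lower <= 0 <= upper))
```

A path is treated as significant when the interval excludes zero. PLS-SEM software applies the same resampling idea to the full model and typically reports t-values and p-values alongside the intervals.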

Figure 2.

Structural model results. Note: ** p < 0.01; *** p < 0.001. AN = anthropomorphism, AT = affective trust, CT = cognitive trust, EE = ethical expectation, GA = general acceptance, OT = overall trust, PB = perceived benefit, PK = perceived knowledge, and PP = perceived pleasure.

Table 4.

The results of hypothesis testing.

The second step in the analysis of the structural model was to evaluate its performance. The evaluation dimensions mainly included the model’s explanatory power, predictive power, and model fit, reflected by the R2, Q2, GoF, and SRMR indices. As shown in Table 4, the calculated values of these four indices were all within acceptable ranges, indicating the overall good performance of the model. The results of the PLS algorithm provided R2 values for five endogenous variables: overall trust (0.423), cognitive trust (0.575), affective trust (0.650), general acceptance (0.635), and ethical expectation (0.479). R2 represents the amount of variance in the dependent variables that is explained by the independent variables, and these values exceeded the recommended threshold of 0.200, indicating the good explanatory power of our model (Shi et al., 2021). The R2 values for cognitive trust, affective trust, and acceptance were higher than those reported in previous studies (Shi et al., 2021), confirming the importance of multidimensional antecedents of trust emphasized in this article. Secondly, Q2 is related to predictive ability, with values greater than 0 indicating that the model has predictive relevance. The blindfolding results showed that Q2 for affective trust (0.400), cognitive trust (0.360), overall trust (0.309), general acceptance (0.390), and ethical expectation (0.272) all met this standard, suggesting good predictive ability of the model. As a measure of model fit, SRMR ranges from 0 to 1, with values closer to 0 indicating better fit. The SRMR result of this study was 0.063, which is below the recommended value of 0.080 (Hu & Bentler, 1999), indicating a good model fit. Additionally, the GoF value was calculated according to an accepted formula (Tenenhaus et al., 2005) and was 0.613, exceeding the 0.36 cutoff commonly used for a large effect size, also indicating a good model fit.
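For readers unfamiliar with the GoF index, the Tenenhaus et al. (2005) formula is the geometric mean of the average AVE (communality) and the average R2 of the endogenous constructs. The sketch below applies it to the R2 values reported above. Because the AVE values from Table 2 are not reproduced in this text, a placeholder AVE of 0.70 per construct is assumed, so the output is illustrative only and is not expected to match the reported 0.613.

```python
import math

def goodness_of_fit(ave_values, r2_values):
    """GoF = sqrt(mean(AVE) * mean(R^2)), following Tenenhaus et al. (2005)."""
    mean_ave = sum(ave_values) / len(ave_values)
    mean_r2 = sum(r2_values) / len(r2_values)
    return math.sqrt(mean_ave * mean_r2)

# R^2 values as reported in the text for the five endogenous constructs.
r2_reported = [0.423, 0.575, 0.650, 0.635, 0.479]

# ASSUMPTION: placeholder AVE for each of the nine constructs (not from Table 2).
ave_assumed = [0.70] * 9

gof = goodness_of_fit(ave_assumed, r2_reported)
print(f"mean R^2 = {sum(r2_reported) / len(r2_reported):.4f}")
print(f"GoF (with placeholder AVE) = {gof:.3f}")
```

Substituting the actual AVE values from the measurement model would give the study's own figure.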

To further test the structural relationships in the model, we examined mediation effects, which are detailed in Table 5. Following a rigorous procedure, a step-by-step regression coefficient test was used to examine mediation effects, and bootstrapping was used to test their significance. A mediation effect was considered present only when the 95% confidence interval did not include zero. Furthermore, we calculated the variance accounted for (VAF) to determine the strength of the indirect effect relative to the total effect (i.e., direct plus indirect effects). VAF values greater than 20% and less than 80% indicated partial mediation (Hair et al., 2016). The results suggest that affective trust mediates the relationships between perceived pleasure and overall trust and between anthropomorphism and overall trust, with VAFs of 31.11% and 79.91%, respectively. In addition, affective trust mediates the relationships between perceived pleasure and general acceptance (VAF = 28.95%) as well as anthropomorphism and general acceptance (VAF = 73.36%). Cognitive trust was found to have similar mediating effects. It played a significant mediating role in the relationships between perceived knowledge and overall trust (VAF = 35.99%) as well as perceived benefit and overall trust (VAF = 36.15%). It also mediated the indirect influences of perceived knowledge on general acceptance and of perceived benefit on general acceptance, with VAF values of 37.56% and 52.03%, respectively. Moreover, overall trust played a significant role in mediating the relationships between affective trust and general acceptance, as well as between cognitive trust and general acceptance. The mediating effect was strong, with VAFs greater than 50%.
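The VAF calculation can be sketched for a single-mediator case as follows. The indirect effect is the product of the two path coefficients through the mediator, and VAF is its share of the total effect. The coefficients below are hypothetical and chosen only to show the arithmetic. The lower and upper bands for "no" and "full" mediation follow the conventional Hair et al. reading and go slightly beyond what the text states.

```python
def variance_accounted_for(a, b, direct):
    """VAF = indirect / total, where indirect = a * b and total = indirect + direct.

    a: path from predictor to mediator
    b: path from mediator to outcome
    direct: direct path from predictor to outcome
    """
    indirect = a * b
    total = indirect + direct
    return indirect / total

def classify_mediation(vaf):
    """Conventional bands: <20% none, 20-80% partial, >80% full (assumed reading)."""
    if vaf < 0.20:
        return "no mediation"
    if vaf <= 0.80:
        return "partial mediation"
    return "full mediation"

# Hypothetical path coefficients (NOT taken from Table 5).
example_vaf = variance_accounted_for(a=0.5, b=0.4, direct=0.3)
print(f"VAF = {example_vaf:.2%} -> {classify_mediation(example_vaf)}")
```

In the study itself, the inputs would be the bootstrapped direct and indirect effects from the structural model rather than hand-picked values.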

Table 5.

The results of the mediating effect test of trust.

5. Discussion and Implications

Trust and acceptance are the fundamental engines for creating human-centered AI (Shin, 2020), as well as the crucial bridge between consumers and future AI agents. Therefore, this study proposes a comprehensive framework to explain consumer trust and acceptance, along with their complex decision-making mechanisms. The results provide robust support for the effectiveness of the proposed model: all of the hypotheses were supported, and the model demonstrated better explanatory power than previous studies (Pelau et al., 2021; J.-g. Lee & Lee, 2022). In addition, this research reveals a crucial mechanism of trust, namely, its ability to integrate the results of dual-path processing, which further influences subsequent user behavior such as acceptance and ethical expectations. Furthermore, this study identifies the key factors that drive different types of trust in different decision pathways, and demonstrates that different types of trust promote user behavioral intentions. In summary, this study provides valuable insights into consumer trust and acceptance in the age of AI agents. It further deepens our understanding of how different types of trust influence consumer behavior and promotes a dynamic perspective on the fundamental characteristics of human and AI agents, trust-building mechanisms, and user acceptance in consumer markets.

5.1. Main Findings

Overall, the main contribution of this study is the decoupling of statistical significance and practical differentiation. This not only confirms the statistical significance of each variable, but more importantly, provides empirical evidence for the implementation of differentiation strategies based on effect size analysis. Specifically, on the one hand, the results of this study show that all paths are significant, confirming that the variables in the model have significant explanatory power for the trust and acceptance behavior of AI agents. This phenomenon is consistent with the results of previous research (Venkatesh et al., 2012), which reveals the essential characteristics of the synergistic effect of multidimensional factors on user trust and acceptance in the context of new technologies. In other words, the results of this study also indicate the limitations of traditional single-factor optimization strategies in the era of AI agents. On the other hand, although all paths are statistically significant, the hierarchy of effect sizes reveals the different weights of each variable in practical applications. Specifically, based on the relative size of the path coefficients, we recommend adopting a phased implementation strategy in the practical process. For example, in the early stages of promotion, priority can be given to strengthening the dual driving path of emotional experience design and actual value perception to quickly establish a foundation of user trust. The key findings of this article are discussed in turn below.

Firstly, with the HSM as the theoretical background, the results reveal the cogwheel effect of trust, which means that trust can transform static basic characteristics into positive behavioral intentions toward AI agents. In other words, trust can integrate the processing outcomes of dual pathways for decision-making, thereby further facilitating user behavioral intentions. Simply put, in future scenarios where users interact with AI agents, users will combine the characteristics of themselves and AI agents to process and evaluate trust according to the HSM theory. They establish affective trust by intuitively evaluating heuristic cues, while simultaneously building cognitive trust through the logical analysis of systematic cues. The former provides a simplified evaluation process for quickly judging the credibility of AI agents; the latter relies on deep thinking, combining personal knowledge and the potential value of AI agents for a rational evaluation. Subsequently, the psychological products of these two processing pathways are integrated by overall trust, forming a comprehensive trust conclusion. Ultimately, these different types of trust further promote user acceptance and ethical expectations. This linking mechanism mentioned above is statistically reflected in the mediating effects of affective and cognitive trust. In other words, affective trust and cognitive trust play significant roles in mediating the relationship between the four key drivers and overall trust. Additionally, affective and cognitive trust have a significant indirect effect on acceptance through the bridge of overall trust, in addition to their direct effect on acceptance. In summary, differing from the operational modes pointed out in previous studies (Shin, 2020, 2021), the linking mechanism of trust revealed in this study can serve as an important clue for research on human interactions with AI agents, providing a breakthrough for human-centered AI research.

Second, this article emphasizes the multidimensionality of trust antecedents and identifies the key drivers of affective and cognitive trust. Specifically, the influencing factors in this model explain affective and cognitive trust better than those in previous studies (Shi et al., 2021; J.-g. Lee & Lee, 2022), yielding much larger R2 values. This result suggests that both human-related and AI agent-related characteristics will serve as crucial cues for individual decision judgments. Only by considering these multidimensional factors can we more fully explain user psychology and behavior. Following this approach, this paper identifies the key factors that influence affective and cognitive trust. For affective trust, both perceived pleasure (human-related) and anthropomorphism (AI agent-related) significantly promote trust, consistent with previous research (J. I. Lee et al., 2023). Concerning anthropomorphism, the more similar an AI agent is to a human, the more likely people are to trust it on the affective dimension. The reason may be that when AI agents exhibit human-like emotions, language, and social behaviors, people are more likely to view them as social partners rather than mere tools, thereby strengthening affective trust. It is worth noting, however, that perceived pleasure has a stronger effect on affective trust than anthropomorphism in this study. This finding may reflect the affect heuristic (Slovic et al., 2007), according to which people asked to evaluate complex entities first rely on their affective response to make judgments. In other words, when confronted with an AI agent in the future, humans may prefer to rely on their affective reactions and intuition to simplify and speed up the decision evaluation process, thereby relying less on anthropomorphism.
For cognitive trust, we found that both perceived knowledge and the perceived benefit of AI agents are significant influencing factors. Individuals who believe they have a better understanding of agents are more likely to cognitively trust them. In addition, when consumers perceive greater benefits, they are more likely to develop stronger cognitive trust, which is closely aligned with previous perspectives (J. Kim, 2020). Further findings indicate that perceived benefit has a stronger impact on cognitive trust, with a path coefficient about 2.7 times that of perceived knowledge (0.651 vs. 0.240). This could be explained by the fact that perceived benefits are directly related to the user’s assessment of whether the AI agent can meet their needs. In other words, while some cognitive trust comes from understanding the AI agent, it primarily comes from the expected benefits of using the AI agent. In summary, these findings not only help refine the understanding of the antecedents of user trust in the AI domain but also provide direct insights for the subsequent design and development of AI agents. For example, in the initial promotion phase, actively demonstrating the potential benefits of AI agents can increase cognitive trust among general consumers. In addition, industry professionals could consider enhancing positive emotional experiences when interacting with AI agents to foster users’ affective trust.

Finally, this paper confirms the importance of considering the multidimensionality of trust, as well as the crucial role of trust in the widespread acceptance of future AI agents. As is well known, trust is often considered a key mechanism to reduce uncertainty and complexity in decision-making processes, especially in the adoption of new technologies. The results of this study indicate that affective trust, cognitive trust, and overall trust are all essential prerequisites for the user acceptance of AI agents. It is worth noting that the approach of considering the multidimensionality of trust provides strong support for its effectiveness in predicting AI agent acceptance, as it explains 59.3% of the variance in AI agent acceptance, surpassing several previous studies (H. Liu et al., 2019; Pelau et al., 2021). This conclusion implies that in the process of implementing AI agents in the future, researchers need to consider the subdivided dimensions of the trust concept to more accurately capture and distinguish the attributions behind user behavior. Furthermore, we find that compared to affective and cognitive trust, overall trust has a stronger facilitating effect on acceptance, which could be explained by the different mechanisms through which different types of trust influence individual behavior. Specifically, cognitive trust is often based on logical reasoning and rational evaluation, while affective trust emphasizes the affective components of trust. In comparison, overall trust represents a more comprehensive, integrated perspective based on the fusion of these two, which can more effectively transfer and promote adoption. In other words, overall trust provides a more comprehensive and stable evaluation basis for the AI agent and thus has a stronger facilitating effect on acceptance. 
In addition, we revealed an important finding regarding ethical expectations: users who are more inclined to adopt AI agents tend to have stronger expectations and visions for them. For example, they are more likely to hope that the AI agent will adhere to the ethical norms of human society, and they expect the AI agent’s processes to remain highly transparent. This may be because user groups with high acceptance have a deeper understanding of AI agents and attach greater importance to AI ethical issues. In conclusion, the above findings have strong implications. For example, the development of trustworthy AI agents is an inevitable trend, because only by gaining users’ trust can AI agents be widely accepted and truly integrated into human society. In addition, the research and development of AI agents should prioritize ethical considerations to ensure that technological innovation and ethical standards progress in tandem to meet the expectations of potential AI agent user groups.

5.2. Theoretical and Practical Implications

Our study makes a significant original contribution and offers several theoretical insights. Most importantly, in response to the demands of earlier phases of AI development, this research re-examines and constructs the consumer cognitive framework for future general AI agents, rather than being limited to specific applications in a particular domain. In addition, this study addresses some of the limitations of previous studies. For example, it adopts HSM to analyze the construction process of user trust, acceptance, and its underlying cognitive mechanisms in the age of AI agents, thus broadening the theoretical and methodological approaches to AI trust and acceptance. In terms of model construction, this research takes into account the multidimensionality of trust antecedents and ethical considerations, thereby enhancing the explanatory power of user trust and behavioral intentions, which is expected to further advance the field of human–machine trust research. Finally, this study reveals that trust is not only a bridge between humans and AI agents but also a dynamic mechanism that transforms static basic characteristics into positive behavioral intentions. This significant discovery provides key insights for understanding consumers’ behavioral patterns in the age of AI agents.

Furthermore, this research has significant practical implications. First of all, compared to prior research, the findings of this research better reflect the perspectives of potential future AI consumers. In addition, this paper clarifies the key antecedents of user trust in AI agents and confirms the mechanism by which trust influences acceptance. This will provide scientifically reliable practical guidance for the design and development of future AI agents, while also urging practitioners to adjust strategies promptly to further promote the development and application of AI agents. For example, improving the level of anthropomorphism of AI agents can be a key strategy to enhance consumer trust, and stimulating positive emotional experiences should be regarded as a foundation for building deep trust relationships. In addition, policymakers in the AI agent industry could consider improving education and communication strategies, such as emphasizing the societal benefits of AI agents and improving the consumer’s understanding of AI agents, to effectively improve consumer trust and acceptance.

5.3. Limitations and Future Research

This study has several limitations that can be addressed in future work. The first limitation is that we only focus on consumers’ overall view of agents as a comprehensive category, without further discussion of different agents. Future research can explore in detail the differences in cognitive mechanisms between different types of agents. In addition, we only considered some specific variables as antecedents of trust, which means that future research can investigate more comprehensive variables to enrich this theoretical framework. Furthermore, this study used a cross-sectional survey design, which primarily captures associations among variables rather than causal relationships. Future research could employ longitudinal designs and cross-lagged panel models to dynamically examine the evolution of consumer psychological mechanisms, thereby enhancing the validity of causal inferences. Finally, although the definition provided at the beginning of the questionnaire improves measurement consistency, it may also introduce a priming effect, which should be controlled with balanced stimulus variants in future replication experiments.

6. Conclusions

Revolutionary advances in AI are bringing humanity into the age of super-agents, which means a complete upheaval in human social patterns, accompanied by significant psychological adaptation challenges. This paper explores the consumer’s trust and behavior mechanisms of future widespread and independent AI agents. Our model provides strategic considerations and a theoretical framework for combining different types of trust with their antecedents and consequences from a decision-making perspective. This research empirically reveals a crucial integrative mechanism in users’ evaluative decision-making: overall trust integrates the results of dual-pathway processing, further influencing the acceptance behavior and ethical expectations of users. In addition, considering the multidimensionality of trust antecedents, this study identifies the key drivers of trust from the human perspective and the AI agent perspective, respectively, and incorporates ethical factors into the model construction. In summary, the long-term goal of AI is to develop human-centered services, and the results of this study are a significant step toward achieving that goal. These insights will guide practitioners and policymakers to take measures to design and implement trustworthy, responsible, and ethically oriented AI agents to meet consumer needs and expectations, thereby further promoting social progress and development.

Author Contributions

Conceptualization, X.Z., W.Y. and Z.Z.; methodology, X.Z. and L.S.; software, X.Z.; validation, X.Z., Y.L. and Z.Z.; formal analysis, X.Z.; investigation, X.Z., W.Y., Z.Z., S.S. and Y.L.; resources, S.S., Y.L. and L.S.; data curation, X.Z.; writing—original draft preparation, X.Z., Z.Z., S.S. and Y.L.; writing—review and editing, X.Z., W.Y. and L.S.; visualization, X.Z.; supervision, Z.Z. and L.S.; project administration, X.Z., W.Y. and Y.L.; funding acquisition, L.S. and W.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Key Research and Development Program of China (2023YFF0904900).

Institutional Review Board Statement

Ethical review and approval were waived for this study due to its exclusive use of fully anonymized data (exempt under Article 32(2) of China’s Ethical Review Measures for Human Life Sciences and Medical Research, 2023) and compliance with the Declaration of Helsinki.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data will be made available on request.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. The Survey Questionnaire Items

| Construct | Scale Items |
| --- | --- |
| Perceived Pleasure (PP) | PP1: Interacting with AI agents will be very fun. PP2: The actual process of using AI agents will be enjoyable. PP3: Using AI agents will be pleasant. |
| Anthropomorphism (AN) | AN1: AI agents will experience emotions. AN2: AI agents will have consciousness. AN3: AI agents will have minds of their own. AN4: AI agents will have personalities. |
| Perceived Benefit (PB) | PB1: AI agents will provide convenience for daily life. PB2: AI agents will improve work efficiency and productivity. PB3: AI agents will promote economic development. PB4: AI agents will provide personalized services to better meet individual needs. |
| Perceived Knowledge (PK) | PK1: I know AI agents very well. PK2: Compared to most people, I have a better understanding of AI agents. PK3: Among the people I know, I can be regarded as an “expert” in the field of AI agents. |
| Cognitive Trust (CT) | CT1: AI agents will be technologically trustworthy. CT2: The overall ability and performance of AI agents will be reliable. CT3: AI agents will have rich and powerful domain knowledge. CT4: AI agents will make accurate and wise decisions. |
| Affective Trust (AT) | AT1: AI agents will closely follow and understand my needs in future interactions. AT2: AI agents will provide timely assistance when I need help. AT3: AI agents that help me with tasks will make me feel comfortable. AT4: AI agents will demonstrate understanding and resonance with my emotional state. |
| Overall Trust (OT) | OT1: AI agents will be reliable. OT2: AI agents will be trustworthy. OT3: Overall, I can trust AI agents in the future. |
| General Acceptance (GA) | GA1: I will use AI agents in the future. GA2: I will pay for AI agents in the future. GA3: I will recommend AI agents to my family and friends. GA4: Please rate your overall attitude toward future AI agents. GA5: Please indicate your acceptability level of future AI agents. |
| Ethical Expectation (EE) | EE1: I expect AI agents to provide me with sufficient information to explain their operational principle when performing tasks in the future. EE2: I expect explanations from AI agents to be clear and easy to understand in the future. EE3: I expect AI agents to fully demonstrate their action to me, ensuring transparency in the future. EE4: I expect AI agents to enable me to clearly understand how they make decisions in the future. EE5: I expect AI agents to follow the moral and ethical standards of human society in the future. EE6: I expect AI agents to be fair, such as to ensure that every user receives support in the future. EE7: I expect AI agents to be inclusive, such as avoiding gender discrimination in the future. EE8: I expect AI agents to effectively protect my personal privacy and data security in the future. |

Note: All items were adapted from established studies and refined based on the findings from the pre-experiment.

References

- Abadie, A., Chowdhury, S., & Mangla, S. K. (2024). A shared journey: Experiential perspective and empirical evidence of virtual social robot ChatGPT’s priori acceptance. Technological Forecasting and Social Change, 201, 123202. [Google Scholar] [CrossRef]

- Ali, J., Amjad, U. A., Ansari, W. I., & Hafeez, F. (2024). Artificial intelligence system-based chatbot as a hotel agent. Recent Advances in Electrical & Electronic Engineering (Formerly Recent Patents on Electrical & Electronic Engineering), 17(3), 316–325. [Google Scholar]

- Ananthan, B., Sudhan, P., & Sukumaran, R. (2023). English facilitators’ hesitation to adopt AI-assisted speaking assessment in higher education. Boletin de Literatura Oral-The Literary Journal, 10(1), 1324–1329. [Google Scholar]

- Badghish, S., Shaik, A. S., Sahore, N., Srivastava, S., & Masood, A. (2024). Can transactional use of AI-controlled voice assistants for service delivery pickup pace in the near future? A social learning theory (SLT) perspective. Technological Forecasting and Social Change, 198, 122972. [Google Scholar] [CrossRef]

- Bagozzi, R. P., & Yi, Y. (1988). On the evaluation of structural equation models. Journal of the Academy of Marketing Science, 16, 74–94. [Google Scholar] [CrossRef]

- Bao, L., Krause, N. M., Calice, M. N., Scheufele, D. A., Wirz, C. D., Brossard, D., Newman, T. P., & Xenos, M. A. (2022). Whose AI? How different consumers think about AI and its social impacts. Computers in Human Behavior, 130, 107182. [Google Scholar] [CrossRef]

- Cabiddu, F., Moi, L., Patriotta, G., & Allen, D. G. (2022). Why do users trust algorithms? A review and conceptualization of initial trust and trust over time. European Management Journal, 40(5), 685–706.

- Castelo, N., Bos, M. W., & Lehmann, D. R. (2019). Task-dependent algorithm aversion. Journal of Marketing Research, 56(5), 809–825.

- Celik, I. (2023). Towards intelligent-TPACK: An empirical study on teachers’ professional knowledge to ethically integrate artificial intelligence (AI)-based tools into education. Computers in Human Behavior, 138, 107468.

- Chaiken, S. (1980). Heuristic versus systematic information processing and the use of source versus message cues in persuasion. Journal of Personality and Social Psychology, 39(5), 752–766.

- Chakraborty, D., Kar, A. K., Patre, S., & Gupta, S. (2024). Enhancing trust in online grocery shopping through generative AI chatbots. Journal of Business Research, 180, 114737.

- Chen, Q. Q., & Park, H. J. (2021). How anthropomorphism affects trust in intelligent personal assistants. Industrial Management & Data Systems, 121(12), 2722–2737.

- Cheng, X., Zhang, X., Cohen, J., & Mou, J. (2022). Human vs. AI: Understanding the impact of anthropomorphism on consumer response to chatbots from the perspective of trust and relationship norms. Information Processing & Management, 59(3), 102940.

- Chi, N. T. K., & Hoang Vu, N. (2023). Investigating the customer trust in artificial intelligence: The role of anthropomorphism, empathy response, and interaction. CAAI Transactions on Intelligence Technology, 8(1), 260–273.

- Choi, S., Jang, Y., & Kim, H. (2023). Influence of pedagogical beliefs and perceived trust on teachers’ acceptance of educational artificial intelligence tools. International Journal of Human–Computer Interaction, 39(4), 910–922.

- Choung, H., David, P., & Ross, A. (2023). Trust in AI and its role in the acceptance of AI technologies. International Journal of Human–Computer Interaction, 39(9), 1727–1739.

- Delgosha, M. S., & Hajiheydari, N. (2021). How human users engage with consumer robots? A dual model of psychological ownership and trust to explain post-adoption behaviours. Computers in Human Behavior, 117, 106660.

- Duffy, A. (2017). Trusting me, trusting you: Evaluating three forms of trust on an information-rich consumer review website. Journal of Consumer Behaviour, 16(3), 212–220.

- Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18(1), 39–50.

- Fu, Z., Zhao, T. Z., & Finn, C. (2024). Mobile ALOHA: Learning bimanual mobile manipulation with low-cost whole-body teleoperation. arXiv, arXiv:2401.02117.

- Glikson, E., & Woolley, A. W. (2020). Human trust in artificial intelligence: Review of empirical research. Academy of Management Annals, 14(2), 627–660.

- Gursoy, D., Chi, O. H., Lu, L., & Nunkoo, R. (2019). Consumers acceptance of artificially intelligent (AI) device use in service delivery. International Journal of Information Management, 49, 157–169.

- Hadi, M. U., Qureshi, R., Shah, A., Irfan, M., Zafar, A., Shaikh, M. B., Akhtar, N., Hassan, S. Z., Shoman, M., Wu, J., Mirjalili, S., & Mirjalili, S. (2023). A survey on large language models: Applications, challenges, limitations, and practical usage. Authorea Preprints. Available online: https://techrxiv.figshare.com/articles/preprint/A_Survey_on_Large_Language_Models_Applications_Challenges_Limitations_and_Practical_Usage/23589741/1/files/41501037.pdf (accessed on 28 February 2025).

- Hair, J. F., Jr., Hult, G. T. M., Ringle, C. M., & Sarstedt, M. (2016). A primer on partial least squares structural equation modeling (PLS-SEM). Sage Publications.

- Hair, J. F., Jr., Hult, G. T. M., Ringle, C. M., Sarstedt, M., Danks, N. P., & Ray, S. (2021). An introduction to structural equation modeling. In Partial least squares structural equation modeling (PLS-SEM) using R: A workbook (pp. 1–29). Springer.

- Hair, J. F., Jr., Sarstedt, M., Hopkins, L., & Kuppelwieser, V. G. (2014). Partial least squares structural equation modeling (PLS-SEM): An emerging tool in business research. European Business Review, 26, 106–121.

- Hair, J. F., Risher, J. J., Sarstedt, M., & Ringle, C. M. (2019). When to use and how to report the results of PLS-SEM. European Business Review, 31(1), 2–24.

- Hayawi, K., & Shahriar, S. (2024). AI agents from copilots to coworkers: Historical context, challenges, limitations, implications, and practical guidelines. Available online: https://www.preprints.org/manuscript/202404.0709/v1 (accessed on 28 February 2025).

- Henseler, J., Ringle, C. M., & Sinkovics, R. R. (2009). The use of partial least squares path modeling in international marketing. Emerald Group Publishing Limited.

- Hohenberger, C., Spörrle, M., & Welpe, I. M. (2017). Not fearless, but self-enhanced: The effects of anxiety on the willingness to use autonomous cars depend on individual levels of self-enhancement. Technological Forecasting and Social Change, 116, 40–52.

- Hsieh, P.-J. (2023). Determinants of physicians’ intention to use AI-assisted diagnosis: An integrated readiness perspective. Computers in Human Behavior, 147, 107868.

- Hu, L.-T., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal, 6(1), 1–55.

- Huo, W., Zheng, G., Yan, J., Sun, L., & Han, L. (2022). Interacting with medical artificial intelligence: Integrating self-responsibility attribution, human–computer trust, and personality. Computers in Human Behavior, 132, 107253.

- Inie, N., Druga, S., Zukerman, P., & Bender, E. M. (2024, June 3–6). From “AI” to probabilistic automation: How does anthropomorphization of technical systems descriptions influence trust? 2024 ACM Conference on Fairness, Accountability, and Transparency (pp. 2322–2347), Rio de Janeiro, Brazil.

- Ipsos MORI. (2024). Global attitudes on AI agents: From tools to teammates. Ipsos MORI.

- Jang, W., Kim, S., Chun, J. W., Jung, A.-R., & Kim, H. (2023). Role of recommendation sizes and travel involvement in evaluating travel destination recommendation services: Comparison between artificial intelligence and travel experts. Journal of Hospitality and Tourism Technology, 14(3), 401–415.

- John-Mathews, J.-M. (2022). Some critical and ethical perspectives on the empirical turn of AI interpretability. Technological Forecasting and Social Change, 174, 121209.

- Kadhim, M. A., Alam, M. A., & Kaur, H. (2013, December 21–23). Design and implementation of intelligent agent and diagnosis domain tool for rule-based expert system. 2013 International Conference on Machine Intelligence and Research Advancement (pp. 619–622), Katra, India.

- Kaplan, A. D., Kessler, T. T., Brill, J. C., & Hancock, P. A. (2023). Trust in artificial intelligence: Meta-analytic findings. Human Factors, 65(2), 337–359.

- Kim, H.-Y., & McGill, A. L. (2018). Minions for the rich? Financial status changes how consumers see products with anthropomorphic features. Journal of Consumer Research, 45(2), 429–450.

- Kim, J. (2020). The influence of perceived costs and perceived benefits on AI-driven interactive recommendation agent value. Journal of Global Scholars of Marketing Science, 30(3), 319–333.

- Kim, J., & Im, I. (2023). Anthropomorphic response: Understanding interactions between humans and artificial intelligence agents. Computers in Human Behavior, 139, 107512.

- Knight, A. J. (2005). Differential effects of perceived and objective knowledge measures on perceptions of biotechnology. AgBioForum, 8(4), 221–227.

- Kohl, C., Knigge, M., Baader, G., Böhm, M., & Krcmar, H. (2018). Anticipating acceptance of emerging technologies using Twitter: The case of self-driving cars. Journal of Business Economics, 88(5), 617–642.

- Komiak, S. Y., & Benbasat, I. (2006). The effects of personalization and familiarity on trust and adoption of recommendation agents. MIS Quarterly, 30, 941–960.

- Kyung, N., & Kwon, H. E. (2022). Rationally trust, but emotionally? The roles of cognitive and affective trust in laypeople’s acceptance of AI for preventive care operations. Production and Operations Management, 1–20.

- Lahno, B. (2001). On the emotional character of trust. Ethical Theory and Moral Practice, 4, 171–189.

- Lahno, B. (2020). Trust and emotion. In The Routledge handbook of trust and philosophy (pp. 147–159). Routledge.

- Lee, J.-G., & Lee, K. M. (2022). Polite speech strategies and their impact on drivers’ trust in autonomous vehicles. Computers in Human Behavior, 127, 107015.

- Lee, J. I., Dirks, K. T., & Campagna, R. L. (2023). At the heart of trust: Understanding the integral relationship between emotion and trust. Group & Organization Management, 48(2), 546–580.

- Lewis, J. D., & Weigert, A. (1985). Trust as a social reality. Social Forces, 63(4), 967–985.

- Liu, H., Yang, R., Wang, L., & Liu, P. (2019). Evaluating initial public acceptance of highly and fully autonomous vehicles. International Journal of Human–Computer Interaction, 35(11), 919–931.

- Liu, K., & Tao, D. (2022). The roles of trust, personalization, loss of privacy, and anthropomorphism in consumer acceptance of smart healthcare services. Computers in Human Behavior, 127, 107026.

- Liu, Y. L., Yan, W., Hu, B., Li, Z., & Lai, Y. L. (2022). Effects of personalization and source expertise on users’ health beliefs and usage intention toward health chatbots: Evidence from an online experiment. Digital Health, 8, 20552076221129718.

- Lowry, P. B., & Gaskin, J. (2014). Partial least squares (PLS) structural equation modeling (SEM) for building and testing behavioral causal theory: When to choose it and how to use it. IEEE Transactions on Professional Communication, 57(2), 123–146.

- Maes, P. (1990). Situated agents can have goals. Robotics and Autonomous Systems, 6(1–2), 49–70.

- Mayer, R. C., Davis, J. H., & Schoorman, F. D. (1995). An integrative model of organizational trust. Academy of Management Review, 20(3), 709–734.

- McAllister, D. J. (1995). Affect- and cognition-based trust as foundations for interpersonal cooperation in organizations. Academy of Management Journal, 38(1), 24–59.

- McKinsey Global Institute. (2023). The economic potential of generative AI: The next productivity frontier. McKinsey Global Institute.

- McKnight, D. H., & Chervany, N. L. (2001). What trust means in e-commerce customer relationships: An interdisciplinary conceptual typology. International Journal of Electronic Commerce, 6(2), 35–59.

- Morgan, R. M., & Hunt, S. D. (1994). The commitment-trust theory of relationship marketing. Journal of Marketing, 58(3), 20–38.

- Müller, J. P., & Pischel, M. (1994, August 8–12). Modelling interacting agents in dynamic environments. 11th European Conference on Artificial Intelligence (pp. 709–713), Amsterdam, The Netherlands.

- Nazir, S., Khadim, S., Asadullah, M. A., & Syed, N. (2023). Exploring the influence of artificial intelligence technology on consumer repurchase intention: The mediation and moderation approach. Technology in Society, 72, 102190.

- Nilsson, N. J. (1992). Toward agent programs with circuit semantics. Technical report. NASA.

- Oseni, A., Moustafa, N., Janicke, H., Liu, P., Tari, Z., & Vasilakos, A. (2021). Security and privacy for artificial intelligence: Opportunities and challenges. arXiv, arXiv:2102.04661.

- Patrizi, M., Šerić, M., & Vernuccio, M. (2024). Hey Google, I trust you! The consequences of brand anthropomorphism in voice-based artificial intelligence contexts. Journal of Retailing and Consumer Services, 77, 103659.

- Pelau, C., Dabija, D.-C., & Ene, I. (2021). What makes an AI device human-like? The role of interaction quality, empathy and perceived psychological anthropomorphic characteristics in the acceptance of artificial intelligence in the service industry. Computers in Human Behavior, 122, 106855.

- Podsakoff, P. M., MacKenzie, S. B., Lee, J.-Y., & Podsakoff, N. P. (2003). Common method biases in behavioral research: A critical review of the literature and recommended remedies. Journal of Applied Psychology, 88(5), 879.

- Roh, T., Park, B. I., & Xiao, S. S. (2023). Adoption of AI-enabled Robo-advisors in fintech: Simultaneous employment of UTAUT and the theory of reasoned action. Journal of Electronic Commerce Research, 24(1), 29–47.

- Ryu, Y., & Kim, S. (2015). Testing the heuristic/systematic information-processing model (HSM) on the perception of risk after the Fukushima nuclear accidents. Journal of Risk Research, 18(7), 840–859.

- Said, N., Potinteu, A. E., Brich, I., Buder, J., Schumm, H., & Huff, M. (2023). An artificial intelligence perspective: How knowledge and confidence shape risk and benefit perception. Computers in Human Behavior, 149, 107855.

- Sanbonmatsu, D. M., Strayer, D. L., Yu, Z., Biondi, F., & Cooper, J. M. (2018). Cognitive underpinnings of beliefs and confidence in beliefs about fully automated vehicles. Transportation Research Part F: Traffic Psychology and Behaviour, 55, 114–122.