Trust Formation, Error Impact, and Repair in Human–AI Financial Advisory: A Dynamic Behavioral Analysis

Abstract

1. Introduction

2. Literature Review and Hypotheses

2.1. Cognitive and Behavioral Foundations of AI Trust

2.2. Algorithm Appreciation vs. Algorithm Aversion: Reconciling Contradictory Behavioral Patterns

2.3. Single-Error Shock and Error Tolerance in Algorithmic Contexts

2.4. Explanatory Transparency as a Post-Error Trust Repair Mechanism

2.5. Individual Differences and the Role of Context: Financial Literacy as Focal Moderator

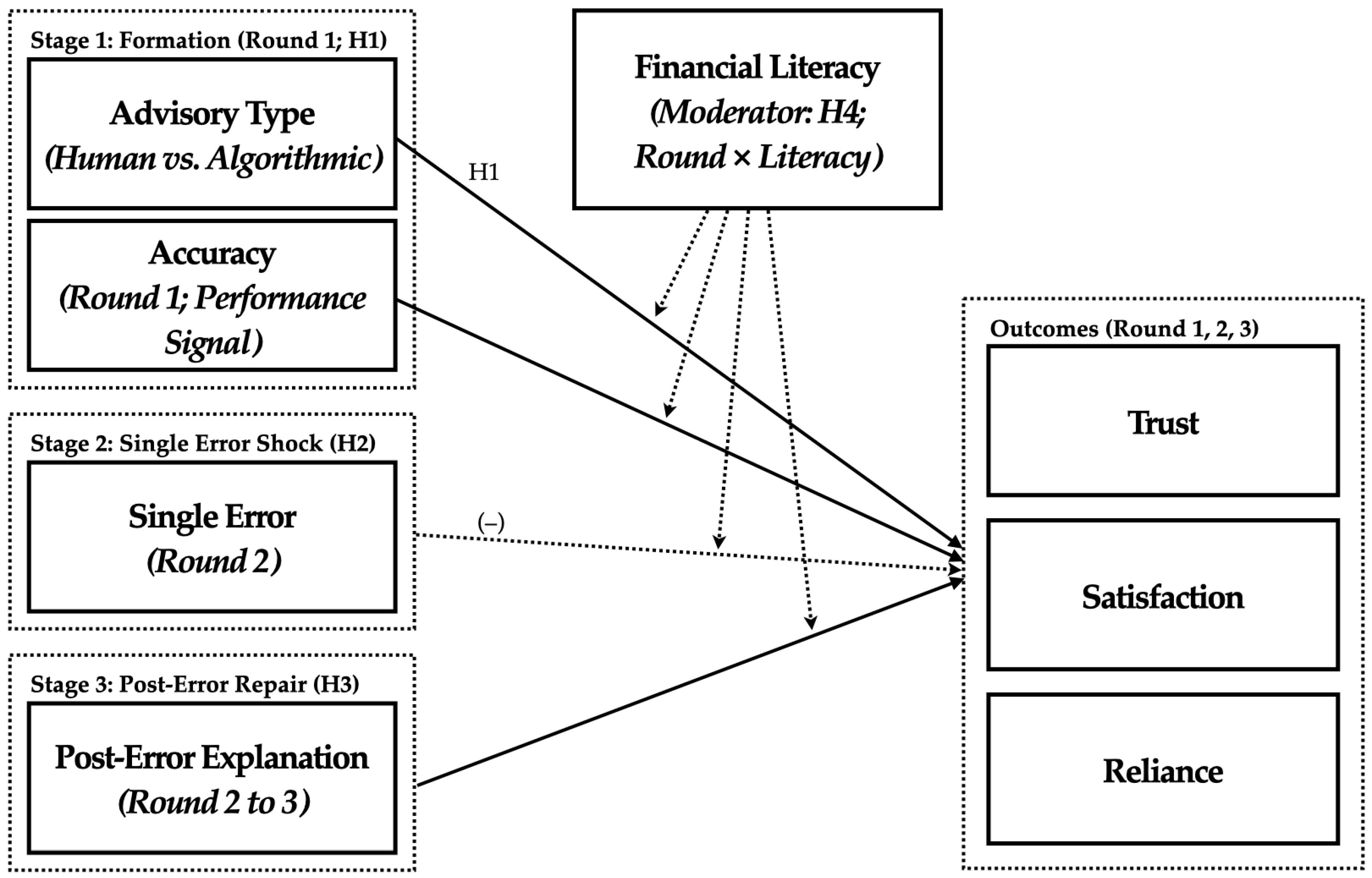

2.6. Conceptual Framework

3. Materials and Methods

3.1. Common Materials, Measures, and Procedures

3.2. Study 1: Participants, Design, Materials, and Procedure

3.3. Study 2: Participants, Design, Materials, and Procedure

3.4. Ethics, Data/Materials, and GenAI Use

4. Results

4.1. Study 1

4.2. Study 2

5. Discussion

5.1. Overview of Findings

5.2. Theoretical Contributions and Integration

5.2.1. Temporal Process Theory of AI Trust

5.2.2. Reconciliation of Algorithm Appreciation and Aversion

5.2.3. Cognitive Mechanisms and Individual Differences

5.3. Alternative Explanations and Robustness Considerations

5.4. Cross-Domain Implications and Limitations

5.5. Practical Implications for Design and Policy

5.6. Limitations and Future Research Directions

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


Panel A. Study 1

| Variable | Total Sample (N = 189) | Low-Risk/Human Expert (N = 43) | Low-Risk/AI Robo-Advisor (N = 50) | High-Risk/Human Expert (N = 48) | High-Risk/AI Robo-Advisor (N = 48) | χ²/F |
|---|---|---|---|---|---|---|
| Gender (%) | | | | | | |
| Male | 50.3 | 48.8 | 50.0 | 50.0 | 52.1 | 0.101 |
| Female | 49.7 | 51.2 | 50.0 | 50.0 | 47.9 | |
| Age (M, SD) | 39.96 (10.43) | 39.19 (11.25) | 40.24 (10.22) | 40.08 (10.55) | 40.23 (10.08) | 0.102 |
| Education (%) | | | | | | |
| High school graduate | 5.8 | 9.3 | 2.0 | 8.3 | 4.2 | 4.660 |
| College graduate | 82.0 | 79.1 | 90.0 | 77.1 | 81.3 | |
| Postgraduate degree | 12.2 | 11.6 | 8.0 | 14.6 | 14.6 | |
| Household income (KRW 10,000; M, SD) | 390.74 (232.72) | 409.33 (267.59) | 377.60 (197.33) | 340.52 (202.10) | 438.00 (256.59) | 1.563 |
| Risk attitude (M, SD) | 5.07 (1.75) | 4.60 (1.40) | 5.20 (1.91) | 5.38 (1.88) | 5.04 (1.70) | 1.610 |
| Financial skill (M, SD) | 4.69 (0.80) | 4.72 (0.82) | 4.66 (0.99) | 4.70 (0.70) | 4.69 (0.68) | 0.035 |
| Financial literacy (M, SD) | 4.36 (1.47) | 4.56 (1.18) | 4.26 (1.61) | 4.06 (1.59) | 4.58 (1.38) | 1.380 |
| Self-efficacy (M, SD) | 4.80 (0.88) | 4.72 (1.00) | 4.76 (0.96) | 4.77 (0.82) | 4.93 (0.75) | 0.487 |
| Investment independence (M, SD) | 3.32 (0.82) | 3.51 (0.74) | 3.30 (0.81) | 3.27 (0.74) | 3.23 (0.97) | 1.039 |
| Prospectus review (%) | | | | | | |
| Yes | 37.0 | 30.2 | 38.0 | 41.7 | 37.5 | 1.319 |
| No | 63.0 | 69.8 | 62.0 | 58.3 | 62.5 | |
| AI literacy (M, SD) | 4.58 (0.94) | 4.61 (0.72) | 4.50 (1.00) | 4.53 (1.02) | 4.68 (0.98) | 0.349 |
| Trust in AI (M, SD) | 3.97 (1.04) | 3.80 (1.10) | 4.08 (0.99) | 3.85 (1.00) | 4.12 (1.05) | 1.111 |

Panel B. Study 2

| Variable | Total Sample (N = 294) | Human/Accurate (N = 50) | Human/Inaccurate/+Explanation (N = 50) | Human/Inaccurate/−Explanation (N = 47) | AI advisor/Accurate (N = 50) | AI advisor/Inaccurate/+Explanation (N = 47) | AI advisor/Inaccurate/−Explanation (N = 50) | χ²/F |
|---|---|---|---|---|---|---|---|---|
| Gender (%) | | | | | | | | |
| Male | 49.7 | 50.0 | 50.0 | 48.9 | 50.0 | 48.9 | 50.0 | 0.029 |
| Female | 50.3 | 50.0 | 50.0 | 51.1 | 50.0 | 51.1 | 50.0 | |
| Age (M, SD) | 39.86 (10.40) | 40.28 (10.14) | 39.62 (10.54) | 40.23 (10.26) | 39.62 (10.60) | 39.98 (11.56) | 39.48 (9.80) | 0.053 |
| Education (%) | | | | | | | | |
| High school graduate | 10.9 | 0.0 | 6.0 | 17.0 | 16.0 | 10.6 | 16.0 | 16.059 |
| College graduate | 78.6 | 88.0 | 86.0 | 68.1 | 80.0 | 76.6 | 72.0 | |
| Postgraduate degree | 10.5 | 12.0 | 8.0 | 14.9 | 4.0 | 12.8 | 12.0 | |
| Household income (KRW 10,000; M, SD) | 409.31 (257.34) | 480.00 (324.51) | 438.80 (196.93) | 380.64 (184.90) | 385.74 (277.91) | 384.47 (203.30) | 383.00 (308.45) | 1.285 |
| Risk attitude (M, SD) | 5.38 (2.21) | 4.94 (2.32) | 5.38 (1.96) | 5.13 (2.21) | 5.78 (2.45) | 5.79 (2.07) | 5.30 (2.17) | 1.185 |
| Financial skill (M, SD) | 4.66 (0.89) | 4.79 (0.85) | 4.80 (0.90) | 4.67 (0.92) | 4.46 (1.01) | 4.66 (0.72) | 4.55 (0.89) | 1.135 |
| Financial literacy (M, SD) | 4.19 (1.50) | 4.28 (1.33) | 4.36 (1.37) | 4.21 (1.64) | 4.02 (1.72) | 4.06 (1.51) | 4.20 (1.43) | 0.360 |
| Self-efficacy (M, SD) | 4.77 (0.91) | 4.96 (0.88) | 4.68 (0.94) | 4.89 (0.89) | 4.53 (1.02) | 4.77 (0.82) | 4.79 (0.85) | 1.406 |
| Investment independence (M, SD) | 3.40 (0.78) | 3.16 (0.89) | 3.44 (0.76) | 3.47 (0.75) | 3.44 (0.76) | 3.43 (0.65) | 3.50 (0.81) | 1.259 |
| Prospectus review (%) | | | | | | | | |
| Yes | 38.1 | 40.0 | 36.0 | 34.0 | 34.0 | 46.8 | 38.0 | 2.366 |
| No | 61.9 | 60.0 | 64.0 | 66.0 | 66.0 | 53.2 | 62.0 | |
| AI literacy (M, SD) | 4.60 (0.96) | 4.65 (1.01) | 4.70 (1.02) | 4.62 (0.99) | 4.30 (1.06) | 4.84 (0.85) | 4.49 (0.76) | 1.856 |
| Trust in AI (M, SD) | 4.09 (1.02) | 4.34 (0.90) | 3.98 (1.08) | 3.98 (1.03) | 4.05 (1.03) | 4.19 (1.00) | 4.02 (1.09) | 0.977 |
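The χ²/F column reports balance checks across the experimental cells. The gender χ² values can be recomputed from the tables themselves with a plain Pearson statistic. This is a minimal sketch under two assumptions the source does not state: that the male/female counts equal each reported percentage times the cell N (for example, 48.8% of 43 gives 21 men), and that no continuity correction was applied. The helper `pearson_chi2` is hypothetical.

```python
def pearson_chi2(table):
    """Pearson chi-square statistic (no continuity correction) for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, df


# Assumed counts, back-calculated from the reported percentages and cell sizes.
# Rows: male, female. Columns: experimental cells in table order.
study1_gender = [
    [21, 25, 24, 25],  # male: 48.8% of 43, 50.0% of 50, 50.0% of 48, 52.1% of 48
    [22, 25, 24, 23],  # female
]
study2_gender = [
    [25, 25, 23, 25, 23, 25],  # male
    [25, 25, 24, 25, 24, 25],  # female
]

for label, counts in [("Study 1", study1_gender), ("Study 2", study2_gender)]:
    chi2, df = pearson_chi2(counts)
    print(f"{label}: chi2({df}) = {chi2:.3f}")
```

With these assumed counts the sketch gives χ²(3) ≈ 0.101 for Study 1 and χ²(5) ≈ 0.029 for Study 2, in line with the reported values, which suggests the balance tests were uncorrected Pearson χ² tests on raw counts.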

| Dependent Variable | Condition | Mean (SD) | F | p | η² |
|---|---|---|---|---|---|
| Trust | All/Human | 4.44 (0.89) | | | |
| | All/AI | 4.79 (0.82) | | | |
| | Low Risk/Human | 4.52 (0.87) | | | |
| | Low Risk/AI | 4.83 (0.94) | | | |
| | High Risk/Human | 4.36 (0.92) | | | |
| | High Risk/AI | 4.75 (0.69) | | | |
| | Main and interaction effects | | | | |
| | Advisory Type | | 7.820 ** | 0.006 | 0.041 |
| | Risk Level | | 0.859 | 0.355 | 0.005 |
| | Advisory Type × Risk Level | | 0.092 | 0.762 | 0.000 |
| Satisfaction | All/Human | 4.53 (1.15) | | | |
| | All/AI | 4.89 (0.96) | | | |
| | Low Risk/Human | 4.51 (1.18) | | | |
| | Low Risk/AI | 4.82 (0.94) | | | |
| | High Risk/Human | 4.54 (1.13) | | | |
| | High Risk/AI | 4.96 (0.99) | | | |
| | Main and interaction effects | | | | |
| | Advisory Type | | 5.507 * | 0.020 | 0.029 |
| | Risk Level | | 0.297 | 0.586 | 0.002 |
| | Advisory Type × Risk Level | | 0.123 | 0.726 | 0.001 |
| Reliance | All/Human | 4.12 (1.13) | | | |
| | All/AI | 4.63 (0.92) | | | |
| | Low Risk/Human | 3.96 (1.12) | | | |
| | Low Risk/AI | 4.67 (0.90) | | | |
| | High Risk/Human | 4.27 (1.13) | | | |
| | High Risk/AI | 4.59 (0.96) | | | |
| | Main and interaction effects | | | | |
| | Advisory Type | | 11.726 *** | <0.001 | 0.060 |
| | Risk Level | | 0.607 | 0.437 | 0.003 |
| | Advisory Type × Risk Level | | 1.663 | 0.199 | 0.009 |

Note: * p < 0.05, ** p < 0.01, *** p < 0.001.
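Two parts of the Study 1 table can be reproduced from its own numbers. The pooled "All" rows are consistent with N-weighted averages of the low- and high-risk cell means (for trust in the human condition, (43 × 4.52 + 48 × 4.36) / 91 ≈ 4.44), and the p column is consistent with an F(1, 185) reference distribution, that is, 189 participants minus the four cells of a between-subjects 2 × 2 design without covariates. Both readings are inferences from the numbers, not documented procedures. The sketch below checks them with the Python standard library only; `pooled_mean`, `regularized_beta`, and `f_survival` are hypothetical helpers, and the incomplete beta function is evaluated with the textbook continued-fraction (modified Lentz) method rather than a statistics package.

```python
import math


def pooled_mean(cells):
    """N-weighted average of (n, mean) pairs, e.g. the two risk-level cells."""
    total_n = sum(n for n, _ in cells)
    return sum(n * mean for n, mean in cells) / total_n


def _beta_continued_fraction(a, b, x, max_iter=300, tol=3e-14):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    c = 1.0
    d = 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        even = m * (b - m) * x / ((a - 1.0 + m2) * (a + m2))
        odd = -(a + m) * (a + b + m) * x / ((a + m2) * (a + 1.0 + m2))
        for coeff in (even, odd):
            d = 1.0 + coeff * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + coeff / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < tol:  # convergence checked on the odd step
            break
    return h


def regularized_beta(x, a, b):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    # Use the symmetry I_x(a, b) = 1 - I_{1-x}(b, a) where the fraction converges faster.
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_continued_fraction(a, b, x) / a
    return 1.0 - front * _beta_continued_fraction(b, a, 1.0 - x) / b


def f_survival(f_stat, df1, df2):
    """Upper-tail p value P(F > f_stat) for an F(df1, df2) distribution."""
    return regularized_beta(df2 / (df2 + df1 * f_stat), df2 / 2.0, df1 / 2.0)


# Trust means from the Study 1 table: (n, mean) for the low-risk and high-risk cells.
print(f"All/Human: {pooled_mean([(43, 4.52), (48, 4.36)]):.2f}")
print(f"All/AI:    {pooled_mean([(50, 4.83), (48, 4.75)]):.2f}")

DF_ERROR = 185  # assumed: 189 participants minus 4 between-subjects cells
for effect, f_stat in [("Advisory Type", 7.820), ("Risk Level", 0.859),
                       ("Advisory Type x Risk Level", 0.092)]:
    p = f_survival(f_stat, 1, DF_ERROR)
    print(f"{effect}: F(1, {DF_ERROR}) = {f_stat:.3f}, p = {p:.3f}")
```

Under these assumptions the pooled trust means come out at 4.44 and 4.79, and the three p values land close to the reported 0.006, 0.355, and 0.762. In practice `scipy.stats.f.sf` returns the same upper-tail probability; the hand-rolled version only avoids a dependency.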

Panel A. Round × Accuracy

| Effect | Trust df | Trust F | Trust partial η² | Satisfaction df | Satisfaction F | Satisfaction partial η² | Reliance df | Reliance F | Reliance partial η² |
|---|---|---|---|---|---|---|---|---|---|
| Between-Subjects Effects | | | | | | | | | |
| Gender (male = 0) | 1 | 0.001 | 0.000 | 1 | 0.793 | 0.003 | 1 | 0.515 | 0.002 |
| Age | 1 | 1.557 | 0.006 | 1 | 0.111 | 0.000 | 1 | 0.299 | 0.001 |
| Monthly income (KRW 10,000) | 1 | 0.288 | 0.001 | 1 | 0.238 | 0.001 | 1 | 1.121 | 0.004 |
| High school (university = 0) | 1 | 0.338 | 0.001 | 1 | 0.375 | 0.001 | 1 | 0.268 | 0.001 |
| Graduate (university = 0) | 1 | 1.510 | 0.005 | 1 | 1.682 | 0.006 | 1 | 1.039 | 0.004 |
| Risk attitude | 1 | 0.051 | 0.000 | 1 | 0.054 | 0.000 | 1 | 0.018 | 0.000 |
| Financial skill | 1 | 0.009 | 0.000 | 1 | 0.179 | 0.001 | 1 | 0.126 | 0.000 |
| Financial literacy | 1 | 0.869 | 0.003 | 1 | 0.575 | 0.002 | 1 | 2.421 | 0.009 |
| Self-efficacy | 1 | 1.518 | 0.005 | 1 | 0.260 | 0.001 | 1 | 0.000 | 0.000 |
| Investment independence | 1 | 1.237 | 0.004 | 1 | 0.249 | 0.001 | 1 | 0.391 | 0.001 |
| Prospectus review (don’t = 0) | 1 | 0.020 | 0.000 | 1 | 0.550 | 0.002 | 1 | 1.016 | 0.004 |
| AI literacy | 1 | 0.030 | 0.000 | 1 | 0.094 | 0.000 | 1 | 0.000 | 0.000 |
| Trust in AI | 1 | 45.558 *** | 0.141 | 1 | 32.136 *** | 0.104 | 1 | 47.465 *** | 0.146 |
| Advisory Type | 1 | 5.650 * | 0.020 | 1 | 4.236 * | 0.015 | 1 | 3.507 | 0.013 |
| Accuracy | 1 | 14.035 *** | 0.048 | 1 | 10.081 ** | 0.035 | 1 | 6.568 * | 0.023 |
| Advisory Type × Accuracy | 1 | 0.368 | 0.001 | 1 | 0.018 | 0.000 | 1 | 0.027 | 0.000 |
| Within-Subjects Effects | | | | | | | | | |
| Round (Time Effect) | 1.916 | 0.181 | 0.001 | 1.928 | 0.701 | 0.003 | 1.910 | 0.132 | 0.000 |
| Round × Gender (male = 0) | 1.916 | 0.532 | 0.002 | 1.928 | 0.002 | 0.000 | 1.910 | 0.096 | 0.000 |
| Round × Age | 1.916 | 1.329 | 0.005 | 1.928 | 1.160 | 0.004 | 1.910 | 1.757 | 0.006 |
| Round × Monthly income (KRW 10,000) | 1.916 | 1.710 | 0.006 | 1.928 | 0.693 | 0.002 | 1.910 | 1.586 | 0.006 |
| Round × High school (university = 0) | 1.916 | 0.614 | 0.002 | 1.928 | 1.969 | 0.007 | 1.910 | 1.325 | 0.005 |
| Round × Graduate (university = 0) | 1.916 | 1.167 | 0.004 | 1.928 | 0.316 | 0.001 | 1.910 | 0.575 | 0.002 |
| Round × Risk attitude | 1.916 | 1.921 | 0.007 | 1.928 | 3.552 * | 0.013 | 1.910 | 0.887 | 0.003 |
| Round × Financial skill | 1.916 | 1.122 | 0.004 | 1.928 | 1.598 | 0.006 | 1.910 | 1.181 | 0.004 |
| Round × Financial literacy | 1.916 | 4.126 * | 0.015 | 1.928 | 2.100 | 0.008 | 1.910 | 3.527 * | 0.013 |
| Round × Self-efficacy | 1.916 | 1.005 | 0.004 | 1.928 | 0.978 | 0.004 | 1.910 | 0.877 | 0.003 |
| Round × Investment independence | 1.916 | 4.777 * | 0.017 | 1.928 | 6.376 ** | 0.022 | 1.910 | 4.293 * | 0.015 |
| Round × Prospectus review (don’t = 0) | 1.916 | 2.742 | 0.010 | 1.928 | 2.141 | 0.008 | 1.910 | 0.648 | 0.002 |
| Round × AI literacy | 1.916 | 0.498 | 0.002 | 1.928 | 0.046 | 0.000 | 1.910 | 0.847 | 0.003 |
| Round × Trust in AI | 1.916 | 1.612 | 0.006 | 1.928 | 0.675 | 0.002 | 1.910 | 0.541 | 0.002 |
| Round × Advisory Type | 1.916 | 0.711 | 0.003 | 1.928 | 1.210 | 0.004 | 1.910 | 1.096 | 0.004 |
| Round × Accuracy | 1.916 | 45.327 *** | 0.141 | 1.928 | 33.015 *** | 0.106 | 1.910 | 36.600 *** | 0.117 |
| Round × Advisory Type × Accuracy | 1.916 | 1.661 | 0.006 | 1.928 | 1.189 | 0.004 | 1.910 | 0.731 | 0.003 |

Panel B. Round × Explanation

| Effect | Trust df | Trust F | Trust partial η² | Satisfaction df | Satisfaction F | Satisfaction partial η² | Reliance df | Reliance F | Reliance partial η² |
|---|---|---|---|---|---|---|---|---|---|
| Between-Subjects Effects | | | | | | | | | |
| Gender (male = 0) | 1 | 0.007 | 0.000 | 1 | 1.690 | 0.009 | 1 | 1.006 | 0.006 |
| Age | 1 | 1.853 | 0.010 | 1 | 0.355 | 0.002 | 1 | 0.228 | 0.001 |
| Monthly income (KRW 10,000) | 1 | 0.137 | 0.001 | 1 | 0.007 | 0.000 | 1 | 0.397 | 0.002 |
| High school (university = 0) | 1 | 0.086 | 0.000 | 1 | 0.028 | 0.000 | 1 | 0.042 | 0.000 |
| Graduate (university = 0) | 1 | 5.317 * | 0.029 | 1 | 3.275 | 0.018 | 1 | 4.858 * | 0.027 |
| Risk attitude | 1 | 0.044 | 0.000 | 1 | 0.000 | 0.000 | 1 | 0.030 | 0.000 |
| Financial skill | 1 | 0.258 | 0.001 | 1 | 0.001 | 0.000 | 1 | 0.063 | 0.000 |
| Financial literacy | 1 | 1.221 | 0.007 | 1 | 0.511 | 0.003 | 1 | 1.711 | 0.010 |
| Self-efficacy | 1 | 1.247 | 0.007 | 1 | 0.000 | 0.000 | 1 | 0.191 | 0.001 |
| Investment independence | 1 | 1.881 | 0.011 | 1 | 0.136 | 0.001 | 1 | 0.018 | 0.000 |
| Prospectus review (don’t = 0) | 1 | 0.076 | 0.000 | 1 | 0.019 | 0.000 | 1 | 0.313 | 0.002 |
| AI literacy | 1 | 0.151 | 0.001 | 1 | 0.010 | 0.000 | 1 | 0.039 | 0.000 |
| Trust in AI | 1 | 22.085 *** | 0.111 | 1 | 15.230 *** | 0.079 | 1 | 31.144 *** | 0.150 |
| Advisory Type | 1 | 3.030 | 0.017 | 1 | 4.471 * | 0.025 | 1 | 2.913 | 0.016 |
| Explanation | 1 | 2.505 | 0.014 | 1 | 1.569 | 0.009 | 1 | 1.580 | 0.009 |
| Advisory Type × Explanation | 1 | 0.222 | 0.001 | 1 | 0.019 | 0.000 | 1 | 0.194 | 0.001 |
| Within-Subjects Effects | | | | | | | | | |
| Round (Time Effect) | 1.869 | 0.721 | 0.004 | 1.839 | 1.555 | 0.009 | 1.805 | 0.398 | 0.002 |
| Round × Gender (male = 0) | 1.869 | 1.264 | 0.007 | 1.839 | 0.214 | 0.001 | 1.805 | 0.154 | 0.001 |
| Round × Age | 1.869 | 0.419 | 0.002 | 1.839 | 0.007 | 0.000 | 1.805 | 0.595 | 0.003 |
| Round × Monthly income (KRW 10,000) | 1.869 | 0.747 | 0.004 | 1.839 | 0.454 | 0.003 | 1.805 | 0.682 | 0.004 |
| Round × High school (university = 0) | 1.869 | 0.081 | 0.000 | 1.839 | 1.950 | 0.011 | 1.805 | 1.541 | 0.009 |
| Round × Graduate (university = 0) | 1.869 | 0.864 | 0.005 | 1.839 | 0.348 | 0.002 | 1.805 | 0.365 | 0.002 |
| Round × Risk attitude | 1.869 | 2.931 | 0.016 | 1.839 | 2.152 | 0.012 | 1.805 | 1.194 | 0.007 |
| Round × Financial skill | 1.869 | 2.423 | 0.014 | 1.839 | 1.879 | 0.011 | 1.805 | 2.196 | 0.012 |
| Round × Financial literacy | 1.869 | 4.048 * | 0.022 | 1.839 | 2.674 | 0.015 | 1.805 | 3.638 * | 0.020 |
| Round × Self-efficacy | 1.869 | 1.092 | 0.006 | 1.839 | 0.076 | 0.000 | 1.805 | 0.141 | 0.001 |
| Round × Investment independence | 1.869 | 6.085 ** | 0.033 | 1.839 | 6.329 ** | 0.035 | 1.805 | 3.566 * | 0.020 |
| Round × Prospectus review (don’t = 0) | 1.869 | 3.526 * | 0.020 | 1.839 | 3.073 | 0.017 | 1.805 | 1.054 | 0.006 |
| Round × AI literacy | 1.869 | 1.277 | 0.007 | 1.839 | 1.933 | 0.011 | 1.805 | 1.119 | 0.006 |
| Round × Trust in AI | 1.869 | 2.877 | 0.016 | 1.839 | 0.873 | 0.005 | 1.805 | 0.162 | 0.001 |
| Round × Advisory Type | 1.869 | 1.378 | 0.008 | 1.839 | 1.952 | 0.011 | 1.805 | 0.976 | 0.005 |
| Round × Explanation | 1.869 | 16.573 *** | 0.086 | 1.839 | 6.709 ** | 0.037 | 1.805 | 4.079 * | 0.023 |
| Round × Advisory Type × Explanation | 1.869 | 0.836 | 0.005 | 1.839 | 2.221 | 0.012 | 1.805 | 3.533 * | 0.020 |

Note: * p < 0.05, ** p < 0.01, *** p < 0.001.
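The partial η² values in both panels can be recovered from the F values alone, which makes the implied error degrees of freedom explicit. For an effect with df_effect and error df_error, partial η² = F·df_effect / (F·df_effect + df_error). The sketch below assumes an error df of N minus 17 parameters (intercept, the 13 covariates listed, the two factors, and their interaction), with N = 294 in Panel A and N = 194 in Panel B, the latter being the four inaccurate-advice cells (50 + 47 + 47 + 50). These sample sizes and the parameter count are inferred from the reported statistics, not stated in the table; `partial_eta_squared` is a hypothetical helper.

```python
def partial_eta_squared(f_stat, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_stat * df_effect) / (f_stat * df_effect + df_error)


# Assumed model size: intercept + 13 covariates + 2 factors + 1 interaction.
N_PARAMS = 17
DF_ERR_A = 294 - N_PARAMS  # Panel A: all Study 2 participants (assumed N = 294)
DF_ERR_B = 194 - N_PARAMS  # Panel B: inaccurate-advice cells only (assumed N = 194)

# Between-subjects row, df_effect = 1: Trust in AI on trust (Panel A).
trust_in_ai_a = partial_eta_squared(45.558, 1, DF_ERR_A)

# Within-subjects rows: a sphericity correction scales the effect df and the
# error df by the same epsilon. Every within-subjects row reports the same
# corrected df as Round, so the error df is that value times the between-subjects
# error df, and the correction cancels out of the ratio.
round_x_accuracy = partial_eta_squared(45.327, 1.916, 1.916 * DF_ERR_A)
round_x_explanation = partial_eta_squared(16.573, 1.869, 1.869 * DF_ERR_B)

for label, value in [("Trust in AI, Panel A (trust)", trust_in_ai_a),
                     ("Round x Accuracy, Panel A (trust)", round_x_accuracy),
                     ("Round x Explanation, Panel B (trust)", round_x_explanation)]:
    print(f"{label}: partial eta^2 = {value:.3f}")
```

These come out at 0.141, 0.141, and 0.086, matching the reported trust columns. The same assumed error df of 277 and 177 also reproduce other entries, such as 0.117 for Round × Accuracy on reliance and 0.150 for Trust in AI on reliance in Panel B.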

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Han, J.; Ko, D. Trust Formation, Error Impact, and Repair in Human–AI Financial Advisory: A Dynamic Behavioral Analysis. Behav. Sci. 2025, 15, 1370. https://doi.org/10.3390/bs15101370
