Predicting Behaviour Patterns in Online and PDF Magazines with AI Eye-Tracking

Abstract

1. Introduction

- Eye-tracking data can provide valuable insights into readers’ natural gaze patterns, indicating where their attention is focused and how they navigate the content. By utilising this information, designers can optimise the layout of college magazines by placing critical information, headings, and multimedia elements in areas where users are most likely to look first, resulting in a more intuitive and user-friendly reading experience [5].

- Understanding the hierarchy of visual elements is crucial for capturing and maintaining the user’s attention. Eye tracking can help identify the elements that users perceive as more prominent or essential, enabling designers to adjust the visual hierarchy accordingly to guide readers through the content in a way that aligns with the intended narrative or informational flow [9].

- Eye-tracking technology can provide insights into how users consume textual content. By analysing reading patterns, designers can enhance the readability of college magazines. This includes optimising font sizes, line spacing, and paragraph lengths to ensure a comfortable reading experience. It also helps identify optimal placements for pull quotes, captions, and other text elements [11].

- Incorporating multimedia elements, such as visuals, videos, and interactive features, is common in college publications. Eye-tracking data can offer valuable insights into how users interact with these components. Designers can optimise the placement and visibility of multimedia elements to enhance the user experience and provide engaging, informative content that caters to user preferences [12].

- Eye tracking allows designers to differentiate between user behaviour on online platforms and in PDF formats. This information is crucial for effectively tailoring the design of each medium. For instance, online readers may display distinct gaze patterns owing to interactive elements, while PDF readers may follow a more traditional reading flow. By understanding these differences, designers can create seamless and enjoyable experiences across various formats [13].

- Responsive design is essential for delivering an optimal user experience across different devices and screen sizes. Eye-tracking data can provide valuable insights into how users interact with content on various devices. This information can be used to create responsive layouts that adapt to different screen sizes, ensuring that the college magazine remains visually appealing and user-friendly on both desktop and mobile devices [13].

- Eye tracking enables usability testing by offering real-time information about user interactions with prototypes. This tool allows designers to observe user gaze patterns and identify potential usability issues. Through this iterative design process, continuous improvement based on user feedback is possible, resulting in a more refined and user-centric college magazine [9].

2. Literature Review

2.1. Consumer Behaviour-Prediction Software

2.2. Introduction to AI Eye Tracking in Consumer-Behaviour Prediction

2.3. Attention Patterns in Online vs. PDF Formats

2.4. Related Work

3. Materials and Methods

4. Results

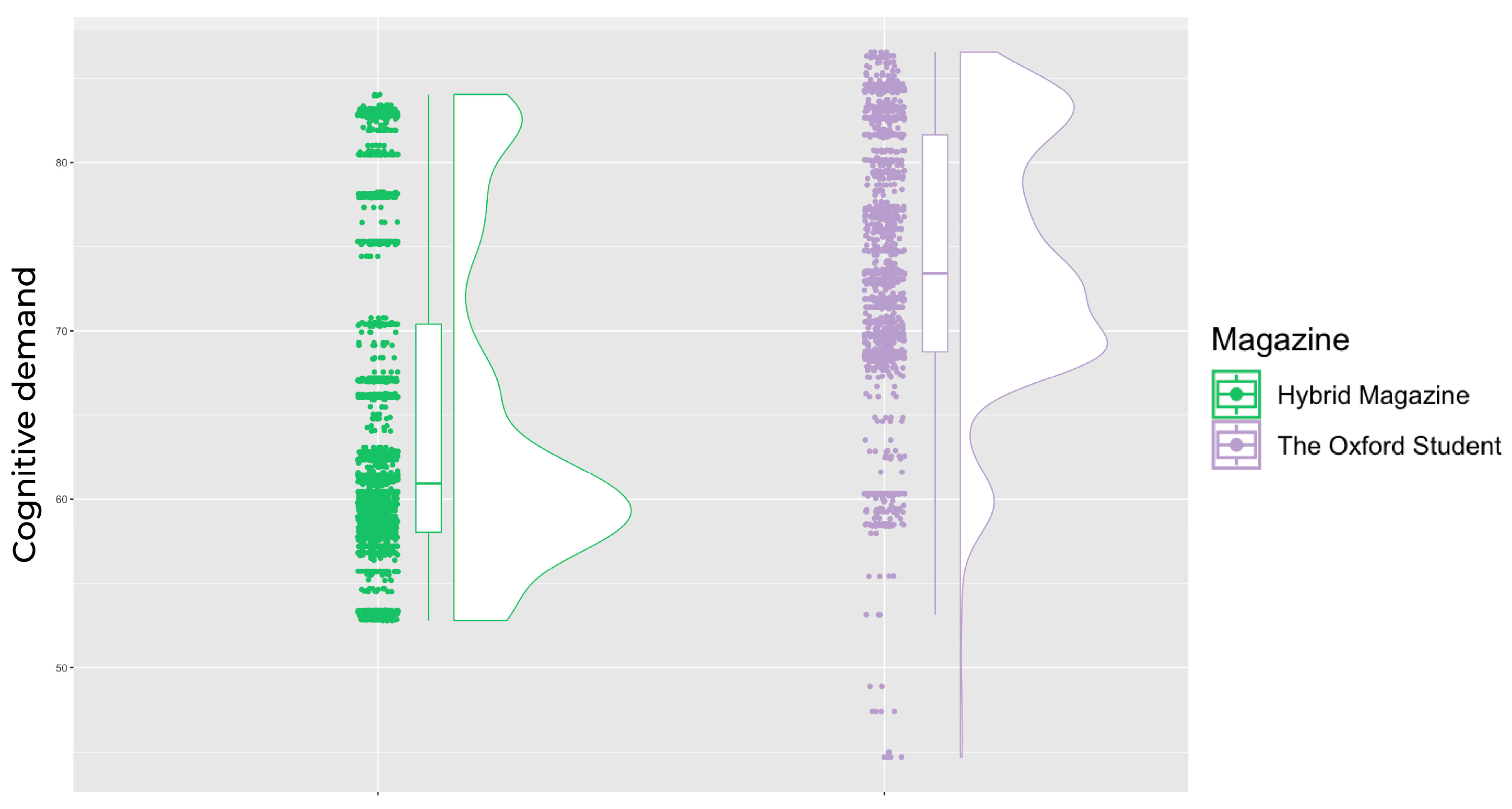

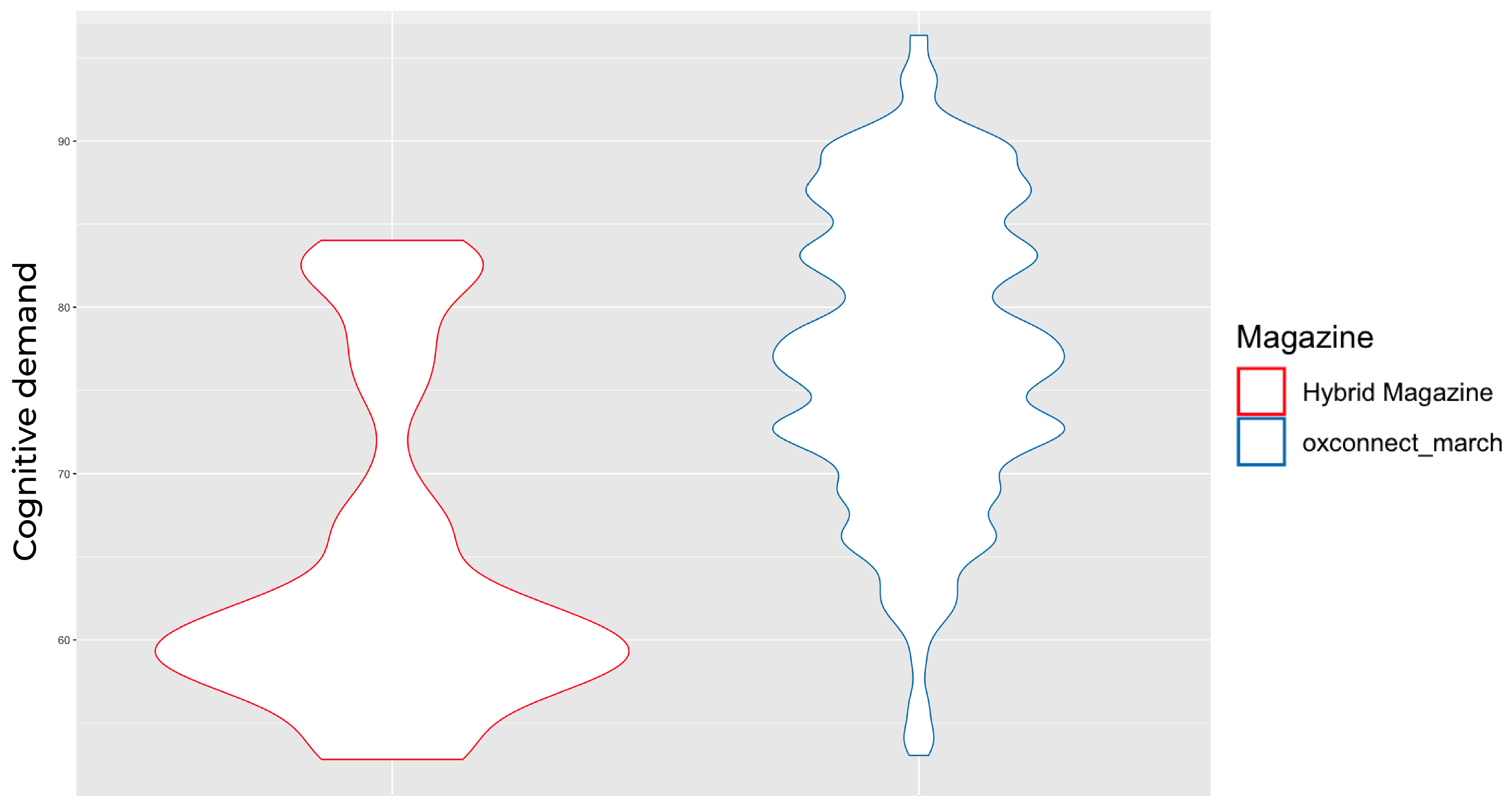

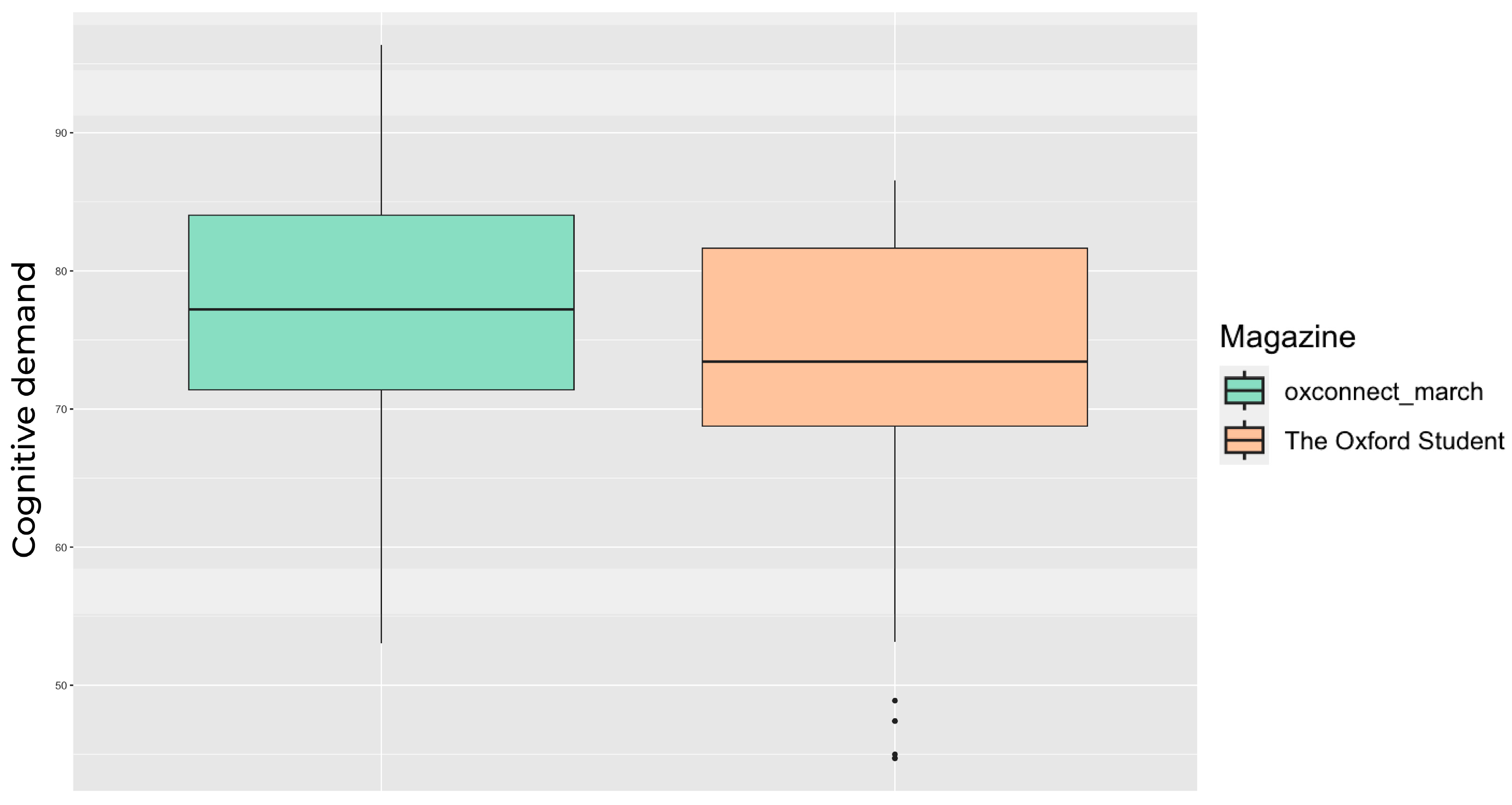

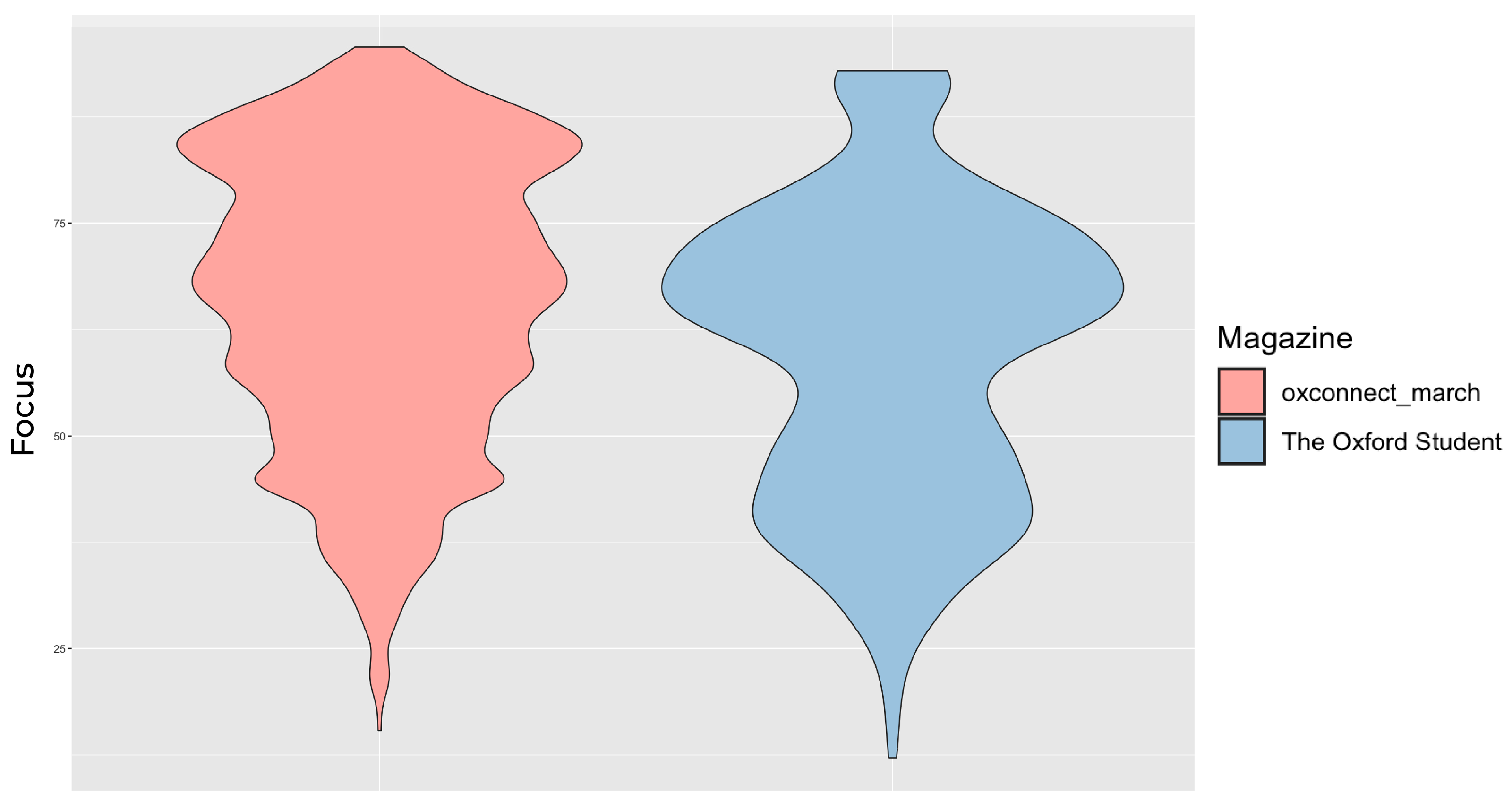

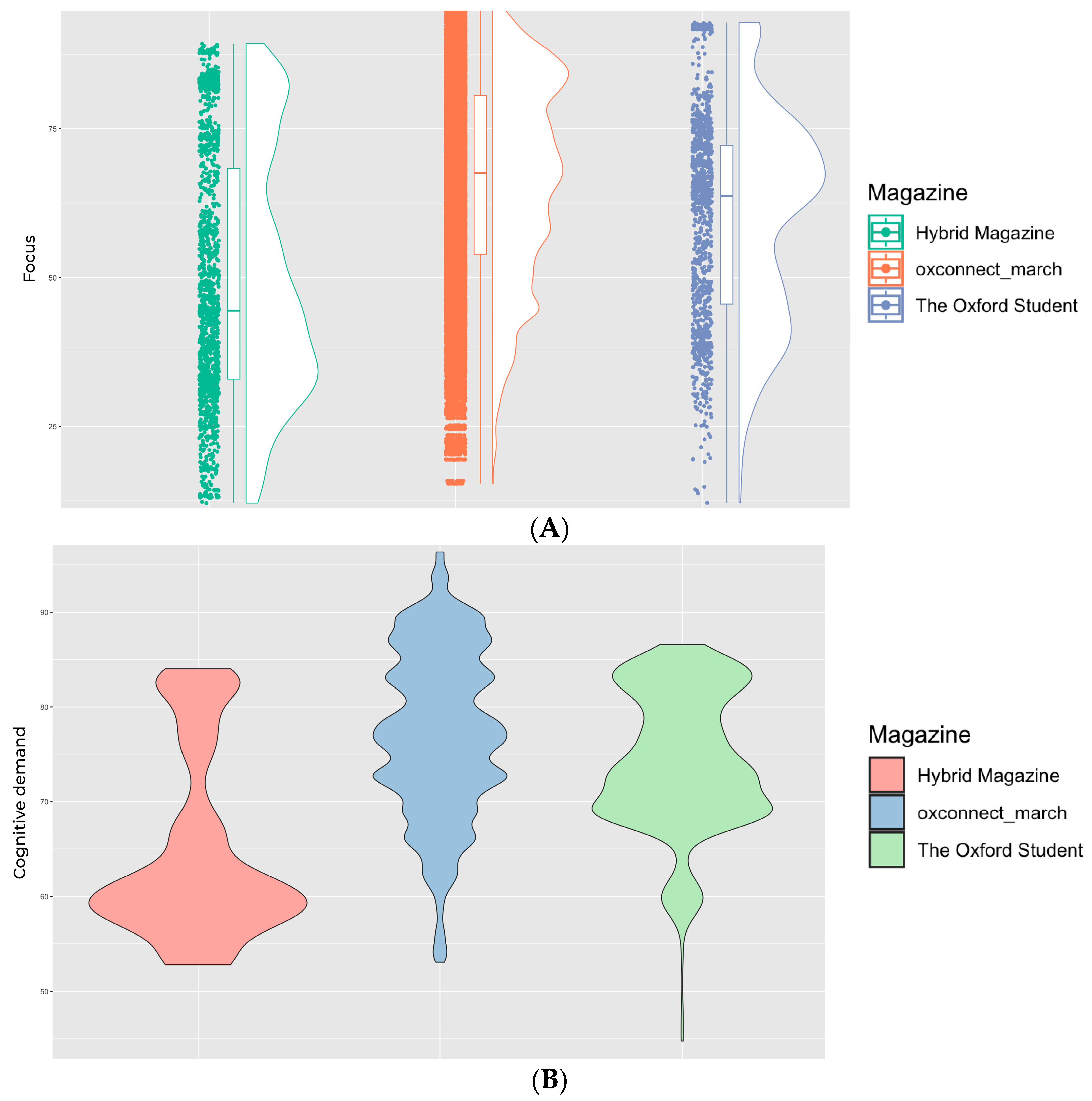

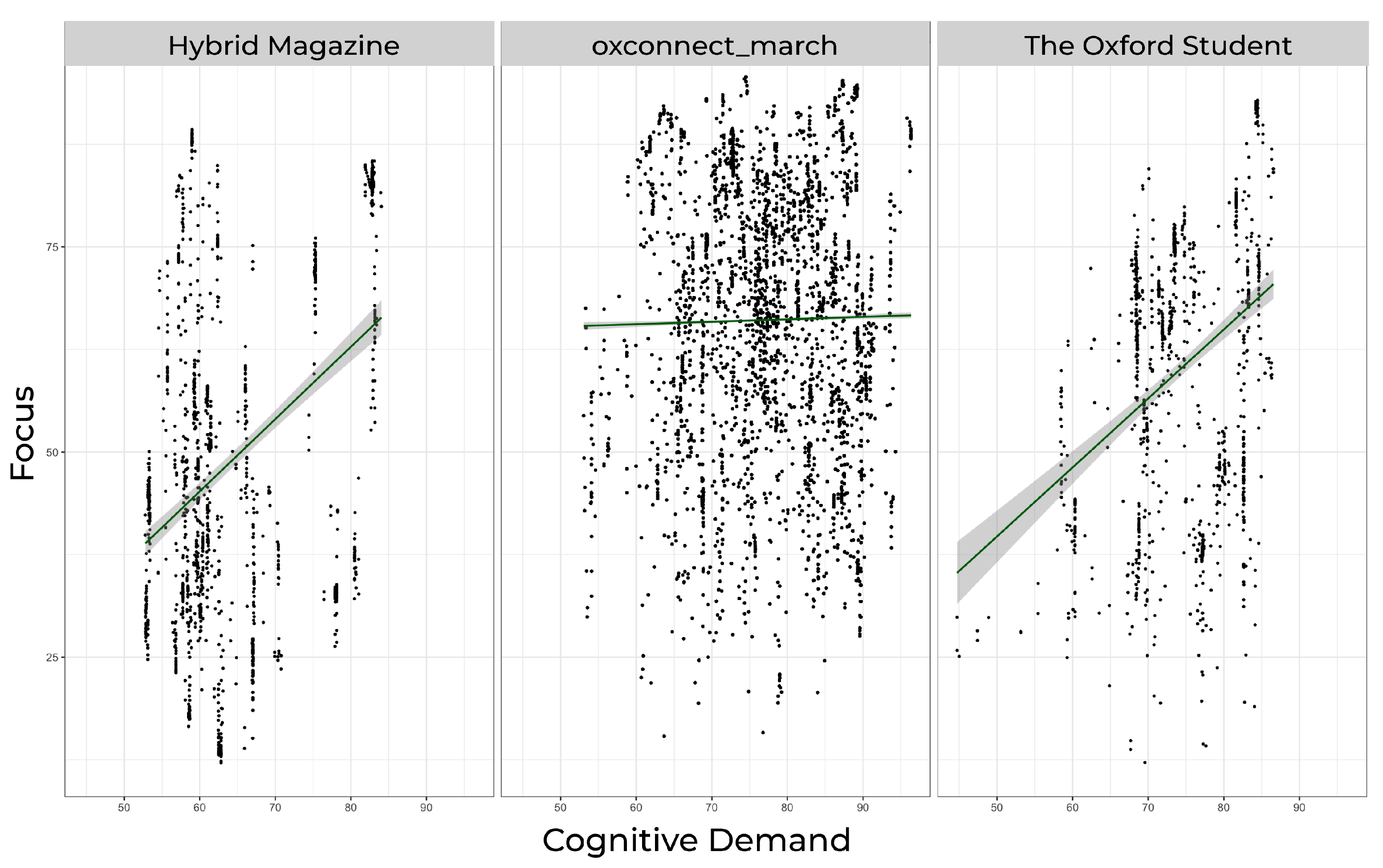

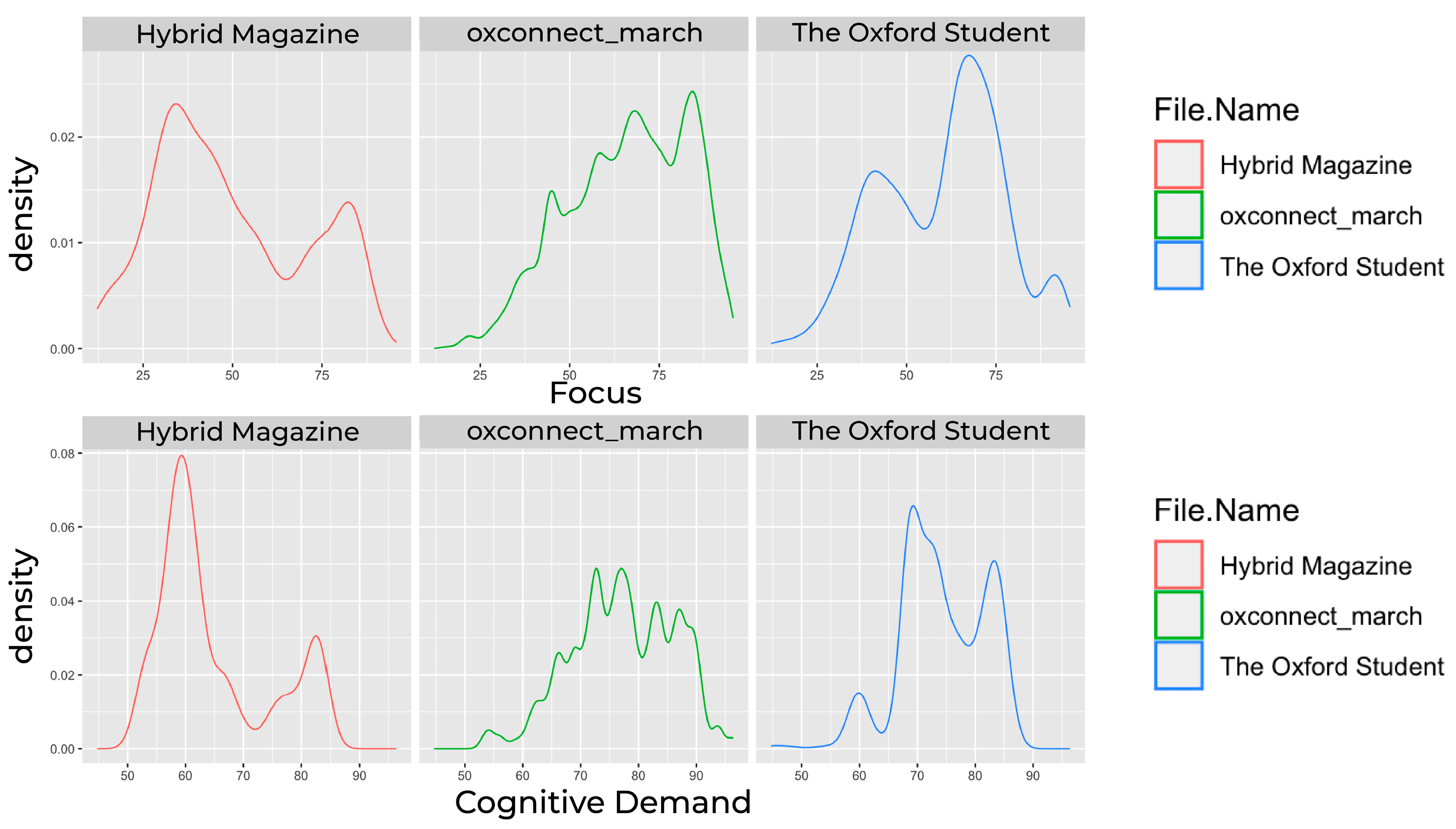

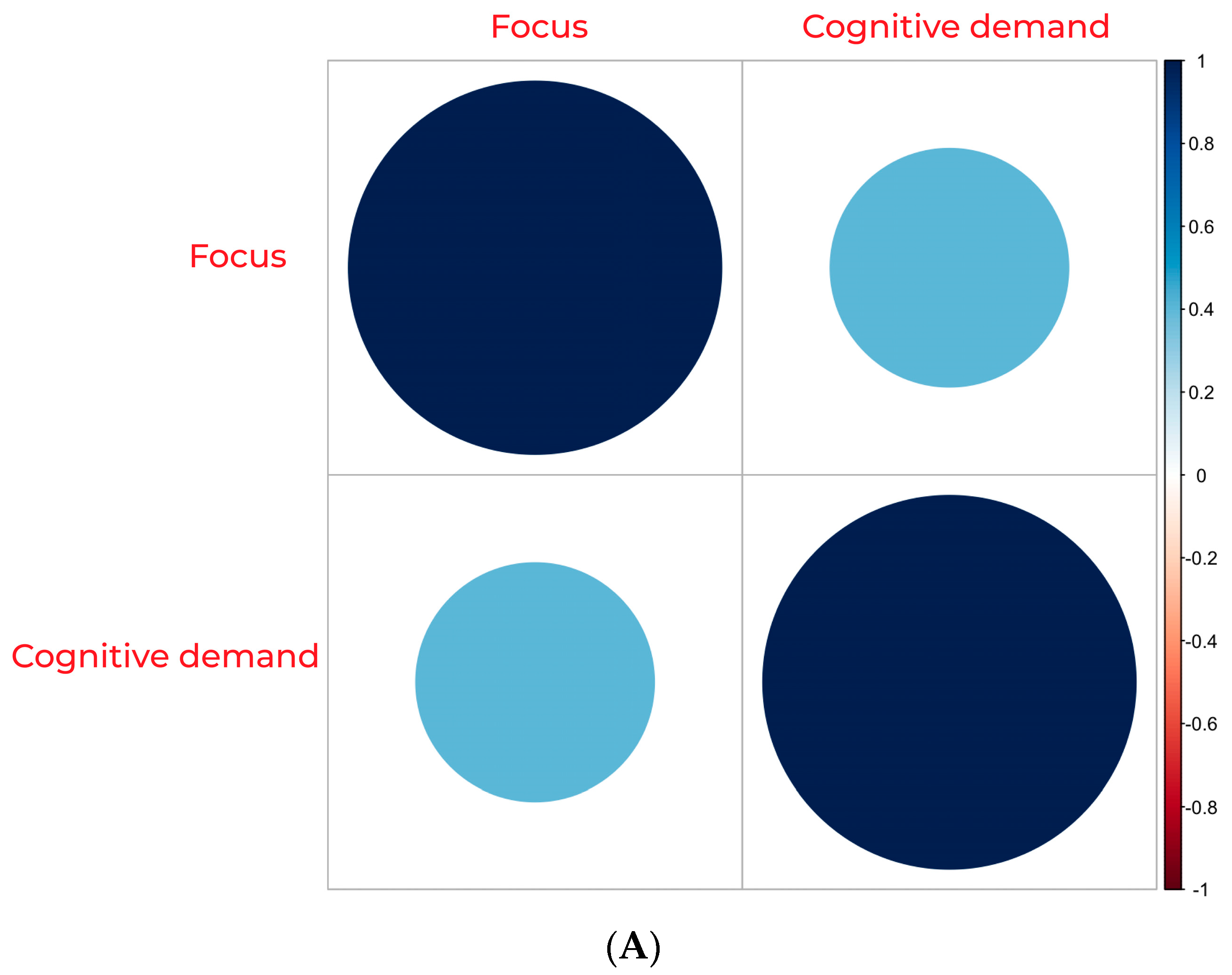

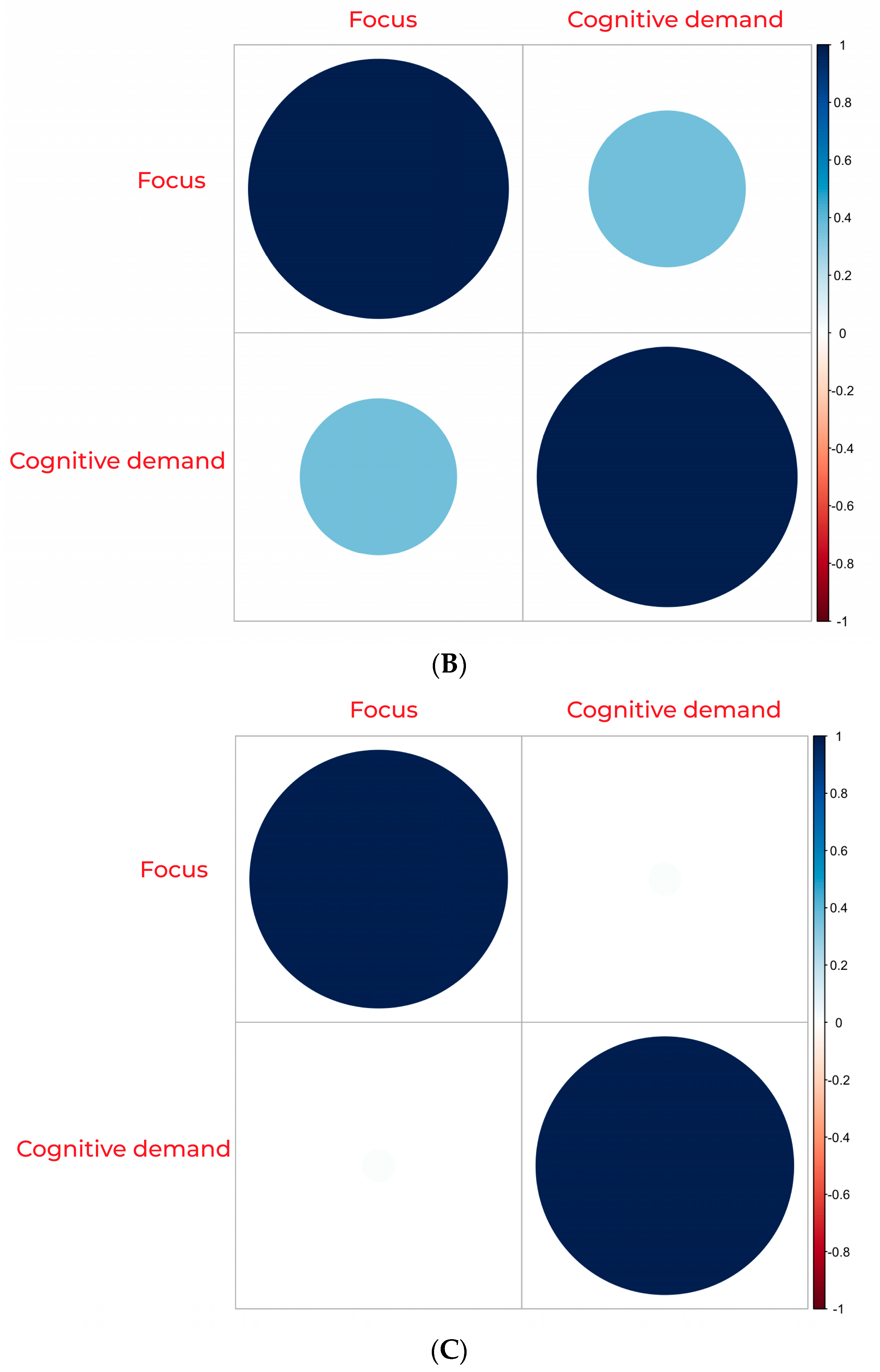

4.1. Focus and Cognitive Demand

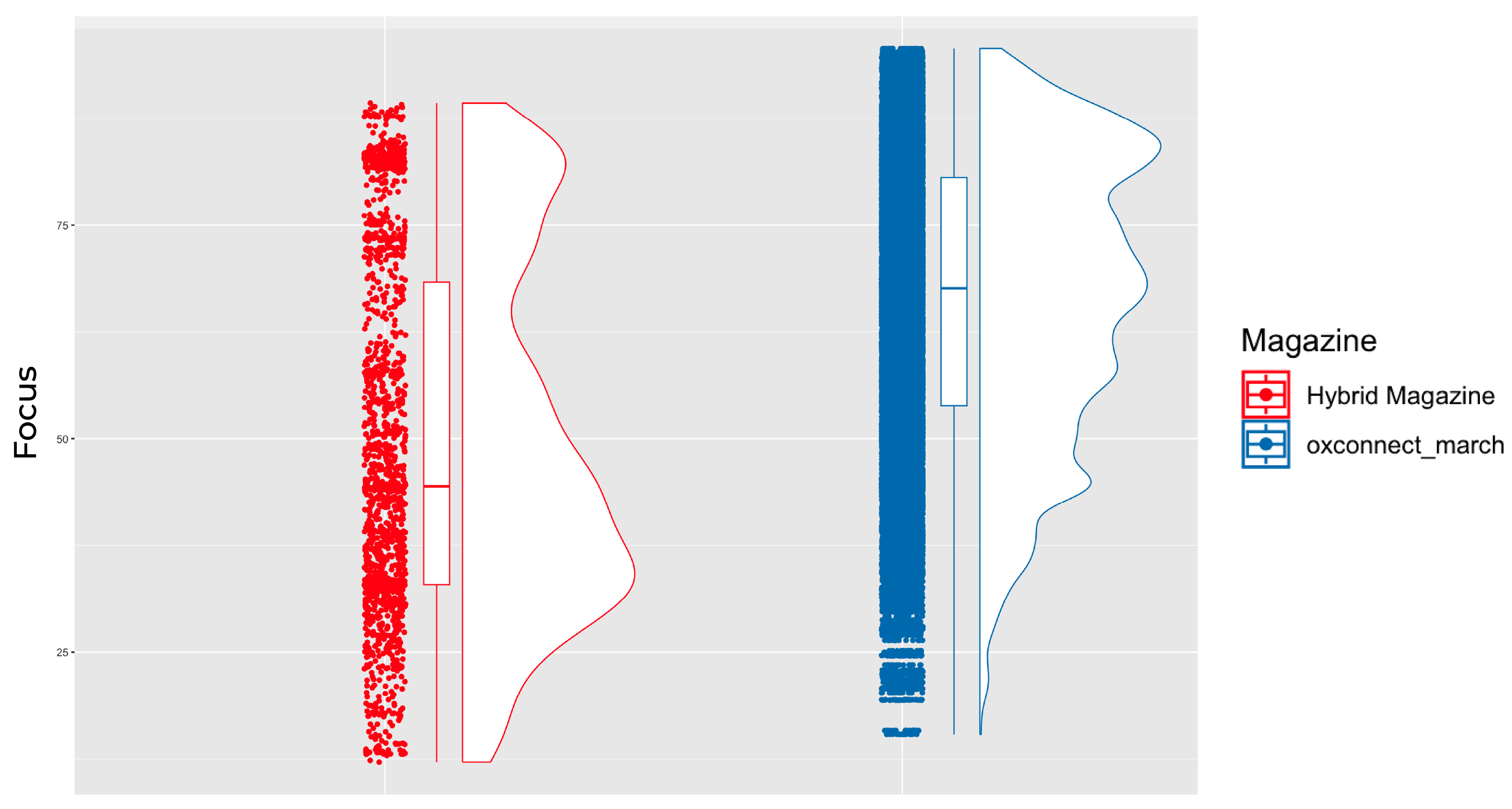

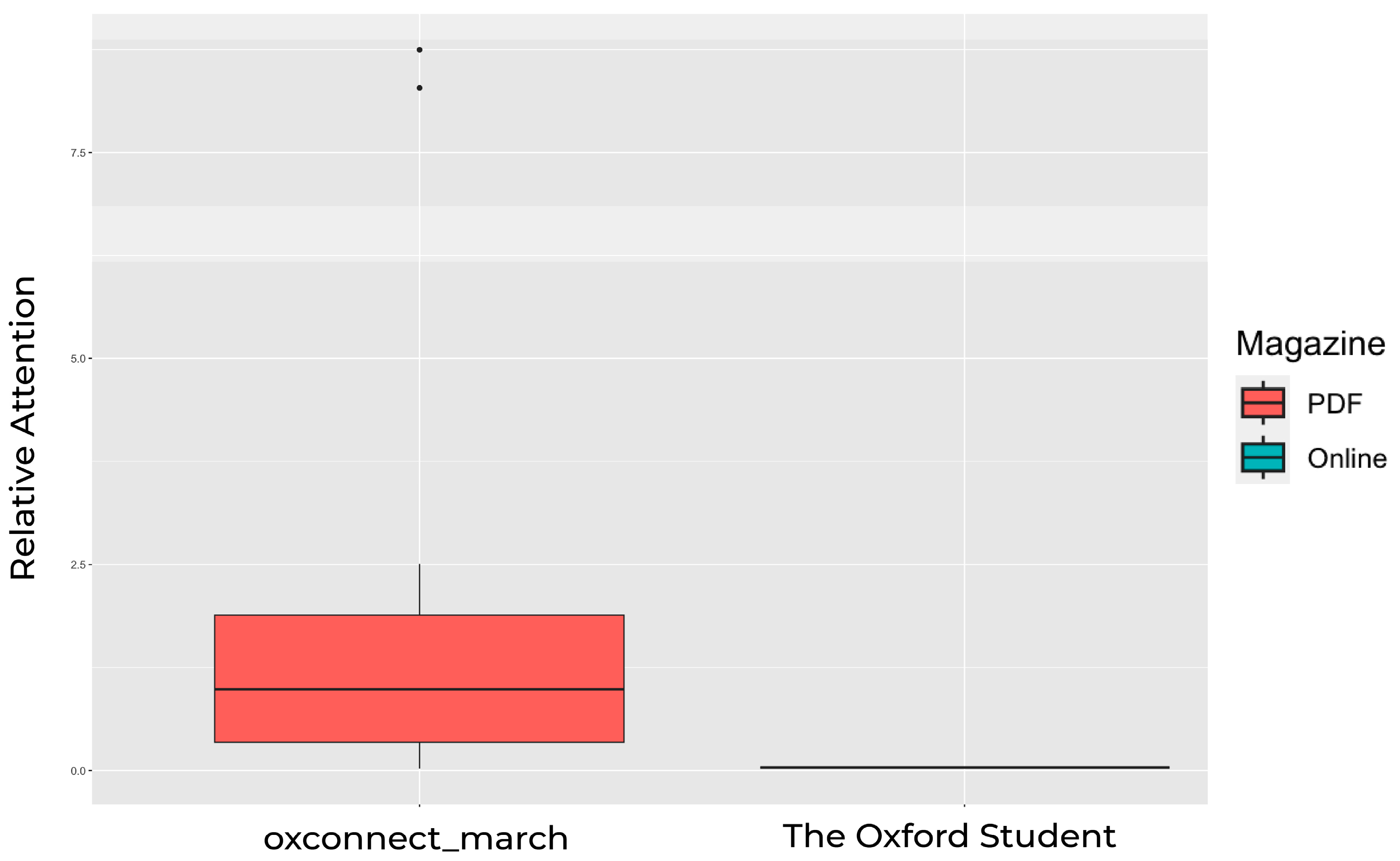

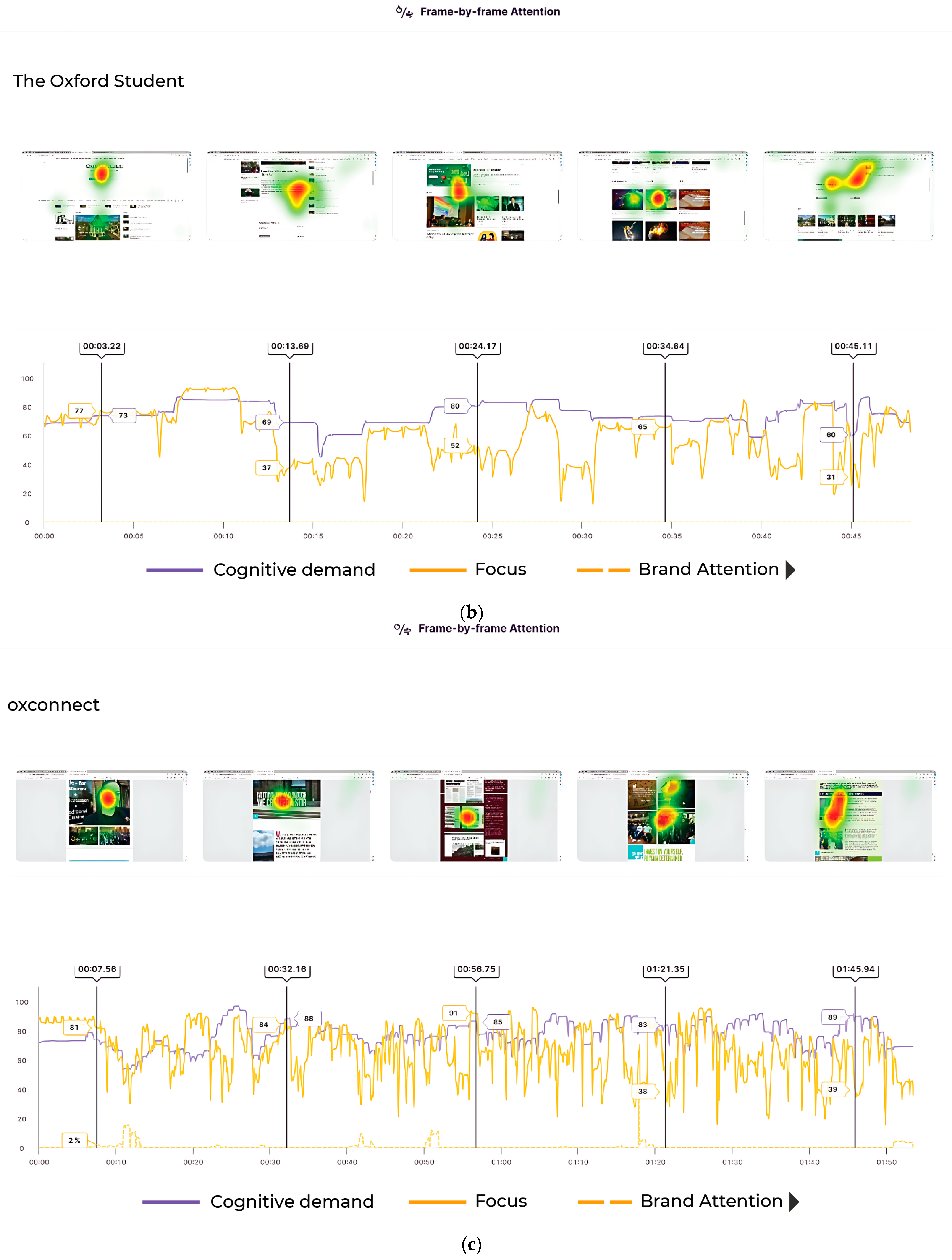

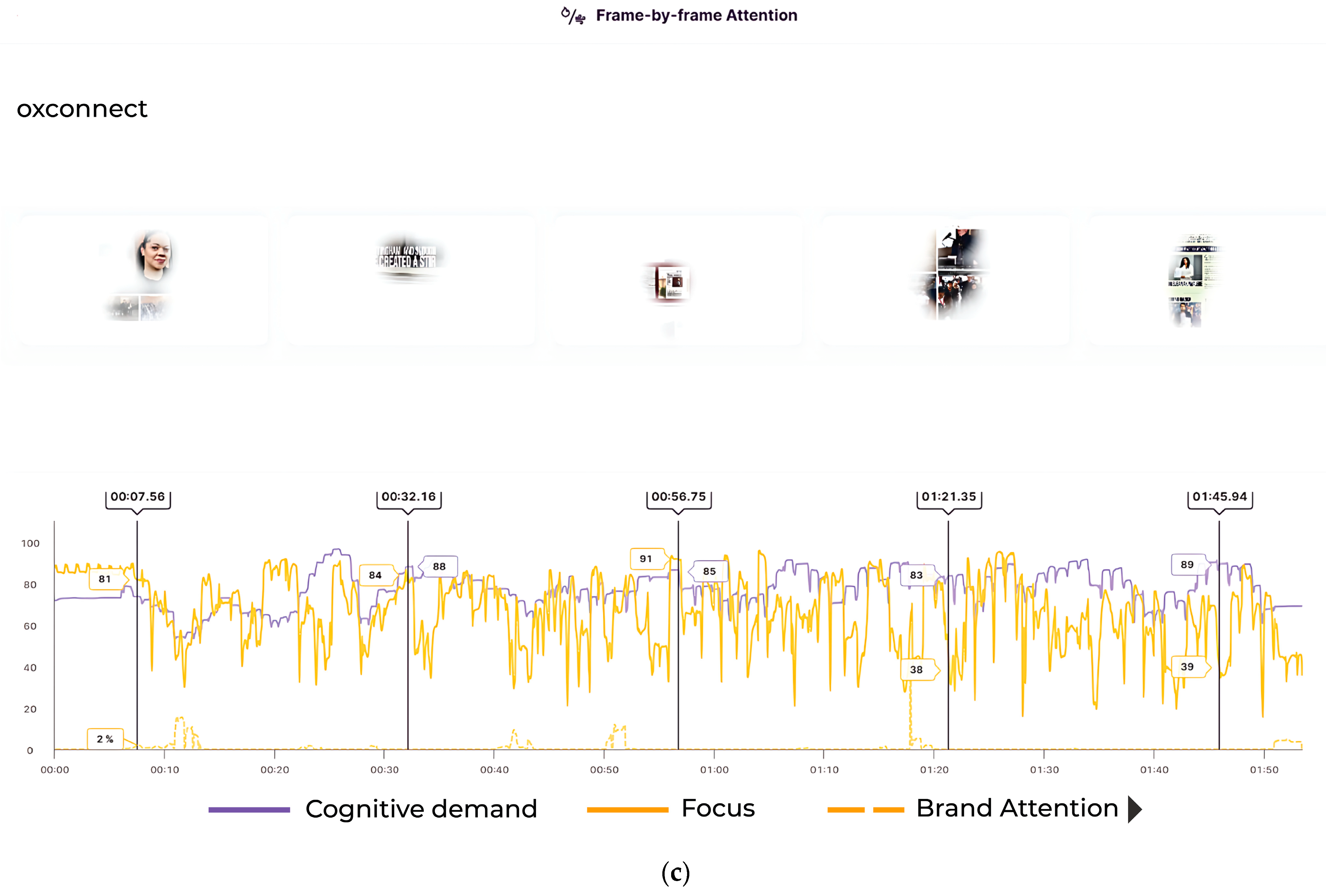

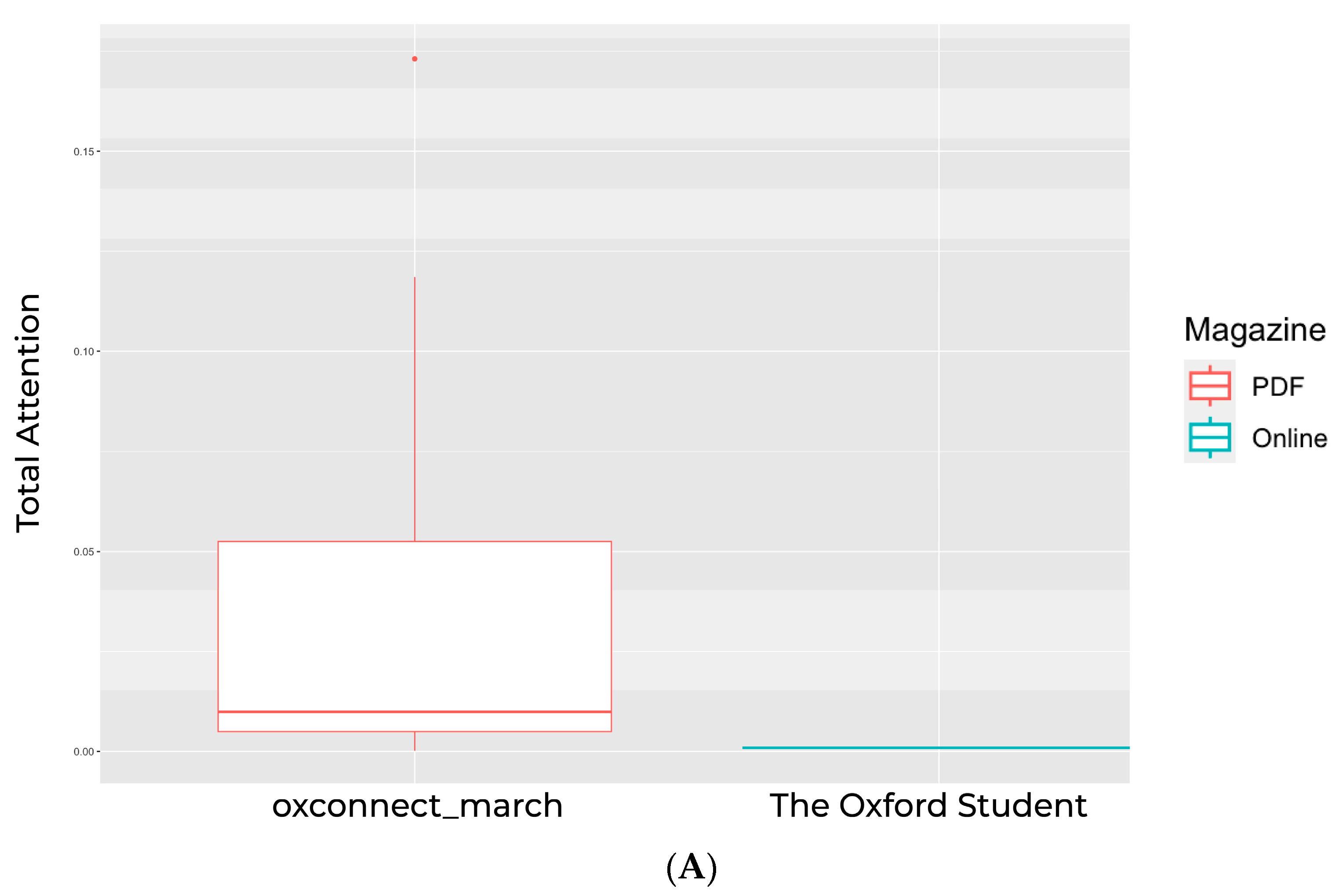

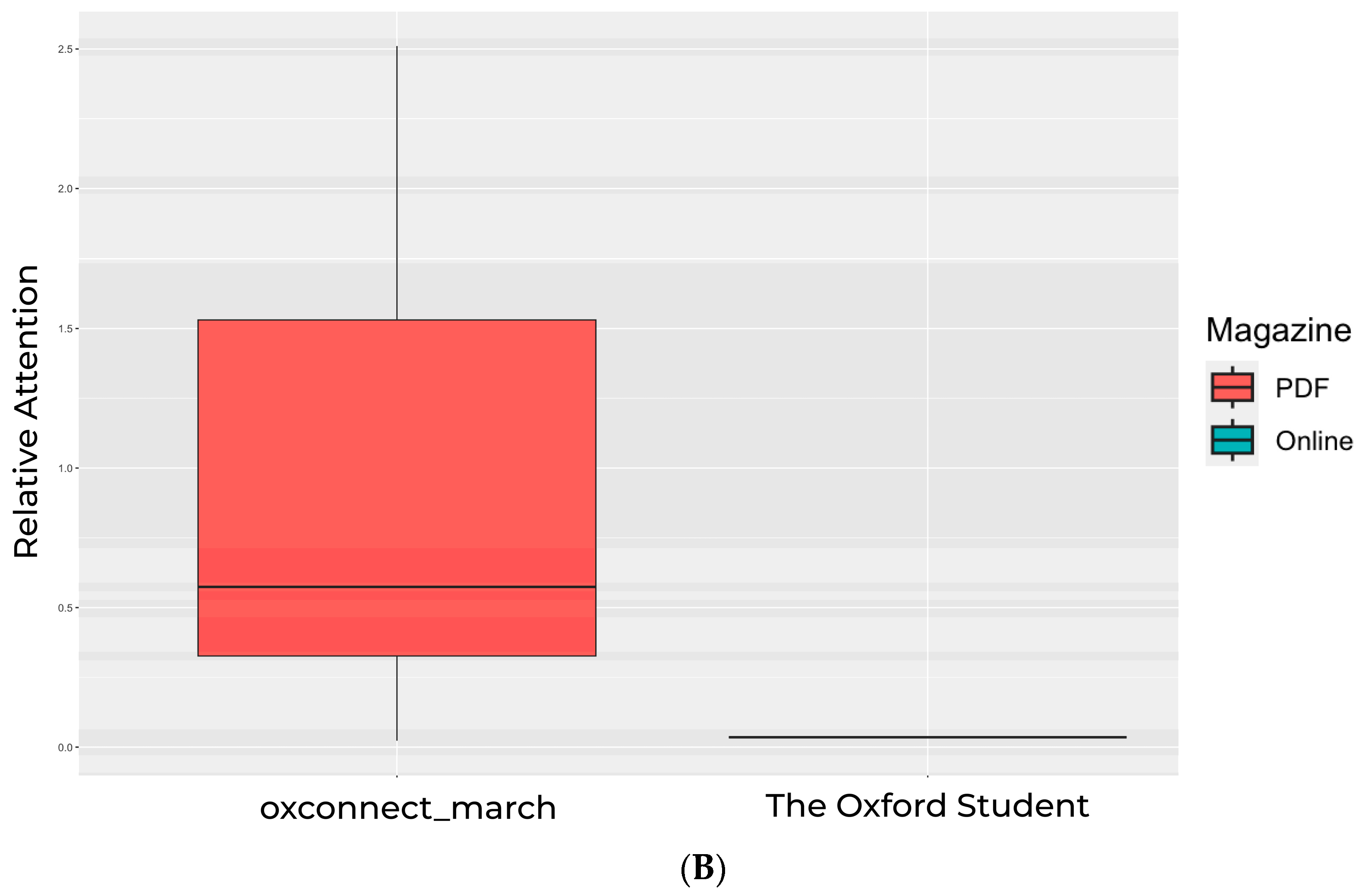

4.2. User Attention

5. Discussion

Limitations of Study

6. Conclusions

7. Implications

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | artificial intelligence |
| CNNs | convolutional neural networks |
| DNN | deep neural network |
| MMI | multimedia information |
| Predict | consumer-behaviour AI eye-tracking prediction software |
| ET | eye tracking |
| CD | cognitive demand |
| AOI | area of interest |
| EI | emotional intelligence |

Appendix A

Appendix B

Appendix C

Appendix D

Appendix E

Appendix F

Appendix G

Appendix H

References

- King, A.J.; Bol, N.; Cummins, R.G.; John, K.K. Improving Visual Behavior Research in Communication Science: An Overview, Review, and Reporting Recommendations for Using Eye-Tracking Methods. Commun. Methods Meas. 2019, 13, 149–177.

- Brunyé, T.T.; Drew, T.; Weaver, D.L.; Elmore, J.G. A review of eye tracking for understanding and improving diagnostic interpretation. Cogn. Res. Princ. Implic. 2019, 4, 7.

- Latini, N.; Bråten, I.; Salmerón, L. Does reading medium affect processing and integration of textual and pictorial information? A multimedia eye-tracking study. Contemp. Educ. Psychol. 2020, 62, 101870.

- Wang, P.; Chiu, D.K.; Ho, K.K.; Lo, P. Why read it on your mobile device? Change in reading habit of electronic magazines for university students. J. Acad. Librariansh. 2016, 42, 664–669.

- Wu, M.-J.; Zhao, K.; Fils-Aime, F. Response rates of online surveys in published research: A meta-analysis. Comput. Hum. Behav. Rep. 2022, 7, 100206.

- Bonner, E.; Roberts, C. Millennials and the Future of Magazines: How the Generation of Digital Natives Will Determine Whether Print Magazines Survive. J. Mag. Media 2017, 17, 32–78.

- Barral, O.; Lallé, S.; Guz, G.; Iranpour, A.; Conati, C. Eye-Tracking to Predict User Cognitive Abilities and Performance for User-Adaptive Narrative Visualizations. In Proceedings of the 2020 International Conference on Multimodal Interaction, Virtual, 25–29 October 2020; ACM: New York, NY, USA, 2020; pp. 163–173.

- Klaib, A.F.; Alsrehin, N.O.; Melhem, W.Y.; Bashtawi, H.O.; Magableh, A.A. Eye tracking algorithms, techniques, tools, and applications with an emphasis on machine learning and Internet of Things technologies. Expert Syst. Appl. 2021, 166, 114037.

- Sharma, K.; Giannakos, M.; Dillenbourg, P. Eye-tracking and artificial intelligence to enhance motivation and learning. Smart Learn. Environ. 2020, 7, 13.

- Yi, T.; Chang, M.; Hong, S.; Lee, J.-H. Use of Eye-tracking in Artworks to Understand Information Needs of Visitors. Int. J. Hum. Comput. Interact. 2021, 37, 220–233.

- Novák, J.; Masner, J.; Benda, P.; Šimek, P.; Merunka, V. Eye Tracking, Usability, and User Experience: A Systematic Review. Int. J. Hum. Comput. Interact. 2023, 1–17.

- Vehlen, A.; Spenthof, I.; Tönsing, D.; Heinrichs, M.; Domes, G. Evaluation of an eye tracking setup for studying visual attention in face-to-face conversations. Sci. Rep. 2021, 11, 2661.

- Gerstenberg, T.; Peterson, M.F.; Goodman, N.D.; Lagnado, D.A.; Tenenbaum, J.B. Eye-Tracking Causality. Psychol. Sci. 2017, 28, 1731–1744.

- Olan, F.; Suklan, J.; Arakpogun, E.O.; Robson, A. Advancing Consumer Behavior: The Role of Artificial Intelligence Technologies and Knowledge Sharing. IEEE Trans. Eng. Manag. 2021, 1–13.

- Chaudhary, K.; Alam, M.; Al-Rakhami, M.S.; Gumaei, A. Machine learning-based mathematical modelling for prediction of social media consumer behavior using big data analytics. J. Big Data 2021, 8, 73.

- Nunes, C.A.; Ribeiro, M.N.; de Carvalho, T.C.; Ferreira, D.D.; de Oliveira, L.L.; Pinheiro, A.C. Artificial intelligence in sensory and consumer studies of food products. Curr. Opin. Food Sci. 2023, 50, 101002.

- Al Adwan, A.; Aladwan, R. Use of artificial intelligence system to predict consumers’ behaviors. Int. J. Data Netw. Sci. 2022, 6, 1223–1232.

- Hakami, N.A.; Mahmoud, H.A.H. The Prediction of Consumer Behavior from Social Media Activities. Behav. Sci. 2022, 12, 284.

- Gkikas, D.C.; Theodoridis, P.K.; Beligiannis, G.N. Enhanced Marketing Decision Making for Consumer Behaviour Classification Using Binary Decision Trees and a Genetic Algorithm Wrapper. Informatics 2022, 9, 45.

- Pop, Ș.; Pelau, C.; Ciofu, I.; Kondort, G. Factors Predicting Consumer-AI Interactions. In Proceedings of the 9th BASIQ International Conference on New Trends in Sustainable Business and Consumption, Constanța, Romania, 8–10 June 2023; ASE: Fremont, CA, USA, 2023; pp. 592–597.

- Kar, A. MLGaze: Machine Learning-Based Analysis of Gaze Error Patterns in Consumer Eye Tracking Systems. Vision 2020, 4, 25.

- Li, Y.; Zhong, Z.; Zhang, F.; Zhao, X. Artificial Intelligence-Based Human–Computer Interaction Technology Applied in Consumer Behavior Analysis and Experiential Education. Front. Psychol. 2022, 13, 784311.

- Margariti, K.; Hatzithomas, L.; Boutsouki, C. Implementing Eye Tracking Technology in Experimental Design Studies in Food and Beverage Advertising. In Consumer Research Methods in Food Science; Springer: Berlin/Heidelberg, Germany, 2023; pp. 293–311.

- Pfeiffer, J.; Pfeiffer, T.; Meißner, M.; Weiß, E. Eye-Tracking-Based Classification of Information Search Behavior Using Machine Learning: Evidence from Experiments in Physical Shops and Virtual Reality Shopping Environments. Inf. Syst. Res. 2020, 31, 675–691.

- Deng, R.; Gao, Y. A review of eye tracking research on video-based learning. Educ. Inf. Technol. 2023, 28, 7671–7702.

- Lim, J.Z.; Mountstephens, J.; Teo, J. Eye-Tracking Feature Extraction for Biometric Machine Learning. Front. Neurorobot. 2022, 15, 796895.

- Houpt, J.W.; Frame, M.E.; Blaha, L.M. Unsupervised parsing of gaze data with a beta-process vector auto-regressive hidden Markov model. Behav. Res. Methods 2018, 50, 2074–2096.

- Darapaneni, N.; Prakash, M.D.; Sau, B.; Madineni, M.; Jangwan, R.; Paduri, A.R.; Jairajan, K.P.; Belsare, M.; Madhavankutty, P. Eye Tracking Analysis Using Convolutional Neural Network. In Proceedings of the 2022 Interdisciplinary Research in Technology and Management (IRTM), Kolkata, India, 24–26 February 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–8.

- Yin, Y.; Juan, C.; Chakraborty, J.; McGuire, M.P. Classification of Eye Tracking Data using a Convolutional Neural Network. In Proceedings of the 17th IEEE International Conference on Machine Learning and Applications, Orlando, FL, USA, 17–20 December 2018.

- Yin, Y.; Alqahtani, Y.; Feng, J.H.; Chakraborty, J.; McGuire, M.P. Classification of Eye Tracking Data in Visual Information Processing Tasks Using Convolutional Neural Networks and Feature Engineering. SN Comput. Sci. 2021, 2, 59.

- Ahtik, J. Using artificial intelligence for predictive eye-tracking analysis to evaluate photographs. J. Graph. Eng. Des. 2023, 14, 29–35.

- Wu, A.X.; Taneja, H.; Boyd, D.; Donato, P.; Hindman, M.; Napoli, P.; Webster, J. Computational social science: On measurement. Science 2020, 370, 1174–1175.

- Roy, A.; Saffar, M.; Vaswani, A.; Grangier, D. Efficient Content-Based Sparse Attention with Routing Transformers. Trans. Assoc. Comput. Linguist. 2021, 9, 53–68.

- Raffel, C.; Luong, M.-T.; Liu, P.J.; Weiss, R.J.; Eck, D. Online and linear-time attention by enforcing monotonic alignments. In Proceedings of the 34th International Conference on Machine Learning, Volume 70, Sydney, Australia, 6–11 August 2017; pp. 2837–2846.

- Zhang, Z.; Ma, J.; Du, J.; Wang, L.; Zhang, J. Multimodal Pre-Training Based on Graph Attention Network for Document Understanding. IEEE Trans. Multimed. 2023, 25, 6743–6755.

- Amin, Z.; Ali, N.M.; Smeaton, A.F. Attention-Based Design and User Decisions on Information Sharing: A Thematic Literature Review. IEEE Access 2021, 9, 83285–83297.

- Moe-Byrne, T.; Knapp, P.; Perry, D.; Achten, J.; Spoors, L.; Appelbe, D.; Roche, J.; Martin-Kerry, J.M.; Sheridan, R.; Higgins, S. Does digital, multimedia information increase recruitment and retention in a children’s wrist fracture treatment trial, and what do people think of it? A randomised controlled Study Within A Trial (SWAT). BMJ Open 2022, 12, e057508.

- Gao, Q.; Li, S. Impact of Online Courses on University Student Visual Attention During the COVID-19 Pandemic. Front. Psychiatry 2022, 13, 848844.

- Aily, J.B.; Copson, J.; Voinier, D.; Jakiela, J.; Hinman, R.; Grosch, M.; Noonan, C.; Armellini, M.; Schmitt, L.; White, M.; et al. Understanding Recruitment Yield From Social Media Advertisements and Associated Costs of a Telehealth Randomized Controlled Trial: Descriptive Study. J. Med. Internet Res. 2023, 25, e41358.

- Chen, L.; Jeong, J.; Simpkins, B.; Ferrara, E. Exploring the Behavior of Users With Attention-Deficit/Hyperactivity Disorder on Twitter: Comparative Analysis of Tweet Content and User Interactions. J. Med. Internet Res. 2023, 25, e43439.

- Mehmood, A.; Taber, N.; Bachani, A.M.; Gupta, S.; Paichadze, N.; Hyder, A.A. Paper Versus Digital Data Collection for Road Safety Risk Factors: Reliability Comparative Analysis From Three Cities in Low- and Middle-Income Countries. J. Med. Internet Res. 2019, 21, e13222.

- Dorta-González, P.; Dorta-González, M.I. The funding effect on citation and social attention: The UN Sustainable Development Goals (SDGs) as a case study. Online Inf. Rev. 2023, 47, 1358–1376.

- Guseman, E.H.; Jurewicz, L.; Whipps, J. Physical Activity And Screen Time Patterns During The Covid-19 Pandemic: The Role Of School Format. Med. Sci. Sports Exerc. 2022, 54, 147.

- Byrne, S.A.; Reynolds, A.P.F.; Biliotti, C.; Bargagli-Stoffi, F.J.; Polonio, L.; Riccaboni, M. Predicting choice behaviour in economic games using gaze data encoded as scanpath images. Sci. Rep. 2023, 13, 4722.

- Vajs, I.A.; Kvascev, G.S.; Papic, T.M.; Jankovic, M.M. Eye-Tracking Image Encoding: Autoencoders for the Crossing of Language Boundaries in Developmental Dyslexia Detection. IEEE Access 2023, 11, 3024–3033.

- Liu, L.; Wang, K.I.-K.; Tian, B.; Abdulla, W.H.; Gao, M.; Jeon, G. Human Behavior Recognition via Hierarchical Patches Descriptor and Approximate Locality-Constrained Linear Coding. Sensors 2023, 23, 5179.

- Ahn, H.; Jun, I.; Seo, K.Y.; Kim, E.K.; Kim, T.-I. Artificial Intelligence for the Estimation of Visual Acuity Using Multi-Source Anterior Segment Optical Coherence Tomographic Images in Senile Cataract. Front. Med. 2022, 9, 871382.

- Pattemore, M.; Gilabert, R. Using eye-tracking to measure cognitive engagement with feedback in a digital literacy game. Lang. Learn. J. 2023, 51, 472–490.

- Chen, H.-C.; Tzeng, S.-S.; Hsiao, Y.-C.; Chen, R.-F.; Hung, E.-C.; Lee, O.K. Smartphone-Based Artificial Intelligence–Assisted Prediction for Eyelid Measurements: Algorithm Development and Observational Validation Study. JMIR mHealth uHealth 2021, 9, e32444.

- Thomas, T.; Hoppe, D.; Rothkopf, C.A. Measuring the cost function of saccadic decisions reveals stable individual gaze preferences. J. Vis. 2022, 22, 4007.

- Kasinidou, M. AI Literacy for All: A Participatory Approach. In Proceedings of the 2023 Conference on Innovation and Technology in Computer Science Education V. 2, Turku, Finland, 10–12 July 2023; ACM: New York, NY, USA, 2023; pp. 607–608.

- Neurons. Predict Tech Paper; Denmark. 2024.

- Neurons. Predict Datasheet. 2024.

- Awadh, F.H.R.; Zoubrinetzky, R.; Zaher, A.; Valdois, S. Visual attention span as a predictor of reading fluency and reading comprehension in Arabic. Front. Psychol. 2022, 13, 868530.

- Lusnig, L.; Hofmann, M.J.; Radach, R. Mindful Text Comprehension: Meditation Training Improves Reading Comprehension of Meditation Novices. Mindfulness 2023, 14, 708–719.

- Kobayashi, J.; Kawashima, T. Paragraph-based Faded Text Facilitates Reading Comprehension. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, Scotland, UK, 4–9 May 2019; ACM: New York, NY, USA, 2019; pp. 1–12.

- Giles, F. ‘The magazine that isn’t’: The future of features online. TEXT 2014, 18, 1–16.

- Henderson, C.M.; Steinhoff, L.; Harmeling, C.M.; Palmatier, R.W. Customer inertia marketing. J. Acad. Mark. Sci. 2021, 49, 350–373.

- Ju-Pak, K.-H. Content dimensions of Web advertising: A cross-national comparison. Int. J. Advert. 1999, 18, 207–231.

- Zanker, M.; Rook, L.; Jannach, D. Measuring the impact of online personalisation: Past, present and future. Int. J. Hum. Comput. Stud. 2019, 131, 160–168.

- Bernard, M.; Baker, R.; Chaparro, B.; Fernandez, M. Paging vs. Scrolling: Examining Ways to Present Search Results. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2002, 46, 1296–1299.

- Garett, R.; Chiu, J.; Zhang, L.; Young, S.D. A Literature Review: Website Design and User Engagement. Online J. Commun. Media Technol. 2016, 6, 1–14.

- Murali, R.; Conati, C.; Azevedo, R. Predicting Co-occurring Emotions in MetaTutor when Combining Eye-Tracking and Interaction Data from Separate User Studies. In Proceedings of the LAK23: 13th International Learning Analytics and Knowledge Conference, Arlington, TX, USA, 13–17 March 2023; ACM: New York, NY, USA, 2023; pp. 388–398.

- Douneva, M.; Jaron, R.; Thielsch, M.T. Effects of Different Website Designs on First Impressions, Aesthetic Judgements and Memory Performance after Short Presentation. Interact. Comput. 2016, 28, 552–567.

- Hasan, L. Evaluating the Usability of Educational Websites Based on Students’ Preferences of Design Characteristics. Int. Arab. J. E-Technol. (IAJeT) 2017, 3, 179–193.

- Pettersson, J.; Falkman, P. Intended Human Arm Movement Direction Prediction using Eye Tracking. Int. J. Comput. Integr. Manuf. 2023, 1–19.

- Lavdas, A.A.; Salingaros, N.A.; Sussman, A. Visual Attention Software: A New Tool for Understanding the “Subliminal” Experience of the Built Environment. Appl. Sci. 2021, 11, 6197.

- Gheorghe, C.-M.; Purcărea, V.L.; Gheorghe, I.-R. Using eye-tracking technology in Neuromarketing. Rom. J. Ophthalmol. 2023, 67, 2–6.

- Rvacheva, I.M. Eyetracking as a Modern Neuromarketing Technology. Ekon. I Upr. Probl. Resheniya 2023, 5/3, 80–84.

- Zdarsky, N.; Treue, S.; Esghaei, M. A Deep Learning-Based Approach to Video-Based Eye Tracking for Human Psychophysics. Front. Hum. Neurosci. 2021, 15, 685830.

- Pettersson, J.; Falkman, P. Human Movement Direction Prediction using Virtual Reality and Eye Tracking. In Proceedings of the 2021 22nd IEEE International Conference on Industrial Technology (ICIT), Virtual, 10–12 March 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 889–894.

- Kondak, A. The application of eye tracking and artificial intelligence in contemporary marketing communication management. Sci. Pap. Silesian Univ. Technol. Organ. Manag. Ser. 2023, 2023, 239–253.

- Morozkin, P.; Swynghedauw, M.; Trocan, M. Neural Network Based Eye Tracking. In Proceedings of the Computational Collective Intelligence: 9th International Conference, ICCCI 2017, Nicosia, Cyprus, 27–29 September 2017; Springer International Publishing: Berlin/Heidelberg, Germany, 2017; pp. 600–609.

- Stein, N.; Bremer, G.; Lappe, M. Eye Tracking-based LSTM for Locomotion Prediction in VR. In Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Christchurch, New Zealand, 12–16 March 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 493–503.

- Vazquez, E.E. Effects of enduring involvement and perceived content vividness on digital engagement. J. Res. Interact. Mark. 2019, 14, 1–16.

- Sibarani, B. Cognitive Engagement and Motoric Involvement in Learning: An Experiment on the Effect of Interaction Story Game on English Listening Comprehension in EFL Context. Engl. Linguist. Res. 2019, 8, 38.

- Yeung, A.S. Cognitive Load and Learner Expertise: Split-Attention and Redundancy Effects in Reading Comprehension Tasks With Vocabulary Definitions. J. Exp. Educ. 1999, 67, 197–217.

- Alhamad, K.A.; Manches, A.; Mcgeown, S. The Impact of Augmented Reality (AR) Books on the Reading Engagement and Comprehension of Child Readers. Edinb. Open Res. 2022, 27, 1–21.

- Al-Samarraie, H.; Sarsam, S.M.; Alzahrani, A.I. Emotional intelligence and individuals’ viewing behaviour of human faces: A predictive approach. User Model. User-Adapt. Interact. 2023, 33, 889–909.

- Santiago-Reyes, G.; O’Connell, T.; Kanwisher, N. Artificial neural networks predict human eye movement patterns as an emergent property of training for object classification. J. Vis. 2022, 22, 4194.

- Buettner, R. Cognitive Workload of Humans Using Artificial Intelligence Systems: Towards Objective Measurement Applying Eye-Tracking Technology. In KI 2013: Advances in Artificial Intelligence: Proceedings of the 36th Annual German Conference on AI, Koblenz, Germany, 16–20 September 2013; Proceedings 36; Springer: Berlin/Heidelberg, Germany, 2013; pp. 37–48.

- Williams, J. Consumer Behavior Analysis in the Age of Big Data for Effective Marketing Strategies. Int. J. Strat. Mark. Pract. 2024, 6, 36–46.

| Estimates | Estimate 1 | Estimate 2 | Statistic | p-Value | Parameter | Conf. Low | Conf. High | Method | Alternative |
|---|---|---|---|---|---|---|---|---|---|
| 1: Hybrid Magazine 2: The Oxford Student | 64.70426 | 74.15509 | −29.019 | <0.001 | 2722.9 | −10.089445 | −8.812227 | Welch’s Two-Sample t-test | two-sided |
| 1: Hybrid Magazine 2: OxConnect | 64.70426 | 77.21173 | −50.705 | <0.001 | 1655.5 | −12.99129 | −12.02365 | Welch’s Two-Sample t-test | two-sided |
| 1: OxConnect 2: The Oxford Student | 77.21173 | 74.15509 | 13.876 | <0.001 | 13.876 | 2.624477 | 3.488791 | Welch’s Two-Sample t-test | two-sided |
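The pairwise comparisons in the table above are reported as Welch’s two-sample t-tests. As a rough illustration of what such a test computes, the following minimal sketch derives the t statistic and the Welch–Satterthwaite degrees of freedom from two samples. It assumes that the "Parameter" column holds the degrees of freedom (the column layout resembles the tidy output of R's `broom` package, which is an assumption here). The function name `welch_t_test` and the sample values are hypothetical and are not taken from the study.

```python
import math
from statistics import mean, variance


def welch_t_test(sample_a, sample_b):
    """Return (t statistic, Welch-Satterthwaite degrees of freedom)."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard error of each group mean (variance() divides by n - 1).
    se2_a = variance(sample_a) / n_a
    se2_b = variance(sample_b) / n_b
    # The group variances are not pooled, which is what distinguishes
    # Welch's test from Student's t-test.
    t_stat = (mean(sample_a) - mean(sample_b)) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation of the degrees of freedom
    # (assumed to correspond to the table's "Parameter" column).
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1)
    )
    return t_stat, df


# Hypothetical scores for illustration only (not data from the study).
magazine_a = [1.0, 2.0, 3.0, 4.0, 5.0]
magazine_b = [2.0, 4.0, 6.0, 8.0, 10.0, 12.0]

t_stat, df = welch_t_test(magazine_a, magazine_b)
print(f"t = {t_stat:.3f}, df = {df:.2f}")
```

A negative statistic, as in the first two rows of the table, indicates that the first group's mean is lower than the second's. The reported confidence interval is for the difference between the two means.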

| | Df | Sum Sq | Mean Sq | F Value | Pr (>F) |
|---|---|---|---|---|---|
| Magazines | 2 | 245,195 | 122,597 | 1605 | <2 × 10⁻¹⁶ *** |
| Residuals | 49,033 | 3,746,212 | 76 | | |
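The F value in a one-way ANOVA table is the ratio of the between-group mean square to the residual mean square, where each mean square is a sum of squares divided by its degrees of freedom. The short sketch below recomputes the F values from the sums of squares and degrees of freedom printed in the two ANOVA tables of this document. The helper name `f_statistic` is hypothetical, and the check only confirms the internal arithmetic of the tables. It does not reproduce the underlying analysis.

```python
def f_statistic(ss_between, df_between, ss_residual, df_residual):
    """One-way ANOVA F value from sums of squares and degrees of freedom."""
    ms_between = ss_between / df_between      # "Mean Sq" for the factor row
    ms_residual = ss_residual / df_residual   # "Mean Sq" for the residual row
    return ms_between / ms_residual


# Values copied from the "Magazines" and "Residuals" rows of the two
# ANOVA tables in this document.
first_table_f = f_statistic(245_195, 2, 3_746_212, 49_033)
second_table_f = f_statistic(459_638, 2, 13_866_203, 49_033)

print(f"first table:  F = {first_table_f:.1f}")
print(f"second table: F = {second_table_f:.1f}")
```

Both recomputed values agree with the reported F values (1605 and 812.7) after rounding.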

| Estimates | Estimate 1 | Estimate 2 | Statistic | p-Value | Parameter | Conf. Low | Conf. High | Method | Alternative |
|---|---|---|---|---|---|---|---|---|---|
| 1: Hybrid Magazine 2: The Oxford Student | 49.34619 | 60.01919 | −14.68 | <0.001 | 2694.5 | −12.0986 | −9.24742 | Welch’s Two-Sample t-test | two-sided |
| 1: Hybrid Magazine 2: OxConnect | 49.34619 | 66.08838 | −31.55 | <0.001 | 1635.9 | −17.783 | −15.7014 | Welch’s Two-Sample t-test | two-sided |
| 1: OxConnect 2: The Oxford Student | 66.08838 | 60.01919 | 11.927 | <0.001 | 1214.5 | 5.070804 | 7.067574 | Welch’s Two-Sample t-test | two-sided |

| | Df | Sum Sq | Mean Sq | F Value | Pr (>F) |
|---|---|---|---|---|---|
| Magazines | 2 | 459,638 | 229,819 | 812.7 | <2 × 10⁻¹⁶ *** |
| Residuals | 49,033 | 13,866,203 | 283 | | |

| Measure | Online | Statistic | p-Value | Parameter | Conf. Low | Conf. High | Method | Alternative | |
|---|---|---|---|---|---|---|---|---|---|
| Total attention | 0.000917 | 0.036568 | 2.9115 | <0.05 | 16 | 0.01061 | 0.062526 | Welch’s Two-Sample t-test | two-sided |
| Relative attention | 0.035459 | 0.933812 | 4.4552 | <0.001 | 14 | 0.50133 | 1.366294 | Welch’s Two-Sample t-test | two-sided |
| Availability | 1.25 | 2.294118 | 1.8295 | 0.08602 | 16 | 1.084275 | 3.503961 | Welch’s Two-Sample t-test | two-sided |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Šola, H.M.; Qureshi, F.H.; Khawaja, S. Predicting Behaviour Patterns in Online and PDF Magazines with AI Eye-Tracking. Behav. Sci. 2024, 14, 677. https://doi.org/10.3390/bs14080677
