Attentional Bias of Individuals with Social Anxiety towards Facial and Somatic Emotional Cues in a Holistic Manner

Abstract

1. Introduction

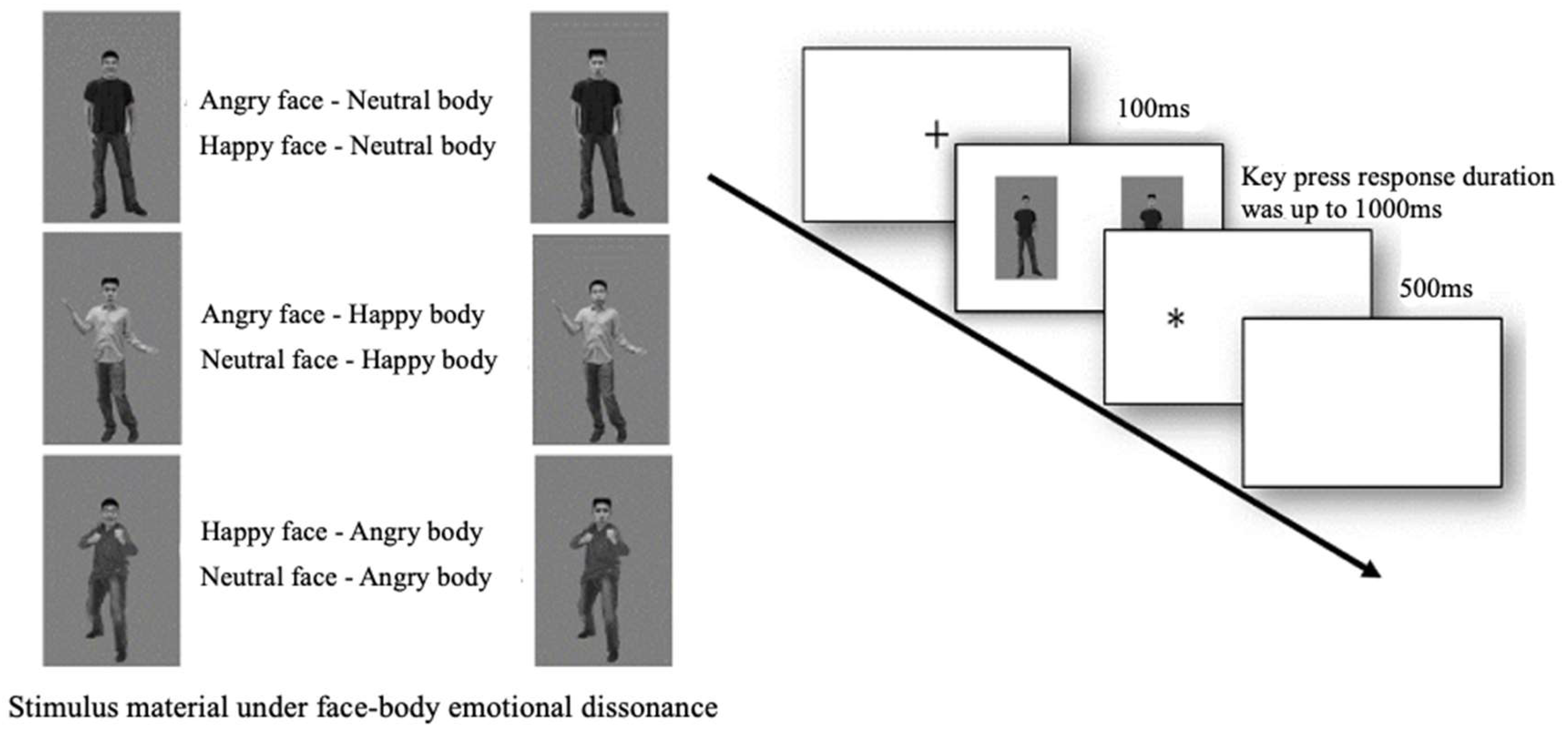

2. Experiment 1: Characteristics of Face–Body Overall Emotional Cue Processing When the Stimulus Was Presented for 100 ms

2.1. Method

2.2. Results

2.3. Discussion

3. Experiment 2: The Effect of Facial Emotion on the Processing of Face–Body Whole Emotional Cues

3.1. Method

3.2. Results

3.3. Discussion

4. Experiment 3: The Effect of Somatic Emotion on the Processing of Face–Body Whole Emotional Cues

4.1. Method

4.2. Results

4.3. Discussion

5. General Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Participant characteristics (M ± SD) and group comparisons in each experiment.

| Group | Age | LSAS | BDI-II |
|---|---|---|---|
| Experiment 1 | | | |
| HSA (n = 33) | 24.12 ± 2.421 | 80.30 ± 18.684 | 3.45 ± 3.800 |
| LSA (n = 24) | 23.96 ± 2.710 | 18.21 ± 10.579 | 1.46 ± 2.284 |
| t | 0.238 | 15.905 *** | 2.467 * |
| Experiment 2 | | | |
| HSA (n = 35) | 24.57 ± 2.913 | 79.37 ± 18.916 | 3.69 ± 3.652 |
| LSA (n = 24) | 23.79 ± 2.750 | 18.58 ± 9.740 | 1.29 ± 1.829 |
| t | 1.033 | 16.145 *** | 3.318 ** |
| Experiment 3 | | | |
| HSA (n = 33) | 24.58 ± 3.011 | 79.21 ± 19.390 | 3.70 ± 3.695 |
| LSA (n = 22) | 24.00 ± 2.619 | 16.95 ± 11.337 | 1.45 ± 2.365 |
| t | 0.731 | 14.996 *** | 2.519 * |

HSA = high social anxiety group; LSA = low social anxiety group; LSAS = Liebowitz Social Anxiety Scale; BDI-II = Beck Depression Inventory-II.
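The t values in the participant table can be checked against the reported summary statistics. The sketch below assumes Welch's unequal-variance t-test; the source does not say which variant was used, and other comparisons in these tables may instead reflect Student's pooled-variance test. With the rounded means and SDs, Welch's formula reproduces the LSAS comparisons for Experiments 1 and 2, so treat this as a consistency check rather than the authors' analysis. The function name `welch_t` is hypothetical.

```python
import math


def welch_t(m1: float, s1: float, n1: int,
            m2: float, s2: float, n2: int) -> tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom,
    computed from group means (m), standard deviations (s), and sizes (n)."""
    v1 = s1 ** 2 / n1  # squared standard error of the group 1 mean
    v2 = s2 ** 2 / n2  # squared standard error of the group 2 mean
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# LSAS scores, HSA vs. LSA, taken from the participant table
t1, df1 = welch_t(80.30, 18.684, 33, 18.21, 10.579, 24)  # Experiment 1
t2, df2 = welch_t(79.37, 18.916, 35, 18.58, 9.740, 24)   # Experiment 2
print(f"Experiment 1: t = {t1:.3f}, df = {df1:.1f}")
print(f"Experiment 2: t = {t2:.3f}, df = {df2:.1f}")
```

Both values land within about 0.001 of the reported 15.905 and 16.145; small discrepancies are expected because the inputs are themselves rounded.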

Experiment 1: reaction times (ms) by emotional pair type and probe location.

| Emotional Pair Type | Probe Location | High Social Anxiety Group (n = 33), M ± SD | Low Social Anxiety Group (n = 24), M ± SD |
|---|---|---|---|
| Anger–Happiness | Congruent | 328.138 ± 34.674 | 348.436 ± 37.999 |
| | Incongruent | 338.097 ± 39.102 | 370.640 ± 54.509 |
| Anger–Neutral | Congruent | 331.769 ± 39.304 | 351.822 ± 51.625 |
| | Incongruent | 325.115 ± 25.723 | 357.771 ± 46.658 |
| Happy–Neutral | Congruent | 345.919 ± 37.488 | 371.581 ± 52.910 |
| | Incongruent | 333.866 ± 37.817 | 363.621 ± 45.804 |
| Neutral–Neutral | | 336.234 ± 29.768 | 357.423 ± 44.243 |

Experiment 1: reaction times (ms) by probe condition and attentional bias components, with group comparisons.

| Measure | High Social Anxiety Group (n = 33), M ± SD | Low Social Anxiety Group (n = 24), M ± SD | t | p |
|---|---|---|---|---|
| Reaction times by probe condition | | | | |
| Congruent probe | 335.300 ± 33.491 | 357.117 ± 42.559 | −2.166 * | 0.035 * |
| Incongruent probe | 332.149 ± 29.96 | 364.106 ± 45.087 | −3.021 ** | 0.005 ** |
| Neutral control | 336.234 ± 29.768 | 357.423 ± 44.243 | −2.035 * | 0.051 |
| Attentional bias component | | | | |
| BI | −3.150 ± 17.727 | 6.989 ± 19.494 | −2.045 * | 0.046 * |
| OI | 0.934 ± 17.658 | 0.306 ± 21.737 | 0.120 | 0.905 |
| DI | −4.085 ± 15.343 | 6.682 ± 17.708 | −2.451 * | 0.017 * |

BI = attentional bias index; OI = orienting index; DI = disengagement index.
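The three attentional bias components appear to be simple differences between condition means. The definitions below are inferred from the tables, not quoted from the method section (which is not included here): BI = incongruent − congruent, OI = neutral − congruent, DI = incongruent − neutral, so that BI = OI + DI. Applied to the group means, they reproduce the Experiment 1 values above (for example, HSA BI = 332.149 − 335.300 = −3.151, reported as −3.150) and, with the Neutral–Neutral trials as the shared baseline, the per-pair Experiment 1 values such as Anger–Happiness. Because a mean of differences equals the difference of means, this group-level match is expected when each participant's indices are computed from that participant's own condition means. `ProbeRTs` and `bias_indices` are hypothetical names, and the interpretive labels in the comments follow common dot-probe usage rather than the source.

```python
from dataclasses import dataclass


@dataclass
class ProbeRTs:
    """Mean reaction times (ms) for one group in one set of conditions."""
    congruent: float    # probe appeared at the target stimulus location (assumed)
    incongruent: float  # probe appeared at the other stimulus location
    neutral: float      # neutral-neutral control trials (baseline)


def bias_indices(rt: ProbeRTs) -> dict[str, float]:
    """Attentional bias components as differences of mean RTs (ms)."""
    bi = rt.incongruent - rt.congruent  # overall attentional bias index
    oi = rt.neutral - rt.congruent      # orienting index (speeded engagement)
    di = rt.incongruent - rt.neutral    # disengagement index (slowed shifting away)
    return {"BI": bi, "OI": oi, "DI": di}


# Experiment 1, all pair types combined
hsa = bias_indices(ProbeRTs(congruent=335.300, incongruent=332.149, neutral=336.234))
lsa = bias_indices(ProbeRTs(congruent=357.117, incongruent=364.106, neutral=357.423))
# Experiment 1, Anger-Happiness pair, HSA group, same neutral baseline
pair = bias_indices(ProbeRTs(congruent=328.138, incongruent=338.097, neutral=336.234))

for label, idx in [("HSA", hsa), ("LSA", lsa), ("HSA Anger-Happiness", pair)]:
    print(label, {name: round(value, 3) for name, value in idx.items()})
```

Keeping the neutral baseline as an explicit field makes the shared-baseline assumption visible: the per-pair OI and DI values only match the tables when every pair is compared against the same Neutral–Neutral mean.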

Experiment 1: attentional bias components (ms) by emotional pair type.

| Emotional Pair Type | Variable | High Social Anxiety Group (n = 33), M ± SD | Low Social Anxiety Group (n = 24), M ± SD | t | p |
|---|---|---|---|---|---|
| Anger–Happiness | BI | 9.959 ± 29.180 | 22.204 ± 37.256 | −1.391 | 0.170 |
| | OI | 8.096 ± 18.356 | 8.987 ± 28.810 | −0.142 | 0.887 |
| | DI | 1.863 ± 26.976 | 13.217 ± 26.377 | −1.584 | 0.119 |
| Anger–Neutral | BI | −6.654 ± 35.914 | 5.949 ± 35.994 | −1.307 | 0.197 |
| | OI | 4.465 ± 28.109 | 5.601 ± 32.718 | −0.140 | 0.889 |
| | DI | −11.119 ± 19.506 | 0.348 ± 23.074 | −2.029 * | 0.047 * |
| Happy–Neutral | BI | −12.053 ± 25.104 | −7.96 ± 35.394 | −0.511 | 0.611 |
| | OI | −9.685 ± 24.368 | −14.158 ± 31.030 | 0.610 | 0.545 |
| | DI | −2.368 ± 23.251 | 6.198 ± 29.103 | −1.235 | 0.222 |

Experiment 2: reaction times (ms) by emotional pair type and probe location.

| Emotional Pair Type | Probe Location | High Social Anxiety Group (n = 35), M ± SD | Low Social Anxiety Group (n = 24), M ± SD |
|---|---|---|---|
| AH face–N body | Congruent | 322.051 ± 31.187 | 349.503 ± 40.184 |
| | Incongruent | 314.695 ± 36.000 | 338.549 ± 38.520 |
| AN face–H body | Congruent | 342.911 ± 33.319 | 371.437 ± 41.613 |
| | Incongruent | 333.679 ± 41.714 | 339.980 ± 34.041 |
| HN face–A body | Congruent | 325.398 ± 34.867 | 342.124 ± 33.040 |
| | Incongruent | 335.851 ± 41.600 | 342.002 ± 32.204 |
| Neutral–Neutral | | 333.528 ± 34.769 | 349.528 ± 34.219 |

A = angry, H = happy, N = neutral; for example, AH face–N body pairs an angry face and a happy face, each on a neutral body.

Experiment 2: reaction times (ms) by probe condition and attentional bias components, with group comparisons.

| Measure | High Social Anxiety Group (n = 35), M ± SD | Low Social Anxiety Group (n = 24), M ± SD | t | p |
|---|---|---|---|---|
| Reaction times by probe condition | | | | |
| Congruent probe | 330.300 ± 30.341 | 353.978 ± 33.634 | −2.817 ** | 0.007 ** |
| Incongruent probe | 327.962 ± 36.932 | 340.127 ± 30.273 | −1.334 | 0.187 |
| Neutral control | 333.247 ± 34.769 | 349.528 ± 34.219 | −1.778 | 0.081 |
| Attentional bias component | | | | |
| BI | −2.337 ± 19.098 | −13.850 ± 24.635 | 2.020 * | 0.048 * |
| OI | 2.947 ± 14.310 | −4.449 ± 23.635 | 1.373 | 0.178 |
| DI | −5.284 ± 18.024 | −9.400 ± 19.358 | 0.836 | 0.407 |
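Which low social anxiety sample size underlies the Experiment 2 comparisons can be checked by recomputing t from the summary statistics. The sketch below assumes Student's pooled-variance two-sample t-test; the source does not name the test, and the reported values suggest the variant may differ between comparisons. Under that assumption, n = 24 (the Experiment 2 LSA size in the participant table) reproduces the reported congruent-probe t of −2.817 and incongruent-probe t of −1.334, whereas n = 22 gives about −2.75. The function name `pooled_t` is hypothetical.

```python
import math


def pooled_t(m1: float, s1: float, n1: int,
             m2: float, s2: float, n2: int) -> tuple[float, int]:
    """Student's two-sample t with pooled variance, from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))  # SE of the mean difference
    return (m1 - m2) / se, df


# Experiment 2, congruent probe: HSA (n = 35) vs. LSA with two candidate sizes
t_n24, df_n24 = pooled_t(330.300, 30.341, 35, 353.978, 33.634, 24)
t_n22, df_n22 = pooled_t(330.300, 30.341, 35, 353.978, 33.634, 22)
# Experiment 2, incongruent probe, LSA n = 24
t_inc, df_inc = pooled_t(327.962, 36.932, 35, 340.127, 30.273, 24)

print(f"congruent, n = 24: t({df_n24}) = {t_n24:.3f}")
print(f"congruent, n = 22: t({df_n22}) = {t_n22:.3f}")
print(f"incongruent, n = 24: t({df_inc}) = {t_inc:.3f}")
```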

Experiment 2: attentional bias components (ms) by emotional pair type.

| Emotional Pair Type | Variable | High Social Anxiety Group (n = 35), M ± SD | Low Social Anxiety Group (n = 24), M ± SD | t | p |
|---|---|---|---|---|---|
| Angry face–Neutral body vs. Happy face–Neutral body | BI | −7.356 ± 23.444 | −10.954 ± 31.522 | 0.503 | 0.617 |
| | OI | 11.477 ± 19.917 | 0.025 ± 32.692 | 1.495 | 0.144 |
| | DI | −18.833 ± 22.524 | −10.979 ± 23.855 | −1.239 | 0.221 |
| Angry face–Happy body vs. Neutral face–Happy body | BI | −9.232 ± 26.593 | −31.457 ± 37.983 | 2.647 ** | 0.010 ** |
| | OI | −9.383 ± 19.254 | −21.909 ± 31.836 | 1.685 | 0.101 |
| | DI | 0.431 ± 22.995 | −9.548 ± 27.319 | 1.516 | 0.135 |
| Happy face–Angry body vs. Neutral face–Angry body | BI | 10.453 ± 33.726 | −0.122 ± 34.038 | 1.179 | 0.243 |
| | OI | 7.848 ± 18.307 | 7.404 ± 27.966 | 0.074 | 0.941 |
| | DI | 2.603 ± 25.379 | −7.526 ± 26.935 | 1.469 | 0.147 |

Experiment 3: reaction times (ms) by emotional pair type and probe location.

| Emotional Pair Type | Probe Location | High Social Anxiety Group (n = 33), M ± SD | Low Social Anxiety Group (n = 22), M ± SD |
|---|---|---|---|
| A face–HN body | Congruent | 321.398 ± 33.379 | 333.547 ± 39.256 |
| | Incongruent | 317.799 ± 43.753 | 351.265 ± 41.25 |
| H face–AN body | Congruent | 346.218 ± 34.973 | 348.715 ± 45.269 |
| | Incongruent | 330.399 ± 35.921 | 351.151 ± 33.586 |
| N face–AH body | Congruent | 331.748 ± 37.624 | 349.277 ± 43.587 |
| | Incongruent | 338.758 ± 37.296 | 355.580 ± 38.267 |
| Neutral–Neutral | | 334.973 ± 38.445 | 356.705 ± 39.840 |

Experiment 3: reaction times (ms) by probe condition and attentional bias components, with group comparisons.

| Measure | High Social Anxiety Group (n = 33), M ± SD | Low Social Anxiety Group (n = 22), M ± SD | t | p |
|---|---|---|---|---|
| Reaction times by probe condition | | | | |
| Congruent probe | 333.493 ± 30.824 | 343.917 ± 36.752 | −1.137 | 0.261 |
| Incongruent probe | 329.252 ± 33.576 | 352.447 ± 33.892 | −2.500 * | 0.016 * |
| Neutral control | 334.973 ± 38.445 | 356.705 ± 39.840 | −2.024 * | 0.051 |
| Attentional bias component | | | | |
| BI | −4.240 ± 18.050 | 8.529 ± 20.628 | −2.427 * | 0.019 * |
| OI | 1.479 ± 17.990 | 12.787 ± 23.945 | −1.999 | 0.051 |
| DI | −5.720 ± 14.580 | −4.258 ± 26.785 | −0.234 | 0.817 |

Experiment 3: attentional bias components (ms) by emotional pair type.

| Emotional Pair Type | Variable | High Social Anxiety Group (n = 33), M ± SD | Low Social Anxiety Group (n = 22), M ± SD | t | p |
|---|---|---|---|---|---|
| Angry face–Happy body vs. Angry face–Neutral body | BI | −3.599 ± 42.263 | 17.718 ± 37.512 | −1.915 | 0.061 |
| | OI | 13.575 ± 27.657 | 23.158 ± 34.551 | −1.139 | 0.260 |
| | DI | −17.174 ± 25.046 | −5.44 ± 32.732 | −1.504 | 0.138 |
| Happy face–Angry body vs. Happy face–Neutral body | BI | −15.819 ± 28.750 | 2.436 ± 31.315 | −2.226 * | 0.030 * |
| | OI | −11.245 ± 23.809 | 7.99 ± 30.382 | −2.626 * | 0.011 * |
| | DI | −4.574 ± 24.915 | −5.554 ± 28.606 | 0.135 | 0.893 |
| Neutral face–Angry body vs. Neutral face–Happy body | BI | 7.01 ± 28.915 | 6.303 ± 33.373 | 0.084 | 0.933 |
| | OI | 3.225 ± 21.789 | 7.428 ± 32.474 | −0.575 | 0.568 |
| | DI | 3.785 ± 20.967 | −1.125 ± 34.671 | 0.655 | 0.515 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Liang, J.; Zhu, Z.; Gao, J.; Yao, Q.; Ding, X. Attentional Bias of Individuals with Social Anxiety towards Facial and Somatic Emotional Cues in a Holistic Manner. Behav. Sci. 2024, 14, 244. https://doi.org/10.3390/bs14030244
