1. Introduction

The 21st century is witnessing a demographic shift as the global population ages, putting pressure on social and public healthcare services worldwide [1]. Society is thus grappling with an escalating demand for innovative solutions that can effectively support the growing needs of this demographic. As a result, the last few decades have seen a swift rise in the deployment of smart aged-care products [2] or social service robots [3], particularly in the healthcare sector. Prominent companion robots, like Paro, not only assist but also provide emotional support, improving the quality of life of elderly people [4,5]. By serving as companions rather than merely as assistive tools, these robots open up a new frontier in human–robot interaction (HRI). While companion robots show potential in healthcare, understanding how they can interact successfully with the elderly, who may rely heavily on them, remains a challenge. HRI is currently the focus of extensive research as scientists aim to deepen our understanding of the many facets that define and influence this complex interplay. Unraveling the root causes of discomfort or mistrust during interactions is essential for fostering deeper affinity and trust toward robots as social partners [6].

One crucial component highlighted in these studies is empathy, a human capacity that is pivotal to understanding the emotional and mental states of others [7]. As innovative humanoid robots, like Ameca, are widening the emotional bandwidth, it is important to bridge the human–robot social divide with a broader range of emotions [8]. Empathy is thus seen as a key factor determining the success of these interactions [9], and it is often divided into two aspects, affective and cognitive [10]. Affective empathy concerns the ability to resonate emotionally with others, while cognitive empathy involves understanding others’ thoughts and emotions, a process that often requires perspective-taking [11]. Recent research has revealed a nuanced relationship between empathy and perceptual face recognition skills. For instance, perspective-taking, a sub-component of cognitive empathy, has been linked to the accuracy of recognizing and dismissing certain emotional faces, and to faster reaction times when discarding faces expressing disgust [12]. Hence, cognitive empathy offers insight into the processes and mechanisms that produce meaningful engagement, thereby contributing to the design of robots that are adept at addressing user needs and adapting to an array of social contexts.

While cognitive empathy in HRI is less explored, its role in perceiving a robot’s life-likeness is significant. How a robot’s animacy influences individuals’ cognitive empathy during HRI is a contested question, with inconsistent findings across studies. On one hand, theories such as simulation theory [13] and group classification theory [14] suggest that a higher degree of robot animacy (that is, a closer resemblance to humans) promotes greater perspective-taking, with individuals more readily adopting the robot’s perspective. Supporting evidence comes from Amorim, Isableu, and Jarraya [15], who found that as an object’s animacy increased, participants became more proficient in reasoning about the object-centered perspective by employing self-centered analogies. Moreover, Carlson et al. [16] showed that when the interactive partner is a robot, an individual tends to assume their own perspective rather than that of the robot. On the other hand, Mori’s uncanny valley theory [17,18] argues that high-animacy robots may cause decreased familiarity and greater emotional distance, leading to reduced perspective-taking. For example, Yu and Zacks [19] found that human-like visual stimuli are more likely to elicit a person’s self-centered perspective, while inanimate objects are more likely to be regarded from the object’s own perspective. Similarly, Zhao, Cusimano, and Malle [20] found that people tend to adopt a robot’s perspective rather than that of a human-like entity when the robot displays nonverbal behaviors. Meanwhile, some recent studies challenge both positions, suggesting that an individual’s perspective-taking does not change regardless of whether they interact with a robot or a human [21]. Given these conflicting views, further research is needed to clarify the impact of robot animacy on cognitive empathy during HRI.

Our study explores the interplay between robot animacy, facial expressions, and participants’ age, aiming for a holistic account. While individual studies have touched upon these elements in isolation, our integrative approach seeks to provide a nuanced understanding that could guide future designs and strategies in HRI. By identifying the synergies and conflicts among these variables, we aspire to set a new benchmark in designing robots that fit seamlessly into the healthcare needs of the aging population. The picture becomes even more complex when considering the influence of positive facial expressions and age. Prior research has shown that positive facial emotional expressions can enhance cognitive empathy [22]. Robot faces with happy expressions are perceived as more animate than their neutral counterparts [23]. Another study found a correlation between facial emotional expression and animacy perception, with robots exhibiting joyful expressions more likely to be perceived as possessing cognition than those bearing neutral expressions [24]. Additionally, neuroimaging evidence indicates that positive social–emotional text stimuli can activate brain regions associated with adopting a third-person perspective, thereby bolstering perspective-taking abilities [25]. However, to the best of our knowledge, no previous research has explored whether positive facial emotional expressions can improve animacy perception and further enhance perspective-taking abilities, a question with significant implications for HRI.

Age greatly influences perspective-taking abilities, which decline as individuals grow older [26,27], possibly due to reduced activity in brain regions associated with tasks that differentiate between self and others’ perspectives [28,29]. Adding to this discourse, a recent study found that adults maintain consistent performance on the director task (DT), a referential-communication measure of perspective-taking, up until their late 30s; thereafter, a decline is observed, partially influenced by individual differences in executive functions [30]. Moreover, further studies have revealed that emotion and animacy can influence the perspective-taking performance of older individuals. In accordance with socio-emotional selectivity theory, older adults exhibit a preference for attending to positive emotions [31]. Additionally, a separate study indicated that older adults are less inclined to observe low-animacy robots [32]. However, previous research has not examined how perceiving animacy and emotional expressions affects perspective-taking abilities in older adults.

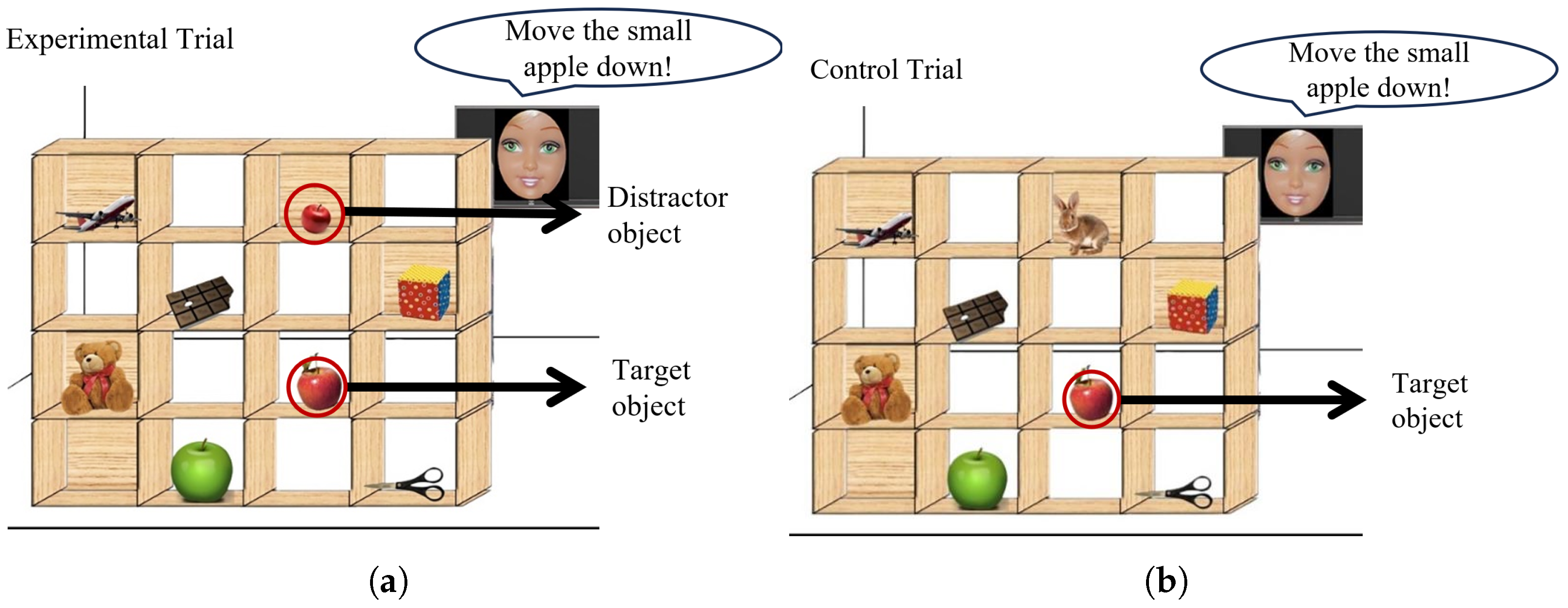

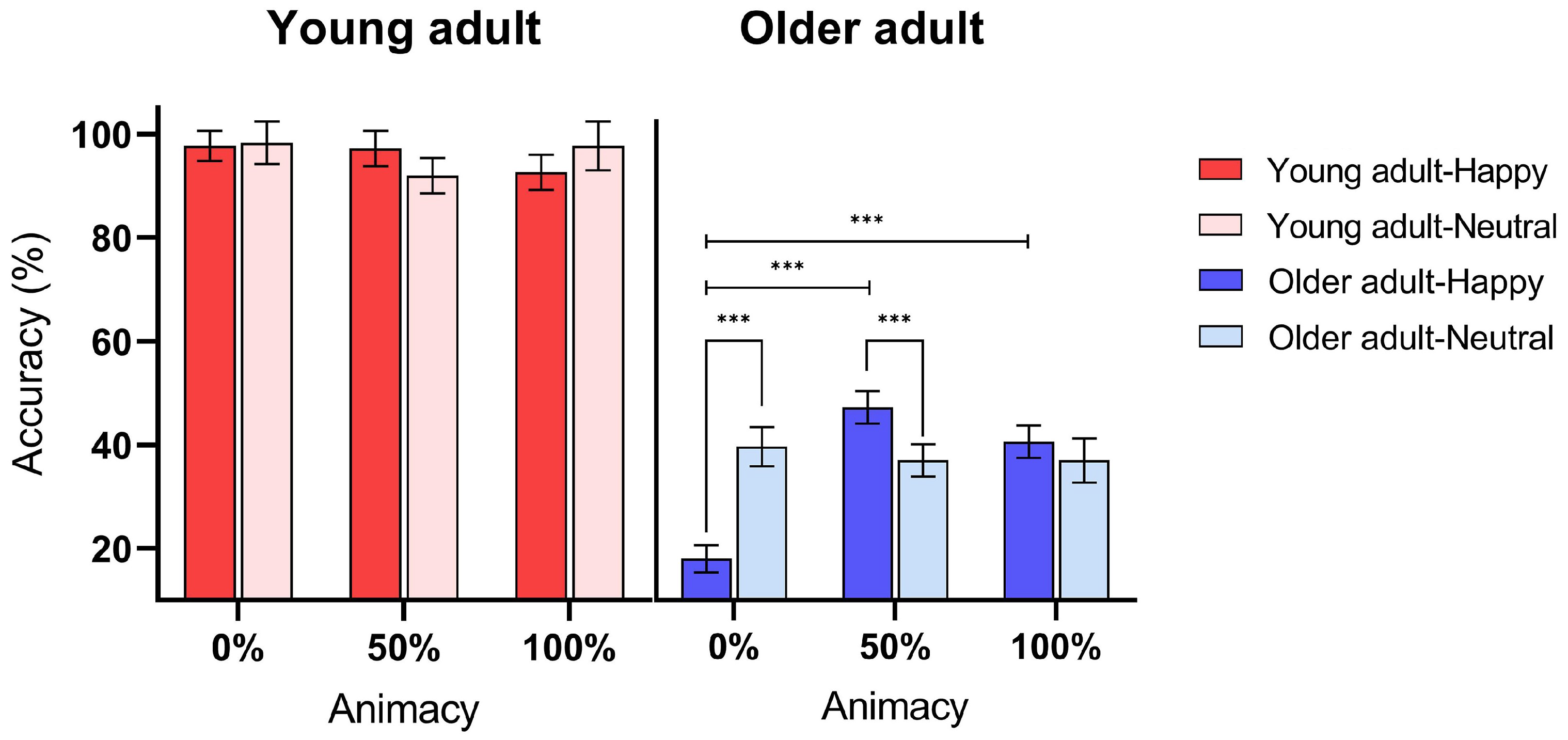

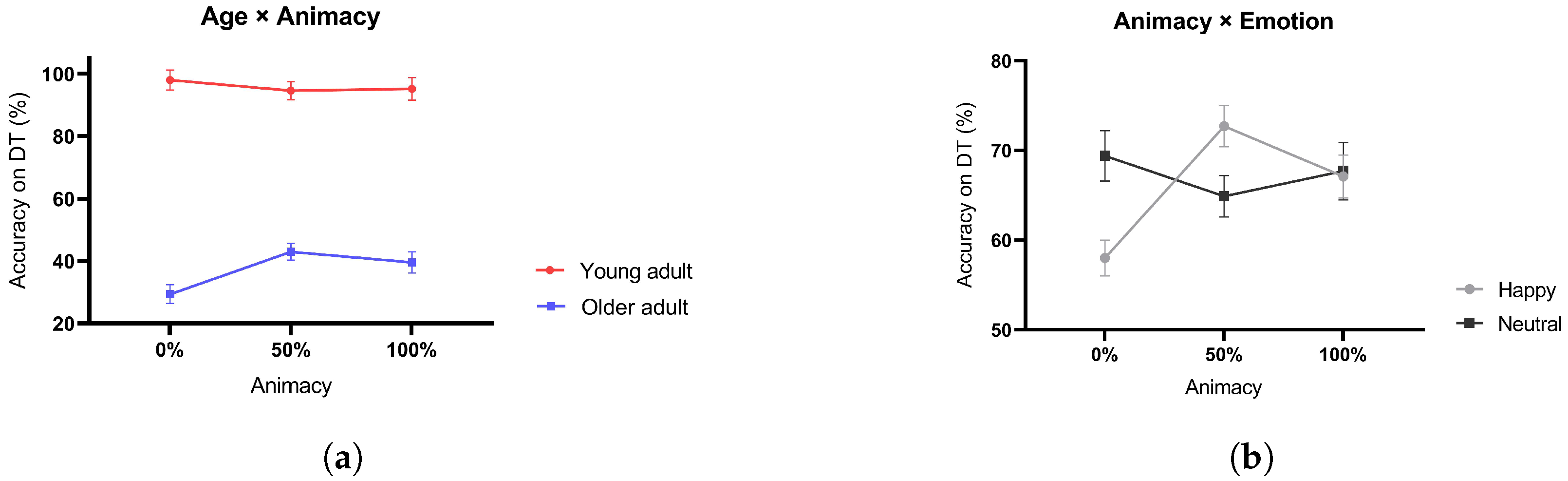

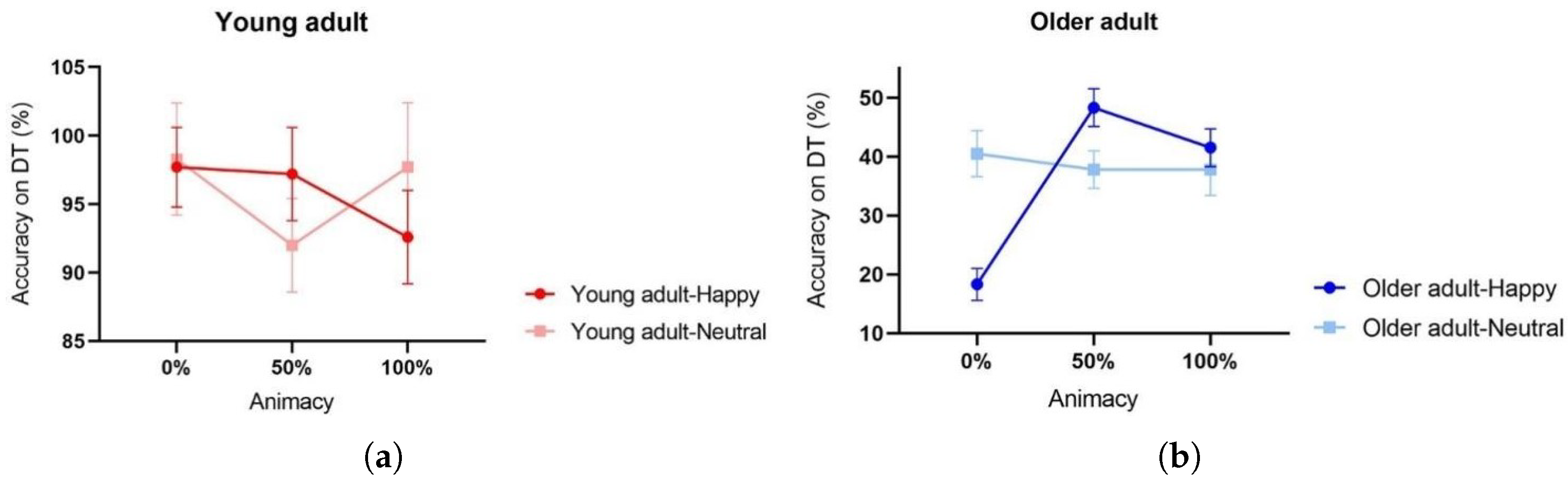

This study addresses the above gaps by investigating how animacy, emotional facial expressions, and age impact perspective-taking in HRI. Our hypotheses are as follows: (1) the more human-like a robot is, the better participants’ perspective-taking toward it will be (following simulation and group classification theories), and (2) positive emotional expressions displayed by a robot will amplify perspective-taking abilities compared to neutral ones, considering the social significance of positive emotions. We test these hypotheses by employing the DT to assess perspective-taking abilities across different age groups, degrees of animacy, and emotional expressions. The experimental results expand and support the group classification theory, offering explanations for the conflicting results observed in the relationship between cognitive empathy and anthropomorphism. Our research is the first to incorporate both age and expression variables, revealing that elderly individuals show a preference for high-anthropomorphic robots while being repelled by low-anthropomorphic robots. Notably, positive expressions further intensify these inclinations, an effect that was previously overlooked; this carries important implications for designing companion robots for the elderly.

5. Conclusions

This study shows that facial animacy and emotional expression substantially affect the perspective-taking abilities of older adults, offering insight into HRI and cognitive empathy. The results suggest that robot faces with high animacy and happy expressions are more likely to elicit effective perspective-taking among the elderly. From a methodological standpoint, jointly examining age, facial animacy, and emotional expression has allowed for a more nuanced and detailed understanding of cognitive empathy and perspective-taking in HRI. This approach helps bridge an essential knowledge gap by offering a comprehensive perspective on how these three factors influence cognitive empathy. Furthermore, these findings hold critical implications for the design of social service robots, particularly those targeting an older demographic. When designing AI and robot interactions for older adults, the influence of animacy and emotional expression should be considered and adjusted according to the specific needs and preferences of the elderly. This is vital for optimizing the user experience of older adults, enhancing their acceptance of robots, and ultimately improving their quality of life.

Because our study was conducted in a controlled environment, investigating human–robot interactions in real-world settings, such as homes or healthcare facilities, is a key next step. It is crucial to understand how everyday contexts influence these interactions. Moreover, as AI advances, it will be essential to study how enhanced algorithms in humanoid robots affect the perceptions of older adults. Such research would help fine-tune interactions to suit the specific needs of the elderly.