Promoting Complex Problem Solving by Introducing Schema-Governed Categories of Key Causal Models

Abstract

1. Introduction

2. Teaching Relational Reasoning

3. Differences between Novices and Experts’ Knowledge Organization

4. Learning Relational Categories

5. Effects of Learning Schema-Governed Categories on Complex Problem Solving

6. Current Study

Research Question and Hypotheses

7. Methods

7.1. Participants

7.2. Design and Procedure

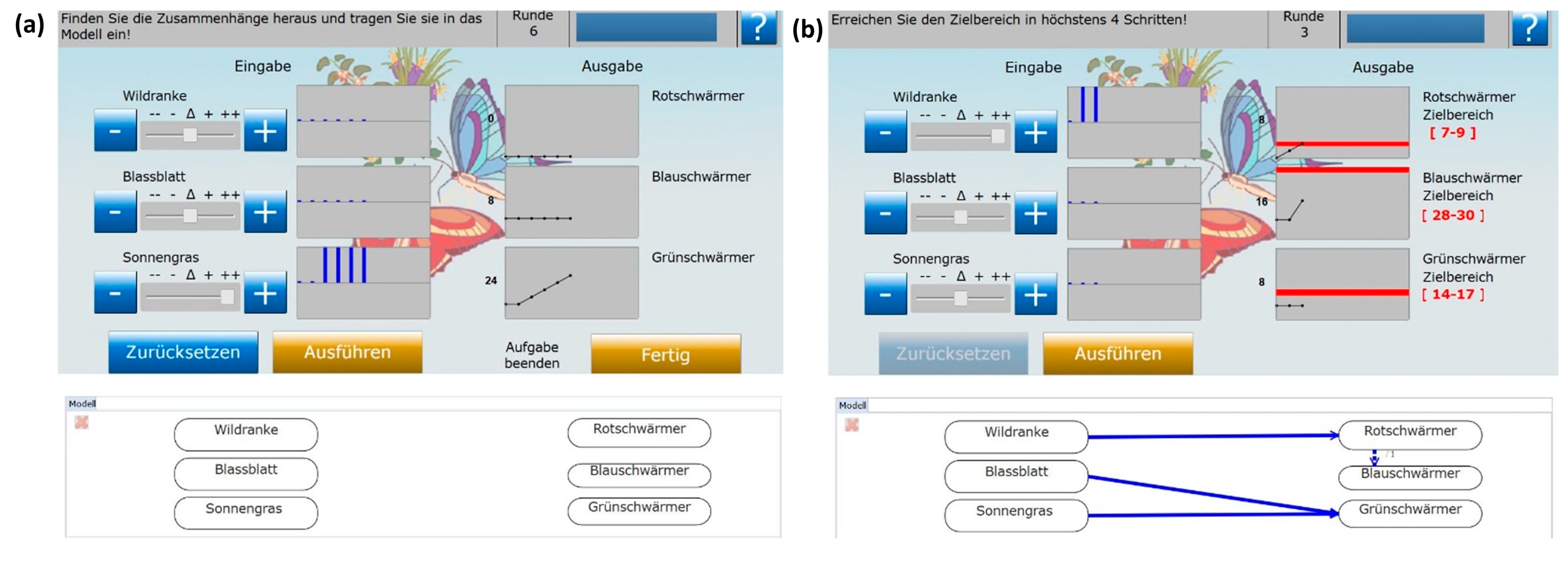

7.3. Material

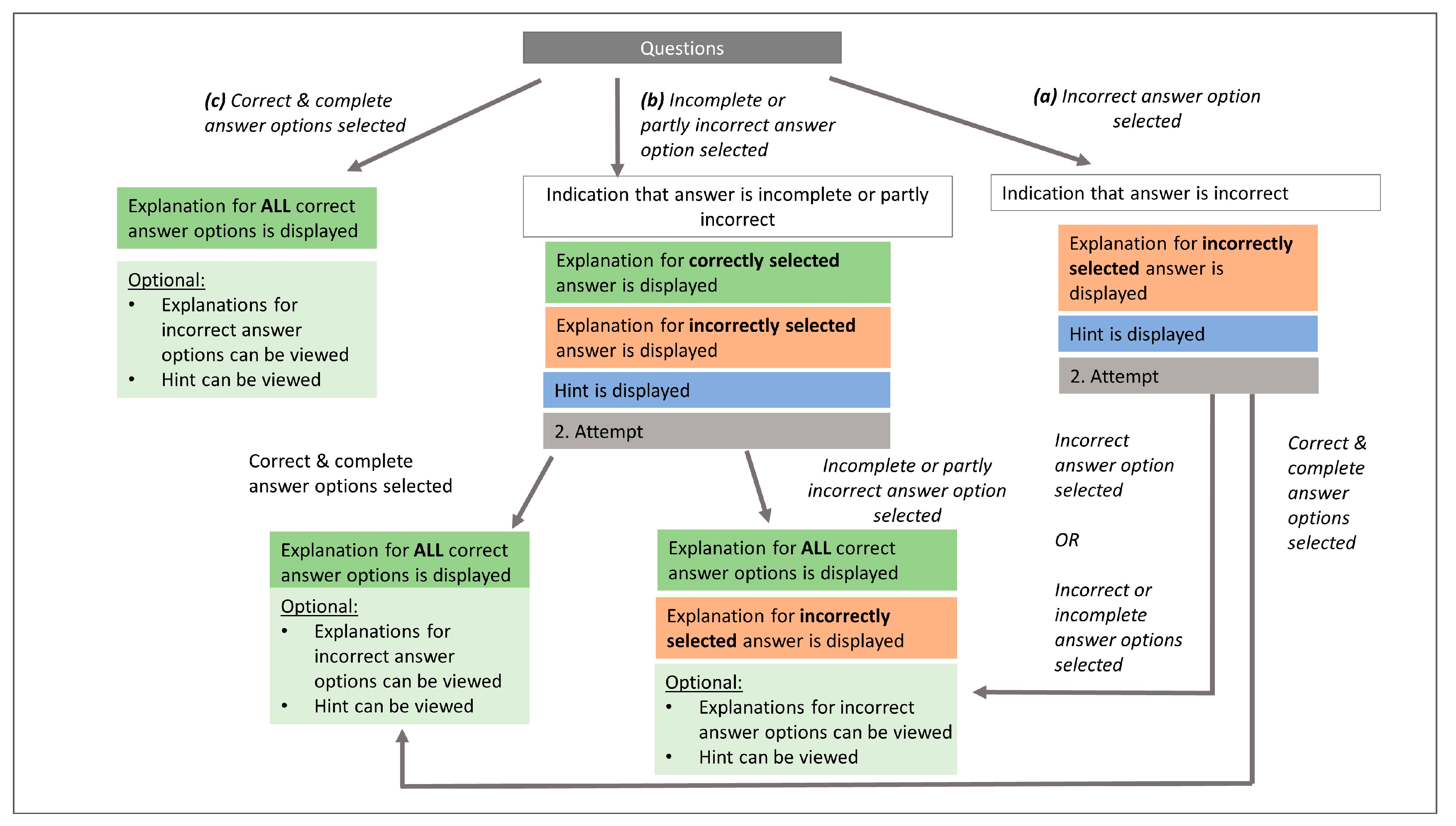

- (a) If the answer was incorrect, the participants were told so and received an explanation of why it was incorrect. They were also given a hint pointing to relevant aspects of the question, intended to help them overcome deficient understanding or incorrect conceptions and correct the mistake on their own in a second attempt. After the second attempt, the participants were again informed whether their answer was correct. This time, however, they also received corrective feedback in the form of the correct solution and an explanation for each answer option.

- (b) If the answer was incomplete or partly wrong (i.e., at least one correct and one incorrect answer option was chosen), the participants were told so. For each correct answer option, they received an explanation of why it was correct, and for each incorrect answer option, an explanation of why it was incorrect. They also received a hint on important aspects of the question and could then proceed to a second attempt. After the second attempt, the participants were informed whether their answer was correct. This time, however, they also received corrective feedback in the form of the correct solution and an explanation for each answer option.

- (c) If the answer was correct, the participants were told so and received a short explanation of why. Interested participants could also access the explanations of all answer options as well as the hint. When they had finished reading, they could proceed to the next question.
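The three feedback branches (a) to (c) can be read as a small decision procedure. The following Python sketch is only an illustration of that logic, not the software used in the study: the names `Outcome`, `classify`, and `feedback_components` are hypothetical, and the rule that any overlap with the answer key short of an exact match counts as "incomplete or partly wrong" is an assumption made to cover both readings of that phrase.

```python
from enum import Enum
from typing import List, Set


class Outcome(Enum):
    CORRECT = "correct"
    PARTIAL = "incomplete or partly wrong"
    INCORRECT = "incorrect"


def classify(chosen: Set[str], key: Set[str]) -> Outcome:
    """Classify a multiple-choice answer against the answer key.

    Assumption: an exact match is correct, any other overlap with the key
    is treated as incomplete or partly wrong, and no overlap is incorrect.
    """
    if chosen == key:
        return Outcome.CORRECT
    if chosen & key:
        return Outcome.PARTIAL
    return Outcome.INCORRECT


def feedback_components(outcome: Outcome, attempt: int) -> List[str]:
    """Return the feedback elements shown after a given attempt (1 or 2)."""
    if attempt == 2:
        # After the second attempt: verdict plus full corrective feedback.
        return ["verdict", "correct solution", "explanation for each option"]
    if outcome is Outcome.CORRECT:
        # Branch (c): short explanation; the rest is available on request.
        return ["verdict", "short explanation",
                "optional: explanations of all options", "optional: hint"]
    if outcome is Outcome.PARTIAL:
        # Branch (b): explanations for correct and incorrect options, plus hint.
        return ["verdict", "why correct options are correct",
                "why incorrect options are incorrect", "hint"]
    # Branch (a): explanation of the error, plus hint.
    return ["verdict", "why the answer is incorrect", "hint"]


def gets_second_attempt(outcome: Outcome, attempt: int) -> bool:
    """A second attempt follows only a first attempt that was not fully correct."""
    return attempt == 1 and outcome is not Outcome.CORRECT
```

For example, with a hypothetical key `{"A", "B"}`, choosing `{"A", "C"}` falls under branch (b), so the participant would see a hint and be offered a second attempt.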

8. Results

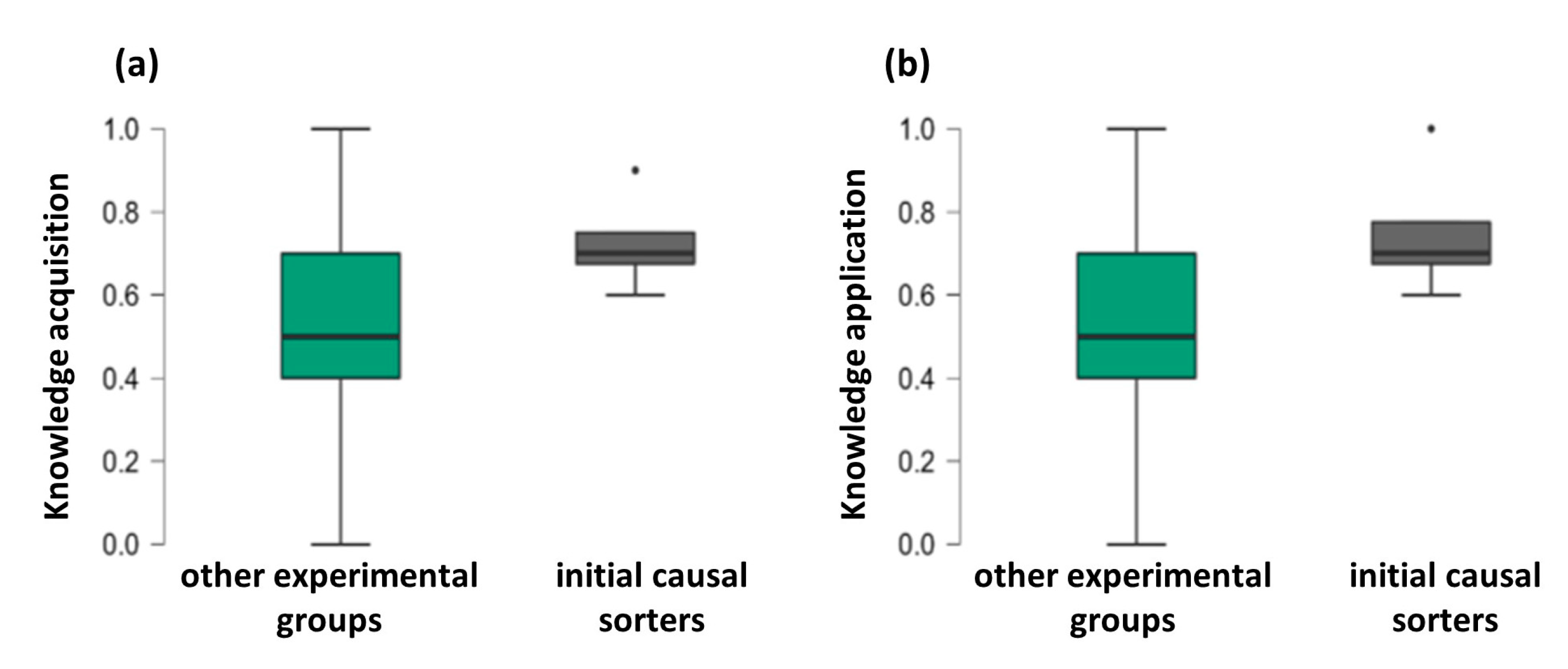

8.1. Initial Causal Sorters

8.2. Changes in the Ability to Detect Key Causal Models after Intervention and/or Tutorial

8.3. Complex Problem-Solving Performance across Experimental Groups

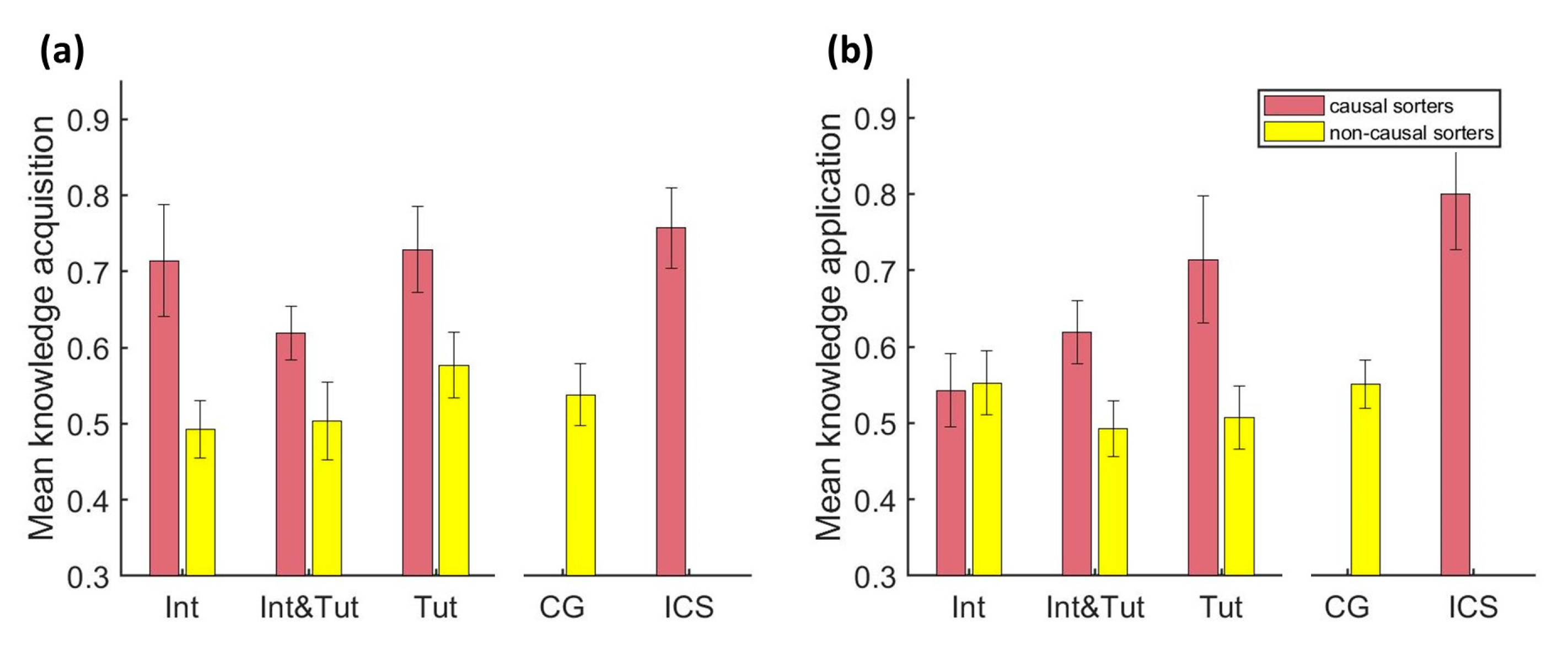

8.4. Complex Problem-Solving Performance of Causal Sorters versus Non-Causal Sorters in the Treatment Groups

8.5. Complex Problem-Solving Performance of Causal Sorters versus Baseline Conditions CG and Initial Causal Sorters

8.6. Performance in the Card Sorting Task of the Tutorial

9. Discussion

Limitations and Outlook

10. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kessler, F.; Proske, A.; Urbas, L.; Goldwater, M.; Krieger, F.; Greiff, S.; Narciss, S. Promoting Complex Problem Solving by Introducing Schema-Governed Categories of Key Causal Models. Behav. Sci. 2023, 13, 701. https://doi.org/10.3390/bs13090701
