Race, Gender, and the U.S. Presidency: A Comparison of Implicit and Explicit Biases in the Electorate

Abstract

1. Introduction and Theoretical Background

2. Research Questions

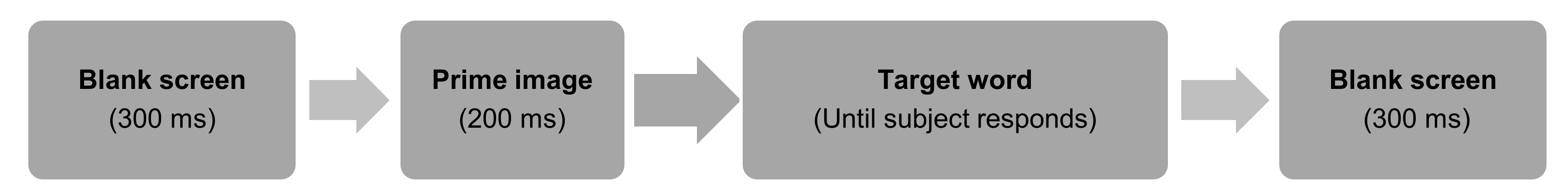

3. Method

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Appendix A

Appendix B

- Discrimination against blacks is no longer a problem in the United States.

- It is easy to understand the anger of black people in America.*

- Blacks have more influence upon school desegregation plans than they ought to have.

- Blacks are getting too demanding in their push for equal rights.

- Blacks should not push themselves where they are not wanted.

- Over the past few years, blacks have gotten more economically than they deserve.

- Over the past few years, the government and news media have shown more respect to blacks than they deserve.

- Discrimination against women in the labor force is no longer a problem in the U.S.

- I consider the present employment system to be unfair to women.

- Women shouldn’t push themselves where they are not wanted.

- Women will make more progress by being patient and not pushing too hard for change.

- It is difficult to work for a female boss.

- Women’s requests in terms of equality between the sexes are simply exaggerated.

- Over the past few years, women have gotten more from the government than they deserve.

- Universities are wrong to admit women in costly programs such as medicine, when in fact a large number will leave their jobs after a few years to raise their children.

- In order not to appear sexist, many men are inclined to overcompensate women.

- Due to social pressures, firms frequently have to hire underqualified women.

- In a fair employment system, men and women would be considered equal.*

| Characteristic | Percentage (%) |
|---|---|
| Female | 65 |
| Black | 34 |
| Other race | 6 |
| Age 41–60 | 42 |
| Age 61+ | 14 |
| Some college | 42 |
| College degree | 33 |
| Graduate degree | 10 |
| 2nd income quartile | 21 |
| 3rd income quartile | 28 |
| 4th income quartile | 21 |

| | Conservative Identity (z-Score) | Conservative Identity (z-Score) | Conservative Identity (z-Score) | Conservative Identity (z-Score) | Conservative Identity (z-Score) |
|---|---|---|---|---|---|
| Panel A | | | | | |
| Implicit bias against females for undesirable traits (z-score) | −0.02 | −0.02 | | | |
| Implicit bias against females for desirable traits (z-score) | 0.03 | 0.01 | | | |
| Explicit sexism (z-score) | 0.35 *** | 0.35 *** | 0.35 *** | | |
| R² | 0.11 | 0.11 | 0.21 | 0.21 | 0.21 |
| Panel B | | | | | |
| Implicit bias against blacks for undesirable traits (z-score) | 0.01 | −0.02 | | | |
| Implicit bias against blacks for desirable traits (z-score) | 0.08 ** | 0.07 * | | | |
| Explicit racism (z-score) | 0.34 *** | 0.34 *** | 0.33 *** | | |
| R² | 0.11 | 0.11 | 0.19 | 0.19 | 0.20 |

| | Republican Identity | Republican Identity | Republican Identity | Republican Identity | Republican Identity |
|---|---|---|---|---|---|
| Panel A | | | | | |
| Implicit bias against females for undesirable traits (z-score) | −5.0 | 4.3 | | | |
| Implicit bias against females for desirable traits (z-score) | 4.4 | 1.9 | | | |
| Explicit sexism (z-score) | 27.9 *** | 27.8 *** | 27.7 *** | | |
| CCC (%) | 71.4 | 71.6 | 77.2 | 77.2 | 76.9 |
| Panel B | | | | | |
| Implicit bias against blacks for undesirable traits (z-score) | −1.4 | −3.2 | | | |
| Implicit bias against blacks for desirable traits (z-score) | 6.8 * | 5.3 * | | | |
| Explicit racism (z-score) | 31.2 *** | 31.5 *** | 30.7 *** | | |
| CCC (%) | 70.2 | 71.4 | 77.0 | 77.2 | 77.0 |

| | Voted Romney | Voted Romney | Voted Romney | Voted Romney | Voted Romney |
|---|---|---|---|---|---|
| Panel A | | | | | |
| Implicit bias against females for undesirable traits (z-score) | −5.1 | −5.0 | | | |
| Implicit bias against females for desirable traits (z-score) | 5.6 * | 4.0 | | | |
| Explicit sexism (z-score) | 23.7 *** | 23.7 *** | 23.3 *** | | |
| CCC (%) | 72.7 | 73.7 | 77.2 | 77.0 | 77.7 |
| Panel B | | | | | |
| Implicit bias against blacks for undesirable traits (z-score) | −1.0 | −2.0 | | | |
| Implicit bias against blacks for desirable traits (z-score) | 7.5 ** | 6.1 * | | | |
| Explicit racism (z-score) | 31.4 *** | 31.5 *** | 30.9 *** | | |
| CCC (%) | 71.7 | 73.1 | 78.1 | 78.7 | 78.0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Calvert, G.A.; Evans, G.; Pathak, A. Race, Gender, and the U.S. Presidency: A Comparison of Implicit and Explicit Biases in the Electorate. Behav. Sci. 2022, 12, 17. https://doi.org/10.3390/bs12010017
