Results from the First Phase of the Seafloor Backscatter Processing Software Inter-Comparison Project

Abstract

1. Introduction

2. Materials and Methods

2.1. Selection of Test Backscatter Data

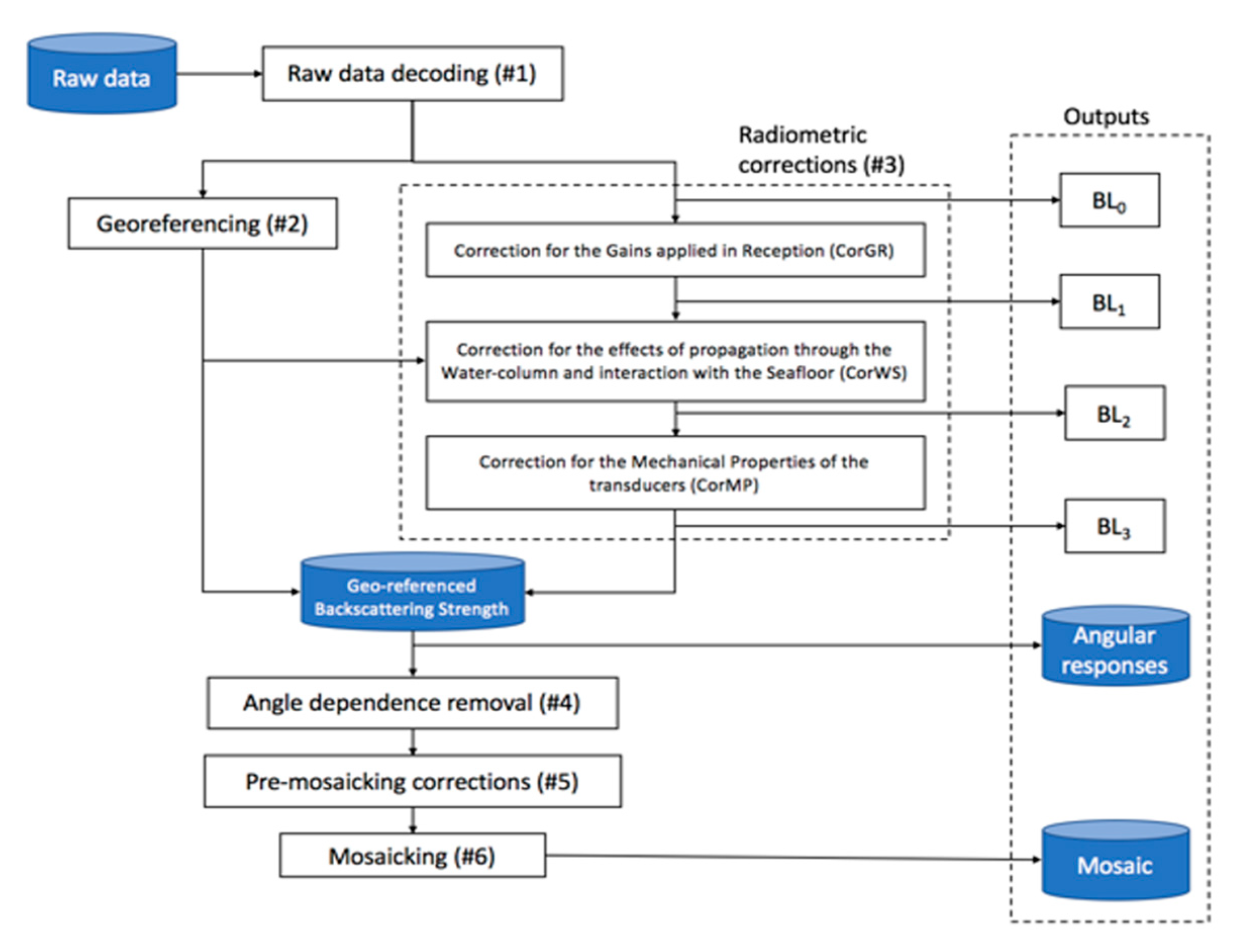

2.2. Selection of Intermediate Processed Backscatter Levels

2.2.1. BL0: The Backscatter Level as Read in the Raw Files

2.2.2. BL3: The Backscatter Level After Radiometric Corrections but Before Compensation for Angular Dependence

2.3. Data Processing by Software Developers

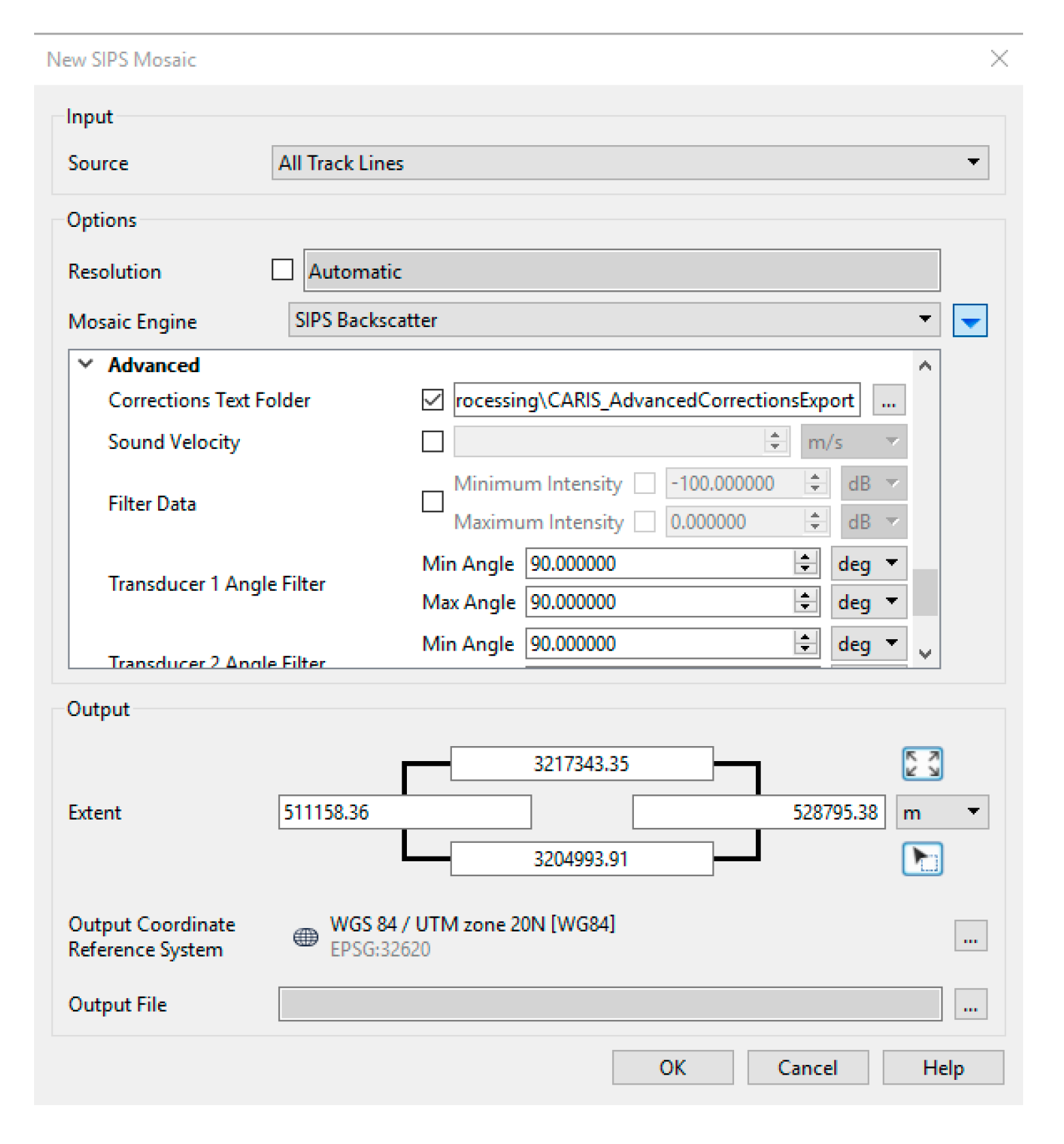

2.3.1. CARIS SIPS Backscatter Processing Workflow

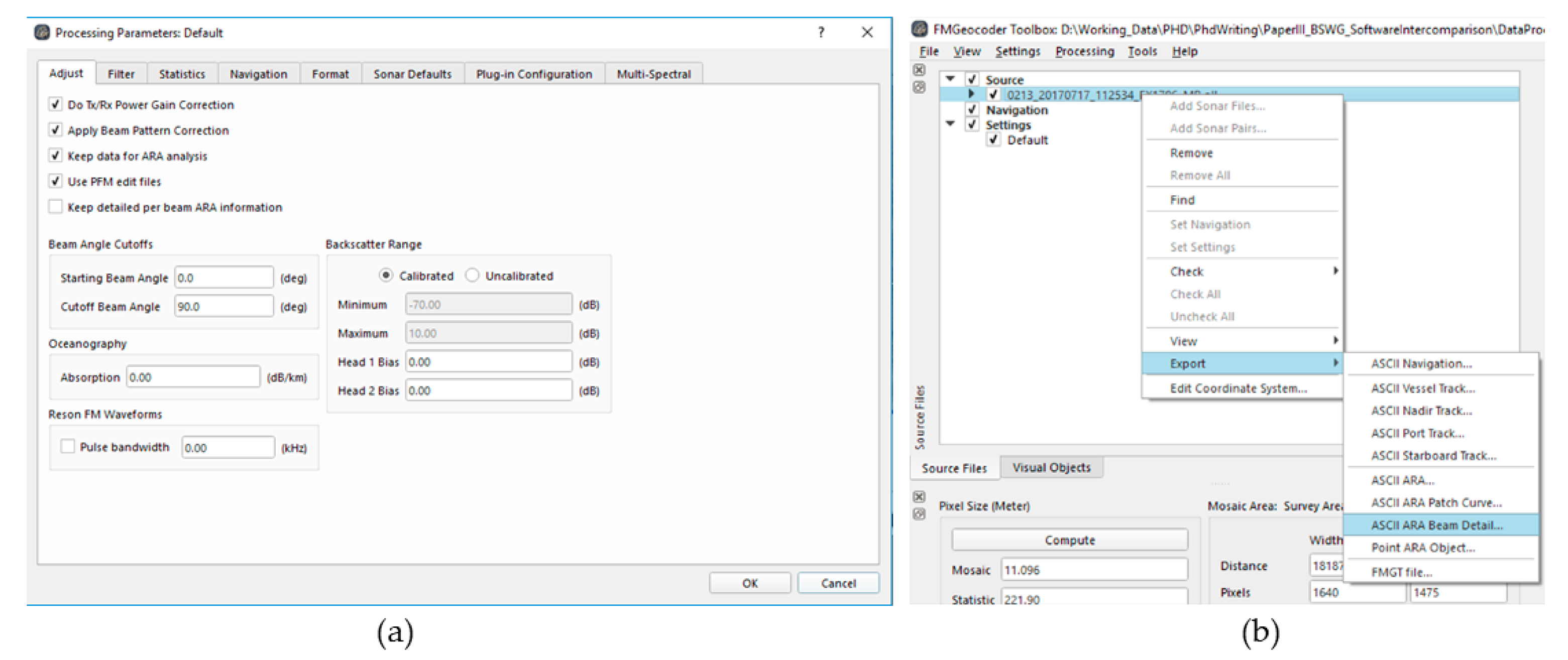

2.3.2. FMGT Backscatter Processing Workflow

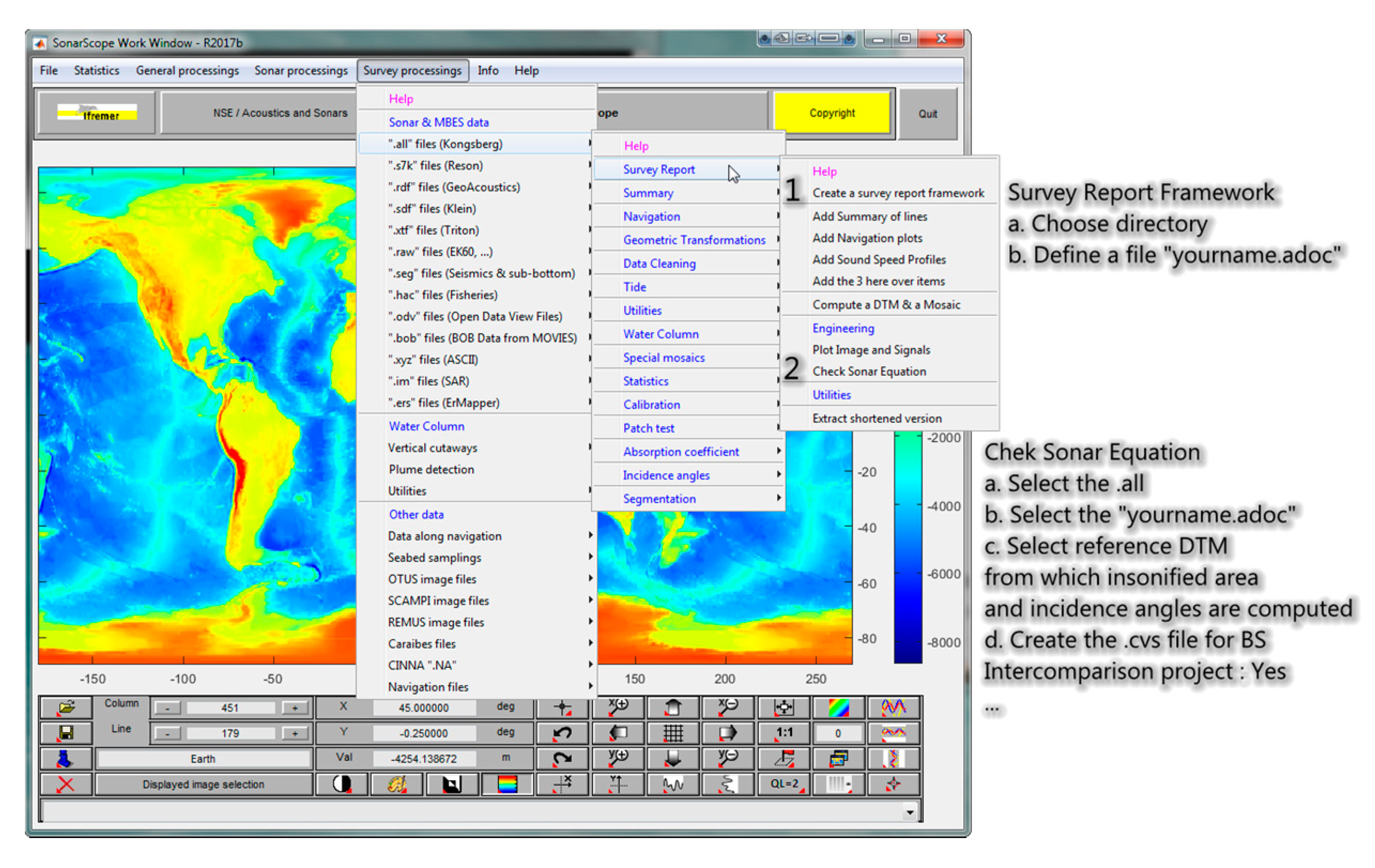

2.3.3. SonarScope Data Processing Workflow

2.3.4. MB Process Data Processing and SONAR2MAT Data Conversion

3. Results

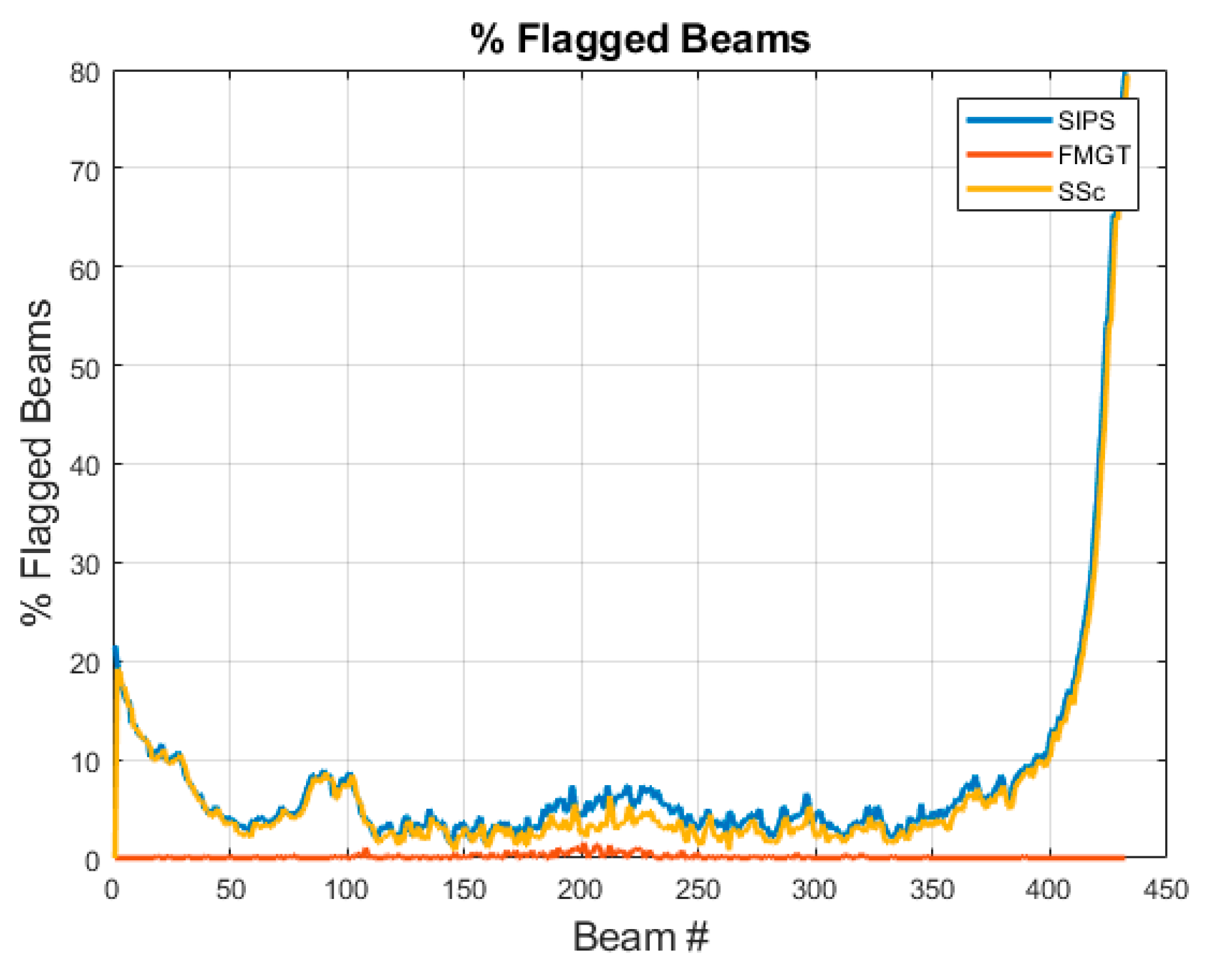

3.1. Flagged Invalid Beams

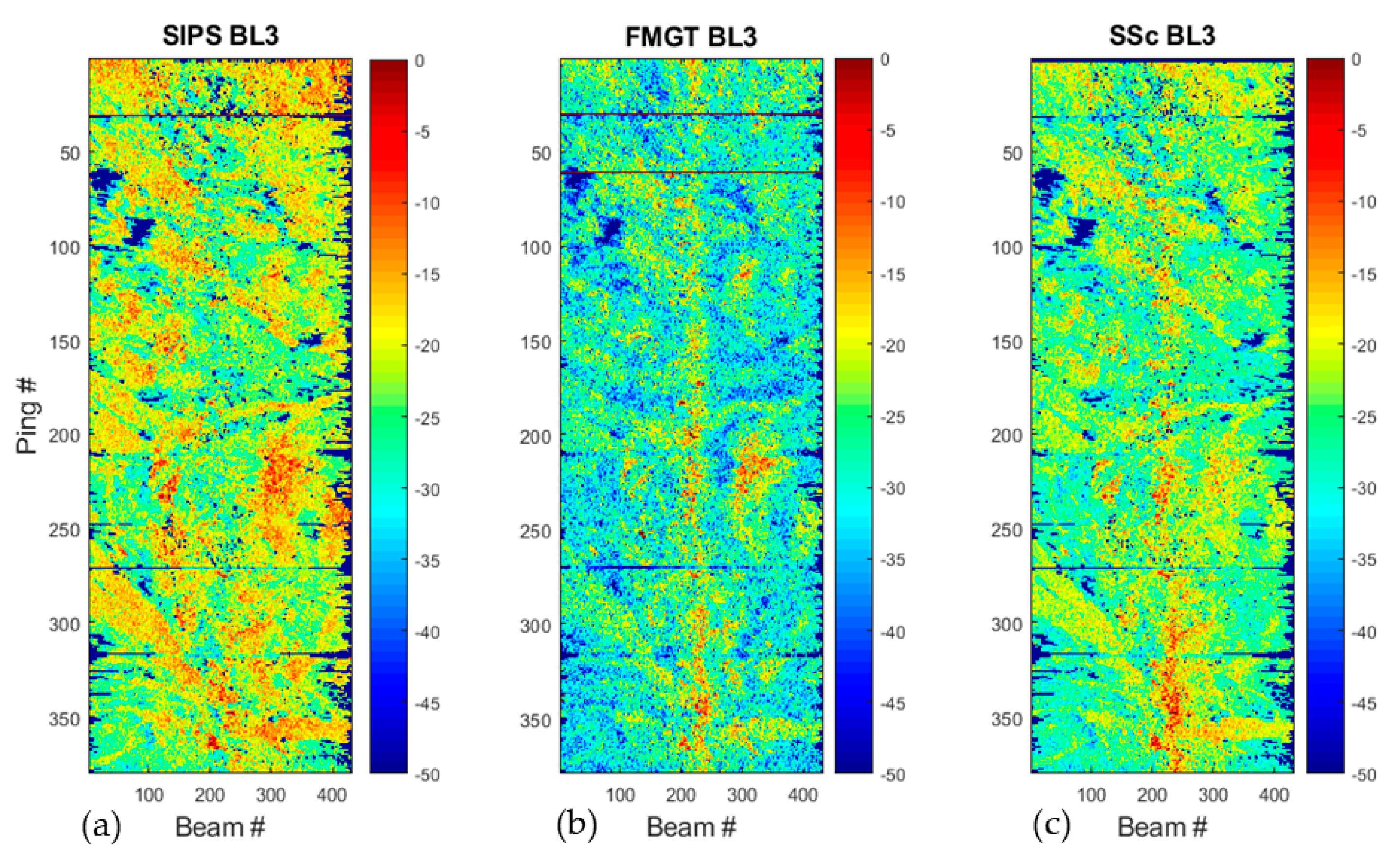

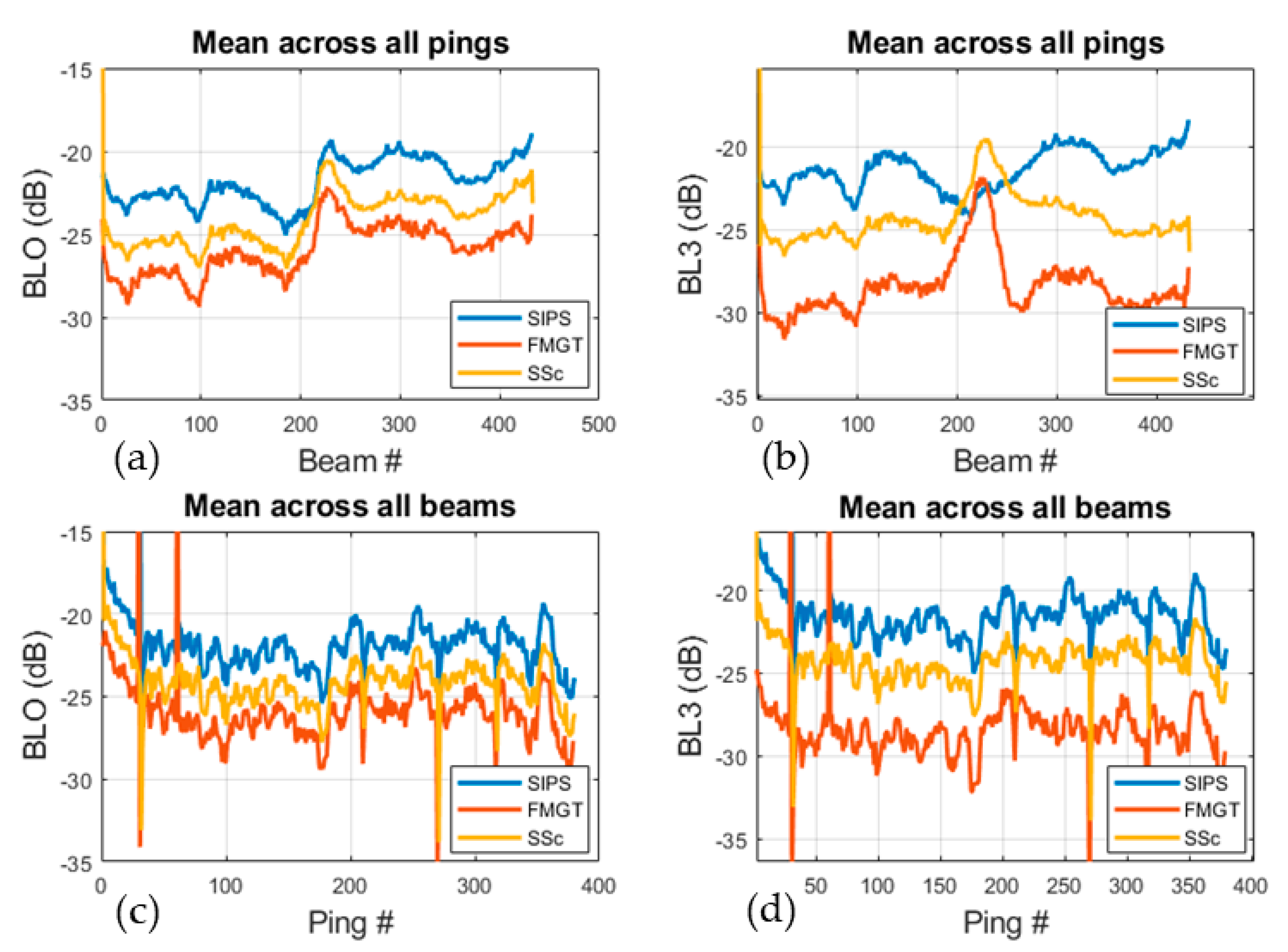

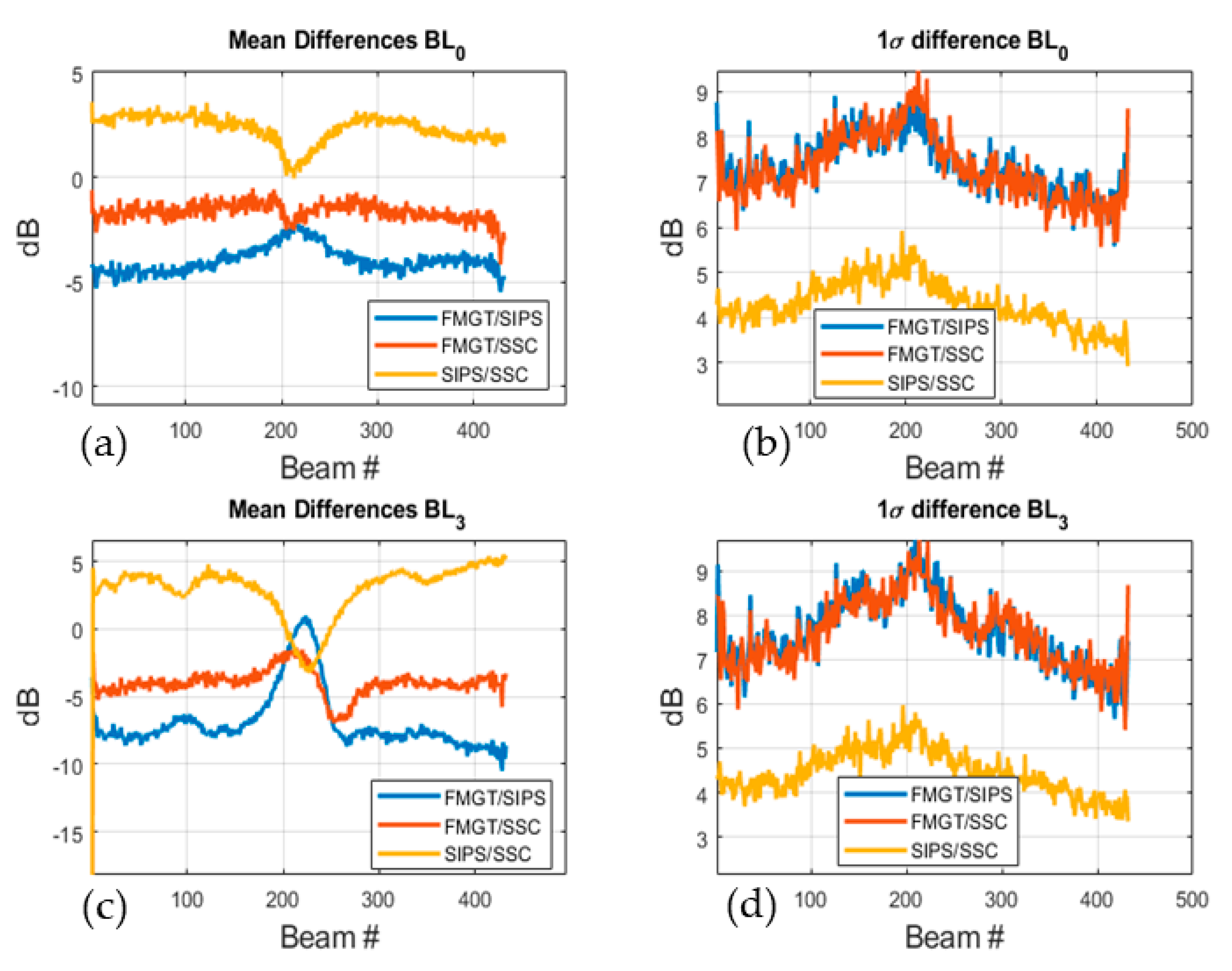

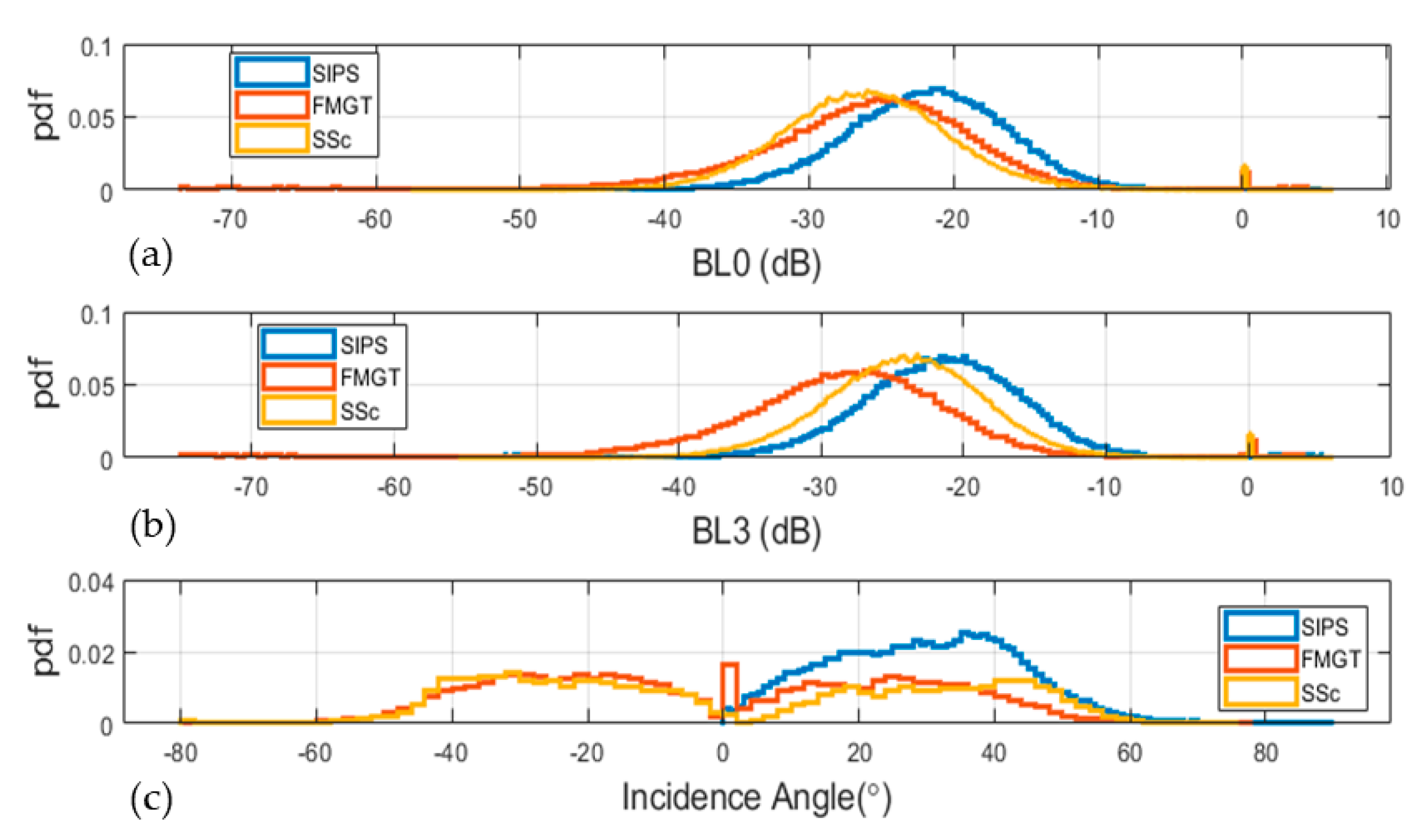

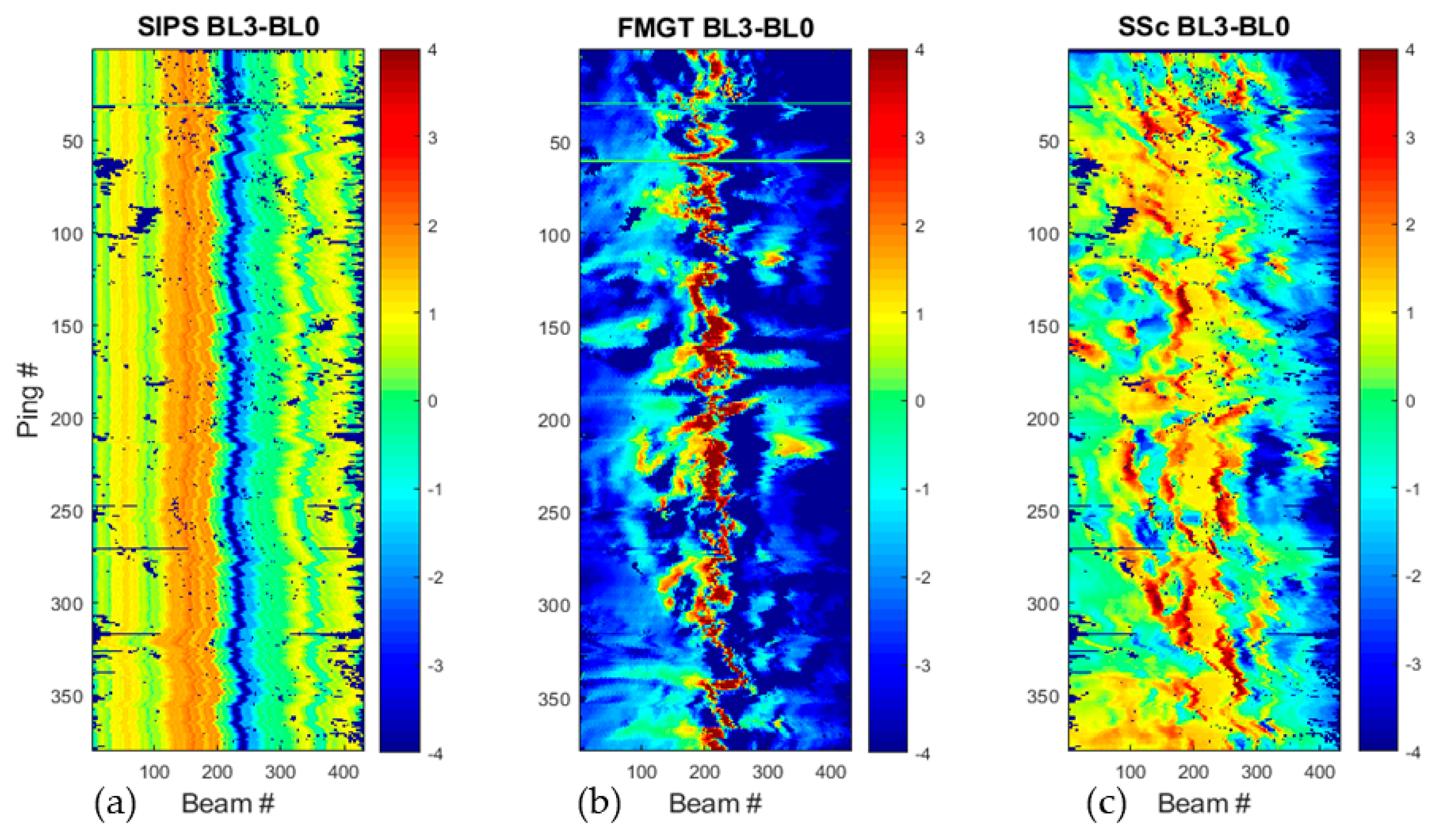

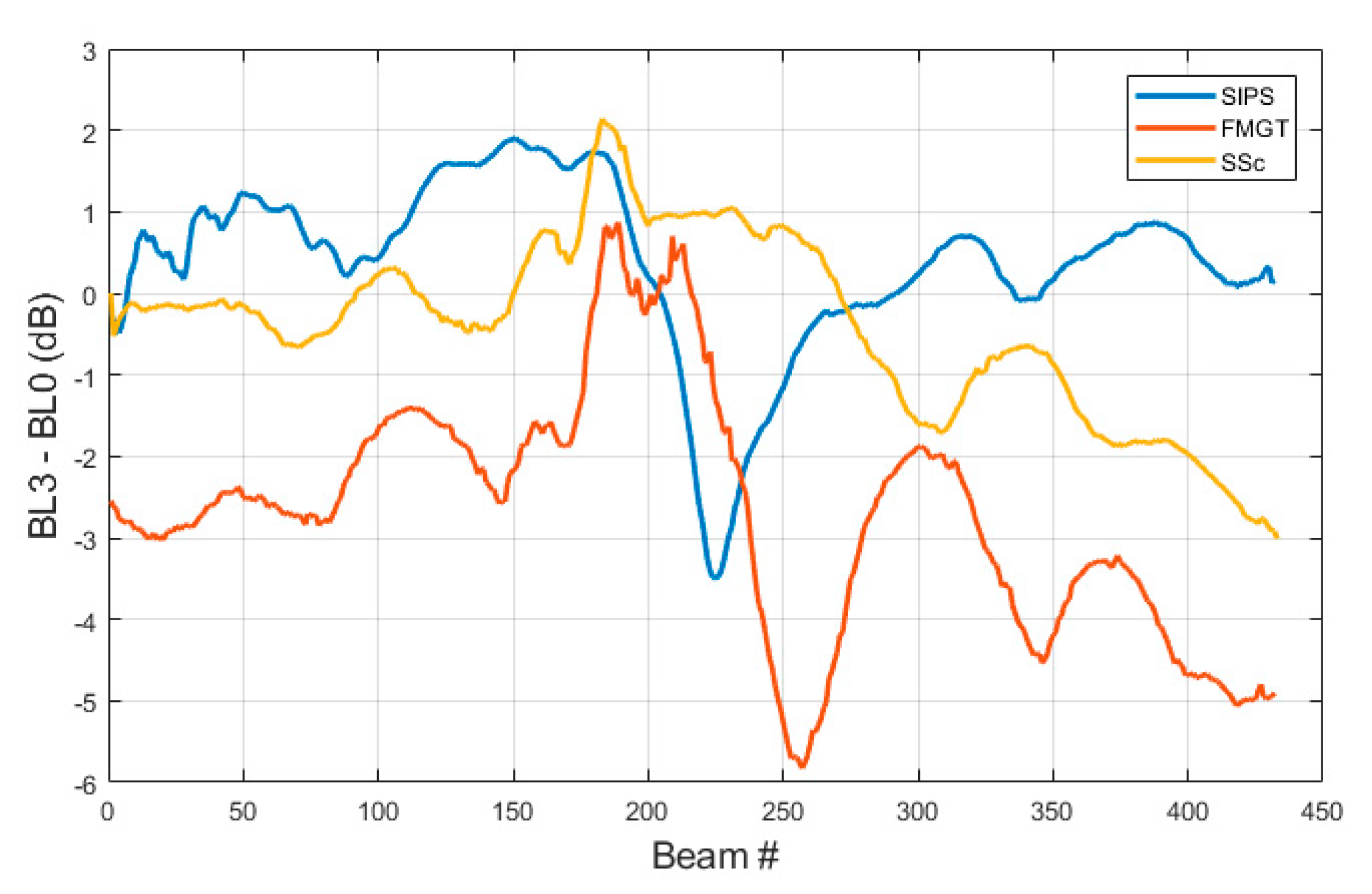

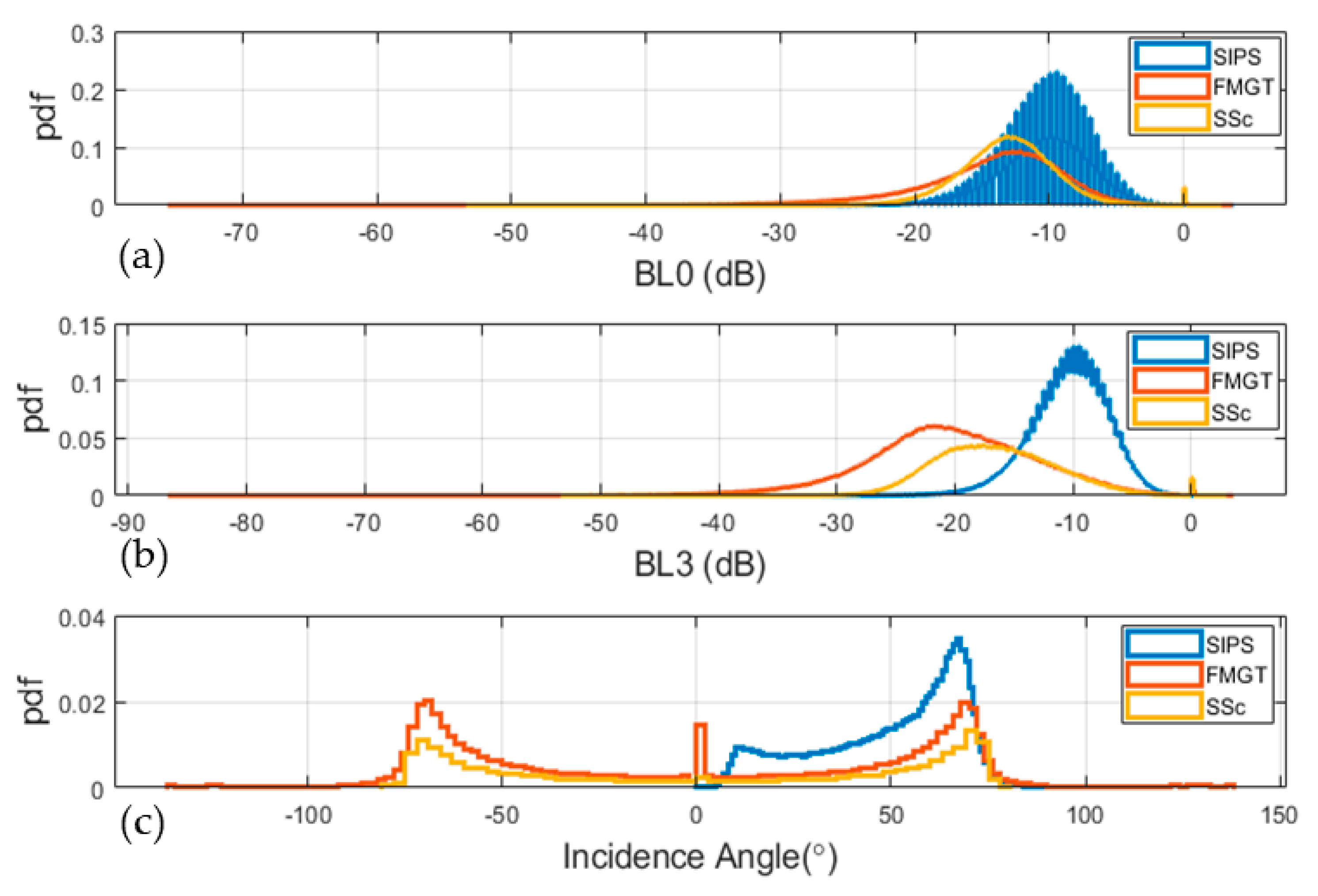

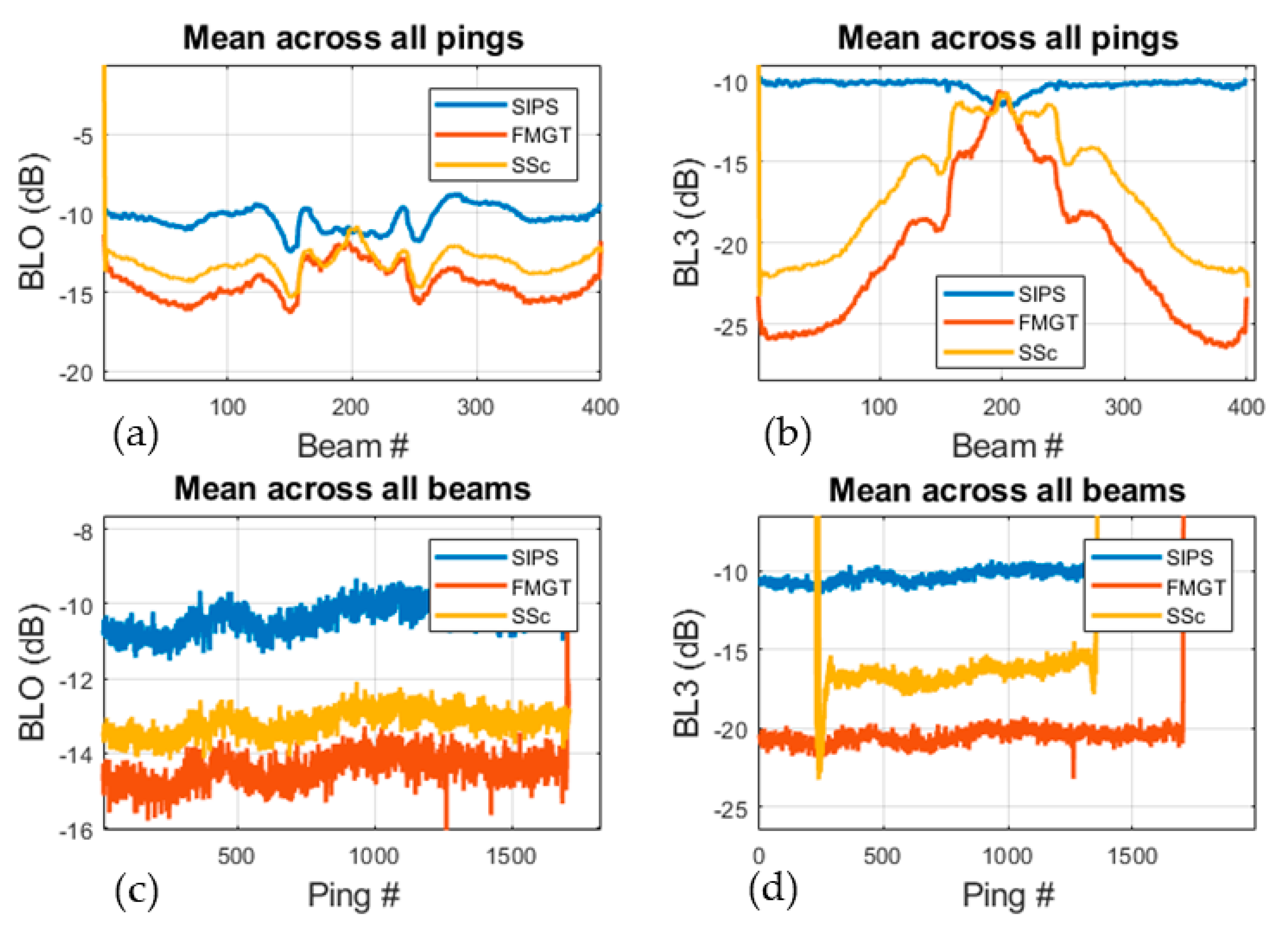

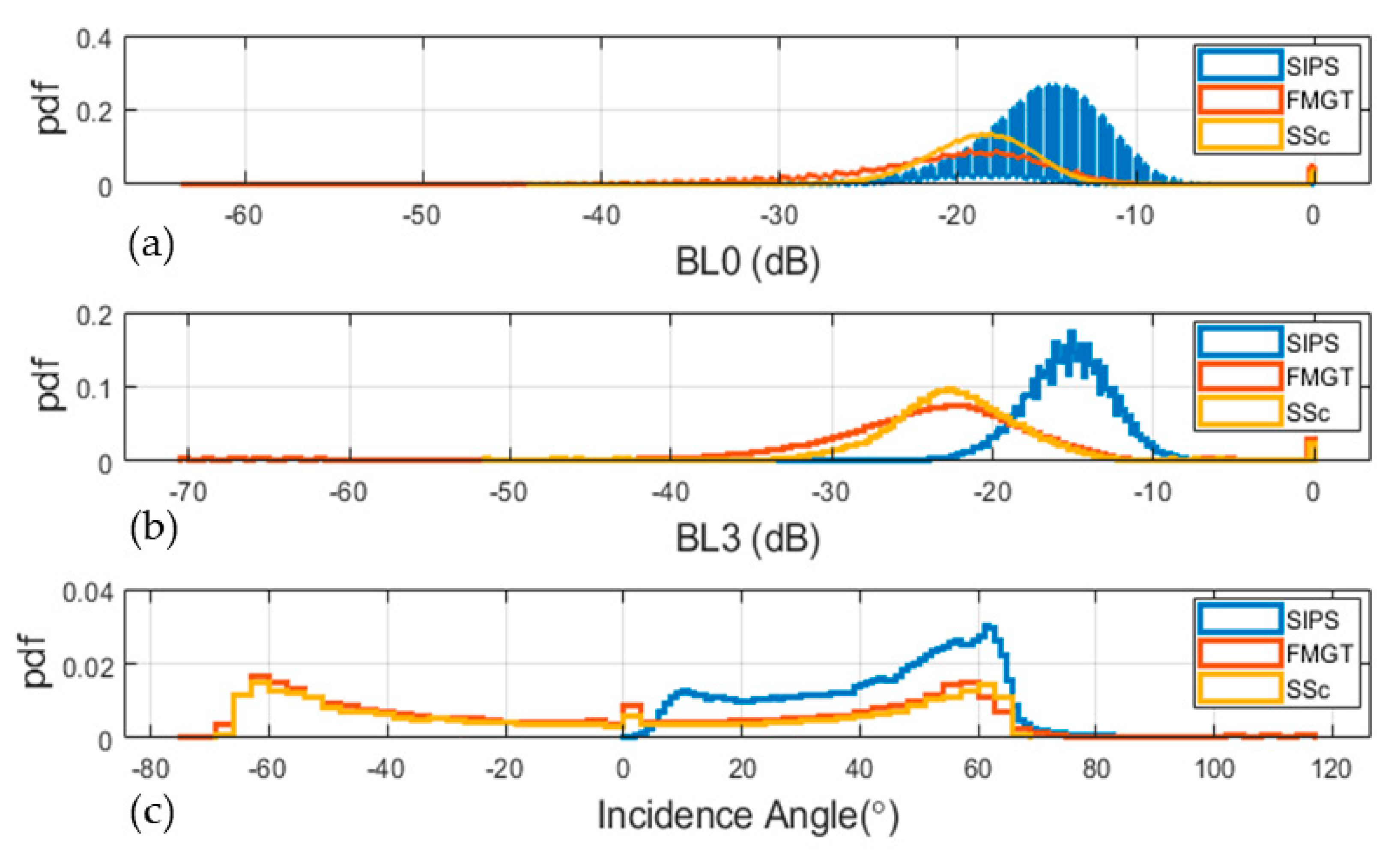

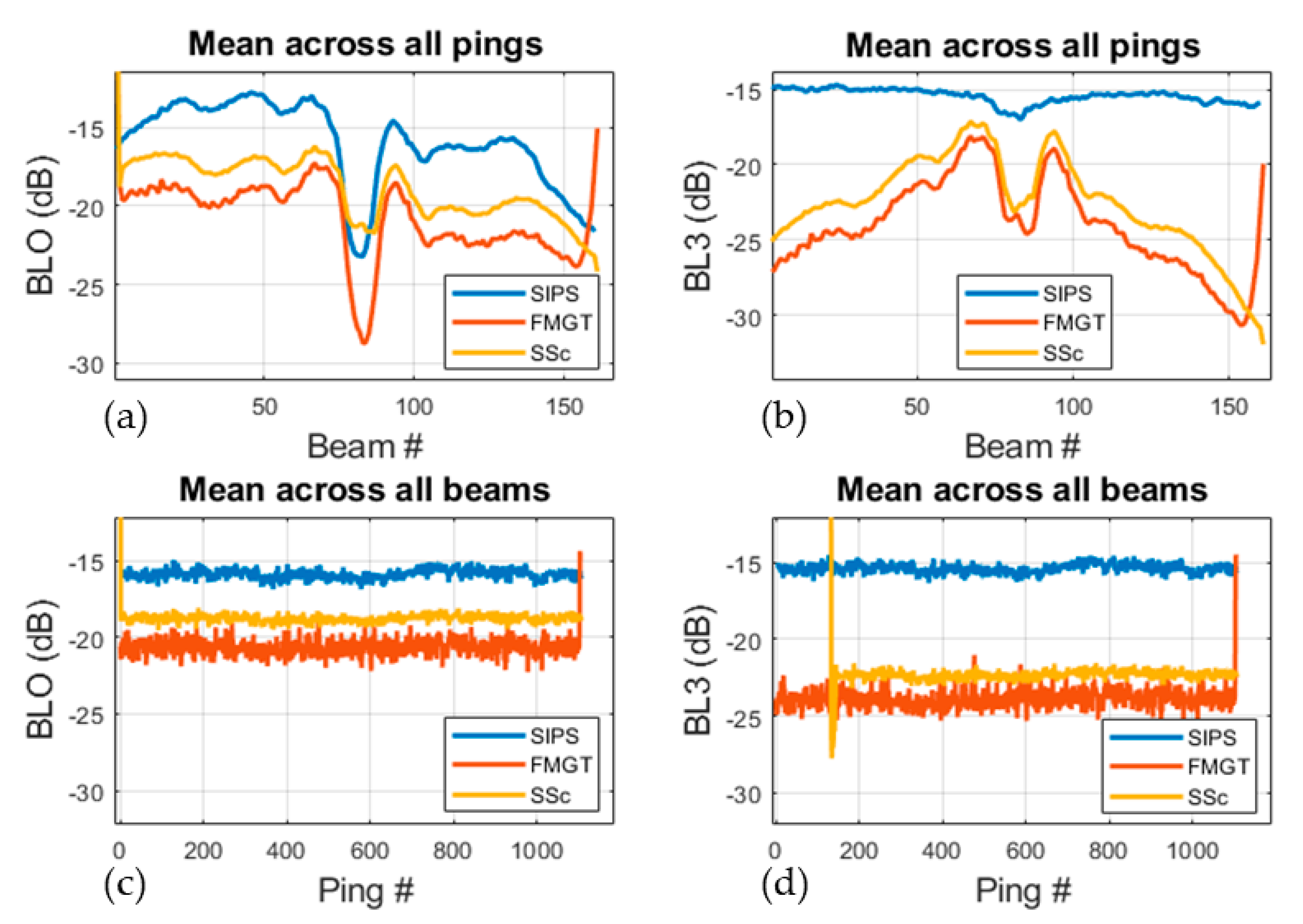

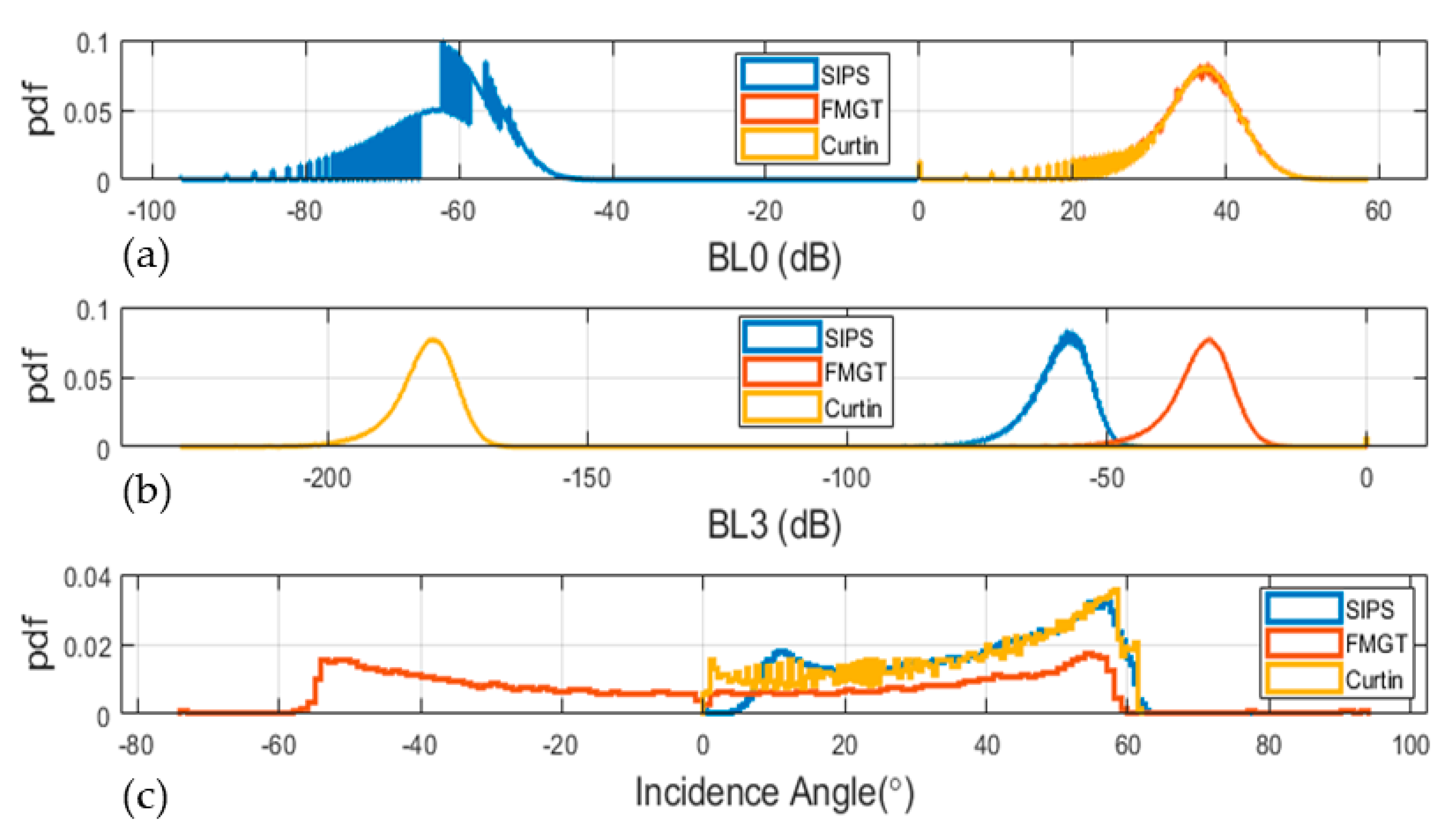

3.2. Comparison of BL0 and BL3

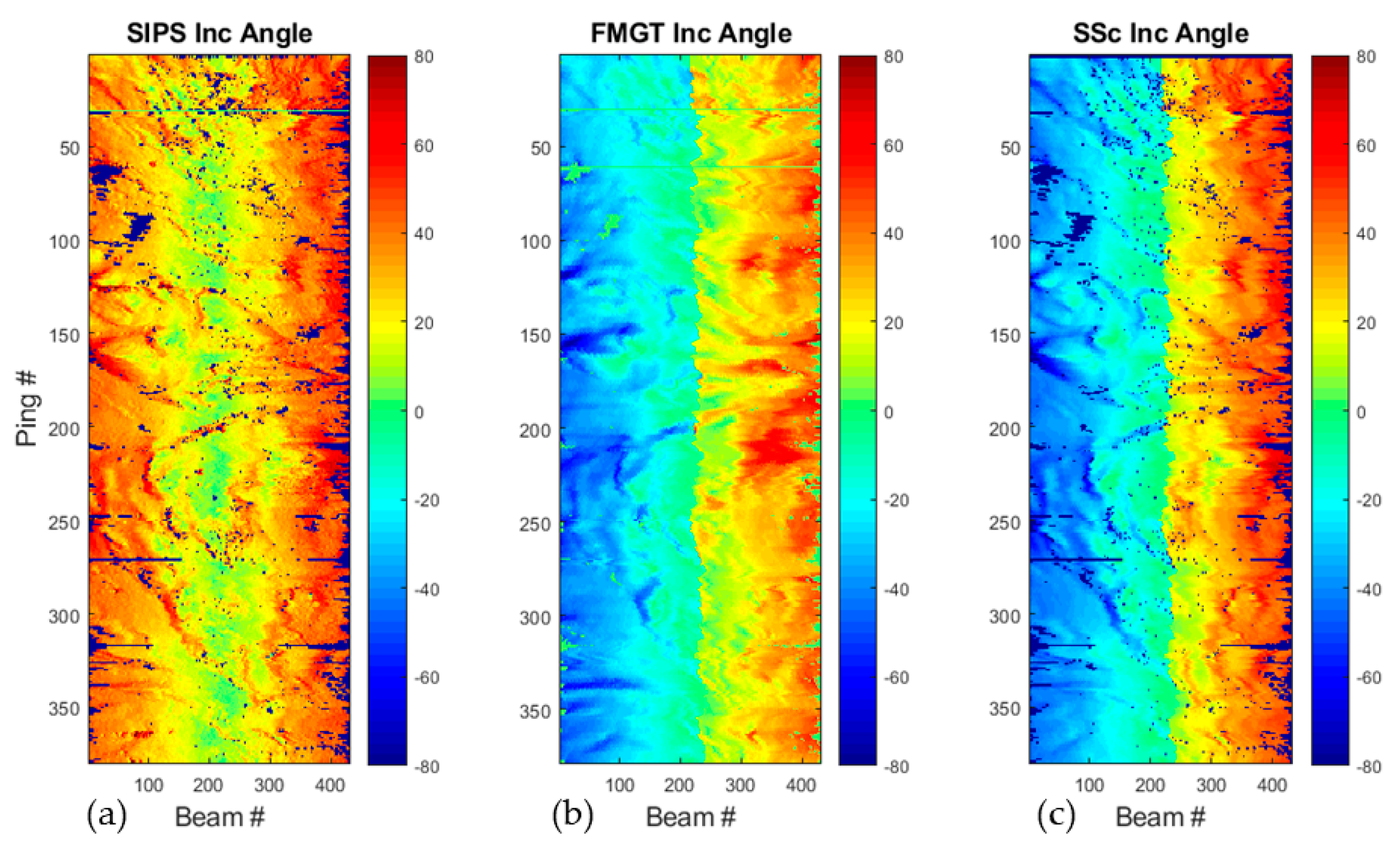

3.3. Comparison of Reported Incidence Angles

3.4. Comparison of Corrections Applied for BL3 Processing

3.5. Summary of Differences Between Software for Different Sonar Types

3.6. Relative Importance of Difference in Raw Data Reading (BL0) Compared to Radiometric Correction (BL3-BL0)

4. Discussion

4.1. Importance of Accurate, Transparent, and Consistent Software Solutions in Science

4.2. Why Do Different Approaches to Reading Raw Data Exist and Which One is Correct?

- Choice of central tendency, i.e., mean, median, or some other measure;

- Choice of how the backscatter samples used to compute the measure of central tendency are selected, e.g., using all the samples within a beam, using a threshold around the bottom-detect level to select a subset of samples, or some other variation;

- Choice of the calculation method. MBES samples provided by sonar manufacturers represent backscatter strength in dB. These samples can be used directly to compute a measure of central tendency, or they can first be converted into the linear domain, averaged there, and the resulting average converted back to the logarithmic (dB) scale.
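The three choices above can be illustrated with a short Python sketch. It assumes that the samples of one beam are already available as levels in dB and that the bottom-detect level is known; the function names (`select_samples`, `beam_level`) and method labels are hypothetical and do not correspond to any of the compared packages. Energy (power) averaging converts with 10log10 and amplitude averaging with 20log10.

```python
import math
import statistics


def select_samples(samples_db, bottom_db=None, window_db=None):
    """Return all samples, or only those within +/- window_db of the bottom-detect level."""
    if bottom_db is None or window_db is None:
        return list(samples_db)
    return [s for s in samples_db if abs(s - bottom_db) <= window_db]


def beam_level(samples_db, method="mean_db"):
    """Reduce a beam's dB samples to one value with the chosen statistic and domain."""
    if not samples_db:
        raise ValueError("no samples selected")
    if method == "mean_db":  # average the dB values directly
        return statistics.fmean(samples_db)
    if method == "mean_power":  # dB -> energy, average, back to dB
        return 10.0 * math.log10(statistics.fmean(10.0 ** (s / 10.0) for s in samples_db))
    if method == "mean_amplitude":  # dB -> amplitude, average, back to dB
        return 20.0 * math.log10(statistics.fmean(10.0 ** (s / 20.0) for s in samples_db))
    if method == "median_db":  # median of the dB values
        return statistics.median(samples_db)
    raise ValueError(f"unknown method: {method}")


if __name__ == "__main__":
    samples = [-30.0, -25.0, -20.0, -35.0, -40.0]  # made-up dB values for one beam
    subset = select_samples(samples, bottom_db=-25.0, window_db=5.0)
    for m in ("mean_db", "mean_amplitude", "mean_power", "median_db"):
        print(m, round(beam_level(samples, m), 2), round(beam_level(subset, m), 2))
```

Because the logarithm is concave, the dB mean corresponds to the geometric mean and can never exceed the amplitude or energy mean of the same samples; the gap grows with the spread of the samples. This is one way in which the choices listed above yield different BL0 values from identical raw data.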

4.3. Need for Adoption of Metadata Standards

4.4. Collaboration between Backscatter Stakeholders

- Collaboration works well when all stakeholders can communicate. The BSWG provided an effective communication platform that facilitated these discussions.

- Different entities may have different end goals in mind while collaborating on such projects, so a successful collaboration depends on finding common goals. In this case, the common goal was to improve the consistency of backscatter results, which motivated all stakeholders to agree to work closely. For other similar efforts, e.g., efforts to standardize seafloor backscatter segmentation and characterization, a common goal may be harder to identify because of the divergent needs of end-users or the desire to protect commercial interests.

- The challenges of navigating proprietary restrictions, for both multibeam echosounder software and hardware manufacturers, are real and may hamper successful collaboration between stakeholders [42].

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Disclaimer

Appendix A

| Sonar Type | Data File |
|---|---|
| EM 302 | 0213_20170717_112534_EX1706_MB.all |
| EM 710 | 0002_20130214_091514_borda.all |
| EM 3002 | 0009_20100113_121654_guillemot.all |
| EM 2040 | 0005_20160412_104116_SimonStevin.all |
| SeaBat 7125 | 20140729_082527_SMB Owen.s7k |

Appendix B

| Software | SonarScope | FMGT | CARIS SIPS | MB Process |
|---|---|---|---|---|
| # columns | 31 | 12 | 17 | 11 |
| Time stamp (Unix Time) | Time UTC | Ping Time | Timestamp | Ping Time |
| Ping # | Ping | Ping Number | Ping | Ping Number |
| Beam # | Beam | Beam Number | Beam | Beam Number |
| Beam location (Lat/Long) | Latitude/Longitude | Latitude/Longitude | Longitude/Latitude | Longitude/Latitude |
| Beam location (E/N) | GeoX/GeoY | Easting/Northing | Easting/Northing | Easting/Northing |
| Beam depth | BathyRT | Depth | Depth | (Not Provided) |
| Incidence angle | IncidenceAngles | True Angle | IncidentAngle | Incidence Angle |
| BS as read from data files (BL0) | ReflecSSc | Backscatter Value | BL0 | Backscatter value |
| BS processed angular response (BL3) | SSc_Step1 | Corrected Backscatter Value | BL3 | Corr Backscatter Value |
| Data processed | All except SeaBat 7125 | All except EM 3002 | All | Only SeaBat 7125 |
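Because each package labels the same quantities differently, a comparison script needs a lookup from each export's column names to common fields. The following minimal Python sketch uses the column names listed in the table above; the common keys (`ping`, `bl0`, and so on) and the function `to_common` are hypothetical, and the sketch assumes each exported row has already been read into a dictionary keyed by column header (for example with `csv.DictReader`). Position columns are omitted for brevity.

```python
# Hypothetical common keys mapped to the export column names listed in Appendix B.
# MB Process does not provide depth, so it has no "depth" entry.
COLUMN_NAMES = {
    "SonarScope": {"time": "Time UTC", "ping": "Ping", "beam": "Beam", "depth": "BathyRT",
                   "incidence_angle": "IncidenceAngles", "bl0": "ReflecSSc", "bl3": "SSc_Step1"},
    "FMGT": {"time": "Ping Time", "ping": "Ping Number", "beam": "Beam Number", "depth": "Depth",
             "incidence_angle": "True Angle", "bl0": "Backscatter Value",
             "bl3": "Corrected Backscatter Value"},
    "CARIS SIPS": {"time": "Timestamp", "ping": "Ping", "beam": "Beam", "depth": "Depth",
                   "incidence_angle": "IncidentAngle", "bl0": "BL0", "bl3": "BL3"},
    "MB Process": {"time": "Ping Time", "ping": "Ping Number", "beam": "Beam Number",
                   "incidence_angle": "Incidence Angle", "bl0": "Backscatter value",
                   "bl3": "Corr Backscatter Value"},
}


def to_common(software, row):
    """Rename one exported row (a dict keyed by the software's headers) to common keys.

    Columns missing from the row are skipped rather than filled with placeholders.
    """
    mapping = COLUMN_NAMES[software]
    return {key: row[col] for key, col in mapping.items() if col in row}


if __name__ == "__main__":
    print(to_common("FMGT", {"Ping Number": 3, "Beam Number": 10, "Backscatter Value": -25.0}))
```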

References

- de Moustier, C. State of the art in swath bathymetry survey systems. Int. Hydrogr. Rev. 1988, 65, 25–54.

- Hughes Clarke, J.E.; Mayer, L.A.; Wells, D.E. Shallow-water imaging multibeam sonars: A new tool for investigating seafloor processes in the coastal zone and on the continental shelf. Mar. Geophys. Res. 1996, 18, 607–629.

- de Moustier, C. Beyond bathymetry: Mapping acoustic backscattering from the deep seafloor with Sea Beam. J. Acoust. Soc. Am. 1986, 79, 316–331.

- Mayer, L.A. Frontiers in Seafloor Mapping and Visualization. Mar. Geophys. Res. 2006, 27, 7–17.

- Anderson, J.T.; Holliday, D.V.; Kloser, R.; Reid, D.; Simard, Y.; Brown, C.J.; Chapman, R.; Coggan, R.; Kieser, R.; Michaels, W.L.; et al. ICES Acoustic Seabed Classification of Marine Physical and Biological Landscapes; ICES Cooperative Research Report No. 286; International Council for the Exploration of the Sea/Conseil International pour l’Exploration de la Mer: Copenhagen, Denmark, August 2007.

- Jackson, D.R.; Richardson, M.D. High-Frequency Seafloor Acoustics; Springer Science & Business Media: New York, NY, USA, 2007; ISBN 978-0-387-34154-5.

- Lurton, X. An Introduction to Underwater Acoustics: Principles and Applications, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2010; ISBN 978-3-540-78480-7.

- Lucieer, V.; Roche, M.; Degrendele, K.; Malik, M.; Dolan, M.; Lamarche, G. User expectations for multibeam echo sounders backscatter strength data-looking back into the future. Mar. Geophys. Res. 2018, 39, 23–40.

- Schimel, A.C.G.; Beaudoin, J.; Parnum, I.M.; Le Bas, T.; Schmidt, V.; Keith, G.; Ierodiaconou, D. Multibeam sonar backscatter data processing. Mar. Geophys. Res. 2018, 39, 121–137.

- Lurton, X.; Lamarche, G.; Brown, C.; Lucieer, V.; Rice, G.; Schimel, A.C.G.; Weber, T.C. Backscatter Measurements by Seafloor-Mapping Sonars—Guidelines and Recommendations. Geohab report. May 2015. Available online: http://geohab.org/wp-content/uploads/2013/02/BWSG-REPORT-MAY2015.pdf (accessed on 10 November 2019).

- Lamarche, G.; Lurton, X. Recommendations for improved and coherent acquisition and processing of backscatter data from seafloor-mapping sonars. Mar. Geophys. Res. 2018, 39, 5–22.

- Dufek, T. Backscatter Analysis of Multibeam Sonar Data in the Area of the Valdivia Fracture Zone using Geocoder in CARIS HIPS&SIPS and IVS3D Fledermaus. Master’s Thesis, Hafencity University Hamburg, Hamburg, Germany, 2012.

- Roche, M.; Degrendele, K.; Mol, L.D. Constraints and limitations of MBES Backscatter Strength (BS) measurements for monitoring the seabed. Surveyor and geologist point of view. In Proceedings of the GeoHab (Marine Geological and Biological Habitat Mapping), Rome, Italy, 6–10 May 2013; p. 21.

- Roche, M.; Degrendele, K.; Vrignaud, C.; Loyer, S.; Le Bas, T.; Augustin, J.-M.; Lurton, X. Control of the repeatability of high frequency multibeam echosounder backscatter by using natural reference areas. Mar. Geophys. Res. 2018, 39, 89–104.

- Malik, M.; Lurton, X.; Mayer, L. A framework to quantify uncertainties of seafloor backscatter from swath mapping echosounders. Mar. Geophys. Res. 2018, 39, 151–168.

- Malik, M. Sources and impacts of bottom slope uncertainty on estimation of seafloor backscatter from swath sonars. Geosciences 2019, 9, 183.

- Hare, R.; Godin, A.; Mayer, L.A. Accuracy Estimation of Canadian Swath (Multibeam) and Sweep (Multitransducer) Sounding Systems; Canadian Hydrographic Service Internal Report; Canadian Hydrographic Service: Ottawa, ON, Canada, 1995.

- FMGT—Fledermaus Geocoder Toolbox 7.8.0; Quality Positioning Services BV (QPS): Zeist, The Netherlands, 2018.

- Teledyne Computer Aided Resource Information System (CARIS) HIPS and SIPS; Teledyne CARIS Inc.: Fredericton, NB, Canada, 2019.

- Augustin, J. SonarScope® software on-line presentation. Available online: http://flotte.ifremer.fr/fleet/Presentation-of-the-fleet/Logiciels-embarques/SonarScope (accessed on 6 June 2019).

- Kongsberg Inc. Kongsberg Multibeam Echo Sounder EM Datagram Formats. Rev. W. Available online: https://www.kongsberg.com/globalassets/maritime/km-products/product-documents/160692_em_datagram_formats.pdf (accessed on 15 November 2019).

- Teledyne Reson. Teledyne Reson Data Format Definition Document. 7k Data Format, Volume 1, Version 3.01. Available online: https://www.teledyne-pds.com/wp-content/uploads/2016/11/DATA-FORMAT-DEFINITION-DOCUMENT-7k-Data-Format-Volume-I-Version-3.01.pdf (accessed on 20 June 2019).

- Kongsberg Inc. KMALL Datagram description Rev:F. Available online: https://www.kongsberg.com/maritime/support/document-and-downloads/software-downloads/ (accessed on 15 June 2019).

- Gavrilov, A.; Duncan, A.; McCauley, R.; Parnum, I.; Penrose, J.; Siwabessy, P.; Woods, A.J.; Tseng, Y.-T. Characterization of the seafloor in Australia’s coastal zone using acoustic techniques. In Proceedings of the 1st International Conference on Underwater Acoustic Measurements, Heraklion, Greece, 28 June–1 July 2005; pp. 1075–1080.

- Parnum, I.M.; Tyler, E.; Miles, P. Software for rapid visualisation and analysis of multibeam echosounder water column data. In Proceedings of the ICES Symposium on Marine Ecosystem Acoustics, Nantes, France, 25–28 May 2015.

- Parnum, I.M.; Gavrilov, A.N. High-frequency multibeam echo-sounder measurements of seafloor backscatter in shallow water: Part 1—Data acquisition and processing. Underw. Technol. 2011, 30, 3–12.

- European Parliament; Council of the European Union. Directive 2008/56/EC of the European Parliament and of the Council of 17 June 2008 Establishing a Framework for Community Action in the Field of Marine Environmental Policy (Marine Strategy Framework Directive) (Text with EEA Relevance); 2008. Available online: http://www.legislation.gov.uk/eudr/2008/56/2019-10-31# (accessed on 10 November 2019).

- Lucieer, V.; Walsh, P.; Flukes, E.; Butler, C.; Proctor, R.; Johnson, C. Seamap Australia—A National Seafloor Habitat Classification Scheme; Institute for Marine and Antarctic Studies (IMAS), University of Tasmania: Hobart, TAS, Australia, 2017.

- Buhl-Mortensen, L.; Buhl-Mortensen, P.; Dolan, M.F.J.; Holte, B. The MAREANO programme—A full coverage mapping of the Norwegian off-shore benthic environment and fauna. Mar. Biol. Res. 2015, 11, 4–17.

- Manzella, G.; Griffa, A.; de la Villéon, L.P. Report on Data Management Best Practice and Generic Data and Metadata Models. V.2.1 [Deliverable 5.9]; Ifremer for JERICO-NEXT Project: Issy-les-Moulineaux, France, 2017.

- Idaszak, R.; Tarboton, D.G.; Yi, H.; Christopherson, L.; Stealey, M.J.; Miles, B.; Dash, P.; Couch, A.; Spealman, C.; Horsburgh, J.S.; et al. HydroShare—A Case Study of the Application of Modern Software Engineering to a Large Distributed Federally-Funded Scientific Software Development Project. In Software Engineering for Science; Taylor & Francis CRC Press: Boca Raton, FL, USA, 2017; pp. 253–270.

- Hannay, J.E.; MacLeod, C.; Singer, J.; Langtangen, H.P.; Pfahl, D.; Wilson, G. How do scientists develop and use scientific software? In Proceedings of the 2009 ICSE Workshop on Software Engineering for Computational Science and Engineering, Washington, DC, USA, 23 May 2009; pp. 1–8.

- Hook, D.; Kelly, D. Testing for trustworthiness in scientific software. In Proceedings of the 2009 ICSE Workshop on Software Engineering for Computational Science and Engineering, Washington, DC, USA, 23 May 2009; pp. 59–64.

- Howison, J.; Deelman, E.; McLennan, M.J.; Ferreira da Silva, R.; Herbsleb, J.D. Understanding the scientific software ecosystem and its impact: Current and future measures. Res. Eval. 2015, 24, 454–470.

- Carver, J.; Hong, N.P.C.; Thiruvathukal, G.K. Software Engineering for Science; Taylor & Francis CRC Press: Boca Raton, FL, USA, 2017; ISBN 978-1-4987-4385-3.

- Calder, B.R.; Mayer, L.A. Automatic processing of high-rate, high-density multibeam echosounder data. Geochem. Geophys. Geosyst. 2003, 4, 1048.

- Hughes Clarke, J.E.; Iwanowska, K.K.; Parrott, R.; Duffy, G.; Lamplugh, M.; Griffin, J. Inter-calibrating multi-source, multi-platform backscatter data sets to assist in compiling regional sediment type maps: Bay of Fundy. In Proceedings of the Canadian Hydrographic and National Surveyors’ Conference, Victoria Conference Centre, Victoria, BC, Canada, 5–8 May 2008; p. 23.

- Feldens, P.; Schulze, I.; Papenmeier, S.; Schönke, M.; Schneider von Deimling, J. Improved interpretation of marine sedimentary environments using multi-frequency multibeam backscatter data. Geosciences 2018, 8, 214.

- Pendleton, L.H.; Beyer, H.; Estradivari; Grose, S.O.; Hoegh-Guldberg, O.; Karcher, D.B.; Kennedy, E.; Llewellyn, L.; Nys, C.; Shapiro, A.; et al. Disrupting data sharing for a healthier ocean. ICES J. Mar. Sci. 2019, 76, 1415–1423.

- Franken, S.; Kolvenbach, S.; Prinz, W.; Alvertis, I.; Koussouris, S. CloudTeams: Bridging the gap between developers and customers during software development processes. Procedia Comput. Sci. 2015, 68, 188–195.

- Mackenzie, B.; Celliers, L.; de Freitas Assad, L.P.; Heymans, J.J.; Rome, N.; Thomas, J.; Anderson, C.; Behrens, J.; Calverley, M.; Desai, K.; et al. The role of stakeholders in creating societal value from coastal and ocean observations. Front. Mar. Sci. 2019, 6, 137.

- Legendre, P. Reply to the comment by Preston and Kirlin on “Acoustic seabed classification: Improved statistical method”. Can. J. Fish. Aquat. Sci. 2003, 60, 1301–1305.

- Masetti, G.; Augustin, J.M.; Malik, M.; Poncelet, C.; Lurton, X.; Mayer, L.A.; Rice, G.; Smith, M. The Open Backscatter Toolchain (OpenBST) project: Towards an open-source and metadata-rich modular implementation. In Proceedings of the US Hydro, Biloxi, MS, USA, 19–21 March 2019.

| Echosounder Model (Nominal Frequency) | Vessel | Data Acquisition Software | Agency | Location | Weather | Date | Depth Range |
|---|---|---|---|---|---|---|---|
| EM 2040 (300 kHz) | RV Simon Stevin | SIS | FPS Economy | Kwinte reference area (Belgium) | Calm | 12 April 2016 | 23–26 m |
| EM 3002 (300 kHz) | HSL Guillemot | SIS | SHOM | Carre Renard area, Brest Bay, France | Calm | 13 January 2010 | 18–22 m |
| EM 710 (70–100 kHz) | BH2 Borda | SIS | SHOM | Carre Renard area, Brest Bay, France | Calm | 14 February 2013 | 18–22 m |
| EM 302 (30 kHz) | NOAA Ship Okeanos Explorer | SIS | NOAA | Johnston Atoll near Hawaii, USA | Rough | 17 July 2017 | ~3000 m |
| Reson SeaBat 7125 (400 kHz) | HMSMB Owen | PDS2000 | Shallow survey common dataset 2015 | Plymouth, UK | Calm | 29 July 2014 | <10 m |

| Column # | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| Value reported | Ping # | Beam # | Ping Time (Unix time) | Latitude | Longitude | BL0 | Seafloor Incidence angle (BL3) | BL3 |
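The eight-column layout above can be read into typed records. The Python sketch below assumes comma-separated text without a header row, Unix time in seconds, angles in degrees and levels in dB; the table specifies none of these details, and the `BeamRecord` class and `read_records` function are hypothetical names chosen for illustration.

```python
import csv
import io
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class BeamRecord:
    ping: int
    beam: int
    ping_time: float            # Unix time (assumed seconds)
    latitude: float
    longitude: float
    bl0_db: float               # backscatter as read from the raw file
    incidence_angle_deg: float  # seafloor incidence angle used for BL3 (assumed degrees)
    bl3_db: float               # backscatter after radiometric corrections

    @property
    def ping_datetime(self):
        """Ping time as a timezone-aware UTC datetime."""
        return datetime.fromtimestamp(self.ping_time, tz=timezone.utc)


def read_records(text, delimiter=","):
    """Parse rows in the assumed 8-column order; skip blank and '#' comment lines."""
    records = []
    for row in csv.reader(io.StringIO(text), delimiter=delimiter):
        if not row or row[0].lstrip().startswith("#"):
            continue
        if len(row) != 8:
            raise ValueError(f"expected 8 columns, got {len(row)}")
        p, b, t, lat, lon, bl0, ang, bl3 = row
        records.append(BeamRecord(int(p), int(b), float(t), float(lat), float(lon),
                                  float(bl0), float(ang), float(bl3)))
    return records


if __name__ == "__main__":
    demo = "1,10,1500000000,51.0,2.5,-25.0,30.0,-24.0\n"  # made-up values, illustration only
    for rec in read_records(demo):
        print(rec.ping, rec.beam, rec.ping_datetime.isoformat(), rec.bl0_db, rec.bl3_db)
```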

| Sonar Type | SIPS/FMGT | FMGT/SonarScope | SonarScope/SIPS | MB Process/FMGT | MB Process/SIPS |
|---|---|---|---|---|---|
| EM302 | 0.66 (1.23) | 0.57 (1.12) | 0.39 (0.87) | - | - |
| EM710 | 1.19 (2.28) | 0.63 (1.02) | 0.80 (1.68) | - | - |
| EM3002 | 0.63 (1.43) | 0.04 (0.13) | 0.94 (2.1) | - | - |
| EM2040 | 0.3 (0.73) | 0.04 (0.11) | 0.31 (0.76) | - | - |
| SeaBat7125 | 0.71 (0.04) | - | - | 55.98 (148.9) | 2.27 (0.16) |

| CARIS SIPS | FMGT | SonarScope | Curtin Univ. MB Process |
|---|---|---|---|
| Reson systems: use the snippet sample associated with the bottom detection, and divide the stored value by 65536 (to convert the 2-byte value to floating point) before applying 20log10. Kongsberg systems: fit a curve to the snippet samples using a moving window of 11 samples and report the maximum value of the fitted curve. | Reson and Kongsberg systems: identify all samples within ±5 dB of the bottom-detect echo level and average these qualifying samples using the amplitude values in dB as reported in the datagram. | Kongsberg systems: use all the full time-series samples recorded within a beam to compute an average value. By default, samples are first converted to energy (linear domain), averaged, and the result returned in dB. The new release (2019) provides the option to compute this value in dB, energy, median, or amplitude, and its default method is amplitude. | Reson systems: calculate the mean of the samples within ±5 dB of the bottom-detect echo level. |
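The per-package rules in the table above can be sketched as small Python functions. These are illustrations written from the table's descriptions only, not the packages' actual code: the function names are hypothetical, the ±5 dB window is assumed to be inclusive, and, because the table does not say which curve CARIS SIPS fits to Kongsberg snippets, a simple moving average over the dB samples stands in for that fit.

```python
import math
import statistics


def sips_reson_db(stored_value):
    """CARIS SIPS, Reson: scale the stored 2-byte snippet value by 1/65536, then 20*log10."""
    return 20.0 * math.log10(stored_value / 65536.0)


def sips_kongsberg_db(samples_db, window=11):
    """CARIS SIPS, Kongsberg: maximum of the snippet samples smoothed over an 11-sample window.

    ASSUMPTION: a moving average of the dB values replaces the unspecified curve fit.
    """
    if not samples_db:
        raise ValueError("empty snippet")
    w = min(window, len(samples_db))
    smoothed = [statistics.fmean(samples_db[i:i + w]) for i in range(len(samples_db) - w + 1)]
    return max(smoothed)


def window_mean_db(samples_db, bottom_db, half_width_db=5.0):
    """FMGT (Reson, Kongsberg) and MB Process (Reson): mean, computed in dB, of the
    samples within +/- half_width_db of the bottom-detect echo level (assumed inclusive)."""
    selected = [s for s in samples_db if abs(s - bottom_db) <= half_width_db]
    if not selected:
        raise ValueError("no samples within the window")
    return statistics.fmean(selected)


def sonarscope_db(samples_db, mode="amplitude"):
    """SonarScope, Kongsberg: combine all time-series samples of the beam.

    'energy' is the default described first in the table; the 2019 release also offers
    'db', 'median' and 'amplitude', with 'amplitude' as its default.
    """
    if mode == "energy":
        return 10.0 * math.log10(statistics.fmean(10.0 ** (s / 10.0) for s in samples_db))
    if mode == "amplitude":
        return 20.0 * math.log10(statistics.fmean(10.0 ** (s / 20.0) for s in samples_db))
    if mode == "db":
        return statistics.fmean(samples_db)
    if mode == "median":
        return statistics.median(samples_db)
    raise ValueError(f"unknown mode: {mode}")
```

Applied to the same beam, these rules can return different BL0 values; for example, the energy-domain average returned by `sonarscope_db(samples, "energy")` can never fall below the dB-domain average of the same samples.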

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Malik, M.; Schimel, A.C.G.; Masetti, G.; Roche, M.; Le Deunf, J.; Dolan, M.F.J.; Beaudoin, J.; Augustin, J.-M.; Hamilton, T.; Parnum, I. Results from the First Phase of the Seafloor Backscatter Processing Software Inter-Comparison Project. Geosciences 2019, 9, 516. https://doi.org/10.3390/geosciences9120516
