Automatic Classification of Cat Vocalizations Emitted in Different Contexts

Simple Summary

Abstract

1. Introduction

2. Building the Dataset

2.1. Treatments

- 10 adult Maine Coon cats (one intact male, three neutered males, three intact females and three neutered females) belonging to a single private owner and housed under the same conditions; and

- 11 adult European Shorthair cats (one intact male, one neutered male, zero intact females and nine neutered females) belonging to different owners and housed under different conditions.

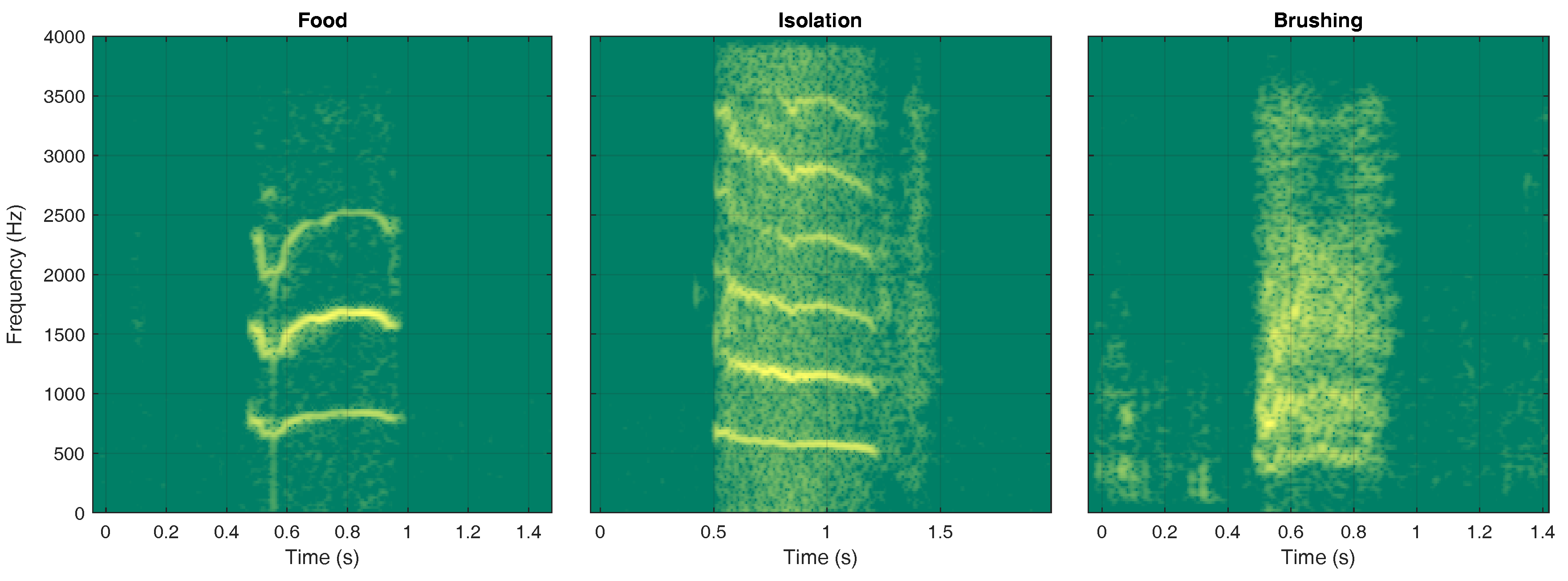

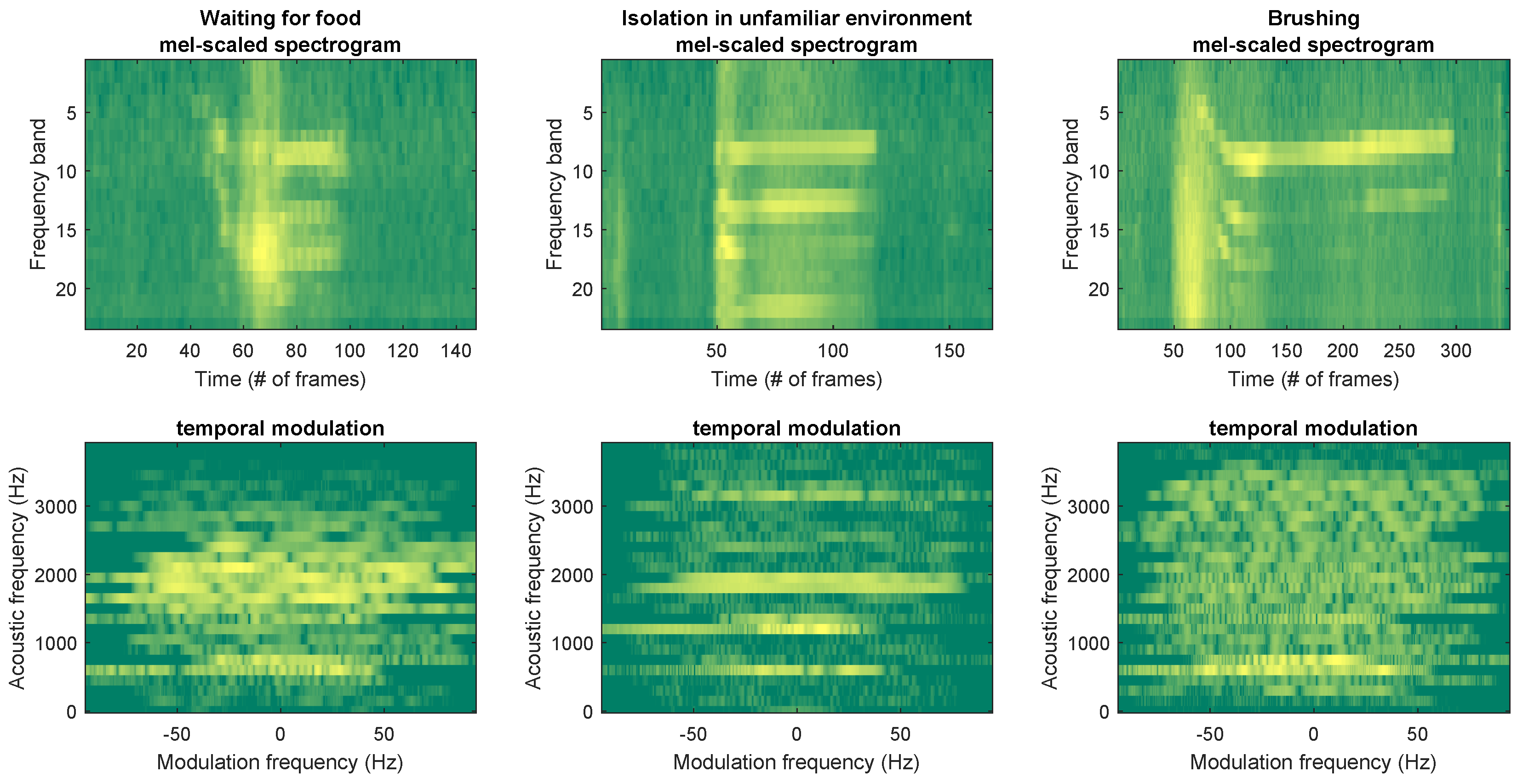

- Waiting for food: The owner started the normal routine operations that precede food delivery in the home environment, and food was actually delivered 5 min after the start of these routine operations.

- Isolation in unfamiliar environment: Cats were transported by their owner, adopting the normal routine used to transport them for any other reason, to an unfamiliar environment (e.g., a room in a different apartment or an office, not far from their home environment). Transportation lasted less than 30 min and cats were allowed 30 min with their owners to recover from transport, before being isolated in the unfamiliar environment, where they stayed alone for a maximum of 5 min.

- Brushing: Cats were brushed by their owner in their home environment for a maximum of 5 min.

2.2. Data Acquisition

3. Species-Independent Recognition of Cat Emission Context

3.1. Feature Extraction

- (a) mel-frequency cepstral coefficients; and

- (b) temporal modulation features.

3.1.1. Mel-Frequency Cepstral Coefficients (MFCC)

3.1.2. Temporal Modulation Features

3.2. Pattern Recognition

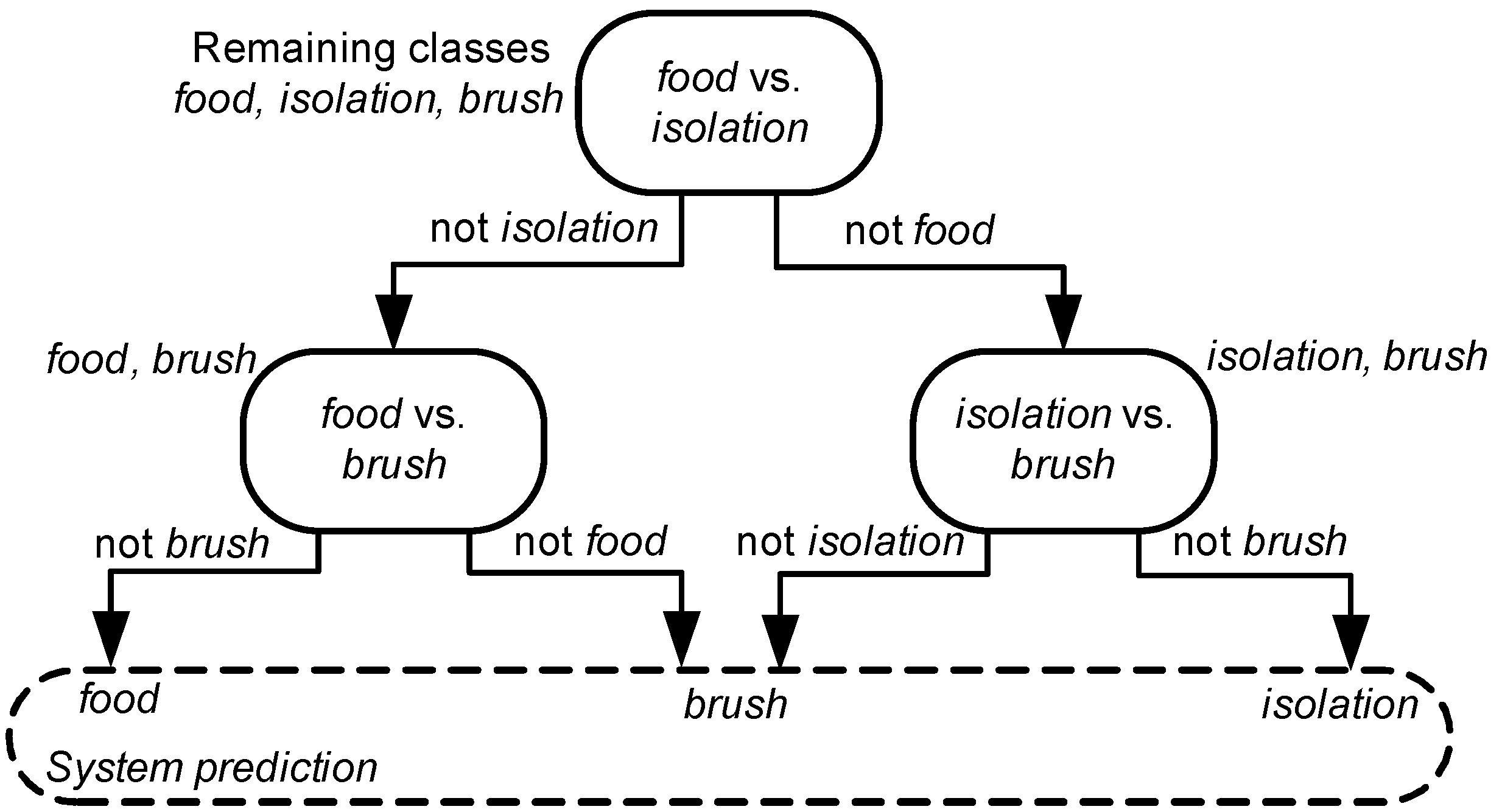

3.2.1. Topological Ordering of the DAG

3.2.2. DAG-HMM Operation

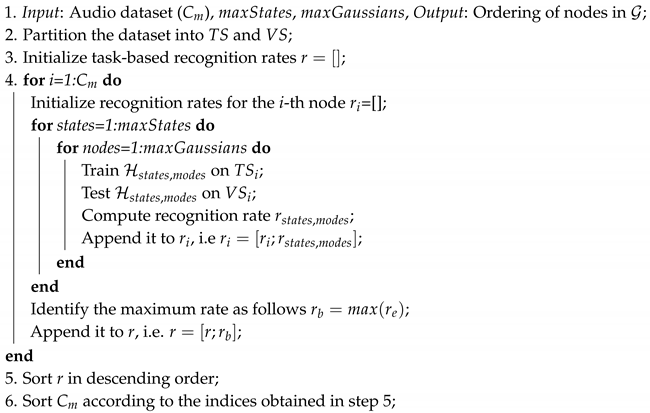

| Algorithm 1: The algorithm for determining the topological ordering of the DAG. |
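As a point of reference, one topological ordering of a directed acyclic graph can be obtained with Kahn's algorithm. The sketch below is a generic illustration and should not be read as the authors' Algorithm 1: the function name `topological_order`, the node labels, and the edge list are all hypothetical, chosen only to echo the three contexts studied here.

```python
from collections import deque


def topological_order(nodes, edges):
    """Return one topological ordering of a DAG (Kahn's algorithm)."""
    successors = {n: [] for n in nodes}
    in_degree = {n: 0 for n in nodes}
    for src, dst in edges:
        successors[src].append(dst)
        in_degree[dst] += 1

    # Start from every node that has no incoming edge.
    ready = deque(n for n in nodes if in_degree[n] == 0)
    order = []
    while ready:
        node = ready.popleft()
        order.append(node)
        # Removing `node` may free some of its successors.
        for nxt in successors[node]:
            in_degree[nxt] -= 1
            if in_degree[nxt] == 0:
                ready.append(nxt)

    if len(order) != len(nodes):
        raise ValueError("graph contains a cycle, so no ordering exists")
    return order


# Hypothetical graph: each node is a pairwise decision between two contexts.
nodes = ["food_vs_brushing", "food_vs_isolation", "isolation_vs_brushing"]
edges = [
    ("food_vs_brushing", "food_vs_isolation"),
    ("food_vs_brushing", "isolation_vs_brushing"),
]
order = topological_order(nodes, edges)
```

Under these assumptions, the root decision is visited first and both follow-up decisions come after it. A cyclic edge list raises `ValueError` instead of returning a partial ordering.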

4. Experimental Set-Up and Results

4.1. Parameterization

4.2. Experimental Results

Comment on DAG-HMM Applicability

5. Conclusions and Discussion

- compare emotional state predictions made by humans with those made by an automatic methodology;

- explore the sound characteristics conveying the meaning of cats’ meowing by analyzing the performance in a feature-wise manner;

- quantify the recognition rate achieved by experienced people working with cats when only the acoustic emission is available;

- establish analytical models explaining each emotional state by means of physically- or ethologically-motivated features; and

- develop a module able to deal with potential non-stationarities, for example new unknown emotional states.

6. Ethical Statement

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| | Food (93) | | Isolation (220) | | Brushing (135) | |
|---|---|---|---|---|---|---|
| | MC (40) | ES (53) | MC (91) | ES (129) | MC (65) | ES (70) |
| IM (20) | - | 5 | 10 | - | 5 | - |
| NM (79) | 14 | 8 | 17 | 15 | 21 | 4 |
| IF (70) | 22 | - | 28 | - | 20 | - |
| NF (279) | 4 | 40 | 36 | 114 | 19 | 66 |
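The totals given in parentheses in the table above can be checked against the individual cell counts. The following is a small sketch of that arithmetic, with dashes read as zero. The variable names and the `context/breed` column labels are illustrative and do not come from the source.

```python
# Cell counts transcribed from the table; "-" entries are treated as 0.
columns = [
    "Food/MC", "Food/ES",
    "Isolation/MC", "Isolation/ES",
    "Brushing/MC", "Brushing/ES",
]
counts = {
    "IM": [0, 5, 10, 0, 5, 0],
    "NM": [14, 8, 17, 15, 21, 4],
    "IF": [22, 0, 28, 0, 20, 0],
    "NF": [4, 40, 36, 114, 19, 66],
}

# Total per row group (compare with the parenthesised row labels).
row_totals = {group: sum(values) for group, values in counts.items()}

# Total per column (compare with the MC/ES header totals).
column_totals = {
    name: sum(values[i] for values in counts.values())
    for i, name in enumerate(columns)
}

# Total per context, obtained by merging the MC and ES columns.
context_totals = {}
for name, value in column_totals.items():
    context = name.split("/")[0]
    context_totals[context] = context_totals.get(context, 0) + value

grand_total = sum(row_totals.values())
```

With these counts, the row, column, and context sums agree with the parenthesised totals in the table, and the cells add up to 448 vocalizations overall.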

| Classification Approach | Recognition Rate (%) |
|---|---|
| Directed acyclic graphs-Hidden Markov Models (DAG-HMM) | 95.94 |
| Class-specific Hidden Markov Models | 80.95 |
| Universal Hidden Markov Models | 76.19 |
| Support vector machine | 78.51 |
| Echo state network | 68.9 |

| Presented / Responded | Waiting for Food | Isolation | Brushing |
|---|---|---|---|
| Waiting for food | 100 | - | - |
| Isolation | - | 92.59 | 7.41 |
| Brushing | 4.76 | - | 95.24 |
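The confusion matrix above can be related to the overall recognition rate with a short calculation. The sketch below assumes that each row holds percentages for one presented class and that the three classes are weighted equally. The source does not state how the overall figure is aggregated, so the equal weighting is an assumption, and the variable names are illustrative.

```python
# Rows: presented class; columns: responded class (percentages, "-" read as 0).
labels = ["Waiting for food", "Isolation", "Brushing"]
confusion = [
    [100.0, 0.0, 0.0],
    [0.0, 92.59, 7.41],
    [4.76, 0.0, 95.24],
]

# Each row should account for all presentations of that class.
row_sums = [sum(row) for row in confusion]

# Per-class recognition rate is the diagonal entry of each row.
per_class = {label: confusion[i][i] for i, label in enumerate(labels)}

# Unweighted mean over the classes (assumed aggregation).
mean_rate = sum(per_class.values()) / len(per_class)

print(round(mean_rate, 2))
```

Under that assumption the unweighted mean of the diagonal is about 95.94, which matches the rate listed for the DAG-HMM approach in the table of recognition rates.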

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ntalampiras, S.; Ludovico, L.A.; Presti, G.; Prato Previde, E.; Battini, M.; Cannas, S.; Palestrini, C.; Mattiello, S. Automatic Classification of Cat Vocalizations Emitted in Different Contexts. Animals 2019, 9, 543. https://doi.org/10.3390/ani9080543