Pig Face Open Set Recognition and Registration Using a Decoupled Detection System and Dual-Loss Vision Transformer

Simple Summary

Abstract

1. Introduction

- We propose a decoupled pig face detection, recognition, and registration system that reduces manual effort and improves recognition accuracy by focusing on relevant facial features.

- We introduce a dynamic registration mechanism that allows the system to adapt to changes in the pig population without retraining, addressing the central challenge of pig face Open-Set recognition (PFOSR).

- We design a dual-loss structure that combines metric learning loss and classification loss during training to enhance the discriminative power of the feature extractor. This dual-loss structure captures subtle differences among pig faces while maintaining robustness to intra-class variations, significantly improving recognition accuracy in both Closed-Set and Open-Set scenarios.

- We create a comprehensive pig face dataset comprising a detection dataset, a recognition dataset, a side-face dataset, and a pig face gallery. This dataset facilitates the development of robust detection and recognition models for pig face identification tasks.
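To make the dual-loss idea in the third contribution concrete, the following is a minimal sketch in plain Python. It assumes the metric learning term is an ArcFace-style additive angular margin loss [21] and that the two terms are combined as a weighted sum. The function names, the `margin`, `scale`, and `metric_weight` values, and the clamping of the angle at pi are illustrative assumptions, not details taken from the paper.

```python
import math


def softmax_cross_entropy(logits, target):
    """Cross-entropy of a single sample given raw class logits."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def angular_margin_loss(embedding, class_centers, target, margin=0.5, scale=30.0):
    """ArcFace-style metric loss (assumed form): the angle to the target
    class center is enlarged by `margin` before the softmax."""
    logits = []
    for k, center in enumerate(class_centers):
        cos = max(-1.0, min(1.0, cosine(embedding, center)))
        theta = math.acos(cos)
        if k == target:
            # Simplification: clamp at pi so the logit stays monotonic.
            theta = min(math.pi, theta + margin)
        logits.append(scale * math.cos(theta))
    return softmax_cross_entropy(logits, target)


def dual_loss(embedding, class_logits, class_centers, target, metric_weight=1.0):
    """Weighted sum of a classification loss and a metric learning loss.
    `metric_weight` is a hypothetical hyperparameter."""
    classification = softmax_cross_entropy(class_logits, target)
    metric = angular_margin_loss(embedding, class_centers, target)
    return classification + metric_weight * metric
```

In this sketch the classification term acts on the logits of a linear head, while the metric term acts directly on the embedding, so both terms shape the same feature extractor during training.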

2. Materials and Methods

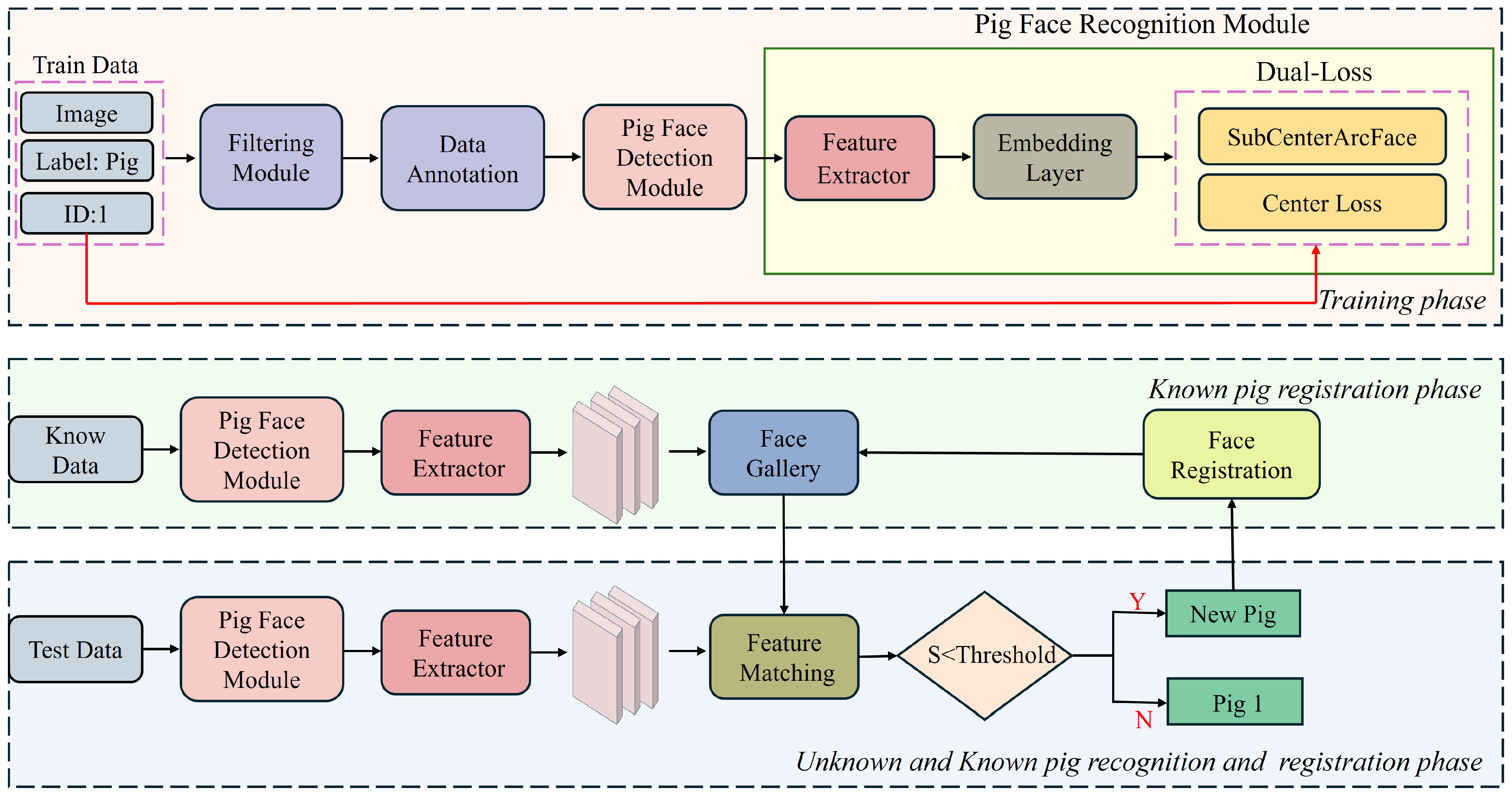

2.1. System Overview

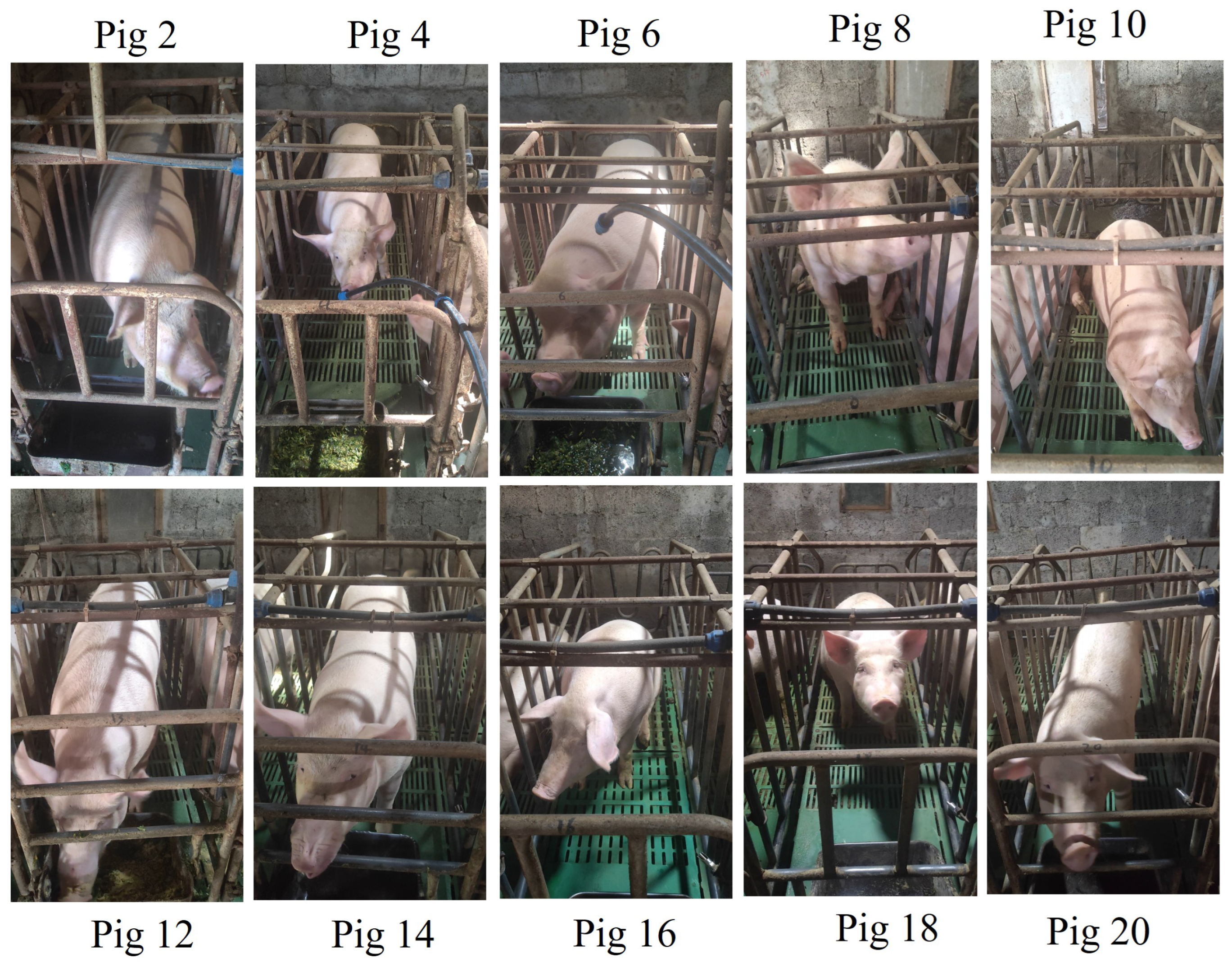

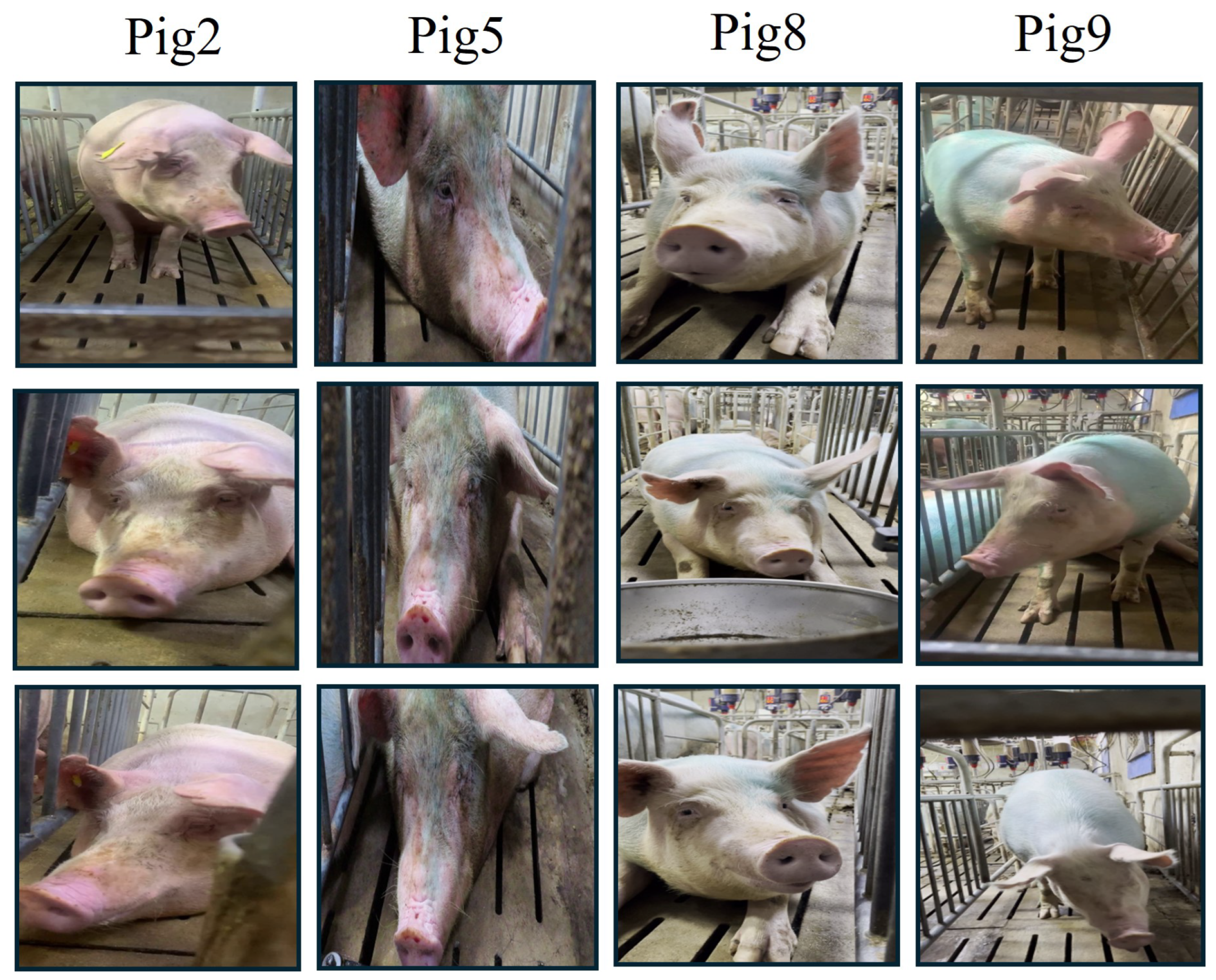

2.2. Dataset

2.3. Training Phase

2.3.1. Pig Face Detection Module

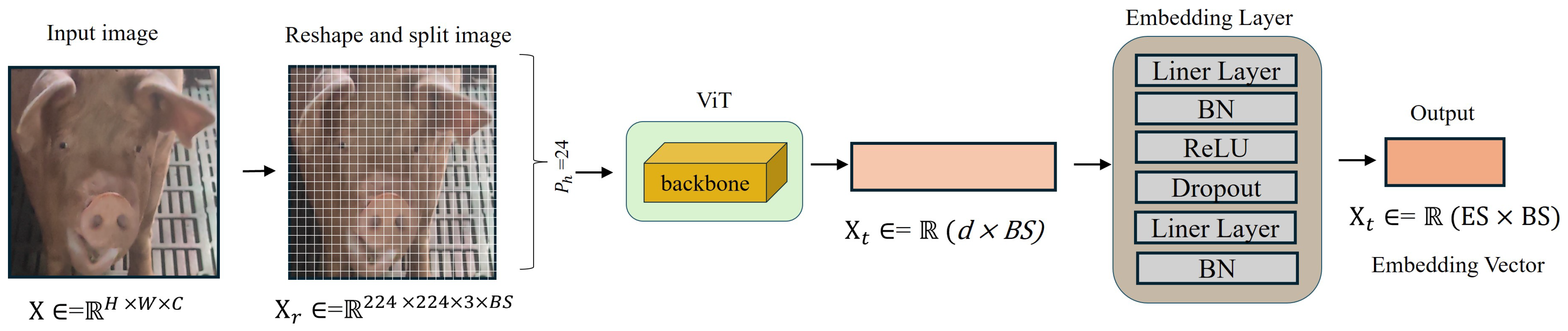

2.3.2. Pig Face Recognition Module

2.4. Registration and Face Gallery Updating System
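As stated in the Introduction, registration lets the system follow changes in the pig population without retraining. A minimal sketch of such a gallery is given below, assuming that each pig is represented by embedding vectors from the recognition module and that matching uses cosine similarity [25] against a fixed threshold. The class name `FaceGallery`, its methods, and the threshold value of 0.6 are hypothetical and chosen only for illustration.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0.0 else 0.0


class FaceGallery:
    """Hypothetical gallery mapping pig IDs to registered embeddings."""

    def __init__(self, threshold=0.6):
        self.threshold = threshold  # assumed acceptance threshold
        self.entries = {}  # pig_id -> list of embedding vectors

    def register(self, pig_id, embedding):
        """Add an embedding for a new or existing pig; no retraining involved."""
        self.entries.setdefault(pig_id, []).append(list(embedding))

    def identify(self, embedding):
        """Return (pig_id, score) for the best match, or (None, score)
        when the best score falls below the threshold (unknown pig)."""
        best_id, best_score = None, -1.0
        for pig_id, stored in self.entries.items():
            for reference in stored:
                score = cosine_similarity(embedding, reference)
                if score > best_score:
                    best_id, best_score = pig_id, score
        if best_score < self.threshold:
            return None, best_score
        return best_id, best_score
```

A query that returns `None` can then be passed to `register` under a new ID, which is how an unknown pig would become a known one in this sketch.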

3. Results

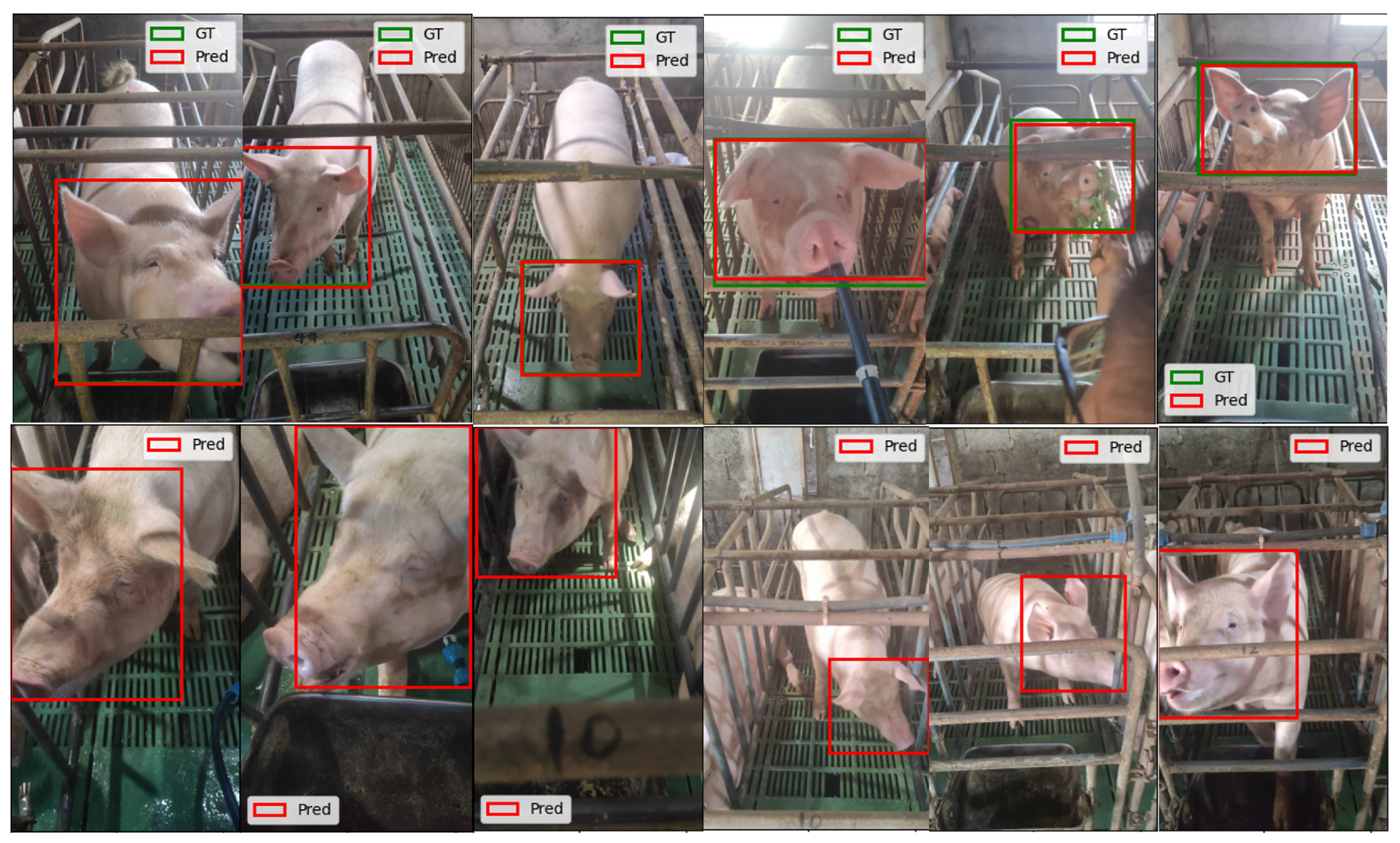

3.1. Automatic Pig Face Detection Module

3.1.1. Implementation Details

3.1.2. Experimental Results

3.1.3. Automatic Pig Face Detection Using the Pre-Trained YOLOv8 on Known Pig Face Recognition Dataset

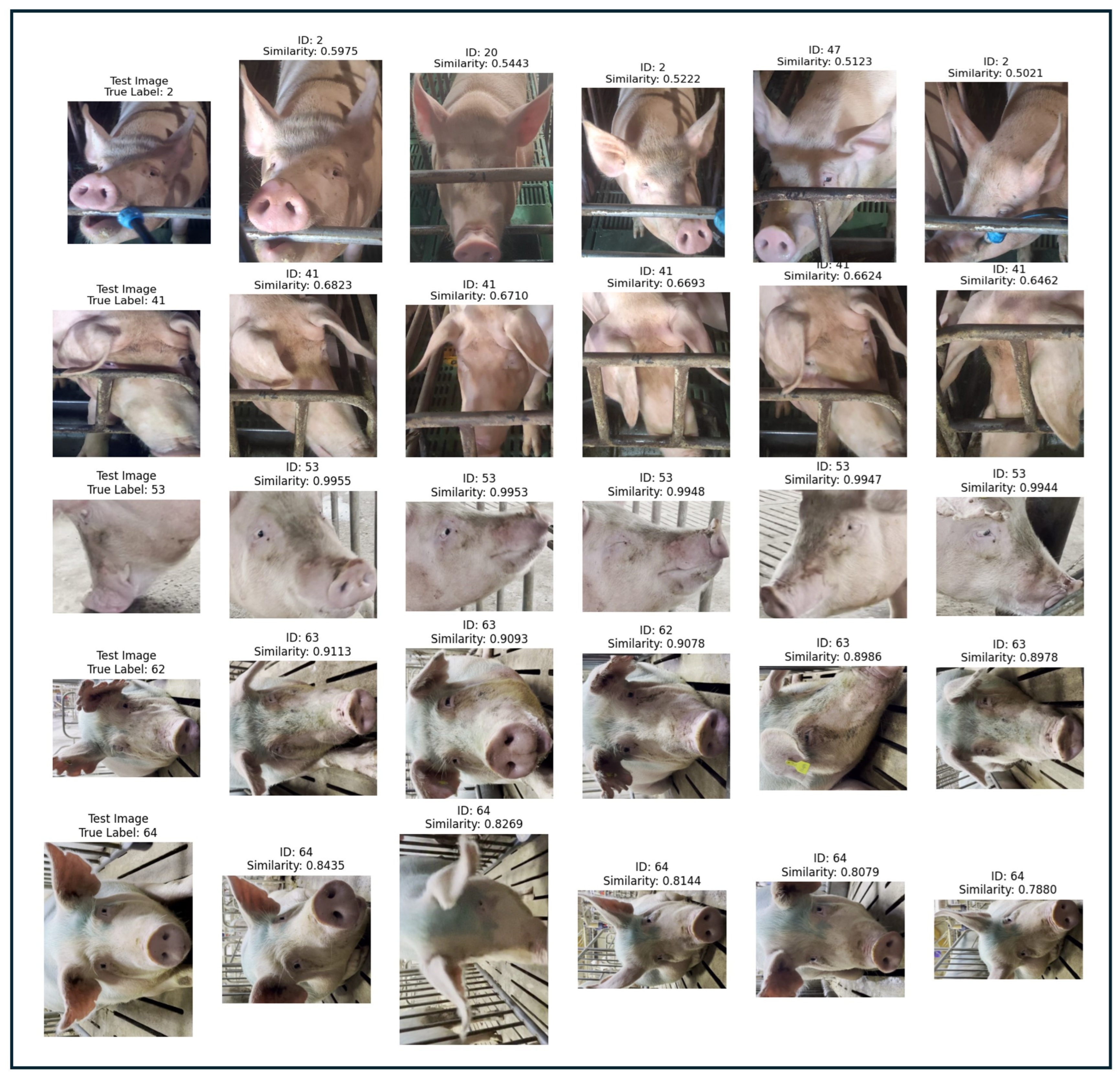

3.2. Pig Face Recognition Module

3.2.1. Experimental Settings

3.2.2. Comparative Models

3.2.3. Evaluation Metrics for the Test Dataset

- Closed-Set Accuracy (CSA) [33]: The proportion of correctly classified images out of the total number of images.

- Precision [34]: The ratio of true positive predictions to the total predicted positives.

- Recall [35]: The ratio of true positive predictions to the total actual positives.

- F1 Score [36]: The harmonic mean of precision and recall.

- Adjusted Mutual Information (AMI) [37]: Measures the agreement between the true labels and the predicted labels, adjusted for chance.

- Normalized Mutual Information (NMI) [38]: Similar to AMI but normalized to a scale between 0 (no mutual information) and 1 (perfect correlation).

- Closed-Set Accuracy (CSA) [33]: In the Open-Set setting, accuracy calculated only on samples from known classes.

- Area Under the Receiver Operating Characteristic Curve (AUROC) [39]: Measures the model’s ability to distinguish between known and unknown classes.

- Area Under the Precision-Recall Curve (AUPR) [40]: Evaluates the trade-off between precision and recall for different thresholds.

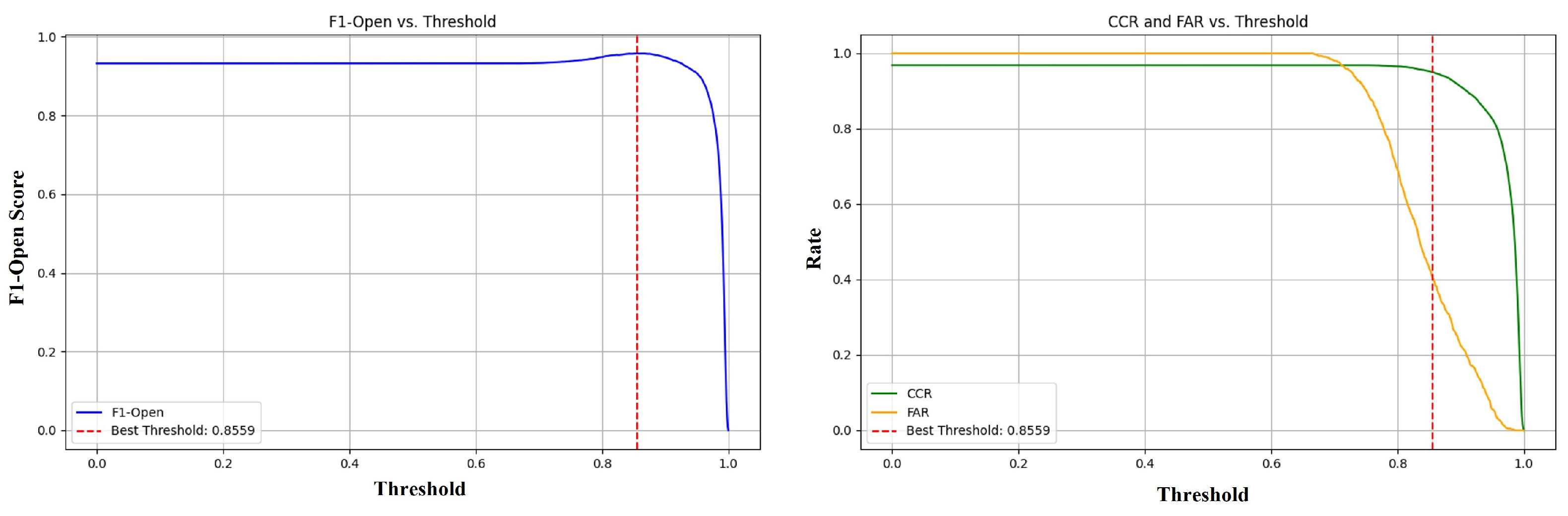

- F1-Open Score [41]: The F1 score adjusted for Open-Set recognition, considering both known and unknown classes.

- False Accept Rate (FAR) [42]: The proportion of unknown class samples that are incorrectly accepted as known classes.

- Correct Classification Rate (CCR) [43]: The percentage of correctly classified instances over all instances, indicating the model’s overall accuracy.

- Open-Set Classification Rate (OSCR) [44]: Combines the correct classification rate of known classes and the false positive rate of unknown classes.
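Several of the metrics listed above can be computed directly from their definitions. The sketch below, in plain Python, covers precision, recall, and F1 for one class, AUROC for separating known from unknown samples, and FAR at a given threshold. It assumes each sample carries a single confidence score where higher means "more likely known"; the function names and the tie handling in `auroc` are illustrative choices rather than the evaluation code used in the experiments.

```python
def precision_recall_f1(true_labels, predicted_labels, positive):
    """Precision, recall, and F1 for one class treated as the positive class."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2.0 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def auroc(known_scores, unknown_scores):
    """AUROC as the probability that a known sample scores higher than an
    unknown sample, counting ties as one half."""
    wins = 0.0
    for k in known_scores:
        for u in unknown_scores:
            if k > u:
                wins += 1.0
            elif k == u:
                wins += 0.5
    return wins / (len(known_scores) * len(unknown_scores))


def false_accept_rate(unknown_scores, threshold):
    """Fraction of unknown samples whose score reaches the threshold and
    would therefore be accepted as a known class."""
    accepted = sum(1 for s in unknown_scores if s >= threshold)
    return accepted / len(unknown_scores)
```

The pairwise form of `auroc` is quadratic in the number of samples, which is acceptable for a small test set; a rank-based implementation would be preferable at scale.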

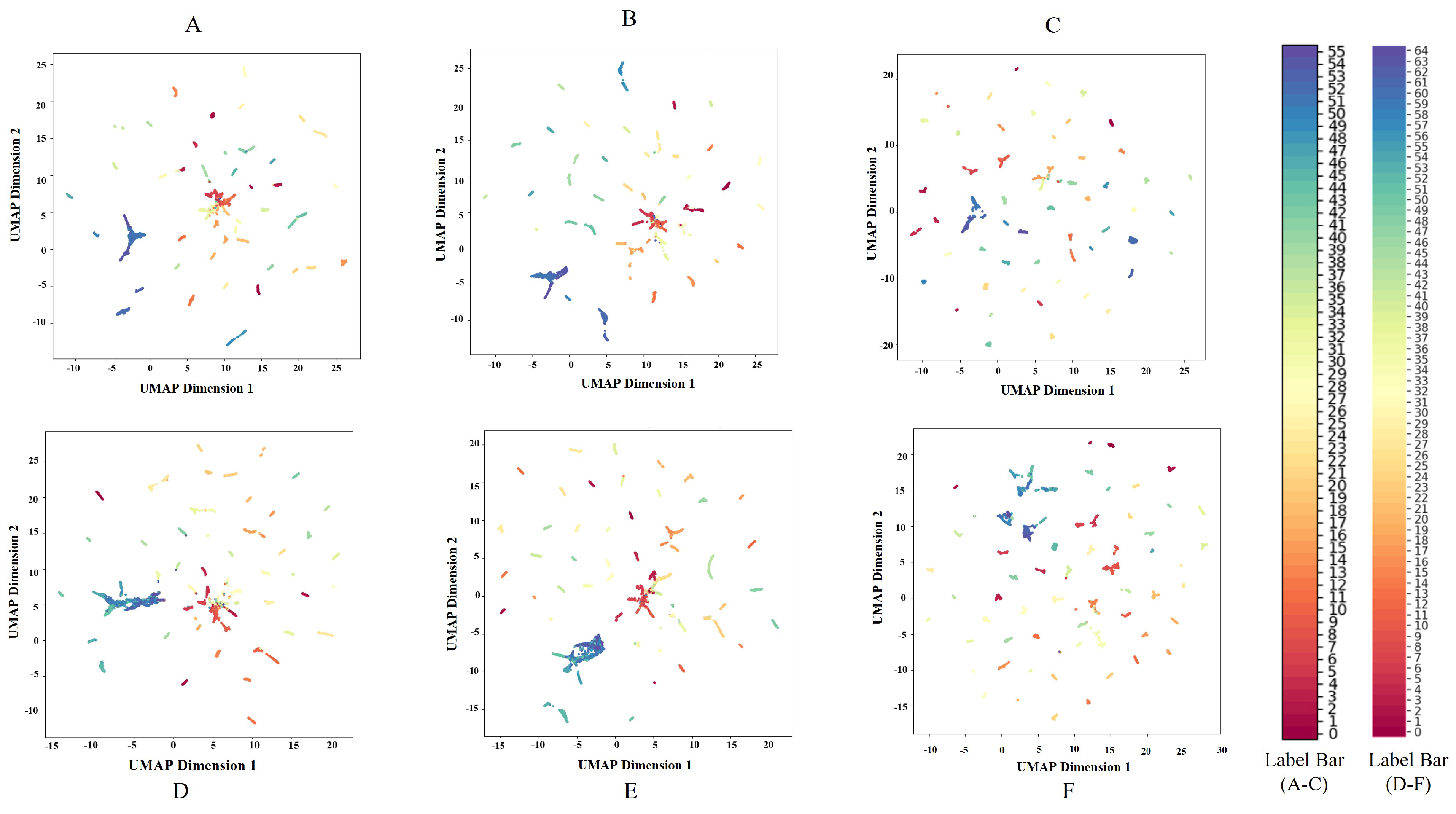

3.2.4. Pig Face Open-Set Recognition Experiments

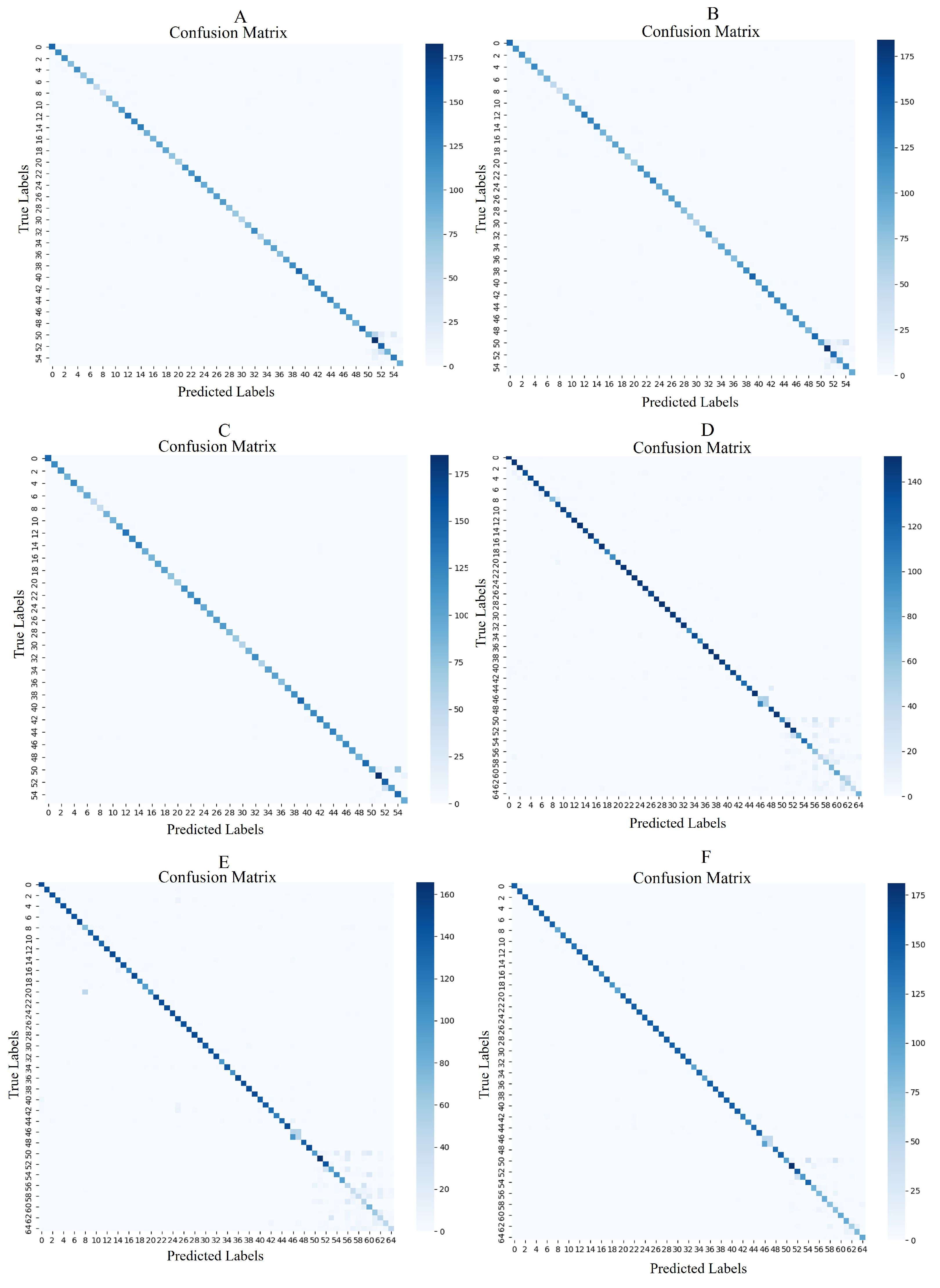

3.2.5. Performance on the Pig Face Closed-Set Recognition (PFCSR)

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Correction Statement

References

- Mahfuz, S.; Mun, H.S.; Dilawar, M.A.; Yang, C.J. Applications of smart technology as a sustainable strategy in modern swine farming. Sustainability 2022, 14, 2607.

- Zhang, C.; Lu, Y. Study on artificial intelligence: The state of the art and future prospects. J. Ind. Inf. Integr. 2021, 23, 100224.

- Marsot, M.; Mei, J.; Shan, X.; Ye, L.; Feng, P.; Yan, X.; Li, C.; Zhao, Y. An adaptive pig face recognition approach using Convolutional Neural Networks. Comput. Electron. Agric. 2020, 173, 105386.

- Adrion, F.; Kapun, A.; Eckert, F.; Holland, E.M.; Staiger, M.; Götz, S.; Gallmann, E. Monitoring trough visits of growing-finishing pigs with UHF-RFID. Comput. Electron. Agric. 2018, 144, 144–153.

- Maselyne, J.; Saeys, W.; Briene, P.; Mertens, K.; Vangeyte, J.; De Ketelaere, B.; Hessel, E.F.; Sonck, B.; Van Nuffel, A. Methods to construct feeding visits from RFID registrations of growing-finishing pigs at the feed trough. Comput. Electron. Agric. 2016, 128, 9–19.

- Li, L.; Mu, X.; Li, S.; Peng, H. A review of face recognition technology. IEEE Access 2020, 8, 139110–139120.

- Dong, S.; Wang, P.; Abbas, K. A survey on deep learning and its applications. Comput. Sci. Rev. 2021, 40, 100379.

- Billah, M.; Wang, X.; Yu, J.; Jiang, Y. Real-time goat face recognition using convolutional neural network. Comput. Electron. Agric. 2022, 194, 106730.

- Weng, Z.; Meng, F.; Liu, S.; Zhang, Y.; Zheng, Z.; Gong, C. Cattle face recognition based on a Two-Branch convolutional neural network. Comput. Electron. Agric. 2022, 196, 106871.

- Wan, Z.; Tian, F.; Zhang, C. Sheep face recognition model based on deep learning and bilinear feature fusion. Animals 2023, 13, 1957.

- Meng, Y.; Yoon, S.; Han, S.; Fuentes, A.; Park, J.; Jeong, Y.; Park, D.S. Improving Known–Unknown Cattle’s Face Recognition for Smart Livestock Farm Management. Animals 2023, 13, 3588.

- Zhang, X.; Xuan, C.; Xue, J.; Chen, B.; Ma, Y. LSR-YOLO: A high-precision, lightweight model for sheep face recognition on the mobile end. Animals 2023, 13, 1824.

- Wang, H.; Qin, J.; Hou, Q.; Gong, S. Cattle face recognition method based on parameter transfer and deep learning. J. Phys. Conf. Ser. 2020, 1453, 012054.

- Wang, R.; Shi, Z.; Li, Q.; Gao, R.; Zhao, C.; Feng, L. Pig face recognition model based on a cascaded network. Appl. Eng. Agric. 2021, 37, 879–890.

- Yan, L.; Miao, Z.; Zhang, W. Pig face detection method based on improved CenterNet algorithm. In Proceedings of the 2022 3rd International Conference on Electronic Communication and Artificial Intelligence (IWECAI), Zhuhai, China, 14–16 January 2022; IEEE: New York, NY, USA, 2022; pp. 174–179.

- Hansen, M.F.; Smith, M.L.; Smith, L.N.; Salter, M.G.; Baxter, E.M.; Farish, M.; Grieve, B. Towards on-farm pig face recognition using convolutional neural networks. Comput. Ind. 2018, 98, 145–152.

- Wang, Z.; Liu, T. Two-stage method based on triplet margin loss for pig face recognition. Comput. Electron. Agric. 2022, 194, 106737.

- Ma, R.; Ali, H.; Chung, S.; Kim, S.C.; Kim, H. A lightweight pig face recognition method based on automatic detection and knowledge distillation. Appl. Sci. 2023, 14, 259.

- Wang, R.; Gao, R.; Li, Q.; Dong, J. Pig face recognition based on metric learning by combining a residual network and attention mechanism. Agriculture 2023, 13, 144.

- Musgrave, K.; Belongie, S.; Lim, S.N. Pytorch metric learning. arXiv 2020, arXiv:2008.09164.

- Deng, J.; Guo, J.; Xue, N.; Zafeiriou, S. Arcface: Additive angular margin loss for deep face recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4690–4699.

- Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A discriminative feature learning approach for deep face recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 499–515.

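- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.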

- Deng, J.; Guo, J.; Liu, T.; Gong, M.; Zafeiriou, S. Sub-center arcface: Boosting face recognition by large-scale noisy web faces. In Computer Vision—ECCV 2020, Proceedings of the 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XI 16; Springer: Berlin/Heidelberg, Germany, 2020; pp. 741–757.

- Xia, P.; Zhang, L.; Li, F. Learning similarity with cosine similarity ensemble. Inf. Sci. 2015, 307, 39–52.

- Jocher, G.; Chaurasia, A.; Qiu, J. YOLO by Ultralytics. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 20 April 2023).

- Guo, X.; Jiang, F.; Chen, Q.; Wang, Y.; Sha, K.; Chen, J. Deep Learning-Enhanced Environment Perception for Autonomous Driving: MDNet with CSP-DarkNet53. Pattern Recognit. 2024, 160, 111174.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. Scaled-yolov4: Scaling cross stage partial network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13029–13038.

- Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; Kwon, Y.; Michael, K.; Fang, J.; Wong, C.; Zeng, Y.; Mammana, L.; et al. ultralytics/yolov5: v6.2 - YOLOv5 classification models, Apple M1, reproducibility, ClearML and Deci.ai integrations. Zenodo 2022. Available online: https://zenodo.org/records/7002879 (accessed on 25 November 2024).

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Wei, X.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Vaze, S.; Han, K.; Vedaldi, A.; Zisserman, A. Open-Set recognition: A good Closed-Set classifier is all you need? arXiv 2021, arXiv:2110.06207.

- Streiner, D.L.; Norman, G.R. “Precision” and “accuracy”: Two terms that are neither. J. Clin. Epidemiol. 2006, 59, 327–330.

- Fränti, P.; Mariescu-Istodor, R. Soft precision and recall. Pattern Recognit. Lett. 2023, 167, 115–121.

- Yacouby, R.; Axman, D. Probabilistic extension of precision, recall, and f1 score for more thorough evaluation of classification models. In Proceedings of the First Workshop on Evaluation and Comparison of NLP Systems, Online, 20 November 2020; pp. 79–91.

- Zhu, Z.; Gao, Y. Finding cross-border collaborative centres in biopharma patent networks: A clustering comparison approach based on adjusted mutual information. In Complex Networks & Their Applications X: Volume 1, Proceedings of the Tenth International Conference on Complex Networks and Their Applications COMPLEX NETWORKS, Palermo, Italy, 8–10 November; Springer: Berlin/Heidelberg, Germany, 2022; pp. 62–72.

- Kvålseth, T.O. On normalized mutual information: Measure derivations and properties. Entropy 2017, 19, 631.

- Neal, L.; Olson, M.; Fern, X.; Wong, W.; Li, F. Open set learning with counterfactual images. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 613–628.

- Huang, H.; Wang, Y.; Hu, Q.; Cheng, M.M. Class-specific semantic reconstruction for open set recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 4214–4228.

- Kozerawski, J.; Turk, M. One-class meta-learning: Towards generalizable few-shot Open-Set classification. arXiv 2021, arXiv:2109.06859.

- Yum, D.H.; Kim, J.S.; Hong, S.J.; Lee, P.J. Distance bounding protocol with adjustable false acceptance rate. IEEE Commun. Lett. 2011, 15, 434–436.

- Adachi, K. Correct classification rates in multiple correspondence analysis. J. Jpn. Soc. Comput. Stat. 2004, 17, 1–20.

- Kim, S.; Kim, H.I.; Ro, Y.M. Improving open set recognition via visual prompts distilled from common-sense knowledge. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 26–27 February 2024; Volume 38, pp. 2786–2794.

| Model | Recall |  |  |  |
|---|---|---|---|---|
| YOLOv5 | 0.975 | 0.955 | 0.821 | 0.779 |
| YOLOv6 | 0.986 | 0.969 | 0.861 | 0.865 |
| YOLOv7 | 0.980 | 0.964 | 0.840 | 0.876 |
| YOLOv8 | 0.990 | 0.973 | 0.869 | 0.895 |

| Model | Training Strategies | CSA | AUROC | OSCR | AUPR | F1-Open |
|---|---|---|---|---|---|---|
| ViT-SAL | - | 94.02 ± 0.5 | 92.71 ± 0.2 | 92.71 ± 1.8 | 97.33 ± 0.5 | 89.81 ± 0.4 |
| ViT-SAL-IN21k | ✔ | 93.38 ± 1.1 | 92.95 ± 1.1 | 93.75 ± 1.1 | 98.98 ± 0.3 | 92.58 ± 0.2 |
| ViT-CL | - | 91.69 ± 0.2 | 90.14 ± 0.8 | 91.20 ± 0.2 | 95.13 ± 0.4 | 85.11 ± 1.6 |
| ViT-CL-IN21k | ✔ | 92.25 ± 0.7 | 91.70 ± 0.4 | 91.81 ± 0.6 | 95.77 ± 0.7 | 86.63 ± 1.3 |
| ViT-DL | - | 95.25 ± 0.7 | 94.26 ± 1.1 | 94.21 ± 0.1 | 98.77 ± 0.3 | 92.63 ± 0.3 |
| ViT-DL-IN21k (ours) | ✔ | 96.60 ± 0.4 | 95.31 ± 0.2 | 95.87 ± 0.5 | 99.30 ± 0.1 | 93.77 ± 0.2 |

| Size of Gallery | CSA | AUROC | OSCR | AUPR | F1-Open |
|---|---|---|---|---|---|
| 10 | 95.30 ± 1.1 | 94.18 ± 0.4 | 92.01 ± 0.4 | 98.77 ± 0.6 | 92.41 ± 0.1 |
| 20 | 95.71 ± 0.2 | 95.14 ± 0.4 | 93.86 ± 0.2 | 99.11 ± 0.5 | 92.51 ± 0.1 |
| 30 | 96.88 ± 0.4 | 95.31 ± 0.2 | 95.87 ± 0.5 | 99.30 ± 0.1 | 93.77 ± 0.2 |
| 40 | 96.84 ± 0.1 | 95.21 ± 0.6 | 95.76 ± 0.1 | 99.29 ± 0.2 | 93.71 ± 0.4 |
| 50 | 96.00 ± 0.5 | 95.18 ± 1.7 | 95.91 ± 0.3 | 99.23 ± 0.2 | 93.16 ± 1.2 |

| Model | CSA | AUROC | OSCR | AUPR | F1-Open |
|---|---|---|---|---|---|
| Res18-SAL | 90.91 | 89.24 | 90.16 | 94.37 | 83.74 |
| Res18-DL-IN21k (ours) | 93.98 | 90.02 | 91.11 | 93.17 | 84.12 |
| Res50-SAL | 93.47 | 91.55 | 92.36 | 94.58 | 84.24 |
| Res50-DL-IN21k (ours) | 94.27 | 92.33 | 94.68 | 96.76 | 86.10 |

| Dataset | Model | AMI | NMI | CSA | R-P | MAP@R | F1-Score | Precision@1 |
|---|---|---|---|---|---|---|---|---|
| Dataset1 | Res18-DL-IN21k | 93.63 ± 1.1 | 94.04 ± 0.3 | 93.98 ± 0.4 | 92.80 ± 0.6 | 92.09 ± 0.1 | 94.64 ± 0.4 | 93.98 ± 0.3 |
|  | Res50-DL-IN21k | 93.73 ± 0.2 | 94.17 ± 0.7 | 94.27 ± 0.1 | 92.04 ± 0.6 | 91.42 ± 0.1 | 94.94 ± 0.5 | 94.27 ± 0.2 |
|  | ViT-DL-IN21k | 97.06 ± 0.4 | 97.26 ± 0.2 | 96.60 ± 0.6 | 95.10 ± 0.1 | 94.64 ± 0.1 | 97.28 ± 0.1 | 96.60 ± 0.1 |
| Dataset2 | Res18-DL-IN21k | 89.25 ± 1.2 | 89.92 ± 0.4 | 88.09 ± 0.5 | 82.16 ± 0.7 | 80.28 ± 0.2 | 86.80 ± 0.1 | 88.09 ± 0.4 |
|  | Res50-DL-IN21k | 89.14 ± 0.3 | 89.82 ± 0.6 | 87.29 ± 0.2 | 87.29 ± 0.8 | 79.99 ± 0.8 | 85.76 ± 0.2 | 87.26 ± 0.3 |
|  | ViT-DL-IN21k | 94.72 ± 0.5 | 95.05 ± 0.3 | 93.58 ± 0.5 | 86.93 ± 0.4 | 85.32 ± 0.1 | 92.93 ± 0.1 | 93.58 ± 0.4 |

| Gallery Size | CSA | Precision | Recall | F1-Score | NMI | AMI | Precision@1 | R-P | MAP@R |
|---|---|---|---|---|---|---|---|---|---|
| 10 | 96.44 | 97.16 | 97.20 | 96.99 | 96.98 | 96.76 | 96.44 | 95.43 | 95.12 |
| 20 | 96.64 | 97.48 | 97.40 | 97.26 | 97.29 | 97.08 | 96.64 | 95.24 | 94.84 |
| 30 | 96.76 | 97.53 | 97.55 | 97.36 | 97.32 | 97.11 | 96.76 | 95.34 | 94.93 |
| 40 | 96.54 | 97.48 | 97.39 | 97.22 | 97.19 | 96.97 | 96.54 | 95.02 | 94.56 |
| 50 | 96.60 | 97.53 | 97.47 | 97.28 | 97.26 | 97.06 | 96.60 | 95.10 | 94.64 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, R.; Ali, H.; Waqar, M.M.; Kim, S.C.; Kim, H. Pig Face Open Set Recognition and Registration Using a Decoupled Detection System and Dual-Loss Vision Transformer. Animals 2025, 15, 691. https://doi.org/10.3390/ani15050691
