EDC-YOLO-World-DB: A Model for Dairy Cow ROI Detection and Temperature Extraction Under Complex Conditions

Simple Summary

Abstract

1. Introduction

- A physical modelling-based dual-path image enhancement method is employed to improve the structural information and boundary contours of low-light and over-exposed images.

- Within the model, textual priors are employed to establish spatial structural relationships between irrelevant ROIs. Text-guided feature alignment and relational modelling then constrain cross-regional semantic consistency, thereby enhancing the robustness of detection and localisation.

- An Efficient Dynamic Convolution (EDC) structure is introduced to strengthen the network's modelling of the correlations and differences between ROI image information and semantic information. Concurrently, a Dual Bidirectional Feature Pyramid Network (DB) structure is adopted to achieve cross-level feature fusion of the dual-path information flow, enabling the model to better recognise fine-grained features.

- Considering the deformability and central symmetry of the ROI structure, this study optimises the training strategy in three ways: a task alignment metric (TAM) is employed to enhance semantic feature learning, Gaussian soft-constrained centre sampling is introduced to improve adaptability to deformable regions, and a hybrid IoU loss (CIoU + GIoU) is designed to stabilise bounding box regression under blurred boundaries and target shifts.
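
The hybrid IoU loss named in the last contribution can be illustrated with a minimal sketch. The source states only that CIoU and GIoU are combined; the equal weighting `w_ciou = 0.5`, the function name `hybrid_iou_loss`, and the corner-format boxes `(x1, y1, x2, y2)` are assumptions made for illustration, not the authors' implementation.

```python
import math


def hybrid_iou_loss(pred, target, w_ciou=0.5, eps=1e-9):
    """Weighted sum of the CIoU and GIoU losses for two (x1, y1, x2, y2) boxes.

    w_ciou is a hypothetical mixing weight; the source does not give one.
    """
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = target

    # Plain IoU from intersection and union areas.
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    union = (px2 - px1) * (py2 - py1) + (gx2 - gx1) * (gy2 - gy1) - inter + eps
    iou = inter / union

    # Smallest box enclosing both boxes (shared by GIoU and CIoU).
    enc_w = max(px2, gx2) - min(px1, gx1)
    enc_h = max(py2, gy2) - min(py1, gy1)
    enc_area = enc_w * enc_h + eps

    # GIoU penalises the empty part of the enclosing box.
    giou = iou - (enc_area - union) / enc_area

    # CIoU penalises centre distance and aspect-ratio mismatch.
    centre_dist2 = ((px1 + px2 - gx1 - gx2) ** 2 + (py1 + py2 - gy1 - gy2) ** 2) / 4.0
    diag2 = enc_w ** 2 + enc_h ** 2 + eps
    v = (4.0 / math.pi ** 2) * (
        math.atan((gx2 - gx1) / (gy2 - gy1 + eps))
        - math.atan((px2 - px1) / (py2 - py1 + eps))
    ) ** 2
    alpha = v / (1.0 - iou + v + eps)
    ciou = iou - centre_dist2 / diag2 - alpha * v

    return w_ciou * (1.0 - ciou) + (1.0 - w_ciou) * (1.0 - giou)


if __name__ == "__main__":
    print(hybrid_iou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes
    print(hybrid_iou_loss((0, 0, 2, 2), (1, 1, 3, 3)))  # partial overlap
    print(hybrid_iou_loss((0, 0, 1, 1), (2, 2, 3, 3)))  # disjoint boxes
```

Because the GIoU term still varies when the boxes do not overlap, the combined loss keeps a useful gradient for shifted targets, while the CIoU term adds centre-distance and aspect-ratio penalties.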

2. Materials and Analysis

2.1. Data Collection

- Each cow was restrained at the feed bunk using a neck rail;

- A handheld thermo-hygrometer was placed at a fixed position close to the cow and kept stationary;

- A veterinary mercury thermometer was inserted approximately 5 cm into the rectum to measure rectal temperature;

- After about 5 min, when the readings of the thermo-hygrometer and mercury thermometer had stabilised, the air temperature and humidity parameters of the infrared thermal imager were adjusted according to the current ambient conditions, and full-body thermal images were acquired from the rear and lateral views at a distance of approximately 1.5 m from the cow;

- After IRT image acquisition, the corresponding rectal temperature value was recorded;

- If the cow moved or the image was blurred, the measurements were repeated until a clear and complete thermal image was obtained.

2.2. Dataset Structure

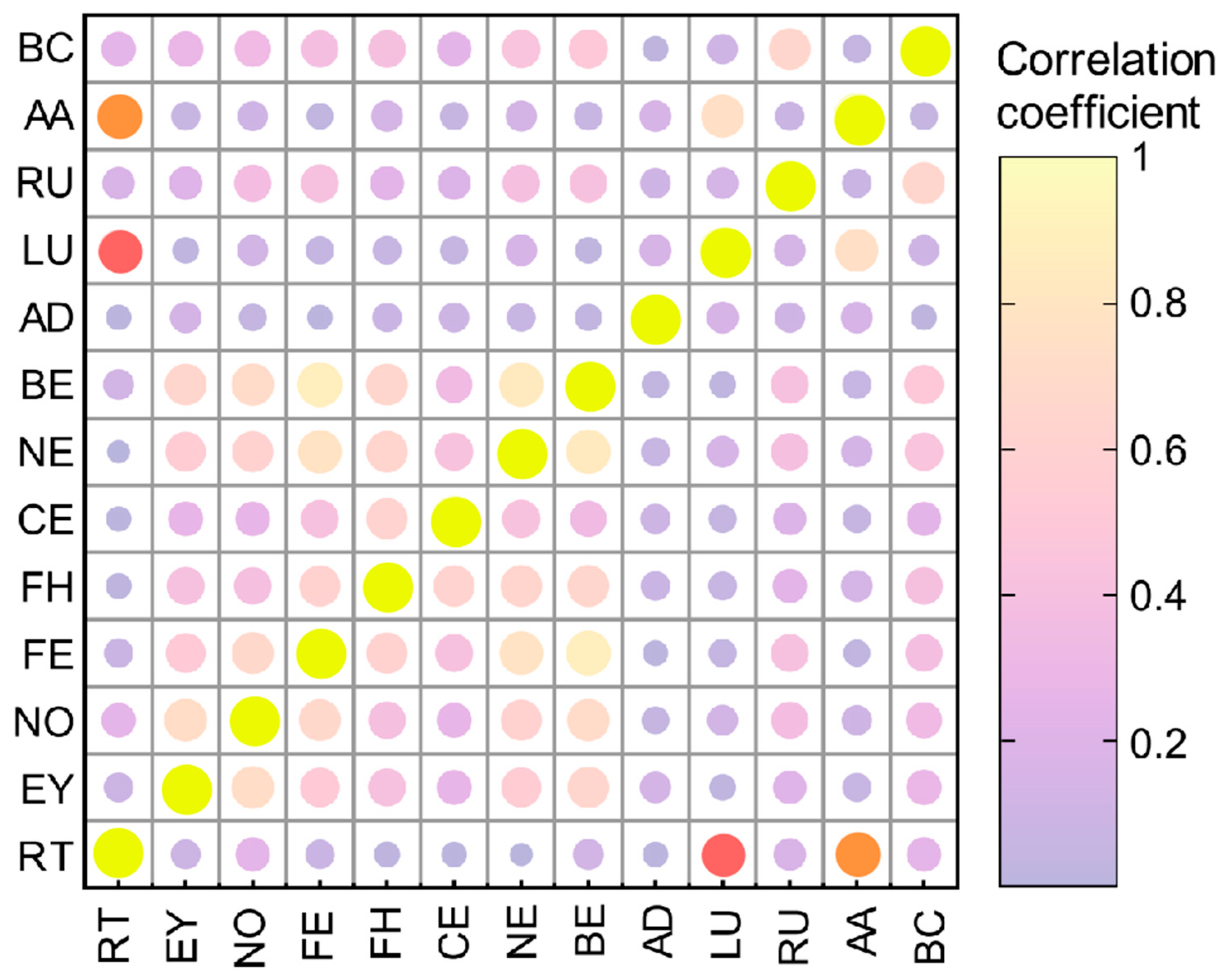

2.3. Data Analysis and ROI Selection

3. Methodology

3.1. Dual-Path Image Enhancement Method

3.1.1. Light Intensity Calculation

3.1.2. Low-Light Cow Image Enhancement Based on URetinex-Net

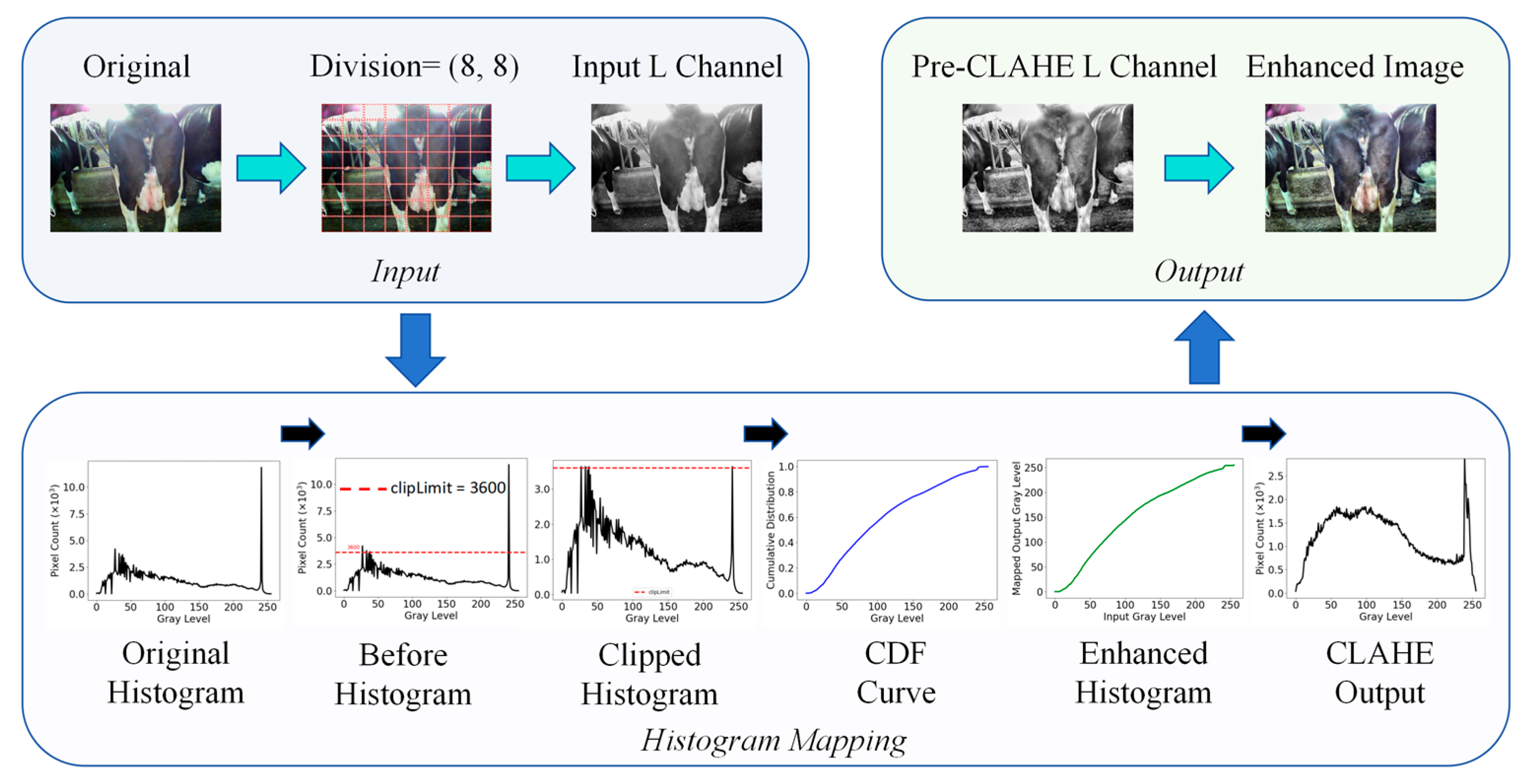

3.1.3. CLAHE-Based Overexposed Cow Image Enhancement

3.2. Improvements to YOLO-World Based on Reinforced Spatial and Structural Relationships

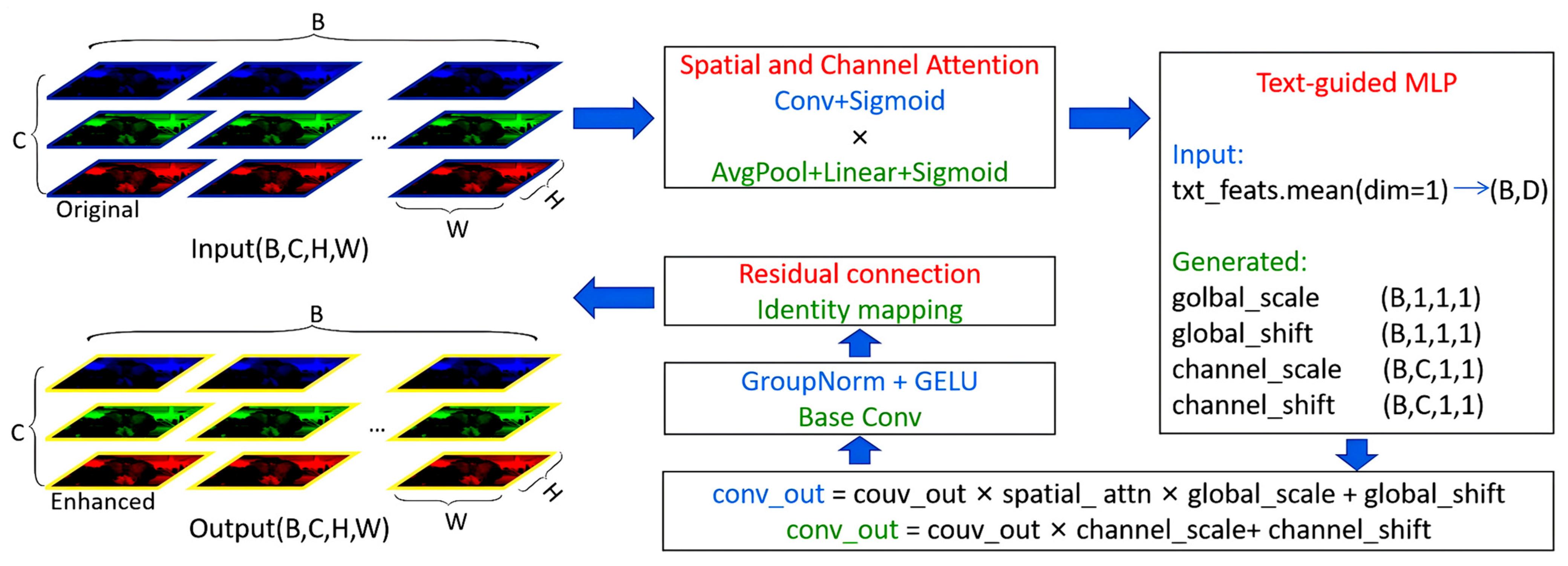

3.2.1. The Spatial Structural Relationship Between Text and ROI

3.2.2. EDC Module

3.2.3. Neck Structure Based on Dual Bidirectional Feature Pyramid Network (BiFPN)

3.3. Improvements to Training Strategies

3.3.1. Task Alignment Metric

3.3.2. Gaussian Soft-Constrained Centre Sampling

3.3.3. Mixed IoU Loss Function

3.4. Evaluation Criteria

4. Results

4.1. Image Enhancement Effect Evaluation

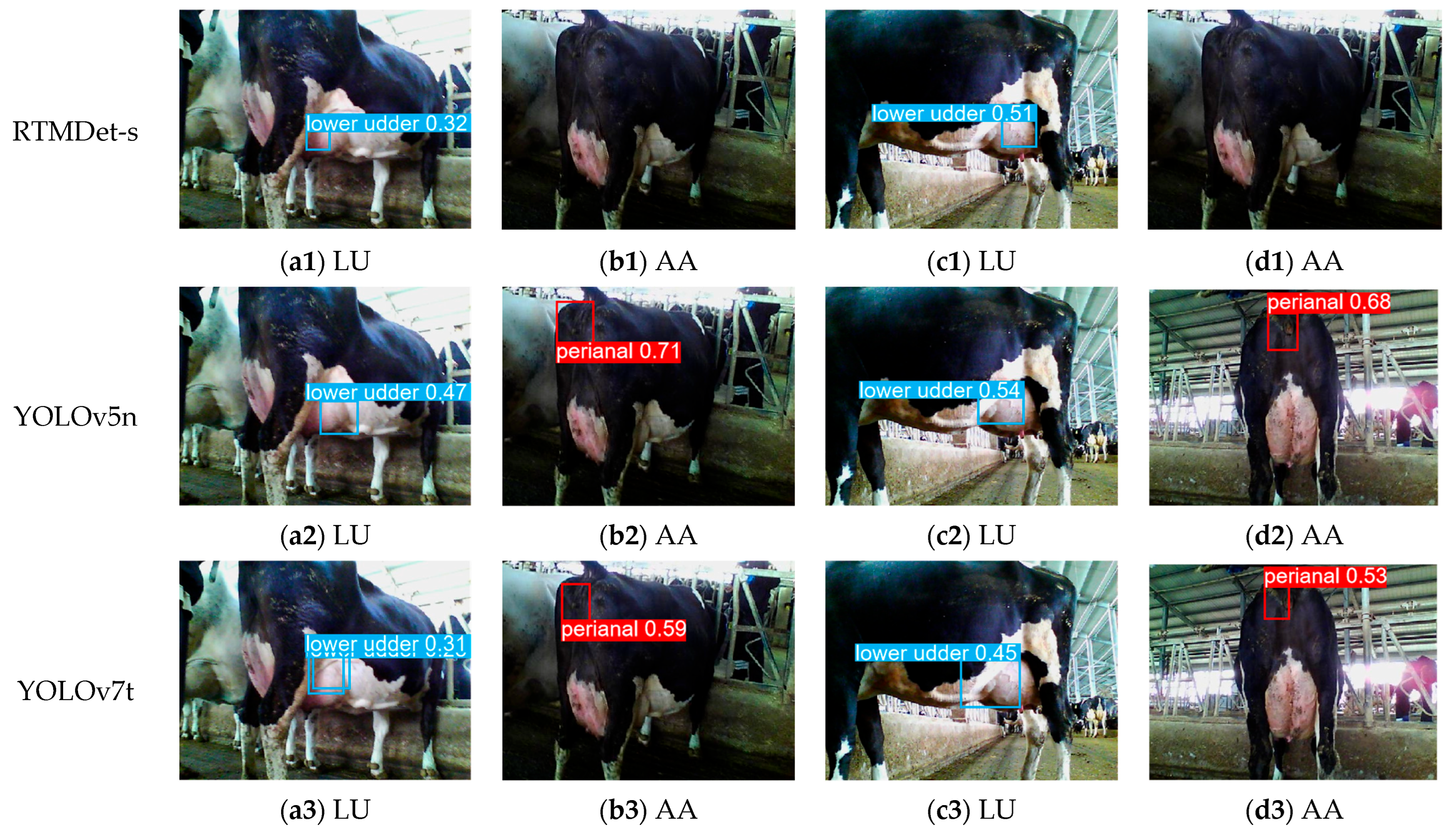

4.2. Performance Experiments of Different Models

4.3. Effectiveness Evaluation of Multi-Text Input in Spatial Structure Relationship Modelling

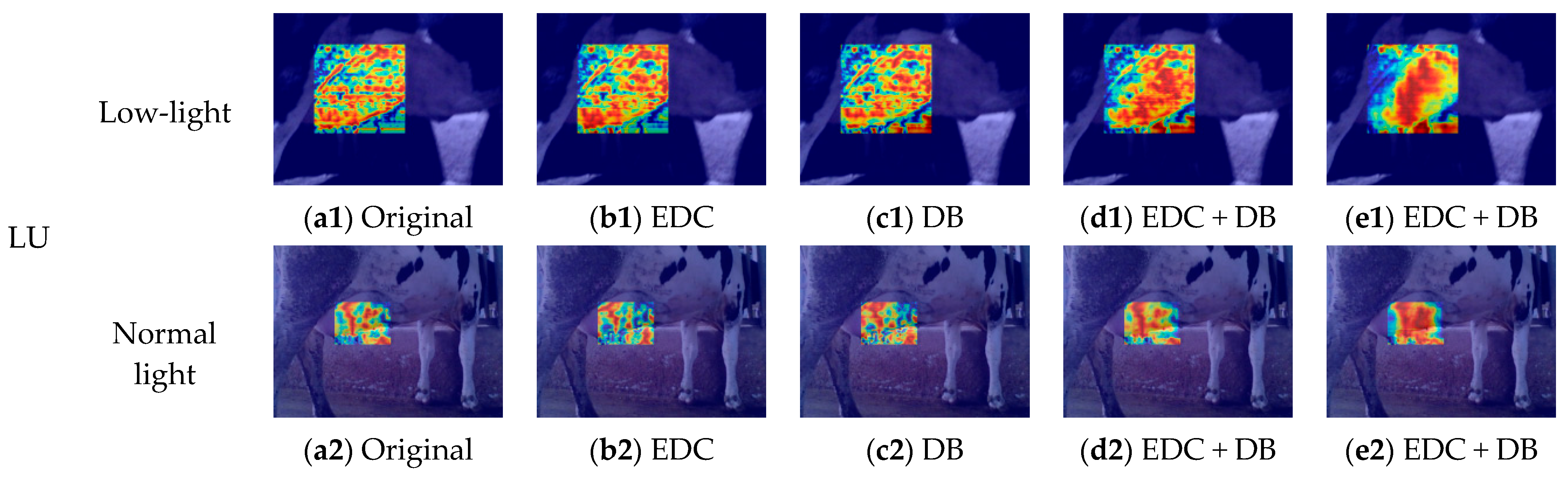

4.4. Comparison and Analysis of Visual Effects of Improved Modules Based on EigenCAM

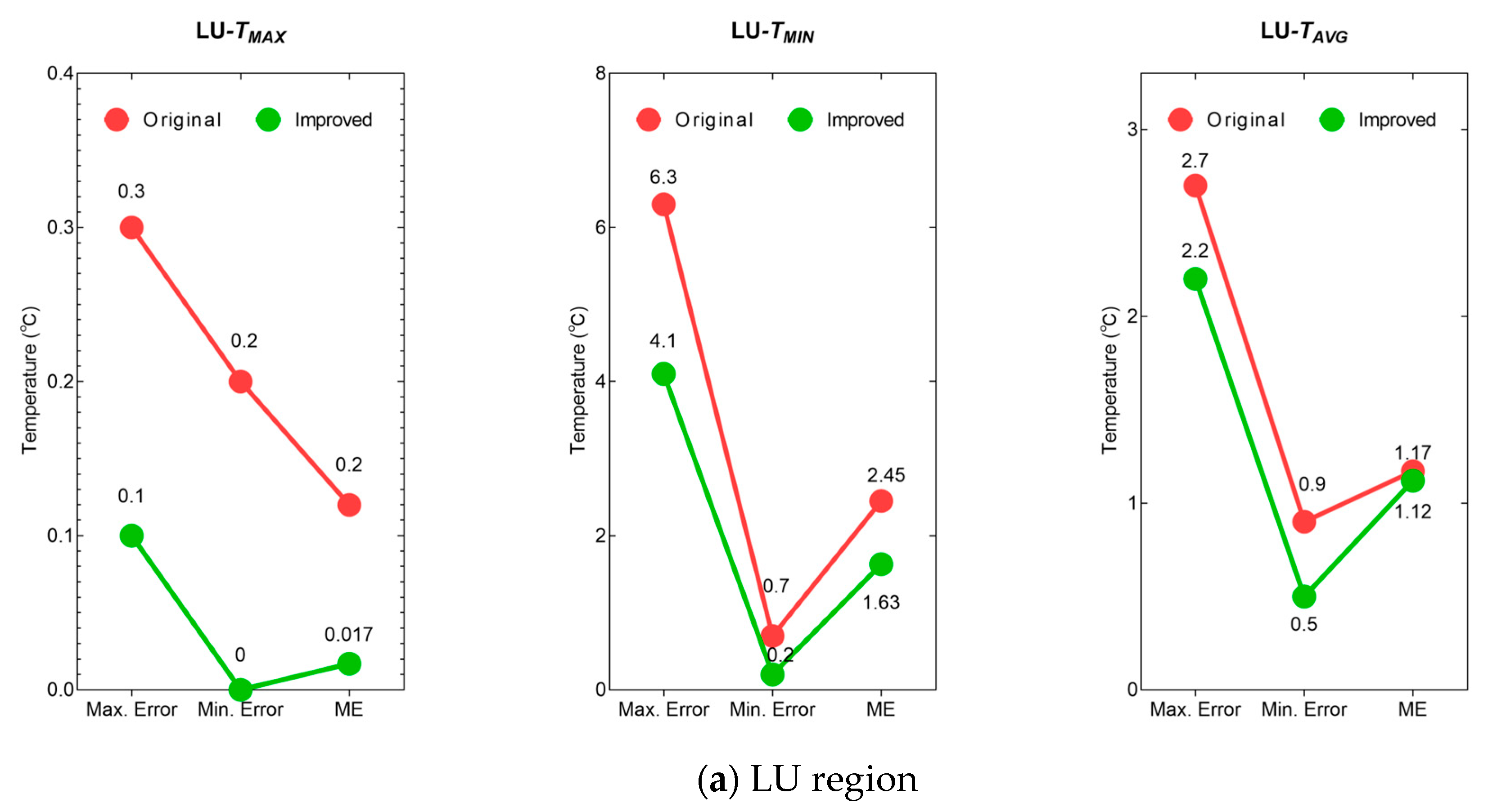

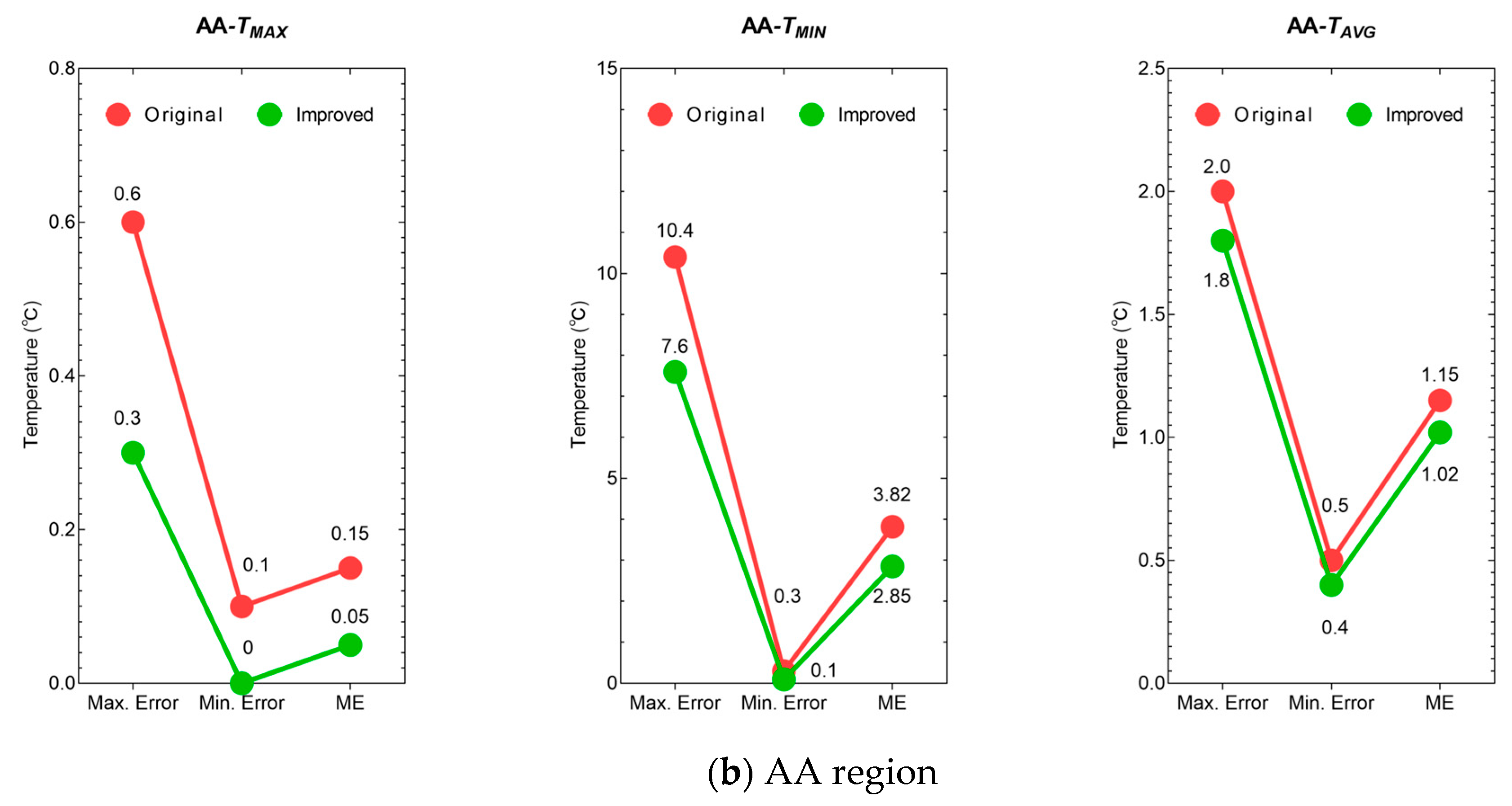

4.5. Temperature Extraction and Validation

5. Discussion

- URetinex-Net and CLAHE respectively address detail loss in low-light images and brightness inconsistencies in overexposed images through image enhancement. Combined with subsequent cross-layer fusion via a bidirectional pyramid, this enhances overall ROI texture discernibility and boundary clarity while improving robustness to lighting variations.

- Text-guided spatial structural relationships substantially mitigated colour interference and enhanced cross-scale consistency. Visualisation results demonstrate that text guidance concentrates heatmaps on structurally more plausible regions, while boundary alignment strategies better approximate actual anatomical contours. This indicates that weakly supervised semantic–geometric coupling effectively reduces errors stemming from visual discrepancies between colour and texture.

- EDC and DB synergistically enhance the separability and reusability of cross-modal and cross-layer features. Ablation experiments demonstrate that introducing either EDC or DB alone yields modest improvements in R and mAP50. However, DB slightly reduces P values under weak correlations, indicating that cross-layer multimodal fusion requires robust semantic alignment capabilities. When both techniques are concurrently applied to refine the model, P, R, and mAP50 improve synchronously, with heatmap distributions concentrating more sharply on the region of interest (ROI). This demonstrates the excellent enhancement effect of coupling dynamic semantic modulation with learning-based cross-layer fusion for fine-grained structural recognition.

- The improved training strategy significantly enhances positive sample quality and localisation stability. This optimisation enables the final model to achieve P = 88.65%, R = 85.77%, and mAP50 = 89.33%, representing improvements of 2.79, 3.01, and 1.92 percentage points, respectively, over the version without the improved training strategy.

- With the proposed method, the errors in the three extracted temperature measures were significantly reduced. Specifically, for LU, the ME of the three measures decreased by 66.6%, 33.5%, and 4.27%, respectively, compared to the original values; for AA, the ME of the three measures decreased by 66.6%, 25.4%, and 11.3%, respectively.

- Beyond statistical performance, the temperature extraction results are also physiologically consistent. Under increased heat load, central thermoregulatory control adjusts peripheral blood flow so that changes in core temperature are rapidly reflected at the perianal surface, which lies close to the rectum and pelvic cavity, while the more peripheral and strongly heat-dissipating lower udder tends to remain slightly cooler. The preserved RT–AA–LU gradient and the close agreement between model-derived AA and LU temperatures and rectal temperature, therefore, indicate that the network is capturing meaningful thermoregulatory signals rather than merely fitting image-level patterns.
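
The gains quoted for the improved training strategy can be checked with simple arithmetic. The sketch below subtracts the reported gains from the final scores, assuming the gains are absolute percentage-point differences; the dictionary names are illustrative. Under that assumption the reconstructed baseline coincides with the 5T → 2C row of the multi-text results table (85.86, 82.76, 87.41).

```python
# Final scores and reported gains, as stated in the Discussion (values in %).
final_scores = {"P": 88.65, "R": 85.77, "mAP50": 89.33}
reported_gain = {"P": 2.79, "R": 3.01, "mAP50": 1.92}

# Assumption: gains are absolute differences in percentage points.
baseline = {k: round(final_scores[k] - reported_gain[k], 2) for k in final_scores}

# The same gains expressed relative to the reconstructed baseline, for comparison.
relative_gain = {k: round(100.0 * reported_gain[k] / baseline[k], 2) for k in baseline}

if __name__ == "__main__":
    for name in final_scores:
        print(name, "baseline:", baseline[name], "relative gain (%):", relative_gain[name])
```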

6. Limitations and Future Research

- Real-time Performance: The image enhancement step adds processing overhead that reduces the model's real-time detection capability. Future research will explore lightweight end-to-end micro-enhancement strategies and incorporate acceleration techniques such as NPU deployment and FP16/INT8 inference.

- Occlusion: Tail wagging and dirt coverage may trigger false positives or negatives. Future research will incorporate temporal and short-term trajectory correlation to mitigate single-frame image uncertainty.

- Textual Prior Quality: The detail and accuracy of textual descriptions influence EDC’s weight distribution effectiveness. Subsequent research will establish more precise and verifiable anatomical corpora, alongside automated text enhancement and alignment mechanisms.

- Temperature error: Irrelevant pixels may lead to elevated temperature extraction errors. Future research will focus on refining the ROI segmentation of dairy cows to minimise the potential for interference from extraneous pixels.

- Fur colour pattern: In Holstein cows, the udder is predominantly white but may contain small black patches and is connected to surrounding irregular black–white fur patterns. Even these occasional dark areas can noticeably degrade model performance, as the network can only learn from shape cues and two fur colours. This study mainly addresses the effect of small black patches and black–white junctions around the udder; future research will further refine the model for cows with predominantly white udders.

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Value |
|---|---|
| RGB resolution | 640 × 480 pixels |
| Infrared resolution | 320 × 240 pixels |
| Emissivity | 0.98 |
| Field of view | 45° × 34° |
| Spatial resolution | 3.7 mrad |
| Thermal sensitivity (NETD) | 50 mK |
| Temperature measurement range | −20 to 250 °C |

| Dataset split | Low light (images) | Normal light (images) | Overexposed (images) |
|---|---|---|---|
| Training set | 733 | 955 | 624 |
| Validation set | 245 | 318 | 208 |
| Test set | 245 | 318 | 208 |
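
The split sizes in the dataset table can be summarised with a short calculation. The sketch below only re-uses the counts from the table; the variable names are illustrative, and the observation that the split is close to 60/20/20 is derived from these counts rather than stated in the source.

```python
# Image counts per split and illumination condition, copied from the dataset table.
counts = {
    "train": {"low": 733, "normal": 955, "over": 624},
    "val": {"low": 245, "normal": 318, "over": 208},
    "test": {"low": 245, "normal": 318, "over": 208},
}

# Totals per split and overall.
split_totals = {split: sum(by_light.values()) for split, by_light in counts.items()}
total_images = sum(split_totals.values())

# Share of each split in the whole dataset.
split_share = {split: n / total_images for split, n in split_totals.items()}

# Totals per illumination condition across all splits.
light_totals = {
    light: sum(counts[split][light] for split in counts)
    for light in ("low", "normal", "over")
}

if __name__ == "__main__":
    print(split_totals, total_images)
    print({k: round(v, 3) for k, v in split_share.items()})
    print(light_totals)
```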

| Cow ID | RT (°C) | LU (°C) | AA (°C) |
|---|---|---|---|
| Cow 1 | 38.9 | 36.5 | 37.6 |
| Cow 2 | 38.8 | 36.7 | 37.4 |
| Cow 3 | 39.0 | 37.2 | 38.1 |
| Cow 4 | 38.2 | 36.4 | 37.1 |
| Cow 5 | 38.6 | 35.2 | 37.2 |
| Cow 6 | 38.6 | 35.7 | 37.3 |
| Cow 7 | 38.8 | 36.5 | 37.2 |
| Cow 8 | 38.4 | 36.2 | 37.4 |
| Cow 9 | 38.4 | 36.4 | 36.9 |
| Cow 10 | 39.7 | 37.5 | 38.5 |
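
The RT–AA–LU gradient discussed in the text can be checked directly against the ten cows listed in this table. The following sketch is illustrative only: it uses the tabulated values, and the variable names and the choice of mean offsets as a summary are assumptions rather than part of the authors' analysis.

```python
# (RT, LU, AA) in degrees Celsius for Cow 1 to Cow 10, copied from the table.
cows = [
    (38.9, 36.5, 37.6),
    (38.8, 36.7, 37.4),
    (39.0, 37.2, 38.1),
    (38.2, 36.4, 37.1),
    (38.6, 35.2, 37.2),
    (38.6, 35.7, 37.3),
    (38.8, 36.5, 37.2),
    (38.4, 36.2, 37.4),
    (38.4, 36.4, 36.9),
    (39.7, 37.5, 38.5),
]

# The gradient holds for a cow when rectal > perianal (AA) > lower udder (LU).
gradient_holds = all(rt > aa > lu for rt, lu, aa in cows)

# Mean offsets between adjacent levels of the gradient.
mean_rt_minus_aa = sum(rt - aa for rt, lu, aa in cows) / len(cows)
mean_aa_minus_lu = sum(aa - lu for rt, lu, aa in cows) / len(cows)

if __name__ == "__main__":
    print("gradient holds for all cows:", gradient_holds)
    print("mean RT - AA:", round(mean_rt_minus_aa, 2))
    print("mean AA - LU:", round(mean_aa_minus_lu, 2))
```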

| Text | Description |
|---|---|
| LU | Next to the hind leg of the cow, where the hind leg connects to the RU, the RU is not visible when the LU is visible |
| AA | Located at the top of the RU, above the hindquarters, adjacent to the stifle |
| RU | From the rear of the cow, it can be observed located below the AA region, adjacent to the hind legs, and connected to the LU at the lowest point |
| Hind legs | Next to the LU, RU, and AA |
| Hind quarters | Including the AA, upper side of the tail, and entire hindquarters |

| Text | LU | AA | RU | Hind legs | Hind quarters | P (%) | R (%) | mAP50 (%) |
|---|---|---|---|---|---|---|---|---|
| 2T → 2C | √ | √ | × | × | × | 82.25 | 78.95 | 85.74 |
| 3T → 2C | √ | √ | √ | × | × | 83.63 | 78.64 | 83.09 |
| 3T → 2C | √ | √ | × | √ | × | 82.25 | 78.95 | 85.74 |
| 3T → 2C | √ | √ | × | × | √ | 82.25 | 78.95 | 85.74 |
| 4T → 2C | √ | √ | √ | √ | × | 84.77 | 81.84 | 85.61 |
| 4T → 2C | √ | √ | √ | × | √ | 83.13 | 80.32 | 84.97 |
| 4T → 2C | √ | √ | × | √ | √ | 83.77 | 81.84 | 85.61 |
| 5T → 2C | √ | √ | √ | √ | √ | 85.86 | 82.76 | 87.41 |

| Condition | RT (°C) | Actual LU (°C) | Actual AA (°C) | Predicted LU (°C) | Predicted AA (°C) |
|---|---|---|---|---|---|
| Low light | 38.6 | 35.2 | 37.2 | 35.1 | 37.2 |
| Low light | 38.6 | 35.7 | 37.3 | 35.7 | 37.2 |
| Normal light | 38.9 | 36.5 | 37.6 | 36.4 | 37.5 |
| Normal light | 38.8 | 36.7 | 37.4 | 36.7 | 37.4 |
| Overexposed | 38.4 | 36.4 | 36.9 | 36.3 | 36.7 |
| Overexposed | 39.7 | 37.5 | 38.5 | 37.4 | 38.2 |
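
As a worked example, the agreement between actual and predicted temperatures in this table can be quantified for the six listed samples. The sketch below computes a mean absolute error per ROI; treating the error as a mean absolute difference, and the helper name `mean_abs_error`, are assumptions, and the result covers only these six rows rather than the full test set.

```python
# (actual, predicted) temperatures in degrees Celsius, copied row by row from the table.
lu_pairs = [(35.2, 35.1), (35.7, 35.7), (36.5, 36.4), (36.7, 36.7), (36.4, 36.3), (37.5, 37.4)]
aa_pairs = [(37.2, 37.2), (37.3, 37.2), (37.6, 37.5), (37.4, 37.4), (36.9, 36.7), (38.5, 38.2)]


def mean_abs_error(pairs):
    """Average absolute difference between actual and predicted values."""
    return sum(abs(actual - predicted) for actual, predicted in pairs) / len(pairs)


lu_mae = mean_abs_error(lu_pairs)
aa_mae = mean_abs_error(aa_pairs)

if __name__ == "__main__":
    print("LU mean absolute error (deg C):", round(lu_mae, 3))
    print("AA mean absolute error (deg C):", round(aa_mae, 3))
```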

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Song, H.; Kang, Z.; Xue, H.; Hu, J.; Norton, T. EDC-YOLO-World-DB: A Model for Dairy Cow ROI Detection and Temperature Extraction Under Complex Conditions. Animals 2025, 15, 3361. https://doi.org/10.3390/ani15233361
