SATSN: A Spatial-Adaptive Two-Stream Network for Automatic Detection of Giraffe Daily Behaviors

Simple Summary

Abstract

1. Introduction

- I.

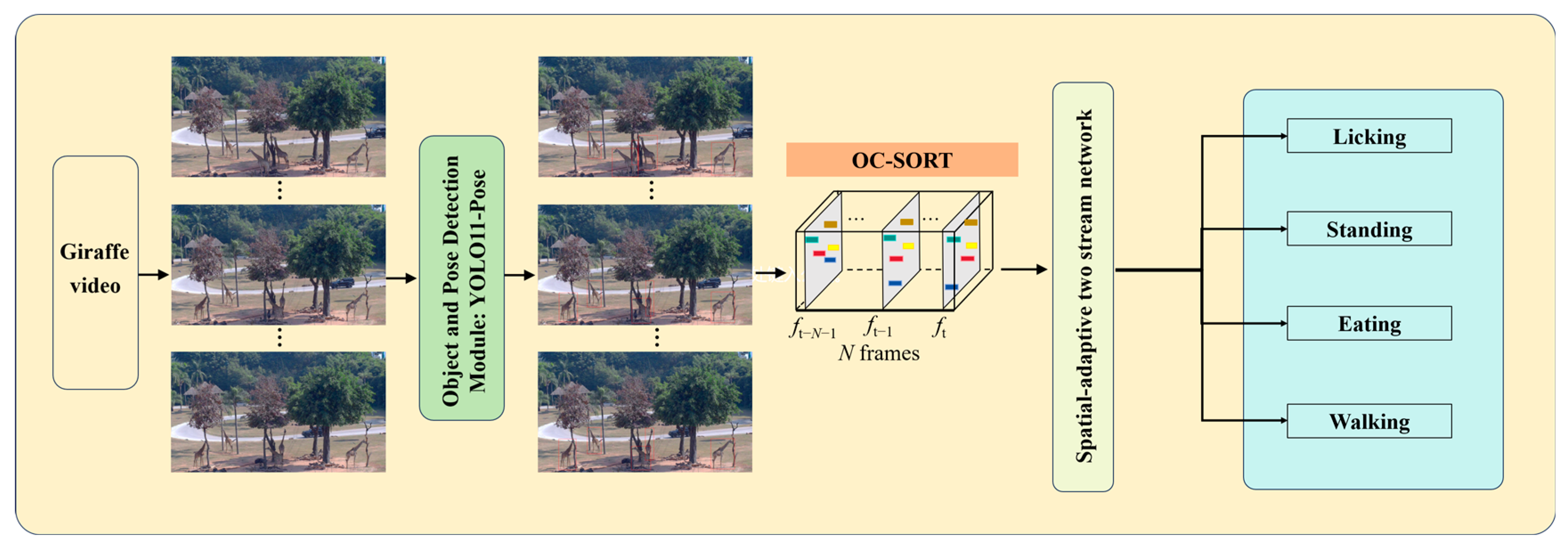

- We propose an automated multi-behavior detection method for giraffes, integrating object detection, keypoint estimation, multi-object tracking, and behavior detection modules. In addition, a mouth-region-based strategy is designed specifically for detecting licking behavior. The proposed method enables efficient recognition of various daily behaviors, including licking, eating, standing, and walking.

- II.

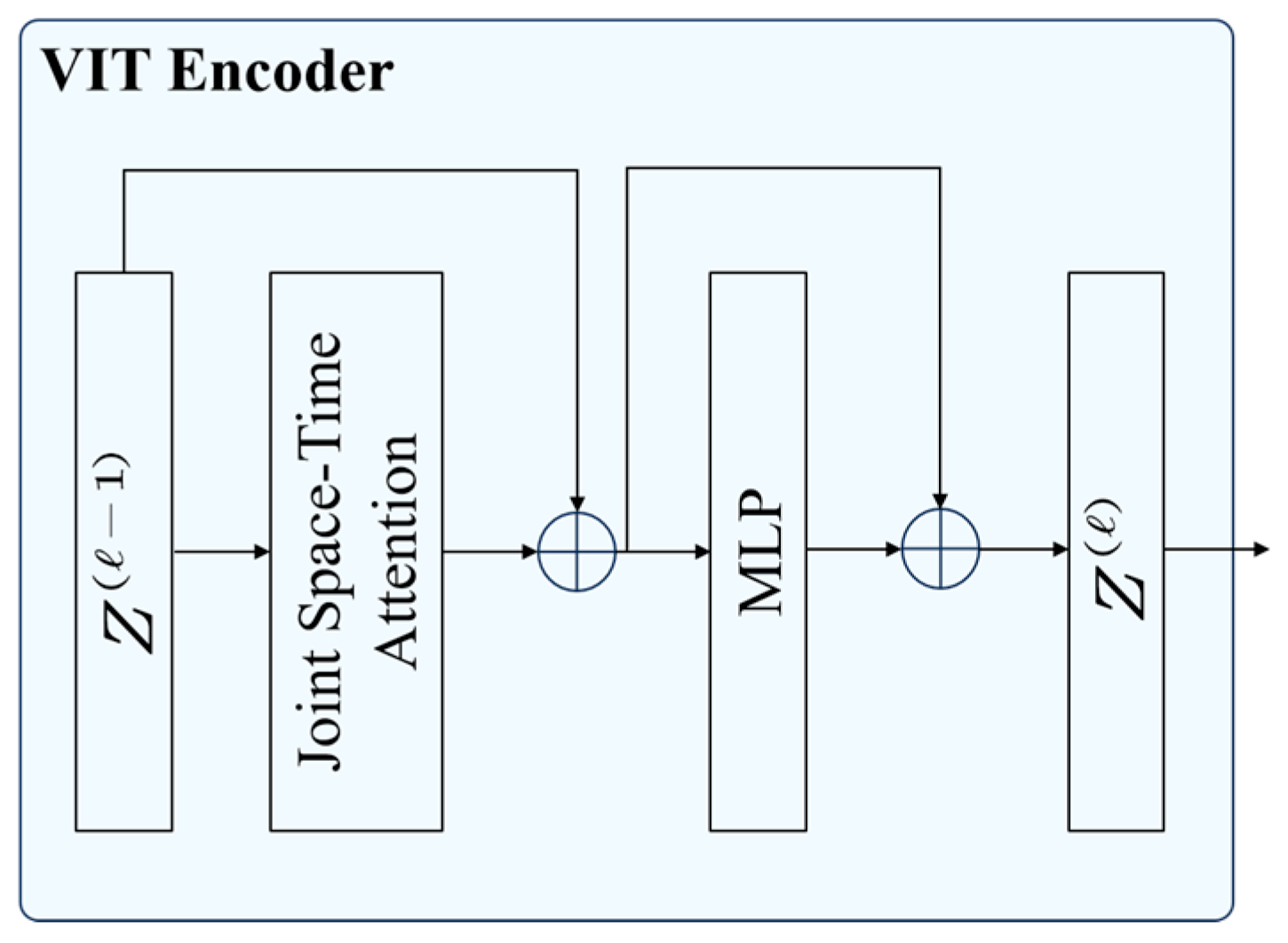

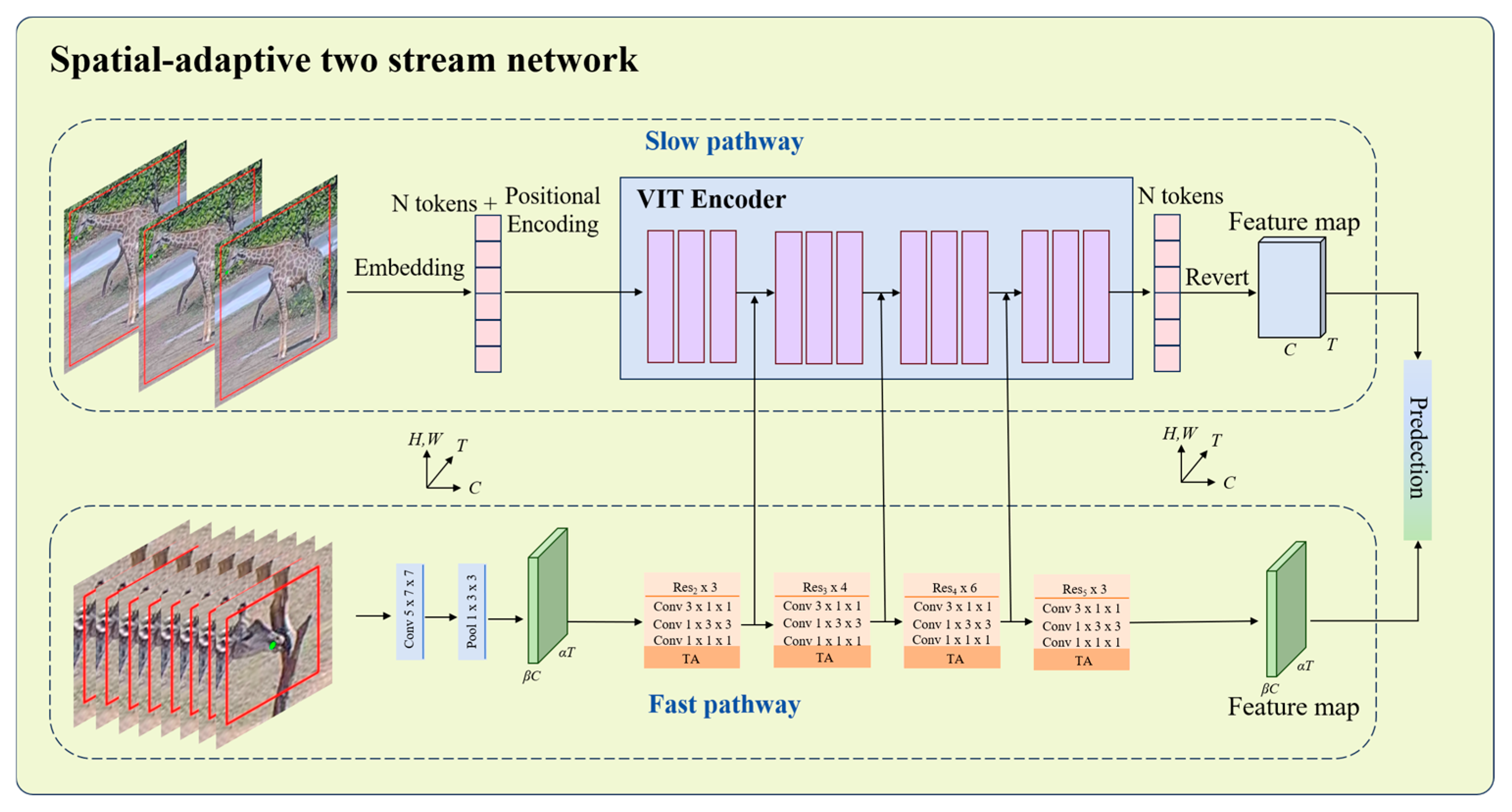

- In the behavior detection module, the SlowFast network is structurally enhanced to improve spatiotemporal modeling capabilities. Specifically, a Video Transformer (ViT) encoder is integrated into the slow pathway to strengthen the modeling of long-range spatiotemporal dependencies, while a Temporal Attention (TA) module is embedded in the fast pathway to improve responsiveness to motion changes. These architectural modifications significantly enhance the model’s ability to improve classification accuracy.

- III. Experimental results demonstrate the effectiveness of the proposed method in detecting giraffe daily behaviors in zoo environments. This study introduces a novel approach to monitoring these behaviors, offering practical value for the timely surveillance and scientific management of giraffes' physical and psychological well-being in zoological settings.
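The two-pathway design in contribution II can be illustrated with a minimal NumPy sketch, assuming the generic SlowFast sampling scheme (the slow pathway takes every τ-th frame and the fast pathway samples α times more densely; τ = 16 and α = 8 are common settings from the SlowFast paper, assumed here) and a generic softmax temporal-attention reweighting of fast-pathway features. The function names and the scoring vector `w`, which stands in for learned parameters, are hypothetical. This is not the authors' TA module, and the ViT encoder of the slow pathway is not shown.

```python
import numpy as np


def sample_slowfast_indices(num_frames, tau=16, alpha=8):
    """Frame indices for the slow (stride tau) and fast (stride tau // alpha) pathways."""
    assert tau % alpha == 0, "tau must be divisible by alpha"
    slow = np.arange(0, num_frames, tau)
    fast = np.arange(0, num_frames, tau // alpha)
    return slow, fast


def temporal_attention(x, w):
    """Reweight frames of a (C, T, H, W) feature map with softmax attention over time.

    w: scoring vector of shape (C,), a hypothetical stand-in for learned weights.
    Returns the reweighted features (same shape as x) and the weights of shape (T,).
    """
    # Global average pooling over space gives one C-dim descriptor per frame: (T, C).
    desc = x.mean(axis=(2, 3)).T
    scores = desc @ w                      # one scalar score per frame, shape (T,)
    scores = scores - scores.max()         # subtract max for numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()  # softmax over time, sums to 1
    # Scale each frame by its weight; multiplying by T makes uniform weights an identity.
    out = x * (weights * x.shape[1])[None, :, None, None]
    return out, weights


slow_idx, fast_idx = sample_slowfast_indices(64)   # 4 slow frames, 32 fast frames
rng = np.random.default_rng(0)
fast_feats = rng.standard_normal((8, len(fast_idx), 7, 7))  # toy (C, T, H, W) features
attended, attn = temporal_attention(fast_feats, rng.standard_normal(8))
```

Frames whose pooled descriptors score highly are amplified and the rest are damped, which is one simple way to make a pathway more responsive to frames with pronounced motion.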

2. Methods

2.1. Materials

2.1.1. Animals and Video Acquisition

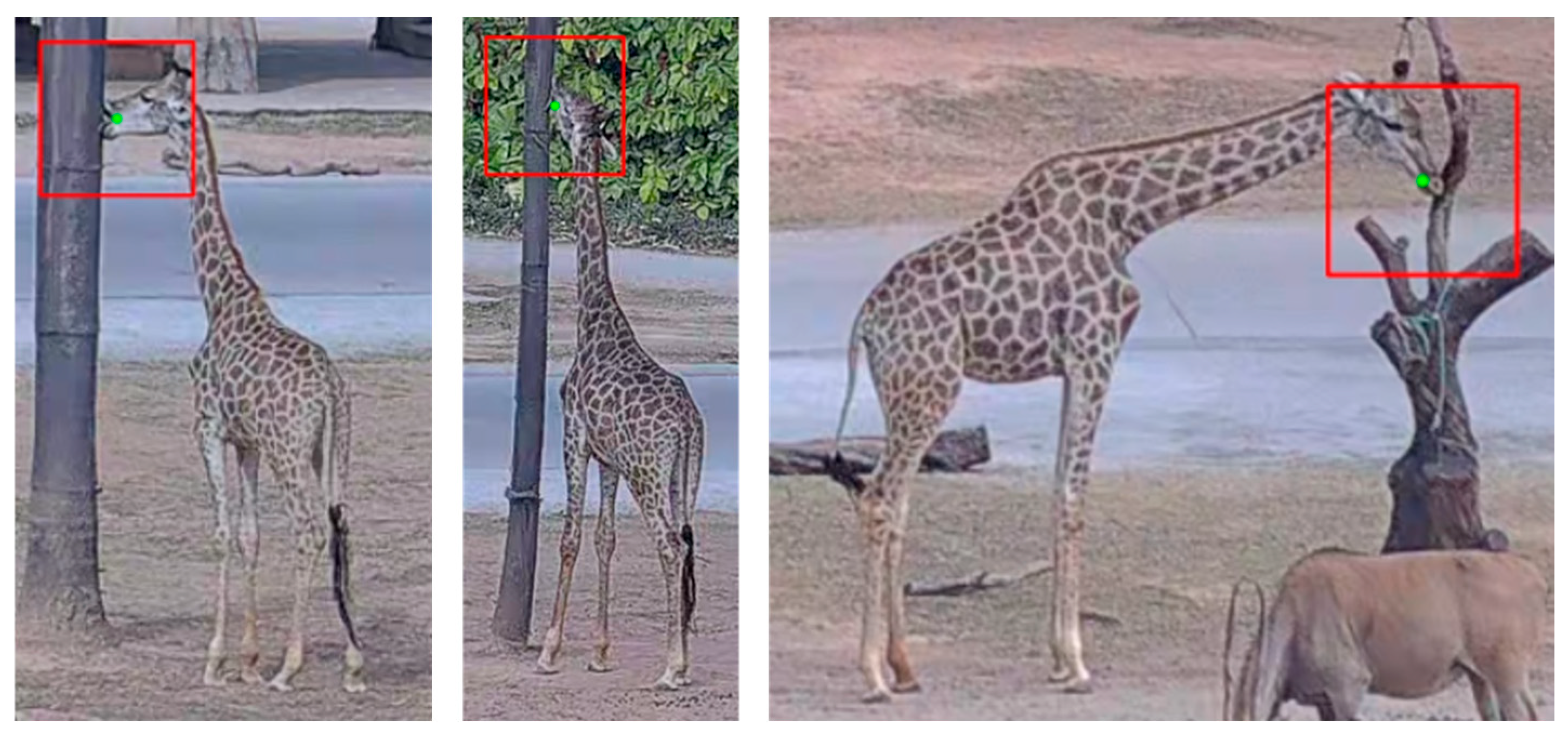

2.1.2. Data Preprocessing

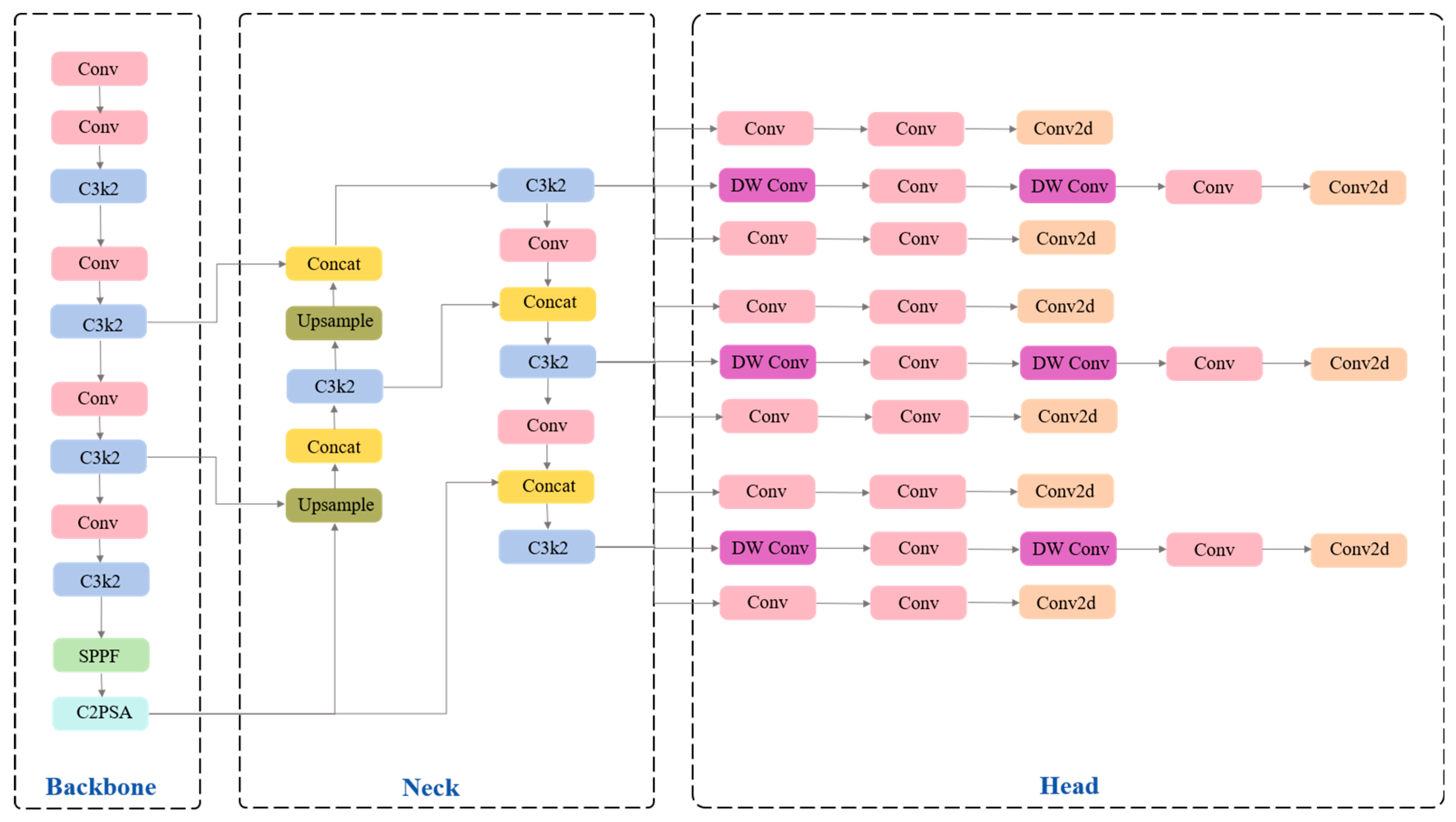

2.2. YOLO11-Pose Network

2.3. Spatial-Adaptive Two-Stream Network

2.4. TA (Temporal Attention) Module

2.5. OC-SORT

2.6. Setup

3. Results

3.1. Evaluation Metrics

3.2. Experimental Results

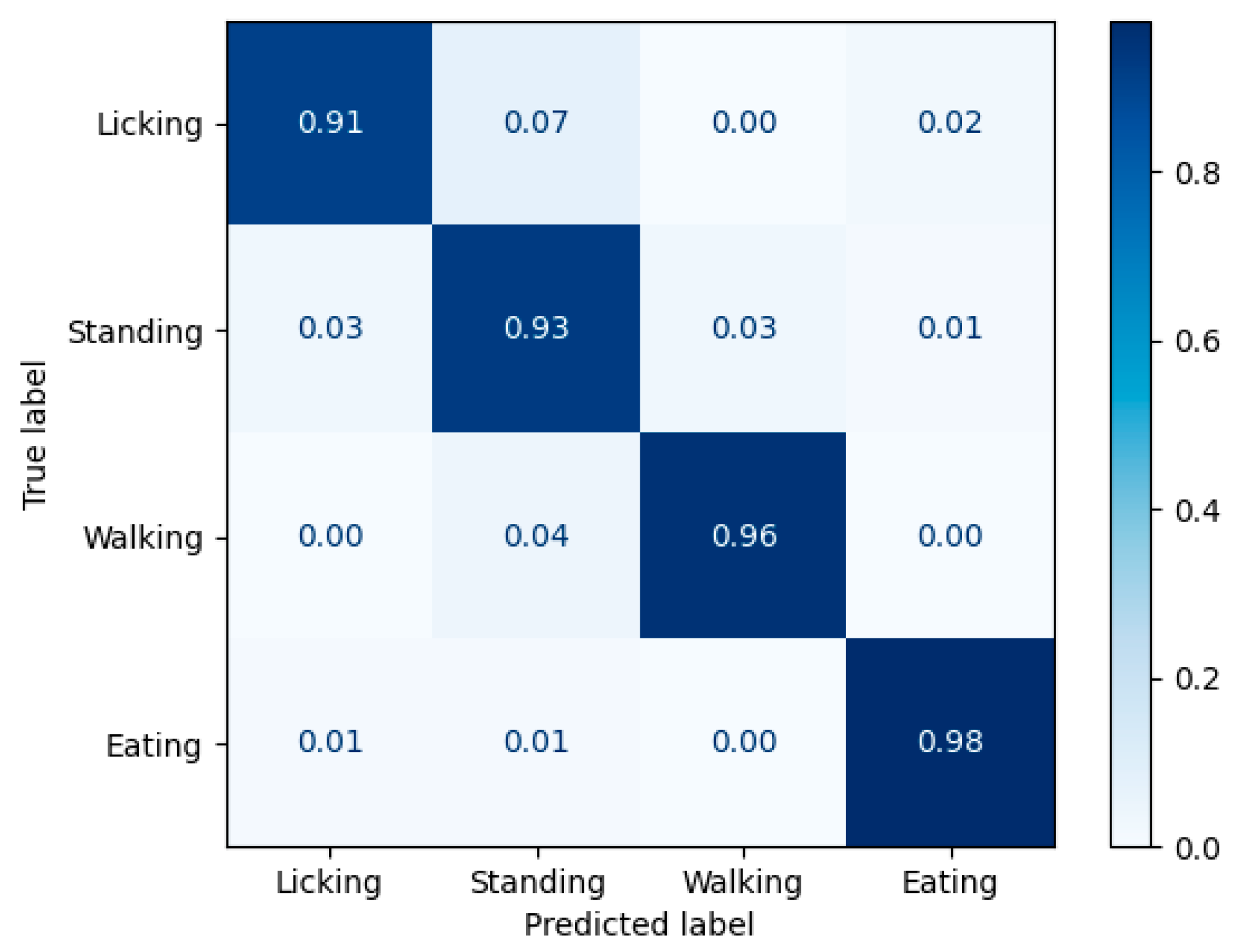

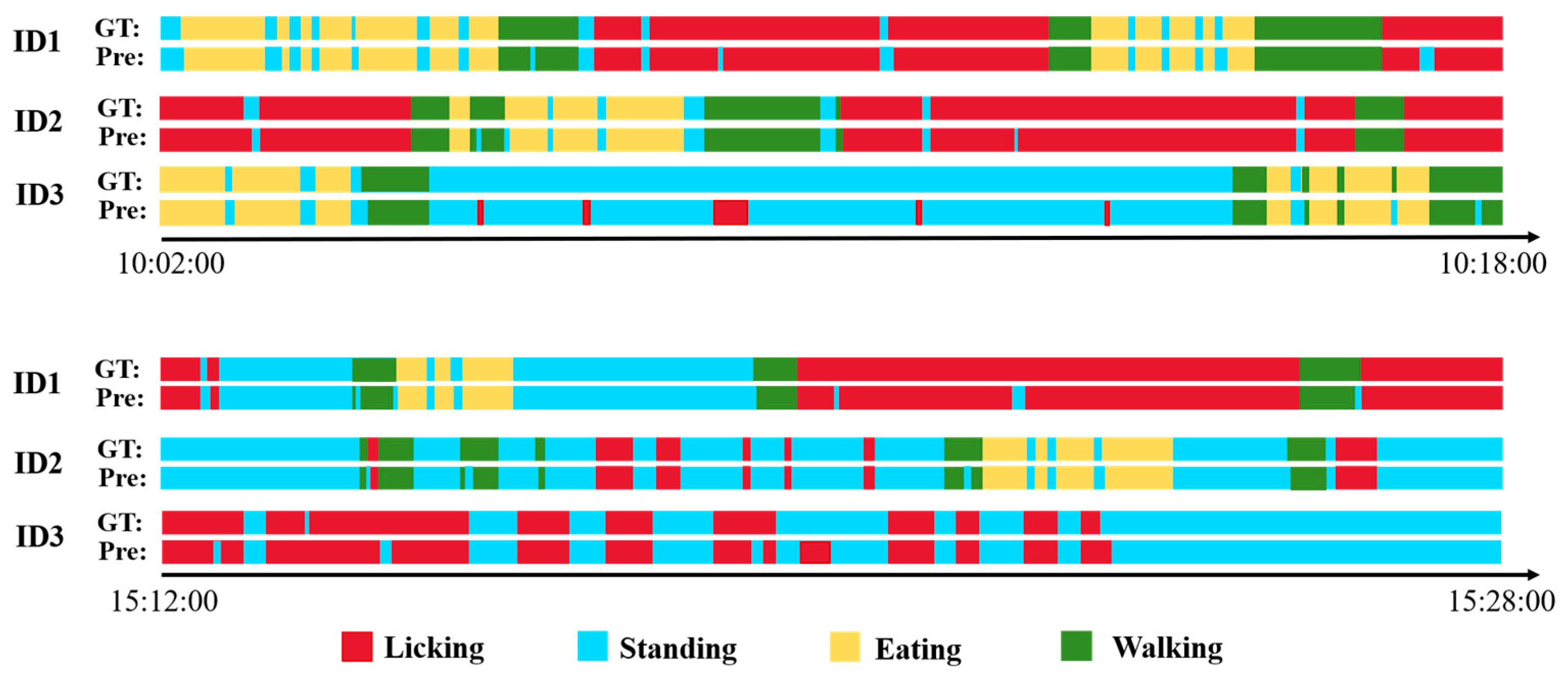

3.3. Ablation Experiments and Comparison with Different Behavior Detection Methods

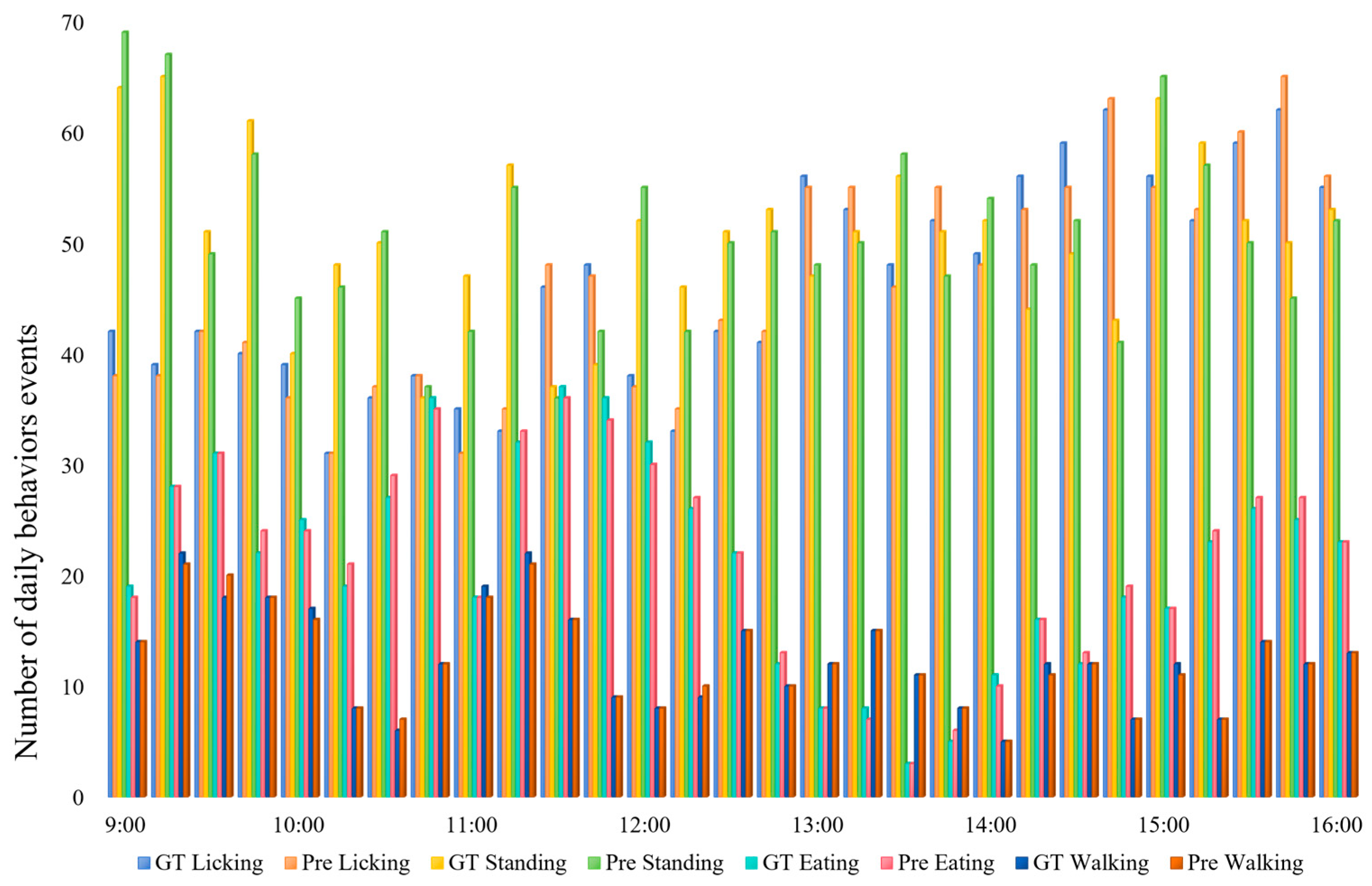

3.4. Giraffe Daily Behavior Statistics

4. Discussion

4.1. Advantages of SATSN

4.2. Performance Analysis

4.3. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Behavior | Behavior Description |
|---|---|
| Licking | Movement of the tongue across trees, troughs, or other non-food objects. |
| Standing | Supports the body on both forelimbs and both hindlimbs and does not engage in any of the other listed behaviors. |
| Walking | Significant change in body position, such as moving forward in a normal posture. |
| Eating | Ingestion of food from troughs or nibbling of forage low on the ground. |

| Hyperparameters | Batch Size | Epochs | Learning Rate | Momentum |
|---|---|---|---|---|
| Value | 4 | 200 | 0.02 | 0.9 |
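The table lists a momentum term but does not name the optimizer. Assuming plain SGD with momentum (our assumption), a single parameter update with these values can be sketched as follows. `CONFIG` and `sgd_momentum_step` are hypothetical names, and the update follows the formulation used by PyTorch's `torch.optim.SGD` without dampening: the velocity accumulates the raw gradient and is then scaled by the learning rate.

```python
# Training hyperparameters from the table above.
CONFIG = {"batch_size": 4, "epochs": 200, "learning_rate": 0.02, "momentum": 0.9}


def sgd_momentum_step(param, grad, velocity,
                      lr=CONFIG["learning_rate"], momentum=CONFIG["momentum"]):
    """One SGD-with-momentum update (assumed optimizer):
    velocity <- momentum * velocity + grad
    param    <- param - lr * velocity
    """
    velocity = momentum * velocity + grad
    param = param - lr * velocity
    return param, velocity


# Toy example: one step on f(x) = x**2, whose gradient is 2x.
x, v = 1.0, 0.0
x, v = sgd_momentum_step(x, 2 * x, v)   # x becomes 0.96, v becomes 2.0
```

With a momentum of 0.9, each step carries 90% of the previous velocity forward, which smooths noisy gradients from the small batch size of 4.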

| Input Scheme | AP | Precision | Recall | F1-Measure |
|---|---|---|---|---|
| A | 88.38% ± 0.10 | 88.90% ± 0.07 | 88.38% ± 0.05 | 88.63% ± 0.05 |
| B | 91.69% ± 0.27 | 92.33% ± 0.14 | 91.29% ± 0.23 | 91.81% ± 0.16 |
| C | 87.70% ± 0.09 | 87.24% ± 0.10 | 89.50% ± 0.11 | 88.36% ± 0.05 |
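The F1-measure is the harmonic mean of precision and recall. The short check below applies that formula to the mean precision and recall of each input scheme; it reproduces the reported F1 values to within 0.01 percentage points (the small gap for scheme A is consistent with rounding or with averaging F1 over runs, which is an assumption).

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (the F1-measure)."""
    return 2 * precision * recall / (precision + recall)


# Mean precision and recall (%) for input schemes A, B, C from the table above.
schemes = {"A": (88.90, 88.38), "B": (92.33, 91.29), "C": (87.24, 89.50)}
for name, (p, r) in schemes.items():
    print(f"{name}: F1 = {f1_score(p, r):.2f}%")
```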

| Model | Description | mAP | Model Size (MB) | Speed (FPS) |
|---|---|---|---|---|
| SlowFast | Original SlowFast architecture | 89.58% | 273 | 4.2 |
| SlowFast + TA | TA module integrated into the fast pathway | 90.13% | 289 | 2.3 |
| SlowFast + ViT Encoder | Slow pathway's 3D ResNet replaced with a ViT encoder | 91.00% | 323 | 4.9 |
| SATSN (Ours) | ViT encoder in slow pathway + TA module in fast pathway + localized region feature extraction strategy | 93.99% | 332 | 5.7 |
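The ablation gains can be read directly as differences from the SlowFast baseline. The sketch below computes them from the mAP column; the dictionary names are illustrative. SATSN also includes the localized region feature extraction strategy, so its 4.41-point gain exceeds the 1.97-point sum of the two single-module gains, and the table alone does not isolate the contribution of the region strategy.

```python
# mAP (%) from the ablation table above.
ablation = {
    "SlowFast": 89.58,
    "SlowFast + TA": 90.13,
    "SlowFast + ViT encoder": 91.00,
    "SATSN": 93.99,
}
baseline = ablation["SlowFast"]

# Gain of each variant over the original SlowFast, in percentage points.
gains = {name: round(m - baseline, 2) for name, m in ablation.items()}

# Sum of the two single-module gains, for comparison with the full model.
single_sum = round(gains["SlowFast + TA"] + gains["SlowFast + ViT encoder"], 2)
print(gains, single_sum)
```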

| Model | mAP | Licking (AP) | Standing (AP) | Walking (AP) | Eating (AP) | Model Size (MB) |
|---|---|---|---|---|---|---|
| SATSN (Ours) | 93.99% ± 0.01 | 91.69% ± 0.27 | 92.30% ± 0.07 | 95.90% ± 0.09 | 96.08% ± 0.12 | 332 |
| SlowOnly | 89.02% ± 0.13 | 85.82% ± 0.19 | 87.38% ± 0.18 | 93.85% ± 0.09 | 89.25% ± 0.23 | 258 |
| ACRN | 85.87% ± 0.12 | 73.39% ± 0.16 | 86.70% ± 0.16 | 96.24% ± 0.15 | 87.35% ± 0.19 | 737 |
| VideoMAE | 89.42% ± 0.24 | 84.77% ± 0.14 | 87.64% ± 0.15 | 96.01% ± 0.11 | 90.06% ± 0.11 | 1064 |
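Assuming mAP is the unweighted mean of the four per-class APs (an assumption; the averaging procedure is not stated here), the SATSN row is reproduced exactly at 93.99%. The baseline rows differ from the reported mAP by up to about 0.2 percentage points under this assumption, so the authors' averaging (for example over repeated runs) may differ from this simple calculation.

```python
from statistics import mean

# Per-class AP (%) for licking, standing, walking, eating, from the table above.
per_class_ap = {
    "SATSN": [91.69, 92.30, 95.90, 96.08],
    "SlowOnly": [85.82, 87.38, 93.85, 89.25],
    "ACRN": [73.39, 86.70, 96.24, 87.35],
    "VideoMAE": [84.77, 87.64, 96.01, 90.06],
}
reported_map = {"SATSN": 93.99, "SlowOnly": 89.02, "ACRN": 85.87, "VideoMAE": 89.42}

for model, aps in per_class_ap.items():
    print(f"{model}: mean of class APs = {mean(aps):.2f}%, "
          f"reported mAP = {reported_map[model]:.2f}%")
```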

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gan, H.; Wu, X.; Chen, J.; Wang, J.; Fang, Y.; Xue, Y.; Jiang, T.; Chen, H.; Zhang, P.; Dong, G.; et al. SATSN: A Spatial-Adaptive Two-Stream Network for Automatic Detection of Giraffe Daily Behaviors. Animals 2025, 15, 2833. https://doi.org/10.3390/ani15192833