FSCA-YOLO: An Enhanced YOLO-Based Model for Multi-Target Dairy Cow Behavior Recognition

Simple Summary

Abstract

1. Introduction

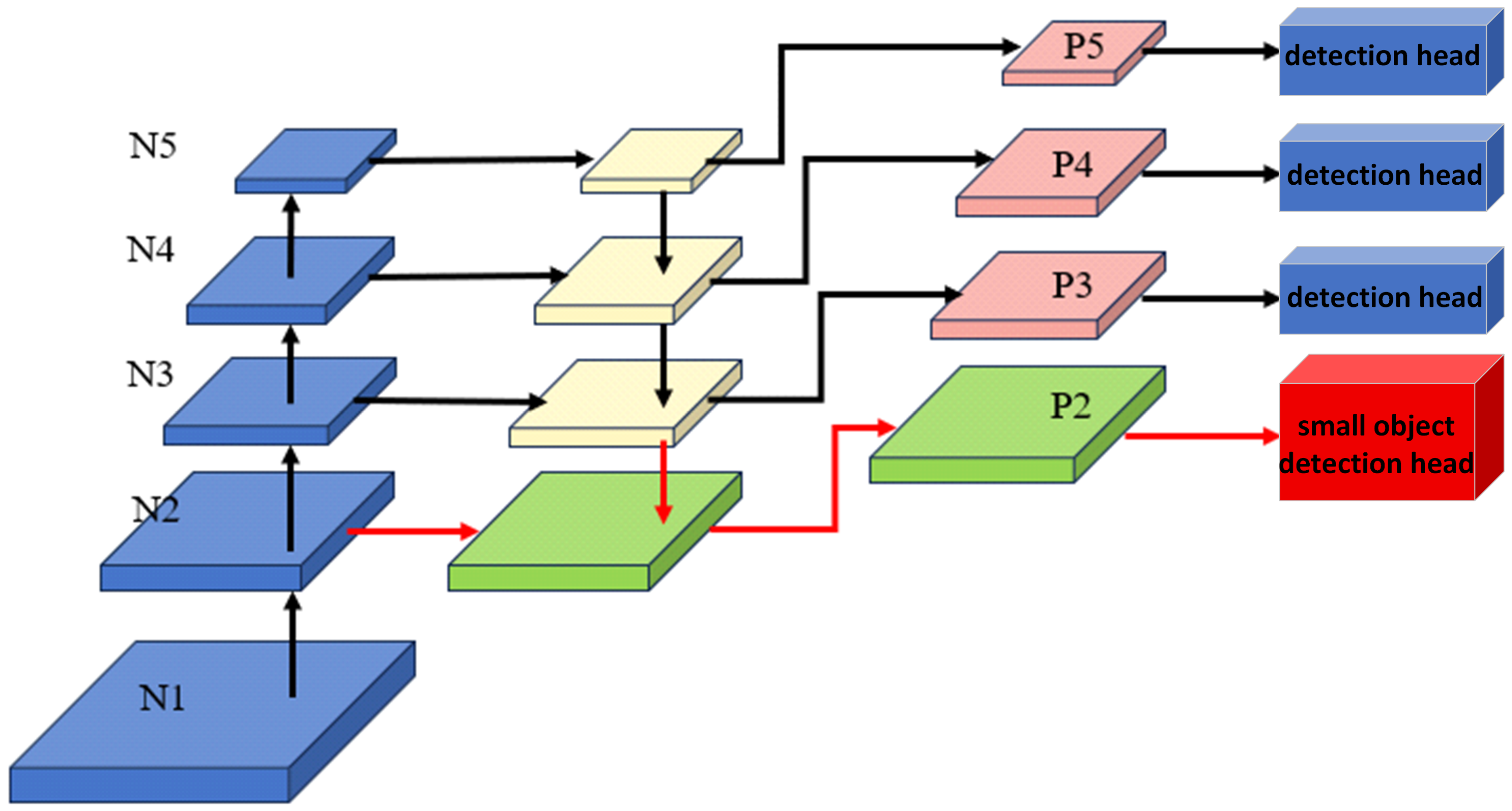

- Adding a small-object detection head to enhance the recognition of tiny dairy cow targets [39].

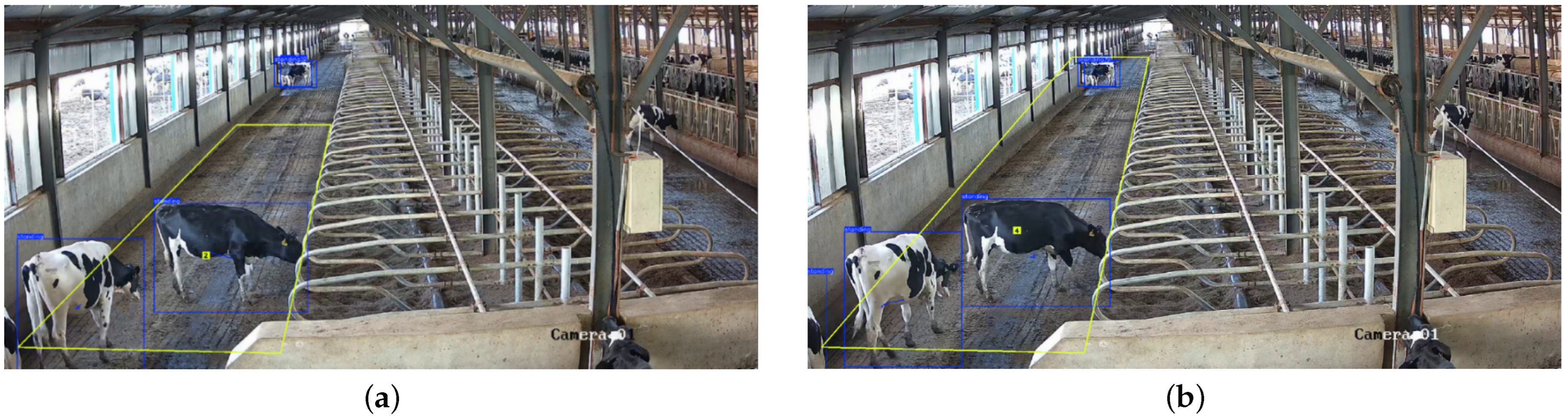

- To support downstream applications such as behavior-specific cow counting and tracking within defined zones, the model was integrated with Open Source Computer Vision Library (OpenCV)-based tools [17,41,42]. This integration broadens the applicability of the system and supports the emerging “digital dairy farm” paradigm.
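Zone-based, behavior-specific counting can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. It assumes that detections arrive as hypothetical `(behavior_label, x1, y1, x2, y2)` tuples and that a cow counts as "in" a zone when its bounding-box center lies inside a user-defined polygon. The point-in-polygon test is written out with the standard ray-casting rule so the example runs without OpenCV. In an OpenCV pipeline, `cv2.pointPolygonTest` serves the same purpose.

```python
from collections import Counter
from typing import Iterable, List, Tuple

# Hypothetical detection format: (behavior_label, x1, y1, x2, y2) in pixels.
Detection = Tuple[str, float, float, float, float]
Point = Tuple[float, float]


def point_in_polygon(pt: Point, polygon: List[Point]) -> bool:
    """Ray casting: toggle 'inside' each time a horizontal ray from pt crosses an edge."""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's y-coordinate
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_behaviors_in_zone(detections: Iterable[Detection], zone: List[Point]) -> Counter:
    """Count detections per behavior whose box center falls inside the zone polygon."""
    counts: Counter = Counter()
    for label, x1, y1, x2, y2 in detections:
        center = ((x1 + x2) / 2, (y1 + y2) / 2)
        if point_in_polygon(center, zone):
            counts[label] += 1
    return counts


# Hypothetical example: a rectangular zone along the top of a 640-pixel-wide frame.
feeding_zone = [(0, 0), (640, 0), (640, 200), (0, 200)]
dets = [
    ("feeding", 100, 50, 200, 150),
    ("feeding", 300, 20, 420, 180),
    ("standing", 100, 300, 200, 450),  # center (150, 375) lies outside the zone
    ("drinking", 500, 100, 600, 190),
]
counts = count_behaviors_in_zone(dets, feeding_zone)
print(dict(counts))  # {'feeding': 2, 'drinking': 1}
```

Using the box center is a simple design choice. A stricter rule could instead require a minimum overlap between the box and the zone.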

2. Materials and Methods

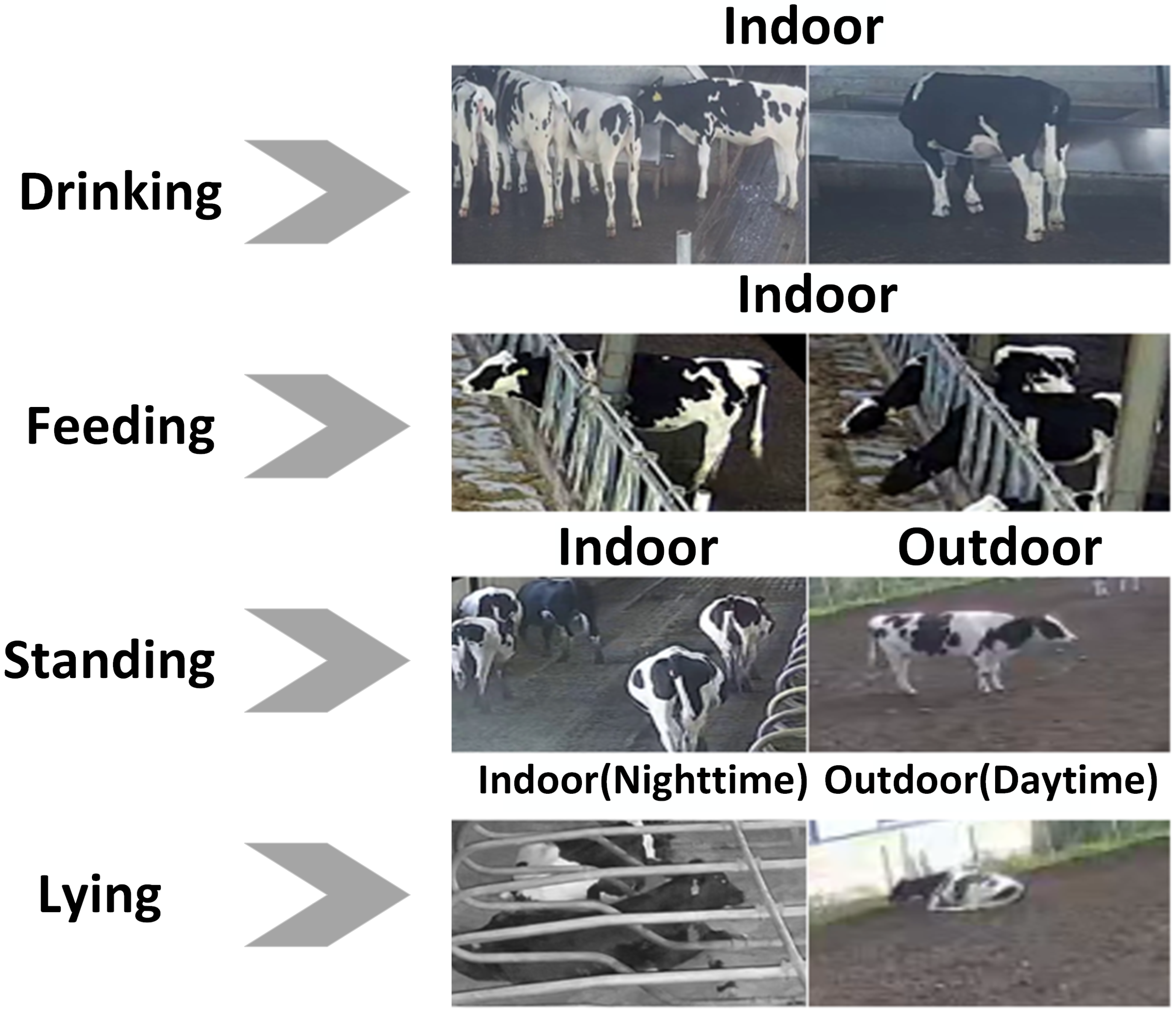

2.1. Dataset Preparation

2.1.1. Data Acquisition

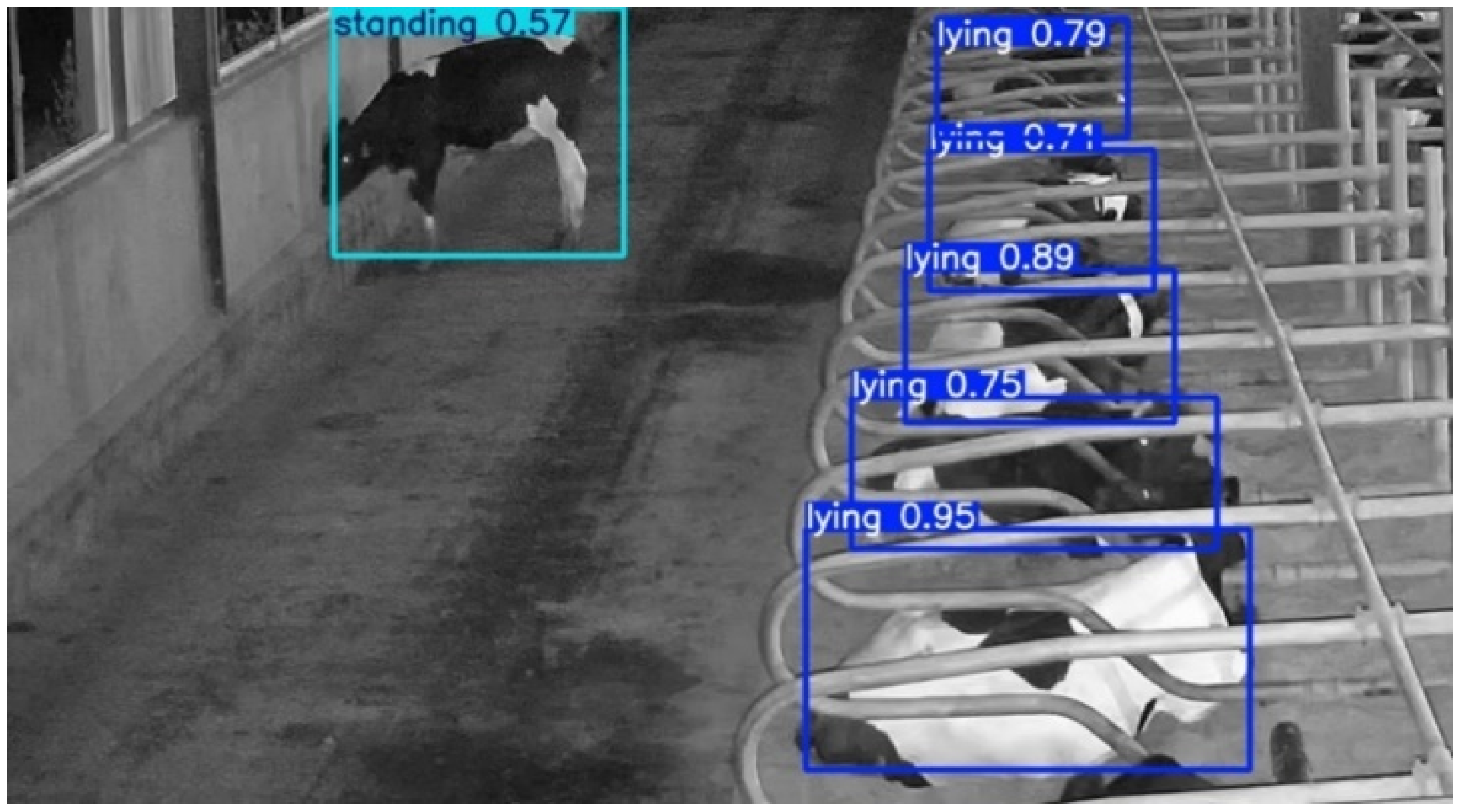

- Camera 1, shown in blue, monitored the walking lane and part of the bedding area. This made it suitable for recording both standing behavior during the day and, through infrared imaging, lying behavior at night.

- Camera 2, shown in red, covered the feeding area and was used primarily to capture feeding behavior.

- Camera 3, shown in green, was centered on the water trough and was used to collect data on drinking behavior.

- Camera 4, shown in orange, monitored the outdoor area and was used to capture both daytime lying behavior and standing behavior outside the barn.

2.1.2. Data Preprocessing

- For feeding behavior, cows often feed side by side and occlude one another. Targets that were more than 50% occluded, or that were truncated at the image edge with less than 15% of the target visible, were not labeled.

- For lying behavior, the variable coat patterns of dairy cows meant that some lying cows with entirely black backs closely resembled the background. These cows were excluded from annotation. They account for 3.2% (12/372) of lying candidates: 12 cows with completely black backs were visually indistinguishable from the background, out of 372 lying candidates in total (the 12 excluded cows plus 360 labeled instances).

- For standing behavior, only cows with all four legs on the ground, or with legs naturally bent during walking, were labeled.

- For drinking behavior, which occurs only at the water trough, a target was annotated only when the cow’s head had entered the trough area.
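The inclusion rules above are concrete enough to be checked mechanically, for example when auditing an annotation set. The sketch below encodes them as a single predicate under stated assumptions. The `Candidate` record and its field names are hypothetical. Judgments such as "legs naturally bent" or "head entered the trough" are assumed to have been made by the annotator and stored as booleans. As the rules are written, the 50% and 15% thresholds are applied only to feeding targets.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical per-target record filled in by an annotator or a QA script."""
    behavior: str                        # "feeding", "lying", "standing" or "drinking"
    occluded_fraction: float = 0.0       # share of the cow hidden by other cows (0-1)
    visible_fraction: float = 1.0        # share of the cow inside the frame (0-1)
    at_image_edge: bool = False
    black_back_indistinct: bool = False  # lying cow indistinguishable from the background
    legs_valid: bool = True              # four legs grounded, or naturally bent while walking
    head_in_trough: bool = False         # head has entered the trough area


def should_label(c: Candidate) -> bool:
    """Apply the per-behavior inclusion rules described in the text."""
    if c.behavior == "feeding":
        too_occluded = c.occluded_fraction > 0.50
        truncated = c.at_image_edge and c.visible_fraction < 0.15
        return not (too_occluded or truncated)
    if c.behavior == "lying":
        return not c.black_back_indistinct
    if c.behavior == "standing":
        return c.legs_valid
    if c.behavior == "drinking":
        return c.head_in_trough
    raise ValueError(f"unknown behavior: {c.behavior!r}")


def exclusion_rate(excluded: int, labeled: int) -> float:
    """Percentage of candidates excluded; the denominator is excluded + labeled."""
    return 100.0 * excluded / (excluded + labeled)


print(should_label(Candidate("feeding", occluded_fraction=0.6)))                      # False
print(should_label(Candidate("feeding", at_image_edge=True, visible_fraction=0.10)))  # False
print(should_label(Candidate("drinking", head_in_trough=True)))                       # True
print(round(exclusion_rate(12, 360), 1))                                              # 3.2
```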

2.2. Network Structure of FSCA-YOLO

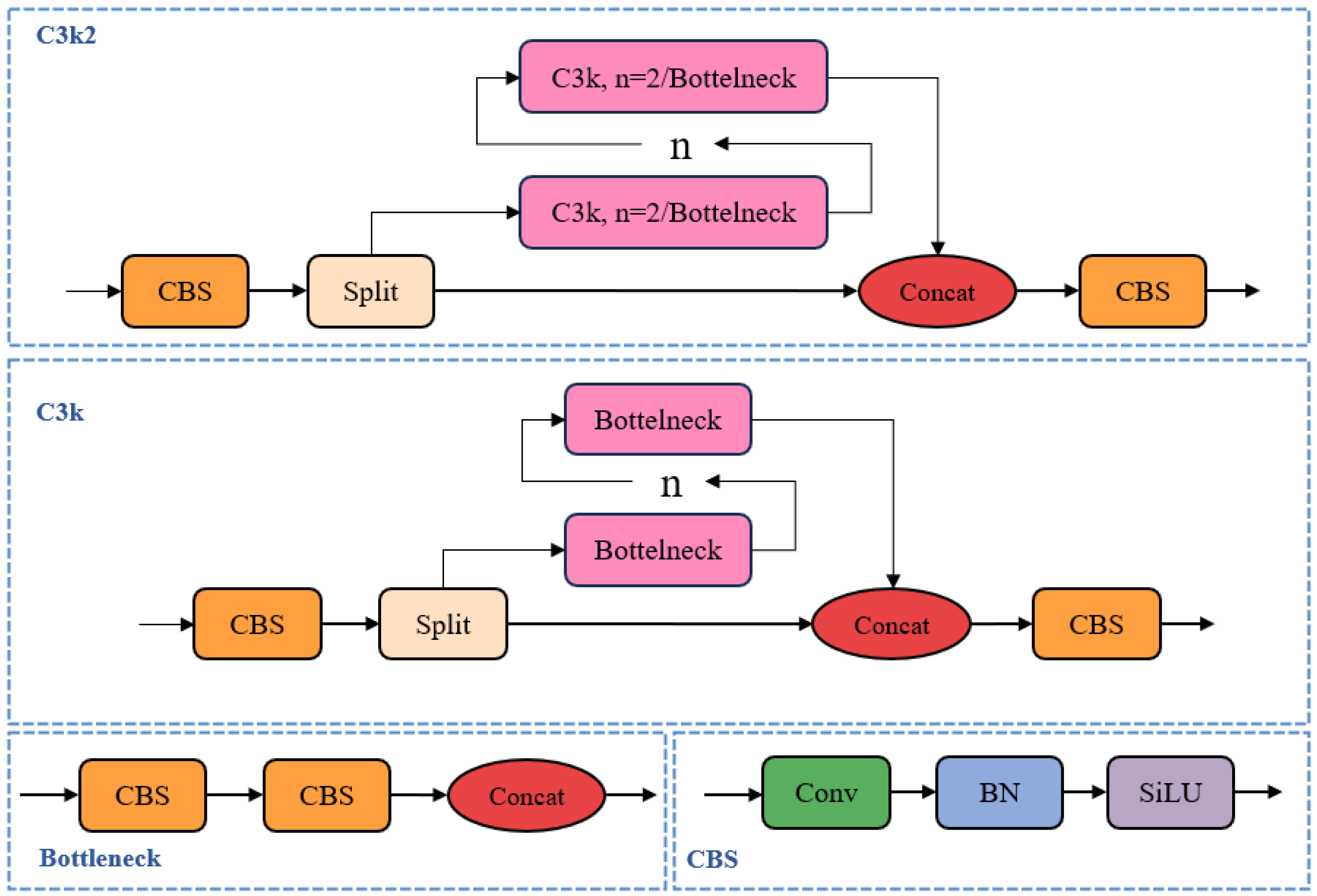

2.2.1. YOLOv11 Backbone Network

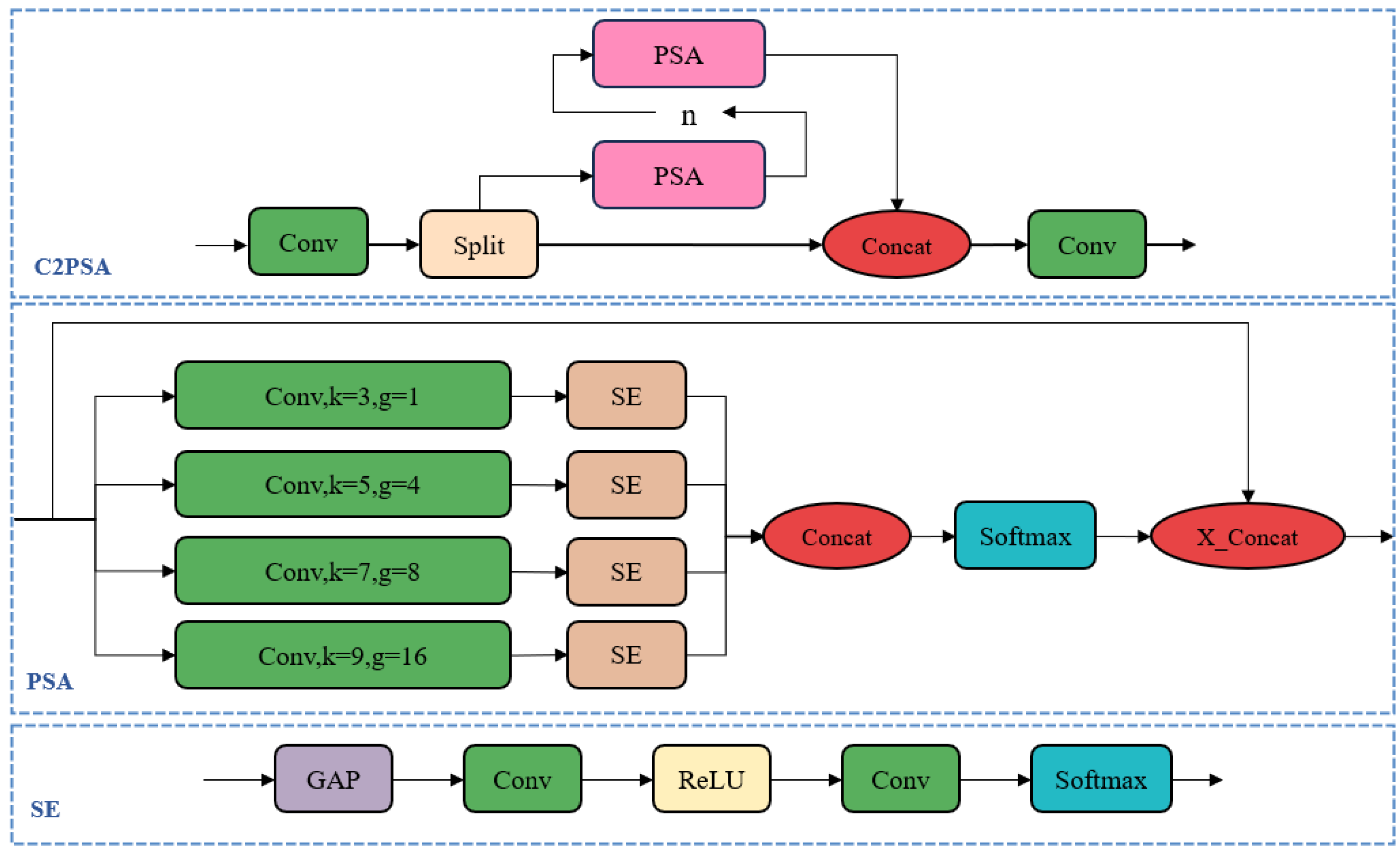

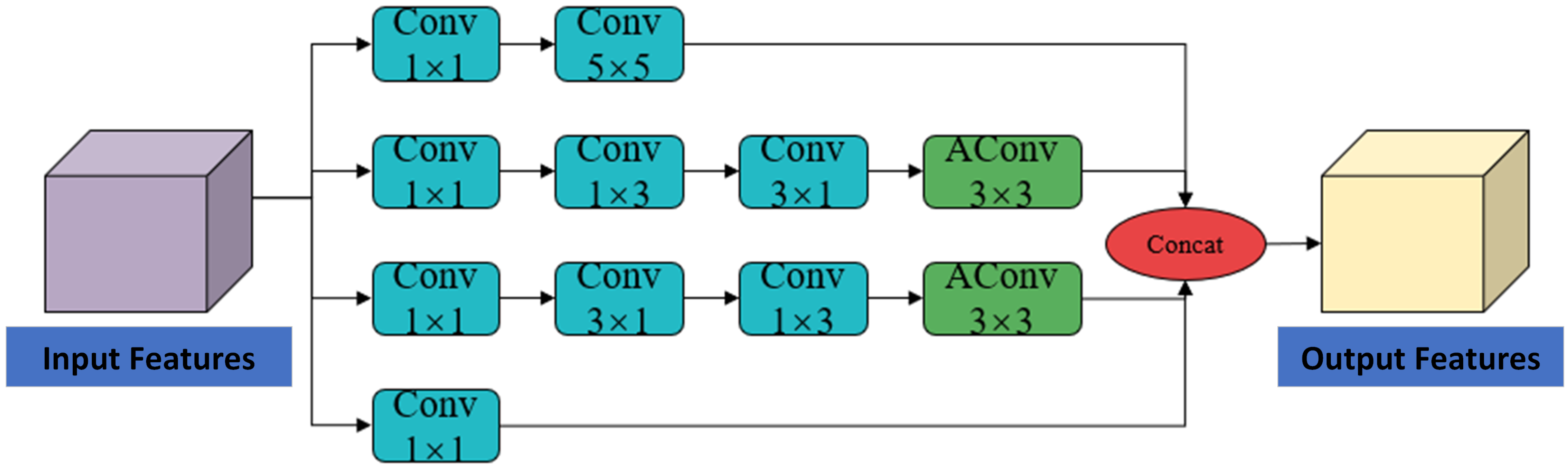

2.2.2. FEM-SCAM Integration

2.2.3. P2 Head Addition

2.2.4. CoordAtt Integration

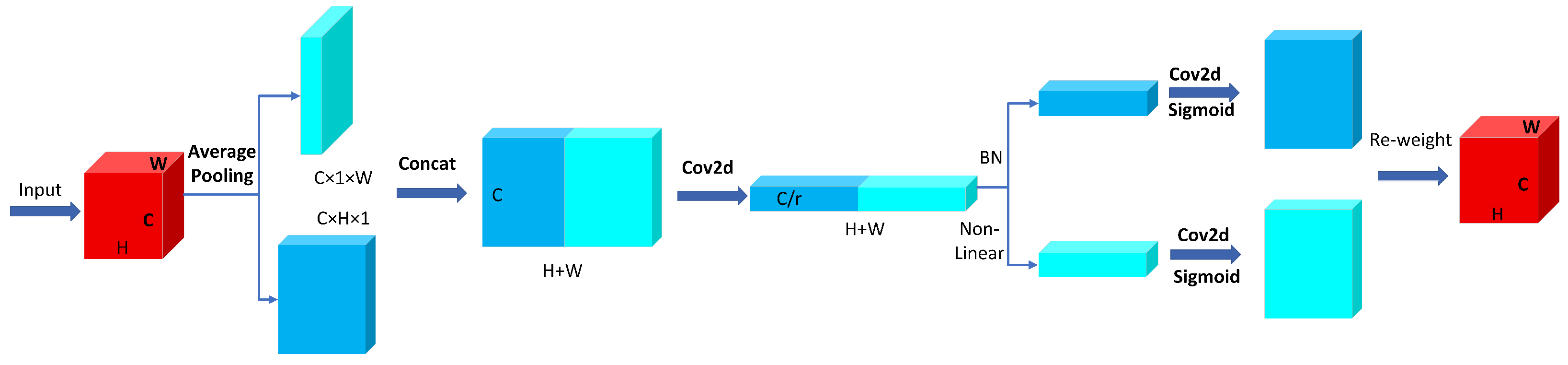

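- Information Decomposition. For an input feature map of size C × H × W, average pooling is applied separately along the horizontal and vertical directions. This factorizes global average pooling into two 1D encoding operations. Pooling along the horizontal direction (over the width) yields a feature map of size C × H × 1, and pooling along the vertical direction (over the height) yields a feature map of size C × 1 × W. Global pooling collapses each channel to a single value. By contrast, this decomposition retains positional information along one spatial axis in each branch, and the subsequent steps encode that information into direction-aware attention weights.

- Feature Transformation. The two directional features are concatenated along the spatial dimension to form a feature of size C × (H + W) × 1, after the C × 1 × W map is transposed to C × W × 1. This feature is processed by a shared 1 × 1 convolution (Conv2d) followed by an activation function. In the original CoordAtt design [37], this convolution also reduces the channel dimension to C/r with a reduction ratio r, and it is followed by batch normalization. The resulting feature is split along the spatial dimension into two tensors, one per direction. Each tensor then passes through its own 1 × 1 convolution, which restores C channels, followed by a Sigmoid activation. This produces the attention weights for the horizontal and vertical directions, respectively.

- Reweighting. The two attention maps obtained in the second step, of sizes C × H × 1 and C × 1 × W, are broadcast to the original feature map size C × H × W. They are then multiplied element-wise with the input feature map to produce the attention-enhanced features. For channel c at position (i, j), the output is y_c(i, j) = x_c(i, j) × g^h_c(i) × g^w_c(j), where g^h and g^w denote the horizontal- and vertical-direction attention weights.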
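The three CoordAtt steps can be sketched as a single forward pass in NumPy. This is an illustrative sketch, not the FSCA-YOLO code. It processes one feature map of shape (C, H, W) without a batch dimension and uses random weights in place of trained ones. It also assumes a reduction ratio r = 4 and the hard-swish activation, which is used in the original CoordAtt work [37]. Batch normalization is omitted because, at inference time, a BatchNorm layer is an affine transform that can be folded into the preceding 1 × 1 convolution. A 1 × 1 convolution on a (C, L) tensor is written as a matrix product.

```python
import numpy as np


def h_swish(x: np.ndarray) -> np.ndarray:
    # Hard-swish: x * ReLU6(x + 3) / 6
    return x * np.clip(x + 3.0, 0.0, 6.0) / 6.0


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def conv1x1(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    # A 1x1 convolution over a (C_in, L) tensor is (C_out, C_in) @ (C_in, L) plus a bias.
    return w @ x + b[:, None]


def coord_att(x: np.ndarray, p: dict) -> np.ndarray:
    """Coordinate attention applied to a single (C, H, W) feature map."""
    C, H, W = x.shape
    # Step 1: information decomposition by directional average pooling.
    z_h = x.mean(axis=2)  # pool over width  -> (C, H), the C x H x 1 map
    z_w = x.mean(axis=1)  # pool over height -> (C, W), the C x 1 x W map
    # Step 2: concatenate along the spatial axis, shared 1x1 conv (C -> C/r), activation.
    f = h_swish(conv1x1(np.concatenate([z_h, z_w], axis=1), p["w1"], p["b1"]))  # (C/r, H+W)
    f_h, f_w = f[:, :H], f[:, H:]                         # split back per direction
    g_h = sigmoid(conv1x1(f_h, p["w_h"], p["b_h"]))       # (C, H), weights in (0, 1)
    g_w = sigmoid(conv1x1(f_w, p["w_w"], p["b_w"]))       # (C, W), weights in (0, 1)
    # Step 3: reweighting; broadcasting expands both maps to (C, H, W).
    return x * g_h[:, :, None] * g_w[:, None, :]


rng = np.random.default_rng(0)
C, H, W, r = 16, 6, 5, 4
params = {
    "w1": rng.normal(size=(C // r, C)), "b1": np.zeros(C // r),
    "w_h": rng.normal(size=(C, C // r)), "b_h": np.zeros(C),
    "w_w": rng.normal(size=(C, C // r)), "b_w": np.zeros(C),
}
x = rng.normal(size=(C, H, W))
y = coord_att(x, params)
print(y.shape)  # (16, 6, 5)
```

Because both gates lie in (0, 1), the module can only attenuate each activation. Position-dependent emphasis comes from how strongly each row and column is attenuated relative to the others.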

2.2.5. Improved SIoU Loss Function
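The improved loss in this section builds on SIoU, so it can help to see the original SIoU loss of Gevorgyan [40] written out. The sketch below implements that baseline formulation, not the improvement proposed in this work. Boxes are given in (cx, cy, w, h) form, and the loss combines four parts:

- the IoU term;
- an angle cost Λ = 1 − 2 sin²(arcsin(sin α) − π/4);
- a distance cost Δ that uses γ = 2 − Λ and center offsets normalized by the smallest enclosing box;
- a shape cost Ω with exponent θ, where θ = 4 is an assumed default.

Together these give L = 1 − IoU + (Δ + Ω)/2.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (cx, cy, w, h)


def siou_loss(pred: Box, gt: Box, theta: float = 4.0, eps: float = 1e-9) -> float:
    px, py, pw, ph = pred
    gx, gy, gw, gh = gt

    # IoU term computed from corner coordinates.
    inter_w = max(0.0, min(px + pw / 2, gx + gw / 2) - max(px - pw / 2, gx - gw / 2))
    inter_h = max(0.0, min(py + ph / 2, gy + gh / 2) - max(py - ph / 2, gy - gh / 2))
    inter = inter_w * inter_h
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # Width and height of the smallest box enclosing both boxes.
    cw = max(px + pw / 2, gx + gw / 2) - min(px - pw / 2, gx - gw / 2)
    ch = max(py + ph / 2, gy + gh / 2) - min(py - ph / 2, gy - gh / 2)

    # Angle cost: 0 when the centers are aligned on an axis, 1 at 45 degrees.
    dx, dy = gx - px, gy - py
    sigma = math.hypot(dx, dy)
    sin_alpha = min(1.0, abs(dy) / (sigma + eps))
    angle_cost = 1.0 - 2.0 * math.sin(math.asin(sin_alpha) - math.pi / 4) ** 2

    # Distance cost, modulated by the angle cost through gamma.
    gamma = 2.0 - angle_cost
    rho_x = (dx / (cw + eps)) ** 2
    rho_y = (dy / (ch + eps)) ** 2
    distance_cost = (1 - math.exp(-gamma * rho_x)) + (1 - math.exp(-gamma * rho_y))

    # Shape cost: relative width and height mismatch.
    omega_w = abs(pw - gw) / (max(pw, gw) + eps)
    omega_h = abs(ph - gh) / (max(ph, gh) + eps)
    shape_cost = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1.0 - iou + (distance_cost + shape_cost) / 2.0


gt = (50.0, 50.0, 20.0, 40.0)
print(round(siou_loss(gt, gt), 6))  # 0.0
near = siou_loss((53.0, 50.0, 20.0, 40.0), gt)
far = siou_loss((58.0, 56.0, 20.0, 40.0), gt)
print(near < far)  # True
```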

2.2.6. FSCA-YOLO Model

2.3. Experimental Environment and Parameter Settings

2.4. Evaluation Metrics
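The result tables report precision, recall and mAP. Under the standard definitions:

- precision is TP/(TP + FP);
- recall is TP/(TP + FN);
- AP is the area under the precision-recall curve of one class;
- mAP is the mean AP over the behavior classes.

The sketch below computes these quantities using all-point interpolation of the precision-recall curve (the PASCAL VOC convention). It assumes that detections have already been matched to ground truth at a fixed IoU threshold. A threshold of 0.5 is common, but the threshold used in this study is an assumption here, and the function names are illustrative.

```python
from typing import Dict, List, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores: List[float], is_tp: List[bool], num_gt: int) -> float:
    """AP for one class from scored detections already matched to ground truth."""
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Pad the curve, then replace each precision by its running maximum from the right.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Sum the rectangles under the interpolated, monotonically decreasing curve.
    return sum((mrec[k] - mrec[k - 1]) * mpre[k] for k in range(1, len(mrec)))


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    return sum(ap_per_class.values()) / len(ap_per_class)


p, r = precision_recall(tp=90, fp=10, fn=5)
ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
print(round(ap, 4))  # 0.8333
```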

3. Results

3.1. Loss Function Comparison

3.2. Attention Mechanisms Comparison

3.3. Ablation Study

3.4. Comparative Experiment

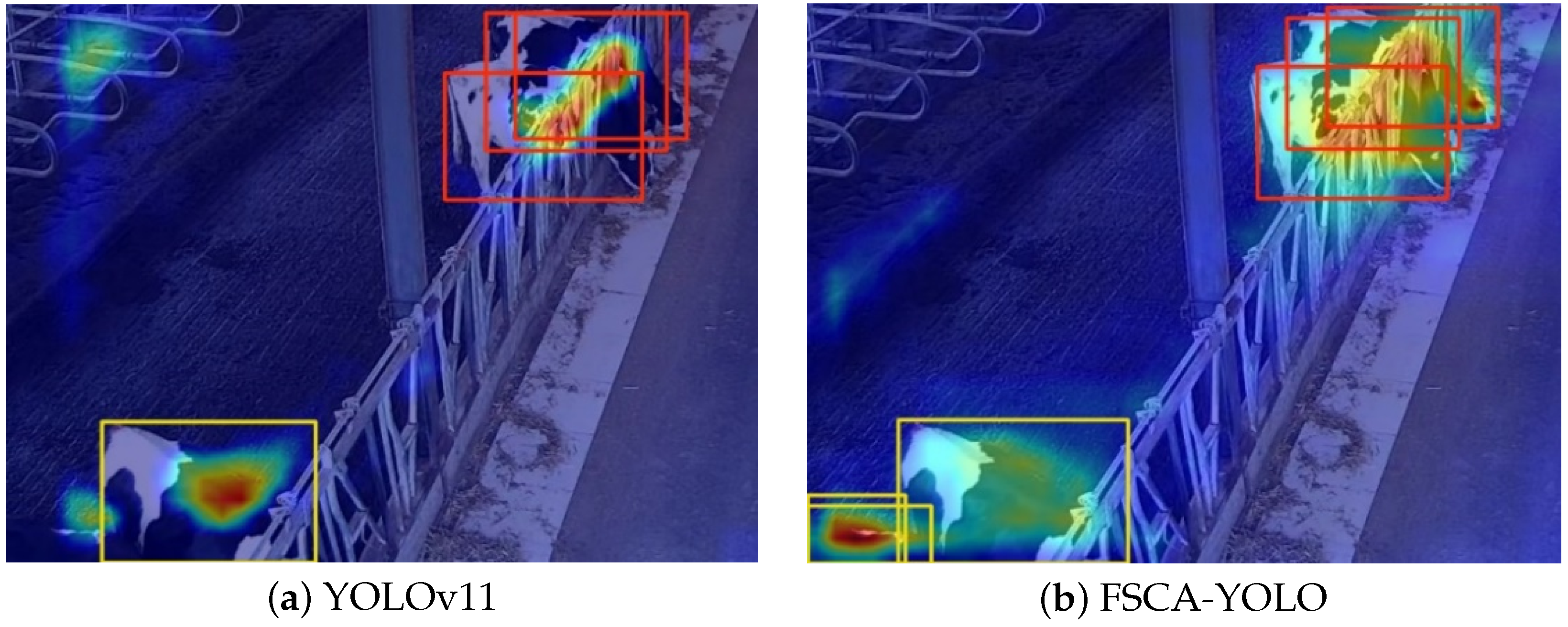

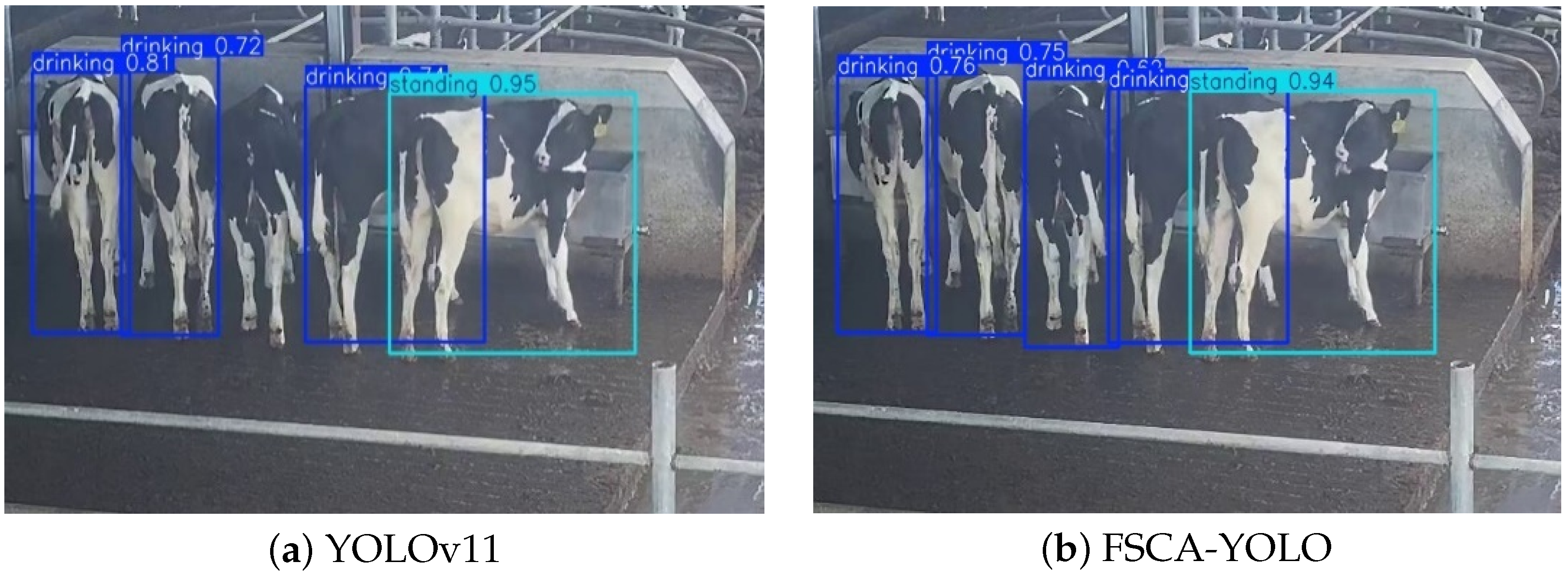

3.5. Visualization Results and Analysis

3.6. Cow Counting via FSCA-YOLO

4. Discussion

4.1. Monocular Camera Depth Limitations

4.2. Behavior Tracking and Annotation Challenges

4.3. Potential of Multimodal Sensing Integration

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Stygar, A.H.; Gómez, Y.; Berteselli, G.V.; Dalla Costa, E.; Canali, E.; Niemi, J.K.; Llonch, P.; Pastell, M. A systematic review on commercially available and validated sensor technologies for welfare assessment of dairy cattle. Front. Vet. Sci. 2021, 8, 634338.
2. Chelotti, J.; Martinez-Rau, L.; Ferrero, M.; Vignolo, L.; Galli, J.; Planisich, A.; Rufiner, H.L.; Giovanini, L. Livestock feeding behavior: A tutorial review on automated techniques for ruminant monitoring. arXiv 2023, arXiv:2312.09259.
3. Cavallini, D.; Giammarco, M.; Buonaiuto, G.; Vignola, G.; De Matos Vettori, J.; Lamanna, M.; Prasinou, P.; Colleluori, R.; Formigoni, A.; Fusaro, I. Two years of precision livestock management: Harnessing ear tag device behavioral data for pregnancy detection in free-range dairy cattle on silage/hay-mix ration. Front. Anim. Sci. 2025, 6, 1547395.
4. Markov, N.; Stoycheva, S.; Hristov, M.; Mondeshka, L. Digital management of technological processes in cattle farms: A review. J. Cent. Eur. Agric. 2022, 23, 486–495.
5. Curti, P.d.F.; Selli, A.; Pinto, D.L.; Merlos-Ruiz, A.; Balieiro, J.C.d.C.; Ventura, R.V. Applications of livestock monitoring devices and machine learning algorithms in animal production and reproduction: An overview. Anim. Reprod. 2023, 20, e20230077.
6. Himu, H.A.; Raihan, A. Digital transformation of livestock farming for sustainable development. J. Vet. Med. Sci. 2024, 1, 1–8.
7. Tantalaki, N.; Souravlas, S.; Roumeliotis, M. Data-driven decision making in precision agriculture: The rise of big data in agricultural systems. J. Agric. Food Inf. 2019, 20, 344–380.
8. Fuentes, S.; Viejo, C.G.; Tongson, E.; Dunshea, F.R. The livestock farming digital transformation: Implementation of new and emerging technologies using artificial intelligence. Anim. Health Res. Rev. 2022, 23, 59–71.
9. Lara, O.D.; Labrador, M.A. A survey on human activity recognition using wearable sensors. IEEE Commun. Surv. Tutor. 2012, 15, 1192–1209.
10. Zhao, T.; Li, D.; Cui, P.; Zhang, Z.; Sun, Y.; Meng, X.; Hou, Z.; Zheng, Z.; Huang, Y.; Liu, H. A self-powered flexible displacement sensor based on triboelectric effect for linear feed system. Nanomaterials 2023, 13, 3100.
11. Rui, C.; Hongwei, L.; Jing, T. Structure design and simulation analysis of inductive displacement sensor. In Proceedings of the 2018 13th IEEE Conference on Industrial Electronics and Applications (ICIEA), Wuhan, China, 31 May–2 June 2018; IEEE: New York, NY, USA, 2018; pp. 1620–1626.
12. Iqbal, M.W.; Draganova, I.; Morel, P.C.; Morris, S.T. Validation of an accelerometer sensor-based collar for monitoring grazing and rumination behaviours in grazing dairy cows. Animals 2021, 11, 2724.
13. Lamanna, M.; Bovo, M.; Cavallini, D. Wearable collar technologies for dairy cows: A systematized review of the current applications and future innovations in precision livestock farming. Animals 2025, 15, 458.
14. Pichlbauer, B.; Chapa Gonzalez, J.M.; Bobal, M.; Guse, C.; Iwersen, M.; Drillich, M. Evaluation of different sensor systems for classifying the behavior of dairy cows on pasture. Sensors 2024, 24, 7739.
15. Shen, W.; Cheng, F.; Zhang, Y.; Wei, X.; Fu, Q.; Zhang, Y. Automatic recognition of ingestive-related behaviors of dairy cows based on triaxial acceleration. Inf. Process. Agric. 2020, 7, 427–443.
16. Yuan, Z.; Wang, S.; Wang, C.; Zong, Z.; Zhang, C.; Su, L.; Ban, Z. Research on Calf Behavior Recognition Based on Improved Lightweight YOLOv8 in Farming Scenarios. Animals 2025, 15, 898.
17. Bumbálek, R.; Zoubek, T.; Ufitikirezi, J.d.D.M.; Umurungi, S.N.; Stehlík, R.; Havelka, Z.; Kuneš, R.; Bartoš, P. Implementation of Machine Vision Methods for Cattle Detection and Activity Monitoring. Technologies 2025, 13, 116.
18. Cao, Z.; Li, C.; Yang, X.; Zhang, S.; Luo, L.; Wang, H.; Zhao, H. Semi-automated annotation for video-based beef cattle behavior recognition. Sci. Rep. 2025, 15, 17131.
19. Ayadi, S.; Ben Said, A.; Jabbar, R.; Aloulou, C.; Chabbouh, A.; Achballah, A.B. Dairy cow rumination detection: A deep learning approach. In Distributed Computing for Emerging Smart Networks, Proceedings of the Second International Workshop, DiCES-N 2020, Bizerte, Tunisia, 18 December 2020, Proceedings; Springer: Cham, Switzerland, 2020; pp. 123–139.
20. Wu, D.; Wang, Y.; Han, M.; Song, L.; Shang, Y.; Zhang, X.; Song, H. Using a CNN-LSTM for basic behaviors detection of a single dairy cow in a complex environment. Comput. Electron. Agric. 2021, 182, 106016.
21. Zhang, Y.; Zhang, Q.; Zhang, L.; Li, J.; Li, M.; Liu, Y.; Shi, Y. Progress of machine vision technologies in intelligent dairy farming. Appl. Sci. 2023, 13, 7052.
22. Lodkaew, T.; Pasupa, K.; Loo, C.K. CowXNet: An automated cow estrus detection system. Expert Syst. Appl. 2023, 211, 118550.
23. Guo, Y.; He, D.; Chai, L. A machine vision-based method for monitoring scene-interactive behaviors of dairy calf. Animals 2020, 10, 190.
24. Wang, Z.; Hua, Z.; Wen, Y.; Zhang, S.; Xu, X.; Song, H. E-YOLO: Recognition of estrus cow based on improved YOLOv8n model. Expert Syst. Appl. 2024, 238, 122212.
25. Giannone, C.; Sahraeibelverdy, M.; Lamanna, M.; Cavallini, D.; Formigoni, A.; Tassinari, P.; Torreggiani, D.; Bovo, M. Automated dairy cow identification and feeding behaviour analysis using a computer vision model based on YOLOv8. Smart Agric. Technol. 2025, 12, 101304.
26. Lamanna, M.; Muca, E.; Giannone, C.; Bovo, M.; Boffo, F.; Romanzin, A.; Cavallini, D. Artificial intelligence meets dairy cow research: Large language model’s application in extracting daily time-activity budget data for a meta-analytical study. J. Dairy Sci. 2025, 108, 10203–10219.
27. Li, G.; Shi, G.; Zhu, C. Dynamic serpentine convolution with attention mechanism enhancement for beef cattle behavior recognition. Animals 2024, 14, 466.
28. Li, G.; Sun, J.; Guan, M.; Sun, S.; Shi, G.; Zhu, C. A New Method for non-destructive identification and Tracking of multi-object behaviors in beef cattle based on deep learning. Animals 2024, 14, 2464.
29. Rohan, A.; Rafaq, M.S.; Hasan, M.J.; Asghar, F.; Bashir, A.K.; Dottorini, T. Application of deep learning for livestock behaviour recognition: A systematic literature review. Comput. Electron. Agric. 2024, 224, 109115.
30. Yu, R.; Wei, X.; Liu, Y.; Yang, F.; Shen, W.; Gu, Z. Research on automatic recognition of dairy cow daily behaviors based on deep learning. Animals 2024, 14, 458.
31. Wu, C.; Fang, J.; Wang, X.; Zhao, Y. DMSF-YOLO: Cow Behavior Recognition Algorithm Based on Dynamic Mechanism and Multi-Scale Feature Fusion. Sensors 2025, 25, 3479.
32. Jia, Q.; Yang, J.; Han, S.; Du, Z.; Liu, J. CAMLLA-YOLOv8n: Cow Behavior Recognition Based on Improved YOLOv8n. Animals 2024, 14, 3033.
33. Zheng, Z.; Li, J.; Qin, L. YOLO-BYTE: An efficient multi-object tracking algorithm for automatic monitoring of dairy cows. Comput. Electron. Agric. 2023, 209, 107857.
34. Russello, H.; van der Tol, R.; Kootstra, G. T-LEAP: Occlusion-robust pose estimation of walking cows using temporal information. Comput. Electron. Agric. 2022, 192, 106559.
35. Fuentes, A.; Han, S.; Nasir, M.F.; Park, J.; Yoon, S.; Park, D.S. Multiview monitoring of individual cattle behavior based on action recognition in closed barns using deep learning. Animals 2023, 13, 2020.
36. Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.
37. Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 13713–13722.
38. Zheng, J.; Wu, H.; Zhang, H.; Wang, Z.; Xu, W. Insulator-defect detection algorithm based on improved YOLOv7. Sensors 2022, 22, 8801.
39. Jeune, P.L.; Mokraoui, A. Rethinking intersection over union for small object detection in few-shot regime. arXiv 2023, arXiv:2307.09562.
40. Gevorgyan, Z. SIoU loss: More powerful learning for bounding box regression. arXiv 2022, arXiv:2205.12740.
41. Jayasingh, S.K.; Naik, P.; Swain, S.; Patra, K.J.; Kabat, M.R. Integrated crowd counting system utilizing IoT sensors, OpenCV and YOLO models for accurate people density estimation in real-time environments. In Proceedings of the 2024 1st International Conference on Cognitive, Green and Ubiquitous Computing (IC-CGU), Bhubaneswar, India, 1–2 March 2024; IEEE: New York, NY, USA, 2024; pp. 1–6.
42. Nguyen, C.; Wang, D.; Von Richter, K.; Valencia, P.; Alvarenga, F.A.; Bishop-Hurley, G. Video-based cattle identification and action recognition. In Proceedings of the 2021 Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, Australia, 29 November–1 December 2021; IEEE: New York, NY, USA, 2021; pp. 1–5.
43. Rao, S.N. YOLOv11 Architecture Explained: Next-Level Object Detection with Enhanced Speed and Accuracy. Medium, 22 October 2024. Available online: https://medium.com/@nikhil-rao-20/yolov11-explained-next-level-object-detection-with-enhanced-speedand-accuracy-2dbe2d376f71 (accessed on 25 February 2025).
44. He, L.h.; Zhou, Y.z.; Liu, L.; Cao, W.; Ma, J.H. Research on object detection and recognition in remote sensing images based on YOLOv11. Sci. Rep. 2025, 15, 14032.
45. Zhang, H.; Zu, K.; Lu, J.; Zou, Y.; Meng, D. EPSANet: An efficient pyramid squeeze attention block on convolutional neural network. In Proceedings of the Asian Conference on Computer Vision, Macao, China, 4–8 December 2022; pp. 1161–1177.
46. Cao, Y.; Xu, J.; Lin, S.; Wei, F.; Hu, H. GCNet: Non-local networks meet squeeze-excitation networks and beyond. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019.
47. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.
48. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.
49. Yu, J.; Jiang, Y.; Wang, Z.; Cao, Z.; Huang, T. UnitBox: An advanced object detection network. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 516–520.
50. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.
51. Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12993–13000.
52. Qiu, Z.; Zhao, Z.; Chen, S.; Zeng, J.; Huang, Y.; Xiang, B. Application of an improved YOLOv5 algorithm in real-time detection of foreign objects by ground penetrating radar. Remote Sens. 2022, 14, 1895.
53. Wang, R.; Gao, R.; Li, Q.; Zhao, C.; Ma, W.; Yu, L.; Ding, L. A lightweight cow mounting behavior recognition system based on improved YOLOv5s. Sci. Rep. 2023, 13, 17418.
54. Yu, Z.; Liu, Y.; Yu, S.; Wang, R.; Song, Z.; Yan, Y.; Li, F.; Wang, Z.; Tian, F. Automatic detection method of dairy cow feeding behaviour based on YOLO improved model and edge computing. Sensors 2022, 22, 3271.
55. Chopra, K.; Hodges, H.R.; Barker, Z.E.; Diosdado, J.A.V.; Amory, J.R.; Cameron, T.C.; Croft, D.P.; Bell, N.J.; Thurman, A.; Bartlett, D.; et al. Bunching behavior in housed dairy cows at higher ambient temperatures. J. Dairy Sci. 2024, 107, 2406–2425.
56. Zhou, M.; Tang, X.; Xiong, B.; Koerkamp, P.G.; Aarnink, A. Effectiveness of cooling interventions on heat-stressed dairy cows based on a mechanistic thermoregulatory model. Biosyst. Eng. 2024, 244, 114–121.
57. Talmón, D.; Jasinsky, A.; Menegazzi, G.; Chilibroste, P.; Carriquiry, M. O56 Feeding strategy and Holstein strain affect the energy efficiency of lactating dairy cows. Anim.-Sci. Proc. 2023, 14, 580.
58. Eigen, D.; Puhrsch, C.; Fergus, R. Depth map prediction from a single image using a multi-scale deep network. In Proceedings of the Advances in Neural Information Processing Systems 27 (NIPS 2014), Montreal, QC, Canada, 8–13 December 2014; Volume 27.
59. Cui, X.Z.; Feng, Q.; Wang, S.Z.; Zhang, J.H. Monocular depth estimation with self-supervised learning for vineyard unmanned agricultural vehicle. Sensors 2022, 22, 721.
60. Shu, F.; Lesur, P.; Xie, Y.; Pagani, A.; Stricker, D. SLAM in the field: An evaluation of monocular mapping and localization on challenging dynamic agricultural environment. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Tucson, AZ, USA, 28 February–4 March 2021; pp. 1761–1771.
61. Zhao, C.; Sun, Q.; Zhang, C.; Tang, Y.; Qian, F. Monocular depth estimation based on deep learning: An overview. Sci. China Technol. Sci. 2020, 63, 1612–1627.
62. Bhoi, A. Monocular depth estimation: A survey. arXiv 2019, arXiv:1901.09402.
63. Jahn, S.; Schmidt, G.; Bachmann, L.; Louton, H.; Homeier-Bachmann, T.; Schütz, A.K. Individual behavior tracking of heifers by using object detection algorithm YOLOv4. Front. Anim. Sci. 2025, 5, 1499253.
64. Meng, H.; Zhang, L.; Yang, F.; Hai, L.; Wei, Y.; Zhu, L.; Zhang, J. Livestock biometrics identification using computer vision approaches: A review. Agriculture 2025, 15, 102.
65. Andrew, W.; Gao, J.; Mullan, S.; Campbell, N.; Dowsey, A.W.; Burghardt, T. Visual identification of individual Holstein-Friesian cattle via deep metric learning. Comput. Electron. Agric. 2021, 185, 106133.
66. Islam, H.Z.; Khan, S.; Paul, S.K.; Rahi, S.I.; Sifat, F.H.; Sany, M.M.H.; Sarker, M.S.A.; Anam, T.; Polas, I.H. Muzzle-based cattle identification system using artificial intelligence (AI). arXiv 2024, arXiv:2407.06096.
67. Tjandrasuwita, M.; Sun, J.J.; Kennedy, A.; Chaudhuri, S.; Yue, Y. Interpreting expert annotation differences in animal behavior. arXiv 2021, arXiv:2106.06114.
68. Davani, A.M.; Díaz, M.; Prabhakaran, V. Dealing with disagreements: Looking beyond the majority vote in subjective annotations. Trans. Assoc. Comput. Linguist. 2022, 10, 92–110.
69. Klie, J.C.; Castilho, R.E.d.; Gurevych, I. Analyzing dataset annotation quality management in the wild. Comput. Linguist. 2024, 50, 817–866.
70. Porto, S.M.; Arcidiacono, C.; Anguzza, U.; Cascone, G. The automatic detection of dairy cow feeding and standing behaviours in free-stall barns by a computer vision-based system. Biosyst. Eng. 2015, 133, 46–55.
71. Arablouei, R.; Wang, Z.; Bishop-Hurley, G.J.; Liu, J. Multimodal sensor data fusion for in-situ classification of animal behavior using accelerometry and GNSS data. Smart Agric. Technol. 2023, 4, 100163.
72. Caja, G.; Castro-Costa, A.; Knight, C.H. Engineering to support wellbeing of dairy animals. J. Dairy Res. 2016, 83, 136–147.
73. Lamanna, M.; Muca, E.; Buonaiuto, G.; Formigoni, A.; Cavallini, D. From posts to practice: Instagram’s role in veterinary dairy cow nutrition education—How does the audience interact and apply knowledge? A survey study. J. Dairy Sci. 2025, 108, 1659–1671.

| Configuration Item | Parameter Value |
|---|---|
| CPU | Intel(R) Xeon(R) Gold 5218R |
| GPU | GeForce RTX 2080 Ti |
| Memory | 94 GB |
| Operating System | Ubuntu 16.04 |
| Development Environment | Python 3.9 |
| Accelerated Environment | CUDA 11.1 |

| Hyperparameter | Value |
|---|---|
| Optimizer | SGD |
| Initial Learning Rate | 0.01629 |
| Momentum | 0.98 |
| Weight Decay | |
| Batch Size | 8 |
| Epochs | 100 |

| Loss Function | Precision (%) | Recall (%) | mAP (%) |
|---|---|---|---|
| DIoU | 93.5 | 90.8 | 91.5 |
| GIoU | 93.9 | 91.2 | 92.1 |
| CIoU | 94.1 | 90.3 | 92.4 |
| SIoU | 94.3 | 91.8 | 93.1 |
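The losses in this comparison share the 1 − IoU term and differ in the penalty added to it. For reference, the sketch below computes the standard GIoU [50], DIoU and CIoU [51] losses for one pair of boxes in (x1, y1, x2, y2) corner format. It follows the published definitions and is not taken from the authors' training code.

```python
import math
from typing import Dict, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou_family_losses(a: Box, b: Box, eps: float = 1e-9) -> Dict[str, float]:
    """GIoU, DIoU and CIoU losses for predicted box a and ground-truth box b."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    wa, ha, wb, hb = ax2 - ax1, ay2 - ay1, bx2 - bx1, by2 - by1

    inter = max(0.0, min(ax2, bx2) - max(ax1, bx1)) * max(0.0, min(ay2, by2) - max(ay1, by1))
    union = wa * ha + wb * hb - inter
    iou = inter / (union + eps)

    # Smallest enclosing box.
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)

    # GIoU: penalize the part of the enclosing box not covered by the union.
    giou = iou - (cw * ch - union) / (cw * ch + eps)

    # DIoU: penalize the squared center distance relative to the squared enclosing diagonal.
    rho2 = ((ax1 + ax2 - bx1 - bx2) ** 2 + (ay1 + ay2 - by1 - by2) ** 2) / 4.0
    diou = iou - rho2 / (cw ** 2 + ch ** 2 + eps)

    # CIoU: additionally penalize aspect-ratio mismatch, weighted by alpha.
    v = (4.0 / math.pi ** 2) * (math.atan(wb / (hb + eps)) - math.atan(wa / (ha + eps))) ** 2
    alpha = v / ((1.0 - iou) + v + eps)
    ciou = diou - alpha * v

    return {"GIoU": 1.0 - giou, "DIoU": 1.0 - diou, "CIoU": 1.0 - ciou}


losses = iou_family_losses((0, 0, 4, 4), (2, 0, 6, 4))
print({k: round(v, 4) for k, v in losses.items()})
# {'GIoU': 0.6667, 'DIoU': 0.7436, 'CIoU': 0.7436}
```

In this example the boxes have the same aspect ratio, so the CIoU penalty vanishes and CIoU equals DIoU. The enclosing box equals the union, so GIoU reduces to plain 1 − IoU.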

| Attention Model | Precision (%) | Recall (%) | mAP (%) |
|---|---|---|---|
| SE | 93.8 | 90.6 | 92.4 |
| CBAM | 94.2 | 90.8 | 92.8 |
| CoordAtt | 94.6 | 91.1 | 93.1 |

| FEM-SCAM | CoordAtt | SIoU | 4Head | Precision (%) | Recall (%) | mAP (%) |
|---|---|---|---|---|---|---|
| | | | | 94.1 | 90.3 | 92.4 |
| ✓ | | | | 94.6 | 91.1 | 93.1 |
| ✓ | ✓ | | | 94.8 | 91.5 | 93.5 |
| ✓ | ✓ | ✓ | | 95.2 | 91.9 | 94.1 |
| ✓ | ✓ | ✓ | ✓ | 95.7 | 92.1 | 94.5 |

| Model | Precision (%) | Recall (%) | mAP (%) |
|---|---|---|---|
| Faster R-CNN | 90.2 | 87.0 | 87.1 |
| SSD | 90.3 | 87.8 | 90.3 |
| YOLOv5 | 92.2 | 86.2 | 91.9 |
| YOLOv8 | 92.8 | 88.1 | 90.2 |
| YOLOv11 | 94.1 | 90.3 | 92.4 |
| FSCA-YOLO | 95.7 | 92.1 | 94.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Long, T.; Yu, R.; You, X.; Shen, W.; Wei, X.; Gu, Z. FSCA-YOLO: An Enhanced YOLO-Based Model for Multi-Target Dairy Cow Behavior Recognition. Animals 2025, 15, 2631. https://doi.org/10.3390/ani15172631

Long T, Yu R, You X, Shen W, Wei X, Gu Z. FSCA-YOLO: An Enhanced YOLO-Based Model for Multi-Target Dairy Cow Behavior Recognition. Animals. 2025; 15(17):2631. https://doi.org/10.3390/ani15172631

Chicago/Turabian Style: Long, Ting, Rongchuan Yu, Xu You, Weizheng Shen, Xiaoli Wei, and Zhixin Gu. 2025. "FSCA-YOLO: An Enhanced YOLO-Based Model for Multi-Target Dairy Cow Behavior Recognition" Animals 15, no. 17: 2631. https://doi.org/10.3390/ani15172631

APA Style: Long, T., Yu, R., You, X., Shen, W., Wei, X., & Gu, Z. (2025). FSCA-YOLO: An Enhanced YOLO-Based Model for Multi-Target Dairy Cow Behavior Recognition. Animals, 15(17), 2631. https://doi.org/10.3390/ani15172631