Simple Summary

Accurately tracking the growth of fish is essential for profitable and sustainable fish farming. However, the traditional method of catching and measuring fish by hand is slow, costly, and highly stressful for the animals, which can harm their health and slow their growth. To solve this, we developed a new artificial intelligence (AI) system called ECR-MobileNet. It acts like a “smart camera,” quickly and accurately estimating the length and weight of a largemouth bass from just a picture. Our AI is designed to be both precise and extremely efficient. It focuses on the most important parts of the fish’s image and is specially trained to be fair and accurate for fish of all sizes, from the smallest to the largest. We then streamlined this AI to make it so lightweight that it can run on small, affordable computers. Our method proved to be more accurate than 14 other modern AI models, providing near-instant results. This technology offers a stress-free way to monitor fish, helping farmers use feed more efficiently, reduce waste, and improve their profits, and it makes modern, intelligent aquaculture a practical and accessible reality for farms of all sizes.

Abstract

The precise, non-destructive monitoring of fish length and weight is a core technology for advancing intelligent aquaculture. However, this field faces dual challenges: traditional contact-based measurements induce stress and yield loss, while existing computer vision methods are hindered by prediction biases from imbalanced data and by the deployment bottleneck of balancing high accuracy with model lightweighting. This study aims to overcome these challenges by developing an efficient and robust deep learning framework. We propose ECR-MobileNet, a lightweight framework built on MobileNetV3-Small. It features three key innovations: an efficient channel attention (ECA) module to enhance feature discriminability; an original adaptive multi-scale contrastive regression (AMCR) loss function that extends contrastive learning to the simultaneous multi-dimensional regression of length and weight in order to mitigate data imbalance; and a dependency-graph-based (DepGraph) structured pruning technique that jointly optimizes model size and performance. On our multi-scene largemouth bass dataset, the pruned ECR-MobileNet-P model outperformed 14 mainstream benchmarks. It achieved an R² of 0.9784 and a root mean square error (RMSE) of 0.4296 cm for length prediction, as well as an R² of 0.9740 and an RMSE of 0.0202 kg for weight prediction. The model’s parameter count is only 0.52 M, with a computational cost of 0.07 giga floating-point operations (GFLOPs) and a CPU latency of 10.19 ms, placing it on the accuracy–efficiency Pareto front among the compared models. This study provides an edge-deployable solution for stress-free biometric monitoring in aquaculture and offers a methodological reference for imbalanced regression and task-oriented model compression.

1. Introduction

In recent years, the aquaculture industry has undergone rapid global expansion, particularly in the culture of high-value fish species [1]. The industrial scale of largemouth bass (Micropterus salmoides), a species of immense economic value in global aquaculture, continues to expand worldwide. According to the China Fishery Statistical Yearbook (2024), the production of farmed largemouth bass in China reached 852,000 t in 2023, accounting for the vast majority of global output [2]. Within intensive aquaculture systems, the ability to precisely monitor the growth status of bass has become a central requirement for driving the industry’s intelligent transformation. This is especially true for the non-invasive, real-time prediction of key biological indicators such as body length and weight. Accurate growth data provide a direct basis for formulating scientific feeding strategies, effectively reducing feed costs, and improving the feed conversion ratio (FCR) [3]; furthermore, such data are fundamental for yield estimation and market planning. These factors directly influence the profitability, resource utilization efficiency, and market competitiveness of aquaculture enterprises [4]. Therefore, developing efficient and accurate technologies for growth prediction is a critical step in advancing the transition of the largemouth bass industry toward intelligent, precision-oriented, and sustainable practices [5].

Presently, the conventional measurement of bass body length and weight remains heavily reliant on manual sampling, a procedure that typically involves netting, anesthetizing, and physically handling the fish. This approach presents significant limitations. First, it is exceedingly labor-intensive and inefficient. For instance, sampling a 3.3-hectare (approximately 50-mu) pond requires 3–5 workers for over four hours, incurring high labor costs and rendering a full-pond census in large-scale farms practically infeasible [6]. Second, netting and direct human contact induce severe stress responses in fish, which have led to annual yield losses of 5–10% and increased susceptibility to pathogen infections [7]. These stressors can also significantly suppress feeding behavior and growth rates, and even elevate mortality, thereby directly compromising production performance [8]. Finally, the reliability of manual measurements is often compromised by their dependence on operator experience, making it difficult to ensure data repeatability and accuracy [9]. While early research attempted to address these issues using traditional mathematical models based on morphological parameters, these models were constrained by their reliance on limited, manually collected data samples. Consequently, they struggled to capture the complex non-linear relationship between body length and weight, exhibited poor generalization ability, and ultimately failed to eliminate the dependence on conventional measurement methods [10].

The rapid development of computer vision (CV) and deep learning technologies in recent years has offered non-contact solutions to address the aforementioned challenges [11]. Early CV techniques, which relied on traditional image processing to extract contour features, demonstrated poor robustness in underwater environments characterized by complex backgrounds and fluctuating lighting conditions [12]. With the advent of deep learning, convolutional neural networks (CNNs) have spurred significant progress in this domain, owing to their end-to-end feature learning capabilities [13]. From the classic AlexNet [14] to deeper architectures like ResNet [15], CNN models have consistently demonstrated superior performance across a range of agricultural image recognition tasks [16]. More recently, the vision transformer (ViT) has been introduced for biometric prediction, leveraging its capacity for global feature capture [17]. However, its high computational cost restricts its practical application on resource-constrained edge devices [18].

To address this challenge, model lightweighting has emerged as a key research focus, aiming to reduce parameter counts and computational complexity while maintaining accuracy [19]. This focus has led to the development of lightweight networks such as MobileNet [20,21,22], ShuffleNet [23,24], and GhostNet [25,26,27], which are particularly relevant to aquaculture, where models must often run on low-cost edge hardware. Zhang et al. [28] combined pose recognition with binocular vision to predict fish weight, achieving an error rate as low as 2.87%; however, their model was not optimized for edge deployment. Jansi Rani et al. [29] utilized YOLOv8 and preprocessing techniques to tackle the problem of turbid water, but the model modifications increased the computational load. Yan et al. [30] developed a lightweight YOLOv5m to meet the demands of underwater robots, yet their work did not focus on the prediction of growth indicators. Zheng et al. [31] employed video object segmentation to improve recognition rates in complex environments, albeit at a high computational cost. Xue et al. [32] used 3D imaging to predict the swimming speed of rainbow trout, but the complex workflow rendered the method unsuitable for real-time applications.

However, despite these explorations into diverse model architectures and application scenarios, existing studies have generally overlooked two critical issues. The first is the prevalent imbalance in the fish-size distribution of aquaculture datasets, where samples of medium-sized fish far outnumber those of extreme sizes. This imbalance can impair the model’s predictive performance on long-tail data. The second is the open challenge of systematically achieving extreme model lightweighting to meet the demands of future large-scale deployment on low-cost edge devices.

To address the aforementioned challenges, this study proposes a novel deep learning framework named ECR-MobileNet, designed for the high-precision and high-efficiency prediction of the body length and weight of largemouth bass. The main contributions of this paper are as follows:

- We designed and introduced the adaptive multi-scale contrastive regression (AMCR) loss function, specifically tailored for imbalanced regression, into the task of fish biometric parameter prediction. Combined with the efficient channel attention (ECA) module, this approach significantly improved the model’s predictive accuracy on long-tail data and its overall robustness.

- We proposed and validated a complete technical pipeline, from high-accuracy model training to extreme lightweight deployment. By employing a general structured pruning technique based on dependency graphs (DepGraph), implemented via the Torch-Pruning (v1.5.2) library, we substantially compressed the model’s parameter count and computational load by 44.1% and 41.7%, respectively, while maintaining or even improving prediction accuracy.

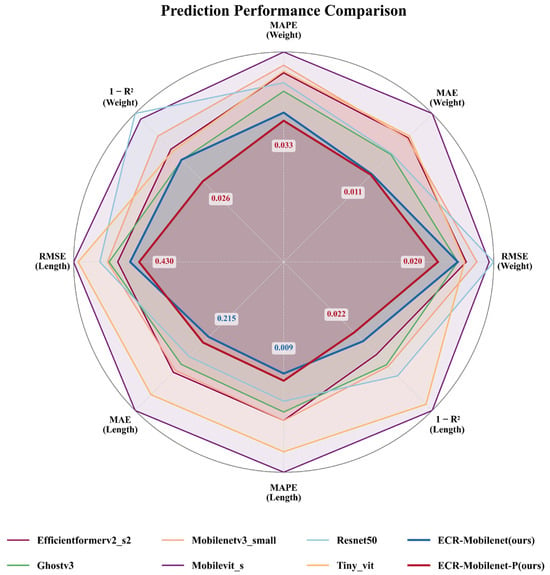

- Through extensive comparative experiments and ablation studies, we systematically validated the independent contributions and synergistic effects of each innovative module within our proposed framework. This work provides solid empirical evidence and establishes a new performance benchmark for future model design and optimization strategies in this field. The comprehensive performance of our method on the bass biometric prediction task is illustrated in Figure 1, visually substantiating the above contributions.

Figure 1. Radar chart comparing the performance of various models on the largemouth bass body length and weight prediction task. The chart illustrates the comparison across eight key regression metrics, including the mean absolute error (MAE), mean absolute percentage error (MAPE), root mean square error (RMSE), and 1 − R². For all metrics, lower values indicate superior performance; consequently, a smaller polygon area closer to the center signifies better comprehensive performance. The results demonstrate that our proposed ECR-MobileNet (blue) significantly outperforms all other comparative models across every metric, validating the effectiveness of its design (Contribution 1). Furthermore, its pruned version, ECR-MobileNet-P (red), achieves even greater performance while drastically reducing model complexity, convincingly demonstrating the success of our proposed lightweighting pipeline (Contribution 2).


2. Materials and Methods

2.1. Dataset Description

The accurate acquisition of fish biometric parameters is a critical prerequisite for overcoming key technological bottlenecks in intelligent aquaculture. To address the limitations of conventional manual measurements (namely, their inefficiency and stress-inducing nature) and the poor generalization of existing computer vision models in authentic aquaculture environments (e.g., fluctuating illumination, water turbidity, and complex fish poses), we constructed a multi-scene largemouth bass dataset. Covering key stages of the value chain from farming to market distribution, this dataset is designed to provide a foundational resource for the development of highly robust models for growth parameter prediction.

2.1.1. Data Acquisition and Scenario Design

A total of 120 healthy largemouth bass samples (ranging from 158 to 920 g) were systematically collected from aquaculture environments in Xiangtan, Changsha, and Jishou, Hunan Province. These environments included high-density earthen ponds (in Xiangtan) and indoor recirculating concrete tanks (in Jishou), with water temperatures maintained between 22 and 28 °C and pH levels of 7.0–8.5. Based on the characteristics of key stages in the aquaculture value chain, three distinct scientific scenarios were designed:

- Standardized lateral view for on-farm monitoring: In this scenario, we placed the fish in a glass tank and captured images laterally from the outside of the tank. This approach was designed to acquire high-quality images with a clean background, uniform lighting, and relatively standard fish poses, serving as ideal conditions for the model to learn baseline morphological data. This scenario was conducted in a controlled environment at the Xiangtan aquaculture base with the objective of acquiring standard lateral-view images rich in information for precise modeling. We captured images of 60 fish using a ZED 2i stereo camera (Stereolabs, San Francisco, CA, USA). To challenge and enhance the model’s environmental adaptability, this scenario specifically included two critical conditions: clear water and simulated turbid water (see Figure 2a). This was intended to train the model to cope with varying levels of water transparency. In this stage, approximately 660 high-quality data entries, each comprising an RGB image and its corresponding depth map, were collected.

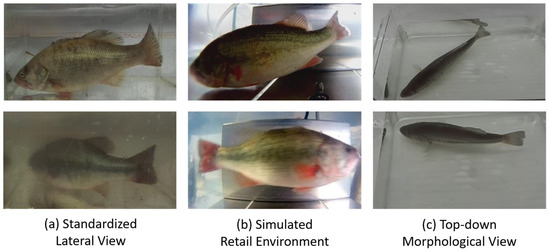

Figure 2. Representative images from the multi-scene largemouth bass dataset, designed to address key challenges in the aquaculture industry chain. (a) The left column displays standardized lateral-view measurement scenarios, with samples in clear (top) and simulated turbid (bottom) water to test the model’s environmental adaptability. (b) The middle column presents simulated flow-through environment monitoring scenarios, featuring challenges such as variable lighting conditions and complex non-rigid poses. (c) The right column shows top-down morphometric measurement scenarios for capturing body width and dorsal contour information, which is crucial for accurate biomass estimation.

- Simulated retail environment for market-chain tracking: In contrast to Scenario 1, this scenario captured footage underwater, inside a tank that simulated the temporary holding tanks common at retail terminals. We procured 30 live specimens from an aquatic market in Changsha and recorded underwater lateral-view videos using a waterproof high-definition camera (Fuli, Model A8, Shenzhen, China). This design was intended to replicate more challenging real-world conditions, including light refraction from underwater imaging, dynamic illumination from surface reflections, more complex backgrounds, and the uncooperative, non-rigid poses that fish adopt in a confined space. Using key-frame extraction techniques, we obtained approximately 340 dynamic images featuring diverse poses (see Figure 2b), which significantly enhanced the dataset’s diversity and its coverage of complex real-world scenarios.

- Top-down morphological view for on-farm biomass estimation: At the Jishou aquaculture base, we again utilized the ZED 2i camera to capture images of 30 fish from a direct top-down perspective (see Figure 2c). This viewpoint is crucial for biomass estimation and is intended to complement the information provided by lateral views. The fish width and dorsal contour data available from this perspective are key variables for accurately estimating the condition factor and body weight. This provided the necessary data dimensionality for the model to overcome the limitations of a single viewpoint and establish a more precise non-linear mapping between body length and weight.

2.1.2. Ground Truth Annotation and Quality Control

Immediately following image acquisition, physical measurements were performed on each fish to acquire its ground truth (GT) data. To ensure accuracy and minimize stress, fish were briefly sedated in a buffered tricaine methanesulfonate (MS-222) solution at a concentration of approximately 100 mg/L. The exposure time to the sedative was around 2–3 min. The handling time out of water was kept under 60 s per fish. Body weight was recorded to the nearest gram using an electronic scale, and total length—the straight-line distance from the snout tip to the end of the caudal fin—was measured to the nearest millimeter using a standard measuring tape. Following measurements, fish were transferred to a dedicated recovery tank with fresh, aerated water and monitored until normal activity resumed, typically within 5–10 min, before being returned to their holding tanks.

All measurement data were rigorously linked to their corresponding images via a unique identifier. Specifically, each fish was assigned a numbered tag, which was recorded alongside its measurements and was visible in the initial photograph of each sequence to ensure a foolproof one-to-one correspondence. To ensure data quality, we employed a two-stage quality assurance (QA) protocol. The first stage involved a rapid screening process to discard images exhibiting severe motion blur, partial occlusion greater than 30%, or significant overexposure. The second stage consisted of a meticulous review of the remaining images to confirm that fish contours were clear and features were intact, leading to the removal of any ambiguous or compromised samples. Ultimately, this QA protocol resulted in a final dataset of 1284 high-quality images retained from an initial pool of 1354.

2.1.3. Dataset Statistical Analysis and Partitioning

The final dataset exhibited broad coverage in terms of fish size. The total length ranged from 17.5 to 34.0 cm (mean: 24.64 cm, standard deviation [SD]: 2.58 cm), while the body weight ranged from 158 to 920 g (mean: 420 g, SD: 120 g). Notably, the coefficient of variation for weight (28.6%) was considerably higher than that for total length (10.5%). This disparity indicates substantial individual variation in body condition, even among fish of similar lengths. This wide distribution is by design, as the samples were intentionally selected to span the full growth cycle from advanced fingerlings to market-ready adults, thereby enabling the development of a model capable of continuous growth monitoring. This characteristic not only provides a rich data foundation for the model to learn the complex, non-linear relationship between length and weight but also constitutes a core challenge of this research.
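As a quick check, both coefficients of variation follow directly from the reported means and standard deviations (coefficient of variation = SD divided by mean):

```latex
\[
\frac{\mathrm{SD}_{\text{length}}}{\text{mean}_{\text{length}}}
= \frac{2.58\ \text{cm}}{24.64\ \text{cm}} \approx 0.105 = 10.5\%,
\qquad
\frac{\mathrm{SD}_{\text{weight}}}{\text{mean}_{\text{weight}}}
= \frac{120\ \text{g}}{420\ \text{g}} \approx 0.286 = 28.6\%.
\]
```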

To ensure an unbiased model evaluation, we employed a stratified sampling strategy based on total length to partition the dataset into training, validation, and test sets at a ratio of 8:1:1. This approach ensured that the distribution of key biometric indicators in each subset was consistent with that of the overall dataset. The detailed partitioning and statistics are presented in Table 1.
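A stratified 8:1:1 partition of this kind can be sketched as follows. This is a minimal illustration, not the study's actual code: the function name, the 2 cm bin width, and the random seed are assumptions, and the sketch splits whatever units it is given (the text does not state whether partitioning was done per image or per fish).

```python
import random
from collections import defaultdict

def stratified_split(lengths_cm, ratios=(0.8, 0.1, 0.1), bin_width_cm=2.0, seed=0):
    """Split sample indices into train/val/test, stratified by binned total length.

    bin_width_cm and seed are illustrative assumptions, not values from the study.
    """
    rng = random.Random(seed)
    # Group sample indices by length bin so every bin is split separately.
    bins = defaultdict(list)
    for idx, length in enumerate(lengths_cm):
        bins[int(length // bin_width_cm)].append(idx)

    train, val, test = [], [], []
    for key in sorted(bins):
        members = bins[key]
        rng.shuffle(members)
        n_val = round(len(members) * ratios[1])
        n_test = round(len(members) * ratios[2])
        val.extend(members[:n_val])
        test.extend(members[n_val:n_val + n_test])
        train.extend(members[n_val + n_test:])  # remainder goes to training
    return train, val, test
```

Splitting within each length bin keeps rare small and large fish represented in all three subsets, which a purely random split does not guarantee.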

Table 1.

Dataset partitioning and statistics.

2.1.4. Data Preparation and Augmentation

To ensure the uniformity of input data, enhance model generalization, and mitigate the risk of overfitting on a limited dataset, we constructed a systematic data preparation and augmentation pipeline. This pipeline, strictly adhering to standard principles of machine learning data processing, sequentially performed data cleaning and alignment, targeted data augmentation, and normalization. Crucially, the training set was treated differently from the validation and test sets to ensure an objective and unbiased evaluation of the model’s performance.

For the training set, we employed a composite strategy that combined geometric and photometric transformations to simulate the natural morphological variations and lighting disturbances found in authentic aquaculture environments. On the geometric level, random horizontal flipping was applied to enhance the model’s invariance to fish orientation, while random rotation simulated the natural tilting poses of fish in water. At the photometric level, color jittering was used to simulate color deviations under varying water quality and lighting conditions. Furthermore, random grayscale conversion enhanced the model’s robustness in scenarios with color distortion by reducing its over-reliance on color information.

In addition, to support the contrastive regularization training strategy, we further introduced asymmetric data augmentation. This process involved generating a pair of views for each training image with differing levels of perturbation. The two views remained semantically consistent but possessed subtle differences in their visual representations, thereby constructing positive pairs to strengthen the model’s ability to learn intrinsic feature associations.
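The idea of an asymmetric view pair can be sketched in NumPy as below. The perturbation strengths, the grayscale probability, and the function names are hypothetical, and random rotation is omitted for brevity; the sketch only shows how a weakly and a strongly perturbed view share one unchanged label.

```python
import numpy as np

def make_view(image, strength, p_gray, rng):
    """Return one randomly perturbed view of an HxWx3 float image in [0, 1]."""
    view = image
    if rng.random() < 0.5:                          # random horizontal flip
        view = view[:, ::-1, :]
    gain = 1.0 + rng.uniform(-strength, strength)   # brightness jitter
    view = np.clip(view * gain, 0.0, 1.0)
    if rng.random() < p_gray:                       # random grayscale conversion
        gray = view.mean(axis=2, keepdims=True)
        view = np.repeat(gray, 3, axis=2)
    return view

def two_views(image, label, rng, weak=0.1, strong=0.4):
    """Build a positive pair: two views of one image with the same label.

    weak/strong and the 0.2 grayscale probability are illustrative assumptions.
    """
    view_a = make_view(image, weak, 0.0, rng)
    view_b = make_view(image, strong, 0.2, rng)
    return (view_a, label), (view_b, label)
```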

Upon conversion to tensors, all images were normalized using the mean [0.485, 0.456, 0.406] and standard deviation [0.229, 0.224, 0.225] derived from the ImageNet dataset. This process stabilizes the model training and accelerates convergence. To address the significant disparities in physical units and numerical ranges between the two regression targets (total length and weight), this study performed label standardization. The standardization parameters (i.e., mean and standard deviation) were fitted exclusively on the training set labels and subsequently applied across all data subsets. This strategy ensures a balanced contribution of different physical scales to the loss function during multi-task regression.
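The label standardization step can be illustrated with the short sketch below. The function names are hypothetical and the use of the population standard deviation is an assumption; the point is that the statistics are fitted on training labels only and then reused unchanged for validation and test labels.

```python
from statistics import mean, pstdev

def fit_label_scaler(train_labels):
    """Per-target mean and SD from training labels only: [(length, weight), ...]."""
    columns = list(zip(*train_labels))
    return [mean(c) for c in columns], [pstdev(c) for c in columns]

def standardize(labels, means, stds):
    """Map raw labels to zero-mean, unit-variance targets using fitted statistics."""
    return [tuple((v - m) / s for v, m, s in zip(row, means, stds)) for row in labels]

def destandardize(scaled, means, stds):
    """Map standardized predictions back to physical units (e.g., cm and g)."""
    return [tuple(v * s + m for v, m, s in zip(row, means, stds)) for row in scaled]
```

Predictions made in the standardized space are passed through `destandardize` before errors such as the RMSE are reported in physical units.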

Table 2 summarizes the specific pipeline and parameter settings for data augmentation and preprocessing. The training set underwent a series of augmentations—including geometric flipping, rotation, color jittering, and grayscaling—to expand its diversity. In contrast, the validation and test sets were subjected only to consistent resizing and normalization to avoid introducing additional perturbations.

Table 2.

Image data augmentation and preprocessing pipeline and parameters.

In summary, rigorous data cleaning and alignment ensured the integrity and consistency between the input images and their corresponding output labels. By employing a differentiated augmentation strategy and proper standardization, we effectively expanded data diversity while strictly controlling the risk of data leakage. This provides a high-quality, standardized data foundation for the subsequent training and evaluation of the high-precision, lightweight models for fish growth parameter prediction.

2.2. AMCR: Adaptive Multi-Scale Contrastive Regression Loss Function

Inspired by the InfoNCE [33] and ConR [34] methods, the AMCR loss function is specifically designed and optimized for the characteristics of deep regression tasks, effectively enhancing the accuracy and robustness of biometric parameter prediction.

In the context of predicting the length and weight of largemouth bass, AMCR first generates semantically consistent positive pairs by applying a series of augmentations (such as cropping, rotation, and color jittering) to the original fish images. It then dynamically constructs anchor–positive–negative relationships based on the similarity of the ground truth labels. By fusing supervised contrastive learning with information from the label space, the AMCR loss function drives samples with similar lengths and weights to cluster together in the feature space while pushing samples with significant biometric differences apart. This mechanism enhances the model’s generalization ability, particularly for imbalanced data distributions.

2.2.1. Problem Formulation

This research addresses the problem of non-contact, high-precision, computer vision-based prediction of key biometric parameters (i.e., total length and body weight) for largemouth bass in aquaculture. Let $\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{N}$ be a training dataset of $N$ samples, where $x_i$ represents the input image and $y_i$ is its corresponding ground truth label vector. The label vector comprises two key biometric indicators: $y_i = (l_i, w_i)$, where $l_i$ denotes the total length and $w_i$ represents the body weight. Consequently, the label space $\mathcal{Y} \subset \mathbb{R}^2$ is a two-dimensional continuous space, making this a multi-dimensional regression task. This study places a special focus on a prevalent challenge in authentic aquaculture environments: the imbalanced distribution of labels. Specifically, samples representing medium-sized bass far outnumber those of extreme sizes (i.e., very small or very large), causing the distribution of labels to exhibit a long-tailed characteristic. We define a deep learning model as $M(\cdot) = R(E(\cdot))$, which is composed of a feature extractor, $E(\cdot)$, and a regressor, $R(\cdot)$. The objective of this research is to train the model $M$ such that for any given input image $x_i$, its predicted output, $\hat{y}_i = M(x_i)$, closely aligns with the corresponding ground truth label, $y_i$.

2.2.2. Imbalanced Contrastive Regression

In regression tasks, the structure of the label space is expected to reflect semantic relationships within the feature space. However, an imbalanced data distribution disrupts this mapping, leading to a confounding effect where the feature statistics of minority and majority samples overlap, even when their ground truth labels differ significantly [35]. To address this challenge, we propose the AMCR framework. It innovatively integrates supervised information from the label space into a contrastive learning paradigm, with the goal of constructing a distributionally balanced feature space and thereby establishing a more robust feature similarity metric.

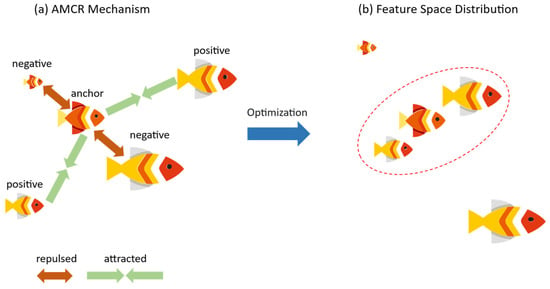

In essence, AMCR is a continuous extension of the InfoNCE loss. First, to introduce sample diversity, we apply data augmentation to each input image to generate two augmented views. The resulting set of augmented samples is defined as $\{(\tilde{x}_j, \tilde{y}_j)\}_{j=1}^{2N}$, where $\tilde{x}_j$ is an augmented image, and $\tilde{y}_j$ is its corresponding original ground truth label. The core of AMCR lies in its dynamic strategy for selecting positive and negative pairs. For each augmented sample $(\tilde{x}_j, \tilde{y}_j)$, AMCR first determines its positive and negative counterparts. Since the label remains unchanged after augmentation, every sample has at least one positive pair (i.e., the other view from the same original image). If a sample also has at least one negative pair, it is treated as an ‘anchor’. The regularization process of AMCR then pulls this anchor closer to its positive pairs in the feature space while simultaneously pushing it away from its negative pairs. This core concept is illustrated in Figure 3.

Figure 3.

Conceptual diagram of the core principle behind adaptive multi-scale contrastive regression (AMCR). (a) The contrastive learning mechanism: centered on an anchor sample, the model is trained to attract positive samples with similar biometric features (Attraction) and repel negative samples with dissimilar features (Repulsion) in the feature space. (b) The desired feature space distribution after training: Following optimization with AMCR, samples with similar biometric characteristics (e.g., fish of comparable size) form compact clusters.

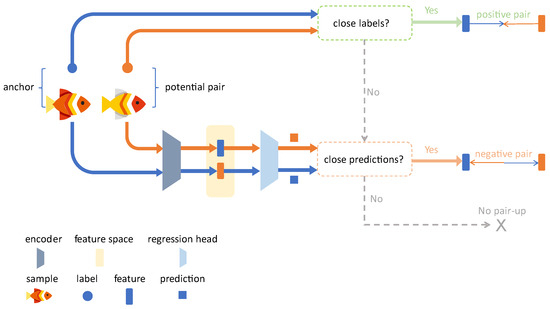

Pair Selection. Given a pair of augmented samples, $(\tilde{x}_i, \tilde{y}_i)$ and $(\tilde{x}_j, \tilde{y}_j)$, each sample is first passed through the feature encoder $E(\cdot)$ to extract feature vectors $z_i$ and $z_j$. These are subsequently fed into the regressor $R(\cdot)$ to obtain the predicted values $\hat{y}_i$ and $\hat{y}_j$. The predicted values and the ground truth labels are jointly used to determine whether the two samples should form a positive pair, a negative pair, or not be paired at all. The detailed decision-making process is illustrated in Figure 4. To measure the similarity between multi-dimensional labels, we made a key extension to the basic contrastive regression method. We first define a similarity threshold, $\omega$. Given a multi-dimensional distance function, $\mathrm{Dist}(\cdot, \cdot)$, if the distance between two label vectors, $\tilde{y}_i$ and $\tilde{y}_j$, satisfies $\mathrm{Dist}(\tilde{y}_i, \tilde{y}_j) < \omega$, they are considered similar, denoted as $\tilde{y}_i \simeq \tilde{y}_j$. In this study, we employed the Manhattan distance to compute the combined difference across the total length ($l$) and body weight ($w$) dimensions: $\mathrm{Dist}(\tilde{y}_i, \tilde{y}_j) = |l_i - l_j| + |w_i - w_j|$. As shown in Figure 4, based on the similarity measure defined above, the pairing rules are defined as follows:

Positive pair: formed if and only if the ground truth labels of the two samples are similar, i.e., $\tilde{y}_i \simeq \tilde{y}_j$.

Negative pair: formed when the ground truth labels of the two samples are dissimilar ($\tilde{y}_i \not\simeq \tilde{y}_j$) but their model predictions are erroneously judged to be similar ($\hat{y}_i \simeq \hat{y}_j$).

No pair: when neither of the above conditions is met, the samples do not participate in contrastive learning.

Anchor selection. For each augmented sample $j$, the set of all its positive feature vectors is denoted as $K_j^{+} = \{z_p\}_{p=1}^{N_j^{+}}$, and the set of all its negative feature vectors is denoted as $K_j^{-} = \{z_q\}_{q=1}^{N_j^{-}}$, where $N_j^{+}$ and $N_j^{-}$ represent the number of positive and negative samples, respectively. If $N_j^{-} > 0$, the sample is selected as an anchor and participates in the AMCR regularization process.
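The pairing and anchor rules above can be expressed compactly in code. The following is a minimal sketch in which labels and predictions are (length, weight) tuples on a common standardized scale; the function names, the strict inequality, and any particular value of `omega` are assumptions made for illustration.

```python
def manhattan(a, b):
    """Manhattan distance between two (length, weight) vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))

def pair_type(label_i, label_j, pred_i, pred_j, omega):
    """Classify a pair as 'positive', 'negative', or 'none'."""
    if manhattan(label_i, label_j) < omega:
        return "positive"            # ground truth labels are similar
    if manhattan(pred_i, pred_j) < omega:
        return "negative"            # labels differ, predictions wrongly agree
    return "none"                    # pair is ignored by the contrastive loss

def is_anchor(j, labels, preds, omega):
    """A sample is an anchor if it has at least one negative pair."""
    return any(
        pair_type(labels[j], labels[q], preds[j], preds[q], omega) == "negative"
        for q in range(len(labels)) if q != j
    )
```

Negative pairs exist only where the model currently confuses fish of different sizes, so the contrastive term concentrates on samples whose predictions need correcting.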

Figure 4.

Workflow of the pair selection mechanism in AMCR. (1) Positive pair: a positive pair is formed if the ground truth labels are similar ($\tilde{y}_i \simeq \tilde{y}_j$). (2) Negative pair: a negative pair is assigned if the positive pair condition is not met, but the predicted values are erroneously judged to be similar ($\hat{y}_i \simeq \hat{y}_j$), compelling the model to correct this error. (3) No pair: in all other cases, the pair does not participate in the contrastive loss computation.

For each candidate anchor sample $j$, AMCR defines its contrastive loss as $\mathcal{L}_{\mathrm{AMCR},j}$. If sample $j$ is not selected as an anchor, then $\mathcal{L}_{\mathrm{AMCR},j} = 0$. If sample $j$ is selected as an anchor, $\mathcal{L}_{\mathrm{AMCR},j}$ concurrently performs two optimization tasks: it pulls the anchor closer to its positive samples in $K_j^{+}$, and it dynamically repels the negative samples in $K_j^{-}$ based on their label dissimilarity. Specifically, the repulsive force exerted on a negative sample $z_q$ is proportional to $\mathrm{Dist}(\tilde{y}_j, \tilde{y}_q)$, meaning that negative samples with greater label dissimilarity are pushed away more forcefully.
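Written out, a per-anchor loss with these properties can take the following ConR-style form [34]. The expression is a sketch under that assumption rather than a verbatim statement of the AMCR loss; $z_j$ denotes the anchor feature, $K_j^{+}$ and $K_j^{-}$ its sets of positive and negative features, and $N_j^{+}$ the number of positives:

```latex
\[
\mathcal{L}_{\mathrm{AMCR},j}
= -\log \frac{1}{N_j^{+}} \sum_{z_i \in K_j^{+}}
\frac{\exp\left(z_j \cdot z_i / \tau\right)}
     {\sum_{z_p \in K_j^{+}} \exp\left(z_j \cdot z_p / \tau\right)
      + \sum_{z_q \in K_j^{-}} S_{j,q}\, \exp\left(z_j \cdot z_q / \tau\right)}
\]
```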

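where $\tau$ is a temperature hyperparameter. The term $S_{j,q}$ is a repulsion weight for each negative pair, defined as $S_{j,q} = f_S\left(\eta_j, \mathrm{Dist}(\tilde{y}_j, \tilde{y}_q)\right)$,

where $\tilde{y}_q$ is the ground truth label corresponding to the feature vector $z_q$. The repulsion strength $\eta_j$ for each sample is dynamically adjusted by the label distribution to enhance optimization for minority samples; here, $\eta_j$ is set from a density weight calculated from the empirical label distribution. The similarity function $f_S$ generates the coefficient $S_{j,q}$, which satisfies a dual constraint: $S_{j,q} \propto \eta_j$ and $S_{j,q} \propto \mathrm{Dist}(\tilde{y}_j, \tilde{y}_q)$. Finally, the overall AMCR regularization term is the average of the anchor losses over the entire set of $2N$ augmented samples: $\mathcal{L}_{\mathrm{AMCR}} = \frac{1}{2N} \sum_{j=1}^{2N} \mathcal{L}_{\mathrm{AMCR},j}$.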

2.2.3. Theoretical Insight

We followed the theoretical analysis framework from the original contrastive learning literature to theoretically validate the effectiveness of our proposed AMCR method. We derived a theoretical upper bound for the probability of mislabeling on minority class samples, which is constrained by our proposed loss function.

Here, $A$ is the set of anchors, and $x_q$ is a negative sample image. The term $p(\hat{Y}_j \mid x_q)$ represents the probability that the predicted value of a negative sample erroneously falls within the multi-dimensional similarity region centered at the anchor’s predicted value $\hat{y}_j$ with radius $\omega$. This similarity region is defined as $\hat{Y}_j = \{\hat{y} : \mathrm{Dist}(\hat{y}, \hat{y}_j) \le \omega\}$. We interpret $p(\hat{Y}_j \mid x_q)$ as the probability of “prediction collapse” for sample $x_q$. The left-hand side of inequality (4) represents the weighted sum of these prediction collapse probabilities for all negative pairs during training. This formulation explicitly reveals that the AMCR loss, $\mathcal{L}_{\mathrm{AMCR}}$, places an upper bound on this total probability. Therefore, minimizing $\mathcal{L}_{\mathrm{AMCR}}$ reduces the number of anchors (i.e., misclassified pairs), the degree of prediction collapse, or both.

Furthermore, each collapse probability is modulated by the weight $S_{j,q}$, whose value is proportional to the ground truth label dissimilarity $\mathrm{Dist}(y_j, y_q)$. This mechanism implements a differentiated penalty for severe prediction errors. Consequently, optimizing our proposed AMCR loss can significantly reduce the misclassification probability for minority samples. By strengthening the representation learning for minority classes through this weighting mechanism, it enhances the model’s overall performance and predictive fairness.

2.3. Overall Model Architecture

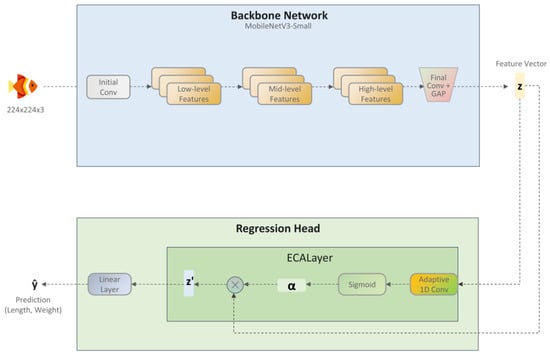

To achieve non-contact prediction of the body length and weight of largemouth bass, this study proposes a lightweight deep learning model named ECR-MobileNet (Efficient Channel Attention Regression MobileNet). The model adheres to the standard backbone-and-head design paradigm. It utilizes MobileNetV3-Small as its core backbone network, integrated with an ECA module and a simple linear regression head. This architecture strikes a balance between high-precision prediction and low computational complexity, making it particularly suitable for real-time fish growth monitoring on resource-constrained edge devices. As illustrated in Figure 5, the architecture primarily consists of two components: the backbone network and the regression head.

Figure 5.

The overall architecture of the proposed ECR-MobileNet. The model comprises two main components: (1) A lightweight backbone network based on MobileNetV3-Small, which performs hierarchical feature extraction to output a feature vector z. (2) An efficient regression head, which first uses an ECA layer to recalibrate the channel-wise importance of z (yielding z’) and then employs a final linear layer to predict the biometric parameters (i.e., total length and weight).

2.3.1. Feature Extraction Backbone

The choice of a backbone network is a core determinant of a model’s performance and efficiency. In aquaculture applications, models must satisfy the dual requirements of high inference speed and low computational resource consumption while ensuring robust feature extraction capabilities. To this end, our study selected MobileNetV3-Small, a benchmark model for lightweight networks, as the primary feature extraction backbone. This choice not only represents an excellent balance between efficiency and performance but is also highly suitable for real-time applications in resource-constrained environments.

The central advantage of MobileNetV3 lies in its relentless pursuit of computational efficiency, achieved through several key design innovations:

Depth-wise separable convolutions: This technique decomposes a standard convolution into two distinct steps: a depth-wise convolution and a point-wise convolution. This approach achieves a feature extraction efficacy comparable to that of standard convolutions while significantly reducing both the parameter count and the computational load (FLOPs).

Inverted residuals and linear bottlenecks: This structure employs an “expand-convolve-compress” feature transformation pattern, where feature extraction and processing occur in a high-dimensional space. Simultaneously, linear bottleneck layers and residual connections are introduced at the low-dimensional input and output stages. This design effectively preserves the integrity of the information flow and mitigates the potential information loss caused by the ReLU activation function in low-dimensional spaces, thereby enhancing the quality of feature representations.

NAS-based architecture search: The architecture of MobileNetV3 is not entirely handcrafted; instead, it was optimized using neural architecture search (NAS) techniques tailored to the latency characteristics of specific hardware, such as mobile CPUs. This ensures that the model achieves a Pareto-optimal trade-off between speed and accuracy on real-world devices, providing strong support for practical deployment.
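The saving from depth-wise separable convolutions can be checked with simple arithmetic. The sketch below counts weights (biases ignored) for a standard convolution and for its depth-wise plus point-wise factorization; the layer sizes are illustrative assumptions and are not taken from MobileNetV3-Small.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a k x k depth-wise conv followed by a 1 x 1 point-wise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv that mixes channels
    return depthwise + pointwise


# Hypothetical layer: 64 input channels, 128 output channels, 3 x 3 kernel.
std = standard_conv_params(64, 128, 3)
sep = depthwise_separable_params(64, 128, 3)
ratio = sep / std  # equals 1 / c_out + 1 / k**2
```

For this layer the factorized version needs 8,768 weights instead of 73,728, roughly 12% of the original, which matches the closed-form ratio $1/C_{out} + 1/k^2$.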

Departing from the common practice of relying on large pre-trained models, this study adopted a strategy of training the model from scratch, without using pre-trained weights from general-purpose datasets like ImageNet. Although this choice poses greater challenges for model convergence, its advantage is that the features extracted during training are exclusively tailored to our proprietary largemouth bass dataset. This avoids potential performance bottlenecks that can arise from domain discrepancies in pre-trained models. Training from scratch provides a more rigorous experimental foundation for validating the effectiveness of our proposed overall framework on task-specific data.

2.3.2. Efficient Channel Attention Regression Head

Conventional regression heads typically employ one or more fully connected (FC) layers to directly map a feature vector to the output values. This design treats each channel in the feature vector equally, failing to account for the significant differences in information content across various channels. In fine-grained tasks such as bass parameter prediction, certain feature channels often contain more discriminative information and contribute more significantly to the final prediction than others.

To enable the model to adaptively focus on the feature channels most critical to the current prediction task, this study introduces a key enhancement to the regression head design by incorporating the ECA mechanism. Proposed in ECA-Net [36], the core idea of the ECA mechanism is to significantly optimize the computational efficiency of the attention module while maintaining its performance. Compared to the Squeeze-and-Excitation (SE) attention mechanism, which is widely used in models like MobileNetV3, ECA offers two notable advantages:

- Avoidance of dimensionality reduction bottlenecks: The SE module facilitates channel interaction through two FC layers: the first compresses the channel dimension, and the second restores it. However, the authors of ECA-Net demonstrated through experiments that this dimensionality reduction is not essential for learning effective channel attention and may even be detrimental to model performance. Consequently, the ECA module discards the dimensionality reduction step and performs interactions directly on the original channel dimensions, thereby eliminating this potential bottleneck.

- Efficient local cross-channel interaction: ECA proposes an efficient local interaction strategy where the attention weight for each channel is learned by considering information from only its nearest neighboring channels, rather than engaging in global interaction with all channels. This strategy can be implemented with high efficiency using a one-dimensional (1D) convolution with a kernel size of $k$.

Furthermore, the authors of ECA-Net proposed that this interaction range, $k$, should not be fixed but should instead scale with the channel dimension $C$. Thus, the kernel size is adaptively determined via the following mapping:

$$k = \psi(C) = \left| \frac{\log_2(C)}{\gamma} + \frac{b}{\gamma} \right|_{\mathrm{odd}}$$

where $|t|_{\mathrm{odd}}$ denotes the odd number nearest to $t$, and $\gamma$ and $b$ are tunable hyperparameters. This adaptive strategy enables the model to automatically select the optimal local interaction range for different network layers, thereby further enhancing model performance and generalization ability.
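The adaptive kernel-size mapping can be illustrated with a short function. This sketch uses $\gamma = 2$ and $b = 1$, the defaults reported for ECA-Net; the values used in this study are not stated in this section, so they are assumptions here. The rounding follows the convention of the ECA-Net reference code (truncate, then move an even result up to the next odd number).

```python
import math


def eca_kernel_size(channels: int, gamma: float = 2.0, b: float = 1.0) -> int:
    """Adaptive 1D kernel size for a given channel dimension."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1  # force an odd kernel size


# Kernel sizes for a few illustrative channel counts.
sizes = {c: eca_kernel_size(c) for c in (64, 256, 576)}
```

With these settings, a 64-channel feature gets a kernel of size 3, while 256 and 576 channels both get a kernel of size 5, so the interaction range grows slowly as the feature vector widens.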

In our study, the final regression head was designed as follows: The feature vector $z$ output by the backbone network is first fed into an ECA layer. Within this layer, the aforementioned adaptive 1D convolution is performed, and a Sigmoid activation function is applied to compute a scalar attention weight in the (0, 1) range for each channel. Subsequently, these attention weights are multiplied channel-wise with the original feature vector to obtain a channel-recalibrated feature vector $z'$. Finally, the recalibrated vector is passed through a standard fully connected layer, which directly maps it to the final output vector $\hat{y}$, containing the two predicted targets (total length and weight).
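The data flow of this head can be sketched without any deep learning framework, which makes each arithmetic step visible. The code below is an illustration under stated assumptions, not the authors' module: the function names are hypothetical, the 1D convolution is zero-padded, and the toy weights are chosen only so that the result is easy to check by hand.

```python
import math
from typing import List, Sequence


def eca_recalibrate(z: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Gate each channel with sigmoid(1D conv over its neighboring channels)."""
    pad = len(kernel) // 2
    out = []
    for i in range(len(z)):
        s = 0.0
        for t, w in enumerate(kernel):
            idx = i + t - pad
            if 0 <= idx < len(z):           # zero padding at the borders
                s += w * z[idx]
        gate = 1.0 / (1.0 + math.exp(-s))   # attention weight in (0, 1)
        out.append(gate * z[i])             # channel-wise recalibration
    return out


def linear(z: Sequence[float], weight: Sequence[Sequence[float]],
           bias: Sequence[float]) -> List[float]:
    """Fully connected layer mapping the feature vector to the outputs."""
    return [sum(w * x for w, x in zip(row, z)) + b
            for row, b in zip(weight, bias)]


def regression_head(z, kernel, weight, bias) -> List[float]:
    """ECA recalibration followed by a linear layer: returns [length, weight]."""
    return linear(eca_recalibrate(z, kernel), weight, bias)


# Toy check: an all-zero kernel gives every channel a gate of exactly 0.5.
z = [2.0, -4.0, 6.0]
z_prime = eca_recalibrate(z, kernel=[0.0, 0.0, 0.0])
y_hat = regression_head(z, [0.0, 0.0, 0.0],
                        weight=[[1.0, 1.0, 1.0], [0.0, 1.0, 0.0]],
                        bias=[0.0, 0.0])
```

The only learnable parameters the ECA step adds are the few kernel weights, which is why the head adds almost no computational overhead.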

This ECA + Linear regression head design constitutes a computationally efficient prediction module. Under the premise of introducing almost no additional computational overhead, it endows the model with the ability to dynamically focus on key feature channels. This capability is particularly crucial for a model learning task-specific effective feature representations from scratch, as it helps accelerate model convergence and improve the discriminability of the learned features.

2.4. Design of the Hybrid Loss Function

We selected the mean absolute error (MAE), also known as L1 loss, as the primary regression loss term. Compared to the mean squared error (MSE) loss, L1 loss offers several significant advantages. The MSE loss, which calculates the mean of the squared errors between predicted and true values, tends to amplify the influence of outliers, making the model training susceptible to interference from extreme data points. In contrast, the L1 loss uses the mean of the absolute errors, exhibiting greater robustness to outliers and effectively preventing significant performance degradation when handling datasets containing noise or outliers.

Furthermore, the gradient of the L1 loss has a constant magnitude regardless of the size of the error, which contributes to more stable training, particularly in the initial stages, and reduces the risk of exploding gradients. Its optimization process also tends to produce sparse solutions, which can implicitly perform feature selection. Conversely, the gradient of the MSE loss grows linearly with the error, which can lead to instability during the early phases of training. Additionally, the physical interpretation of the L1 loss is more intuitive, as it directly corresponds to the mean absolute error, making it easier to understand the model’s prediction bias. Therefore, in regression tasks where robustness, sparsity, or sensitivity to absolute error is prioritized, L1 loss is the preferable choice. It is defined as follows:

$$\mathcal{L}_{L1} = \frac{1}{N} \sum_{i=1}^{N} \left\| y_i - \hat{y}_i \right\|_1$$

where $N$ is the number of samples in a batch, and $y_i$ and $\hat{y}_i$ are the ground truth and predicted output vectors for the $i$-th sample, respectively.
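The difference in outlier sensitivity between the two losses can be seen on a toy batch. The sketch below assumes the per-sample error is summed over the two output dimensions and then averaged over the batch; the data are invented for illustration, with the third sample acting as an outlier.

```python
from typing import Sequence, Tuple

Vec = Tuple[float, float]  # (total length, body weight)


def l1_loss(y_true: Sequence[Vec], y_pred: Sequence[Vec]) -> float:
    """Mean over the batch of the summed absolute errors per sample."""
    total = sum(abs(t - p) for yt, yp in zip(y_true, y_pred)
                for t, p in zip(yt, yp))
    return total / len(y_true)


def mse_loss(y_true: Sequence[Vec], y_pred: Sequence[Vec]) -> float:
    """Mean over the batch of the summed squared errors per sample."""
    total = sum((t - p) ** 2 for yt, yp in zip(y_true, y_pred)
                for t, p in zip(yt, yp))
    return total / len(y_true)


# Hypothetical batch: the third length prediction is off by 10 units.
y_true = [(30.0, 0.5), (31.0, 0.6), (32.0, 0.7)]
y_pred = [(30.5, 0.5), (31.0, 0.6), (42.0, 0.7)]

l1 = l1_loss(y_true, y_pred)    # (0.5 + 0 + 10) / 3
mse = mse_loss(y_true, y_pred)  # (0.25 + 0 + 100) / 3
```

The single outlier raises the L1 loss in proportion to its error, whereas under MSE it contributes 100 of the 100.25 units of total squared error and dominates the batch almost entirely.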

To compensate for the shortcomings of the primary regression loss and enhance the model’s ability to handle imbalanced data, we introduced the adaptive contrastive regularization loss, denoted as $\mathcal{L}_{\mathrm{AMCR}}$, as a regularization term. By incorporating $\mathcal{L}_{\mathrm{AMCR}}$, we not only require the model’s predicted values to approach the ground truth but also impose a strong constraint at the feature space level. The model must learn a structured feature representation capable of distinguishing between samples with different size biases. This mechanism, through its contrastive learning strategy of “pulling similar samples closer and pushing dissimilar ones apart,” effectively prevents minority class samples from being marginalized in the feature space. This, in turn, significantly improves the model’s generalization ability and predictive fairness across the entire sample space.

Ultimately, the model’s optimization objective is to minimize the total loss function, $\mathcal{L}_{\mathrm{total}}$, which is a weighted sum of the L1 loss and the AMCR loss:

$$\mathcal{L}_{\mathrm{total}} = \mathcal{L}_{L1} + \beta \, \mathcal{L}_{\mathrm{AMCR}}$$

where β is a hyperparameter used to balance the importance of the primary regression task and the contrastive regularization task. Guided by this hybrid loss function, the model simultaneously optimizes for two goals: predictive accuracy and feature space structure, thereby synergistically enhancing overall performance.

2.5. Model Compression and Fine-Tuning

To enhance the model’s deployment efficiency on resource-constrained edge devices, we further explored a model lightweighting pipeline after the initial training phase was completed. We employed a structured pruning technique, which directly reduces the model’s parameter count and computational complexity by physically removing entire structural units from the network.

2.5.1. General Structured Pruning Based on Dependency Graphs

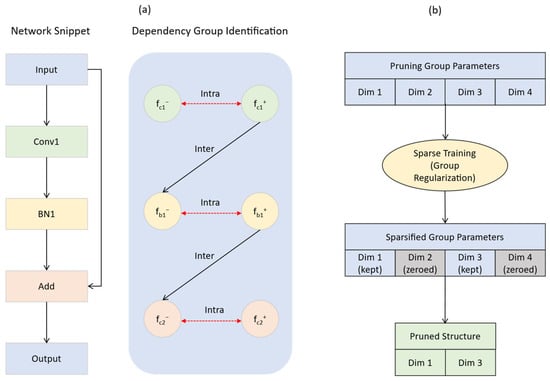

To address the prevalent issue of structural coupling in neural networks—where parameters in different layers are interdependent due to network connections and must be pruned simultaneously—and to enable general-purpose structured pruning for arbitrary network architectures, this study adopted the advanced dependency graph (DepGraph) method [37], utilizing the implementation provided in the Torch-Pruning library (v1.5.2). The core idea of DepGraph is to automatically model the complex dependency relationships within a network. Its key steps and innovations are as follows:

Network decomposition and dependency graph construction: DepGraph first decomposes the network into fine-grained basic components, with each component representing a parameterized layer or a non-parameterized operation. Each component, $f_i$, is then further resolved into an input node, $f_i^-$, and an output node, $f_i^+$. Based on this representation, DepGraph automatically constructs a dependency graph. Inter-layer dependency: If the output of component $f_i$ is directly connected to the input of component $f_j$, a dependency, $f_i^+ \Leftrightarrow f_j^-$, exists. Intra-layer dependency: If the input and output of a component, $f_i$, share the same pruning scheme, $\mathrm{sch}(f_i^-) = \mathrm{sch}(f_i^+)$, a dependency, $f_i^- \Leftrightarrow f_i^+$, exists. The dependency graph D only records the direct dependencies between adjacent layers or within a layer’s input/output nodes. It serves as a transitive reduction of the original dense grouping matrix G, containing the same information but with the minimum number of edges. As illustrated in Figure 6a, a network segment containing a convolutional layer and a BN layer is decomposed into input and output nodes, and a dependency graph comprising both inter- and intra-layer dependencies is constructed.

Figure 6.

Diagram of the structured pruning process based on a dependency graph (DepGraph). (a) The first stage involves decomposition and dependency graph construction, where an original network module is abstracted to identify both intra-layer and inter-layer parameter dependency groups. (b) The second stage is group-level sparse training, where group sparsity regularization is applied to drive the weights of entire redundant dimensions to zero, leading to a final pruned structure. This entire process achieves model compression while ensuring structural integrity.

Automatic identification of dependency groups: Based on the constructed dependency graph D, DepGraph identifies all maximal connected subgraphs using a graph traversal algorithm. Each connected subgraph constitutes an indivisible dependency group. As shown in Figure 6a under “Dependency Group Identification,” through graph traversal, all nodes in the dependency graph are identified as a single pruning group due to their connectivity. A pruning operation on any parameter within a group will have its effects automatically, synchronously, and safely propagated to all other related parameters in the same group via these dependency relationships. This process rigorously ensures the structural integrity and functional validity of the network after pruning.
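Group identification by graph traversal amounts to finding connected components. The sketch below is a conceptual illustration rather than the Torch-Pruning implementation: the node names are hypothetical, and the edge list encodes a small Conv-BN-Conv-FC segment in which only the BN layer couples its own input and output.

```python
from collections import defaultdict, deque
from typing import Iterable, List, Tuple


def dependency_groups(edges: Iterable[Tuple[str, str]]) -> List[List[str]]:
    """Return the connected components of an undirected dependency graph."""
    adjacency = defaultdict(set)
    for a, b in edges:
        adjacency[a].add(b)
        adjacency[b].add(a)

    seen, groups = set(), []
    for start in sorted(adjacency):
        if start in seen:
            continue
        queue, component = deque([start]), []
        seen.add(start)
        while queue:                      # breadth-first traversal
            node = queue.popleft()
            component.append(node)
            for neighbor in sorted(adjacency[node]):
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        groups.append(sorted(component))
    return groups


# Hypothetical dependency edges for a Conv -> BN -> Conv -> FC segment.
edges = [
    ("conv1.out", "bn1.in"),   # inter-layer: conv1 feeds bn1
    ("bn1.in", "bn1.out"),     # intra-layer: BN input and output are coupled
    ("bn1.out", "conv2.in"),   # inter-layer: bn1 feeds conv2
    ("conv2.out", "fc.in"),    # inter-layer: conv2 feeds fc
]
groups = dependency_groups(edges)
```

Because a convolution's input and output channels can be pruned independently, no edge links `conv2.in` to `conv2.out`, and the traversal yields two separate groups. Removing a channel from `conv1.out` therefore also requires removing it from the BN layer and from the input of `conv2`.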

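Traditional methods assess importance at the single-layer or parameter level, making it difficult to accurately measure the overall contribution of a structurally coupled dependency group. DepGraph addresses this issue by applying sparsity training at the dependency group level. This is achieved by introducing a group-level L2-norm regularization term:

$$\mathcal{R}(g, k) = \sum_{k=1}^{K} \gamma_k \cdot I_{g,k}, \qquad I_{g,k} = \sum_{w \in g} \left\| w[k] \right\|_2^2$$

where $k$ indexes the $K$ prunable dimensions within group $g$, and $I_{g,k}$ is the importance of dimension $k$ aggregated over all parameters $w$ in the group. An adaptive shrinkage strength, $\gamma_k$, is employed, defined as $\gamma_k = 2^{\alpha (I_g^{\max} - I_{g,k}) / (I_g^{\max} - I_g^{\min})}$, where $\alpha$ is a hyperparameter. This strategy applies stronger shrinkage to dimensions of lower importance, thereby learning a consistent sparsity pattern within the group.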
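The effect of the adaptive shrinkage strength can be sketched on a toy group. The code below assumes the importance of a dimension is the squared L2 norm of its slice summed over every layer in the group, and that the shrinkage strength grows exponentially as importance falls, as in the DepGraph formulation; the weights and the value of `alpha` are illustrative assumptions.

```python
from typing import List, Sequence

Layer = Sequence[Sequence[float]]  # layer[k] holds the weights of dimension k


def group_importance(group: Sequence[Layer]) -> List[float]:
    """Squared L2 norm of each prunable dimension, summed over the group."""
    num_dims = len(group[0])
    return [sum(w * w for layer in group for w in layer[k])
            for k in range(num_dims)]


def shrinkage_strengths(importance: Sequence[float],
                        alpha: float = 4.0) -> List[float]:
    """Stronger shrinkage (up to 2**alpha) for less important dimensions."""
    i_max, i_min = max(importance), min(importance)
    span = i_max - i_min
    if span == 0:
        return [1.0] * len(importance)  # all dimensions equally important
    return [2.0 ** (alpha * (i_max - i) / span) for i in importance]


def group_regularizer(group: Sequence[Layer], alpha: float = 4.0) -> float:
    """Weighted sum of dimension importances used as the sparsity penalty."""
    importance = group_importance(group)
    gammas = shrinkage_strengths(importance, alpha)
    return sum(g * i for g, i in zip(gammas, importance))


# Hypothetical group of two coupled layers with three prunable dimensions.
layer_a = [[3.0, 4.0], [1.0, 0.0], [0.0, 0.0]]
layer_b = [[0.0], [2.0], [1.0]]
importance = group_importance([layer_a, layer_b])
gammas = shrinkage_strengths(importance)
```

Here the most important dimension receives a shrinkage strength of 1 and the least important one receives 16, so weak dimensions are driven toward zero consistently across every layer in the group.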

After sparsity training, dimensions of low importance within a group are sparsified to zero and can thus be safely removed, a process illustrated in Figure 6b. This mechanism enables effective group-level pruning, even with simple norm-based criteria. The complete process is depicted in Figure 6, which clearly demonstrates how to start from a network block, construct the dependency graph of its internal components, and subsequently perform sparsity training on the resulting dependency group to ultimately remove redundant dimensions and achieve model compression.

2.5.2. Pruning and Fine-Tuning Pipeline

Based on the DepGraph methodology, we executed the following “pruning and fine-tuning” pipeline on our trained largemouth bass prediction model:

- Dependency-graph construction and group identification: The well-trained, high-accuracy bass prediction model, along with a sample input, was fed into the torch-pruning framework. The framework automatically performed network decomposition, constructed the dependency graph D, and identified all indivisible dependency groups through graph traversal.

- Group-level sparsity training: The model underwent a short period of retraining on the original training set, with group-level sparsity regularization applied. This step learned a consistent sparsity mask for each dimension within every dependency group, thereby identifying redundant dimensions.

- Pruning execution: Guided by the sparsity masks learned during training, all dimensions or entire dependency groups identified as redundant were iteratively removed until a predefined global pruning ratio was achieved. As a key safeguard to preserve the core prediction functionality, the final linear layer of the regression head was explicitly excluded from the pruning scope.

- Fine-tuning: Structured pruning inevitably causes a temporary degradation in model performance. After the pruning was completed, the compacted model was fine-tuned on the original training set. This fine-tuning process involved training for a limited number of epochs with a small learning rate. The objective was to help the model recover and optimize its predictive accuracy on the new, pruned architecture, ultimately aiming to match or even surpass its pre-pruning performance level.
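The pruning-execution step, with its excluded output layer, can be illustrated by a toy global ranking. This is a conceptual sketch only: in practice the Torch-Pruning library performs the ranking and the physical removal, and the layer names, importance scores, and the helper `global_prune_plan` below are hypothetical.

```python
from typing import Dict, Iterable, List, Sequence


def global_prune_plan(importance_by_layer: Dict[str, Sequence[float]],
                      pruning_ratio: float,
                      ignored_layers: Iterable[str] = ()) -> Dict[str, List[int]]:
    """Pick the globally least important dimensions, skipping ignored layers."""
    ignored = set(ignored_layers)
    candidates = [(score, layer, idx)
                  for layer, scores in importance_by_layer.items()
                  if layer not in ignored
                  for idx, score in enumerate(scores)]
    n_remove = int(len(candidates) * pruning_ratio)
    plan: Dict[str, List[int]] = {}
    for _, layer, idx in sorted(candidates)[:n_remove]:
        plan.setdefault(layer, []).append(idx)
    return {layer: sorted(indices) for layer, indices in plan.items()}


# Hypothetical post-sparsity-training importance scores per dimension.
importance = {
    "backbone.block1": [0.90, 0.10, 0.50, 0.05],
    "backbone.block2": [0.80, 0.02, 0.70, 0.60],
    "head.fc": [0.01, 0.01],  # final linear layer: must stay intact
}
plan = global_prune_plan(importance, pruning_ratio=0.5,
                         ignored_layers=["head.fc"])
```

Although the output layer has the lowest scores, it never enters the candidate list, so the two regression outputs are preserved while half of the remaining dimensions are scheduled for removal. The pruned model would then be fine-tuned as described above.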

3. Results

3.1. Experimental Setup and Evaluation Metrics

To ensure the scientific rigor, objectivity, and reproducibility of our research findings, this section provides a detailed account of the hardware and software environments, data processing pipeline, specific parameters for model training and evaluation, and the metrics used to assess model performance.

3.1.1. Experimental Environment and Hyperparameter Settings

The experiments in this study were conducted in the following hardware and software environment. The hardware platform was a workstation equipped with an Intel Core i5-12600KF CPU, 32 GB of DDR4 memory, and an NVIDIA GeForce RTX 4060 Ti GPU. High-performance NVMe SSDs were used for system storage. The operating system was Windows 10 Professional.

The software environment was built upon the Python v3.8.19 programming language. The deep learning framework employed was PyTorch v1.13.1 with CUDA v11.7 acceleration enabled. The key dependent libraries included the following: OpenCV v4.10.0 and TorchVision v0.14.1 for computer vision tasks; Torch-Pruning v1.5.2 for model compression; NumPy v1.24.3 and SciPy v1.10.1 for scientific computing; and Pandas v1.5.3 for data manipulation. The GPU acceleration environment was facilitated by the CUDA Toolkit v11.7 and cuDNN v8.5.0, with versions strictly aligned with the PyTorch framework to ensure computational stability. The baseline hyperparameter settings are detailed in Table 3.

Table 3.

Baseline hyperparameter settings.

3.1.2. Evaluation Metrics

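To comprehensively evaluate the model’s performance on the bass length and weight prediction tasks, this study employed the following four widely used regression evaluation metrics, where $y_i$ and $\hat{y}_i$ denote the true and predicted values for the $i$-th of $n$ test samples and $\bar{y}$ is the mean of the true values:

- Root mean square error (RMSE): $\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$. This metric measures the degree of dispersion between predicted and true values and is more sensitive to larger errors. A lower RMSE value indicates higher prediction accuracy.

- Mean absolute error (MAE): $\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$. This metric reflects the average absolute magnitude of the prediction error and is less sensitive to outliers. It provides a direct representation of the actual level of prediction error.

- Mean absolute percentage error (MAPE): $\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|$. This metric quantifies the relative error in percentage form, eliminating the influence of scale and facilitating comparisons across prediction tasks with different units.

- Coefficient of determination (R2): $R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$. This metric indicates the explanatory power of the model for the variance in the target variable. Its value is at most 1 and typically lies between 0 and 1 (it becomes negative only when the model fits worse than predicting the mean), with a value closer to 1 signifying a better model fit.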
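The four metrics can be computed directly from their standard definitions. The sketch below is a plain-Python illustration with invented length values; it is not the evaluation script used in the study.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
                     / len(y_true))


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent (true values must be nonzero)."""
    return 100.0 * sum(abs((t - p) / t)
                       for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical total lengths in cm.
y_true = [20.0, 25.0, 30.0, 35.0]
y_pred = [21.0, 24.0, 30.0, 37.0]
scores = {
    "RMSE": rmse(y_true, y_pred),
    "MAE": mae(y_true, y_pred),
    "MAPE": mape(y_true, y_pred),
    "R2": r2(y_true, y_pred),
}
```

On this toy data the RMSE (about 1.22) exceeds the MAE (1.0) because the single 2 cm error is weighted more heavily once squared.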

3.2. Comparative Experiments

To systematically validate the effectiveness of our ECR-MobileNet model, which integrates ECA and AMCR, we conducted a benchmark study against 14 mainstream lightweight models. The selected models, including classic CNNs, hybrid architectures, and vision transformers, were chosen based on their best-performing variants with a parameter count under 25 M. To ensure a fair comparison, all models were trained from scratch on the largemouth bass dataset and evaluated on an independent test set, under the unified experimental environment described in Section 3.1.1.

3.2.1. Performance Analysis of Length and Weight Prediction

We evaluated our proposed models against 14 mainstream lightweight architectures on the largemouth bass biometric prediction task. The results, summarized in Table 4 and Table 5, demonstrate the superior accuracy of our approach.

Table 4.

Comparative results of the length prediction experiment.

Table 5.

Comparative results of the weight prediction experiment.

For length prediction (Table 4), our unpruned ECR-MobileNet achieved a highly competitive RMSE of 0.4565 cm, together with an MAE (0.2149 cm) and a MAPE (0.93%) lower than those of all baseline models. Crucially, the pruned ECR-MobileNet-P model further improved performance, attaining the lowest RMSE of all compared models (0.4296 cm) and an R2 of 0.9784. This corresponds to a 3.6% RMSE reduction compared to the best-performing baseline, ShuffleNetV2-x1.0, indicating that our pruning strategy enhances generalization rather than degrading accuracy.

The performance advantage was even more pronounced in the more challenging weight prediction task (Table 5). The ECR-MobileNet-P model ranked first across all four metrics, achieving an RMSE of 0.0202 kg, an MAE of 0.0108 kg, a MAPE of 3.31%, and an R2 of 0.9740. This outcome indicates that our integrated framework, combining the ECA module, AMCR loss, and structured pruning, effectively models the complex, non-linear relationship between fish morphology and weight, setting a new performance benchmark for this task.

3.2.2. Comprehensive Evaluation

A comprehensive analysis of the results reveals the consistent cross-task superiority of the proposed ECR-MobileNet framework. In both length and weight prediction tasks, our models systematically outperformed the 14 baseline models, which include classic CNNs, hybrid architectures, and vision transformers.

Notably, the pruned ECR-MobileNet-P consistently delivered the best overall performance, demonstrating that our “pruning as optimization” pipeline is highly effective. This approach not only reduces model complexity but also acts as a powerful regularizer, refining feature representations to enhance predictive accuracy. The consistent top-ranking performance across two distinct but related biological prediction tasks validates the robustness and generalizability of our integrated design, which synergistically combines an attention mechanism (ECA), an imbalanced regression technique (AMCR), and structured pruning. This establishes a new state-of-the-art performance benchmark in non-contact fish biometric assessment.

3.3. Analysis of Model Compression and Fine-Tuning Results

To evaluate the performance of our proposed ECR-MobileNet model and its pruned version in terms of computational efficiency and deployment feasibility, we conducted a comprehensive quantitative analysis of their model complexity. This analysis was benchmarked against a series of mainstream models, using metrics that include parameter count (M), computational load (GFLOPs), single-core CPU inference latency (ms), and memory footprint (MB).

3.3.1. Inherent Efficiency of ECR-MobileNet

First, even before pruning, our proposed ECR-MobileNet model already demonstrated exceptional efficiency. As detailed in Table 6, ECR-MobileNet has a parameter count of only 0.93 M and a computational load of just 0.12 GFLOPs. This complexity is substantially lower than that of most baseline models. Its computational load is comparable to that of MobileNetV3-Small but is significantly lower than that of ShuffleNetV2-x1.0 (0.30 GFLOPs). This inherent efficiency is primarily attributed to its lightweight MobileNetV3-Small backbone and our custom-designed ECA regression head, which introduces an attention mechanism with almost no additional computational burden.

Table 6.

Comparative analysis of model complexity and lightweighting results.

3.3.2. Extreme Lightweighting Achieved Through Structured Pruning

A core contribution of this research is the validation of the significant potential of advanced structured pruning techniques in achieving extreme model lightweighting. By adopting the dependency-graph-based pruning strategy combined with fine-tuning, we obtained the ECR-MobileNet-P model. This model demonstrated remarkable optimization in its efficiency metrics. Its parameter count was reduced to 0.52 M, and its computational load was a mere 0.07 GFLOPs. Compared to the unpruned ECR-MobileNet, the parameter count and computational load were reduced by 44.1% and 41.7%, respectively. When benchmarked against ShuffleNetV2-x1.0, the most efficient of the baseline models, the pruned model’s parameter count was only 41.6% of the latter’s, and its computational load was only 23.3% of the latter’s.
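The reported reductions follow directly from the figures quoted in this section, as the short check below shows. It uses only values stated in the text (0.93 M and 0.12 GFLOPs before pruning, 0.52 M and 0.07 GFLOPs after pruning, and 0.30 GFLOPs for ShuffleNetV2-x1.0); the helper names are illustrative.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, in percent."""
    return (before - after) / before * 100.0


def percent_of(part: float, whole: float) -> float:
    """`part` expressed as a percentage of `whole`."""
    return part / whole * 100.0


param_reduction = round(percent_reduction(0.93, 0.52), 1)  # parameters (M)
flops_reduction = round(percent_reduction(0.12, 0.07), 1)  # GFLOPs
flops_vs_shufflenet = round(percent_of(0.07, 0.30), 1)     # GFLOPs ratio
```

These evaluate to 44.1%, 41.7%, and 23.3%, matching the percentages reported above.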

This enhancement in efficiency directly translates to faster real-world inference speeds. The CPU latency of ECR-MobileNet-P was only 10.19 ms, making it 29.5% faster than its unpruned counterpart and superior to all baseline models. Concurrently, its memory footprint was compressed to 2.00 MB, the lowest among all models, which substantially lowers the hardware threshold for deployment.

3.3.3. Comprehensive Evaluation of Performance and Efficiency

Synthesizing the performance analysis from Section 3.2, our proposed ECR-MobileNet-P model achieves a Pareto-optimal state, excelling in both the performance and efficiency dimensions. The model outperforms all 14 baseline models in prediction accuracy for both fish length and weight, with the minor exception of MAE and MAPE in length prediction, where it is marginally surpassed by the unpruned ECR-MobileNet. Simultaneously, it secures the top rank across all four key efficiency metrics: parameter count, computational load, inference latency, and memory footprint.

This achievement holds significant practical value, as it fully validates the effectiveness of the “advanced model architecture (ECA+AMCR) + general structured pruning” technical pipeline. Not only did we design a base model that combines high accuracy with inherent efficiency, but we also, through a subsequent automated pruning process, pushed its efficiency to the extreme while maintaining or even enhancing its predictive accuracy. This work provides a solid technical foundation and a viable solution for developing real-time, high-precision fish phenotyping systems intended for either resource-constrained embedded devices or high-throughput online servers.

3.4. Ablation Studies

To rigorously evaluate the independent contributions of each innovative technique proposed in this study, as well as their synergistic effects, we designed and conducted a series of detailed ablation studies. We established a baseline model comprising a MobileNetV3-Small backbone, a simple linear regression head, and training with the standard MSE loss function. We then progressively introduced or replaced components with our proposed enhancements and evaluated the performance of all model combinations on both the length and weight prediction tasks.

3.4.1. Ablation Analysis on the Length Prediction Task

We conducted detailed ablation studies to dissect the contribution of each component within our ECR-MobileNet framework. The results for the length prediction task are presented in Table 7.

Table 7.

Ablation study results for the length prediction task.

The analysis demonstrates that each proposed module—the L1 loss, the efficient channel attention (ECA) module, and the adaptive multi-scale contrastive regression (AMCR) loss—independently improved model performance over the baseline. For instance, replacing the standard MSE with L1 loss enhanced robustness to outliers (lower MAE/MAPE), while integrating the ECA module improved feature discriminability, leading to a reduction in all error metrics.

More importantly, the studies revealed a strong synergistic effect among the components. The combination of L1 loss and the ECA module (ECA+L1) yielded significant improvements beyond their individual contributions, achieving an RMSE of 0.4951. The full model, integrating all three components, achieved the best results across all metrics (RMSE: 0.4565, R2: 0.9757). This confirms that our design is not a simple aggregation of techniques, but a cohesive system where the robustness of L1 loss, the feature enhancement of ECA, and the imbalance-resistance of AMCR work in concert to achieve state-of-the-art performance. A similar synergistic pattern was observed in the weight prediction task (Table 8), further validating the stability and effectiveness of our proposed framework.

Table 8.

Ablation study results for the weight prediction task.

3.4.2. Ablation Analysis on the Weight Prediction Task

On the weight prediction task, we observed a pattern highly consistent with that of the length task, further validating the generalizability and stability of our proposed methods. As shown in Table 8, the independent contributions of L1 loss and the ECA module were once again confirmed. Introducing either L1 loss or the ECA module alone yielded significant performance improvements; notably, the ECA module substantially reduced MAPE from 4.61 to 4.22%. The ECA+L1 combination performed exceptionally well, achieving an RMSE of 0.0233 and a MAPE of 3.55%, making it the best-performing of all pairwise combinations and proving the immense power of integrating structural optimization with a robust loss function.

Ultimately, the full model integrating all three enhancements once again achieved the best result on every metric, lowering the MAE to 0.0109 and the MAPE to 3.50%. This result reconfirms that combining the three optimization strategies is the most effective configuration tested for high-precision weight prediction.

Based on the systematic ablation studies described above, we draw the following conclusions: Each enhancement proposed in this study—replacing MSE with the more robust L1 loss, integrating the efficient ECA module into the regression head, and introducing the AMCR loss designed for imbalanced data—makes an independent, positive contribution to the final model performance. More importantly, these techniques are not a simple aggregation but, rather, mutually reinforcing, creating a synergistic effect that ultimately enabled our complete model to achieve state-of-the-art (SOTA) performance on both tasks.

3.5. Visualization Analysis

To provide a more intuitive demonstration of the training stability of our proposed ECR-MobileNet model and the superior performance of its pruned version, ECR-MobileNet-P, we conducted a series of visualization analyses. These analyses include the evolution of the loss function during the training process, the fit between predicted and true values on the test set for the final models, and a comparison of error distributions against the baseline model.

3.5.1. Analysis of the Training Process

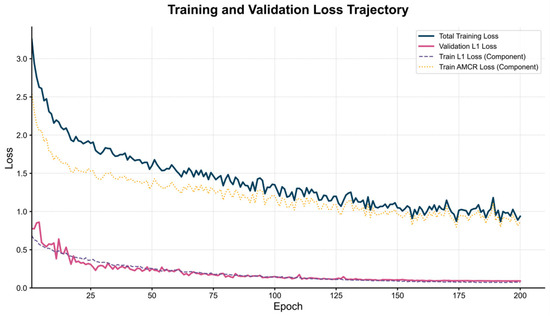

Figure 7 presents the loss function curves of the ECR-MobileNet model over 200 training epochs, comprising four key trajectories: Total Training Loss, Validation L1 Loss, Train L1 Loss Component, and Train AMCR Loss Component.

Figure 7.

Training and validation loss trajectories for the ECR-MobileNet model. The figure illustrates the loss dynamics over 200 training epochs. The total training loss (solid dark blue line) smoothly decreases, indicating stable model convergence. The validation L1 loss (solid pink line) and the training L1 loss component (dashed purple line) maintain a synchronized descent throughout the process, showing no signs of overfitting and thus demonstrating the model’s strong generalization ability.

Several key observations can be drawn from the figure:

Stable model convergence: The total training loss curve (solid dark blue line) exhibits a smooth, monotonically decreasing trend. The rapid decay in the initial phase indicates that the model efficiently captures the fundamental patterns in the data, while the gradual convergence to a stable range in the later stages validates the effectiveness and stability of the training process.

Assured generalization ability: The validation L1 loss (solid pink line) and the training L1 loss component (dashed purple line) consistently maintain a highly synchronized downward trend, with no signs of the validation loss increasing at any point. This confirms the efficacy of our data augmentation strategies and the AMCR regularization, which significantly suppresses the risk of overfitting and ensures the model’s generalization performance.

Synergistic regularization of AMCR: The AMCR loss component (dotted orange line) decreases steadily, with its optimization trajectory highly coordinated with that of the main loss function. This indicates that the contrastive regularization term continuously guides the structuring of the feature space. Through joint optimization with the regression task, it drives the model to converge towards a more discriminative solution space.

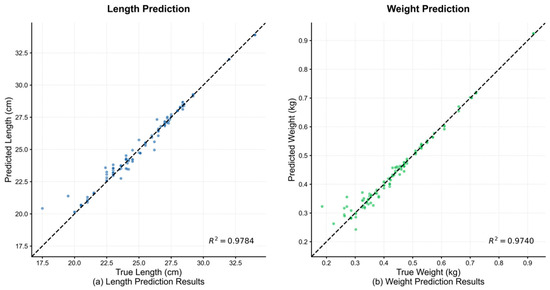

3.5.2. Visualization of Prediction Performance

To intuitively assess the prediction accuracy of the pruned ECR-MobileNet-P model, Figure 8 displays scatter plots of the predicted versus true values for both body length and weight on the test set. In the plots, the horizontal and vertical axes represent the true and predicted values, respectively, while the dashed black line indicates the ideal fit reference, y = x.

Figure 8.

Performance visualization of the ECR-MobileNet-P model on the length and weight prediction tasks. The scatter plots clearly show that, for both tasks, the predicted values exhibit a high degree of linear agreement with the true values, as the data points are tightly clustered around the ideal reference line, y = x.

Figure 8a illustrates the results for length prediction. The data points are tightly clustered along the y = x reference line, exhibiting a strong linear trend. The coefficient of determination reached 0.9784, visually confirming the high degree of agreement between the predicted and true values. Figure 8b shows the results for weight prediction, where the data points also form a compact linear distribution, with an R2 value of 0.9740. This further substantiates the model’s high-precision fitting capability on the weight prediction task.

Collectively, both plots corroborate the quantitative conclusions from Section 3.2: ECR-MobileNet-P achieves high correlation with the ground truth and demonstrates exceptional regression accuracy on both the length and weight prediction tasks.
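The agreement statistics quoted for Figure 8 follow standard definitions. As a minimal sketch of how they can be computed from paired true and predicted values, the function below uses only the Python standard library; the function name and the sample values are hypothetical and are not taken from the test set.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return RMSE, MAE, MAPE (in percent) and R2 for paired values."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    # MAPE assumes no true value is zero, which holds for lengths and weights.
    mape = 100.0 * sum(abs(e) / abs(t) for e, t in zip(errors, y_true)) / n
    # R2 compares the residual sum of squares with the variance around the mean.
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - ss_res / ss_tot
    return {"rmse": rmse, "mae": mae, "mape": mape, "r2": r2}


# Hypothetical lengths in cm, for illustration only.
true_cm = [20.0, 22.0, 24.0, 26.0]
pred_cm = [20.5, 21.5, 24.5, 25.5]
metrics = regression_metrics(true_cm, pred_cm)
print(metrics)
```

Points lying exactly on the y = x line give an R2 of 1; scatter around the line lowers it in proportion to the residual sum of squares.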

3.5.3. Comparative Analysis of Error Distributions

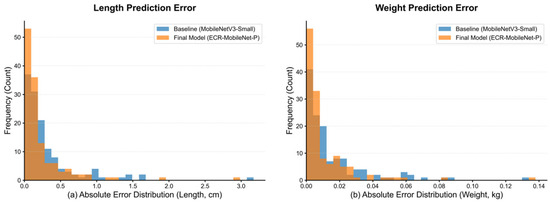

To quantitatively assess the performance advantage of the proposed method over the baseline model, Figure 9 presents histograms of the absolute prediction errors on the test set.

Figure 9.

Histograms of prediction error distributions for the final and baseline models. The figure compares the error distributions of the final model, ECR-MobileNet-P (orange), and the baseline model (blue) for length and weight prediction.

Figure 9a,b display the absolute error distributions for the length and weight tasks, respectively. In both prediction tasks, the frequency of ECR-MobileNet-P (orange) in the low-error intervals (length error < 0.2 cm, weight error < 0.01 kg) is significantly higher than that of the baseline model (blue). This indicates that its predictions exhibit a superior concentration of accuracy. Conversely, the baseline model shows a distinct heavy-tail phenomenon in the high-error intervals (length error > 1.0 cm, weight error > 0.04 kg), whereas the tail of the ECR-MobileNet-P distribution rapidly converges to near-zero frequency, effectively suppressing the occurrence of extreme prediction errors.

This difference in distribution shape shows that the proposed ECR-MobileNet-P not only lowers the typical error magnitude (indicated by the leftward shift of the distribution) but also narrows the spread of the errors (evidenced by the sharper peak and the shorter tail). This enables the model to exhibit stronger robustness on complex samples.
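The histogram comparison can also be condensed into two numbers per model: the share of samples in the low-error interval and the share in the high-error tail. The sketch below assumes the length thresholds quoted above (0.2 cm and 1.0 cm); the function name and all sample values are hypothetical.

```python
def error_profile(y_true, y_pred, low, high):
    """Share of samples with absolute error below `low` and above `high`."""
    abs_err = [abs(p - t) for t, p in zip(y_true, y_pred)]
    n = len(abs_err)
    return {
        "low_share": sum(e < low for e in abs_err) / n,
        "tail_share": sum(e > high for e in abs_err) / n,
    }


# Hypothetical lengths in cm and two hypothetical sets of predictions.
true_cm = [20.0, 22.0, 24.0, 26.0, 28.0]
baseline_pred = [20.5, 23.2, 24.1, 24.8, 28.3]
final_pred = [20.1, 22.1, 23.9, 26.3, 28.1]

baseline = error_profile(true_cm, baseline_pred, low=0.2, high=1.0)
final = error_profile(true_cm, final_pred, low=0.2, high=1.0)
print("baseline:", baseline)
print("final:   ", final)
```

A model with the error pattern described for ECR-MobileNet-P would show a higher `low_share` and a `tail_share` close to zero relative to the baseline.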

4. Discussion

By proposing the ECR-MobileNet-P model, this study successfully addresses the problem of non-contact prediction for the body length and weight of largemouth bass, providing an efficient and accurate solution for intelligent aquaculture. In the following sections, we discuss the interpretation of our results, compare them with the existing literature, and elaborate on the implications for aquaculture, the limitations of our study, and future research directions to comprehensively evaluate the contributions and potential impact of our work.

4.1. Interpretation of Results

The experimental results demonstrate that the ECR-MobileNet-P model achieved state-of-the-art performance on both the length and weight prediction tasks. Specifically, the model achieved an RMSE of 0.4296 cm, an MAE of 0.2310 cm, a MAPE of 0.99%, and an R2 of 0.9784 for length prediction. For weight prediction, it achieved an RMSE of 0.0202 kg, an MAE of 0.0108 kg, a MAPE of 3.31%, and an R2 of 0.9740. These metrics surpassed those of all 14 baseline models, which included lightweight deep learning architectures such as EfficientNetV2 and GhostV3 and covered both convolutional neural network and vision transformer paradigms (see Section 3.2 for details). This level of accuracy, which falls well below the typical 5–10% error margin of manual methods, establishes a new state-of-the-art benchmark for non-contact fish biometrics and addresses a key challenge in deploying precise computer vision systems in real-world aquaculture.

The superior performance of ECR-MobileNet-P can be attributed to the following key designs:

- ECA mechanism: By adaptively adjusting channel weights, the ECA module [36] enhanced the model’s ability to focus on critical features, which is crucial for distinguishing salient morphological landmarks from a cluttered and dynamic underwater background.

- AMCR loss function: Through its contrastive learning strategy, AMCR dynamically constructed positive and negative pair relationships among samples, effectively mitigating the data imbalance problem and ensuring fair predictions across different fish sizes.

- Structured pruning: By employing the dependency-graph-based DepGraph pruning technique [37], the parameter count of ECR-MobileNet-P was reduced from 0.93 M to 0.52 M and the computational load from 0.12 GFLOPs to 0.07 GFLOPs, reductions of 44.1% and 41.7%, respectively. This method is particularly suitable for compact architectures like MobileNetV3, as it correctly identifies and preserves complex inter-layer dependencies, preventing structural damage that simpler pruning methods might cause. After fine-tuning, the model’s performance not only recovered but surpassed that of the original model, validating the potential of pruning as an optimization tool.
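The channel reweighting described in the first item above can be sketched in a few lines. This is a dependency-free illustration of the general ECA idea (global average pooling, a small 1D convolution across neighboring channels, a sigmoid gate), not the implementation used in this study: the function name `eca`, the nested-list tensor layout, and the fixed kernel are assumptions, whereas in a trained network the kernel weights are learned.

```python
import math


def eca(feature_map, kernel):
    """Apply ECA-style channel attention.

    feature_map: list of C channels, each a list of H rows of W floats.
    kernel: odd-length list of 1D convolution weights shared by all channels.
    Returns the rescaled feature map and the per-channel gates.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    pooled = [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
        for ch in feature_map
    ]
    # Local cross-channel interaction: zero-padded 1D convolution.
    pad = len(kernel) // 2
    padded = [0.0] * pad + pooled + [0.0] * pad
    logits = [
        sum(w * padded[c + j] for j, w in enumerate(kernel))
        for c in range(len(pooled))
    ]
    # Sigmoid gate in (0, 1), then rescale every value of each channel.
    gates = [1.0 / (1.0 + math.exp(-z)) for z in logits]
    scaled = [
        [[g * v for v in row] for row in ch]
        for g, ch in zip(gates, feature_map)
    ]
    return scaled, gates


# Three hypothetical 1x2 channels; an identity kernel keeps the example easy to follow.
features = [[[1.0, 1.0]], [[2.0, 2.0]], [[0.0, 0.0]]]
scaled, gates = eca(features, kernel=[0.0, 1.0, 0.0])
print([round(g, 3) for g in gates])
```

Because the only parameters are the handful of kernel weights, the attention step adds almost nothing to the parameter count, which is why it suits a lightweight backbone.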

The ablation studies further quantified the contribution of each component. The combination of the ECA module and the AMCR loss function significantly enhanced model performance, while the pruning technique acted as a form of regularization by reducing redundant parameters, further refining the feature representations (see Section 3.4 for details). The model’s CPU inference latency of just 10.19 ms and its low memory footprint of 2.00 MB, far below that of other lightweight models, demonstrate its practical utility in resource-constrained environments.
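As a rough plausibility check on the memory figure, the parameter count can be converted to storage under the assumption that each parameter is a 32-bit float and that the footprint is dominated by the weights. The text does not state how the 2.00 MB was measured, so this is only an order-of-magnitude comparison.

```latex
\[
0.52 \times 10^{6}\ \text{parameters} \times 4\ \tfrac{\text{bytes}}{\text{parameter}}
= 2.08 \times 10^{6}\ \text{bytes} \approx 1.98\ \text{MiB}
\]
```

Under these assumptions the estimate is of the same size as the reported 2.00 MB.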

4.2. Comparison with Existing Literature

The present study represents a significant advancement over the existing body of literature. Previous research on fish biometric prediction has primarily focused on either traditional machine learning methods or computationally intensive deep learning models. For instance, Zhang et al. [28] employed statistical morphological analysis and machine learning to estimate tilapia biomass. While their approach achieved high accuracy, it was constrained by its reliance on manually extracted features, resulting in poor generalization. In contrast, our study leverages deep learning for automatic feature extraction, making it applicable to a broader range of species and environments, and it particularly excels in its robustness under complex underwater conditions.

A review by Saleh et al. [38] on the application of computer vision and deep learning for underwater fish classification highlighted the challenges that complex aquatic environments pose to model performance. Our work addresses this specific gap by utilizing a multi-scene dataset and robust data augmentation strategies, which significantly enhanced the model’s robustness in such challenging environments. Unlike the studies by Jansi Rani et al. [29] and Yan et al. [30], our research places a dual emphasis on both prediction accuracy and computational efficiency, making it suitable for deployment on edge devices in small-scale aquaculture farms. Moreover, while the review by Yan et al. [30] underscored the sustainable impact of AI in aquaculture, it did not delve into the practical deployment challenges of lightweight models. Our work directly confronts this issue. By combining structured pruning with the ECA module, we achieved substantial reductions in parameter count and computational load, thus addressing a critical void in the literature regarding the practical implementation of efficient models.

Our work also extends the findings of recent studies on imbalanced regression. For instance, while Yang et al. [35] laid the theoretical groundwork for deep imbalanced regression, their methods were primarily tested on general-purpose datasets. Our study is one of the first to successfully adapt and extend these principles, specifically through our AMCR loss, to a complex, real-world biological application with continuous, multi-dimensional targets. Similarly, our work builds upon the non-contact measurement systems reviewed by Li et al. [6] and Saleh et al. [38] by explicitly tackling the dual challenges of data imbalance and model lightweighting, which were identified as key bottlenecks for practical deployment.

4.3. Implications for Aquaculture

The successful development of the ECR-MobileNet-P model provides the aquaculture industry with an efficient, accurate, and non-invasive tool for growth monitoring. Traditional manual measurement methods are not only labor-intensive but also induce stress in fish, adversely affecting their health and overall farming efficiency. In contrast, the model proposed in this study enables non-destructive, real-time monitoring through computer vision, which can significantly reduce manual intervention and fish stress while simultaneously improving the accuracy and efficiency of data acquisition.

By providing real-time predictions of fish length and weight, aquaculture farms can more precisely calculate feed requirements, thereby reducing waste and enhancing economic returns [5]. Accurate growth parameter prediction also facilitates more precise biomass estimation, which supports production planning and marketing decisions at the farm level. The non-contact nature of the measurement reduces physical disturbance to the fish, lowering stress levels and promoting sustainable aquaculture practices [38]. Furthermore, owing to the lightweight design of ECR-MobileNet-P, the model can be deployed on edge devices at small-scale farms without relying on high-performance servers. This substantially lowers the barrier to technology adoption and offers a viable intelligent solution for small and medium-sized aquaculture enterprises [39].

4.4. Limitations

Despite the significant achievements of this study, several limitations should be acknowledged:

- The current dataset was primarily collected from specific aquaculture environments in Hunan Province, which may not fully represent the diverse farming conditions (e.g., water quality, illumination) found in different regions globally. Therefore, the model’s generalization ability in other environments requires further validation.

- Although this study enhanced the model’s robustness through a multi-scene dataset, extreme conditions—such as severely turbid water or rapidly swimming fish—may still affect prediction accuracy. This necessitates further testing under such challenging scenarios.