Enhanced YOLOv8 for Robust Pig Detection and Counting in Complex Agricultural Environments

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset Sources and Analysis

2.2. Data Preprocessing and Dataset Construction

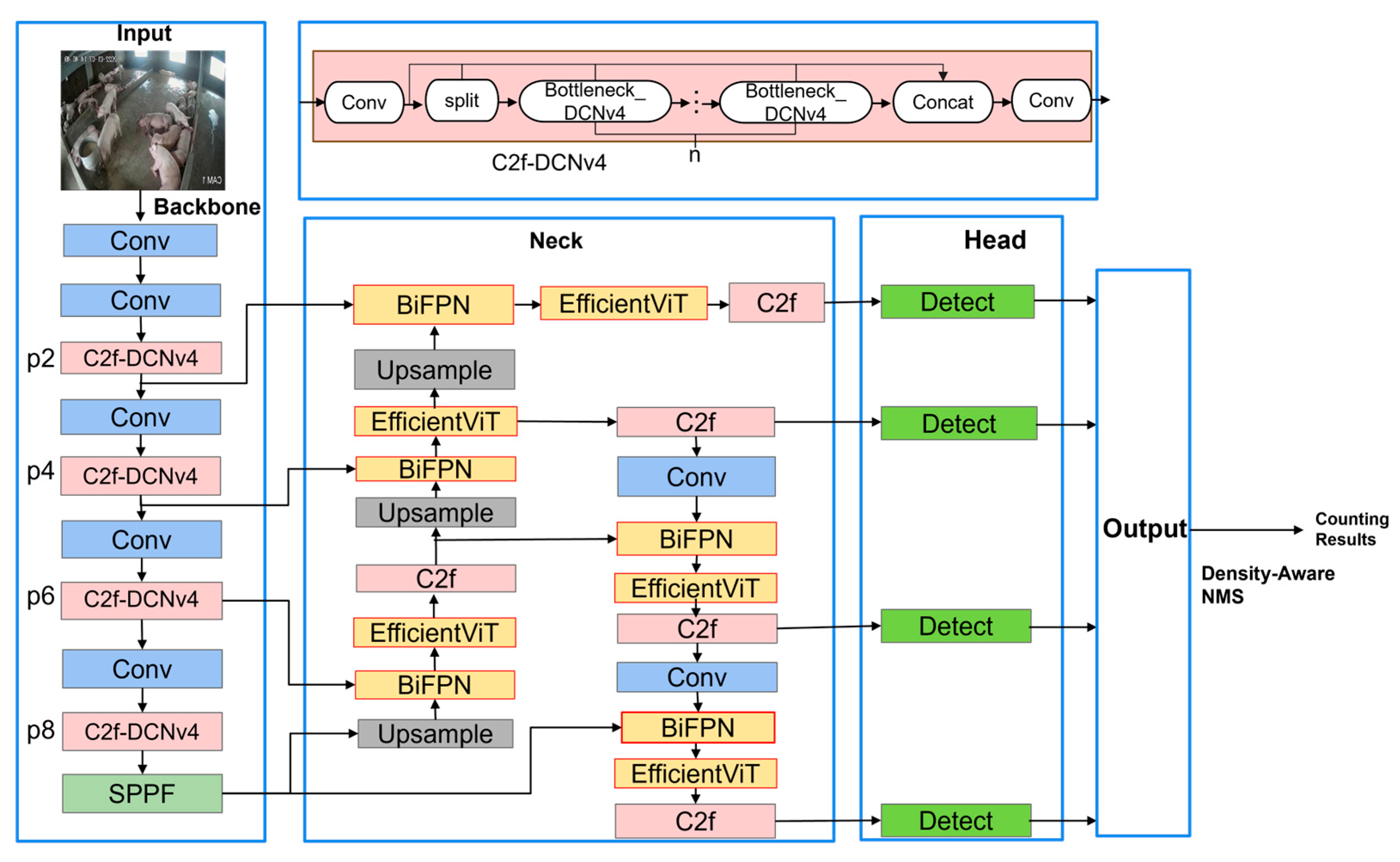

2.3. EAPC-YOLO Architecture

2.3.1. Overall Architecture

2.3.2. DCNv4: Deformable Convolution v4

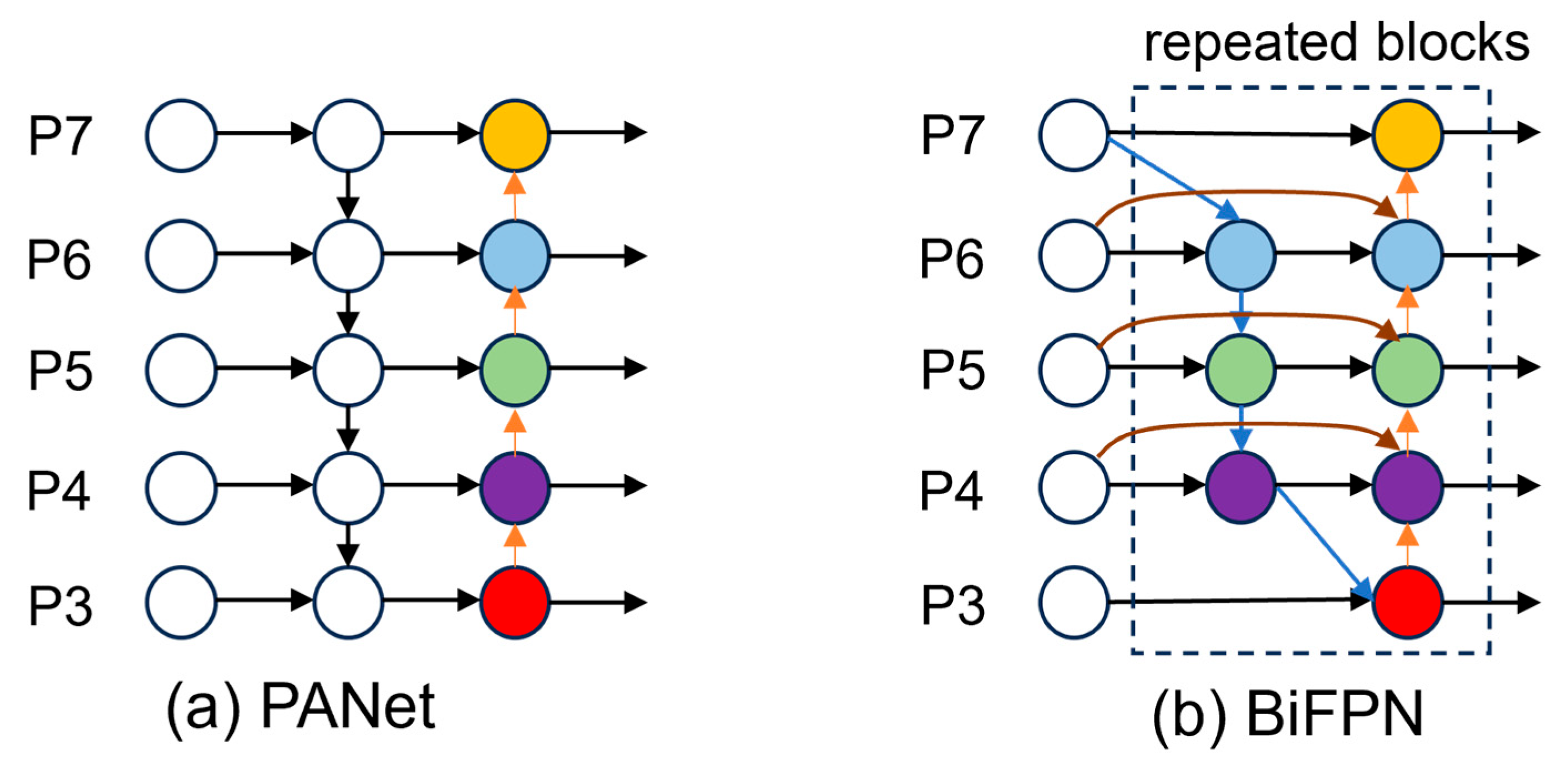

2.3.3. BiFPN Feature Fusion Network with Enhanced Detection Head

2.3.4. EfficientViT Linear Attention Mechanism

2.3.5. PIoU v2 Loss Function

2.4. Density-Aware Post-Processing Module
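
The reference list cites Soft-NMS [34] alongside this post-processing stage. For orientation only, the following is a minimal sketch of generic Gaussian Soft-NMS. It is not the density-aware module itself, whose details are not reproduced here. The function names, the `sigma` value, and the score threshold are illustrative assumptions.

```python
import math

def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, x2 - x1) * max(0, y2 - y1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def soft_nms(boxes, scores, sigma=0.5, score_thresh=0.001):
    """Generic Gaussian Soft-NMS: decay overlapping scores instead of deleting boxes.

    sigma and score_thresh are hypothetical defaults, not values from the paper.
    Returns (box, score) pairs in the order they were selected.
    """
    candidates = list(zip(boxes, scores))
    kept = []
    while candidates:
        # Select the highest-scoring remaining box.
        best = max(range(len(candidates)), key=lambda i: candidates[i][1])
        best_box, best_score = candidates.pop(best)
        kept.append((best_box, best_score))
        # Decay the scores of the remaining boxes according to their overlap.
        rescored = []
        for box, score in candidates:
            score *= math.exp(-(iou(best_box, box) ** 2) / sigma)
            if score >= score_thresh:
                rescored.append((box, score))
        candidates = rescored
    return kept

# Hypothetical detections: two overlapping boxes and one isolated box.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
for box, score in soft_nms(boxes, scores):
    print(box, round(score, 3))
```

Because overlapping boxes are down-weighted rather than removed, a final confidence threshold decides which detections contribute to the count. In crowded pens this choice can affect counting accuracy more than mAP.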

2.5. Performance Evaluation
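
The results tables report MAE and RMSE for counting. Assuming the standard definitions over per-image counts, these two metrics can be sketched as below. The function name and the example counts are hypothetical and are not taken from the dataset.

```python
import math

def count_errors(true_counts, pred_counts):
    """Return (MAE, RMSE) between ground-truth and predicted per-image counts."""
    if len(true_counts) != len(pred_counts) or not true_counts:
        raise ValueError("count lists must be non-empty and of equal length")
    diffs = [p - t for t, p in zip(true_counts, pred_counts)]
    n = len(diffs)
    mae = sum(abs(d) for d in diffs) / n
    rmse = math.sqrt(sum(d * d for d in diffs) / n)
    return mae, rmse

# Hypothetical ground-truth and predicted pig counts for four images.
true_counts = [12, 18, 25, 30]
pred_counts = [12, 17, 27, 29]
mae, rmse = count_errors(true_counts, pred_counts)
print(f"MAE = {mae:.2f}, RMSE = {rmse:.2f}")
```

RMSE is never smaller than MAE, and the gap widens when a few images have large counting errors, which is why the two values are usually reported together.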

3. Results and Analysis

3.1. Experimental Setup

3.2. Overall Performance Comparison

3.3. Ablation Study

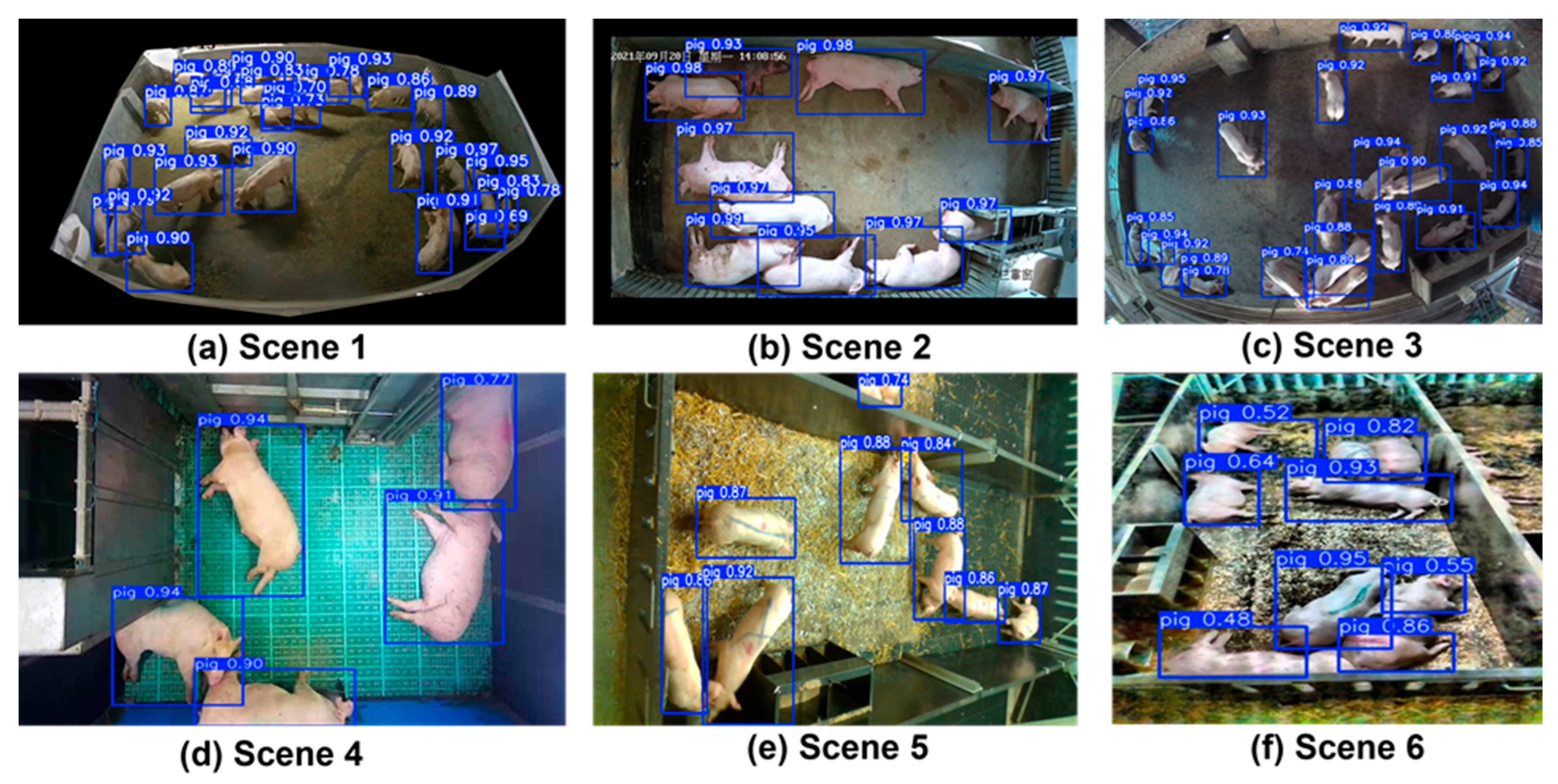

3.4. Environmental Robustness Analysis

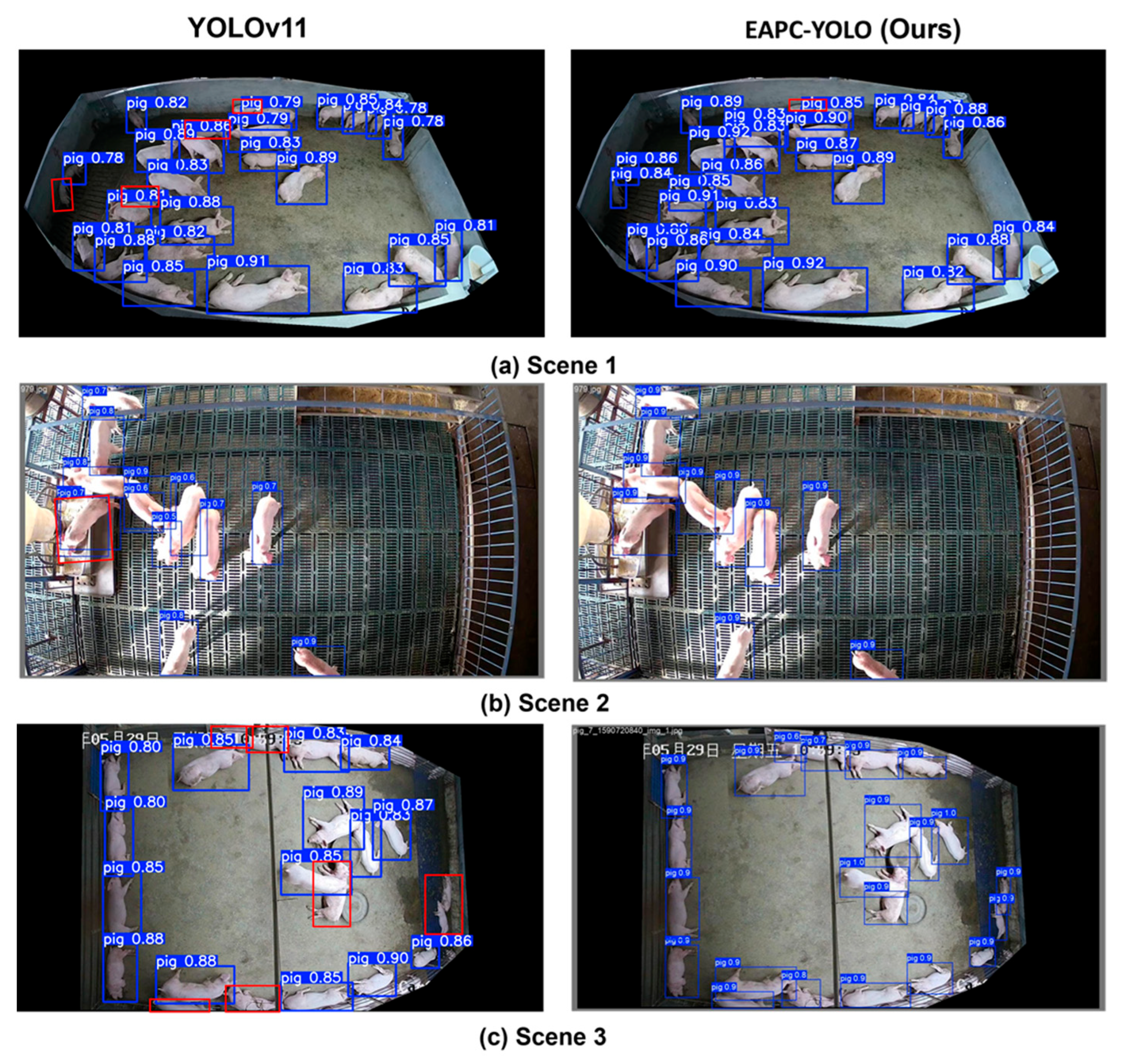

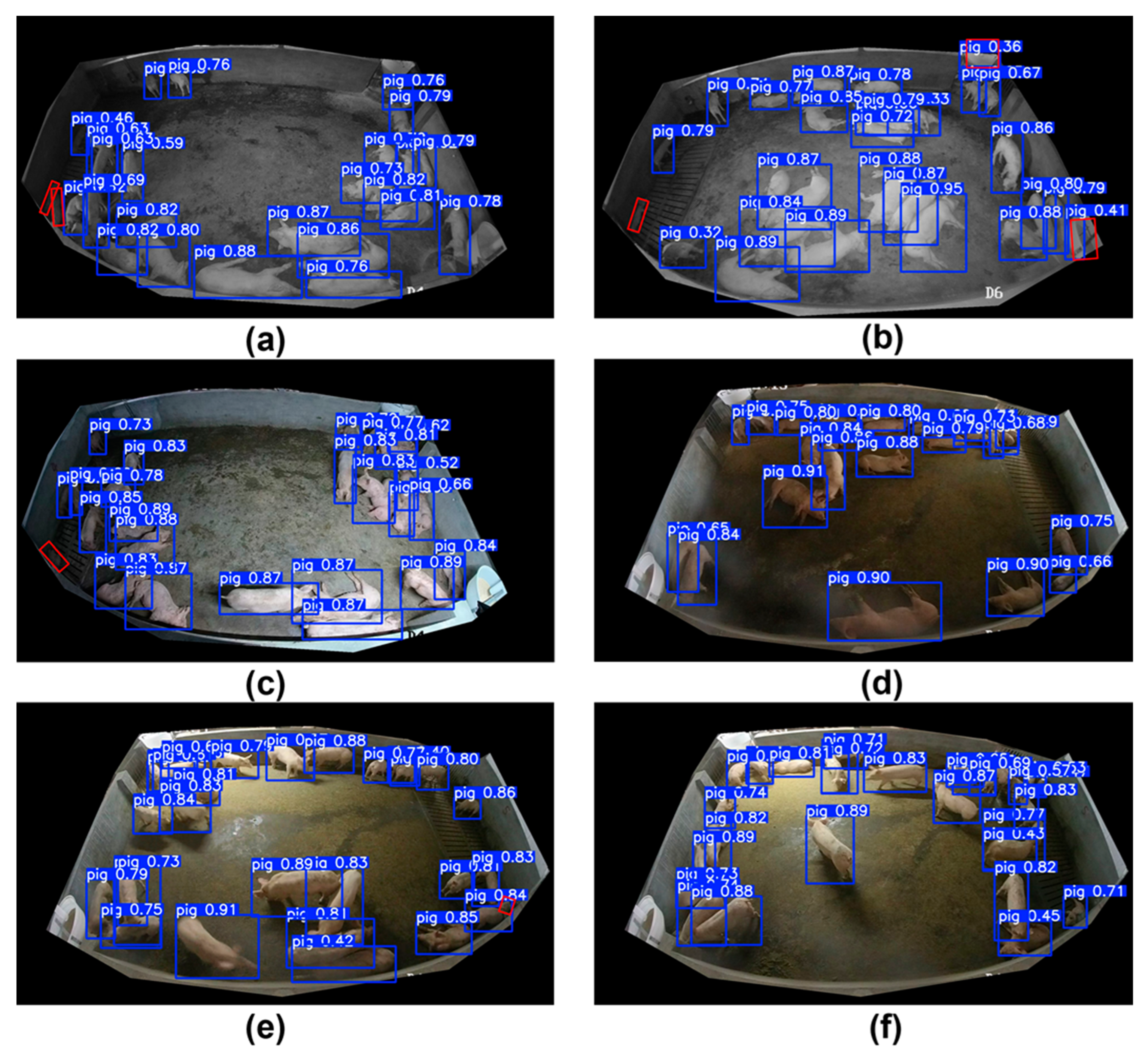

3.5. High-Density Challenging Scenarios Analysis

3.6. Computational Efficiency Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Heilig, G.K. World population trends: How do they affect global food security? Food Secur. Differ. Scales Demogr. Biophys. Socio-Econ. Consid. 2025, 2025, 25.
2. Wang, S.; Jiang, H.; Qiao, Y.; Jiang, S.; Lin, H.; Sun, Q. The research progress of vision-based artificial intelligence in smart pig farming. Sensors 2022, 22, 6541.
3. OECD/FAO. OECD-FAO Agricultural Outlook 2021–2030; OECD Publishing: Paris, France, 2021.
4. Nasirahmadi, A.; Sturm, B.; Edwards, S.; Jeppsson, K.H.; Olsson, A.C.; Müller, S.; Hensel, O. Deep learning and machine vision approaches for posture detection of individual pigs. Sensors 2019, 19, 3738.
5. Seo, J.; Ahn, H.; Kim, D.; Lee, S.; Chung, Y.; Park, D. EmbeddedPigDet—Fast and accurate pig detection for embedded board implementations. Appl. Sci. 2020, 10, 2878.
6. Shao, X.; Liu, C.; Zhou, Z.; Xue, W.; Zhang, G.; Liu, J.; Yan, H. Research on Dynamic Pig Counting Method Based on Improved YOLOv7 Combined with DeepSORT. Animals 2024, 14, 1227.
7. Psota, E.T.; Mittek, M.; Pérez, L.C.; Schmidt, T.; Mote, B. Multi-pig part detection and association with a fully-convolutional network. Sensors 2019, 19, 852.
8. Huang, Y.; Xiao, D.; Liu, J.; Tan, Z.; Liu, K.; Chen, M. An improved pig counting algorithm based on YOLOv5 and DeepSORT model. Sensors 2023, 23, 6309.
9. Zhang, C.; Li, S.; Li, Z.; Wu, N.; Miao, Q.; Li, S. Crowd-aware Black Pig Detection for Low Illumination. In Proceedings of the 2022 6th International Conference on Video and Image Processing, Shanghai, China, 23–26 December 2022; pp. 42–48.
10. Campbell, M.; Miller, P.; Díaz-Chito, K.; Hong, X.; McLaughlin, N.; Parvinzamir, F.; Del Rincón, J.M.; O’Connell, N. A computer vision approach to monitor activity in commercial broiler chickens using trajectory-based clustering analysis. Comput. Electron. Agric. 2024, 217, 108591.
11. Mora, M.; Piles, M.; David, I.; Rosa, G.J. Integrating computer vision algorithms and RFID system for identification and tracking of group-housed animals: An example with pigs. J. Anim. Sci. 2024, 102, skae174.
12. Li, G.; Shi, G.; Jiao, J. YOLOv5-KCB: A new method for individual pig detection using optimized K-means, CA attention mechanism and a bi-directional feature pyramid network. Sensors 2023, 23, 5242.
13. Matthews, S.G.; Miller, A.L.; Clapp, J.; Plötz, T.; Kyriazakis, I. Early detection of health and welfare compromises through automated detection of behavioural changes in pigs. Vet. J. 2016, 217, 43–51.
14. Han, S.; Zhang, J.; Zhu, M.; Wu, J.; Kong, F. Review of automatic detection of pig behaviours by using image analysis. In IOP Conference Series: Earth and Environmental Science; IOP Publishing: Bristol, UK, 2017; Volume 69, p. 012096.
15. Tscharke, M.; Banhazi, T.M. A brief review of the application of machine vision in livestock behaviour analysis. J. Agric. Inform. 2016, 7, 23–42.
16. Oliveira, D.A.B.; Pereira, L.G.R.; Bresolin, T.; Ferreira, R.E.P.; Dorea, J.R.R. A review of deep learning algorithms for computer vision systems in livestock. Livest. Sci. 2021, 253, 104700.
17. Li, G.; Huang, Y.; Chen, Z.; Chesser Jr, G.D.; Purswell, J.L.; Linhoss, J.; Zhao, Y. Practices and applications of convolutional neural network-based computer vision systems in animal farming: A review. Sensors 2021, 21, 1492.
18. Bhujel, A.; Wang, Y.; Lu, Y.; Morris, D.; Dangol, M. A systematic survey of public computer vision datasets for precision livestock farming. Comput. Electron. Agric. 2025, 229, 109718.
19. Tian, M.; Guo, H.; Chen, H.; Wang, Q.; Long, C.; Ma, Y. Automated pig counting using deep learning. Comput. Electron. Agric. 2019, 163, 104840.
20. Huang, E.; He, Z.; Mao, A.; Ceballos, M.C.; Parsons, T.D.; Liu, K. A semi-supervised generative adversarial network for amodal instance segmentation of piglets in farrowing pens. Comput. Electron. Agric. 2023, 209, 107839.
21. Jia, J.; Zhang, S.; Ruan, Q. PCR: A Large-Scale Benchmark for Pig Counting in Real World. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Xiamen, China, 13–15 October 2023; Springer Nature: Singapore, 2023; pp. 227–240.
22. Tu, S.; Cai, Y.; Liang, Y.; Lei, H.; Huang, Y.; Liu, H.; Xiao, D. Tracking and monitoring of individual pig behavior based on YOLOv5-Byte. Comput. Electron. Agric. 2024, 220, 108880.
23. Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773.
24. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.
25. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2126.
26. KeDaXunFei. Pig Inventory Challenge. 2021. Available online: http://challenge.xfyun.cn/topic/info?type=pig-check (accessed on 20 June 2025).
27. Ke, H.; Li, H.; Wang, B.; Tang, Q.; Lee, Y.H.; Yang, C.F. Integrations of LabelImg, You Only Look Once (YOLO), and Open Source Computer Vision Library (OpenCV) for Chicken Open Mouth Detection. Sens. Mater. 2024, 36, 4903–4913.
28. Sohan, M.; Sai Ram, T.; Rami Reddy, C.V. A review on YOLOv8 and its advancements. In International Conference on Data Intelligence and Cognitive Informatics; Springer: Singapore, 2024; pp. 529–545.
29. Xiong, Y.; Li, Z.; Chen, Y.; Wang, F.; Zhu, X.; Luo, J.; Wang, W.; Lu, T.; Li, H.; Qiao, Y.; et al. Efficient deformable convnets: Rethinking dynamic and sparse operator for vision applications. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 5652–5661.
30. Chen, J.; Mai, H.; Luo, L.; Chen, X.; Wu, K. Effective feature fusion network in BIFPN for small object detection. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 699–703.
31. Liu, X.; Peng, H.; Zheng, N.; Yang, Y.; Hu, H.; Yuan, Y. EfficientViT: Memory efficient vision transformer with cascaded group attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 14420–14430.
32. Liu, C.; Wang, K.; Li, Q.; Zhao, F.; Zhao, K.; Ma, H. Powerful-IoU: More straightforward and faster bounding box regression loss with a nonmonotonic focusing mechanism. Neural Netw. 2024, 170, 276–284.
33. Shi, Y.; Wang, H.; Wang, F.; Wang, Y.; Liu, J.; Zhao, L.; Wang, H.; Zhang, F.; Cheng, Q.; Qing, S. Efficient and accurate tobacco leaf maturity detection: An improved YOLOv10 model with DCNv3 and efficient local attention integration. Front. Plant Sci. 2025, 15, 1474207.
34. Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. Soft-NMS—Improving object detection with one line of code. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5561–5569.
35. Wang, D.; He, D. Channel pruned YOLO V5s-based deep learning approach for rapid and accurate apple fruitlet detection before fruit thinning. Biosyst. Eng. 2021, 210, 271–281.
36. Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.
37. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.
38. Wang, Y.; Wang, C.; Zhang, H.; Dong, Y.; Wei, S. Automatic ship detection based on RetinaNet using multi-resolution Gaofen-3 imagery. Remote Sens. 2019, 11, 531.
39. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.
40. Zhang, H.; Li, F.; Liu, S.; Zhang, L.; Su, H.; Zhu, J.; Ni, M.L.; Shum, H.Y. DINO: DETR with improved denoising anchor boxes for end-to-end object detection. arXiv 2022, arXiv:2203.03605.

| Density Category | Pig Count Range | Number of Images | Percentage |
|---|---|---|---|
| Sparse | 8–12 pigs | 1600 | 20.0% |
| Low-Medium | 13–18 pigs | 2400 | 30.0% |
| Medium-High | 19–25 pigs | 2800 | 35.0% |
| High-Density | 26–30 pigs | 1200 | 15.0% |
| Total | 8–30 pigs | 8000 | 100% |

| Set | Number of Images | Total Number of Pigs | Maximum in a Single Image | Average Number Per Image | Mean ± SD | IQR |
|---|---|---|---|---|---|---|
| Train | 4800 | 86,400 | 30 | 18 | 18.0 ± 5.2 | [14, 22] |
| Validation | 1600 | 30,400 | 29 | 19 | 19.0 ± 4.8 | [15, 23] |
| Test | 1600 | 33,600 | 30 | 21 | 21.0 ± 5.1 | [17, 25] |
| Total | 8000 | 150,400 | 30 | 18.8 | 18.8 ± 5.1 | [15, 23] |
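
As a quick consistency check on the split statistics above, the per-image averages can be recomputed from the image and pig totals. This is a small sketch; the dictionary layout and the helper name are illustrative choices, and the numbers are copied from the table.

```python
# Split statistics copied from the dataset table: (images, total pigs).
splits = {
    "Train": (4800, 86_400),
    "Validation": (1600, 30_400),
    "Test": (1600, 33_600),
}

def mean_per_image(images: int, pigs: int) -> float:
    """Average number of annotated pigs per image."""
    return pigs / images

per_split = {name: mean_per_image(*stats) for name, stats in splits.items()}
total_images = sum(images for images, _ in splits.values())
total_pigs = sum(pigs for _, pigs in splits.values())
overall = mean_per_image(total_images, total_pigs)

for name, avg in per_split.items():
    share = splits[name][0] / total_images
    print(f"{name}: {avg:.1f} pigs/image, {share:.0%} of images")
print(f"Total: {total_images} images, {total_pigs} pigs, {overall:.1f} pigs/image")
```

The recomputed values agree with the table: the splits sum to 8000 images and 150,400 pigs, and the image counts correspond to a 60/20/20 division.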

| Method | Precision (%) | Recall (%) | F1 Score (%) | mAP@0.5 (%) | Average Accuracy (%) | MAE | RMSE | FPS |
|---|---|---|---|---|---|---|---|---|
| YOLOv5s [35] | 85.2 ± 2.1 | 82.7 ± 1.8 | 83.9 ± 1.6 | 87.3 ± 2.3 | 78.4 ± 3.2 | 2.8 ± 0.4 | 4.2 ± 0.6 | 45.2 |
| YOLOv8n [28] | 88.4 ± 1.7 | 86.2 ± 1.5 | 87.3 ± 1.4 | 90.5 ± 1.9 | 83.7 ± 2.8 | 2.2 ± 0.3 | 3.4 ± 0.5 | 48.6 |
| YOLOv11n [36] | 89.8 ± 1.5 | 88.1 ± 1.3 | 88.9 ± 1.2 | 92.4 ± 1.6 | 86.2 ± 2.4 | 1.8 ± 0.3 | 3.0 ± 0.4 | 46.3 |
| Faster R-CNN [37] | 90.8 ± 1.4 | 88.9 ± 1.6 | 89.8 ± 1.3 | 92.4 ± 1.7 | 86.8 ± 2.1 | 1.8 ± 0.2 | 2.9 ± 0.4 | 12.3 |
| RetinaNet [38] | 87.3 ± 1.9 | 85.6 ± 1.8 | 86.4 ± 1.7 | 89.7 ± 2.1 | 82.1 ± 2.9 | 2.4 ± 0.3 | 3.6 ± 0.5 | 25.8 |
| DETR [39] | 89.1 ± 1.6 | 87.2 ± 1.4 | 88.1 ± 1.3 | 91.3 ± 1.8 | 84.9 ± 2.6 | 2.0 ± 0.3 | 3.2 ± 0.4 | 15.7 |
| DINO [40] | 91.5 ± 1.3 | 89.7 ± 1.2 | 90.6 ± 1.1 | 91.8 ± 1.5 | 88.2 ± 2.0 | 1.6 ± 0.2 | 2.7 ± 0.3 | 18.4 |
| EAPC-YOLO (Ours) | 94.2 ± 1.2 | 92.6 ± 1.1 | 93.4 ± 1.0 | 95.7 ± 1.2 | 96.8 ± 1.5 | 0.8 ± 0.1 | 1.4 ± 0.2 | 41.5 |
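
The F1 column can be related to the precision and recall columns through the usual harmonic-mean definition. The sketch below recomputes F1 from the mean precision and recall of three rows. The helper name is an illustrative choice, and the check assumes the table uses the standard F1 formula.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# (precision %, recall %, reported F1 %) mean values copied from the table.
reported = {
    "YOLOv5s": (85.2, 82.7, 83.9),
    "YOLOv8n": (88.4, 86.2, 87.3),
    "EAPC-YOLO": (94.2, 92.6, 93.4),
}

for name, (p, r, f1) in reported.items():
    print(f"{name}: computed F1 = {f1_score(p, r):.1f}, reported = {f1}")
```

F1 computed from mean precision and recall need not equal the mean of per-run F1 values exactly, but for these rows the two agree to one decimal place.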

| Models | DCNv4 | BiFPN | EfficientViT | PIoU v2 | P2 Head | Density NMS | mAP@0.5 (%) | Average Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| Baseline | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | 90.5 ± 1.8 | 83.7 ± 2.5 |
| +DCNv4 | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | 91.8 ± 1.6 | 85.1 ± 2.3 |
| +BiFPN | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | 92.1 ± 1.7 | 84.9 ± 2.4 |
| +EfficientViT | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | 91.3 ± 1.5 | 84.6 ± 2.2 |
| +PIoU v2 | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | 91.6 ± 1.6 | 84.8 ± 2.1 |
| +P2 Head | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | 92.3 ± 1.4 | 85.4 ± 2.0 |
| +Density NMS | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | 90.8 ± 1.7 | 88.2 ± 1.8 |
| +DCNv4 + BiFPN | ✓ | ✓ | ✗ | ✗ | ✗ | ✗ | 93.2 ± 1.3 | 87.3 ± 1.9 |
| +DCNv4 + BiFPN + EfficientViT | ✓ | ✓ | ✓ | ✗ | ✗ | ✗ | 94.1 ± 1.2 | 89.1 ± 1.7 |
| +DCNv4 + BiFPN + EfficientViT + PIoU v2 | ✓ | ✓ | ✓ | ✓ | ✗ | ✗ | 94.6 ± 1.1 | 89.8 ± 1.6 |
| +DCNv4 + BiFPN + EfficientViT + PIoU v2 + P2 Head | ✓ | ✓ | ✓ | ✓ | ✓ | ✗ | 95.1 ± 1.0 | 91.2 ± 1.5 |
| EAPC-YOLO (Full) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 95.7 ± 1.2 | 96.8 ± 1.5 |

| Method | Parameters (M) | FLOPs (G) | GPU Memory (MB) | Model Size (MB) |
|---|---|---|---|---|
| YOLOv5s | 7.2 | 16.5 | 1248 | 14.1 |
| YOLOv8n | 3.2 | 8.7 | 982 | 6.2 |
| YOLOv11n | 2.6 | 6.5 | 876 | 5.1 |
| Faster R-CNN | 41.8 | 207.4 | 3142 | 167.8 |
| RetinaNet | 36.3 | 145.2 | 2567 | 145.6 |
| DETR | 41.3 | 86.4 | 2891 | 166.2 |
| DINO | 47.2 | 124.8 | 3327 | 189.4 |
| EAPC-YOLO | 5.8 | 12.3 | 1456 | 11.3 |
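
To put the overhead of the added modules in perspective, the resource figures can be expressed relative to a reference model. The sketch below uses YOLOv8n as the reference, on the assumption that it is the baseline being enhanced. The field names and the helper function are illustrative, and the numbers are copied from the table.

```python
# (parameters M, FLOPs G, GPU memory MB, model size MB) copied from the table.
costs = {
    "YOLOv8n": (3.2, 8.7, 982, 6.2),
    "EAPC-YOLO": (5.8, 12.3, 1456, 11.3),
    "DINO": (47.2, 124.8, 3327, 189.4),
}
fields = ("params", "flops", "gpu_mem", "size")

def relative_cost(model: str, baseline: str = "YOLOv8n") -> dict:
    """Ratio of each resource figure to the baseline (1.0 means equal cost)."""
    return {f: m / b for f, m, b in zip(fields, costs[model], costs[baseline])}

for name in ("EAPC-YOLO", "DINO"):
    ratios = relative_cost(name)
    summary = ", ".join(f"{f} x{v:.2f}" for f, v in ratios.items())
    print(f"{name} vs YOLOv8n: {summary}")
```

On these figures EAPC-YOLO needs roughly 1.8 times the parameters and 1.4 times the FLOPs of YOLOv8n, while remaining far smaller than the transformer-based DINO.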

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, J.; Ma, W.; Wei, Y.; Wang, T. Enhanced YOLOv8 for Robust Pig Detection and Counting in Complex Agricultural Environments. Animals 2025, 15, 2149. https://doi.org/10.3390/ani15142149
