Machine Learning and Wavelet Transform: A Hybrid Approach to Predicting Ammonia Levels in Poultry Farms

Abstract

Simple Summary

1. Introduction

2. Materials and Methods

2.1. Study Area and Measurements

2.2. Machine Learning (ML) Algorithms

2.3. Linear Regression (LR)

2.4. Wavelet Transform (WT)

2.5. Model Evaluation

3. Results

3.1. Data Preprocessing

3.2. Evaluation of LR Models’ Performance

3.3. Evaluation of ML Algorithms’ Performance

3.4. WT Analysis with ML Algorithms

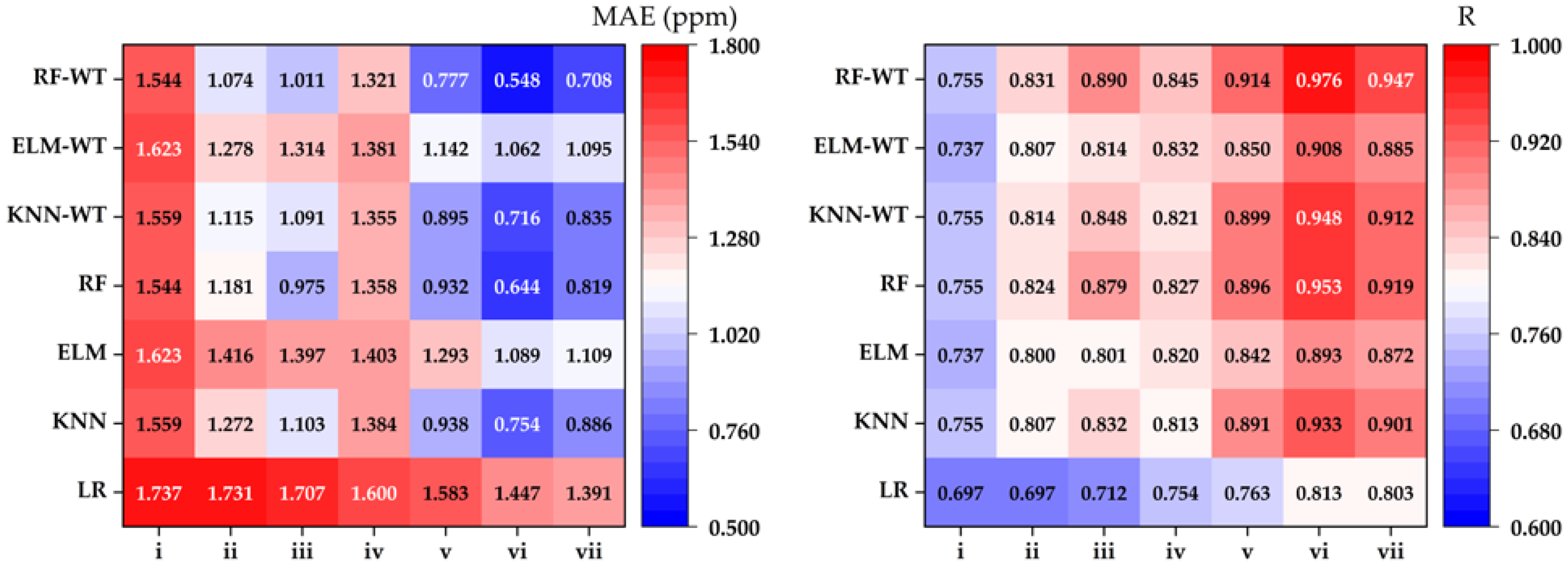

3.5. Performance Comparison of Different Algorithms

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Instrument | Country | Measured Variables | Specification |
|---|---|---|---|
| Smart Sensor, AR8500 | China | NH3 | Resolution: 0.01 ppm; Accuracy: ±0.2% |
| Testo, 605i | Germany | T and RH | Resolution: 0.1 °C and 0.1% RH; Accuracy: ±0.5 °C and ±1% RH |
| PCE, PCE-423 | USA | V | Resolution: 0.01 m s−1; Accuracy: ±5% |
| Elektromag, M40P | Türkiye | LMC | Resolution: 1 °C; Accuracy: ±1 °C |
| PCE, PH20S | USA | LPH | Resolution: 0.01 pH; Accuracy: ±0.1 pH |
| Testo, 875-2i | Germany | LT | Resolution: 0.1 °C; Accuracy: ±2 °C |

| Dataset | Statistic | LMC (%) | LT (°C) | LPH | T (°C) | RH (%) | V (m s−1) | NH3 (ppm) |
|---|---|---|---|---|---|---|---|---|
| Training | Min | 15.02 | 20.00 | 6.02 | 19.10 | 50.35 | 0.11 | 13.00 |
| | Max | 42.88 | 33.40 | 8.34 | 32.44 | 79.81 | 2.10 | 26.70 |
| | Mean | 30.69 | 27.87 | 7.49 | 24.73 | 64.66 | 0.56 | 19.38 |
| | SD | 6.62 | 2.49 | 0.54 | 3.32 | 6.39 | 0.54 | 3.04 |
| | Sk | −0.38 | −0.20 | −1.30 | −0.02 | 0.25 | 2.59 | 0.23 |
| | Kr | −0.93 | −0.09 | 0.65 | −0.99 | −0.67 | 7.01 | −0.89 |
| Testing | Min | 15.75 | 20.05 | 6.05 | 19.10 | 50.76 | 0.12 | 13.10 |
| | Max | 41.59 | 33.00 | 8.26 | 31.78 | 79.37 | 2.05 | 26.30 |
| | Mean | 30.16 | 28.02 | 7.46 | 24.90 | 64.59 | 0.52 | 19.13 |
| | SD | 6.75 | 2.35 | 0.54 | 3.36 | 6.65 | 0.46 | 3.06 |
| | Sk | −0.35 | −0.24 | −1.22 | −0.07 | 0.33 | 2.62 | 0.47 |
| | Kr | −1.02 | −0.17 | 0.44 | −0.92 | −0.71 | 7.95 | −0.70 |

Min, minimum; Max, maximum; SD, standard deviation; Sk, skewness; Kr, kurtosis.
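
The Sk and Kr rows describe the shape of each distribution. The sketch below shows one common way to compute every row of the table for a single variable. It is a minimal illustration rather than the authors' code: it assumes plain moment-based estimators and reports Kr as excess kurtosis (0 for a normal distribution), which the negative Kr values suggest but the source does not state. The helper name `describe` is hypothetical.

```python
import numpy as np

def describe(x):
    """Min, Max, Mean, SD, Sk and Kr for one variable (hypothetical helper).

    Skewness and kurtosis use population central moments; kurtosis is
    returned as *excess* kurtosis (normal distribution -> 0), an assumed
    convention for the Kr row.
    """
    x = np.asarray(x, dtype=float)
    mean = x.mean()
    centred = x - mean
    m2 = np.mean(centred ** 2)   # second central moment
    m3 = np.mean(centred ** 3)   # third central moment
    m4 = np.mean(centred ** 4)   # fourth central moment
    return {
        "Min": x.min(),
        "Max": x.max(),
        "Mean": mean,
        "SD": x.std(ddof=1),      # sample standard deviation
        "Sk": m3 / m2 ** 1.5,
        "Kr": m4 / m2 ** 2 - 3.0,
    }
```

For a symmetric toy series such as `describe([1, 2, 3, 4, 5])`, Sk is 0 and Kr is negative because the values are flatter than a normal distribution.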

| Inputs | Model | Training MAE (ppm) | Training R | Testing MAE (ppm) | Testing R |
|---|---|---|---|---|---|
| LMC | LR1 | 1.715 | 0.729 | 1.737 | 0.697 |
| LMC, LPH | LR2 | 1.702 | 0.730 | 1.731 | 0.697 |
| LMC, LPH, LT | LR3 | 1.667 | 0.749 | 1.707 | 0.712 |
| T | LR4 | 1.659 | 0.753 | 1.600 | 0.754 |
| T, RH | LR5 | 1.626 | 0.763 | 1.583 | 0.763 |
| T, RH, V | LR6 | 1.412 | 0.829 | 1.447 | 0.813 |
| LMC, LPH, T | LR7 | 1.402 | 0.811 | 1.391 | 0.803 |
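
Each LR model is a linear regression of NH3 on the listed inputs, scored with MAE and the correlation coefficient R. The NumPy sketch below fits such a model by ordinary least squares and computes both metrics. The function names are hypothetical, and it assumes R is the Pearson correlation between observed and predicted NH3 (not the coefficient of determination R²).

```python
import numpy as np

def fit_linear(X, y):
    """Ordinary least squares with an intercept; returns (intercept, coefs)."""
    X = np.asarray(X, dtype=float)
    A = np.column_stack([np.ones(len(X)), X])   # prepend a column of ones
    beta, *_ = np.linalg.lstsq(A, np.asarray(y, dtype=float), rcond=None)
    return beta[0], beta[1:]

def predict_linear(intercept, coefs, X):
    """Linear prediction for each row of X."""
    return intercept + np.asarray(X, dtype=float) @ coefs

def mae(y_true, y_pred):
    """Mean absolute error, in the units of the target (ppm for NH3)."""
    return float(np.mean(np.abs(np.asarray(y_true, float) - np.asarray(y_pred, float))))

def pearson_r(y_true, y_pred):
    """Pearson correlation coefficient between observations and predictions."""
    return float(np.corrcoef(y_true, y_pred)[0, 1])
```

An LR6-style model would pass a three-column X holding T, RH and V; because MAE keeps the target's units, the table's MAE values read directly as ppm.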

| Inputs | Model | Hyperparameters (k, ls, p) | Training MAE (ppm) | Training R | Testing MAE (ppm) | Testing R |
|---|---|---|---|---|---|---|
| LMC | KNN1 | 19, 11, 1 | 1.305 | 0.826 | 1.559 | 0.755 |
| LMC, LPH | KNN2 | 5, 3, 1 | 0.997 | 0.892 | 1.272 | 0.807 |
| LMC, LPH, LT | KNN3 | 5, 5, 1 | 0.847 | 0.914 | 1.103 | 0.832 |
| T | KNN4 | 11, 3, 1 | 1.249 | 0.837 | 1.384 | 0.813 |
| T, RH | KNN5 | 3, 5, 1 | 0.598 | 0.955 | 0.938 | 0.891 |
| T, RH, V | KNN6 | 3, 1, 1 | 0.464 | 0.972 | 0.754 | 0.933 |
| LMC, LPH, T | KNN7 | 5, 5, 1 | 0.584 | 0.960 | 0.886 | 0.901 |
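
The KNN hyperparameters (k, ls, p) plausibly correspond to the number of neighbours, the leaf size of the neighbour-search tree, and the power of the Minkowski distance (p = 1 is the Manhattan distance), as in scikit-learn's `KNeighborsRegressor`; this reading is an assumption. The brute-force sketch below shows how k and p shape a prediction. Leaf size only tunes the speed and memory of tree-based search, so it has no role in a brute-force search. `knn_predict` is a hypothetical name, and the uniform (unweighted) average is also assumed.

```python
import numpy as np

def knn_predict(X_train, y_train, X_query, k=3, p=1):
    """Brute-force k-nearest-neighbour regression with a Minkowski distance.

    p=1 gives the Manhattan distance and p=2 the Euclidean distance.
    Each prediction is the unweighted mean target of the k closest samples.
    """
    X_train = np.asarray(X_train, dtype=float)
    y_train = np.asarray(y_train, dtype=float)
    X_query = np.atleast_2d(np.asarray(X_query, dtype=float))
    preds = []
    for q in X_query:
        dist = np.sum(np.abs(X_train - q) ** p, axis=1) ** (1.0 / p)
        nearest = np.argsort(dist, kind="stable")[:k]   # indices of the k closest samples
        preds.append(y_train[nearest].mean())
    return np.array(preds)
```

A KNN6-like setting would call `knn_predict(X_train, y_train, X_test, k=3, p=1)` on (T, RH, V) rows. Because these inputs mix °C, % and m s−1, features are usually standardised before computing distances; the source tables do not say whether that was done.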

| Inputs | Model | Hyperparameters (nt, d, ss, sl) | Training MAE (ppm) | Training R | Testing MAE (ppm) | Testing R |
|---|---|---|---|---|---|---|
| LMC | RF1 | 20, 5, 2, 10 | 1.350 | 0.820 | 1.544 | 0.755 |
| LMC, LPH | RF2 | 25, 10, 3, 5 | 0.891 | 0.915 | 1.181 | 0.824 |
| LMC, LPH, LT | RF3 | 30, 10, 2, 2 | 0.563 | 0.965 | 0.975 | 0.879 |
| T | RF4 | 20, 10, 5, 10 | 1.296 | 0.827 | 1.358 | 0.827 |
| T, RH | RF5 | 30, 10, 2, 2 | 0.485 | 0.975 | 0.932 | 0.896 |
| T, RH, V | RF6 | 25, 10, 2, 3 | 0.343 | 0.986 | 0.644 | 0.953 |
| LMC, LPH, T | RF7 | 40, 10, 2, 2 | 0.445 | 0.979 | 0.819 | 0.919 |
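
Reading (nt, d, ss, sl) as the number of trees, maximum tree depth, minimum samples required to split a node, and minimum samples per leaf (an assumption), the RF6 configuration maps onto scikit-learn's `RandomForestRegressor` as sketched below. `RF6_PARAMS` and `make_rf6` are hypothetical names, and the synthetic data in the `__main__` block only exercises the API; it is not the study's data.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Assumed mapping of the RF6 row (25, 10, 2, 3) onto scikit-learn arguments.
RF6_PARAMS = dict(
    n_estimators=25,      # nt: number of trees
    max_depth=10,         # d: maximum depth of each tree
    min_samples_split=2,  # ss: minimum samples needed to split a node
    min_samples_leaf=3,   # sl: minimum samples in a leaf
)

def make_rf6(random_state=0):
    """Random forest regressor configured like RF6 (under the assumed mapping)."""
    return RandomForestRegressor(random_state=random_state, **RF6_PARAMS)

if __name__ == "__main__":
    # Synthetic stand-in for (T, RH, V) -> NH3; the relationship is made up.
    rng = np.random.default_rng(0)
    X = rng.uniform([19, 50, 0.1], [32, 80, 2.1], size=(200, 3))
    y = 0.6 * X[:, 0] + 0.05 * X[:, 1] - 1.5 * X[:, 2] + rng.normal(0, 0.5, 200)
    model = make_rf6().fit(X, y)
    print(model.predict(X[:3]))
```

Fixing `random_state` makes the bootstrap samples and feature splits reproducible, which matters when comparing configurations as closely matched as RF5 and RF6.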

| Inputs | Model | Hyperparameters (hn, af, rp) | Training MAE (ppm) | Training R | Testing MAE (ppm) | Testing R |
|---|---|---|---|---|---|---|
| LMC | ELM1 | 160, sigmoid, 0.001 | 1.582 | 0.761 | 1.623 | 0.737 |
| LMC, LPH | ELM2 | 180, sigmoid, 0.001 | 1.391 | 0.803 | 1.416 | 0.800 |
| LMC, LPH, LT | ELM3 | 180, sigmoid, 0.001 | 1.319 | 0.829 | 1.397 | 0.801 |
| T | ELM4 | 120, sigmoid, 0.001 | 1.444 | 0.795 | 1.403 | 0.820 |
| T, RH | ELM5 | 180, sigmoid, 0.0001 | 1.247 | 0.842 | 1.293 | 0.842 |
| T, RH, V | ELM6 | 180, sigmoid, 0.001 | 1.033 | 0.897 | 1.089 | 0.893 |
| LMC, LPH, T | ELM7 | 180, sigmoid, 0.0001 | 0.992 | 0.901 | 1.109 | 0.872 |
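
An extreme learning machine draws its input-to-hidden weights at random and solves only the output weights in closed form, which is why it trains quickly. The class below is a minimal NumPy sketch of that idea with a sigmoid hidden layer and ridge-regularised output weights. Interpreting hn, af and rp as hidden neurons, activation function and regularisation parameter is an assumption, and `ELMRegressor` is a hypothetical name, not a library API.

```python
import numpy as np

class ELMRegressor:
    """Minimal single-hidden-layer extreme learning machine for regression."""

    def __init__(self, n_hidden=180, alpha=1e-3, random_state=0):
        self.n_hidden = n_hidden   # hn: number of hidden neurons
        self.alpha = alpha         # rp: ridge (L2) regularisation strength
        self.rng = np.random.default_rng(random_state)

    def _hidden(self, X):
        # af: sigmoid activation of a fixed random affine projection.
        return 1.0 / (1.0 + np.exp(-(X @ self.W + self.b)))

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y, dtype=float)
        # Random hidden-layer parameters are drawn once and never trained.
        self.W = self.rng.normal(size=(X.shape[1], self.n_hidden))
        self.b = self.rng.normal(size=self.n_hidden)
        H = self._hidden(X)
        # Ridge solution for the output weights: (H^T H + alpha I) beta = H^T y
        A = H.T @ H + self.alpha * np.eye(self.n_hidden)
        self.beta = np.linalg.solve(A, H.T @ y)
        return self

    def predict(self, X):
        return self._hidden(np.asarray(X, dtype=float)) @ self.beta
```

Under this reading, ELM6 would be `ELMRegressor(n_hidden=180, alpha=1e-3)`. Since the random projection is not trained, inputs on very different scales saturate some sigmoid units, so scaling the inputs first is a common precaution.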

| Inputs | D1 | D2 | D3 | D4 | D5 | D6 | D7 | D8 | D9 | D10 |
|---|---|---|---|---|---|---|---|---|---|---|
| LMC | 0.08 | 0.08 | 0.14 | 0.17 | 0.29 | 0.33 | 0.20 | 0.33 | 0.20 | 0.32 |
| LPH | 0.05 | 0.04 | 0.11 | 0.13 | 0.16 | 0.25 | 0.17 | 0.26 | 0.16 | 0.32 |
| LT | −0.01 | −0.04 | −0.09 | −0.16 | −0.26 | −0.15 | −0.25 | −0.24 | −0.09 | 0.31 |
| T | −0.03 | −0.06 | −0.18 | −0.24 | −0.30 | −0.35 | −0.26 | −0.32 | −0.22 | −0.32 |
| RH | 0.03 | 0.05 | 0.10 | 0.11 | 0.11 | 0.24 | −0.01 | 0.11 | 0.22 | 0.30 |
| V | 0.01 | −0.02 | −0.16 | −0.20 | −0.29 | −0.18 | −0.12 | −0.12 | 0.23 | 0.30 |
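
The entries above appear to be correlation coefficients between each detail subseries D1 to D10 of an input and the target. The mother wavelet and decomposition variant are not given here, so the sketch below substitutes a causal, shift-invariant Haar-style "à trous" decomposition. It was chosen because its details plus the final approximation add back exactly to the original series and every component keeps the original length, which a per-component correlation requires. It is an illustrative stand-in, not the study's transform; `haar_a_trous` and `component_correlations` are hypothetical names.

```python
import numpy as np

def haar_a_trous(x, levels):
    """Additive multilevel decomposition with x == sum(details) + approx.

    At level j the running approximation is averaged with a copy of itself
    delayed by 2**(j-1) samples (causal, so no future values are used); the
    detail D_j is what that smoothing removed.
    """
    approx = np.asarray(x, dtype=float)
    n = approx.size
    details = []
    for j in range(1, levels + 1):
        lag = 2 ** (j - 1)
        # Delayed copy; indices before the series start are clamped to sample 0.
        delayed = approx[np.maximum(np.arange(n) - lag, 0)]
        smoother = 0.5 * (approx + delayed)
        details.append(approx - smoother)   # D_j
        approx = smoother                   # A_j
    return details, approx

def component_correlations(details, target):
    """Pearson r between each detail component and the target series."""
    return [float(np.corrcoef(d, target)[0, 1]) for d in details]
```

With ten levels, `component_correlations(haar_a_trous(t_series, 10)[0], nh3)` would produce one row in the layout of the table above, where `t_series` and `nh3` are hypothetical arrays of equal length.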

| Inputs | Without WT | The New Series | With WT |
|---|---|---|---|
| LMC | 0.720 | D1 + D2 + D3 + D4 + D5 + D6 + D7 + D8 + D9 + D10 | 0.720 |
| LPH | 0.547 | D3 + D4 + D5 + D6 + D7 + D8 + D9 + D10 | 0.551 |
| LT | −0.398 | D3 + D4 + D5 + D6 + D7 + D8 + D9 | −0.499 |
| T | 0.754 | D2 + D3 + D4 + D5 + D6 + D7 + D8 + D9 + D10 | 0.755 |
| RH | 0.393 | D3 + D4 + D5 + D6 + D8 + D9 + D10 | 0.463 |
| V | −0.224 | D3 + D4 + D5 + D6 + D8 | −0.432 |
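
Each new series is a sum of selected detail components, but the selection rule is not stated here, and it is not a single correlation cutoff: for V, D7 and D8 both have r = −0.12, yet only D8 is included. The sketch below shows one hypothetical rule, greedy forward selection that keeps adding the component that most increases |r| between the running sum and the target. It illustrates the idea of building a new series, not the authors' procedure; `greedy_component_sum` is a hypothetical name.

```python
import numpy as np

def greedy_component_sum(components, target):
    """Hypothetical greedy selection of components to sum into a new series.

    Starting from an empty sum, repeatedly add the unused component that most
    increases |r| between the running sum and the target; stop when no
    component improves it. Returns (sorted indices, summed series, best |r|).
    """
    comps = [np.asarray(c, dtype=float) for c in components]
    target = np.asarray(target, dtype=float)

    def abs_r(series):
        # A constant series has no defined correlation; treat it as 0.
        if np.std(series) == 0:
            return 0.0
        return abs(float(np.corrcoef(series, target)[0, 1]))

    chosen, current, best = [], np.zeros_like(target), 0.0
    while True:
        best_i = None
        for i, c in enumerate(comps):
            if i in chosen:
                continue
            score = abs_r(current + c)
            if score > best + 1e-12:
                best, best_i = score, i
        if best_i is None:   # no remaining component improves |r|
            break
        chosen.append(best_i)
        current = current + comps[best_i]
    return sorted(chosen), current, best
```

A greedy search is cheap (at most ten passes over ten components here) but can miss the best subset; an exhaustive search over all 2^10 subsets is also affordable at this size.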

| Input | Model | Training MAE (ppm) | Training R | Testing MAE (ppm) | Testing R |
|---|---|---|---|---|---|
| T, RH, V | KNN6-WT | 0.391 | 0.984 | 0.716 | 0.948 |
| T, RH, V | RF6-WT | 0.224 | 0.992 | 0.548 | 0.976 |
| T, RH, V | ELM6-WT | 1.018 | 0.901 | 1.062 | 0.908 |
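
Comparing these hybrid results with the corresponding single models (KNN6, RF6 and ELM6 in the earlier tables) is simple arithmetic. The snippet below computes the relative reduction in testing MAE using only values copied from the tables; `relative_reduction` is a hypothetical helper name.

```python
# Testing-set MAE (ppm) from the tables: (single model, WT hybrid).
TEST_MAE = {
    "KNN6": (0.754, 0.716),
    "RF6": (0.644, 0.548),
    "ELM6": (1.089, 1.062),
}

def relative_reduction(before, after):
    """Percentage reduction in an error metric: 100 * (before - after) / before."""
    return 100.0 * (before - after) / before

for name, (single, hybrid) in TEST_MAE.items():
    print(f"{name}: {relative_reduction(single, hybrid):.1f}% lower testing MAE with WT")
# KNN6: 5.0% lower testing MAE with WT
# RF6: 14.9% lower testing MAE with WT
# ELM6: 2.5% lower testing MAE with WT
```

On these figures, RF gains the most from the wavelet-derived inputs, in line with RF6-WT having the lowest testing MAE in the table.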

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Küçüktopçu, E.; Cemek, B.; Simsek, H. Machine Learning and Wavelet Transform: A Hybrid Approach to Predicting Ammonia Levels in Poultry Farms. Animals 2024, 14, 2951. https://doi.org/10.3390/ani14202951
