Automatic Perception of Typical Abnormal Situations in Cage-Reared Ducks Using Computer Vision

Abstract

Simple Summary


1. Introduction

2. Materials and Methods

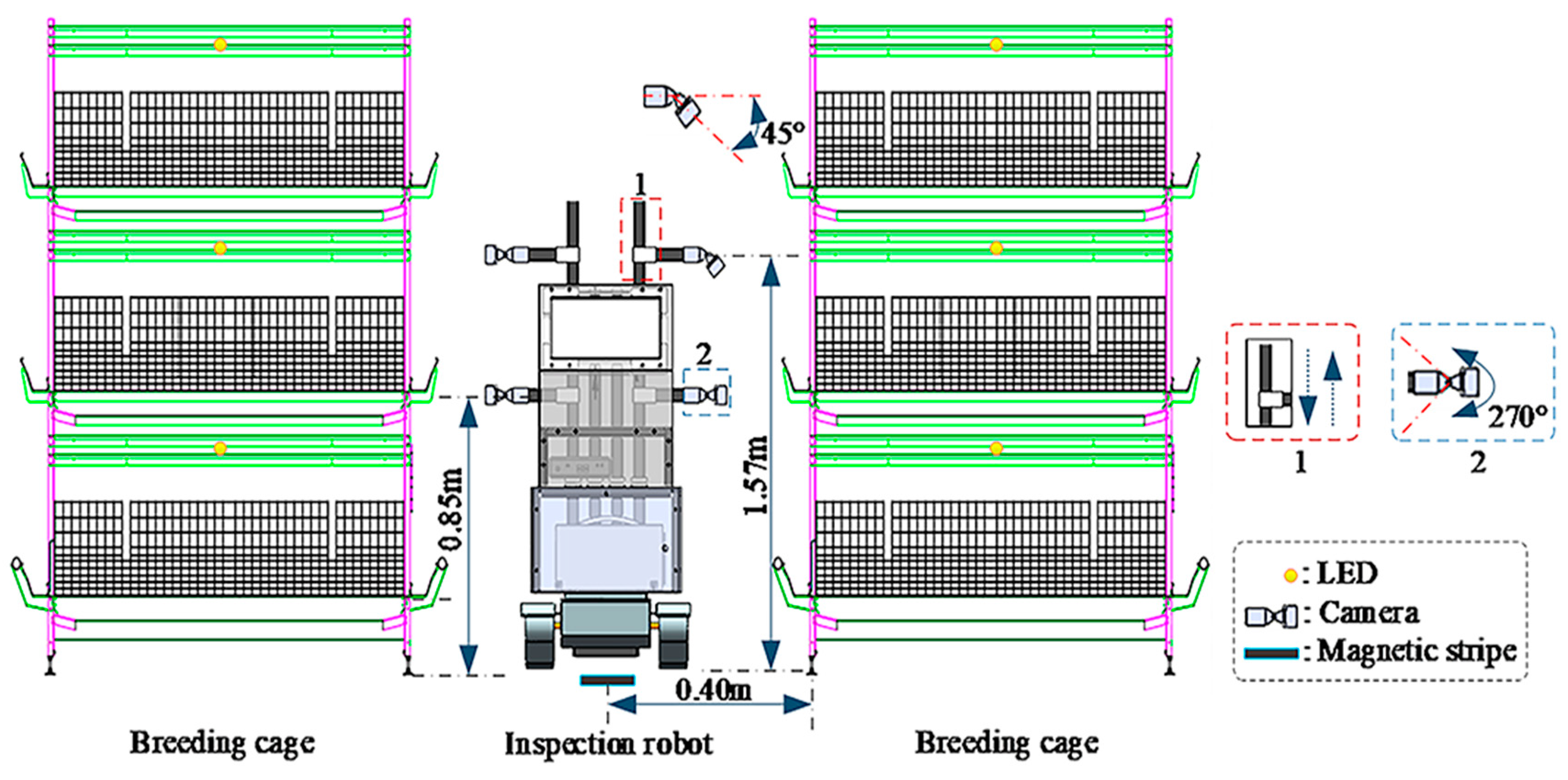

2.1. Image Acquisition and Experimental Material

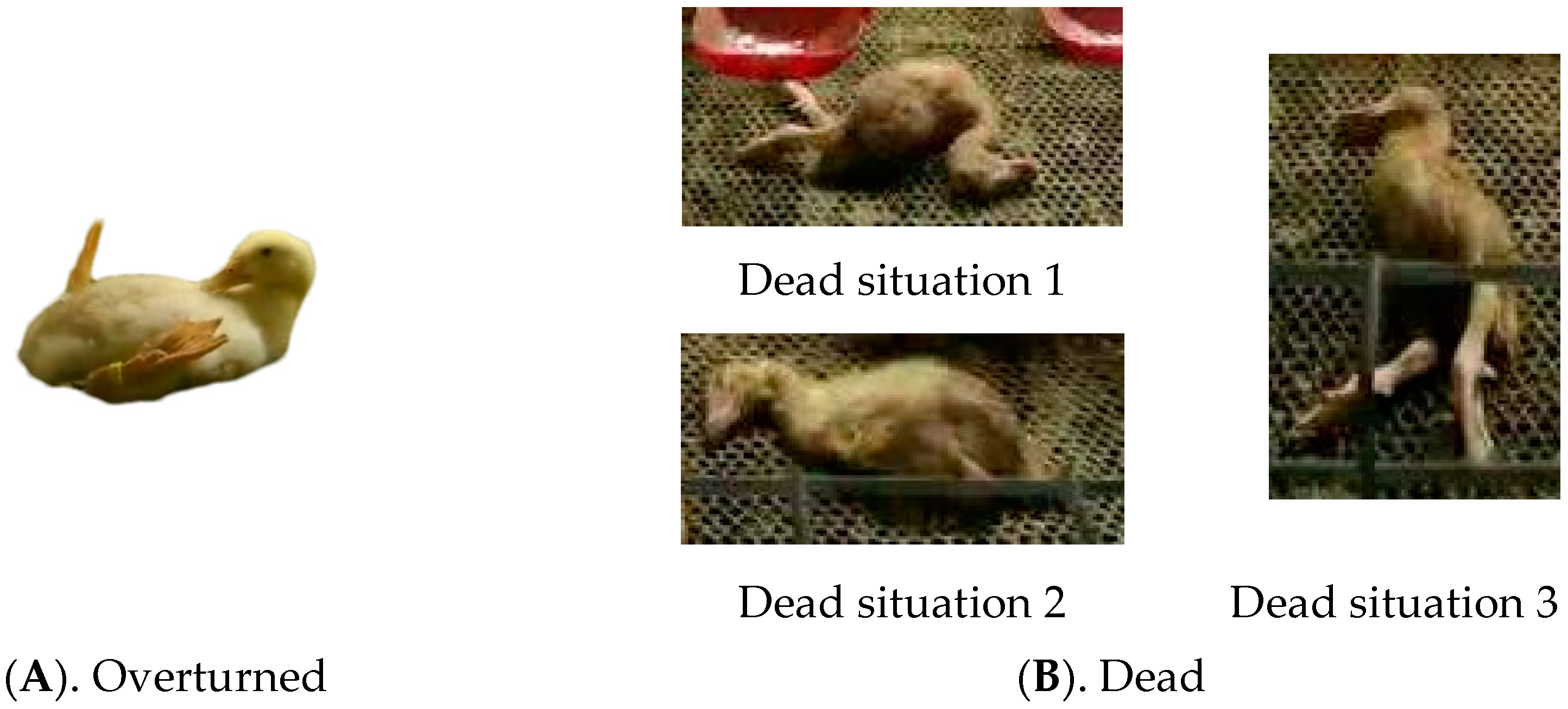

2.2. Preprocessing of Abnormal Cage-Reared Duck Images and Dataset Construction

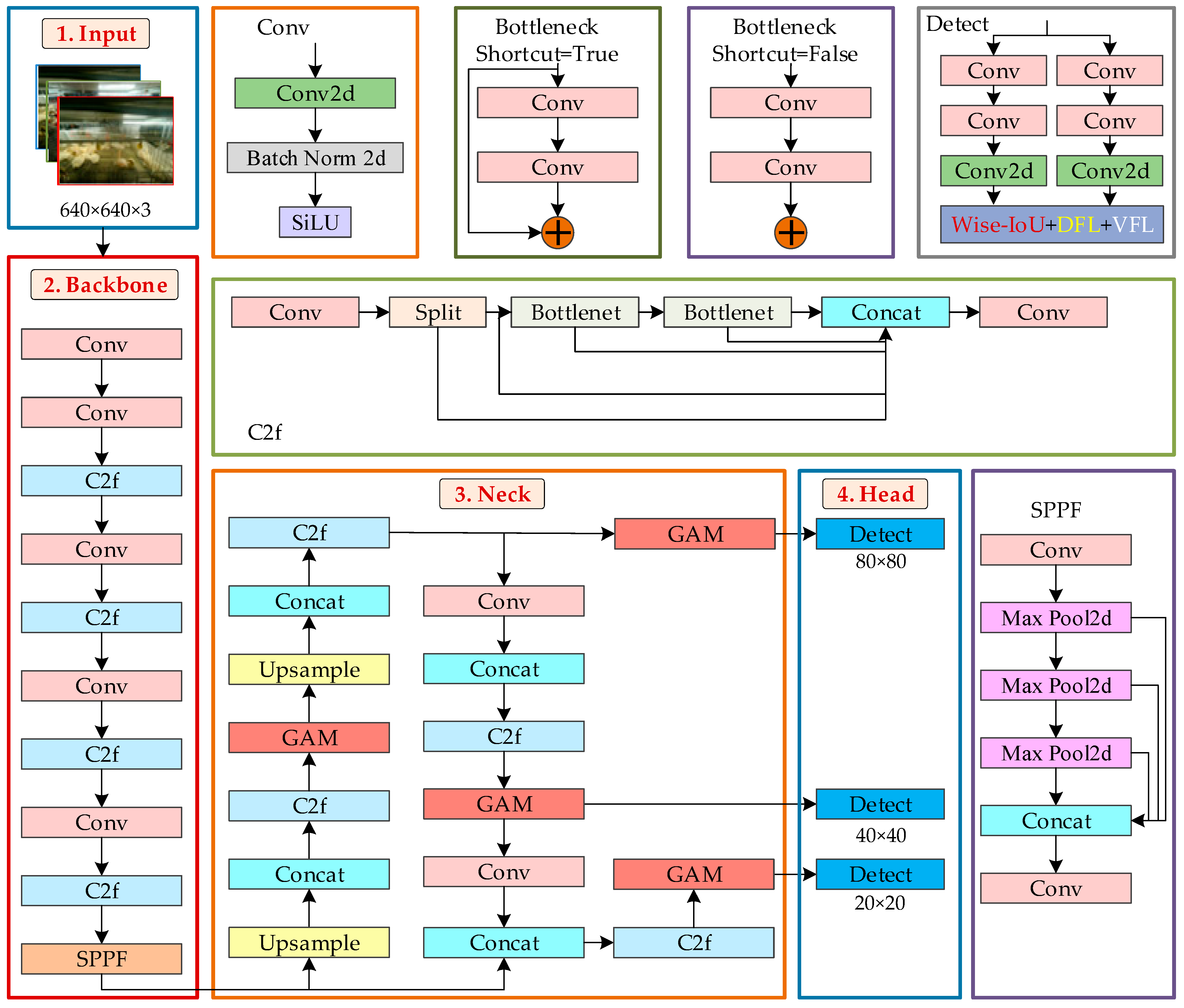

2.3. Detection Method for Abnormal Situations in Cage-Reared Ducks

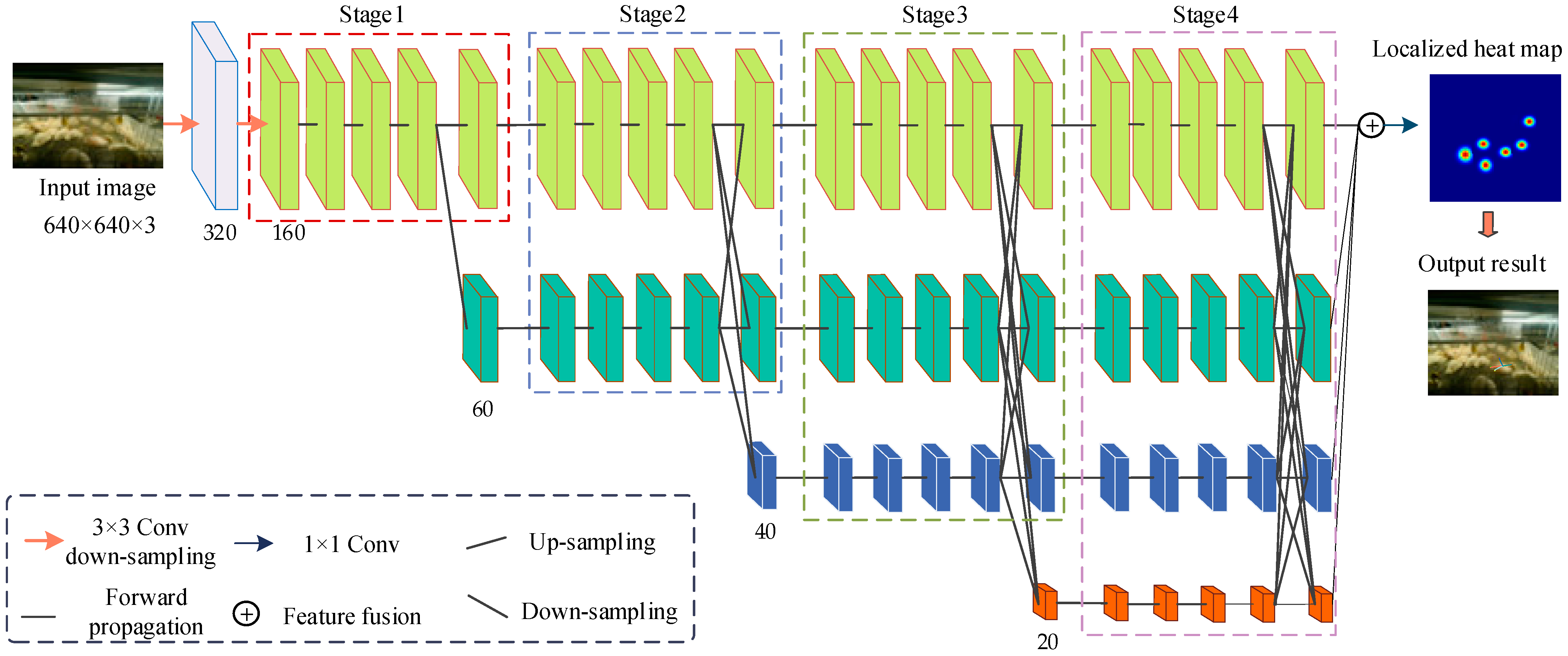

2.3.1. YOLOv8-ACRD Network Structure

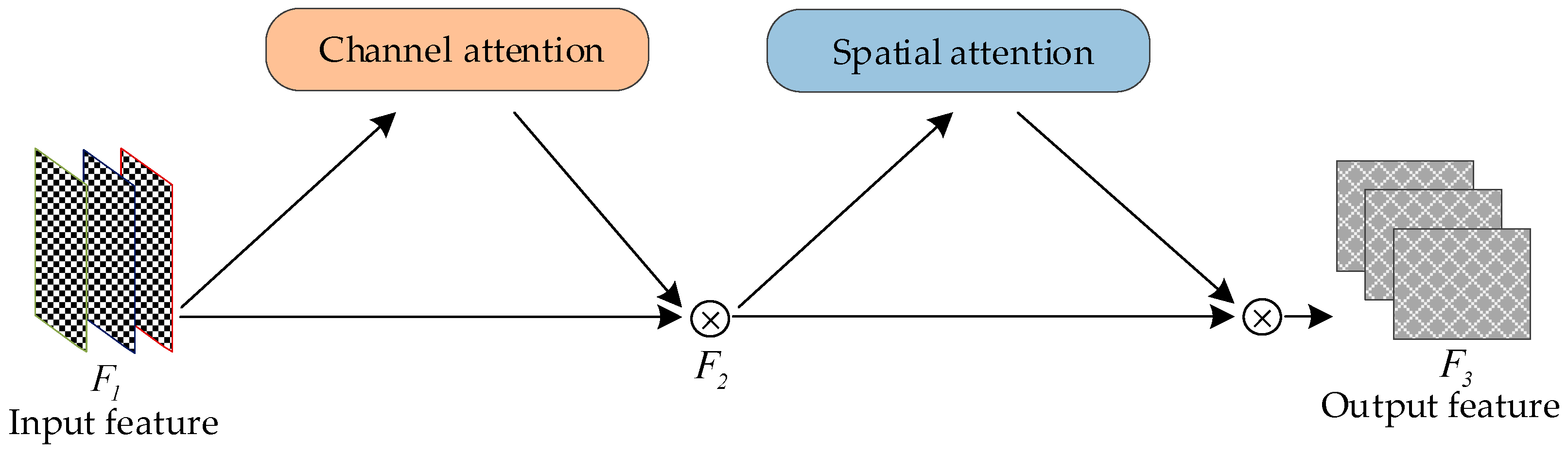

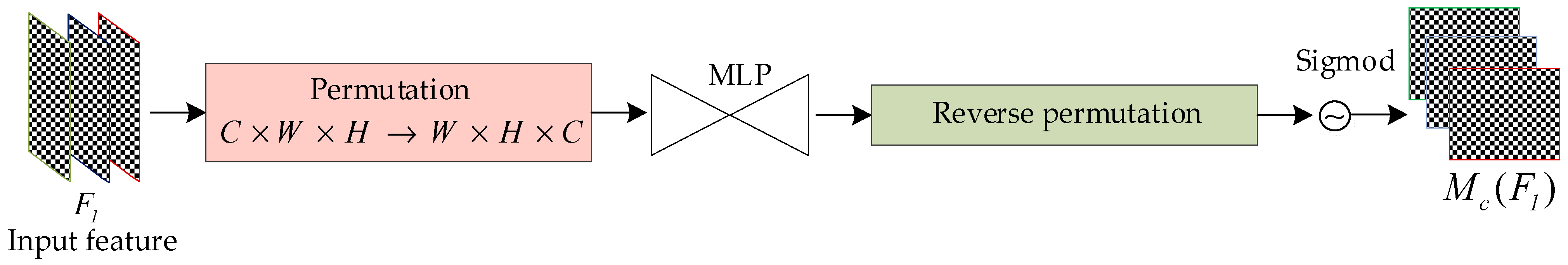

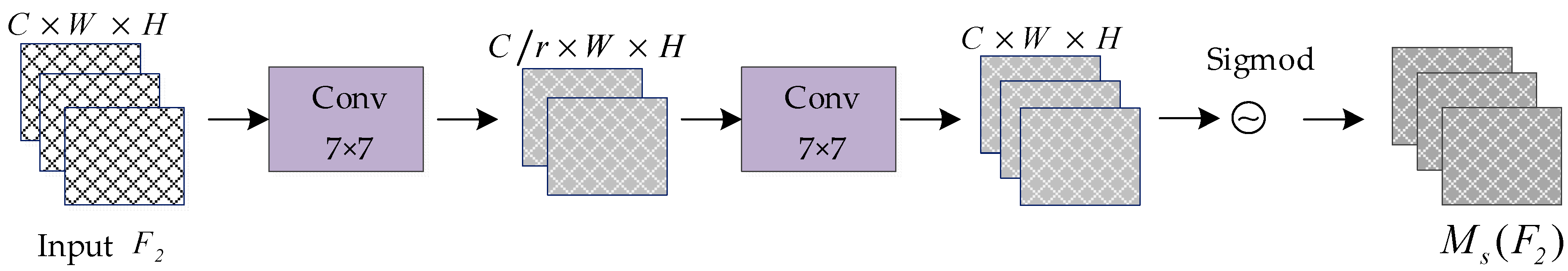

2.3.2. Global Attention Mechanism
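A minimal NumPy sketch of a GAM block, assuming the structure described by the GAM authors. A channel sub-module M_c (a two-layer MLP applied to each position's channel vector after a permutation) is followed by a spatial sub-module M_s (two 7 × 7 convolutions). Each produces sigmoid weights that are multiplied element-wise with its input, so F2 = M_c(F1) ⊗ F1 and F3 = M_s(F2) ⊗ F2. Batch normalization and biases are omitted, and the reduction ratio `r = 4`, the random weights and the helper names (`gam`, `random_params`, `conv2d_same`) are hypothetical placeholders. This is not the YOLOv8-ACRD implementation.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv2d_same(x, w):
    """Naive stride-1 convolution (cross-correlation) with zero padding.

    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k. Returns (C_out, H, W).
    """
    c_out, _, k, _ = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))  # zero padding keeps H, W
    _, h, wd = x.shape
    out = np.zeros((c_out, h, wd))
    for i in range(h):
        for j in range(wd):
            patch = xp[:, i:i + k, j:j + k]  # (C_in, k, k)
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out

def channel_attention(x, w1, w2):
    """M_c: permute to (H, W, C), two-layer MLP per channel vector, permute back, sigmoid."""
    t = np.transpose(x, (1, 2, 0))
    t = np.maximum(t @ w1, 0.0) @ w2  # C -> C/r -> C with ReLU in between
    return sigmoid(np.transpose(t, (2, 0, 1)))

def spatial_attention(x, k1, k2):
    """M_s: 7x7 conv (C -> C/r), ReLU, 7x7 conv (C/r -> C), sigmoid; BatchNorm omitted."""
    t = np.maximum(conv2d_same(x, k1), 0.0)
    return sigmoid(conv2d_same(t, k2))

def gam(f1, p):
    """Channel attention then spatial attention, each applied by element-wise multiplication."""
    f2 = channel_attention(f1, p["w1"], p["w2"]) * f1  # F2 = M_c(F1) ⊗ F1
    return spatial_attention(f2, p["k1"], p["k2"]) * f2  # F3 = M_s(F2) ⊗ F2

def random_params(c, r=4, k=7, seed=0):
    """Hypothetical random weights standing in for trained parameters."""
    rng = np.random.default_rng(seed)
    cr = max(1, c // r)
    return {
        "w1": rng.normal(0.0, 0.1, (c, cr)),
        "w2": rng.normal(0.0, 0.1, (cr, c)),
        "k1": rng.normal(0.0, 0.1, (cr, c, k, k)),
        "k2": rng.normal(0.0, 0.1, (c, cr, k, k)),
    }

features = np.random.default_rng(1).normal(size=(8, 5, 5))  # toy (C, H, W) feature map
out = gam(features, random_params(8))
```

Because both gates lie strictly between 0 and 1, the block rescales features without changing the tensor shape, which makes it straightforward to insert between existing layers.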

2.3.3. The Wise-IoU Loss Function
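The following plain-Python sketch illustrates Wise-IoU, assuming the formulas given by its authors. WIoU v1 multiplies the IoU loss L_IoU = 1 − IoU by a distance-based factor R = exp(((x − x_gt)² + (y − y_gt)²) / (W_g² + H_g²)). Here (x, y) and (x_gt, y_gt) are the box centers, and W_g and H_g are the width and height of the smallest enclosing box. WIoU v3 further scales the loss by a non-monotonic focusing coefficient r = β / (δ·α^(β − δ)), where the outlier degree is β = L_IoU / mean(L_IoU). This text does not state which WIoU version YOLOv8-ACRD uses. The values α = 1.9 and δ = 3 and the function names are assumptions. During training, the denominator of R and the mean L_IoU (a running average) are detached from the gradient graph; plain Python has no gradients, so that detail does not appear in the code.

```python
import math

def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union

def wiou_v1(pred, gt):
    """Wise-IoU v1: distance-based attention factor R times the IoU loss."""
    l_iou = 1.0 - iou(pred, gt)
    # Box centers
    px, py = (pred[0] + pred[2]) / 2, (pred[1] + pred[3]) / 2
    gx, gy = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2
    # Width and height of the smallest box enclosing both boxes
    wg = max(pred[2], gt[2]) - min(pred[0], gt[0])
    hg = max(pred[3], gt[3]) - min(pred[1], gt[1])
    r_wiou = math.exp(((px - gx) ** 2 + (py - gy) ** 2) / (wg ** 2 + hg ** 2))
    return r_wiou * l_iou

def focusing_coefficient(l_iou, mean_l_iou, alpha=1.9, delta=3.0):
    """WIoU v3 non-monotonic focusing coefficient r = beta / (delta * alpha**(beta - delta))."""
    beta = l_iou / mean_l_iou  # outlier degree
    return beta / (delta * alpha ** (beta - delta))

loss = wiou_v1((0, 0, 2, 2), (1, 1, 3, 3))  # (6/7) * exp(1/9)
```

With these settings, r equals 1 at β = δ, peaks at a moderate outlier degree and falls toward zero for very small or very large β. Both high-quality boxes and low-quality outliers are therefore down-weighted relative to boxes of ordinary quality.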

2.4. Method for Brightness Adjustment in Cage-Reared Duck Images
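The sketch below shows one way ‘bright’ and ‘dark’ test images could be produced and checked. It assumes simple linear scaling of 8-bit RGB values with clipping, and it reports mean luma using the ITU-R BT.601 weights (0.299, 0.587, 0.114). The study's own adjustment procedure may differ. The scaling approach, the factors 1.5 and 0.5 in the hypothetical `LEVELS` table and the toy image are all assumptions.

```python
# Hypothetical brightness factors for the two test levels (assumed, not from the study)
LEVELS = {"bright": 1.5, "dark": 0.5}

def adjust_brightness(pixels, factor):
    """Scale each channel of 8-bit RGB pixels by `factor`, clipping to [0, 255]."""
    return [tuple(min(255, max(0, round(c * factor))) for c in px) for px in pixels]

def mean_luma(pixels):
    """Mean luma of RGB pixels using ITU-R BT.601 weights."""
    return sum(0.299 * r + 0.587 * g + 0.114 * b for r, g, b in pixels) / len(pixels)

image = [(100, 200, 50), (30, 60, 90)]  # toy two-pixel "image"
variants = {name: adjust_brightness(image, f) for name, f in LEVELS.items()}
for name, img in variants.items():
    print(name, img, round(mean_luma(img), 1))
```

Clipping saturates highlights at the bright level (200 × 1.5 is capped at 255), so this kind of scaling is not exactly reversible.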

2.5. Estimation Method for Abnormal Cage-Reared Ducks Posture
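HRNet-style pose estimators output one heatmap per keypoint at a quarter of the input resolution, and each keypoint is read from its heatmap's peak. The plain-Python sketch below decodes six heatmaps for the keypoints defined in this study (head, beak, breast, tail, left foot, right foot). It takes the argmax of each heatmap and multiplies by the output stride. The function name, the keypoint order, the stride of 4 and the omission of sub-pixel refinement and flip testing are simplifying assumptions, not the study's implementation.

```python
# Keypoint order assumed for illustration; names follow the study's label table
KEYPOINTS = ("head", "beak", "breast", "tail", "left_foot", "right_foot")

def decode_heatmaps(heatmaps, stride=4):
    """Return {keypoint: (x, y, confidence)} in input-image pixels.

    heatmaps: one 2-D list (rows x cols) per keypoint, in KEYPOINTS order.
    """
    if len(heatmaps) != len(KEYPOINTS):
        raise ValueError("expected one heatmap per keypoint")
    results = {}
    for name, hm in zip(KEYPOINTS, heatmaps):
        best, best_x, best_y = float("-inf"), 0, 0
        for y, row in enumerate(hm):
            for x, value in enumerate(row):
                if value > best:
                    best, best_x, best_y = value, x, y
        # Map the heatmap cell back to input-image coordinates
        results[name] = (best_x * stride, best_y * stride, best)
    return results

# Toy 8 x 8 heatmaps: keypoint i peaks at row i, column i + 1
toy = [[[0.9 if (y, x) == (i, i + 1) else 0.0 for x in range(8)] for y in range(8)]
       for i in range(len(KEYPOINTS))]
decoded = decode_heatmaps(toy)
```

The peak value is kept as a confidence score, which a downstream rule could use to discard keypoints that are occluded or poorly localized.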

2.6. Evaluation Criteria
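Detection results for the two abnormal classes (overturned and dead) are commonly summarized with standard metrics. This minimal sketch assumes those standard definitions rather than the study's exact code: precision P = TP / (TP + FP), recall R = TP / (TP + FN), F1 = 2PR / (P + R), and average precision (AP) as the area under the precision-recall curve with all-point interpolation (the PASCAL VOC 2010+ convention). Function names are hypothetical. The sketch also assumes that detections have already been matched to ground truth at a chosen IoU threshold.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 from true-positive, false-positive and false-negative counts."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1

def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class.

    scores: confidence of each detection; is_tp: whether that detection matched a
    ground-truth box; num_gt: number of ground-truth boxes of this class.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Pad the curve and make precision non-increasing as recall grows
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Sum rectangle areas wherever recall increases
    return sum((mrec[k] - mrec[k - 1]) * mpre[k] for k in range(1, len(mrec)))

ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)  # 5/6
```

Averaging AP over the classes gives mAP. Variants such as mAP@0.5:0.95 additionally average over several IoU matching thresholds.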

2.7. Experimental Environment

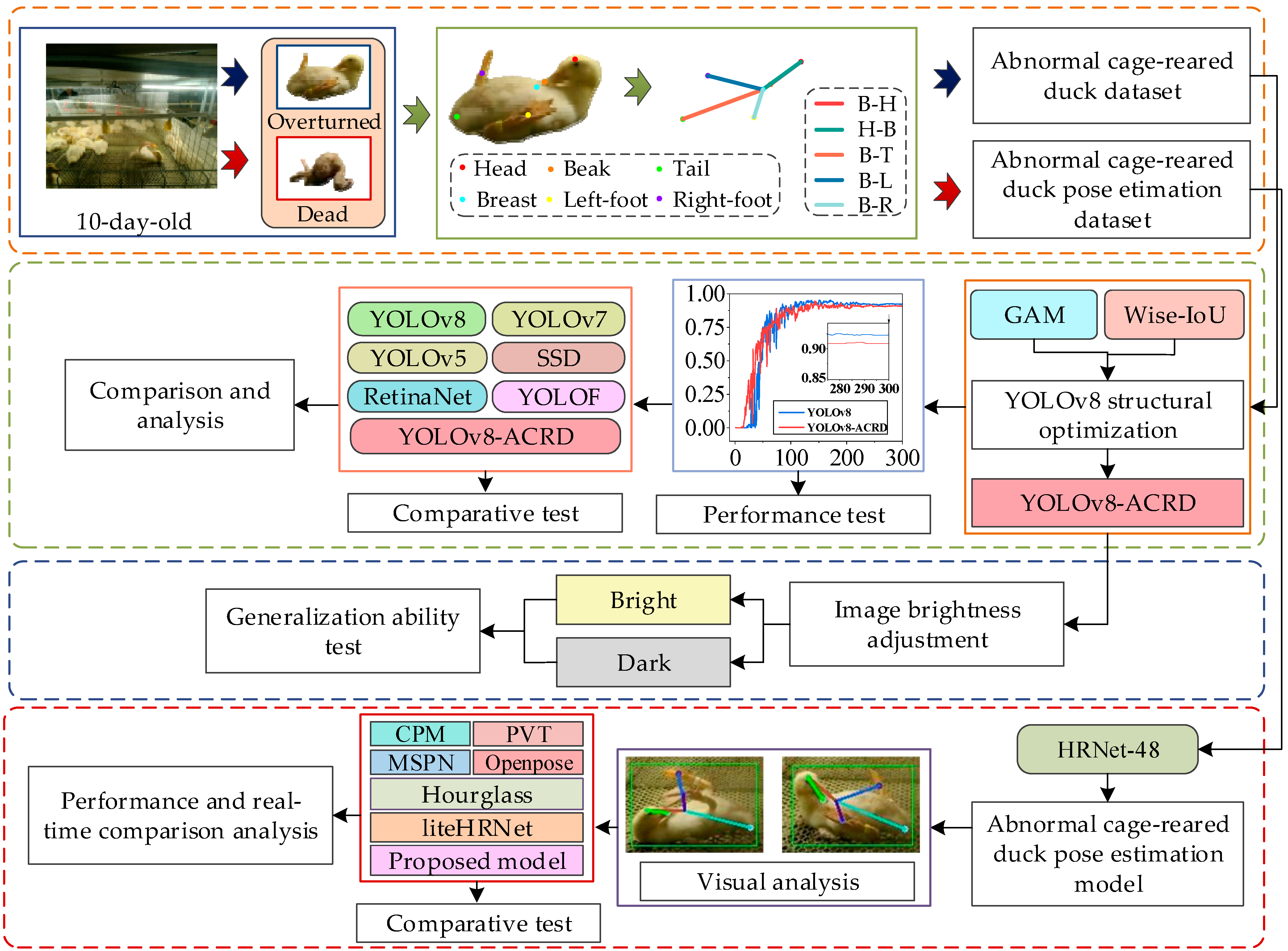

2.8. Experimental Steps

- Images of 10-day-old cage-reared ducks in two abnormal states, ‘overturned’ and ‘dead’, were collected and annotated to build an abnormal cage-reared duck dataset and an abnormal cage-reared duck pose-estimation dataset.

- Taking into account the characteristics of cage-reared ducks and the experimental environment, multiple Global Attention Mechanism (GAM) modules were densely embedded in the neck of the original YOLOv8 network, and the Wise-IoU loss function was introduced to improve detection performance. The resulting network for recognizing abnormal situations in cage-reared ducks is named YOLOv8-ACRD.

- The accuracy of the proposed YOLOv8-ACRD method was compared with that of the original YOLOv8 and with other mainstream methods to assess its effectiveness.

- Brightness was treated as an experimental factor with two levels (‘bright’ and ‘dark’) to test how well the proposed method generalizes when identifying abnormal situations in cage-reared ducks.

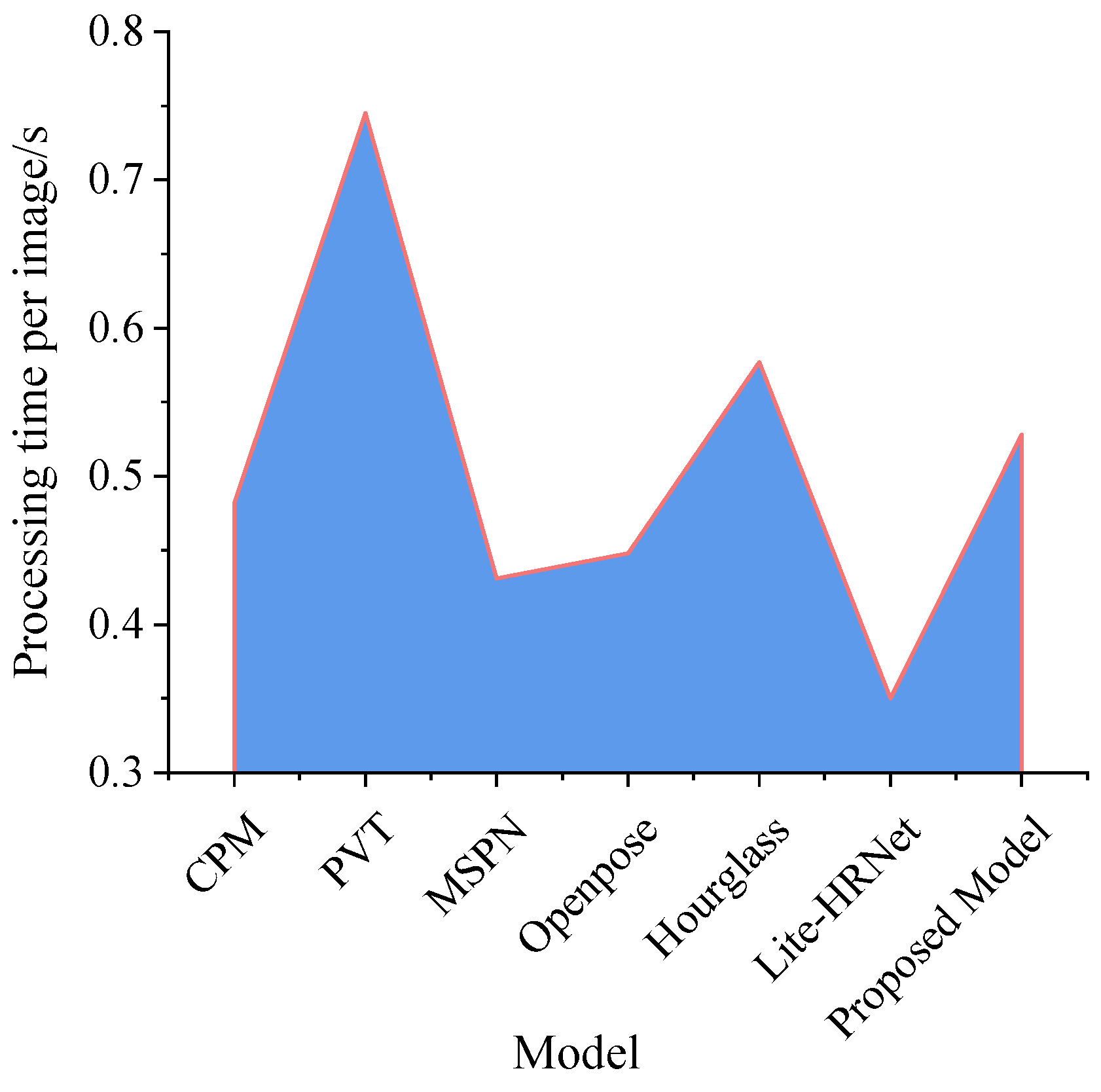

- An abnormal-posture estimation model based on HRNet-48 was developed by defining six key body parts of cage-reared ducks (head, beak, breast, tail, left foot, and right foot) as keypoints. This model was compared with other commonly used pose-estimation algorithms, and its real-time performance was evaluated.

- The experimental results were discussed, and conclusions were drawn.

3. Results

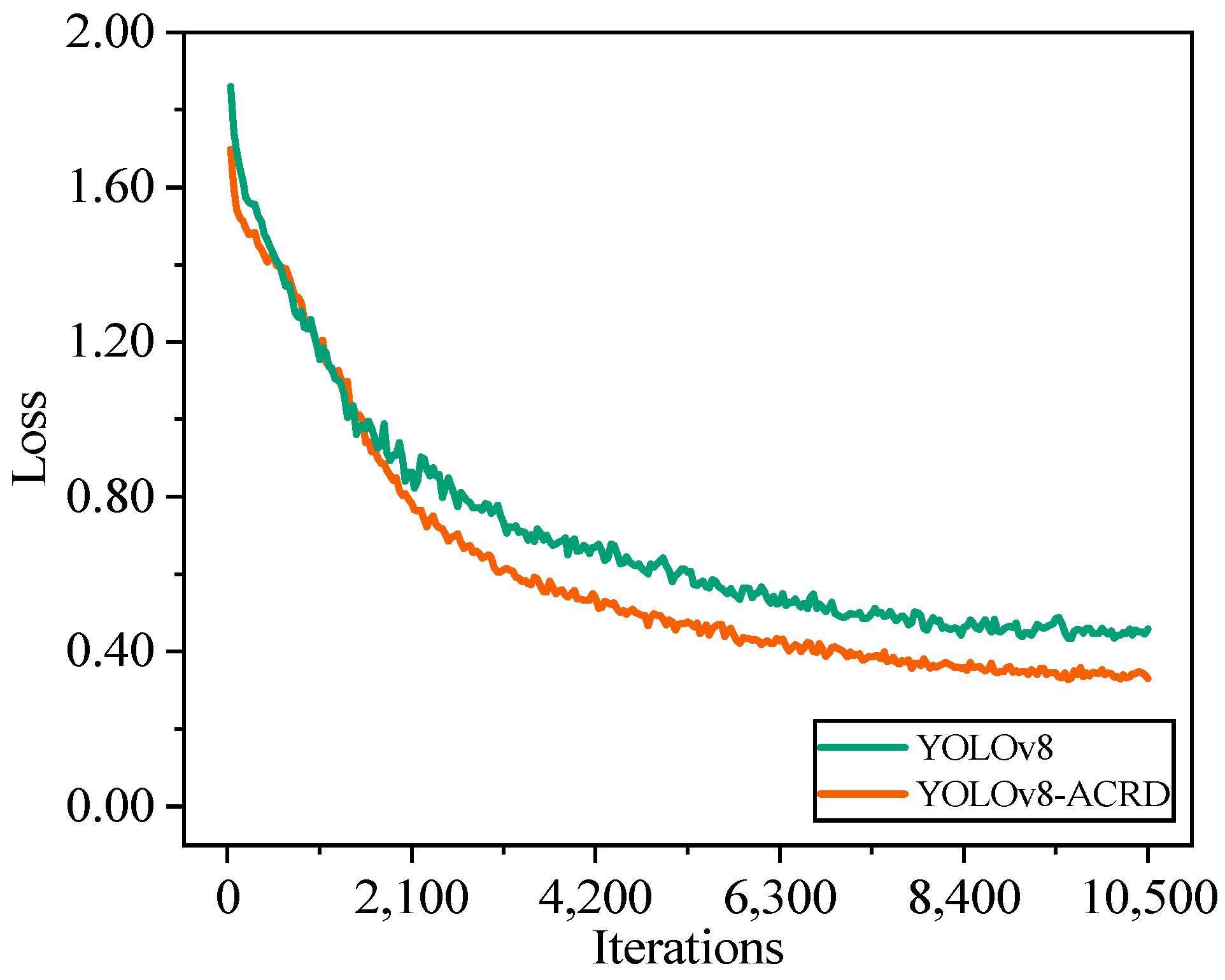

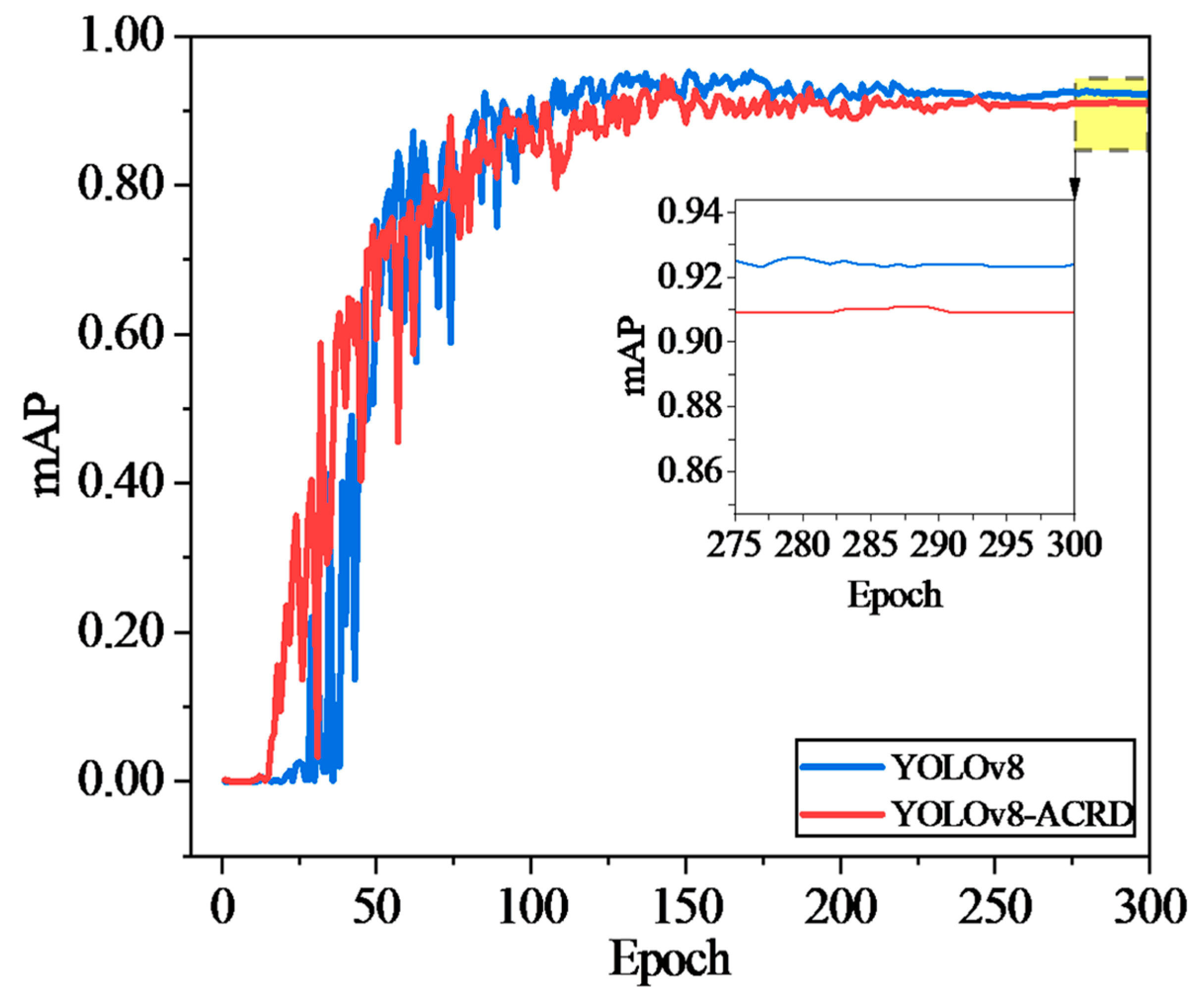

3.1. Abnormal Situation Recognition in Cage-Reared Ducks Based on YOLOv8-ACRD

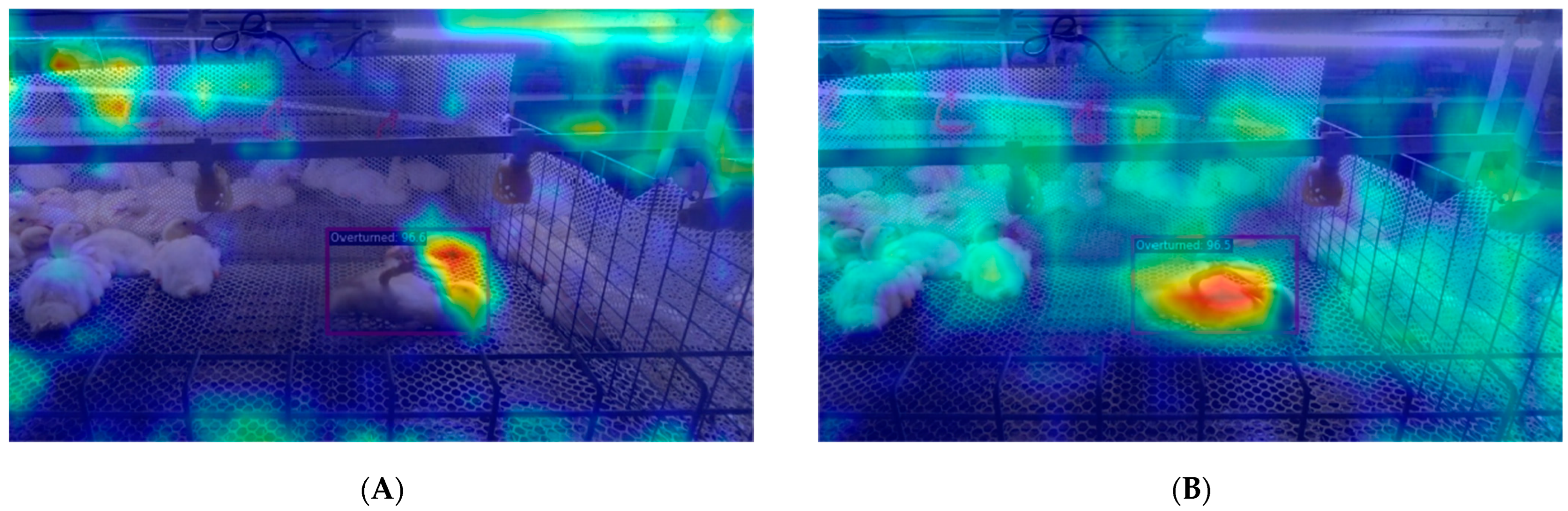

3.1.1. Acquisition of Abnormal Situation Recognition Model for Cage-Reared Ducks and Comparison of Feature Maps

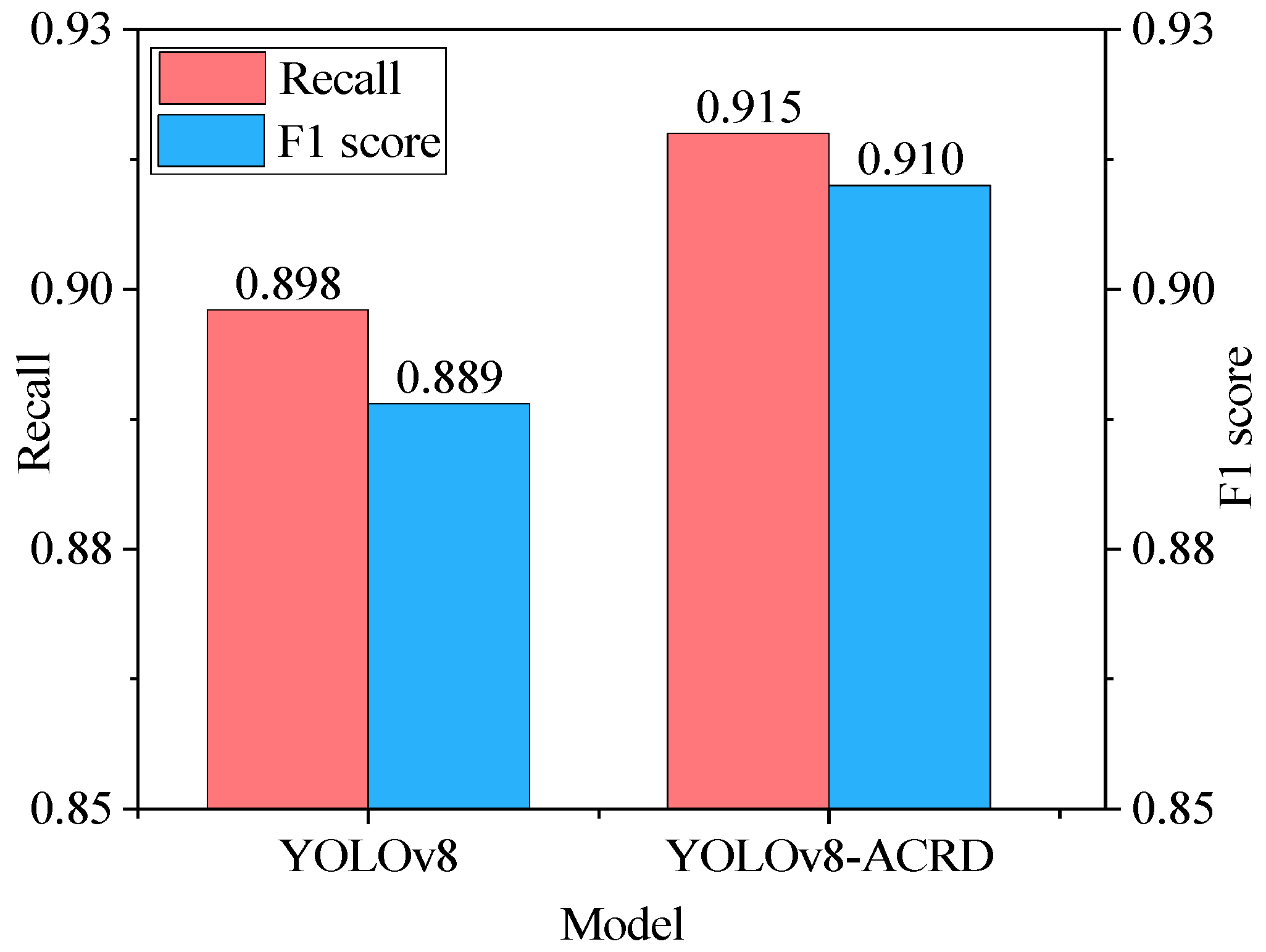

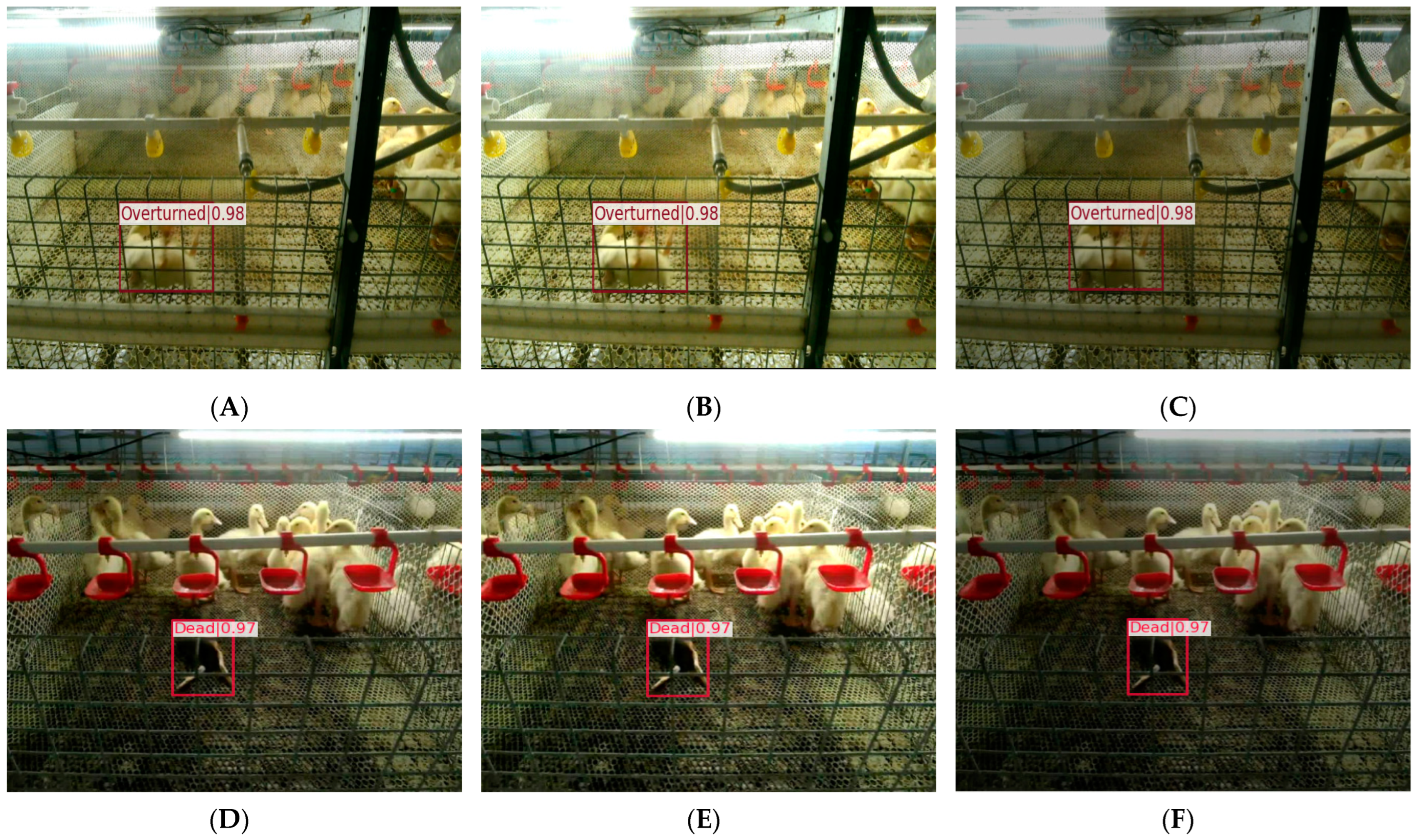

3.1.2. Recognition of Abnormal Situations in Cage-Reared Ducks

3.1.3. Comparison and Analysis

3.2. Perception of Abnormal Situations in Cage-Reared Ducks under Different Lighting Conditions

3.3. Abnormal Cage-Reared Duck Pose Estimation

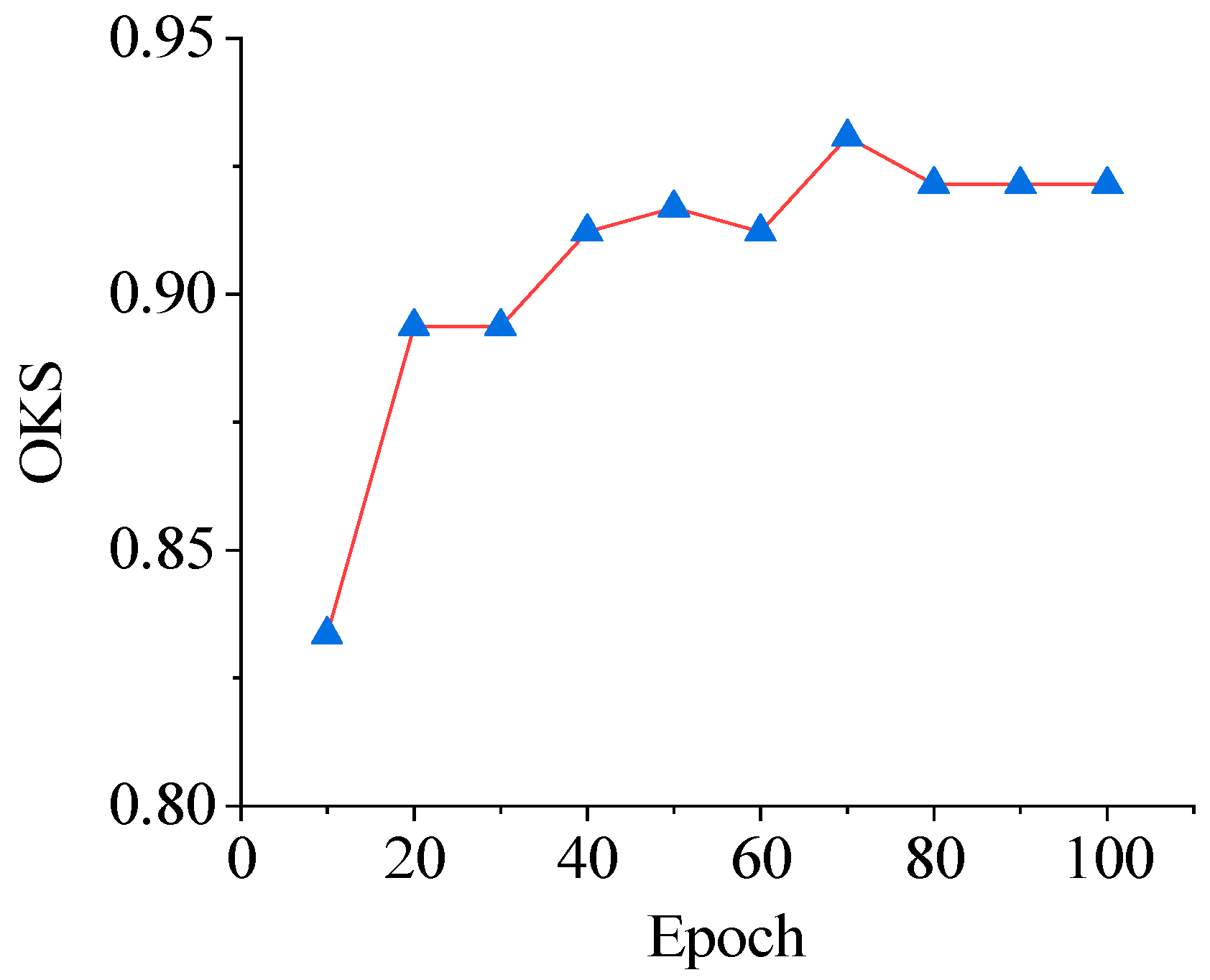

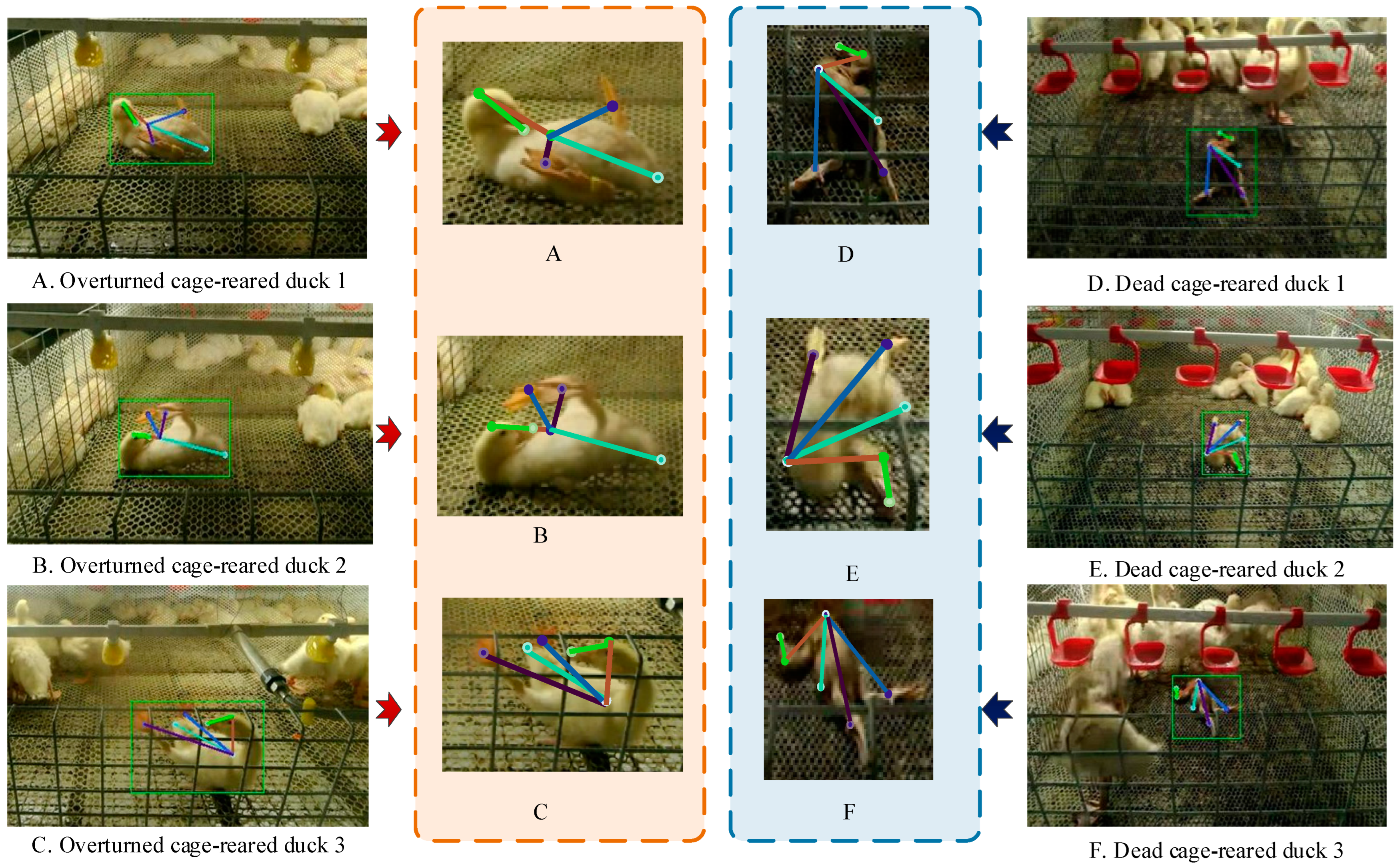

3.3.1. Results of Abnormal Cage-Reared Duck Pose Estimation

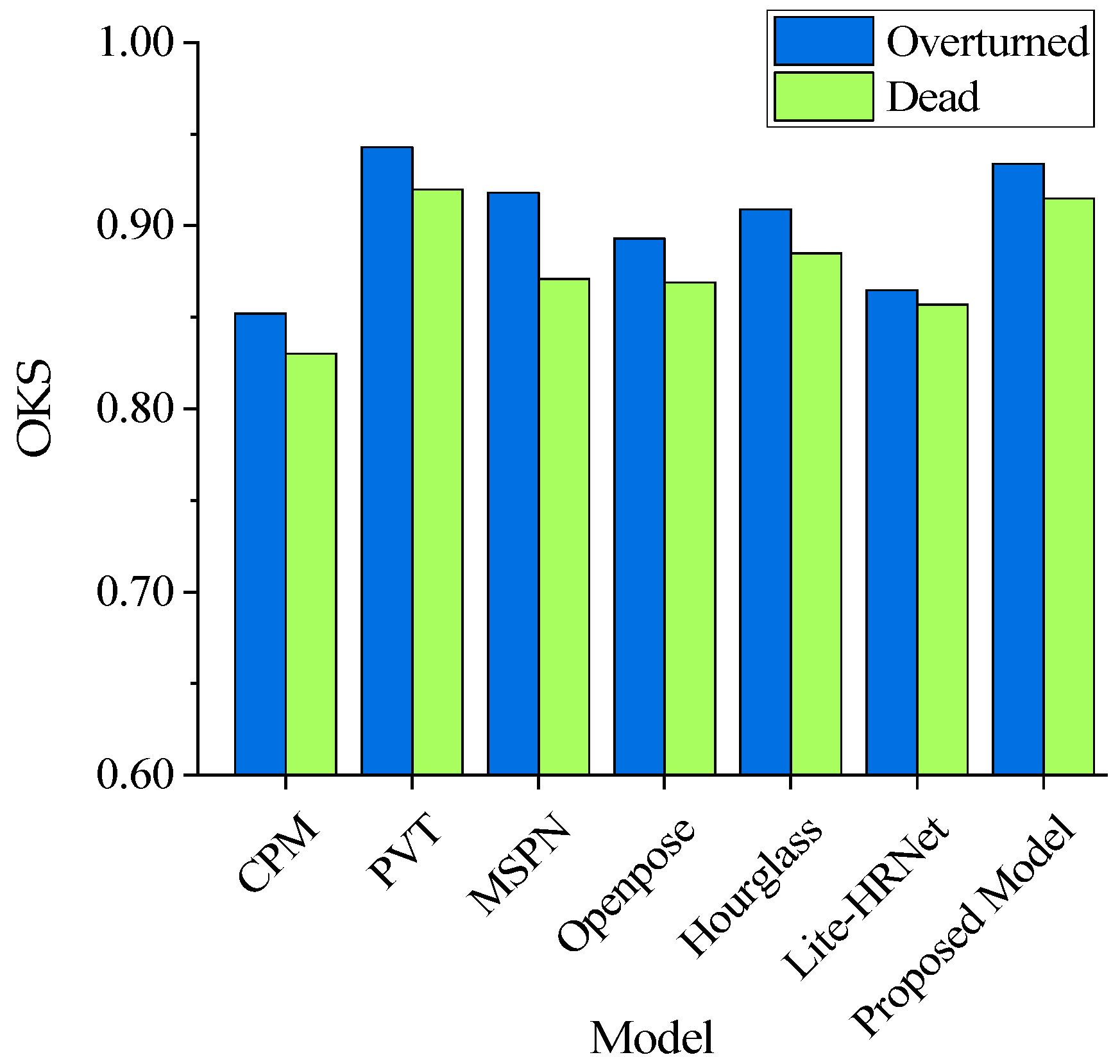

3.3.2. Comparison and Analysis

4. Discussion

4.1. Influence of Different Abnormal States of Cage-Reared Ducks on Model Recognition and Pose-Estimation Accuracy

4.2. Impact of Introducing Attention Mechanism and Optimized Loss Function on Model Performance

4.3. Limitations and Future Directions

- (1) Ducks are inherently sensitive and susceptible to stress, which makes them prone to a range of bacterial and viral diseases. Although many types of abnormal situations can occur, this study focused on detecting two common ones: overturned and dead ducks. There is therefore a gap between the diverse abnormal conditions observed in real-world cage-reared ducks and those addressed here. Future research could incorporate thermal infrared sensing, audio processing, and hyperspectral/near-infrared technologies. Fusing temperature, sound, and fecal spectral information from these multiple sources could yield a more comprehensive method for identifying the various abnormalities of cage-reared ducks.

- (2) The abnormal cage-reared duck dataset and the abnormal cage-reared duck pose-estimation dataset were labeled manually, which is labor-intensive. Future research should concentrate on semi-supervised approaches that enhance model performance while reducing manual effort and data requirements.

- (3) Ducks change significantly in appearance and body shape as they age, yet the experimental subjects in this study were limited to 10-day-old ducks. Future research should include ducks of different ages to improve the robustness of the model.

- (4) This study did not address the simultaneous detection and classification of multiple abnormal ducks, nor could it reliably estimate the posture of heavily occluded ducks. Given the high stocking density of poultry farming, future research will focus on multi-target detection of abnormal ducks in such scenarios, as well as on feature generation and completion for occluded ducks.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


⊗ represents element-wise multiplication, and σ represents the Sigmoid operation.

| Attribute | Parameter |
|---|---|
| Resolution and frame rate | 1280 × 720, 30 fps |
| Dimensions (mm) | 90 × 25 × 25 |
| RGB FOV (H × V) | 69° × 42° |
| Depth FOV (H × V) | 87° × 58° |
| Ideal range (m) | <3 |
| Interface type | USB 3 |
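The RGB field of view in the table determines roughly how much of a cage floor a single frame covers. Assuming a simple pinhole model with the camera facing a flat surface at distance d, the covered width and height are 2d·tan(FOV/2). Dividing by the pixel count gives the approximate ground size of one pixel. The distances below are illustrative and do not reflect the mounting height used in the study.

```python
import math

RGB_FOV_DEG = (69.0, 42.0)   # horizontal, vertical (from the table)
RESOLUTION_PX = (1280, 720)  # width, height (from the table)

def footprint_m(distance_m, fov_deg=RGB_FOV_DEG):
    """Width and height (m) of the area seen at `distance_m`, pinhole model."""
    return tuple(2 * distance_m * math.tan(math.radians(f) / 2) for f in fov_deg)

def mm_per_pixel(distance_m):
    """Approximate ground size of one pixel (mm), horizontally and vertically."""
    w, h = footprint_m(distance_m)
    return (w * 1000 / RESOLUTION_PX[0], h * 1000 / RESOLUTION_PX[1])

for d in (0.5, 1.0, 3.0):  # 3.0 m is the upper end of the ideal range
    w, h = footprint_m(d)
    print(f"{d:.1f} m: {w:.2f} m x {h:.2f} m, {mm_per_pixel(d)[0]:.2f} mm/px")
```

At 1 m this gives a view of about 1.37 m × 0.77 m, or roughly 1.07 mm per pixel.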

| Dataset | Label | Definition of Abnormal Situation or Key Point |
|---|---|---|
| Abnormal cage-reared ducks dataset | Overturned | The duck lies supine with both feet pointing upward and its back pressed against the ground; its head and neck are tilted away from the ground, and it tends to sway from side to side. |
| | Dead | The duck lies pressed against the ground in a deformed posture, remains motionless, and has stains covering its feathers. |
| Abnormal cage-reared ducks pose-estimation dataset | Head | Crown feather region of the duck |
| | Beak | Upper beak region of the duck |
| | Breast | Breast feather region of the duck |
| | Tail | Tail feather region of the duck |
| | Left foot | Left palm region of the duck, as seen when facing the duck |
| | Right foot | Right palm region of the duck, as seen when facing the duck |
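Keypoint accuracy for the six body parts defined above is often summarized with the Percentage of Correct Keypoints (PCK). Under PCK, a predicted keypoint counts as correct if it lies within α·L pixels of its label, where L is a normalizing length such as the bounding-box size. This text does not state the study's pose metric, so PCK, the threshold α = 0.05 and the function and key names below are assumptions for illustration.

```python
import math

KEYPOINTS = ("head", "beak", "breast", "tail", "left_foot", "right_foot")

def pck(pred, gt, norm_length, alpha=0.05):
    """Fraction of labeled keypoints predicted within alpha * norm_length pixels.

    pred, gt: dicts mapping keypoint name -> (x, y); keypoints absent from gt
    (for example, occluded and unlabeled) are skipped.
    """
    threshold = alpha * norm_length
    labeled = [k for k in KEYPOINTS if k in gt]
    if not labeled:
        return float("nan")
    correct = sum(
        1 for k in labeled if k in pred and math.dist(pred[k], gt[k]) <= threshold
    )
    return correct / len(labeled)

# Toy example: every keypoint is exact except the tail, which is 20 px off
gt = {k: (10.0 * i, 50.0) for i, k in enumerate(KEYPOINTS)}
pred = dict(gt, tail=(50.0, 50.0))
score = pck(pred, gt, norm_length=200)  # threshold = 10 px, so 5 of 6 correct
```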

| Abnormal Cage-Reared Ducks Dataset | Train | Test |
|---|---|---|
| Overturned | 3154 | 603 |
| Dead | 1297 | 237 |
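The counts in the table imply a train/test split of roughly 84% / 16% (83.9% train for overturned, 84.6% for dead). Overturned samples also outnumber dead ones by about 2.4 to 1 in the training set. The short calculation below uses only the table's numbers to make this explicit.

```python
# Counts from the abnormal cage-reared ducks dataset table
counts = {"Overturned": (3154, 603), "Dead": (1297, 237)}

for label, (train, test) in counts.items():
    total = train + test
    print(f"{label}: {total} samples, {train / total:.1%} train / {test / total:.1%} test")

train_all = sum(tr for tr, _ in counts.values())
test_all = sum(te for _, te in counts.values())
print(f"All: {train_all + test_all} samples, train share {train_all / (train_all + test_all):.1%}")
print(f"Overturned:Dead ratio (train) = {counts['Overturned'][0] / counts['Dead'][0]:.2f}")
```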


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, S.; Bai, Z.; Huo, L.; Han, G.; Duan, E.; Gong, D.; Gao, L. Automatic Perception of Typical Abnormal Situations in Cage-Reared Ducks Using Computer Vision. Animals 2024, 14, 2192. https://doi.org/10.3390/ani14152192
