DCNN for Pig Vocalization and Non-Vocalization Classification: Evaluate Model Robustness with New Data

Abstract

Simple Summary

1. Introduction

- Design a new pig vocalization and non-vocalization classification model using a deep learning network architecture and audio feature extraction methods.

- Implement various audio feature extraction methods and compare the classification performance results using a deep learning model.

- Propose a novel feature extraction method that enriches the input information and thereby improves the classification accuracy of the model. The proposed method is robust enough to classify pig vocalization and non-vocalization across different data collection environments.

- Create datasets of pig vocalization and non-vocalization to handle the problem of insufficient data.

- Compare the performance of the various audio feature extraction methods. The proposed method improves the classification performance and efficiently classifies pig vocalization and non-vocalization.

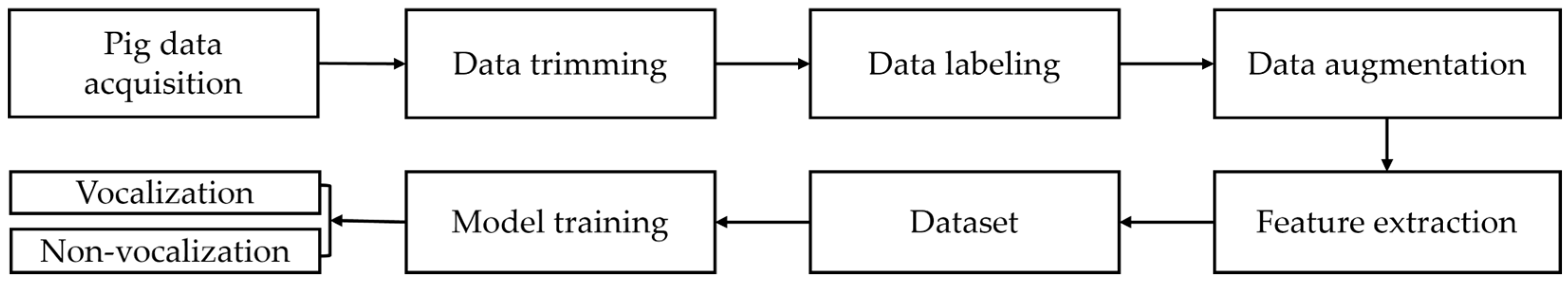

2. Materials and Methods

2.1. Data Acquisition

2.2. Data Preprocessing

2.3. Audio Data Augmentation

- Pitch-shifting is a digital signal processing technique that alters the pitch of an audio signal without changing its duration [28]. Each audio sample was pitch-shifted by a random number of steps between 0 and 4, with 12 bins per octave.

- Time-shifting involves displacing audio to the left or right by a randomly determined duration. When shifting audio to the left (forward) by x seconds, the initial x seconds were set to 0. Conversely, when shifting audio to the right (backward) by x seconds, the last x seconds were set to 0.

- Time-stretching involves adjusting the speed of the audio sample, either slowing it down or speeding it up without affecting the pitch of the sound. In this study, each sample underwent time stretching using a stretch factor of 1.0.

- Background-noising is the intentional addition of background noise to an audio sample. In this study, white noise was added to each audio sample. Each noised sample z was calculated using z = x + w·y, where x represents the audio signal of the original sample, y denotes the signal with the background scene, and w serves as a weighting parameter. The weighting parameter w was drawn at random from a uniform distribution over the range [0.0, 1.0].
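The time-shifting and background-noising steps described above can be sketched with NumPy alone. This is a minimal illustration, not the authors' implementation: the function names, the 16 kHz sample rate, the synthetic sine input, and the sign convention for the shift offset are all assumptions made for the example. Pitch-shifting and time-stretching are left out because they need a DSP library; librosa, which appears in the reference list, offers `librosa.effects.pitch_shift` and `librosa.effects.time_stretch` for that purpose, although the text does not state which calls were used.

```python
import numpy as np


def time_shift(x: np.ndarray, offset: int) -> np.ndarray:
    """Shift x by `offset` samples and zero-fill the vacated end.

    A positive offset zeroes the first `offset` samples; a negative
    offset zeroes the last `-offset` samples. How this maps onto the
    "left"/"right" wording in the text is an assumption.
    """
    y = np.zeros_like(x)
    if offset > 0:
        y[offset:] = x[:-offset]
    elif offset < 0:
        y[:offset] = x[-offset:]
    else:
        y[:] = x
    return y


def add_background(x: np.ndarray, y: np.ndarray, w: float) -> np.ndarray:
    """Mix a background signal y into x with weight w: z = x + w * y."""
    return x + w * y


rng = np.random.default_rng(0)
sr = 16_000  # hypothetical sample rate, not taken from the paper

# One second of a 440 Hz sine stands in for a recorded clip.
x = np.sin(2 * np.pi * 440 * np.arange(sr) / sr)

# White noise as the background signal, weighted by w ~ U[0, 1].
noise = rng.standard_normal(sr)
w = float(rng.uniform(0.0, 1.0))
z = add_background(x, noise, w)

# Shift by 0.1 s; the first 1600 samples become silence.
shifted = time_shift(x, offset=int(0.1 * sr))
```

Both functions return an array of the same length as the input, so augmented clips can be passed to the same feature extraction step as the originals.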

2.4. Audio Feature Extraction

2.4.1. MFCC (Mel-Frequency Cepstral Coefficients)

2.4.2. Mel-Spectrogram

2.4.3. Chroma

2.4.4. Tonnetz

2.4.5. Mixed-MMCT

2.5. Deep CNN Architecture

2.6. Experimental Setting

2.7. Evaluation Criteria

- Accuracy is an intuitive performance metric that characterizes the effectiveness of an algorithm in classification tasks. It quantifies the ratio of correctly predicted samples to the overall sample count, as shown in Equation (1).

  $$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

- Precision is a metric focused on evaluating the accuracy of positive predictions. Specifically, it calculates the precision for the minority class, representing the ratio of correctly predicted positive samples to the total predicted positive samples. The computation of precision is given in Equation (2).

  $$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

- Recall is a metric that calculates the number of accurate positive predictions made among all possible positive predictions. In contrast to precision, which considers only the accurate positive predictions out of all positive predictions, recall also accounts for the positive samples that were missed. The computation of recall is given in Equation (3).

  $$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

- F1-score combines precision and recall into a single metric that reflects both aspects. It can expose scenarios with high precision and poor recall, as well as situations with poor precision and perfect recall. The computation of the F1-score is given in Equation (4).

  $$\text{F1-score} = \frac{2 \times (\text{Precision} \times \text{Recall})}{\text{Precision} + \text{Recall}} \tag{4}$$

  Here, true positive (TP) is the number of correctly classified positive samples, true negative (TN) denotes the number of predictions accurately identifying the sample as negative, false positive (FP) represents the number of samples wrongly classified as positive, and false negative (FN) refers to the number of samples inaccurately identified as negative.
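The four criteria above translate directly into a few lines of Python. The sketch below is illustrative only: the function name and the confusion-matrix counts are hypothetical, and the zero-division guards are an added assumption rather than something the text specifies.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Compute Equations (1)-(4) from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    # Guard against empty denominators (an assumption, not from the text).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * (precision * recall) / (precision + recall)
    else:
        f1 = 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical counts chosen only to exercise the formulas.
m = classification_metrics(tp=90, tn=85, fp=15, fn=10)

# Equation (4) applied to a reported precision/recall pair (in percent):
# MFCC trained on NGdb and tested on Jeongeup.
precision_pct, recall_pct = 93.18, 92.45
f1_pct = 2 * (precision_pct * recall_pct) / (precision_pct + recall_pct)
```

Rounded to two decimals, `f1_pct` agrees with the F1-score of 92.81 reported for that configuration in the robustness results.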

3. Results

3.1. Experimental Results

3.2. Robustness Experimental Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Liao, J.; Li, H.; Feng, A.; Wu, X.; Luo, Y.; Duan, X.; Ni, M.; Li, J. Domestic pig sound classification based on TransformerCNN. Appl. Intell. 2023, 53, 4907–4923.

2. Popescu, A. Pork market crisis in Romania: Pig livestock, pork production, consumption, import, export, trade balance and price. Sci. Pap. Ser. Manag. Econ. Eng. Agric. Rural Dev. 2020, 20, 461–474.

3. Liang, Y.; Cheng, Y.; Xu, Y.; Hua, G.; Zheng, Z.; Li, H.; Han, L. Consumer preferences for animal welfare in China: Optimization of pork production-marketing chains. Animals 2022, 12, 3051.

4. Hou, Y.; Li, Q.; Wang, Z.; Liu, T.; He, Y.; Li, H.; Ren, Z.; Guo, X.; Yang, G.; Liu, Y. Study on a Pig Vocalization Classification Method Based on Multi-Feature Fusion. Sensors 2024, 24, 313.

5. Dohlman, E.; Hansen, J.; Boussios, D. USDA Agricultural Projections to 2031; United States Department of Agriculture: Washington, DC, USA, 2022.

6. Benjamin, M.; Yik, S. Precision livestock farming in swine welfare: A review for swine practitioners. Animals 2019, 9, 133.

7. Norton, T.; Chen, C.; Larsen, M.L.V.; Berckmans, D. Precision livestock farming: Building ‘digital representations’ to bring the animals closer to the farmer. Animal 2019, 13, 3009–3017.

8. Berckmans, D. General introduction to precision livestock farming. Anim. Front. 2017, 7, 6–11.

9. Berckmans, D. Precision livestock farming technologies for welfare management in intensive livestock systems. Rev. Sci. Tech. 2014, 33, 189–196.

10. García, R.; Aguilar, J.; Toro, M.; Pinto, A.; Rodríguez, P. A systematic literature review on the use of machine learning in precision livestock farming. Comput. Electron. Agric. 2020, 179, 105826.

11. Arulmozhi, E.; Bhujel, A.; Moon, B.-E.; Kim, H.-T. The application of cameras in precision pig farming: An overview for swine-keeping professionals. Animals 2021, 11, 2343.

12. Krampe, C.; Serratosa, J.; Niemi, J.K.; Ingenbleek, P.T. Consumer perceptions of precision livestock farming—A qualitative study in three European countries. Animals 2021, 11, 1221.

13. Kopler, I.; Marchaim, U.; Tikász, I.E.; Opaliński, S.; Kokin, E.; Mallinger, K.; Neubauer, T.; Gunnarsson, S.; Soerensen, C.; Phillips, C.J. Farmers’ perspectives of the benefits and risks in precision livestock farming in the EU pig and poultry sectors. Animals 2023, 13, 2868.

14. Morrone, S.; Dimauro, C.; Gambella, F.; Cappai, M.G. Industry 4.0 and precision livestock farming (PLF): An up to date overview across animal productions. Sensors 2022, 22, 4319.

15. Vranken, E.; Berckmans, D. Precision livestock farming for pigs. Anim. Front. 2017, 7, 32–37.

16. Weary, D.M.; Ross, S.; Fraser, D. Vocalizations by isolated piglets: A reliable indicator of piglet need directed towards the sow. Appl. Anim. Behav. Sci. 1997, 53, 249–257.

17. Appleby, M.C.; Weary, D.M.; Taylor, A.A.; Illmann, G. Vocal communication in pigs: Who are nursing piglets screaming at? Ethology 1999, 105, 881–892.

18. Marx, G.; Horn, T.; Thielebein, J.; Knubel, B.; Von Borell, E. Analysis of pain-related vocalization in young pigs. J. Sound Vib. 2003, 266, 687–698.

19. Ferrari, S.; Silva, M.; Guarino, M.; Berckmans, D. Analysis of cough sounds for diagnosis of respiratory infections in intensive pig farming. Trans. ASABE 2008, 51, 1051–1055.

20. Cordeiro, A.F.d.S.; Nääs, I.d.A.; da Silva Leitão, F.; de Almeida, A.C.; de Moura, D.J. Use of vocalisation to identify sex, age, and distress in pig production. Biosyst. Eng. 2018, 173, 57–63.

21. Hillmann, E.; Mayer, C.; Schön, P.-C.; Puppe, B.; Schrader, L. Vocalisation of domestic pigs (Sus scrofa domestica) as an indicator for their adaptation towards ambient temperatures. Appl. Anim. Behav. Sci. 2004, 89, 195–206.

22. Guarino, M.; Jans, P.; Costa, A.; Aerts, J.-M.; Berckmans, D. Field test of algorithm for automatic cough detection in pig houses. Comput. Electron. Agric. 2008, 62, 22–28.

23. Yin, Y.; Tu, D.; Shen, W.; Bao, J. Recognition of sick pig cough sounds based on convolutional neural network in field situations. Inf. Process. Agric. 2021, 8, 369–379.

24. Shen, W.; Ji, N.; Yin, Y.; Dai, B.; Tu, D.; Sun, B.; Hou, H.; Kou, S.; Zhao, Y. Fusion of acoustic and deep features for pig cough sound recognition. Comput. Electron. Agric. 2022, 197, 106994.

25. Shen, W.; Tu, D.; Yin, Y.; Bao, J. A new fusion feature based on convolutional neural network for pig cough recognition in field situations. Inf. Process. Agric. 2021, 8, 573–580.

26. Wang, Y.; Li, S.; Zhang, H.; Liu, T. A lightweight CNN-based model for early warning in sow oestrus sound monitoring. Ecol. Inform. 2022, 72, 101863.

27. Nanni, L.; Maguolo, G.; Paci, M. Data augmentation approaches for improving animal audio classification. Ecol. Inform. 2020, 57, 101084.

28. Salamon, J.; Bello, J.P. Deep convolutional neural networks and data augmentation for environmental sound classification. IEEE Signal Process. Lett. 2017, 24, 279–283.

29. Rezapour Mashhadi, M.M.; Osei-Bonsu, K. Speech emotion recognition using machine learning techniques: Feature extraction and comparison of convolutional neural network and random forest. PLoS ONE 2023, 18, e0291500.

30. Mishra, S.P.; Warule, P.; Deb, S. Speech emotion recognition using MFCC-based entropy feature. Signal Image Video Process. 2024, 18, 153–161.

31. Das, A.K.; Naskar, R. A deep learning model for depression detection based on MFCC and CNN generated spectrogram features. Biomed. Signal Process. Control 2024, 90, 105898.

32. Zaman, K.; Sah, M.; Direkoglu, C.; Unoki, M. A Survey of Audio Classification Using Deep Learning. IEEE Access 2023, 11, 106620–106649.

33. Patnaik, S. Speech emotion recognition by using complex MFCC and deep sequential model. Multimed. Tools Appl. 2023, 82, 11897–11922.

34. Joshi, D.; Pareek, J.; Ambatkar, P. Comparative Study of Mfcc and Mel Spectrogram for Raga Classification Using CNN. Indian J. Sci. Technol. 2023, 16, 816–822.

35. Shah, A.; Kattel, M.; Nepal, A.; Shrestha, D. Chroma Feature Extraction. In Chroma Feature Extraction Using Fourier Transform; Kathmandu University: Kathmandu, Nepal, 2019.

36. Islam, R.; Tarique, M. A novel convolutional neural network based dysphonic voice detection algorithm using chromagram. Int. J. Electr. Comput. Eng. 2022, 12, 5511–5518.

37. Islam, R.; Abdel-Raheem, E.; Tarique, M. Early detection of COVID-19 patients using chromagram features of cough sound recordings with machine learning algorithms. In Proceedings of the 2021 International Conference on Microelectronics (ICM), Osaka, Japan, 19–21 March 2021; pp. 82–85.

38. Patni, H.; Jagtap, A.; Bhoyar, V.; Gupta, A. Speech emotion recognition using MFCC, GFCC, chromagram and RMSE features. In Proceedings of the 2021 8th International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 26–27 August 2021; pp. 892–897.

39. Chittaragi, N.B.; Koolagudi, S.G. Dialect identification using chroma-spectral shape features with ensemble technique. Comput. Speech Language 2021, 70, 101230.

40. Humphrey, E.J.; Bello, J.P.; LeCun, Y. Moving beyond feature design: Deep architectures and automatic feature learning in music informatics. In Proceedings of the International Society of Music Information Retrieval Conference (ISMIR), Porto, Portugal, 8–12 October 2012; pp. 403–408.

41. Yust, J. Generalized Tonnetze and Zeitnetze, and the topology of music concepts. J. Math. Music 2020, 14, 170–203.

42. Wang, Y.; Fagiani, F.E.; Ho, K.E.; Matson, E.T. A Feature Engineering Focused System for Acoustic UAV Payload Detection. In Proceedings of the ICAART (3), Online, 3–5 February 2022; pp. 470–475.

43. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

44. McFee, B.; Raffel, C.; Liang, D.; Ellis, D.P.; McVicar, M.; Battenberg, E.; Nieto, O. librosa: Audio and music signal analysis in python. In Proceedings of the 14th Python in Science Conference, Austin, TX, USA, 6–12 July 2015; pp. 18–25.

45. Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M. Tensorflow: Large-scale machine learning on heterogeneous distributed systems. arXiv 2016, arXiv:1603.04467.

46. Palanisamy, K.; Singhania, D.; Yao, A. Rethinking CNN models for audio classification. arXiv 2020, arXiv:2007.11154.

47. Doherty, H.G.; Burgueño, R.A.; Trommel, R.P.; Papanastasiou, V.; Harmanny, R.I. Attention-based deep learning networks for identification of human gait using radar micro-Doppler spectrograms. Int. J. Microw. Wirel. Technol. 2021, 13, 734–739.

48. Ghosal, D.; Kolekar, M.H. Music Genre Recognition Using Deep Neural Networks and Transfer Learning. In Proceedings of the Interspeech, Hyderabad, India, 2–6 September 2018; pp. 2087–2091.

49. Chung, Y.; Oh, S.; Lee, J.; Park, D.; Chang, H.-H.; Kim, S. Automatic detection and recognition of pig wasting diseases using sound data in audio surveillance systems. Sensors 2013, 13, 12929–12942.

50. Burgos, W. Gammatone and MFCC Features in Speaker Recognition. Ph.D. Thesis, Florida Institute of Technology, Melbourne, FL, USA, 2014.

51. Su, Y.; Zhang, K.; Wang, J.; Madani, K. Environment sound classification using a two-stream CNN based on decision-level fusion. Sensors 2019, 19, 1733.

52. Xing, Z.; Baik, E.; Jiao, Y.; Kulkarni, N.; Li, C.; Muralidhar, G.; Parandehgheibi, M.; Reed, E.; Singhal, A.; Xiao, F. Modeling of the latent embedding of music using deep neural network. arXiv 2017, arXiv:1705.05229.

| Dataset | Type | Growth Stage | Amount | Min dBFS | Max dBFS | Average dBFS |
|---|---|---|---|---|---|---|
| Nias | Vocalization | Growing fattening (30–110 kg) | 2000 | −36.56 | −6.98 | −24.86 |
| Nias | Non-Vocalization | Growing fattening (30–110 kg) | 2000 | −37.97 | −24.01 | −28.58 |
| Gimje | Vocalization | Weaning (5–30 kg) | 2000 | −35.74 | −9.03 | −26.07 |
| Gimje | Non-Vocalization | Weaning (5–30 kg) | 2000 | −39.26 | −21.08 | −29.30 |
| Jeongeup | Vocalization | Fattening (60–110 kg) | 2000 | −23.60 | −5.96 | −18.99 |
| Jeongeup | Non-Vocalization | Fattening (60–110 kg) | 2000 | −24.72 | −18.98 | −22.69 |

| Dataset | Methods | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|
| Nias | MFCC | 95.44 | 95.48 | 95.43 | 95.46 |
| Nias | Mel-spectrogram | 98.25 | 98.29 | 98.23 | 98.26 |
| Nias | Chroma | 91.41 | 91.51 | 91.39 | 91.45 |
| Nias | Tonnetz | 85.03 | 85.33 | 85.02 | 85.17 |
| Nias | Mixed-MMCT | 99.50 | 99.51 | 99.50 | 99.50 |
| Gimje | MFCC | 95.06 | 95.08 | 95.07 | 95.07 |
| Gimje | Mel-spectrogram | 98.78 | 98.79 | 98.78 | 98.79 |
| Gimje | Chroma | 87.72 | 87.86 | 87.77 | 87.81 |
| Gimje | Tonnetz | 80.78 | 81.11 | 80.81 | 80.96 |
| Gimje | Mixed-MMCT | 99.56 | 99.56 | 99.57 | 99.57 |
| Jeongeup | MFCC | 97.34 | 97.35 | 97.35 | 97.35 |
| Jeongeup | Mel-spectrogram | 98.87 | 98.87 | 98.88 | 98.87 |
| Jeongeup | Chroma | 93.44 | 93.59 | 93.42 | 93.51 |
| Jeongeup | Tonnetz | 79.66 | 80.29 | 79.62 | 79.95 |
| Jeongeup | Mixed-MMCT | 99.67 | 99.65 | 99.66 | 99.66 |

| Training Set | Test Set | Methods | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|---|
| NGdb (Nias + Gimje) | Jeongeup | MFCC | 92.45 | 93.18 | 92.45 | 92.81 |
| NGdb (Nias + Gimje) | Jeongeup | Mel-spectrogram | 86.98 | 89.60 | 86.98 | 88.27 |
| NGdb (Nias + Gimje) | Jeongeup | Chroma | 54.25 | 72.16 | 54.25 | 61.94 |
| NGdb (Nias + Gimje) | Jeongeup | Tonnetz | 59.10 | 74.62 | 59.10 | 65.96 |
| NGdb (Nias + Gimje) | Jeongeup | Mixed-MMCT | 90.05 | 91.70 | 90.05 | 90.87 |
| NJdb (Nias + Jeongeup) | Gimje | MFCC | 80.72 | 82.58 | 80.73 | 81.64 |
| NJdb (Nias + Jeongeup) | Gimje | Mel-spectrogram | 96.35 | 96.50 | 96.35 | 96.42 |
| NJdb (Nias + Jeongeup) | Gimje | Chroma | 51.85 | 68.26 | 51.85 | 58.93 |
| NJdb (Nias + Jeongeup) | Gimje | Tonnetz | 65.40 | 65.84 | 65.40 | 65.62 |
| NJdb (Nias + Jeongeup) | Gimje | Mixed-MMCT | 99.22 | 99.23 | 99.23 | 99.23 |
| GJdb (Gimje + Jeongeup) | Nias | MFCC | 68.00 | 79.66 | 68.00 | 73.37 |
| GJdb (Gimje + Jeongeup) | Nias | Mel-spectrogram | 94.62 | 95.08 | 94.63 | 94.85 |
| GJdb (Gimje + Jeongeup) | Nias | Chroma | 60.10 | 70.19 | 60.10 | 64.75 |
| GJdb (Gimje + Jeongeup) | Nias | Tonnetz | 63.30 | 78.65 | 63.30 | 70.15 |
| GJdb (Gimje + Jeongeup) | Nias | Mixed-MMCT | 97.75 | 97.83 | 97.75 | 97.79 |

| Methods | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| MFCC | 80.39 | 85.14 | 80.39 | 82.70 |
| Mel-spectrogram | 92.65 | 93.73 | 92.65 | 93.19 |
| Chroma | 55.40 | 70.20 | 55.40 | 61.93 |
| Tonnetz | 62.60 | 73.04 | 62.60 | 67.42 |
| Mixed-MMCT | 95.67 | 96.25 | 95.68 | 95.96 |
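The accuracy column of the table above appears to be the per-method mean of the three cross-farm runs (NGdb to Jeongeup, NJdb to Gimje, GJdb to Nias) listed in the preceding table. The source text does not state this explicitly, so the following is a small arithmetic check under that assumption; the variable names are illustrative.

```python
from statistics import mean

# Accuracy (%) of each method in the three cross-farm runs, in the order
# NGdb -> Jeongeup, NJdb -> Gimje, GJdb -> Nias (values copied from the
# robustness table).
cross_farm_accuracy = {
    "MFCC": [92.45, 80.72, 68.00],
    "Mel-spectrogram": [86.98, 96.35, 94.62],
    "Chroma": [54.25, 51.85, 60.10],
    "Tonnetz": [59.10, 65.40, 63.30],
    "Mixed-MMCT": [90.05, 99.22, 97.75],
}

# Per-method mean accuracy across the three held-out farms.
mean_accuracy = {
    method: round(mean(values), 2)
    for method, values in cross_farm_accuracy.items()
}

for method, value in mean_accuracy.items():
    print(f"{method}: {value:.2f}")
```

Under this reading, Mixed-MMCT has the highest mean accuracy on unseen farms, while Chroma and Tonnetz alone stay close to chance level for a two-class problem.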

| Methods | GFLOPS | Trainable Parameters (M) | Inference Time (s) |
|---|---|---|---|
| MFCC | 0.145 | 4.36 | 0.031 |
| Mel-spectrogram | 0.939 | 33.03 | 0.039 |
| Chroma | 0.086 | 2.30 | 0.023 |
| Tonnetz | 0.086 | 2.30 | 0.023 |
| Mixed-MMCT | 1.21 | 41.22 | 0.046 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pann, V.; Kwon, K.-s.; Kim, B.; Jang, D.-H.; Kim, J.-B. DCNN for Pig Vocalization and Non-Vocalization Classification: Evaluate Model Robustness with New Data. Animals 2024, 14, 2029. https://doi.org/10.3390/ani14142029
