Simple Summary

Managing fish feeding well is important for both making fish farming better and keeping aquatic environments healthy. By looking at the sounds fish make, this study suggests a new way to learn about how they eat. We turn these sounds into pictures and use advanced computer methods to figure out the different ways people eat. Our method uses a strong deep learning model that can correctly group the eating habits of a certain type of fish, which helps us figure out how much and how often they eat. With a 97% success rate, this method shows a lot of promise for better running fish farms and protecting marine ecosystems. In the future, researchers might be able to add more types of data to this method, which could give us even more information about how to farm fish sustainably and manage ecosystems.

Abstract

Understanding the feeding dynamics of aquatic animals is crucial for aquaculture optimization and ecosystem management. This paper proposes a novel framework for analyzing fish feeding behavior based on a fusion of spectrogram-extracted features and deep learning architecture. Raw audio waveforms are first transformed into Log Mel Spectrograms, and a fusion of features such as the Discrete Wavelet Transform, the Gabor filter, the Local Binary Pattern, and the Laplacian High Pass Filter, followed by a well-adapted deep model, is proposed to capture crucial spectral and spectral information that can help distinguish between the various forms of fish feeding behavior. The Involutional Neural Network (INN)-based deep learning model is used for classification, achieving an accuracy of up to 97% across various temporal segments. The proposed methodology is shown to be effective in accurately classifying the feeding intensities of Oplegnathus punctatus, enabling insights pertinent to aquaculture enhancement and ecosystem management. Future work may include additional feature extraction modalities and multi-modal data integration to further our understanding and contribute towards the sustainable management of marine resources.

1. Introduction

The Oplegnathus punctatus has high economic value because of its fast growth rate, low breeding cost, high survival rate, and high nutritional value [1]. This species is of significant commercial value in aquaculture, with unique feeding behaviors that make it a compelling study subject. It also serves as a model organism for optimizing feeding aquaculture practices. In recent years, it has become an economically important fish species for farming in China. Aquaculture encompasses more than just fish, including other aquatic organisms, such as shellfish and crustaceans, which play an essential role in addressing the ever-increasing global demand for seafood [2]. The optimal feeding of marine organisms in aquacultures plays a significant role in nutritional management and the growth rate [3]. The challenge of optimally feeding aquatic organisms arises from their varied metabolic demands and the difficulties in feeding various species a sufficient amount of feed to avoid underfeeding or overfeeding [4]. Underfeeding leads to slow growth and weakens their immunity against diseases, leading to poor health and a reduced growth rate [5]. Malnutrition and failures to thrive result in reduced yields and the growth of commercial aquaculture systems. In contrast, overfeeding leads to low feed efficiency, high concentrations of organic matter, and the proliferation of harmful bacteria that decrease water quality [5]. All of these factors reduce fish health and diminish the sustainability of aquaculture commercial systems [6].

Deep learning models have already been used in environmental sound classification [7,8], image classification [9,10], signal classification [11,12], and many medical applications [13,14,15]. Deep learning techniques have been used in aquaculture to address the problems of overfeeding and underfeeding, while simultaneously creating an efficient feeding system [16]. The ability of deep learning models to identify complex patterns from vast datasets presents an opportunity to improve aquaculture farms.

Traditionally, aquaculture utilized manual observation approaches, timed feeding, and nutritionally balanced feeds [17]. However, these techniques are not flexible enough to be made sustainable and result in underfeeding or overfeeding [18]. Given these impacts, it is evident that controlling fish growth and well-being by investing in intelligent feeding is a necessary practice to maintain profitable and sustainable aquaculture. The economic relevance and flexibility of O. punctatus make it a suitable species for studying feeding responses and optimizing growth in aquaculture. Our research intends to apply its unique properties to develop new strategies for enhancing feeding effectiveness and promoting growth in aquaculture environments. We have utilized deep learning to recognize feeding responses, i.e., observable behaviors associated with feeding activities, such as surface movements, acoustic signals, or visual aids, and our results demonstrate the potential of this approach in optimizing fish growth, feeding efficiency, and overall well-being in commercial aquaculture environments. This paper examines how deep learning can enhance the productivity, profitability, and well-being of O. punctatus in controlled aquaculture environments with a low computational architecture while striving for high performance.

2. Literature Review

The growth, health, and sustainability of aquaculture depend on fish-feeding behavior [16,19]. Researchers can improve fish growth by studying their feeding behavior, while reducing waste and environmental impact. Various studies have applied deep learning techniques to investigate fish feeding habits. Du et al. [16] developed a novel MobileNetV3-SBSC model for analyzing fish feeding behaviors by utilizing a Mel spectrogram—which visually depicts audio frequencies mapped to the human-perceptible Mel scale—and its attributes to categorize three levels of feeding intensity: vigorous, moderate, and none. They achieved 85.9% accuracy, representing substantial progress toward using deep learning to decode aquaculture-related actions. Cui et al. [19] leveraged Mel Spectrogram features and a convolutional neural network to classify fish feeding behaviors, obtaining a mean average precision of 74%. This measure considers both precision and recall rather than mere accuracy. Ubina et al. [20] assessed fish feeding intensity and employed a three-dimensional convolutional neural network alongside Optical Flow Frames, which visually portray the motion of objects between successive images or video frames by calculating the direction and magnitude of pixel or regional movement. Their model achieved an accuracy of 95%, demonstrating the utilization of advanced deep learning architectures in distinguishing highly complex behaviors in aquaculture systems.

Zhou et al. [21] utilized a CNN with computer vision techniques for fish feeding behavior analysis. CNN was used to detect saliency in images in real-time. A multi-crop voting strategy was used to classify feeding states in Nile tilapia. Their approach was able to classify feeding behaviors with an accuracy of 90%, showing the continued importance of integrating traditional computer vision methods with a deep learning approach to create systems that can robustly analyze behavior.

Similarly, the authors of the LC-GhostNet model, Du et al. [22], presented another fish feeding behavior analysis system, which introduces a new Feature Fusion Strategy, i.e., a technique that integrates multiple feature sets to create a more comprehensive data representation. Their model achieved an accuracy of 97.941%. They represent a notable improvement in fish feeding behavior analysis as their results show the potential of more sophisticated deep learning architectures for precise behavioral analyses in aquaculture. Zhang et al. [23] proposed the MSIF-MobileNetV3 model for analyzing fish feeding behavior through multi-scale information fusion (MSIF). The authors obtained an accuracy of 96.4%. This study reflects a recent trend of incorporating complex attention mechanisms into deep learning architectures for behavioral analysis tasks. Zhang et al. [24] created a MobileNetV2-SENet-based accounting method to identify feeding behavior in fish farms. The model achieved an accuracy of 97.76%. The authors demonstrated the feasibility of using pre-trained models and attention mechanisms to accurately classify complex behaviors in aquaculture. Yang et al. [25] developed a Dual Attention Network using Efficientnet-B2 to analyze short-term feeding behavior in fish farms. Their model achieved an accuracy of 89.56%. Although this accuracy is lower than in other similar studies, the authors argued that integrating attention mechanisms and efficient network architectures was shown to be critical for precise behavioral tasks.

Feng et al. [26] developed a lightweight 3D ResNet-GloRe network to classify fish feeding intensity in real-time video streams. Their model outperforms traditional 3D ResNet networks, achieving 92.68% classification accuracy. It also showcased the feasibility of incorporating relational reasoning modules for precise behavioral examination in aquaculture video streams. In their investigation, Zeng et al. [27] utilized acoustic signals and an improved Swin Transformer model to research fish feeding behaviors. Their Audio Spectrum Swin Transformer model, or ASST, achieved an accuracy of 96.16%, highlighting the effectiveness of attention mechanisms and spectrogram-based qualities for significantly accurate behavioral analysis in aquaculture. Meanwhile, Kong et al. [28] established a recurrent system using energetic learning to investigate fish feeding states. By combining the VGG16 network with lively learning strategies, their model accomplished 98% accuracy, requiring few labeled examples typically necessary for supervised coaching. This highlights the potential of lively learning approaches for productively categorizing behaviors in aquaculture with minimal supervised training data. Hu et al. [29] developed a fish feeding system using computer vision techniques. Instead of relying on underwater image analysis, their system utilized deep learning models to recognize the size of water waves caused by fish feeding, achieving an accuracy of 93.2% and providing an alternative solution for real-world aquacultures. Zheng et al. [30] focused on analyzing golden pompano school feeding behavior using a spatiotemporal analysis of a STAN (spectral Temporal Attention Network) to an aquaculture feeding behavior in aquaculture. The model achieved test results that outperformed all other proposed alternatives and subsequently demonstrated the efficiency of combining both temporal and spectral features to conduct highly precise behavioral analysis in fish in aquaculture tanks. Jayasundara et al. [31] introduced deep learning automatic fish grading using image-based quality assessment. The FishNET-S and FishNET-T model intelligently assessed quality in images for a series of grading use cases, reaching model testing accuracies of up to 84.1%. The analysis of these studies are summarized in Table 1.

Table 1.

Analysis of earlier studies on fish feeding behavior using deep learning.

3. Motivation and Significant Contributions

While various deep-learning architectures have been adopted for fish behavior analysis, we see a gap in the use of lightweight models. While many lightweight models like MobileNet demonstrate high accuracy, they may not be optimized for real-time analysis in resource-constrained environments. In aquaculture, edge devices often have limited computational power and memory, necessitating models that are not only lightweight but also maintain high accuracy. Furthermore, traditional lightweight models may not be tailored to the unique demands of fish-feeding behavior analysis, where the data are derived from acoustic signals rather than conventional image-based sources. This requires specialized feature extraction and handling, which many existing lightweight networks may not address adequately. Many of the existing models incur high computational costs and inference times, rendering them impractical for real-time deployment in aquaculture, while some studies adopt feature extraction techniques, and there is a lack of effort to explore state-of-the-art feature extraction such as how to perform feature extraction tailored specifically for audio-based analysis (e.g., Log Mel Spectrogram extraction). Many of the existing methods are likely not to satisfy the fish farmers’ requirement of low computation costs and inference times, especially if the objective is to deploy the model in aquaculture habitats. There is a gap in creating models specifically optimized for efficiency without compromising accuracy.

In this paper, a novel Involutional Neural Network (INN) [32] architecture was developed for fish behavior analysis in aquaculture. Unlike traditional Convolutional Neural Networks (CNNs), which use fixed kernels to extract features, INNs employ learnable filters that adapt based on context and allow for more flexible feature extraction. This adaptability makes INNs efficient in terms of computational resources and ideal for deployment in environments with limited capacity. Compared to transformers, which focus on self-attention mechanisms for capturing long-range dependencies, INNs maintain a focus on local features with a simpler structure, resulting in a more lightweight architecture. The proposed architecture is of a reduced size and exhibits significantly lower computational costs and faster inference times compared to traditional models for this application. Therefore, due to simplicity and speed, the developed architecture aims to tackle the identified research gaps by proposing Involutional Neural Network (INN) architecture tailored for reduced size and computational efficiency.

The significant contributions of this work are as follows:

- The utilization of Involutional Neural Network architecture for fish behavior analysis.

- We employ Log Mel Spectrograms converted from audio waveforms and subsequent feature extraction methods from Log Mel Spectrograms.

- Our proposed method prioritizes efficiency without compromising accuracy, making it suitable for real-time deployment in aquaculture environments.

4. Materials and Methods

4.1. Experimental Setup and Materials

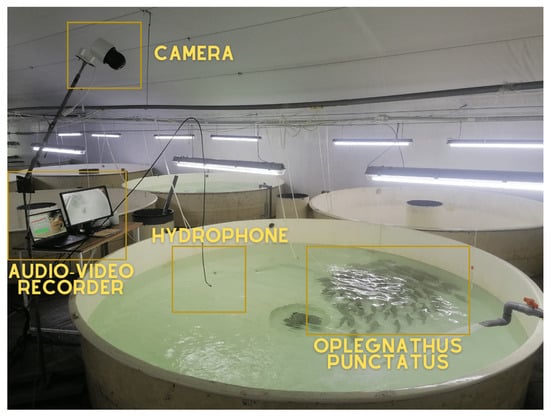

Our experimental facility was established within the cutting-edge labs of Mingbo Aquatic Co., Ltd., situated in Yantai, Shandong Province, China. Figure 1 depicts the comprehensive apparatus employed in our investigation. This system integrated a Recirculating Aquaculture System (RAS), a battery of video capture technologies, and leading-edge acoustic data collection instruments to ensure thorough information aggregation. The RAS included culture tanks measuring 330 cm in diameter and 60 cm in depth. Within this system, environmental parameters were tightly regulated, such as maintaining temperature at precisely 26 ± 1 °C, dissolved oxygen levels exceeding 5.5 mg/L, a pH equilibrium of 7.2 ± 0.5, nitrate levels under 0.5 mg/L, and ammonia concentrations capped at ≤0.8 mg/L. The RAS was outfitted with an aeration unit, lighting fixtures, and a sophisticated water purification system to provide optimal circumstances for the O. punctatus.

Figure 1.

The structure of the experimental system of recirculating aquaculture.

As shown in Figure 1, the experimental setup accommodated five culture tanks, each dedicated to rearing varying numbers of fish—5, 15, 40, 70, and 100 individuals. This diversity in population size permitted the investigation of fish feeding practices under assorted conditions. Our video acquisition system employed a Hikvision (City Of Industry, CA, USA) color camera (model: DS-2SC3Q140MY-TE) with 4MP resolution and a frame rate of 25 fps. This assemblage was augmented with an IEEE802.3af standard POE switch (model: DS-3E0105P-E/M) and a Hikvision video recorder (model: DS-7104 N-F1) to guarantee high-quality video material throughout the experiment.

Concurrently, the acoustic data collection system incorporated an omnidirectional LST-DH01 digital hydrophone, exhibiting an operating frequency range of 1 Hz–100 kHz, with a sensitivity of 209 ± 1.5 dB. This hydrophone offered horizontal and vertical directivity of ±2 dB @ 100 kHz, 360° and a maximum sampling rate of 256KSPS. The auditory component of our information aggregation was further strengthened by a Legion Y7000 computer, equipped with an Intel Core i7–10750-H processor and 16 GB of RAM.

During the experiment, the O. punctatus were nourished with Santong Bio-engineering (Weifang, China) Co., Ltd.’s Sea Boy UFO series feed. The nutritional composition of this feed comprised a crude protein content of ≥48.0%, crude fat of ≥9.0%, lysine of ≥2.5%, and total phosphorus of ≥1.5%. The pellet size was standardized at 5 mm. Throughout the experiment, the feeding was was carried out in the exact location to maintain a constant distance from both the camera and the hydrophone. It was provided by experienced aquaculture technicians. Before the experiment, around 230 fish with an average mass of 200 ± 50 g underwent a month-long acclimatization period within the experimental system.

4.1.1. Audio and Video Acquisition

Video data acquisition was executed precisely using the Hikvision camera positioned above the rearing tank, capturing comprehensive scene information. Operating at a rate of 25 frames per second, the camera recorded images, subsequently saving them to the video recorder. This process ensured a detailed temporal record of the fish within the rearing tank.

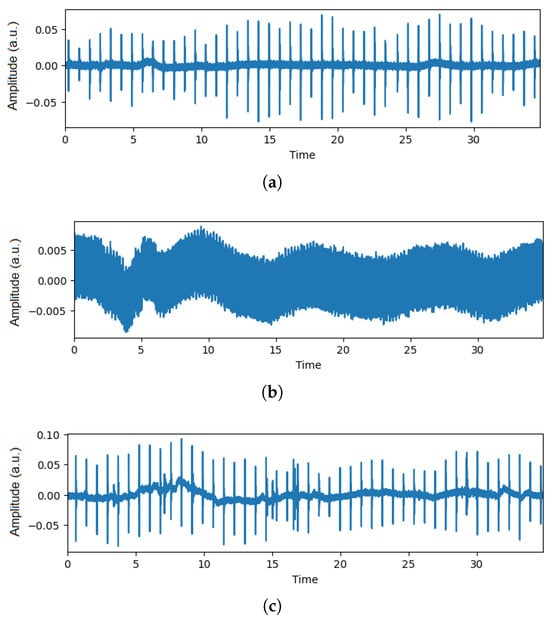

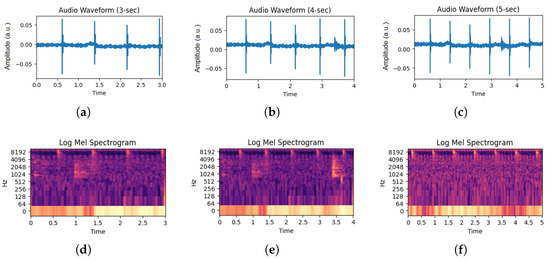

Simultaneously, audio data acquisition focused on capturing the sounds associated with fish feeding. The recording process transpired at a sample rate of 256 kHz. Fish were fed twice daily at 7:30 a.m. and 3:30 p.m., adhering to established guidelines for feeding in realistic aquaculture production environments. The feeding volume equated to 1.5% of the fish’s weight. Audio acquisition commenced 5 min before each feeding session and extended 5 min post-feeding, ensuring a comprehensive audio dataset that encapsulated the entire feeding event. The start and stop times for the feeding events were based on the fish’s activity level, indicating when feeding behavior began and ended. Figure 2 shows the audio waveforms captured during the different feeding intensities. It can be seen that the maximum amplitude goes up to 0.05 a.u. for the ‘None’ class, 0.007 a.u. for the ’Medium’ class, and 0.30 a.u. for the ‘Strong’ class. To illustrate the behavior of each class in acoustic signals, the y-axis was adjusted based on the maximum amplitude.

Figure 2.

Audio waveforms captured from aquaculture: (a) Audio of None class, (b) Audio of Medium class, and (c) Audio of Strong class.

4.1.2. Audio and Video Preprocessing

The collected dataset comprised 160 audio data samples gathered over 16 days during active fish feeding. Sophisticated tools such as Hikvision’s VSPlayer for video handling and Adobe Audition for audio handling were employed to synchronize the audio streams with corresponding video clips. This effort was undertaken to ensure an accurate assessment of feeding dynamics.

It was important to confirm that the audio streams were synchronized with matching video clips to guarantee precise timeline alignment. We were able to build a reliable foundation for examining feeding behavior by using this process, allowing us to more precisely identify instances of feeding and categorize them with enhanced precision.

Following the established feeding intensity standards in aquaculture [27] and drawing on expertise from experienced aquaculture technicians, the feeding intensity classifications were decided. The audio data were separated into three groups depending on the strength of feeding: “strong,” “moderate,” and “none.” The description of these classifications is displayed in Table 2.

Table 2.

The classification standards for fish feeding activity.

Using the synchronized audio, audio segments matching each feeding intensity classification (which included “strong”, “moderate”, and “none”) were extracted. These segments were arranged relating to the strength and timeline that corresponded to where they were categorized in the video. Through employing this extraction process, it was confirmed that every single audio segment precisely represented the particular level of feeding strength.

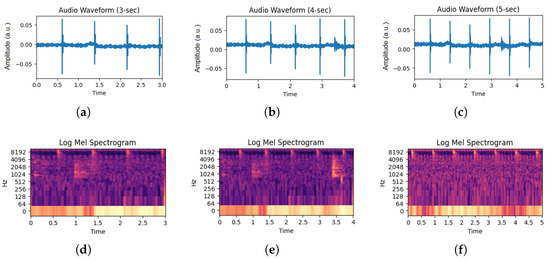

To consider the variations in the strength of feeding over time, each audio clip was subsequently divided into periods of three seconds, four seconds, and five seconds. Through the process of timeline segmentation, a wide range of feeding behavior patterns were obtained, resulting in the establishment of a comprehensive database suitable for training and evaluating deep learning models. The amplitude of the signals from all three segmented classes were normalized to ensure consistency in signal magnitude representation. This dataset was created by selecting training and testing samples from each time category using a random selection of audio clips. The 80–20 format was used to select the samples. For three-second audio clips, 8055 audio clips were used for training and 1151 audio clips were used for testing. Similarly, 8123 audio clips were used for training and 1161 audio clips for testing for four-seconds audio clips. Additionally, 8234 audio clips were used for training and 1177 audio clips were used for testing for five-second audio clips.

4.2. Proposed Approach

In the pursuit of exploring the complex patterns within the audio data, an important step involved the transformation of raw audio waveforms into Log Mel Spectrogram images. This process holds significance in terms of (a) capturing the overall complex frequency characteristics of the fish feeding sounds, and (b) the representation of one-dimensional data to two-dimensional data as spectrogram images.

4.2.1. Log Mel Spectrogram

A Mel spectrogram is a representation of the audio signal in the Mel frequency domain, offering a perceptually relevant visualization of the signal’s frequency components [7]. It plays a significant role in speech and audio processing, providing a more human-centric representation of sound frequencies [8].

The transformation from raw audio waveforms to Mel spectrograms involves several steps. The Mel scale, a perceptual scale of pitches approximating the human ear’s response to different frequencies, is utilized to convert the linear frequency scale into a logarithmic Mel scale. This conversion is achieved through the following formula [12]:

Here, represents the linear frequency in hertz.

Following the Mel scale conversion, the audio signal is divided into small overlapping frames, and a Fast Fourier Transform (FFT) is applied to each frame to obtain the magnitude spectrum. The resulting spectrum is then passed through a filter bank designed according to the Mel scale. This process effectively captures the energy distribution across several frequency bands in a more perceptually relevant manner.

The Log Mel Spectrogram equation can be expressed as [33]:

where:

- is the Mel spectrogram magnitude at frequency f and time t,

- represents the filter bank response for the m-th Mel filter at frequency f in Mel filter bank ,

- denotes the magnitude spectrum of the audio signal in the m-th Mel filter at time t.

The Mel filter bank [34] is a collection of bandpass filters that linearly transforms the frequencies into the logarithmic Mel scale. Each filter in the bank has a different frequency range, and the response is the sum of applying these filters to the signal; this shows how much energy or power is present in each frequency band. Mel filter banks can be computed from Equation (3):

where is linear frequency and are center frequencies on the Mel-scale.

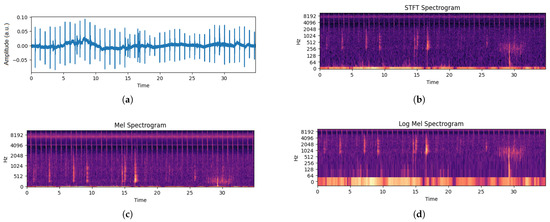

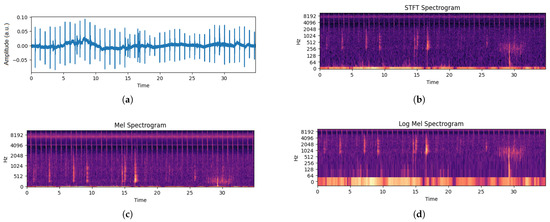

The Log Mel Spectrogram (LMS) encapsulates frequency information, providing a foundation for subsequent feature extraction and model training. The LMS allows for a representation of the acoustic complexities and classification of the associated feeding intensities in O. punctatus aquaculture. Figure 3 depicts the process of converting an audio sample into an LMS spectrogram image. Figure 4 illustrates samples from each class in Log Mel Spectrogram images. Both of these figures use magma colormap.

The next step involves extracting distinctive features from the LMS. The selected features are the Discrete Wavelet Transform (DWT) [35], Gabor filter [36], Local Binary Pattern (LBP) [37], and Laplacian high-pass filter [38]. The DWT provides a comprehensive multi-scale analysis for identifying patterns across different frequency bands, thus resulting in differentiating feeding intensities [39]. Gabor filters enhance the local frequency features, aiding in the detection of specific modulation frequencies that correspond to different feeding behaviors [40]. The LBP captures local texture information, which helps in recognizing subtle differences in the spectrogram, making it valuable for detailed acoustic analysis [41]. The Laplacian high-pass filter highlights significant transitions in the frequency content, enhancing the visibility of important features for fish-feeding behavior classification [42]. A combined image was created by the fusion of all of these features.

Figure 3.

Conversion of audio waveforms to Log Mel Spectrograms (using magma colormap): (a) audio waveform, (b) corresponding short-term Fourier Transform, (c) Mel spectrogram, and (d) Log Mel Spectrogram.

Figure 4.

Segmentation of audio waveforms and their corresponding Log Mel Spectrograms (using magma colormap): (a) Audio of None class, (b) Audio of Medium class, (c) Audio of Strong class, (d) Log Mel Spectrogram of None class, (e) Log Mel Spectrogram of Medium class, and (f) Log Mel Spectrogram of Strong class.

4.2.2. Discrete Wavelet Transform (DWT)

The Discrete Wavelet Transform (DWT) [35] represents a powerful tool for extracting both time and frequency information from signals. In the context of the LMS, the DWT can decompose the signal into different frequency components, allowing for the analysis of signal patterns at various scales [39]. This multiscale analysis is crucial for identifying unique features in acoustic signals, which is useful in classification tasks. Applied to the Mel spectrogram , the DWT decomposes the image into Approximate and Detail coefficients across different scales. The mathematical expression for the DWT can be defined as:

Here, and represent the scale and wavelet functions, respectively.

The 2D Discrete Wavelet Transform (DWT) is applied to the Mel spectrogram to decompose it into approximation and detail coefficients. The transformation is performed in both the frequency f and time t dimensions. The 2D DWT coefficients and are computed using:

Here, and are the scaling and wavelet functions, respectively. At level 3 of the decomposition, the detail coefficients are set to zero, effectively removing the high-frequency components and denoising the signal. The denoised coefficients are denoted as . The denoised coefficients, along with the approximation coefficients , are used to reconstruct the synthesized image using the Inverse 2D DWT (IDWT) operation and are given by:

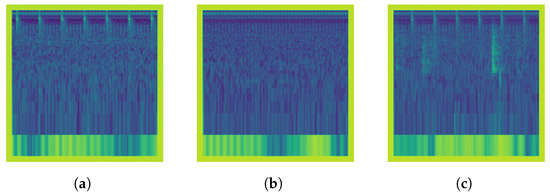

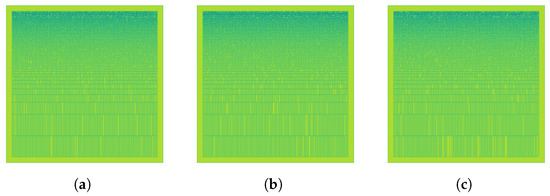

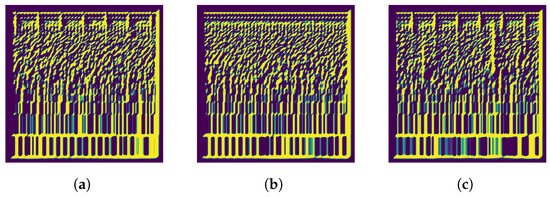

This reconstructed denoised image represents the Mel spectrogram with the high-frequency noise removed, enhancing the clarity of the underlying features. The samples from each DWT extracted features are illustrated in Figure 5. The process is expressed in Algorithm 1.

| Algorithm 1 Denoising the Mel Spectrogram image using DWT |

Input: Mel spectrogram Apply 2D-DWT to and obtain coefficients and Level 3 Decomposition: for to do for k in the range of coefficients at level 3 do ▹ Remove high-frequency components end for end for Reconstruct denoised coefficients: Reconstruct synthesized image: Output: Denoised Mel spectrogram |

4.2.3. Gabor Filter

Gabor filters can extract features related to the modulation frequencies of the signal. This technique is effective in enhancing the local frequency features, thus aiding in the differentiation of complex acoustic patterns [40]. The Gabor filter [36] is defined in the spectral domain as:

Here, and represent the rotated and scaled coordinates, is the Wavelength of the sinusoidal factor, is the orientation of the Gabor filter, is the phase offse, is the standard deviation of the Gaussian envelope, and is the spectral aspect ratio. The application of the Gabor filter to the Mel spectrogram is and is expressed as:

And the application of the Gabor filter to the Mel spectrogram can be represented as:

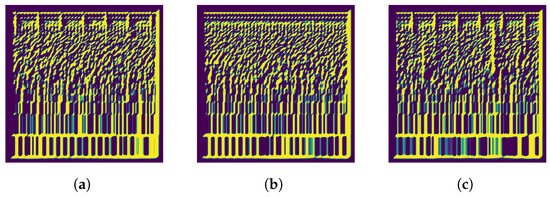

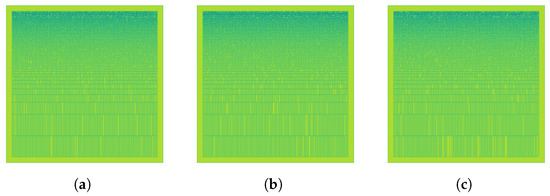

This operation will yield the Mel spectrogram after being filtered by the Gabor filter with the specified parameters. In this study, we set the parameters as: , , , , and . These parameters were selected after a series of experiments in which we tested a range of values to determine the optimal settings. We aimed to identify parameter values that would provide distinct differences among the three feeding intensity classes: “Strong”, “Medium”, and “None” as illsutarated in Figure 6.

4.2.4. Local Binary Pattern

The Local Binary Pattern (LBP) [37] operator captures local patterns within the Mel spectrogram. The LBP can be used to capture local texture patterns, which are critical for recognizing subtle differences in acoustic signals [41]. The application of the LBP to the Mel spectrogram image is expressed as:

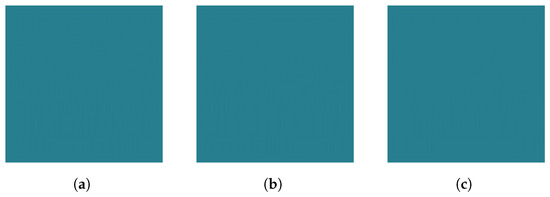

Here, represents the intensity value of the neighboring pixel, and is the intensity of the center pixel at coordinates . Samples from each class are presented in Figure 7.

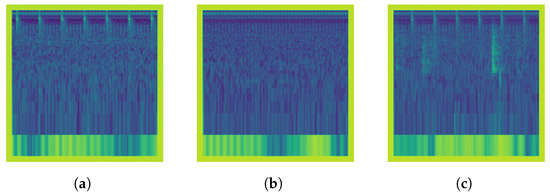

4.2.5. Laplacian High Pass Filter

The Laplacian High Pass Filter (LHPF) [38] is applied to the Mel spectrogram image to enhance fine details and edges. When applied to the LMS, it highlights the rapid changes in the frequency content, making it easier to identify significant transitions in the signal. This is particularly useful for enhancing the contrast of the LMS image and emphasizing the important features for classification tasks [42]. The operation of the Laplacian High Pass Filter involves subtracting a smoothed version of the image from the original image and can be expressed as:

Here, the is the Mel spectrogram after processing; is the original Mel spectrogram; is the 2D Gaussian kernel with standard deviation ; and and represent the second partial derivatives with respect to f and t, respectively. Samples from each class are illustrated in Figure 8.

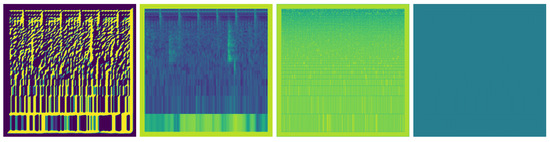

4.2.6. Combination of Extracted Features

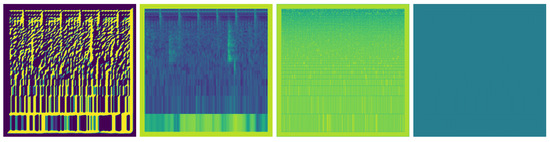

Finally, the images resulting from each feature extraction method are horizontally concatenated to form a composite image as illustrated in Figure 9. This combination of distinctive features presents a holistic representation, capturing complex details of the O. punctatus feeding intensity in a unified format. This feature set, which contains both temporal and spectral information for comprehending fish feeding dynamics, is used as the input for later model training and analysis. The process is illustrated in Algorithm 2.

| Algorithm 2 Preprocessing of the Log Mel Spectrogram image and resulting feature image |

Input: Mel spectrogram image Output: Concatenated processed image Step 1: Denoising with DWT Step 2: Filtering with Gabor filter Step 3: Operation with LBP Step 4: Filtering with Laplacian High Pass Filter (LHPF) Step 5: Concatenation Return |

Figure 5.

Discrete wavelet transform extracted from the corresponding Log Mel Spectrogram Images: (a) None, (b) Medium, and (c) Strong.

Figure 6.

Gabor filter applied on Log Mel Spectrogram Images of each class: (a) None, (b) Medium, and (c) Strong.

Figure 7.

Local Binary Pattern extracted from Log Mel Spectrogram Images of each class: (a) None, (b) Medium, and (c) Strong.

Figure 8.

Laplacian High Pass filter applied on Log Mel Spectrogram Images of each class: (a) None, (b) Medium, and (c) Strong.

Figure 9.

Combined Features extracted from LMS for a sample of ’Strong’ class as an input to the model.

4.3. Network Architecture

In our experiments, we used an Involutional Neural Network (INN) with self-attention. The INN is a novel architecture that utilizes involution layers to effectively capture both local and global features for the purpose of classification. This architecture has been purposefully designed to have a small number of parameters in order to make it deployable on edge devices that have a limited number of computational resources.

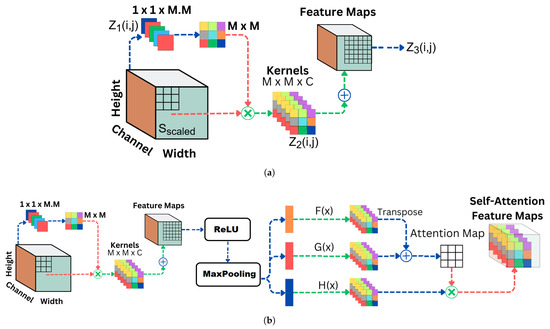

4.3.1. Involutional Layer with Self-Attention

One of the key components that contributes to the perception of the high-performance of the involution network is the mechanism of the involution layer, which replaces the traditional convolutional layers [32]. Through the process of learning dynamic receptive fields for each output vector position, the involution layer becomes more flexible, allowing it to adapt to a variety of contexts for feature extraction. Convolutional neural networks are used to generate feature maps by applying a set of learnable filters to the input image. The operation of the involution layer on the input feature , where f represents the spectral frequency and t represents the time, is presented in the following manner:

Here, represents the output at position in the feature map., denotes the input image values at position , is the weight corresponding to the convolutional filter at position , and b is the bias term. This convolutional operation is applied across the spectral dimensions of the input image. The parameters include the filter size and the bias term b. The activation functions ReLU () are applied after the convolution to introduce non-linearity.

To further enhance the network’s capability to capture long-range dependencies, a self-attention mechanism is incorporated. This mechanism enables the model to weigh the importance of different spectral positions in the input, allowing it to focus on relevant regions while suppressing irrelevant information. The self-attention mechanism is mathematically expressed as:

Here, , , and represent the query, key, and value matrices, respectively, and is the dimensionality of the critical vectors.

4.3.2. Involutional Neural Network Architecture

The overall architecture of the INN with self-attention involves stacking multiple involution layers with intermittent self-attention modules. The images were fed to the INN with a size of . A rescaling layer of range was also introduced. In INN, the Adam optimizer was used, the total number of epochs was 50, the batch size was 32 uniformly, the Rectified Linear Unit (ReLU) activation function was used for the first four layers, and the Softmax activation function was utilized for the final layer. The general architecture of INN implementation is depicted in Figure 10. The parameters of the filters, the strided max-pooling function, and the receptive field are described in the following:

- Layer 1: The first layer of the model consists of involution operations, as shown in Figure 10a. It has three output channels and uses a dynamic kernel of size . The stride is set to 1, and the reduction ratio is 2. Following the involution operation, a max-pooling layer with a pool size of is applied, and ReLU activation is used.

- Layer 2: Similar to the first layer, the second layer also uses involution operations with similar parameters. It uses a dynamic kernel and has three output channels. The reduction ratio is 2, and the stride is set to 1. ReLU activation comes after the involution operation, and a max-pooling layer with a pool size of is applied.

- Layer 3: The third layer continues with involution operations. It has three output channels and uses a dynamic kernel of size . The stride is set to 1, and the reduction ratio is 2. After the involution operation, a max-pooling layer with a pool size of is applied, followed by ReLU activation.

- Layer 4: The fourth layer reshapes the output of the third layer into a format suitable for further processing. It reshapes the output into ‘(height × width, 3)’.

- Layer 5: The fifth layer applies a self-attention mechanism to capture dependencies between different patches of the feature map as illustrated in Figure 10b. It uses an attention width of 15 and a sigmoid activation function.

- Layer 6: Following the self-attention layer, the output is flattened to prepare it for the fully connected layers.

- Layer 7: The sixth layer consists of a fully connected dense layer with 32 hidden units and ReLU activation.

- Layer 8: The final layer is another fully connected dense layer with three output units, corresponding to the number of classes in the dataset. It does not have an explicit activation function as it is followed by the softmax activation applied during model compilation for probabilistic classification.

Figure 10.

Involutional Neural Network: (a) involution layer, (b) involution layer with self-attention.

The summary of the proposed model is given in Table 3.

Table 3.

Summary of the proposed Involutional Neural Network.

The model is trained using the processed Mel spectrogram images as input and the corresponding feeding intensity labels. Training involves minimizing the categorical cross-entropy loss function to ensure the model accurately predicts the feeding intensity. The loss function is given as:

where N represents the total number of samples; is the one-hot encoded ground truth for sample i; and class k, is the predicted probability for sample i and class k.

4.4. Performance Evaluation Metrics

Performance Evaluation Metrics for multi-class classification such as Accuracy, Macro Weighted Precision , Macro Weighted Recall , and Macro F1-score have been used.

Accuracy for multi-class classification is given as:

Precision and recall for binary-class classification for a generic class k are given as:

For multi-class classification, MWP and MWR are used and given as:

The Macro F1-score for multi-class classification is given as:

5. Results and Discussion

5.1. Results and Comparison

We distributed the dataset into three sub-datasets, with each representing a different duration of audio segments: 3 s, 4 s, and 5 s. Each sub-dataset was split into training and testing sets in an 80–20 ratio to ensure robust evaluation of the model’s performance. We utilized the proposed Involutional Neural Network (INN). On dataset of 3-s dataset, the INN achieved an accuracy of 97.11%. On a dataset of 4-s, the model achieved an accuracy of 92.73%, while on a dataset of 5-s, the model achieved an accuracy of 90.56%. Table 4 shows the performance achieved by the INN on each dataset.

Table 4.

Comparison of the performance of INN on sub-datasets.

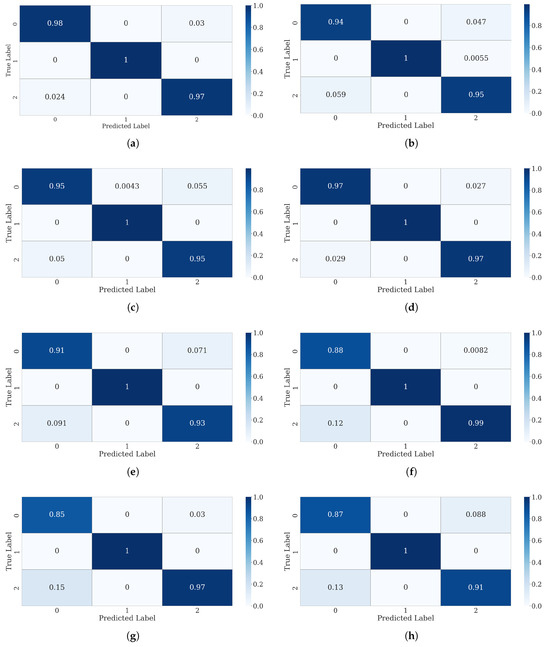

Since the model achieved better accuracy on a 5-s dataset, we have utilized several pre-trained transfer learning models for comparison. Figure A1 illustrates the confusion matrices of the proposed model and the pre-trained models. Although the confusion matrices do not illustrate a significant difference among the models, a closer look at parameters provides a different outlook. Table 5 illustrates the performance observations of various deep learning models, including the proposed Involutional Neural Network (INN), alongside well-established architectures such as VGG16, VGG19, ResNet50, Xception, EfficientNet-B0, InceptionV3, and MobileNetV2.

Table 5.

Comparison of the performance of Pretrained Transfer Learning Models on 5-s dataset.

As observed, the proposed INN demonstrates a substantially smaller model size and number of parameters compared to variant counterparts. This feature makes it a strong deployment candidate in resource-constrained environments, especially in edge computing, where memory and computational resources are more expensive. The lightweight architecture of the INN also manifests excellent classification accuracy, outperforming several established models with significantly larger parameter counts—a strong indication of its capability to encapsulate intricate patterns within audio features and accurately classifying feeding intensities. Moreover, the efficient training and inference times of the INN highlighted its potential suitability in real-time applications, where quick predictions are crucial in ensuring timely decision-making processes.

VGG16 and VGG19 demonstrate higher parameter counts and larger model sizes compared to the INN despite their simplicity and effectiveness, which, while achieving reasonable accuracies, quantify slower training and inference times and computational overhead, which are less attractive in edge computing scenarios; ResNet50 and Xception also show competitive accuracies yet much slower training and inference times and computational overhead compared to the INN. The EfficientNet-B0, however, demonstrates an interesting trade-off between model complexity and accuracy. Having fewer parameters and a smaller model size compared to a few of its counterparts, the EfficientNet-B0 still managed to demonstrate commendable accuracy (competing closely with the VGG19) and relatively faster training and inference times, making it a strong choice for applications that require a balance between model performance and computational efficiency.

InceptionV3 and MobileNetV2 manifest distinct characteristics in their parameter counts, model sizes, and performance metrics; the former demonstrates a competitive number of parameters and model sizes compared to ResNet50 and Xception, showcasing near-competitive accuracies and slightly slower training and inference times, which may limit its potential suitability in real-time applications, specifically, compared to the model performance and computational efficiency of the MobileNetV2. The latter has a considerably lower number of parameters and model sizes compared to its counterparts in this comparison, as well as demonstrating impressive accuracies near the competitive level of accuracies exhibited by more complex architectures; moreover, its notably faster training and inference times make it a strong deployment candidate in latency-sensitive application, where real-time predictions are crucial.

5.2. Involutional Neural Networks Compared with Convolutional Neural Networks

Involutional Neural Networks (INNs) differ from traditional Convolutional Neural Networks (CNNs) primarily in how they process input data. Instead of using fixed convolutional kernels to extract features from spectral images, INNs apply involution operations, which involve learnable filter kernels that can adapt to the context of the data. Thus, they flexibly capture local and contextual features related to our dynamic local feature approach. Since our task differs from conventional image-based applications in which CNNs excel at identifying spatial patterns, here we use Mel spectrograms, which represent audio signals in time and frequency dimensions. The structural differences of spectrograms from standard spectral images and their non-spatial nature require an anatomy to capture patterns there. The INN uses more local resupply learning that focuses on the feature extraction’s temporal and frequency characteristics. Considering how Mel spectrograms are dramatically different from the natural form of spectral images, the INN adapts its receptive domain to the surrounding context to extract meaningful features from time-frequency data. With fewer parameters and low computation costs compared to standard CNNs, the INN provides our light feature extraction method that facilitates processing Mel spectrograms. This lightness is a more significant advantage when deploying models on the brink or in surging-platform situations. The implementation of the INN in our experiment is effective due to its flexibility and efficiency of feature extraction from Mel spectrograms. This is critical in pattern recognition and distinguishing the survival time and intensity levels. The INN is resilient enough to handle tasks due to its adaptivity and simplicity, which diverges from the standard spectral images. However, it is less promoted when exposed to performance issues than complex integrative tasks. When given a broader dataset, the model capacity may shrink due to its simplicity and reduced sets of parameters. Major models prefer complex architectures and higher sets of parameters, which provide a shorthand, detailed feature increase in speed and accuracy with leveling. While the INN is efficient, CNNs would perform better when deployed in more enormous datasets tasks or broader complex set tasks.

5.3. Potential Deployment of INN Model on Edge Devices

As edge devices usually have constrained computational resources, the model architecture chosen has a strong influence regarding performance, efficiency, and viability in real-world use-cases. This is especially relevant when considering the proposed implementation of the INN model on edge devices. The implementation strategy for the INN is framing the choice of model in providing a lightweight and efficient model that can be supported in a low-resources environment like edge devices. INNs were developed to maintain high accuracy and effectiveness while reducing the model’s parameters and computational overhead. The INN parameters were kept to a minimum in order to reduce computational overhead while maintaining high accuracy, whereas traditional Convolutional Neural Networks can be resource intensive. INNs have optimized parameters and accuracy, making them efficient. The cost savings from INNs over other CNN-based methods with edge devices come from less model complexity. The convolutional parameters are higher than INNs; this requires less memory capacity because the computational cost during the inference process is lower. Since in most cases the devices are power-limited, the INN model is suitable. Furthermore, the INN uses involution operations over traditional convolutions. Since the computational cost of the latter is more significant but with involution, the computation is less; hence there is less inference time. This is vital for real-time-based edge devices. Although the exact savings depend on various factors, such as the device specification and the model architecture, an overall reduction in resource requirement and processing time is achieved with the INN, hence making it suitable as a lightweight model for an edge network as opposed to a bigger CNN-based model with large resource requirements. The savings might not be sufficient to make an impact, but with computational efficiency even small differences are significant. The traditional CNN-based method requires complex operations and more memory capacity, leading to long inference time and power consideration. This makes INNs a viable source based on deployment area. For instance, an aquaculture monitoring system and real-time data analysis are instances that may require this.

6. Conclusions

This paper presents a novel utilization of the Log Mel Spectrogram features that introduces a holistic approach for identifying fish feeding dynamics. It includes four methods of feature extraction: the Discrete Wavelet Transform, the Gabor filter, the Local Binary Pattern, and the Laplacian High Pass Filter. The Log Mel Spectrogram allows one to obtain a deeper insight into the fish’s feeding behavior. Moreover, it leverages a novel model, the Involutional Neural Network with self-attention capabilities. As a result, the model can efficiently capture the token relationship and self-attention in the obtained feature space. Consequently, the model has a distinguishable performance verified on the different duration intervals, demonstrating high accuracy, precision, recall, and F1-score. The introduced methodology can distinguish between three feeding intensities of O. punctatus, hence revealing evidence about its feeding behavior. As for future work, there are many potential directions to pursue, including integrating more techniques for feature extraction, working with multimodal datasets, and building more sophisticated deep learning models. Involutional Neural Networks have proven to be efficient, simplifying the structure, computation, and number of parameters of the model relative to Convolutional Neural Networks, which can deal with large datasets. By simplifying the network, we reduce its complexity and performance. Convolutional Neural Networks possess more complex architectures and parameter counts and thus are beneficial for large datasets and complicated inquiries because they possess higher accuracy and generalization. Based on this, it is safe to say that it is plausible to deploy INNs to the edge considering all the requirements. This approach can achieve a balance between performance and competence.

Author Contributions

Conceptualization, H.A.U.R.; data curation, M.A.; formal analysis, D.L.; funding acquisition, D.L.; investigation, U.I., Z.D. and M.F.Q.; methodology, U.I., M.A. and M.F.Q.; project administration, Z.M. and H.A.U.R.; resources, Z.D.; software, M.A.; supervision, D.L.; validation, D.L.; visualization, Z.M., M.F.Q. and H.A.U.R.; writing—original draft, U.I. and M.F.Q.; and writing—review and editing, Z.D. and Z.M. All authors have read and agreed to the published version of the manuscript.

Funding

The authors are grateful for financial support for the creation and application of a green and efficient intelligent factory for aquaculture [grant number 2022YFD2001700]; key technology research; and the creation of digital fishery intelligent equipment [grant number 2021TZXD006].

Institutional Review Board Statement

The animal study protocol was approved by the Ethics Committee of Laizhou Mingbo Aquatic Co., Ltd. in June 2022. The approval documentation is included as a supplementary document. The experiment is non-invasive and does not harm the fish or affect their normal growth.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets presented in this article are not readily available because the data are part of an ongoing study. Requests to access the datasets should be directed to corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Figure A1.

Confusion matrices: (a) Involutional Neural Network, (b) VGG16, (c) VGG19, (d) ResNet50, (e) Xception, (f) EfficinetNet-b0, (g) InceptionNetV3, and (h) MobileNetV2.

References

- Liao, J.; Zhang, X.; Kang, S.; Zhang, L.; Zhang, D.; Xu, Z.; Qin, Q.; Wei, J. Establishment and characterization of a brain tissue cell line from spotted knifejaw (Oplegnathus punctatus) and its susceptibility to several fish viruses. J. Fish Dis. 2023, 46, 767–777. [Google Scholar] [CrossRef]

- Boyd, C.E.; D’Abramo, L.R.; Glencross, B.D.; Huyben, D.C.; Juarez, L.M.; Lockwood, G.S.; McNevin, A.A.; Tacon, A.G.J.; Teletchea, F.; Tomasso, J.R., Jr.; et al. Achieving sustainable aquaculture: Historical and current perspectives and future needs and challenges. J. World Aquac. Soc. 2020, 51, 578–633. [Google Scholar] [CrossRef]

- Kandathil Radhakrishnan, D.; AkbarAli, I.; Schmidt, B.V.; John, E.M.; Sivanpillai, S.; Thazhakot Vasunambesan, S. Improvement of nutritional quality of live feed for aquaculture: An overview. Aquac. Res. 2020, 51, 1–17. [Google Scholar] [CrossRef]

- Wang, J.; Rongxing, H.; Han, T.; Zheng, P.; Xu, H.; Su, H.; Wang, Y. Dietary protein requirement of juvenile spotted knifejaw Oplegnathus punctatus. Aquac. Rep. 2021, 21, 100874. [Google Scholar] [CrossRef]

- Li, D.; Wang, Z.; Wu, S.; Miao, Z.; Du, L.; Duan, Y. Automatic recognition methods of fish feeding behavior in aquaculture: A review. Aquaculture 2020, 528, 735508. [Google Scholar] [CrossRef]

- Liu, Z.; Li, X.; Fan, L.; Lu, H.; Liu, L.; Liu, Y. Measuring feeding activity of fish in RAS using computer vision. Aquac. Eng. 2014, 60, 20–27. [Google Scholar] [CrossRef]

- Mushtaq, Z.; Su, S.F. Environmental sound classification using a regularized deep convolutional neural network with data augmentation. Appl. Acoust. 2020, 167, 107389. [Google Scholar] [CrossRef]

- Mushtaq, Z.; Su, S.F.; Tran, Q.V. Spectral images based environmental sound classification using CNN with meaningful data augmentation. Appl. Acoust. 2021, 172, 107581. [Google Scholar] [CrossRef]

- Khan, A.A.; Raza, S.; Qureshi, M.F.; Mushtaq, Z.; Taha, M.; Amin, F. Deep Learning-Based Classification of Wheat Leaf Diseases for Edge Devices. In Proceedings of the 2023 2nd International Conference on Emerging Trends in Electrical, Control, and Telecommunication Engineering (ETECTE), Lahore, Pakistan, 27–29 November 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6. [Google Scholar]

- Singh, A.; Mushtaq, Z.; Abosaq, H.A.; Mursal, S.N.F.; Irfan, M.; Nowakowski, G. Enhancing Ransomware Attack Detection Using Transfer Learning and Deep Learning Ensemble Models on Cloud-Encrypted Data. Electronics 2023, 12, 3899. [Google Scholar] [CrossRef]

- Qamar, H.G.M.; Qureshi, M.F.; Mushtaq, Z.; Zubariah, Z.; Rehman, M.Z.U.; Samee, N.A.; Mahmoud, N.F.; Gu, Y.H.; Al-masni, M.A. EMG gesture signal analysis towards diagnosis of upper limb using dual-pathway convolutional neural network. Math. Biosci. Eng. 2024, 21, 5712–5734. [Google Scholar] [CrossRef]

- Qureshi, M.F.; Mushtaq, Z.; ur Rehman, M.Z.; Kamavuako, E.N. Spectral image-based multiday surface electromyography classification of hand motions using CNN for human–computer interaction. IEEE Sens. J. 2022, 22, 20676–20683. [Google Scholar] [CrossRef]

- Shahzad, A.; Mushtaq, A.; Sabeeh, A.Q.; Ghadi, Y.Y.; Mushtaq, Z.; Arif, S.; ur Rehman, M.Z.; Qureshi, M.F.; Jamil, F. Automated Uterine Fibroids Detection in Ultrasound Images Using Deep Convolutional Neural Networks. Healthcare 2023, 11, 1493. [Google Scholar] [CrossRef] [PubMed]

- Afshan, N.; Mushtaq, Z.; Alamri, F.S.; Qureshi, M.F.; Khan, N.A.; Siddique, I. Efficient thyroid disorder identification with weighted voting ensemble of super learners by using adaptive synthetic sampling technique. Aims Math. 2023, 8, 24274–24309. [Google Scholar] [CrossRef]

- Khalil, S.; Nawaz, U.; Zubariah; Mushtaq, Z.; Arif, S.; ur Rehman, M.Z.; Qureshi, M.F.; Malik, A.; Aleid, A.; Alhussaini, K. Enhancing Ductal Carcinoma Classification Using Transfer Learning with 3D U-Net Models in Breast Cancer Imaging. Appl. Sci. 2023, 13, 4255. [Google Scholar] [CrossRef]

- Du, Z.; Cui, M.; Wang, Q.; Liu, X.; Xu, X.; Bai, Z.; Sun, C.; Wang, B.; Wang, S.; Li, D. Feeding intensity assessment of aquaculture fish using Mel Spectrogram and deep learning algorithms. Aquac. Eng. 2023, 102, 102345. [Google Scholar] [CrossRef]

- Føre, M.; Frank, K.; Norton, T.; Svendsen, E.; Alfredsen, J.A.; Dempster, T.; Eguiraun, H.; Watson, W.; Stahl, A.; Sunde, L.M.; et al. Precision fish farming: A new framework to improve production in aquaculture. Biosyst. Eng. 2018, 173, 176–193. [Google Scholar] [CrossRef]

- O’Donncha, F.; Stockwell, C.L.; Planellas, S.R.; Micallef, G.; Palmes, P.; Webb, C.; Filgueira, R.; Grant, J. Data Driven Insight Into Fish Behaviour and Their Use for Precision Aquaculture. Front. Anim. Sci. 2021, 2, 695054. [Google Scholar] [CrossRef]

- Cui, M.; Liu, X.; Zhao, J.; Sun, J.; Lian, G.; Chen, T.; Plumbley, M.D.; Li, D.; Wang, W. Fish Feeding Intensity Assessment in Aquaculture: A New Audio Dataset AFFIA3K and a Deep Learning Algorithm. In Proceedings of the 2022 IEEE 32nd International Workshop on Machine Learning for Signal Processing (MLSP), Xi’an, China, 22–25 August 2022; pp. 1–6, ISSN 2161-0371. [Google Scholar] [CrossRef]

- Ubina, N.; Cheng, S.C.; Chang, C.C.; Chen, H.Y. Evaluating fish feeding intensity in aquaculture with convolutional neural networks. Aquac. Eng. 2021, 94, 102178. [Google Scholar] [CrossRef]

- Zhou, C.; Xu, D.; Chen, L.; Zhang, S.; Sun, C.; Yang, X.; Wang, Y. Evaluation of fish feeding intensity in aquaculture using a convolutional neural network and machine vision. Aquaculture 2019, 507, 457–465. [Google Scholar] [CrossRef]

- Du, Z.; Xu, X.; Bai, Z.; Liu, X.; Hu, Y.; Li, W.; Wang, C.; Li, D. Feature fusion strategy and improved GhostNet for accurate recognition of fish feeding behavior. Comput. Electron. Agric. 2023, 214, 108310. [Google Scholar] [CrossRef]

- Zhang, Y.; Xu, C.; Du, R.; Kong, Q.; Li, D.; Liu, C. MSIF-MobileNetV3: An improved MobileNetV3 based on multi-scale information fusion for fish feeding behavior analysis. Aquac. Eng. 2023, 102, 102338. [Google Scholar] [CrossRef]

- Zhang, L.; Wang, J.; Li, B.; Liu, Y.; Zhang, H.; Duan, Q. A MobileNetV2-SENet-based method for identifying fish school feeding behavior. Aquac. Eng. 2022, 99, 102288. [Google Scholar] [CrossRef]

- Yang, L.; Yu, H.; Cheng, Y.; Mei, S.; Duan, Y.; Li, D.; Chen, Y. A dual attention network based on efficientNet-B2 for short-term fish school feeding behavior analysis in aquaculture. Comput. Electron. Agric. 2021, 187, 106316. [Google Scholar] [CrossRef]

- Feng, S.; Yang, X.; Liu, Y.; Zhao, Z.; Liu, J.; Yan, Y.; Zhou, C. Fish feeding intensity quantification using machine vision and a lightweight 3D ResNet-GloRe network. Aquac. Eng. 2022, 98, 102244. [Google Scholar] [CrossRef]

- Zeng, Y.; Yang, X.; Pan, L.; Zhu, W.; Wang, D.; Zhao, Z.; Liu, J.; Sun, C.; Zhou, C. Fish school feeding behavior quantification using acoustic signal and improved Swin Transformer. Comput. Electron. Agric. 2023, 204, 107580. [Google Scholar] [CrossRef]

- Kong, Q.; Du, R.; Duan, Q.; Zhang, Y.; Chen, Y.; Li, D.; Xu, C.; Li, W.; Liu, C. A recurrent network based on active learning for the assessment of fish feeding status. Comput. Electron. Agric. 2022, 198, 106979. [Google Scholar] [CrossRef]

- Hu, W.C.; Chen, L.B.; Huang, B.K.; Lin, H.M. A Computer Vision-Based Intelligent Fish Feeding System Using Deep Learning Techniques for Aquaculture. IEEE Sens. J. 2022, 22, 7185–7194. [Google Scholar] [CrossRef]

- Zheng, K.; Yang, R.; Li, R.; Guo, P.; Yang, L.; Qin, H. A spatiotemporal attention network-based analysis of golden pompano school feeding behavior in an aquaculture vessel. Comput. Electron. Agric. 2023, 205, 107610. [Google Scholar] [CrossRef]

- Jayasundara, J.M.V.D.B.; Ramanayake, R.M.L.S.; Senarath, H.M.N.B.; Herath, H.M.S.L.; Godaliyadda, G.M.R.I.; Ekanayake, M.P.B.; Herath, H.M.V.R.; Ariyawansa, S. Deep learning for automated fish grading. J. Agric. Food Res. 2023, 14, 100711. [Google Scholar] [CrossRef]

- Irfan, M.; Mushtaq, Z.; Khan, N.A.; Althobiani, F.; Mursal, S.N.F.; Rahman, S.; Magzoub, M.A.; Latif, M.A.; Yousufzai, I.K. Improving Bearing Fault Identification by Using Novel Hybrid Involution-Convolution Feature Extraction With Adversarial Noise Injection in Conditional GANs. IEEE Access 2023, 11, 118253–118267. [Google Scholar] [CrossRef]

- Qureshi, M.F.; Mushtaq, Z.; Rehman, M.Z.U.; Kamavuako, E.N. E2CNN: An Efficient Concatenated CNN for Classification of Surface EMG Extracted From Upper Limb. IEEE Sens. J. 2023, 23, 8989–8996. [Google Scholar] [CrossRef]

- Sigurdsson, S.; Petersen, K.B.; Lehn-Schiøler, T. Mel Frequency Cepstral Coefficients: An Evaluation of Robustness of MP3 Encoded Music. In Proceedings of the 7th International Conference on Music Information Retrieval, Victoria, BC, Canada, 8–12 October 2006; pp. 286–289. [Google Scholar]

- Othman, G.; Zeebaree, D.Q. The Applications of Discrete Wavelet Transform in Image Processing: A Review. J. Soft Comput. Data Min. 2020, 1, 31–43. [Google Scholar]

- Hammouche, R.; Attia, A.; Akhrouf, S.; Akhtar, Z. Gabor filter bank with deep autoencoder based face recognition system. Expert Syst. Appl. 2022, 197, 116743. [Google Scholar] [CrossRef]

- Vu, H.N.; Nguyen, M.H.; Pham, C. Masked face recognition with convolutional neural networks and local binary patterns. Appl. Intell. 2022, 52, 5497–5512. [Google Scholar] [CrossRef] [PubMed]

- Fu, G.; Zhao, P.; Bian, Y. $p$-Laplacian Based Graph Neural Networks. In Proceedings of the 39th International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; pp. 6878–6917, ISSN 2640-3498. [Google Scholar]

- Mallat, S.G. A theory for multiresolution signal decomposition: The wavelet representation. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 674–693. [Google Scholar] [CrossRef]

- Jain, A.K.; Farrokhnia, F. Unsupervised texture segmentation using Gabor filters. Pattern Recognit. 1991, 24, 1167–1186. [Google Scholar] [CrossRef]

- Ojala, T.; Pietikäinen, M.; Harwood, D. A comparative study of texture measures with classification based on featured distributions. Pattern Recognit. 1996, 29, 51–59. [Google Scholar] [CrossRef]

- Gonzalez, R.C. Digital Image Processing; Pearson Education: Chennai, India, 2009. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).