1. Introduction

Chicken is one of the most popular meats in the world, so the chicken breeding industry has great economic value. Cage-free rearing is more conducive to chicken growth than cage rearing [1,2]. An appropriate stocking density can improve the growth performance, immunity, and carcass yield of chickens [3,4,5], whereas overcrowding can harm their health [6,7]. Moreover, accurate flock counts are needed at many stages, such as welfare breeding [8], feeding, stocking, and slaughtering. Therefore, rapid and accurate estimation of flock density is an important research topic.

Traditional poultry farming requires artificial observation of behavior and health status, manual feeding, and counting. This requires a lot of labor, which increases the cost of breeding. An irregular and inaccurate manual operation may cause harm to chickens. Moreover, due to the small space and high density of chickens, if the staff do not carry out complete disinfection before entering the chicken house, they may pass germs to the chickens, which spread quickly, causing serious losses [

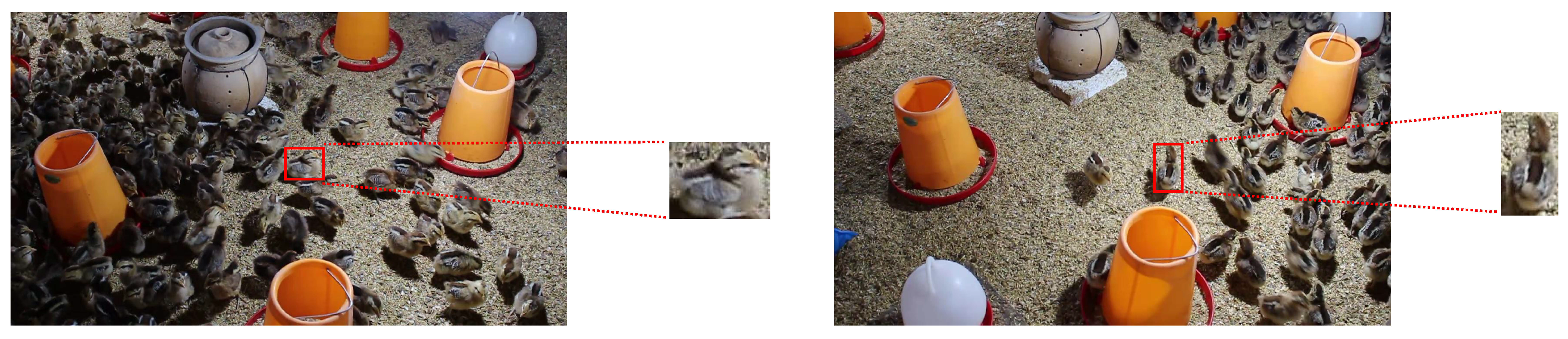

9]. It is obviously difficult for chicken farm managers to count manually, which can lead to wrong amounts, low efficiency, and subjective influences. In particular, overlapping chickens and different perspectives can cause large errors with complex backgrounds. These problems are common in large-scale breeding enterprises. Therefore, it is necessary to develop and implement efficient, automated, and highly accurate counting methods, so as to fully improve efficiency and truly realize intelligent and automated management.

Digital technology with intelligent monitoring methods is widely used in poultry health and welfare management [10]. It enables rapid, accurate, automatic, and non-invasive monitoring during poultry breeding and helps replace some labor-intensive tasks. A novel fully automated, non-invasive monitoring system was proposed to detect lameness, based on the significant correlation between characteristic variables and broiler gait scores [11]. A real-time automatic feed-weight monitoring system was developed to measure the feed intake and body weight of individual turkeys, in order to study the feed conversion ratio and feeding behavior of individual turkeys in a group environment [12]. A segmentation algorithm effectively separated broilers from the background, extracted their pose information, and identified their health status quickly and accurately, with an accuracy of 99.47% [13]. However, these methods rely on shallow learning; lacking deep network topologies and big-data support, they perform poorly on complex problems.

Deep learning is a representation-learning approach that uses multiple processing layers to learn representations of data with multiple levels of abstraction [14]. An attention encoder and a convolutional neural network (CNN) were used to detect and classify chicks at different life stages, with an accuracy of 95.2% [15]. A CNN was used to monitor the beak-opening behavior, spatial dispersion, and movement of chickens [16]. A deep neural network (DNN) and the cross-entropy from information theory were used to train rooster and hen classifiers with an average accuracy of 96.85%, providing a feasible method for estimating the sex ratio [17]. A camera-based system was developed to monitor chickens and, using neural networks, detect injuries caused by pecking [18]. A DNN-based pose estimation method was applied for the first time to classify and identify broiler poses [19]; experiments showed that chickens in standing, walking, running, eating, and resting states could be identified.

Counting refers to estimating the number of objects in a target area, to obtain information and perform timely control operations, and is currently widely used in various fields [

20,

21,

22]. In the field of chicken counting, some researchers have conducted related studies. One study applied a localized fully convolutional network (LCFCN) to count and locate chickens from images of the pins with an average absolute error (MAE) of 0.56 [

23]. A fully convolutional network termed DenseFCN was designed, and a point-supervised method was used to count chickens in the image, with an accuracy of 93.84% and speed and 9.27 frames per second (FPS) [

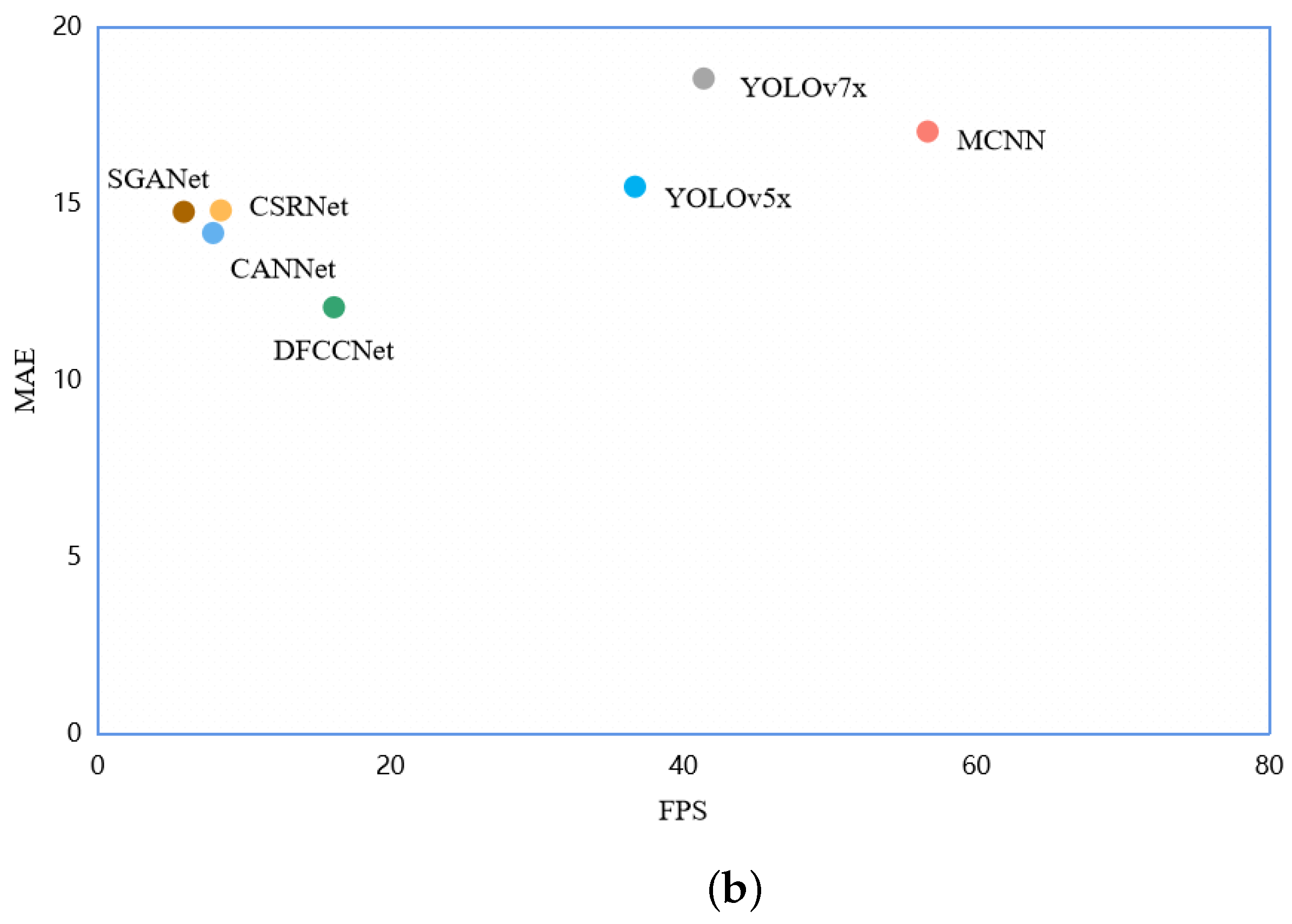

24]. A novel framework called YOLOX-birth growth death (Y-BGD) was proposed for automatic and accurate cage-free broiler counting, with an average accuracy of 98.13% [

25]. A automatic system based on YOLOv5 was applied to count chickens in the image with an average accuracy rate of 95.87% [

26]. We analyzed the datasets used in these studies, in which [

23,

24,

25] counted chickens in sparse scenes, with an average number of chickens per image of 27.89, 24.36, and 2.38, respectively. Ref. [

26] counted chickens in both sparse and dense scenes, with an average number of chickens per picture of 155.37. However, the counting objects in all of these studies were large chickens. In real farming environments, large and small chickens are not the same in farming scenes; the same number of small chickens are farmed in smaller areas, and small chickens are more likely to congregate together, thus creating more serious occlusion and shadowing. More research is needed to address this situation.
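MAE is the error metric reported by the counting studies above. As a minimal sketch, MAE and root-mean-square error (RMSE, a companion metric commonly reported alongside MAE in counting work) can be computed from per-image predicted and ground-truth counts as follows; the function names and sample counts are illustrative assumptions, not values from any cited study.

```python
import math
from typing import Sequence


def _check(pred: Sequence[float], gt: Sequence[float]) -> None:
    # Both lists must describe the same, non-empty set of images.
    if len(pred) != len(gt) or len(gt) == 0:
        raise ValueError("pred and gt must be non-empty and of equal length")


def mae(pred: Sequence[float], gt: Sequence[float]) -> float:
    """Mean absolute error between predicted and ground-truth counts."""
    _check(pred, gt)
    return sum(abs(p - g) for p, g in zip(pred, gt)) / len(gt)


def rmse(pred: Sequence[float], gt: Sequence[float]) -> float:
    """Root-mean-square error; penalizes large per-image errors more than MAE."""
    _check(pred, gt)
    return math.sqrt(sum((p - g) ** 2 for p, g in zip(pred, gt)) / len(gt))


# Hypothetical counts for three images.
predicted = [25, 30, 160]
ground_truth = [27, 30, 155]
print(mae(predicted, ground_truth))   # 7/3, about 2.33
print(rmse(predicted, ground_truth))  # sqrt(29/3), about 3.11
```

Because RMSE squares each error, a single badly miscounted dense image raises it more than it raises MAE.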

At present, mainstream counting techniques fall into two categories: object detection and density map regression. Object detection identifies objects in an image and determines their size and position with predicted bounding boxes, and the number of predicted boxes gives the count. Object detection is mainly applied in two counting scenarios: directly detecting and counting chickens in sparse scenes [26], and counting chickens that cross a counting line in a corridor by combining detection with a tracking algorithm [25]. However, its accuracy degrades greatly in dense environments with heavy occlusion. Density map regression is mainly used in high-density scenes; it obtains accurate counts by learning the mapping between an image and its ground-truth density map. This method has been applied in aquaculture. A lightweight fish counting model based on density map regression was used to count high-density fish [27]. Multi-scale modules and attention mechanisms were integrated into a density-map-regression network for fish counting, achieving an accuracy of 97.1% [28]. Although these experiments were conducted in dense environments, the experimental conditions were relatively ideal. Severe occlusion and complex backgrounds could still reduce the stability and accuracy of such models, so their performance needs further improvement.
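In density map regression, dot annotations are commonly converted into a ground-truth density map by placing a normalized Gaussian kernel at each annotated point, so that the map sums to the object count; the network regresses this map, and the predicted count is the sum of its output. The NumPy sketch below assumes a fixed kernel width `sigma` (adaptive kernel widths are a common alternative) and is an illustration of the general technique, not the ground-truth generation used by any cited model.

```python
import numpy as np


def gaussian_density_map(points, height, width, sigma=4.0):
    """Build a density map whose total sum equals the number of dot annotations.

    points: iterable of (x, y) pixel coordinates, one per annotated object.
    Each point becomes a 2-D Gaussian with fixed std `sigma` (an assumption),
    renormalized over the image so a point near the border still adds exactly 1.
    """
    ys, xs = np.mgrid[0:height, 0:width]  # row and column index grids
    density = np.zeros((height, width), dtype=np.float64)
    for x, y in points:
        g = np.exp(-((xs - x) ** 2 + (ys - y) ** 2) / (2.0 * sigma ** 2))
        total = g.sum()
        if total > 0:  # skip points so far outside the image that g underflows
            density += g / total
    return density


# Three hypothetical dot annotations, one on the image border.
dots = [(10, 10), (32, 40), (0, 63)]
gt_map = gaussian_density_map(dots, height=64, width=64)
print(gt_map.shape)        # (64, 64)
print(round(gt_map.sum(), 6))  # 3.0: summing the map recovers the count
```

The same summation is applied to a network's predicted density map at inference time, which is why density map regression does not need to separate individual, heavily occluded objects.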

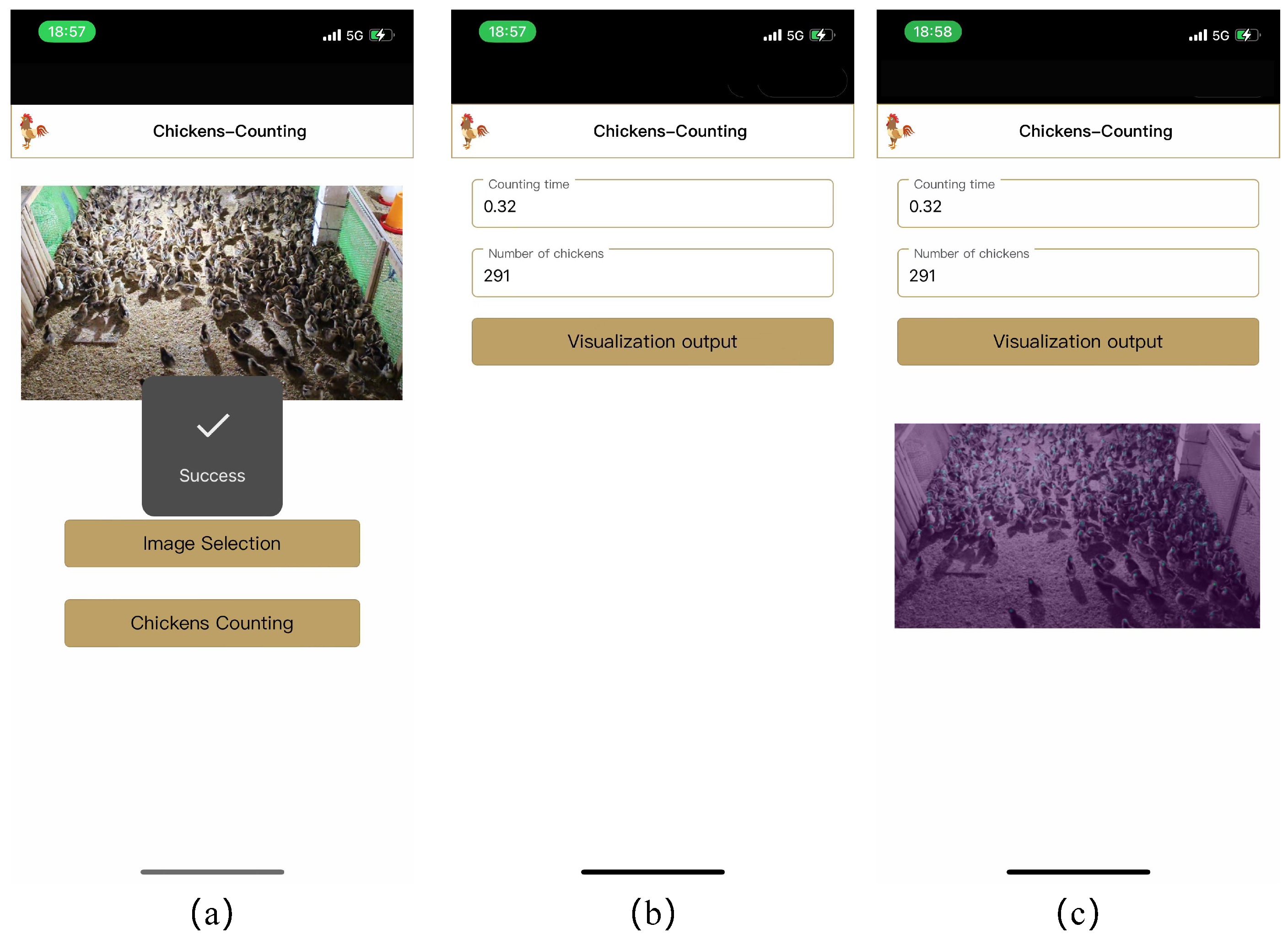

Lightweight models are crucial in practical applications. Deep learning models usually require substantial computational resources, such as CPU and GPU time. If the computational burden is too heavy, the model runs slowly or cannot run at all on resource-constrained devices. It then cannot meet real-time and portability requirements, which hinders its practical application. Therefore, it is important to keep the model lightweight while maintaining its accuracy.
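The computational burden of a network can be estimated layer by layer. The small sketch below applies the standard formulas for a dense (non-grouped) 2-D convolution to show how parameters and multiply-accumulate operations (MACs) scale with channel width; the function name and layer sizes are illustrative assumptions, not the architecture of the proposed model.

```python
def conv2d_cost(c_in, c_out, k, h_out, w_out, bias=True):
    """Return (parameters, MACs) for a standard k x k 2-D convolution.

    Weights: k*k*c_in*c_out, plus one bias per output channel.
    Each output pixel of each output channel needs k*k*c_in MACs.
    """
    weights = k * k * c_in * c_out
    params = weights + (c_out if bias else 0)
    macs = weights * h_out * w_out
    return params, macs


# Hypothetical 3x3 layer on a 64x64 feature map, at two channel widths.
wide = conv2d_cost(256, 256, 3, 64, 64)
narrow = conv2d_cost(128, 128, 3, 64, 64)
print(wide)    # (590080, 2415919104)  -> about 2.4 G MACs
print(narrow)  # (147584, 603979776)   -> about 0.6 G MACs
```

Halving both input and output channels cuts parameters and MACs by roughly a factor of four, which is why channel width is a primary lever when designing lightweight networks.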

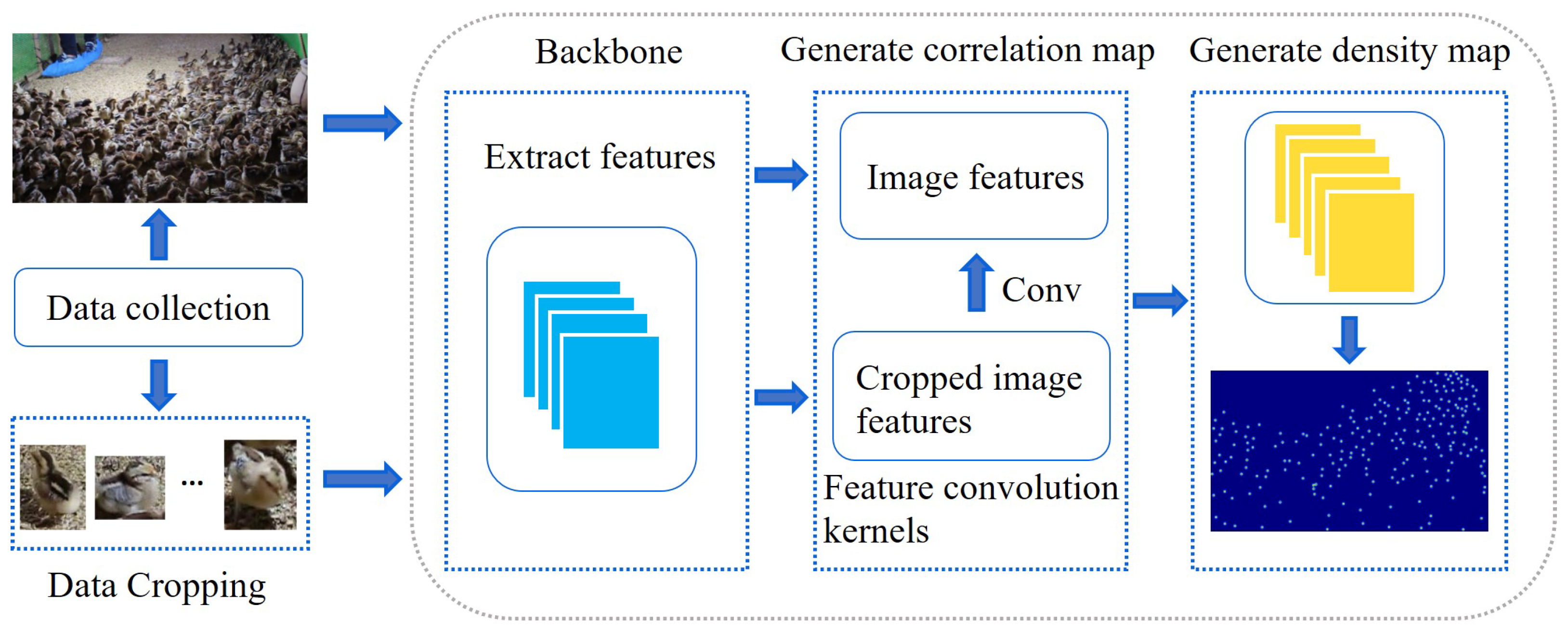

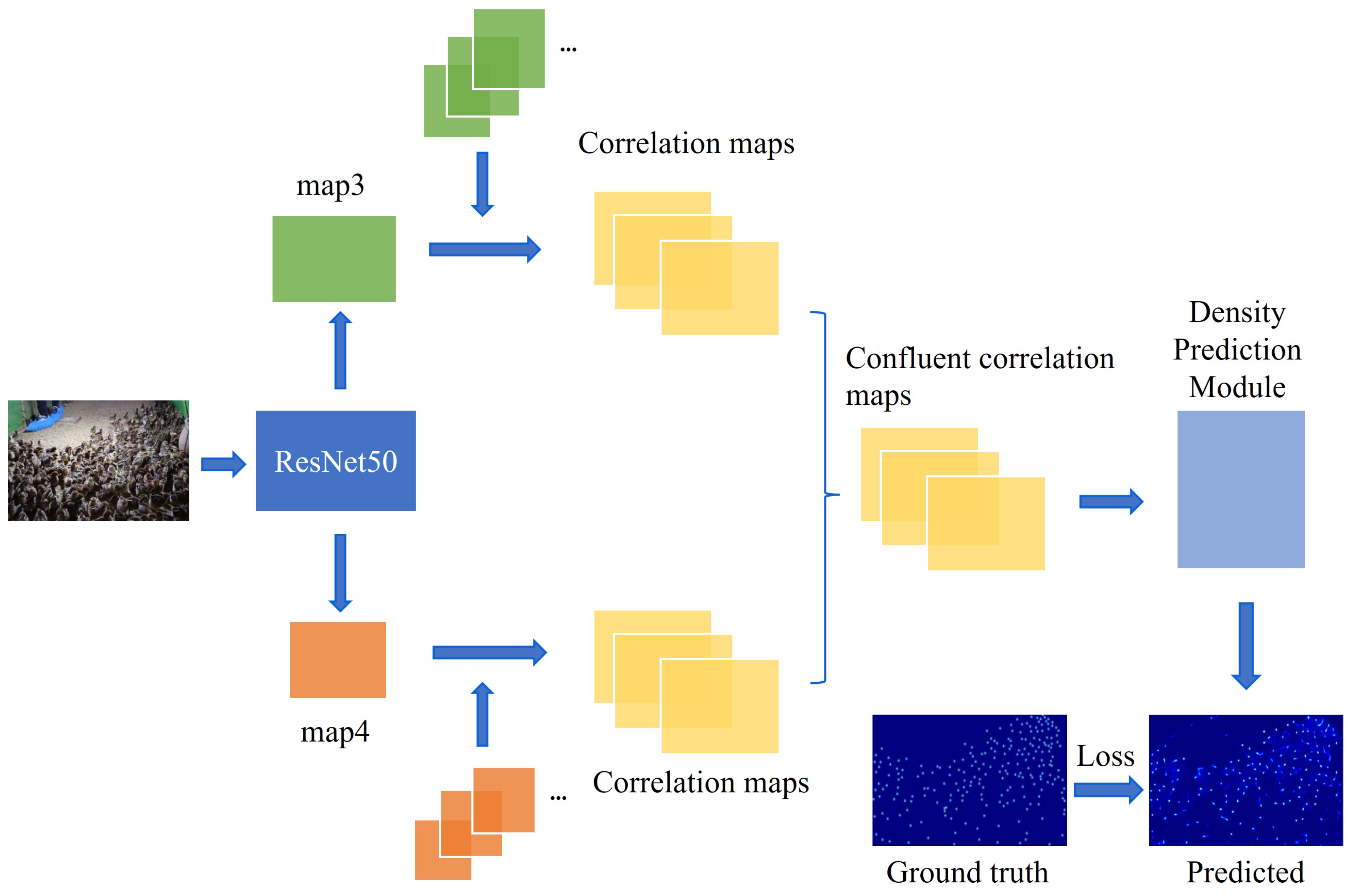

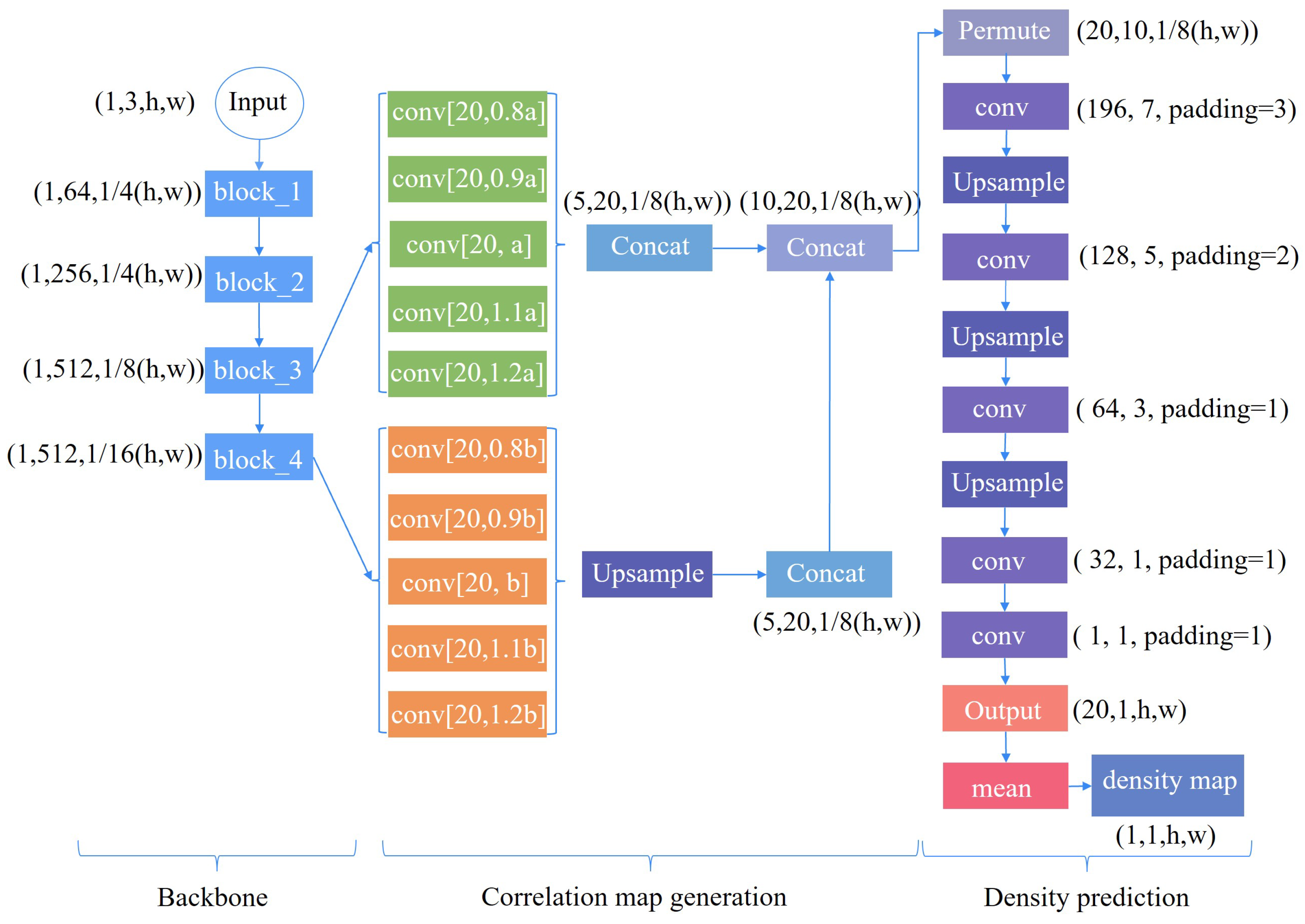

To avoid heavy computational cost and further improve chicken counting accuracy under severe occlusion and in complex environments, we propose a lightweight model based on density map regression. The main contributions are summarized as follows:

- (1)

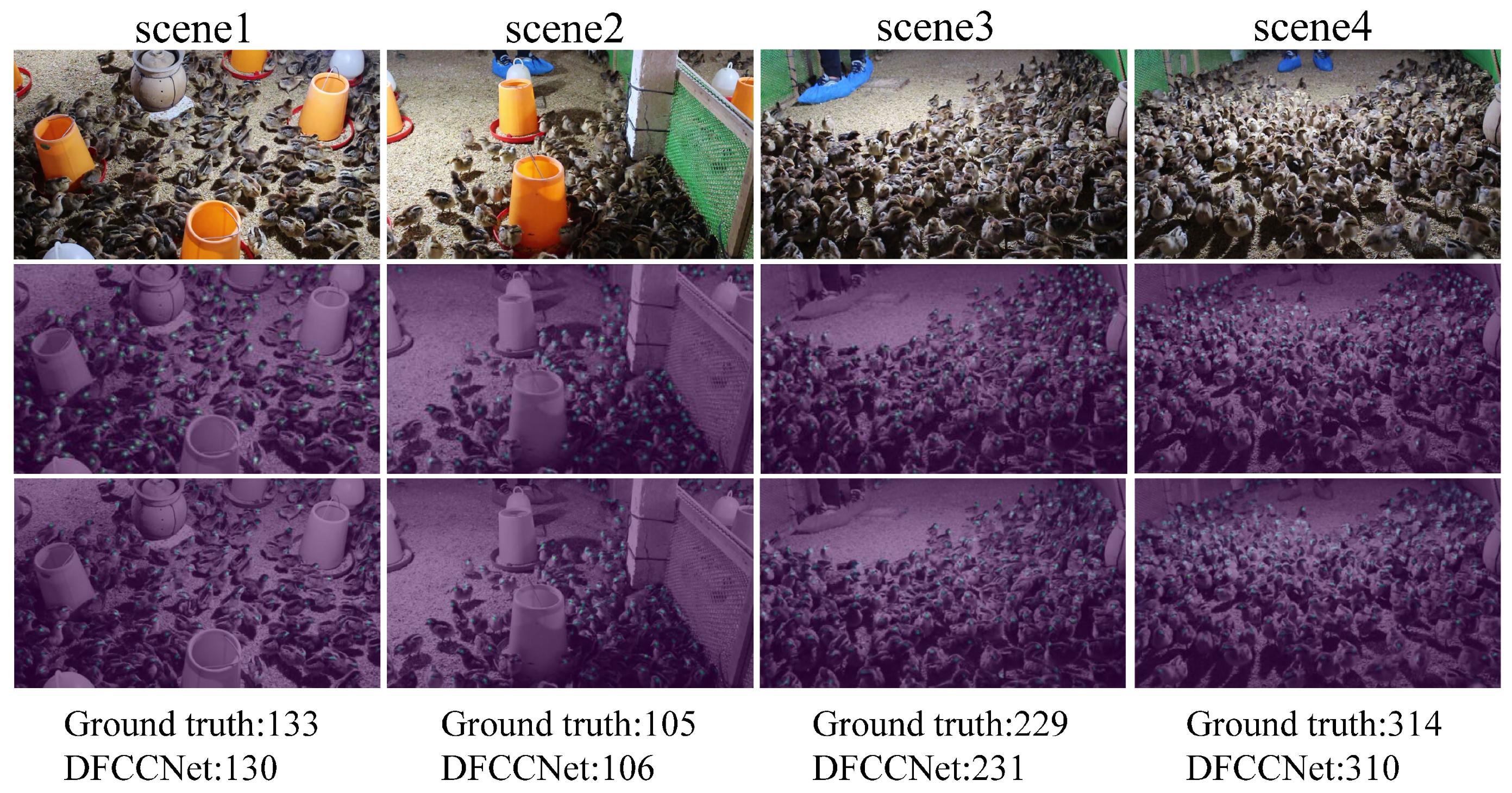

A lightweight framework, DFCCNet, was designed by improving a feature convolution kernels module and proposing a density map module, satisfying the requirements for fast computing power and high detection accuracy in dense-flock chicken-counting tasks;

- (2) Feature fusion is adopted to extract more feature information under insufficient lighting, a multi-scale mechanism is used to handle irregular object sizes, and feature convolution kernels are employed to address severe occlusion;

- (3)

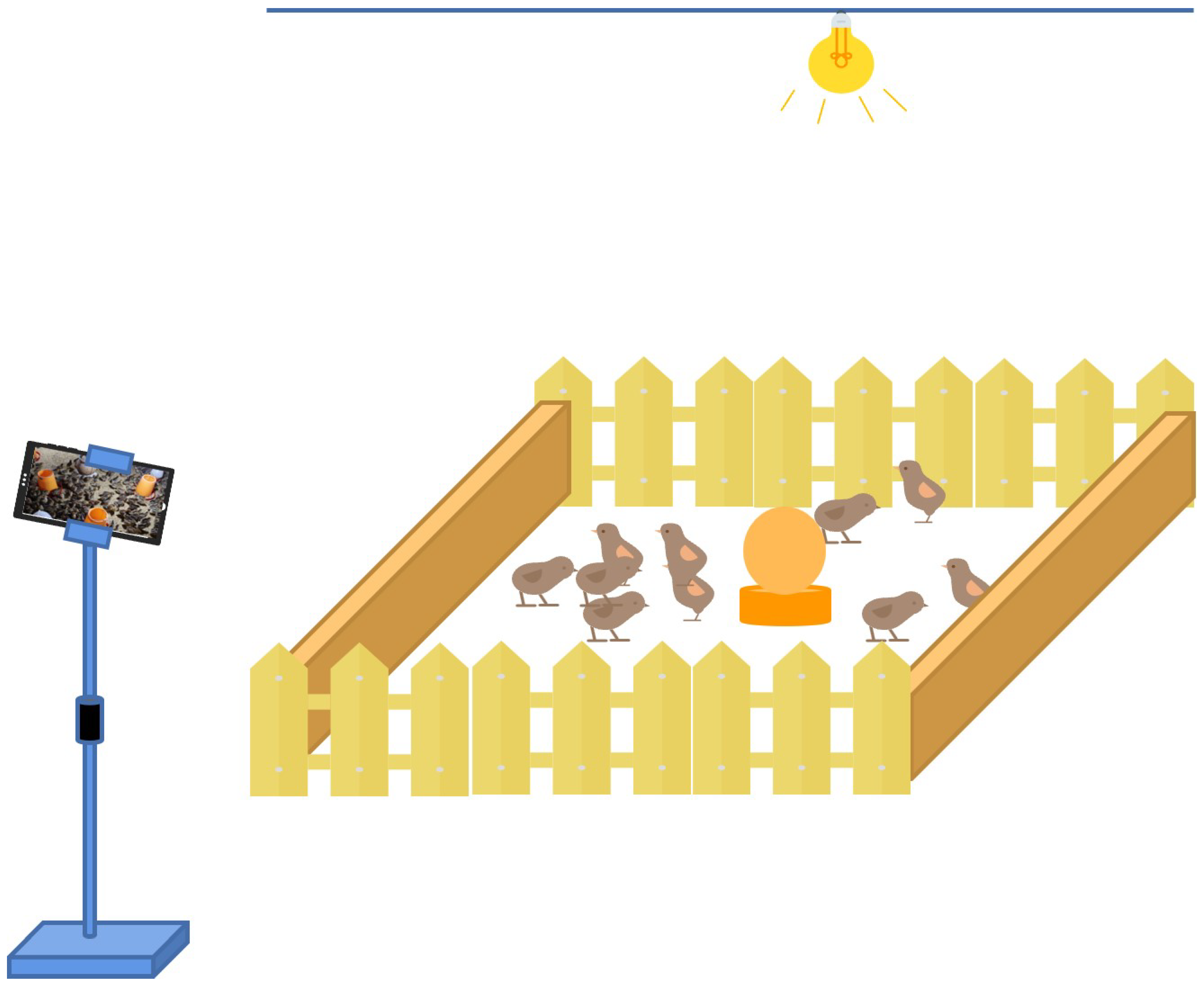

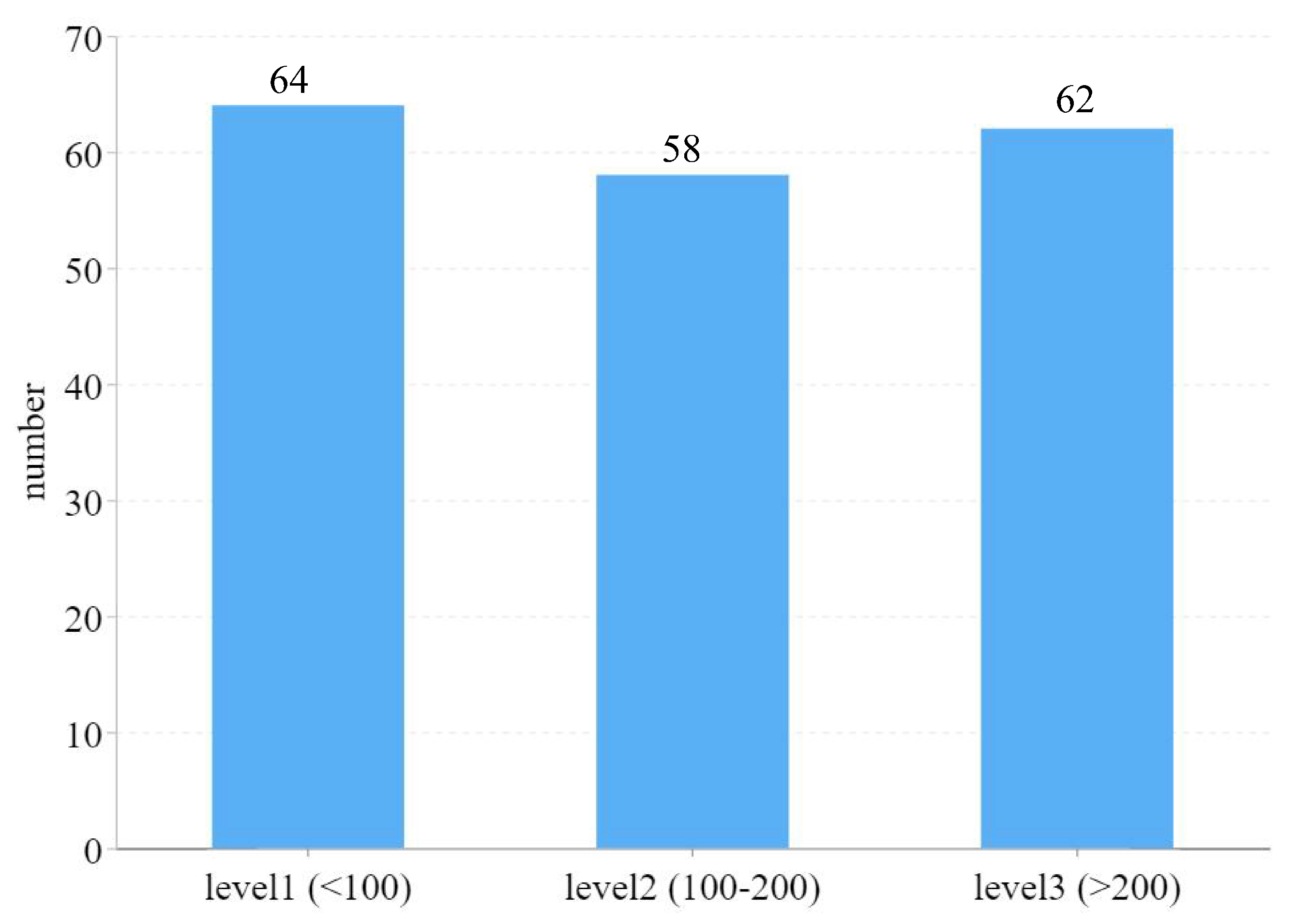

A self-built dataset called Dense-Chicken was collected and constructed in a complex feeding environment, which contains 600 images of 99,916 chickens, in dot annotation files and box annotation files. It can be shared for researchers.
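For context, the per-image density of Dense-Chicken can be compared with the dataset averages quoted earlier for [23,24,25,26]. The short calculation below uses only figures stated in this section; the dictionary labels are illustrative.

```python
# Average chickens per image, as stated for the datasets of prior studies.
avg_per_image = {
    "[23]": 27.89,
    "[24]": 24.36,
    "[25]": 2.38,
    "[26]": 155.37,
}

# Dense-Chicken: 99,916 annotated chickens across 600 images.
avg_per_image["Dense-Chicken"] = round(99_916 / 600, 2)

for name, avg in sorted(avg_per_image.items(), key=lambda kv: kv[1]):
    print(f"{name:>14}: {avg:7.2f} chickens/image")
# The last line printed is Dense-Chicken at 166.53 chickens/image.
```

Note that [26] mixes sparse and dense scenes, so its average understates its densest images; the comparison is indicative only.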

The rest of this article is organized as follows:

Section 2 describes the proposed model and methods.

Section 3 details the preparation of the dataset used to train and evaluate the proposed network, and presents the experimental results and a performance analysis of the network.

Finally, Section 4 concludes the work with a summary and the prospects of the proposed technique.