Simple Summary

Identifying silkworm pupae species and sex accurately is essential for the hybrid pairing of corresponding species in sericulture, which guarantees the quality of the silkworm eggs and silk. However, there is no cost-effective method that offers a labor-saving and intelligent solution for this. In this study, machine learning and deep learning are used for the automatic recognition of pupae species and sex, either separately or simultaneously, based on the patterns perceived from images. A vast number of postural images of pupae were used for global modeling to eliminate the impact of posture on recognition rate. Six traditional descriptors and six deep learning descriptors were employed for feature extraction and then combined with three machine learning classifiers for identification. Based on that, the model with the best identification performance was screened out, and it can serve as a reference for sericulture breeding.

Abstract

Hybrid pairing of the corresponding silkworm species is a pivotal link in sericulture, ensuring egg quality and directly influencing silk quantity and quality. Considering the potential of image recognition and the impact of varying pupal postures, this study used machine learning and deep learning for global modeling to identify pupae species and sex separately or simultaneously. The performance of traditional feature-based approaches, deep learning feature-based approaches, and their fusion approaches was compared. First, 3600 images of the back, abdomen, and side postures of 5 species of male and female pupae were captured. Next, six traditional descriptors, including the histogram of oriented gradients (HOG), and six deep learning descriptors, including ConvNeXt-S, were utilized to extract significant species and sex features. Finally, classification models were constructed using the multilayer perceptron (MLP), support vector machine, and random forest. The results indicate that the {HOG + ConvNeXt-S + MLP} model excelled, achieving 99.09% accuracy for separate species and sex recognition and 98.40% for simultaneous recognition, with areas under the precision–recall and receiver operating characteristic curves ranging from 0.984 to 1.0 and 0.996 to 1.0, respectively. In conclusion, the model can capture subtle distinctions between pupal species and sexes and shows promise for extensive application in sericulture.

1. Introduction

China is the birthplace of sericulture and the foremost silk producer and exporter, and the industry has boosted rural revitalization and sericulturists’ earnings. For example, sericulturists in Guangxi Province, China, earned CNY 20.818 billion from cocoon sales in 2021 []. In addition, sericulture impacts the global economy and culture considerably. Sorting silkworm pupae by sex affects the hybridization rate of eggs and the market competitiveness of silk []. However, various factors, such as production seasonality, brief pupal duration, and rising labor costs, impose challenges in the sorting process [].

Since the last century, researchers have been developing nondestructive and reliable sex sorting methods. Initially, biologists developed silkworm species with sex-linked traits to ease pupal sex sorting [,]; however, these represent only a small fraction of the species. DNA and amino acid analyses can determine sex but are destructive [,]. Recently, powerful spectral and visual techniques have been proposed to identify pupal sexes. These techniques include magnetic resonance imaging (MRI) [], X-ray imaging [], hyperspectral imaging (HSI) [,], near-infrared (NIR) spectroscopy [], and image recognition []. Among these, NIR is frequently used for sex determination based on differences in morphology, gonadal traits such as eggs, and water content between male and female pupae [,,,,]. To address sericulture challenges in areas such as Shandong, China, Zhu et al. developed an NIR system with 97.5% accuracy and a sorting rate of 7.7 pupae per second []. However, NIR requires frequent manual modeling, and the relatively high cost of its components limits its applicability.

Leveraging the stable genetic traits of pupae, low-cost image recognition has shown significant promise in sex identification. Kamtongdee et al. and Tao et al. identified pupa sex based on gonadal traits through image analysis [,,,]. However, these methods require precise pupa positioning, making them less suitable for production lines. Methods based on pupal appearance can overcome this drawback. Liang et al. achieved a 98% sex recognition rate using machine learning [], and Yu et al. obtained 97% accuracy using deep learning []. However, these studies have yet to address the impact of varying pupal posture on feature extraction, and large datasets are required to train such models to ensure practical feasibility.

As the field advances, research on pupae species identification has been conducted alongside studies on sex identification [,]. Identifying pupal species provides an objective basis for silkworm identity, thereby reducing species mix-ups in breeding and ensuring correct hybridization of the corresponding species. However, there is no reliable and cost-effective method that offers an intelligent and labor-saving solution for this. In response, this study combines image recognition with machine learning and deep learning techniques to develop a model to identify pupae species and sex, either separately or simultaneously, to meet the needs of silkworm breeding factories. Through global modeling of the pupal back, abdomen, and side postures, this proposed method effectively addresses interference caused by changing pupal positions [,].

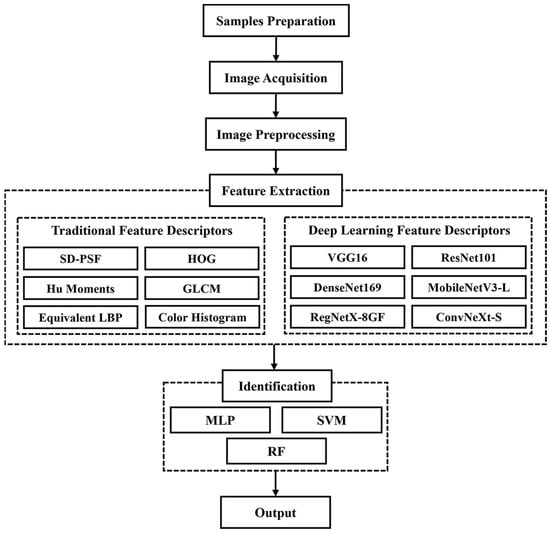

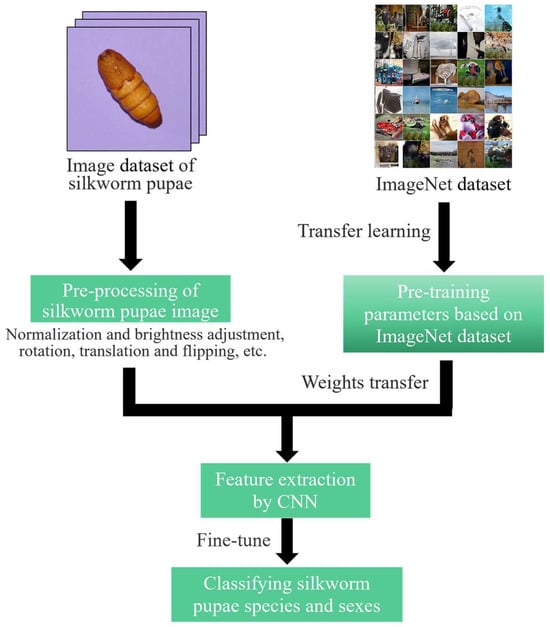

Figure 1 shows a flowchart of the proposed method. First, images of pupae from both sexes across five species were captured. Then, six traditional descriptors (self-designed pupal shape feature (SD-PSF), Hu moments, histogram of oriented gradients (HOG), equivalent local binary pattern (LBP), gray-level co-occurrence matrix (GLCM), and color histogram) and six deep learning descriptors (VGG16, ResNet101, DenseNet169, MobileNetV3-L, RegNetX-8GF, and ConvNeXt-S) were used to extract features from pupae images. The extracted features were then input to three classifiers, namely multilayer perceptron (MLP), support vector machine (SVM), and random forest (RF), to perform the recognition task.

Figure 1.

Flowchart of the identification of silkworm pupae species and sex.

In summary, this paper makes the following contributions:

- (1) An economical and intelligent solution for sericulture breeding has been proposed.

- (2) A global modeling approach was proposed that, using the constructed pupae image set, addressed posture-related identification challenges.

- (3) The {HOG + ConvNeXt-S + MLP} model with the top recognition rate was screened based on an integrated analysis.

- (4) The advantages and disadvantages of the proposed model were compared with other techniques.

2. Materials and Methods

2.1. Sample Preparation

Live pupae from five species bred in autumn 2022 were sourced from the Institute of Sericulture Science and Technology Research in Chongqing, China, including the 7532 and 872 species from the Japanese system and the 871, FuRong, and HaoYue species from the Chinese system. Furthermore, skilled workers selected 120 female and 120 male pupae from each species based on the gonadal texture of their tails.

2.2. Image Acquisition and Data Partitioning

To capture pupae images, a system was configured using a digital single-lens reflex camera (D90, Nikon, Tokyo, Japan), paired with a zoom lens (AF-S NIKKOR 18–105 mm f/3.5–5.6 G ED, Nikon, Tokyo, Japan) mounted on a tripod (CVT–999RM, YunTeng, Zhongshan, China). The shooting distance of the lens to the pupa was 34 cm. Under indirect natural light, images were acquired with an F5.6 aperture, sensitivity of 100, automatic exposure, and white balance. The captured images were saved in JPEG format with a resolution of 2848 × 4288 pixels.

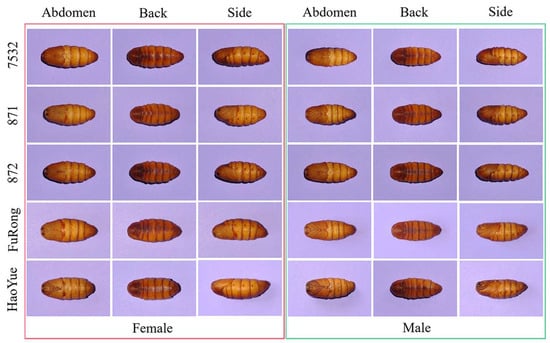

Image acquisition for each species was conducted between the 9th and 11th days of the pupal stage. As shown in Figure 2, each pupa was imaged from the back, abdomen, and side views; therefore, a dataset of 3600 images was collected, representing male and female pupae from 5 species (10 classes with 360 images each). Given the significant differences in pupae weight by species and sex [,], weight served as the basis for data partitioning. For traditional approaches, the datasets were split at a ratio of 8:2 for training and testing. For deep learning and fusion approaches, the split was 8:1:1 for the training, validation, and testing sets.
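The dataset arithmetic and the two split ratios can be checked with a short sketch. It is only an illustration under stated assumptions: the weight-based partitioning described above is not reproduced (pupal weights are not given here), so a seeded per-class shuffle stands in for it, and the split is done at the image level. The names `build_index` and `stratified_split` are hypothetical.

```python
import random

SPECIES = ["7532", "871", "872", "FuRong", "HaoYue"]
SEXES = ["Female", "Male"]
POSTURES = ["back", "abdomen", "side"]
PUPAE_PER_CLASS = 120  # 120 female and 120 male pupae per species


def build_index():
    """Return one (class_label, pupa_id, posture) record per image."""
    return [
        (f"{species}{sex}", pupa_id, posture)
        for species in SPECIES
        for sex in SEXES
        for pupa_id in range(PUPAE_PER_CLASS)
        for posture in POSTURES
    ]


def stratified_split(records, ratios, seed=0):
    """Split class by class so every subset keeps the class balance.

    Assumption: a seeded shuffle replaces the weight-based ordering
    used in the study, which cannot be reproduced from the text alone.
    """
    rng = random.Random(seed)
    by_class = {}
    for record in records:
        by_class.setdefault(record[0], []).append(record)

    total = sum(ratios)
    subsets = [[] for _ in ratios]
    for label in sorted(by_class):
        items = by_class[label][:]
        rng.shuffle(items)
        start = 0
        for i, ratio in enumerate(ratios):
            # The last subset takes the remainder to avoid rounding loss.
            if i == len(ratios) - 1:
                end = len(items)
            else:
                end = start + len(items) * ratio // total
            subsets[i].extend(items[start:end])
            start = end
    return subsets


records = build_index()
train, test = stratified_split(records, (8, 2))
train_dl, val_dl, test_dl = stratified_split(records, (8, 1, 1))
print(len(records), len(train), len(test), len(train_dl), len(val_dl), len(test_dl))
```

With 360 images per class, the 8:2 split gives 288 training and 72 testing images per class, and the 8:1:1 split gives 288, 36, and 36.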

Figure 2.

An example of back, abdomen, and side posture images of five species of male and female pupae. 7532, 871, 872, FuRong, HaoYue represent the five silkworm species.

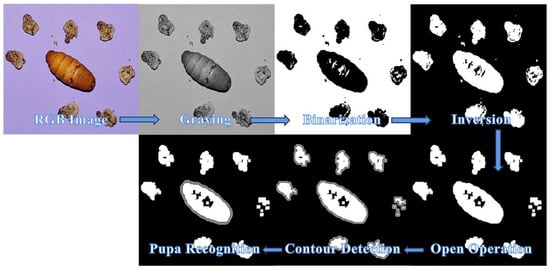

2.3. Image Preprocessing

To precisely separate the pupa from the background, the image preprocessing flow was designed on sampled images and involved grayscale conversion, binarization, image inversion, morphological opening, and contour detection. Figure 3 illustrates these operations, during which an area threshold was applied to all identified contours to reduce interference from molts during image acquisition. After identifying the pupa, each image was cropped to 320 × 320 pixels (centered on the pupa’s center of mass) and then processed for feature extraction.

Figure 3.

Pupa detection under strong interference.
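The last two steps of this flow (area filtering and centered cropping) can be sketched without any image library. This is a minimal illustration, not the authors' implementation: it starts from an already binarized mask, uses a breadth-first region search in place of a library contour detector, and assumes that the largest region surviving the area threshold is the pupa. All function names are hypothetical.

```python
from collections import deque


def connected_regions(mask):
    """Return a list of pixel lists, one per 4-connected foreground region."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    regions = []
    for y in range(height):
        for x in range(width):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue, pixels = deque([(y, x)]), []
            while queue:
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < height and 0 <= nx < width \
                            and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            regions.append(pixels)
    return regions


def locate_pupa(mask, min_area):
    """Discard regions smaller than min_area (e.g. molt debris) and return
    the centroid (row, col) of the largest remaining region, or None."""
    kept = [r for r in connected_regions(mask) if len(r) >= min_area]
    if not kept:
        return None
    pupa = max(kept, key=len)  # assumption: the pupa is the largest blob
    cy = sum(p[0] for p in pupa) / len(pupa)
    cx = sum(p[1] for p in pupa) / len(pupa)
    return cy, cx


def crop_window(center, size, height, width):
    """Return (top, left, bottom, right) of a size x size window centered on
    `center`, shifted if needed so it stays inside the image.
    Assumes the image is at least size x size."""
    cy, cx = center
    top = min(max(int(cy) - size // 2, 0), height - size)
    left = min(max(int(cx) - size // 2, 0), width - size)
    return top, left, top + size, left + size
```

For the images described above, `crop_window(center, 320, height, width)` would give the bounds of the 320 × 320 crop; the area threshold value itself is not stated in the text and would have to be tuned on sample images.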

2.4. Feature Extraction

2.4.1. Traditional Feature-Based Approaches

Features function as distinctive attributes for classifying input patterns. Traditional features describe basic visual attributes, including shape, texture, color, and transform-based features []. Herein, six traditional descriptors were employed to extract features: SD-PSF, HOG, Hu moments, equivalent LBP, GLCM, and color histogram (Table 1).

Table 1.

Number of features extracted by six traditional descriptors and their descriptions.

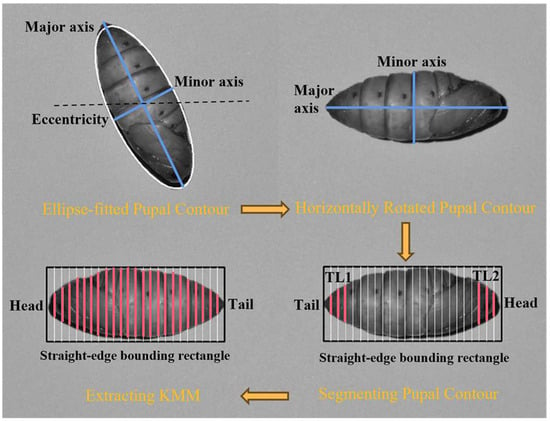

The SD-PSF is derived from the pupal contour, and it includes parameters such as perimeter, area, and dispersity, as well as the minimum, average, and maximum radii, and the radius ratio. It also covers the major and minor axes, aspect ratio, rectangularity, circularity, and compactness by fitting the contour to shapes, e.g., rectangle, circle, and ellipse. In addition, the minor axis at K/24 of the pupal major axis (KMM, where K ranges from 1 to 23) represents the change in the contour’s curvature. As shown in Figure 4, the pupal contour can be placed horizontally using its major axis and the eccentricity of its minimum-fit ellipse. Furthermore, the KMM can be calculated using its straight-edge bounding rectangle. In addition, the pupal head and tail are determined by comparing TL1 and TL2. Herein, if TL2 > TL1, the pupal contour is flipped to maintain the order for feature extraction.

Figure 4.

The process of extracting KMM. The black dashed line represents the horizontal line; the pink solid line and its length represent the KMM and its size. TL: total length.
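The KMM measurement can be illustrated on a binary mask. The sketch below assumes the contour has already been rotated so that its major axis is horizontal and, where needed, flipped head to tail; it then measures the vertical extent of the mask at K/24 of the bounding-rectangle length for K = 1 to 23. The function name `kmm_profile` and the use of a filled mask rather than a contour are assumptions made for illustration.

```python
def kmm_profile(mask, parts=24):
    """Return the minor-axis widths at K/parts of the major axis, K = 1..parts-1.

    `mask` is a list of rows of 0/1 values with the pupa laid out horizontally.
    """
    columns = [x for row in mask for x, value in enumerate(row) if value]
    left, right = min(columns), max(columns)
    length = right - left  # major-axis length of the bounding rectangle

    profile = []
    for k in range(1, parts):
        x = left + (k * length) // parts
        rows = [y for y, row in enumerate(mask) if row[x]]
        # Width is the vertical extent of the shape in this column.
        profile.append(rows[-1] - rows[0] + 1 if rows else 0)
    return profile


# Usage on a synthetic ellipse (semi-axes 48 and 20 pixels).
ellipse = [
    [1 if ((x - 50) / 48) ** 2 + ((y - 25) / 20) ** 2 <= 1 else 0 for x in range(101)]
    for y in range(51)
]
widths = kmm_profile(ellipse)
print(len(widths), max(widths))
```

For a symmetric ellipse the profile is symmetric and peaks at K = 12; for a real pupa the asymmetry between the head and tail halves is what makes the 23 values informative.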

HOG is a descriptor characterizing an image’s local gradient direction and intensity, and is robust to illumination shifts and invariant to local geometric and photometric transformations []. Hu moments, geometrically invariant moments formed from second- and third-order central moments, excel in pattern recognition and image matching because of their robustness against zooming, translation, rotation, and mirroring [].
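The core of HOG, a magnitude-weighted histogram of gradient orientations per cell followed by normalization, can be sketched as follows. This is a simplified illustration: the cell size, the nine unsigned orientation bins, the lack of bin interpolation, and the normalization over a single cell (rather than a block of cells) are all assumptions, not the settings used in this study.

```python
import math


def cell_histogram(cell, bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations
    (0 to 180 degrees) over the interior pixels of one cell."""
    height, width = len(cell), len(cell[0])
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]  # central differences
            gy = cell[y + 1][x] - cell[y - 1][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += magnitude
    return hist


def l2_normalize(vector, eps=1e-6):
    """Scale the vector to unit length; eps guards against division by zero."""
    norm = math.sqrt(sum(v * v for v in vector) + eps * eps)
    return [v / norm for v in vector]


# A horizontal intensity ramp has a purely horizontal gradient (bin 0).
ramp = [[10 * x for x in range(8)] for _ in range(8)]
brighter = [[2 * value for value in row] for row in ramp]
print(l2_normalize(cell_histogram(ramp))[0])
print(l2_normalize(cell_histogram(brighter))[0])
```

Doubling the image contrast doubles every gradient magnitude, but the normalized histogram is essentially unchanged, which is the source of the robustness to illumination shifts mentioned above.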

GLCM, which is frequently employed in statistical texture analysis, extracts texture features from a gray-level co-occurrence matrix and captures an image’s details, including direction, interval, change range, and speed []. On the other hand, LBP operators highlight the local image texture structure and resist gray-scale variations []. Among them, the equivalent LBP takes advantage of numerical variations in the sequence of differential values between a central pixel and its surrounding pixels.
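A small sketch may help make the LBP idea concrete. It assumes that "equivalent LBP" corresponds to the uniform-pattern LBP of Ojala et al., in which an 8-bit pattern is kept as its own histogram bin only if its circular bit sequence contains at most two 0/1 transitions; the 3 × 3 neighborhood and the function names are illustrative choices.

```python
def lbp_code(patch):
    """8-bit LBP code of the center pixel of a 3x3 patch.
    Neighbors are read clockwise starting at the top-left corner."""
    center = patch[1][1]
    ring = [patch[0][0], patch[0][1], patch[0][2], patch[1][2],
            patch[2][2], patch[2][1], patch[2][0], patch[1][0]]
    code = 0
    for bit, value in enumerate(ring):
        if value >= center:
            code |= 1 << bit
    return code


def transitions(code, bits=8):
    """Number of 0/1 changes when the pattern is read as a circular sequence."""
    rotated = ((code << 1) | (code >> (bits - 1))) & ((1 << bits) - 1)
    return bin(code ^ rotated).count("1")


# Uniform patterns have at most two transitions; each gets its own bin.
UNIFORM_CODES = [code for code in range(256) if transitions(code) <= 2]
BIN_OF = {code: index for index, code in enumerate(UNIFORM_CODES)}


def lbp_bin(code):
    """Histogram bin of a code; all non-uniform codes share one extra bin."""
    return BIN_OF.get(code, len(UNIFORM_CODES))


patch = [[52, 60, 61], [40, 55, 70], [39, 41, 90]]
shifted = [[value + 10 for value in row] for row in patch]
print(lbp_code(patch), lbp_code(shifted), len(UNIFORM_CODES))
```

For eight neighbors there are 58 uniform patterns, so the histogram has 59 bins under this assumption. Adding a constant to every pixel leaves the code unchanged, which illustrates the resistance to gray-scale variation.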

Color histograms serve as statistical representations of the frequency distribution of color intensity levels in images []. The color histogram, which decouples color information from grayscale and characterizes images based on hue, saturation, and intensity, is particularly well-suited for research in machine vision [].

2.4.2. Deep Learning Feature-Based Approaches

Convolutional neural networks (CNNs) can extract features directly from images through weight sharing and convolutional processes []. Table 2 describes the six CNN architectures explored in this study: VGG16 [], ResNet101 [], DenseNet169 [], MobileNetV3-L [], RegNetX-8GF [], and ConvNeXt-S [].

Table 2.

Details of the six CNN architectures.

Figure 5 shows the research framework for the deep learning approaches. Initially, data augmentation was employed to enhance training performance and prevent model overfitting []. The augmentation applied transformations that can occur when photographing the pupae, such as flips, translations, rotations, and brightness adjustments. Transfer learning was used to expedite model convergence and boost accuracy []. Specifically, weights pretrained on ImageNet were used to initialize the CNN model, followed by fine-tuning at a lower learning rate (LR).

Figure 5.

The research framework for deep learning feature-based approaches.

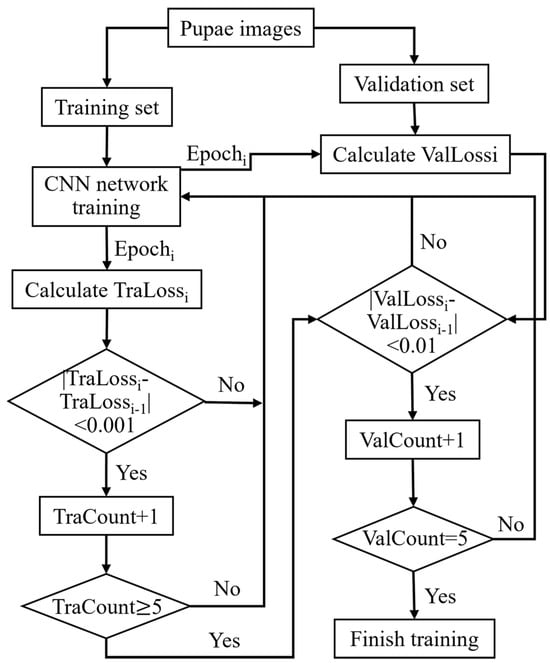

For both training and validation sets, the batch size was set to 64. During CNN training, stochastic gradient descent with a momentum of 0.9 and cross-entropy were employed as the optimizer and loss function, respectively. A dynamic LR strategy was used, starting with an initial LR of 0.0001 and reducing it by a factor of 0.8 every five epochs of training. As shown in Figure 6, the early stopping method was employed to handle overfitting and halt training early. Herein, convergence was considered to have begun once the training loss fluctuated by <0.001 over five epochs. If the validation loss then shifted by <0.005 over another five epochs, the model was considered fully converged, and training was terminated early. The saved model parameters were those at peak validation accuracy.
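The dynamic LR strategy amounts to a step decay, which can be written as a one-line function. The sketch assumes that "reducing it by a factor of 0.8" means multiplying the LR by 0.8 and that epochs are counted from zero, so the first reduction takes effect after five completed epochs; `step_lr` is a hypothetical name.

```python
def step_lr(epoch, initial_lr=1e-4, factor=0.8, step=5):
    """Learning rate for a zero-based epoch index under step decay."""
    return initial_lr * factor ** (epoch // step)


for epoch in (0, 4, 5, 10, 20):
    print(epoch, step_lr(epoch))
```

Under these assumptions the LR is 1e-4 for epochs 0 to 4, 8e-5 for epochs 5 to 9, 6.4e-5 for epochs 10 to 14, and so on. Deep learning frameworks usually provide the same rule as a built-in scheduler.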

Figure 6.

Flowchart of the early stopping method. Tra: train, Val: validation.
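The two-stage stopping rule can be sketched on recorded loss curves. The interpretation here is an assumption: "fluctuation" is taken as the difference between the maximum and minimum loss within a five-epoch window, and the validation check uses five epochs that all come after the training loss was first judged stable. The function names are hypothetical.

```python
def fluctuation(values):
    """Spread of a loss window (assumed meaning of 'fluctuated by')."""
    return max(values) - min(values)


def early_stop_epoch(train_loss, val_loss, window=5, train_tol=1e-3, val_tol=5e-3):
    """Return the zero-based epoch at which training would stop, or None."""
    converging_since = None
    for epoch in range(len(train_loss)):
        recent = slice(epoch + 1 - window, epoch + 1)
        if converging_since is None:
            # Stage 1: training loss stable over the last `window` epochs.
            if epoch + 1 >= window and fluctuation(train_loss[recent]) < train_tol:
                converging_since = epoch
        elif epoch - converging_since >= window:
            # Stage 2: validation loss stable over a later window.
            if fluctuation(val_loss[recent]) < val_tol:
                return epoch
    return None


def best_epoch(val_accuracy, stop_epoch=None):
    """Epoch whose parameters are kept: the one with peak validation accuracy."""
    end = len(val_accuracy) if stop_epoch is None else stop_epoch + 1
    history = val_accuracy[:end]
    return history.index(max(history))


train_loss = [1.0, 0.5, 0.2] + [0.1] * 15
val_loss = [0.9, 0.7, 0.5, 0.4, 0.35, 0.3, 0.28, 0.26] + [0.25] * 10
val_acc = [0.5, 0.7, 0.8, 0.85, 0.9, 0.92, 0.94, 0.95, 0.96, 0.97,
           0.99, 0.98, 0.98, 0.98, 0.98, 0.98, 0.98, 0.98]
stop = early_stop_epoch(train_loss, val_loss)
print(stop, best_epoch(val_acc, stop))
```

In this toy run the training loss is stable from epoch 7, the validation loss is stable over epochs 8 to 12, and training stops at epoch 12 while the parameters from epoch 10 are kept.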

2.5. Identification

In this step, features extracted from the pupa image are input into a classifier for identification. The classifiers include MLP, SVM, and RF, and their parameters and settings are shown in Table 3. For the traditional feature-based approaches, the optimal model hyperparameters were determined through a grid search based on the highest classifier accuracy in five-fold cross-validation. The hyperparameters under consideration include the hidden layer size of the MLP, the penalty factor and kernel function parameter of the SVM, and the number of decision trees in the RF.

Table 3.

Details of parameter settings for each classifier.
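The grid search with five-fold cross-validation can be sketched generically. This is an illustration of the procedure only: the interleaved fold assignment, the `fit_predict` callback interface, and the toy threshold classifier are assumptions and do not correspond to the MLP, SVM, or RF settings in Table 3.

```python
from itertools import product


def k_fold_indices(n_samples, k=5):
    """Yield (train_idx, val_idx) pairs; fold i holds every k-th sample from i."""
    for fold in range(k):
        val = [i for i in range(n_samples) if i % k == fold]
        train = [i for i in range(n_samples) if i % k != fold]
        yield train, val


def grid_search(param_grid, fit_predict, features, labels, k=5):
    """Return (best_params, best_mean_accuracy) over all parameter combinations.

    `fit_predict(params, train_x, train_y, val_x)` must return predicted labels.
    """
    names = sorted(param_grid)
    best_params, best_score = None, -1.0
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        scores = []
        for train, val in k_fold_indices(len(labels), k):
            predicted = fit_predict(
                params,
                [features[i] for i in train],
                [labels[i] for i in train],
                [features[i] for i in val],
            )
            correct = sum(p == labels[i] for p, i in zip(predicted, val))
            scores.append(correct / len(val))
        mean_score = sum(scores) / k
        if mean_score > best_score:
            best_params, best_score = params, mean_score
    return best_params, best_score


def threshold_classifier(params, train_x, train_y, val_x):
    """Toy stand-in for a real classifier: predicts 1 at or above a threshold."""
    return [1 if x >= params["threshold"] else 0 for x in val_x]


features = list(range(20))
labels = [0 if x < 10 else 1 for x in features]
print(grid_search({"threshold": [5, 10, 15]}, threshold_classifier, features, labels))
```

In practice the callback would train one of the three classifiers on the training folds, and the grid would range over, for example, the MLP hidden layer size or the number of trees in the RF.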

2.6. Performance Evaluation

The performance of the proposed model was evaluated based on accuracy, precision, recall, and F1-score. Identifying pupae species and sex involves multi-class classifications; therefore, the arithmetic mean of the metrics was used for all classes, as described in Equations (1)–(4).
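Equations (1)–(4) are not reproduced in this text. One common macro-averaged formulation that matches the description (per-class metrics averaged arithmetically over all classes) is sketched below. Whether the study averaged per-class F1-scores or combined the averaged precision and recall is not stated, so the per-class average used here is an assumption, as is the function name.

```python
def macro_metrics(confusion):
    """Compute accuracy and macro-averaged precision, recall, and F1-score.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    precisions, recalls, f1_scores = [], [], []
    for c in range(n):
        tp = confusion[c][c]
        fp = sum(confusion[r][c] for r in range(n)) - tp  # predicted c, wrong
        fn = sum(confusion[c]) - tp                       # true c, missed
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1_scores.append(f1)
    return {
        "accuracy": sum(confusion[c][c] for c in range(n)) / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1_scores) / n,
    }


# Two-class toy example; the study itself uses up to 10 classes.
print(macro_metrics([[9, 1], [2, 8]]))
```

When every class has the same number of test samples, macro-averaged recall equals overall accuracy, which is a useful sanity check on reported values.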

2.7. Hardware and Software

This study was executed on a workstation equipped with a Xeon® Platinum 8353P @ 2.60 GHz processor (Intel, Santa Clara, CA, USA) and a GeForce RTX 3090 24 GB GPU (Nvidia, Santa Clara, CA, USA), and the primary programming language was Python.

3. Results and Discussion

3.1. Traditional Feature-Based Approaches

Table 4 shows the accuracy of the traditional descriptors for identifying pupae species and sex separately and simultaneously. Various descriptors, including singles and hybrids of two descriptors, were evaluated.

Table 4.

Classification accuracy of traditional feature-based approaches.

For the single descriptors, the MLP and SVM classifiers using {HOG} and RF using {Color Histogram} achieved peak accuracy rates of 88.47%, 89.44%, and 83.61% in {Species + Sex}, respectively. For species recognition, they reached 91.53%, 92.08%, and 87.92%, respectively. Conversely, {GLCM} was the least effective descriptor among all classifiers.

The hybrid of two descriptors was realized by concatenating the feature vectors of two single descriptors. Here, both MLP and SVM achieved 96.81% in species recognition using {HOG + Color Histogram} and 94.44% and 94.58%, respectively, for {Species + Sex} identification. Additionally, using {SD-PSF + Color Histogram}, the best recognition rates achieved by RF were 93.06% for species and 89.72% for {Species + Sex}. These results surpass the single descriptors, suggesting that the machine learning classifier can learn higher-dimensional vectors and achieve better classification. In contrast, {Hu Moments + GLCM} and {Equivalent LBP + GLCM} were the two least effective hybrid descriptors. Furthermore, {SD-PSF} and related descriptors excelled in sex recognition. Specifically, MLP and SVM with {SD-PSF + Equivalent LBP} and RF with {SD-PSF + Hu Moments} reached recognition rates of 97.78%, 97.50%, and 96.11%, respectively.

3.2. Deep Learning Feature-Based Approaches

Table 5 shows the classification accuracy of the deep learning descriptors in terms of pupae species and sexes.

Table 5.

Classification accuracy of deep learning feature-based approaches.

For single descriptors, MLP, SVM, and RF with {ConvNeXt-S} achieved the highest accuracies for {Species, Sex, Species + Sex} at {97.20%, 96.97%, 95.28%}, {98.74%, 98.64%, 97.68%}, and {97.45%, 96.89%, 94.72%}, respectively. Thus, {ConvNeXt-S} was considered the best choice for the single descriptor. In contrast, {MobileNetV3-L} and {RegNetX-8GF} were the least effective descriptors among all classifiers.

For the hybrids of two descriptors that include {ConvNeXt-S}, MLP achieved the highest recognition rates for {Species, Sex, Species + Sex} using {ResNet101 + ConvNeXt-S} at {98.08%, 97.73%, 95.98%}, and the SVM reached {98.36%, 97.88%, 96.46%} with {DenseNet169 + ConvNeXt-S}. The RF obtained the highest recognition rates of {97.17%, 90.58%} for {Sex, Species + Sex} using {RegNetX-8GF + ConvNeXt-S}. However, none of these hybrids surpassed the best single-descriptor results, which were obtained by the SVM with {ConvNeXt-S}. This indicates that deep learning performance relies not only on feature dimensions but also on the dataset scale and the interplay between descriptor structures. Moreover, descriptors linked to {MobileNetV3-L} were found to underperform in the classification tasks.

3.3. Fusion Approaches

Based on the above experimental results, this study selected the optimal traditional and deep learning descriptors from each classifier to conduct fusion experiments. Before the descriptors were concatenated, each descriptor’s feature dimensions were compressed to 500. As shown in Table 6, the MLP with {HOG + ConvNeXt-S} reached recognition rates of {99.09%, 99.09%, 98.40%} for {Species, Sex, Species + Sex}, while the RF with {Color Histogram + RegNetX-8GF + ConvNeXt-S} obtained the lowest accuracy at {66.84%, 89.92%, 73.01%} for the same categories. Even though the accuracy of the {HOG + ResNet101 + ConvNeXt-S + MLP} model is lower than that of the {HOG + ConvNeXt-S + MLP} model, and the accuracy of the {HOG + DenseNet169 + ConvNeXt-S + SVM} model is lower than that of the {HOG + ConvNeXt-S + SVM} model, both can serve as references for more complex future classification tasks.

Table 6.

Classification accuracy of fusion approaches.

3.4. Comparison of Results by Different Feature Extraction Approaches

The experimental results in Table 4, Table 5 and Table 6 show that the {HOG + ConvNeXt-S + MLP} model achieved the top recognition rates of {99.09%, 99.09%, 98.40%} for {Species, Sex, Species + Sex}. This model outperformed the optimal traditional model {HOG + Color Histogram + SVM} by {2.28, 2.15, 3.82} percentage points and the optimal deep learning model {ConvNeXt-S + SVM} by {0.35, 0.45, 0.72} percentage points.

Table 7 compares the precision, recall, and F1-score of three models. For all models, precision slightly exceeds recall, suggesting fewer false positives than false negatives. Based on these results, the features extracted by the deep learning approaches exhibit superior performance compared to the traditional approaches. For example, with {ConvNeXt-S} alone, the precision, recall, and F1-score of the SVM (0.9772, 0.9767, and 0.9769) surpass those obtained with {HOG + Color Histogram} (0.9468, 0.9458, and 0.9463). In addition, the {HOG + ConvNeXt-S + MLP} model achieved the highest precision, recall, and F1-score (0.9840, 0.9838, and 0.9839).

Table 7.

Precision, recall, and F1-score of the selected descriptor and classifier combinations.

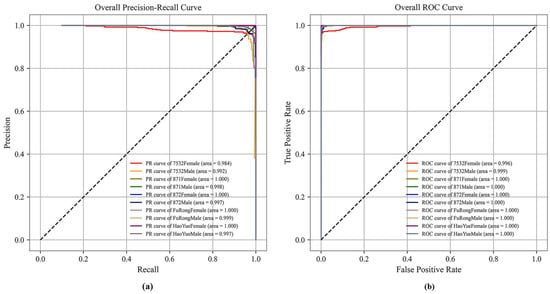

Figure 7 shows the precision–recall (PR) and receiver operating characteristic (ROC) curves to visualize model performance. The area under the PR and ROC curves for all classes in the {HOG + ConvNeXt-S + MLP} model ranges from 0.984 to 1.0 and 0.996 to 1.0, respectively. Here, the areas under the PR and ROC curves for 871Female, 872Female, FuRongFemale, and HaoYueFemale all reached 1.0. This reflects the high reliability of machine and deep learning methods for recognizing pupae species and sex through images.

Figure 7.

(a) PR curve and (b) ROC curve of the {HOG + ConvNeXt-S + MLP} model.
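The per-class area under the ROC curve can be computed in a one-vs-rest fashion. The sketch below uses the rank formulation, in which the area equals the probability that a randomly chosen positive sample scores higher than a randomly chosen negative one (ties counted as one half). How the curves in Figure 7 were actually computed is not stated, so this is an illustrative assumption, and the function names are hypothetical.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve for binary labels (1 = positive)."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > q) + 0.5 * (p == q) for p in positives for q in negatives)
    return wins / (len(positives) * len(negatives))


def one_vs_rest_auc(class_scores, true_classes, target):
    """AUC for one class: `target` is positive, every other class is negative.

    class_scores[i] maps class names to the model score for sample i.
    """
    scores = [row[target] for row in class_scores]
    labels = [1 if name == target else 0 for name in true_classes]
    return roc_auc(scores, labels)


print(roc_auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))
```

An area of 1.0, as reported for several female classes, means that every sample of that class received a higher score for it than any sample of the other nine classes.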

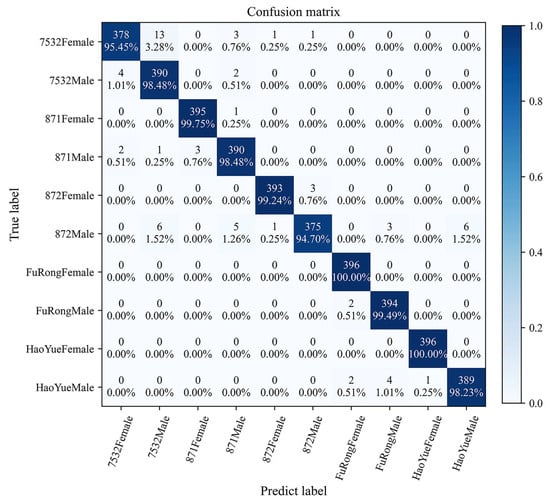

Figure 8 presents the confusion matrix, which gives a more intuitive view of the classification efficacy of the {HOG + ConvNeXt-S + MLP} model. The accuracy for all classes under this model ranges from 94.70% to 100%, with eight classes exceeding 98%. In particular, the classification accuracies for the FuRongFemale and HaoYueFemale classes are 100%, consistent with the PR and ROC curve results. In addition, misclassification mainly occurs between pupae of different sexes within the same species and those of the same sex across different species. On the one hand, the model largely misclassifies 7532Male as 7532Female (and vice versa). On the other hand, it predominantly misclassifies 872Male as 7532Male, 871Male, FuRongMale, or HaoYueMale.

Figure 8.

The confusion matrix of the {HOG + ConvNeXt-S + MLP} model.

3.5. Comparison with Other Techniques

Compared to MRI [], X-ray imaging [], HSI [], and NIR [], the proposed global model has a lower cost; it is also nondestructive and nonradiative, unlike X-ray, DNA, and amino acid analysis [,]. The proposed model is based on stable genetic pupae traits unaffected by breeding conditions, thereby reducing the frequent modeling needs of NIR. It can also mitigate the effects of pupal postures on feature extraction and bypass precise positioning for methods based on the gonadal traits [,]. Furthermore, to the best of our knowledge, this is the first study to introduce global modeling to predict pupae species and sex, and it achieved 99.09% accuracy for separate identification and 98.40% for simultaneous recognition. Thus, the proposed global model is more suitable for practical application in sericulture.

4. Conclusions

Based on a dataset of posture images of silkworm pupae, this study aimed to establish a global model to accurately identify the species and sex of pupae separately or simultaneously. Three machine learning classifiers (MLP, SVM, and RF) employed traditional descriptors (SD-PSF, HOG, Hu moments, equivalent LBP, GLCM, and color histogram) and deep learning descriptors (VGG16, ResNet101, DenseNet169, MobileNetV3-L, RegNetX-8GF, and ConvNeXt-S) to construct classification models. Next, the performance of the traditional, deep learning, and fusion approaches was evaluated and compared. The findings demonstrate that the {HOG + ConvNeXt-S + MLP} model achieved top recognition rates of 99.09%, 99.09%, and 98.40% for species, sex, and species + sex, respectively. Additionally, its precision, recall, and F1-score were 0.9840, 0.9838, and 0.9839, and the areas under the PR and ROC curves for all classes were at least 0.984 and 0.996, respectively. These results validate the effectiveness of machine learning and deep learning in recognizing the species and sexes of pupae through image analysis. Future research will collect a broader range of pupal images from different species and sexes to assess the proposed model’s generalizability under varied conditions. This will include the development of a finer-grained neural network based on HOG and ConvNeXt with the aim of improving the detection of subtle variations across species and sexes. Additional efforts will focus on modeling using datasets from diverse breeding batches to precisely identify stable hereditary phenotypic traits within individual pupal species.

Author Contributions

Conceptualization, H.H. and T.Z.; methodology, H.H.; software, H.H. and S.Z.; validation, H.H. and X.C.; formal analysis, H.H.; investigation, H.H., X.C., Y.W. and L.S.; resources, T.Z., B.X. and L.S.; data curation, H.H. and Y.W.; writing – original draft preparation, H.H.; writing – review and editing, T.Z. and S.Z.; visualization, H.H. and D.Z.; supervision, T.Z. and F.D.; project administration, L.S. and B.X.; funding acquisition, T.Z., F.D., H.H. and S.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the China Agriculture Research System of the MOF and MARA (Grant Nos. CARS-18-ZJ0102 and CARS-18-ZJ0103), the Chongqing Postgraduate Research Innovation Project (Grant No. CYS23225), the Chongqing Business Development Special Fund (No. 20230228001237334), and the Sichuan Science and Technology Program (No. 2023NSFSC0498).

Institutional Review Board Statement

Not applicable. This experiment was only an animal identification study without animal ethology.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data will be available from the corresponding author upon reasonable request. The data are not publicly available because they are part of an ongoing study.

Acknowledgments

The authors would like to thank their schools and colleges, as well as the funders of the project. All support and assistance are sincerely appreciated. Additionally, we sincerely appreciate the work of the editor and the reviewers of the present paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Guangxi Sericulturists’ Income from Cocoon Sales Surpassed 20 Billion Yuan for the First Time Last Year. Available online: http://www.moa.gov.cn/ztzl/wcbgclz/qglb/202209/t20220923_6411531.htm (accessed on 20 August 2022).

- Guo, F.; He, F.; Tao, D.; Li, G. Automatic Exposure Correction Algorithm for Online Silkworm Pupae (Bombyx Mori) Sex Classification. Comput. Electron. Agric. 2022, 198, 107108. [Google Scholar] [CrossRef]

- Tao, D.; Wang, Z.; Li, G.; Qiu, G. Accurate Identification of the Sex and Species of Silkworm Pupae Using Near Infrared Spectroscopy. J. Appl. Spectrosc. 2018, 85, 949–952. [Google Scholar] [CrossRef]

- Kim, K.; Seo, S.; Kim, M.; Ji, S.; Sung, G.; Kim, Y.; Ju, W.; Kwon, H.; Sohn, B.; Kang, P. Breeding of Biparental Sex-Limited Larval Marking Yellow Cocoon Variety. Int. J. Ind. Entomol. 2016, 32, 54–59. [Google Scholar] [CrossRef][Green Version]

- Ma, S.; Zhang, S.; Wang, F.; Liu, Y.; Liu, Y.; Xu, H.; Liu, C.; Lin, Y.; Zhao, P.; Xia, Q. Highly Efficient and Specific Genome Editing in Silkworm Using Custom TALENs. PLoS ONE 2012, 7, e45035. [Google Scholar] [CrossRef]

- Kiuchi, T.; Koga, H.; Kawamoto, M.; Shoji, K.; Sakai, H.; Arai, Y.; Ishihara, G.; Kawaoka, S.; Sugano, S.; Shimada, T.; et al. A Single Female-Specific Pirna Is the Primary Determiner of Sex in The Silkworm. Nature 2014, 509, 633–636. [Google Scholar] [CrossRef]

- Li, S.; Yu, X. Amino acid composition analysis of male and female silkworm silk. Jiangsu Seric. 2012, 34, 12–15. [Google Scholar]

- Liu, C.; Ren, Z.H.; Wang, H.Z.; Yang, P.Q.; Zhang, X.L. Analysis on Gender of Silkworms by MRI Technology. In Proceedings of the 2008 International Conference on BioMedical Engineering and Informatics, Sanya, China, 27–30 May 2008; IEEE: Sanya, China, 2008; pp. 8–12. [Google Scholar]

- Cai, J.; Yuan, L.; Liu, B.; Sun, L. Nondestructive Gender Identification of Silkworm Cocoons Using X-ray Imaging with Multivariate Data Analysis. Anal. Methods 2014, 6, 7224–7233. [Google Scholar] [CrossRef]

- Tao, D.; Wang, Z.; Li, G.; Xie, L. Sex Determination of Silkworm Pupae Using VIS-NIR Hyperspectral Imaging Combined with Chemometrics. Spectrochim. Acta A Mol. Biomol. Spectrosc. 2019, 208, 7–12. [Google Scholar] [CrossRef]

- Tao, D.; Qiu, G.; Li, G. A Novel Model for Sex Discrimination of Silkworm Pupae from Different Species. IEEE Access 2019, 7, 165328–165335. [Google Scholar] [CrossRef]

- Jin, T.; Liu, L.; Tang, X.; Chen, H. Differentiation of Male, Female and Dead Silkworms While in the Cocoon by near Infrared Spectroscopy. J. Infrared Spectrosc. 1995, 3, 89. [Google Scholar] [CrossRef]

- Joseph Raj, A.N.; Sundaram, R.; Mahesh, V.G.V.; Zhuang, Z.; Simeone, A. A Multi-Sensor System for Silkworm Cocoon Gender Classification via Image Processing and Support Vector Machine. Sensors 2019, 19, 2656. [Google Scholar] [CrossRef]

- Yan, H.; Liang, M.; Guo, C.; Zhang, Y.; Zhang, G. A study on method of online discrimination of male and female silkworm pupae by near infrared spectroscopy. Sci. Seric. 2018, 44, 283–289. [Google Scholar] [CrossRef]

- Lin, X.; Zhuang, Y.; Dan, T.; Guanglin, L.; Xiaodong, Y.; Jie, S.; Xuwen, L. The Model Updating Based on near Infrared Spectroscopy for the Sex Identification of Silkworm Pupae from Different Varieties by A Semi-Supervised Learning with Pre-Labeling Method. Spectrosc. Lett. 2019, 52, 642–652. [Google Scholar] [CrossRef]

- Ma, Y.; Xu, Y.; Yan, H.; Zhang, G. On-Line Identification of Silkworm Pupae Gender by Short-Wavelength near Infrared Spectroscopy and Pattern Recognition Technology. J. Infrared Spectrosc. 2021, 29, 207–215. [Google Scholar] [CrossRef]

- Tao, D.; Li, G.; Qiu, G.; Chen, S.; Li, G. Different Variable Selection and Model Updating Strategies about Sex Classification of Silkworm Pupae. Infrared Phys. Technol. 2022, 127, 104471. [Google Scholar] [CrossRef]

- Fu, X.; Zhao, S.; Luo, H.; Tao, D.; Wu, X.; Li, G. Sex Classification of Silkworm Pupae from Different Varieties by near Infrared Spectroscopy Combined with Chemometrics. Infrared Phys. Technol. 2023, 129, 104553. [Google Scholar] [CrossRef]

- Zhu, Z.; Yuan, H.; Song, C.; Li, X.; Fang, D.; Guo, Z.; Zhu, X.; Liu, W.; Yan, G. High-Speed Sex Identification and Sorting of Living Silkworm Pupae Using near-Infrared Spectroscopy Combined with Chemometrics. Sens. Actuators B Chem. 2018, 268, 299–309. [Google Scholar] [CrossRef]

- Kamtongdee, C.; Sumriddetchkajorn, S.; Sa-ngiamsak, C. Feasibility Study of Silkworm Pupa Sex Identification with Pattern Matching. Comput. Electron. Agric. 2013, 95, 31–37. [Google Scholar] [CrossRef]

- Sumriddetchkajorn, S.; Kamtongdee, C.; Chanhorm, S. Fault-Tolerant Optical-Penetration-Based Silkworm Gender Identification. Comput. Electron. Agric. 2015, 119, 201–208. [Google Scholar] [CrossRef]

- Tao, D.; Wang, Z.; Li, G.; Qiu, G. Silkworm pupa image restoration based on aliasing resolving algorithm and identifying male and female. Trans. Chin. Soc. Agric. Eng. 2016, 32, 168–174. [Google Scholar]

- Tao, D.; Wang, Z.; Li, G.; Qiu, G. Radon Transform-Based Motion Blurred Silkworm Pupa Image Restoration. Int. J. Agric. Biol. Eng. 2019, 12, 152–159. [Google Scholar] [CrossRef]

- Liang, P.; Sun, H.; Zhang, G.; Fang, A.; Zhou, E. Classification method for silkworm pupae based on principal component analysis and BP neural network. Jiangsu Agric. Sci. 2016, 44, 428–430+582. [Google Scholar] [CrossRef]

- Yu, Y.; Gao, P.; Zhao, Y.; Pan, G.; Chen, T. Automatic identification of female and male silkworm pupa based on deep convolution neural network. Sci. Seric. 2020, 46, 197–203. [Google Scholar] [CrossRef]

- Qiu, G.; Tao, D.; Xiao, Q.; Li, G. Simultaneous Sex and Species Classification of Silkworm Pupae by NIR Spectroscopy Combined with Chemometric Analysis. J. Sci. Food Agric. 2021, 101, 1323–1330. [Google Scholar] [CrossRef]

- Tao, D.; Wang, Z.; Li, G.; Xie, L. Simultaneous Species and Sex Identification of Silkworm Pupae Using Hyperspectral Imaging Technology. Spectrosc. Lett. 2018, 51, 446–452.

- Zhao, Y.; Ou, Y.; Li, J.; Liu, L.W.; Zhang, G. Construction and preliminary analysis of a silkworm pupa morphology database based on image recognition. Newsl. Sericultural Sci. 2016, 36, 14–17.

- Seo, Y.; Morishima, H.; Hosokawa, A. Separation of Male and Female Silkworm Pupae by Weight Prediction of Separability. J. Jpn. Soc. Agric. Mach. 1985, 47, 191–195.

- Ahmad Loti, N.N.; Mohd Noor, M.R.; Chang, S.-W. Integrated Analysis of Machine Learning and Deep Learning in Chili Pest and Disease Identification. J. Sci. Food Agric. 2021, 101, 3582–3594.

- Tian, S.; Bhattacharya, U.; Lu, S.; Su, B.; Wang, Q.; Wei, X.; Lu, Y.; Tan, C.L. Multilingual Scene Character Recognition with Co-Occurrence of Histogram of Oriented Gradients. Pattern Recognit. 2016, 51, 125–134.

- Lv, C.; Zhang, P.; Wu, D. Gear Fault Feature Extraction Based on Fuzzy Function and Improved Hu Invariant Moments. IEEE Access 2020, 8, 47490–47499.

- Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution Gray-Scale and Rotation Invariant Texture Classification with Local Binary Patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

- Deng, X.; Lan, Y.; Hong, T.; Chen, J. Citrus Greening Detection Using Visible Spectrum Imaging and C-SVC. Comput. Electron. Agric. 2016, 130, 177–183.

- Chen, X.; Li, J.; Liu, H.; Wang, Y. A Fast Multi-Source Information Fusion Strategy Based on Deep Learning for Species Identification of Boletes. Spectrochim. Acta A Mol. Biomol. Spectrosc. 2022, 274, 121137.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Howard, A.; Sandler, M.; Chen, B.; Wang, W.; Chen, L.-C.; Tan, M.; Chu, G.; Vasudevan, V.; Zhu, Y.; Pang, R.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Radosavovic, I.; Kosaraju, R.P.; Girshick, R.; He, K.; Dollár, P. Designing Network Design Spaces. arXiv 2020.

- Liu, Z.; Mao, H.; Wu, C.-Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 11966–11976.

- Fabijańska, A.; Danek, M.; Barniak, J. Wood Species Automatic Identification from Wood Core Images with a Residual Convolutional Neural Network. Comput. Electron. Agric. 2021, 181, 105941.

- Yuan, P.; Qian, S.; Zhai, Z.; FernánMartínez, J.; Xu, H. Study of Chrysanthemum Image Phenotype On-Line Classification Based on Transfer Learning and Bilinear Convolutional Neural Network. Comput. Electron. Agric. 2022, 194, 106679.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).