Intelligent Perception-Based Cattle Lameness Detection and Behaviour Recognition: A Review

Simple Summary

Abstract

1. Introduction

2. Cattle Lameness Detection and Scoring

2.1. Cattle Lameness

2.2. Manual Cattle Lameness Detection Approaches

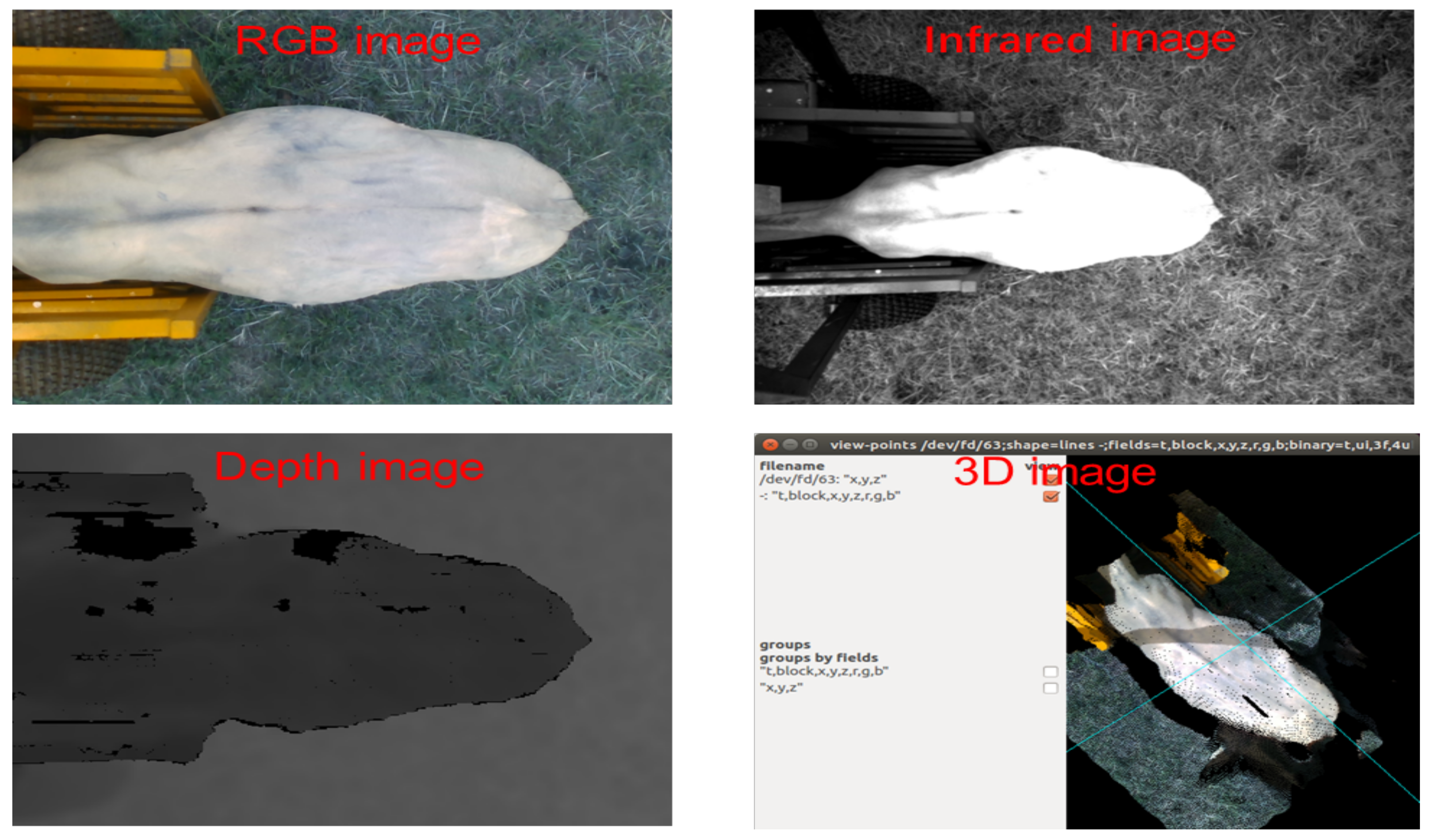

2.3. Automatic Cattle Lameness Detection Approaches

2.3.1. Kinetic Approaches

| Work | Sensor | Dataset Size (Number of Cattle) | Traits | Model | Automation Level | Results |
|---|---|---|---|---|---|---|
| Kinetic | | | | | | |
| Liu et al. [37] | force plate | 346 | vertical kinetic | logistic regression | medium | sensitivity = 51.92% |
| Dunthorn et al. [41] | 3D force plate | 85 | leg force | logistic regression | medium | sensitivity = 90.0% |
| Nechanitzky et al. [45] | weighing platform | 44 | weight and lying time | logistic regression | medium | sensitivity = 81.0% |
| Chapinal et al. [36] | camera, weighing platform | 57 | step frequency, lying time, weight | logistic regression | high | area under the curve = 83.0% |
| Chapinal and Tucker [46] | camera, weighing platform | 257 | step number and gait | statistical analysis | high | sensitivity ≥ 0.96 |
| Zillner et al. [47] | clock | 53 | walking speed | analysis of variance | low | sensitivity = 71.43% |
| Kinematic | | | | | | |
| Van Nuffel et al. [48] | Gaitwise system | 61 | gait | linear discriminant | medium | sensitivity = 88.0% |
| Pluk et al. [40] | camera | 85 | step overlap | regression model | medium | = 80.90% |
| Poursaberi et al. [49] | camera | 156 | back curvature | image analysis | high | accuracy = 96.7% |
| Poursaberi et al. [50] | camera | 1200 | posture and movement | image analysis | high | accuracy = 92.0% |
| Viazzi et al. [34] | camera | 90 | posture and movement | image analysis | high | accuracy = 76.0% |
| Viazzi et al. [38] | 3D camera | 273 | back posture | decision tree | high | accuracy = 90.0% |
| Van Hertem et al. [35] | 3D camera | 186 | gait | logistic regression model | high | accuracy = 60.2% |
| Van Hertem et al. [51] | 3D camera | 208 | back posture | binary GLMM | high | accuracy = 79.8% |
| Wu et al. [42] | camera | 50 | step size | long short-term memory | high | accuracy = 98.57% |
| Zhao et al. [12] | camera | 98 | leg swing | decision tree classifier | high | sensitivity = 90.25% |
| Beer et al. [52] | camera | 63 | gait | logistic regression model | medium | sensitivity = 90.2% |
| Jiang et al. [53] | camera | 30 | walking characteristics | double normal distribution statistical model | high | accuracy = 93.75% |
| Jabbar et al. [54] | 3D camera | 22 | height variation | support vector machine | high | accuracy = 95.7% |
| Kang et al. [55] | camera | 100 | supporting phase | data analysis | high | accuracy = 95.7% |
| Piette et al. [56] | camera | 209 | back posture | threefold cross validation | high | accuracy = 82.0% |
| Indirect | | | | | | |
| De Mol et al. [57] | 3D accelerometers | 100 | lying time | dynamic linear model | high | sensitivity = 85.5% |
| Kamphuis et al. [58] | pedometers, weigh scales, milk meters | 292 | live weight, steps, milk yield | dynamic linear model | high | sensitivity = 80.0% |
| Miekley et al. [59] | milk meter, pedometers | 338 | milk yield, feeding patterns | principal component analysis | high | sensitivity = 87.8% |
| Kramer et al. [60] | milk meter, neck transponders | 125 | milk yield and activity | fuzzy logic model | high | sensitivity ≥ 70.0% |
| Chapinal et al. [44] | camera | 153 | gait score, walking speed, lying behaviour | discriminant analysis | high | sensitivity = 67.0% |
| Garcia et al. [61] | automatic milking system | 88 | milk yield and activity variables | discriminant analysis | high | sensitivity ≥ 80.0% |
| Wood et al. [62] | infrared thermometry | 153 | foot temperature | linear regression | high | coefficient = 62.3% |
| Lin et al. [63] | infrared thermometers | 990 | foot-surface temperatures | linear regression | high | sensitivity = 78.5% |
| Jabbar et al. [54] | 3D camera | 22 | shape index and curvedness | SVM | high | accuracy = 95.7% |
| Taneja et al. [64] | camera | 150 | step count, lying time, swaps | K-Nearest Neighbours | high | accuracy = 87.0% |
| Jiang et al. [53] | camera | 30 | pixel distribution characteristics | statistical model | high | accuracy = 93.75% |
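The Results column above mixes sensitivity, accuracy, and area under the curve, which are not directly comparable. As a reading aid, the following minimal Python sketch shows how sensitivity, specificity, and accuracy follow from the same confusion counts. The class name `ConfusionCounts`, the function names, and the herd counts are hypothetical illustrations and are not taken from any of the cited studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts from comparing a detector against reference lameness labels."""
    tp: int  # lame cows correctly flagged as lame
    fn: int  # lame cows missed by the detector
    tn: int  # sound cows correctly passed as sound
    fp: int  # sound cows wrongly flagged as lame


def sensitivity(c: ConfusionCounts) -> float:
    """Share of truly lame cows that the detector flags."""
    return c.tp / (c.tp + c.fn)


def specificity(c: ConfusionCounts) -> float:
    """Share of truly sound cows that the detector passes."""
    return c.tn / (c.tn + c.fp)


def accuracy(c: ConfusionCounts) -> float:
    """Share of all cows that the detector classifies correctly."""
    return (c.tp + c.tn) / (c.tp + c.fn + c.tn + c.fp)


# Hypothetical herd (assumption for illustration): 20 lame and 80 sound cows.
counts = ConfusionCounts(tp=10, fn=10, tn=78, fp=2)

print(f"sensitivity = {sensitivity(counts):.1%}")
print(f"specificity = {specificity(counts):.1%}")
print(f"accuracy    = {accuracy(counts):.1%}")
```

With these illustrative counts the detector misses half of the lame cows (sensitivity of 50.0%) yet still reaches 88.0% accuracy, because sound cows dominate the herd. An accuracy figure in one row and a sensitivity figure in another should therefore be compared with care.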

2.3.2. Kinematic Approaches

2.3.3. Indirect Approaches

2.4. Limitations of Automated Lameness Detection Systems

2.5. Cattle Behaviours

2.6. Manual Approaches for Cattle Behaviour Monitoring and Recognition

2.7. Automatic Approaches for Cattle Behaviour Monitoring and Recognition

2.7.1. Contact Sensor-Based Approaches

2.7.2. Non-Contact Sensor-Based Approach

2.8. Cattle Behavioural Change Detection and Quantification

2.9. Limitations of Existing Approaches

3. Challenges and Future Research Trends

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Herrero, M.; Henderson, B.; Havlík, P.; Thornton, P.K.; Conant, R.T.; Smith, P.; Wirsenius, S.; Hristov, A.N.; Gerber, P.; Gill, M.; et al. Greenhouse gas mitigation potentials in the livestock sector. Nat. Clim. Chang. 2016, 6, 452–461. [Google Scholar] [CrossRef] [Green Version]

- Henchion, M.; Hayes, M.; Mullen, A.M.; Fenelon, M.; Tiwari, B. Future protein supply and demand: Strategies and factors influencing a sustainable equilibrium. Foods 2017, 6, 53. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Thornton, P.K. Livestock production: Recent trends, future prospects. Philos. Trans. R. Soc. B Biol. Sci. 2010, 365, 2853–2867. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Fournel, S.; Rousseau, A.N.; Laberge, B. Rethinking environment control strategy of confined animal housing systems through precision livestock farming. Biosyst. Eng. 2017, 155, 96–123. [Google Scholar] [CrossRef]

- García, R.; Aguilar, J.; Toro, M.; Pinto, A.; Rodríguez, P. A systematic literature review on the use of machine learning in precision livestock farming. Comput. Electron. Agric. 2020, 179, 105826. [Google Scholar] [CrossRef]

- Neethirajan, S.; Reimert, I.; Kemp, B. Measuring Farm Animal Emotions—Sensor-Based Approaches. Sensors 2021, 21, 553. [Google Scholar] [CrossRef]

- Buller, H.; Blokhuis, H.; Lokhorst, K.; Silberberg, M.; Veissier, I. Animal welfare management in a digital world. Animals 2020, 10, 1779. [Google Scholar] [CrossRef]

- Kendrick, K.M. Intelligent perception. Appl. Anim. Behav. Sci. 1998, 57, 213–231. [Google Scholar] [CrossRef]

- King, A. The future of agriculture. Nature 2017, 544, S21–S23. [Google Scholar] [CrossRef] [Green Version]

- Bahlo, C.; Dahlhaus, P.; Thompson, H.; Trotter, M. The role of interoperable data standards in precision livestock farming in extensive livestock systems: A review. Comput. Electron. Agric. 2019, 156, 459–466. [Google Scholar] [CrossRef]

- Qiao, Y.; Truman, M.; Sukkarieh, S. Cattle segmentation and contour extraction based on Mask R-CNN for precision livestock farming. Comput. Electron. Agric. 2019, 165, 104958. [Google Scholar] [CrossRef]

- Zhao, K.; Bewley, J.; He, D.; Jin, X. Automatic lameness detection in dairy cattle based on leg swing analysis with an image processing technique. Comput. Electron. Agric. 2018, 148, 226–236. [Google Scholar] [CrossRef]

- Wu, D.; Wang, Y.; Han, M.; Song, L.; Shang, Y.; Zhang, X.; Song, H. Using a CNN-LSTM for basic behaviors detection of a single dairy cow in a complex environment. Comput. Electron. Agric. 2021, 182, 106016. [Google Scholar] [CrossRef]

- Qiao, Y.; Kong, H.; Clark, C.; Lomax, S.; Su, D.; Eiffert, S.; Sukkarieh, S. Intelligent perception for cattle monitoring: A review for cattle identification, body condition score evaluation, and weight estimation. Comput. Electron. Agric. 2021, 185, 106143. [Google Scholar] [CrossRef]

- Berckmans, D. Precision livestock farming technologies for welfare management in intensive livestock systems. Sci. Tech. Rev. Off. Int. Des Epizoot. 2014, 33, 189–196. [Google Scholar] [CrossRef] [PubMed]

- He, D.; Liu, D.; Zhao, K. Review of perceiving animal information and behavior in precision livestock farming. Trans. Chin. Soc. Agric. Mach. 2016, 47, 231–244. [Google Scholar]

- Qiao, Y.; Su, D.; Kong, H.; Sukkarieh, S.; Lomax, S.; Clark, C. Individual Cattle Identification Using a Deep Learning Based Framework. IFAC-PapersOnLine 2019, 52, 318–323. [Google Scholar] [CrossRef]

- Van Nuffel, A.; Zwertvaegher, I.; Van Weyenberg, S.; Pastell, M.; Thorup, V.; Bahr, C.; Sonck, B.; Saeys, W. Lameness detection in dairy cows: Part 2. Use of sensors to automatically register changes in locomotion or behavior. Animals 2015, 5, 861–885. [Google Scholar] [CrossRef] [Green Version]

- Schlageter-Tello, A.; Bokkers, E.A.; Koerkamp, P.W.G.; Van Hertem, T.; Viazzi, S.; Romanini, C.E.; Halachmi, I.; Bahr, C.; Berckmans, D.; Lokhorst, K. Manual and automatic locomotion scoring systems in dairy cows: A review. Prev. Vet. Med. 2014, 116, 12–25. [Google Scholar] [CrossRef]

- Gardenier, J.; Underwood, J.; Clark, C. Object Detection for Cattle Gait Tracking. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 2206–2213. [Google Scholar]

- O’Leary, N.; Byrne, D.; O’Connor, A.; Shalloo, L. Invited review: Cattle lameness detection with accelerometers. J. Dairy Sci. 2020, 103, 3895–3911. [Google Scholar] [CrossRef] [Green Version]

- Sprecher, D.; Hostetler, D.; Kaneene, J. A lameness scoring system that uses posture and gait to predict dairy cattle reproductive performance. Theriogenology 1997, 47, 1179–1187. [Google Scholar] [CrossRef]

- Enting, H.; Kooij, D.; Dijkhuizen, A.; Huirne, R.; Noordhuizen-Stassen, E. Economic losses due to clinical lameness in dairy cattle. Livest. Prod. Sci. 1997, 49, 259–267. [Google Scholar] [CrossRef]

- Kang, X.; Zhang, X.D.; Liu, G. A Review: Development of Computer Vision-Based Lameness Detection for Dairy Cows and Discussion of the Practical Applications. Sensors 2021, 21, 753. [Google Scholar]

- Buisman, L.L.; Alsaaod, M.; Bucher, E.; Kofler, J.; Steiner, A. Objective assessment of lameness in cattle after foot surgery. PLoS ONE 2018, 13, e0209783. [Google Scholar] [CrossRef]

- Bennett, R.; Barker, Z.; Main, D.; Whay, H.; Leach, K. Investigating the value dairy farmers place on a reduction of lameness in their herds using a willingness to pay approach. Vet. J. 2014, 199, 72–75. [Google Scholar] [CrossRef] [PubMed]

- Alsaaod, M.; Fadul, M.; Steiner, A. Automatic lameness detection in cattle. Vet. J. 2019, 246, 35–44. [Google Scholar] [CrossRef] [PubMed]

- Schlageter-Tello, A.; Bokkers, E.; Koerkamp, P.; Van Hertem, T.; Viazzi, S.; Romanini, C.; Halachmi, I.; Bahr, C.; Berckmans, D.; Lokhorst, K. Comparison of locomotion scoring for dairy cows by experienced and inexperienced raters using live or video observation methods. Anim. Welf. 2015, 24, 69–79. [Google Scholar] [CrossRef]

- Winckler, C.; Willen, S. The reliability and repeatability of a lameness scoring system for use as an indicator of welfare in dairy cattle. Acta Agric. Scand. Sect. A-Anim. Sci. 2001, 51, 103–107. [Google Scholar] [CrossRef]

- Thomsen, P.; Munksgaard, L.; Tøgersen, F. Evaluation of a lameness scoring system for dairy cows. J. Dairy Sci. 2008, 91, 119–126. [Google Scholar] [CrossRef] [PubMed]

- Renn, N.; Onyango, J.; McCormick, W. Digital infrared thermal imaging and manual lameness scoring as a means for lameness detection in cattle. Vet. Clin. Sci. 2014, 2, 16–23. [Google Scholar]

- Gardenier, J.; Underwood, J.; Weary, D.; Clark, C. Pairwise comparison locomotion scoring for dairy cattle. J. Dairy Sci. 2021, 104, 6185–6193. [Google Scholar] [CrossRef]

- Song, X.; Leroy, T.; Vranken, E.; Maertens, W.; Sonck, B.; Berckmans, D. Automatic detection of lameness in dairy cattle—Vision-based trackway analysis in cow’s locomotion. Comput. Electron. Agric. 2008, 64, 39–44. [Google Scholar] [CrossRef]

- Viazzi, S.; Bahr, C.; Schlageter-Tello, A.; Van Hertem, T.; Romanini, C.; Pluk, A.; Halachmi, I.; Lokhorst, C.; Berckmans, D. Analysis of individual classification of lameness using automatic measurement of back posture in dairy cattle. J. Dairy Sci. 2013, 96, 257–266. [Google Scholar] [CrossRef] [Green Version]

- Van Hertem, T.; Viazzi, S.; Steensels, M.; Maltz, E.; Antler, A.; Alchanatis, V.; Schlageter-Tello, A.A.; Lokhorst, K.; Romanini, E.C.; Bahr, C.; et al. Automatic lameness detection based on consecutive 3D-video recordings. Biosyst. Eng. 2014, 119, 108–116. [Google Scholar] [CrossRef]

- Chapinal, N.; De Passillé, A.; Rushen, J.; Wagner, S. Automated methods for detecting lameness and measuring analgesia in dairy cattle. J. Dairy Sci. 2010, 93, 2007–2013. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Liu, J.; Dyer, R.M.; Neerchal, N.K.; Tasch, U.; Rajkondawar, P.G. Diversity in the magnitude of hind limb unloading occurs with similar forms of lameness in dairy cows. J. Dairy Res. 2011, 78, 168–177. [Google Scholar] [CrossRef] [PubMed]

- Viazzi, S.; Bahr, C.; Van Hertem, T.; Schlageter-Tello, A.; Romanini, C.; Halachmi, I.; Lokhorst, C.; Berckmans, D. Comparison of a three-dimensional and two-dimensional camera system for automated measurement of back posture in dairy cows. Comput. Electron. Agric. 2014, 100, 139–147. [Google Scholar] [CrossRef]

- Thorup, V.M.; Munksgaard, L.; Robert, P.E.; Erhard, H.; Thomsen, P.; Friggens, N. Lameness detection via leg-mounted accelerometers on dairy cows on four commercial farms. Animal 2015, 9, 1704–1712. [Google Scholar] [CrossRef] [Green Version]

- Pluk, A.; Bahr, C.; Leroy, T.; Poursaberi, A.; Song, X.; Vranken, E.; Maertens, W.; Van Nuffel, A.; Berckmans, D. Evaluation of step overlap as an automatic measure in dairy cow locomotion. Trans. ASABE 2010, 53, 1305–1312. [Google Scholar] [CrossRef]

- Dunthorn, J.; Dyer, R.M.; Neerchal, N.K.; McHenry, J.S.; Rajkondawar, P.G.; Steingraber, G.; Tasch, U. Predictive models of lameness in dairy cows achieve high sensitivity and specificity with force measurements in three dimensions. J. Dairy Res. 2015, 82, 391–399. [Google Scholar] [CrossRef]

- Wu, D.; Wu, Q.; Yin, X.; Jiang, B.; Wang, H.; He, D.; Song, H. Lameness detection of dairy cows based on the YOLOv3 deep learning algorithm and a relative step size characteristic vector. Biosyst. Eng. 2020, 189, 150–163. [Google Scholar] [CrossRef]

- Pastell, M.; Hänninen, L.; De Passillé, A.; Rushen, J. Measures of weight distribution of dairy cows to detect lameness and the presence of hoof lesions. J. Dairy Sci. 2010, 93, 954–960. [Google Scholar] [CrossRef] [Green Version]

- Chapinal, N.; De Passille, A.; Weary, D.; Von Keyserlingk, M.; Rushen, J. Using gait score, walking speed, and lying behavior to detect hoof lesions in dairy cows. J. Dairy Sci. 2009, 92, 4365–4374. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Nechanitzky, K.; Starke, A.; Vidondo, B.; Müller, H.; Reckardt, M.; Friedli, K.; Steiner, A. Analysis of behavioral changes in dairy cows associated with claw horn lesions. J. Dairy Sci. 2016, 99, 2904–2914. [Google Scholar] [CrossRef] [Green Version]

- Chapinal, N.; Tucker, C. Validation of an automated method to count steps while cows stand on a weighing platform and its application as a measure to detect lameness. J. Dairy Sci. 2012, 95, 6523–6528. [Google Scholar] [CrossRef] [PubMed]

- Zillner, J.C.; Tücking, N.; Plattes, S.; Heggemann, T.; Büscher, W. Using walking speed for lameness detection in lactating dairy cows. Livest. Sci. 2018, 218, 119–123. [Google Scholar] [CrossRef]

- Van Nuffel, A.; Saeys, W.; Sonck, B.; Vangeyte, J.; Mertens, K.C.; De Ketelaere, B.; Van Weyenberg, S. Variables of gait inconsistency outperform basic gait variables in detecting mildly lame cows. Livest. Sci. 2015, 177, 125–131. [Google Scholar] [CrossRef]

- Poursaberi, A.; Bahr, C.; Pluk, A.; Van Nuffel, A.; Berckmans, D. Real-time automatic lameness detection based on back posture extraction in dairy cattle: Shape analysis of cow with image processing techniques. Comput. Electron. Agric. 2010, 74, 110–119. [Google Scholar] [CrossRef]

- Poursaberi, A.; Bahr, C.; Pluk, A.; Berckmans, D.; Veermäe, I.; Kokin, E.; Pokalainen, V. Online lameness detection in dairy cattle using Body Movement Pattern (BMP). In Proceedings of the 2011 11th International Conference on Intelligent Systems Design and Applications, Cordoba, Spain, 22–24 November 2011; pp. 732–736. [Google Scholar]

- Van Hertem, T.; Bahr, C.; Tello, A.S.; Viazzi, S.; Steensels, M.; Romanini, C.; Lokhorst, C.; Maltz, E.; Halachmi, I.; Berckmans, D. Lameness detection in dairy cattle: Single predictor v. multivariate analysis of image-based posture processing and behaviour and performance sensing. Animal 2016, 10, 1525–1532. [Google Scholar] [CrossRef] [Green Version]

- Beer, G.; Alsaaod, M.; Starke, A.; Schuepbach-Regula, G.; Müller, H.; Kohler, P.; Steiner, A. Use of extended characteristics of locomotion and feeding behavior for automated identification of lame dairy cows. PLoS ONE 2016, 11, e0155796. [Google Scholar] [CrossRef] [Green Version]

- Jiang, B.; Song, H.; He, D. Lameness detection of dairy cows based on a double normal background statistical model. Comput. Electron. Agric. 2019, 158, 140–149. [Google Scholar] [CrossRef]

- Jabbar, K.A.; Hansen, M.F.; Smith, M.L.; Smith, L.N. Early and non-intrusive lameness detection in dairy cows using 3-dimensional video. Biosyst. Eng. 2017, 153, 63–69. [Google Scholar] [CrossRef]

- Kang, X.; Zhang, X.; Liu, G. Accurate detection of lameness in dairy cattle with computer vision: A new and individualized detection strategy based on the analysis of the supporting phase. J. Dairy Sci. 2020, 103, 10628–10638. [Google Scholar] [CrossRef] [PubMed]

- Piette, D.; Norton, T.; Exadaktylos, V.; Berckmans, D. Individualised automated lameness detection in dairy cows and the impact of historical window length on algorithm performance. Animal 2020, 14, 409–417. [Google Scholar] [CrossRef] [PubMed]

- De Mol, R.; André, G.; Bleumer, E.; Van der Werf, J.; De Haas, Y.; Van Reenen, C. Applicability of day-to-day variation in behavior for the automated detection of lameness in dairy cows. J. Dairy Sci. 2013, 96, 3703–3712. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Kamphuis, C.; Frank, E.; Burke, J.; Verkerk, G.; Jago, J. Applying additive logistic regression to data derived from sensors monitoring behavioral and physiological characteristics of dairy cows to detect lameness. J. Dairy Sci. 2013, 96, 7043–7053. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Miekley, B.; Traulsen, I.; Krieter, J. Principal component analysis for the early detection of mastitis and lameness in dairy cows. J. Dairy Res. 2013, 80, 335–343. [Google Scholar] [CrossRef]

- Kramer, E.; Cavero, D.; Stamer, E.; Krieter, J. Mastitis and lameness detection in dairy cows by application of fuzzy logic. Livest. Sci. 2009, 125, 92–96. [Google Scholar] [CrossRef]

- Garcia, E.; Klaas, I.; Amigo, J.; Bro, R.; Enevoldsen, C. Lameness detection challenges in automated milking systems addressed with partial least squares discriminant analysis. J. Dairy Sci. 2014, 97, 7476–7486. [Google Scholar] [CrossRef] [PubMed]

- Wood, S.; Lin, Y.; Knowles, T.; Main, D.J. Infrared thermometry for lesion monitoring in cattle lameness. Vet. Rec. 2015, 176, 308. [Google Scholar] [CrossRef] [Green Version]

- Lin, Y.C.; Mullan, S.; Main, D.C. Optimising lameness detection in dairy cattle by using handheld infrared thermometers. Vet. Med. Sci. 2018, 4, 218–226. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Taneja, M.; Byabazaire, J.; Jalodia, N.; Davy, A.; Olariu, C.; Malone, P. Machine learning based fog computing assisted data-driven approach for early lameness detection in dairy cattle. Comput. Electron. Agric. 2020, 171, 105286. [Google Scholar] [CrossRef]

- Pluk, A.; Bahr, C.; Poursaberi, A.; Maertens, W.; Van Nuffel, A.; Berckmans, D. Automatic measurement of touch and release angles of the fetlock joint for lameness detection in dairy cattle using vision techniques. J. Dairy Sci. 2012, 95, 1738–1748. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Pastell, M.; Tiusanen, J.; Hakojärvi, M.; Hänninen, L. A wireless accelerometer system with wavelet analysis for assessing lameness in cattle. Biosyst. Eng. 2009, 104, 545–551. [Google Scholar] [CrossRef]

- Norring, M.; Häggman, J.; Simojoki, H.; Tamminen, P.; Winckler, C.; Pastell, M. Lameness impairs feeding behavior of dairy cows. J. Dairy Sci. 2014, 97, 4317–4321. [Google Scholar] [CrossRef] [PubMed]

- Jiang, B.; Yin, X.; Song, H. Single-stream long-term optical flow convolution network for action recognition of lameness dairy cow. Comput. Electron. Agric. 2020, 175, 105536. [Google Scholar] [CrossRef]

- Bonk, S.; Burfeind, O.; Suthar, V.; Heuwieser, W. Evaluation of data loggers for measuring lying behavior in dairy calves. J. Dairy Sci. 2013, 96, 3265–3271. [Google Scholar] [CrossRef] [Green Version]

- Mattachini, G.; Antler, A.; Riva, E.; Arbel, A.; Provolo, G. Automated measurement of lying behavior for monitoring the comfort and welfare of lactating dairy cows. Livest. Sci. 2013, 158, 145–150. [Google Scholar] [CrossRef]

- Jónsson, R.; Blanke, M.; Poulsen, N.K.; Caponetti, F.; Højsgaard, S. Oestrus detection in dairy cows from activity and lying data using on-line individual models. Comput. Electron. Agric. 2011, 76, 6–15. [Google Scholar] [CrossRef]

- Ishihara, A.; Bertone, A.L.; Rajala-Schultz, P.J. Association between subjective lameness grade and kinetic gait parameters in horses with experimentally induced forelimb lameness. Am. J. Vet. Res. 2005, 66, 1805–1815. [Google Scholar] [CrossRef]

- Rodríguez, A.; Olivares, F.; Descouvieres, P.; Werner, M.; Tadich, N.; Bustamante, H. Thermographic assessment of hoof temperature in dairy cows with different mobility scores. Livest. Sci. 2016, 184, 92–96. [Google Scholar] [CrossRef]

- Alsaaod, M.; Römer, C.; Kleinmanns, J.; Hendriksen, K.; Rose-Meierhöfer, S.; Plümer, L.; Büscher, W. Electronic detection of lameness in dairy cows through measuring pedometric activity and lying behavior. Appl. Anim. Behav. Sci. 2012, 142, 134–141. [Google Scholar] [CrossRef]

- Harris-Bridge, G.; Young, L.; Handel, I.; Farish, M.; Mason, C.; Mitchell, M.A.; Haskell, M.J. The use of infrared thermography for detecting digital dermatitis in dairy cattle: What is the best measure of temperature and foot location to use? Vet. J. 2018, 237, 26–33. [Google Scholar] [CrossRef] [PubMed]

- Van De Gucht, T.; Saeys, W.; Van Meensel, J.; Van Nuffel, A.; Vangeyte, J.; Lauwers, L. Farm-specific economic value of automatic lameness detection systems in dairy cattle: From concepts to operational simulations. J. Dairy Sci. 2018, 101, 637–648. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Fuentes, A.; Yoon, S.; Park, J.; Park, D.S. Deep learning-based hierarchical cattle behavior recognition with spatio-temporal information. Comput. Electron. Agric. 2020, 177, 105627. [Google Scholar] [CrossRef]

- Venter, Z.S.; Hawkins, H.J.; Cramer, M.D. Cattle don’t care: Animal behaviour is similar regardless of grazing management in grasslands. Agric. Ecosyst. Environ. 2019, 272, 175–187. [Google Scholar] [CrossRef]

- Moran, J.; Doyle, R. Cow Talk: Understanding Dairy Cow Behaviour to Improve Their Welfare on Asian Farms; CSIRO Publishing: Clayton, Victoria, Australia, 2015. [Google Scholar]

- González, L.; Bishop-Hurley, G.; Handcock, R.N.; Crossman, C. Behavioral classification of data from collars containing motion sensors in grazing cattle. Comput. Electron. Agric. 2015, 110, 91–102. [Google Scholar] [CrossRef]

- González, L.; Tolkamp, B.; Coffey, M.; Ferret, A.; Kyriazakis, I. Changes in feeding behavior as possible indicators for the automatic monitoring of health disorders in dairy cows. J. Dairy Sci. 2008, 91, 1017–1028. [Google Scholar] [CrossRef] [Green Version]

- Dutta, R.; Smith, D.; Rawnsley, R.; Bishop-Hurley, G.; Hills, J.; Timms, G.; Henry, D. Dynamic cattle behavioural classification using supervised ensemble classifiers. Comput. Electron. Agric. 2015, 111, 18–28. [Google Scholar] [CrossRef]

- Müller, R.; Schrader, L. A new method to measure behavioural activity levels in dairy cows. Appl. Anim. Behav. Sci. 2003, 83, 247–258. [Google Scholar] [CrossRef]

- McGowan, J.; Burke, C.; Jago, J. Validation of a technology for objectively measuring behaviour in dairy cows and its application for oestrous detection. In Proceedings-New Zealand Society of Animal Production; New Zealand Society of Animal Production: Palmerston North, New Zealand, 2007; Volume 67, p. 136. [Google Scholar]

- Ledgerwood, D.; Winckler, C.; Tucker, C. Evaluation of data loggers, sampling intervals, and editing techniques for measuring the lying behavior of dairy cattle. J. Dairy Sci. 2010, 93, 5129–5139. [Google Scholar] [CrossRef]

- Rydhmer, L.; Zamaratskaia, G.; Andersson, H.; Algers, B.; Guillemet, R.; Lundström, K. Aggressive and sexual behaviour of growing and finishing pigs reared in groups, without castration. Acta Agric. Scand Sect. A 2006, 56, 109–119. [Google Scholar] [CrossRef]

- Bozkurt, Y.; Ozkaya, S.; Ap Dewi, I. Association between aggressive behaviour and high-energy feeding level in beef cattle. Czech J. Anim. Sci. 2006, 51, 151. [Google Scholar] [CrossRef] [Green Version]

- Matthews, S.G.; Miller, A.L.; Clapp, J.; Plötz, T.; Kyriazakis, I. Early detection of health and welfare compromises through automated detection of behavioural changes in pigs. Vet. J. 2016, 217, 43–51. [Google Scholar] [CrossRef] [Green Version]

- Oczak, M.; Ismayilova, G.; Costa, A.; Viazzi, S.; Sonoda, L.T.; Fels, M.; Bahr, C.; Hartung, J.; Guarino, M.; Berckmans, D.; et al. Analysis of aggressive behaviours of pigs by automatic video recordings. Comput. Electron. Agric. 2013, 99, 209–217. [Google Scholar] [CrossRef]

- Geers, R.; Puers, B.; Goedseels, V.; Wouters, P. Electronic Identification, Monitoring and Tracking of Animals; CAB International: Wallingford, UK, 1997. [Google Scholar]

- Sambraus, H.H.; Brummer, H.; Putten, G.V.; Schäfer, M.; Wennrich, G. Nutztier Ethologie: Das Verhalten landwirtschaftlicher Nutztiere; eine Angewandte Verhaltenskunde fuer die Praxis; Verlag Paul Parey: Hamburg, Germany, 1978. [Google Scholar]

- Pereira, D.F.; Miyamoto, B.C.; Maia, G.D.; Sales, G.T.; Magalhães, M.M.; Gates, R.S. Machine vision to identify broiler breeder behavior. Comput. Electron. Agric. 2013, 99, 194–199. [Google Scholar] [CrossRef]

- Peng, Y.; Kondo, N.; Fujiura, T.; Suzuki, T.; Ouma, S.; Wulandari; Yoshioka, H.; Itoyama, E. Dam behavior patterns in Japanese black beef cattle prior to calving: Automated detection using LSTM-RNN. Comput. Electron. Agric. 2020, 169, 105178. [Google Scholar] [CrossRef]

- Liu, D.; Oczak, M.; Maschat, K.; Baumgartner, J.; Pletzer, B.; He, D.; Norton, T. A computer vision-based method for spatial-temporal action recognition of tail-biting behaviour in group-housed pigs. Biosyst. Eng. 2020, 195, 27–41. [Google Scholar] [CrossRef]

- Handcock, R.N.; Swain, D.L.; Bishop-Hurley, G.J.; Patison, K.P.; Wark, T.; Valencia, P.; Corke, P.; O’Neill, C.J. Monitoring animal behaviour and environmental interactions using wireless sensor networks, GPS collars and satellite remote sensing. Sensors 2009, 9, 3586–3603. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Tsai, D.M.; Huang, C.Y. A motion and image analysis method for automatic detection of estrus and mating behavior in cattle. Comput. Electron. Agric. 2014, 104, 25–31. [Google Scholar] [CrossRef]

- Martiskainen, P.; Järvinen, M.; Skön, J.P.; Tiirikainen, J.; Kolehmainen, M.; Mononen, J. Cow behaviour pattern recognition using a three-dimensional accelerometer and support vector machines. Appl. Anim. Behav. Sci. 2009, 119, 32–38. [Google Scholar] [CrossRef]

- Tani, Y.; Yokota, Y.; Yayota, M.; Ohtani, S. Automatic recognition and classification of cattle chewing activity by an acoustic monitoring method with a single-axis acceleration sensor. Comput. Electron. Agric. 2013, 92, 54–65. [Google Scholar] [CrossRef]

- Smith, D.; Rahman, A.; Bishop-Hurley, G.J.; Hills, J.; Shahriar, S.; Henry, D.; Rawnsley, R. Behavior classification of cows fitted with motion collars: Decomposing multi-class classification into a set of binary problems. Comput. Electron. Agric. 2016, 131, 40–50. [Google Scholar] [CrossRef]

- Williams, M.; Mac Parthaláin, N.; Brewer, P.; James, W.; Rose, M. A novel behavioral model of the pasture-based dairy cow from GPS data using data mining and machine learning techniques. J. Dairy Sci. 2016, 99, 2063–2075. [Google Scholar] [CrossRef] [Green Version]

- Williams, M.; James, W.; Rose, M. Fixed-time data segmentation and behavior classification of pasture-based cattle: Enhancing performance using a hidden Markov model. Comput. Electron. Agric. 2017, 142, 585–596. [Google Scholar] [CrossRef]

- Andriamandroso, A.L.H.; Lebeau, F.; Beckers, Y.; Froidmont, E.; Dufrasne, I.; Heinesch, B.; Dumortier, P.; Blanchy, G.; Blaise, Y.; Bindelle, J. Development of an open-source algorithm based on inertial measurement units (IMU) of a smartphone to detect cattle grass intake and ruminating behaviors. Comput. Electron. Agric. 2017, 139, 126–137. [Google Scholar] [CrossRef]

- Wang, J.; Zhang, H.; Zhao, K.; Liu, G. Cow movement behavior classification based on optimal binary decision-tree classification model. Trans. Chin. Soc. Agric. Eng. 2018, 34, 202–210. [Google Scholar]

- Rahman, A.; Smith, D.; Little, B.; Ingham, A.; Greenwood, P.; Bishop-Hurley, G. Cattle behaviour classification from collar, halter, and ear tag sensors. Inf. Process. Agric. 2018, 5, 124–133. [Google Scholar] [CrossRef]

- Achour, B.; Belkadi, M.; Aoudjit, R.; Laghrouche, M. Unsupervised automated monitoring of dairy cows’ behavior based on Inertial Measurement Unit attached to their back. Comput. Electron. Agric. 2019, 167, 105068. [Google Scholar] [CrossRef]

- Peng, Y.; Kondo, N.; Fujiura, T.; Suzuki, T.; Yoshioka, H.; Itoyama, E. Classification of multiple cattle behavior patterns using a recurrent neural network with long short-term memory and inertial measurement units. Comput. Electron. Agric. 2019, 157, 247–253. [Google Scholar] [CrossRef]

- Riaboff, L.; Aubin, S.; Bedere, N.; Couvreur, S.; Madouasse, A.; Goumand, E.; Chauvin, A.; Plantier, G. Evaluation of pre-processing methods for the prediction of cattle behaviour from accelerometer data. Comput. Electron. Agric. 2019, 165, 104961. [Google Scholar] [CrossRef]

- Williams, L.R.; Moore, S.T.; Bishop-Hurley, G.J.; Swain, D.L. A sensor-based solution to monitor grazing cattle drinking behaviour and water intake. Comput. Electron. Agric. 2020, 168, 105141. [Google Scholar] [CrossRef]

- Shen, W.; Cheng, F.; Zhang, Y.; Wei, X.; Fu, Q.; Zhang, Y. Automatic recognition of ingestive-related behaviors of dairy cows based on triaxial acceleration. Inf. Process. Agric. 2020, 7, 427–443. [Google Scholar] [CrossRef]

- Tran, D.N.; Nguyen, T.N.; Khanh, P.C.P.; Trana, D.T. An IoT-based Design Using Accelerometers in Animal Behavior Recognition Systems. IEEE Sens. J. 2021. [Google Scholar] [CrossRef]

- Porto, S.M.; Arcidiacono, C.; Anguzza, U.; Cascone, G. The automatic detection of dairy cow feeding and standing behaviours in free-stall barns by a computer vision-based system. Biosyst. Eng. 2015, 133, 46–55. [Google Scholar] [CrossRef]

- Gu, J.; Wang, Z.; Gao, R.; Wu, H. Cow behavior recognition based on image analysis and activities. Int. J. Agric. Biol. Eng. 2017, 10, 165–174. [Google Scholar]

- Ahn, S.J.; Ko, D.M.; Choi, K.S. Cow behavior recognition using motion history image feature. In International Conference Image Analysis and Recognition; Springer: Berlin/Heidelberg, Germany, 2017; pp. 626–633. [Google Scholar]

- Guo, Y.; Zhang, Z.; He, D.; Niu, J.; Tan, Y. Detection of cow mounting behavior using region geometry and optical flow characteristics. Comput. Electron. Agric. 2019, 163, 104828. [Google Scholar] [CrossRef]

- Yin, X.; Wu, D.; Shang, Y.; Jiang, B.; Song, H. Using an EfficientNet-LSTM for the recognition of single Cow’s motion behaviours in a complicated environment. Comput. Electron. Agric. 2020, 177, 105707.

- Achour, B.; Belkadi, M.; Filali, I.; Laghrouche, M.; Lahdir, M. Image analysis for individual identification and feeding behaviour monitoring of dairy cows based on convolutional neural networks (CNN). Biosyst. Eng. 2020, 198, 31–49.

- Guo, Y.; Qiao, Y.; Sukkarieh, S.; Chai, L.; He, D. BiGRU-Attention Based Cow Behavior Classification Using Video Data For Precision Livestock Farming. Trans. ASABE 2021.

- Yin, L.; Liu, C.; Hong, T.; Zhou, H.; Kae Hsiang, K. Design of system for monitoring dairy cattle’s behavioral features based on wireless sensor networks. Trans. Chin. Soc. Agric. Eng. 2010, 26, 203–208.

- Barriuso, A.L.; Villarrubia González, G.; De Paz, J.F.; Lozano, Á.; Bajo, J. Combination of Multi-Agent Systems and Wireless Sensor Networks for the Monitoring of Cattle. Sensors 2018, 18, 108.

- Werner, J.; Leso, L.; Umstatter, C.; Niederhauser, J.; Kennedy, E.; Geoghegan, A.; Shalloo, L.; Schick, M.; O’Brien, B. Evaluation of the RumiWatchSystem for measuring grazing behaviour of cows. J. Neurosci. Methods 2018, 300, 138–146.

- Roelofs, J.B.; van Eerdenburg, F.J.; Soede, N.M.; Kemp, B. Pedometer readings for estrous detection and as predictor for time of ovulation in dairy cattle. Theriogenology 2005, 64, 1690–1703.

- Palmer, M.A.; Olmos, G.; Boyle, L.A.; Mee, J.F. Estrus detection and estrus characteristics in housed and pastured Holstein–Friesian cows. Theriogenology 2010, 74, 255–264.

- Gibbons, J.M.; Lawrence, A.B.; Haskell, M.J. Consistency of aggressive feeding behaviour in dairy cows. Appl. Anim. Behav. Sci. 2009, 121, 1–7.

- Šimić, R.; Matković, K.; Ostović, M.; Pavičić, Ž.; Mihaljević, Ž. Influence of an enriched environment on aggressive behaviour in beef cattle. Vet. Stanica 2018, 49, 239–245.

- Tscharke, M.; Banhazi, T.M. A brief review of the application of machine vision in livestock behaviour analysis. Agrárinformatika/J. Agric. Inform. 2016, 7, 23–42.

- Xue, T.; Qiao, Y.; Kong, H.; Su, D.; Pan, S.; Rafique, K.; Sukkarieh, S. One-shot Learning-based Animal Video Segmentation. IEEE Trans. Ind. Inform. 2021.

- Huang, L.; Guo, H.; Rao, Q.; Hou, Z.; Li, S.; Qiu, S.; Fan, X.; Wang, H. Body Dimension Measurements of Qinchuan Cattle with Transfer Learning from LiDAR Sensing. Sensors 2019, 19, 5046.

- Gao, R.; Gu, J.; Liang, J. Cow Behavioral Recognition Using Dynamic Analysis. In Proceedings of the 2017 International Conference on Smart Grid and Electrical Automation (ICSGEA), Changsha, China, 27–28 May 2017; pp. 335–338.

- Meunier, B.; Pradel, P.; Sloth, K.H.; Cirié, C.; Delval, E.; Mialon, M.M.; Veissier, I. Image analysis to refine measurements of dairy cow behaviour from a real-time location system. Biosyst. Eng. 2018, 173, 32–44.

- Kashiha, M.; Pluk, A.; Bahr, C.; Vranken, E.; Berckmans, D. Development of an early warning system for a broiler house using computer vision. Biosyst. Eng. 2013, 116, 36–45.

- Nunes, L.; Ampatzidis, Y.; Costa, L.; Wallau, M. Horse foraging behavior detection using sound recognition techniques and artificial intelligence. Comput. Electron. Agric. 2021, 183, 106080.

- Jung, D.H.; Kim, N.Y.; Moon, S.H.; Jhin, C.; Kim, H.J.; Yang, J.S.; Kim, H.S.; Lee, T.S.; Lee, J.Y.; Park, S.H. Deep Learning-Based Cattle Vocal Classification Model and Real-Time Livestock Monitoring System with Noise Filtering. Animals 2021, 11, 357.

- Meen, G.; Schellekens, M.; Slegers, M.; Leenders, N.; van Erp-van der Kooij, E.; Noldus, L.P. Sound analysis in dairy cattle vocalisation as a potential welfare monitor. Comput. Electron. Agric. 2015, 118, 111–115.

- Röttgen, V.; Becker, F.; Tuchscherer, A.; Wrenzycki, C.; Düpjan, S.; Schön, P.C.; Puppe, B. Vocalization as an indicator of estrus climax in Holstein heifers during natural estrus and superovulation. J. Dairy Sci. 2018, 101, 2383–2394.

- Chelotti, J.O.; Vanrell, S.R.; Rau, L.S.M.; Galli, J.R.; Planisich, A.M.; Utsumi, S.A.; Milone, D.H.; Giovanini, L.L.; Rufiner, H.L. An online method for estimating grazing and rumination bouts using acoustic signals in grazing cattle. Comput. Electron. Agric. 2020, 173, 105443.

- Nasirahmadi, A.; Edwards, S.A.; Sturm, B. Implementation of machine vision for detecting behaviour of cattle and pigs. Livest. Sci. 2017, 202, 25–38.

- Overton, M.; Sischo, W.; Temple, G.; Moore, D. Using time-lapse video photography to assess dairy cattle lying behavior in a free-stall barn. J. Dairy Sci. 2002, 85, 2407–2413.

- Butt, B. Seasonal space-time dynamics of cattle behavior and mobility among Maasai pastoralists in semi-arid Kenya. J. Arid. Environ. 2010, 74, 403–413.

- MacKay, J.; Turner, S.; Hyslop, J.; Deag, J.; Haskell, M. Short-term temperament tests in beef cattle relate to long-term measures of behavior recorded in the home pen. J. Anim. Sci. 2013, 91, 4917–4924.

- Norouzzadeh, M.S.; Nguyen, A.; Kosmala, M.; Swanson, A.; Palmer, M.S.; Packer, C.; Clune, J. Automatically identifying, counting, and describing wild animals in camera-trap images with deep learning. Proc. Natl. Acad. Sci. USA 2018, 115, E5716–E5725.

- Xu, B.; Wang, W.; Falzon, G.; Kwan, P.; Guo, L.; Chen, G.; Tait, A.; Schneider, D. Automated cattle counting using Mask R-CNN in quadcopter vision system. Comput. Electron. Agric. 2020, 171, 105300.

- Jones, J.W.; Antle, J.M.; Basso, B.; Boote, K.J.; Conant, R.T.; Foster, I.; Godfray, H.C.J.; Herrero, M.; Howitt, R.E.; Janssen, S.; et al. Toward a new generation of agricultural system data, models, and knowledge products: State of agricultural systems science. Agric. Syst. 2017, 155, 269–288.

- Rojo-Gimeno, C.; van der Voort, M.; Niemi, J.K.; Lauwers, L.; Kristensen, A.R.; Wauters, E. Assessment of the value of information of precision livestock farming: A conceptual framework. NJAS-Wagening. J. Life Sci. 2019, 90, 100311.

| Behaviour | Description |
|---|---|
| Grazing | The head is placed in or over feed or pasture while the animal searches, masticates, or sorts the feed (silage) or pasture |
| Exploring | The head is in close proximity to or in contact with the ground, and the animal uses its nose to detect smells or food |
| Grooming | The animal turns its head towards its abdomen with a stretched neck and uses its tongue to groom the body |
| Mounting | The animal climbs on any part of the body or head of another animal |
| Ruminating | The cow regurgitates feed, or swallows masticated feed and regurgitates it |
| Lying | The cow lies in any position except flat on its side |
| Walking | The position of the body and four legs changes, with the head and neck not moving |
| Standing | The cow stands on all four legs with its head erect and without swinging its head from side to side |
| Aggressive | The animal causes actual or potential harm (e.g., threat) to other animals |

| Work | Sensor | Behaviour Type | Feature | Model | Automation Level | Average Accuracy |
|---|---|---|---|---|---|---|
| Contact sensor-based approach | | | | | | |
| Martiskainen et al. [97] | 3D accelerometer | standing, lying, ruminating, feeding, normal and lame walking, lying down, and standing up | statistical features | SVM | low | 94.50% |
| Tani et al. [98] | single-axis accelerometer | chewing | sound spectrogram | pattern matching | low | over 90.0% |
| González et al. [80] | GPS and 3D accelerometers | foraging, ruminating, traveling, resting, and others | statistical features | statistical analysis | medium | 90.5% |
| Smith et al. [99] | motion collars | grazing, walking, ruminating, resting, and others | head position and motion intensity | binary time series classifiers | medium | 82.25% |
| Williams et al. [100] | GPS | grazing, resting, and walking | statistical features | machine learning | medium | 85.0% |
| Williams et al. [101] | GPS | grazing, resting, and walking | behaviour-labelled GPS data | hidden Markov model | medium | 94.0% |
| Andriamandroso et al. [102] | IMU | grass intake and ruminating | statistical features | two-step discrimination tree | low | 92.0% |
| Wang et al. [103] | 3D accelerometer | standing, lying, normal walking, active walking, standing up, and lying down | statistical features | binary decision tree | medium | 76.47% |
| Rahman et al. [104] | 3D accelerometer | grazing, standing, or ruminating | statistical features | stratified cross-validation | medium | 91.2% |
| Achour et al. [105] | IMU | lying, standing, lying down, standing up, walking, and stationary behaviours | statistical features | finite mixture models | medium | 99.0% |
| Peng et al. [106] | IMU | feeding, lying, ruminating, licking salt, moving, social licking, and head butting | motion data | LSTM-RNN | medium | 88.65% |
| Riaboff et al. [107] | 3D accelerometer | grazing, walking, lying, and standing | statistical features | decision tree | medium | 95.0% |
| Williams et al. [108] | 3D accelerometer | drinking | statistical features | accelerometer algorithm | medium | 95.0% |
| Peng et al. [93] | IMU | ruminating (lying), ruminating (standing), lying normal, standing normal, feeding, lying final, and standing final | deep learning features | LSTM-RNN | high | 77.56% |
| Shen et al. [109] | 3D accelerometer | eating, ruminating, and other behaviours | time/frequency-domain features | K-nearest neighbour | high | 93.25% |
| Tran et al. [110] | 3D accelerometer | walking, feeding, lying, and standing | statistical features | random forest | high | 94.75% |
| Non-contact sensor-based approach | | | | | | |
| Tsai and Huang [96] | camera | estrus and mating behaviour | changes of moving object lengths | motion analysis | medium | 99.67% |
| Dutta et al. [82] | camera | grazing, ruminating, resting, walking, and other | sensor data and behaviour observations | bagging ensemble classification | medium | 96% |
| Porto et al. [111] | camera | feeding and standing | image detectors | Viola–Jones algorithm | medium | 86.5% |
| Gu et al. [112] | camera | estrus and hoof disease behaviours | minimum bounding box area | dynamic analysis | medium | 83.40% |
| Ahn et al. [113] | camera | mounting, walking, running, tail wagging, and foot stamping | motion history image feature | SVM | medium | 82.83% |
| Guo et al. [114] | camera | mounting behaviour | geometric and optical flow characteristics | SVM | medium | 90.9% |
| Yin et al. [115] | camera | lying, standing, walking, drinking, and feeding | visual features | EfficientNet-LSTM | high | 97.87% |
| Achour et al. [116] | camera | standing and feeding | visual features | CNN | high | 92.00% |
| Fuentes et al. [77] | camera | 15 types: standing, lying, and others | 3D-CNN features | deep learning | high | 78.80% |
| Wu et al. [13] | camera | drinking, ruminating, walking, standing, and lying | visual features | CNN-LSTM | high | 97.60% |
| Guo et al. [117] | camera | exploring, feeding, grooming, standing, and walking | visual features | BiGRU-attention | high | over 82% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Qiao, Y.; Kong, H.; Clark, C.; Lomax, S.; Su, D.; Eiffert, S.; Sukkarieh, S. Intelligent Perception-Based Cattle Lameness Detection and Behaviour Recognition: A Review. Animals 2021, 11, 3033. https://doi.org/10.3390/ani11113033
