Emotion Recognition in Cats

Abstract

Simple Summary

1. Introduction

2. Materials and Methods

2.1. Participants

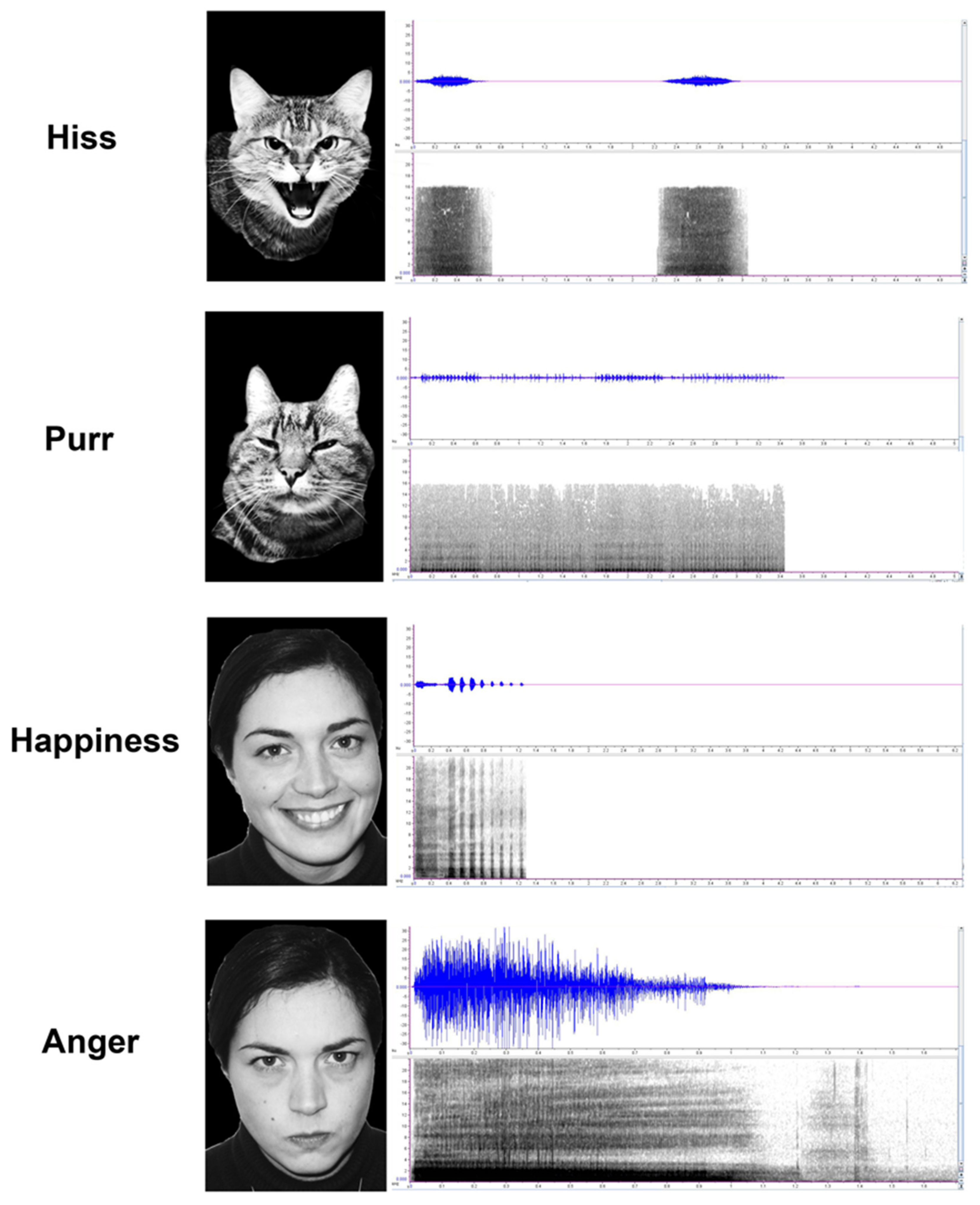

2.2. Emotional Stimuli

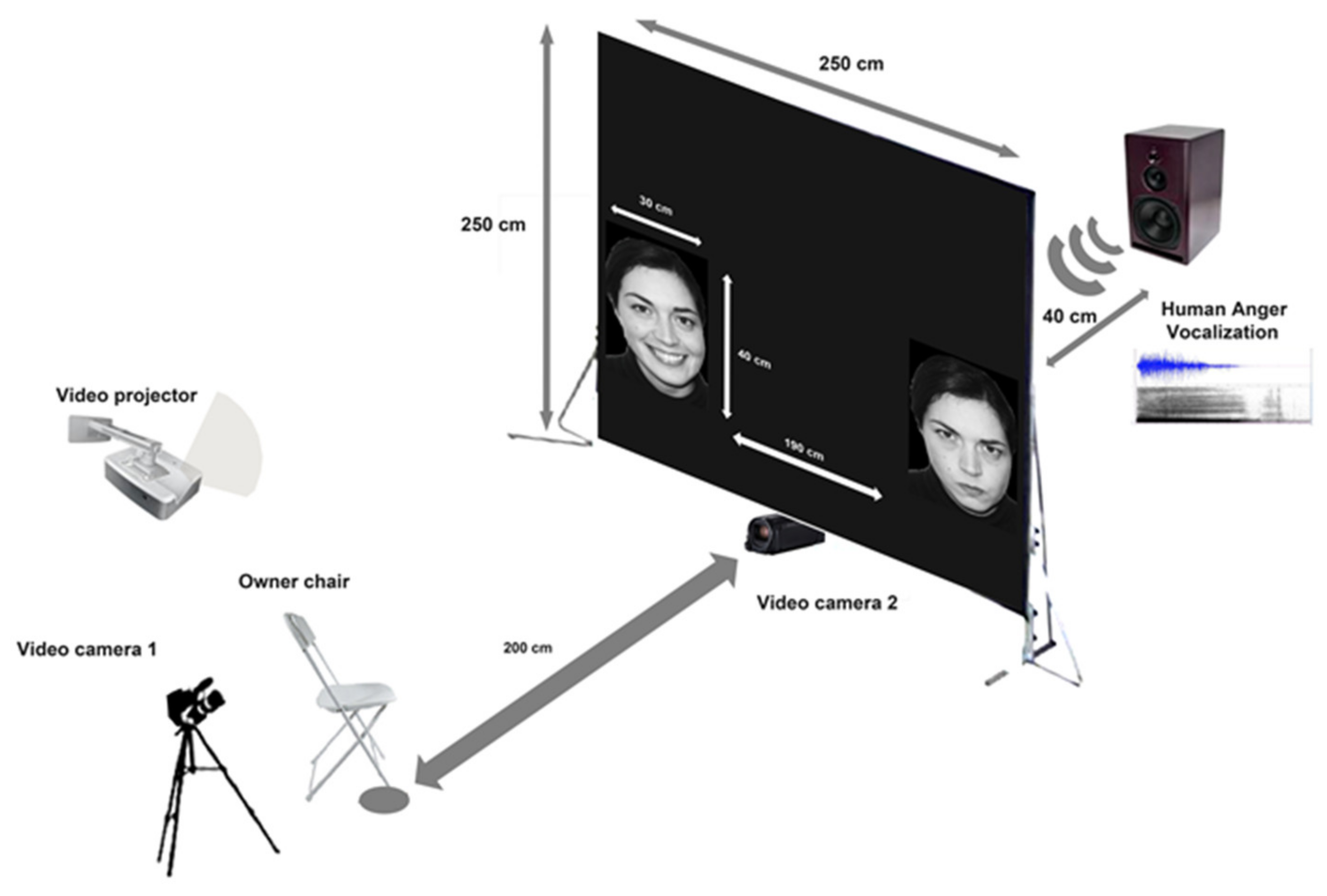

2.3. Experimental Setup

2.4. Procedure

2.5. Ethical Statement

2.6. Data Analysis

2.6.1. Looking Preference

2.6.2. Behavioral Score

3. Results

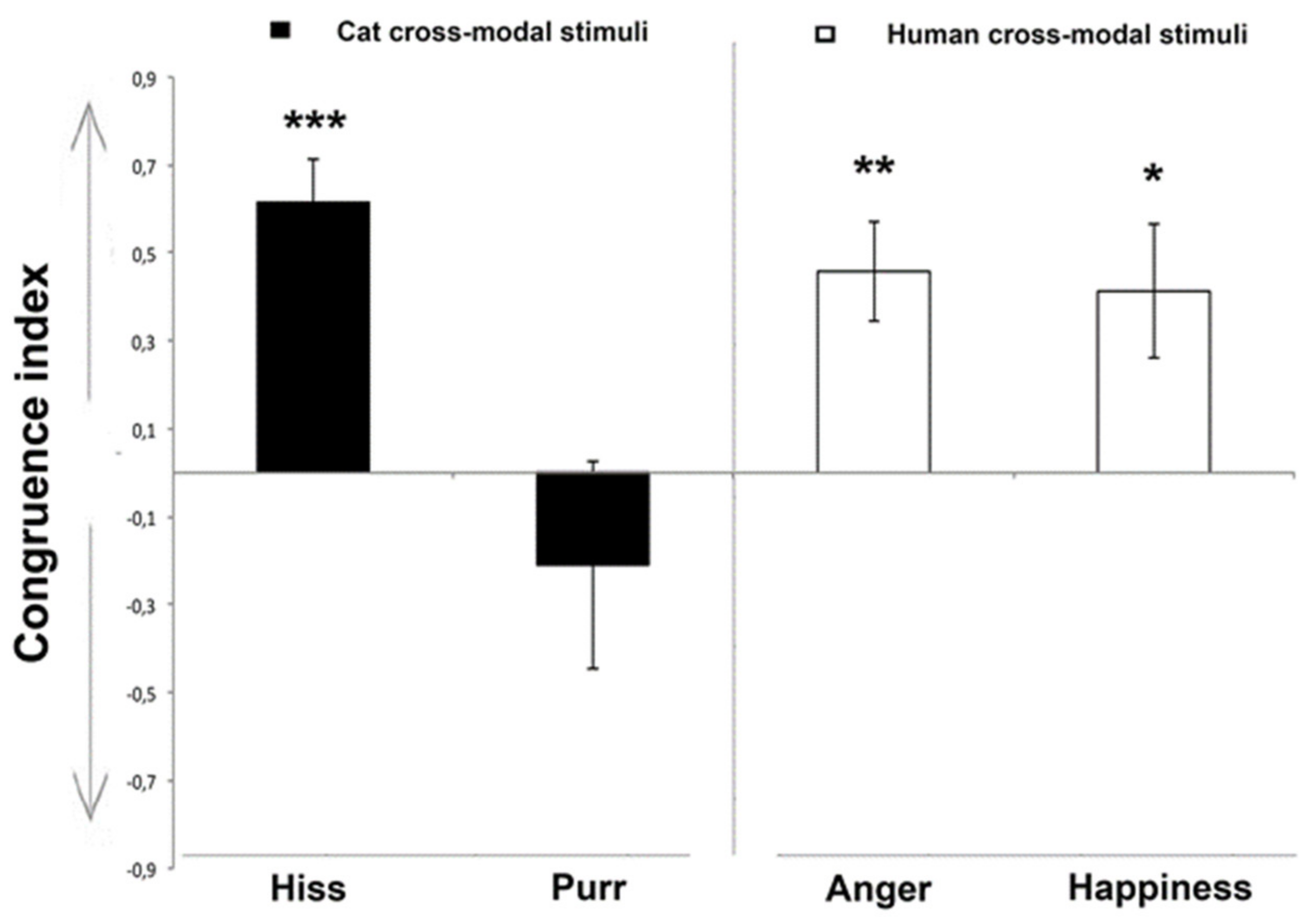

3.1. Looking Preference

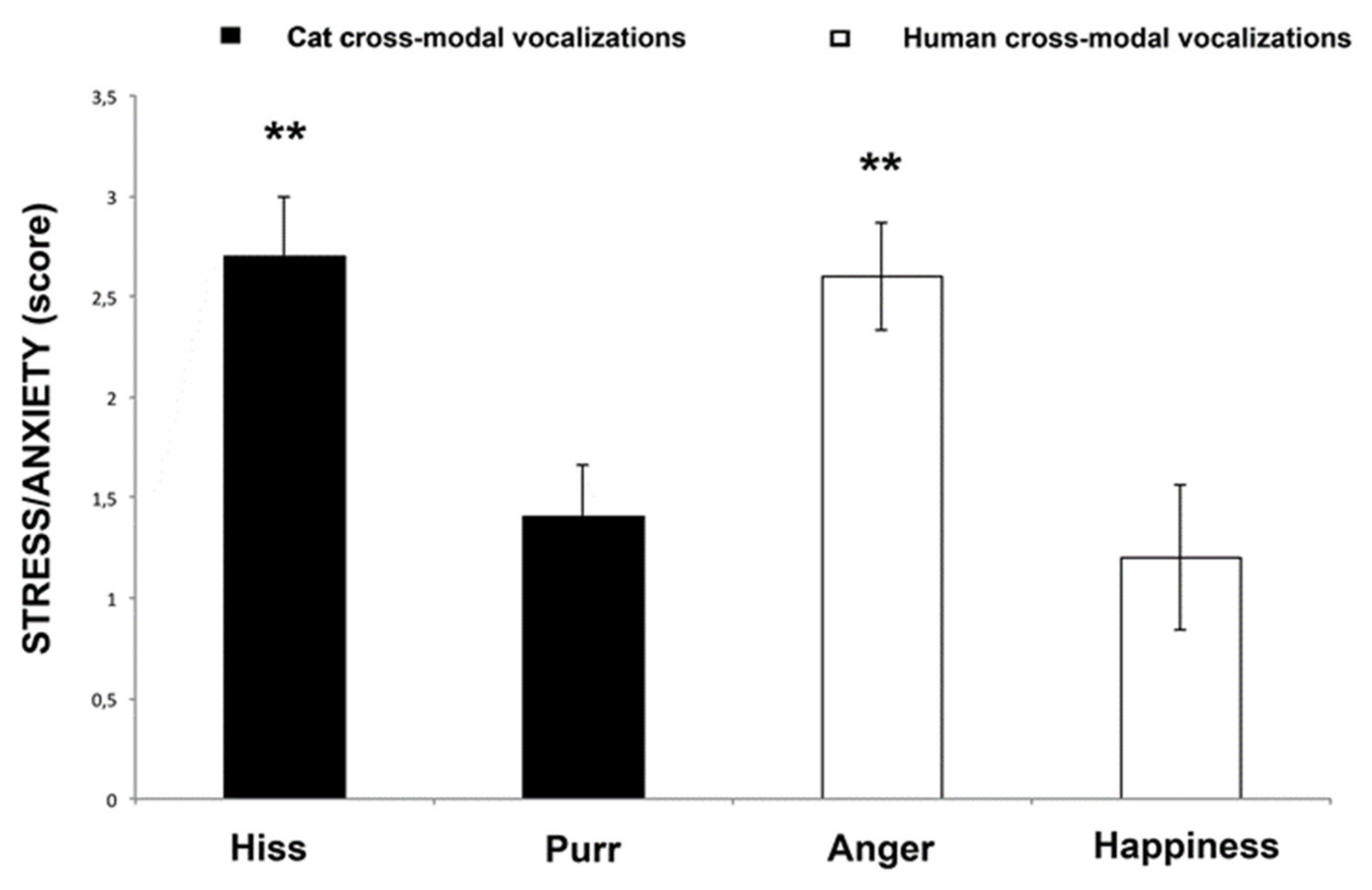

3.2. Behavioral Score

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest


| Subject | Age at Adoption | Current Age (Years) |
|---|---|---|
| 1 | 5 months | 11 |
| 2 | 1 week | 4 |
| 3 | 1 month | 3 |
| 4 | 1 month | 3 |
| 5 | 4 months | 8 |
| 6 | 1 week | 7 |
| 7 | 1 week | 6 |
| 8 | 1 week | 6 |
| 9 | 6 months | 7 |
| 10 | 2 months | 9 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Quaranta, A.; d’Ingeo, S.; Amoruso, R.; Siniscalchi, M. Emotion Recognition in Cats. Animals 2020, 10, 1107. https://doi.org/10.3390/ani10071107