VS-SLAM: Robust SLAM Based on LiDAR Loop Closure Detection with Virtual Descriptors and Selective Memory Storage in Challenging Environments

Abstract

1. Introduction

- To mitigate the sensitivity of existing descriptors to translational changes, we propose a novel virtual descriptor technique that enhances translational invariance and improves loop closure detection accuracy.

- To further improve the accuracy of loop closure detection in structurally similar environments, we propose an efficient and reliable selective memory storage technique based on scene recognition and key descriptor evaluation, which also reduces the memory consumption of the loop closure database.

- Based on the two proposed techniques, we develop a LiDAR SLAM system with loop closure detection that maintains high accuracy and robustness even in challenging, structurally similar environments.

- Experiments in self-built simulated and real-world environments, and on public datasets, demonstrate that VS-SLAM outperforms state-of-the-art methods in memory efficiency, accuracy, and robustness.

2. Related Work

2.1. Sensitivity of LiDAR Descriptors to Translation Changes

2.2. Accuracy and Memory Consumption of Loop Closure Detection

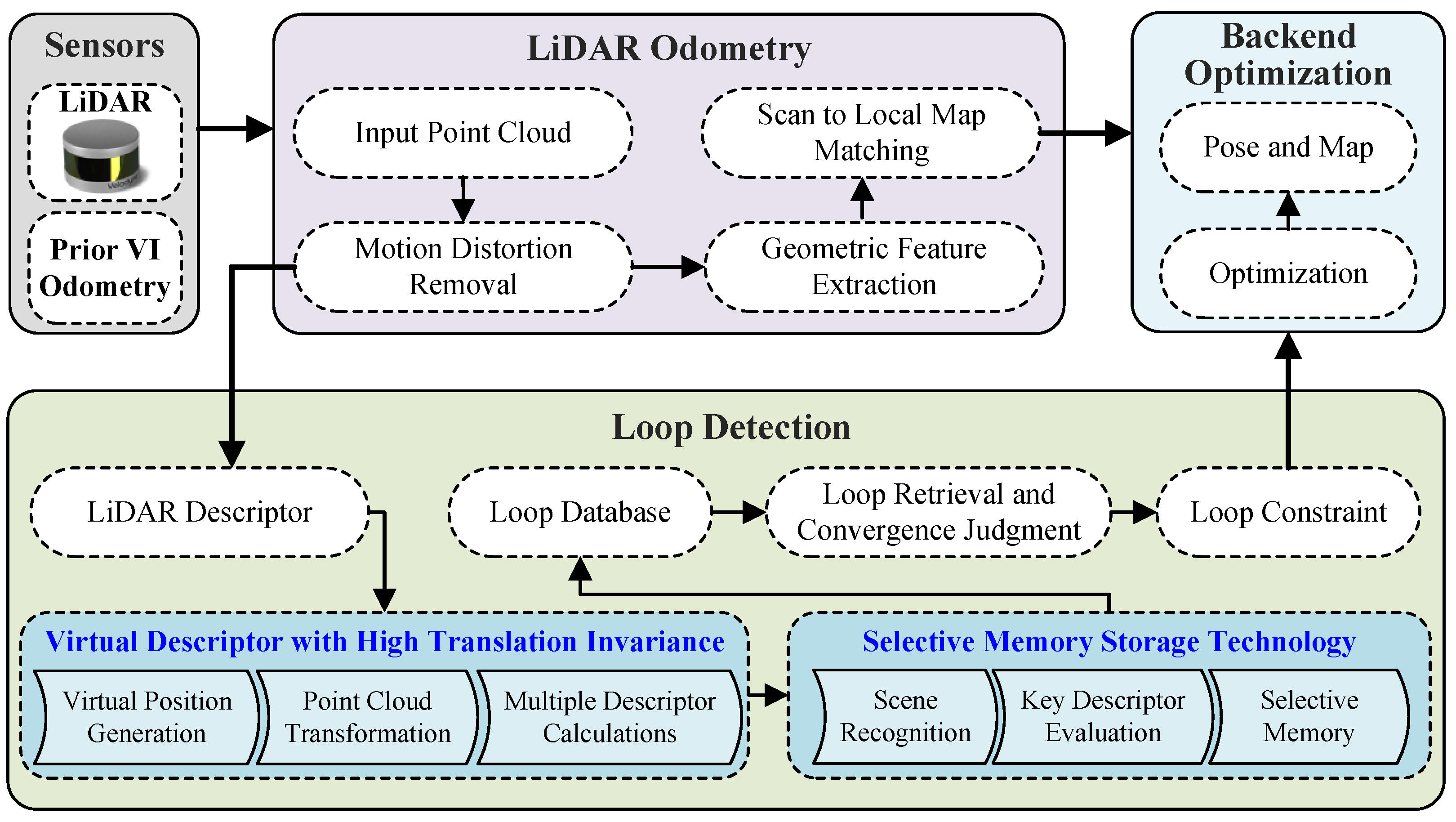

3. System Framework

4. Methods

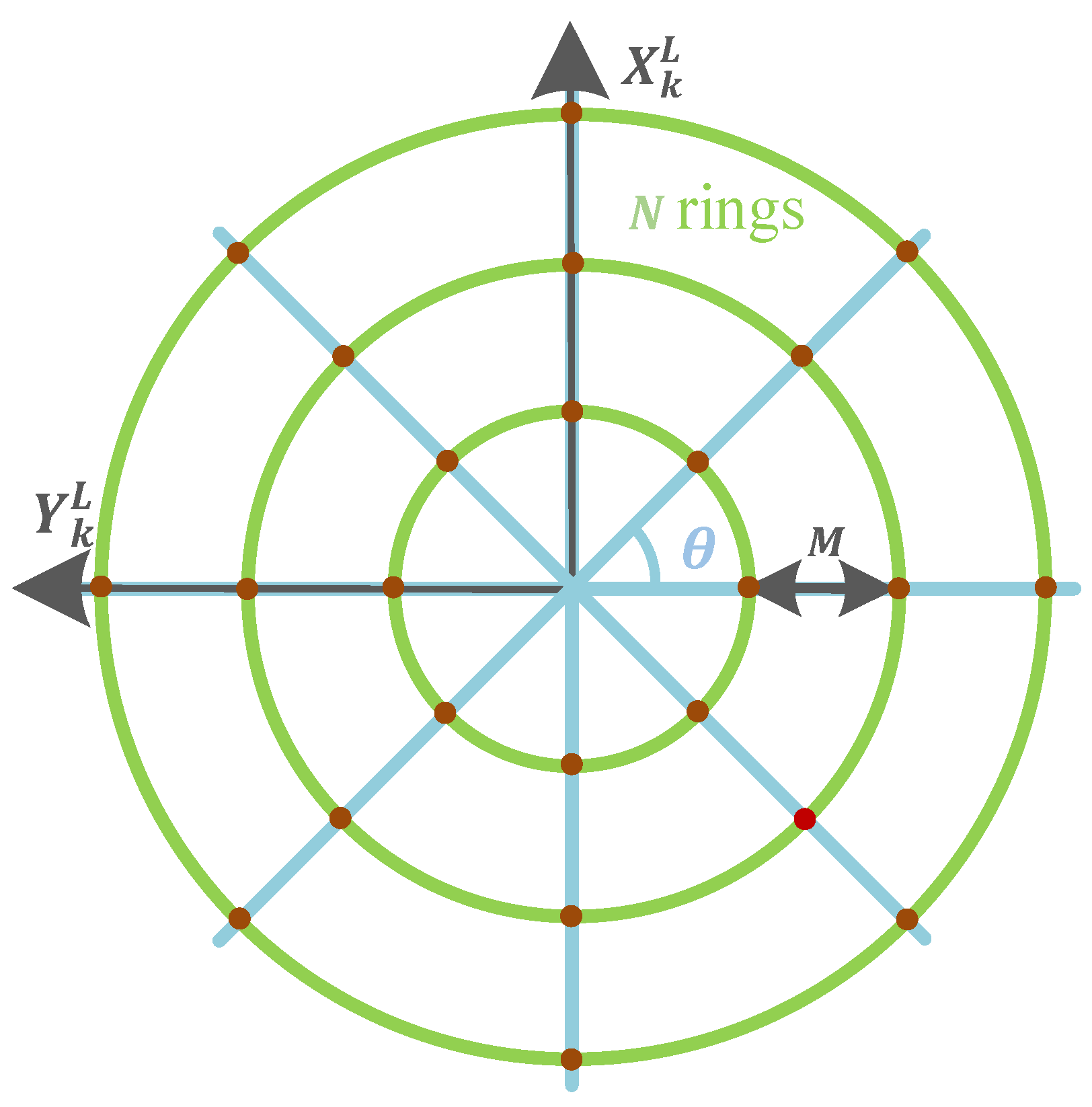

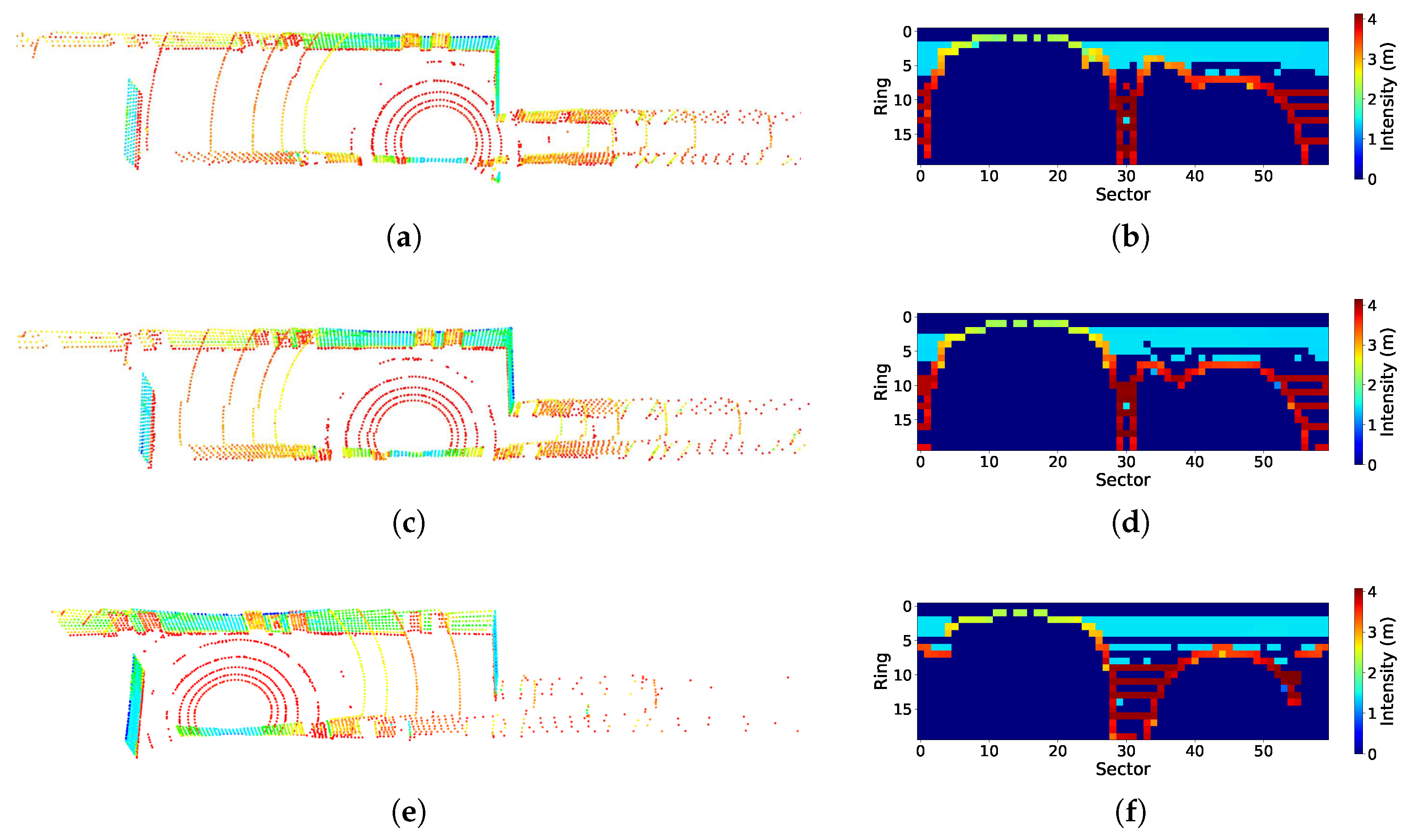

4.1. Virtual Descriptor Technique
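The contributions list states that virtual descriptors reduce the sensitivity of existing descriptors to translational changes, but this excerpt does not say how they are built. As a purely illustrative sketch, the code below assumes a Scan Context-style polar descriptor (Kim and Kim, listed in the references) and approximates translational tolerance by building extra descriptors from the same scan at a few virtual sensor origins offset by ±`step` along x and y. A revisit is then scored by the minimum distance over these virtual descriptors. The function names, the five-origin layout, the grid sizes, and `step` are hypothetical choices, not VS-SLAM's actual construction.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Descriptor = List[List[float]]  # num_rings x num_sectors grid

# Hypothetical sketch: Scan Context-style descriptors plus "virtual" copies
# built at shifted sensor origins. Not the VS-SLAM implementation.


def scan_context(points: Sequence[Point], num_rings: int = 10, num_sectors: int = 20,
                 max_range: float = 20.0,
                 origin: Tuple[float, float] = (0.0, 0.0)) -> Descriptor:
    """Polar grid around `origin`; each cell keeps the max height z (assumes z >= 0)."""
    desc = [[0.0] * num_sectors for _ in range(num_rings)]
    ox, oy = origin
    for x, y, z in points:
        dx, dy = x - ox, y - oy
        r = math.hypot(dx, dy)
        if r >= max_range:
            continue
        ring = int(r / max_range * num_rings)
        theta = math.atan2(dy, dx) % (2.0 * math.pi)
        sector = min(int(theta / (2.0 * math.pi) * num_sectors), num_sectors - 1)
        desc[ring][sector] = max(desc[ring][sector], z)
    return desc


def sc_distance(a: Descriptor, b: Descriptor) -> float:
    """Column-wise cosine distance, minimised over sector shifts (yaw invariance)."""
    num_sectors = len(a[0])
    best = math.inf
    for shift in range(num_sectors):
        total, valid = 0.0, 0
        for s in range(num_sectors):
            col_a = [row[s] for row in a]
            col_b = [row[(s + shift) % num_sectors] for row in b]
            norm_a = math.sqrt(sum(v * v for v in col_a))
            norm_b = math.sqrt(sum(v * v for v in col_b))
            if norm_a == 0.0 or norm_b == 0.0:
                continue  # skip empty columns, as Scan Context does
            dot = sum(p * q for p, q in zip(col_a, col_b))
            total += 1.0 - dot / (norm_a * norm_b)
            valid += 1
        if valid:
            best = min(best, total / valid)
    return best


def virtual_descriptors(points: Sequence[Point], step: float = 1.0) -> List[Descriptor]:
    """Real origin plus four hypothetical virtual origins at +/-step along x and y."""
    origins = [(0.0, 0.0), (step, 0.0), (-step, 0.0), (0.0, step), (0.0, -step)]
    return [scan_context(points, origin=o) for o in origins]


def virtual_distance(query: Sequence[Point], stored: Descriptor, step: float = 1.0) -> float:
    """Best match between a stored descriptor and any virtual descriptor of the query."""
    return min(sc_distance(d, stored) for d in virtual_descriptors(query, step))


if __name__ == "__main__":
    # Toy corridor: two side walls and an end wall (integer coordinates).
    cloud = ([(float(i), 5.0, 2.0) for i in range(-8, 9)]
             + [(float(i), -5.0, 1.0) for i in range(-8, 9)]
             + [(8.0, float(i), 3.0) for i in range(-8, 9)])
    # Same place revisited with the sensor shifted by +1 along x.
    revisit = [(x - 1.0, y, z) for x, y, z in cloud]
    stored = scan_context(cloud)
    print("plain  :", sc_distance(scan_context(revisit), stored))
    print("virtual:", virtual_distance(revisit, stored))
```

Because the revisit in this toy case is an exact +1 shift along x, the virtual origin at (-1, 0) re-centres the query on the stored sensor position, so the virtual distance is essentially zero. It can never exceed the plain distance, since the real origin is one of the candidates. The price is that every extra virtual origin multiplies the descriptor computation.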

4.2. Selective Memory Storage Technique

4.2.1. Scene Recognition

4.2.2. Key Descriptor Evaluation

4.2.3. Selective Memory Storage
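According to the contributions list, selective memory storage combines scene recognition with key descriptor evaluation to decide which descriptors enter the loop closure database. The excerpt gives no details of either step, so the sketch below shows only one hypothetical way such a gate could work. A stand-in scene recognizer flags a self-similar segment when the current descriptor is close to every descriptor in a short recent window. A stand-in key-descriptor test then admits a descriptor only if it is farther than a novelty threshold from everything already stored, with the threshold raised inside self-similar segments. The class name, thresholds, window size, and Euclidean distance are all assumptions.

```python
import math
from collections import deque
from typing import Deque, List, Sequence


def l2(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two flat descriptors (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


class SelectiveMemory:
    """Hypothetical selective store for loop closure descriptors.

    Both decision rules and all thresholds are illustrative assumptions,
    not the rules used in VS-SLAM.
    """

    def __init__(self, novelty_thresh: float = 0.5, repetitive_factor: float = 2.0,
                 window: int = 5, similar_thresh: float = 0.2) -> None:
        self.database: List[List[float]] = []
        self.recent: Deque[List[float]] = deque(maxlen=window)
        self.novelty_thresh = novelty_thresh
        self.repetitive_factor = repetitive_factor
        self.similar_thresh = similar_thresh

    def is_repetitive_scene(self, desc: Sequence[float]) -> bool:
        # Scene recognition stand-in: the window is full and every recent
        # descriptor is nearly identical to the current one (e.g. a long corridor).
        return (len(self.recent) == self.recent.maxlen
                and all(l2(desc, r) < self.similar_thresh for r in self.recent))

    def is_key_descriptor(self, desc: Sequence[float], thresh: float) -> bool:
        # Key descriptor evaluation stand-in: far enough from everything stored.
        return all(l2(desc, d) > thresh for d in self.database)

    def add(self, desc: Sequence[float]) -> bool:
        """Store `desc` only if it passes the gate; returns whether it was stored."""
        thresh = self.novelty_thresh
        if self.is_repetitive_scene(desc):
            thresh *= self.repetitive_factor  # stricter in self-similar scenes
        stored = self.is_key_descriptor(desc, thresh)
        if stored:
            self.database.append(list(desc))
        self.recent.append(list(desc))
        return stored


if __name__ == "__main__":
    corridor = SelectiveMemory()
    for i in range(100):  # slowly drifting descriptors, as along a uniform corridor
        corridor.add([1.0, 0.0, 0.01 * i])
    distinct = SelectiveMemory()
    for i in range(10):  # well-separated descriptors
        distinct.add([float(i), 0.0, 0.0])
    print(len(corridor.database), len(distinct.database))
```

In this toy run the 100 drifting corridor descriptors leave a single entry in the database, while all 10 well-separated descriptors are kept. The design choice illustrated is that memory is spent only on descriptors that are likely to be distinguishable at query time.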

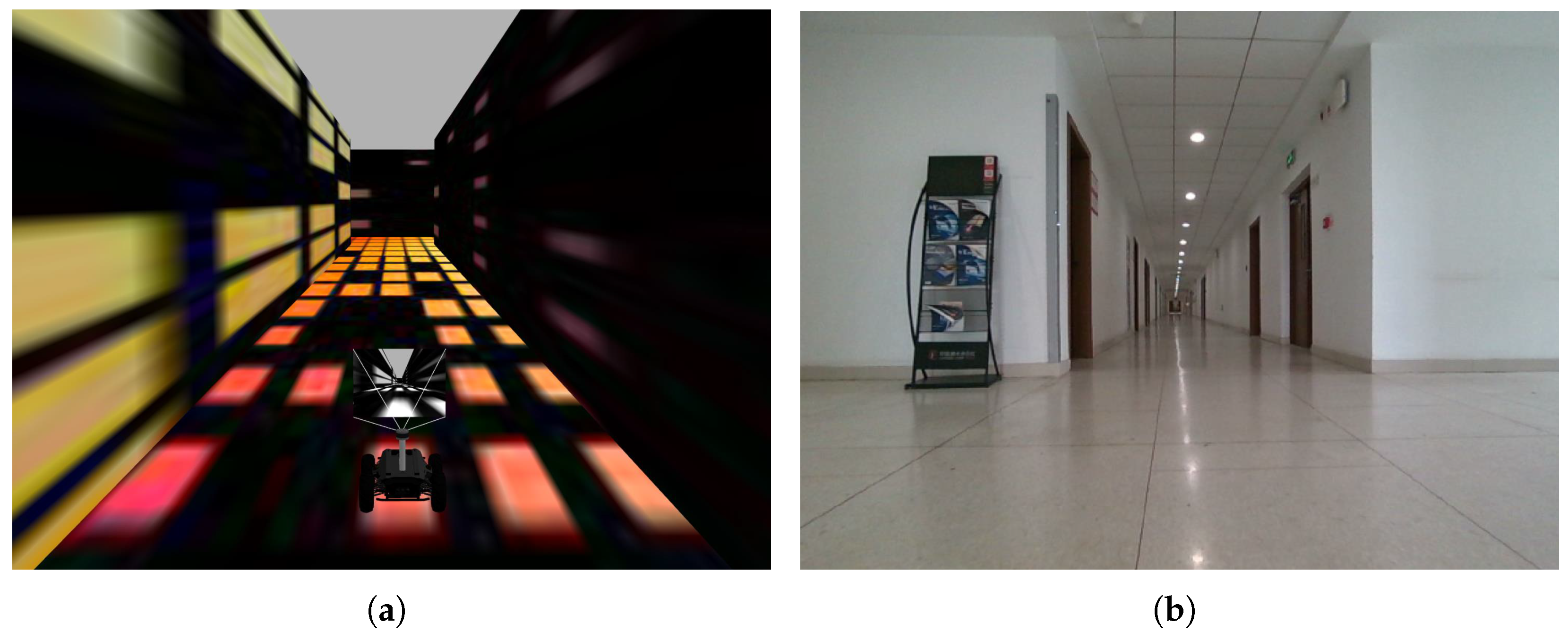

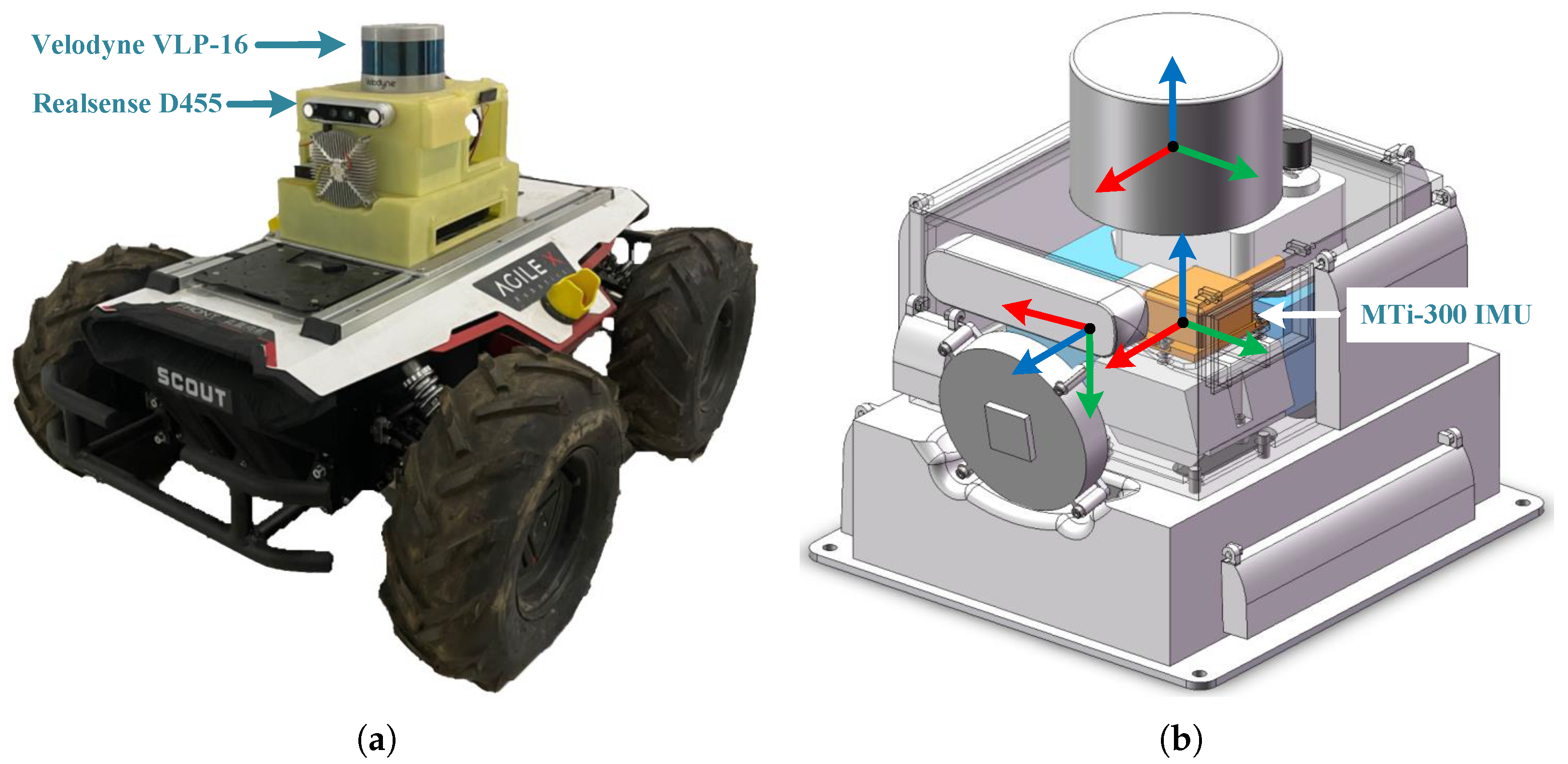

5. Experiments

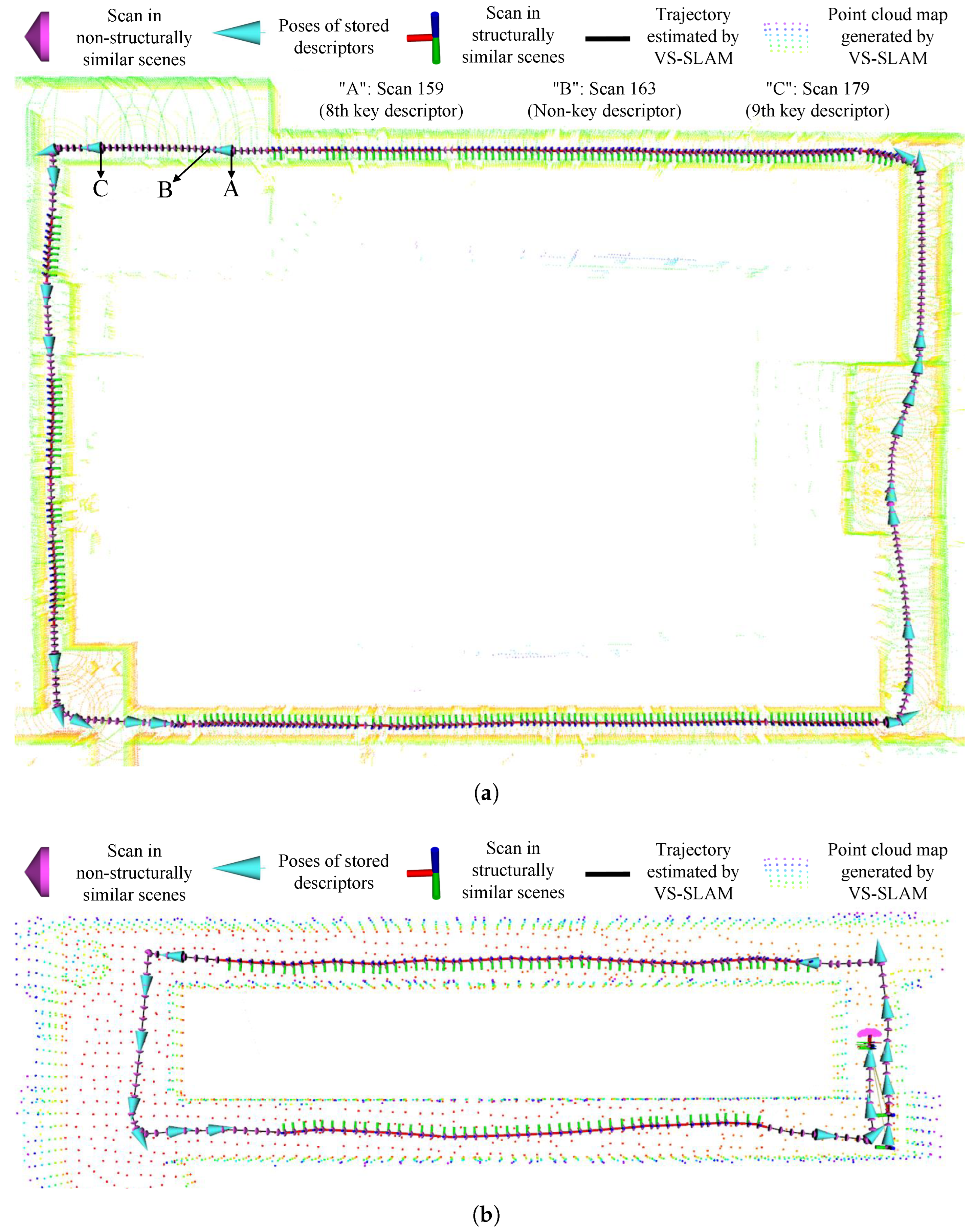

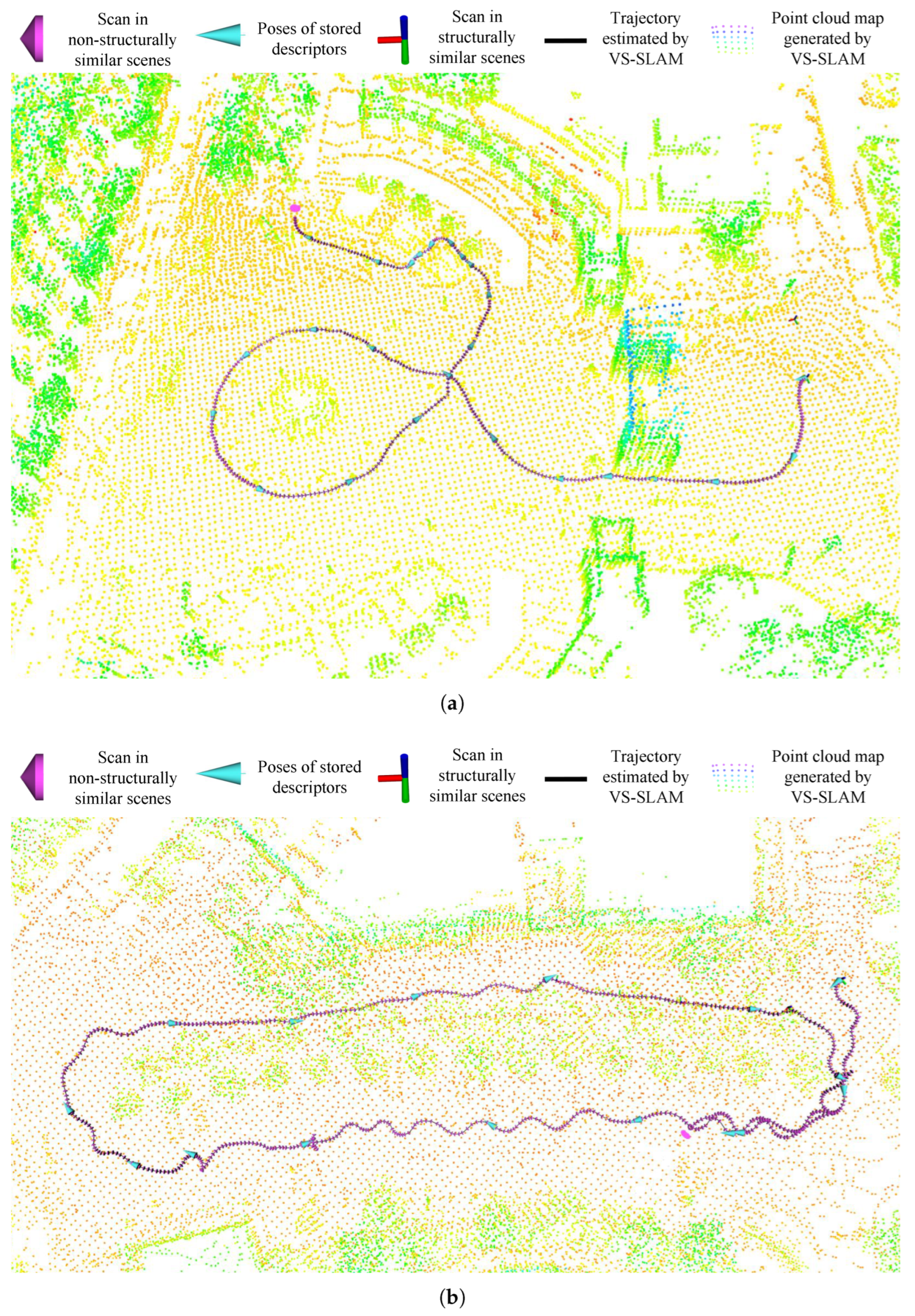

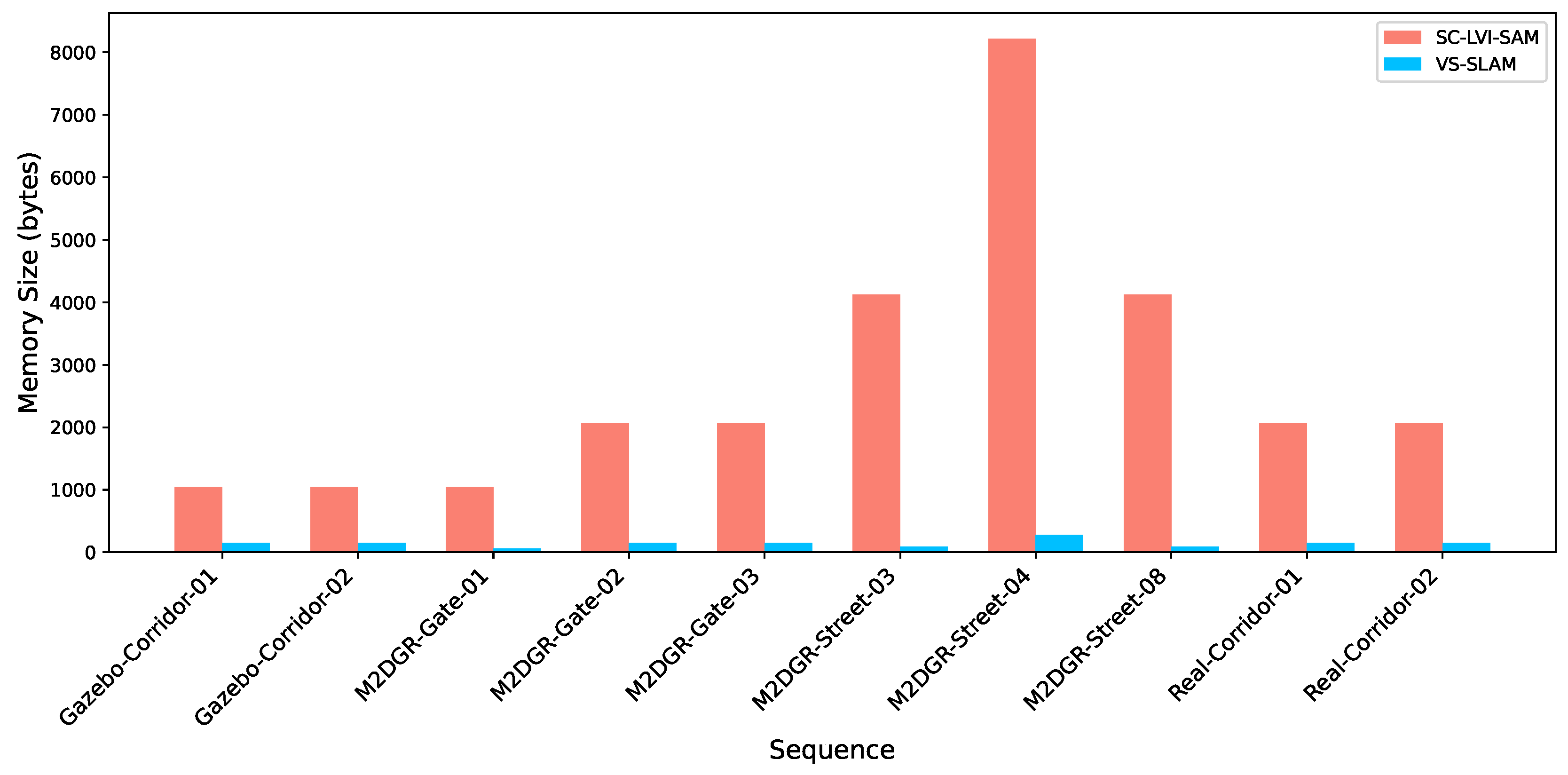

5.1. Selective Memory Storage Technique Testing

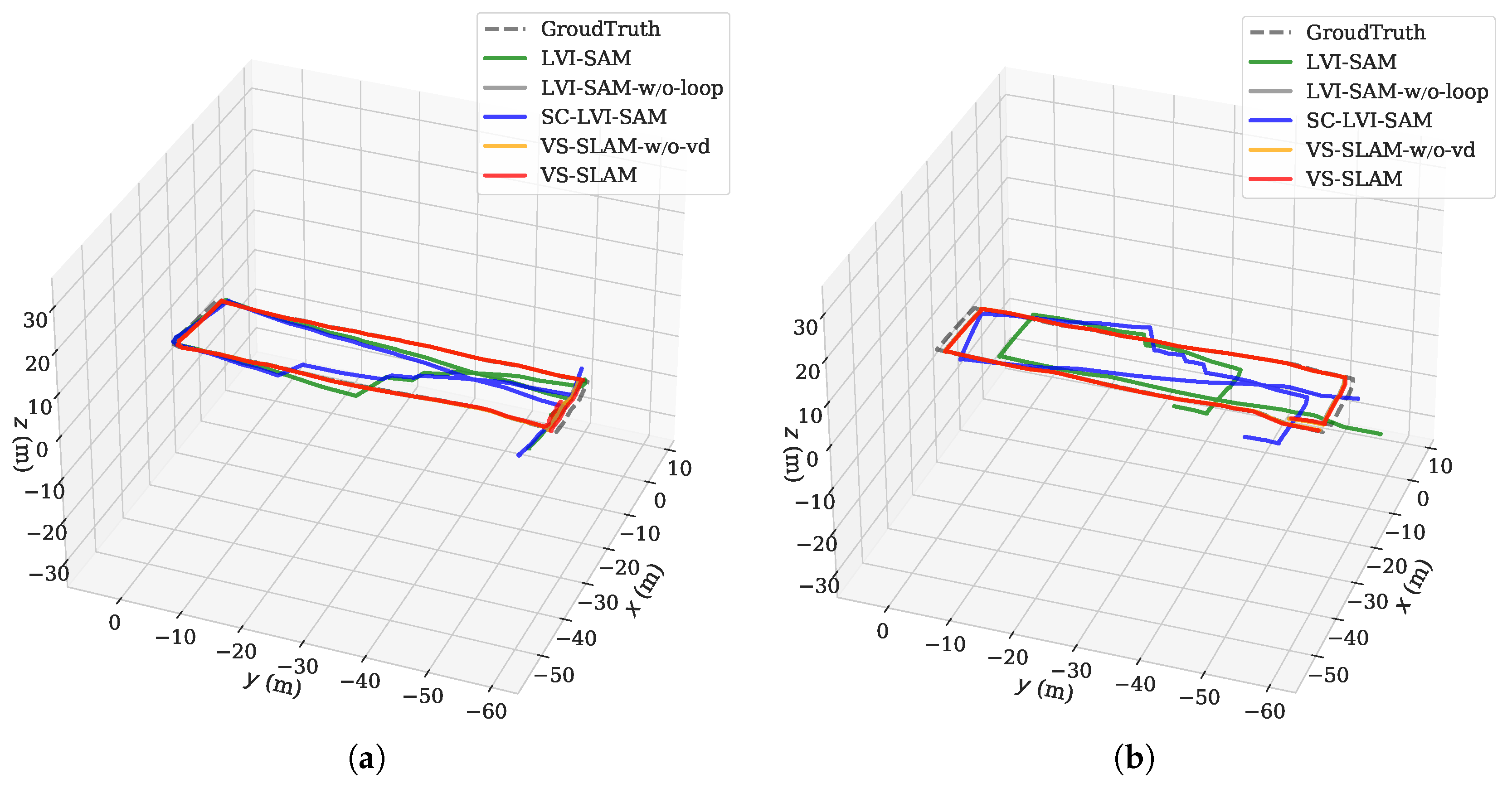

5.2. Comparison of Localization Accuracy and Ablation Study

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ebadi, K.; Bernreiter, L.; Biggie, H.; Catt, G.; Chang, Y.; Chatterjee, A.; Denniston, C.E.; Deschênes, S.P.; Harlow, K.; Khattak, S.; et al. Present and future of SLAM in extreme environments: The DARPA SubT challenge. IEEE Trans. Robot. 2024, 40, 936–959.

- Li, N.; Yao, Y.; Xu, X.; Peng, Y.; Wang, Z.; Wei, H. An Efficient LiDAR SLAM with Angle-Based Feature Extraction and Voxel-based Fixed-Lag Smoothing. IEEE Trans. Instrum. Meas. 2024, 73, 1–13.

- Zhou, H.; Yao, Z.; Lu, M. Lidar/UWB fusion based SLAM with anti-degeneration capability. IEEE Trans. Veh. Technol. 2021, 70, 820–830.

- Bi, Q.; Zhang, X.; Wen, J.; Pan, Z.; Zhang, S.; Wang, R.; Yuan, J. CURE: A Hierarchical Framework for Multi-Robot Autonomous Exploration Inspired by Centroids of Unknown Regions. IEEE Trans. Autom. Sci. Eng. 2023, 21, 3773–3786.

- Zou, Q.; Sun, Q.; Chen, L.; Nie, B.; Li, Q. A comparative analysis of LiDAR SLAM-based indoor navigation for autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 6907–6921.

- Roriz, R.; Cabral, J.; Gomes, T. Automotive LiDAR technology: A survey. IEEE Trans. Intell. Transp. Syst. 2022, 23, 6282–6297.

- Yin, H.; Liu, P.X.; Zheng, M. Stereo visual odometry with automatic brightness adjustment and feature tracking prediction. IEEE Trans. Instrum. Meas. 2023, 72, 1–11.

- Zou, Z.; Yuan, C.; Xu, W.; Li, H.; Zhou, S.; Xue, K.; Zhang, F. LTA-OM: Long-term association LiDAR–IMU odometry and mapping. J. Field Robot. 2024, 41, 2455–2474.

- Kim, G.; Kim, A. Scan Context: Egocentric spatial descriptor for place recognition within 3D point cloud map. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4802–4809.

- He, L.; Wang, X.; Zhang, H. M2DP: A novel 3D point cloud descriptor and its application in loop closure detection. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 231–237.

- Yuan, C.; Lin, J.; Zou, Z.; Hong, X.; Zhang, F. STD: Stable triangle descriptor for 3D place recognition. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 1897–1903.

- Zhang, Z.; Huang, Y.; Si, S.; Zhao, C.; Li, N.; Zhang, Y. OSK: A Novel LiDAR Occupancy Set Key-based Place Recognition Method in Urban Environment. IEEE Trans. Instrum. Meas. 2024, 73, 8502115.

- Jiao, J.; Ye, H.; Zhu, Y.; Liu, M. Robust odometry and mapping for multi-LiDAR systems with online extrinsic calibration. IEEE Trans. Robot. 2022, 38, 351–371.

- Jiao, J.; Zhu, Y.; Ye, H.; Huang, H.; Yun, P.; Jiang, L.; Wang, L.; Liu, M. Greedy-based feature selection for efficient LiDAR SLAM. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 5222–5228.

- Wang, H.; Wang, C.; Chen, C.L.; Xie, L. F-LOAM: Fast LiDAR odometry and mapping. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 4390–4396.

- Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. LIO-SAM: Tightly-coupled lidar inertial odometry via smoothing and mapping. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 5135–5142.

- Wang, H.; Wang, C.; Xie, L. Intensity Scan Context: Coding intensity and geometry relations for loop closure detection. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 2095–2101.

- Kim, G.; Choi, S.; Kim, A. Scan Context++: Structural place recognition robust to rotation and lateral variations in urban environments. IEEE Trans. Robot. 2021, 38, 1856–1874.

- Xu, M.; Lin, S.; Wang, J.; Chen, Z. A LiDAR SLAM System with Geometry Feature Group Based Stable Feature Selection and Three-Stage Loop Closure Optimization. IEEE Trans. Instrum. Meas. 2023, 72, 8504810.

- Zhao, X.; Wen, C.; Prakhya, S.M.; Yin, H.; Zhou, R.; Sun, Y.; Xu, J.; Bai, H.; Wang, Y. Multi-Modal Features and Accurate Place Recognition with Robust Optimization for Lidar-Visual-Inertial SLAM. IEEE Trans. Instrum. Meas. 2024, 73, 5033916.

- Yuan, C.; Lin, J.; Liu, Z.; Wei, H.; Hong, X.; Zhang, F. BTC: A Binary and Triangle Combined Descriptor for 3D Place Recognition. IEEE Trans. Robot. 2024, 40, 1580–1599.

- Yoon, I.; Islam, T.; Kim, K.; Kwon, C. Viewpoint-Aware Visibility Scoring for Point Cloud Registration in Loop Closure. IEEE Robot. Autom. Lett. 2024, 9, 4146–4153.

- Im, J.U.; Ki, S.W.; Won, J.H. Omni Point: 3D LiDAR-based feature extraction method for place recognition and point registration. IEEE Trans. Intell. Veh. 2024, 9, 5255–5271.

- Shi, C.; Chen, X.; Xiao, J.; Dai, B.; Lu, H. Fast and Accurate Deep Loop Closing and Relocalization for Reliable LiDAR SLAM. IEEE Trans. Robot. 2024, 40, 2620–2640.

- Ebadi, K.; Palieri, M.; Wood, S.; Padgett, C.; Agha-mohammadi, A.A. DARE-SLAM: Degeneracy-aware and resilient loop closing in perceptually-degraded environments. J. Intell. Robot. Syst. 2021, 102, 1–25.

- Song, Z.; Wang, R.; Wu, S.; Wang, Y.; Tong, Y.; Zhang, X. DR-SLAM: Vision-Inertial-Aided Degenerate-Robust LiDAR SLAM Based on Dual Confidence Ellipsoid Oblateness. In Proceedings of the 2024 IEEE 14th International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Copenhagen, Denmark, 16–19 July 2024; pp. 201–206.

- Zhang, J.; Kaess, M.; Singh, S. On degeneracy of optimization-based state estimation problems. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 809–816.

- Shan, T.; Englot, B.; Ratti, C.; Rus, D. LVI-SAM: Tightly-coupled lidar-visual-inertial odometry via smoothing and mapping. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 5692–5698.

- Chen, Z.; Xu, Y.; Yuan, S.; Xie, L. iG-LIO: An Incremental GICP-Based Tightly-Coupled LiDAR-Inertial Odometry. IEEE Robot. Autom. Lett. 2024, 9, 1883–1890.

- Tuna, T.; Nubert, J.; Nava, Y.; Khattak, S.; Hutter, M. X-ICP: Localizability-aware LiDAR registration for robust localization in extreme environments. IEEE Trans. Robot. 2024, 40, 452–471.

- Yin, J.; Li, A.; Li, T.; Yu, W.; Zou, D. M2DGR: A multi-sensor and multi-scenario slam dataset for ground robots. IEEE Robot. Autom. Lett. 2021, 7, 2266–2273.

- Qin, T.; Li, P.; Shen, S. VINS-Mono: A robust and versatile monocular visual-inertial state estimator. IEEE Trans. Robot. 2018, 34, 1004–1020.

- Grupp, M. evo: Python Package for the Evaluation of Odometry and SLAM. 2017. Available online: https://github.com/MichaelGrupp/evo (accessed on 6 December 2024).

- Lu, Y.; Song, D. Robust RGB-D odometry using point and line features. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3934–3942.

| Sequence | SC-LVI-SAM [9] D.C. | SC-LVI-SAM [9] M.S. | VS-SLAM-w/o-st D.C. | VS-SLAM-w/o-st M.S. | VS-SLAM D.C. | VS-SLAM M.S. |
|---|---|---|---|---|---|---|
| Gazebo-Corridor-01 | 200 | 1048 | 202 | 1048 | 17 | 152 |
| Gazebo-Corridor-02 | 212 | 1048 | 215 | 1048 | 17 | 152 |
| M2DGR-Gate-01 | 211 | 1048 | 218 | 1048 | 5 | 56 |
| M2DGR-Gate-02 | 419 | 2072 | 418 | 2072 | 25 | 152 |
| M2DGR-Gate-03 | 357 | 2072 | 360 | 2072 | 17 | 152 |
| M2DGR-Street-03 | 591 | 4120 | 590 | 4120 | 11 | 88 |
| M2DGR-Street-04 | 1362 | 8216 | 1355 | 8216 | 46 | 280 |
| M2DGR-Street-08 | 587 | 4120 | 580 | 4120 | 16 | 88 |
| Real-Corridor-01 | 434 | 2072 | 432 | 2072 | 23 | 152 |
| Real-Corridor-02 | 423 | 2072 | 431 | 2072 | 21 | 152 |
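The memory savings can be read directly off the table above. The short script below recomputes, for each sequence, the percentage drop in D.C. and M.S. from SC-LVI-SAM to VS-SLAM using only the listed numbers. It makes no assumption about the units of M.S., which this excerpt does not state; the helper names are illustrative.

```python
from typing import Dict, List, Tuple

# (sequence, SC-LVI-SAM D.C., SC-LVI-SAM M.S., VS-SLAM D.C., VS-SLAM M.S.),
# copied from the table above.
ROWS: List[Tuple[str, int, int, int, int]] = [
    ("Gazebo-Corridor-01", 200, 1048, 17, 152),
    ("Gazebo-Corridor-02", 212, 1048, 17, 152),
    ("M2DGR-Gate-01", 211, 1048, 5, 56),
    ("M2DGR-Gate-02", 419, 2072, 25, 152),
    ("M2DGR-Gate-03", 357, 2072, 17, 152),
    ("M2DGR-Street-03", 591, 4120, 11, 88),
    ("M2DGR-Street-04", 1362, 8216, 46, 280),
    ("M2DGR-Street-08", 587, 4120, 16, 88),
    ("Real-Corridor-01", 434, 2072, 23, 152),
    ("Real-Corridor-02", 423, 2072, 21, 152),
]


def reduction_pct(baseline: float, ours: float) -> float:
    """Relative reduction of `ours` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - ours) / baseline


def summarize(rows: List[Tuple[str, int, int, int, int]]) -> Dict[str, Tuple[float, float]]:
    """Map each sequence to its (D.C. reduction %, M.S. reduction %), rounded to 0.1."""
    return {
        name: (round(reduction_pct(base_dc, vs_dc), 1), round(reduction_pct(base_ms, vs_ms), 1))
        for name, base_dc, base_ms, vs_dc, vs_ms in rows
    }


if __name__ == "__main__":
    for name, (dc, ms) in summarize(ROWS).items():
        print(f"{name:20s} D.C. -{dc:5.1f}%   M.S. -{ms:5.1f}%")
```

On these rows, M.S. falls by between about 85.5% (Gazebo-Corridor-01 and -02) and 97.9% (M2DGR-Street-03 and -08), and D.C. falls by more than 90% on every sequence.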

| Sequence | LVI-SAM [28] | LVI-SAM-w/o-loop [28] | SC-LVI-SAM [9] | VS-SLAM-w/o-vd | VS-SLAM |
|---|---|---|---|---|---|
| Gazebo-Corridor-01 | 5.58 | 0.77 | 6.18 | 0.75 | 0.67 |
| Gazebo-Corridor-02 | 12.20 | 1.08 | 7.04 | 1.10 | 1.05 |
| M2DGR-Gate-01 | 4.58 | 2.26 | 0.14 | 0.14 | 0.13 |
| M2DGR-Gate-02 | 0.30 | 0.30 | 0.31 | 0.31 | 0.30 |
| M2DGR-Gate-03 | 0.15 | 0.15 | 0.15 | 0.15 | 0.14 |
| M2DGR-Street-03 | 0.15 | 0.15 | 0.15 | 0.15 | 0.13 |
| M2DGR-Street-04 | 1.73 | 1.71 | 1.64 | 1.67 | 1.63 |
| M2DGR-Street-08 | 10.56 | 2.47 | 0.71 | 0.71 | 0.65 |
| Real-Corridor-01 | 0.18 | 3.06 | 49.59 | 0.14 | 0.11 |
| Real-Corridor-02 | 0.19 | 2.18 | 56.61 | 0.16 | 0.10 |
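The accuracy table above can be checked in the same way. The script below confirms that VS-SLAM has the lowest (or tied-lowest) value in every row, and computes its relative error reduction against SC-LVI-SAM and against VS-SLAM-w/o-vd, the ablation that presumably removes the virtual descriptor technique. This excerpt does not state the error metric or its units, so the values are treated as plain numbers; the helper names are illustrative.

```python
from typing import Dict, List

COLUMNS = ["LVI-SAM", "LVI-SAM-w/o-loop", "SC-LVI-SAM", "VS-SLAM-w/o-vd", "VS-SLAM"]

# Error values copied from the table above, in the column order of COLUMNS.
TABLE: Dict[str, List[float]] = {
    "Gazebo-Corridor-01": [5.58, 0.77, 6.18, 0.75, 0.67],
    "Gazebo-Corridor-02": [12.20, 1.08, 7.04, 1.10, 1.05],
    "M2DGR-Gate-01": [4.58, 2.26, 0.14, 0.14, 0.13],
    "M2DGR-Gate-02": [0.30, 0.30, 0.31, 0.31, 0.30],
    "M2DGR-Gate-03": [0.15, 0.15, 0.15, 0.15, 0.14],
    "M2DGR-Street-03": [0.15, 0.15, 0.15, 0.15, 0.13],
    "M2DGR-Street-04": [1.73, 1.71, 1.64, 1.67, 1.63],
    "M2DGR-Street-08": [10.56, 2.47, 0.71, 0.71, 0.65],
    "Real-Corridor-01": [0.18, 3.06, 49.59, 0.14, 0.11],
    "Real-Corridor-02": [0.19, 2.18, 56.61, 0.16, 0.10],
}


def relative_reduction(reference: float, value: float) -> float:
    """Percentage by which `value` is lower than `reference`."""
    return 100.0 * (reference - value) / reference


def report(table: Dict[str, List[float]]) -> Dict[str, dict]:
    sc = COLUMNS.index("SC-LVI-SAM")
    wovd = COLUMNS.index("VS-SLAM-w/o-vd")
    vs = COLUMNS.index("VS-SLAM")
    out = {}
    for seq, row in table.items():
        out[seq] = {
            # VS-SLAM is last, so row[:vs] holds every competing column.
            "vs_best": row[vs] <= min(row[:vs]),
            "vs_sc_pct": round(relative_reduction(row[sc], row[vs]), 1),
            "vs_wovd_pct": round(relative_reduction(row[wovd], row[vs]), 1),
        }
    return out


if __name__ == "__main__":
    for seq, r in report(TABLE).items():
        print(f"{seq:20s} best={r['vs_best']}  vs SC-LVI-SAM -{r['vs_sc_pct']}%  "
              f"vs w/o-vd -{r['vs_wovd_pct']}%")
```

VS-SLAM is lowest on every sequence (tied with both LVI-SAM variants on M2DGR-Gate-02). Relative to VS-SLAM-w/o-vd the reduction ranges from about 2.4% (M2DGR-Street-04) to 37.5% (Real-Corridor-02), while the largest gaps to SC-LVI-SAM occur on the two Real-Corridor sequences, where SC-LVI-SAM reports 49.59 and 56.61.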

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Song, Z.; Zhang, X.; Zhang, S.; Wu, S.; Wang, Y. VS-SLAM: Robust SLAM Based on LiDAR Loop Closure Detection with Virtual Descriptors and Selective Memory Storage in Challenging Environments. Actuators 2025, 14, 132. https://doi.org/10.3390/act14030132


Chicago/Turabian style: Song, Zhixing, Xuebo Zhang, Shiyong Zhang, Songyang Wu, and Youwei Wang. 2025. "VS-SLAM: Robust SLAM Based on LiDAR Loop Closure Detection with Virtual Descriptors and Selective Memory Storage in Challenging Environments" Actuators 14, no. 3: 132. https://doi.org/10.3390/act14030132
