4.1. Single Layer Back-Propagation Neural Network (SLBPNN)

PMSM parameter estimation methods can be broadly classified into model-based approaches [

16,

17,

18] and data-driven approaches [

19,

20,

21]. In the model-based approach, when the mathematical model is linear, the estimation error is small, enabling high-accuracy results. However, PMSM systems exhibit nonlinearities, making it difficult to represent them with a simplified mathematical model, which significantly reduces estimation accuracy. Furthermore, due to the nonlinear relationships among system variables, developing a model-based estimation scheme for PMSM control is highly complex and often impractical. In contrast, data-driven approaches estimate parameters by utilizing both system input and output data. These methods also achieve high accuracy for linear systems; however, when applied to nonlinear systems, the complexity increases, resulting in high-dimensional, multivariable datasets that require extensive analysis. To address this limitation, an alternative strategy has been proposed in which variables with strong correlations to the target parameters are pre-selected, thereby enabling parameter estimation with significantly reduced data analysis requirements. In this study, a PMSM parameter estimation method based on an artificial neural network (ANN) is proposed [

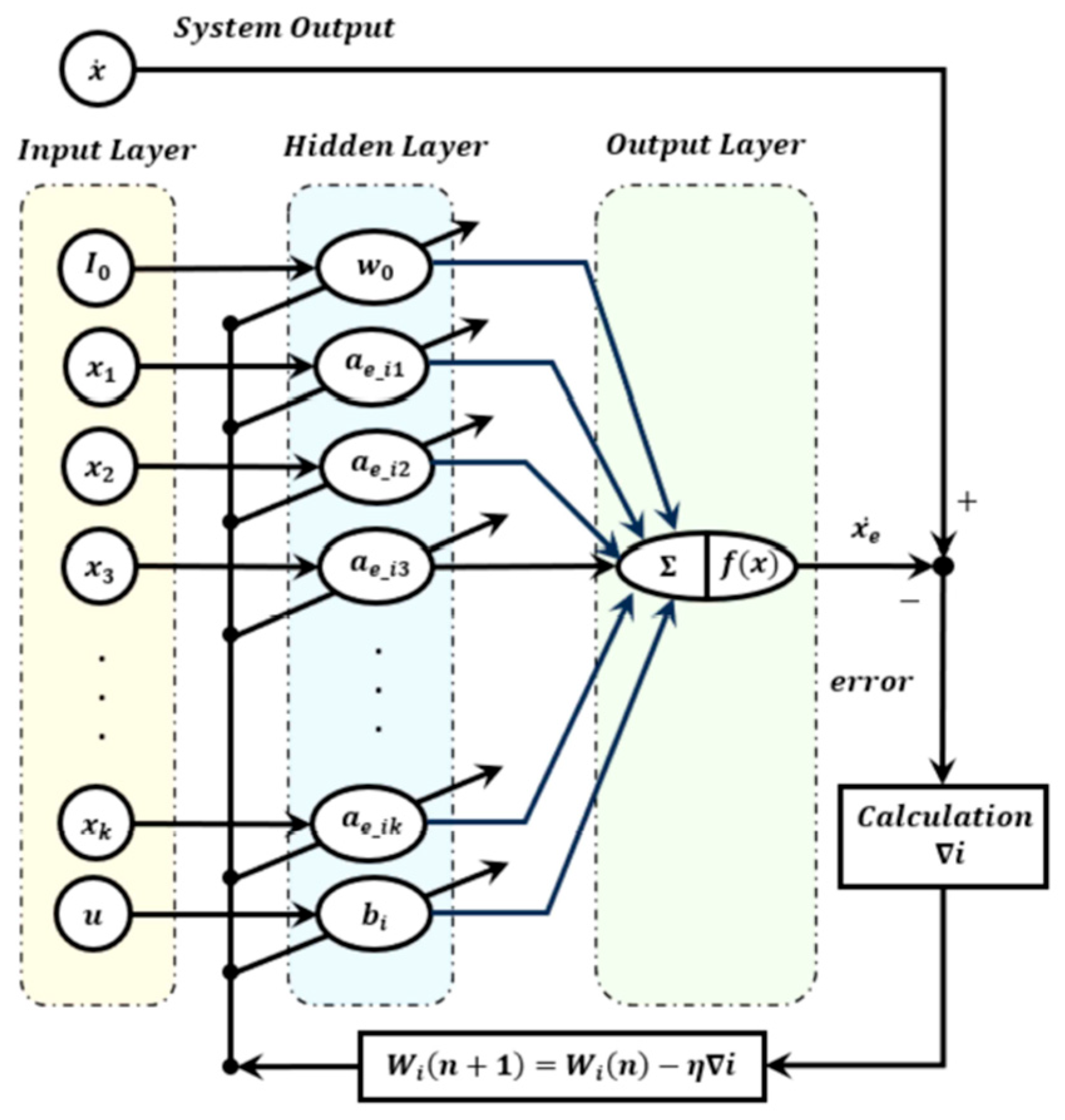

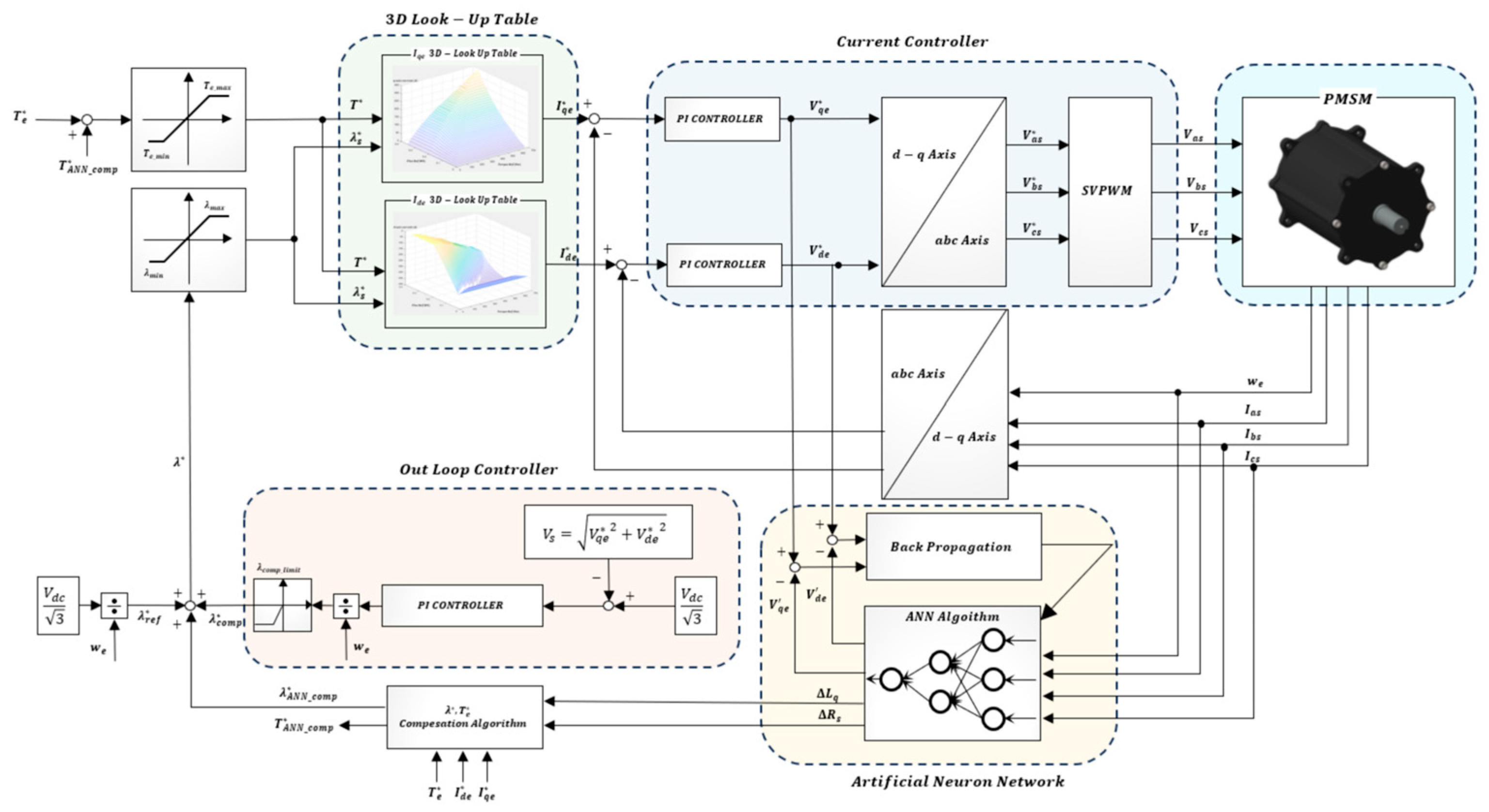

21]. In the ANN-based approach, the target output parameter to be estimated and the relevant input variables of the system are first selected. Subsequently, the number of layers and nodes within the ANN architecture is determined, and the relationships between each node and the target output variable are modeled within the network. The back-propagation (BP) algorithm is then applied to each node of the designed ANN to iteratively update the connection weights (W) by minimizing the estimation error. Through this learning process, the ANN captures the nonlinear mapping between the selected inputs and the target parameter. A block diagram of the proposed ANN-based parameter estimation system is presented in

Figure 6, illustrating the overall structure and data flow of the method.

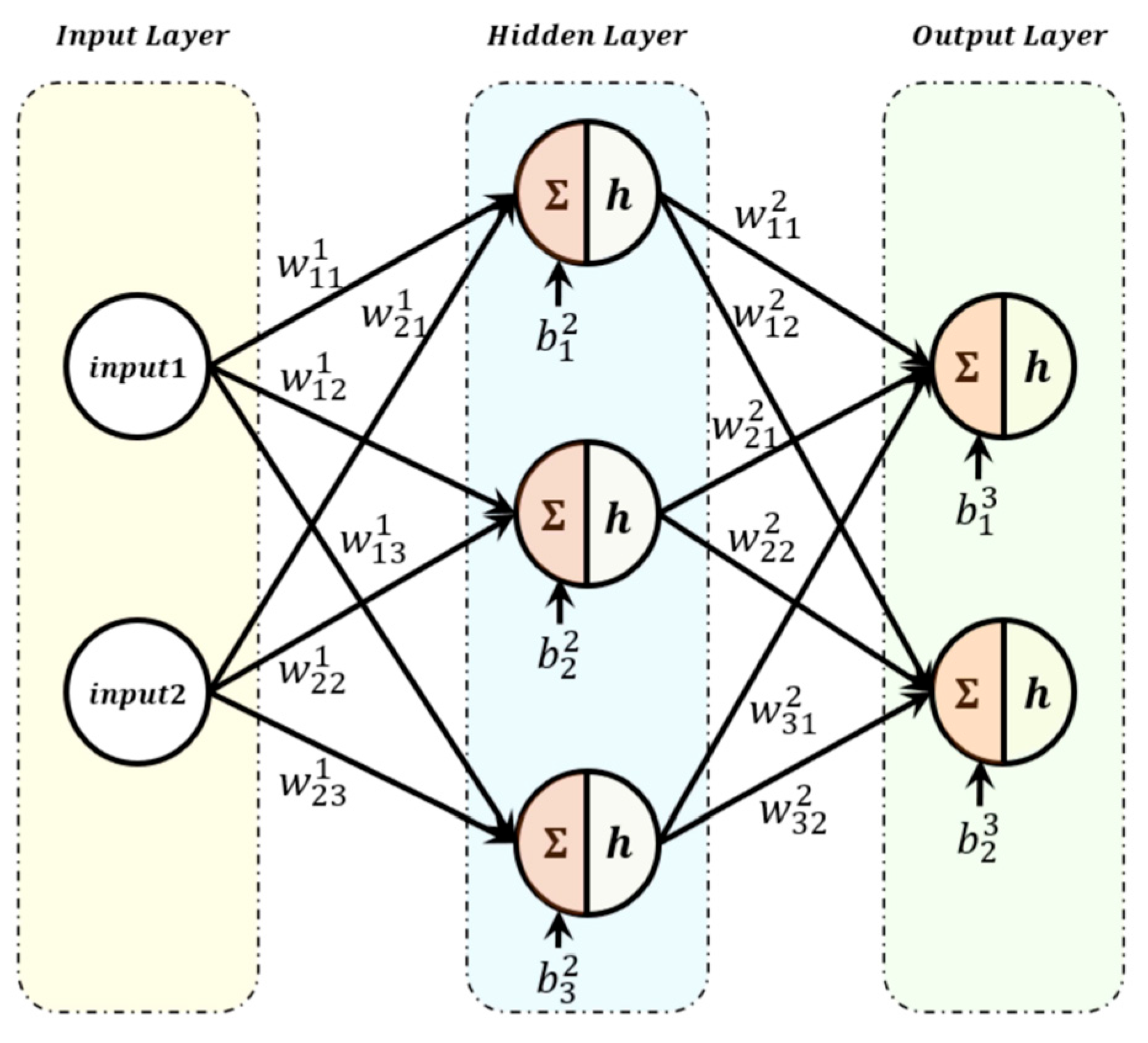

An artificial neural network (ANN) fundamentally consists of three types of layers: an input layer, one or more hidden layers, and an output layer. Each layer is composed of multiple neurons, which serve as the basic processing units.

Figure 6 illustrates the basic structure of an ANN with a single hidden layer. The architecture of an ANN can be designed flexibly by selecting the number of neurons, the input variables, and the number of hidden layers according to the application requirements. Each neuron computes a weighted sum of its inputs plus a bias (a linear operation) and passes the result through an activation function, which is typically nonlinear, to produce its output. By employing nonlinear activation functions in the hidden layers, the ANN can effectively model and track system nonlinearities. A variety of nonlinear activation functions are available for hidden layers, and the choice of activation function significantly influences both the computational complexity and the performance of the ANN. Performance can be further enhanced by selecting appropriate input and output variables for the network. In this study, the sigmoid function was selected among the various activation functions. Using the chosen activation function, the most suitable input and output variables for PMSM parameter estimation were identified. The final design and implementation of the ANN-based approach are presented to evaluate the parameter estimation performance for the PMSM system.
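The neuron operation described above can be sketched as a minimal forward pass through one sigmoid hidden layer. The linear output layer and the function names `sigmoid` and `forward` are illustrative assumptions, not the network used in this study; the text specifies only the hidden-layer activation.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-z)), written to avoid overflow in exp()."""
    if z >= 0.0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # z < 0, so exp(z) cannot overflow
    return ez / (1.0 + ez)


def forward(x: Sequence[float],
            w_hidden: Sequence[Sequence[float]], b_hidden: Sequence[float],
            w_out: Sequence[Sequence[float]], b_out: Sequence[float]) -> List[float]:
    """Forward pass of a network with one sigmoid hidden layer and a linear output layer.

    Hidden neuron j: h_j = sigmoid(sum_i w_hidden[j][i] * x[i] + b_hidden[j])
    Output neuron k: y_k = sum_j w_out[k][j] * h_j + b_out[k]
    """
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(w_hidden, b_hidden)]
    return [sum(w * hj for w, hj in zip(row, h)) + b
            for row, b in zip(w_out, b_out)]


# One input, one hidden neuron, one output: h = sigmoid(0) = 0.5, y = 2 * 0.5 + 0.5
print(forward([0.0], [[1.0]], [0.0], [[2.0]], [0.5]))
```

The weighted sum is the linear part of each neuron; the sigmoid is the only nonlinearity, which is what lets the hidden layer represent nonlinear mappings.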

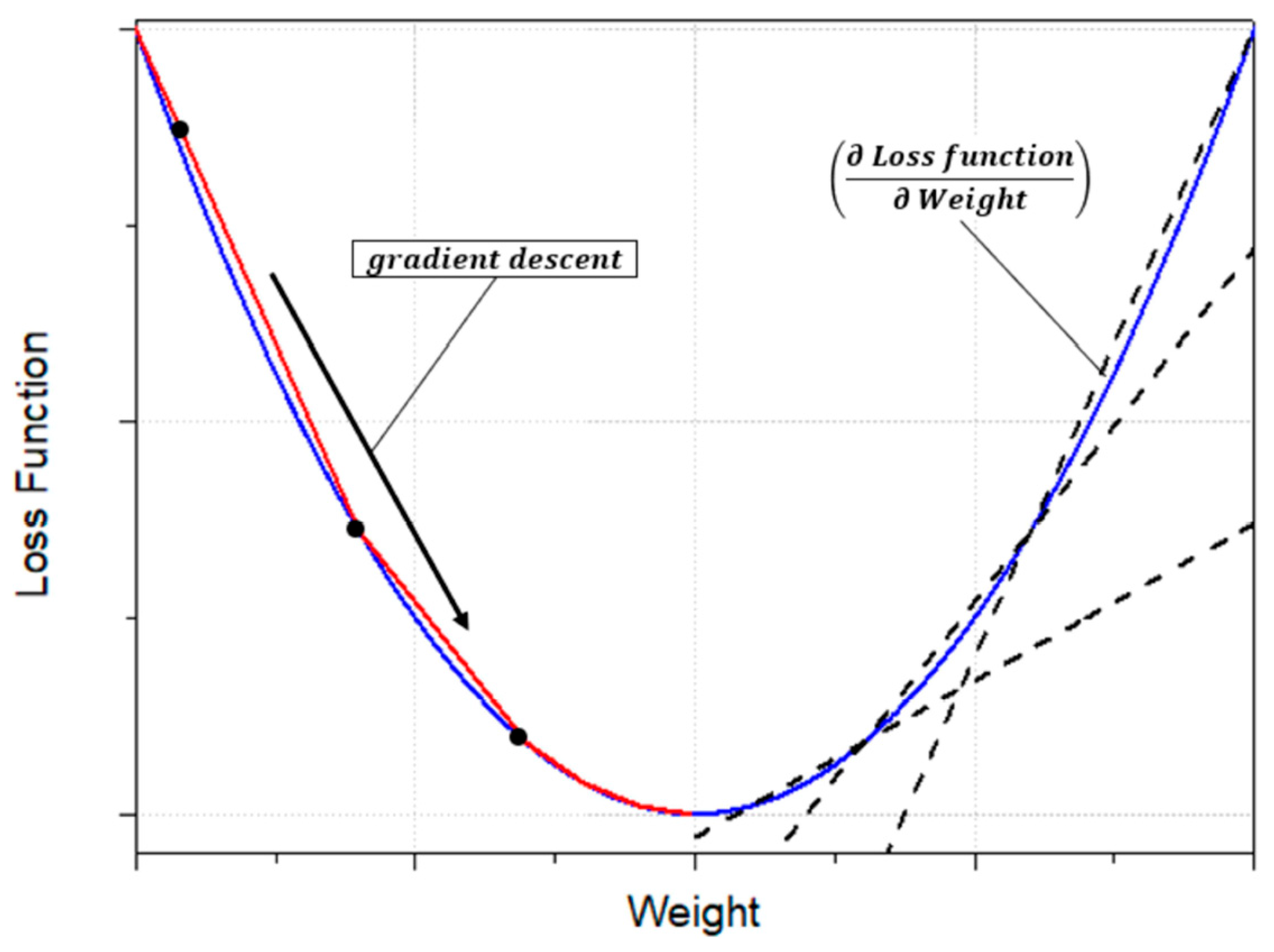

The objective of the ANN approach is to learn the optimal weights and biases such that, when the same input values are applied to both the actual nonlinear system and the ANN, the error between their outputs is minimized. Various methods for training these weights and biases have been studied, and the gradient descent algorithm is the most widely used. In the gradient descent method, the error between the ANN output and the actual system output is defined as a loss function, and the weights of the ANN are iteratively updated in the direction that decreases this loss function. This optimization process enables the ANN to approximate the nonlinear mapping between the input and output variables of the system, as shown in Figure 7.
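A minimal back-propagation sketch of this gradient-descent training is shown below. It assumes a scalar input, five sigmoid hidden neurons, a linear output, a mean-squared-error loss, and `sin(x)` as a stand-in for the unknown nonlinear system; all sizes, values, and the name `train` are illustrative rather than the configuration used in this study.

```python
import math
import random
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Logistic sigmoid, written to avoid overflow in exp()."""
    if z >= 0.0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train(xs: Sequence[float], ts: Sequence[float], n_hidden: int = 5,
          lr: float = 0.2, epochs: int = 2000, seed: int = 0) -> List[float]:
    """Fit y = sum_j v_j * sigmoid(a_j * x + c_j) + d to targets ts by full-batch
    gradient descent on L = (1 / 2N) * sum (y - t)^2. Returns the loss per epoch."""
    rng = random.Random(seed)
    a = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]  # input -> hidden weights
    c = [0.0] * n_hidden                                   # hidden biases
    v = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]  # hidden -> output weights
    d = 0.0                                                # output bias
    n = len(xs)
    history = []
    for _ in range(epochs):
        ga, gc, gv, gd = [0.0] * n_hidden, [0.0] * n_hidden, [0.0] * n_hidden, 0.0
        loss = 0.0
        for x, t in zip(xs, ts):
            h = [sigmoid(aj * x + cj) for aj, cj in zip(a, c)]
            err = sum(vj * hj for vj, hj in zip(v, h)) + d - t
            loss += 0.5 * err * err / n
            e = err / n  # dL/dy for this sample
            gd += e
            for j in range(n_hidden):
                gv[j] += e * h[j]
                # Back-propagate through v_j and the sigmoid derivative h * (1 - h)
                delta = e * v[j] * h[j] * (1.0 - h[j])
                ga[j] += delta * x
                gc[j] += delta
        history.append(loss)
        # Gradient-descent step: move every parameter against its gradient
        for j in range(n_hidden):
            a[j] -= lr * ga[j]
            c[j] -= lr * gc[j]
            v[j] -= lr * gv[j]
        d -= lr * gd
    return history


xs = [i / 10.0 for i in range(-20, 21)]
ts = [math.sin(x) for x in xs]  # stand-in nonlinear "system" mapping
hist = train(xs, ts)
print(f"initial loss {hist[0]:.4f}, final loss {hist[-1]:.4f}")
```

All gradients are accumulated over the batch before any weight changes, so the hidden-layer deltas use the same output weights that produced the error.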

The training algorithm of the ANN iteratively updates its parameters until the error is minimized. In this study, the back-propagation (BP) algorithm is employed, in which the loss function is partially differentiated with respect to the weights of each layer. This enables online updating of the weights to minimize the output error between the actual system and the ANN. Following the gradient descent method, each weight is moved in the direction opposite to the gradient of the loss function, so the gradient magnitude decreases as training converges toward the weights and biases that minimize the overall error. The weight updates computed through BP are scaled by a learning rate, which determines the magnitude of each weight adjustment. The learning rate directly controls how quickly the ANN approaches its optimal solution and therefore the convergence speed of training. Setting a large learning rate to speed up training can cause divergence or oscillation, preventing convergence to the optimal point. Conversely, a small learning rate can ensure convergence but may significantly increase the time required to reach the optimum and impose a greater computational load. Therefore, as mentioned previously, when designing an ANN it is essential to select the number of hidden layers, the number of neurons in each hidden layer, the initial weight values, and the learning rate appropriately, because these design choices have a substantial impact on the overall performance of the ANN.
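The effect of the learning rate can be seen on the simplest possible loss, a one-dimensional quadratic. This is an illustrative sketch, not the SLBPNN loss: the hypothetical function `gradient_descent_1d` minimizes L(w) = 0.5 k (w - w_opt)^2, for which every step multiplies the error by (1 - lr k).

```python
from typing import List


def gradient_descent_1d(lr: float, curvature: float = 1.0, w_opt: float = 2.0,
                        w0: float = 0.0, steps: int = 50) -> List[float]:
    """Minimize L(w) = 0.5 * curvature * (w - w_opt)^2 by gradient descent.

    Each step multiplies the error (w - w_opt) by (1 - lr * curvature), so the
    iteration converges only if 0 < lr * curvature < 2 and alternates in sign
    when lr * curvature > 1.
    """
    w = w0
    path = [w]
    for _ in range(steps):
        grad = curvature * (w - w_opt)
        w -= lr * grad
        path.append(w)
    return path


for lr in (0.05, 0.5, 1.9, 2.1):
    final_error = gradient_descent_1d(lr)[-1] - 2.0
    print(f"lr={lr}: error after 50 steps = {final_error:+.3e}")
```

With these settings, lr = 0.05 is still far from the optimum after 50 steps, lr = 0.5 converges quickly, lr = 1.9 oscillates around the optimum while slowly converging, and lr = 2.1 diverges, matching the trade-off described above.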

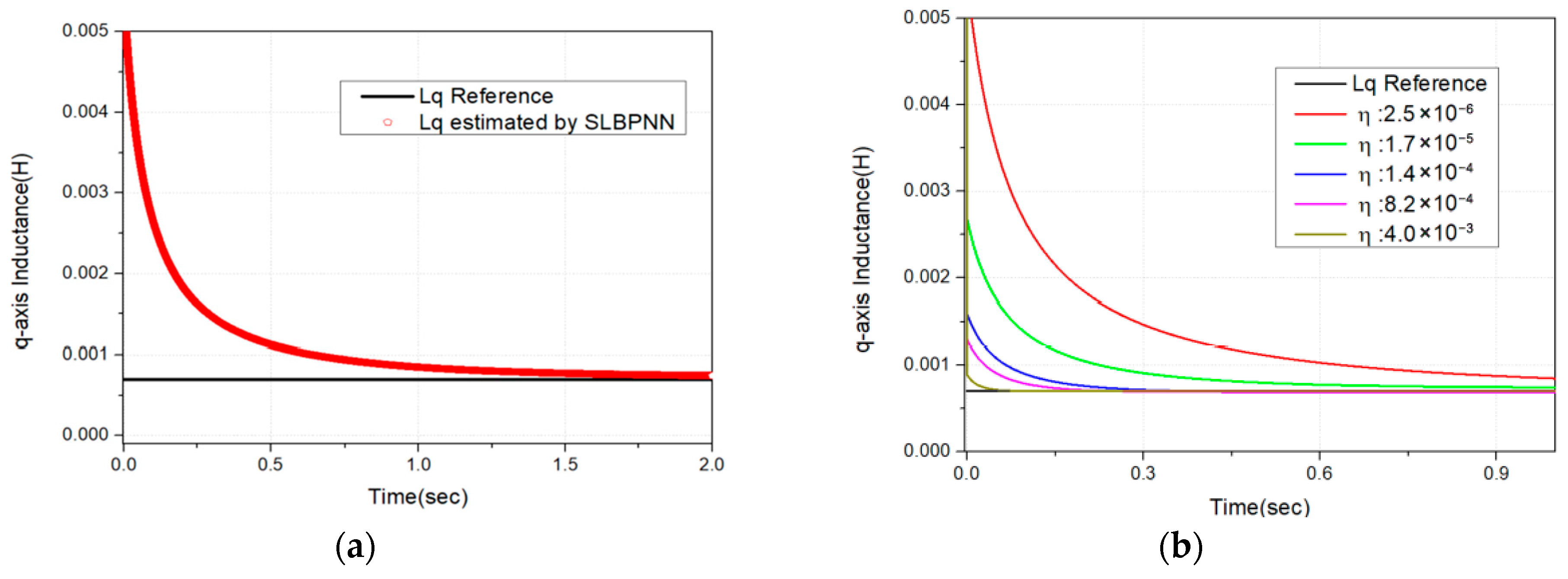

In this study, a Single-Layer Back Propagation Neural Network (SLBPNN) is employed to estimate PMSM parameters because of its relatively low computational burden, high stability, and strong estimation capability. The SLBPNN approach determines the weights by associating them with the variables to be estimated, as derived from the system state equations [19]. These weights are then optimized using the gradient descent method to minimize the difference between the actual system output and the SLBPNN output. For clarity, the system state equations used in the ANN are expressed in Equation (7).

The gradient descent method is then used to minimize the error between the actual system output and the SLBPNN output. The error function is expressed in Equation (8). When this error function is written in terms of the weights and the input values of the ANN input layer, it can be reformulated as shown in Equation (9). Substituting the system state equation of Equation (7) into this least-squares error criterion yields the expression in Equation (10). The proposed SLBPNN updates the weights by minimizing the error defined in Equation (10). This is carried out through the gradient descent method, in which the error function is partially differentiated with respect to the weights; the resulting weight update equations are presented in Equation (11).

Finally, the gradients obtained through the gradient descent method are multiplied by a predefined learning rate and used to update the weights in the SLBPNN algorithm. This process is expressed in Equation (12).
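The error-function, gradient, and update steps above can be sketched for a generic single-output model that is linear in its weights. This is an assumed form for illustration (squared-error function, plain gradient step); the exact Equations (7) to (12) are not reproduced here, and `gd_update` and `identify` are hypothetical names.

```python
import random
from typing import List, Sequence


def gd_update(w: List[float], phi: Sequence[float], y: float, lr: float) -> float:
    """One gradient-descent update for the linear-in-weights model y_hat = w . phi.

    Error function E = 0.5 * (y - y_hat)^2, so dE/dw_i = -(y - y_hat) * phi_i and
    w_i <- w_i - lr * dE/dw_i = w_i + lr * (y - y_hat) * phi_i.
    Updates w in place and returns the output error y - y_hat before the update.
    """
    e = y - sum(wi * p for wi, p in zip(w, phi))
    for i, p in enumerate(phi):
        w[i] += lr * e * p
    return e


def identify(true_w: Sequence[float], w_init: Sequence[float],
             lr: float = 0.1, steps: int = 5000, seed: int = 1) -> List[float]:
    """Adapt w so that the model output tracks a 'system' with weights true_w."""
    rng = random.Random(seed)
    w = list(w_init)
    for _ in range(steps):
        phi = [rng.uniform(-1.0, 1.0) for _ in true_w]  # excitation (regressor)
        y = sum(t * p for t, p in zip(true_w, phi))     # actual system output
        gd_update(w, phi, y, lr)
    return w


print(identify(true_w=[0.9875, -0.025, 0.025], w_init=[1.0, 0.0, 0.0]))
```

Because the simulated data are noise-free and the regressors are persistently exciting, the weights converge to the true values; with measured motor data, noise and limited excitation would leave a residual error.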

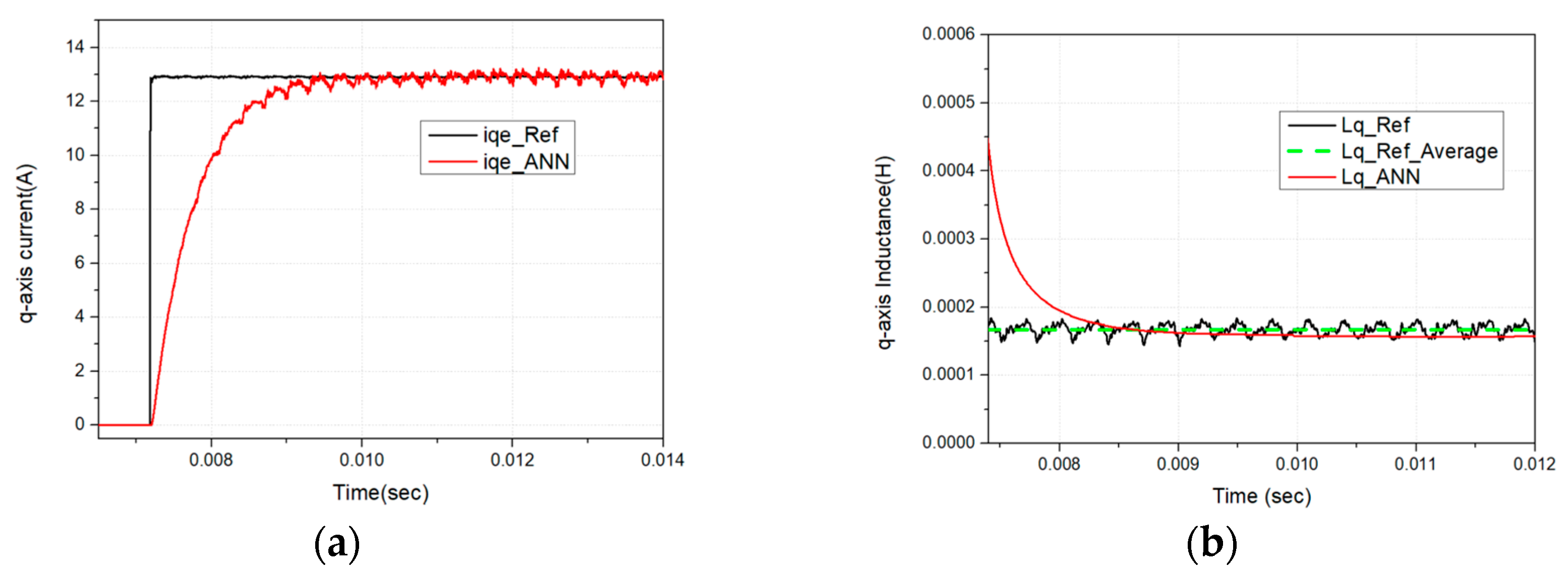

The configuration of the proposed SLBPNN algorithm is shown in Figure 8. The primary objective is to estimate PMSM parameters using the SLBPNN framework. To achieve this, state variables with significant influence on PMSM parameter estimation are initially preselected through offline simulation. The SLBPNN is then trained to minimize the error between the system output corresponding to these variables and the output of the neural network, thereby enabling accurate parameter estimation. In particular, for PMSM q-axis inductance estimation, the pre-selected input and output variables are determined by applying the previously described discrete mathematical model of the PMSM to the SLBPNN, which serves as the basis for the parameter estimation process.

In the proposed approach, the input neurons of the artificial neural network are selected as the d- and q-axis voltages in the d-q reference frame and the electrical angular velocity of the PMSM, while the outputs are defined as the d- and q-axis currents and the electrical angular velocity. When the PMSM operates at a constant speed and maintains constant current, if the ANN outputs for the d- and q-axis currents match the corresponding outputs of the PMSM current PI controller, it can be inferred that the SLBPNN weights effectively represent the actual PMSM parameters. Therefore, in this study, the SLBPNN weights are designed to track the actual motor parameters. To apply the target motor parameters to the SLBPNN algorithm, the previously derived state equations of the PMSM and the mechanical equations of the motor are rearranged in terms of the d–q axis currents and rotor speed, as expressed in the following Equation (13).

In Equation (13), $i_d$ denotes the d-axis current, $i_q$ represents the q-axis current, $R_s$ is the stator resistance, $L_d$ ($L_q$) denotes the d-axis (q-axis) motor inductance, $\omega_e$ indicates the electrical angular speed of the motor, and $v_d$ and $v_q$ represent the d-axis and q-axis voltages, respectively. Furthermore, $\psi_f$ denotes the stator flux linkage, $P$ represents the number of pole pairs, $B$ is the viscous friction coefficient, and $J$ denotes the moment of inertia of the motor. In this study, a motor with relatively small differences between the d-axis and q-axis inductances is considered. Under the assumption that the d-axis current is controlled to zero and the viscous friction coefficient $B$ is negligibly small, the load torque $T_L$, the q-axis current $i_q$, and the electrical angular speed of the motor $\omega_e$ are selected as the input variables. This relationship can be expressed mathematically as shown in Equation (14).
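How a weight tied to a motor parameter can track that parameter under these assumptions (zero d-axis current, negligible friction, constant speed) can be sketched with a deliberately simplified single-weight model rather than the nine-neuron SLBPNN of this study. The sketch assumes a forward-Euler discretization of the q-axis voltage equation, known stator resistance and flux linkage, a sinusoidal q-axis voltage as excitation, and hypothetical motor values; the weight starts from a pre-measured inductance, in the spirit of initializing the weights from measured parameters. `estimate_lq` is a hypothetical name.

```python
import math


def estimate_lq(lq_true: float, lq_init: float, rs: float = 0.5, psi_f: float = 0.1,
                we: float = 100.0, ts: float = 1e-4, steps: int = 20000,
                lr: float = 0.05) -> float:
    """Estimate the q-axis inductance online, assuming i_d = 0 and constant speed.

    q-axis voltage equation with i_d = 0, discretized by forward Euler:
        i_q[k+1] - i_q[k] = w * phi[k],  w = Ts / L_q,
        phi[k] = v_q[k] - R_s * i_q[k] - w_e * psi_f
    R_s and psi_f are assumed known; the single weight w is adapted by
    gradient descent on E = 0.5 * e^2 and L_q is recovered as Ts / w.
    """
    w_true = ts / lq_true  # weight of the simulated "actual" motor
    w = ts / lq_init       # initial weight from the pre-measured L_q
    iq = 0.0
    for k in range(steps):
        vq = 12.0 + 2.0 * math.sin(2.0 * math.pi * 50.0 * k * ts)  # excitation
        phi = vq - rs * iq - we * psi_f
        iq_next = iq + w_true * phi       # measured response of the motor
        e = (iq_next - iq) - w * phi      # model output error
        w += lr * e * phi                 # gradient-descent weight update
        iq = iq_next
    return ts / w


# Nominal L_q = 5 mH; the actual q-axis inductance has dropped by 20% to 4 mH.
print(f"estimated L_q = {estimate_lq(lq_true=4e-3, lq_init=5e-3) * 1e3:.4f} mH")
```

Setting i_d = 0 removes the cross-coupling term from the q-axis equation, which is what reduces the problem to a single unknown weight in this simplified sketch.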

Subsequently, by substituting Equation (14) into the SLBPNN system state Equations (7) and (8), the matrices A and B, which contain the motor parameter components, can be derived. By organizing the elements of the A and B matrices, each element can be expressed explicitly as a function of the motor parameters, and the motor parameters can in turn be calculated from these matrix components. When the SLBPNN system equations, which incorporate the motor parameters as constituent elements, are expressed in terms of the error function and gradient vector defined in Equation (9), the gradient vector can be derived as shown in Equation (15).
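As an illustration of how matrix elements map to motor parameters and back, the sketch below assumes a forward-Euler discretization with state [i_q, ω_e], input [v_q, T_L], zero d-axis current, negligible viscous friction, and torque T_e = 1.5 P ψ_f i_q. This state/input choice, the discretization, and the 1.5 factor are assumptions, so the resulting element relations are not necessarily the ones in Equations (14) and (15); `MotorParams`, `to_matrices`, and `from_matrices` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple

Matrix = List[List[float]]


@dataclass
class MotorParams:
    rs: float     # stator resistance [ohm]
    lq: float     # q-axis inductance [H]
    psi_f: float  # flux linkage [Wb]
    j: float      # moment of inertia [kg*m^2]
    p: int        # number of pole pairs


def to_matrices(m: MotorParams, ts: float) -> Tuple[Matrix, Matrix]:
    """Forward-Euler model x[k+1] = A x[k] + B u[k] with x = [i_q, w_e], u = [v_q, T_L],
    assuming i_d = 0, negligible viscous friction, and T_e = 1.5 * P * psi_f * i_q."""
    a = [[1.0 - ts * m.rs / m.lq, -ts * m.psi_f / m.lq],
         [1.5 * m.p ** 2 * m.psi_f * ts / m.j, 1.0]]
    b = [[ts / m.lq, 0.0],
         [0.0, -m.p * ts / m.j]]
    return a, b


def from_matrices(a: Matrix, b: Matrix, ts: float, p: int) -> MotorParams:
    """Invert the element relations above to recover the motor parameters."""
    lq = ts / b[0][0]               # b11 = Ts / Lq
    rs = (1.0 - a[0][0]) / b[0][0]  # a11 = 1 - Ts * Rs / Lq
    psi_f = -a[0][1] / b[0][0]      # a12 = -Ts * psi_f / Lq
    j = -p * ts / b[1][1]           # b22 = -P * Ts / J
    return MotorParams(rs=rs, lq=lq, psi_f=psi_f, j=j, p=p)


motor = MotorParams(rs=0.5, lq=4e-3, psi_f=0.1, j=1e-3, p=4)
a, b = to_matrices(motor, ts=1e-4)
print(from_matrices(a, b, ts=1e-4, p=4))
```

The element a21 is not needed for the inversion here, but it offers a consistency check: ψ_f can also be computed from a21 once J is known.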

The system output values are selected as the d- and q-axis current outputs of the current PI controller, and the ANN inputs are composed of the d- and q-axis currents, the PMSM electrical angular velocity, the d- and q-axis voltages, and the load torque. The loss function used in the back-propagation algorithm, which is implemented via gradient descent, is defined as the error between the d- and q-axis output currents of the VSI current controller and those produced by the ANN. The learning rate is tuned to ensure convergence toward the point where the loss function is minimized, and the initial weights are set to the PMSM parameter values measured in advance. The number of hidden layers and the number of neurons in a neural network can be chosen by the designer according to the characteristics of the system. There is no fixed rule, and these parameters are typically selected empirically to balance model accuracy and computational efficiency. According to the universal approximation theorem of Hornik et al., a feedforward network with a single hidden layer and a sufficient number of neurons can approximate any continuous function on a compact domain to arbitrary accuracy. Based on this theoretical foundation, the proposed SLBPNN is designed with one hidden layer. The number of neurons in the hidden layer was determined through off-line simulations and by referring to empirical formulas presented in previous studies. In these studies, the number of input neurons $n_i$ and the number of output neurons $n_o$ were used as parameters in an empirical formula to determine the appropriate number of neurons $n_h$ in the hidden layer. D. Gao [22] derived such an empirical formula, shown in Equation (16), to determine the number of neurons in the hidden layer and to establish a correlation among the numbers of input, output, and hidden neurons.

In Equation (16), $n_h$ denotes the number of neurons in the hidden layer, $n_i$ represents the number of neurons in the input layer, and $n_o$ is the number of neurons in the output layer. J. W. Jiang [19] subsequently applied this empirical formula to estimate the optimal number of hidden neurons for PMSM parameter identification. That study demonstrated that the estimation error decreases as the number of neurons in a single hidden layer increases; however, beyond approximately 11 neurons, the further reduction in error becomes negligible and the error remains nearly constant. Although increasing the number of hidden neurons reduces the error, it also increases the computational load on the CPU. Based on these findings, nine hidden neurons were selected in this study through iterative off-line simulations to balance estimation error against computational burden, thereby optimizing the PMSM parameter estimation performance.
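The computational side of this trade-off can be quantified with a simple operation count for one forward pass of a fully connected single-hidden-layer network. The sketch assumes six inputs (d- and q-axis currents, electrical angular velocity, d- and q-axis voltages, load torque) and two outputs (d- and q-axis currents), as listed above; it ignores the back-propagation update, which adds further work that also grows with the number of hidden neurons. `forward_cost` is a hypothetical name.

```python
from typing import Dict


def forward_cost(n_i: int, n_h: int, n_o: int) -> Dict[str, int]:
    """Per-sample operation count for one forward pass of a fully connected network
    with a single hidden layer (weights plus biases, no shared operations)."""
    weights = n_h * n_i + n_o * n_h
    return {
        "multiplications": weights,
        # each neuron sums its weighted inputs (fan_in - 1 additions) and adds a bias
        "additions": weights,
        "activations": n_h,  # one sigmoid evaluation per hidden neuron
    }


for n_h in (5, 9, 11, 15):
    print(n_h, forward_cost(n_i=6, n_h=n_h, n_o=2))
```

The cost grows linearly with the number of hidden neurons, so each additional neuron costs the same amount of computation even after the estimation error has stopped improving.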

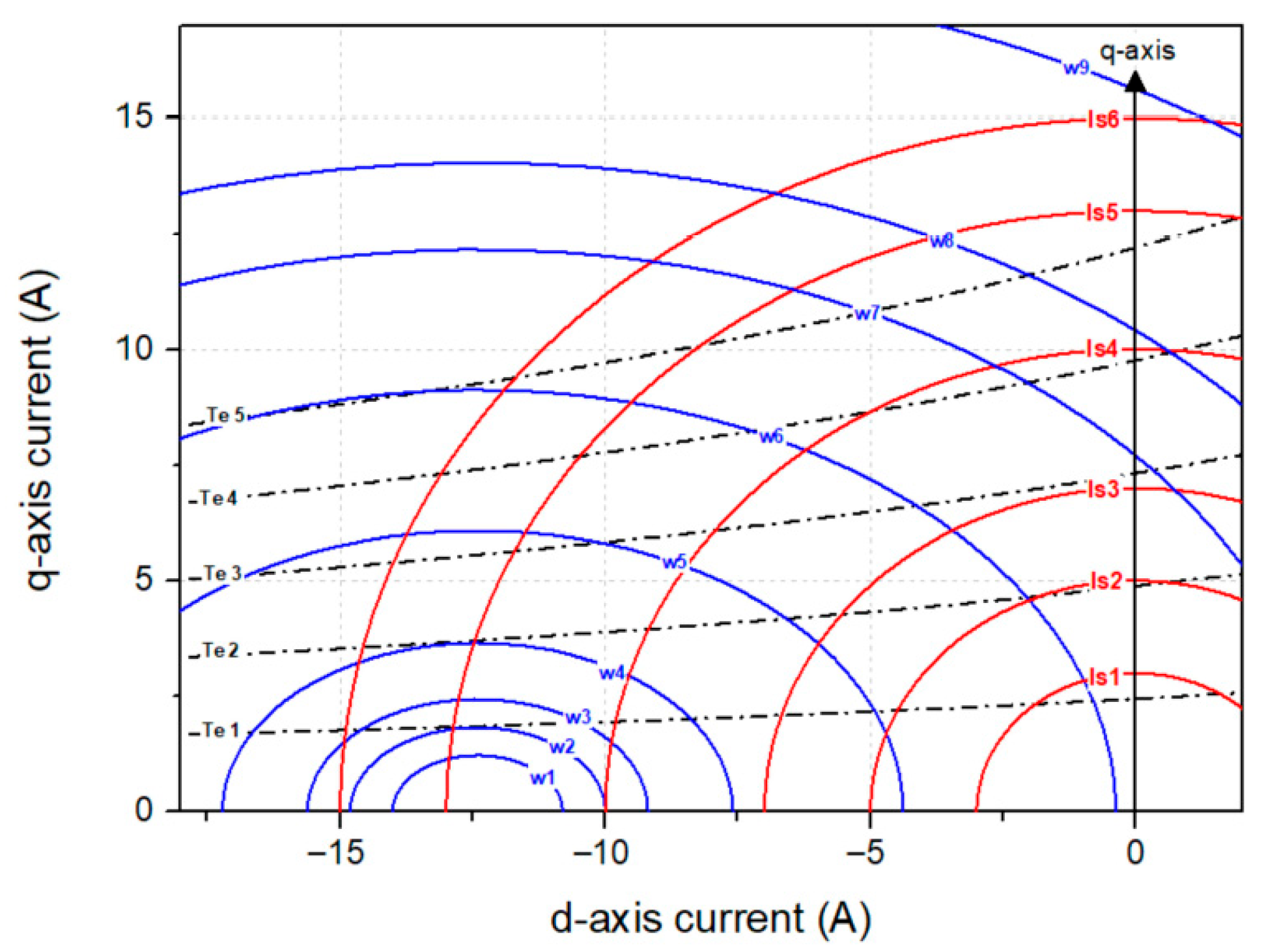

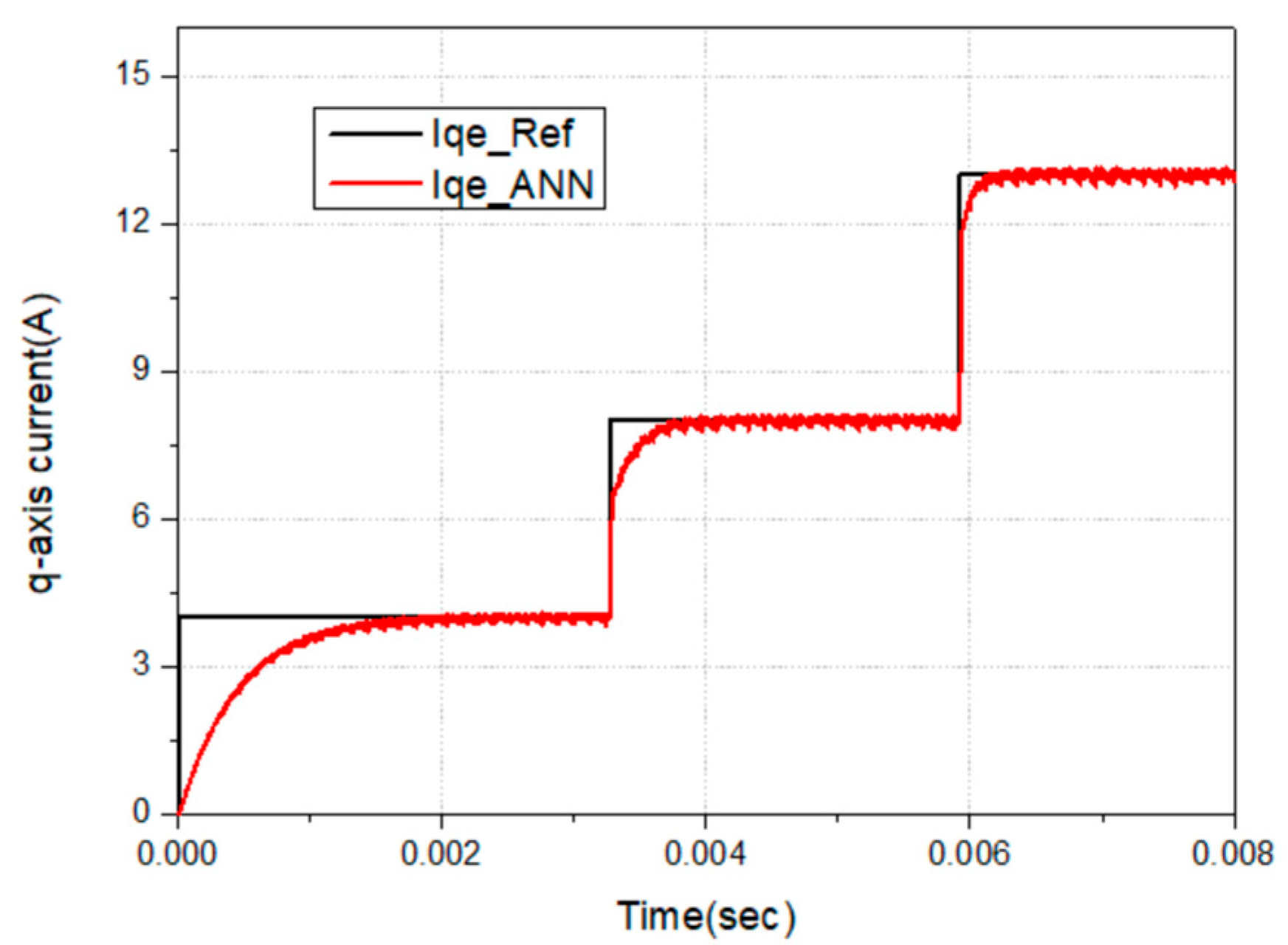

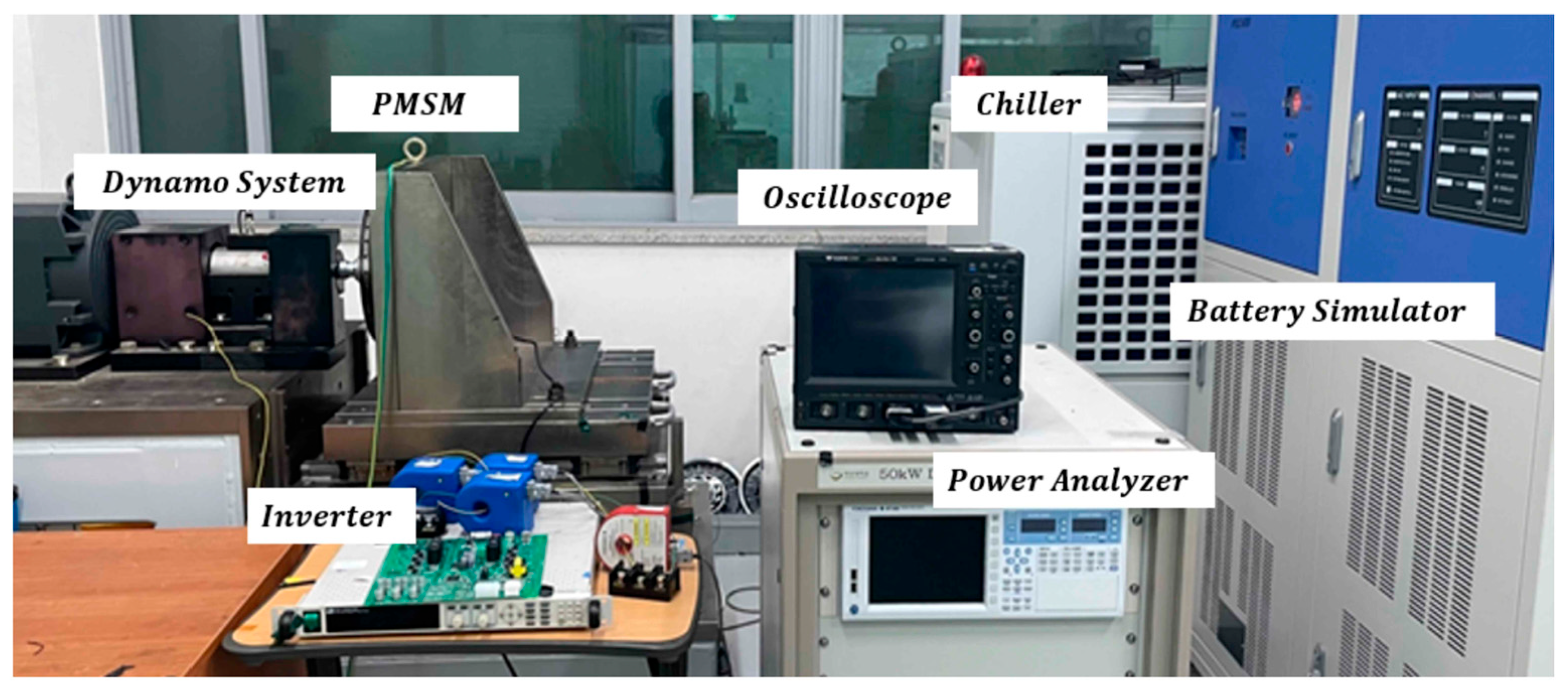

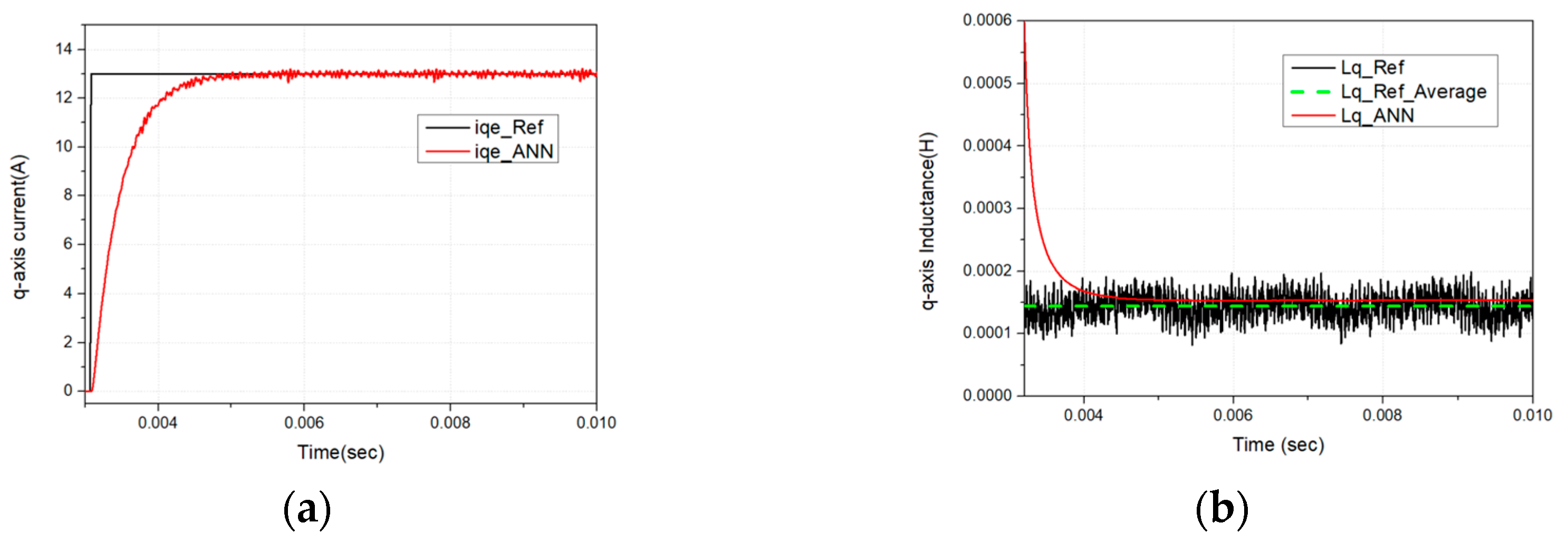

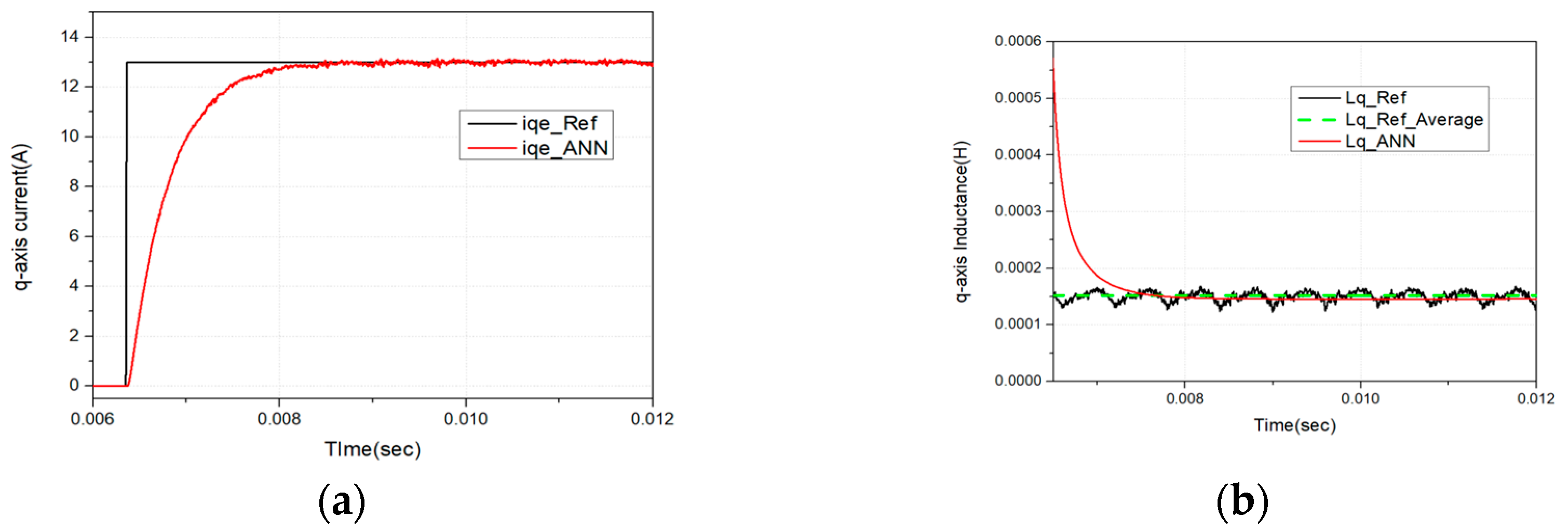

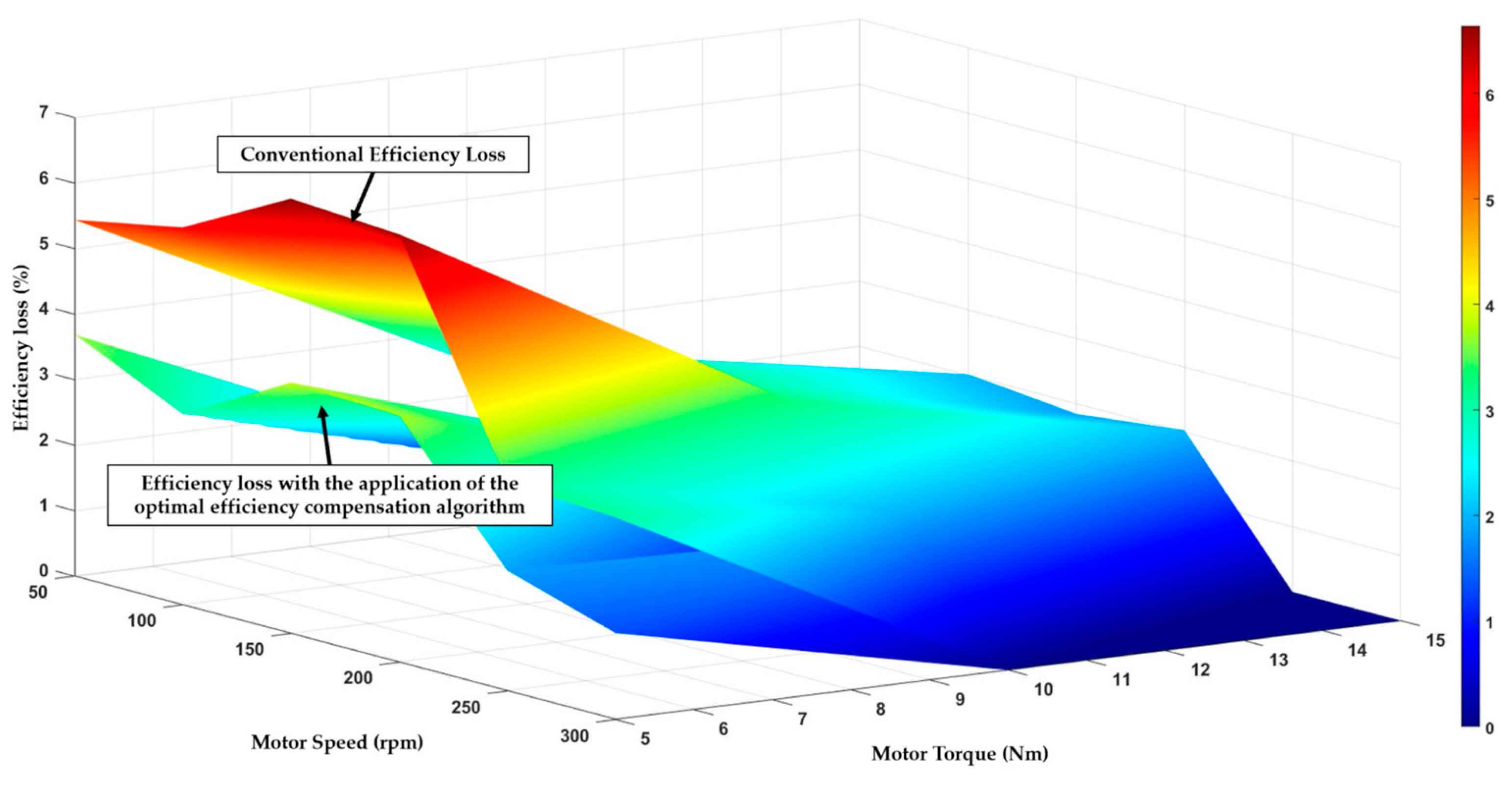

4.2. Proposed PMSM Operating Point Compensation Method

In this study, an algorithm is proposed to optimize motor control efficiency by utilizing PMSM parameters estimated through a neural network. Accordingly, Section 4 describes the method by which the estimated PMSM parameters are utilized to enhance the efficiency of motor control. The proposed algorithm can be divided into four main parts, as shown in Figure 9. Part 1 generates the d- and q-axis current references for torque tracking by utilizing the flux–torque look-up table (LUT) described in the previous section [23]. Part 2 consists of the current controller, which receives the current references from the LUT and regulates the motor currents accordingly. Part 3 estimates the PMSM parameters using the Single Layer Back Propagation Neural Network (SLBPNN) algorithm and produces compensated flux and torque references based on the updated parameter values. Part 4 implements an outer-loop control algorithm that compensates the flux reference during overmodulation, that is, when the VSI output voltage demand exceeds the maximum available modulation voltage [24,25].

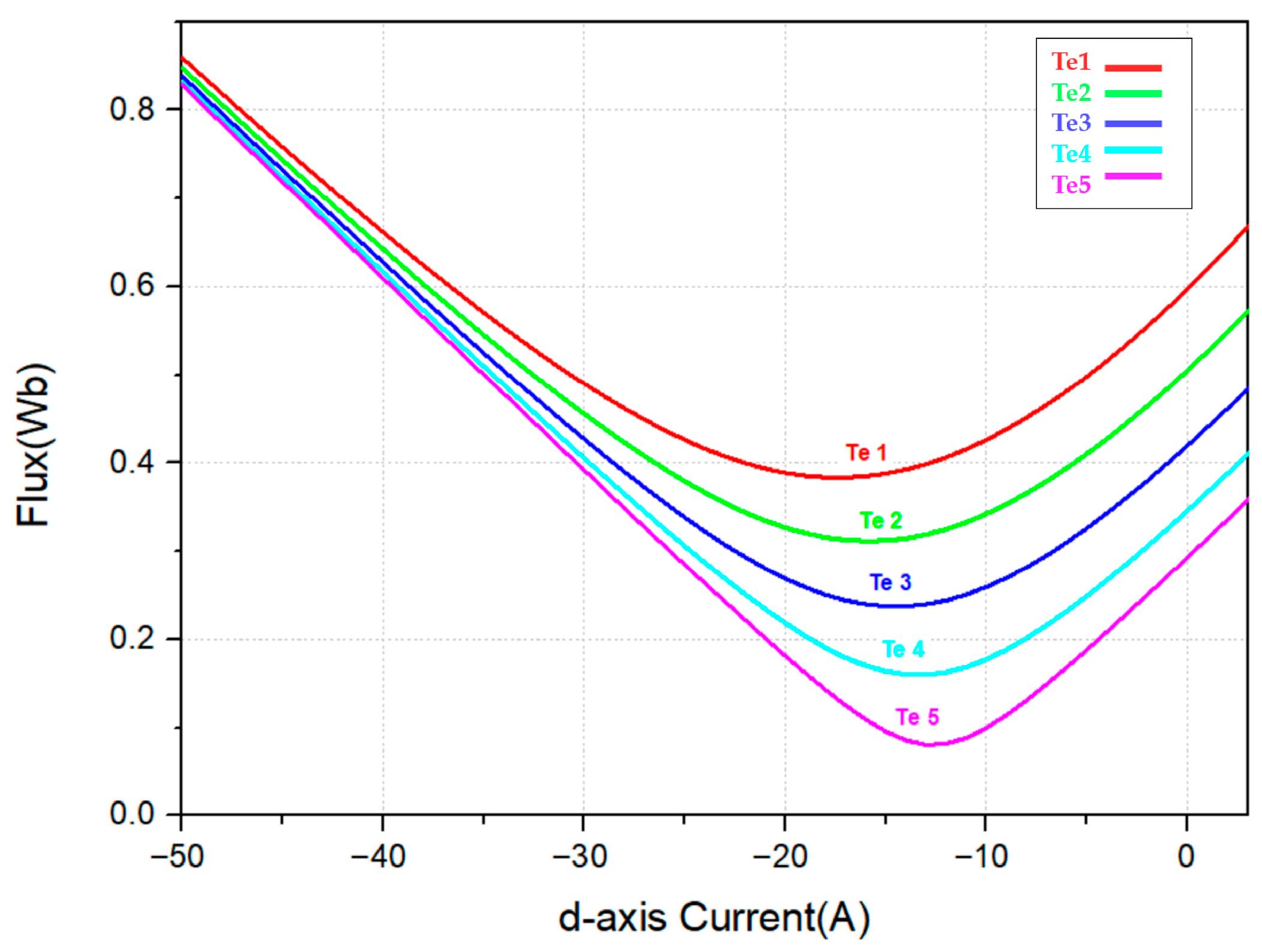

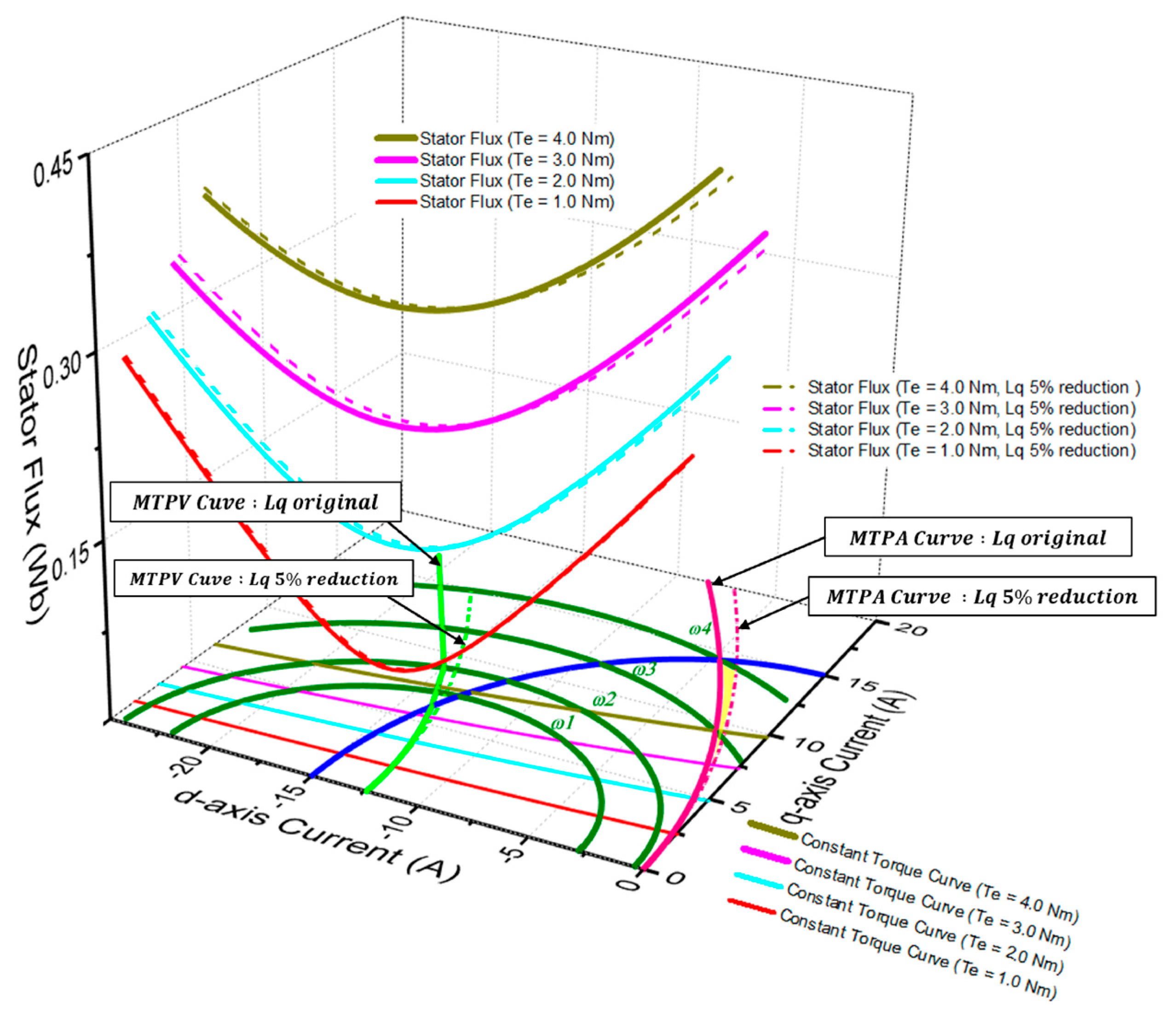

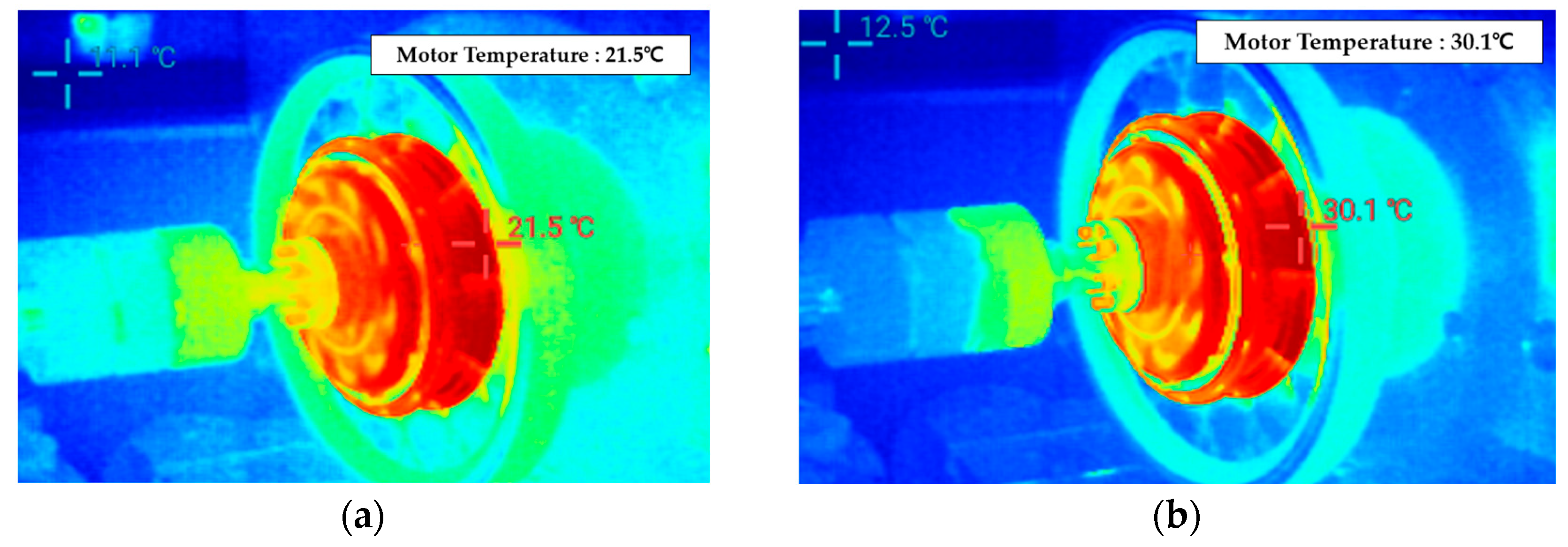

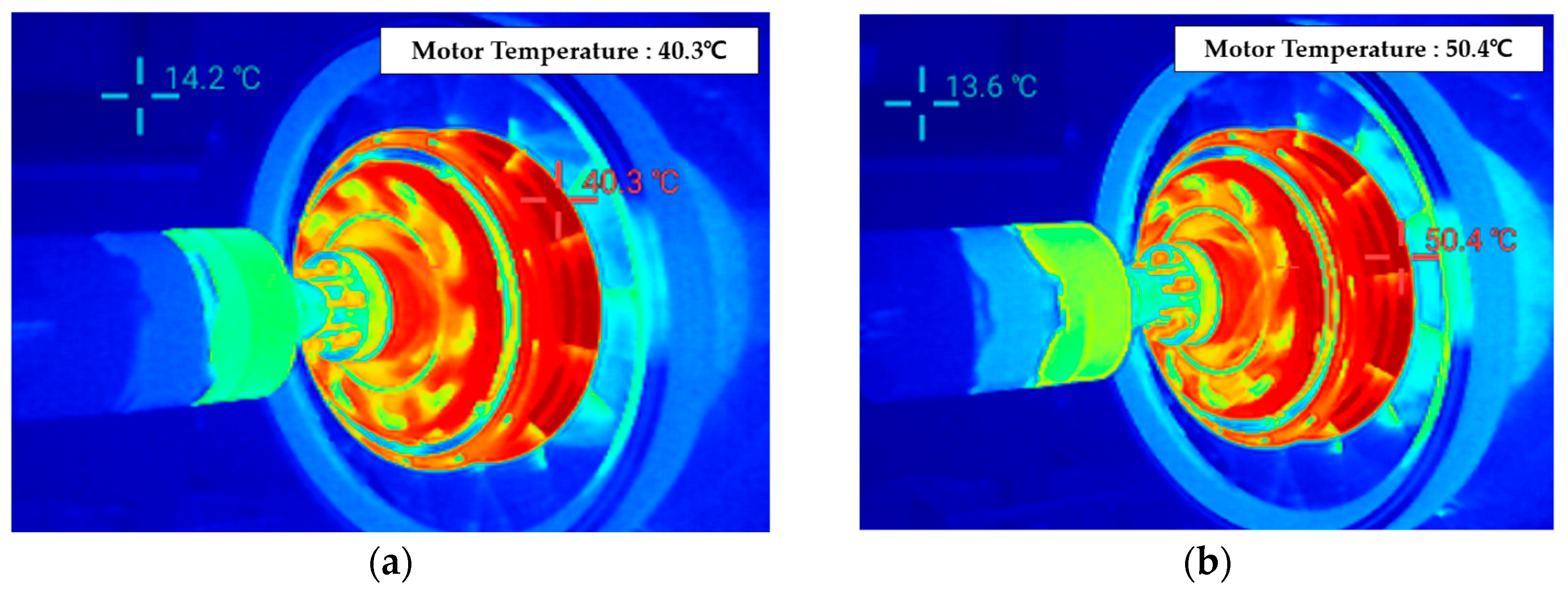

The compensation strategy proposed in this study aims to generate compensated torque and flux reference values that enable optimal operation under parameter variations. Specifically, when parameter deviations occur, the algorithm adjusts the current flux–torque references to maintain optimal operating conditions. The mathematical formulation for the torque reference compensation is presented in Equation (17).

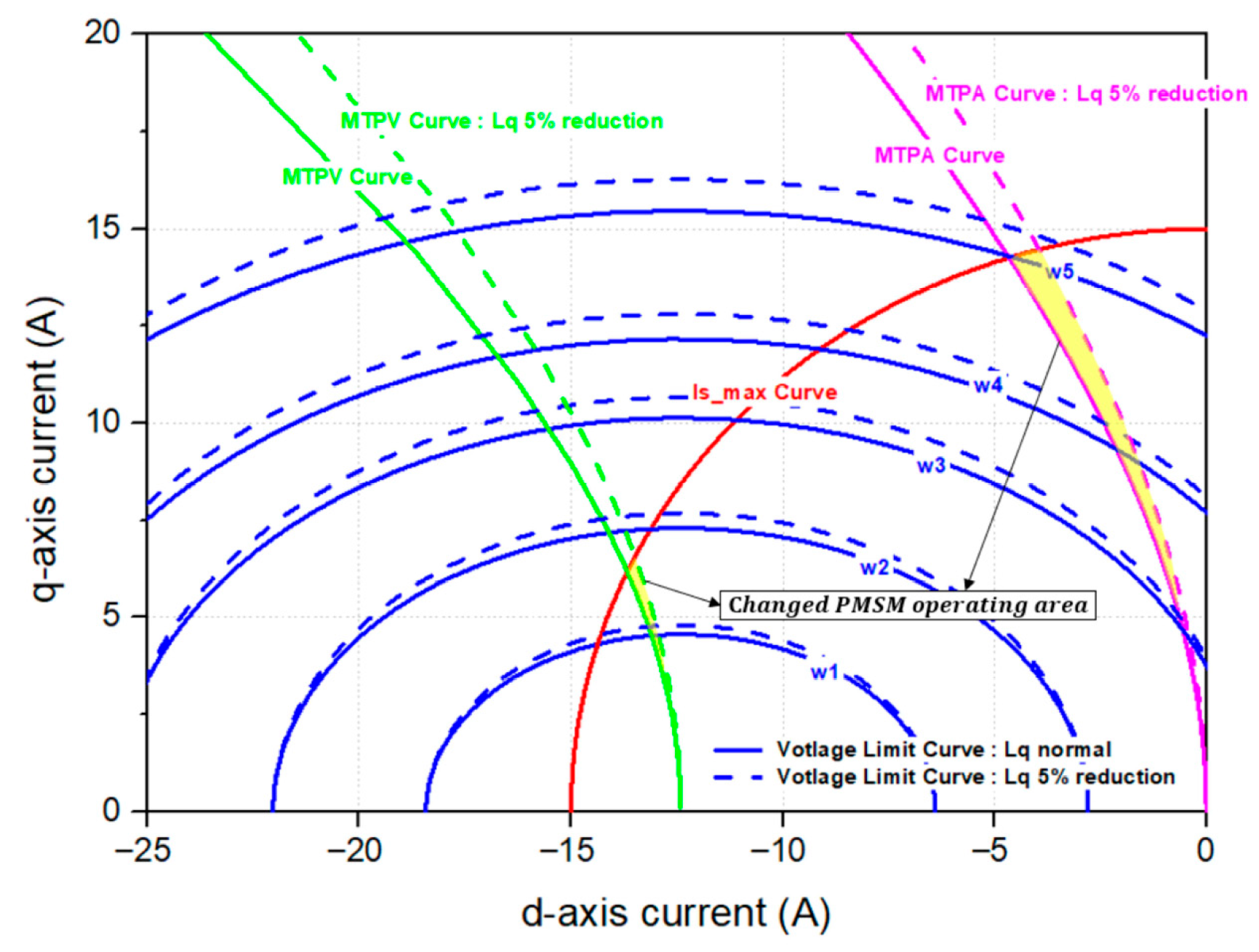

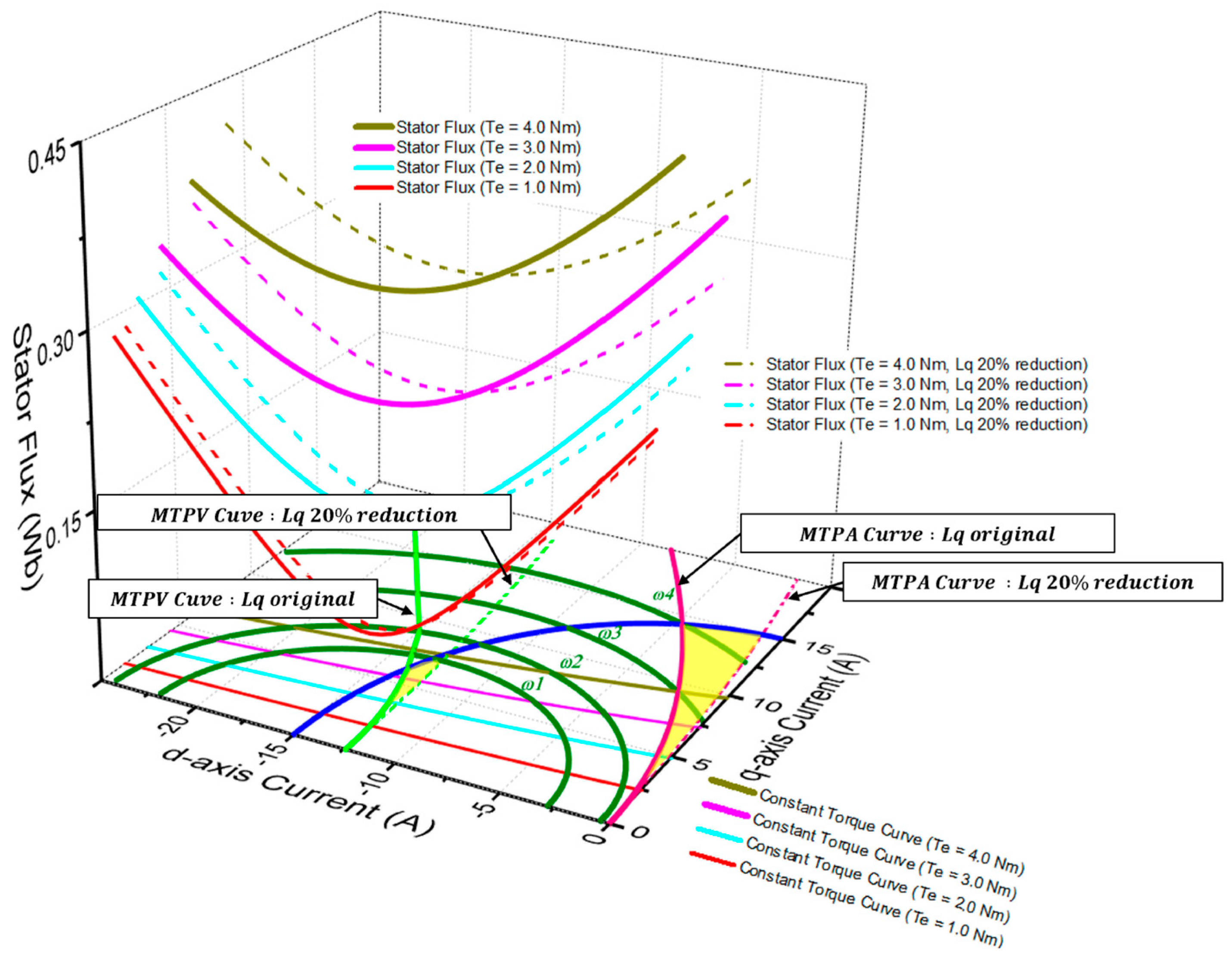

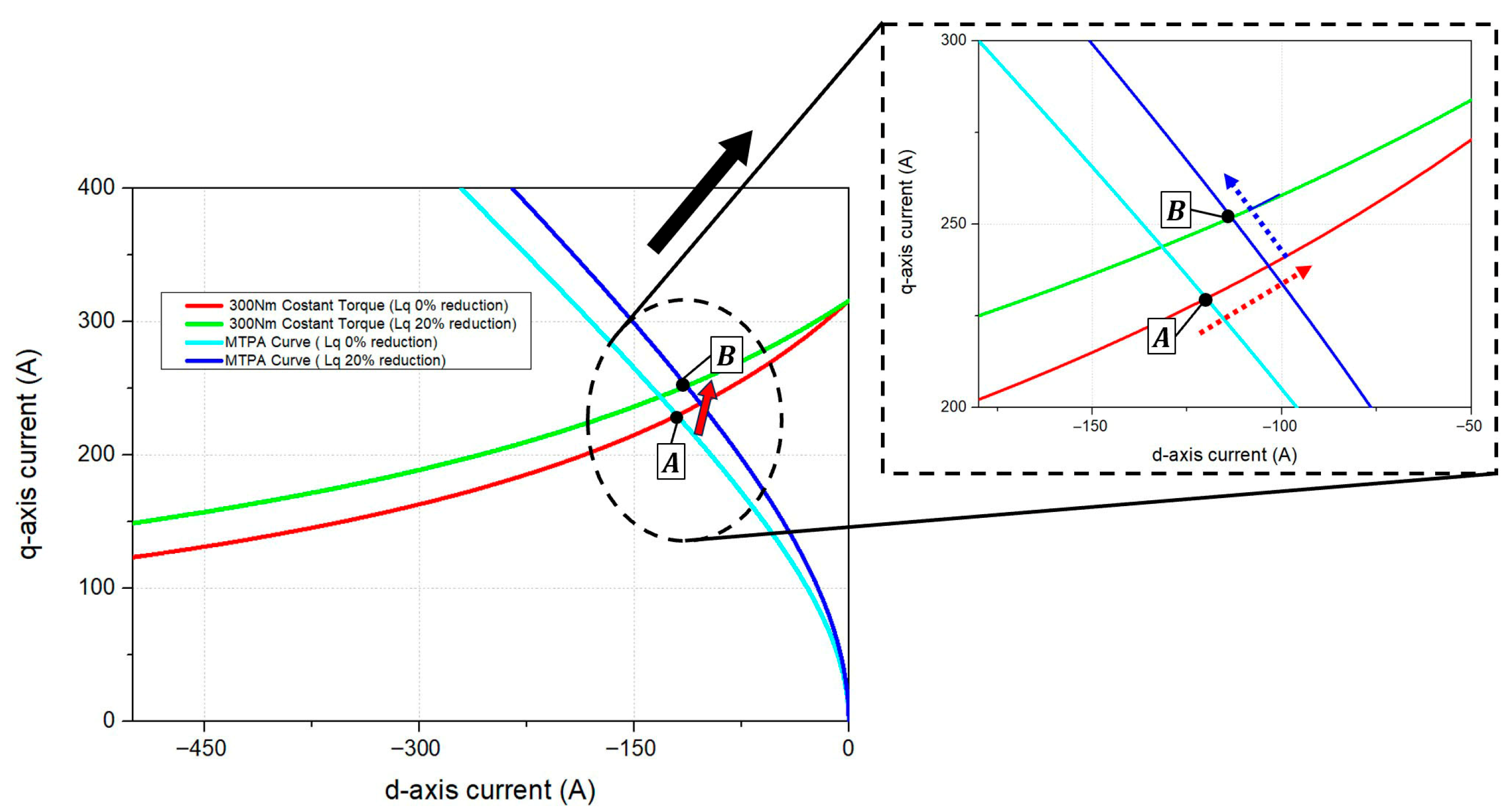

Figure 10 illustrates the change in the MTPA curve and the constant-torque curve on the d-q current plane when parameter variations occur. When the q-axis inductance decreases by 20%, the operating point must shift from point A to point B in Figure 10 to produce the same torque reference. However, the conventional flux–torque control algorithm keeps operating at point A under parameter variations, which, as confirmed in the previous section, reduces the output torque and correspondingly decreases efficiency. In contrast, the strategy proposed in this study estimates the changed parameter values using the SLBPNN-based method in Part 3 of the control block diagram shown in Figure 9. Based on the estimated parameters, the torque reference compensation value is calculated using Equation (17).
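Why holding point A loses torque can be checked with the standard dq-frame torque equation of a salient PMSM, assumed here because Equation (17) is not reproduced in this section. With hypothetical values L_d = 3 mH, L_q = 5 mH, ψ_f = 0.1 Wb, P = 4, and point A at i_d = -5 A, i_q = 10 A, the reluctance term (L_d - L_q) i_d i_q shrinks when L_q drops by 20%, so the same currents produce less torque. `pmsm_torque` is a hypothetical name.

```python
def pmsm_torque(p: int, psi_f: float, ld: float, lq: float,
                i_d: float, i_q: float) -> float:
    """dq-frame PMSM torque: T_e = 1.5 * P * (psi_f * i_q + (L_d - L_q) * i_d * i_q)."""
    return 1.5 * p * (psi_f * i_q + (ld - lq) * i_d * i_q)


# Hypothetical machine and operating point A (i_d < 0, i_q > 0).
P, PSI_F, LD, LQ = 4, 0.1, 3e-3, 5e-3
I_D_A, I_Q_A = -5.0, 10.0

t_nominal = pmsm_torque(P, PSI_F, LD, LQ, I_D_A, I_Q_A)
t_actual = pmsm_torque(P, PSI_F, LD, 0.8 * LQ, I_D_A, I_Q_A)  # L_q down by 20%
print(f"torque at A: nominal {t_nominal:.2f} N*m, with reduced L_q {t_actual:.2f} N*m")
```

In this example, the magnet torque is unchanged and only the reluctance contribution falls (from 0.6 to 0.3 N*m), which is the torque shortfall the compensation must recover.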

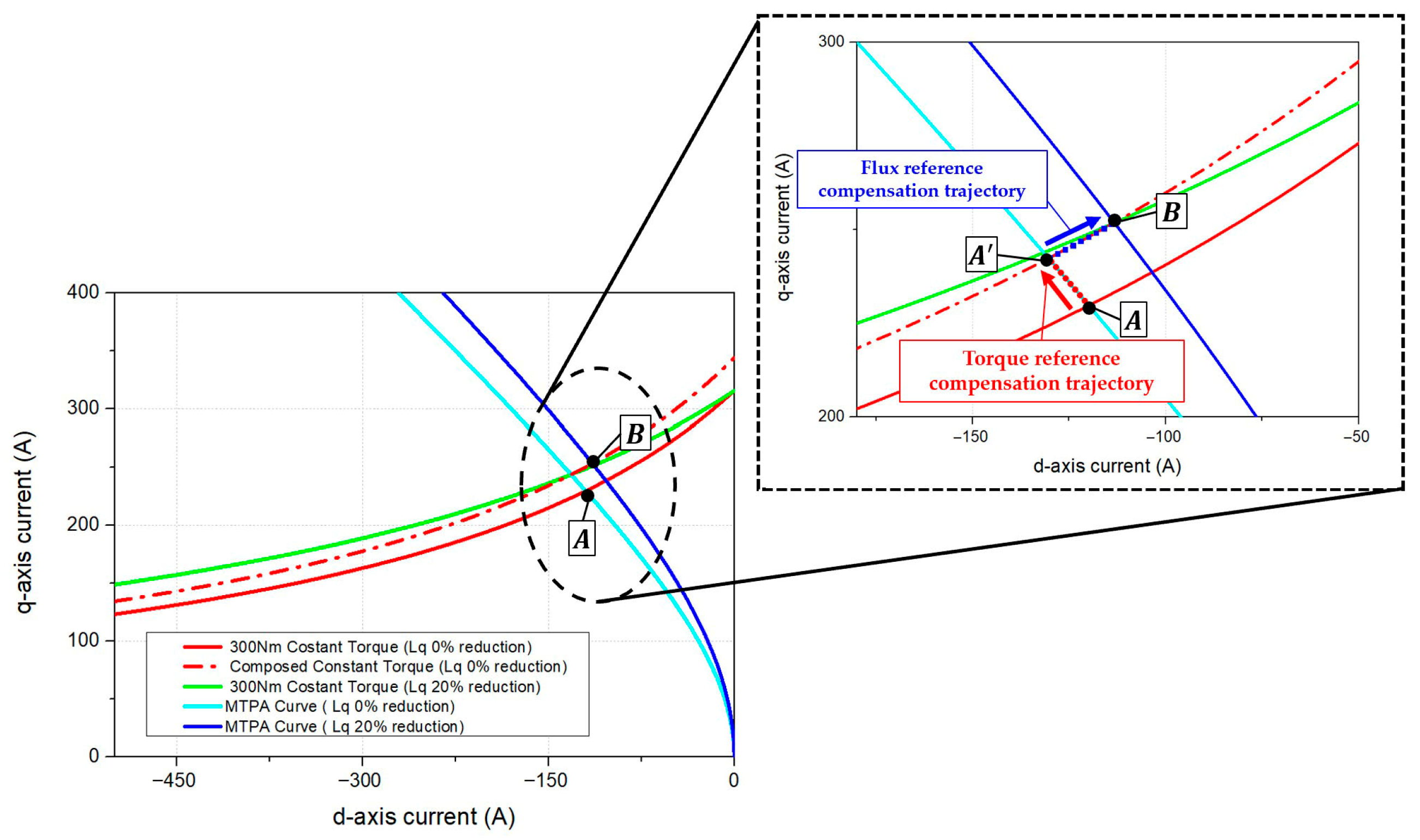

Figure 10 also illustrates the trajectory of the operating point on the d-q current plane when the proposed torque reference compensation is applied. The torque curve in the LUT of the conventional algorithm with compensation applied is shown as a red dashed line in Figure 10. When torque compensation is applied at point A, the operating point shifts to the right while maintaining the same flux value, thereby compensating for the torque reference. However, torque compensation alone is insufficient to reach point B, which corresponds to the actual target torque under parameter variations. The proposed flux reference compensation strategy, also shown in Figure 9, is therefore described next. The flux compensation value is calculated by analytically determining the required d-axis current reference under parameter variations, using the q-axis current reference and the compensated torque reference obtained from the LUT with torque compensation. The corresponding mathematical expressions are given in Equations (18) and (19). As mentioned in the previous section, the operating region that achieves the maximum torque for a given current magnitude is defined as the MTPA region. Furthermore, the torque produced by different current combinations affects the efficiency of the system. Therefore, the stator flux reference compensation must be executed while satisfying the MTPA conditions. To compute the flux reference compensation value, the PMSM torque equation and the normalized forms of the d- and q-axis current references are expressed in Equation (18).
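A common normalization of this kind can be sketched as follows, assuming the base current I_b = ψ_f / (L_q - L_d) and the base torque T_b = 1.5 P ψ_f I_b; the 1.5 factor and the hypothetical machine values are assumptions, and Equation (18) itself is not reproduced here. With these bases, T_e = 1.5 P [ψ_f i_q - (L_q - L_d) i_d i_q] = T_b i_qn (1 - i_dn), so the normalized torque no longer depends on the machine constants. `base_values` and `normalized_torque` are hypothetical names.

```python
from typing import Tuple


def base_values(p: int, psi_f: float, ld: float, lq: float) -> Tuple[float, float]:
    """Base current I_b = psi_f / (L_q - L_d) and base torque T_b = 1.5 * P * psi_f * I_b."""
    i_base = psi_f / (lq - ld)
    return i_base, 1.5 * p * psi_f * i_base


def normalized_torque(i_dn: float, i_qn: float) -> float:
    """With the bases above, T_n = T_e / T_b reduces to i_qn * (1 - i_dn)."""
    return i_qn * (1.0 - i_dn)


P, PSI_F, LD, LQ = 4, 0.1, 3e-3, 5e-3  # hypothetical machine
i_b, t_b = base_values(P, PSI_F, LD, LQ)
i_d, i_q = -5.0, 10.0
t_e = 1.5 * P * (PSI_F * i_q + (LD - LQ) * i_d * i_q)
print(i_b, t_b, t_e / t_b, normalized_torque(i_d / i_b, i_q / i_b))
```

Both printed ratios agree (0.22 for this operating point), confirming that the dimensional and normalized torque expressions describe the same quantity.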

In Equation (18), the normalized q-axis current $i_{qn}$ is defined as the ratio of the q-axis current component $i_q$ to the base current $I_b$, while the normalized d-axis current $i_{dn}$ is expressed as the ratio of the d-axis current component $i_d$ to the base current $I_b$. The base current $I_b$ is determined by the ratio of the permanent magnet flux linkage $\psi_f$ to the saliency difference $L_q - L_d$. Here, $\psi_f$ denotes the permanent magnet flux linkage, and $L_d$ and $L_q$ represent the d-axis and q-axis inductances of the PMSM, respectively. The electromagnetic torque of the machine is denoted as $T_e$, and the base torque, which serves as the normalization factor for torque, is typically given by $T_b = \frac{3}{2} P \psi_f I_b$. Consequently, the normalized torque $T_n$ is defined as the ratio of $T_e$ to $T_b$. To analytically determine the maximum output torque for a given stator current magnitude, the relationship between the maximum torque per unit current and the d- and q-axis current references is expressed in Equation (19). From Equation (19), it can be observed that the d- and q-axis currents that produce the maximum torque per unit current satisfy a quartic equation, so four mathematical solutions exist for the currents corresponding to maximum torque [26]. However, because PMSM motoring operation is limited to the second quadrant of the d-q current plane, only one of these solutions is a valid d-q current combination. The optimal d-axis current reference is therefore derived under the MTPA condition in Equation (19): the proposed flux compensation strategy calculates it from the q-axis current reference obtained from the torque compensation process and the parameters estimated via the SLBPNN method. The calculated d-axis current reference and the torque reference are then substituted into Equation (6) from the previous section to determine the flux reference value required to achieve the optimal torque output. Furthermore, by substituting the inductance variation of the PMSM estimated through the proposed SLBPNN into Equation (6), the compensated flux reference value corresponding to the operating region altered by the parameter variation can be obtained. By applying this compensated flux value, together with the original flux reference, to the flux-based PMSM torque control look-up table (LUT), the proposed method can effectively compensate for the reduction in output efficiency caused by the parameter-induced shift in the operating region during motor operation. Ultimately, this approach enables compensation of both the d- and q-axis operating points for improved PMSM performance under varying conditions, as shown in Figure 11. Using the proposed algorithm, the effectiveness of the presented theory was verified through both simulation and experiment. The following section provides a detailed description of the simulation setup and experimental validation.
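The flux compensation chain described in this section can be sketched as follows: compute the MTPA d-axis current from the compensated q-axis reference and the estimated L_q, then form a flux reference. The sketch assumes L_q > L_d and assumes that the flux reference of Equation (6) is the standard stator flux magnitude; Equation (6) is not reproduced here, so this form is an assumption. In normalized units the MTPA condition reads i_dn^2 - i_dn - i_qn^2 = 0, and substituting T_n = i_qn (1 - i_dn) gives i_qn^4 + T_n i_qn - T_n^2 = 0, one quartic form consistent with the four-solution remark above; only the root with i_d ≤ 0 is kept. `mtpa_id`, `stator_flux`, and the machine values are hypothetical.

```python
import math


def mtpa_id(i_q: float, psi_f: float, ld: float, lq: float) -> float:
    """MTPA d-axis current for a given q-axis current (assumes L_q > L_d).

    From dT_e/d(angle) = 0 at constant |i|: psi_f*i_d + (L_d - L_q)*(i_d^2 - i_q^2) = 0.
    Keeping the root with i_d <= 0 and normalizing by I_b = psi_f / (L_q - L_d):
        i_dn = 1/2 - sqrt(1/4 + i_qn^2)
    """
    i_base = psi_f / (lq - ld)
    i_qn = i_q / i_base
    return i_base * (0.5 - math.sqrt(0.25 + i_qn * i_qn))


def stator_flux(i_d: float, i_q: float, psi_f: float, ld: float, lq: float) -> float:
    """Stator flux magnitude |psi_s| = sqrt((psi_f + L_d*i_d)^2 + (L_q*i_q)^2)."""
    return math.hypot(psi_f + ld * i_d, lq * i_q)


PSI_F, LD = 0.1, 3e-3  # hypothetical machine
i_q_ref = 10.0         # q-axis reference after torque compensation
for lq in (5e-3, 4e-3):  # nominal vs. SLBPNN-estimated L_q
    i_d_ref = mtpa_id(i_q_ref, PSI_F, LD, lq)
    print(f"L_q={lq * 1e3:.1f} mH: i_d*={i_d_ref:.3f} A, "
          f"|psi_s|*={stator_flux(i_d_ref, i_q_ref, PSI_F, LD, lq):.4f} Wb")
```

Running the two cases side by side shows how a change in the estimated L_q moves both the MTPA d-axis current and the resulting flux reference, which is the quantity the compensation feeds back into the LUT.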