Uncalibrated Adaptive Visual Servoing of Robotic Manipulators with Uncertainties in Kinematics and Dynamics

Abstract

1. Introduction

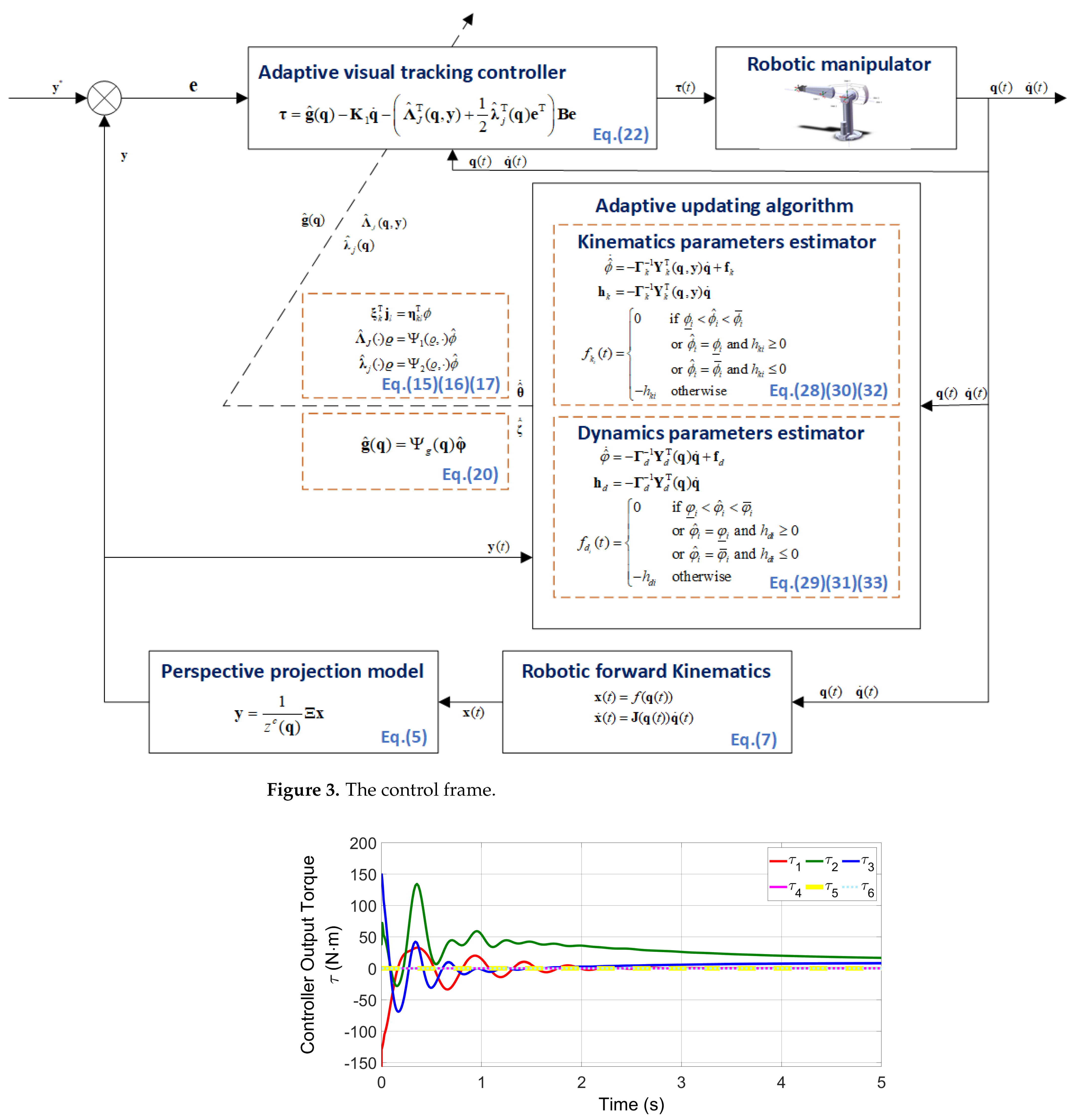

- A depth-independent composite Jacobian matrix is constructed, so that the unknown visual parameters and the robot's physical parameters appear together in a single linear parameterization and their uncertainties can be handled by adaptive estimation. Unlike existing work on adaptive uncalibrated visual servoing control, e.g., [26,27], all unknown visual servoing parameters are updated by one adaptive law, which greatly reduces the number of adaptive laws.

- An adaptive visual servoing controller is proposed for robotic manipulators in a fully uncalibrated environment. It accounts for uncertainties in both kinematics and dynamics, so all data and parameters used by the controller are easy to obtain. In particular, the robot's physical parameters used in the proposed controller can be obtained by rough visual estimation, rather than the precise measurement required in [22,23]. In addition, unlike the related methods [25,27], the proposed controller accounts for the uncertain dynamics caused by the gravitational parameters and compensates for the gravity of the manipulator.

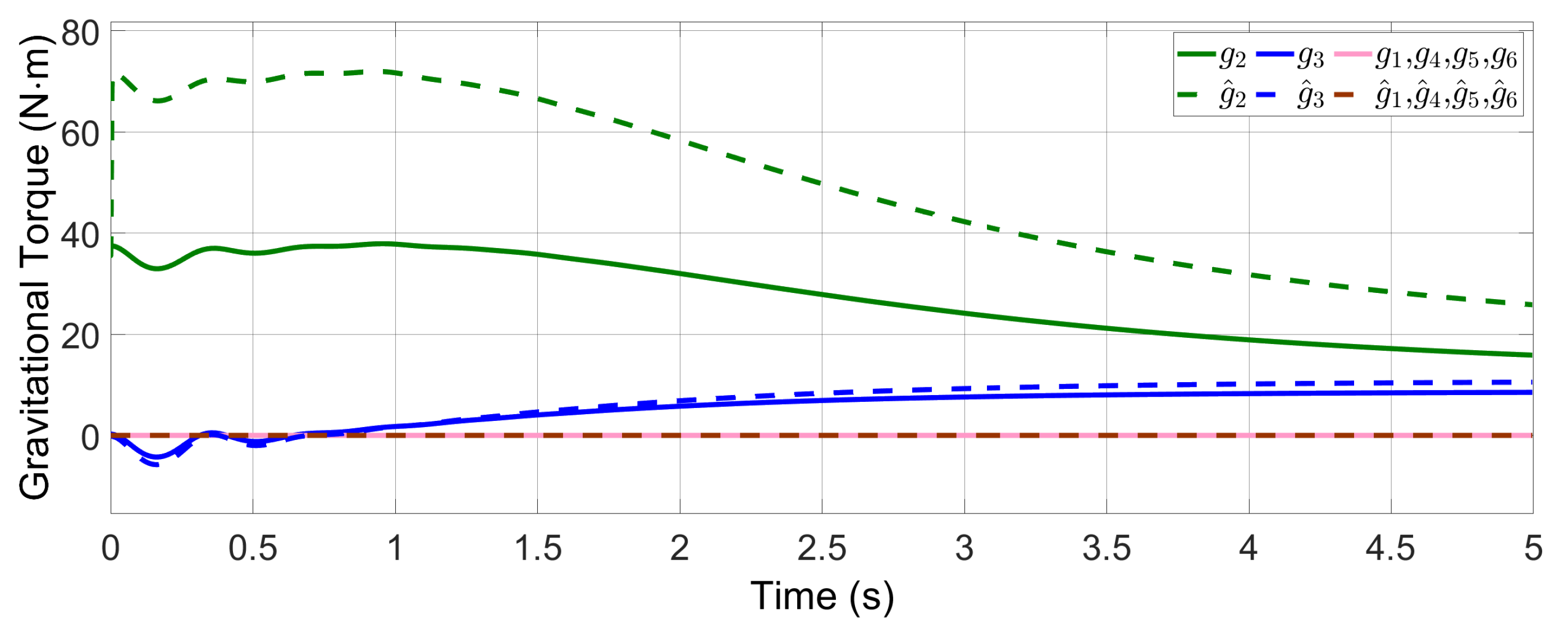

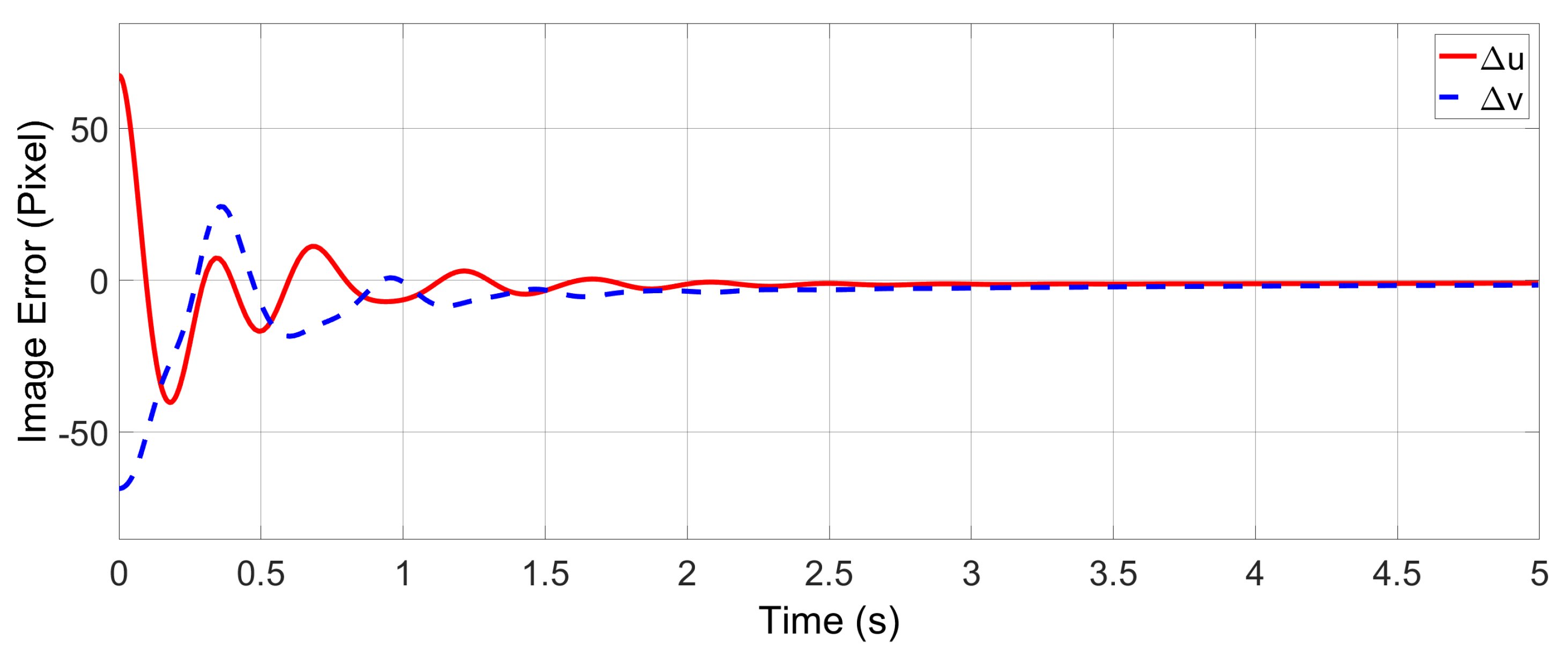

- A novel adaptive estimation algorithm is proposed that avoids possible singularity of the estimated Jacobian matrix and, most importantly, ensures asymptotic convergence of the image error. Compared with the related work in [22,23,24], the proposed algorithm does not require the Cartesian coordinates of the feature points, which are difficult to acquire in practice, and it compensates for the gravitational torque of the manipulator so that the image error converges asymptotically to zero.
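The idea of a depth-independent Jacobian can be illustrated with a minimal numerical sketch. It assumes the standard perspective projection model with a 3×4 projection matrix `M` (the matrix values, the point `x`, and the velocity `v` below are made up for illustration and are not taken from the paper). Multiplying the image velocity by the depth `z` leaves a matrix `D(y)` that is linear in the entries of `M`, which is the property that makes a linear parameterization of the unknown camera parameters possible. The sketch does not reproduce the paper's composite Jacobian, which also includes the robot kinematics.

```python
import numpy as np

def project(M, x):
    """Perspective projection of a 3-D point x with a 3x4 matrix M."""
    xh = np.append(x, 1.0)      # homogeneous coordinates
    z = M[2] @ xh               # depth of the point
    y = (M[:2] @ xh) / z        # image coordinates
    return y, z

def depth_independent_matrix(M, y):
    """D(y) = M[:2, :3] - y * m3^T, so that z * y_dot = D(y) @ x_dot."""
    return M[:2, :3] - np.outer(y, M[2, :3])

# Hypothetical projection matrix; the third row keeps the depth positive.
rng = np.random.default_rng(0)
M = rng.normal(size=(3, 4))
M[2] = [0.1, -0.2, 1.0, 5.0]

x = np.array([0.3, -0.1, 0.4])      # assumed feature position
v = np.array([0.05, 0.02, -0.03])   # assumed Cartesian velocity x_dot

y, z = project(M, x)
y_dot_model = depth_independent_matrix(M, y) @ v / z

# Central finite difference of the projection as an independent check.
eps = 1e-6
y_plus, _ = project(M, x + eps * v)
y_minus, _ = project(M, x - eps * v)
y_dot_numeric = (y_plus - y_minus) / (2 * eps)

print(np.allclose(y_dot_model, y_dot_numeric, atol=1e-6))
```

Because `D(y)` contains no division by the depth, an estimate of `M` enters the image velocity relation linearly once the scalar `z` is factored out.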

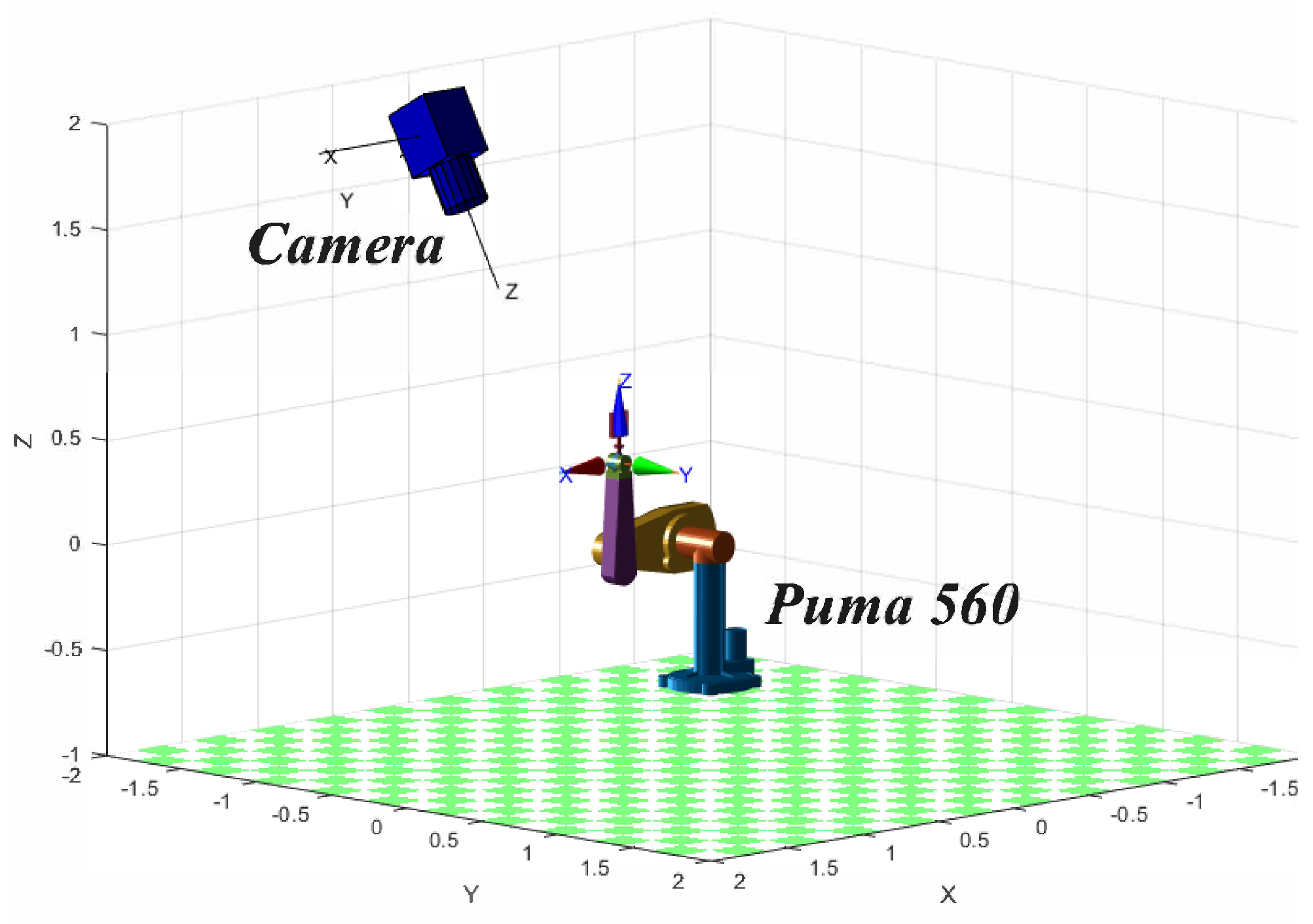

2. Background and Problem Statement

2.1. Perspective Projection Model

2.2. Kinematics and Dynamics Model of Robotic Manipulator

2.2.1. Kinematics Model

2.2.2. Dynamics Model

2.3. Problem Formulation

3. Uncalibrated Adaptive Visual Control Approach and Stability Analysis

3.1. Controller Design

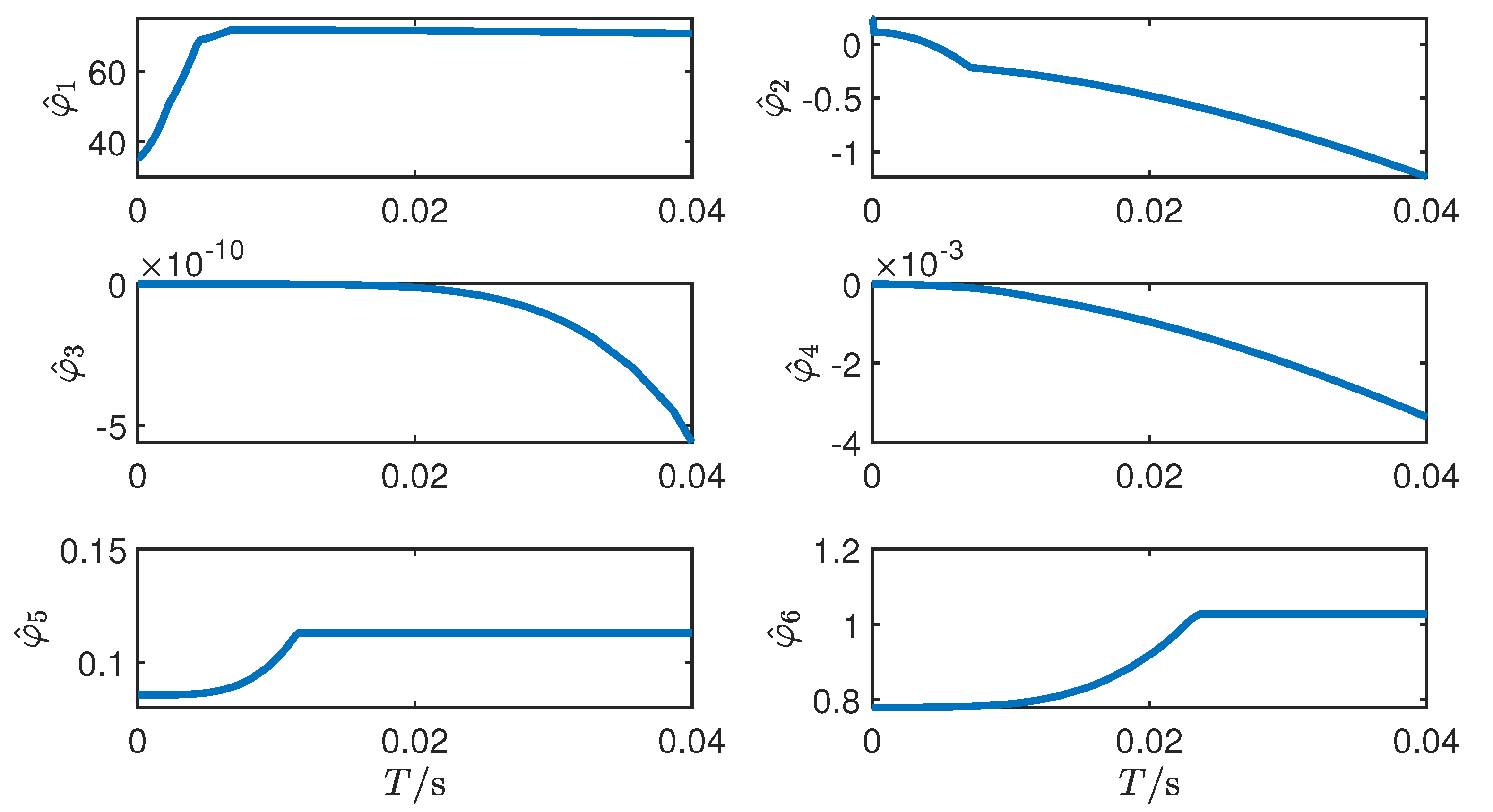

3.2. Parameter Estimation

3.3. Stability Analysis

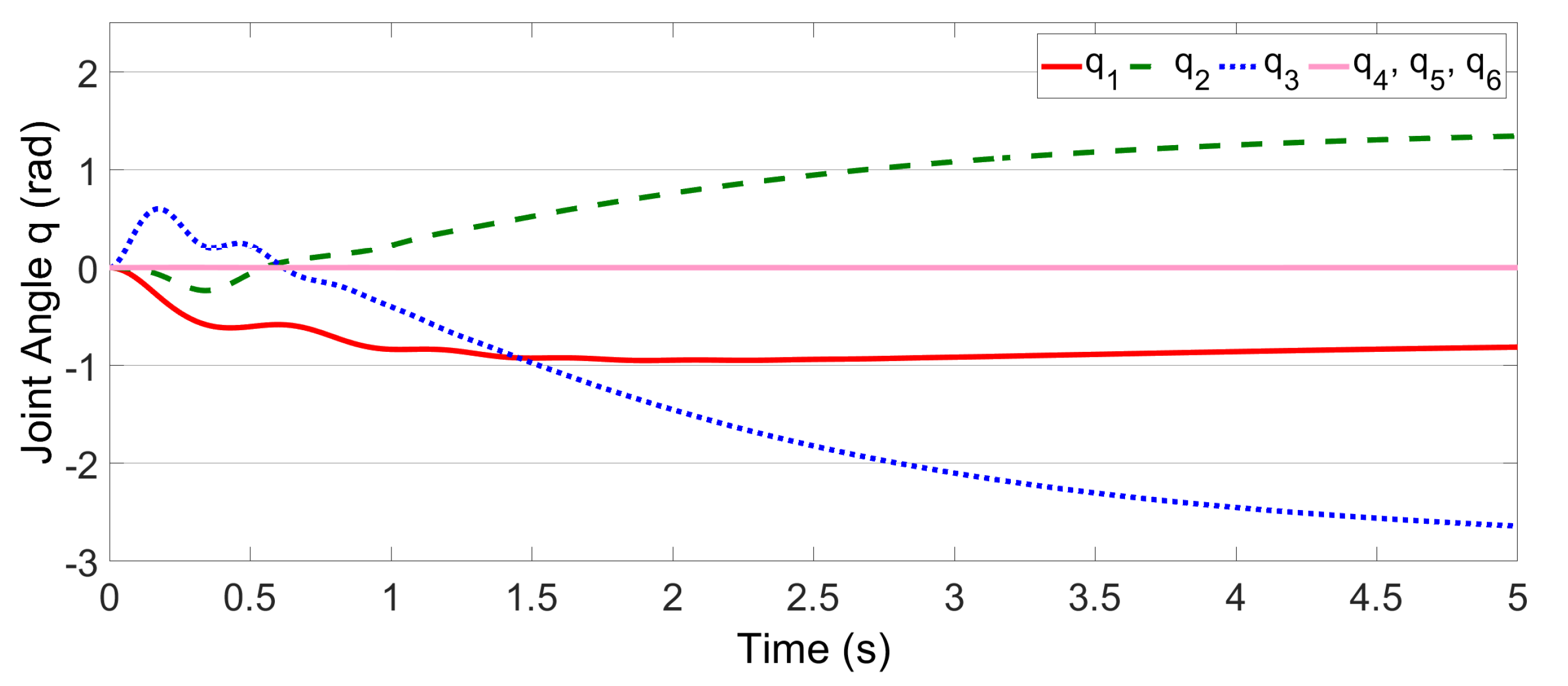

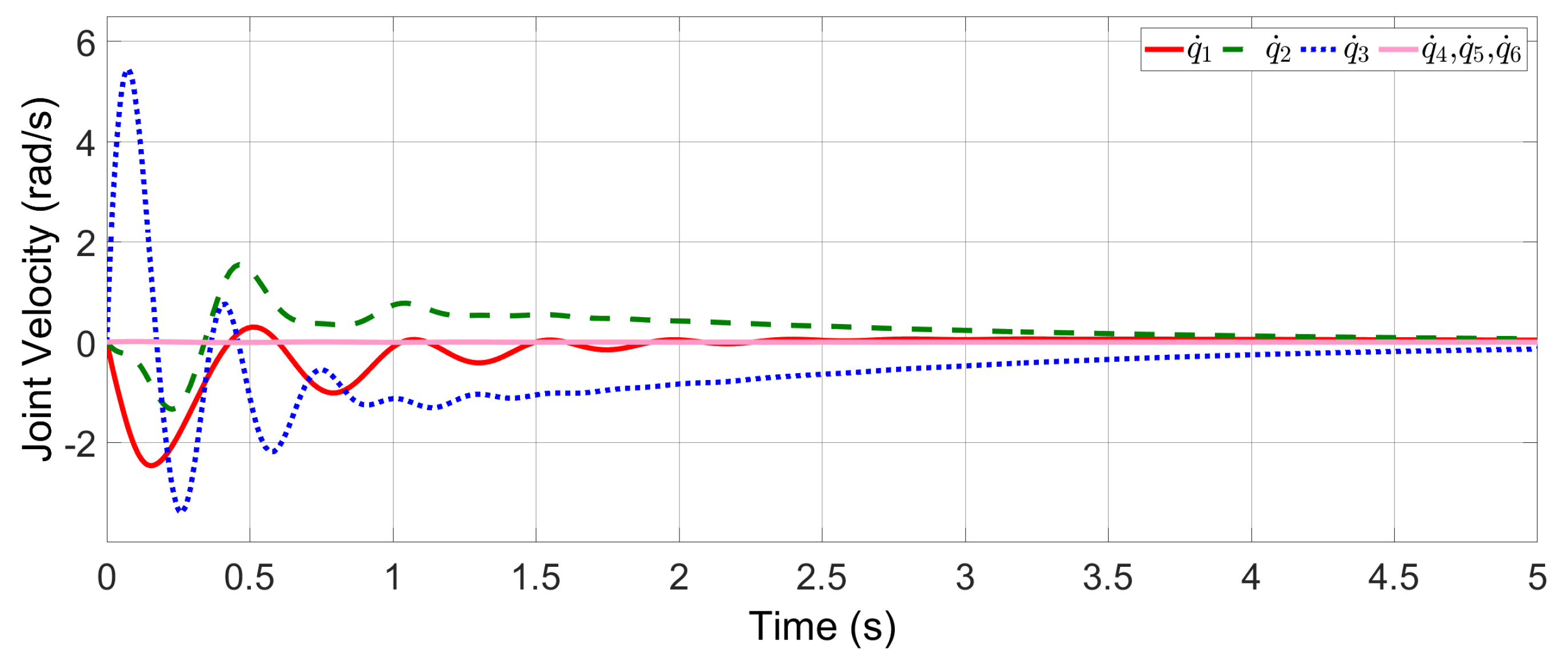

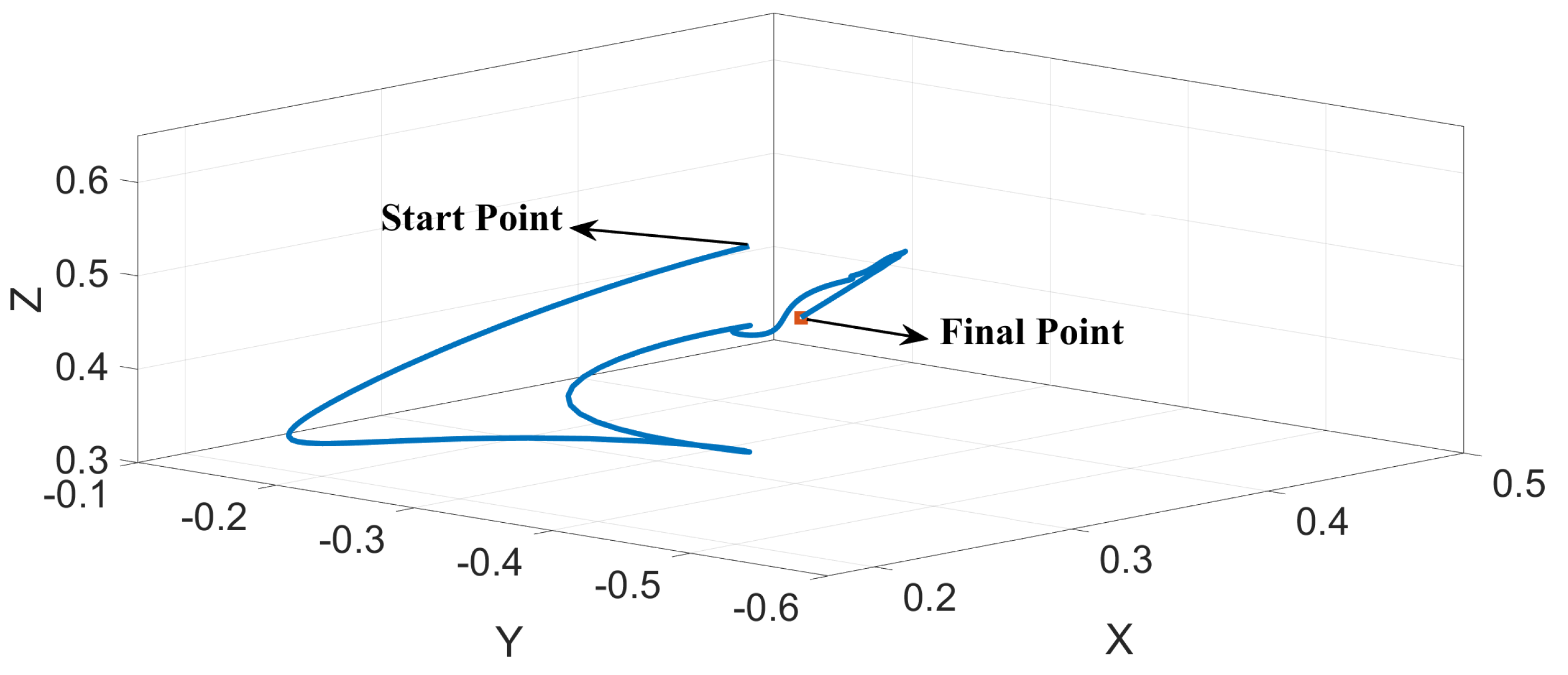

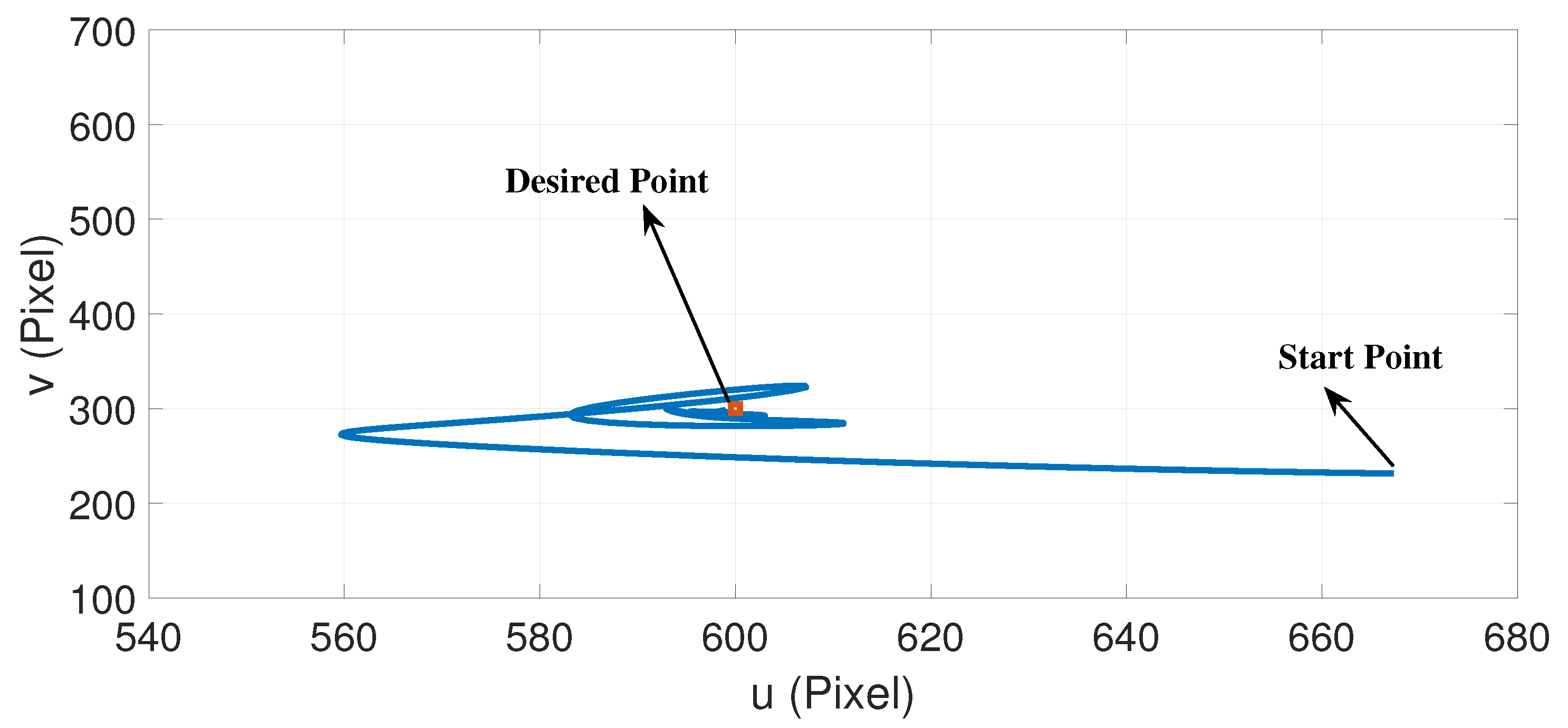

4. Simulation

- Nonlinear dynamics. Although the proposed method considers the manipulator dynamics, some unmodeled dynamic effects are still ignored, such as Coulomb friction, viscous friction, and motor dynamics. In a practical scenario these may degrade system performance and even cause the system to fail.

- Nonlinear constraints of actuators. Nonlinear constraints exist in many physical actuators and may lead to errors in practical results and even to instability of the system. To address this, actuator nonlinearities such as backlash, dead zone, saturation, and hysteresis should be compensated in practical applications. Furthermore, unknown actuator failures should also be considered in real-world visual servoing tasks, since a failure may lead to catastrophic results.

- Noise and disturbances. Image-based visual servoing is sensitive to noise and disturbances in the image measurements, which can affect the accuracy and stability of the control system. This work does not account for image noise or the corresponding image processing techniques, but both should be considered in practical applications. Variations in lighting, occlusions, and other environmental factors should also be considered, since they may significantly affect the quality of the visual feedback.

- Real-time performance. Visual servoing systems may suffer from latency caused by communication and sampling, so achieving real-time performance of manipulators remains a challenge in real-world scenarios.
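To make the actuator nonlinearities named above concrete, the following minimal sketch models a dead zone followed by saturation, using their common textbook definitions. The width, limit, and commanded torques are hypothetical values chosen for illustration. They are not parameters from the paper, and the paper's controller does not include this model.

```python
import numpy as np

def dead_zone(u, width):
    """Symmetric dead zone: no output while |u| <= width, shifted output otherwise."""
    u = np.asarray(u, dtype=float)
    return np.where(np.abs(u) <= width, 0.0, u - np.sign(u) * width)

def saturate(u, limit):
    """Symmetric saturation: the output is clipped to [-limit, limit]."""
    return np.clip(u, -limit, limit)

def actuator(u, width=0.5, limit=10.0):
    """Hypothetical actuator: a dead zone followed by saturation."""
    return saturate(dead_zone(u, width), limit)

# Assumed commanded joint torques (N*m), for illustration only.
commanded = np.array([-20.0, -0.3, 0.0, 0.4, 2.0, 15.0])
applied = actuator(commanded)

for c, a in zip(commanded, applied):
    print(f"commanded {c:6.1f} -> applied {a:6.1f}")
```

Small commands vanish inside the dead zone and large ones are clipped, so the torque that reaches the joint differs from the torque the controller requested. This mismatch is what a compensation scheme would have to handle.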

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Shirai, Y.; Inoue, H. Guiding a robot by visual feedback in assembling tasks. Pattern Recognit. 1973, 5, 99–108.

2. Jing, C.; Xu, H.; Niu, X. Adaptive sliding mode disturbance rejection control with prescribed performance for robotic manipulators. ISA Trans. 2019, 91, 41–51.

3. Moreno-Valenzuela, J.; González-Hernández, L. Operational space trajectory tracking control of robot manipulators endowed with a primary controller of synthetic joint velocity. ISA Trans. 2011, 50, 131–140.

4. Hill, J. Real time control of a robot with a mobile camera. In Proceedings of the International Symposium on Industrial Robots; Society of Manufacturing Engineers: Dearborn, MI, USA, 1979; pp. 233–246.

5. Hutchinson, S.; Hager, G.; Corke, P. A tutorial on visual servo control. IEEE Trans. Robot. Autom. 1996, 12, 651–670.

6. Staniak, M.; Zieliński, C. Structures of visual servos. Robot. Auton. Syst. 2010, 58, 940–954.

7. Tan, M.; Liu, Z.; Chen, C.P.; Zhang, Y. Neuroadaptive asymptotic consensus tracking control for a class of uncertain nonlinear multiagent systems with sensor faults. Inf. Sci. 2022, 584, 685–700.

8. Tan, M.; Liu, Z.; Chen, C.P.; Zhang, Y.; Wu, Z. Optimized adaptive consensus tracking control for uncertain nonlinear multiagent systems using a new event-triggered communication mechanism. Inf. Sci. 2022, 605, 301–316.

9. Hu, G.; MacKunis, W.; Gans, N.; Dixon, W.E.; Chen, J.; Behal, A.; Dawson, D. Homography-Based Visual Servo Control With Imperfect Camera Calibration. IEEE Trans. Autom. Control 2009, 54, 1318–1324.

10. Wang, K.; Liu, Y.; Li, L. Vision-based tracking control of nonholonomic mobile robots without position measurement. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 5265–5270.

11. Lippiello, V.; Siciliano, B.; Villani, L. Position-Based Visual Servoing in Industrial Multirobot Cells Using a Hybrid Camera Configuration. IEEE Trans. Robot. 2007, 23, 73–86.

12. Chaumette, F.; Hutchinson, S. Visual servo control. I. Basic approaches. IEEE Robot. Autom. Mag. 2006, 13, 82–90.

13. Hwang, M.; Chen, Y.J.; Ju, M.Y.; Jiang, W.C. A fuzzy CMAC learning approach to image based visual servoing system. Inf. Sci. 2021, 576, 187–203.

14. Qian, J.; Su, J. Online estimation of image Jacobian matrix by Kalman-Bucy filter for uncalibrated stereo vision feedback. In Proceedings of the 2002 IEEE International Conference on Robotics and Automation (Cat. No. 02CH37292), Washington, DC, USA, 11–15 May 2002; Volume 1, pp. 562–567.

15. Hosoda, K.; Asada, M. Versatile visual servoing without knowledge of true Jacobian. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS’94), Munich, Germany, 12–16 September 1994; Volume 1, pp. 186–193.

16. Piepmeier, J.; McMurray, G.; Lipkin, H. Uncalibrated dynamic visual servoing. IEEE Trans. Robot. Autom. 2004, 20, 143–147.

17. Armstrong Piepmeier, J.; Gumpert, B.; Lipkin, H. Uncalibrated eye-in-hand visual servoing. In Proceedings of the 2002 IEEE International Conference on Robotics and Automation (Cat. No. 02CH37292), Washington, DC, USA, 11–15 May 2002; Volume 1, pp. 568–573.

18. Wang, F.; Liu, Z.; Chen, C.; Zhang, Y. Adaptive neural network-based visual servoing control for manipulator with unknown output nonlinearities. Inf. Sci. 2018, 451–452, 16–33.

19. Cheah, C.; Hirano, M.; Kawamura, S.; Arimoto, S. Approximate Jacobian control for robots with uncertain kinematics and dynamics. IEEE Trans. Robot. Autom. 2003, 19, 692–702.

20. Cai, C.; Dean-León, E.; Mendoza, D.; Somani, N.; Knoll, A. Uncalibrated 3D stereo image-based dynamic visual servoing for robot manipulators. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 63–70.

21. Cheah, C.C.; Liu, C.; Slotine, J.J.E. Adaptive Vision based Tracking Control of Robots with Uncertainty in Depth Information. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Roma, Italy, 10–14 April 2007; pp. 2817–2822.

22. Liu, Y.H.; Wang, H.; Wang, C.; Lam, K.K. Uncalibrated visual servoing of robots using a depth-independent interaction matrix. IEEE Trans. Robot. 2006, 22, 804–817.

23. Wang, H.; Liu, Y.H.; Zhou, D. Dynamic Visual Tracking for Manipulators Using an Uncalibrated Fixed Camera. IEEE Trans. Robot. 2007, 23, 610–617.

24. Wang, H.; Jiang, M.; Chen, W.; Liu, Y.H. Visual servoing of robots with uncalibrated robot and camera parameters. Mechatronics 2012, 22, 661–668.

25. Liu, Y.H.; Wang, H.; Chen, W.; Zhou, D. Adaptive visual servoing using common image features with unknown geometric parameters. Automatica 2013, 49, 2453–2460.

26. Cheah, C.C.; Liu, C.; Slotine, J.J.E. Adaptive Jacobian vision based control for robots with uncertain depth information. Automatica 2010, 46, 1228–1233.

27. Wang, F.; Liu, Z.; Chen, C.P.; Zhang, Y. Robust adaptive visual tracking control for uncertain robotic systems with unknown dead-zone inputs. J. Frankl. Inst. 2019, 356, 6255–6279.

28. Guo, D.; Sun, F.; Fang, B.; Yang, C.; Xi, N. Robotic grasping using visual and tactile sensing. Inf. Sci. 2017, 417, 274–286.

29. Liu, A.; Lai, G.; Liu, W. Adaptive Visual Control of Robotic Manipulator With Uncertainties in Kinematics and Dynamics. In Proceedings of the 2021 China Automation Congress (CAC), Beijing, China, 22–24 October 2021; pp. 7232–7237.

30. Slotine, J.J.; Li, W. On the Adaptive Control of Robot Manipulators. Int. J. Robot. Res. 1987, 6, 49–59.

31. Krstic, M.; Kokotovic, P.V.; Kanellakopoulos, I. Nonlinear and Adaptive Control Design; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 1995.

32. Alavandar, S.; Nigam, M. New hybrid adaptive neuro-fuzzy algorithms for manipulator control with uncertainties—Comparative study. ISA Trans. 2009, 48, 497–502.

33. Huang, C.; Xie, L.; Liu, Y. PD plus error-dependent integral nonlinear controllers for robot manipulators with an uncertain Jacobian matrix. ISA Trans. 2012, 51, 792–800.

| Joint | Angle (rad) | Offset (m) | Length (m) | Twist (rad) |
|---|---|---|---|---|
| 1 | | 0 | 0 | |
| 2 | | 0 | 0.4318 | 0 |
| 3 | | 0.15005 | 0.0203 | |
| 4 | | 0.4318 | 0 | |
| 5 | | 0 | 0 | |
| 6 | | 0 | 0 | 0 |
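The column names of the table above (angle, offset, length, twist) match the four Denavit–Hartenberg link parameters. As a hedged illustration of how such a row is normally turned into a link transform, the sketch below builds the homogeneous transform under the classic DH convention. Whether the paper uses the classic or the modified convention is not stated in the surviving text, so this choice is an assumption. The joint angle is an arbitrary assumed value, and the 0.4318 m link length is borrowed from the table purely as an example input.

```python
import numpy as np

def dh_transform(theta, d, a, alpha):
    """Homogeneous transform of one link, classic DH convention (assumed):
    Rot_z(theta) * Trans_z(d) * Trans_x(a) * Rot_x(alpha)."""
    ct, st = np.cos(theta), np.sin(theta)
    ca, sa = np.cos(alpha), np.sin(alpha)
    return np.array([
        [ct, -st * ca,  st * sa, a * ct],
        [st,  ct * ca, -ct * sa, a * st],
        [0.0,      sa,       ca,      d],
        [0.0,     0.0,      0.0,    1.0],
    ])

# Illustrative inputs: assumed joint angle, zero offset, 0.4318 m length, zero twist.
theta = np.pi / 2
T = dh_transform(theta, d=0.0, a=0.4318, alpha=0.0)

position = T[:3, 3]     # origin of the link frame in the previous frame
rotation = T[:3, :3]    # orientation of the link frame
print(np.round(position, 4))
```

Multiplying the six link transforms in order would give the forward kinematics of the arm, once the missing angle and twist entries are known.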

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lai, G.; Liu, A.; Yang, W.; Chen, Y.; Zhao, L. Uncalibrated Adaptive Visual Servoing of Robotic Manipulators with Uncertainties in Kinematics and Dynamics. Actuators 2023, 12, 143. https://doi.org/10.3390/act12040143
