Abstract

In this paper, an adaptive incremental neural network (INN) fixed-time tracking control scheme based on composite learning is investigated for robot systems under input saturation. Firstly, by integrating the composite learning method into the INN to cope with the inevitable dynamic uncertainty, a novel adaptive updating law of NN weights is designed, which does not need to satisfy the stringent persistent excitation (PE) conditions. In addition, for the saturated input, differing from adding the auxiliary system, this paper introduces a hyperbolic tangent function to deal with the saturation nonlinearity by converting the asymmetric input constraints into the symmetric ones. Moreover, the fixed-time control approach and Lyapunov theory are combined to ensure that all the signals of the robot closed-loop control systems converge to a small neighborhood of the origin in a fixed time. Finally, numerical simulation results verify the effectiveness of the fixed-time control and composite learning algorithm.

1. Introduction

With the development of automation technology towards intelligent manufacturing, robots have been widely used in military rescue, intelligent assembly, human-robot cooperation tasks, and other industrial manufacturing fields. On many occasions, robots and humans share the same workspace, and if robot tasks are not performed accurately, accidents can occur. Therefore, how to accurately control robots to perform the required tasks has always been an important research direction [1,2,3]. Many scholars have proposed model-based control schemes in which the inherent dynamics of the robot are assumed to be fully known. However, in practice, due to the uncertainty of the environment and the coupling effect, it is difficult to determine the manipulator model correctly and quickly and thus to obtain the required control accuracy. Thus, in recent years, a great number of control schemes based on universal approximators such as neural networks (NNs) [4,5,6,7] and fuzzy logic systems (FLSs) [8,9,10] have emerged to deal with the unknown nonlinear terms in manipulator systems. A common weakness of the above-mentioned NN approximators is that, if the network nodes are selected unreasonably, the nonlinear terms are learned insufficiently and the approximation performance degrades. An effective way to settle this issue is to dynamically increase the number of nodes, which is called the INN [11]. For both the NN and the INN, it should be pointed out that the network weights converge to their true values only when a sufficient condition for accurate approximation holds, and the PE condition is an important condition for ensuring the convergence of the estimation [12]. The PE condition is difficult to satisfy, so it is often not feasible in practical systems. It is worth emphasizing that the partial PE condition proposed in [13] relaxed the traditional PE condition, and it was pointed out that any recursive NN input trajectory of the radial basis function neural network (RBFNN) defined in a local regular lattice can be partially activated. In the works [14,15,16,17], this idea was utilized in the adaptive control design of nonlinear systems such as a manipulator, to ensure the stability and realize the convergence of the NN weights. Based on the above discussion, how to solve the problem of insufficient learning caused by improper node selection while relaxing the strict PE condition still needs to be further studied.

On the other hand, during the task of a manipulator, the input control torque cannot exceed a certain upper limit value due to the inevitable constraints of electromechanical failure or mechanical structure change. If the torque required by the controller exceeds the torque provided by the actuator, unpredictable mechanical faults may occur, thereby damaging the system performance. Hence, it is a meaningful work to investigate the input saturation [18,19,20,21,22,23,24] for practical nonlinear systems. For uncertain nonlinear systems, considering the effect of input saturations, the authors in [18,19], by constructing an auxiliary system, developed two effective control schemes to restrain them. Subsequently, the works in [22,23,24] extended the above methods to robotic manipulators [22], ships [23], and suspension systems [24] and proposed several control schemes that can suppress the adverse effects of saturation on the actual systems. Obviously, these schemes compensated the saturations’ nonlinearity by introducing an auxiliary system, yet the auxiliary system intensified the complexity of the system structure to a certain extent, expanded the amount of calculation, and may also lead to chattering. In order to amend these flaws, the works in [20,21] designed a hyperbolic tangent function to approximate the saturation nonlinearity for symmetric input constraints, which alleviated the vibration and large amount of calculation caused by the auxiliary system. Nevertheless, it must be emphasized that the above work did not consider the asymmetric saturation of the actuators or require the convergence time of the controlled system. To a certain extent, rapid convergence will lead to the increase of the vibration amplitude. Therefore, under the constraint of input nonlinearity, it is hard to obtain stability and good error convergence, which inspired our research.

In order to achieve high accuracy and fast convergence, finite-time control is a preferred solution. The pioneering work [25] introduced the concept of finite-time stability and proved that it offers strong robustness and a faster transient response. Subsequently, many scholars have followed this work, and many constructive results have emerged [26,27,28,29]. The work [26] presented a smooth finite-time control scheme for strict-feedback nonlinear systems, and the work [27] investigated finite-time control of nonlinear systems with unmeasurable states through a quantized feedback control strategy. The research in [25,26,27,28,29] bounded the convergence time by finite-time control, but the bound always depended on the initial state of the system. To remove this restriction, the authors in [30] proposed a fixed-time control scheme, whose convergence time bound is independent of the initial conditions and can be adjusted by the controller parameters. Building on this, a large number of adaptive intelligent control algorithms have been developed [31,32,33,34,35]. By applying universal barrier functions, fixed-time control with symmetric constraints for MIMO uncertain systems was studied in [31]. In [32], the observer-based fixed-time tracking control problem of SISO nonlinear systems was considered. In [33,34], the fixed-time method was extended to multiagent systems and a flexible robotic manipulator. In [35], the authors developed fixed-time control with disturbance for a manipulator system; this paper extends [35] by introducing the composite learning approach into the framework of NN fixed-time theory.

Inspired by the above discussions, this paper proposes an adaptive INN fixed-time tracking control scheme based on composite learning for the manipulator system under saturated input. The main contributions are as follows:

- A new INN adaptive algorithm based on the composite learning technique is designed. By dynamically generating network nodes and introducing some persistence conditions to adapt to the NN weights, the estimation error information can be properly integrated into the adaptive law, and the estimation performance is improved on the basis of relaxing the traditional PE conditions. Even though the works [14,15,16,17] studied composite learning control, they did not consider dynamically activating network nodes to adjust the NN input, let alone ensuring that the estimation error converged in a fixed time.

- In the framework of the backstepping composite learning approach, the challenge of devising a fixed-time controller with asymmetric actuator saturation of the manipulator system is effectively tackled. Although the authors in [18,19,22,23,24] considered the problem of actuator input saturation, they all solved symmetric saturation by introducing auxiliary systems, which is not tenable for the asymmetric scenario. Instead, this paper not only proposes a feasible asymmetric saturation control scheme, but also ensures fixed-time convergence under the composite learning framework.

The rest of this paper is structured as follows. In Section 2, the system description and some useful lemmas are introduced. Section 3 shows the radial basis function NN and the INN. Section 4 introduces how to design the INN controller based on composite learning in the presence of input saturation and presents the stability analysis. The proposed control scheme in this paper is validated by the simulation in Section 5. Finally, Section 6 gives the conclusion of this paper.

2. System Description

The dynamic model of a manipulator with n degrees of freedom can be described as:

where represents the joint position angle vector, represents the inertia matrix, represents the Coriolis matrix, represents the gravity, and represents the control torque and satisfies

where is the i-th element of the control law . , , , and are the known saturation parameters; nonlinear functions and are unknown, but smoothly continuous; and the assumption that , are satisfied in and , respectively, where , , , and are positive constants.

The manipulator has the following two commonly used properties.

Property 1

([10]). For any θ, , H, C, and G are bounded and their first derivatives are available.

Property 2

([10]). is an antisymmetric matrix, which means, for any , are established.

Lemma 1

([31]). If a positive Lyapunov function satisfies: , where , , , , and , then the system is considered to be stable in fixed-time, whose solution will converge to a compact set , where , and the convergence time of the system satisfies: .
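The fixed-time property in Lemma 1 can be illustrated numerically. The sketch below is only an illustration under stated assumptions: it uses the commonly used scalar form dV/dt = -a V^p - b V^q with 0 < p < 1 < q and no residual constant term (so V reaches zero rather than a compact set), and the gains, exponents, step size, and tolerance are hypothetical choices, not values from this paper. For this form, the settling time is bounded by 1/(a(1-p)) + 1/(b(q-1)) regardless of V(0).

```python
def settling_time(v0, a=1.0, b=1.0, p=0.5, q=1.5, dt=1e-4, tol=1e-6, t_max=10.0):
    """Forward-Euler integration of dV/dt = -a*V**p - b*V**q (assumed form).

    Returns the first time at which V drops below tol.
    """
    v, t = v0, 0.0
    while v > tol and t < t_max:
        # Clamp at zero so the fractional power stays well defined.
        v = max(v - dt * (a * v ** p + b * v ** q), 0.0)
        t += dt
    return t


# Bound on the settling time that does not depend on the initial value V(0).
a, b, p, q = 1.0, 1.0, 0.5, 1.5
bound = 1.0 / (a * (1.0 - p)) + 1.0 / (b * (q - 1.0))

# Initial values spanning six orders of magnitude (hypothetical test points).
times = [settling_time(v0, a, b, p, q) for v0 in (1.0, 1e2, 1e6)]

for v0, t in zip((1.0, 1e2, 1e6), times):
    print(f"V(0) = {v0:>9.1f}  settling time = {t:.3f}  (bound = {bound:.3f})")
```

The settling time grows with V(0) but stays below the same bound, which is the difference from finite-time control, where the bound itself depends on the initial state.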

Lemma 2

([31,33]). For any , we have

Lemma 3

([16]). The vector is not used, then its estimate value can be obtained according to the following second-order filter:

where and are estimates of and , respectively. Note that with the frequency w fit low enough, the estimation error of is small enough to make .

Definition 1

([13]). For an n-dimensional bounded column vector , if there are positive constants , , and such that is valid for arbitrary time interval , then the bounded signal is called PE.
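As a rough numerical illustration of Definition 1, the sketch below integrates the outer product of a two-dimensional signal with itself over one window and inspects the smallest eigenvalue of the result. It assumes the usual integral form of the PE condition (a uniform positive lower bound on the windowed Gram matrix); the two test signals, the window length, and the function name `gram_min_eig` are hypothetical and chosen only for illustration.

```python
import math


def gram_min_eig(phi, t0, window, steps=2000):
    """Smallest eigenvalue of the integral of phi(t) phi(t)^T over [t0, t0 + window].

    phi returns a 2-vector; the integral is approximated by a Riemann sum and
    the eigenvalue of the symmetric 2x2 result is computed in closed form.
    """
    dt = window / steps
    a = b = c = 0.0
    for k in range(steps):
        x, y = phi(t0 + k * dt)
        a += x * x * dt
        b += x * y * dt
        c += y * y * dt
    return 0.5 * (a + c) - math.sqrt((0.5 * (a - c)) ** 2 + b * b)


# Two independent directions are excited: the Gram matrix is positive definite.
rich = gram_min_eig(lambda t: (math.sin(t), math.cos(t)), 0.0, 2.0 * math.pi)

# Both components are multiples of the same signal: the Gram matrix is singular.
poor = gram_min_eig(lambda t: (math.sin(t), 2.0 * math.sin(t)), 0.0, 2.0 * math.pi)

print(f"min eigenvalue, rich signal: {rich:.4f}")
print(f"min eigenvalue, poor signal: {poor:.2e}")
```

A signal whose windowed Gram matrix has a strictly positive smallest eigenvalue on every window is PE; the second signal fails this because it only ever excites one direction.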

3. Radial Basis Function NN Approximator

The RBFNN has a strong nonlinear approximation ability. As long as there are enough nodes, any nonlinear term can be approximated with any accuracy. However, due to the limited number of nodes in practical applications, there will be an approximation error. The expression of the RBFNN approximating the nonlinear term is

where denotes the network weight, denotes the regression vector, denotes the approximation error, denotes the input vector of the network, n denotes the dimension of the nonlinear term to be estimated, k denotes the number of nodes in the neural network, and m denotes the dimension of the network input vector.

The Gaussian function is the most commonly used basis function in the RBFNN because of its simple form and being differentiable at any order, and its expression is as follows:

where denotes the i-th node and denotes the standard deviation.
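A minimal sketch of the RBFNN approximator described above is given below. It assumes the common Gaussian form exp(-||Z - c_i||^2 / (2 sigma^2)) with a single shared standard deviation and computes the network output as the weight matrix transposed times the regression vector; the exact scaling used in this paper may differ. The function names, node positions, and weights are hypothetical.

```python
import math


def gaussian_regressor(z, centers, sigma):
    """Regression vector S(z): one Gaussian activation per node (assumed form)."""
    return [
        math.exp(-sum((zj - cj) ** 2 for zj, cj in zip(z, c)) / (2.0 * sigma ** 2))
        for c in centers
    ]


def rbfnn_output(weights, z, centers, sigma):
    """Network output W^T S(z); weights has k rows (nodes) and n columns (outputs)."""
    s = gaussian_regressor(z, centers, sigma)
    n = len(weights[0])
    return [sum(weights[i][j] * s[i] for i in range(len(s))) for j in range(n)]


# Hypothetical network: k = 3 nodes, m = 2 inputs, n = 1 output.
centers = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
weights = [[1.0], [2.0], [3.0]]

s = gaussian_regressor([0.0, 0.0], centers, sigma=1.0)
y = rbfnn_output(weights, [0.0, 0.0], centers, sigma=1.0)
print("S(z) =", s)
print("W^T S(z) =", y)
```

The node that coincides with the input is fully activated (activation 1), while the activation of the other nodes decays with their Euclidean distance from the input.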

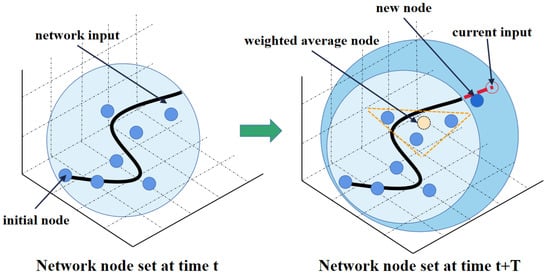

In order to overcome the shortcomings of the inaccurate nodes manually set in advance, the method of incremental learning [11] to generate nodes is introduced, and the node addition process is shown in Figure 1. Firstly, the initial state of the system is set to the first node of the network, i.e.,

Figure 1.

The schematic diagram of the incremental neural network.

In the following process, the network will decide whether to generate nodes according to the distance between the current input and the node set. After each sampling period T, the node set of the network is

where denotes the node set of the network at time t, indicates the newly generated node, while denotes the node set at time . denotes the Euclidean distance between the network input and node set, whose calculation formula is shown as (10), and is the distance threshold parameter to be designed.

where represents the weighted average node of the neural network, which is obtained according to the weighted average of b nodes closest to the current input vector, i.e.,

Finally, the newly generated nodes of the network are

where is the parameter to be designed.

From the above process, it is not difficult to find that, compared with the traditional neural network, the neural network using incremental learning has a significant advantage in that it can ensure that the input is always in the compact domain of the neural network, enabling the maximum activation of the nodes. Since the degree of node activation depends on the Euclidean distance between the current input and the nodes and the nodes of the incremental neural network are generated according to the real-time input, when the current input is not in the network compact domain, the nodes will be automatically added to expand the compact domain, so the effectiveness of the incremental neural network can be better guaranteed. In contrast, the nodes in the traditional NN need to be set up artificially; when the prior knowledge of the complex system is not accurate, the designed node set is far away from the input of the system and cannot be activated effectively, which will lead to a significant reduction in the approximation performance of the network. We call the RBFNN with incremental learning the IRBFNN.
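The node-generation procedure described above can be sketched as follows. This is an illustration under assumptions rather than the exact rule of this paper: the weighted average node is computed from the b nearest nodes with inverse-distance weights, and a new node is placed at the current input when the distance to that average exceeds the threshold. The weighting scheme, the placement of the new node, and the names `weighted_average_node` and `update_nodes` are hypothetical; only the cap of 100 nodes is taken from the simulation settings.

```python
import math


def euclid(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def weighted_average_node(z, nodes, b, eps=1e-9):
    """Average of the b nodes nearest to z, weighted by inverse distance (assumption)."""
    nearest = sorted(nodes, key=lambda c: euclid(z, c))[:b]
    w = [1.0 / (euclid(z, c) + eps) for c in nearest]
    total = sum(w)
    return [sum(wi * c[j] for wi, c in zip(w, nearest)) / total for j in range(len(z))]


def update_nodes(z, nodes, b, threshold, max_nodes):
    """Add a node at z (assumption) if z is farther than threshold from the average node."""
    c_bar = weighted_average_node(z, nodes, b)
    if euclid(z, c_bar) > threshold and len(nodes) < max_nodes:
        return nodes + [list(z)]
    return nodes


# The first node is the initial state of the system, as in the text.
nodes = [[0.0, 0.0]]
for z in ([0.05, 0.0], [1.0, 0.0], [1.02, 0.0], [2.0, 0.0]):
    nodes = update_nodes(z, nodes, b=2, threshold=0.3, max_nodes=100)

print(nodes)
```

Inputs close to an existing node leave the node set unchanged, while inputs that leave the covered region trigger a new node, so the input stays inside the region where some node is strongly activated.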

4. Controller Design and Stability Analysis

4.1. Controller Design

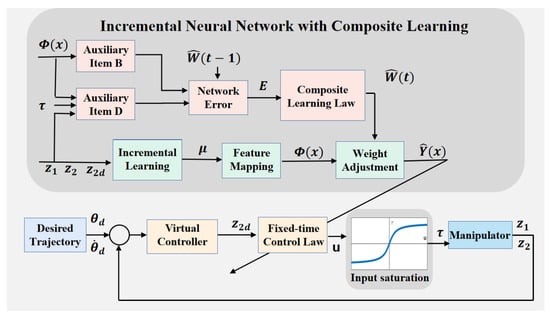

The proposed control scheme is shown in Figure 2. Let and ; the dynamic equation of the system (1) can be rewritten as

Figure 2.

The overview of the proposed control scheme.

The state space equation of the manipulator can be sorted out as

Thus, define the error signal of the system as

where denotes the desired joint position and denotes the virtual control law to be designed later.

The dynamic error equation of the system can be obtained as

According to the backstepping control method and the theory of fixed-time stability, design the virtual control law as

In order to ensure that and its derivative are both continuous, the nonlinear function is designed as

where is a small constant, .

Using the IRBFNN to estimate the unknown model of the system, we obtain

where is the input of the IRBFNN and , , , and represent the item to be estimated, the ideal weight, the regression vector, and the approximation error, respectively. k is the number of nodes of the IRBFNN, and . Thus, the dynamic error equation can be rewritten as

Define the estimated error of the network weight as

where is an estimate of the ideal network weight W.

In order to make the network weights converge in a fixed time without the strict PE condition, we designed the weight update law with composite learning technology [16]. Firstly, the error of the IRBFNN is defined as:

where represents the estimated network weight of the i-th joint and is the corresponding network estimation error, and the auxiliary terms and are defined as follows:

where is a symmetric matrix, and .

Combining (23) and (21), the estimation error of the network (22) can be further arranged as

where and is bounded. In (24), corresponds to the estimation error of the network weight and corresponds to the approximation error, so corresponds to the total error of the IRBFNN.

To calculate in Equation (23), from Equations (13) and (19), we can obtain

where denotes the pseudo inverse of a vector. Since is the angular acceleration of the manipulator and cannot be obtained directly, its estimated value is obtained through the second-order filter shown in Lemma 3, that is

Then, the expression of in Equation (23) can be further arranged as

Obviously, the expression (23) is a solution for the system of differential Equation (29), so the update laws of the auxiliary terms B, D are set as:

Based on the composite learning approach, design by the IRBFNN, as shown in (30):

where , , and are all full-rank diagonal constant matrices and their elements are all positive.

Remark 1.

It is not difficult to find that the weight composite learning law in (30) combines the position tracking error signal of the current system with the total error signal of the IRBFNN. The idea is to ensure the convergence of the weights by exploiting the feedback effect of the historical error generated by the system and by the weight updates. It should be noted that, compared with the existing results, the proposed composite learning law guarantees not only that the weights converge, but also that they converge within a fixed time.

In order to satisfy the input saturation effect of the actuator, introduce the variable to assist the torque design; one has

where denotes a diagonal matrix, represents the upper limit of the torque that the actuator can accept, while represents the parameter related to the expected maximum control law, and

According to (20), we can obtain

In the proposed controller, the relation between the actual control torque and the designed control law u is as follows:

where represents the difference between the actual input saturation effect curve and the ideal value and the assumption that and represents the conversion value of the control law u after the saturation effect. Use the hyperbolic tangent function to solve the input saturation effect, that is

where . According to mean-value theorem, the following equation holds:

where .
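The idea of replacing a hard, asymmetric saturation by a smooth hyperbolic tangent can be sketched as below. The piecewise form used here (the upper limit times tanh for non-negative commands, the lower limit times tanh for negative commands) is a common choice and is an assumption, not necessarily the exact function of this paper; the torque limits of -5 and 8 are hypothetical. For this form, the gap between the hard and smooth curves never exceeds the larger limit times (1 - tanh(1)).

```python
import math


def hard_sat(v, u_min, u_max):
    """Ideal actuator saturation with asymmetric limits u_min < 0 < u_max."""
    return min(max(v, u_min), u_max)


def smooth_sat(v, u_min, u_max):
    """Smooth tanh approximation of the saturation (assumed piecewise form)."""
    if v >= 0.0:
        return u_max * math.tanh(v / u_max)
    return u_min * math.tanh(v / u_min)


# Hypothetical asymmetric torque limits.
u_min, u_max = -5.0, 8.0

# Largest difference between the hard and smooth curves on a grid of commands.
grid = [i * 0.01 for i in range(-3000, 3001)]
gap = max(abs(hard_sat(v, u_min, u_max) - smooth_sat(v, u_min, u_max)) for v in grid)
bound = max(abs(u_min), u_max) * (1.0 - math.tanh(1.0))

print(f"largest gap on grid = {gap:.4f}, analytical bound = {bound:.4f}")
```

Because the smooth curve is differentiable everywhere and its distance from the hard saturation is bounded, the mismatch can be treated as a bounded disturbance in the controller design, and the mean-value theorem can be applied to the smooth part.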

According to the fixed-time control theory, the control law of u is constructed as

where , and if , .

Remark 2.

It should be mentioned that the control scheme in this paper can solve the trajectory tracking control task of single-arm manipulator systems with input saturation well. However, if the proposed control scheme is extended to dual-arm manipulator systems, it is necessary to analyze the internal force relationship between the two arms, which makes the controller design process more complex.

4.2. Stability Analysis

The property of the proposed IRBFNN composite learning fixed-time control scheme can be summarized as the following theorem.

Theorem 1.

Consider the manipulator system (1) with input saturation, and design the virtual controllers (17), the actual fixed-time controller (39), and the composite learning law (30), then it can be concluded that:

- (1) All the error signals are guaranteed to converge in a fixed time;

- (2) The position signal θ converges to a small neighborhood of the desired position in a fixed time;

- (3) The joint torque is guaranteed not to transgress the constraint sets.

Proof.

According to the error signal in the controller, choosing the following three candidate Lyapunov functions, we obtain

In accordance with the definition of , the following two situations about the term should be considered:

Case 1: If , it follows that

Case 2: If and according to the inequality ( and are positive), we have

where . Considering that and following the above two cases of analysis yield

According to (24), we have

From Equation (23) and combined with the definition of , it is known that is bounded, so is bounded.

Choose a general candidate Lyapunov function V as

For the convenience and conciseness of the proof process, let

Based on (45), (46), (50), and (51) and Young’s inequality, it can be obtained that

where , satisfy , , , and . According to the above analysis, it is known that , , , and are all bounded. That is, there exists a positive constant such that .

In light of Lemma 1, the related signals that make up the Lyapunov function V will converge to the compact set in a fixed time, and the convergence time satisfies: , where . □

5. Simulation Verification

5.1. Simulation Settings

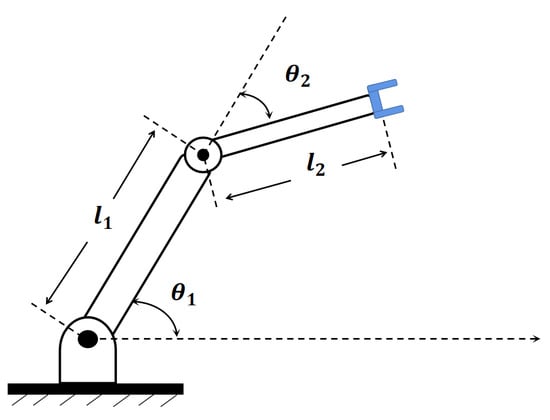

In order to verify the feasibility and effectiveness of the proposed control scheme, a simulation experiment was carried out on a two-DOF manipulator model [14], and the expression is shown in (53), while the model is shown in Figure 3. The meanings of the parameters in the expression are shown in Table 1. The desired trajectory is shown in (54). The mass and length parameters of the linkage manipulator were set as follows: kg, kg, N/kg, m, and m. The saturation torque of Joint 1 was selected as , and the saturation torque of Joint 2 was selected as , i.e., . The saturation characteristic parameter is given as .

Figure 3.

The model of the 2-DOF manipulator.

Table 1.

The description of the 2-joint manipulator.

The parameters in the virtual controller and actual control law were chosen as , , , and . The relevant parameters of the INN are given as follows: , . The maximum number of nodes in the network is 100, . The parameters of the network weight update rate based on composite learning were chosen as and . The fixed-time-related parameters were set to and .

In order to more intuitively compare the performance of the incremental neural network controller based on composite learning (CLIRBFNN), we set up a group of convincing comparative simulations. The comparative controllers adopted the fixed-time incremental neural network controller (IRBFNN), the general neural network controller (RBFNN), and the PD+ controller (PD+), and the weight update rate of the comparative controller adopted the traditional update rate, that is

where and . The control law of the compared PD+ controller is

where the gravity term G is fully compensated. The values of and were selected as and .

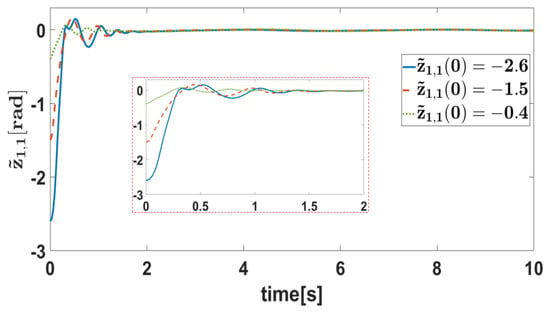

In order to specifically compare the controllers under different initial states, three groups of different initial states were set, i.e., , , and , and the corresponding initial position errors were , , and . The purpose of this setting was to fully investigate the performance of the controller when the initial error is more than 20 times the preset steady-state error. In addition, the performance of the two joints under positive and negative errors can be observed, and the difference between the performance of the controller under a small initial error and a large initial error can be seen.

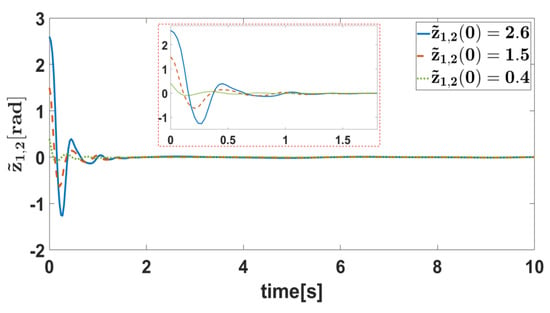

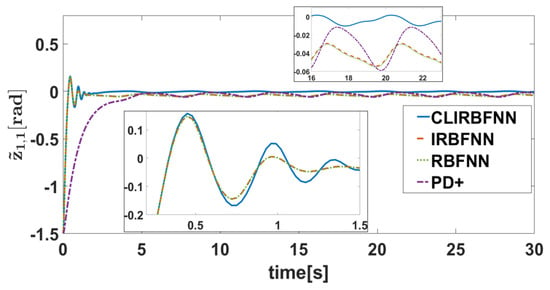

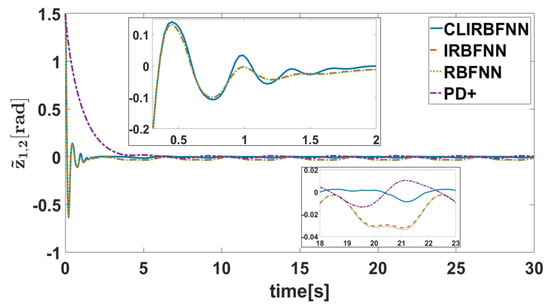

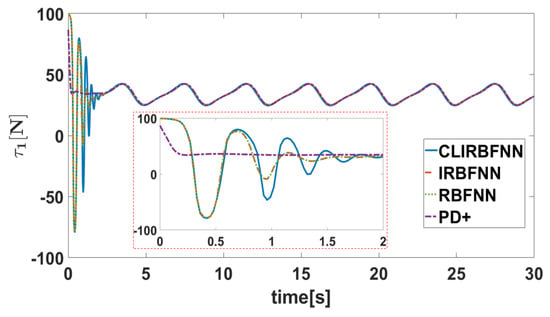

5.2. Result Analysis

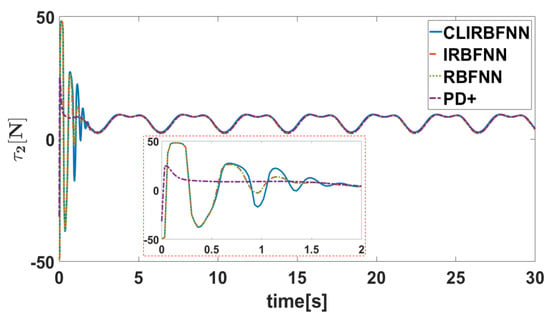

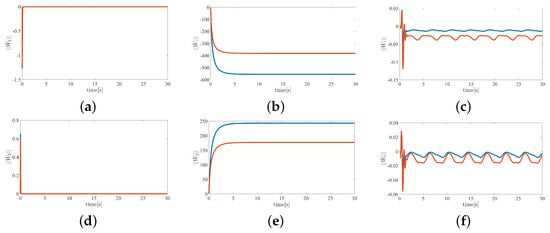

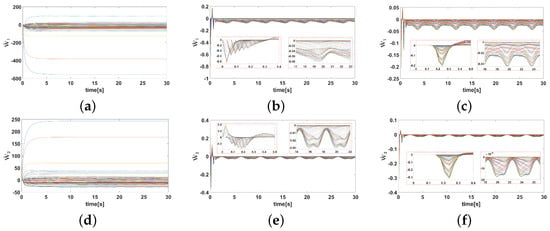

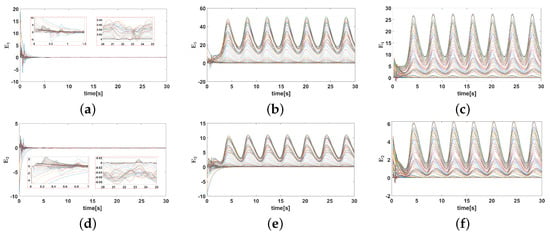

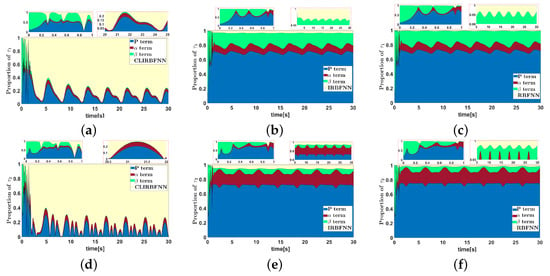

The simulation results are presented in Figure 4 through Figure 12. Figure 4 and Figure 5 show the tracking error curves under different initial conditions. It can be seen that different initial values have a certain impact on the transient response, but little impact on the steady-state error. This means that the designed fixed-time controller performs well under different initial conditions. Figure 6, Figure 7, Figure 8 and Figure 9 present the tracking errors and control torques of Joint 1 and Joint 2 under the different controllers. It can be clearly seen that the controller proposed in this paper ensures that the error converges to a smaller neighborhood than the other compared controllers. Figure 10 shows the norms of the weights; they are all bounded and converge to constants. Figure 11 shows that each weight component of the CLIRBFNN eventually converges to a constant value and that, when new nodes are added to the network, the already trained weights do not need to be retrained, which indicates that the CLIRBFNN adopted by the proposed controller has a high computational efficiency. The weight update laws of the IRBFNN and RBFNN were designed using Lyapunov stability and do not use the error term of the network, so the weights can only be guaranteed to converge to a certain equilibrium interval. In terms of amplitude, the weights of the CLIRBFNN are at least three orders of magnitude larger than those of the IRBFNN and RBFNN, which demonstrates the effectiveness of composite learning in weight training. Figure 12 shows the network estimation error for Joint 1 and Joint 2 under the CLIRBFNN, IRBFNN, and RBFNN, respectively. For the CLIRBFNN, the network estimation error of each dimension eventually converges to the smallest neighborhood near zero in a fixed time. For the IRBFNN and RBFNN, the network estimation error can only oscillate periodically in a large interval, and the oscillation period is positively related to the period of the desired trajectory.

Figure 4.

Error with different initial values.

Figure 5.

Error with different initial values.

Figure 6.

The tracking error of Joint 1.

Figure 7.

The tracking error of Joint 2.

Figure 8.

The torque of Joint 1.

Figure 9.

The torque of Joint 2.

Figure 10.

The norm of the weights for the two joints based on the CLIRBFNN, IRBFNN, and RBFNN. (a–c) show the norm of the weights for Joint 1. (d–f) show the norm of the weights for Joint 2.

Figure 11.

The weight of two joints based on the CLIRBFNN, IRBFNN, and RBFNN. (a–c) show the weights of Joint 1. (d–f) show the weights of Joint 2.

Figure 12.

The estimation error based on the CLIRBFNN, IRBFNN, and RBFNN. (a–c) show the estimation error of Joint 1. (d–f) show the estimation error of Joint 2.

In order to more quantitatively analyze the role of each control term in the control process, we analyzed the relative proportion of each control term. Blue refers to the first and second terms of in (39); red is the third term of ; green is the fourth term of ; yellow is the NN’s control term. Figure 12 depicts that, in the absence of composite learning control, i.e., the IRBFNN and RBFNN, the weights cannot be well trained, resulting in the proportion of network control terms being less than one-thousandth of the whole control process. Therefore, the approximation performance of the system is degraded, which is also the reason why the steady-state errors in Figure 6 and Figure 7 are larger than that of the proposed controller in this paper. From Figure 13a,d, it can be seen that, in the transient regulation stage, the proportion terms (blue) and fixed-time terms (red and green) account for a large proportion, which indicates that the transient regulation mainly depends on the non-network terms. In the process of steady-state regulation, the proportion of the CLIRBFNN terms exceeds of the total most of the time, and the proportion of the non-network control terms is very small, which shows that the CLIRBFNN compensates the uncertainty terms well and has excellent approximation performance.

Figure 13.

The torque ratio law under different NN control. (a–c) show the proportion of Joint 1. (d–f) show the proportion of Joint 2.

Through the above analysis, the following conclusions can be drawn: the NN fixed-time controller based on composite learning can ensure that the network weights converge even when the PE condition is not satisfied, and all error signals of the manipulator system converge to a small neighborhood of zero in a fixed time under input saturation.

6. Conclusions

In this paper, a fixed-time adaptive tracking control scheme with actuator saturation was proposed for the manipulator system under the composite learning framework, combined with the INN control scheme. A new incremental neural network adaptive algorithm based on composite learning was designed, so that the estimation error information could be properly integrated into the adaptive law, which improved the estimation performance and relaxed the PE condition. At the same time, a hyperbolic tangent function was designed to solve the problem of asymmetric input saturation, and a backstepping recursive fixed-time control scheme was employed to design the controller. In addition, the stability analysis based on the fixed-time Lyapunov theory showed that the scheme not only handles the input saturation, but also ensures the fixed-time convergence of all signals. Our future work will extend the presented scheme to a dual-arm robot and verify the proposed control approach by using the Baxter robot.

Author Contributions

Conceptualization, Y.F. and C.Y.; methodology, Y.F. and H.H.; software, Y.F. and H.H.; validation, Y.F., H.H. and C.Y.; resources, C.Y.; writing—original draft preparation, Y.F. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China (NSFC) under Grant U20A20200 and the Major Research Grant No. 92148204, in part by Guangdong Basic and Applied Basic Research Foundation under Grants 2019B1515120076 and 2020B1515120054, and in part by the Industrial Key Technologies R & D Program of Foshan under Grant 2020001006308 and Grant 2020001006496.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Li, Z.; Huang, B.; Ajoudani, A.; Yang, C.; Su, C.; Bicchi, A. Asymmetric bimanual control of dual-arm exoskeletons for human-cooperative manipulations. IEEE Trans. Robot. 2017, 34, 264–271. [Google Scholar] [CrossRef]

- Li, R.; Qiao, H. A survey of methods and strategies for high-precision robotic grasping and assembly tasks—Some new trends. IEEE/ASME Trans. Mechatron. 2019, 24, 2718–2732. [Google Scholar] [CrossRef]

- Zeng, C.; Yang, C.; Chen, Z. Bio-inspired robotic impedance adaptation for human-robot collaborative tasks. Sci. China Inf. Sci. 2020, 63, 170201. [Google Scholar] [CrossRef]

- Lewis, F.L.; Liu, K.; Yesildirek, A. Neural net robot controller with guaranteed tracking performance. IEEE Trans. Neural Netw. 1995, 6, 703–715. [Google Scholar] [CrossRef] [PubMed]

- Chen, Z.; Liu, Y.; He, W.; Qiao, H.; Ji, H. Adaptive-neural-network-based trajectory tracking control for a nonholonomic wheeled mobile robot with velocity constraints. IEEE Trans. Ind. Electron. 2020, 68, 5057–5067. [Google Scholar] [CrossRef]

- Yang, C.; Chen, C.; He, W.; Cui, R.; Li, Z. Robot learning system based on adaptive neural control and dynamic movement primitives. IEEE Trans. Neural Netw. Learn. Syst. 2018, 30, 777–787. [Google Scholar] [CrossRef]

- Zhao, K.; Song, Y. Neuroadaptive robotic control under time-varying asymmetric motion constraints: A feasibility-condition-free approach. IEEE Trans. Cybern. 2018, 50, 15–24. [Google Scholar] [CrossRef]

- Ma, Z.; Huang, P.; Kuang, Z. Fuzzy approximate learning-based sliding mode control for deploying tethered space robot. IEEE Trans. Fuzzy Syst. 2020, 29, 2739–2749. [Google Scholar] [CrossRef]

- Su, H.; Qi, W.; Chen, J.; Zhang, D. Fuzzy Approximation-based Task-Space Control of Robot Manipulators with Remote Center of Motion Constraint. IEEE Trans. Fuzzy Syst. 2022, 30, 1564–1573. [Google Scholar] [CrossRef]

- Yang, C.; Jiang, Y.; Na, J.; Li, Z.; Cheng, L.; Su, C. Finite-time convergence adaptive fuzzy control for dual-arm robot with unknown kinematics and dynamics. IEEE Trans. Fuzzy Syst. 2018, 27, 574–588. [Google Scholar] [CrossRef]

- Huang, H.; Zhang, T.; Yang, C.; Chen, C.P. Motor learning and generalization using broad learning adaptive neural control. IEEE Trans. Ind. Electron. 2019, 67, 8608–8617. [Google Scholar] [CrossRef]

- Miao, Z.; Liu, Y.; Wang, Y.; Chen, H.; Zhong, H.; Fierro, R. Consensus with persistently exciting couplings and its application to vision-based estimation. IEEE Trans. Cybern. 2019, 51, 2801–2812. [Google Scholar] [CrossRef]

- Wang, C.; Hill, D.J. Learning from neural control. IEEE Trans. Neural Netw. 2006, 17, 130–146. [Google Scholar] [CrossRef]

- Yang, C.; Teng, T.; Xu, B.; Li, Z.; Na, J.; Su, C. Global adaptive tracking control of robot manipulators using neural networks with finite-time learning convergence. Int. J. Control Autom. Syst. 2017, 15, 1916–1924. [Google Scholar] [CrossRef]

- Wang, C.; Wang, M.; Liu, T.; Hill, D.J. Learning from ISS-modular adaptive NN control of nonlinear strict-feedback systems. IEEE Trans. Neural Netw. Learn. Syst. 2012, 23, 1539–1550.

- Pan, Y.; Sun, T.; Liu, Y.; Yu, H. Composite learning from adaptive backstepping neural network control. Neural Netw. 2017, 95, 134–142.

- Guo, K.; Pan, Y.; Yu, H. Composite learning robot control with friction compensation: A neural network-based approach. IEEE Trans. Ind. Electron. 2018, 66, 7841–7851.

- Chen, M.; Ge, S.S.; Ren, B. Adaptive tracking control of uncertain MIMO nonlinear systems with input constraints. Automatica 2011, 47, 452–465.

- Bai, W.; Zhou, Q.; Li, T.; Li, H. Adaptive reinforcement learning neural network control for uncertain nonlinear system with input saturation. IEEE Trans. Cybern. 2019, 50, 3433–3443.

- Wen, C.; Zhou, J.; Liu, Z.; Su, H. Robust adaptive control of uncertain nonlinear systems in the presence of input saturation and external disturbance. IEEE Trans. Autom. Control 2011, 56, 1672–1678.

- Zhou, Q.; Wang, L.; Wu, C.; Li, H.; Du, H. Adaptive fuzzy control for nonstrict-feedback systems with input saturation and output constraint. IEEE Trans. Syst. Man Cybern. Syst. 2016, 47, 1–12.

- He, W.; Dong, Y.; Sun, C. Adaptive neural impedance control of a robotic manipulator with input saturation. IEEE Trans. Syst. Man Cybern. Syst. 2015, 46, 334–344.

- Du, J.; Hu, X.; Krstić, M.; Sun, Y. Robust dynamic positioning of ships with disturbances under input saturation. Automatica 2016, 73, 207–214.

- Li, Y.; Wang, T.; Liu, W.; Tong, S. Neural network adaptive output-feedback optimal control for active suspension systems. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 4021–4032.

- Bhat, S.P.; Bernstein, D.S. Finite-time stability of continuous autonomous systems. SIAM J. Control Optim. 2000, 38, 751–766.

- Cui, B.; Xia, Y.; Liu, K.; Shen, G. Finite-time tracking control for a class of uncertain strict-feedback nonlinear systems with state constraints: A smooth control approach. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 4920–4932.

- Wang, F.; Chen, B.; Lin, C.; Zhang, J.; Meng, X. Adaptive neural network finite-time output feedback control of quantized nonlinear systems. IEEE Trans. Cybern. 2017, 48, 1839–1848.

- Fan, Y.; Li, Y.; Tong, S. Adaptive finite-time fault-tolerant control for interconnected nonlinear systems. Int. J. Robust Nonlinear Control 2021, 31, 1564–1581.

- Lv, M.; Li, Y.; Pan, W.; Baldi, S. Finite-time fuzzy adaptive constrained tracking control for hypersonic flight vehicles with singularity-free switching. IEEE/ASME Trans. Mechatron. 2022, 27, 1594–1605.

- Polyakov, A. Nonlinear feedback design for fixed-time stabilization of linear control systems. IEEE Trans. Autom. Control 2011, 57, 2106–2110.

- Jin, X. Adaptive fixed-time control for MIMO nonlinear systems with asymmetric output constraints using universal barrier functions. IEEE Trans. Autom. Control 2018, 64, 3046–3053.

- Zhang, Y.; Wang, F. Observer-based fixed-time neural control for a class of nonlinear systems. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 2892–2902.

- Huang, C.; Liu, Z.; Chen, C.P.; Zhang, Y. Adaptive Fixed-Time Neural Control for Uncertain Nonlinear Multiagent Systems. IEEE Trans. Neural Netw. Learn. Syst. 2022, early access.

- He, W.; Kang, F.; Kong, L.; Feng, Y.; Cheng, G.; Sun, C. Vibration Control of a Constrained Two-Link Flexible Robotic Manipulator With Fixed-Time Convergence. IEEE Trans. Cybern. 2021, 52, 5973–5983.

- Huang, H.; Lu, Z.; Wang, N.; Yang, C. Fixed-time Adaptive Neural Control for Robot Manipulators with Input Saturation and Disturbance. In Proceedings of the 2022 27th International Conference on Automation and Computing (ICAC), Bristol, UK, 1–3 September 2022; p. 22136904.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).