Vibrotactile-Based Operational Guidance System for Space Science Experiments

Abstract

1. Introduction

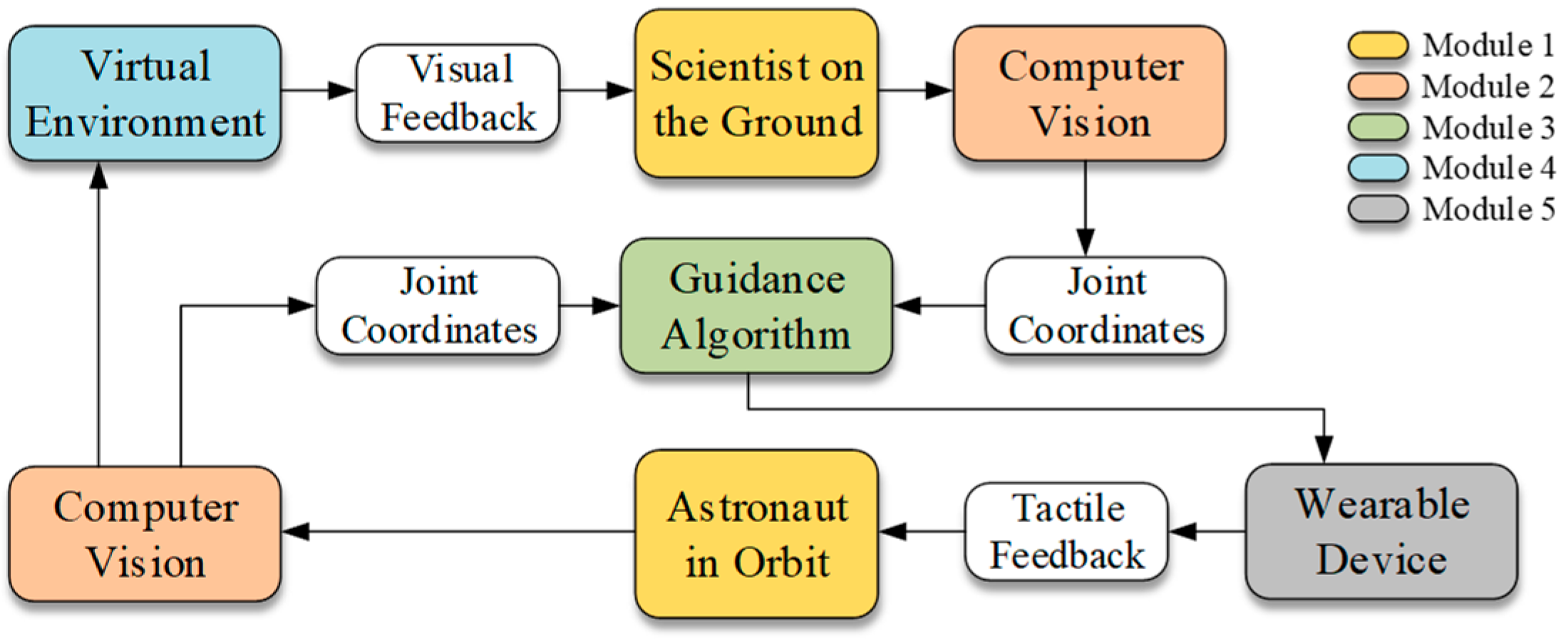

2. The Method of Operational Guidance

3. System Implementation

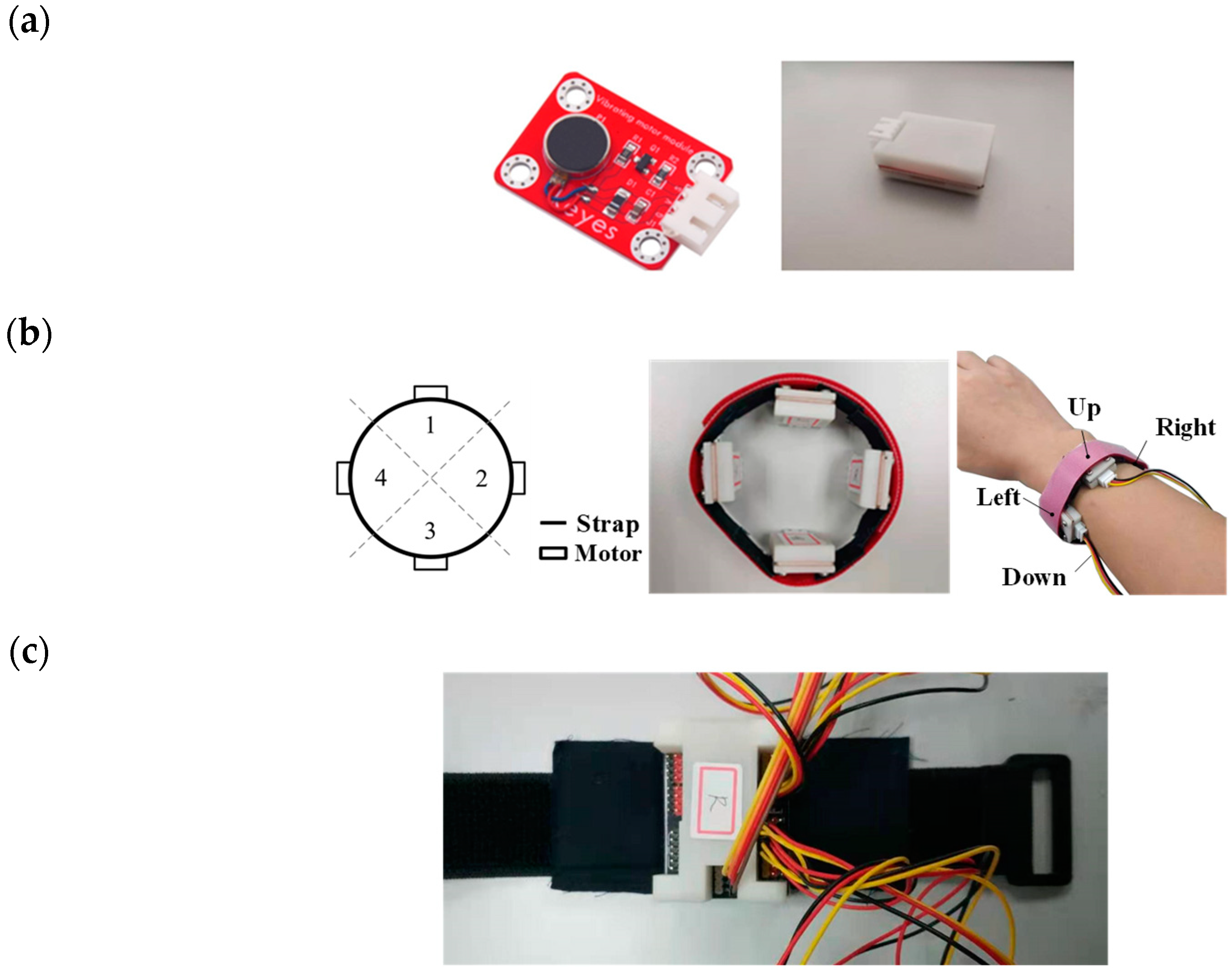

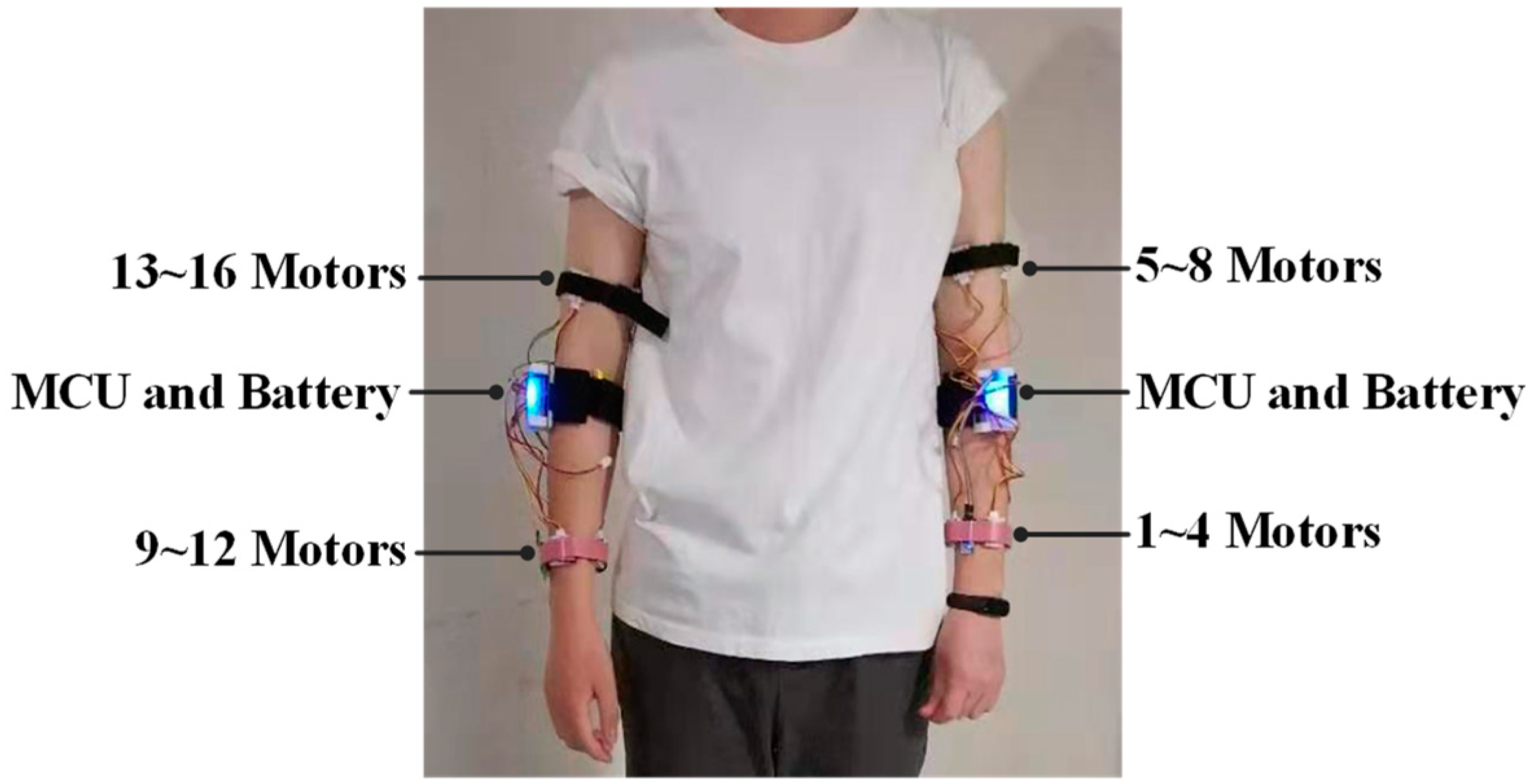

3.1. Wearable Device

3.2. Movement Capture and Data Processing

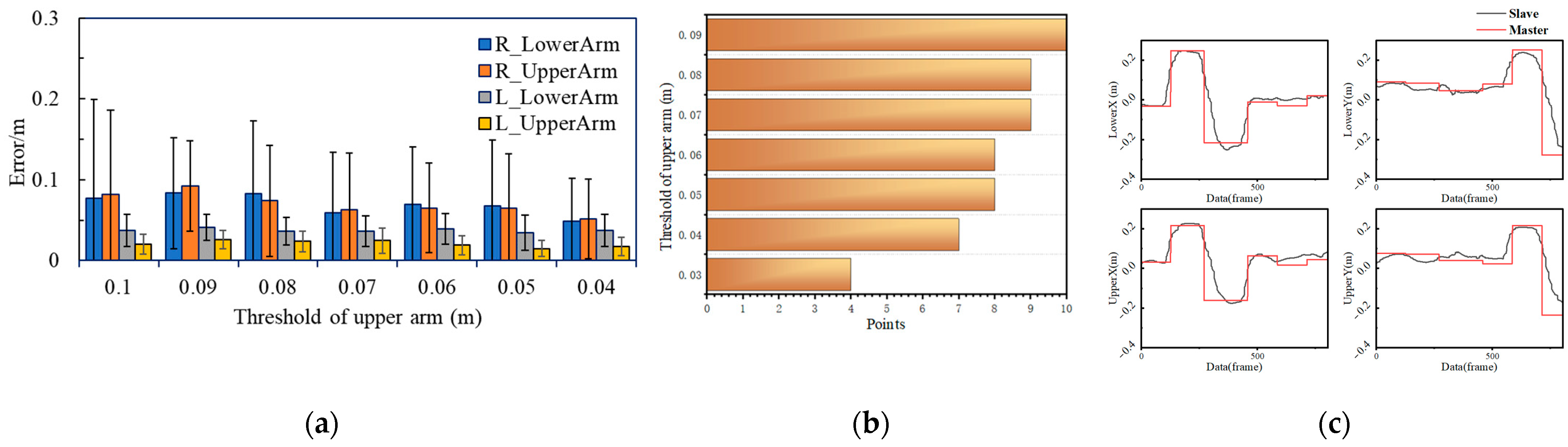

3.3. Guidance Algorithm

4. Experiments

4.1. Experiment 1: Perceptual Test

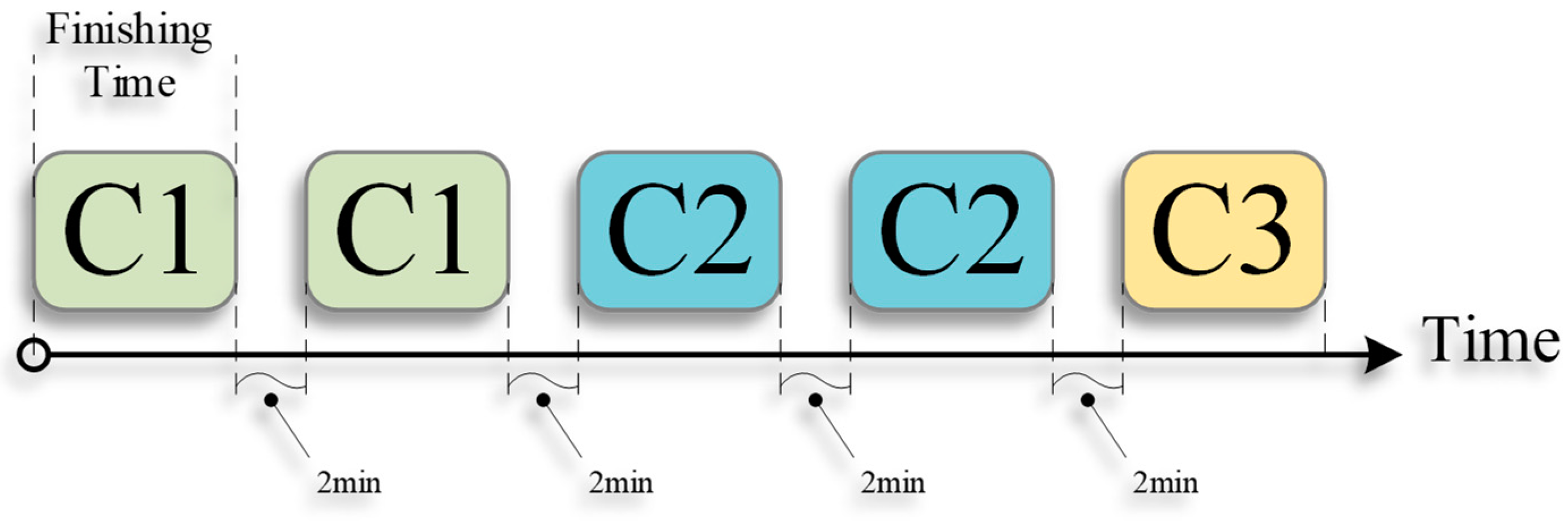

- C1: Using a single vibration source, we conducted 50 trials. For each trial, the wearable device generated a random vibrotactile stimulus;

- C2: Using two vibration sources, we conducted 50 trials, and for each trial, the wearable device randomly and simultaneously generated two vibrotactile stimuli at different positions;

- C3: Using four vibration sources, we conducted 10 trials, and for each trial, the wearable device generated four stimuli in a predetermined order.
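The three conditions differ only in how many motors fire per trial and whether their selection is random. The following is a minimal Python sketch of such a trial schedule. It assumes four motors labelled by the guidance directions (up, right, down, left) and, for C3, a single hypothetical fixed order reused in every trial; the actual C3 sequences are not given here.

```python
import random

# Hypothetical motor labels, named after the four guidance directions.
MOTORS = ["up", "right", "down", "left"]


def make_trials(condition, n_trials, order=None, rng=None):
    """Return a list of trials; each trial is a tuple of motors to activate."""
    rng = rng or random.Random()
    trials = []
    for _ in range(n_trials):
        if condition == "C1":
            # One randomly chosen motor per trial.
            trials.append((rng.choice(MOTORS),))
        elif condition == "C2":
            # Two distinct, randomly chosen motors vibrating simultaneously.
            trials.append(tuple(rng.sample(MOTORS, 2)))
        elif condition == "C3":
            # All four motors in a predetermined sequence (assumed identical per trial).
            if order is None:
                raise ValueError("C3 requires a predetermined order")
            trials.append(tuple(order))
        else:
            raise ValueError(f"unknown condition: {condition}")
    return trials


rng = random.Random(0)
c1 = make_trials("C1", 50, rng=rng)
c2 = make_trials("C2", 50, rng=rng)
c3 = make_trials("C3", 10, order=["up", "right", "down", "left"])
```

Seeding `random.Random` makes a schedule reproducible, which can help when a subject's first and second sessions are to be compared.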

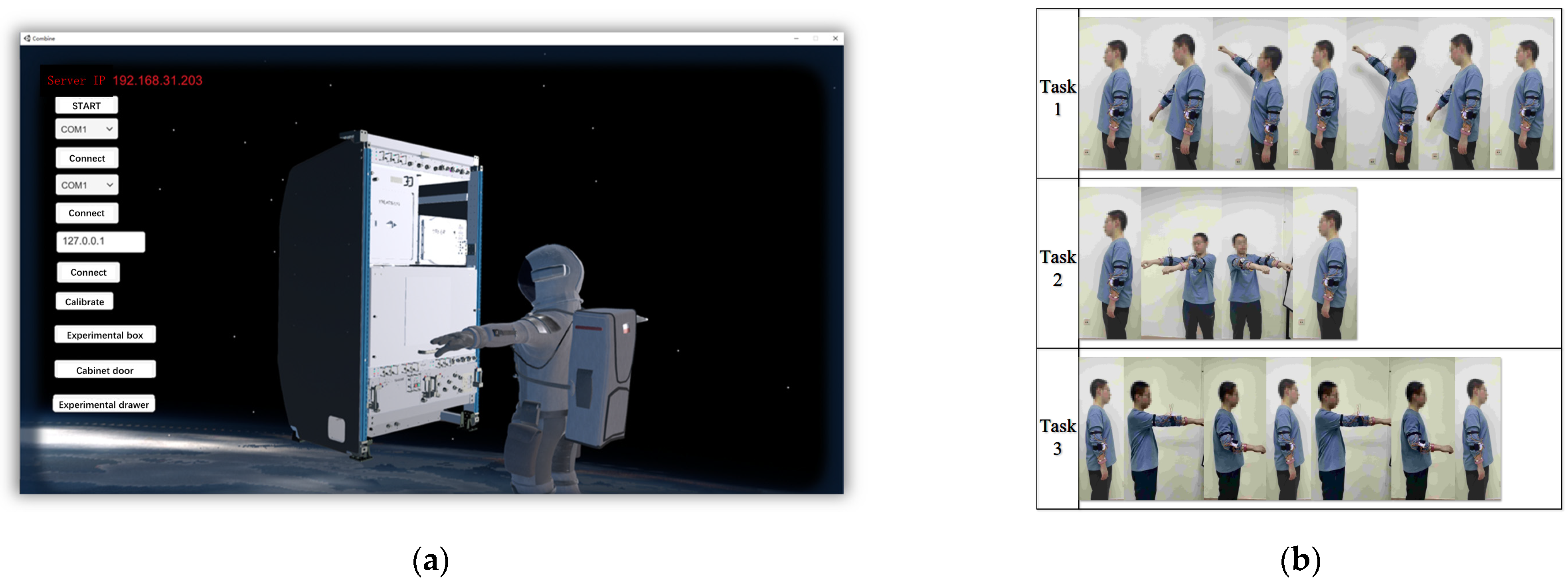

4.2. Experiment 2: Guiding Training

4.3. Experiment 3: Master–Slave Operational Guidance Experiment

5. Results and Discussion

5.1. Experiment 1: Results

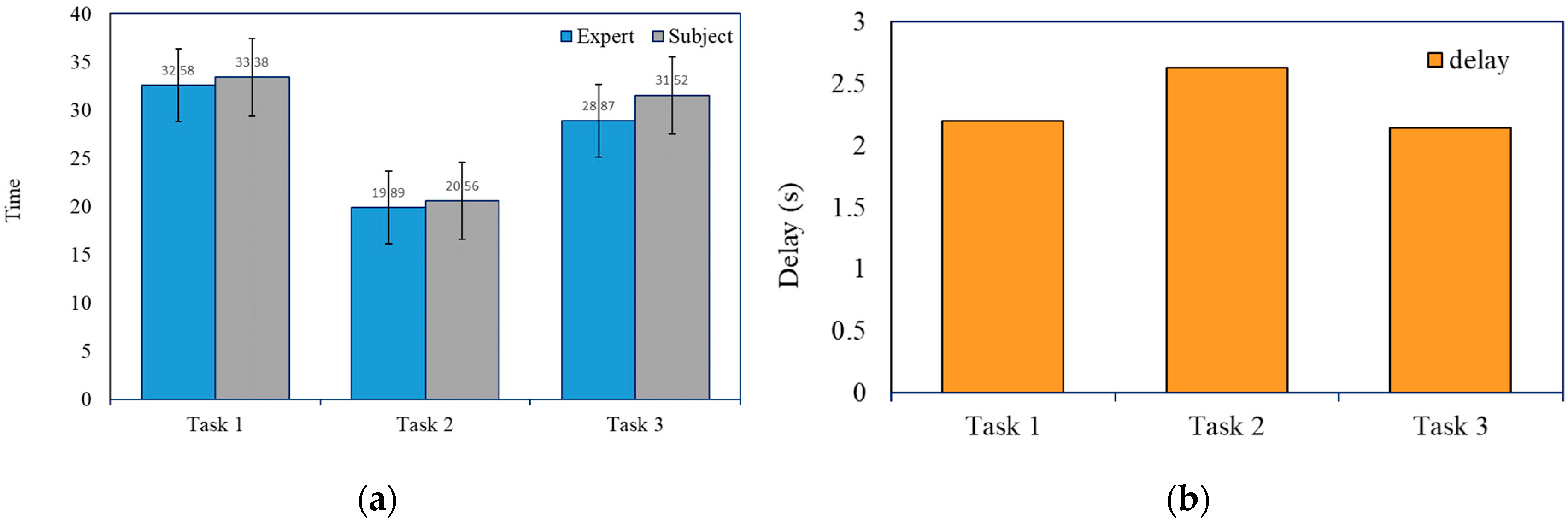

5.2. Experiment 3: Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Kinect Joint | Humanoid Joint |
|---|---|
| SpineBase | Hips |
| SpineMid | Spine |
| Neck | Neck |
| ShoulderLeft | LeftUpperArm |
| ElbowLeft | LeftLowerArm |
| WristLeft | LeftHand |
| ShoulderRight | RightUpperArm |
| ElbowRight | RightLowerArm |
| HipLeft | LeftUpperLeg |
| KneeLeft | LeftLowerLeg |
| AnkleLeft | LeftFoot |
| HipRight | RightUpperLeg |
| KneeRight | RightLowerLeg |
| AnkleRight | RightFoot |
| WristRight | RightHand |
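The joint table is a one-to-one renaming, so it maps naturally onto a lookup dictionary. A minimal sketch follows. The frame format (a dict from Kinect joint name to an `(x, y, z)` position) and the helper `retarget` are hypothetical illustrations, not the system's actual data structures.

```python
# Kinect joint name -> humanoid joint name, transcribed from the table above.
KINECT_TO_HUMANOID = {
    "SpineBase": "Hips",
    "SpineMid": "Spine",
    "Neck": "Neck",
    "ShoulderLeft": "LeftUpperArm",
    "ElbowLeft": "LeftLowerArm",
    "WristLeft": "LeftHand",
    "ShoulderRight": "RightUpperArm",
    "ElbowRight": "RightLowerArm",
    "WristRight": "RightHand",
    "HipLeft": "LeftUpperLeg",
    "KneeLeft": "LeftLowerLeg",
    "AnkleLeft": "LeftFoot",
    "HipRight": "RightUpperLeg",
    "KneeRight": "RightLowerLeg",
    "AnkleRight": "RightFoot",
}


def retarget(kinect_frame):
    """Rename one frame of Kinect joints to humanoid joint names.

    `kinect_frame` is a hypothetical dict: Kinect joint name -> (x, y, z).
    Joints with no humanoid counterpart in the table are dropped.
    """
    return {
        KINECT_TO_HUMANOID[name]: position
        for name, position in kinect_frame.items()
        if name in KINECT_TO_HUMANOID
    }


frame = {"SpineBase": (0.0, 0.9, 2.1), "Head": (0.0, 1.6, 2.1)}
print(retarget(frame))  # {'Hips': (0.0, 0.9, 2.1)}
```

Joints that the table does not list (here `Head`) are simply discarded rather than raising an error.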

| Guidance Information | Vibration | Meaning | Output Instruction |
|---|---|---|---|
| NONE | | | “−1” |
| … and points in the positive direction of the x-axis | Right motor vibrates | Move right | “002” |
| … and points in the negative direction of the x-axis | Left motor vibrates | Move left | “004” |
| … and points in the positive direction of the y-axis | Up motor vibrates | Move up | “001” |
| … and points in the negative direction of the y-axis | Down motor vibrates | Move down | “003” |
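The table fixes the output codes for each direction but does not spell out the complete selection rule. The following is a minimal sketch of one plausible encoder, under loudly stated assumptions: the guidance vector is the 2-D offset (target minus current position), a hypothetical `tolerance` dead zone yields "NONE", and the motor is chosen by whichever axis has the larger absolute component. The ASCII string "-1" stands in for the table's “−1”.

```python
import math

# Output instruction codes taken from the guidance table.
NONE, UP, RIGHT, DOWN, LEFT = "-1", "001", "002", "003", "004"


def guidance_instruction(dx, dy, tolerance=0.02):
    """Map a 2-D guidance vector to an output instruction code.

    ASSUMPTIONS (not from the paper): (dx, dy) is target minus current
    position, `tolerance` is a hypothetical dead zone, and the motor is
    selected by the axis with the larger absolute component.
    """
    if math.hypot(dx, dy) <= tolerance:
        return NONE  # close enough to the target: no vibration
    if abs(dx) >= abs(dy):
        return RIGHT if dx > 0 else LEFT  # x-axis dominates
    return UP if dy > 0 else DOWN  # y-axis dominates


print(guidance_instruction(0.10, 0.03))  # 002 (move right)
```

A dominant-axis rule keeps exactly one motor active at a time, which matches the one-motor-per-instruction structure of the table; other selection rules are equally compatible with it.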

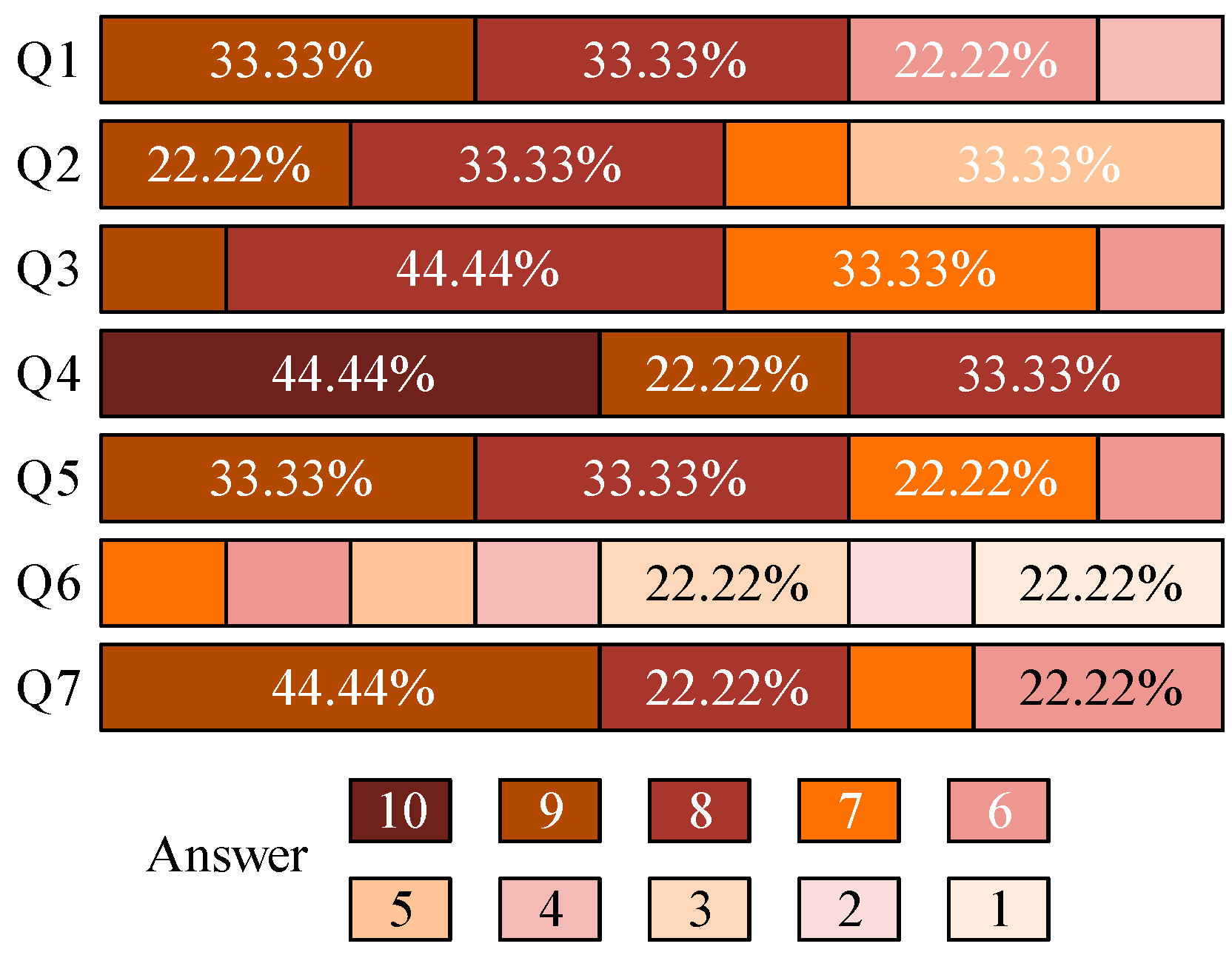

| Questions | Mean | Std. Dev. |
|---|---|---|
| Q1 I am familiar with the wearable haptic device. | 7.22 | 1.97 |
| Q2 It was easy to wear the haptic device. | 7.44 | 1.24 |
| Q3 I was feeling comfortable while wearing and using the device. | 7.56 | 0.88 |
| Q4 The intensity of the vibration does not make me feel uncomfortable. | 9.11 | 0.93 |
| Q5 It is very clear to recognize vibrations in different positions. | 7.89 | 1.05 |
| Q6 The noise from vibrating motors affects the recognition of the vibration position. | 3.56 | 2.13 |
| Q7 I can clearly understand the meaning of the wearable device’s guidance. | 7.89 | 1.27 |

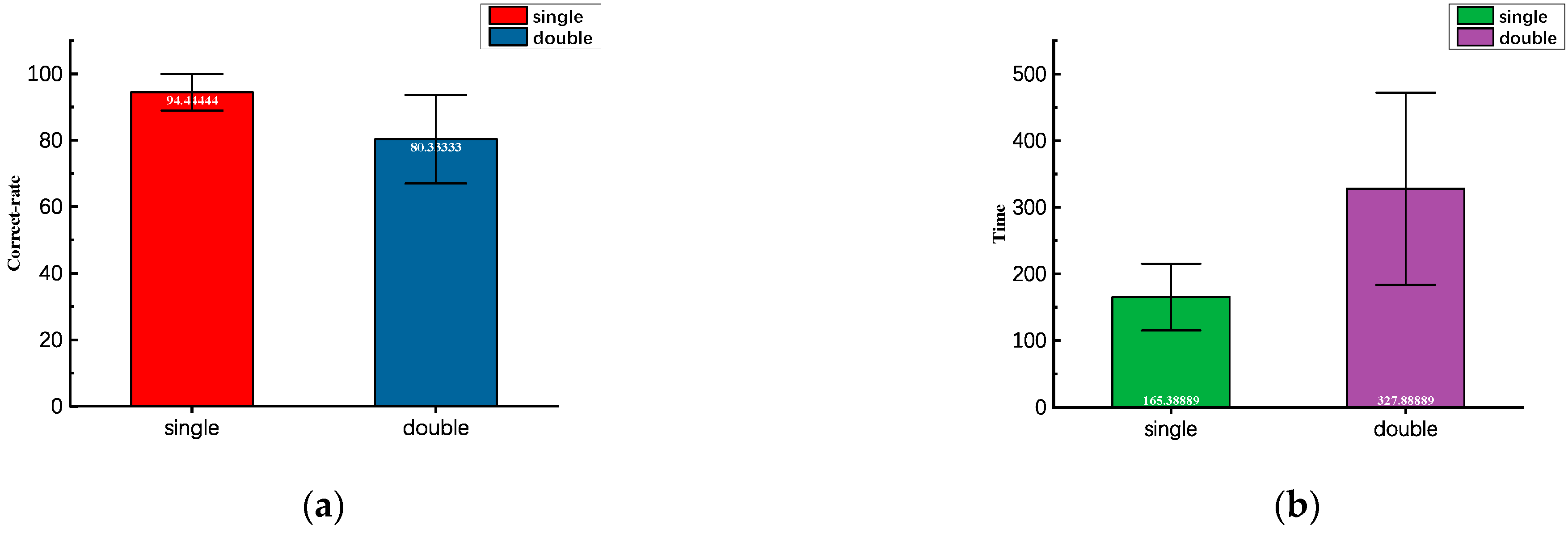

| Subject | Accuracy of C1 (First) | Accuracy of C1 (Second) | Accuracy of C2 (First) | Accuracy of C2 (Second) |
|---|---|---|---|---|
| 1 | 98% | 86% | 72% | 76% |
| 2 | 94% | 98% | 92% | 76% |
| 3 | 94% | 94% | 90% | 76% |
| 4 | 98% | 94% | 70% | 62% |
| 5 | 94% | 96% | 94% | 90% |
| 6 | 92% | 94% | 82% | 70% |
| 7 | 100% | 96% | 90% | 86% |
| 8 | 90% | 88% | 84% | 78% |
| 9 | 98% | 96% | 80% | 78% |
| Average | 95.33% | 93.56% | 83.78% | 76.89% |
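Because C1 and C2 each consist of 50 trials, every correct response is worth 2 percentage points, which is why all per-subject accuracies are even. The short sketch below, using only the values in the table, reproduces the "Average" row and recovers the number of correct responses per subject.

```python
from statistics import mean

# Per-subject accuracy (%) for subjects 1-9, copied from the table above.
accuracy = {
    "C1 first": [98, 94, 94, 98, 94, 92, 100, 90, 98],
    "C1 second": [86, 98, 94, 94, 96, 94, 96, 88, 96],
    "C2 first": [72, 92, 90, 70, 94, 82, 90, 84, 80],
    "C2 second": [76, 76, 76, 62, 90, 70, 86, 78, 78],
}

TRIALS = 50  # trials per session in C1 and C2

for session, values in accuracy.items():
    # Convert each percentage back to a count of correct responses out of 50.
    correct = [round(v * TRIALS / 100) for v in values]
    print(f"{session}: mean {mean(values):.2f}%, correct {correct}")
```

The printed means are 95.33%, 93.56%, 83.78% and 76.89%, matching the table.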

| Subject | Number of Attempts (Task 1) | Number of Attempts (Task 2) | Number of Attempts (Task 3) |
|---|---|---|---|
| 1 | 2 | 2 | 2 |
| 2 | 1 | 3 | 2 |
| 3 | 1 | 3 | 1 |
| 4 | 1 | 2 | 1 |
| 5 | 1 | 3 | 1 |
| 6 | 2 | 4 | 2 |
| 7 | 1 | 2 | 1 |
| 8 | 1 | 1 | 1 |
| 9 | 2 | 1 | 3 |
| Average | 1.33 | 2.33 | 1.56 |

| Task | Mean Number of Attempts | Std. Dev. |
|---|---|---|
| Task 1 | 1.33 | 0.50 |
| Task 2 | 2.33 | 1.00 |
| Task 3 | 1.56 | 0.73 |
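The reported standard deviations correspond to the sample standard deviation (divisor n − 1) of the per-subject attempt counts. The sketch below checks this against the per-subject table using Python's standard `statistics` module.

```python
from statistics import mean, stdev

# Number of attempts for subjects 1-9, copied from the per-subject table.
attempts = {
    "Task 1": [2, 1, 1, 1, 1, 2, 1, 1, 2],
    "Task 2": [2, 3, 3, 2, 3, 4, 2, 1, 1],
    "Task 3": [2, 2, 1, 1, 1, 2, 1, 1, 3],
}

for task, counts in attempts.items():
    # stdev() divides by n - 1 (sample standard deviation);
    # pstdev() would divide by n and give smaller values.
    print(f"{task}: mean {mean(counts):.2f}, std dev {stdev(counts):.2f}")

# Task 1: mean 1.33, std dev 0.50
# Task 2: mean 2.33, std dev 1.00
# Task 3: mean 1.56, std dev 0.73
```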


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Yu, G.; Liu, G.-Y.; Huang, C.; Wang, Y.-H. Vibrotactile-Based Operational Guidance System for Space Science Experiments. Actuators 2021, 10, 229. https://doi.org/10.3390/act10090229
