Abstract

This paper begins with a refutation of the orthodox model of final Pleistocene human evolution, presenting an alternative, better supported account of this crucial phase. According to this version, the transition from robust to gracile humans during that period is attributable to selective breeding rather than natural selection, rendered possible by the exponential rise of culturally guided volitional choices. The rapid human neotenization coincides with the development of numerous somatic and neural detriments and pathologies. Uniformitarian reasoning based on ontogenic homology suggests that the cognitive abilities of hominins are consistently underrated in the unstable orthodoxies of Pleistocene archaeology. A scientifically guided review establishes developmental trajectories defining recent changes in the human genome and its expressions, which then form the basis of attempts to extrapolate from them into the future. It is suggested that continuing and perhaps accelerating unfavorable genetic changes to the human species, rather than existential threats such as massive disasters, pandemics, or astrophysical events, may become the ultimate peril of humanity.

1. Introduction

Among the most profound questions humans can ask themselves is the issue of humanity’s future: what is to become of our species? For centuries this subject has been at the core of many enquiries, most of which seem to have been framed with specific predictions in mind, and these were often concerned with aspects of technology. In more recent times, especially over the course of the second half of the 20th century, rising environmental concerns prompted a gradual increase in the preoccupation with the ecological sustainability of humanity. With the early 21st century, this anxiety is taking on a new urgency, as society’s unease with humanly caused climate change and several other threats to the environment is becoming universal. Some of these issues have been investigated and modeled in countless scientific studies, and are the subject of ongoing work by numerous think-tanks around the world.

Efforts to predict the course of humanity over the longer term have been considerably less prominent, even though this would clearly be just as important. Nevertheless, there have been many such initiatives, and some of their main protagonists are contributing to this special issue of Humanities. Most of these studies deal with the effects of advances in technology and science, while the perhaps more fundamental aspects of the human future receive somewhat less attention: concerns with exponentially increasing population size; whether the human species will evolve into another; whether life on planet Earth will become extinct; or whether artificial intelligence will surpass biological intelligence. As Bostrom [1] poignantly observes, “it is relatively rare for humanity’s future to be taken seriously as a subject matter on which it is important to try to have factually correct beliefs.” He notes that, apart from climate change, “national and international security, economic development, nuclear waste disposal, biodiversity, natural resource conservation, population policy, and scientific and technological research funding are examples of policy areas that involve long time-horizons.”

But how does one acquire factually correct beliefs about the future, and is it even possible to do so? As it happens, there are numerous aspects of the future, including the quite distant future, about which we can formulate perfectly plausible predictions. We can be fairly certain that two million years from now, Pioneer 10, which was launched in 1972, will pass near Aldebaran, some 69 light-years away. We can be fairly certain that in 50 million years, the northward drift of Africa and Australia will have connected them with the landmasses of Europe and South-East Asia respectively, just as California will have slid up the coast to Alaska. These and many other certainties are available to us because they can be deduced from known trajectories that, in the greater context, are not expected to change in any significant way. Numerous aspects of humanity’s future are also determined by fairly well understood uniformitarian trajectories. For instance, any survey of the technological development of hominins shows that, overall, there has been an exponential increase in the complexity and sophistication of technology. From a level perhaps similar to that of today’s chimpanzees some 5 to 7 million years ago, there was only minimal change over the first half of that period. From the first appearance of formal stone implements it took another couple of million years to reach the perfectionist template of the Acheulian handaxe. A million years ago hominins mastered seafaring colonization of several islands [2] and began to produce exograms (external memory records of ideas, symbols). Although the pace of development quickened throughout, it needs to be remembered that societies remained excruciatingly conservative for most of their remaining histories.
Even toward the end of the Ice Ages, a millennium’s progress seemed insignificant, yet the last millennium of human history delivered the species from the Middle Ages to the “Space Age.” Moreover, the last century, for much of mankind, involved almost as many technological changes as the previous millennium, a fair indication of the exponential nature of the process. Today this phenomenon is clear for anyone to observe from one decade to the next. Unless one were to expect that there would be a permanent abatement of this development it would need to be assumed that technological and scientific progress will continue at a breathtaking rate, and at an exponentially increasing rate. The technological future of humanity, therefore, appears to be fairly predictable, in the overall sense.

However, technology alone cannot define the future of the species; several other factors are at least as important. For instance the extinction risk and the existential risk both override the technological aspects. A number of authors have offered rather pessimistic estimates and scenarios of the probability of humanity surviving another century, or a few centuries [3,4,5,6]. Replacement of the present human species by another, obviously, would be extinction, but not an existential risk; whereas the establishment of a permanent global tyranny would be an existential disaster, although not an extinction [1]. Typical existential risks arising from natural conditions are major volcanic eruptions (leading to massive environmental degradation), pandemics, astrophysical events destructive to life, and major meteor or asteroid impact. Bostrom [7] rates the existential risks from anthropogenic causes as more important, specifically those “from present or anticipated future technological developments. Destructive uses of advanced molecular nanotechnology, designer pathogens, future nuclear arms races, high-energy physics experiments, and self-enhancing AI with an ill-conceived goal system are among the worrisome prospects that could cause the human world to end in a bang.”

Bostrom singles out those potential developments that would enable humans to alter their biology through technological means [8,9]. Controlling the biochemical processes of aging could dramatically prolong human life; drugs and neurotechnologies could be used to modify people and their behavior [10]. The development of ultraintelligence is often invoked in this context, although the prediction that it would occur in the 20th century [11] has not materialized. Less vague is the notion of “whole brain emulation” or “uploading”, which refers to the technology of transferring a human mind to a computer [12]. The idea is to create a highly detailed scan of a human brain, such as by feeding vitrified brain tissue into powerful microscopes for automatic slicing and scanning. Automatic image processing would then be applied to the scanned data to reconstruct a complete model of the brain, mapping the different types of neurons and their entire network. Next, the whole computational structure would be emulated on an appropriately powerful computer, hopefully reproducing the original “mind” qualitatively. Memory and personality intact, the mind would then exist as software on the computer, and it could inhabit a robotic machine or simply exist in virtual reality.

However, as the main proponents of this idea note, “it remains unclear how much information about synaptic strength and neuromodulator type can be inferred from pure geometry at a given level of resolution” ([12], p. 40). Whether the technology of “nanodisassembly” [13,14,15,16] is feasible or not, this process would only result in a dead brain at best. Neural connections are being forged in their millions per second, and every second the brain is changing at a molecular level. Taking an instantaneous snapshot of the dead whole brain is completely nonsensical from that point of view alone, in that one would emulate only a static state. Moreover, the brain is not a separate entity; it is connected to numerous other parts of the body (e.g., retina, other sensory recorders, spinal cord, proprioceptors), being simply the main part of an entire neural system. For instance, the neural circuits contained in the spinal cord can independently control numerous reflexes and central pattern generators. The brain functions as part of that body, storing myriad information about the body and its past experiences (Figure 1). To effectively emulate this one would have to determine at the molecular level the structure of every neuron and support cell in the brain, their connections, data transmission rates, etc., and replicate this in electronic form. The most promising approach would be to scan the target brain from birth onwards, with some implanted device, recording all brain activity of every neuron and support cell in real time, and then replay the entire experience into the artificial brain. Next one would have to embed that artificial brain in an artificial body cloned from the original. None of this inspires much confidence that this will ever be achieved, or even attempted; in the end it seems like a rather pointless exercise.

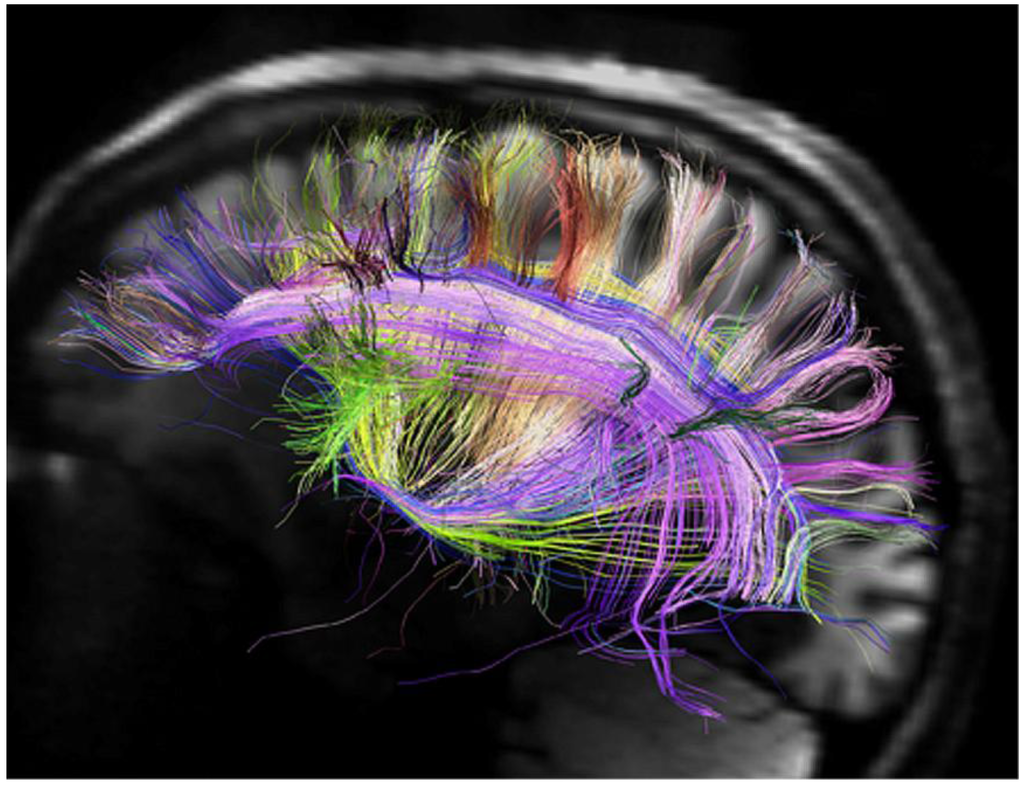

Figure 1. Brain signals traffic along white matter fibers in the left hemisphere as recorded by diffusion magnetic resonance imaging (Courtesy of MGH-UCLA Human Connectome Project).

As can be seen from this example, some of the futuristic scenarios contemplated are perhaps science-fiction rather than science, while others can be regarded as soundly based, or as deserving serious consideration. In this paper the principle of realistic trajectories will be employed to flesh out some key predictions about the human future. The emphasis will be on first creating a sound empirical base defining such trajectories about the human past, from which viable considerations of the future should evolve quite naturally and logically.

2. The Human Ascent

However, this is where the difficulties begin. The principal disciplines providing information about previous states of humans and human societies, Pleistocene archaeology and paleoanthropology, are not sciences (i.e., not based on falsification and testability) and have an error-prone history. Archaeology has strenuously spurned every major innovation since the Pleistocene antiquity of humanity was rejected by it from the 1830s to the late 1850s. This includes the subsequent reports of fossil humans, of Ice Age cave art, of Homo erectus, of australopithecines, and of practically every major methodological improvement proposed ever since. It took three to four decades to accept the existence of the “Neanderthals”, of the erectines, the australopithecines, just as it took that long to unmask “Piltdown man.” On present indications it will take as long to establish the status of the Flores “hobbits,” one of many examples indicating that neither Pleistocene (and Pliocene) archaeology nor paleoanthropology has learned much from their long lists of blunders [17]. This pattern of denial and much later grudging acceptance of any major innovation has not only continued to the present time, it has even intensified in recent decades. Faddish interpretations dominate the disciplines and distort academic perceptions of the hominin past in much the same measure as they did more than a century ago. Clearly this is not a good starting point from which to establish trajectories of human development, be they cultural, technological, or cognitive. Before this is realistically possible, these disciplines need to be purged of their current falsities.

Some of the most consequential fallacies concern the model of “cultural evolution” archaeology provides. The notion of such an evolution is itself flawed, because evolution, as a biological concept, is an entirely dysteleological process; it has no ultimate purpose and it is not a development toward increased complexity. The concept of cultural evolution, however, involves the teleologically guided assumption of progress toward greater sophistication—ultimately, in the archaeological mind, resulting in that glorious crown of evolution, Homo sapiens sapiens. This fantasy (the modern human is a neotenous form of ape, susceptible to countless neuropathologies, as will be shown below) implies that archaeology is guided by a species-centric delusion of grandeur. Moreover, its definition of culture is itself erroneous, being based on invented tool types (in the Pleistocene usually of stone implements). Culture, obviously, is not defined by tools or technologies, but by cultural factors. Some of these are available from very early periods, but archaeology has categorically excluded them from delineating the cultures it perceives. Indeed, when it does consider cultural elements such as undated rock art it strenuously tries to insert them into its invented cultures based on stone tools, rather than try to create a cultural history from them. Archaeology goes even further in its obsessive taxonomization by then assuming that these imagined cultures were the work of specific human societies. 
So for instance certain combinations of invented tool types found in discrete layers of sediments are called the “Aurignacian culture,” and this imaginary culture is seen as the signature of a people called the “Aurignacians.” Although archaeologists lack any significant knowledge of who these imaginary Aurignacians were [18,19,20], they regard these as real, identifiable entities, when in fact there is not one iota of evidence that all the people that produced the tools in question were in any way related, be it ethnically, linguistically, genetically, politically, or even culturally.

This is a fair indication of the misinformation Pleistocene archaeology has inflicted on modern society, and it is largely attributable to the complete lack of internal falsifiability of the discipline. Many other examples could be cited, but one that is of particular relevance in the context of properly understanding the human past relates to a major archaeological fad of recent decades. The replacement hypothesis, termed the “African Eve” model by the media, derives from an academic fraud begun in the 1970s [21,22,23,24,25,26], which by the late 1980s suddenly gained almost universal acceptance and has since been the de facto dogma of the discipline, especially in the Anglo-American sphere of influence [27,28,29,30,31,32]. This unlikely hypothesis proposes that all extant humans derive from a small population (indeed, from one single female) at an unspecified location in sub-Saharan Africa that miraculously became unable to interbreed with all other hominins. They then expanded across Africa, then to the Middle East, and colonized all of Eurasia, wiping out all other people in their wake. Reaching South-East Asia they promptly invented seafaring to sail for Australia.

This origins myth is contradicted by so much empirical evidence one must question the academic competence of its protagonists. Based originally on false claims about numerous fossils and their ages, it then resorted to genetics, misusing numerous genetic findings to underpin its demographically and archaeologically naive reasoning. The computer modeling of Cann et al. [27] was botched and its haplotype trees were fantasies that could not be provided with time depth even if they were real. Based on 136 extant mitochondrial DNA samples, it arbitrarily selected one of 10^267 alternative and equally credible haplotype trees (far more than the number of elementary particles in the entire universe, about 10^70!). Maddison [33] then demonstrated that a re-analysis of the Cann et al. model could produce 10,000 haplotype trees that were actually more parsimonious than the single one chosen by these authors. Yet no method could guarantee that the most parsimonious tree should even be expected to be the correct one [34]. Cann et al. had also mis-estimated the diversity per nucleotide (single locus on a string of DNA), incorrectly using the method developed by Ewens [35] and thereby falsely claiming greater genetic diversity of Africans, compared to Asians and Europeans (they are in fact very similar: 0.0046 for both Africans and Asians, and 0.0044 for Europeans). Even the premise of genetic diversity is false: for instance, it is greater in African farming people than in African hunters-foragers [36], yet the latter are not assumed to be ancestral to the former (see e.g., [37]). Cann et al.’s assumption of exclusive maternal transference of mitochondria was also false, and the constancy of mutation rates of mtDNA was similarly a myth [38,39]. As Gibbons [40] noted, by using the modified putative genetic clock, Eve would not have lived 200,000 years ago, as Cann et al. had claimed, but only 6,000 years ago.
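The size of the tree space cited above can be checked with a short calculation. The sketch below is illustrative only and rests on an assumption not stated in the text: that the figure refers to the standard combinatorial count of distinct unrooted, fully bifurcating trees on n labeled samples, which is the double factorial (2n − 5)!!. The function names are hypothetical. Under that assumption, 136 samples give a count on the order of 10^267, far above the roughly 10^70 quoted for the number of elementary particles.

```python
def unrooted_tree_count(n_taxa: int) -> int:
    """Count distinct unrooted, fully bifurcating trees on n labeled tips.

    Uses the standard result (2n - 5)!! = 3 * 5 * ... * (2n - 5).
    Assumption: this is the kind of count the quoted figure refers to.
    """
    count = 1
    # Multiply the odd factors 3, 5, ..., 2n - 5 (empty product for n <= 3).
    for k in range(3, 2 * n_taxa - 4, 2):
        count *= k
    return count


def order_of_magnitude(x: int) -> int:
    """Return the power of ten of a positive integer (number of digits minus 1)."""
    return len(str(x)) - 1


trees = unrooted_tree_count(136)           # 136 mtDNA samples, as in the text
print("trees ~ 10^%d" % order_of_magnitude(trees))
print("exceeds 10^70:", trees > 10**70)
```

If rooted trees were meant instead, the count would be (2n − 3)!!, which for 136 samples is larger by a factor of 269; the comparison with 10^70 holds either way.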
The various genetic hypotheses about the origins of “Moderns” that have appeared over the past few decades placed the hypothetical split between these and other humans at times ranging from 17,000 to 889,000 years bp. They are all contingent upon purported models of human demography, but these and the timing or number of colonization events are practically fictional: there are no sound data available for most of these variables. This applies to the contentions concerning mitochondrial DNA (“African Eve”) as much as to those citing Y-chromosomes (“African Adam” [41]). The divergence times projected from the diversity found in nuclear DNA, mtDNA, and DNA on the non-recombining part of the Y-chromosome differ so much that a time regression of any type is extremely problematic. Contamination of mtDNA with paternal DNA has been demonstrated in extant species [42,43,44,45], in one recorded case amounting to 90% [46]. Interestingly, when this same “genetic clock” is applied to the dog and implies its split from the wolf occurred 135,000 years ago, archaeologists reject it because there is no paleontological evidence for dogs prior to about 15,000 years ago ([47], but see [48]). The issues of base substitution [49] and fragmentation of DNA [50] have long been known, and the point is demonstrated, for instance, by the erroneous results obtained from the DNA of insects embedded in amber [51]. Other problems with interpreting or conducting analyses of paleogenetic materials are alterations or distortions through the adsorption of DNA by a mineral matrix, its chemical rearrangement, microbial or lysosomal enzymes degradation, and lesions by free radicals and oxidation [52,53].

Since 1987 the genetic distances in nuclear DNA (the distances created by allele frequencies) proposed by different researchers or research teams have produced conflicting results [54,55,56,57], and some geneticists concede that the models rest on untested assumptions; others even oppose them [31,55,57,58,59,60,61,62,63]. The key claim of the replacement theory (the “Eve” model), that the “Neanderthals” were genetically so different from the “Moderns” that the two were separate species, has been under severe strain since Gutierrez et al. [64] demonstrated that the pair-wise genetic distance distributions of the two human groups overlap more than claimed, if the high substitution rate variation observed in the mitochondrial D-loop region [65,66,67] and lack of an estimation of the parameters of the nucleotide substitution model are taken into account. The more reliable genetic studies of living humans have shown that both Europeans and Africans have retained significant alleles from multiple populations of Robusts ([68,69]; cf. [63]). After the Neanderthal genome yielded results that seemed to include an excess of Gracile single nucleotide polymorphisms [70], more recent analyses confirmed that “Neanderthal” genes persist in recent Europeans, Asians, and even Papuans [71]. “Neanderthals” are said to have interbred with the ancestors of Europeans and Asians, but not with those of Africans ([72]; cf. [73]). The African alleles occur at a frequency averaging only 13% in non-Africans, whereas those of other regions match the Neanderthaloids in ten of twelve cases. “Neanderthal genetic difference to [modern] humans must therefore be interpreted within the context of human diversity” ([70], p. 334). This suggests that gracile Europeans and Asians evolved largely from local robust populations, and the replacement model has thus been decisively refuted. 
While this may surprise those who subscribed to Protsch’s “African hoax,” it had long been obvious from previously available evidence. For instance Alan Mann’s finding that tooth enamel cellular traits showed a close link between Neanderthaloids and present Europeans, which both differ from those of Africans [74], had been ignored by the Eve protagonists, as has much other empirical evidence (e.g., [75,76]). In response to the initial refutations of the Eve model, Cann [77] made no attempt to argue against the alternative proposals of long-term, multiregional evolution.

But faulty genetics are only one aspect of the significant shortcomings of the replacement model; it also lacks any supporting archaeological, paleoanthropological, technological or cultural evidence [17,18,20,78,79,80,81,82]. Nothing suggests that Upper Paleolithic culture or technology originated in sub-Saharan Africa, or that such traditions moved north through Africa into Eurasia. The early traditions of Mode 4 (“Upper Paleolithic”) technocomplexes evolved in all cases in situ, and the Graciles of Australia, Asia, and Europe emerged locally from Robusts, as they did in Africa. By the end of the Middle Pleistocene, 135,000 years ago, all habitable regions of the Old World continents can be safely assumed to have been occupied by hominins. At that time, even extremely inhospitable parts, such as the Arctic [83,84,85], were inhabited by highly adapted Robusts. Therefore the notion that African immigrants from the tropics could have displaced these with their identical technologies is demographically absurd. Wherever robust and gracile populations coexisted, from the Iberian Peninsula to Australia, they shared technologies, cultures, even ornaments. Moreover, the established resident populations in many climatic regions would have genetically swamped any intrusive population bringing with it a much smaller number of adaptive alleles. Introgressive hybridization [86], allele drift based on generational mating site distance [87], and genetic drift [88] through episodic genetic isolation during climatically unfavorable events (e.g., the Campanian Ignimbrite event, or the Heinrich Event 4 [89,90,91,92]) account for the mosaic of hominin forms found.

Mode 4 technocomplexes [93] first appear across Eurasia between 45,000 and 40,000 years ago, perhaps even earlier [94], at which time they existed neither in Africa nor in Australia. In fact right across northern Africa, Mode 3 traditions continued for more than twenty millennia, which renders it rather difficult to explain how Eve’s progeny managed to cross this zone without leaving a trace. None of the many tool traditions of the early Mode 4 which archaeologists have “identified” across Europe have any precursors to the south [17]. Some of these “cultures” have provided skeletal human remains of Robusts, including “Neanderthals” [32,95,96,97,98], but there are no unambiguous associations between “anatomically modern human” remains (Graciles) and “early Upper Paleolithic” assemblages [99]. This is another massive blow to the replacement proponents, who, having fallen victim to Protsch’s hoax, relied on the unassailability of their belief that some of these traditions, especially the Aurignacian, were made by Graciles. Moreover, these “cultures”, as they are called, are merely etic constructs, “observer-relative or institutional facts” [100]; as “archaeofacts” they have no real, emic existence, as noted above. They are entirely made up of invented (etic) tool types and based on the fundamental misunderstanding of Pleistocene archaeology that tools are diagnostic for identifying cultures.

Pleistocene archaeology as conducted is thus incapable of providing a cultural history, as it relegates the cultural information available (such as rock art) to marginal rather than central status, forcing it into the false technological framework it has created. Instead of beginning with a chronological skeleton of paleoart traditions and then placing tool assemblages into it, invented tool types forming invented cultures of invented ethnic and even genetic groups form the temporal backbone of the academic narrative. The result is a collection of origins myths for human groups, nations, and for “modern humans” generally. It is of little relevance to considering the human ascent, which calls for a far more credible approach. This applies most specifically if the purpose is to establish various trajectories of human development in order to extrapolate from them to the future.

The most central issue is clearly the origin of human modernity [82]: at what point in hominin history can it be detected with reasonable reliability, and how did it come about? The replacement model offers a simplistic answer: modern human behavior was introduced together with the advent of “anatomically modern” humans, which in Europe is thought to date from between 40,000 and 30,000 years ago. Unfortunately the issue is not as simple as that. First of all, no humans of the past were either anatomically or cognitively fully modern [101]. Archaeological notions of modernity, expressed for instance in the semi-naturalistic paleoart first appearing with the “Aurignacian,” are therefore false, irrespective of how modernity is defined. Conflating the literate minds of modern Westerners with the oral minds that inhabited the human past, which “cognitive archaeology” does without realizing it, is the result of one of the many epistemological impairments of orthodox archaeology. Even people of the Middle Ages existed in realities profoundly different from those experienced today, as do many extant peoples. For instance, the general introduction of writing in recent centuries has dramatically changed the brain of adult humans: although they start out as infants with brains similar to those of non-literate peoples, these brains are gradually reorganized as demanded by the thinking implicit in literacy, which is totally different from the thought patterns found in oral societies [102]. The use of all symbol systems (be they computer languages, conventions for diagrams, styles of painting) influences perception and thought [103]. Cultural activity modifies the chemistry and structure of the brain through affecting the flow of neurotransmitters and hormones [104] and the quantity of gray matter [105,106,107].

“Modern behavior” does not refer to the behavior of modern Westerners, or to that of any other extant human group. It is defined by the state of the neural structures that are involved in moderating behavioral patterns, which ultimately are determined by inhibitory and excitatory stimuli in the brain. So the question is, what types of evidence would suggest that these neural structures had been established, and at what time does such evidence first occur? When archaeologists refer to human modernity they tend to cite a list of cultural or technological variables, such as projectile weapons, blade tools, bone artifacts, hafting (composite artifacts), “elaborate” fire use, clothing, exploitation of marine resources and large game, even seasonality in the exploitation of resources. This implies that they are not adequately informed about archaeological evidence, because these and other forms of proof cited in this context are available from much earlier periods, including the “Lower Paleolithic.” Similarly, paleoart of various forms can be traced back to the same period [18,79,81,108]; in fact most of the surviving rock art of the Pleistocene is not even of the “Upper Paleolithic,” but of “Middle Paleolithic” (Mode 3) industries [109]. Furthermore, if the cave art and portable paleoart of the “Aurignacian” and other early “Upper Paleolithic” traditions is the work of “Neanderthals” or intermediate humans, as the available record tends to suggest [19], the entire case for conflating perceived anatomical modernity with purported cognitive modernity collapses.

Preferred indicators that the human brain had acquired the kind of structures that underwrite behavior and cognition today are exograms, signs that hominins were capable of storing symbolic information outside their brains. Middle Pleistocene examples of exograms have been classified into beads, petroglyphs, portable engravings, proto-sculptures, pigments and manuports [79,108]. Most of these classes of evidence offer no utilitarian explanations whatsoever. They first appear on the archaeological record with Mode 1 and Mode 2 technocomplexes (handaxe-free and handaxe Lower Paleolithic tool traditions), i.e., at about the same time as the first evidence of pelagic crossings, up to one million years ago [2]. Seafaring colonization, according to the data currently available, began in what is today Indonesia, where the presence of thriving human populations during Middle Pleistocene times has so far been demonstrated on Flores, Timor, and Roti. None of these and other Wallacean islands has ever been connected to either the Asian or the Australian landmass. Viable island populations can only be established by deliberate travel of significant numbers of people providing an adequate gene pool, and none of the sea narrows can be crossed without propellant power. Not only does this necessitate the use of watercraft, almost certainly in the form of bamboo rafts, such missions demanded a certain level of forward planning and the use of effective communication. Although communication is possible by various means, it seems unlikely that maritime colonization is possible without an appropriate form of “reflective” language [2,110].

This provides an important anchor point for a realistic timeline of growing human competence in volitionally driven behavior, one of the quintessential aspects of humanness. Modernity in human behavior had begun, in the sense that the same neural structures and processes that determine this quality today were essentially in place. If this occurred around a million years ago, as appears to be the case, then the archaeological beliefs are hopelessly mired in falsehoods. If, on the other hand, only fully modern behavior qualifies for modernity, then it arose only in recent centuries, and it does not apply to illiterates or to extant traditional societies, or even to non-Western societies. It then becomes such a narrow definition that it is useless as a marker of human development. Either way orthodox archaeology got it completely wrong.

Clearly, then, the current paradigm of Pleistocene archaeology is not a basis on which to expound predictions of the kind to be attempted here. For this it is essential to begin by first purging the record of elements introduced to support dogmas, at the same time considering empirical evidence that has been neglected. Some of the underlying features of hominin evolution have not been adequately factored into the narratives of mainstream archaeology. For instance, hominins have been subjected to relentless encephalization for the entire duration of their existence. This astonishing growth of one organ, unprecedented on this planet, has come at massive costs for the species. Not only is the brain the most energy-hungry part of the body, its enlargement has rendered it essential that infants be born at an increasingly earlier fetal stage. They remain helpless for several years, needing to be carried and reducing the mothers’ reproduction rates dramatically. The large brain of the human fetus is a huge cost to the mother, the band, and to the species as a whole. And yet archaeologists believe that for 98% of their existence, humans barely used these brains in any meaningful way. In a biological sense this is most unlikely, and it is not the only flawed conviction in Pleistocene archaeology. For instance, archaeologists and paleoanthropologists have invented numerous explanations for why hominins adopted upright walk, waxing lyrical about the benefits of this adaptation. They never asked the obvious: if it is so advantageous, why did other primates fail to adopt it? The same, of course, applies to encephalization or any other development endemic to humans. Similarly, it has not been asked why it was essential for human fetuses to have such large brains; why could the brain not have grown so large after birth, as so many other organs or body parts do in countless species? The answer, apparently, is that the brain has a limited ability to expand.

If the enlargement of the brain through evolutionary time is taken as an approximate indicator of the cognitive and intellectual complexity of the brain, which is a realistic working hypothesis, all of archaeology’s beliefs about non-somatic development become redundant. Given that natural selection can only select expressed characteristics, not latent ones, the indices Pleistocene archaeology fields in its speculations about behavior, cognition, or even technology are inevitably flawed. For instance, there is no reason to exclude from consideration the notion that upright walk was not selected for, but was imposed on human ancestors. This possibility was never even contemplated, and yet it is obvious that hominins have undergone massive neotenization, and that the human foot closely resembles that of a fetal chimpanzee [17,20,111,112]. Therefore it would be more logical to assume that upright walk was facilitated by somatic changes to the foot and became an “expressed characteristic” available for selection. Indeed, the obvious influence neotenization has had in the development of hominins [99] is never considered by the gatekeepers of the human past. And yet it is among the most important factors of humanization, second only to encephalization.

And then there is the strange fact that, after up to three million years of encephalization, it suddenly ceased, and the human brain began to shrink much more rapidly than it had previously expanded. Archaeology and paleoanthropology offer no explanations for this sudden reversal of a process that had become the hallmark of human evolution. In fact this is largely ignored by these fields, and the same applies to the other significant reductions in human fitness. As the brain shrank by about 13% in the course of the last few tens of millennia, skeletal robusticity also declined rapidly, especially that of the cranium, mastoid equipment, and the skull generally. Not only that, sheer physical strength, as indicated by muscle attachments, waned profoundly. And all that has been offered as an explanation is a story about a new species from Africa that was so superior in every way that it outcompeted or exterminated all other humans across the globe.

Similarly, the most dramatic neurological developments in hominin history have remained completely ignored by both archaeology and paleoanthropology, which has resulted in an unbridgeable chasm between these gatekeepers and the sciences. For instance the greatest conundrum of neuroscience is its inability to explain why natural selection has not suppressed the thousands of neuropathologies, genetic disorders and neurodegenerative conditions afflicting modern humans [112]. Within the replacement hoax, which explains the change from Robusts to Graciles by a combination of natural selection and genetic drift [88], the Keller and Miller paradox is indeed unsolvable. That should already have prompted caution, because the sciences are inevitably much better equipped to deal with these issues. Unfortunately, in matters of human evolution, the sciences have relied too much on the myths of the gatekeepers of the human past, but once this paradigmatic obstacle is overcome the uptake of the alternative explanation should swiftly induce a better scientific understanding of the human ascent.

3. Archaeology vs. Science

The rough outline of such an improved understanding is easily sketched out. Hominins evolved initially during the Pliocene period, which lasted from 5.2 to 1.7 million years (Ma) ago. Earlier contenders for human ancestry such as Sahelanthropus tchadensis (7 Ma) and Orrorin tugenensis (6 Ma) are known, but it is the Pliocene’s australopithecines that are more plausibly thought to include a human ancestor. The position of Ardipithecus ramidus and Ardipithecus kadabba (4.4 Ma) remains controversial. The gracile australopithecines (4.2 Ma to 2.0 Ma) were certainly bipedal, and at least some of them (Australopithecus garhi) used stone tools to butcher animal remains. Bearing in mind the common use of tools by modern chimpanzees [112,113] and Brazilian capuchin monkeys it is realistic to credit the australopithecines and Kenyanthropus platyops (3.5 to 3.3 Ma) with better tool-making skills than these extant primates. But what level of cognitive ability might be attributable to them?

Homology can provide some preliminary indications from reviewing ontogenic development. It is roughly at the age of forty months that the human child surpasses the ToM (theory of mind) level of the great apes. Thus the executive control over cognition unique to humans, together with metarepresentation and recursion, would be expected to have developed during the last 5 or 6 million years. Although the brain areas accounting for the latter two faculties remain unidentified, executive control resides in the frontal lobes. Since the frontal and temporal areas have experienced the greatest degree of enlargement in humans [114,115], uniquely human abilities would be expected to be most likely found there, although inter-connectivity rather than discrete loci may be the main driving force of cognitive evolution. It is precisely the expansion of association cortices that has made the human brain disproportionately large. Intentional behavior can be detected by infants 5–9 months old [116], while at 15 months infants can classify actions according to their goals [117]. The same abilities are available to chimpanzees and orang-utans [118], but apparently not to monkeys [119]. Between 18 and 24 months, the child establishes joint attention [120], as well as engages in pretend-play, and it develops an ability to understand desires [121,122,123]. Again, apes use gaze monitoring to detect joint attention [124], but monkeys apparently do not. It is with the appearance of “metarepresentation,” the ability to explicitly represent representations as representations [125,126,127], and with recursion that developed human ToM emerges, as these are lacking in the great apes [128,129]. Similarly, the apes have so far provided no evidence of episodic memory or future planning [130]. Episodic memory, which is identified with autonoetic consciousness, can be impaired in humans, e.g., in amnesia, Asperger’s syndrome, or in older adults [131]. 
Imaging studies of episodic retrieval have shown that it can be attributed to differential activity in the medial prefrontal and medial parietal cortices [132].

Theory of mind defines the ability of any animal to attribute mental states to itself and others, and to understand that conspecifics have beliefs, desires, and intentions, and that these may be different from one’s own [17,133,134,135,136,137,138,139,140,141]. Each organism can only prove the existence of its own “mind” through introspection, and has no direct access to others’ “minds.” Although ToM is present in numerous species, at greatly differing levels, it has perhaps attracted most attention in the study of two groups, children and great apes, and of the level at which they conceive of mental activity in others, attribute intentions to them, and predict their behavior [118]. It is thought to be largely the observation of behavior that can prompt a ToM. The discovery of mirror neurons in macaques [142,143] has provided much impetus in the exploration of how a ToM is formed [144,145]. Mirror neurons are activated both when specific actions are executed and when identical actions are observed, providing a neural mechanism for the common coding between perception and action (but see [146]). One of the competing models to explain ToM, simulation theory [147,148,149], is said to derive much support from the mirror neurons, although it precedes their discovery by a decade. These neurons are seen as the mechanism by which individuals simulate others in order to better understand them. However, mirror neurons have so far not been shown to produce actual behavior [150]. Motor command neurons in the prefrontal complex send out signals that orchestrate body movements, but some of them, the mirror neurons, also fire when merely watching another individual (not necessarily a conspecific) perform a similar act. It appears that the visual input prompts a “virtual reality” simulation of the other individual’s actions. However, ToM and “simulation,” though related, may have different phylogenic histories [151,152]. 
Ramachandran [153] has speculated about the roles of mirror neurons in cognitive evolution [154], in empathy, imitation [155], and language acquisition [156].

Since it is safe to assume that relatively advanced forms of ToM were available to all hominins and hominids, it may be preferable to turn to consciousness and self-awareness for a better resolution in defining their cognitive status. Consciousness focuses attention on the organism’s environment, merely processing incoming external stimuli [157,158], whereas self-awareness focuses on the self, processing both private and “public” information about selfhood. The capacity of being the object of one’s own attention defines self-awareness, in which the individual is a reflective observer of its internal milieu and experiences its own mental events [159,160,161]. What is regarded as the “self” is inherently a social construct [162], shaped by the individual’s culture and immediate conspecifics [163]. But the self is not the same as consciousness [164], as shown by the observation that many attributes seen as inherent in the self are not available to conscious scrutiny. People invent the neurological computation of the boundaries of personhood from their own behavior and from the narratives they form, which also determine their future behavior. Thus it needs to be established how the chain of events from sensory input is established and how behavior is initiated, controlled and produced [165,166,167,168]. It appears that subcortical white matter, brainstem and thalamus are implicated in consciousness [169,170], although the latter is not believed to drive consciousness. Ultimately consciousness is self-referential awareness, the self’s sense of its own existence, which may explain why its etiology remains unsolved [171].

Self-awareness, ToM, and episodic memory derive from the default mode network, which is considered to be a functionally homogeneous system [172]. Conscious self-awareness, the sentience of one’s own knowledge, attitudes, opinions, and existence, is even less understood and accounted for ontologically than ToM. It may derive primarily from a neural network of the prefrontal, posterior temporal, and inferior parietal areas of the right hemisphere ([173,174,175]; but see critiques in [176,177,178]). In humans, a diminished state of self-awareness occurs for instance in dementia, sleep, or when focusing upon strong stimuli [179]. Basic awareness of the self is determined by the mirror test ([180,181,182,183,184,185,186]; but see [187,188,189] for critical reviews). Some of the great apes, the elephants and bottlenose dolphins are among the species that have passed the mirror test, and interestingly they are much the same species shown to possess von Economo neurons [190,191,192], which seem to be limited to large mammals with sophisticated social systems [17]. As in ToM, various levels apply, probably correlated with the social complexity of the species concerned. It is difficult to see how such systems could have developed much beyond those of social insects without some level of self-awareness. Self-awareness is the result of an interplay of numerous variables, ranging from the proprioceptors to distal-type bimodal neurons (moderating anticipation and execution [193]), and the engagement of several brain regions.

Like ToM, self-awareness can safely be attributed to all hominoids and hominins, and there is a reasonable expectation that it became progressively more established with time. Archaeologically, self-awareness can be demonstrated by body adornment, but unfortunately most forms of such behavior (body painting, tattoos, cicatrices) leave no archaeological evidence. Beads and pendants, however, may in rare circumstances survive taphonomy. An incipient form of body decoration may have been observed by McGrew and Marchant ([194]; cf. [195,196]) in 1996. Following the killing and eating of a red colobus monkey by a group of chimpanzees, a young adult female was observed wearing the skin strip draped around her neck, tied in a single overhand knot as if forming a simple necklace. Perhaps the young adult female sought to enhance her appearance and/or status by adorning herself with the remains of a highly valued kill; or perhaps it was just a mistaken observation or an accidental occurrence. However, with a much enhanced self-awareness of the early hominins it should be expected that more pronounced cultural expressions of such behavior would be found in the late Pliocene. Yet the first beads on the archaeological record appear only during the Middle Pleistocene [79,108,197,198,199], and even these are met with dogmatic rejection by most archaeologists. From a biological perspective it is rather surprising that such artifacts appear so late on the available record (during the Middle Acheulian technological traditions [198]). As in so many other issues, biological, empirical and scientific perceptions clash irreconcilably with the unstable orthodoxies of Pleistocene archaeology [2,17,18,19,20,79,80,81,82,99].

The first report of early beads dates from the initial demonstration that humans lived in the Pleistocene [200], which was categorically rejected by archaeology for decades. These beads remained almost ignored for over 150 years [198], until some 325 Cretaceous fossils of Porosphaera globularis collected at Acheulian sites in France and England were shown to have been modified. Many of them show extensive wear facets where they rubbed against other beads while worn on a string for long periods of time. Other Lower Paleolithic beads had in the meantime been recovered from Repolust Cave, Austria [201]; from Gesher Benot Ya’aqov, Israel [202]; and from El Greifa site E, Libya [203,204]. The latter case involves more than 40 disc beads carefully crafted from ostrich eggshell, and yet advocates of the replacement hoax have sought to discredit the case of Acheulian beads [205,206]. For instance, they claimed that a perforated wolf’s tooth must be the result of animal chewing, but omitted to clarify why similarly perforated teeth of the Upper Paleolithic were not caused by this. These double standards are routinely applied; for instance, any tubular bone fragment with regularly spaced, circular holes from an Upper Paleolithic deposit is inevitably presented as a flute, but when an identical object, with a two-and-a-half-octave compass that extends to over three octaves by over-blowing, is recovered from a final Mousterian layer [207,208], it is explained away as the result of carnivore chewing [209]. The purpose is simply to preserve the dogma, according to which the Mousterian “Neanderthals” were primitive and incapable of such cultural sophistication. 
Archaeologists fail to appreciate that such finds as beads should be expected from hominins that must be assumed to have developed an advanced level of self-awareness, that most beads of the Pleistocene would have been made from perishable materials, and that even most of those that were not have either not survived or not been recovered. Recalling that the magnificent cave art of the Aurignacian [210] and the delicate carvings of Vogelherd and other Swabian sites [211,212] must be the work of these same final “Neanderthals,” the full absurdity of regressive opinions such as those concerning the Mousterian flute becomes apparent.

Beads and cupules (hemispherical cup marks on rock), which are among the exograms that managed to survive from the Middle Pleistocene, derive their only significance from their cultural context. They are social constructions, which, as Plotkin [213] reminds us, means that they cannot exist in a single mind. These invented qualities (for that is what they represent) have been turned into reality by culture. A string of beads around the neck of a person may signify certain “memes” to people sharing that person’s social construct or culture; it may well evoke a quite different response in people not sharing that reality; but it means absolutely nothing to any other animal. However, the symbolic roles of beads and pendants extend beyond the semiotic message encoded by cultural convention; they also present a “readable” message. They are even proof of a quest for perfection, and in fact their excellence of execution is part of their message; they are aspects of a “costly display” strategy [214,215,216].

Another benchmark in establishing the cognitive complexity of hominins, also completely ignored by mainstream archaeology, is maritime colonization, although it demonstrates a whole raft of human capacities. The gracile australopithecines apparently evolved into robust forms, now subsumed under the genus Paranthropus. P. robustus is credited with using both “advanced” tools and fire, which interestingly has been explained away as evidence of imitation of human behavior, an unlikely and awkward explanation encountered elsewhere in hominin history. The two other Paranthropus “species” currently distinguished are P. boisei (2.3–1.4 Ma) and P. aethiopicus (2.7–2.3 Ma). They co-existed with fully human species, Homo habilis (2.3–1.6 Ma), H. rudolfensis (2.5–1.9 Ma), and H. ergaster (1.8–1.3 Ma). The latter and H. erectus were the first to develop templates for stone implements, especially handaxes, and they colonized much of Asia. By around one million years ago, a coastal H. erectus population in Java, which together with Bali was then part of the Asian continent, had developed seafaring ability to the point of being able to colonize Lombok from Bali, eventually expanding further eastward into the islands of Wallacea. Via Sumbawa they reached Flores [217,218], establishing a thriving colony there by 840,000 years ago at the latest, eventually crossing Ombai Strait to also occupy Timor and Roti [2,110]. During the Middle Pleistocene, at least two or three Mediterranean islands were also reached by hominins: Sardinia [110,219], Crete [2,220,221,222,223], and presumably Corsica. It is also possible that Europe was first occupied via the Strait of Gibraltar rather than from the east [224].

Maritime colonization involves a number of prerequisites. The technological minimum requirements have been established through a series of experiments begun in 1997 and are now well understood [2,17,80,110,218,224,225]. More important, here, are the cognitive and cultural criteria essential for these quests. Reflective language capable of displacement [226] can be realistically assumed to have been in use at that stage, as can the effective use of language to convey abstract concepts and refer to future conditions. The successful operation of a collective consciousness (sensu Émile Durkheim) can be assumed, as well as an advanced ToM. To be successful (i.e., to result in archaeologically detectable evidence), seafaring colonization also demands a certain minimum level of social organization and the capacity for long-term forward planning. Just as the author’s First Mariners project has determined minimal technological conditions for successful crossings of the sea by Lower Paleolithic means, the minimum cognitive or linguistic requirements for such quests could also be established within a falsifiable format. In contrast to the déformation professionnelle governing archaeological scenarios, this exercise is strictly scientific and readily testable.

Many other examples show that scientific approaches to the course of the human ascent are possible, and all of them seem to lead in the same direction: this ascent was gradual, over the course of several million years. Communication ability, social complexity, development of ToM and self-awareness, and cultural sophistication can all safely be assumed to have been functions of the gradual growth of the frontal, temporal, and parietal areas of the brain [115]. The apparent reason for the extreme position of Pleistocene archaeologists, believing in a sudden explosion of all these and other developments at the very end of the time span involved, is perhaps a subliminal conviction that the greatest possible intellectual, cognitive, and cultural distance should be maintained between “us” and those savage ancestors, because it is that distance that seemingly justifies the existence of archaeology as well as certain religious beliefs. There is simply no better explanation for this fervent belief in the primitiveness of all humans up to the final Pleistocene, or for the vehement rejections of each and every reasonable contention contradicting that belief. A second reason is that most archaeologists still do not understand taphonomic logic, which decrees that there must be a “taphonomic threshold” for every phenomenon category in archaeology, and that it must always be later (in most cases very much later) than the first appearance of the category in question [227]. This impediment to understanding archaeological evidence, which increases in severity linearly with the age of the evidence, remains the single most effective reason for misinterpreting archaeology. There even seems to be a culture within Pleistocene archaeology of deliberately restraining excessive ideas about hominin advancement, almost as if to leave adequate scope for future generations of researchers to progressively offer sensational revelations. 
This extreme conservatism or obscurantism is the antithesis of the realistic search for a balanced representation of what might really have happened in the human past that is advocated here.

4. Reviewing Trajectories

The issue is really quite simple. At the time the hominin lineage split from that resulting in the great apes, the human ancestor can safely be assumed to have possessed cognitive and intellectual abilities matching theirs, roughly those of a child of almost forty months. Since then the expansion of the frontal insular cortex, dorsolateral prefrontal cortex, anterior cingulate cortex, temporal and parietal lobes, limbic system, and basal ganglia accounts for the uniquely developed hominin cognition and intellect, which must be assumed to have increased at roughly the same rate as encephalization occurred. Otherwise they would not have been selected for. Ontogenic homology provides a rough guide for the development to be expected; for instance, metarepresentation, recursion, and basic language skills need to be attributed to the first representatives of Homo. By the time Homo erectus appeared, the means and volition of colonizing cold climate regions (skilled fire use and probably use of animal furs) had become possible. One million years ago, the complex means of seafaring colonization had become accessible, and culture had developed to such intricacy that the use of exograms to store memory externally became established. The structures and functions of the human brain had become essentially modern. Any archaeological explanation that cannot accommodate these fundamentals is obliged to provide clearly stated justifications, because it is at odds with a credible predictive outline provided by the sciences, including paleophysiology and linguistics [226,228,229,230,231]. Absence of perceived archaeological evidence of any phenomenon category is not evidence of absence; it is simply a reflection of a contingent state of an embryonic discipline, an “underdeveloped discipline” [232]. 
Pleistocene archaeology is in no position to attempt finite determination of any past human states and disallow any contradictory evidence just because it conflicts with its entirely provisional notions of the past, which are merely unstable orthodoxies. The discipline’s history demonstrates this amply. Moreover, it possesses no knowledge whatsoever about the no doubt more sedentary and more developed societies occupying the world’s coastal regions, because the vast sea level fluctuations of the Pleistocene have obliterated all traces of them. Therefore the premature narratives of archaeological explanations refer purely to the more mobile hunters of the hinterland that followed the herds, while the achievements of the Lower Paleolithic seafarers seem inconceivable to this mode of thinking.

Not only does the expansion of the brain areas just listed account for human cognition and intellect, but brain illnesses also predominantly affect these very same areas [17,20,233]. The phenomenal rise of these and thousands of other genetically based disorders and syndromes that has accompanied the most recent development of humans can be explained by the ability of final Pleistocene and Holocene people to suppress natural selection by replacing it with sexual selection and domestication [99]. Thus the future of humanity can no longer be determined by the traditional processes of nature. The effects of the process of human domestication first become evident on the archaeological record between 40,000 and 20,000 years ago, with a distinctive acceleration of the formerly gradual neotenization, expressed as rapid gracilization of robust populations in four continents [17,19,20,83,99]. This gracilization has continued to the present time: 10,000 years ago humans were significantly more robust than today (as expressed by numerous indices), 20,000 years ago they were twice as robust, and so on. This is the first of a number of trajectories of human development that, given the right data, can be applied to extrapolating into the future.

Domestication by sexual selection in favor of neotenous features has had numerous effects in recent human history. The obvious somatic changes are a rapid loss of skeletal robusticity, thinning of the cranium, and reduction of physical strength and of cranial volume. As noted above, these are typical features of domestication. So is the abolition of estrus, which has often accompanied the domestication of various mammals, and which of course has been strongly established in humans. Even exclusive homosexuality, which, as Miller [214] poignantly observes, is biologically unexplainable in a sexually reproducing species, may need to be attributed to domestication. Humanity has actively contributed to self-domestication, at least in Holocene times, by systematically eradicating the genetic traits of “noncompliant” individuals. Those who were independently minded, especially gifted or enterprising, and those who were recalcitrant or rebellious have for millennia been selectively persecuted, culled, exiled, or burned at the stake. The cumulative effect of the systematic selection of compliant personality traits that the human species has practiced at least throughout known history is akin to the domesticating selection of compliant traits in other animals, the obvious exception being animals specifically bred for fighting (bulls, bulldogs, roosters). During known history, latent and sometimes real caste systems of various types have been developed and refined, which, as Bickerton [226] notes, resemble those of social insects. For the participatory organisms it may be difficult to detect these readily, but class systems, systems of academic apartheid, professionalism, rigid economic affiliations, religious pigeonholes, political allegiances and so forth, even sporting loyalties, can all resemble castes: in some sense pre-ordained adherences. Present-day humans may see these as reassuring, as signs of belonging, and may find certain forms of them quite advantageous. 
They may also perceive the modern welfare state as expedient, even though most members of such societies would be unable to subsist without the continued support of these structures. Reference to this dependency is not intended as a form of criticism; it is merely a clarification of the state of modern society in order to consider the direction of the human genome. Just as domestication has had a profound effect on it, these other currents may do so also; in the present context they therefore need to be appreciated.

In considering the continued neotenization of humans, which is not assumed to discontinue any time soon, it is important to be aware that this process occurs entirely outside of natural selection; it is determined culturally. Although initially selection of neotenous features was apparently limited to the females [99], it prompted universal genetic changes, and a female preference for neotenous males appears to be developing (consider the Bollywood phenomenon). In the most recent history, a cult of youth has arisen, and in the Western world, young people are increasingly shunning the responsibilities of adult life and parenthood. It would of course be premature to attribute these short-term trends to a long-range change; it is more likely a temporary social phenomenon, but in combination with other recent developments it does deserve attention.

Of particular importance are the rapid changes in the neuropathological and neurodegenerative domains. During most of human history, determined as it was by environment and selective pressures, neuropsychiatric disorders could not establish themselves effectively. In other primates they are practically absent [115,234,235,236,237,238,239]. The lack of social and survival skills inherent in these conditions selected strongly against them, socially as well as genetically, and genetic evidence for them is lacking in hominins up to the final Pleistocene. This is the case even though they tend to be polygenic; therefore single genes would not even prove their existence. For instance, the schizophrenia susceptibility genes identified (NRG1, NRG3, DTNBP1, COMT, CHRNA-7, SLC6A4, IMPA2, HOPA12bp, DISC1, TCF4, MBP, MOBP, NCAM1, NRCAM, NDUFV2, RAB18, ADCYAP1, BDNF, CNR1, DRD2, GAD1, GRIA1, GRIA4, GRIN2B, HTR2A, RELN, SNAP-25, TNIK, HSPA1B, ALDH1A1, ANK3, CD9, CPLX2, FABP7, GABRB3, GNB1L, GRMS, GSN, HINT1, KALRN, KIF2A, NR4A2, PDE4B, PRKCA, RGS4, SLC1A2, SYN2; e.g., [240,241,242,243,244,245]) individually have small or non-detrimental effects. Similarly, the genetic basis of bipolar disorder, although unresolved, involves many regions of interest identified in linkage studies, such as chromosome 18, 4p16, 12q23-q24, 16p13, 21q22 and Xq24-q26 [246,247,248], and the genes DRD4, SYNJ1 and MAOA, which have so far been implicated [242,249,250,251,252,253]. Again, the illness is clearly polygenic. Nevertheless, when the absence of such schizophrenia susceptibility alleles as NRG3 is demonstrated in ancestral robust humans, it confirms the suspected absence of the condition in these populations [254]. Selective sweeps tend to yield relatively recent etiologies, of less than 20,000 years, for all neuropathologies. Indeed, schizophrenia has been suggested to be of very recent etiology [115] and may have appeared only a few centuries ago [255]. 
Numerous deleterious conditions were derived from recent neoteny, including cleidocranial dysplasia, i.e., delayed closure of cranial sutures, malformed clavicles, and dental abnormalities (genes RUNX2 and CBFA1 refer); type 2 diabetes (gene THADA); the microcephalin D allele, introduced in the final Pleistocene [256]; and the ASPM allele, another contributor to microcephaly, which appeared around 5,800 years ago [257].

A further example of the recent advent of detrimental genes is that of CADPS2 and AUTS2, involved in autism, which are absent in robust humans, i.e., those predating 28,000 years in Europe. The human brain condition autistic spectrum disorder (ASD) [115,233,258,259,260,261,262,263,264,265,266,267,268,269] also seems to have become notably more prominent in recent centuries. Most recently ASD has developed into a very common illness, reported to be affecting one in 5,000 children in 1975, one in 150 by 2002, one in 110 in 2006 [270], and one in 88 U.S. children in 2008. Moreover, these figures very probably underestimate autism’s U.S. prevalence, because they rely on school and medical record reviews rather than in-person screening. A more thorough study conducted on a large population of South Korean children found recently that one in 38 had autism spectrum disorder [271]. It has been emphasized that the epidemic increase in these diagnoses cannot be entirely attributed to changing diagnostic criteria [272].
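
The reported prevalence figures are easier to compare when expressed on a common scale. The following minimal sketch, offered only as an illustration, converts the “one in N” figures quoted above into cases per 10,000 children and into multiples of the 1975 figure. The function and variable names are hypothetical, and the calculation assumes that the figures are directly comparable, which is uncertain given that they derive from different surveys and methods.

```python
from fractions import Fraction

# Prevalence figures as quoted in the text, expressed as "one child in N".
# Labels are descriptive only; the South Korean study is undated in the text.
REPORTED_ONE_IN_N = [
    ("1975", 5000),
    ("2002", 150),
    ("2006", 110),
    ("2008 (U.S.)", 88),
    ("South Korean study", 38),
]


def per_10000(one_in_n: int) -> float:
    """Convert a 'one in N' figure to cases per 10,000 children."""
    return 10000 / one_in_n


def fold_increase(baseline_one_in_n: int, later_one_in_n: int) -> Fraction:
    """Ratio of a later reported prevalence to the baseline prevalence."""
    # (1 / later) / (1 / baseline) simplifies to baseline / later.
    return Fraction(baseline_one_in_n, later_one_in_n)


if __name__ == "__main__":
    baseline = REPORTED_ONE_IN_N[0][1]  # the 1975 figure
    for label, n in REPORTED_ONE_IN_N:
        ratio = fold_increase(baseline, n)
        print(f"{label}: {per_10000(n):.1f} per 10,000, "
              f"{float(ratio):.1f} times the 1975 figure")
```

On these figures, the 2008 U.S. estimate corresponds to roughly 57 times the 1975 estimate. The ratios are purely descriptive, since reported prevalence depends on how cases are ascertained.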

It is therefore not at all alarmist to speak of an epidemic of detrimental neuropsychiatric conditions. The incidence of various other conditions, such as obsessive-compulsive disorder (OCD), obsessive-compulsive personality disorder (OCPD), and chronic fatigue syndrome (CFS), also appears to be increasing. Similarly, notable increases are observed in the neurodegenerative diseases, although here the recent surge in human longevity probably accounts for much of the rise. For instance, the incidence of Alzheimer’s disease begins to increase significantly at age 80 (from 9 to 23 per 1000 [273]), and a similar pattern applies to Parkinson’s disease [274]. However, about 5% of Alzheimer’s patients experience what is termed early onset, and even Parkinson’s can commence before the age of 40 [115]. There is a juvenile form of Huntington’s disease, with onset prior to 20 years of age, that accounts for about 5–10% of all affected patients, and the most common age of onset is 35–44 years. While it is therefore justified to treat rising life expectancy as a contributing factor in the incidence statistics of neurodegenerative conditions, this alone does not fully account for the epidemiological patterns. Within the overall picture the longevity factor is also more than offset by the fact that most mental illnesses and conditions deriving from demyelination or dysmyelination affect younger cohorts, even infants in some cases. Therefore the rise in neuropathology cannot be brushed aside as not affecting reproduction, because it affects young people as well. In contrast to many other health issues, which modern medicine tends to deal with fairly successfully, neuropathologies have so far been relatively resistant to palliative treatment and management.

The relentless increase in neuropathologies is one of the many effects of human self-domestication and the replacement of natural selection with culturally guided sexual selection. Like gracilization and neotenization, it can provide empirically determined, testable, and cogent trajectories. Unless there were to be unexpected extraordinary changes to the causal variables, these trajectories permit relatively reliable extrapolation into the future. In the absence of the arbitrating force of natural selection, none of the deleterious predispositions can be suppressed in the human genome. This concerns in particular neuropathologies, which arose essentially because the most recent encephalization occurred outside the constraining controls imposed by evolutionary selection. Short of a complete collapse of human culture it should be expected that these encumbrances will continue to rise, and new ones will very probably appear. All things considered, this may well turn out to be the greatest challenge in the future of humanity.

In the case of neotenization, which is perhaps a more protracted and convoluted process, only highly controversial and universally scorned measures could impede or arrest it. Therefore it can safely be expected to continue into the future, and it seems perfectly reasonable to predict some of the directions in which this will take the human species. For one thing, an increase in the volume of the human brain is unlikely, considering the rapid decline of that index over the past forty millennia. This decline has been more than compensated for by two factors: the growth in the brain’s interconnectivity and the massive proliferation in its reliance on exograms, i.e., external storage of symbolic information. Both these influences can be expected to facilitate future reorganization of the human brain, because the ontogenic plasticity noted above will progressively translate into phylogenic changes. To the extent that the external storage is now technologically driven, e.g., through digitization, it should be apparent that this will lead to permanent changes in the operation of the human brain, much in the same way as the introduction of writing has had such effects [102]. Something of this kind was already predicted by Plato, when he noted that learning to write “will implant forgetfulness in (people’s) souls: they will cease to exercise memory because they will rely on that which is written, calling things to remembrance no longer from within themselves but from external marks” [275]. As so often, Plato was right.

Other effects of continued neotenization and general changes to the human genome can also be speculated about with some credibility. However, the ultimate question on the future of humanity concerns the issue of speciation: at what time can the present species be expected to have developed so much that its individuals would no longer be able to viably breed (i.e., produce fertile offspring) with Homo sapiens sapiens? Before addressing this issue it would be expedient to note the lack of consensus among paleoanthropologists concerning the taxonomy of human species of the present and past. The current majority view, that H.s.s. could not effectively interbreed with any robust predecessor, beginning with the so-called Neanderthals, is in all probability false. Some scholars extend the species designation of H.s. as far back as H. erectus, thus implying that a modern person would be able to produce fertile offspring with a H. erectus partner [276]. Others take an intermediate position, either including the robust H.s. subspecies, or also including the preceding H. heidelbergensis and H. antecessor as subspecies of H. sapiens. Since the extreme view, despite being the majority view at present, is presumably erroneous [17,20,82,99], a conservative taxonomy would be to include all robusts of the last several hundred thousand years with H.s. However, the model of the longer duration of the species, of over a million years, cannot be excluded from consideration at this stage.

This implies that the likelihood of a fully new species emerging from present humanity within, say, the next hundred millennia is rather low. Unless there were to be a very incisive bottleneck event, during which the world population might plummet to less than one millionth of its present size, speciation would be as far off as a time when the majority of humans would live beyond Earth, or roughly after the end of the next Ice Age. By that time, humans would have long replaced traditional sustenance with intravenous liquid food, and it is possible that technologically driven changes of this kind will also affect the rate of speciation. Nevertheless, it would be entirely unrealistic to expect speciation to occur over the next few millennia, during which time technology can safely be predicted to develop rapidly, if not exponentially.

This raises the specter of the “posthuman condition” [277,278,279]. Bostrom [1] characterizes this condition by predicting that at least one of the following will apply:

- Population greater than 1 trillion persons

- Life expectancy greater than 500 years

- Large fraction of the population has cognitive capacities more than two standard deviations above the current human maximum

- Near-complete control over the sensory input, for the majority of people for most of the time

- Human psychological suffering becoming a rare occurrence

- Any change of magnitude or profundity comparable to that of one of the above

Since such hypothetical people would still be humans, the term “post-sapienoid condition” might be preferable to refer to them. Captivated by their tantalizing notions, the promoters of these futuristic scenarios tend to overlook that most of humanity lives subsistence existences currently, and that some of its members still survive with essentially “Paleolithic” to “Neolithic” technologies. Progress in technology might be exponential, but it does not necessarily follow that “a hand-picked portfolio of hot technologies” [1] will determine the overall direction of humanity in the foreseeable future. The pace of “progress” is extremely uneven in different areas. Superintelligence will no doubt be developed at some stage, but before speculating about a “posthuman” condition it needs to be considered that humanity may find itself facing some rather different but more pressing matters well before reaching such a stage. The cumulative probability of extinction increases monotonically over time [1], but what are the most potent threats?

The issues associated with the future of humanity can be divided into two types: the “external” issues, relating to the environment (natural or artificial), and the “internal” issues, relating to the human condition [17,280,281]. “External” existential risks, such as massive disasters, pandemics, and astrophysical events are always present but they are of relatively low probability, whereas the perhaps more insidious “internal” threats have attracted much less attention. Moreover, the external threats may be reduced significantly by the future establishment of extraterrestrial human colonies over multiple planets and solar systems. The internal threats, based on inevitable genetic changes and societal defects (e.g., the tensions Bostrom [7] perceives between “eudaemonic agents” and “fitness-maximizers”), seem much more difficult to avert by future developments; in fact they will very probably be fostered by these changes. As shown in this paper, one of the most important is the trajectory of humanity in acquiring significant genetic burdens, which first appeared in the final Pleistocene, but which has since then apparently gained growing momentum [17,20,82,88,99,282]. There are credible indications that this momentum is accelerating exponentially, and this issue is likely to be clarified within the next few decades.

This trajectory suggests two things: that the greatest threat to the human species is derived from its self-domestication and the genetic changes it engenders, and that it presents for all practical purposes an inevitability. While external existential risks are always present but statistically modest, the threat of genetic unsustainability is not just real, it is inescapable. Those who trust in human ingenuity to solve every issue through technology will probably argue that ways will be found to reengineer the human genome to counter these inexorable developments, and perhaps that will be possible. However, such solutions may need to be applied sooner rather than later, because the recent and present trajectories of human development suggest that the processes they need to counter are well under way, and possibly at an accelerating rate. In the final analysis it may be futile to try to escape the basic nature of human beings: they are still apes, still subject to the canons of nature, and their escape from the confines of natural selection may already have sealed the long-term prospects of the species.

5. Conclusions

This review of the long-term prospects of the human species arrives at findings that are not very encouraging. There is a range of existential threats to the human future, essentially of two types. “External” risks are those deriving from ever-present environmental hazards, such as planet-wide disasters, astrophysical events elsewhere in the universe, or pandemics. They are, however, relatively low risks. Of course the possibility of a fatal asteroid collision is always present, but the statistical probability of it occurring in the foreseeable future is exceedingly low. Moreover, some of these external threats may become manageable with the help of future technology, in various ways. For instance, a collision of Earth with another object may be averted in the distant future, or the existence of extraterrestrial human colonies may preserve humanity should the Earth become uninhabitable. The risks from “internal” or anthropogenic causes are considered to be the more imminent. These include conditions created by humans themselves, be it intentionally or not. For instance, dangers inherent in future technologies, whether applied maliciously or not, are statistically more likely to materialize in due course, and may be difficult to respond to. These could include self-enhancing artificial intelligence of an ill-conceived type, man-made pathogens, or the unintended or unforeseeable consequences of the ability to manipulate genetics, molecular structures and the matter life is made of.