Evaluation of Gender-Related Digital Violence Training in Catalonia

Abstract

1. Introduction

2. Methodology

2.1. Research Techniques and Data Analysis

2.2. Population, Sample, and the Implementation and Evaluation Teams

3. Results

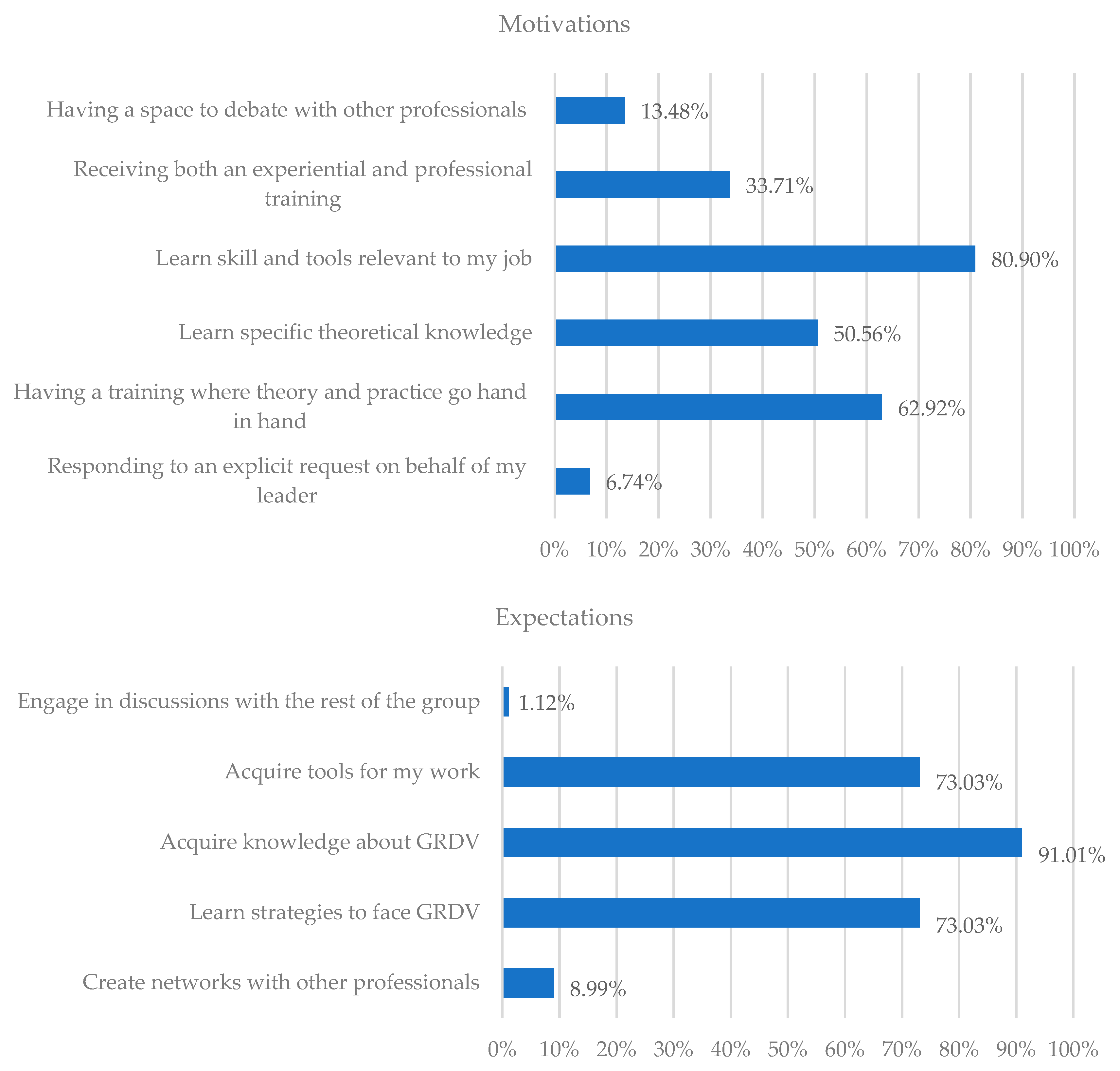

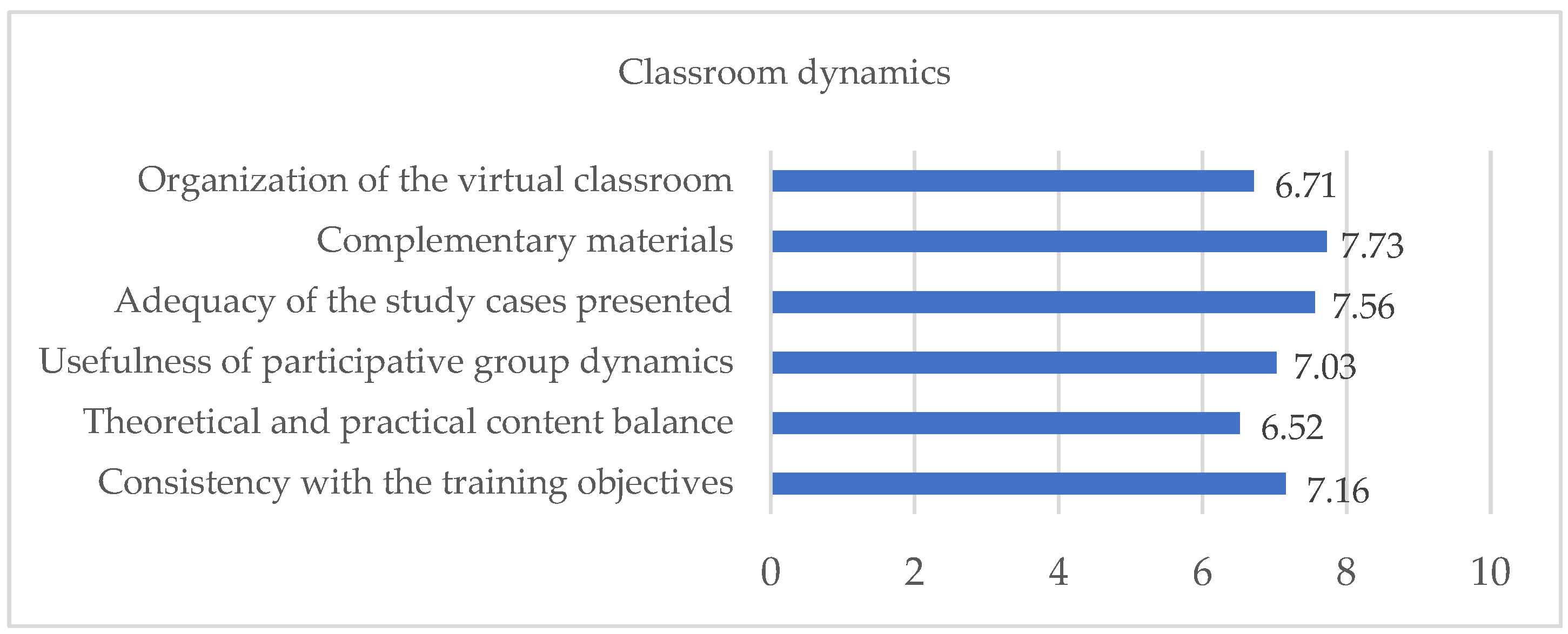

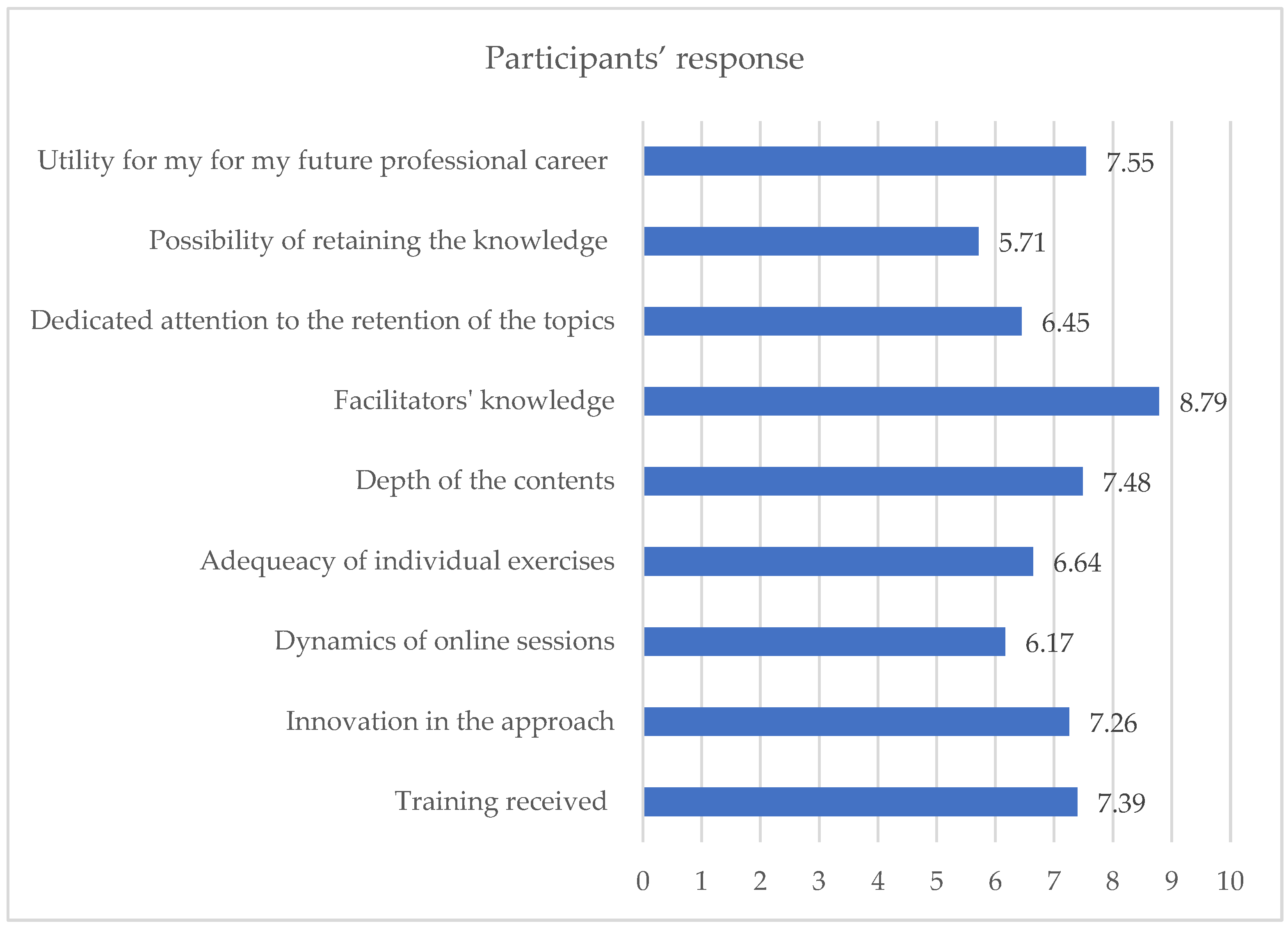

3.1. Training Implementation

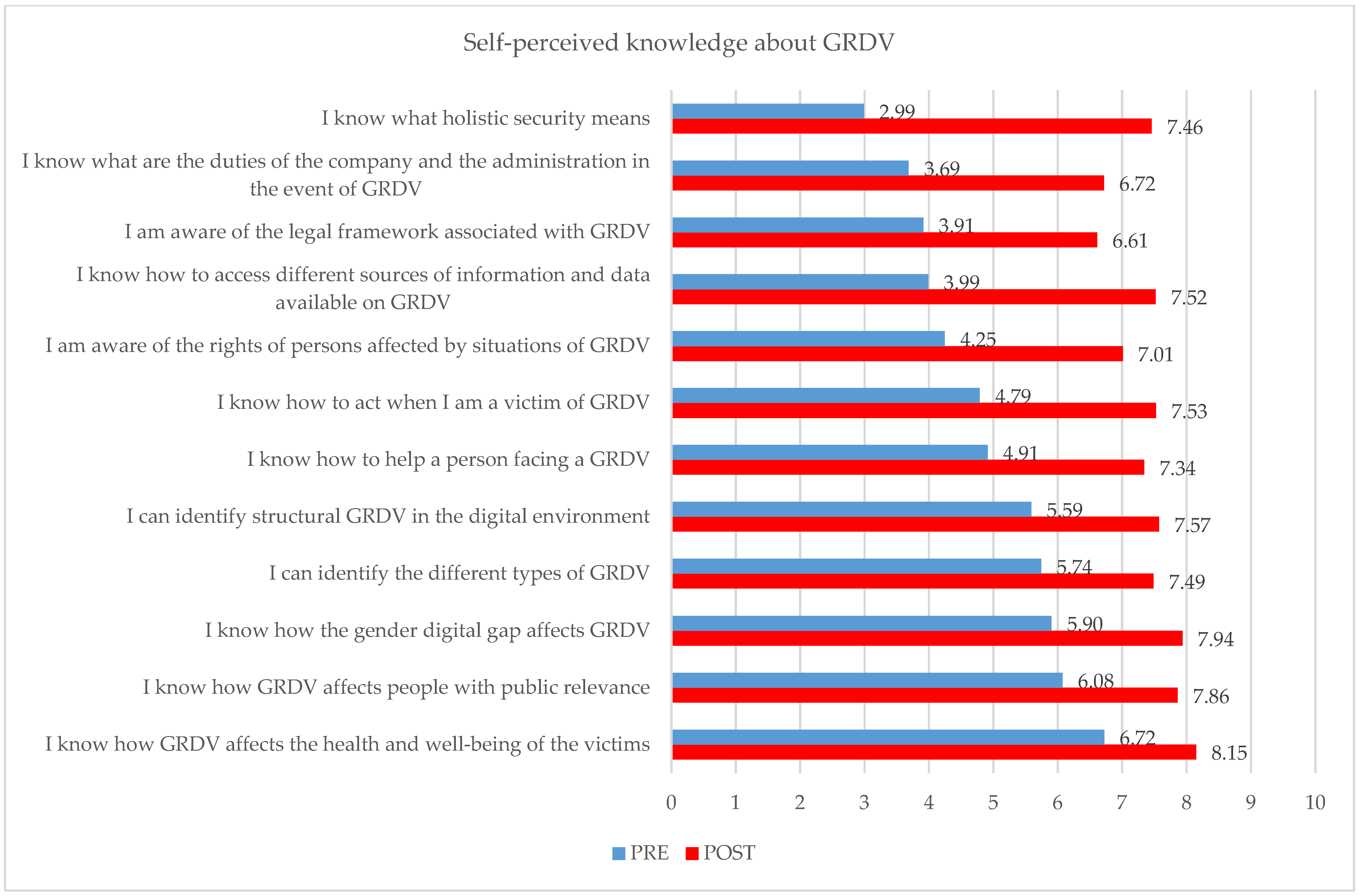

3.2. Self-Perceived Learning

3.3. Skills Improvement (Evaluation Team Perspective)

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Criterion | Indicators |
|---|---|
| 1. Positionality | To observe whether the training design suits the profile, knowledge, and motivations of the participant group, incorporating cases and examples relevant to their experiences. |
| | To observe the extent to which the facilitators approach the understanding and addressing of gender-related violence by recognizing its structural and systemic nature, including institutional violence, and by addressing the intersectionality of its causes, meanings, and effects. |
| | To evaluate whether experiential knowledge is given a prominent place through concrete examples and cases. |
| 2. Classroom interactions | To identify whether the facilitators value the participants' experiences, knowledge, and opinions. |
| | To identify whether discussion of different perspectives or interpretations is encouraged, with an emphasis on respecting the experiences and emotions triggered during the process. |
| | To evaluate whether participants are given space to question the content or interpretations provided. |
| 3. Care | To observe whether facilitators can identify and respond to participants' difficulties, resistance, or discomfort with specific dynamics. |
| | To consider whether schedules and calendars support work–life balance, comfort, and access to the training. |
| | To evaluate the working conditions of team members and the implementation of personal and collective self-care tools. |
| 4. Participants' response | To analyze participants' satisfaction with the proposed dynamics. |
| | To determine how participants perceive the personal and/or professional usefulness of the training program. |
| | To indicate whether participants show interest in learning more about gender-related digital violence (GRDV). |
| 5. Influence | To identify participants' perceptions of having internalized knowledge and skills. |

| Instrument | Aspect | Description |
|---|---|---|
| Pre-test questionnaire | Goals | To measure participants' initial self-perceived knowledge and skills in relation to GRDV. To collect participants' sociodemographic data, motivations, expectations, and experience (positionality criteria). |
| | Target audience | Healthcare professionals beginning the training sessions |
| | When it is applied | At the beginning of the first training session |
| | How it is answered | Individually, online |
| | Time | 15–20 min |
| Post-test questionnaire | Goals | To measure participants' final self-perceived knowledge in relation to GRDV, and their skills in relation to GRDV as assessed from the evaluation team's point of view. To gather participants' perceptions of classroom interactions and care, as well as their response to the training and its influence. |
| | Target audience | Professionals who have completed at least three training sessions |
| | When it is applied | At the end of the last training session |
| | How it is answered | Individually, online |
| Observation diary | Goals | To record elements of the training process, such as the types of classroom interaction during the training |
| | When it is applied | During five training sessions |

| Item | Equal variances | Levene's F | Levene's Sig. | t | df | Sig. (2-tailed) | Mean difference | Std. error difference | 95% CI lower | 95% CI upper |
|---|---|---|---|---|---|---|---|---|---|---|
| IDENTIFY GRDV | Assumed | 8.637 | 0.004 | −7.945 | 199 | 0.000 | −1.830 | 0.230 | −2.284 | −1.376 |
| | Not assumed | | | −8.088 | 181.063 | 0.000 | −1.830 | 0.226 | −2.277 | −1.384 |
| IDENTIFY STRUCTURAL GRDV | Assumed | 5.548 | 0.019 | −7.711 | 199 | 0.000 | −1.899 | 0.246 | −2.385 | −1.413 |
| | Not assumed | | | −7.810 | 191.466 | 0.000 | −1.899 | 0.243 | −2.379 | −1.419 |
| AFFECTS GRDV | Assumed | 8.574 | 0.004 | −5.420 | 199 | 0.000 | −1.422 | 0.262 | −1.939 | −0.905 |
| | Not assumed | | | −5.513 | 182.981 | 0.000 | −1.422 | 0.258 | −1.931 | −0.913 |
| AFFECTS GRDV PR | Assumed | 11.930 | 0.001 | −7.148 | 199 | 0.000 | −1.788 | 0.250 | −2.282 | −1.295 |
| | Not assumed | | | −7.268 | 184.133 | 0.000 | −1.788 | 0.246 | −2.274 | −1.303 |
| GENDER GAP | Assumed | 7.304 | 0.007 | −7.502 | 199 | 0.000 | −2.033 | 0.271 | −2.567 | −1.498 |
| | Not assumed | | | −7.614 | 187.954 | 0.000 | −2.033 | 0.267 | −2.559 | −1.506 |
| LEGAL FRAMEWORK | Assumed | 9.658 | 0.002 | −9.047 | 199 | 0.000 | −2.700 | 0.298 | −3.289 | −2.112 |
| | Not assumed | | | −9.165 | 191.326 | 0.000 | −2.700 | 0.295 | −3.281 | −2.119 |
| SURVIVOR'S RIGHTS | Assumed | 16.411 | 0.000 | −10.101 | 199 | 0.000 | −2.763 | 0.274 | −3.302 | −2.223 |
| | Not assumed | | | −10.286 | 180.534 | 0.000 | −2.763 | 0.269 | −3.293 | −2.233 |
| COMPANY DUTIES | Assumed | 13.942 | 0.000 | −10.633 | 199 | 0.000 | −3.033 | 0.285 | −3.596 | −2.471 |
| | Not assumed | | | −10.785 | 188.988 | 0.000 | −3.033 | 0.281 | −3.588 | −2.478 |
| HOLISTIC SECURITY | Assumed | 17.664 | 0.000 | −14.399 | 199 | 0.000 | −4.468 | 0.310 | −5.080 | −3.856 |
| | Not assumed | | | −14.588 | 191.154 | 0.000 | −4.468 | 0.306 | −5.072 | −3.864 |
| SOURCES INFORMATION | Assumed | 14.277 | 0.000 | −11.896 | 199 | 0.000 | −3.530 | 0.297 | −4.116 | −2.945 |
| | Not assumed | | | −12.106 | 182.026 | 0.000 | −3.530 | 0.292 | −4.106 | −2.955 |
| HELP | Assumed | 11.296 | 0.001 | −8.983 | 199 | 0.000 | −2.429 | 0.270 | −2.963 | −1.896 |
| | Not assumed | | | −9.137 | 183.330 | 0.000 | −2.429 | 0.266 | −2.954 | −1.905 |
| ACT | Assumed | 12.035 | 0.001 | −9.594 | 198 | 0.000 | −2.736 | 0.285 | −3.298 | −2.173 |
| | Not assumed | | | −9.810 | 176.180 | 0.000 | −2.736 | 0.279 | −3.286 | −2.185 |
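Each row of the independent-samples table can be checked from three reported numbers, read as three-decimal values (e.g. a mean difference of −1.830 and a standard error of 0.230 for IDENTIFY GRDV): the mean difference, its standard error, and the degrees of freedom. The t statistic is the mean difference divided by the standard error, and the 95% confidence interval is the mean difference plus or minus the 0.975 quantile of Student's t distribution times the standard error; the "not assumed" rows use fractional (Welch-corrected) degrees of freedom. The sketch below is a standard-library-only illustration, not the authors' own analysis: the helper names (`t_pdf`, `t_cdf`, `t_quantile`, `check_row`) are hypothetical, and the t distribution is approximated numerically with Simpson's rule and bisection rather than taken from a statistics package. Levene's test and the Welch degrees of freedom are not recomputed, because they require group standard deviations and sizes that the table does not report.

```python
import math


def t_pdf(x: float, df: float) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def t_cdf(x: float, df: float, steps: int = 2000) -> float:
    """CDF via Simpson's rule on [0, |x|] plus symmetry about 0 (steps must be even)."""
    b = abs(x)
    h = b / steps
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return 0.5 + area if x >= 0 else 0.5 - area


def t_quantile(p: float, df: float) -> float:
    """Quantile for 0.5 < p < 1, found by bisection on t_cdf over [0, 50]."""
    lo, hi = 0.0, 50.0
    for _ in range(40):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def check_row(mean_diff: float, se: float, df: float, level: float = 0.95):
    """Recompute t, two-tailed p and the CI from a row's mean difference, SE and df."""
    t = mean_diff / se
    crit = t_quantile(1 - (1 - level) / 2, df)
    # For very large |t| the integration error exceeds p itself, so clamp at 0.
    p = max(0.0, 2 * (1 - t_cdf(abs(t), df)))
    return t, p, (mean_diff - crit * se, mean_diff + crit * se)


# Three rows copied from the table above.
rows = {
    "IDENTIFY GRDV, equal variances assumed": (-1.830, 0.230, 199),
    "IDENTIFY GRDV, equal variances not assumed": (-1.830, 0.226, 181.063),
    "HOLISTIC SECURITY, equal variances assumed": (-4.468, 0.310, 199),
}
for label, (md, se, df) in rows.items():
    t, p, (lower, upper) = check_row(md, se, df)
    print(f"{label}: t = {t:.3f}, p = {p:.3g}, 95% CI [{lower:.3f}, {upper:.3f}]")
```

Recomputed t values can differ from the published ones in the second or third decimal because the published standard errors are themselves rounded to three decimals; the recomputed interval bounds should agree to within about 0.002.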

| Pair | Variables | Mean difference | Std. deviation | Std. error mean | 95% CI lower | 95% CI upper | t | df | Sig. (2-tailed) |
|---|---|---|---|---|---|---|---|---|---|
| Pair 1 | Diagnosis PRE & POST | −1.831 | 0.834 | 0.109 | −2.048 | −1.613 | −16.867 | 58 | 0.000 |
| Pair 2 | Prescription PRE & POST | −1.271 | 1.284 | 0.167 | −1.606 | −0.937 | −7.603 | 58 | 0.000 |
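The paired-samples table reports the mean, standard deviation, and standard error of the difference scores for 59 paired responses (df = 58), read as three-decimal values (e.g. a mean of −1.831 and a standard deviation of 0.834 for Pair 1). A paired t-test reduces to a one-sample test on those differences: SE = SD / √n and t = mean / SE, with df = n − 1. The sketch below shows that calculation on raw scores and rebuilds the SE and t columns from the reported summaries. It assumes differences are computed as pre minus post, following the order of the pair labels; the function names are hypothetical, and the five-person scores in the usage lines are invented for illustration only. The confidence interval additionally needs the 0.975 quantile of Student's t with 58 degrees of freedom and is not recomputed here.

```python
import math
import statistics


def paired_t(pre: list[float], post: list[float]):
    """Paired t-test on difference scores d = pre - post.

    Returns (mean of d, SD of d, standard error of the mean of d, t, df).
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [a - b for a, b in zip(pre, post)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample SD, n - 1 denominator
    se_d = sd_d / math.sqrt(n)
    return mean_d, sd_d, se_d, mean_d / se_d, n - 1


def from_summary(mean_d: float, sd_d: float, df: int):
    """Rebuild the SE and t columns from a reported mean, SD and df (n = df + 1)."""
    se_d = sd_d / math.sqrt(df + 1)
    return se_d, mean_d / se_d


# Reported rows of the paired table.
reported = {
    "Diagnosis PRE & POST": (-1.831, 0.834, 58),
    "Prescription PRE & POST": (-1.271, 1.284, 58),
}
for label, (mean_d, sd_d, df) in reported.items():
    se_d, t = from_summary(mean_d, sd_d, df)
    print(f"{label}: SE = {se_d:.3f}, t = {t:.3f}")

# Hypothetical pre/post scores for five people, for illustration only.
print(paired_t([2, 3, 1, 4, 2], [4, 4, 3, 5, 4]))
```

Rebuilding the standard error from the standard deviation confirms that the two columns are consistent with n = 59; the small gap between the recomputed and published t values comes from the three-decimal rounding of the reported mean and standard deviation.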

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guerrero-Sanchez, Catalina, Jordi Bonet-Marti, and Barbara Biglia. 2024. "Evaluation of Gender-Related Digital Violence Training in Catalonia." Social Sciences 13, no. 2: 96. https://doi.org/10.3390/socsci13020096