Persistence and Attrition among Participants in a Multi-Page Online Survey Recruited via Reddit’s Social Media Network

Abstract

1. Introduction

Reddit as a Sampling Universe

2. Methodology

2.1. Purpose of the Study

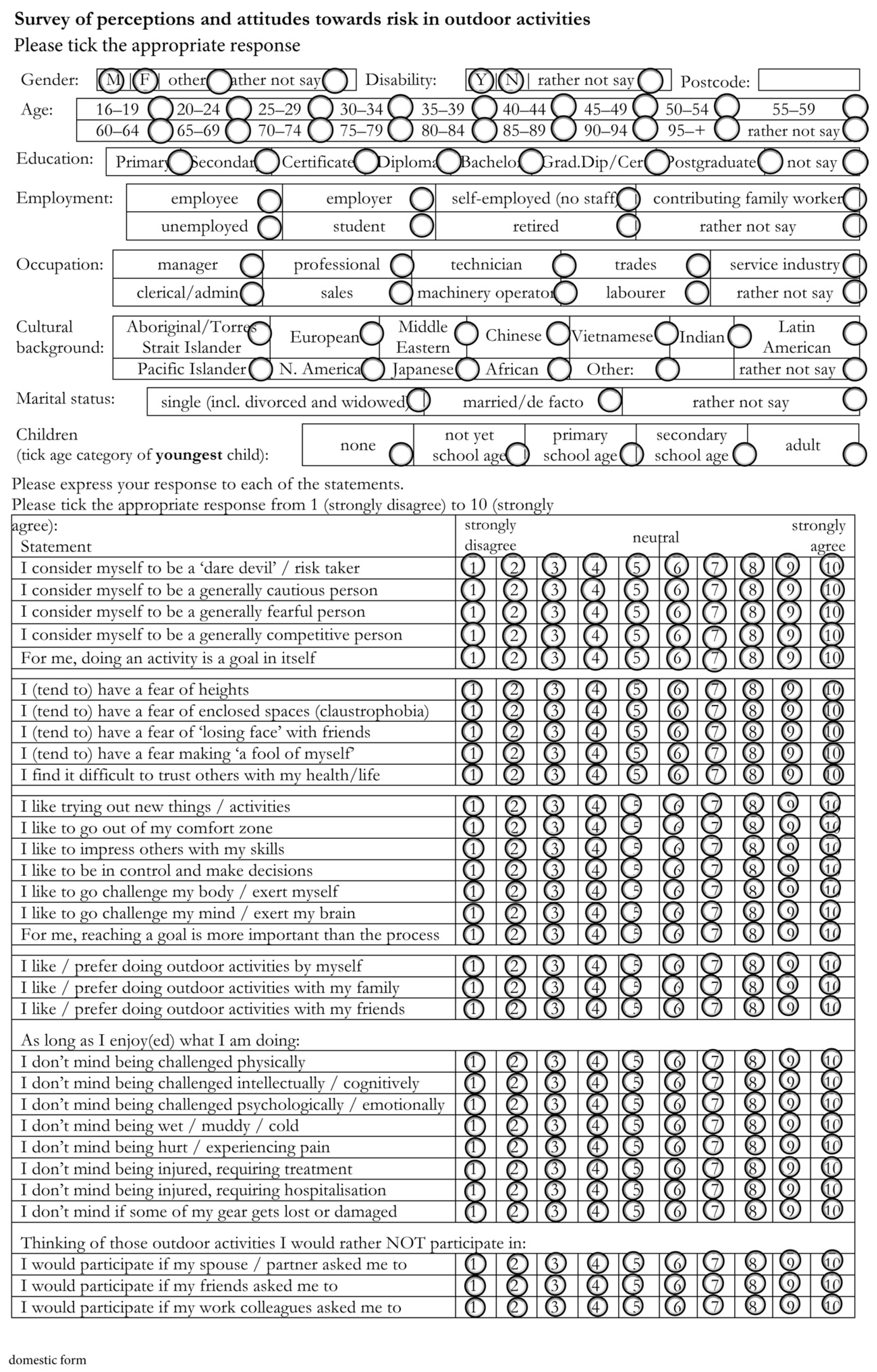

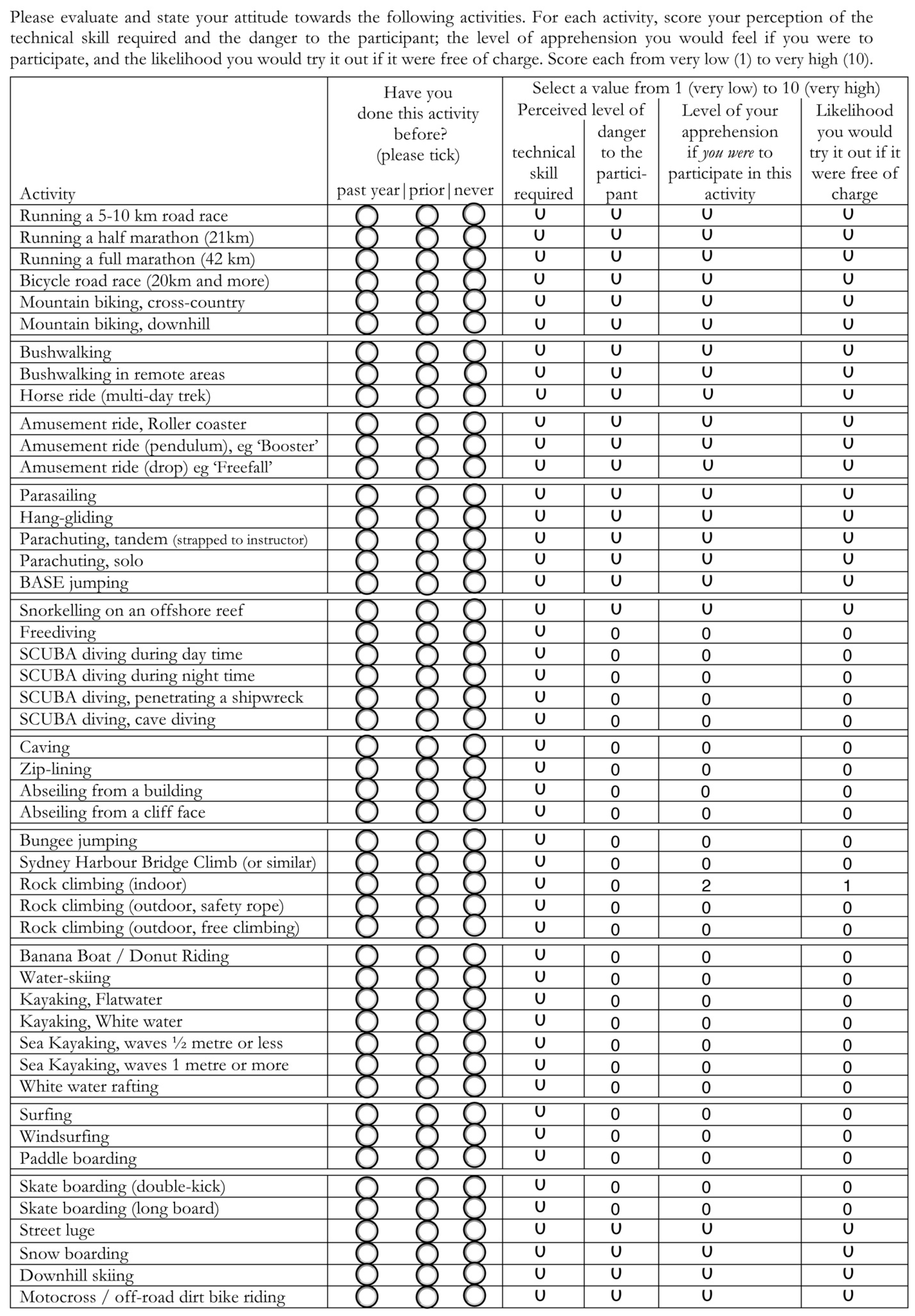

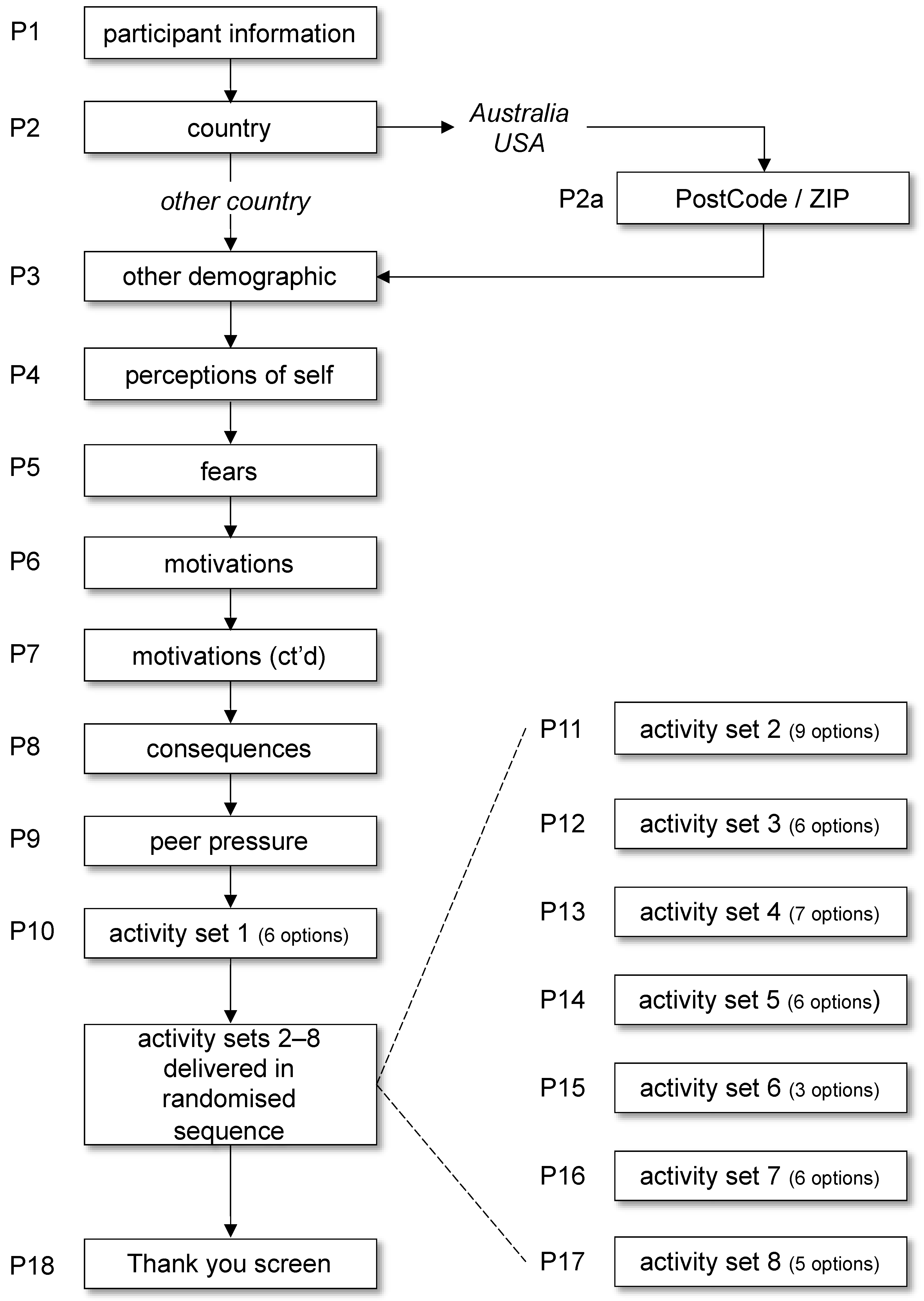

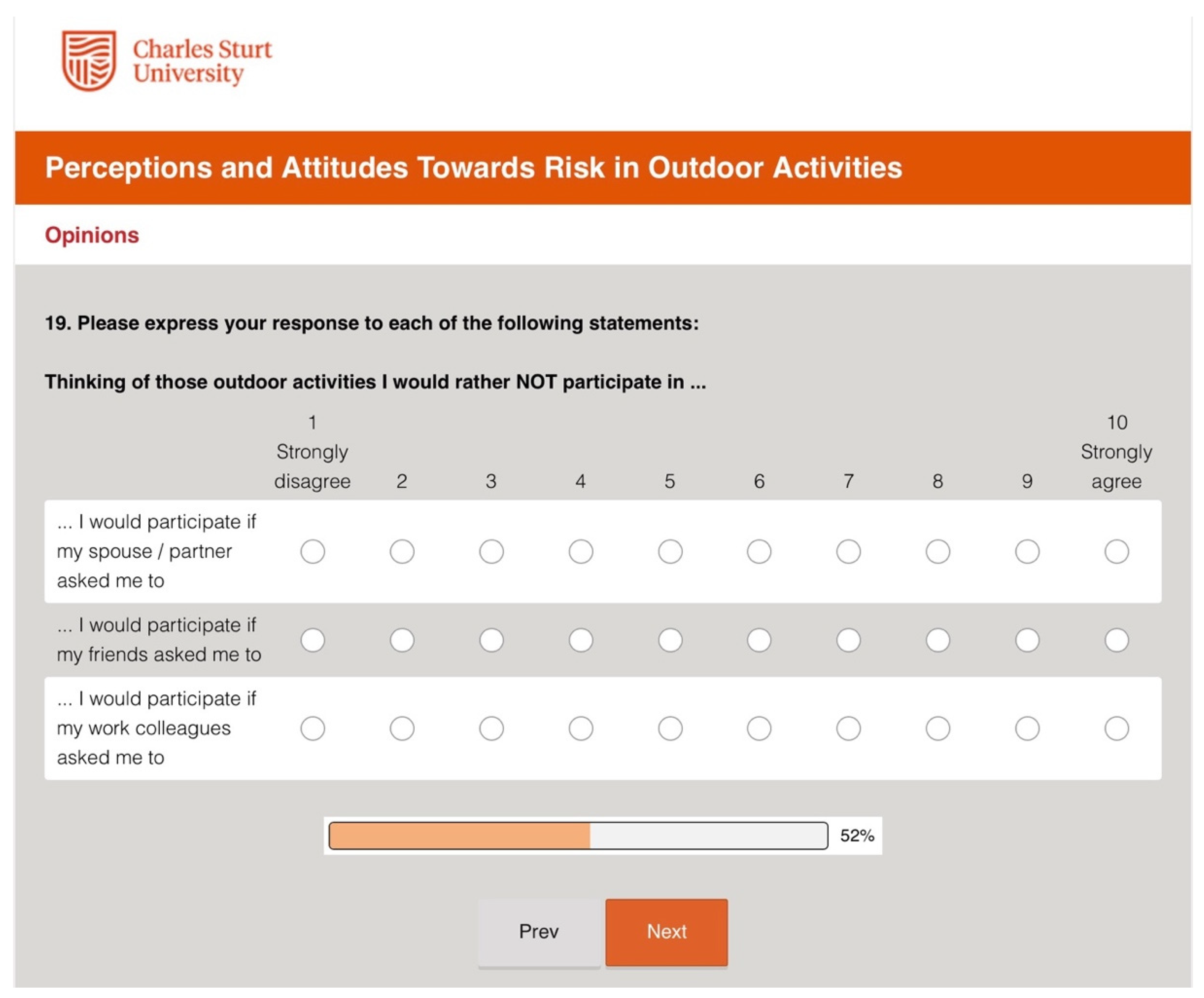

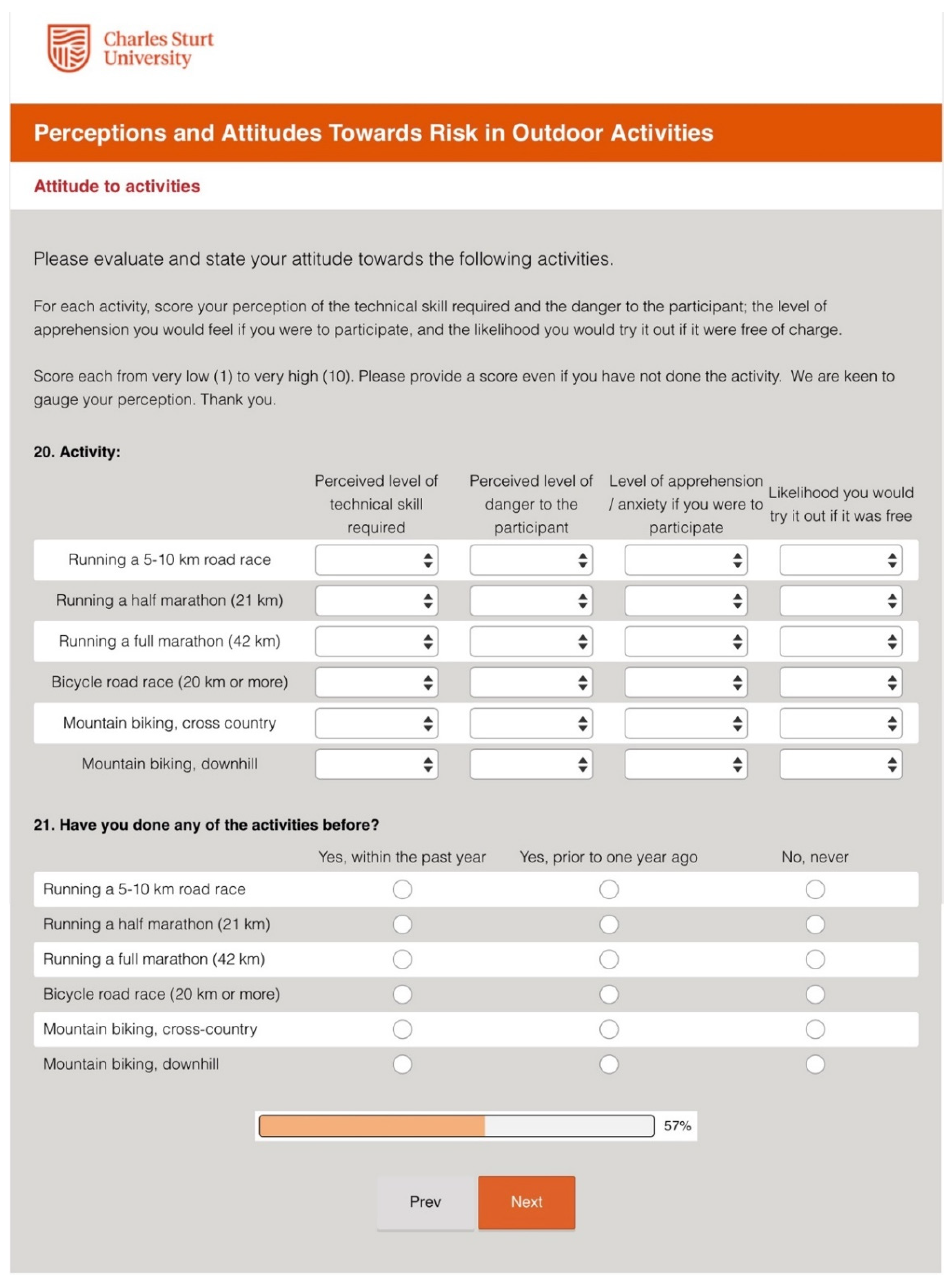

2.2. The Survey Instrument

2.3. Sampling Frames

2.3.1. General Population

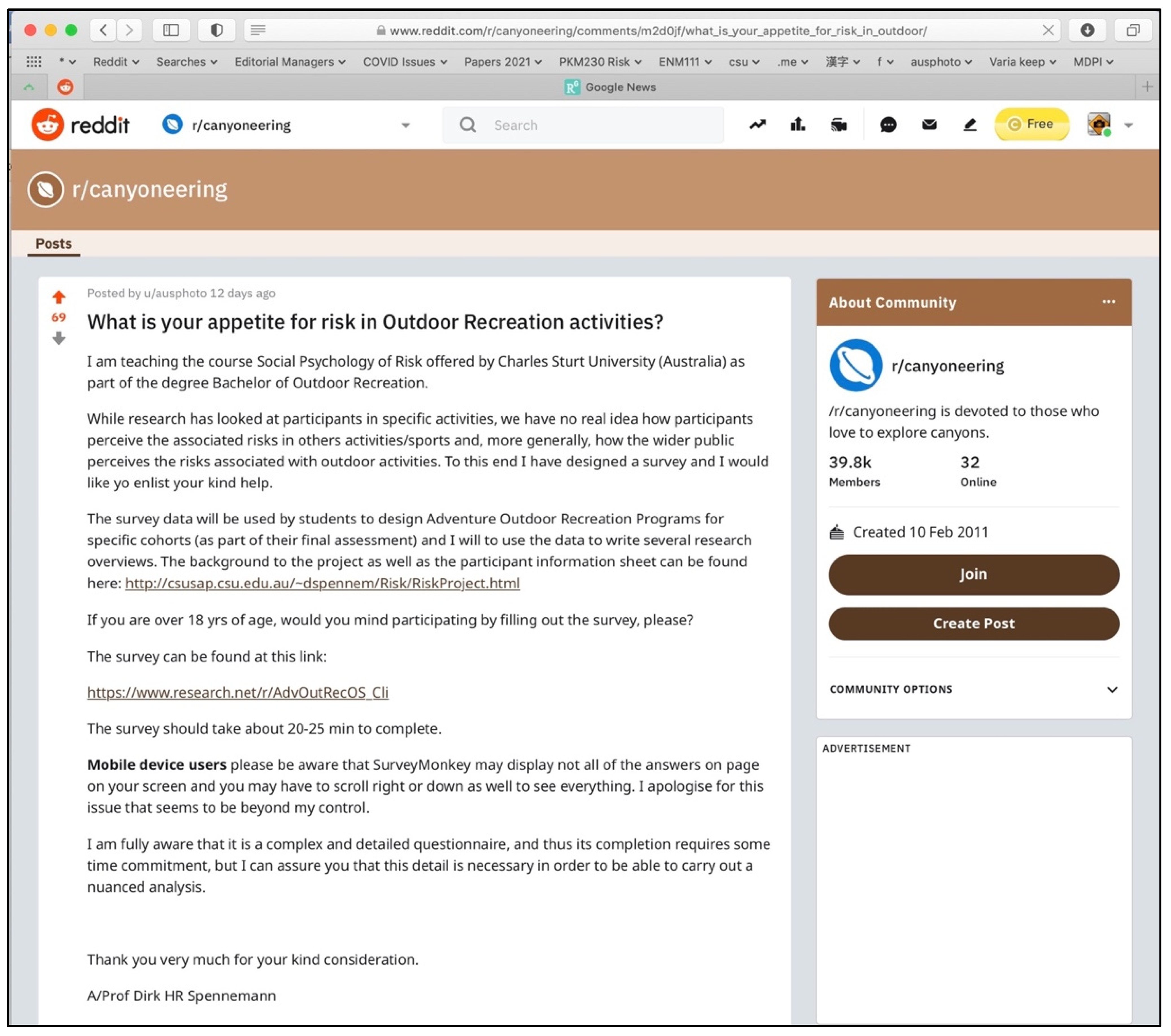

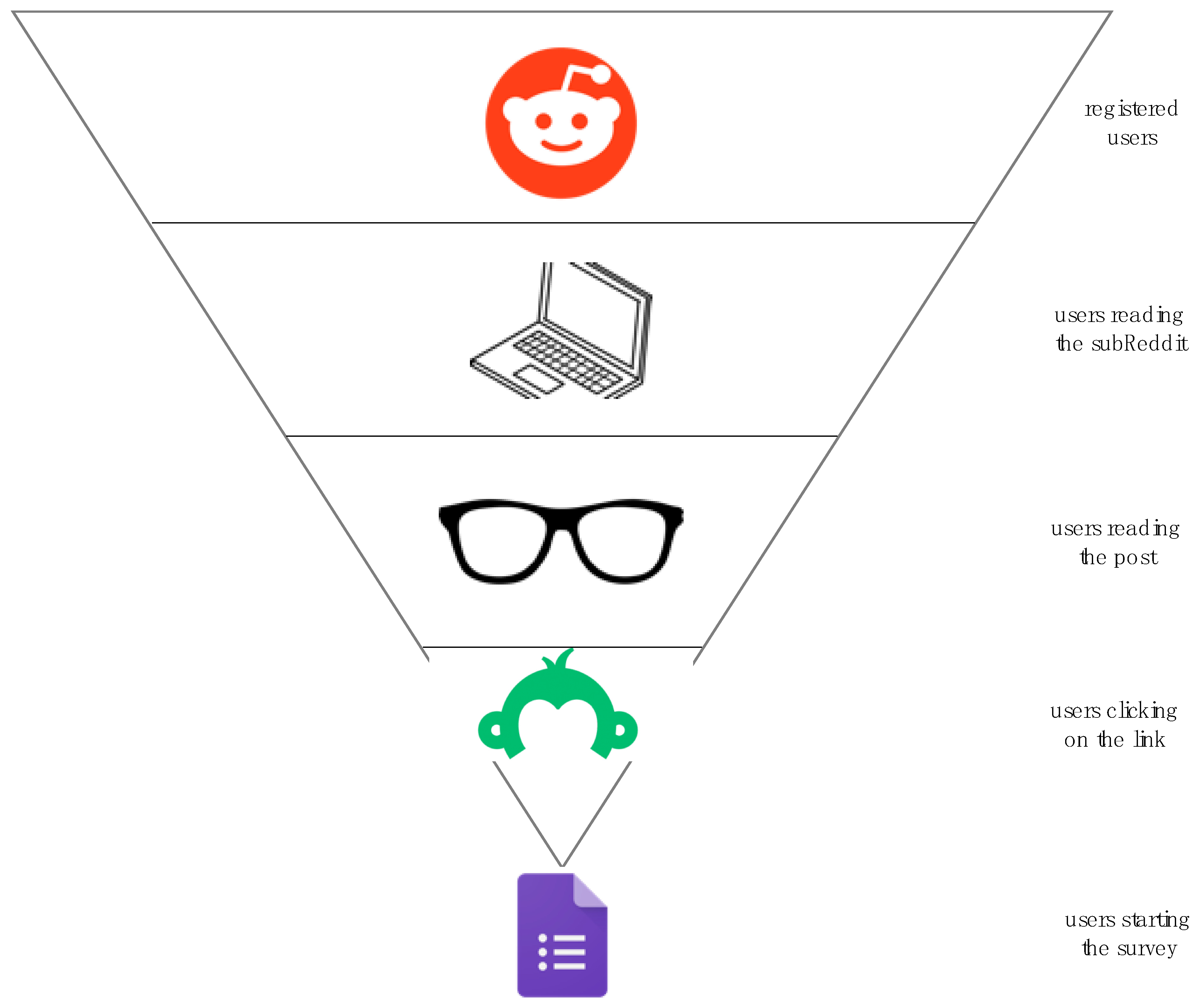

2.3.2. Reddit

2.4. Data Cleaning and Statistical Analysis

2.4.1. Data Cleaning

2.4.2. Statistical Analysis

2.5. Limitations

2.5.1. Data Quality

2.5.2. Participation and Response Rate

3. Results

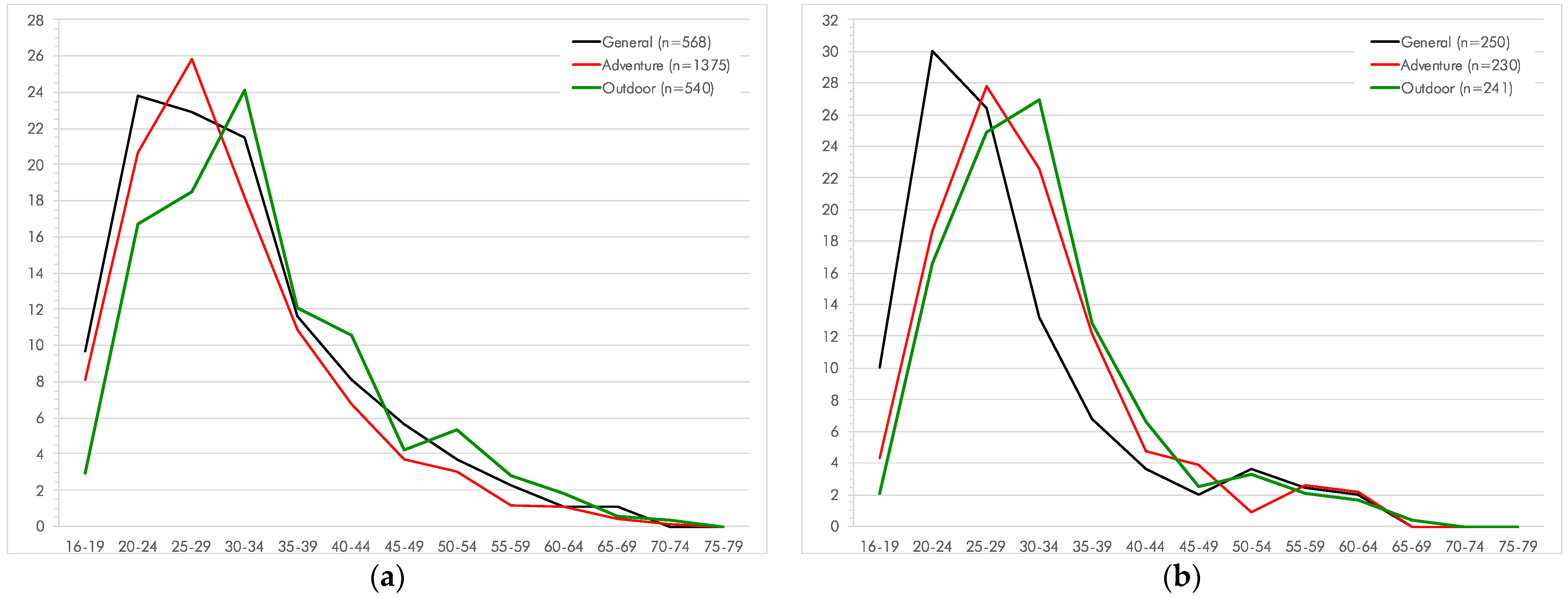

3.1. Demographics

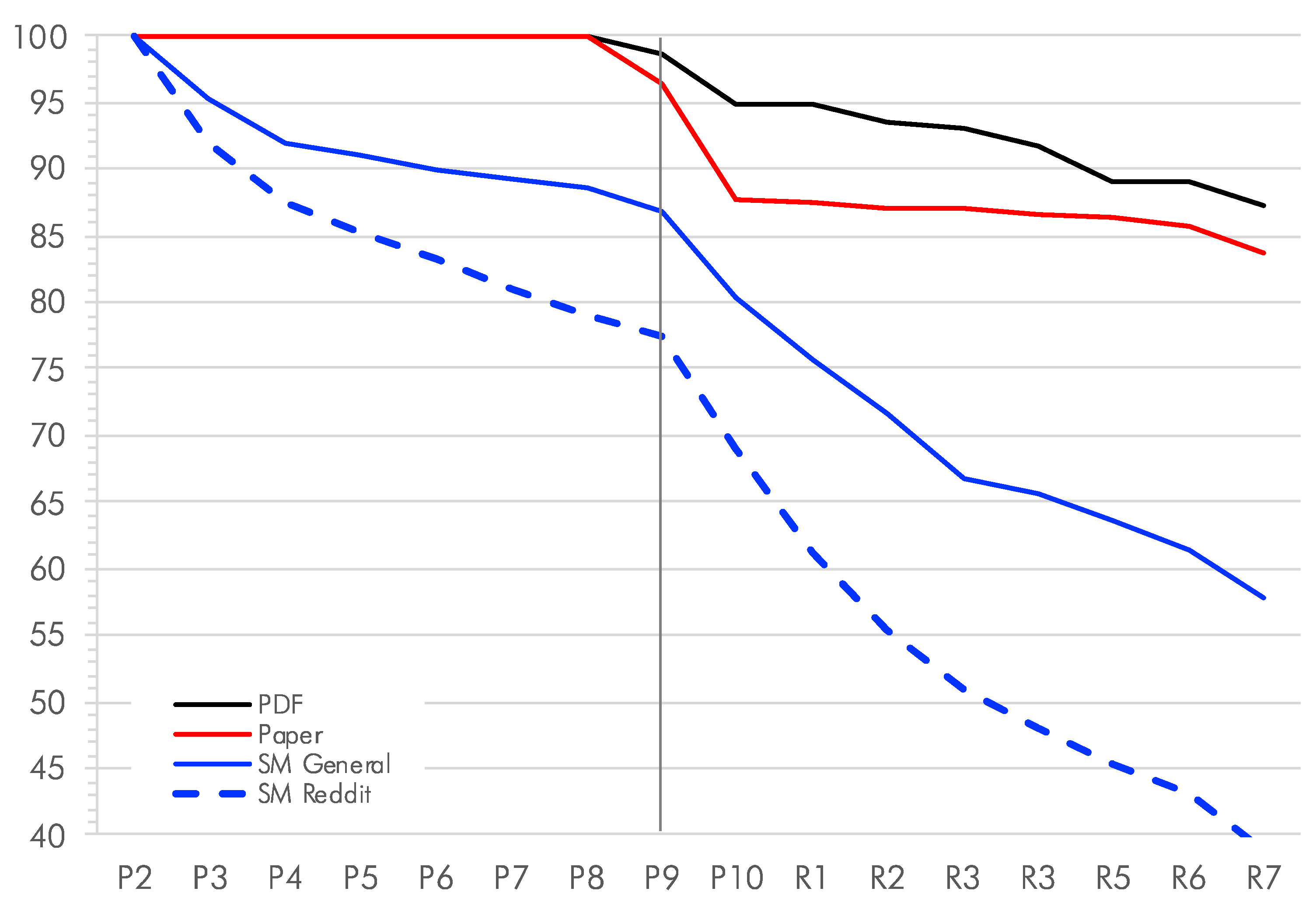

3.2. Participant Attrition

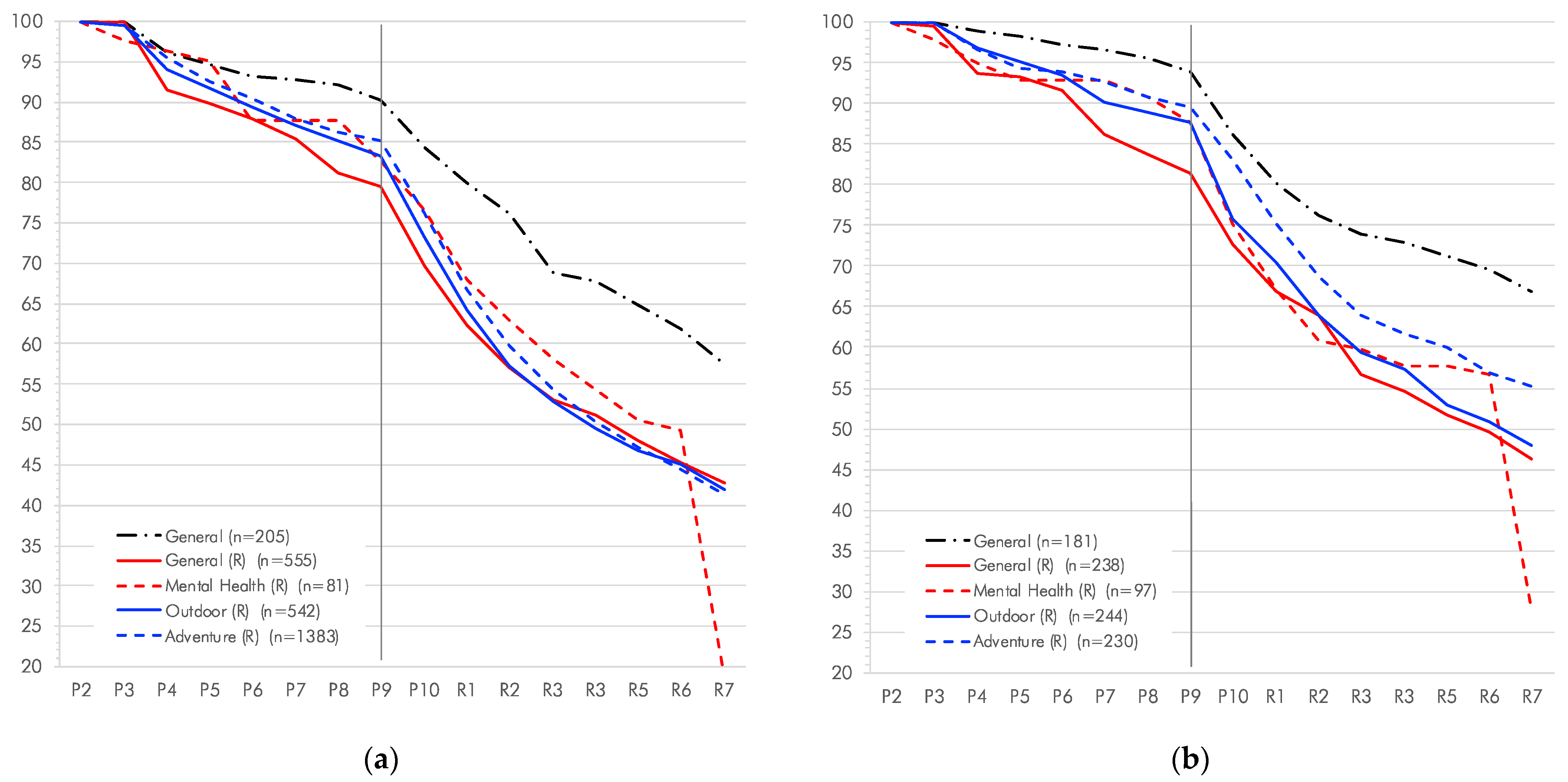

3.2.1. Effects of the Mode of Submission

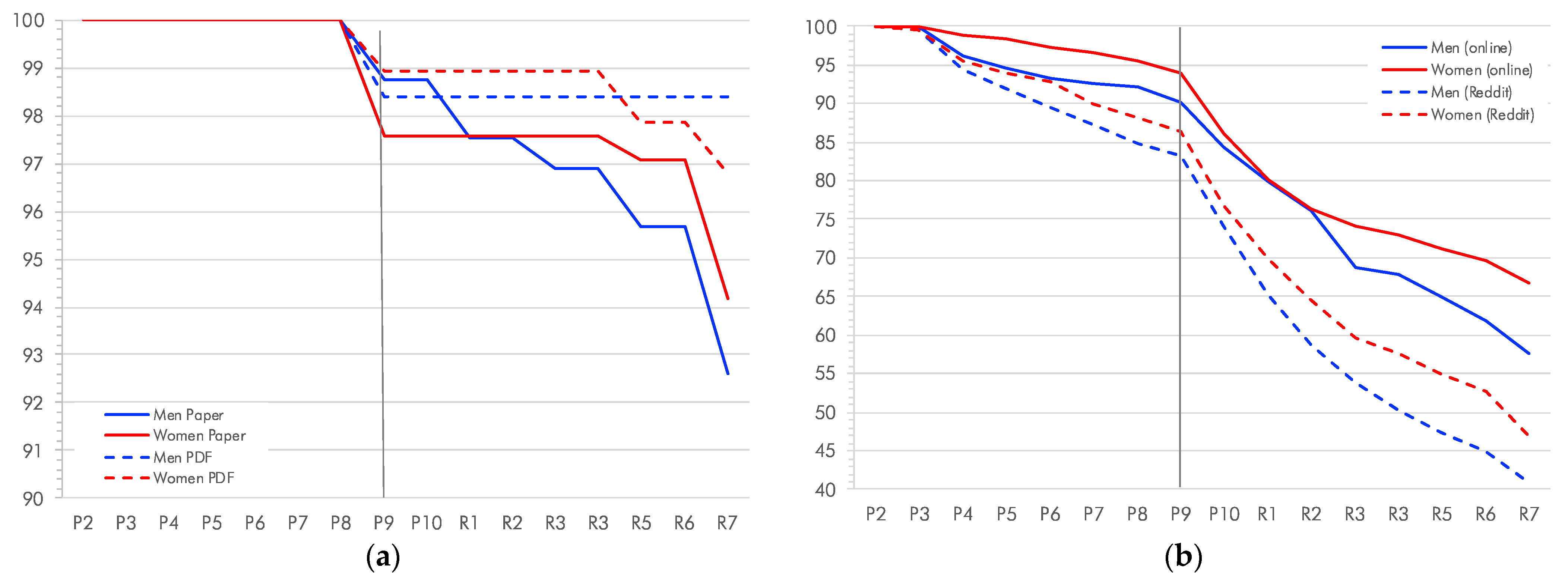

3.2.2. Effects of Gender on Participant Attrition among Reddit and Non-Reddit Cohorts

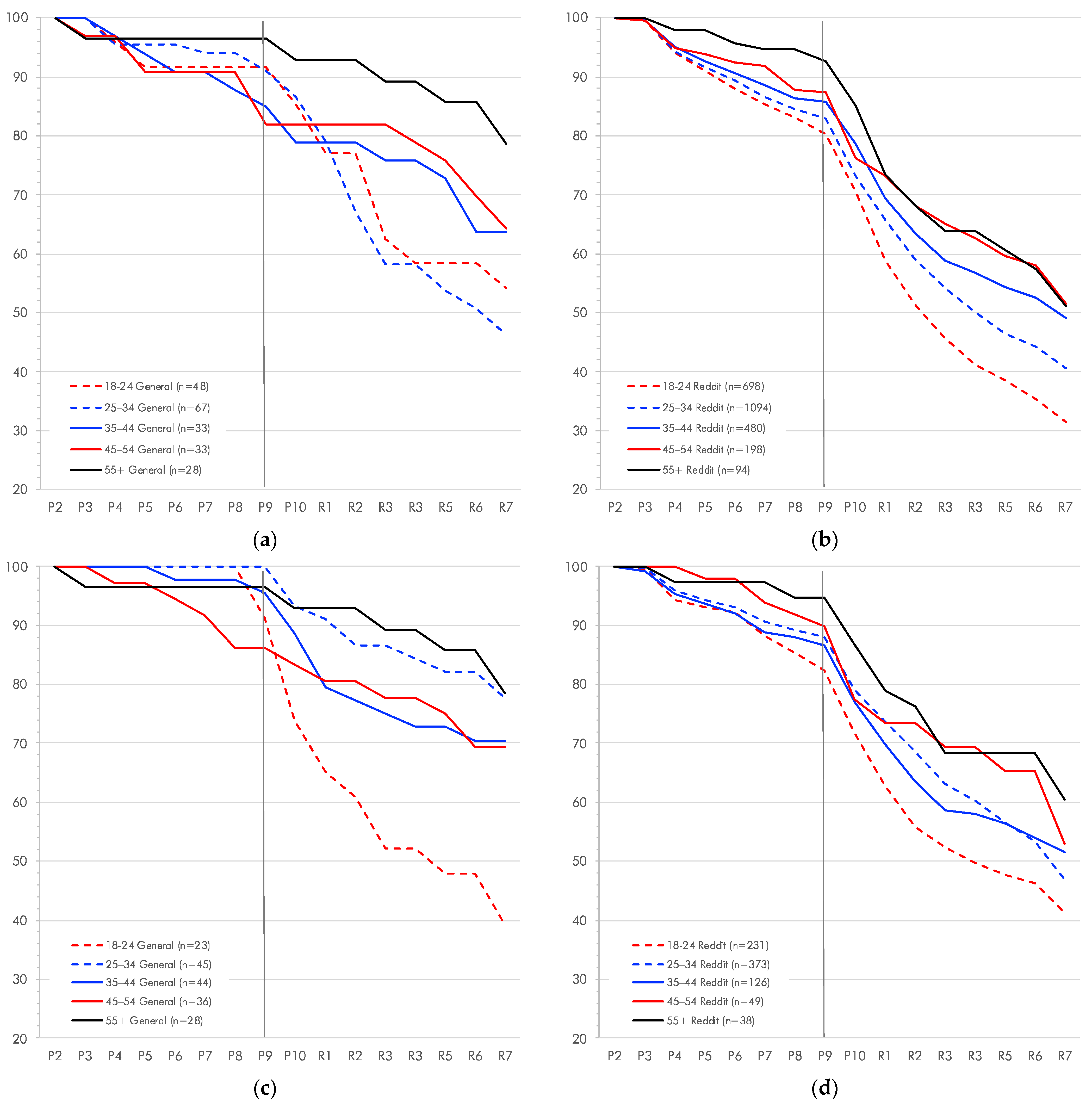

3.2.3. Effects of Age on Participant Attrition among Reddit and Non-Reddit Cohorts

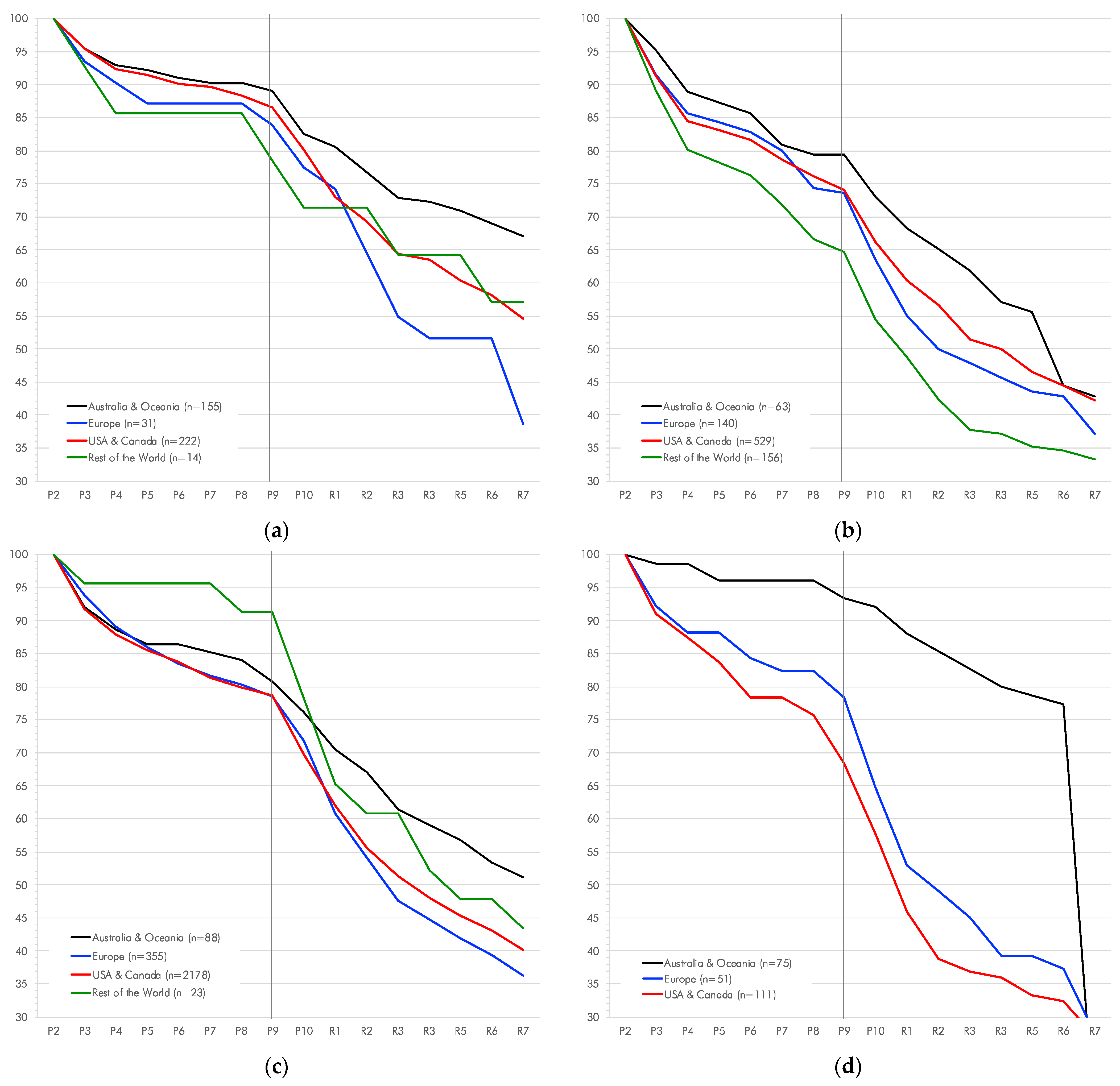

3.2.4. Effects of the Place of Origin on Participant Attrition

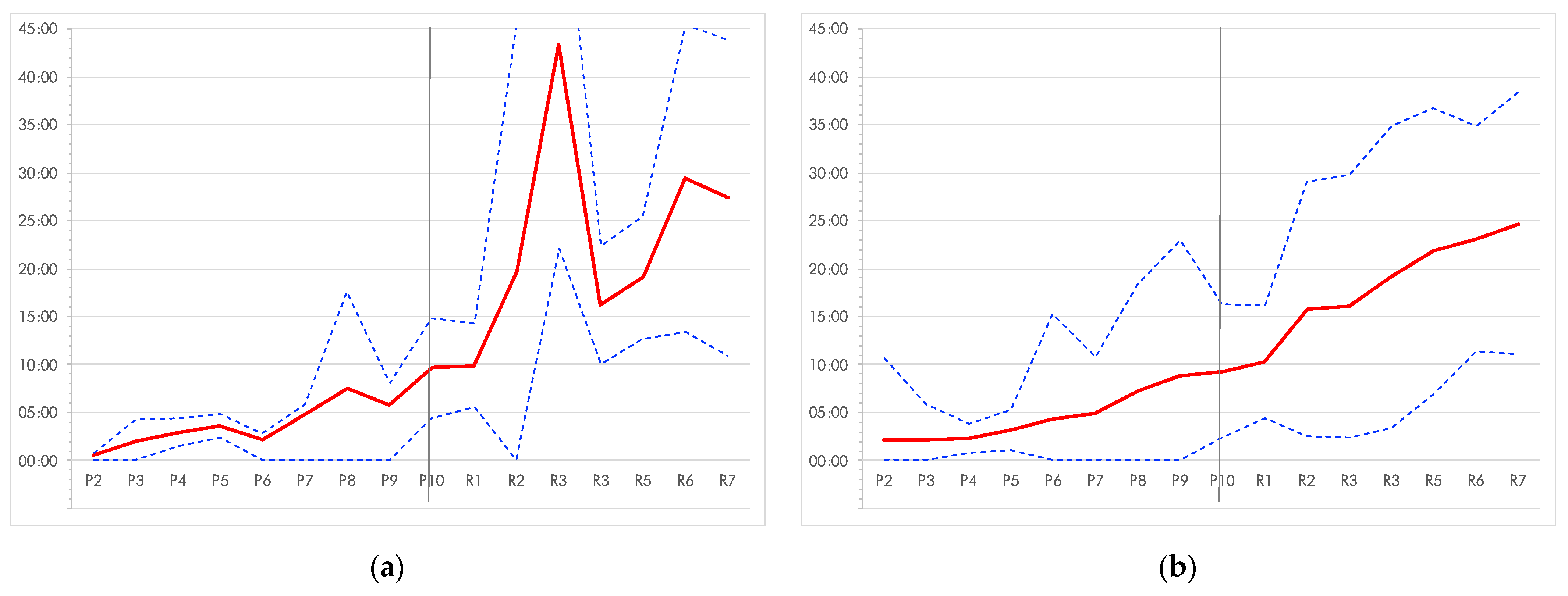

3.2.5. Attrition as a Factor of Time Spent Working on the Survey

4. Discussion

4.1. Effects of Survey Length and Time Investment on Participant Attrition

4.2. Effects of Survey Format on Participant Attrition

4.3. Effects of Gender on Participant Attrition

4.4. Effects of Technology

4.5. Other Effects

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Appendix A. Page Images of the Paper-Based Questionnaire

Appendix B. Page Images of the PDF-Based Questionnaire

Appendix C. The Targeted Sub-Reddits

| Code | Sub-Reddit Posted | Sub-Reddit Not Posted † |
|---|---|---|
| ADVENTURE: adventure activity specific sub-Reddits | | |
| CAV | caving | |
| CLI | canyoneering, Indoorclimbing, mountaineering | bouldering, climbing |
| DIV | diving, freediving, scuba | |
| IND | india, IndiaMain, IndianArmy, IndiaSpeaks | Indian_Academia |
| KAY | kayaking, rafting, whitewater | |
| MBK | bmx, cyclocross, dirtjumping, fatbike, gravelcycling, mountainbikes, mountainbiking, MTB, xbiking | |
| SNO | skiing, snowboarding | |
| PAR | basejumping, Hanggliding, SkyDiving | |
| r | 2WheelsInTheSnow, adventures, BarefootRunning, bikecommuting, bungeejumping, Equestrian, freeflight, Gliding, rollercoasters, sailing, trailrunning, ultrarunning, WaterSkiing | bicycletouring, bicycling, cycling, wintercycling |
| SLU * | streetluge | |
| SP02 | advrider | |
| SP05 | longboarding, skateboarding | |
| SUR | kiteboarding, windsurfing | surfing |
| OUTDOOR: outdoor activity related sub-Reddits | | |
| OUT | Adirondacks, alaska, AppalachianTrail, CampAndHikeMichigan, coloradohikers, hiking, NationalPark, NCTrails, Outdoor, Outdoors, OutdoorScotland, PacificCrestTrail, PhysicalEducation, PNWhiking, snowshoeing, socalhiking, Survival, TrekkingItaly, UKhiking, vancouverhiking, WAOutdoors, Wilderness, WildernessBackpacking, Yosemite | arizonatrail, backpacking, camping, CampingandHiking, norcalhiking, sports, TrailGuides, walking |
| MENTAL HEALTH: mental illness and other disability related sub-Reddits | | |
| SP07 | deaf, disability, disabled, hardofhearing, hearing, HearingAids, spinalcordinjuries | |
| SP08 | BipolarSOs (self) | bipolar, bipolar2 |
| SP09 | Veterans | |
| STr01 | DID | |
| STr02 | depression, mentalillness, PTSD | |
| STr03 | TBI | |
| STr04 | malementalhealth, MentalHealthUK | mentalhealth |
| STr05 | adhd | |
| STr07 | BipolarSOs (support person) | |
| STr08 | TBI (support) | |
| STr09 | OCD | |
| STr10 | AvPD | BPD |
| STr11 | autism (self), neurodiversity | |
| STr13 | autism (support person) | |
| Phobia related sub-Reddits | | |
| SP01 * | acrophobia | claustrophobia, socialanxiety |
| SP02 | thalassophobia | |
| SP04 | anxiety, Phobia | |
| STr06 | claustrophobia | |
| GENERAL: general and research-related sub-Reddits | | |
| OS | self, SeriousConversation | CasualConversation, socialskills |
| SP01 * | NoStupidQuestions | |
| SP03 | psychology, psychologystudents, SampleSize | Teachers |
| SLU * | britishmilitary, RoyalAirForce | AirForce, AustralianMilitary, britisharmy, CanadianForces, Military |
| Str09 | shamelessplug | |
| SP06 | country-specific sub-Reddits: brasil, Jamaica, karachi, Kashmiri, Namibia, Nigeria, pakistan, south Africa, sudan, tanzania | Kenya, Philippines |


| Sub-Reddit | Registered Users in the Sub-Reddit | Average % of Registered Users Online | Total of Poll Participants | Poll Participants in % of Registered Users | Poll Participants in % of Average Number of Registered Users Online |
|---|---|---|---|---|---|
| bmx | 38,200 | 0.585 | 64 | 0.168 | 28.6 |
| cyclocross | 18,000 | 0.266 | 54 | 0.300 | 112.9 |
| dirtjumping | 5900 | 0.538 | 36 | 0.610 | 113.4 |
| fatbike | 8900 | 0.465 | 49 | 0.551 | 118.4 |
| fixed gear | 69,100 | 0.539 | 140 | 0.203 | 37.6 |
| gravelcycling | 45,000 | 0.628 | 97 | 0.216 | 34.3 |
| mountainbiking | 86,200 | 0.283 | 71 | 0.082 | 29.1 |
| MTB | 223,000 | 0.707 | 170 | 0.076 | 10.8 |
| single speed | 6600 | 0.105 | 100 | 1.515 | 1440.0 |
| xbiking | 35,800 | 0.499 | 88 | 0.246 | 49.2 |
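The last two columns of the table above can be recomputed from the first three. A minimal sketch in Python, assuming that the average number of users online equals the registered membership multiplied by the tabulated online percentage; the function name `poll_shares` is hypothetical and only two rows are used as input.

```python
def poll_shares(registered: int, pct_online: float, participants: int) -> tuple[float, float]:
    """Return poll participants as % of registered users and as % of users online.

    Assumption: average users online = registered * pct_online / 100.
    """
    online = registered * pct_online / 100
    pct_of_registered = round(100 * participants / registered, 3)
    pct_of_online = round(100 * participants / online, 1)
    return pct_of_registered, pct_of_online


# Two rows copied from the table: (registered users, average % online, poll participants)
ROWS = {
    "bmx": (38_200, 0.585, 64),
    "MTB": (223_000, 0.707, 170),
}

for name, row in ROWS.items():
    print(name, *poll_shares(*row))
```

Where a recomputed figure departs slightly from the table (single speed gives about 1443 rather than 1440.0), the difference presumably reflects rounding of the published online percentage.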

| Age (years) | Reddit: Men (%) | Reddit: Women (%) | Reddit: n | Non-Reddit: Men (%) | Non-Reddit: Women (%) | Non-Reddit: n |
|---|---|---|---|---|---|---|
| 16–19 | 77.3 | 22.7 | 242 | 68.8 | 31.3 | 16 |
| 20–24 | 74.4 | 25.6 | 687 | 67.3 | 32.7 | 55 |
| 25–29 | 73.4 | 26.6 | 804 | 64.1 | 35.9 | 64 |
| 30–34 | 76.0 | 24.0 | 663 | 54.2 | 45.8 | 48 |
| 35–39 | 77.5 | 22.5 | 364 | 40.8 | 59.2 | 49 |
| 40–44 | 81.8 | 18.2 | 242 | 46.4 | 53.6 | 28 |
| 45–49 | 81.5 | 18.5 | 130 | 52.0 | 48.0 | 25 |
| 50–54 | 78.6 | 21.4 | 117 | 48.4 | 51.6 | 31 |
| 55–59 | 69.8 | 30.2 | 63 | 46.2 | 53.8 | 26 |
| 60–64 | 64.6 | 35.4 | 48 | 47.4 | 52.6 | 19 |
| 65–69 | 88.2 | 11.8 | 17 | 30.0 | 70.0 | 10 |
| 70+ | 100.0 | 0.0 | 4 | 37.5 | 62.5 | 8 |
| All | 75.8 | 24.2 | 3381 | 53.6 | 46.4 | 379 |
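Whether the share of men differs between the Reddit and non-Reddit cohorts can be checked with a test of independence. The sketch below is an assumption about method, not necessarily the analysis described in Section 2.4.2: it rebuilds approximate counts from the rounded percentages in the "All" row and computes a Pearson chi-square statistic for the resulting 2 × 2 table. The function names are hypothetical.

```python
def counts_from_share(n: int, pct_men: float) -> tuple[int, int]:
    """Approximate (men, women) counts from a total and a rounded percentage of men."""
    men = round(n * pct_men / 100)
    return men, n - men


def chi_square_2x2(a: int, b: int, c: int, d: int) -> float:
    """Pearson chi-square statistic (no continuity correction) for [[a, b], [c, d]]."""
    n = a + b + c + d
    observed = ((a, b), (c, d))
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    return chi2


# "All" row of the table: Reddit n = 3381 (75.8% men); Non-Reddit n = 379 (53.6% men)
reddit = counts_from_share(3381, 75.8)
non_reddit = counts_from_share(379, 53.6)
chi2 = chi_square_2x2(*reddit, *non_reddit)

CRITICAL_DF1_P05 = 3.841  # chi-square critical value for df = 1, alpha = 0.05
print(reddit, non_reddit, round(chi2, 1), chi2 > CRITICAL_DF1_P05)
```

A statistic above 3.841 would indicate, at the 5% level, that the gender split depends on the cohort. Because the counts are rebuilt from rounded percentages, they may differ by one or two from the raw data, so a test on the original counts is preferable where available.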

| Cohort | Women: Median (min:s) | Women: Avg ± SD (min:s) | Women: Min–Max (min:s) | Women: n | Men: Median (min:s) | Men: Avg ± SD (min:s) | Men: Min–Max (min:s) | Men: n |
|---|---|---|---|---|---|---|---|---|
| (a) Complete | | | | | | | | |
| Non-Reddit | 22:56 | 26:31 ± 14:19 | 11:58–114:28 | 112 | 22:46 | 28:17 ± 18:38 | 11:10–118:25 | 109 |
| General Reddit | 20:05 | 23:50 ± 11:45 | 8:41–70:10 | 107 | 21:44 | 25:08 ± 13:18 | 6:46–99:12 | 230 |
| Outdoor | 21:45 | 23:42 ± 10:01 | 8:10–69:25 | 114 | 21:42 | 25:17 ± 15:09 | 7:54–111:06 | 220 |
| Adventure | 20:30 | 24:46 ± 15:22 | 6:26–115:22 | 123 | 21:12 | 24:31 ± 13:28 | 5:23–112:33 | 545 |
| Mental Health | 21:15 | 23:08 ± 19:03 | 13:26–35:27 | 26 | 22:59 | 22:37 ± 8:30 | 7:51–31:32 | 14 |
| Total | 21:41 | 24:53 ± 13:33 | 6:26–115:22 | 487 | 21:42 | 25:08 ± 14:19 | 5:23–118:25 | 1122 |
| (b) Incomplete | | | | | | | | |
| Non-Reddit | 7:20 | 13:22 ± 16:59 | 1:03–98:27 | 58 | 8:56 | 12:45 ± 14:04 | 1:00–87:33 | 83 |
| General Reddit | 5:49 | 8:23 ± 7:41 | 0:41–46:56 | 125 | 6:43 | 10:28 ± 14:12 | 0:37–108:44 | 312 |
| Outdoor | 7:18 | 10:05 ± 11:45 | 0:54–103:53 | 124 | 7:03 | 10:24 ± 12:42 | 0:55–108:03 | 309 |
| Adventure | 8:01 | 10:47 ± 12:28 | 1:11–106:40 | 103 | 7:29 | 10:38 ± 11:50 | 0:40–104:44 | 802 |
| Mental Health | 10:07 | 13:26 ± 9:18 | 1:24–37:55 | 69 | 14:48 | 18:28 ± 15:43 | 1:07–86:22 | 65 |
| Total | 7:30 | 10:40 ± 11:32 | 0:41–106:40 | 489 | 7:26 | 10:59 ± 12:46 | 0:37–108:44 | 1584 |
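The durations in the table above are written as minutes:seconds (e.g. 114:28 for the longest non-Reddit completion). A minimal sketch of how such summary cells could be produced from raw durations; the three input times are hypothetical and the helper names are illustrative only.

```python
from statistics import mean, median, stdev


def to_seconds(mmss: str) -> int:
    """Convert 'minutes:seconds' (e.g. '114:28') to whole seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def to_mmss(total_seconds: float) -> str:
    """Convert seconds back to 'minutes:seconds', rounding to the nearest second."""
    s = round(total_seconds)
    return f"{s // 60}:{s % 60:02d}"


def summarize(times: list[str]) -> dict[str, object]:
    """Median, mean ± sample SD, range and n, formatted like the table cells."""
    secs = [to_seconds(t) for t in times]
    return {
        "median": to_mmss(median(secs)),
        "avg_sd": f"{to_mmss(mean(secs))} ± {to_mmss(stdev(secs))}",
        "min_max": f"{to_mmss(min(secs))}–{to_mmss(max(secs))}",
        "n": len(secs),
    }


# Hypothetical completion times for three participants
print(summarize(["11:58", "22:56", "30:00"]))
```

The sketch uses the sample standard deviation (n − 1 denominator); whether the table used the sample or the population form is not stated.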

| Time (min) | P2 | P3 | P4 | P5 | P6 | P7 | P8 | P9 | P10 | R1 | R2 | R3 | R4 | R5 | R6 | R7 | n |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 5 | 2.2 | 19.4 | 7.8 | 5.0 | 13.3 | 7.2 | 8.3 | 29.4 | 4.4 | 2.2 | 0.6 | 180 | |||||
| 10 | 1.3 | 2.6 | 1.3 | 17.9 | 29.8 | 19.9 | 12.6 | 4.0 | 2.6 | 1.3 | 1.3 | 5.3 | 151 | ||||
| 15 | 0.7 | 4.0 | 6.7 | 8.1 | 10.7 | 4.0 | 6.7 | 2.7 | 4.7 | 51.7 | 149 | ||||||
| 20 | 0.6 | 1.2 | 0.6 | 3.0 | 2.4 | 4.2 | 2.4 | 7.9 | 77.6 | 165 | |||||||
| 25 | 0.8 | 0.8 | 0.8 | 4.6 | 10.7 | 82.4 | 131 | ||||||||||
| 30 | 1.1 | 1.1 | 1.1 | 1.1 | 2.2 | 12.2 | 81.1 | 90 | |||||||||
| 35 | 2.4 | 2.4 | 2.4 | 92.7 | 41 | ||||||||||||
| 40 | 4.3 | 8.7 | 4.3 | 82.6 | 23 | ||||||||||||
| 45 | 12.5 | 87.5 | 8 | ||||||||||||||
| 50 | 12.5 | 87.5 | 8 | ||||||||||||||
| 55 | 12.5 | 12.5 | 12.5 | 12.5 | 50.0 | 8 | |||||||||||
| 60 | 100.0 | 5 | |||||||||||||||
| 90 | 11.1 | 88.9 | 9 | ||||||||||||||
| 120 | 12.5 | 12.5 | 12.5 | 62.5 | 8 | ||||||||||||
| 150 | 20.0 | 20.0 | 60.0 | 5 | |||||||||||||
| 180 | 4.2 | 4.2 | 8.3 | 4.2 | 8.3 | 70.8 | 24 |

Shading classes (%): 0.01–2.0; 2.1–8.2; 8.3–18.4; 18.5–32.7; 32.8–51.0; 51.1–73.5; 73.6–100.
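The attrition tables read as cross-tabulations: each row appears to be a band of time spent on the survey, each column the last page reached (P2–P10, R1–R7), and each cell a row percentage, with n giving the row total (the rows sum to about 100). That reading is an inference from the layout. A minimal sketch of building such row percentages, assuming each record holds the minutes spent and the last page reached, and that a record falls into the smallest band whose upper limit is at least its duration (anything above 180 min is placed in the 180 band). The records and helper names are hypothetical.

```python
from bisect import bisect_left
from collections import Counter, defaultdict

# Upper limits (minutes) of the time bands used as row labels in the table
BAND_UPPER = [5, 10, 15, 20, 25, 30, 35, 40, 45, 50, 55, 60, 90, 120, 150, 180]


def time_band(minutes: float) -> int:
    """Smallest band upper limit >= minutes; anything above 180 goes into 180."""
    i = bisect_left(BAND_UPPER, minutes)
    return BAND_UPPER[min(i, len(BAND_UPPER) - 1)]


def row_percentages(records: list[tuple[float, str]]) -> dict[int, tuple[dict[str, float], int]]:
    """Map each time band to ({last page: % of the band}, n)."""
    counts = defaultdict(Counter)
    for minutes, last_page in records:
        counts[time_band(minutes)][last_page] += 1
    table = {}
    for band in sorted(counts):
        n = sum(counts[band].values())
        table[band] = ({page: round(100 * k / n, 1) for page, k in counts[band].items()}, n)
    return table


# Hypothetical records: (minutes spent, last page reached)
records = [(3.0, "P3"), (4.5, "P3"), (4.9, "P10"), (12.0, "R7"), (200.0, "R7")]
for band, (shares, n) in row_percentages(records).items():
    print(band, shares, n)
```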

| Time (min) | P2 | P3 | P4 | P5 | P6 | P7 | P8 | P9 | P10 | R1 | R2 | R3 | R4 | R5 | R6 | R7 | n |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 5 | 1.7 | 23.4 | 11.5 | 9.9 | 9.4 | 8.5 | 4.4 | 22.1 | 6.2 | 2.4 | 0.2 | 0.2 | 0.2 | 585 | |||
| 10 | 0.2 | 0.8 | 1.1 | 1.5 | 3.2 | 17.6 | 31.5 | 20.4 | 11.3 | 4.0 | 2.1 | 1.5 | 0.6 | 4.2 | 476 | ||
| 15 | 0.2 | 0.2 | 2.4 | 6.2 | 11.5 | 11.5 | 8.6 | 7.9 | 4.5 | 5.0 | 41.9 | 418 | |||||
| 20 | 0.2 | 0.2 | 0.7 | 2.1 | 1.9 | 2.4 | 4.0 | 4.8 | 5.5 | 7.1 | 71.0 | 420 | |||||
| 25 | 0.3 | 0.3 | 2.9 | 1.3 | 1.6 | 1.9 | 1.6 | 2.6 | 2.6 | 7.7 | 77.3 | 313 | |||||
| 30 | 0.5 | 1.1 | 1.1 | 2.6 | 1.6 | 1.1 | 0.5 | 5.3 | 86.2 | 189 | |||||||
| 35 | 1.8 | 0.9 | 1.8 | 1.8 | 3.6 | 6.4 | 83.6 | 110 | |||||||||
| 40 | 2.4 | 2.4 | 2.4 | 2.4 | 4.9 | 85.4 | 41 | ||||||||||
| 45 | 2.2 | 2.2 | 2.2 | 11.1 | 2.2 | 4.4 | 8.9 | 66.7 | 45 | ||||||||
| 50 | 4.5 | 9.1 | 4.5 | 4.5 | 4.5 | 4.5 | 4.5 | 63.6 | 22 | ||||||||
| 55 | 6.7 | 6.7 | 13.3 | 73.3 | 15 | ||||||||||||
| 60 | 20.0 | 10.0 | 10.0 | 20.0 | 40.0 | 10 | |||||||||||
| 90 | 2.7 | 2.7 | 16.2 | 8.1 | 2.7 | 5.4 | 5.4 | 56.8 | 37 | ||||||||
| 120 | 4.0 | 16.0 | 4.0 | 4.0 | 4.0 | 4.0 | 64.0 | 25 | |||||||||
| 150 | 12.5 | 12.5 | 6.3 | 68.8 | 16 | ||||||||||||
| 180 | 1.7 | 1.7 | 5.0 | 5.0 | 1.7 | 5.0 | 6.7 | 5.0 | 1.7 | 3.3 | 63.3 | 60 |

Shading classes (%): 0.01–2.0; 2.1–8.2; 8.3–18.4; 18.5–32.7; 32.8–51.0; 51.1–73.5; 73.6–100.
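The class limits in the shading legends are not evenly spaced. They coincide, to one decimal place, with 100·(k/7)² for k = 1 to 7, i.e. seven classes of equal width on a square-root scale; this is an inference from the numbers, not something the legend states. A short sketch that regenerates the limits and assigns a cell value to its class, using the rounded limits so that boundary values such as 8.2 fall where the legend puts them (function names are hypothetical):

```python
from bisect import bisect_left


def class_limits(n_classes: int = 7) -> list[float]:
    """Upper class limits 100 * (k / n)^2, rounded to one decimal as in the legend."""
    return [round(100 * (k / n_classes) ** 2, 1) for k in range(1, n_classes + 1)]


LIMITS = class_limits()


def shading_class(pct: float) -> int:
    """Class number 1..7 for a cell value; 0 for an empty (zero) cell."""
    if pct <= 0:
        return 0
    return min(len(LIMITS), bisect_left(LIMITS, pct) + 1)


# Rebuild the legend text: each lower limit is the previous upper limit + 0.1
lower = 0.01
for k, upper in enumerate(LIMITS, start=1):
    print(f"class {k}: {lower}–{upper}")
    lower = round(upper + 0.1, 1)
```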


© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Spennemann, D.H.R. Persistence and Attrition among Participants in a Multi-Page Online Survey Recruited via Reddit’s Social Media Network. Soc. Sci. 2022, 11, 31. https://doi.org/10.3390/socsci11020031
