1. Introduction

During instrumental performance, musicians are exposed to auditory, visual, and somatosensory cues. This multisensory experience has long been studied (Campbell 2014; Cochran 1931; Galembo and Askenfelt 2003; Hodges et al. 2005; Palmer et al. 1989; Turner 1939); however, the specific interaction between sound and vibrations in musical instrument playing has only been the object of systematic research since the 1980s (Askenfelt and Jansson 1992; Fontana et al. 2017; Keane and Dodd 2011; Marshall 1986; Saitis 2013; Suzuki 1986; Wollman et al. 2014), with an increasing recognition of the prominent role of tactile and force feedback cues in the complex perception–action mechanisms involved (O’Modhrain and Gillespie 2018). More recently, research on the somatosensory perception of musical instruments has consolidated, as testified by the emerging “musical haptics” topic (Papetti and Saitis 2018a). This growing interest is in part driven by the increased availability of accurate sensors capable of recording touch gestures and vibratory cues, and of affordable and efficient actuators rendering vibrations or force. Touch sensors and actuators can be employed first to investigate the joint role of the auditory and somatosensory modalities in the perception of musical instruments, and then to realize novel musical interfaces and instruments, building on the lessons of the previous investigation (Marshall and Wanderley 2006; O’Modhrain and Chafe 2000; Overholt et al. 2011). Through this process, richer or even unconventional feedback cues can be conveyed to the performer, with the goal of enhancing engagement and improving the initial acceptability and playability of the new musical instrument (Birnbaum and Wanderley 2007; Fontana et al. 2015; Michailidis and Bullock 2011; Young et al. 2017).

In this perspective, several musical haptics studies have aspired, on the one hand, to design next-generation digital musical interfaces yielding haptic feedback (Papetti and Saitis 2018b), and, on the other hand, to assess if and how haptic feedback is relevant to the perceived quality of musical instruments, to the performer’s experience and performance, and to the resulting musical outcome (Fontana et al. 2018; Saitis et al. 2018; Schmid 2014; Young et al. 2018).

While interest in haptic human–computer interfaces has grown exponentially in recent years, the transition from a human–computer to a performer–digital instrument interaction often requires shifting attention to a different set of design requirements. In addition, musicians establish an intimate physical connection with their instruments, and have a heightened sensitivity to the haptic feedback they receive from them. As a result, creating effective machine interactions with musicians involves much more than simply tailoring the design context to music. It requires implementing sensing and actuation technology with a high degree of accuracy and reliability, capable of recontextualizing the interaction in a way that enables making art. Achieving this requires an understanding of the complex interplay between technical aspects and artistic considerations, and a multidisciplinary approach that draws on expertise from a range of fields, including engineering, musicology, and human–computer interaction. Only by taking this holistic approach can we create digital musical instruments that offer a truly expressive and engaging haptic experience for musicians.

While looking at this pervasive overlap between accurate physical interaction and creative context, this paper summarizes results proposed by the authors over recent years in the form of design, application, and evaluation of various digital musical interfaces (Fontana et al. 2015; Järveläinen et al. 2022; Papetti et al. 2019, 2021). In contrast to most previous studies on the topic, our research aimed to achieve a high level of scientific rigor, with the goal of producing a solid foundation of empirical evidence that can be replicated by other researchers and practitioners. In particular, we employed relatively large sample sizes and modern statistical analysis techniques, and implemented strict control over experimental conditions. Additionally, we developed measurement tools and conducted several psychophysical experiments to investigate vibration perception under active touch conditions commonly found in musical performance (De Pra et al. 2021, 2022; Papetti et al. 2017), often obtaining radically diverging results when compared to the existing literature (Askenfelt and Jansson 1992; Verrillo 1992). Finally, we ensured accurate measurement and characterization of the haptic feedback provided by our interfaces, on the one hand to comply with psychophysical results, and on the other hand to enable the interpretation of experimental results based on objective quantities.

While limited to its specific scope, our research provides insight into the role of haptic feedback in a broad domain of musical interactions, covering a wide range of interface designs for digital sound generation and control:

Section 2 presents evidence of the significance of incorporating vibrations on a familiar class of interfaces as universal as digital piano keyboards;

Section 3 extends those findings to a less familiar, but more expressive, multidimensional musical interaction scenario through force-sensitive multitouch surfaces;

Section 4 restricts the focus to a specific musical control task, pitch-bending, so as to investigate the effects of haptic feedback on performance accuracy.

Finally, these arguments are discussed together in Section 5.

The authors hope that, due to the scope of the proposed interactions and the effort to present their characteristics in a coherent development, this survey helps disambiguate what pertains to art—on which we will not dare to claim any result deriving from our work—and what instead concerns science and technology. Such a disambiguation is necessary, in our opinion, to reconcile, rather than divide, art and science within the musical haptics domain.

2. Augmenting the Digital Piano with Vibration

When considering digital musical interfaces augmented with haptic feedback, the piano represents an especially relevant case study, not only for its importance in the history of Western musical tradition, but also for its potential in the musical instruments market and for the universality of the keyboard interface, perhaps the most widely adopted musical interface, which is standard even on modern synthesizers (Moog and Rhea 1990). When playing an acoustic piano, the performer is exposed to a variety of auditory, visual, kinesthetic, and vibrotactile cues that are combined and integrated to shape the pianist’s perception–action loop. From here, a question about the role of somatosensory feedback in forming the perception of a keyboard instrument naturally arises. Historically, the haptic properties of the musical keyboard were first rendered from a kinematic perspective, with the aim of reproducing the mechanical response of the keys (Cadoz et al. 1990; Oboe and De Poli 2006), also in light of experiments emphasizing the sensitivity of pianists to the keyboard mechanics (Galembo and Askenfelt 2003). Only recently, and also in light of the technological challenges posed by active control of the keyboard response using force-feedback devices, have researchers started to analyze the role of vibrotactile feedback as a potential conveyor of salient cues. Indeed, the latter is easier to implement in practice, as testified by early industrial outcomes in digital pianos (e.g., the Yamaha AvantGrand series), although documented feedback from customers is still missing (Guizzo 2010).

An early implementation attempt by some of the present authors claimed possible qualitative relevance of vibrotactile cues (Fontana et al. 2011). Later, along the same line, the ground for a substantial step forward was set when the present authors not only found significant sensitivity to such cues, but also hypothesized that pianists are sensitive to key vibrations even when their amplitude is below the standard subjective thresholds obtained by passively stimulating the human fingertip with purely sinusoidal stimuli (Fontana et al. 2017; Verrillo 1971). This conclusion only apparently contradicts previous experiments (Askenfelt and Jansson 1992): in fact, some of the authors of this paper demonstrated that vibrotactile sensitivity thresholds measured during active finger pressing are significantly lower than previously reported for passive touch (Papetti et al. 2017).

In this context, an experiment was set up, on the one hand, to assess the perceived quality of a digital piano depending on different types of vibrotactile feedback provided at the keyboard, and, on the other hand, to study possible effects of vibration on performance, namely, accuracy in timing and dynamics given a reference. This research is reported in Section 2.1.

The keyboard vibrations that were recorded on a grand piano for the purpose of the mentioned experiment were later complemented by vibration recordings taken on an upright piano and by binaural recordings taken from the pianist’s listening point. The recorded material was then packaged in a library compatible with a popular software sampler, and released in open-access form. The library is described in Section 2.4.

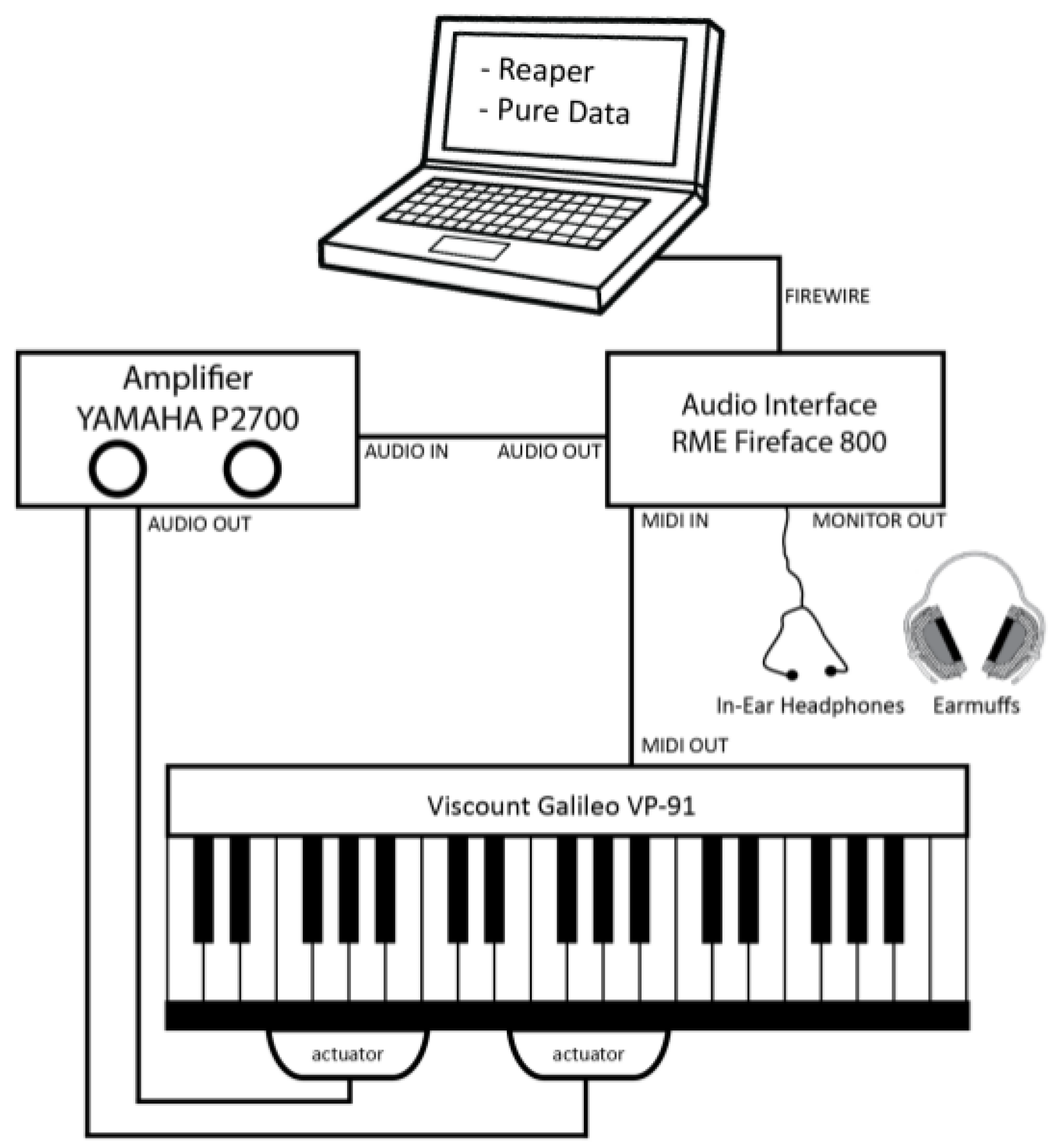

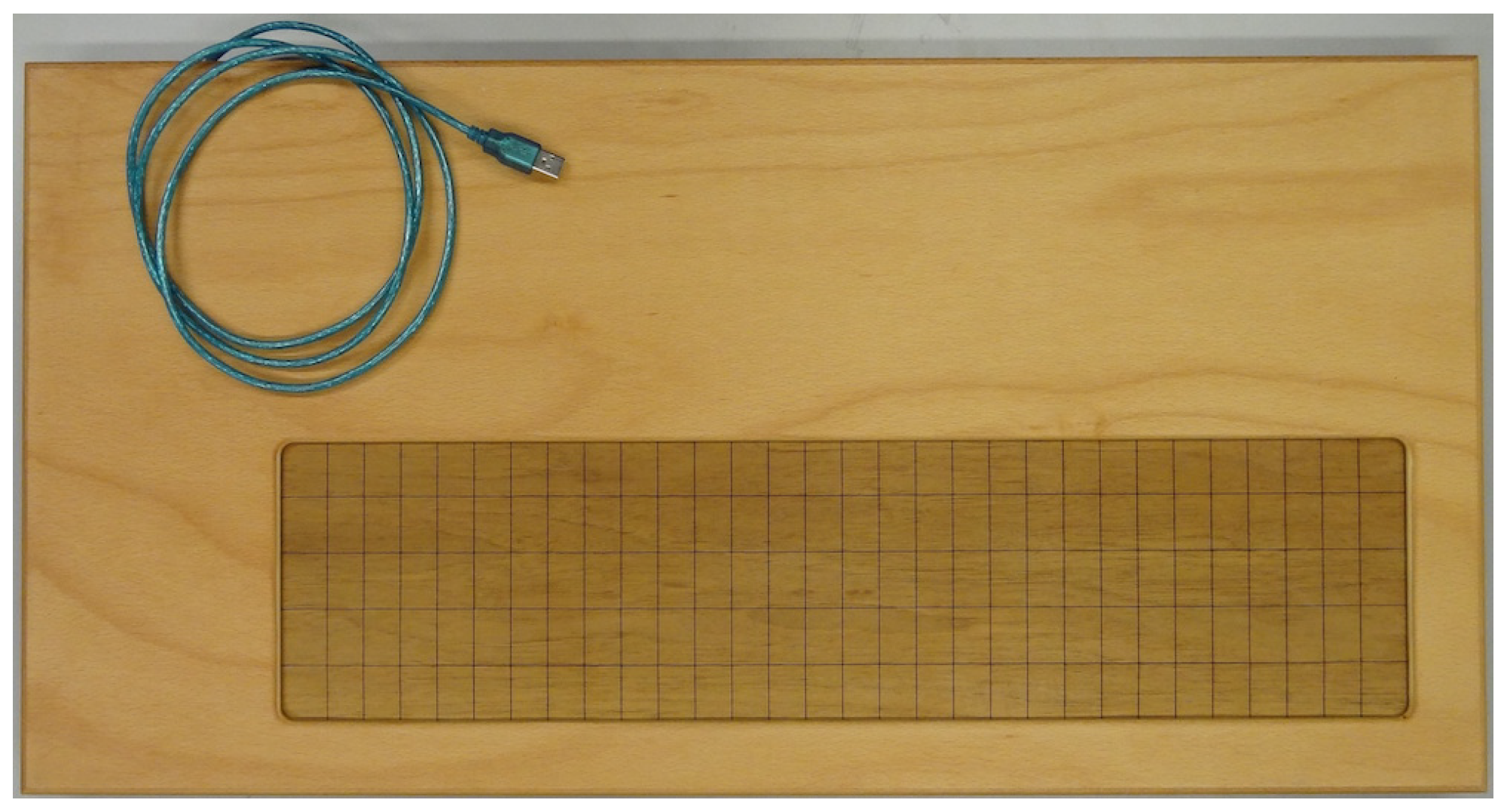

2.1. The VibroPiano

The keyboard of a Viscount Galileo VP-91 digital piano was detached from its metal casing, which also contained the electric and electronic hardware, and then fixed to a thick plywood board (see Figure 1).

Two Clark Synthesis TST239 Silver Tactile Transducers were attached to the bottom of the wooden board, in correspondence with the lower and middle octaves, respectively, so as to convey vibrations at the most relevant areas of the keyboard (Fontana et al. 2017). The keyboard was then laid on an X-shaped keyboard stand, interposing foam rubber at the contact points to minimize vibration propagation to the floor while at the same time reducing energy dissipation and optimizing the overall vibratory response.

The transducers were driven by a Yamaha P2700 amplifier fed by an RME Fireface 800 audio interface connected to a laptop. Sound and vibrotactile feedback was generated via software using the Reaper digital audio workstation,¹ hosting an instance of the Native Instruments Kontakt sampler² in series with the MeldaProduction MEqualizer parametric equalizer,³ as well as an instance of the Modartt Pianoteq 4.5 piano synthesizer.⁴

A schematic of the setup is shown in Figure 2.

Thanks to its physics-based sound engine allowing direct control of several acoustic and mechanical properties, the piano synthesizer was configured to match the sound of the grand piano used as reference (Yamaha Disklavier DC3 M4). Keyboard vibrations were recorded on the same piano (see

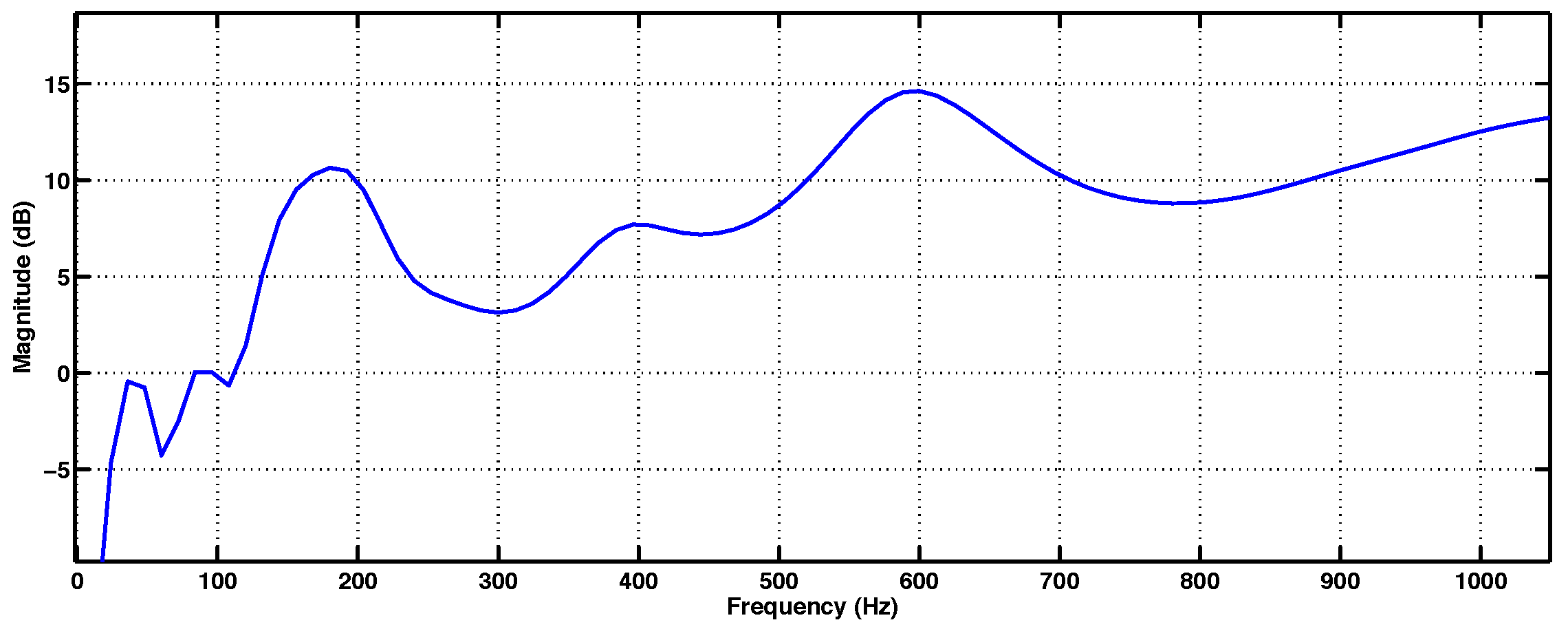

Section 2.4 for more details on the procedure), and their reproduction on the VibroPiano was made to spectrally match the original recorded vibrations by tuning the parametric equalizer as explained below.

Since two transducers are not sufficient for distributing a uniform equalization across the entire keyboard extension, a flattening characteristic was averaged over frequency responses computed for all the A keys (see

Figure 3).

At this point, important calibrations against the reference were still missing, chiefly loudness matching and key velocity calibration. Because the reference piano was a Yamaha Disklavier (a MIDI-compliant acoustic piano equipped with sensors for recording keystrokes and pedaling, and electromechanical motors for actuating the keys and pedals), it was possible to directly match MIDI velocity and the resulting loudness on the VibroPiano. Concerning key velocity calibration, it must be noted that the keys of the Disklavier and the VibroPiano have different response dynamics because of their mechanics. Since pianists adapt their style as a consequence of these differences, the digital keyboard had to be subjectively calibrated, aiming at equalizing its dynamics with that of the Disklavier. To this end, the velocity curve calibration routine available in Pianoteq was followed by an expert pianist, first performing on the Disklavier and then on the VibroPiano. As expected, two fairly different velocity maps were obtained. Then, the velocity curve of the VibroPiano was projected point-by-point onto the corresponding map of the Disklavier: the resulting key velocity transfer characteristic therefore allowed playing the VibroPiano with the dynamics of the Disklavier keyboard, thus ensuring that when a pianist played the digital keyboard at the desired dynamics, the corresponding vibration samples recorded on the Disklavier would be triggered. This curve was independently checked by two more pianists to validate its reliability and neutrality.

Concerning loudness matching, the tone produced by each A key of the Disklavier at various velocities was recorded using a KEMAR head positioned at the pianist’s location. Equivalent tones synthesized by Pianoteq were recorded with the KEMAR mannequin wearing a pair of Sennheiser CX 300-II earphones and, on top of them, a pair of 3M Peltor X5 earmuffs. The earmuffs ensured sufficient isolation from sounds produced as a byproduct of the vibrating setup in Figure 2. At this point, the loudness of the VibroPiano was matched to that of the Disklavier by using the volume mapping feature of Pianoteq, which allows one to independently set the volume of each key across the keyboard.

2.2. Experiment: Perceived Quality and Performance

Eleven pianists in professional training (5F/6M, average age 26 years) participated in a quality evaluation test and in a timing performance and dynamic stability test. Vibrotactile stimuli were produced by the VibroPiano. In addition to the vibrations recorded from the Disklavier, a second set of vibration signals was synthetically generated to reproduce the temporal envelope of the former, but with markedly different spectral content. To this end, white noise was generated and then bandpass-filtered in the frequency range 20–500 Hz, i.e., the range of interest for vibrotactile perception (Verrillo 1971). Several noise signals were then derived from it, one for each key, by passing the filtered noise through a resonant filter centered at the fundamental frequency of the respective key. The resulting signal, having a flat temporal envelope, was then modulated by the amplitude envelope of the corresponding recorded note vibration, which was estimated from the energy decay curve of the respective piano sample. Finally, the energy of the synthetic vibration was equalized to that of the corresponding real sample. The two sets of vibratory signals were loaded as two distinct instances of the Kontakt plug-in.
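The last stages of the synthesis chain described above (resonant filtering, envelope modulation, and energy equalization) can be sketched as follows. This is only an illustrative sketch, not the authors' implementation: the two-pole resonator, its pole radius `r`, the uniform noise source, the sampling rate, and the function names are all assumptions, and the 20–500 Hz bandpass stage is omitted for brevity.

```python
import math
import random


def resonator(x, f0, fs, r=0.995):
    """Two-pole resonant filter centered near f0 (pole radius r is an assumed value)."""
    a1 = -2.0 * r * math.cos(2.0 * math.pi * f0 / fs)
    a2 = r * r
    y1 = y2 = 0.0
    out = []
    for s in x:
        y = s - a1 * y1 - a2 * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def energy(x):
    """Sum of squared samples."""
    return sum(s * s for s in x)


def synthetic_vibration(f0, envelope, target_energy, fs=8000, seed=0):
    """Noise -> resonator at f0 -> envelope modulation -> energy equalization."""
    rng = random.Random(seed)
    noise = [rng.uniform(-1.0, 1.0) for _ in envelope]
    shaped = [e * s for e, s in zip(envelope, resonator(noise, f0, fs))]
    gain = math.sqrt(target_energy / energy(shaped))
    return [gain * s for s in shaped]


# Hypothetical usage: an exponential decay stands in for the envelope that the
# paper estimates from the energy decay curve of the recorded sample.
envelope = [math.exp(-3.0 * n / 8000) for n in range(4000)]
vibration = synthetic_vibration(55.0, envelope, target_energy=2.5)
```

Scaling by the square root of the energy ratio is what makes the synthetic signal carry the same energy as its recorded counterpart, so that conditions B and D differ in spectral content rather than in overall intensity.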

Three vibration conditions were assessed in comparison to a nonvibrating condition labeled A:

- B: Recorded vibrations;
- C: Recorded vibrations with 9 dB boost;
- D: Synthetic vibrations.

Auditory feedback was always provided by the Pianoteq synthesizer, with the same settings.

In the first test, the task was to play freely on the VibroPiano and assess the playing experience on five attribute rating scales: dynamic control, richness, engagement, naturalness, and general preference. The dynamics and range of playing were not restricted in any way; participants could switch freely between A and the current test condition until they were ready to make a judgment. Ratings were given on a continuous comparison category rating scale (CCR), ranging from −3 (vibrating setup much worse than the nonvibrating one) to +3 (vibrating setup much better than the nonvibrating one).

In the second test, participants could switch freely only between conditions A and B. In addition, a metronome sound at 120 BPM was delivered through the earphones. Participants were asked to play an ascending and then a descending D-major scale, playing a note at every second metronome beat while keeping the dynamics constant. The rationale for using such a simple task was to maintain maximum control over the experimental conditions. Had a score of musical significance been performed instead, the element of expressivity would have come into play, intrinsically introducing individual variations of dynamics and timing, thus making it difficult to achieve the desired experimental goal. Only the three lowest octaves were considered, so as to maximize vibrotactile feedback (Fontana et al. 2017); this required each participant to play with their left hand only. Each participant repeated the task at three dynamic levels (pp, mf, ff), three times each in both conditions, for a total of 18 randomized trials. MIDI data of “note ON”, “note duration”, and “key velocity” messages were recorded throughout this test for subsequent analysis. The hypothesis was that if the participants’ timing and dynamic behavior were affected by key vibrations, then differences would be seen in the means and standard deviations of key velocities and inter-onset intervals (IOIs).
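As an illustration of the quantities named in the hypothesis, the sketch below derives IOIs from note onset times and summarizes them together with key velocities. The function names and the data layout (onset times in seconds, MIDI velocities as integers) are assumptions made for this example and are not taken from the original analysis. At 120 BPM with one note every second beat, the nominal IOI is 1 s.

```python
from statistics import mean, stdev


def inter_onset_intervals(onsets):
    """Differences between consecutive note onset times (seconds)."""
    return [b - a for a, b in zip(onsets, onsets[1:])]


def summarize_trial(onsets, velocities):
    """Mean and standard deviation of IOIs and of key velocities for one trial."""
    iois = inter_onset_intervals(onsets)
    return {
        "ioi_mean": mean(iois),
        "ioi_sd": stdev(iois),
        "velocity_mean": mean(velocities),
        "velocity_sd": stdev(velocities),
    }


# Hypothetical trial: four notes played exactly on every second beat.
summary = summarize_trial([0.0, 1.0, 2.0, 3.0], [60, 64, 62, 62])
```

Comparing such per-trial summaries between conditions A and B, at each dynamic level, is the kind of contrast the hypothesis refers to.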

2.3. Results

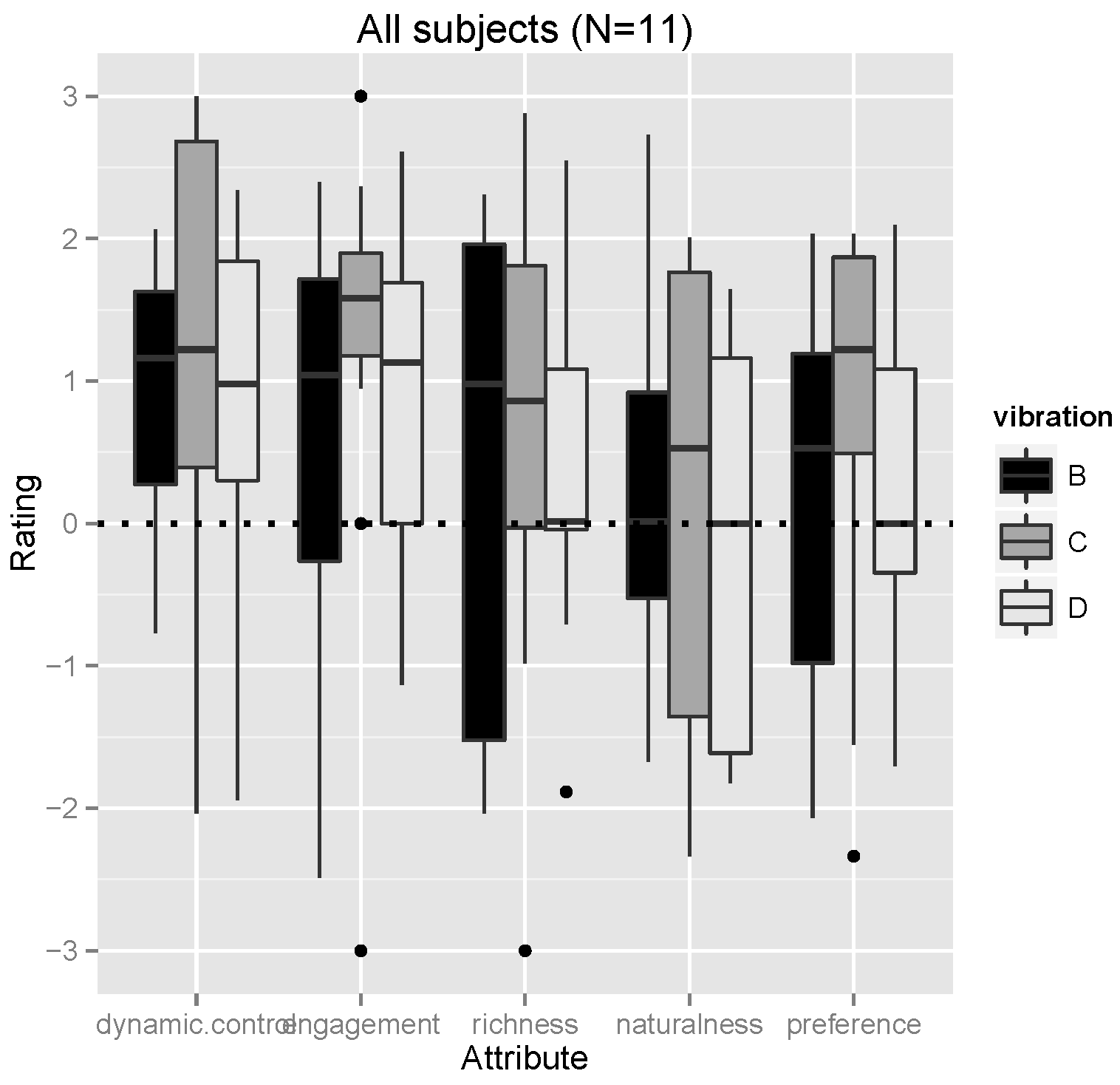

Results from the first test on perceived quality are plotted in Figure 4.

On average, all vibrating conditions were preferred over A (nonvibrating), the only exception being D (synthetic vibrations) for naturalness. For conditions B and C (real and boosted real vibrations), naturalness received slightly positive scores. The strongest preferences were for dynamic control and engagement. General preference and richness had very similar mean scores, although somewhat lower than engagement and dynamic control. Generally, C was preferred the most: it scored highest on four out of five scales, although B was considered the most natural. Interestingly enough, B scored lowest on all other scales. The analysis is presented in more detail in Fontana et al. (2015).

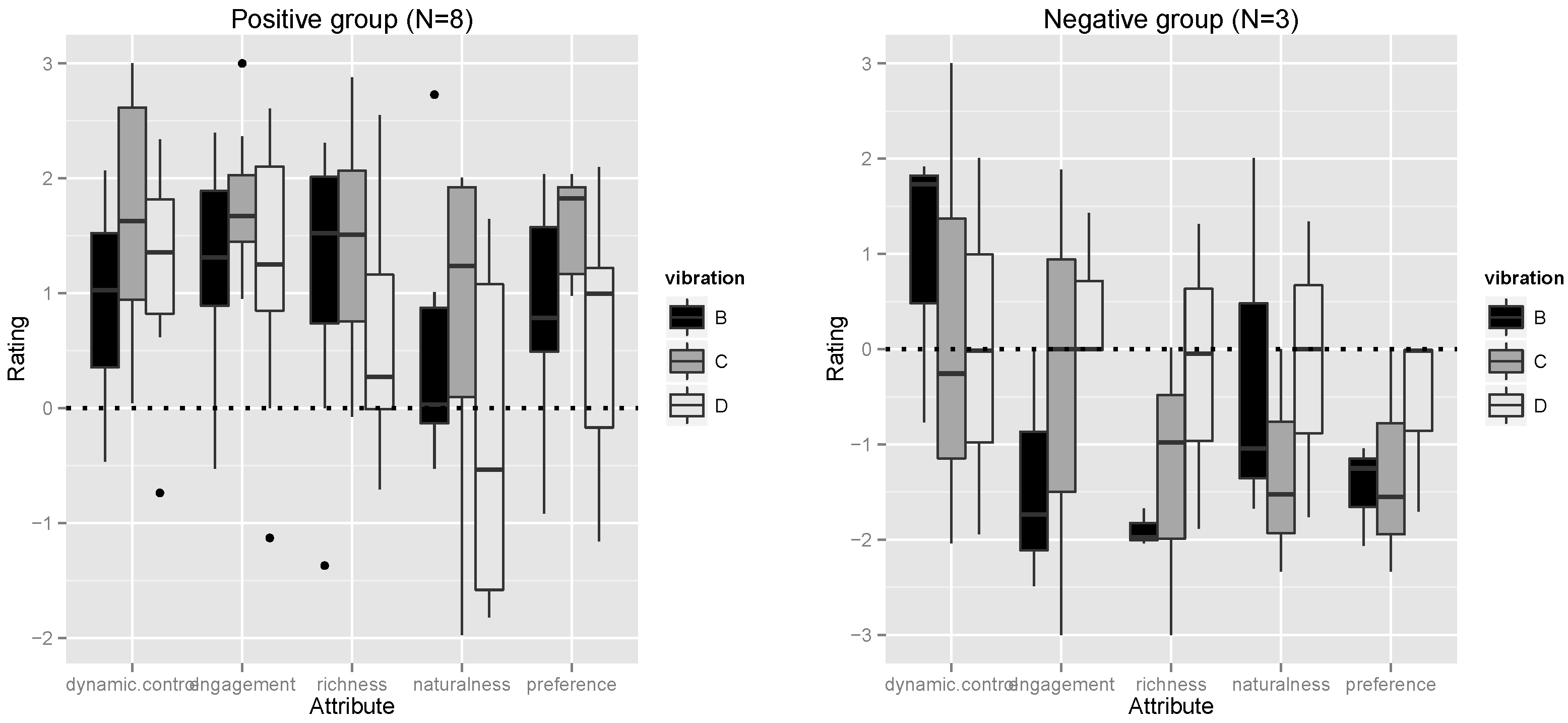

Segmentation performed a posteriori revealed differences between participants: eight of them preferred vibrations and three preferred the nonvibrating setup. The difference between these groups is evident: the median ratings for the most preferred condition (C) were nearly +2 in the positive group and −1.5 in the negative group concerning general preference. Figure 5 presents the differences between such groups.

Overall, these results show that key vibrations increase the perceived quality of a digital piano. Although the recorded vibrations were perceived as the most natural, amplified natural vibrations were overall preferred and received the highest scores on all other scales as well. Another interesting outcome is that the setup using synthetic vibrations was considered inferior to the nonvibrating setup only in terms of naturalness: this suggests that pianists are indeed sensitive to the match between the auditory and vibrotactile feedback. The attribute scales with the highest correlation to general preference were engagement and richness. A similar result was obtained in a study on violin evaluation, where richness was significantly associated with preference (Saitis et al. 2012).

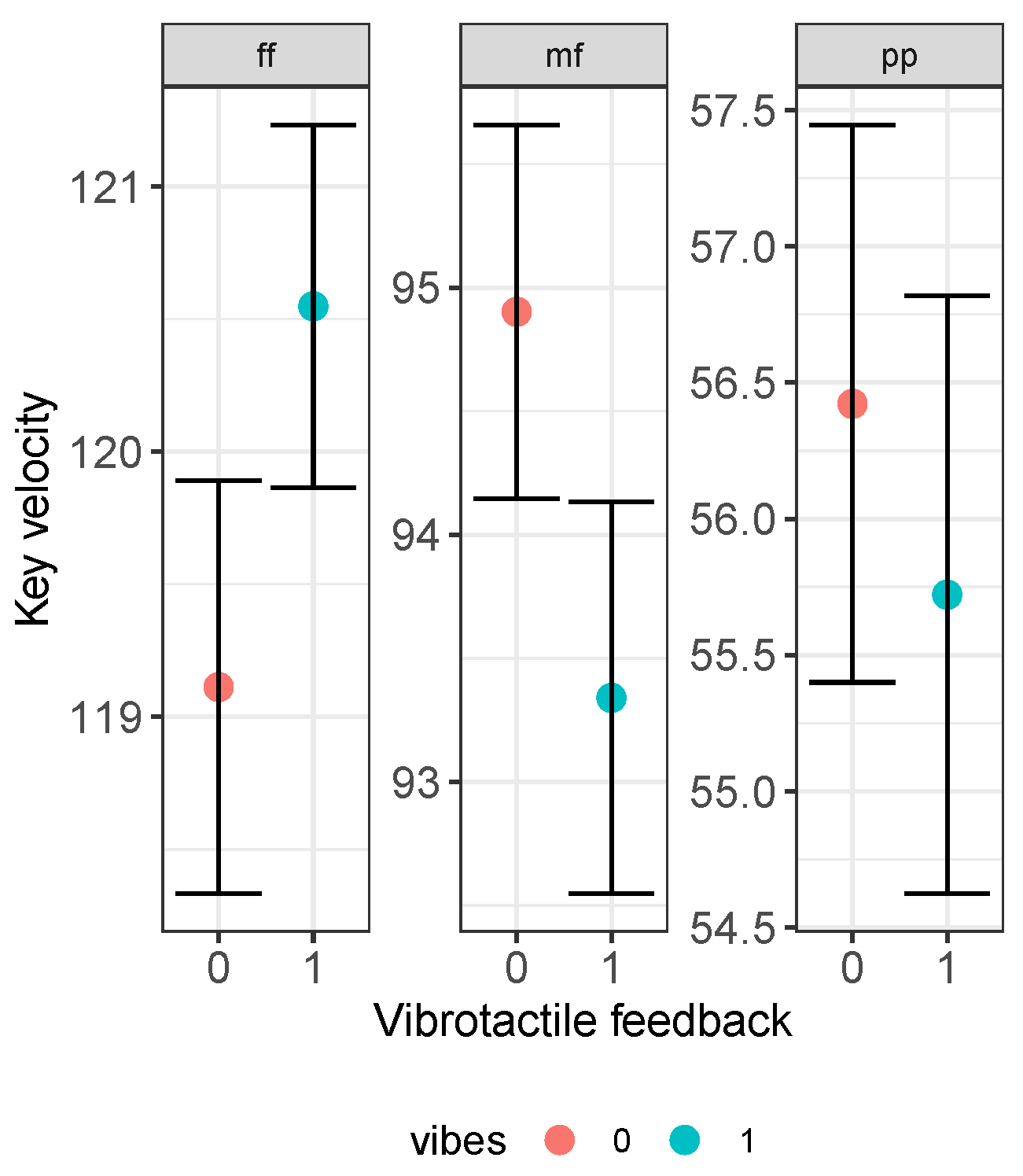

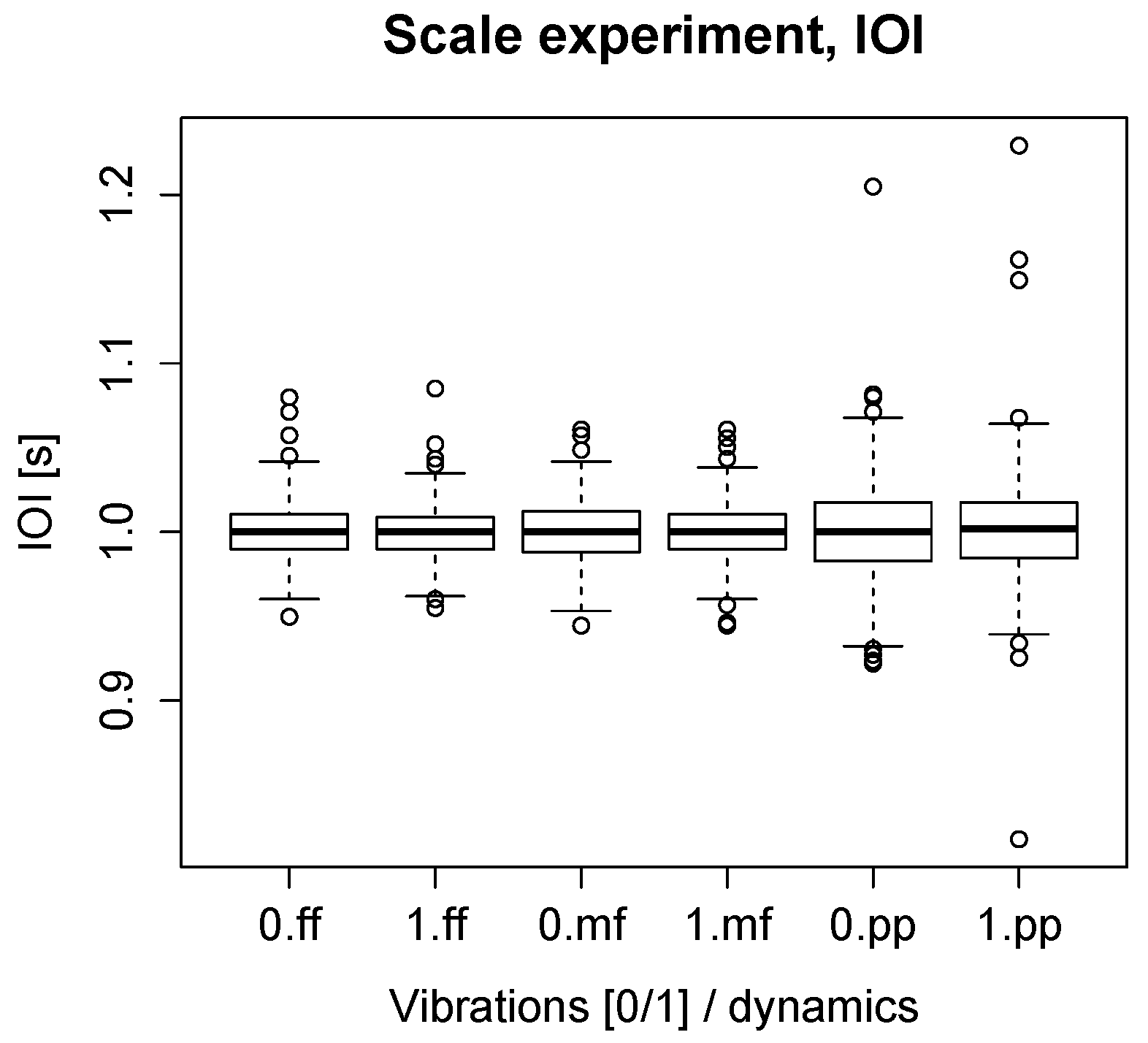

In the second test, mean key velocities were computed for each subject as the average over the three repeated runs for each condition. Results are presented in Figure 6.

At the dynamic level, subjects played just slightly louder in presence of vibrations than without; conversely, at the level they played slightly softer. However, a repeated measures ANOVA did not reveal a significant effect for either vibrations (, ) or the interaction between vibrations and dynamic level (, ). No effect was observed by studying the lowest octave alone, where the vibrations would be felt strongest, nor was there a significant difference in the standard deviations between conditions A and B.

IOIs were, likewise, stable across the two conditions. Generally, they were slightly more scattered at the

level; however, no effect of vibrations was observed (see

Figure 7).

Note duration was also stable irrespective of vibrations, suggesting that there was no significant difference in articulation or note overlap.

2.4. BiVib

The development of the VibroPiano required the recording of accurate keyboard vibrations by means of calibrated devices. In addition, our recent research on piano sound localization (Fontana et al. 2017, 2018) yielded a number of piano sound recordings taken at the pianist’s listening point. With the aim of providing public access to such data, an annotated dataset of audio–tactile piano samples was organized as a library of synchronized binaural piano sounds and keyboard vibrations. The goal of sharing an open-access library was to foster research on the role of vibrations and tone localization in the pianist’s perceived instrument quality, as well as to add knowledge about the importance, at the cognitive level, of multisensory feedback for the design of novel musical keyboard interfaces.

The BiVib (Binaural and Vibratory) sample library is a collection of high-resolution audio files (.wav format, 24-bit @ 96 kHz) containing binaural piano sounds and keyboard vibrations, along with documentation and project files, for their reproduction through a free music software sampler. The dataset, whose core structure is illustrated in Table 1, is made available through an open-access data repository⁵ and released under a Creative Commons (CC BY-NC-SA 4.0) license for direct playback with the Kontakt (version 5 and above) software sampler available for Windows and Mac OS systems. Further directions on how to load BiVib in Kontakt or use it as a standalone dataset are given in Papetti et al. (2019).

The dataset contains samples recorded on two Yamaha Disklavier pianos—a grand DC3 M4 located in Padova (PD), Italy, and an upright model DU1A with control unit DKC-850 located in Zurich (ZH), Switzerland—at several dynamic levels for all 88 keys. The grand piano was located in a large laboratory space (approximately m), while the upright piano was in an acoustically treated small room (approximately m).

For the recording sessions, each key was triggered via MIDI control at 10 levels of dynamics, chosen between MIDI velocity 12 and 111 by evenly splitting this range (i.e., 12, 23, 34, 45, 56, 67, 78, 89, 100, 111): this choice was motivated by a previous study by the present authors, which reported that both Disklaviers produced inconsistent dynamics outside this velocity range (Fontana et al. 2017).
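The even split of the velocity range can be checked with a few lines of code. The function name and its default arguments are illustrative only; the values 12, 111, and 10 come from the text.

```python
def velocity_levels(lowest=12, highest=111, count=10):
    """Evenly spaced MIDI velocities from lowest to highest, inclusive."""
    step = (highest - lowest) // (count - 1)  # (111 - 12) // 9 = 11
    return [lowest + i * step for i in range(count)]


levels = velocity_levels()
# One sample per key and velocity level: 88 keys x 10 levels.
samples_per_set = 88 * len(levels)
```

With 88 keys and 10 levels, each recording configuration yields 880 samples, which matches the number of files per set mentioned for the preprocessing step.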

Binaural audio recordings made use of a dummy head with binaural microphones for acoustic measurements. The mannequin was placed in front of the piano, approximately where the pianist’s head is located on average (see Figure 8). The binaural microphones were connected to a professional audio interface. Three configurations of the keyboard lid were selected for each piano. The grand piano (PD) was measured with the lid closed, fully open, and removed (i.e., detached from the instrument). The upright piano was recorded with the lid closed, in semi-open position (see Figure 8), and fully open. The recording of binaural samples was driven by an automatic procedure programmed in SuperCollider.⁶ The recording sessions took place at nighttime, thus minimizing unwanted noise coming from human activity in the building. On the grand piano, note lengths were determined algorithmically depending on their dynamics and pitch, ranging from 30 s when A0 was played at key velocity 111, to 10 s when C8 was played at key velocity 12.⁷ These durations allowed each note to fade out completely, while minimizing silent recordings and the overall duration of the recording sessions; still, each session lasted approximately 6 h. By contrast, an undocumented protection mechanism on the upright piano prevents its electromechanical system from holding down the keys for more than about 17 s, thus making a complete decay impossible for some notes, especially at low pitches and high dynamics. In this case, for the sake of simplicity, all tones were recorded for as long as possible. Because of the mechanics of piano keyboards and the intrinsic limitations of electromechanical actuation, a systematic delay is introduced while reproducing MIDI note ON messages, which mainly varies with key dynamics. For this reason, all recorded samples started with silence of varying duration, which had to be removed in view of their use in a sampler. Given the number of files that had to be preprocessed (880 for each set), an automated procedure was implemented in SuperCollider to cut the initial silence of each audio sample.
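The automated removal of the initial silence can be illustrated with a simple amplitude-threshold rule. The original procedure was written in SuperCollider and its detection criterion is not described here, so the Python sketch below, including the function name, the threshold value, and the optional pre-roll, is an assumption rather than a description of the actual tool.

```python
def trim_leading_silence(samples, threshold=1e-3, pre_roll=0):
    """Drop samples before the first one whose magnitude exceeds threshold.

    pre_roll keeps that many samples ahead of the detected onset, so that the
    attack is not clipped. Returns an empty list if nothing exceeds threshold.
    """
    for index, value in enumerate(samples):
        if abs(value) > threshold:
            return samples[max(0, index - pre_roll):]
    return []


# Hypothetical example: three near-silent samples precede the note onset.
trimmed = trim_leading_silence([0.0, 0.0, 0.0005, 0.2, -0.1])
```

A fixed threshold is the simplest possible criterion; with recordings at very different dynamics, a threshold relative to each file's peak level might be a more robust choice.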

Keyboard vibration recordings were acquired with a Wilcoxon Research 736 accelerometer connected to the audio interface. The accelerometer was manually attached with double-sided adhesive tape to each key in sequence, as shown in Figure 9. Vibration samples were therefore recorded manually, as the accelerometer had to be moved for each key. Digital Audio Workstation (DAW) software was used to play back all notes in sequence at the same ten MIDI velocity values as those used for the binaural audio recordings, using a constant duration of 16 s, certainly greater than the time taken by any key vibration to decay below sensitivity thresholds (Fontana et al. 2017; Papetti et al. 2017). Vibration samples presented abrupt onsets in the first 200–250 ms right after the starting silence, as a consequence of the initial flight of the key and of the following impact against the piano keybed. These onsets are not related to keyboard vibrations; therefore, they had to be removed. As such onset profiles showed large variability, a manual approach was employed here as well: files were imported in a sound editor where the onset was cut off.

BiVib fills two gaps found in the datasets currently available for the reproduction of piano feedback: reproducibility and applicability in experiments. Concerning the former, experiments and applications requiring the use of calibrated data need exact reconstruction of the measured signals at the reproduction side: acoustic pressure for binaural sounds, and acceleration for keyboard vibrations. For instance, the reproduction of vibrations could take place on a weighted MIDI keyboard (as described in Section 2.1), while binaural sounds may be rendered through headphones. Knowledge of the recording equipment’s nominal specifications enables this reconstruction. Such specifications are summarized in a companion document in the “Documentation” folder. With regard to the latter, the BiVib library was originally created to support multisensory experiments in which precise control had to be maintained over the simultaneous auditory and vibrotactile stimuli reaching a performing pianist, particularly when judging the perceived quality of an instrument. A precise manipulation of the intensity relations between piano sound and vibrations may be used to investigate the existence of cross-modal effects occurring during piano playing. Such effects have been discovered as part of a more general multisensory integration mechanism (Kayser et al. 2005) that, under certain conditions, can increase the perceived intensity of auditory signals (Gillmeister and Eimer 2007), or conversely enhance touch perception (Ro et al. 2009). In addition to the experiment reported in Section 2.2, another potential use of BiVib is in the investigation of binaural spatial cues for the acoustic piano. Using the recommendations given in the previous section, single tones of the upright piano can, in fact, be accurately cleared of the room echoes, and then be reproduced in the position of the ear entrance. The existence of localization cues in piano sounds is not yet completely understood. Even in pianos where these cues are reported to be audible by listeners, their exact acoustic origin is still an open question (Askenfelt 1993).

Outside the laboratory, the library can reward musicians who simply wish to use its sounds. On the one hand, the grand piano recordings are ready for use, although labeled by the precise acoustic footprint of the recording room. On the other hand, the upright piano recordings are much more anechoic; hence, they can be easily imported and conveniently brought alive using artificial reverb.

3. A Haptic Multitouch Surface for Musical Expression

Moving to the domain of new interfaces for musical expression, touch surfaces have gained popularity as a means of musical interaction. This process has been assisted by the diffusion of touchscreen technology in mobile devices such as smartphones and tablets, and by the growing multitude of high-quality musical applications developed specifically for those platforms. At the same time, research efforts in human-centered and technological domains aimed at developing novel interactive surfaces yielding a rich haptic experience.

Notable early examples of tangible interfaces for music are the successful ReacTable (Jordá et al. 2005) and Lemur⁸ devices. Those interfaces, however, still lack the ability to establish a rich physical exchange with the user.

In this framework, we conducted a subjective assessment making use of the Madrona Labs Soundplane (Jones et al. 2009), a force-sensitive multitouch surface aimed at musical expression, which we augmented with multipoint localized vibrotactile feedback. Our study investigated how different types of vibrotactile feedback affect the playing experience and the perceived quality of the interface.

3.1. The HSoundplane

The original Soundplane was equipped with an actuator layer based on piezoelectric discs, and a software system for synthesizing vibrotactile feedback was developed, resulting in the HSoundplane prototype (where “H” stands for “haptic”), shown in Figure 10. The design of the haptic layer is described in detail in Papetti et al. (2018); therefore, only essential information is reported here. In order to drive the piezo discs with standard audio signals, custom amplifying and routing electronics were designed. The piezo elements were arranged on a flexible printed circuit board (PCB) foil in a

matrix configuration, matching the tiled pads on the Soundplane’s surface. The PCB foil connecting the piezo elements was laid on top of a thin rubber sheet with holes corresponding to each piezo disc: this ensured enough free space to allow for mechanical deflection of the actuators, thus optimizing their vibratory response. Such structure was then inserted beneath the touch surface of the interface, conveying vibrotactile signals to each of the tiled pads.

Client software for Mac computers receives multitouch data sensed by the interface and routes them to other applications using the Open Sound Control (OSC) protocol.⁹ Such messages carry absolute x, y coordinates (for position) and pressing force values along the z-axis for each contacting finger. An additional software application was developed in Cycling ’74 Max;¹⁰ it receives OSC touch data from the Soundplane application and uses them to drive two synthesizers, generating audio and vibration signals, respectively.

3.1.1. Sound Feedback

Sound was provided to the participants by means of closed-back headphones (Beyerdynamic DT 770 Pro).

The pitch of the audio feedback was controlled along the x-axis according to a chromatic subdivision which mapped each pad of the tiled surface to a semitone. The available pitch interval ranged, from left to right, from A2 (110 Hz) to D5 (587.33 Hz). Similar to the customary string coloring on the harp, the columns corresponding to C and F tones were painted, respectively, in red and blue (see

Figure 11), thus providing a clear pitch reference to the player.
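The chromatic mapping described above can be sketched as follows. This is only a minimal illustration, assuming equal temperament with A2 = 110 Hz and pad columns indexed from 0 at the left edge. The function name `pad_column_to_frequency` and the column count of 30 (inferred from the 29 semitones that separate A2 and D5) are assumptions made for this example, not details of the authors' implementation.

```python
A2_HZ = 110.0      # frequency of the leftmost column (A2), assuming A4 = 440 Hz
NUM_COLUMNS = 30   # assumed: A2..D5 spans 29 semitones, hence 30 chromatic steps


def pad_column_to_frequency(column: int) -> float:
    """Return the equal-tempered frequency in Hz for a 0-based pad column."""
    if not 0 <= column < NUM_COLUMNS:
        raise ValueError(f"column must be in 0..{NUM_COLUMNS - 1}")
    # Each column to the right raises the pitch by one semitone (factor 2^(1/12)).
    return A2_HZ * 2.0 ** (column / 12.0)


if __name__ == "__main__":
    # Leftmost pad, one octave up, and rightmost pad.
    for col in (0, 12, 29):
        print(col, round(pad_column_to_frequency(col), 2))
```

Under these assumptions, the rightmost column lands on D5, which matches the upper bound of the pitch interval reported in the text.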

Two types of sonic feedback were designed:

Sound 1: A markedly expressive setting, responding to subtleties and nuances in the performer’s gesture. It consisted of a sawtooth wave filtered by a resonant low-pass, and modulated by a vibrato effect (i.e., with amplitude and pitch modulation).

Y-axis control: The vibrato intensity varied along the y-axis, from no vibrato (bottom) to strong vibrato (top).

Z-axis control: The filter cutoff frequency was controlled by the applied pressing force (i.e., higher force corresponding to brighter sound), and so was the sound level (i.e., higher force for louder sound).

Sound 2: A setting offering a rather limited sonic palette and no amplitude dynamics. It consisted of a simple sine wave to which noise was added depending on the location along the y-axis.

Y-axis control: Moving upwards added white noise of increasing amplitude, filtered by a resonant bandpass. The filter’s center frequency followed the pitch of the respective tone.

Z-axis control: Pressing force data were ignored, resulting in fixed loudness.

All sounds were processed by a subtle reverb effect, which made the playing experience more acoustic-like.

Audio examples of the two sound types are available online

11, demonstrating C3, C4, and C5 tones modulated along the y- and z-axes.

3.1.2. Vibrotactile Feedback

Before being routed to the actuators, vibration signals were bandpass-filtered in the 10–500 Hz range, to remove signals outside of the vibrotactile perception range

Verrillo (

1992), as well as to optimize the actuators’ efficiency and, consequently, the vibratory response of the device.
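As a rough illustration of band-limiting a vibration signal to 10–500 Hz, the sketch below cascades a first-order high-pass and a first-order low-pass section. This is an assumption-laden stand-in: the text does not specify the filter type or order used in the Max patch, and a real implementation would likely use steeper filters. The names `band_limit`, `rms`, and `sine`, as well as the 48 kHz sample rate, are hypothetical.

```python
import math


def band_limit(signal, sample_rate=48000.0, low_hz=10.0, high_hz=500.0):
    """Gentle band-pass: first-order high-pass at low_hz, then low-pass at high_hz."""
    dt = 1.0 / sample_rate
    rc_hp = 1.0 / (2.0 * math.pi * low_hz)    # RC constant of the high-pass stage
    rc_lp = 1.0 / (2.0 * math.pi * high_hz)   # RC constant of the low-pass stage
    hp_coeff = rc_hp / (rc_hp + dt)
    lp_coeff = dt / (rc_lp + dt)
    out = []
    prev_x = 0.0   # previous input sample
    hp = 0.0       # high-pass state
    lp = 0.0       # low-pass state
    for x in signal:
        hp = hp_coeff * (hp + x - prev_x)   # removes content well below low_hz
        prev_x = x
        lp = lp + lp_coeff * (hp - lp)      # attenuates content above high_hz
        out.append(lp)
    return out


def rms(signal):
    """Root-mean-square level of a sequence of samples."""
    return math.sqrt(sum(s * s for s in signal) / len(signal))


def sine(freq_hz, seconds=0.5, sample_rate=48000.0):
    """Unit-amplitude test tone."""
    n = int(seconds * sample_rate)
    return [math.sin(2.0 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


if __name__ == "__main__":
    for f in (100.0, 5000.0):
        print(f, round(rms(band_limit(sine(f))), 3))
```

With these first-order sections, a 100 Hz tone passes almost unchanged while a 5 kHz tone is strongly attenuated, which is the qualitative behavior the band-pass stage is meant to provide.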

Three vibrotactile strategies were implemented:

Sine: Pure sinusoidal signals, whose pitch followed the fundamental of the played tones (within 110–587.33 Hz), and whose amplitude was controlled by the intensity of the pressing forces. By focusing vibratory energy at a single frequency, this setting aimed at producing a sharp vibrotactile sensation.

Audio: The same sounds generated by the HSoundplane were used to render vibration by also routing them to the actuator layer. Vibration signals thus shared the spectrum (within the 10–500 Hz pass-band) and dynamics of the related sound. This approach ensured maximal coherence between auditory and tactile feedback, mimicking what occurs on acoustic musical instruments, where the source of vibration coincides with that of sound.

Noise: A white noise signal was used, whose amplitude was fixed. This setting produced vibrotactile feedback generally uncorrelated with the auditory one, as it ignored any spectral and amplitude cues possibly conveyed by it. The only exception was with Sound 2 and high y-axis values, which resulted in a similar noisy signal.

The intensities of the three vibration types were set so that they felt mutually consistent.
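The difference between the force-controlled Sine strategy and the fixed-amplitude Noise strategy can be sketched as below. This is an illustrative sketch only: the pressing force is assumed to be normalized to [0, 1], the noise is drawn from a uniform distribution, and the function names, default amplitude, and sample rate are hypothetical. The Audio strategy is omitted because it simply reuses the sound signal.

```python
import math
import random


def sine_vibration(f0_hz, force, n_samples, sample_rate=48000):
    """Sinusoid at the tone's fundamental; amplitude follows the pressing force."""
    force = min(max(force, 0.0), 1.0)   # assumed normalized force in [0, 1]
    return [
        force * math.sin(2.0 * math.pi * f0_hz * n / sample_rate)
        for n in range(n_samples)
    ]


def noise_vibration(n_samples, amplitude=0.5, seed=None):
    """White noise with fixed amplitude, independent of pitch and force."""
    rng = random.Random(seed)
    return [amplitude * rng.uniform(-1.0, 1.0) for _ in range(n_samples)]


if __name__ == "__main__":
    soft = sine_vibration(110.0, 0.2, 48000)
    hard = sine_vibration(110.0, 0.8, 48000)
    print(max(soft) < max(hard))   # harder press gives stronger vibration
```

Only the sine signal carries gesture-dependent information here, which mirrors the contrast the experiment relies on.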

3.2. Experiment: Perceived Quality and Playing Experience

The different spectral and dynamics cues of the three available vibration types offered varying degrees of similarity with the audio feedback, thus making it possible to assess the importance of the match between sound and vibration. In addition, the two available sound settings offered different degrees of variability and expressive potential, allowing us to investigate whether a possible effect depends on audio feedback characteristics.

The assessments were made by comparing each of the vibrating setups against a respective nonvibrating configuration with the same sound setting. The three vibration types were crossed with the two sound settings, and ratings were measured on four attributes: preference, control and responsiveness (referred to as control), expressive potential (referred to as expression), and enjoyment. The attributes were rated by means of continuous sliders, with the extremes at 0 and 1 indicating maximal preference for the nonvibrating and vibrating setups, respectively. The 29 participants (7M/22F, average age 25 years) were either professional musicians or music students, and played keyboard or string instruments.

For each trial, the trajectories drawn across the surface by the participants were recorded.

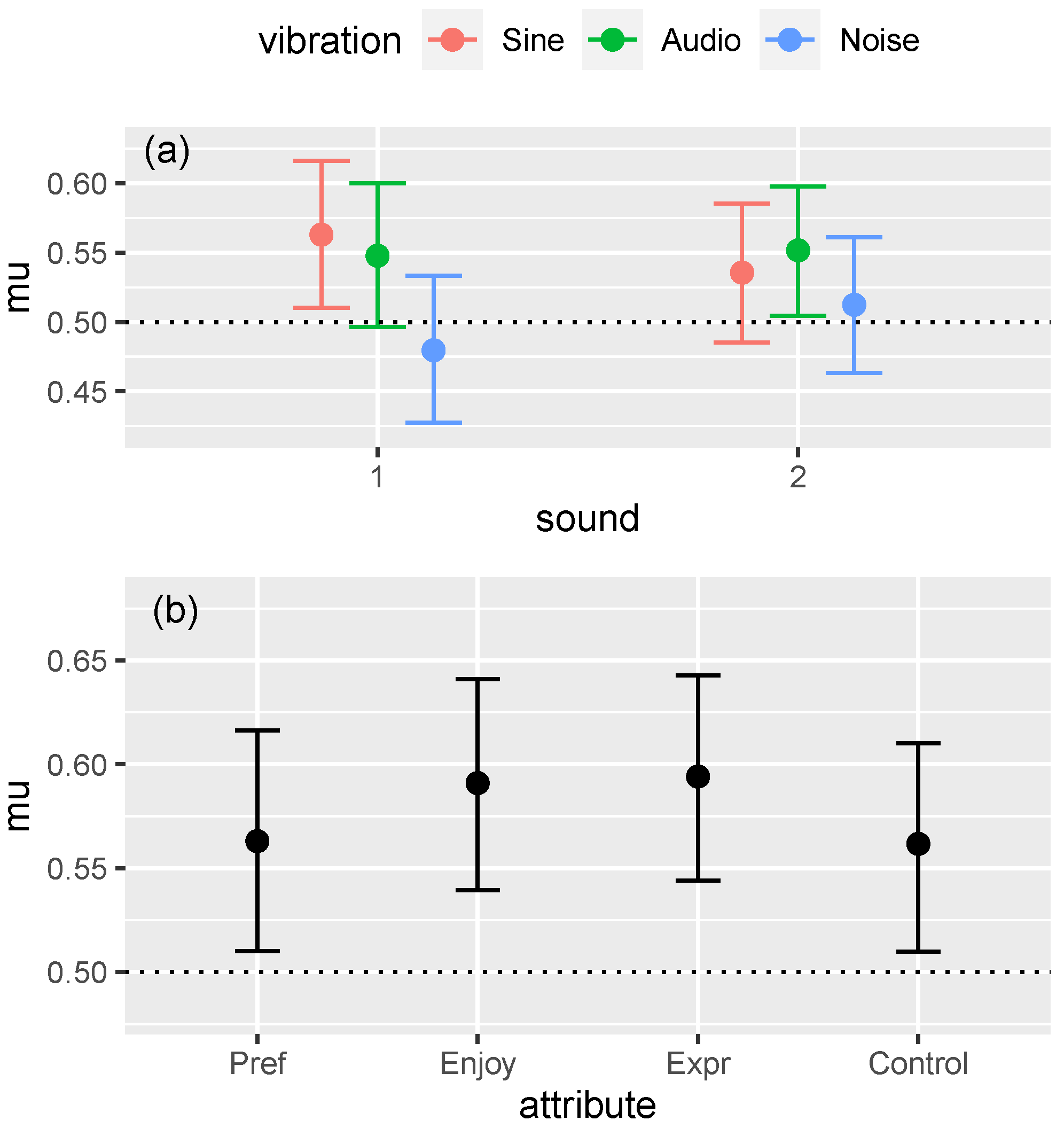

3.3. Results

Mean responses were estimated by Bayesian inference using a zero-one-inflated beta (ZOIB) model

Kruschke (

2014);

Liu and Kong (

2015);

Ospina and Ferrari (

2010). The model and detailed results are described in

Papetti et al. (

2021). On average, the vibrating setups were preferred to their nonvibrating versions: In fact, all mean estimates but one are above 0.50 (the point of perceived equality), as well as most of the respective credible intervals

12. As seen in

Figure 12b, the presence of vibrations had the strongest positive effect on

expression and

enjoyment.
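For reference, a ZOIB likelihood suits slider ratings of this kind because responses can accumulate exactly at the extremes 0 and 1 while the remaining ones lie strictly in between. One common parameterization is sketched below. The symbols $\alpha$ (probability of an extreme response), $\gamma$ (probability that an extreme response equals 1), $\mu$ (mean of the beta component), and $\phi$ (its precision) are notation assumed here for illustration; the specification actually used is the one given in Papetti et al. (2021).

```latex
f(y \mid \alpha, \gamma, \mu, \phi) =
\begin{cases}
\alpha\,(1-\gamma) & \text{if } y = 0,\\
\alpha\,\gamma & \text{if } y = 1,\\
(1-\alpha)\,\mathrm{Beta}\bigl(y \mid \mu\phi,\ (1-\mu)\phi\bigr) & \text{if } 0 < y < 1,
\end{cases}
\qquad
\mathbb{E}[y] = \alpha\gamma + (1-\alpha)\,\mu .
```

Under this parameterization, an estimated mean above 0.50 corresponds to an overall preference for the vibrating setup.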

Figure 12a shows that the effects of vibrations were somewhat stronger with the more expressive Sound 1 than with Sound 2. In both cases, audio and sine vibration had a positive effect, while noise did not credibly

13 increase the perceived quality of the device. The marginal effect of sound type was not credible in the ZOIB model. In combination with noise, however, Sound 2 had a slight but credible positive effect. In other words, a more marked effect was found when vibration was more similar to the sonic feedback and consistent with the user’s gesture: indeed, sine and audio vibration follow the pitch of the produced sound and their intensity can be controlled by pressure. Conversely, noise vibration—offering fixed amplitude, independent of the input gesture, and flat spectrum—was rated lowest among the vibrating setups. Noise vibration resulted in slightly better ratings when Sound 2 was used as compared to Sound 1: again, this was likely because vibrotactile feedback is consistent, at least partially, with the noise-like sonic feedback produced for high y-axis values.

In terms of distribution means, audio vibration was not credibly different from sine vibration, while noise vibration was rated credibly lower. Sound type had a credible effect on the estimated mean only in combination with noise vibration.

Some interesting observations were made from the density distributions of recorded x, y, and z trajectory data. Participants did not play very differently in presence or absence of vibrations; however, there were some differences in playing behavior between participants who gave positive or negative ratings to the vibrating setups, respectively. In the most preferred condition—that is, Sound 1 with sine vibrations—participants who gave positive ratings (N = 22) spent more time in the lower pitch range, applied a wider range of vibrato intensity, and pressed slightly harder to produce louder sounds than the participants who gave negative ratings (N = 7). As a result, the positive group would have felt stronger vibrations through playing more at the low-pitch range and applying higher pressing force

Papetti et al. (

2017);

Verrillo (

1992). For the least preferred configuration—that is, Sound 1 with noise vibration—such differences were less apparent.

4. Performance Control: The Case of Pitch-Bending

Novel digital musical interfaces have recently unlocked the possibility to map continuous and multidimensional gestures (e.g., finger pressing, sliding, tapping) to musical parameters, thus offering enhanced expressivity when controlling synthesizers and virtual instruments

Schwarz et al. (

2020);

Zappi and McPherson (

2014). A few noteworthy examples, currently available as commercial products, are the ROLI Seaboard RISE series

14, the Madrona Labs Soundplane (mentioned in

Section 3.2), and the Roger Linn Design Linnstrument

15, as well as various touchscreen-based GUIs designed for devices such as the Apple iPad.

Among the most musically relevant expressive controls, pitch modulation and pitch-bending have been traditionally possible to varying degrees on several musical instruments, for instance, stringed instruments, and even percussion, such as the tabla. On those instruments, pitch is typically controlled by deforming the source of vibration (e.g., by oscillating or bending their strings or drum membrane) with the same hand or fingers that play them. Conversely, on electronic and digital instruments—especially keyboard-based ones—pitch-bending is generally outsourced and assigned to a spring-loaded lever or wheel, which requires players to dedicate their left hand to operate. All of this makes the ergonomics of pitch-bending very different on such devices, as compared to traditional musical instruments. Novel digital musical interfaces such as those mentioned above have now reintroduced more natural and direct ways to modulate/bend pitch, for instance, by sliding up/down a finger on the same surface that is being played. However, despite the ability to fine-tune mapping sensitivity, performing such gestures on digital devices poses a major challenge for control accuracy. This may be due, in part, to the lack of haptic feedback provided by most current digital instruments, among other factors

Papetti and Saitis (

2018b).

It is known from the literature that young normal-hearing adults reach an auditory pitch discrimination accuracy of 0.5% in a wide frequency range, while musicians can reach even 0.1%

Moore and Peters (

1992);

Spiegel and Watson (

1984). Although limited in frequency compared to the audible range, vibrotactile stimuli may also excite a pitch sensation which depends on frequency and amplitude (or energy)

Harris et al. (

2006);

Verrillo (

1992). Discrimination accuracy between 18% and 3% has been reported

Franzén and Nordmark (

1975);

Pongrac (

2006). Audio–tactile interactions exist in perception of consonance, loudness, and pitch through various mechanisms and depending on the task

Okazaki et al. (

2013);

Yau et al. (

2010), but it is unclear if and how a noisy vibrotactile signal might distract auditory pitch control performance, or whether matched auditory and vibrotactile signals might even enhance it.

In the context of novel digital musical interfaces and gesture mapping to pitch control, we set out to investigate the action of pitch-bending.

4.1. Setup

The experiment made use of a self-developed haptic device called TouchBox: this offers a Plexiglas touch panel measuring 3D forces (i.e., along normal, longitudinal, and transverse directions), and provides rich vibrotactile feedback. The device is the latest iteration of a former design previously published in open-access form

Papetti et al. (

2019).

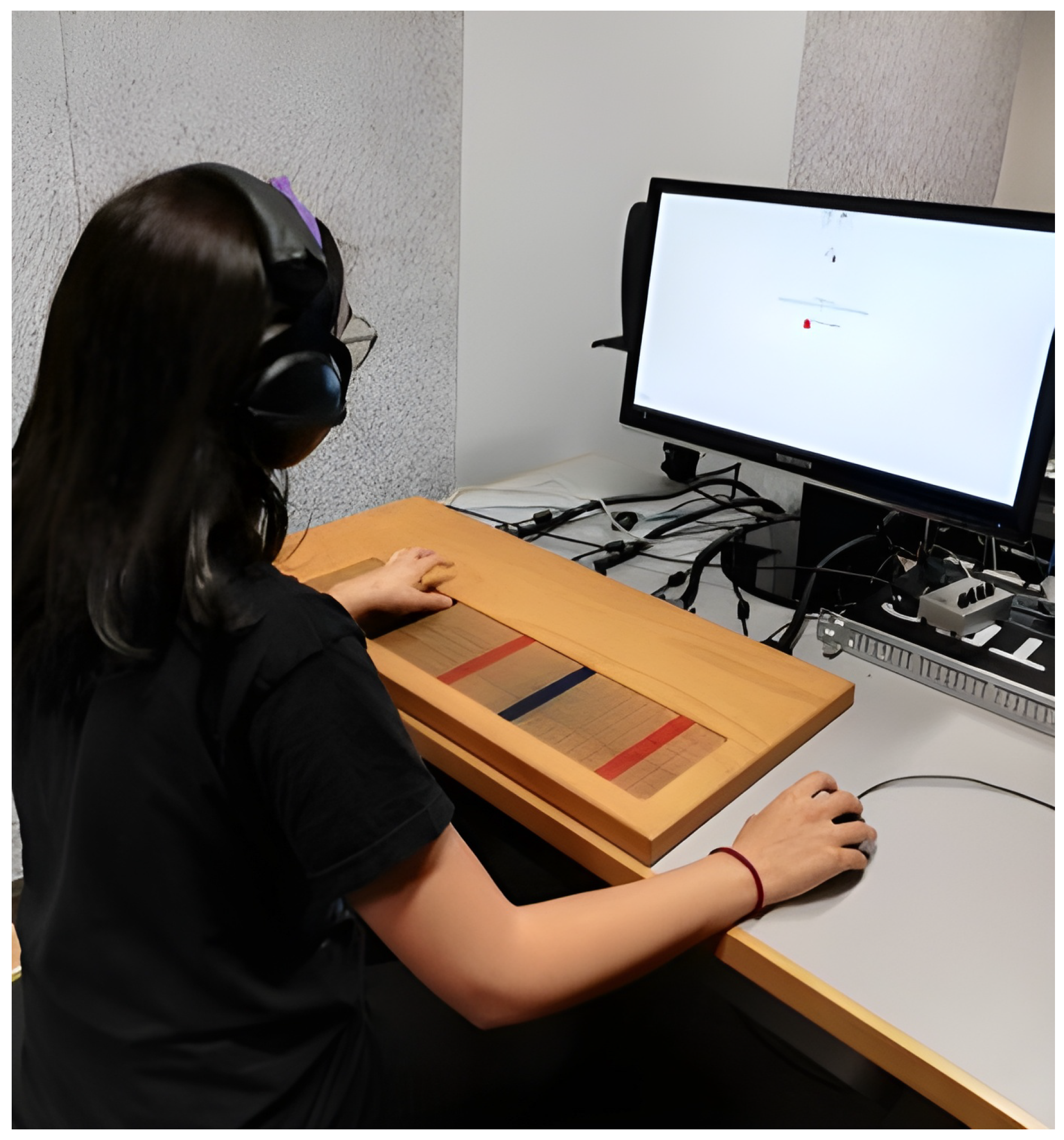

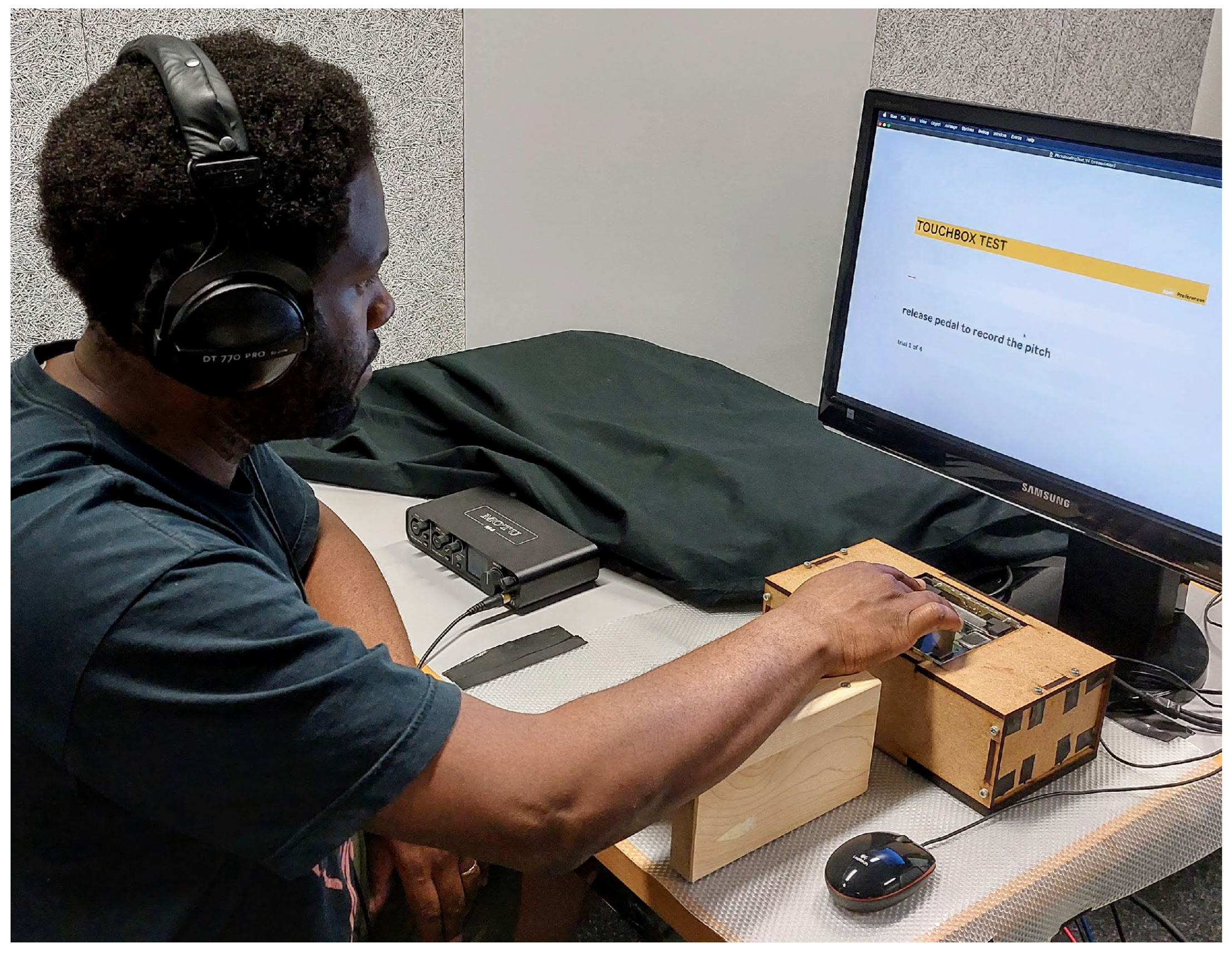

As shown in

Figure 13, participants sat at a table and pressed a finger on top of the TouchBox, which was placed in front of them. In order to comfortably perform this, they could rest their forearm on a support and adjust the height of their seat. A piece of adhesive with fine sandpaper back was stuck at the center of the top panel of the TouchBox, so as to prevent the participants’ fingers from slipping while pushing forward or pulling backward.

Auditory feedback, generated by a software synthesizer implemented with Cycling ’74 Max, was provided through closed-back headphones (Beyerdynamic DT 770 PRO) connected to a MOTU M4 USB audio interface. The synthesizer reproduced a sawtooth waveform with low-pass filtering.

Vibrotactile feedback—also generated in Max in the form of audio signals—was rendered by driving a voice-coil actuator, attached to the bottom of the touch panel, either with the synthesizer’s signal or with noise. In the former setting, the goal was to simulate what happens when playing acoustic or electroacoustic musical instruments, where the sources of vibration and sound coincide. Since humans are maximally sensitive to vibration in the 200–300 Hz range

Verrillo (

1992), in order to provide uniformly effective vibrotactile feedback, the sound synthesizer was limited to a pitch range of ±2 semitones around C4 = 261.6 Hz; therefore, the overall range was 233.08–293.66 Hz (Bb3–D4). Although narrow, the chosen pitch range did not compromise the pitch matching task, as human frequency discrimination is stable across a wide range from 200 Hz to several kHz

Dai and Micheyl (

2011). For the noise feedback, white noise bandpassed in the 40–360 Hz range was used, again with the goal of maximizing the perceivable feedback, this time leaving the auditory and tactile channels uncorrelated. Vibration amplitude in both configurations was normalized to 120 dB RMS acceleration (re

) so as to be always clearly perceivable

Verrillo (

1992). Any sound spillage generated by the actuator was adequately masked by the headphones worn by participants.
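The stated bounds of 233.08–293.66 Hz (Bb3–D4) correspond to two equal-tempered semitones on either side of C4, as the short check below illustrates. It is a sketch assuming equal temperament with A4 = 440 Hz; the helper names are hypothetical.

```python
A4_HZ = 440.0
# C4 lies nine semitones below A4 in equal temperament (about 261.63 Hz).
C4_HZ = A4_HZ * 2.0 ** (-9.0 / 12.0)


def semitone_offset_to_hz(offset, center_hz=C4_HZ):
    """Frequency reached by moving `offset` semitones away from `center_hz`."""
    return center_hz * 2.0 ** (offset / 12.0)


def pitch_range(center_hz=C4_HZ, span_semitones=2.0):
    """Return (low, high) bounds of a symmetric range around the center pitch."""
    return (
        semitone_offset_to_hz(-span_semitones, center_hz),
        semitone_offset_to_hz(span_semitones, center_hz),
    )


if __name__ == "__main__":
    low, high = pitch_range()
    print(round(low, 2), round(high, 2))
```

Both bounds fall near the 200–300 Hz region of maximal vibrotactile sensitivity mentioned in the text.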

A switch pedal, connected via MIDI to the audio interface, allowed participants to record the current pitch of the synthesizer and advance the experiment.

Finally, the table housed a computer screen and a mouse, which were used to give ratings, as described below.

4.2. Experiment: Accuracy of Pitch-Bending

The task was to reproduce a target reference pitch as accurately as possible by controlling a sound synthesizer via the TouchBox. It was carried out under two crossed factors: gesture and vibrotactile feedback. Gesture had two conditions: push forward or pull backward the device’s top panel. The two gestures were, respectively, requested for increasing or decreasing the given initial pitch toward the target. Three vibrotactile feedback conditions were possible: no vibration, noise vibration, and pitched vibration matching the auditory feedback. If vibrotactile feedback was offered in a trial, it was only on while the synthesizer sound was playing.

Target pitches were randomized in a continuous range of semitones around C4 = 261.6 Hz. Initial pitches of the stimuli were one to three semitones above or below the target pitch.

The measured variables were relative pitch accuracy and self-reported ratings on agency (“I felt that I produced the sound”), confidence (“I felt that I gave a correct response”), and pleasantness (“The task felt pleasant”).

Thirty-one normal-hearing, musically trained subjects took part in the experiment (14 F and 17 M, average age 25 years, musical training: m = 16 years). In a preliminary phase, they learned to execute the task correctly and to use one and the same finger of their dominant hand throughout the experiment. During each trial, after hearing the target pitch for 3 s, participants adjusted the stimulus pitch to match the target. Trials were organized in two blocks by gesture in random order, and the same vibrotactile feedback was presented in a block of four successive trials. After each block of four trials, ratings on agency, pleasantness, and confidence were given by operating visual analog sliders shown on screen with the mouse, in the range [0, 1] (0 = very low, 1 = very high).

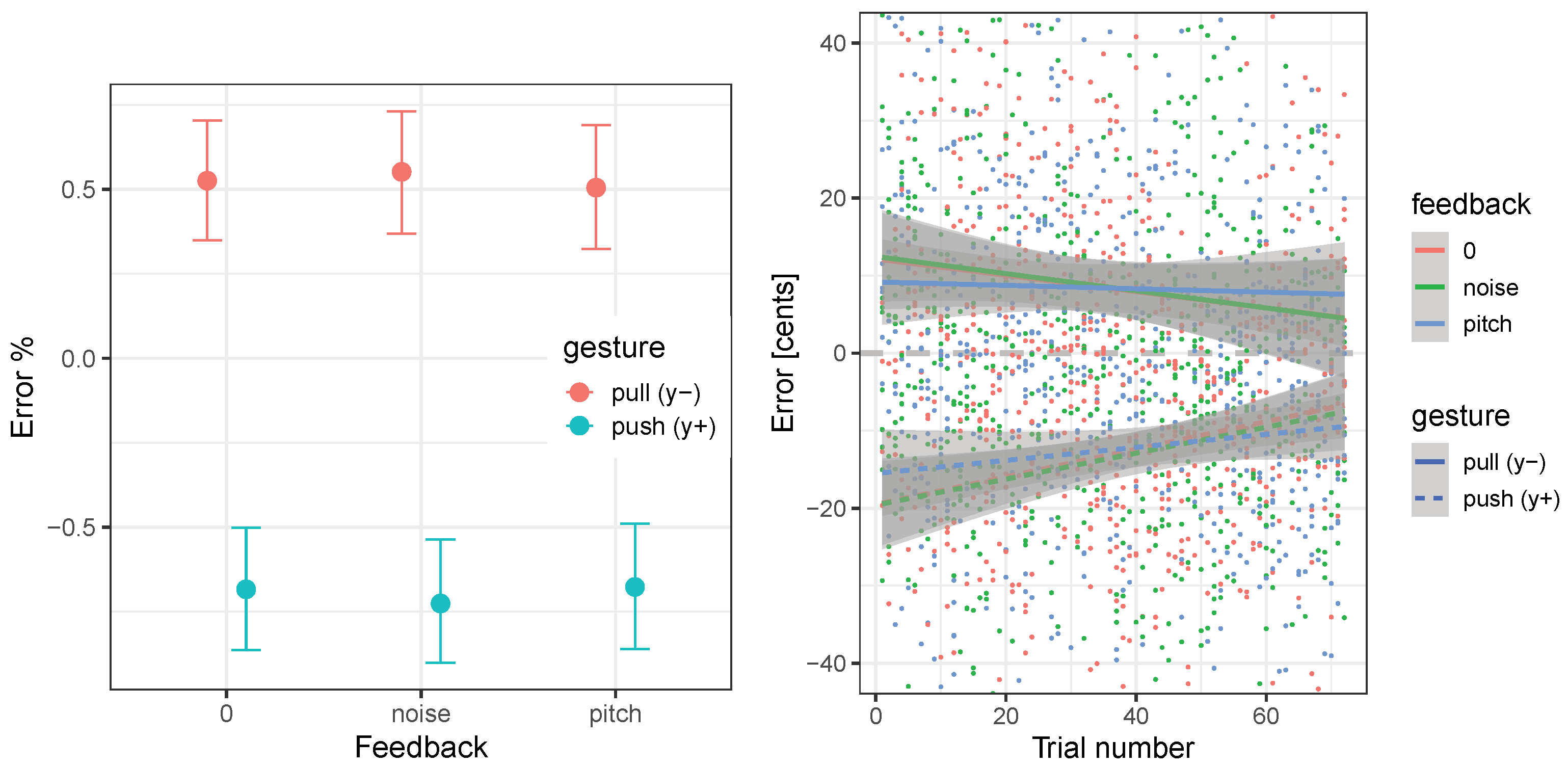

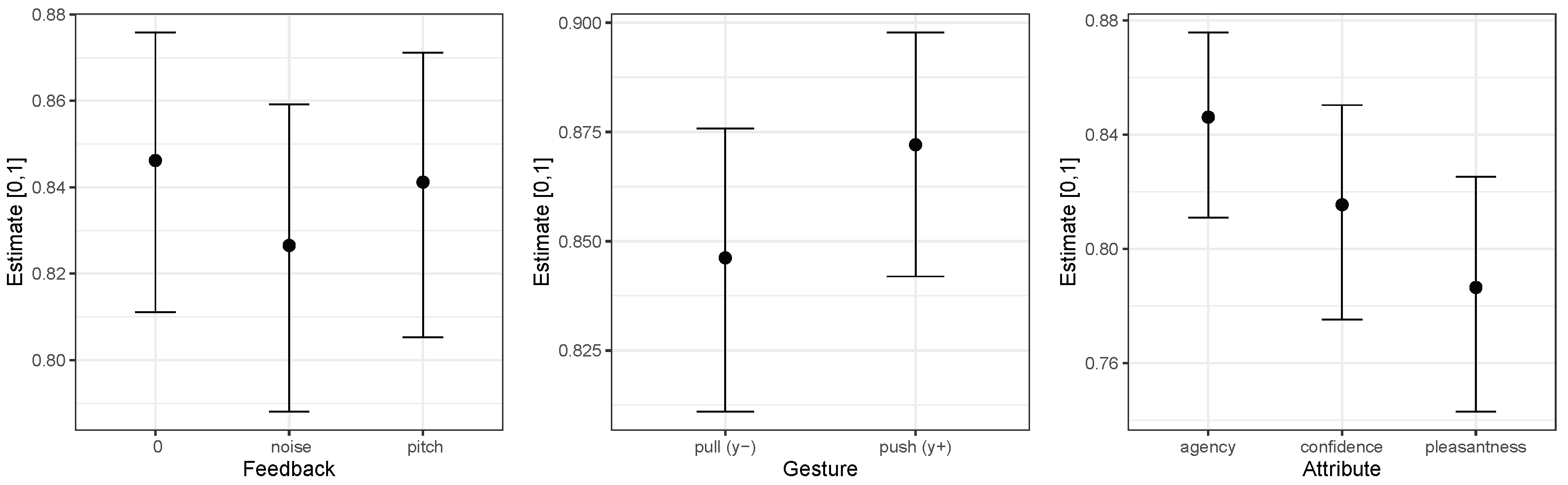

4.3. Results

Pitch adjustment error (in cents, where 1 cent = 1/100 of a semitone)

16 was predicted from feedback, gesture, and their interaction.

Statistical models were fit to both accuracy and rating data, and their respective parameters were estimated by Bayesian inference. The models and resulting fits are described in detail in

Järveläinen et al. (

2022). Results are presented in

Figure 14 for the pitch accuracy task.

Slightly larger errors were measured in the push gesture. Notably, participants performed the task somewhat faster with that gesture than with pulling, with mean times per trial being 10.6 s and 12.0 s, respectively. However, as

Figure 14 shows, participants demonstrated slightly more learning with pushing, as both gestures approach 0.5% accuracy in the last trials, in spite of larger differences between them at the beginning of sessions.

The differences in signed errors are indeed obvious, as the estimated error is an overshoot of 8.74 cents (+0.53% of the target frequency) for the pull gesture and an undershoot of −12.1 cents (−0.68% of the target frequency) for push. To investigate the significance of the difference without considering the effect of the opposite signs, a second model was fit for error values mirrored around zero in the push condition. This error estimate was 3.4 cents higher for the push gesture; however, statistical analysis did not produce evidence of a credible effect.
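The relation between errors expressed in cents and as a percentage of the target frequency, together with the sign mirroring applied to the push condition, can be sketched as follows. This is a hedged illustration rather than the analysis code of the study: the function names are hypothetical, and mirroring is interpreted here as a simple sign flip of the push errors.

```python
import math


def cents_to_percent(cents):
    """Convert a pitch deviation in cents to percent of the target frequency."""
    return (2.0 ** (cents / 1200.0) - 1.0) * 100.0


def percent_to_cents(percent):
    """Convert a relative frequency deviation in percent to cents."""
    return 1200.0 * math.log2(1.0 + percent / 100.0)


def mirror_push_errors(errors_cents, gestures):
    """Flip the sign of errors from 'push' trials so both gestures share a sign."""
    return [
        -err if gesture == "push" else err
        for err, gesture in zip(errors_cents, gestures)
    ]


if __name__ == "__main__":
    # One semitone (100 cents) is a frequency ratio of 2^(1/12).
    print(round(cents_to_percent(100.0), 3))
    print(mirror_push_errors([8.74, -12.1], ["pull", "push"]))
```

After mirroring, an undershoot in the push condition and an overshoot in the pull condition can be compared directly by magnitude.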

Estimated effects of feedback, gesture, and attribute on mean attribute ratings are presented in

Figure 15: Noise vibration was credibly rated lower, as were the attributes confidence and pleasantness; pushing gesture, in turn, was rated credibly higher.

5. Discussion

The first two experiments (see

Section 2.3 and

Section 3.3) addressed the qualitative impact of vibrotactile feedback. Their results show that carefully designed vibrotactile feedback can enhance the perceived quality of digital piano keyboards and force-sensitive multitouch surfaces. These findings are consistent with previous studies that examined the qualitative effects of haptic feedback in digital musical interfaces

Kalantari et al. (

2017);

Marshall and Wanderley (

2011);

Tache et al. (

2012).

In the case of the VibroPiano, although the realistic (i.e., as recorded) vibrations were perceived as the most natural, amplified natural vibrations were overall preferred and received highest scores for other attributes as well. Moreover, the setup using synthetic vibrations was considered inferior to the nonvibrating setup only in terms of naturalness. In the case of the HSoundplane, the measured effect of the vibration carrying clear pitch and dynamics information (i.e., the sine and audio vibration) consistent with sound was appreciably positive, as compared to the nonvibrating condition; conversely, the noise vibration did not enhance the subjective quality of the interface. In particular, vibrotactile feedback increased the perceived expressiveness of the interface and the enjoyment of playing. Our experiments overall suggest that ensuring consistency between vibration and sound in terms of spectral content and dynamics is a well-founded strategy for the design of a haptic digital music interface.

However, intra- and interindividual consistency is an important issue in instrument evaluation experiments. In the case of the VibroPiano, roughly two-thirds of the subjects clearly preferred the vibrating setup, perhaps less rewarded by the synthetic vibrations, while the remaining one-third had quite the opposite opinion. It is worth observing that the two participating jazz pianists were both in the “negative” minority. While jazz pianists are likely to have more experience of digital pianos than classical pianists, who are instead used to rich vibrotactile cues

Fontana et al. (

2017), the reported study could not specifically assess if pianists used to standard (i.e., nonvibrating) digital pianos would rate them better than a vibrating one.

Understanding why different types of vibratory feedback on the HSoundplane led to varying levels of acceptance among performers is more challenging. Despite the credible effects observed in the test population, a number of inconsistent responses were also recorded. One possible explanation for this variability is individual differences in sensitivity to vibrotactile stimuli among the participants. It is possible that some participants did not feel the vibrations as strongly or at all during some trials. However, since we did not screen participants for vibrotactile sensitivity, it is difficult to definitively determine the exact cause of these inconsistent responses.

The possibility that some participants had lower tactile sensitivity would also contribute to explaining the generally higher scores received by the “boosted vibrations” setting on the VibroPiano. At any rate, also taking into account that a few participants (likely individuals with high sensitivity) found vibrations on the HSoundplane too strong, one can infer that vibration magnitude is a crucial factor in the design of haptic feedback for digital music interfaces. Its role appears easier to identify (and, hence, to reproduce) when the interaction is constrained within firm gestural primitives, such as those offered by the piano keyboard; conversely, assessing the impact of vibration intensity becomes more challenging when the interface is unfamiliar and/or affords multidimensional tactile interaction.

The perceptual variability of vibration strength and audio–tactile congruence may have been modulated by where and how the participants were playing across the keyboard or surface, hence exacerbating the number of inconsistent ratings. For instance, sensation magnitude would increase when the fundamental frequency of the played tone overlapped with the range of vibrotactile sensitivity

Verrillo (

1992), or if higher pressing forces were applied

Papetti et al. (

2017). This could have been especially true for the latter interface, which, unlike the piano, provides localized vibrotactile feedback at each pad. Moreover, free playing was a necessary experimental condition, as it allowed us to evaluate the impact of vibrotactile feedback on various aspects of the playing experience. Constraining the task would have limited the natural expression and creativity of the musicians, which would have impacted the validity of the study.

The third experiment reported in this paper (

Section 4.2) and, to a limited extent, the first one too (

Section 2.2), aimed at assessing how vibrotactile feedback may support some aspects of musical performance. As already discussed, the tactile perception of performing musicians can be greatly influenced by various factors, making it challenging to control experimentally. Moreover, in order to make performance measurements reliable, expressive modulation of playing dynamics and timing had to be avoided. For the above reasons, the impact of vibration on musical performance was studied by asking participants to execute musical yet basic tasks, and by measuring their accuracy and stability. However, this countermeasure had the unintended consequence of making the tasks too easy for trained musicians, who were our primary pool of participants. In other words, sacrificing performance complexity for experimental control raises questions about the power of our performance tests.

On the VibroPiano, the task was to play three octaves of a D-major scale with the left hand at relatively slow pace. No differences were observed in timing performance and dynamic stability, regardless of the presence of vibration. Although this result is likely due to the task being too easy for trained pianists, it is noteworthy that the participants rated the perceived dynamic control highly for all vibration settings. Hence, it is reasonable to expect that more challenging tasks may reveal an effect of key vibrations on performance, besides making the digital piano more enjoyable to play. Indeed, recent research shows that pianists do use tactile information as a means of timing regulation

Goebl and Palmer (

2008,

2009), even though the exact role of keyboard vibrations remains unknown. There is also evidence that vibrotactile feedback helps force accuracy in finger-pressing tasks

Ahmaniemi (

2012);

Järveläinen et al. (

2013). Whether vibrations felt on currently depressed key(s) could aid in planning ahead, for example, the dynamics of an upcoming key press, is an interesting question that the reported experiment unfortunately could not answer. As an additional note, during the experiment with the HSoundplane we observed a certain trade-off between execution speed and consistency of responses, which is typical of decision-making tasks

Heitz (

2014). Nevertheless, these findings alone are insufficient to confirm that vibrations had a substantial impact on the playing task.

In the third experiment (

Section 4), emphasis was on sound control rather than production, specifically on the accuracy of pitch control through finger pushing and pulling. Though overall vibrations had basically no demonstrable effect on the task, the results show that pitched vibration led to slightly lower errors, while noise vibration caused slightly higher errors than no vibration. These effects were arguably not null; however, their verification would require a larger-scale experiment. Additionally, noise vibration had a credibly negative impact on all of the rated attributes. The average pitch accuracy in all conditions was roughly 0.6%. This is in line with pitch discrimination thresholds of complex harmonic tones for young normal-hearing adults

Moore and Peters (

1992), although musicians can at best reach a 0.1% accuracy

Spiegel and Watson (

1984). A general finding was a difference in accuracy between pulling and pushing: pulling (approaching target from a higher pitch) produced, on average, +0.53% overshoot, while pushing (approaching target from a lower pitch) resulted in −0.68% undershoot. The data suggest a speed–accuracy trade-off: even though slightly less accurate, pushing took less time and was rated credibly higher in

agency,

confidence, and

pleasantness. Furthermore, mean errors were equalized towards the end of the session, as participants demonstrated that they learned slightly more by pushing than by pulling. All this considered, pushing might be more efficient in tasks that are learned and then performed frequently and require faster execution, such as controlling a musical interface. If the task is new or altogether rare, maximal accuracy seems to be achieved by pulling. Once again, since the task given to participants in the experiment did not require intensive use of their long-practiced musical skills, it is possible that the simplicity of the task itself prevented the measurement of the effect of haptic feedback on their performance. At the same time, the choice of an isolated simple musical task was motivated by a bottom-up approach, while leaving open the possibility to extend to full-fledged musical gestures in the future.

In general, an explanation for the lack of measured effects on performance might be that our experiments involved only highly trained musicians, a choice motivated by our preliminary goal of obtaining feedback from experts and by the available pool of subjects at the Zurich University of the Arts, where all experiments were performed. Indeed, expert musicians’ motor and auditory skills and memory may have been good enough to allow performing the requested tasks while completely ignoring any vibrotactile feedback.

6. Conclusions

The augmentation of digital musical devices with vibratory feedback has the potential to re-establish a consistent physical exchange between musicians and their digital musical devices, similar to what is naturally found on acoustic musical instruments, where the source of sound and vibration coincides. Its demonstrated effect is to enhance the playing experience and the perceived quality of the interface. On top of that, almost all participants in our experiments informally reported that they enjoyed playing the instruments more when vibration was present, highlighting their “aliveness”. We found this impression to be consistent across all of the interfaces used in our study, which together covered a range of expressive possibilities from familiar to explorative, and from sound synthesis to control.

However, it is yet to be seen if and how such subjective enhancements may be reflected in the quality of playing, and in musical performance altogether. Making objective measurements of these aesthetic aspects poses a major research challenge, and the reported studies only scratched the surface of this issue.

Given the lack of significant effects of vibrotactile feedback on performance in specific musical tasks, it would be worth investigating whether early-stage learners, children, or individuals with, e.g., somatosensory or auditory impairments might benefit more from vibratory cues. For these groups, in fact, it would be easier to identify challenging tasks while guaranteeing the necessary experimental control. Furthermore, multisensory enhancement is known to be strongest in conditions where unisensory information is weakly effective

Spence (

2012). For expert musicians—whose performance level in the specific tasks tested was unaffected by the presence of vibratory cues—their resilience to vibratory disturbance might bring benefits in certain performance situations, such as in ensemble playing, or when there is background noise.

The vibrotactile rendering strategies adopted in the reported studies were rather basic by design: this allowed us to highlight which features (e.g., spectrum and dynamics) of vibratory signals would result in stronger effects. From a technological standpoint, the ability to synthesize simple vibrations—as long as they do not disrupt the audio–tactile coherence required to enhance the perceived quality—may result in a technical advantage when designing haptic musical interfaces. For example, it is rather trivial to generate sinusoidal signals at audio rate on low-cost embedded systems, and in this way, the effectiveness of vibrotactile feedback would be always optimized for rendition. More complex approaches were recently proposed in the literature, aiming to enrich the music listening experience

Merchel and Altinsoy (

2018);

Okazaki et al. (

2015)—these may be tested in future experiments.

The key finding of our study is that, by thoroughly testing and evaluating tactile augmentations, we can develop more rewarding musical interactions and potentially discover improvements on musical performance that are yet to be explored.