1. Introduction

The haptic channel plays an important role in the perception of the world and in music practice, and is essential to the action-perception loop, especially where technological mediation is absent. The physical interaction of a player with an acoustic musical instrument is crucial for the fine control and skilful generation of sound. Additionally, the mechanical response of the instrument and the energetic exchange that takes place during the interaction shape and define the expressive qualities of the musical performance. The same holds for the relationship of the craftsman with their tools during the making process.

In the field of computer-mediated artistic practices, digital music or digital art, haptics and the haptic modality have been overlooked compared with the other sensory modalities. In such practices, whenever human expressivity is in high demand during the interaction with virtual environments and virtual tools, the sense of touch is undervalued most of the time and the related technology is underdeveloped. Even though the importance of the dynamics of the performer-instrument interaction, especially in the digital realm, has been emphasised extensively by Claude Cadoz and his colleagues since the late 1970s through their research work at ACROE (Cadoz et al. 1984), only recently has the wider research community turned its attention collectively to this topic (Papetti and Saitis 2018).

Nevertheless, there have been very few publications focused on the questions that arise from the compositional or creative process when working with haptic interactions: How do we design those interactions? What is their role in the artwork? What function does the artistic concept serve? How can the audience or the public engage and appreciate these interactions? How can we develop them without losing our creative initiative? What is the role of the physical interface in those works?

Gunther and O’Modhrain introduced the notion of tactile composition in 2002 and presented the compositional and artistic dimensions of working with vibrotactile transducers (Gunther and O’Modhrain 2003). Hayes also described her compositional process when working with a force-feedback device (Hayes 2012), as did Berdahl et al. (2018) and, briefly, Cadoz (2018). A series of artistic works based on force-feedback devices has been analysed collectively in a previous publication by the author and other collaborators (Leonard et al. 2020) and as individual pieces (Berdahl and Kontogeorgakopoulos 2012; Kontogeorgakopoulos et al. 2019). In 2016, Wanderley organised the symposium “Force-Feedback and Music” in Montreal, where various researchers from the musical haptics community, working with force-feedback devices musically and technologically, gave a series of presentations.

The purpose of this work is to address those questions, to an extent, and predominantly to offer a few directions and thoughts on the design, development and creative use of force-feedback haptic systems for musical composition, performance and artistic creation in general. These directions will be provided mainly through the lens of artistic creation and technological experimentation. The value of programmed haptic interactions has been examined on a more scientific basis and has been published in the past by other researchers in this field, including the author. The audiovisual content related to these works can be accessed on the author’s website.

2. Brief Technological Background

Haptic technologies consist of a hardware component and a software component. The hardware is the haptic device or interface, whose electromechanical transducers (in the most typical case) enable the required bidirectional coupling. The software is a simulation environment where signals for the haptic channel are programmed alongside the acoustic and/or visual components. Vibrotactile-augmented interfaces and other types of tactile stimulators that cause skin deformation are not considered in this paper, since they do not apply substantial mechanical forces to the user, as stated in Marshall and Wanderley (2006). An informative tutorial on haptic interfaces and devices can be found in Hayward et al. (2004), while an overview of haptic devices and rendering environments developed or used for music purposes can be found in Leonard et al. (2020). Some notable research works on the development of haptic devices specifically in the field of musical haptics come from Florens (1978); Gillespie (1992); Nichols (2002); Verplank et al. (2002); Oboe (2006); and Berdahl and Kontogeorgakopoulos (2013).

Equally, on the software side, a few frameworks and environments have been developed: the CORDIS system (Cadoz et al. 1984), which eventually became the CORDIS-ANIMA physical modelling framework by Cadoz, Luciani and Florens; the DIMPLE software by Sinclair and Wanderley (2007); the HSP library by Berdahl, Kontogeorgakopoulos and Overholt (Berdahl et al. 2010); the SYNTH-A-MODELER by Berdahl and Smith (2012); the MSCI platform by Leonard, Castagné and Cadoz in 2015 (Leonard et al. 2018); and their more recent work with Villeneuve as mi-Creative (Leonard and Villeneuve 2019; Leonard et al. 2019). It is important to mention that, in order to preserve energetic coherence, only physical modelling techniques can be employed (Luciani et al. 2009). Other well-known haptic simulation tools, such as CHAI3D (on which the DIMPLE software was based), OpenHaptics or other C/C++ frameworks for developing rigid-body dynamics models, have never been used directly in a musical or artistic context, as far as the author of this paper knows.

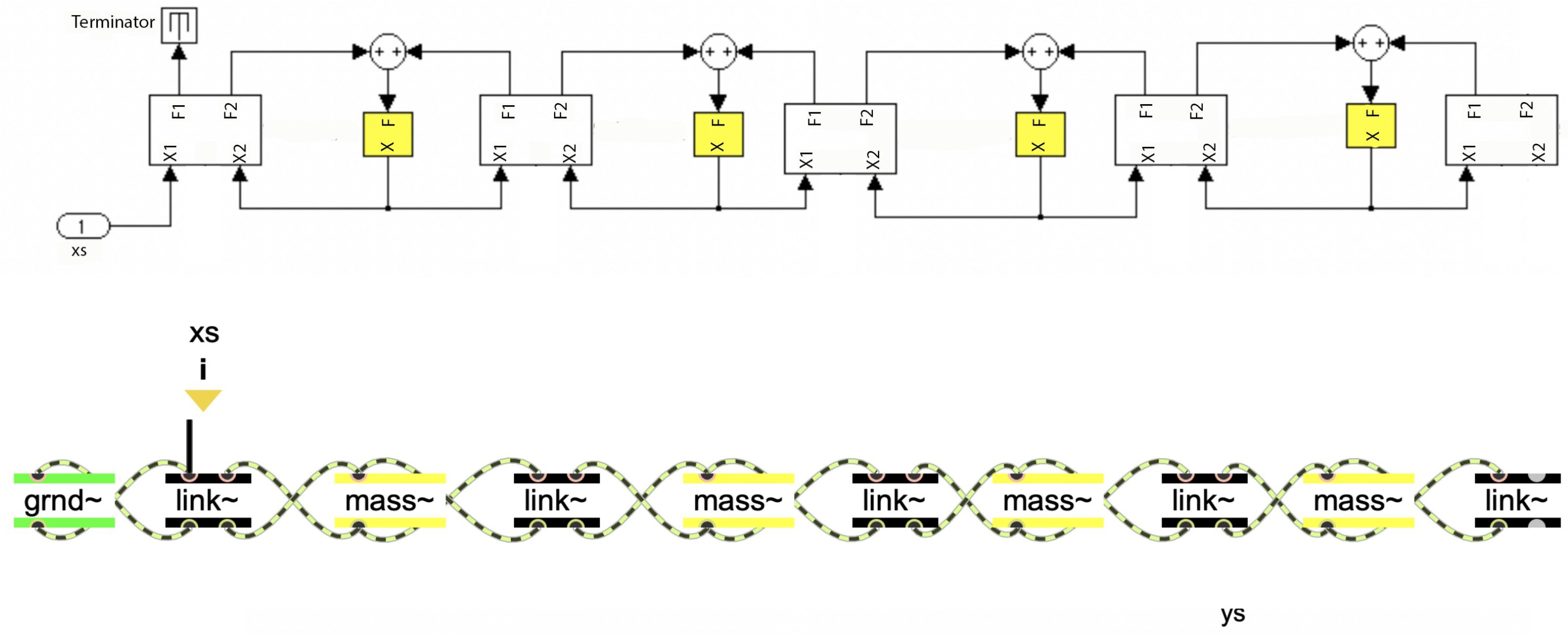

One of the most generic and easily accessible frameworks for haptic technologies, particularly for small models that can be combined with other computer music algorithms for sound synthesis, processing and algorithmic composition, is the HSP framework. This framework is mainly based on the mass-interaction physical modelling scheme and runs in the Max or Pure Data music programming environments. Its second version was partly built upon a Simulink framework (Simulink is a MATLAB-based graphical programming environment for modelling and simulating dynamical systems), developed by the author for his doctoral research in 2007 (Kontogeorgakopoulos 2008). This framework has been employed for the artistic and technical work presented in the following sections. The Simulink version and the HSP version for Max are illustrated in Figure 1.
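To make the mass-interaction scheme more concrete, the following is a minimal sketch of a single point mass tied to a fixed point by a spring-damper link, updated once per sample with a central-difference scheme. It is written in plain Python for readability and is not the HSP, Simulink or CORDIS-ANIMA implementation; the function name, the parameter values and the normalised sample period of 1 are assumptions made for illustration only.

```python
def simulate_mass_spring(mass=1.0, stiffness=0.01, damping=0.001,
                         x0=1.0, steps=2000):
    """Simulate one mass attached to a fixed point at 0 by a spring-damper.

    Hypothetical helper: positions are updated with a central-difference
    scheme, with the sample period normalised to 1.
    """
    x_prev = x0  # position at step n-1 (the mass starts at rest)
    x = x0       # position at step n
    trajectory = []
    for _ in range(steps):
        velocity = x - x_prev                         # backward difference
        force = -stiffness * x - damping * velocity   # spring + damper
        x_next = 2.0 * x - x_prev + force / mass      # Newton's second law
        x_prev, x = x, x_next
        trajectory.append(x)
    return trajectory


trajectory = simulate_mass_spring()
```

In a haptic setting, the position of one such mass would be replaced at every step by the measured position of the device, and the link force would be sent back to its motor. Larger models are obtained by connecting more masses with more links.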

It is important to emphasise that computer music languages offer a canvas where the user may explore unique ideas easily and discover unexpected paths. Creative coding based on domain-specific programming languages and frameworks generalised this for the generation of video, graphics and interactive systems. Haptic interactions for artistic purposes should be approached in a similar exploratory way. Possibly the primary impediment to creating this type of haptic-based artistic work was the lack of availability of such types of frameworks in the past. This argument applies to the availability of low-cost haptic interfaces too.

3. Exploring the Possibilities of Haptics for Artistic Creation

Each medium and material has its unique characteristics and qualities and brings new possibilities for artistic exploration. Haptic interfaces for musical composition engage the tactile and kinesthetic senses in addition to the visual and auditory ones. Hence, when we work with this medium and develop artworks and systems for composition and music performance, our aim is to address the sense of touch equally. We are, therefore, required to develop models that sound right, feel right and meet our artistic vision. Our aim, in the end, is to build not only engaging digital musical instruments but also what we could call interesting digital feels.

Haptic perception, and its attributes, inform our design decisions at every step of the process. We create systems that synthesise the sonic output and render the desired interaction forces in real-time. Software-based haptic rendering algorithms in this context do not have to fulfil a purely scientific or engineering goal. Researchers in human-computer interaction with a focus on haptics investigate, in rigorous ways, how to simulate realistic sensations and construct accurate haptic virtual objects. In the context of art, the objective is to work with software and hardware environments that allow users to quickly explore and program interactions, and create expressive haptic, audio and visual content that may not necessarily be realistic.

It needs to be mentioned that low-cost haptic interfaces and simple haptic rendering environments, which offer flexibility in programming interactions and content, are limited and may have significant constraints. In the case of haptic devices, these constraints are related to the workspace, degrees of freedom, friction, bandwidth and peak forces whereas, on the software side, they are related mainly to input-output latency and computational load. When exploring the possibilities of haptic interaction for artistic creation, we compose material properties, such as surface texture (roughness/smoothness, hardness/softness and sticky/slippery), compliance, viscosity, weight and geometric characteristics (less common in music applications), which can be perceived with exploratory procedures. Clearly, we need to develop an artistic language that embraces the constraints of the medium, its limitations and lack of precision, and even accepts its faulty behaviour, such as instabilities or other calculation artefacts.

In the past ten years, the author, together with other colleagues, has delved into five distinct directions in their artistic exploration with force-feedback devices. While some of the experiments and research questions have resulted in artistic creations, others have yet to be implemented in a finished artwork. The following section will provide a detailed presentation of these directions as they have been manifested in the author’s personal and collaborative artistic work.

3.1. Haptic Algorithms and Systems

There are two distinct ways of developing haptic digital musical instruments and, in general, haptic virtual scenes, as described in Leonard et al. (2020). The first direction involves a distributed system that includes a gestural control section responsible for haptic rendering. This section is then mapped onto a synthesis and processing section, using the common and widely established mapping paradigm. The second direction involves a single multisensory physical model that is exclusively described by physical laws and implemented and approximated computationally through various mathematical schemes. The same physical model is responsible for providing haptic, acoustic and visual outputs within the virtual scene. Both approaches have their own strengths and weaknesses, and the choice between them should be based on which one serves the artistic intention best. Finally, we could also imagine a hybrid approach where both directions are used simultaneously. In this scenario, the system is composed of both distributed and unitary sub-systems.

3.1.1. Distributed Audiovisual-Haptic Systems

The distributed model allows for the independent development of the haptic interaction and the synthesis or processing algorithms. The signals calculated by the haptic rendering algorithms controlling the haptic interface are mapped into music signal processing and/or image signal processing parameters. Since the system is distributed, the haptic rendering algorithms may even run on separate computing machines, such as a microcontroller and a generic computer, as described in Kirkegaard et al. (2020).

Many new possibilities for musical expression and creation may be opened up by this approach, and various haptic effects may be designed that enhance the perception of a virtual scene. The haptic rendering algorithms may be simple but effective and can consist of a few typical signal processing operations, as reported in Verplank (2005) or in the more recent publication by Frisson et al. (2022). When using mass-interaction models on the HSP framework, the force or position signals obtained from the mechanical network are fed into the synthesis/processing section of the system. The developer is able to empirically and interactively design the mass-spring system, which is then (or even simultaneously) mapped to the sound engine.

It is relatively straightforward to create and integrate these types of haptic models with other audiovisual systems. Typically, the artist/designer can create a series of independent haptic effects (which can be time-based as reported before), such as detents, walls, sticky or slippery surfaces, magnetic fields, constant forces like gravity, pluck-type or bow-type interactions, arbitrary force profiles created by lookup tables, etc., and then map them directly to digital audio effects or a sound synthesiser’s input controls. More elaborate audio-haptic models, such as a position-based playback buffer, where the index is given by the position of a wiggled virtual mass (like a pendulum), can become very engaging. If the virtual mass is detached and reattached to the virtual spring with a button, the model becomes even more stimulating because the interaction with the dynamics of the system becomes very pronounced. The physical models can become even larger and more complex, such as a plucked low-frequency string, where the positions of the masses control the envelopes of additive synthesis or the spatial image of sound on a multichannel system (an ideal setup would include as many speakers as there are masses). Many of those models were designed by the author and Edgar Berdahl when they were, collectively and individually, exploring the possibilities of the HSP library. A small selection of them appears as examples that accompany the library.
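As an illustration of how simple such effects can be, the sketch below shows a virtual wall, a row of detents and the position-to-index mapping of a position-based playback buffer. The function names, parameter values and the normalised position range of -1 to 1 are hypothetical and chosen only for this example; they are not taken from the HSP library or its accompanying examples.

```python
import math


def wall_force(position, wall_position=0.8, stiffness=50.0):
    """One-sided spring: pushes back only once the handle is inside the wall."""
    penetration = position - wall_position
    return -stiffness * penetration if penetration > 0.0 else 0.0


def detent_force(position, spacing=0.25, strength=1.0):
    """Periodic force pulling the handle toward the nearest multiple of spacing."""
    return -strength * math.sin(2.0 * math.pi * position / spacing)


def buffer_index(position, buffer_length, lo=-1.0, hi=1.0):
    """Map a position in [lo, hi] to a sample index, clamped to the buffer."""
    normalised = (position - lo) / (hi - lo)
    normalised = min(max(normalised, 0.0), 1.0)
    return int(round(normalised * (buffer_length - 1)))
```

In a distributed system, the force functions would run in the haptic loop, while `buffer_index` would be evaluated on the position of the device (or of a virtual mass attached to it) to drive the sound engine.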

Haptic technology can be useful and interesting in the context of DJing performances. The author, who has been a DJ for a long time, has explored the use of haptic technology in this domain through his project “Dj ++”. This project aims to extend traditional DJing techniques and combine them with more experimental music production and sound and music computing approaches. As part of this project, the author has conducted research on haptic digital audio effects using both the distributed and unitary approaches described earlier. One example of this work is the development of haptic crossfaders, which can provide a more expressive control element in a DJ’s performance.

Crossfaders allow smooth transitions between tracks and may be used to create rhythmic effects by rapidly switching back and forth between two music tracks. The haptic fader can be connected virtually to a damper with a negative damping coefficient in order to speed up those transitions (which is not a realistic condition but an interesting one). Moreover, a low-pass filtered part of the music signal may be re-injected into the faders (the audio signal is just filtered, scaled and connected to the avatar of the fader) in order to feel and control the sound itself, in synergy with the fader movement. The damping coefficient can also be modulated dynamically with envelopes triggered at specific cue points in the track, or even by an LFO, to create a haptic modulation effect. In the latter case, when the faders are moved by the DJ’s hand, they adhere to the oscillator rate.
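A minimal sketch of the LFO-modulated damper is given below, assuming a viscous force F = -b(t) v in which the coefficient b(t) is a negative base value plus a sinusoidal LFO. All names and values are hypothetical. The clamp on the output force is also an assumption, added here because a negative damping coefficient injects energy into the fader instead of dissipating it.

```python
import math


def damping_coefficient(t, base=-0.5, depth=0.3, lfo_hz=2.0):
    """Time-varying damping: a (possibly negative) base value plus an LFO."""
    return base + depth * math.sin(2.0 * math.pi * lfo_hz * t)


def fader_force(velocity, t, max_force=1.0, **lfo):
    """Damper force F = -b(t) * v, clamped to an assumed safe actuator range."""
    force = -damping_coefficient(t, **lfo) * velocity
    return max(-max_force, min(max_force, force))
```

With a negative coefficient the force has the same sign as the velocity, so the motor assists the hand; with a positive coefficient it opposes the motion, as an ordinary damper would.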

3.1.2. Unitary Audiovisual-Haptic Systems

The unitary audiovisual-haptic systems approach the virtual scene with a single multisensory physical object instead of a distributed one. This type of modelling of virtual scenes is the only one that preserves the instrumental relation. The type of human-computer interaction in which the energy is preserved is called ergotic interaction (Luciani and Cadoz 2007). In this scenario, as previously mentioned, the mechanical (for the gestural interaction), the acoustical (for the sound synthesis) and the visual properties (for visual synthesis) of the physical object are generated computationally from a single physical model that controls the auditory, graphical and haptic display or interface accordingly.

A single multisensory physical model is much more challenging to design, with a multitude of constraints, but it has the potential to become even more intimate with the user. The developer must have more in-depth knowledge of physical modelling formalisms in order to develop virtual mechanical objects that can generate the complete multimedia scene. The author’s PhD research proposed the use of a mass-interaction network as a means of designing musical sound transformations under the concept of instrumental interaction. A series of classic digital audio effect algorithms were developed (filters, delay-based effects, distortions and amplitude modifiers) that could be directly controlled by a haptic device (Kontogeorgakopoulos 2008). The basic concept was to be able to interact instrumentally when performing live sample-based digital music, like in a DJ set, as mentioned in Section 3.1.1.

A representative example of haptic digital audio effects is haptic distortion. In this case, a physical model is employed that compresses the motion of a mechanical oscillator set into forced vibration by a music signal. The user interacts with the model through a two-degree-of-freedom FireFader haptic device. The masses of the faders’ knobs, which appear as avatars in the virtual scene, compress and squeeze the vibrating mass of the oscillator and, therefore, distort the audio signal. The user feels the interaction haptically and interacts with the model accordingly. Other similar models have been developed and tested in the past (Kontogeorgakopoulos and Kouroupetroglou 2012).
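The principle can be sketched as follows, under strong simplifying assumptions: the two fader avatars are treated as fixed stops placed symmetrically at a distance `gap` on either side of the oscillator, and contact is modelled as a one-sided linear spring. The actual model described above uses masses for the avatars, so this is an illustration of the idea and not a reproduction of it; all names and parameter values are hypothetical.

```python
import math


def haptic_distortion(audio, gap, mass=1.0, stiffness=0.5,
                      damping=0.2, contact_stiffness=2.0):
    """Drive a mass-spring oscillator with audio and squeeze it between stops.

    Returns the oscillator positions (the distorted signal) and the reaction
    forces that would be rendered on the faders.
    """
    x_prev = x = 0.0
    positions, reactions = [], []
    for sample in audio:
        force = sample - stiffness * x - damping * (x - x_prev)
        contact = 0.0
        if x > gap:        # touching the upper avatar
            contact = -contact_stiffness * (x - gap)
        elif x < -gap:     # touching the lower avatar
            contact = -contact_stiffness * (x + gap)
        x_next = 2.0 * x - x_prev + (force + contact) / mass
        x_prev, x = x, x_next
        positions.append(x)
        reactions.append(-contact)  # equal and opposite force on the fader
    return positions, reactions


# A sine stands in for the music signal in this example.
drive = [0.5 * math.sin(0.3 * n) for n in range(1000)]
free, free_reactions = haptic_distortion(drive, gap=10.0)       # no contact
squeezed, squeezed_reactions = haptic_distortion(drive, gap=0.5)
```

Narrowing the gap flattens the peaks of the oscillation, which is heard as distortion, while the reaction forces let the performer feel how hard the oscillator is being squeezed.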

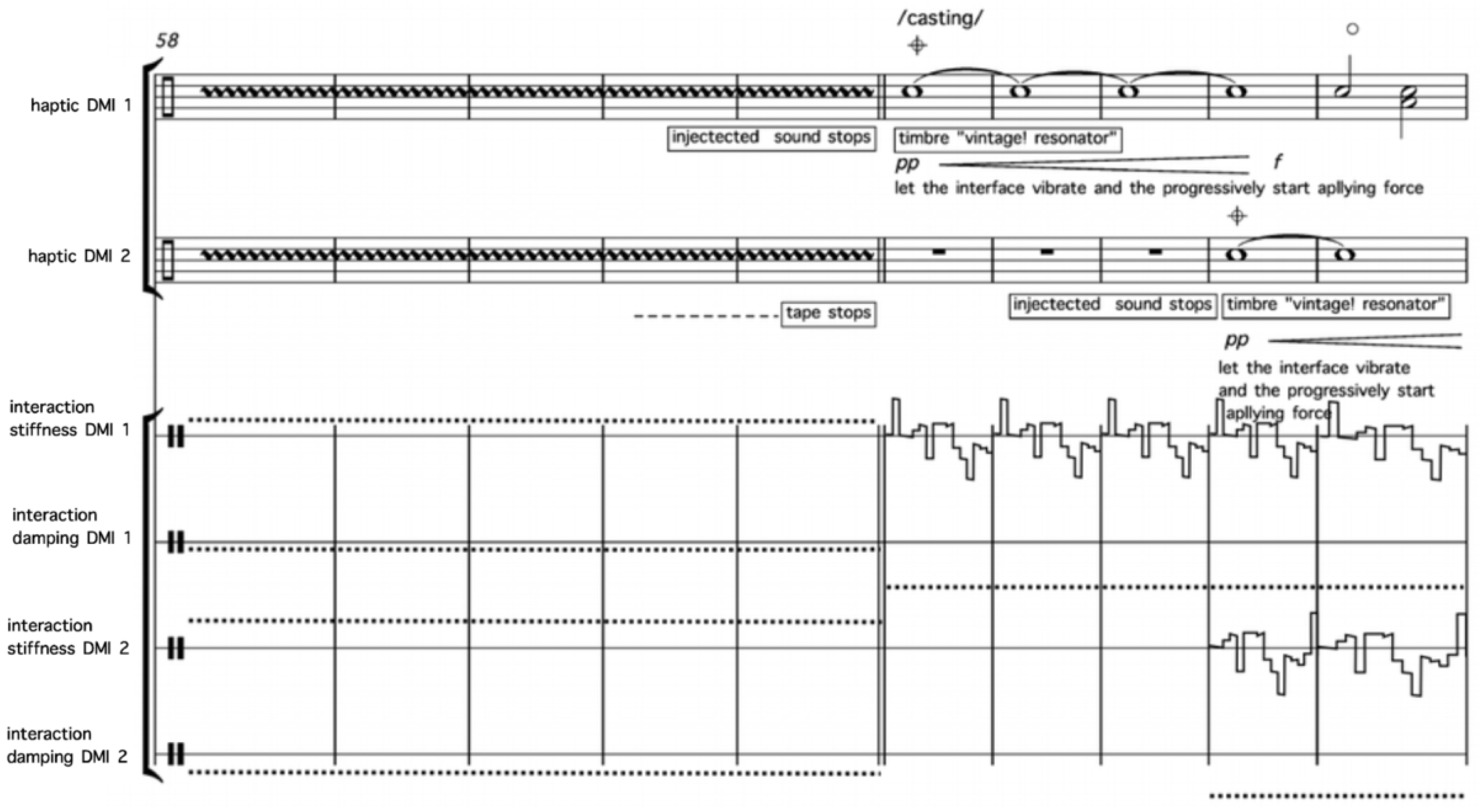

Even though the author had been experimenting musically with these concepts long before, the first time they were officially put into practice, live in an artistic context, was in 2012 with the musical composition for two force-feedback devices, Engraving–Hammering–Casting, which was composed in collaboration with Edgar Berdahl (Berdahl and Kontogeorgakopoulos 2012). In order to create both sound and haptic force-feedback, a virtual physical model of vibrating resonators was designed and utilised both for sound synthesis and as a haptic digital audio effect. The Falcon 3D robotic feedback device from HapticsHouse (Novint in the past) was selected as the haptic device since the HSP framework included the appropriate drivers. Ergotic interaction was an integral part of the compositional medium. The music celebrated materiality in the digital realm and the way craftsmen skilfully interact with their tools.

Figure 2 depicts a section of the music score where the interaction and physical model parameters are notated.

The overall objective of haptic digital audio effects was to create and perform music, or to DJ, in a way that allows the performer to be as intimately connected with the sound processing algorithm as a virtuoso performer is with the instrument. As Section 3.1.1 highlights, ergotic interaction is not the sole means of achieving this goal. The purity of having one physical model performing both the audio and interaction is very interesting conceptually and has opened a few new possibilities. It is, however, very challenging and it is not clear if the end result justifies the effort, especially in the digital audio effects case or if the composer wants to deviate from the physical modelling type of sounds. It is worth noting that the mass-interaction formalism is capable of synthesising more abstract timbres, close enough, for example, to an FM or granular synthesiser, but it clearly does not have the flexibility to emulate every other sound synthesis or sound processing paradigm. Furthermore, many problems related to the impedance mismatching of physical models can arise and overcomplicate the modelling process. An in-depth examination of this subject can be found in Kontogeorgakopoulos (2008).

In Engraving–Hammering–Casting, the goal was to experimentally explore, in full, the potential of the ergotic medium when using a very elementary physical model. The composers aspired to create a music performance in which the system amplified every gestural nuance. For that reason, their hand movements were also captured by cameras and projected on big screens. The composition lacked timbral richness, but it successfully celebrated the expressivity of the hand. The form and content of the composition were informed by the capabilities of the force-feedback device. The Falcon device was an appropriate budget choice to explore the interaction with tools within part of the sonic-ergotic medium since it is designed to simulate virtual tool interactions. Clearly, in this case, the concept of craftsmanship led to the development of the physical modelling system and the whole composition accordingly.

3.2. Performers Intercoupling

One of the interesting aspects of computer haptics is the ability to work collaboratively on, and manipulate, the same virtual object or the same virtual scene. This opens up the possibility of intercoupling at the gestural level. In a musical context, virtual mechanical coupling allows musicians and performers to co-influence their gestures. The performers are able to feel each other and co-create the musical material.

Probably the first piece that explored this artistic direction was the musical composition Mechanical Entanglement by Kontogeorgakopoulos, Siorros and Klissouras in 2016 (Kontogeorgakopoulos et al. 2019). The piece is designed for three performers, each of whom uses a force-feedback device equipped with two haptic faders. The devices are connected to one another through virtual linear springs and dampers and their characteristics are modified during the different sections of the composition. Other endeavours that followed a similar trajectory include Cadoz’s piece Quetzalcoatl and Berdahl’s thrOW, as analysed in the paper by Leonard et al. (2020).

The aspiration for this project was to question and examine the idea of co-influence artistically, at a bodily level, by means of haptic technology. Concepts such as productive or unproductive synergy, collaboration and co-creation drove the development of the technological aspects of the project and of the piece itself. Haptics offers a unique opportunity to address these ideas in a very intimate and controllable setup. By blending research and artistry, the composition sought to create a setting where the audience could also partially experience these concepts during the performance.

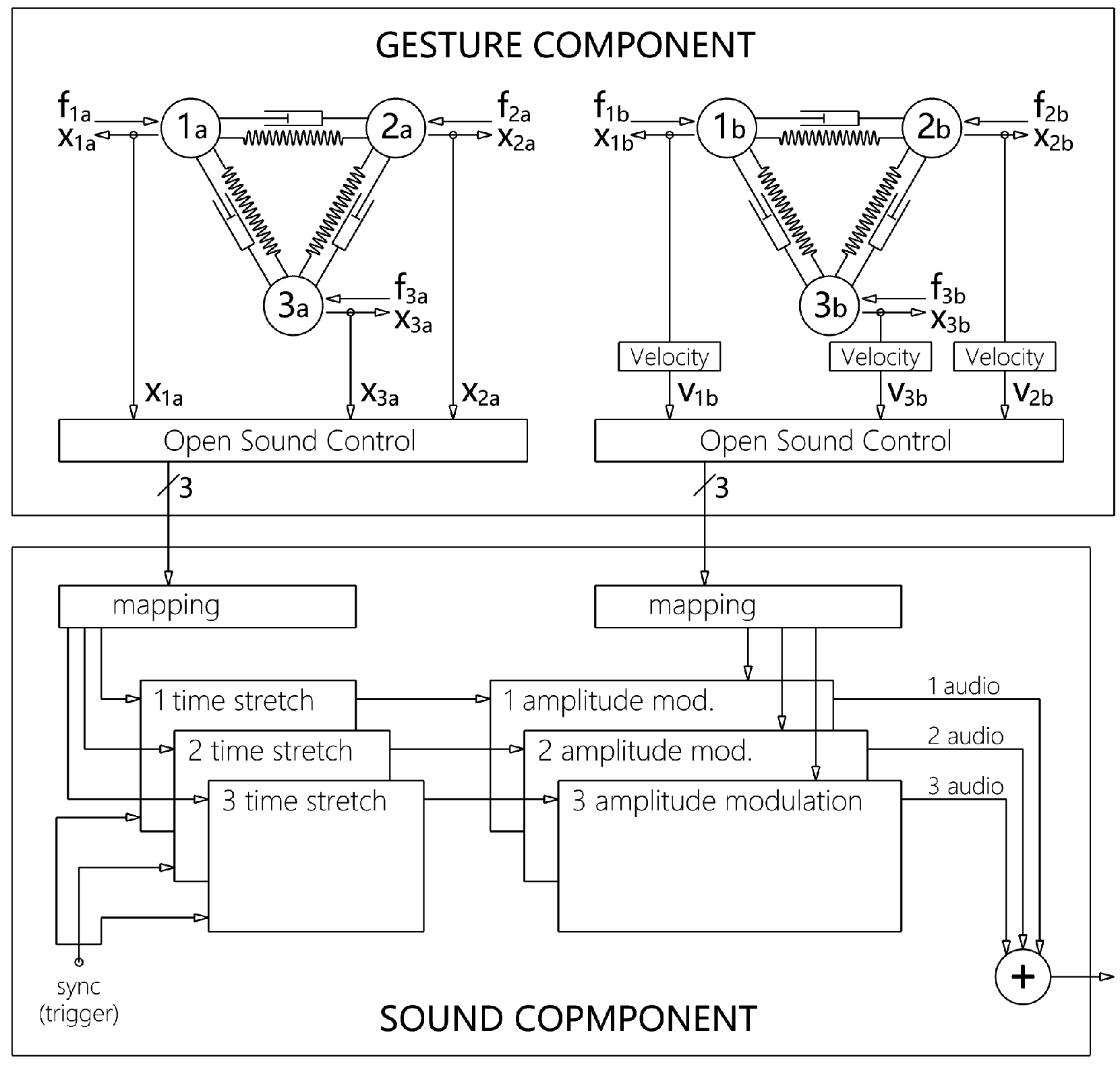

The physical model designed and developed for the project is based once again on the lumped element modelling paradigm implemented on the HSP framework. The three haptic devices are integrated into a single linear oscillatory system. The performers use their gestures to control the same sound processing algorithms, and they also interact with each other “internally” through virtual links within the virtual network. It is a distributed haptic system where a simple mapping scheme is employed to control time stretching and amplitude modulation algorithms. The model was conceived in order to ensure that the co-manipulation of the music content through a virtual-mechanical medium is meaningful and perceived by the performers and, to an extent, by the audience. For that reason, the whole composition was organised around the action of stretching: physically stretching a simulated material while simultaneously time-stretching a prerecorded music material. Moreover, the force signals were visualised by dimmed LED lights that are part of the original FireFader design. The system architecture of Mechanical Entanglement is illustrated in Figure 3.
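The coupling of several performers through virtual springs and dampers can be sketched as below. The chain topology (each handle linked only to its neighbour), the parameter values and the mapping from the spread of the handles to a time-stretch factor are assumptions made for illustration; the actual network and mapping of the piece are those shown in Figure 3.

```python
def coupling_forces(positions, velocities, stiffness=1.0, damping=0.1):
    """Forces on handles joined in a chain by linear spring-damper links."""
    forces = [0.0] * len(positions)
    for i in range(len(positions) - 1):
        link = (stiffness * (positions[i + 1] - positions[i])
                + damping * (velocities[i + 1] - velocities[i]))
        forces[i] += link       # handle i is pulled toward handle i + 1
        forces[i + 1] -= link   # equal and opposite reaction
    return forces


def stretch_factor(positions, gain=1.0):
    """Hypothetical mapping: the wider the handles are spread, the longer
    the time-stretch applied to the prerecorded material."""
    return 1.0 + gain * (max(positions) - min(positions))
```

Because every link applies equal and opposite forces, the forces over all handles always sum to zero, so whatever one performer does is felt directly by the others. For example, `coupling_forces([0.0, 1.0, 0.0], [0.0, 0.0, 0.0])` pulls the middle handle back while pushing the two outer handles toward it.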

3.3. Haptic Interfaces as Part of the Artistic Practice

Haptic interfaces may use a range of actuator technologies from DC motors to pneumatic, hydraulic or shape-memory alloys. In any case, those devices are capable of generating motion. Therefore, it seems logical that artists may explore those kinetic qualities of the interfaces and create artworks that are in dialogue with them. However, there are not sufficient examples of artistic practices that embrace this aspect of technology.

Kinetic art and, more specifically, kinetic sculptures have a long history, dating from the beginning of the 20th century, from Futurists to Dadaists and notable artists, like Marcel Duchamp, Gabo and Moholy-Nagy, up to contemporary sound artists, such as Zimoun. Therefore, motorised movement appears in the vocabulary of technologically based art (Brett 1968).

Under this perspective, we may see the potential of haptic interfaces to enrich this artistic practice. In 2013, the author attempted to create an audiovisual performance, where the kinetic aspects of the haptic interface were at the forefront.

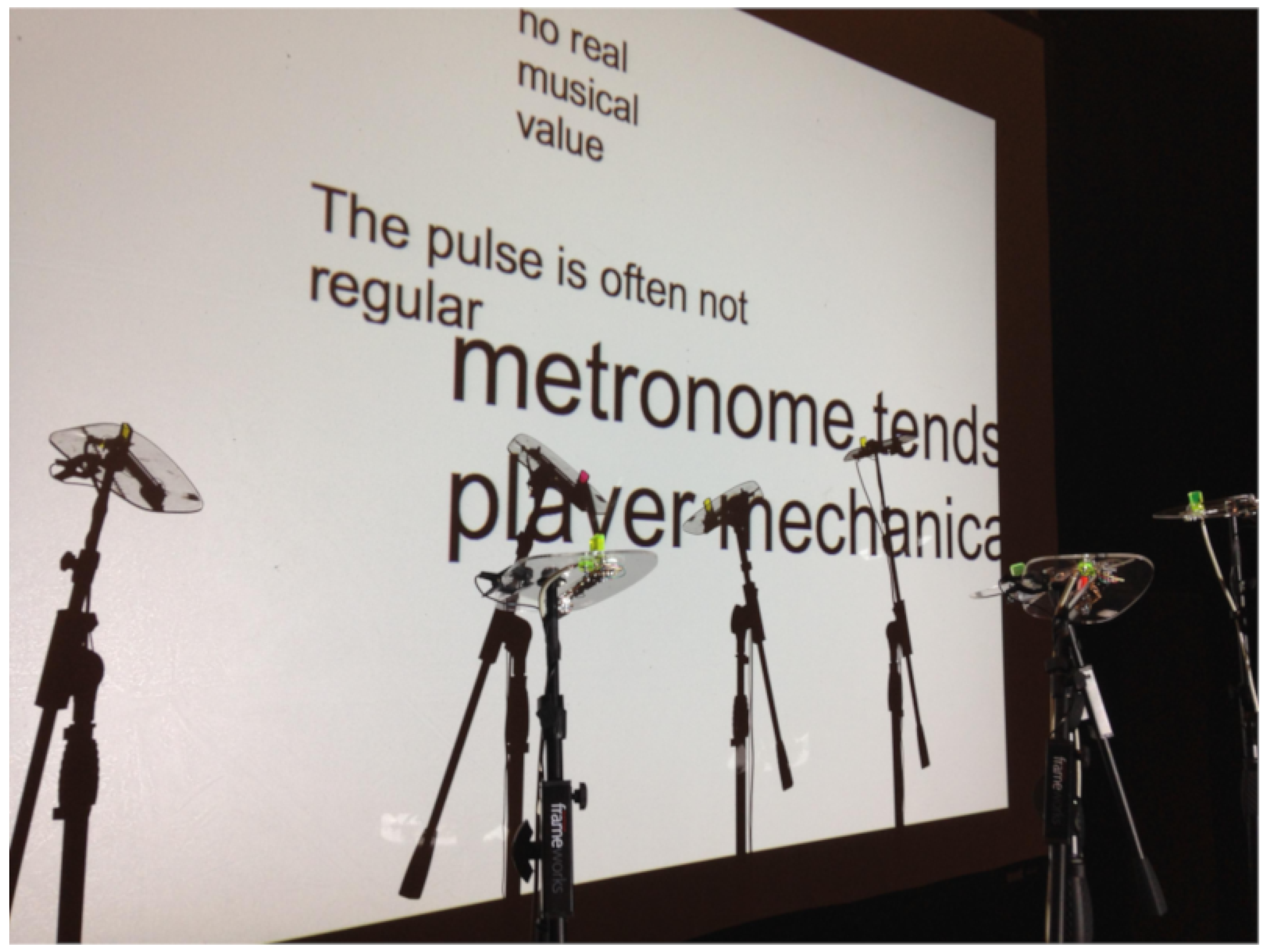

Metronom is essentially a live audiovisual composition that utilises the haptic interface as an important visual element of the artwork (Leonard et al. 2020). For that reason, a custom haptic interface based on the FireFader was designed and fabricated by the author and Olivia Kotsifa, a designer and architect who specialises in digital fabrication. The open-source hardware elements of the FireFader offer the possibility to modify and readapt the original design to the needs of each individual project.

During the performance, the motion and shape of the motorised faders cast shadows on a white screen, or wall in the case of a gallery space, in sync with the interactive projected typographical elements. The structure of the interface is designed in such a way that it refracts the light of the projector and creates interesting semi-transparent forms. The knobs and the top plate of the device are made out of laser-cut acrylic in different colours and have curvy shapes, as seen in Figure 4. In the piece, the performer interacts with the device or lets the faders oscillate without any further human intervention. Therefore, the device appears as a kinetic sound sculpture, since the movement of the faders generates sound too, as we will see in the following section. The work has great structural clarity. The faders appear and behave in the 3D space as a type of metronome, with very clear-cut machine aesthetics.

The interaction model used to achieve the rhythmic motion of the faders in that piece is the most basic one: the motor is carefully and directly driven by force signals of various intensities and directions. Those signals are represented as MIDI notes of different velocities and are prearranged on the timeline of a typical sequencer or digital audio workstation, such as Ableton Live. The MIDI signals are retrieved by the Max programming environment, scaled and directed as inputs to the four faders used for the piece. In the HSP framework, the FireFader appears as an external object, with audio inlets and outlets for the position and force signals accordingly. This was the simplest, most efficient and most accurate way to move the faders at the desired speeds. Other methods, such as interacting directly with the simple virtual mechanical oscillators linked to the physical knob of the fader, did not perform well due to stability issues. Control mechanisms, such as the proportional–integral–derivative control of the fader, worked better but an elementary model with force control signals was adopted for simplicity. Moreover, on a conceptual level, the author wanted to interact and “dance” with virtual agents that were taking control of the faders. On the firmware programming side, the program had to be modified slightly in order to deactivate the protection procedures that would turn off the force of the fader when it is not touched or when it reaches the end of its travel. Those procedures are described in detail in Berdahl and Kontogeorgakopoulos (2013).
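A possible way of turning sequenced MIDI notes into signed force values is sketched below. The text above states only that forces are represented as MIDI notes of different velocities; the encoding used here (two consecutive note numbers per fader, one for each direction, starting from a base note) is a hypothetical choice made for this example, as are the function name and the normalised force range.

```python
def midi_to_force(note, velocity, base_note=60, num_faders=4, max_force=1.0):
    """Convert a MIDI note-on into (fader index, signed force).

    Assumed encoding: even offsets from base_note push a fader one way,
    odd offsets push it the other way; velocity (0-127) sets the magnitude.
    """
    offset = note - base_note
    if not 0 <= offset < 2 * num_faders:
        raise ValueError("note outside the range reserved for the faders")
    fader = offset // 2
    direction = 1.0 if offset % 2 == 0 else -1.0
    magnitude = max_force * min(max(velocity, 0), 127) / 127.0
    return fader, direction * magnitude
```

For instance, `midi_to_force(60, 127)` addresses the first fader with full force in one direction and `midi_to_force(61, 127)` with full force in the other. The resulting value would then be scaled to the range expected by the force input of the fader.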

Since the first development of the FireFader in 2011, the device took a few other different forms without changing the electronic circuits or the firmware run on the microcontroller almost at all. More aesthetic, or even artistic, factors and less ergonomic ones informed the design. As seen in previous paragraphs, the version developed for the audiovisual composition

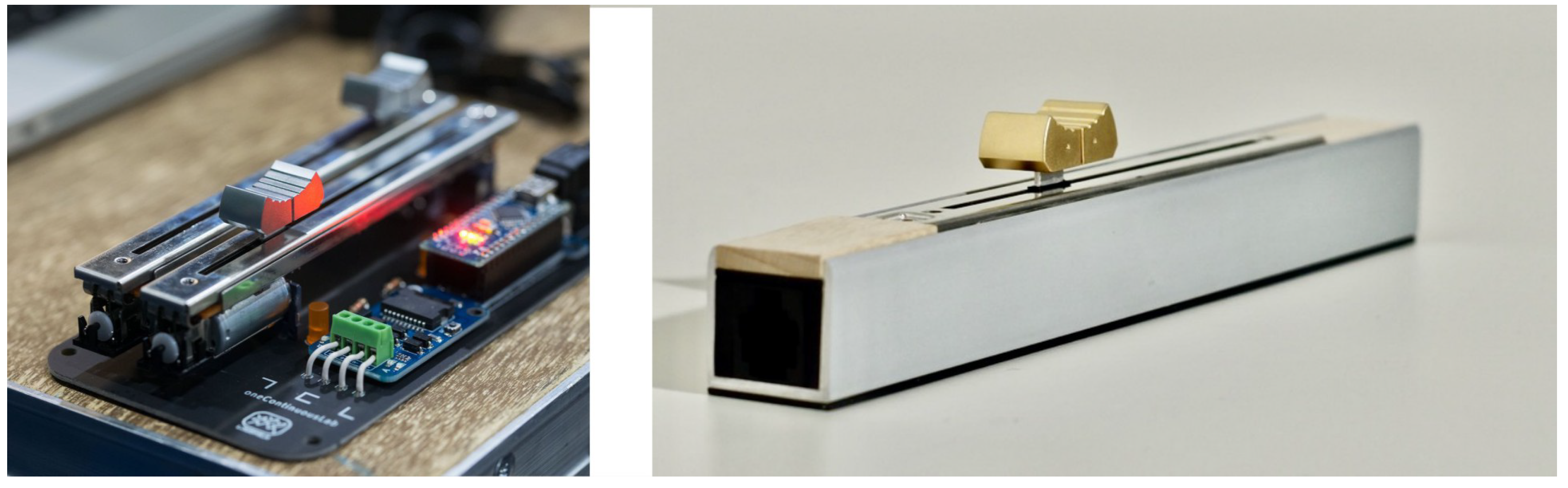

Metronom in 2013 had sculptural qualities. Since then, two alternative versions were designed and developed by the author and other collaborators (we kept the name FireFader for the first design), which are illustrated in

Figure 5.

The initial iteration aimed to produce a single-fader instrument with a minimalistic design that uses high-quality materials like wood and has a pure, unadorned form. The microcontroller board was not placed together with the motorised fader in order to preserve the simple design language. This edition was specifically built for the Ableton Loop Festival in 2017 by the author and his collaborator Odysseas Klissouras from oneContinuousLab, an art-science studio-lab. It was used mainly in conjunction with a series of audio-haptic models, such as haptic digital audio effects, and was designed for DJing performances.

The other version depicted in the same picture was developed for the author’s haptic fader-making workshops. It was co-designed by the author and Staš Vrenko from the Ljudmila Science and Art Laboratory in Slovenia, based on Berdahl’s PCB design. It was essential to design a haptic fader for such a type of workshop that was very easy to build, so all the non-crucial functionalities, like the visual feedback coming from the LEDs, were removed.

3.4. Electromechanical Sound Generation

Another interesting aspect related to the motion of the force-feedback devices is their capability to physically interact with real mechanical objects. The actuators of such devices can inject energy and make physical objects move and vibrate. This approach is reminiscent of Berdahl’s approach to the force-feedback teleoperation of acoustic musical instruments (Berdahl et al. 2010) and blurs the boundaries between the physical and virtual. In an extreme scenario, those physical structures may be part of the haptic device itself.

Obviously, robotic mechanical instruments (Kapur 2005) have a long history. Equally, there are numerous sound art and experimental music examples where physical structures and objects are actuated in order to produce a sonic output that is often captured by contact mics (Licht 2019). Developing a haptic device with interesting acoustic qualities will probably never be in the design specifications (it is not even a desirable option) or become a criterion in the performance measures of those devices. However, in an artistic context, it makes sense to be able to experiment with their material and sonic aspects. The artist-designer must have the possibility and potential, in a piece of art with haptics, to develop their own version or variation of a haptic device and explore those tools freely, even from the hardware side and their physical-material form.

In Section 3.3, the audiovisual performance Metronom was presented, where the kinetic qualities of haptic devices are explored. The same piece makes use of their sonic characteristics and thus the interface becomes a type of sound sculpture. The faders’ mechanical sounds are recorded by microphones from the acrylic plate of the device and processed in real time by digital signal processing algorithms. The performer’s gestures interfere with the faders’ motion and/or slightly alter the acoustic properties of the acrylic material. An interesting dialogue occurs between the acoustic sound and the more synthetic sound coming from the algorithms: in order to preserve the more natural and organic textures from the microphones, resonators and impulse responses of physical models have been used to alter the recorded sound. The physical and the virtual interact in order to create a richer and more intimate sonic environment.

A handful of similar approaches exist for sound generation by acoustic excitation, where the excitation signal directly feeds digital resonators (Neupert and Wegener 2019). In the typical case, the performer interacts mechanically with a rigid surface by rubbing it, tapping it, etc., in order to generate rich excitation signals. Any type of physical modelling scheme may be used for this hybrid controller. In Metronom, the faders create those excitation signals (impact sounds and friction sounds) while the performer interferes with their motion. The digital signal processing algorithms mentioned before, together with other sound synthesis algorithms controlled by the position of the faders, create the sonic environment of the audiovisual performance.
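
The principle of feeding an acoustic excitation into digital resonators can be illustrated with a minimal sketch. It is not the signal chain used in Metronom: the two-pole resonator design, the function names (`resonate`, `resonator_bank`), the mode frequencies, decay times and gains, and the synthetic click standing in for a recorded fader impact are all assumptions chosen for illustration.

```python
import math


def resonate(excitation, freq_hz, decay_s, sample_rate=44100):
    """Filter an excitation signal through a single two-pole resonator."""
    # Pole radius: the ringing amplitude falls to 1/e after decay_s seconds.
    radius = math.exp(-1.0 / (decay_s * sample_rate))
    angle = 2.0 * math.pi * freq_hz / sample_rate
    a1 = -2.0 * radius * math.cos(angle)
    a2 = radius * radius

    y1 = y2 = 0.0  # the two previous output samples
    output = []
    for x in excitation:
        y = x - a1 * y1 - a2 * y2
        output.append(y)
        y2, y1 = y1, y
    return output


def resonator_bank(excitation, modes, sample_rate=44100):
    """Sum parallel resonators; modes is a list of (freq_hz, decay_s, gain)."""
    mixed = [0.0] * len(excitation)
    for freq_hz, decay_s, gain in modes:
        ringing = resonate(excitation, freq_hz, decay_s, sample_rate)
        mixed = [m + gain * r for m, r in zip(mixed, ringing)]
    return mixed


# Stand-in for a recorded fader impact: a single click followed by silence.
click = [1.0] + [0.0] * 3999
# Hypothetical modes of a resonant body: (frequency, decay time, gain).
modes = [(440.0, 0.05, 0.5), (1130.0, 0.03, 0.3)]
ringing = resonator_bank(click, modes, sample_rate=8000)
```

In a real-time setting the click would be replaced by the live microphone signal, so that the texture of each impact or friction sound colours the resonance instead of merely triggering it.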

3.5. Media Art and Art Installations

Haptic technology can be utilised in various projects that fall within the scope of visual and media arts, in addition to the musical and audiovisual works discussed previously. Clearly, computer systems for modelling, sculpting, painting and drawing have benefited from the potential of such interfaces (Lin and Baxter 2008). In this section, however, the goal is not to present the benefits of the tools for those creative ventures, but to discuss cases where haptic technology is not merely a tool for artistic creation but serves as the medium itself, as the theorist Christiane Paul would argue (Paul 2015).

Ståle Stenslie created the Inter-Skin Suit in 1994, a piece in which both participants wear a sensoric outfit capable of transmitting and receiving various multi-sensory stimuli. Stelarc’s renowned work Ping Body (1996) similarly utilised a muscle-stimulation mechanism driven by electric signals. Eric Gunther and Sile O’Modhrain proposed that tactile sensation could have an aesthetic value by itself, independent of any other sensory experience (Gunther and O’Modhrain 2003). More recently, in 2015, Klissouras created a haptic installation named Skin Air | Air Skin, which consists of a rhythmical exploration of air pressure on the skin. The same technology was used for the work Air of Rhythms—Hand Series, created in collaboration with the author of this paper. Hayes and Rajko explored an interdisciplinary approach towards the aesthetics of touch (Hayes and Rajko 2017). Papachatzaki created the piece Interpolate (2019), an interactive choreographic performance that utilises wearable technologies and pneumatics to create a connection between two dancers and the audience.

The works that explore haptics beyond or in combination with music are typically based on vibrotactile stimulation, or the deformation of the skin, rather than force-feedback technology or haptic interfaces. The author, together with Klissouras, is currently working on a project under the title Interpersonal that aims to provide a more kinesthetic experience using force-feedback devices.

Interpersonal is a haptic-audio-light interactive networked installation that interrogates the idea of physical intimacy in our media-driven world. It is distributed geographically across two locations and consists of a physical interface with a single motorised fader in each location. The visitors of the distributed exhibition will communicate haptically by manipulating the fader, feel each other’s gestures remotely and co-create a unified soundscape and lightscape. The physical interface is based on the FireFader technology and has a formal simplicity and physical structure that avoids the complexity of modern devices. The aim is to create an intimate experience where the focus will be primarily on the bodily encounter between the viewer and the work. Each hand gesture is unique, like the voice, face or handwritten signature; it is an individual gestural signature of the person who performs it, but only in the context of an existing haptic communication.

The haptic system is distributed: the haptic rendering is calculated by a mass-interaction physical model with two haptic avatars, while a separate process controls the DMX lights and the sound generation algorithms. Similar to the musical composition Mechanical Entanglement presented in Section 3.2, the aim is to develop a telematic participatory experience where the visitors will communicate and interact remotely by exchanging forces and not tactile stimuli. The first version of this art project is almost ready and is envisaged to be installed soon in a single space, where the visitors are placed on opposite sides of a wall in the middle of the gallery space, as seen in Figure 6. The technical latency problems that occur in the long-distance networked version need to be addressed with more systematic research.
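
To make the idea of two haptic avatars exchanging forces more concrete, the following is a minimal sketch of one possible mass-interaction topology. It is not the model used in Interpersonal: the single virtual mass, the spring-damper links, the class name `CoupledFaders`, the parameter values, the normalised unitless fader positions and the semi-implicit Euler integration are all assumptions made for illustration.

```python
class CoupledFaders:
    """Two fader avatars linked to one virtual mass by spring-dampers."""

    def __init__(self, stiffness=200.0, damping=2.0, mass=0.01, dt=0.001):
        # All parameter values are illustrative assumptions.
        self.stiffness = stiffness
        self.damping = damping
        self.mass = mass
        self.dt = dt          # time step of the haptic loop (assumed 1 kHz)
        self.position = 0.0   # position of the virtual mass
        self.velocity = 0.0   # velocity of the virtual mass

    def step(self, fader_a, fader_b):
        """Advance one time step; return the forces for fader A and fader B."""
        k, c = self.stiffness, self.damping
        # Force that each link applies to the virtual mass. The avatars are
        # treated as position sources, so damping acts on the mass velocity.
        on_mass_from_a = k * (fader_a - self.position) - c * self.velocity
        on_mass_from_b = k * (fader_b - self.position) - c * self.velocity
        # Semi-implicit Euler: update the velocity first, then the position.
        acceleration = (on_mass_from_a + on_mass_from_b) / self.mass
        self.velocity += acceleration * self.dt
        self.position += self.velocity * self.dt
        # Each fader receives the reaction of its own link.
        return -on_mass_from_a, -on_mass_from_b


# One visitor holds fader A at 1.0 while fader B is held at 0.0.
model = CoupledFaders()
for _ in range(5000):
    force_a, force_b = model.step(1.0, 0.0)
```

In this sketch, once the virtual mass settles between the two faders, each fader is pulled towards the other with equal and opposite force, which is the force exchange described above. In a networked version the two position inputs would arrive with delay, which is where the latency problems mentioned above come in.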

4. Conclusions

This paper explored five different creative and research directions in which computer haptics can be utilised within a musical and an artistic context. As previously stated, these directions are by no means exhaustive and are primarily derived from a collection of collaborative artworks, creative investigations and personal open-ended explorations with this technology. The paper may be considered partially as secondary research, since it draws on results from other published academic papers co-written by the author and other colleagues mentioned in the previous sections. The goal was to contextualise and classify those explorations, to reflect on them and to identify the five research directions that derive from them. Nevertheless, the paper also includes a substantial amount of primary research since, for the first time, it presents models and artworks in progress carried out solely by the author.

Most of these findings did not involve formal user testing and were not developed upon a rigorous mathematical framework. Nevertheless, they still have the potential to be valuable and to shed light on areas for future exploration and creative possibilities.

These areas can be summarised as follows:

The empirical design and development of distributed and unitary haptic systems based on accessible software platforms;

Creative research on the performer’s virtual intercoupling;

The exploration of haptic hardware as an artistic medium;

The creative investigation of the electromechanical sonic aspects of haptic devices;

The use of force-feedback technology in media-art projects and interactive installations.

The software models and the hardware developed during the creative research all have a minimalistic aesthetic and quality that derives largely from the author’s personal design philosophy. Additionally, they were primarily conceived to fulfil a specific artistic agenda aligned with the demands of particular projects. Since the relationship between creators and their tools is interdependent, it is not always favourable to separate the roles of creators and tool designers/developers, particularly in novel areas such as this one. Accordingly, as long as the artistic enquiries continue, the software and hardware will evolve further in many new and unanticipated directions. Creating with the sense of touch in mind and building tools that integrate haptic feedback is a new area of innovation and research that is now becoming more and more accessible. Artist researchers should be an active part of that dialogue.

Undeniably, there are several other basic areas that were not researched, such as artworks that involve teleoperation or works that approach haptics in more abstract ways. The latter includes the full design of an abstract immersive virtual reality environment, where the haptic sense plays a prominent role above the other senses.

Haptic sensing plays a fundamental role in our interaction with the physical world. It is a crucial part of our sensory experience, along with vision, hearing, taste and smell. Since our perception is inherently multisensory, one could easily argue that digital art and music should aim to engage with all of the senses. This approach can provide a more holistic and embodied experience for the performer, viewer or participant, allowing them to engage with the artwork and instrument in a more visceral and impactful way.